Abstract

The multiple-sets split feasibility problem is a generalization of the split feasibility problem and has been widely used in blurred image reconstruction and sparse signal processing. In this paper, we present an inertial relaxed algorithm with an alternating inertial step for solving the multiple-sets split feasibility problem. An advantage of the algorithm is that the stepsize is determined by an Armijo-type line search, which avoids computing operator norms. We prove that the sequence generated by the algorithm converges weakly under mild conditions. In addition, numerical experiments are given to verify the convergence and effectiveness of the algorithm.


1 Introduction

Let \(H_{1}\) and \(H_{2}\) be real Hilbert spaces, let \(t\geq 1\) and \(r\geq 1\) be integers, and let \(\{C_{i}\}_{i=1}^{t}\) and \(\{Q_{j}\}_{j=1}^{r}\) be nonempty, closed, and convex subsets of \(H_{1}\) and \(H_{2}\), respectively.

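In this paper, we study the multiple-sets split feasibility problem (MSSFP), which is to find a point \(x^{*}\) such that

\[ x^{*}\in C:=\bigcap_{i=1}^{t}C_{i}\quad \text{and}\quad Ax^{*}\in Q:=\bigcap_{j=1}^{r}Q_{j}, \tag{1.1} \]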

where \(A:H_{1}\rightarrow H_{2}\) is a given bounded linear operator and \(A^{*}\) denotes its adjoint. Censor et al. [6] first introduced this problem in finite-dimensional Hilbert spaces, motivated by inverse problems arising in intensity-modulated radiation therapy, signal processing, and image reconstruction. Because of these applications, many algorithms have been proposed for solving the MSSFP; see, for example, [24, 25, 28–30]. When \(t=r=1\), the MSSFP reduces to the split feasibility problem (SFP); see [5].
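As a minimal illustration of the definition (not code from the paper), the Python sketch below measures how far a candidate point is from solving an MSSFP whose sets are level sets, \(C_{i}=\{x: c_{i}(x)\leq 0\}\) and \(Q_{j}=\{y: q_{j}(y)\leq 0\}\). The function `mssfp_violation` and the two-dimensional instance are hypothetical; a point solves the MSSFP exactly when the returned value is zero.

```python
import numpy as np

def mssfp_violation(x, A, cs, qs):
    """Largest constraint violation of x for an MSSFP with level-set constraints.

    Hypothetical setting: C_i = {x : c_i(x) <= 0} and Q_j = {y : q_j(y) <= 0}.
    Returns 0.0 exactly when x lies in every C_i and Ax lies in every Q_j.
    """
    Ax = A @ x
    worst = max([c(x) for c in cs] + [q(Ax) for q in qs])
    return max(float(worst), 0.0)

# Hypothetical instance in R^2: C_1 = unit disc, C_2 = {x : x_1 >= 0},
# Q_1 = disc of radius 2 in the image space, and A = 2I.
A = 2.0 * np.eye(2)
cs = [lambda x: x @ x - 1.0, lambda x: -x[0]]
qs = [lambda y: y @ y - 4.0]

print(mssfp_violation(np.array([0.5, 0.0]), A, cs, qs))   # 0.0 (feasible point)
print(mssfp_violation(np.array([-1.0, 1.0]), A, cs, qs))  # 4.0 (Ax lies outside Q_1)
```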

It is well known that the split feasibility problem amounts to the following minimization problem:

where \(P_{C}\) and \(P_{Q}\) denote the metric projections onto C and Q, respectively. Since the projection onto a general closed convex set has no closed-form expression, it can be difficult to compute. To overcome this difficulty, Fukushima [11] proposed a relaxed projection method: instead of projecting onto the level set of a convex function, he computed a sequence of projections onto half-spaces containing that level set. Yang [26] proposed a relaxed CQ algorithm for solving the SFP in finite-dimensional Hilbert spaces when the closed convex sets C and Q are level sets of convex functions, namely,

\[ C=\{x\in H_{1}: c(x)\leq 0\},\qquad Q=\{y\in H_{2}: q(y)\leq 0\}, \tag{1.3} \]

where \(c:H_{1}\rightarrow R\) and \(q:H_{2}\rightarrow R\) are convex and weakly lower semicontinuous functions. It is further assumed that c is subdifferentiable on \(H_{1}\) and ∂c is bounded on bounded subsets of \(H_{1}\); similarly, q is subdifferentiable on \(H_{2}\) and ∂q is bounded on bounded subsets of \(H_{2}\). At the point \(x_{n}\), two sets are then defined as follows:

\[ C_{n}=\{x\in H_{1}: c(x_{n})+\langle \xi _{n},x-x_{n}\rangle \leq 0\}, \tag{1.4} \]

where \(\xi _{n}\in \partial {c(x_{n})}\), and

\[ Q_{n}=\{y\in H_{2}: q(Ax_{n})+\langle \zeta _{n},y-Ax_{n}\rangle \leq 0\}, \tag{1.5} \]

where \(\zeta _{n}\in \partial {q(Ax_{n})}\). Clearly, \(C_{n}\) and \(Q_{n}\) are half-spaces. Moreover, \(C_{n} \supset C\) and \(Q_{n}\supset Q\) for all \(n \geq 1\): if \(x\in C\), the subgradient inequality gives \(c(x_{n})+\langle \xi _{n},x-x_{n}\rangle \leq c(x)\leq 0\), and the same argument applies to Q. Because the metric projections onto half-spaces have closed forms (see [18]), the projections onto \(C_{n}\) and \(Q_{n}\) are easy to compute. Using this framework, Yang [26] constructed a relaxed CQ algorithm for the SFP that projects onto the half-spaces \(C_{n}\) and \(Q_{n}\) instead of the sets C and Q. Subsequently, Shehu and Gibali [19] proposed a relaxed CQ method with an alternating inertial extrapolation step for the SFP, also based on the half-spaces \(C_{n}\) and \(Q_{n}\), and proved its convergence under suitable stepsize conditions.
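The relaxed CQ idea can be sketched in a few lines of Python. The sketch assumes the standard form of Yang's relaxed CQ iteration, \(x_{n+1}=P_{C_{n}}(x_{n}-\gamma A^{*}(I-P_{Q_{n}})Ax_{n})\) with \(\gamma \in (0,2/\Vert A\Vert ^{2})\), and uses the closed-form projection onto a half-space \(\{z:\langle a,z\rangle \leq b\}\). The helper names and the test instance (two discs and a diagonal A) are hypothetical.

```python
import numpy as np

def project_halfspace(x, a, b):
    """Metric projection onto the half-space {z : <a, z> <= b}; identity if a = 0."""
    viol = a @ x - b
    if viol <= 0 or a @ a == 0:
        return x.copy()
    return x - (viol / (a @ a)) * a

def relaxed_cq(x, A, c, dc, q, dq, gamma, iters=2000):
    """Sketch of x_{n+1} = P_{C_n}(x_n - gamma A^T (I - P_{Q_n}) A x_n), where
    C_n = {z : c(x_n) + <xi_n, z - x_n> <= 0} and Q_n = {y : q(Ax_n) + <zeta_n, y - Ax_n> <= 0}."""
    for _ in range(iters):
        Ax = A @ x
        xi, zeta = dc(x), dq(Ax)                               # subgradients at x_n and A x_n
        PQ = project_halfspace(Ax, zeta, zeta @ Ax - q(Ax))    # P_{Q_n}(A x_n)
        y = x - gamma * (A.T @ (Ax - PQ))                      # gradient step on 1/2 ||(I - P_{Q_n}) A x||^2
        x = project_halfspace(y, xi, xi @ x - c(x))            # P_{C_n}(y); C_n is built at the old x_n
    return x

# Hypothetical instance: C = unit disc, Q = disc of radius 1 centred at (1, 0), A = diag(1, 2).
A = np.diag([1.0, 2.0])
e1 = np.array([1.0, 0.0])
c, dc = (lambda x: x @ x - 1.0), (lambda x: 2.0 * x)
q, dq = (lambda y: (y - e1) @ (y - e1) - 1.0), (lambda y: 2.0 * (y - e1))
gamma = 1.0 / np.linalg.norm(A, 2) ** 2                       # lies in (0, 2/||A||^2)
x = relaxed_cq(np.array([-3.0, 3.0]), A, c, dc, q, dq, gamma)
print(c(x), q(A @ x))   # both values should be (approximately) nonpositive
```

Only half-space projections are ever computed, which is the point of the relaxation: the projections onto the discs themselves are never needed.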

In this paper, we consider the multiple-sets split feasibility problem (1.1) in which the convex sets are given by

\[ C_{i}=\{x\in H_{1}: c_{i}(x)\leq 0\},\qquad Q_{j}=\{y\in H_{2}: q_{j}(y)\leq 0\}, \tag{1.6} \]

where \(c_{i}:H_{1}\rightarrow R\ (i=1,2,\ldots,t)\) and \(q_{j}:H_{2}\rightarrow R\ (j=1,2,\ldots,r)\) are convex and weakly lower semicontinuous functions. We also assume that each \(c_{i}\) is subdifferentiable on \(H_{1}\) with \(\partial c_{i}\) bounded on bounded subsets of \(H_{1}\), and that each \(q_{j}\) is subdifferentiable on \(H_{2}\) with \(\partial q_{j}\) bounded on bounded subsets of \(H_{2}\). Throughout, S denotes the solution set of the MSSFP (1.1) when the problem is consistent. Censor et al. [6] introduced the following proximity function:

\[ f(x)=\frac{1}{2}\sum_{i=1}^{t} l_{i}\Vert x-P_{C_{i}}x\Vert ^{2}+\frac{1}{2}\sum_{j=1}^{r} \lambda _{j}\Vert Ax-P_{Q_{j}}Ax\Vert ^{2}, \tag{1.7} \]

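where \(l_{i}\ (i=1,2,\ldots,t)\) and \(\lambda _{j}\ (j=1,2,\ldots,r)\) are positive constants such that \(\sum_{i=1}^{t} l_{i}+\sum_{j=1}^{r} \lambda _{j}=1\). Then f is differentiable and

\[ \nabla f(x)=\sum_{i=1}^{t} l_{i}(x-P_{C_{i}}x)+\sum_{j=1}^{r} \lambda _{j}A^{*}(Ax-P_{Q_{j}}Ax). \tag{1.8} \]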

They proposed the following algorithm:

\[ x_{n+1}=P_{\Omega }\bigl(x_{n}-\rho \nabla f(x_{n})\bigr), \tag{1.9} \]

where \(\Omega \subseteq R^{N}\) is an auxiliary simple nonempty closed convex set satisfying \(\Omega \cap S\neq \emptyset \), and \(\rho >0\). They proved that, if L is the Lipschitz constant of \(\nabla f\) and \(\rho \in (0,2/L)\), then the sequence \(\{x_{n}\}\) generated by (1.9) converges to a solution of the multiple-sets split feasibility problem.
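For readers who want to experiment, here is a minimal NumPy sketch of the projected gradient scheme \(x_{n+1}=P_{\Omega }(x_{n}-\rho \nabla f(x_{n}))\) applied to the proximity function (1.7), with \(\rho =1/L\) and \(L=\sum l_{i}+\Vert A\Vert ^{2}\sum \lambda _{j}\) (the constant of Lemma 2.1). The instance (a box and a disc in \(R^{2}\), a box in the image space, \(\Omega =[-10,10]^{2}\)) and all function names are hypothetical choices for illustration.

```python
import numpy as np

def ball_projection(center, radius):
    """Return the metric projection onto the closed ball B(center, radius)."""
    center = np.asarray(center, dtype=float)
    def P(x):
        d = x - center
        nd = np.linalg.norm(d)
        return x.copy() if nd <= radius else center + (radius / nd) * d
    return P

def grad_proximity(x, A, projC, projQ, l, lam):
    """grad f(x) = sum_i l_i (x - P_{C_i} x) + sum_j lam_j A^T (Ax - P_{Q_j} Ax)."""
    Ax = A @ x
    return (sum(w * (x - P(x)) for w, P in zip(l, projC))
            + sum(w * (A.T @ (Ax - P(Ax))) for w, P in zip(lam, projQ)))

def censor_iteration(x, A, projC, projQ, l, lam, proj_omega, iters=2000):
    """x_{n+1} = P_Omega(x_n - rho grad f(x_n)) with rho = 1/L, which lies in (0, 2/L)."""
    L = sum(l) + np.linalg.norm(A, 2) ** 2 * sum(lam)   # Lipschitz constant of grad f
    rho = 1.0 / L
    for _ in range(iters):
        x = proj_omega(x - rho * grad_proximity(x, A, projC, projQ, l, lam))
    return x

# Hypothetical consistent instance in R^2; x = (1, 1) is one solution.
A = np.array([[1.0, 1.0], [1.0, -1.0]])
projC = [lambda x: np.clip(x, 0.0, 2.0), ball_projection([1.0, 1.0], 1.0)]
projQ = [lambda y: np.clip(y, -1.0, 3.0)]
l, lam = [0.25, 0.25], [0.5]
omega = lambda x: np.clip(x, -10.0, 10.0)   # simple auxiliary set with Omega ∩ S nonempty
x = censor_iteration(np.array([8.0, -6.0]), A, projC, projQ, l, lam, omega)
print([float(np.linalg.norm(x - P(x))) for P in projC],
      float(np.linalg.norm(A @ x - projQ[0](A @ x))))   # distances should be near zero
```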

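To improve the practical performance of gradient-type methods for convex programming problems, Nesterov [17] proposed the following iterative process:

\[ \begin{cases} y_{n}=x_{n}+\theta _{n}(x_{n}-x_{n-1}),\\ x_{n+1}=y_{n}-\lambda _{n}\nabla f(y_{n}), \end{cases} \tag{1.10} \]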

where \(\{\lambda _{n}\}\) is a sequence of positive stepsizes and \(\theta _{n}\in [0,1)\) is an inertial parameter. Many related algorithms have since been developed, for example, the inertial forward-backward splitting method, the inertial Mann method, and the method of moving asymptotes; see [1–4, 10, 12, 14–16].
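A minimal sketch of the inertial idea described above (an extrapolation \(y_{n}=x_{n}+\theta _{n}(x_{n}-x_{n-1})\) followed by a gradient step at \(y_{n}\)) is given below on a strongly convex quadratic. The constant parameters \(\lambda _{n}=0.1\) and \(\theta _{n}=0.5\) and the test problem are hypothetical choices for illustration, not Nesterov's original parameter schedule.

```python
import numpy as np

def inertial_gradient(grad, x0, x1, lam, theta, iters):
    """Inertial iteration: y_n = x_n + theta_n (x_n - x_{n-1}),  x_{n+1} = y_n - lam_n grad(y_n)."""
    x_prev, x = np.asarray(x0, dtype=float), np.asarray(x1, dtype=float)
    for n in range(1, iters + 1):
        y = x + theta(n) * (x - x_prev)          # inertial extrapolation
        x_prev, x = x, y - lam(n) * grad(y)      # gradient step at the extrapolated point
    return x

# Hypothetical test problem: f(x) = 1/2 x^T Q x - b^T x, whose minimiser is Q^{-1} b = (0.1, 1.0).
Q = np.diag([10.0, 1.0])
b = np.array([1.0, 1.0])
x = inertial_gradient(lambda v: Q @ v - b, [0.0, 0.0], [0.0, 0.0],
                      lam=lambda n: 0.1, theta=lambda n: 0.5, iters=200)
print(x)   # close to the minimiser (0.1, 1.0)
```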

Motivated by the above work, we propose a relaxed CQ algorithm with an alternating inertial step for solving the multiple-sets split feasibility problem. In this algorithm, the stepsize is determined by a line search, so the computation of operator norms is avoided. Furthermore, we prove the weak convergence of the algorithm under some mild conditions. In addition, as in [7–9, 20–23, 27], the inertial parameter \(\beta _{n}\) can be chosen as close to one as possible.

The paper is organized as follows. Section 2 recalls basic concepts, definitions, and related results. Section 3 presents the algorithm and its convergence analysis. Section 4 reports numerical experiments that illustrate the effectiveness and stability of the algorithm. Section 5 concludes the paper.

2 Preliminaries

In this section, we recall some basic concepts and relevant results. Throughout, H denotes a real Hilbert space.

Recall that a mapping \(T: H \rightarrow H\) is called

(a) nonexpansive if \(\Vert {Tx-Ty}\Vert \leq \Vert {x-y}\Vert \) for all \(x,y\in H\);

(b) firmly nonexpansive if \(\Vert {Tx-Ty}\Vert ^{2} \leq \Vert {x-y}\Vert ^{2}-\Vert {(I-T)x-(I-T)y} \Vert ^{2}\) for all \(x,y\in H\). Equivalently, \(\Vert {Tx-Ty}\Vert ^{2} \leq \langle x-y,Tx-Ty\rangle \) for all \(x,y\in H\).

It is well known that T is firmly nonexpansive if and only if \(I-T\) is firmly nonexpansive.

For a point \(u\in H\) and a nonempty, closed, and convex subset C of H, there exists a unique point \(P_{C}u\in C\) such that

\[ \Vert u-P_{C}u\Vert =\min_{x\in C}\Vert u-x\Vert , \]

where \(P_{C}\) is the metric projection of H onto C. We recall some important properties of the metric projection. It is well known that \(P_{C}\) is firmly nonexpansive on H; that is,

\[ \langle x-y,P_{C}x-P_{C}y\rangle \geq \Vert P_{C}x-P_{C}y\Vert ^{2},\quad \forall x,y\in H. \]

Moreover, \(P_{C}x\) is characterized by

\[ \langle x-P_{C}x,y-P_{C}x\rangle \leq 0,\quad \forall y\in C. \]

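This characterization implies that

\[ \Vert y-P_{C}x\Vert ^{2}\leq \Vert x-y\Vert ^{2}-\Vert x-P_{C}x\Vert ^{2},\quad \forall x\in H, y\in C. \]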
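The projection properties recalled above (firm nonexpansiveness, the variational characterization \(\langle x-P_{C}x,y-P_{C}x\rangle \leq 0\) for \(y\in C\), and \(\Vert y-P_{C}x\Vert ^{2}\leq \Vert x-y\Vert ^{2}-\Vert x-P_{C}x\Vert ^{2}\)) can be checked numerically for a set whose projection is explicit. The sketch assumes C is a box, where \(P_{C}\) is componentwise clipping; random sampling here is an illustration, not a proof.

```python
import numpy as np

rng = np.random.default_rng(1)
lo, hi = -1.0, 2.0

def P(x):
    """Metric projection onto the box C = [lo, hi]^4 (componentwise clipping)."""
    return np.clip(x, lo, hi)

tol = 1e-9   # allowance for floating-point rounding
ok = True
for _ in range(1000):
    x, y = rng.normal(scale=3.0, size=4), rng.normal(scale=3.0, size=4)
    u = rng.uniform(lo, hi, size=4)            # an arbitrary point of C
    Px, Py = P(x), P(y)
    ok = ok and (x - y) @ (Px - Py) >= (Px - Py) @ (Px - Py) - tol           # firm nonexpansiveness
    ok = ok and (x - Px) @ (u - Px) <= tol                                     # variational characterization
    ok = ok and (u - Px) @ (u - Px) <= (x - u) @ (x - u) - (x - Px) @ (x - Px) + tol
print(ok)   # True
```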

Let \(f:H\rightarrow R\) be a function. An element \(g\in H\) is called a subgradient of f at a point x if

\[ f(y)\geq f(x)+\langle g,y-x\rangle ,\quad \forall y\in H. \]

The subdifferential of f at x, denoted by \(\partial f(x)\), is the set of all subgradients of f at x:

\[ \partial f(x)=\{g\in H: f(y)\geq f(x)+\langle g,y-x\rangle ,\ \forall y\in H\}. \]

The function \(f:H\rightarrow R\) is said to be weakly lower semicontinuous at a point x if, for every sequence \(\{x_{n}\}\) converging weakly to x,

\[ f(x)\leq \liminf_{n\to \infty }f(x_{n}). \]
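To make the subgradient definition concrete, the sketch below uses the hypothetical nonsmooth convex function \(c(x)=\Vert x\Vert _{1}-1\), for which \(\mathrm{sign}(x)\) (taking the value 0 at kinks) is one subgradient, and checks the subgradient inequality at many random points, including at a point where c is not differentiable.

```python
import numpy as np

def c(x):
    """Hypothetical convex, nonsmooth function c(x) = ||x||_1 - 1."""
    return np.abs(x).sum() - 1.0

def subgrad_c(x):
    """One subgradient of c at x: sign(x), using 0 at kinks (0 lies in [-1, 1])."""
    return np.sign(x)

rng = np.random.default_rng(2)
x = np.array([0.5, 0.0, -2.0])          # the zero entry is a kink of c
g = subgrad_c(x)
ys = rng.normal(scale=3.0, size=(1000, 3))
# Subgradient inequality: c(y) >= c(x) + <g, y - x> for every y.
holds = all(c(y) >= c(x) + g @ (y - x) - 1e-12 for y in ys)
print(holds)   # True
```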

Lemma 2.1

([23])

Let \(\{C_{i}\}_{i=1}^{t}\) and \(\{Q_{j}\}_{j=1}^{r}\) be nonempty, closed, and convex subsets of \(H_{1}\) and \(H_{2}\), respectively, let \(A:H_{1}\rightarrow H_{2}\) be a bounded linear operator, and let f be defined by (1.7). Then \(\nabla f\) is Lipschitz continuous with Lipschitz constant \(L=\sum_{i=1}^{t} l_{i}+\Vert A\Vert ^{2} \sum_{j=1}^{r} \lambda _{j}\).
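Lemma 2.1 can be sanity-checked numerically: for random pairs x, y, the ratio \(\Vert \nabla f(x)-\nabla f(y)\Vert /\Vert x-y\Vert \) should never exceed \(L=\sum l_{i}+\Vert A\Vert ^{2}\sum \lambda _{j}\). The sets (a box and a ball in \(R^{3}\), a box in \(R^{2}\)), the weights, and the random A below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
A = rng.standard_normal((2, 3))
l, lam = [0.3, 0.2], [0.5]            # positive weights summing to 1

def proj_ball(x, r=2.0):
    """Projection onto the ball of radius r centred at the origin."""
    n = np.linalg.norm(x)
    return x.copy() if n <= r else (r / n) * x

projC = [lambda x: np.clip(x, -1.0, 1.0), proj_ball]
projQ = [lambda y: np.clip(y, 0.0, 1.0)]

def grad_f(x):
    """Gradient of the proximity function f in (1.7)."""
    Ax = A @ x
    return (sum(w * (x - P(x)) for w, P in zip(l, projC))
            + sum(w * (A.T @ (Ax - P(Ax))) for w, P in zip(lam, projQ)))

L = sum(l) + np.linalg.norm(A, 2) ** 2 * sum(lam)   # constant from Lemma 2.1 (spectral norm of A)
worst = max(np.linalg.norm(grad_f(x) - grad_f(y)) / np.linalg.norm(x - y)
            for x, y in (rng.normal(scale=3.0, size=(2, 3)) for _ in range(2000)))
print(worst <= L)   # True
```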

Lemma 2.2

([19])

Suppose \(x,y\in H\). Then

(i) \(\Vert x+y\Vert ^{2} = \Vert x\Vert ^{2}+2\langle x,y \rangle +\Vert y\Vert ^{2}\);

(ii) \(\Vert x+y\Vert ^{2} \leq \Vert x\Vert ^{2}+2\langle y,x+y \rangle \);

(iii) \(\Vert \alpha x+\beta y\Vert ^{2} = \alpha (\alpha +\beta ) \Vert x\Vert ^{2}+\beta (\alpha +\beta )\Vert y\Vert ^{2}-\alpha \beta \Vert x-y\Vert ^{2}, \forall \alpha,\beta \in R\).
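Items (ii) and (iii) follow from (i) by direct expansion; the derivation below spells out the algebra (a routine check, not quoted from [19]).

```latex
\begin{aligned}
&\alpha(\alpha+\beta)\Vert x\Vert^{2}+\beta(\alpha+\beta)\Vert y\Vert^{2}-\alpha\beta\Vert x-y\Vert^{2}\\
&\quad=\alpha^{2}\Vert x\Vert^{2}+\alpha\beta\Vert x\Vert^{2}+\beta^{2}\Vert y\Vert^{2}+\alpha\beta\Vert y\Vert^{2}
-\alpha\beta\bigl(\Vert x\Vert^{2}-2\langle x,y\rangle+\Vert y\Vert^{2}\bigr)\\
&\quad=\alpha^{2}\Vert x\Vert^{2}+2\alpha\beta\langle x,y\rangle+\beta^{2}\Vert y\Vert^{2}
=\Vert \alpha x+\beta y\Vert^{2},\\[4pt]
&\Vert x\Vert^{2}+2\langle y,x+y\rangle-\Vert x+y\Vert^{2}
=\Vert x\Vert^{2}+2\langle x,y\rangle+2\Vert y\Vert^{2}-\Vert x\Vert^{2}-2\langle x,y\rangle-\Vert y\Vert^{2}
=\Vert y\Vert^{2}\geq 0.
\end{aligned}
```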

Lemma 2.3

([18])

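Suppose that the half-spaces \(C_{k}\) and \(Q_{k}\) are defined as in (1.4) and (1.5). Then, for \(x\in H_{1}\) and \(y\in H_{2}\), the projections onto them are given, respectively, by

\[ P_{C_{k}}(x)= \begin{cases} x-\frac{c(x_{k})+\langle \xi _{k},x-x_{k}\rangle }{\Vert \xi _{k}\Vert ^{2}}\xi _{k}, & \text{if } c(x_{k})+\langle \xi _{k},x-x_{k}\rangle >0,\\ x, & \text{otherwise}, \end{cases} \]

and

\[ P_{Q_{k}}(y)= \begin{cases} y-\frac{q(Ax_{k})+\langle \zeta _{k},y-Ax_{k}\rangle }{\Vert \zeta _{k}\Vert ^{2}}\zeta _{k}, & \text{if } q(Ax_{k})+\langle \zeta _{k},y-Ax_{k}\rangle >0,\\ y, & \text{otherwise}. \end{cases} \]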
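The closed-form projection in Lemma 2.3 is straightforward to implement. The sketch below handles \(C_{k}\); the projection onto \(Q_{k}\) is the same function called with y, \(q(Ax_{k})\), \(\zeta _{k}\), and \(Ax_{k}\) in place of x, \(c(x_{k})\), \(\xi _{k}\), and \(x_{k}\). The function name and the example function \(c(x)=\Vert x\Vert ^{2}-1\) are hypothetical.

```python
import numpy as np

def project_relaxed_halfspace(x, c_xk, xi_k, x_k):
    """Projection onto C_k = {z : c(x_k) + <xi_k, z - x_k> <= 0}.

    If xi_k = 0, C_k is either empty or the whole space; since C_k contains the
    nonempty set C, it is the whole space and x is returned unchanged.
    """
    if not np.any(xi_k):
        return x.copy()
    viol = c_xk + xi_k @ (x - x_k)
    return x - (max(0.0, viol) / (xi_k @ xi_k)) * xi_k

# Hypothetical example: c(x) = ||x||^2 - 1 linearised at x_k = (2, 0), where xi_k = 2 x_k = (4, 0)
# and c(x_k) = 3, so C_k = {z : 3 + 4 (z_1 - 2) <= 0} = {z : z_1 <= 1.25}.
x_k = np.array([2.0, 0.0])
xi_k = 2.0 * x_k
c_xk = x_k @ x_k - 1.0
p1 = project_relaxed_halfspace(np.array([3.0, 1.0]), c_xk, xi_k, x_k)   # expected (1.25, 1.0)
p2 = project_relaxed_halfspace(np.array([0.0, 5.0]), c_xk, xi_k, x_k)   # already in C_k: (0.0, 5.0)
print(p1, p2)
```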

3 The algorithm and convergence analysis

For \(n \geq 1\), define

\[ C_{i}^{n}=\{x\in H_{1}: c_{i}(x_{n})+\langle \xi _{i}^{n},x-x_{n}\rangle \leq 0\}, \tag{3.1} \]

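where \(\xi _{i}^{n}\in \partial {c_{i}(x_{n})}\) for \(i=1,2,\ldots,t\), and

\[ Q_{j}^{n}=\{y\in H_{2}: q_{j}(Ax_{n})+\langle \zeta _{j}^{n},y-Ax_{n}\rangle \leq 0\}, \tag{3.2} \]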

where \(\zeta _{j}^{n}\in \partial {q_{j}(Ax_{n})}\) for \(j=1,2,\ldots,r\). Clearly, \(C_{i}^{n}\ (i=1,2,\ldots,t)\) and \(Q_{j}^{n}\ (j=1,2,\ldots,r)\) are half-spaces, and, by the subgradient inequality, \(C_{i}^{n} \supset C_{i}\ (i=1,2,\ldots,t)\) and \(Q_{j}^{n}\supset Q_{j}\ (j=1,2,\ldots,r)\) for all \(n \geq 1\). We define

\[ f_{n}(x)=\frac{1}{2}\sum_{i=1}^{t} l_{i}\Vert x-P_{C_{i}^{n}}x\Vert ^{2}+\frac{1}{2}\sum_{j=1}^{r} \lambda _{j}\Vert Ax-P_{Q_{j}^{n}}Ax\Vert ^{2}, \tag{3.3} \]

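where \(C_{i}^{n}\ (i=1,2,\ldots,t)\) and \(Q_{j}^{n}\ (j=1,2,\ldots,r)\) are given by (3.1) and (3.2), respectively. Then

\[ \nabla f_{n}(x)=\sum_{i=1}^{t} l_{i}(x-P_{C_{i}^{n}}x)+\sum_{j=1}^{r} \lambda _{j}A^{*}(Ax-P_{Q_{j}^{n}}Ax), \tag{3.4} \]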

where \(A^{*}\) denotes the adjoint of A, and \(l_{i}\ (i=1,2,\ldots,t)\) and \(\lambda _{j}\ (j=1,2,\ldots,r)\) are positive constants such that \(\sum_{i=1}^{t} l_{i}+\sum_{j=1}^{r} \lambda _{j}=1\).
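The relaxed gradient \(\nabla f_{n}\) can be assembled directly from the functions \(c_{i}\), \(q_{j}\) and their subgradients at \(x_{n}\). The sketch below (hypothetical names and data) also illustrates a fact used in the proof of Lemma 3.1: for \(z\in S\) we have \(z\in C_{i}\subset C_{i}^{n}\) and \(Az\in Q_{j}\subset Q_{j}^{n}\), so \(\nabla f_{n}(z)=0\).

```python
import numpy as np

def halfspace_proj(x, a, b):
    """Projection onto {z : <a, z> <= b} (identity when a = 0)."""
    v = a @ x - b
    return x - (v / (a @ a)) * a if v > 0 and a @ a > 0 else x.copy()

def relaxed_gradient(xn, A, cs, dcs, qs, dqs, l, lam):
    """Return u -> grad f_n(u) for the half-spaces C_i^n, Q_j^n built at x_n.

    C_i^n = {z : c_i(x_n) + <xi_i, z - x_n> <= 0} is stored as (xi_i, <xi_i, x_n> - c_i(x_n));
    Q_j^n is stored analogously using A x_n and zeta_j.
    """
    Axn = A @ xn
    Cn = [(dc(xn), dc(xn) @ xn - c(xn)) for c, dc in zip(cs, dcs)]
    Qn = [(dq(Axn), dq(Axn) @ Axn - q(Axn)) for q, dq in zip(qs, dqs)]

    def grad(u):
        Au = A @ u
        return (sum(w * (u - halfspace_proj(u, a, b)) for w, (a, b) in zip(l, Cn))
                + sum(w * (A.T @ (Au - halfspace_proj(Au, a, b))) for w, (a, b) in zip(lam, Qn)))
    return grad

# Hypothetical data: C_1 = disc of radius 2, C_2 = {x : x_1 + x_2 <= 1}, Q_1 = disc of radius 3.
A = np.array([[1.0, 2.0], [0.0, 1.0]])
cs = [lambda x: x @ x - 4.0, lambda x: x[0] + x[1] - 1.0]
dcs = [lambda x: 2.0 * x, lambda x: np.ones(2)]
qs, dqs = [lambda y: y @ y - 9.0], [lambda y: 2.0 * y]
xn = np.array([3.0, -1.0])
grad_fn = relaxed_gradient(xn, A, cs, dcs, qs, dqs, [0.25, 0.25], [0.5])

z = np.zeros(2)          # z = 0 solves this MSSFP, so grad f_n(z) = 0
print(grad_fn(z))        # [0. 0.]
print(grad_fn(xn))       # nonzero, since x_n violates C_1 and C_2
```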

Now, we propose an algorithm for solving the multiple-sets split feasibility problem (1.1), where \(C_{i}\ (i=1,2,\ldots,t)\) and \(Q_{j}\ (j=1,2,\ldots,r)\) are given by (1.6).

Algorithm 3.1

(The inertial relaxed algorithm with Armijo-type line search)

Step 1: Given \(\gamma > 0\), \(l \in (0,1)\), \(\mu \in (0,1)\), select the parameter \(\beta _{n}\) such that

Select starting points \(x_{0}, x_{1} \in H_{1}\) and set \(n = 1\).

Step 2: Given the iterates \(x_{n}\) and \(x_{n-1}\), compute

Step 3: Calculate

where \(\tau _{n} = \gamma l^{m_{n}}\) and \(m_{n}\) is the smallest nonnegative integer such that

Step 4: Compute the new iterate point

Step 5: Set \(n \leftarrow n+1\), and go to Step 2.
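The following Python sketch puts the pieces of Algorithm 3.1 together. Because the displayed formulas of Steps 1–4 are not reproduced in this text, it rests on assumptions that should be checked against the original: the alternating inertial step \(w_{n}=x_{n}+\beta _{n}(x_{n}-x_{n-1})\) for odd n and \(w_{n}=x_{n}\) for even n (as in [19]), the trial point \(z_{n}=P_{\Omega }(w_{n}-\tau _{n}\nabla f_{n}(w_{n}))\), the Armijo-type test \(\tau _{n}\Vert \nabla f_{n}(w_{n})-\nabla f_{n}(z_{n})\Vert \leq \mu \Vert w_{n}-z_{n}\Vert \), and the extragradient-type update \(x_{n+1}=P_{\Omega }(w_{n}-\tau _{n}\nabla f_{n}(z_{n}))\). The stopping rule, the test instance, and all names are hypothetical.

```python
import numpy as np

def halfspace_proj(x, a, b):
    """Projection onto {z : <a, z> <= b} (identity when a = 0)."""
    v = a @ x - b
    return x - (v / (a @ a)) * a if v > 0 and a @ a > 0 else x.copy()

def algorithm_3_1_sketch(x0, x1, A, cs, dcs, qs, dqs, l, lam, proj_omega,
                         gamma=2.0, ell=0.5, mu=0.95, beta=lambda n: 0.02,
                         iters=5000, tol=1e-8):
    x_prev, x = np.asarray(x0, dtype=float), np.asarray(x1, dtype=float)
    for n in range(1, iters + 1):
        # Relaxed half-spaces C_i^n, Q_j^n at x_n, stored as (a, b) meaning <a, z> <= b.
        Ax = A @ x
        Cn = [(dc(x), dc(x) @ x - c(x)) for c, dc in zip(cs, dcs)]
        Qn = [(dq(Ax), dq(Ax) @ Ax - q(Ax)) for q, dq in zip(qs, dqs)]

        def grad(u):
            """grad f_n(u) for the current relaxed sets."""
            Au = A @ u
            return (sum(w * (u - halfspace_proj(u, a, b)) for w, (a, b) in zip(l, Cn))
                    + sum(w * (A.T @ (Au - halfspace_proj(Au, a, b))) for w, (a, b) in zip(lam, Qn)))

        # Step 2 (assumed alternating inertia): extrapolate on odd n only.
        w_n = x + beta(n) * (x - x_prev) if n % 2 == 1 else x.copy()
        g_w = grad(w_n)
        # Step 3: Armijo-type backtracking with tau_n = gamma * ell**m_n.
        tau = gamma
        while True:
            z = proj_omega(w_n - tau * g_w)
            if tau * np.linalg.norm(g_w - grad(z)) <= mu * np.linalg.norm(w_n - z):
                break
            tau *= ell
        # Step 4 (assumed extragradient-type update).
        x_prev, x = x, proj_omega(w_n - tau * grad(z))
        # Hypothetical stopping rule: largest constraint violation at the new iterate.
        if max([c(x) for c in cs] + [q(A @ x) for q in qs]) <= tol:
            break
    return x

# Hypothetical instance: C_1 = unit disc, Q_1 = disc of radius 1 centred at (1, 0), A = diag(1, 2).
A = np.diag([1.0, 2.0])
e1 = np.array([1.0, 0.0])
cs, dcs = [lambda x: x @ x - 1.0], [lambda x: 2.0 * x]
qs, dqs = [lambda y: (y - e1) @ (y - e1) - 1.0], [lambda y: 2.0 * (y - e1)]
omega = lambda x: np.clip(x, -10.0, 10.0)
x = algorithm_3_1_sketch([-3.0, 3.0], [-2.5, 2.0], A, cs, dcs, qs, dqs, [0.5], [0.5], omega)
print(cs[0](x), qs[0](A @ x))   # both should be (approximately) nonpositive
```

Here \(\beta _{n}=0.02\) is chosen below \(\frac{1-\mu }{1+\mu }\approx 0.0256\) for \(\mu =0.95\). Since \(\nabla f_{n}\) is Lipschitz continuous with the constant L of Lemma 2.1, the backtracking loop stops as soon as \(\tau \leq \mu /L\); hence, under these assumptions, \(\tau _{n}\geq \min \{\gamma ,\mu l/L\}\) for all n.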

In the following, we prove the convergence of Algorithm 3.1.

Lemma 3.1

Suppose that the solution set of the MSSFP is nonempty, that is, \(S \neq \emptyset \), and let {\(x_{n}\)} be any sequence generated by Algorithm 3.1. Then {\(x_{2n}\)} is Fejér monotone with respect to S, i.e., \(\Vert x_{2n+2}-z \Vert \leq \Vert x_{2n}-z \Vert \) for all \(z \in S\).

Proof

Choose a point z in S. We have

Since \(z_{2n+1} \in \Omega \), we have

As a result,

Since \({I-P_{C_{i}^{2n+1}}}\) and \({I-P_{Q_{j}^{2n+1}}}\) are firmly nonexpansive and \(\nabla f_{2n+1}(z)=0\) (because \(z\in C_{i}\subset C_{i}^{2n+1}\) and \(Az\in Q_{j}\subset Q_{j}^{2n+1}\)), we have

Putting (3.10), (3.11), (3.12) into (3.9), one has

Arguing as in the derivation of (3.13), we obtain

According to (3.6), we obtain

Substituting (3.14) and (3.15) into (3.13), one has

Note that

Combining (3.16) and (3.17), we have

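where \(\mu \in (0,1)\) and \(\beta _{2n+1}\in [0,\frac{1-\mu }{1+\mu }]\). Hence \(1-\mu ^{2}>0\), \(1+\beta _{2n+1}>0\), \(\frac{2\mu l}{\Vert A \Vert ^{2}}>0\), and

\[ (1+\beta _{2n+1})(1-\mu ^{2})-\beta _{2n+1}(1+\beta _{2n+1})(1+\mu )^{2}=(1+\beta _{2n+1})(1+\mu )\bigl[(1-\mu )-\beta _{2n+1}(1+\mu )\bigr]\geq 0, \]

with strict inequality whenever \(\beta _{2n+1}<\frac{1-\mu }{1+\mu }\).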

Hence,

□

Theorem 3.1

Suppose that \(S \neq \emptyset \) and {\(x_{n}\)} is any sequence generated by Algorithm 3.1. Then {\(x_{n}\)} converges weakly to a point in S.

Proof

By Lemma 3.1, \(\lim_{n \to \infty }\Vert x_{2n}-z \Vert \) exists for every \(z\in S\), so {\(x_{2n}\)} is bounded. In addition, from (3.18), we conclude that

That is to say,

and

Moreover,

Since \({I-P_{C_{i}^{2n}}}\) and \({I-P_{Q_{j}^{2n}}}\) are nonexpansive, we have

and

According to (3.19) and (3.21), from (3.22), we conclude that

According to (3.20) and (3.21), from (3.23), we conclude that

Arguing as for (3.24) and (3.25), we obtain

Since each \(\partial {c_{i}}\ (i=1,2,\ldots,t)\) is bounded on bounded sets and {\(x_{2n}\)} is bounded, there is a constant \(\xi > 0\) such that \(\Vert \xi _{i}^{2n}\Vert \leq \xi \) for all n and \(i=1,2,\ldots,t\). Since \(P_{C_{i}^{2n}}x_{2n}\in C_{i}^{2n}\), the definition of \(C_{i}^{2n}\) and (3.24) give

Since {\(x_{2n}\)} is bounded, there exists a subsequence \(\{x_{2n_{k}}\}\) of \(\{x_{2n}\}\) such that \(x_{2n_{k}}\rightharpoonup x^{*}\) for some \(x^{*}\in H_{1}\). Since each \(c_{i}\ (i=1,2,\ldots,t)\) is weakly lower semicontinuous, (3.27) yields

So \(x^{*} \in C_{i}\) for \(i=1,2,\ldots,t\).

Similarly, since each \(\partial {q_{j}}\ (j=1,2,\ldots,r)\) is bounded on bounded sets and {\(Ax_{2n}\)} is bounded, there is a constant \(\zeta > 0\) such that \(\Vert \zeta _{j}^{2n}\Vert \leq \zeta \) for all n and \(j=1,2,\ldots,r\). Since \(P_{Q_{j}^{2n}}Ax_{2n}\in Q_{j}^{2n}\), the definition of \(Q_{j}^{2n}\) and (3.25) give

Since A is a bounded linear operator, \(Ax_{2n_{k}}\rightharpoonup Ax^{*}\); as each \(q_{j}\ (j=1,2,\ldots,r)\) is weakly lower semicontinuous, (3.29) yields

So \(Ax^{*} \in Q_{j}\) for \(j=1,2,\ldots,r\).

Thus, \(x^{*}\in S\).

We now show that the whole sequence {\(x_{2n}\)} converges weakly to \(x^{*}\), and then that {\(x_{2n+1}\)} does as well. Since \(\lim_{n \to \infty }\Vert x_{2n}-z\Vert \) exists for every \(z\in S\) and, by the argument above, every weak cluster point of {\(x_{2n}\)} belongs to S, Opial's lemma implies that {\(x_{2n}\)} converges weakly to \(x^{*}\); in particular, the weak limit \(x^{*}\) is unique.

Arguing as in (3.9)–(3.13), we obtain

Thus,

Consequently,

To sum up,

□

4 Numerical examples

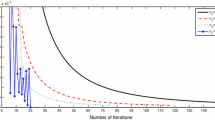

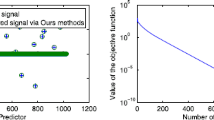

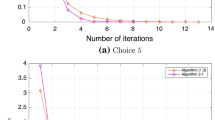

In this section, we report numerical results for Example 4.1. All codes were written in MATLAB R2012a, and all experiments were run on a Lenovo ThinkPad personal computer with an Intel(R) Core(TM) i7-3517U CPU (2.40 GHz) and 8.00 GB of RAM. First, we run Algorithm 3.1 with several different choices of \(x_{0}\) and \(x_{1}\); the results are reported in Table 1 and Fig. 1. Second, we compare Algorithm 3.1 of this paper with Algorithm 3.1 of [23]. Since the numerical results for Example 1 in [23] show that Algorithm 3.1 of [23] performs better than the algorithm of [13], we use it as the benchmark; these results are reported in Table 2. Finally, we examine the stability of the iteration number of our Algorithm 3.1 in comparison with Algorithm 3.1 of [23]; these results are shown in Figs. 2–4.

Error history in Example 4.1

Example 4.1

([13])

Let \(H_{1}=H_{2}=R^{3}\), \(t=r=2\), and \(l_{1}=l_{2}=\lambda _{1}=\lambda _{2}=\frac{1}{4}\). We take

and

The problem is to find \(x^{*}\in C_{1}\cap C_{2}\) such that \(Ax^{*}\in Q_{1}\cap Q_{2}\).

First, we set \(\gamma =2\), \(l=0.5\), \(\mu =0.95\), and \(\beta _{n}=\frac{1}{n+1}\), and study the number of iterations required for convergence from different initial points. The stopping criterion is

We consider the following choices of \(x_{0}\) and \(x_{1}\).

- Option 1: \(x_{0}=(1,1,5)^{T}\) and \(x_{1}=(5,-3,2)^{T}\);

- Option 2: \(x_{0}=(-4,3,-2)^{T}\) and \(x_{1}=(-5,2,1)^{T}\);

- Option 3: \(x_{0}=(7,5,1)^{T}\) and \(x_{1}=(7,-3,-1)^{T}\);

- Option 4: \(x_{0}=(1,-6,-4)^{T}\) and \(x_{1}=(-4,1,6)^{T}\);

- Option 5: \(x_{0}=(-4,-2,-3)^{T}\) and \(x_{1}=(-5,-2,-3)^{T}\);

- Option 6: \(x_{0}=(-5.34,-7.36,-3.21)^{T}\) and \(x_{1}=(0.23,-2.13,3.56)^{T}\);

- Option 7: \(x_{0}=(-2.345,2.431,1.573)^{T}\) and \(x_{1}=(1.235,-1.756,-4.234)^{T}\);

- Option 8: \(x_{0}=(5.32,2.33,7.75)^{T}\) and \(x_{1}=(3.23,3.75,-3.86)^{T}\).

Table 1 reports the iteration number and running time of Algorithm 3.1 for each choice of \(x_{0}\) and \(x_{1}\).

Figure 1 shows the behavior of the error \(E_{n}\) of Algorithm 3.1 when \(x_{0}\) and \(x_{1}\) are chosen as in Options 4 and 5; in both cases, \(E_{n}\) converges gradually. The errors for the other options behave similarly and are not shown here.

We now compare Algorithm 3.1 of this paper with Algorithm 3.1 of [23]; the results are shown in Table 2. Furthermore, to test the stability of the iteration number, we run Algorithm 3.1 of this paper from 500 randomly selected initial points, for instance,

and the results are shown in Figs. 2(a), 3(a), and 4(a), respectively.

Similarly, we run Algorithm 3.1 of [23] from 500 randomly selected initial points. For all \(n\in N\), we set \(\alpha _{n}=\frac{1}{n+1}\), \(\rho _{n}=3.95\), \(\omega _{n}=\frac{1}{{1+n}^{1.2}}\), and \(\beta _{n}=\beta =0.5\), for instance,

and the results are shown in Figs. 2(b), 3(b), and 4(b), respectively.

From Tables 1–2 and Figs. 1–4, we draw the following conclusions.

1. Algorithm 3.1 of this paper is efficient for all the tested choices of initial points: it converges quickly and requires relatively few iterations.

2. For all choices of \(x_{0}\) and \(x_{1}\), there is no significant difference in CPU time or iteration number. This suggests, as a preliminary observation, that the choice of \(x_{0}\) and \(x_{1}\) has little influence on the convergence of the algorithm.

3. As Table 2 shows, for the tested choices of \(x_{0}\) and \(x_{1}\), our Algorithm 3.1 clearly outperforms Algorithm 3.1 of [23].

4. Figures 2–4 show that the iteration number of our Algorithm 3.1 is stable across initial points and lower than that of Algorithm 3.1 of [23]. For example, in Fig. 4, the iteration number of our Algorithm 3.1 stays at about 50, whereas that of Algorithm 3.1 of [23] stays at about 150.

5 Conclusions

In this paper, we proposed an inertial relaxed CQ algorithm with an Armijo-type line search for solving the convex multiple-sets split feasibility problem and proved that the generated sequence converges weakly to a solution. Our results generalize and extend some existing related results. Moreover, preliminary numerical results show that, in some cases, the proposed algorithm outperforms some existing relaxed CQ algorithms for the convex multiple-sets split feasibility problem.

Availability of data and materials

All data generated or analysed during this study are included in this manuscript.

References

[1] Abass, H.A., Aremu, K.O., Jolaoso, L.O., Mewomo, O.T.: An inertial forward-backward splitting method for approximating solutions of certain optimization problems. J. Nonlinear Funct. Anal. 2020, Article ID 6 (2020)

[2] Bot, R.I., Csetnek, E.R.: An inertial alternating direction method of multipliers. Minimax Theory Appl. 1, 29–49 (2016)

[3] Bot, R.I., Csetnek, E.R.: An inertial forward-backward-forward primal-dual splitting algorithm for solving monotone inclusion problems. Numer. Algorithms 71, 519–540 (2016)

[4] Bot, R.I., Csetnek, E.R., Hendrich, C.: Inertial Douglas–Rachford splitting for monotone inclusion. Appl. Math. Comput. 256, 472–487 (2015)

[5] Censor, Y., Elfving, T.: A multiprojection algorithm using Bregman projection in product space. Numer. Algorithms 8, 221–239 (1994)

[6] Censor, Y., Elfving, T., Kopf, N., Bortfeld, T.: The multiple-sets split feasibility problem and its applications for inverse problem. Inverse Probl. 21, 2071–2084 (2005)

[7] Chuang, C.S.: Hybrid inertial proximal algorithm for the split variational inclusion problem in Hilbert spaces with applications. Optimization 66, 777–792 (2017)

[8] Dang, Y., Gao, Y.: The strong convergence of a KM-CQ-like algorithm for a split feasibility problem. Inverse Probl. 27, 015007 (2011)

[9] Dang, Y.Z., Sun, J., Xu, H.K.: Inertial accelerated algorithms for solving a split feasibility problem. J. Ind. Manag. Optim. 13, 1383–1394 (2017)

[10] Dong, Q.L., Yuan, H.B., Cho, Y.J., Rassias, T.: Modified inertial Mann algorithm and inertial CQ algorithm for nonexpansive mappings. Optim. Lett. 12, 87–102 (2018)

[11] Fukushima, M.: A relaxed projection method for variational inequalities. Math. Program. 35, 58–70 (1986)

[12] Guessab, A., Driouch, A., Nouisser, O.: A globally convergent modified version of the method of moving asymptotes. Appl. Anal. Discrete Math. 13, 905–917 (2019)

[13] He, S., Zhao, Z., Luo, B.: A relaxed self-adaptive CQ algorithm for the multiple-sets split feasibility problem. Optimization 64, 1907–1918 (2015)

[14] Lorenz, D.A., Pock, T.: An inertial forward-backward algorithm for monotone inclusions. J. Math. Imaging Vis. 51, 311–325 (2015)

[15] Maingé, P.E.: Inertial iterative process for fixed points of certain quasi-nonexpansive mappings. Set-Valued Anal. 15, 67–79 (2007)

[16] Mostafa, B., Thierry, E., Guessab, A.: A moving asymptotes algorithm using new local convex approximation methods with explicit solutions. Electron. Trans. Numer. Anal. 43, 21–44 (2014)

[17] Nesterov, Y.: A method for solving the convex programming problem with convergence rate O(1/k^2). Dokl. Akad. Nauk SSSR 269, 543–547 (1983)

[18] Polyak, B.T.: Minimization of unsmooth functionals. USSR Comput. Math. Math. Phys. 9, 14–29 (1969)

[19] Shehu, Y., Gibali, A.: New inertial relaxed method for solving split feasibilities. Optim. Lett. 5, 1–18 (2020)

[20] Shehu, Y., Iyiola, O.S.: Convergence analysis for the proximal split feasibility problem using an inertial extrapolation term method. J. Fixed Point Theory Appl. 19, 2483–2510 (2017)

[21] Shehu, Y., Vuong, P.T., Cholamjiak, P.: A self-adaptive projection method with an inertial technique for split feasibility problems in Banach spaces with applications to image restoration problems. J. Fixed Point Theory Appl. 21, 1–24 (2019)

[22] Suantai, S., Pholasa, N., Cholamjiak, P.: The modified inertial relaxed CQ algorithm for solving the split feasibility problems. J. Ind. Manag. Optim. 14, 1595–1615 (2018)

[23] Suantai, S., Pholasa, N., Cholamjiak, P.: Relaxed CQ algorithms involving the inertial technique for multiple-sets split feasibility problems. Rev. R. Acad. Cienc. Exactas Fís. Nat., Ser. A Mat. 113, 1081–1099 (2019)

[24] Wang, F.: A new algorithm for solving the multiple-sets split feasibility problem in Banach spaces. Numer. Funct. Anal. Optim. 35, 99–110 (2014)

[25] Xu, H.K.: A variable Krasnosel’skii–Mann algorithm and the multiple-set split feasibility problem. Inverse Probl. 22, 2021–2034 (2006)

[26] Yang, Q.: The relaxed CQ algorithm for solving the split feasibility problem. Inverse Probl. 20, 1261–1266 (2004)

[27] Yao, Y., Qin, X., Yao, J.C.: Convergence analysis of an inertial iterate for the proximal split feasibility problem. J. Nonlinear Convex Anal. 20, 489–498 (2019)

[28] Zhang, W., Han, D., Li, Z.: A self-adaptive projection method for solving the multiple-sets split feasibility problem. Inverse Probl. 25, 115001 (2009)

[29] Zhao, J., Yang, Q.: Self-adaptive projection methods for the multiple-sets split feasibility problem. Inverse Probl. 27, 035009 (2011)

[30] Zhao, J., Zhang, Y., Yang, Q.: Modified projection methods for the split feasibility problem and multiple sets feasibility problem. Appl. Math. Comput. 219, 1644–1653 (2012)

Acknowledgements

We thank the anonymous referees and the editor for their constructive comments and suggestions, which have improved this manuscript.

Funding

This manuscript is supported by the Natural Science Foundation of China (Grant No. 11401438) and Shandong Provincial Natural Science Foundation, China (Grant No. ZR2020MA027, ZR2019MA022).

Author information


Contributions

All authors contributed equally to the writing of this paper. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests regarding the present manuscript.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chen, W., Li, M. The inertial relaxed algorithm with Armijo-type line search for solving multiple-sets split feasibility problem. J Inequal Appl 2021, 190 (2021). https://doi.org/10.1186/s13660-021-02725-5


DOI: https://doi.org/10.1186/s13660-021-02725-5