Abstract

We describe a new two-step iterative method for solving the absolute value equations \(Ax-|x|=b\), which is an NP-hard problem. This method is globally convergent under suitable assumptions. Numerical examples are given to demonstrate the effectiveness of the method.

Similar content being viewed by others

1 Introduction

We consider a kind of important absolute value equations (AVEs):

where \(A\in R^{n\times n} \), \(b\in R^{n}\), and \(\vert \cdot \vert \) indicates absolute value. Another general form of the AVEs (1):

where \(B\in R^{n\times n}\) was introduced and investigated in [1]. The unique solvability of AVEs (2) was given in [2]. The algorithm which can compute all solutions of AVEs (2) (\(A,B\) square) with several steps, was proposed in [3]. Using the relationship between the absolute value equations and second order cone, a generalized Newton algorithm was introduced in [4] for solving AVEs (2). When the matrix B is reversible, AVEs (2) can be converted into AVEs (1), so some scholars have begun to study AVEs (1) instead of AVEs (2). AVEs (1) was investigated in theoretical detail in [5], when the smallest singular value of A is not less than 1, AVEs (1) is equivalent to the generalized LCP, the standard LCP and the bilinear program, based on the LCP, Mangasarian introduced the sufficient conditions of the existence of unique solution, \(2^{n}\) solutions and nonnegative solutions and the nonexistence of solutions. Rohn [6] proposed a new sufficient condition for unique solvability which is superior to that presented by Mangasarian and Meyer in [5], conflating these two sufficient conditions to a new one, and using it. An iterative method based on minimization technique for solving AVEs (1) was proposed in [7]; compared with the linear complementary problem, this method is simple in structure and easy to implement. Iqbal et al. [8] showed the Levenberg–Marquardt method which combines the advantages of both steepest descent method and Gauss–Newton method for solving AVEs (1). When the AVEs (1) has multiple solutions, Hossein el at. [9] shown that the AVEs (1) is equivalent to a bilinear programming problem, they solved AVEs (1) via the principle of simulated annealing, and then found the minimum norm solution of the AVEs (1). The sparse solution of the AVEs (1) with multiple solutions was found in [10] by an optimization problem. Yong proposed a hybrid evolutionary algorithm which integrates biogeography and differential evolution for solving AVEs (1) in [11]. Abdallah et al. [12] converted AVEs (1) into a horizontal linear complementarity problem, and solved it by a smoothing technique, meanwhile, this paper can provide some error estimation for the solutions of AVEs (1). A modified generalized Newton method was proposed in [13], this method has second order convergence and the convergence conditions are better than existing methods, but only as regards local convergence. Mangasarian proposed some new sufficient solvability and unsolvability conditions for AVEs (1) in [14], and focused on theoretical research.

According to the advantages and disadvantages of the above algorithms, we will present a two-step iterative method for effectively solving AVEs (1). Firstly, we present a new iterative formula, which absorbs the advantages of both the classic Newton and the two-step Traub iterative formulas, it has the characteristics of fast iteration and good convergence. In addition, we incorporate the good idea of solving 1-dimensional nonlinear equations to our iterative formula. Then a new algorithm for solving AVEs (1) is designed, we can prove that this method can converge to the global optimal solution of AVEs (1). Finally, numerical results and comparison with the classic Newton and two-step Traub iterative formulas show that our method converges faster and the solution accuracy is higher.

This article is arranged as follows. Section 2 is the preliminary about AVEs (1). We give a new two-step iterative method and prove the global convergence of the proposed method in Sect. 3. In Sect. 4 we present the numerical experiments. Some concluding remarks to end the paper in Sect. 5.

2 Preliminaries

We now describe our notations and some background materials. Let I and e be a unit matrix and a unit vector, respectively. \(\langle x,y \rangle \) denotes the inner product of vectors x and y (\(x, y \in R^{n} \)). \(\Vert x\Vert \) is the two-norm \((x^{T} x)^{\frac{1}{2}}\), while \(\vert x\vert \) denotes the vector whose ith component is \(\vert x_{i}\vert \). \(\operatorname{sign}(x)\) also can be seen as a vector with components equal to −1, 0 or 1 depending on whether the corresponding component of x is negative, zero or positive. In addition, \(\operatorname{diag}(\operatorname{sign}(x))\) is a diagonal matrix whose diagonal elements are \(\operatorname{sign}(x)\). A generalized Jacobian \(\partial \vert x\vert \) is given by the following diagonal matrix \(D(x)\) in [11, 15]:

We define a function \(f(x)\) as follows:

A generalized Jacobian of \(f(x)\) at x is

where \(D(x)\) is defined by Eq. (3).

For solving AVEs (1), Mangasarian [16] presented a new Newton iteration formula which is given by

By calculating 100 randomly generated 1000-dimensional AVEs (1), Mangasarian proved that this Newton iteration is an effective method, when the singular values of A are not less than 1. The vector iterations \(\{x^{k}\}\) linearly converge to the true solution of AVEs (1),

Haghani [17] extended the well-known two-step Traub method and solved AVEs (1), the iterative formula is given in Eq. (5):

Although the computation time of the iterative formula (4) is greater than that of (5), the experiment’s results obtained by the iterative formula (4) are better than that of (5).

Some iterative methods with higher order convergence and high precision for solving nonlinear equations \(g(x)=0\), where \(g:D\subset R\rightarrow R\), are in [18], which give us some inspiration and motivate us to extend those methods to the n-dimensional problem, especially the high-dimensional absolute value equations. Combining with the above-mentioned methods, we designed the following effective methods.

3 Algorithm and convergence

In this section, we introduce a new two-step iterative method for solving AVEs (1), the iterative formula is given as follows:

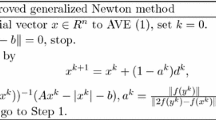

Based on the iterative formula (6), we design Algorithm 3.1 for solving AVEs (1).

Algorithm 3.1

-

Step 1.

Randomly generated an initial vector \(x_{k}\in R^{n}\) to AVEs (1), set \(k=0\).

-

Step 2.

Compute \(x^{k+1}\) by (6).

-

Step 3.

If \(\Vert Ax^{k+1}-\vert x^{k+1}\vert -b\Vert =0\), stop, otherwise go to Step 4.

-

Step 4.

Set \(k:=k+1\), go to Step 2.

Next, we prove the global convergence of Algorithm 3.1.

Lemma 3.1

The singular values of the matrix \(A\in R^{n\times n}\) exceed 1 if and only if the minimum eigenvalue of \(A'A\) exceeds 1.

Proof

See [5]. □

Lemma 3.2

If all the singular values of \(A\in R^{n\times n}\) exceed 1 for the method (6), then, for any diagonal matrix D, whose diagonal elements \(D_{ii}=+1, 0, -1\), \(i=1, 2,\ldots, n\). \((A-D)^{-1}\) exists.

Proof

If \((A-D)\) is singular, then \((A-D)x=0\), for some \(x \neq 0\). Now, according to this and Lemma 3.1, we have the following contradiction:

Hence, \((A-D)\) is nonsingular, and the sequence \(\{x^{k}\}\) produced by (6) is well defined for any initial vector \(x_{0} \in R ^{n}\). This proof is similar to [16]. □

Lemma 3.3

(Lipschitz continuity of the absolute value)

Let the vectors \(x, y \in R^{n}\), then

Proof

The proof follows the lines of [16].

Let \(d^{k}_{1}=(A-D(x^{k}))^{-1}f(x^{k})\), \(d^{k}_{2}=-(A-D(x^{k}))^{-1}(f(y ^{k})-f(x^{k}))\), then the new two-step iteration (6) can be written in a simple form:

□

Lemma 3.4

If the singular values of symmetric matrix \(A\in R^{n\times n}\) exceed 1, then the direction \(d^{k}_{2}\) of (9) is a descent direction for the objective function \(F(x)\), where \(F(x)=\frac{1}{2} \Vert f(x)\Vert ^{2}\).

Proof

Since \(f(x)=Ax-\vert x\vert -b\), \(f'(x)=\partial f(x)=A-D(x)\), \((A-D(x))^{-1}\) exists for any diagonal matrix D, whose diagonal elements \(D_{ii}=+1, 0, -1\), \(i=1, 2,\ldots, n\), and \((f'(x))^{T}=f'(x)\), \(F(x)=\frac{1}{2}\Vert f(x)\Vert ^{2}\), \(F'(x)=f'(x)f(x)\).

So, for \(\forall k \in Z\),

Thus, \(d^{k}_{1}\) is not a descent direction of \(F(x)\), we have \(\Vert f(y^{k})\Vert >\Vert f(x^{k})\Vert \).

Also,

where the last inequality holds according to \(\Vert f(y^{k})\Vert > \Vert f(x ^{k})\Vert \), \(k=0, 1, 2,\ldots \) . Hence \(d^{k}_{2}\) is a descent direction of \(F(x)\), then \(\Vert f(x^{k})\Vert \rightarrow 0\), as \(k\rightarrow \infty \). □

Theorem 3.1

(Global convergence)

If the norm of the symmetric matrix A exists, then the norm of \(A-D\) is existent for any diagonal matrix D whose diagonal elements are ±1 or o. Consequently, the sequence \(\{x^{k}\}\) is a Cauchy series, thus \(\{x^{k}\}\) converges to the unique solution x̄ of AVEs (1).

Proof

For \(\forall k \in Z\), \(f(x^{k})=Ax^{k}-\vert x^{k}\vert -b\), \(f(y ^{k})=Ay^{k}-\vert y^{k}\vert -b\).

So

Then, for \(\forall m \in Z\),

So, \(\Vert f(x^{k})\Vert \rightarrow 0\), as \(k \rightarrow \infty \), we get \(\Vert x^{k+m}-x^{k}\Vert \rightarrow 0\), as \(k \rightarrow \infty \). Consequently, \(\{x^{k}\}\) is a Cauchy series, and it converges to the unique solution of AVEs (1). □

4 Numerical results

In this section we consider some examples to illustrate the feasibility and effectiveness of Algorithm 3.1. All the experiments are performed by Matlab R2010a. We compare the proposed method (TSI) with the generalized Newton method (4) (GNM) and the generalized Traub method (5) (GTM).

Example 1

Let \(A=(a_{ij})_{n\times n}\), each element in A is given as follows:

Let \(b=(A-I)e\), the minimum singular value of each A exceeds 1.

Example 2

We choose a random matrix A from a uniform distribution on \([-10,10]\) and a random vector x from a uniform distribution on \([-2,2]\). All the information are generated by the following MATLAB procedure:

In order to guarantee that the minimum singular value of A is greater than 1, we first calculate the minimum singular value: \(\sigma _{\min }(A)\), then adjust A by \(\sigma _{\min }(A)\) multiplied by a random number \(\gamma \in [1,2]\).

Algorithm 3.1 is used to solve Example 1 and 2; Tables 1 and 2 record the experiment results.

In Tables 1 and 2, Dim, K, ACC and T denote the dimension of the problem, the number of iterations, \(\Vert Ax^{k}-\vert x^{k}\vert -b\Vert _{2}\) and time(s), respectively. It is clear from Tables 1 and 2 that the new two-step iterative method is very effective in solving absolute value equations, especially the high dimension problem.

Figures 1 and 2 show the convergence curves of three algorithms for solving Examples 1 and 2. For Example 1, We find that the convergence of the TSI is the best among three methods, obviously. For Example 2, the convergence of the TSI is litter better than GTM, they are both better than GNM. So Algorithm 3.1 is superior in convergence and the quality of solution for solving AVEs (1).

Comparison of GNM, GTM and TSI for Example 1 with \(n=1000\)

Comparison of GNM, GTM and TSI for Example 2 with \(n=1000\)

5 Conclusions

In this paper, we propose a new two-step iterative method for solving non-differentiable and NP-hard absolute value equations \(Ax-\vert x\vert =b\), when the minimum singular value of A is greater than 1. Compared with the existing methods GNM and GTM, our new method has some nice convergence properties and better calculation consequences. In the future, we have the confidence to continue an in-depth study.

References

Rohn, J., Hooshyarbakhsh, V., Farhadsefat, R.: An iterative method for solving absolute value equations and sufficient conditions for unique solvability. Optim. Lett. 8(1), 35–44 (2014)

Rohn, J.: On unique solvability of the absolute value equation. Optim. Lett. 3(4), 603–606 (2009)

Rohn, J.: An algorithm for computing all solutions of an absolute value equation. Optim. Lett. 6(5), 851–856 (2012)

Hu, S.L., Huang, Z.H., Zhang, Q.: A generalized Newton method for absolute value equations associated with second order cones. J. Comput. Appl. Math. 235(5), 1490–1501 (2011)

Mangasarian, O.L., Meyer, R.R.: Absolute value equations. Linear Algebra Appl. 419(2), 359–367 (2006)

Rohn, J., Hooshyarbakhsh, V., Farhadsefat, R.: An iterative method for solving absolute value equations and sufficient conditions for unique solvability. Optim. Lett. 8(1), 35–44 (2014)

Noor, M.A., Iqbal, J., Noor, K.I., et al.: On an iterative method for solving absolute value equations. Optim. Lett. 6(5), 1027–1033 (2012)

Iqbal, J., Iqbal, A., Arif, M.: Levenberg–Marquardt method for solving systems of absolute value equations. J. Comput. Appl. Math. 282(10), 134–138 (2015)

Moosaei, H., Ketabchi, S., Jafari, H.: Minimum norm solution of the absolute value equations via simulated annealing algorithm. Afr. Math. 26(7–8), 1221–1228 (2015)

Zhang, M., Huang, Z.H., Li, Y.F.: The sparsest solution to the system of absolute value equations. J. Oper. Res. Soc. China 3(1), 31–51 (2015)

Yong, L.Q.: Hybrid differential evolution with biogeography-based optimization for absolute value equation. J. Inf. Comput. Sci. 10(8), 2417–2428 (2013)

Abdallah, L., Haddou, M., Migot, T.: Solving absolute value equation using complementarity and smoothing functions. J. Comput. Appl. Math. 327(1), 196–207 (2018)

Zainali, N., Lotfi, T.: On developing a stable and quadratic convergent method for solving absolute value equation. J. Comput. Appl. Math. 330(4), 742–747 (2018)

Mangasarian, O.L.: Sufficient conditions for the unsolvability and solvability of the absolute value equation. Optim. Lett. 11(7), 1–7 (2017)

Polyak, B.T.: Introduction to Optimization. Optimization Software Inc., New York (1987)

Mangasarian, O.L.: A generalized Newton method for absolute value equations. Optim. Lett. 3(1), 101–108 (2009)

Haghani, F.K.: On generalized Traub’s method for absolute value equations. J. Optim. Theory Appl. 166(2), 619–625 (2015)

Singh, S., Gupta, D.K.: Iterative methods of higher order for nonlinear equations. Vietnam J. Math. 44(2), 387–398 (2016)

Acknowledgements

The authors are very grateful to the editors and referees for their constructive advice.

Funding

The research are supported by the National Natural Science Foundation of China (Grant No. 61877046); the second batch of young outstanding talents support plan of Shaanxi universities.

Author information

Authors and Affiliations

Contributions

All authors contributed equally to the manuscript, and they read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Feng, J., Liu, S. A new two-step iterative method for solving absolute value equations. J Inequal Appl 2019, 39 (2019). https://doi.org/10.1186/s13660-019-1969-y

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13660-019-1969-y