Abstract

Background

Excessive screen time (\(\ge\) 2 h per day) is associated with childhood overweight and obesity, physical inactivity, increased sedentary time, unfavorable dietary behaviors, and disrupted sleep. Previous reviews suggest that intervening on screen time is associated with reductions in screen time and improvements in other obesogenic behaviors. However, it is unclear which study characteristics and behavior change techniques are potential mechanisms underlying the effectiveness of these behavioral interventions. The purpose of this meta-analysis was to identify the behavior change techniques and study characteristics associated with effectiveness in behavioral interventions to reduce children’s (0–18 years) screen time.

Methods

A literature search of four databases (Ebscohost, Web of Science, EMBASE, and PubMed) was conducted between January and February 2020 and updated in July 2021. Behavioral interventions targeting reductions in children’s (0–18 years) screen time were included. Information on study characteristics (e.g., sample size, duration) and behavior change techniques (e.g., information, goal-setting) was extracted. Data on randomization, allocation concealment, and blinding were extracted and used to assess risk of bias. Meta-regressions were used to explore whether intervention effectiveness was associated with the presence of behavior change techniques and study characteristics.

Results

The search identified 15,529 articles, of which 10,714 were screened for relevancy and 680 were retained for full-text screening. Of these, 204 studies provided quantitative data for the meta-analysis. The overall random-effects summary showed a small, beneficial impact of screen time interventions compared to controls (SDM = 0.116, 95CI 0.08 to 0.15). Interventions that included the Goals, Feedback, and Planning cluster of behavior change techniques showed a larger effect (SDM = 0.145, 95CI 0.11 to 0.18). Interventions with smaller sample sizes (n < 95) delivered over shorter durations (< 52 weeks) were associated with larger effects than studies with larger sample sizes delivered over longer durations. In the presence of the Goals, Feedback, and Planning cluster, intervention effectiveness diminished as sample size increased.

Conclusions

Both intervention content and context are important to consider when designing interventions to reduce children’s screen time. As interventions are scaled, determining the active ingredients to optimize interventions along the translational continuum will be crucial to maximize reductions in children’s screen time.


Introduction

In the past decade, screen time has become a ubiquitous behavior in the daily lives of children and adolescents worldwide. The Sedentary Behavior Research Network defines screen time as the amount of time spent engaging with screens, such as tablets, computers, or smartphones, while sitting, standing, or being physically active [1]. Between 45% and 80% of children and adolescents fail to meet international recommendations of < 2 h per day of screen time [2, 3]. According to international 24-h movement guidelines, infants (birth to 1 year old) and toddlers (less than 2 years old) should not engage in sedentary screen time [4]. Further, preschoolers (3–4 years old) should not exceed 1 h of sedentary screen time per day [4]. For older children, the guidelines recommend no more than 2 h per day of recreational screen time for children and adolescents (5–17 years old) [5]. Independent of physical activity and sedentary behavior, excess screen time (\(\ge\) 2 h per day) is associated with childhood overweight and obesity (OWOB) [6,7,8]. Excess screen time is also associated with unfavorable obesogenic behaviors, such as physical inactivity, increased sedentary time, unfavorable dietary behaviors, and disrupted sleep [9, 10]. Interventions targeting screen time can lead to reductions in screen use, as well as improvements in physical activity, reductions in sedentary time, and better sleep [11,12,13].

Meta-analyses show interventions targeting reductions in children’s screen time, either alone or as part of a multi-behavioral intervention, are effective in reducing children’s body mass index (BMI) and decreasing children’s screen time [11, 14]. Despite evidence on the effectiveness of interventions to reduce children’s screen time [11, 13, 14], it remains unclear what study characteristics and behavior change techniques are the most critical to include in the design of screen time interventions. Behavior change techniques are the components or elements of an intervention, such as self-monitoring, social support, and signing behavioral contracts, that may serve as potential mechanisms underlying the effectiveness of behavioral interventions [15]. Although prior reviews provided initial evidence of the ability to intervene upon and reduce children’s and adolescents’ screen time, these reviews did not explore the potential mechanisms underlying the behavior changes documented in these interventions [11, 13, 14]. Identification of the behavior change techniques that are associated with maximal intervention effectiveness can be used to streamline and optimize the delivery of interventions to reduce screen time. Resources, such as time and money, may often be limited when designing and implementing behavioral interventions. Thus, identifying the “active” components of behavioral interventions can be used to maximize intervention results while minimizing the use of limited resources. The purpose of this systematic review and meta-analysis is to identify the behavior change techniques and study characteristics associated with treatment effectiveness in behavioral interventions to reduce children’s (0–18 years) screen time.

Methods

The review process is reported according to the PRISMA 2020 guidelines statement [16]. This review protocol was not registered with PROSPERO.

Search strategy

A comprehensive literature search was conducted between January and February 2020. Four databases (Ebscohost, Web of Science, EMBASE, and Ovid Medline/PubMed) were searched from their earliest record of publication through articles published in January 2020. The Ebscohost meta-database was used to search articles indexed in the following databases: Academic Search Complete, CINAHL Complete, CINAHL Plus with Full Text, ERIC, Health and Psychosocial Instruments, Health Source: Nursing/Academic Edition, MEDLINE with Full Text, PsycARTICLES, Psychology and Behavioral Sciences Collection, PsycINFO, PsycTESTS, Social Sciences Full Text, and Social Work Abstracts. The search strategy used a mixture of keywords, Boolean operators, and expanded vocabulary terms where appropriate for study design (intervention, trial, experiment, program), participants (child, preschool, adolescents, school, youth), and intervention target (television, computer, “media use,” “screen time,” “video game,” “recreational media,” and sedentary). To account for articles indexed after the initial search process, an updated search of articles published between February 2020 and July 2021 was performed in July 2021 using the original search strategy described above. The search strategy was developed by two authors (AJ and MB) and is provided in Additional file 1. Reference lists of systematic reviews and meta-analyses were reviewed to identify additional studies that were not captured in the initial search [14, 17,18,19,20,21,22,23,24,25,26,27,28,29,30,31,32,33]. The citations for identified articles were uploaded into an EndNote (version X9.2) library for reference management.

Study inclusion criteria

Articles were eligible for review if they: 1) targeted children ≤ 18 years, 2) were a behavioral intervention that targeted a reduction in screen/sedentary time (i.e., television, video games, computer, etc.) or reported screen/sedentary time as an outcome, and 3) were published in an English-language, peer-reviewed journal. For this review, “screen/sedentary time” was defined as screen-based activities (i.e., television, video games, computer, etc.). The combined term screen/sedentary time was used because these behaviors are often conflated in the literature despite being distinct behavioral constructs. However, articles targeting reductions in sitting time without a focus on screens were excluded. There were no geographic restrictions. In addition to randomized controlled trials (RCTs), quasi-experimental, single-group pre-post, and pilot studies were eligible for inclusion. Studies that took place in a lab or weight management clinic were excluded because their generalizability to free-living intervention settings is limited. The complete list of inclusion and exclusion criteria is provided in Additional file 2.

Study identification

EndNote reference management software (version X9.2) was used to initially discard duplicate articles. Citations were then uploaded into the Covidence Systematic Review Management Program. Titles and abstracts were screened for relevancy by one reviewer (AJ), and those meeting initial inclusion criteria were forwarded to full-text review by two independent reviewers (AJ and HP). Disagreements were resolved by discussion between the two reviewers (AJ and HP). Eligible articles were retained for qualitative and quantitative data extraction.

Data extraction and quality assessment

Two authors (AJ and HP) independently extracted qualitative and quantitative data from the full text of eligible articles. Qualitative information on study-level characteristics (e.g., design, sample size, duration, participant characteristics, study location, self-identified pilot status), intervention characteristics (e.g., number of sessions, setting, intervention delivery, intervention recipient, screen device target), underlying theoretical framework (e.g., social cognitive theory [SCT]), and the behavior change techniques incorporated into the intervention (e.g., information, goal-setting, feedback) was extracted into a custom Microsoft Excel file (version 2012) developed for this review. The Abraham and Michie Taxonomy of Behavior Change Techniques [15] was used as the framework for extracting information on the individual behavior change techniques used in each article. As a second step, the individual behavior change techniques were grouped into behavior change clusters (i.e., Goals, Feedback, and Planning; Knowledge and Consequences; Behavioral Repetition and Practice; Social Comparison) based on the Abraham and Michie Hierarchical Behavior Change Technique Taxonomy [34]. Risk of bias in individual studies was examined by extracting information on whether a study was described as a randomized trial, whether treatment allocation was concealed, and whether the participants or outcome assessors were blind to intervention group assignment. The NHLBI Study Quality Assessment Tool for Controlled Intervention Studies was used as a reference when extracting this information [35]. Studies were rated “poor” quality if they met none of these three criteria or did not report on them, “fair” quality if they met one or two of the criteria, and “good” quality if they met all three. A “poor” rating reflects a high risk of bias, whereas a “good” rating indicates the lowest risk of bias. Qualitative data were coded into categorical variables in Stata (version 16) for quantitative analysis. The codebook used for this review is provided in Additional file 3.
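The two coding rules described above (a study counts toward a cluster if it uses at least one of that cluster's techniques; quality is rated from how many of the three risk-of-bias criteria are met) can be sketched as follows. This is a minimal illustration under stated assumptions: the `CLUSTER_OF` table is a hypothetical, partial mapping chosen for illustration, not the review's codebook (Additional file 3) or the full Abraham and Michie taxonomy, and the function names are invented here.

```python
# Hypothetical sketch of the two coding rules described in the text.
# CLUSTER_OF is an illustrative, partial assumption, NOT the review's codebook
# or the complete Abraham and Michie hierarchical taxonomy.
CLUSTER_OF = {
    "goal setting": "Goals, Feedback, and Planning",
    "self-monitoring": "Goals, Feedback, and Planning",
    "feedback": "Goals, Feedback, and Planning",
    "instruction": "Knowledge and Consequences",
    "information on behavior-health link": "Knowledge and Consequences",
    "modeling": "Social Comparison",
    "behavioral practice": "Behavioral Repetition and Practice",
}


def clusters_present(techniques, cluster_of=CLUSTER_OF):
    """Return the set of clusters a study counts toward.

    A study counts toward a cluster if it uses at least one of that
    cluster's techniques; technique names missing from the table are ignored.
    """
    return {cluster_of[t] for t in techniques if t in cluster_of}


def quality_rating(randomized, concealed, blinded):
    """Rate study quality from the three risk-of-bias criteria.

    Pass False for any criterion the study does not report, so that an
    unreported criterion counts as unmet.
    """
    met = sum(bool(c) for c in (randomized, concealed, blinded))
    if met == 3:
        return "good"  # all three criteria met: lowest risk of bias
    if met >= 1:
        return "fair"  # one or two criteria met
    return "poor"      # no criteria met (or none reported): high risk of bias


if __name__ == "__main__":
    study = ["goal setting", "self-monitoring", "instruction"]
    print(sorted(clusters_present(study)))
    print(quality_rating(randomized=True, concealed=False, blinded=False))
```

Using a set for the clusters means a study with several techniques from the same cluster is still counted once toward that cluster.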

The \(I^2\) statistic was calculated as \(I^2 = \frac{Q - df}{Q} \times 100\%\), where \(Q\) is Cochran’s chi-square test statistic and \(df\) is the corresponding degrees of freedom (Cochrane handbook). Generally accepted values for interpreting the \(I^2\) statistic were used, where 0–40% suggests non-impactful inconsistency, 30–60% may represent moderate heterogeneity, 50–90% may represent substantial heterogeneity, and 75–100% may represent considerable heterogeneity (Cochrane handbook). Visual inspection of funnel plots and Egger’s test for small-study effects were used to assess the risk of publication bias due to small-study effects.
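The \(I^2\) formula above can be sketched in a few lines. This is an illustrative helper, not the software used in the review: Cochran's \(Q\) is computed with inverse-variance (fixed-effect) weights, \(I^2\) is truncated at zero when \(Q < df\) (the usual convention), and the interpretation bands are reproduced exactly as quoted, including their deliberate overlap. The two-study inputs are hypothetical.

```python
def cochran_q(effects, variances):
    """Cochran's Q with inverse-variance (fixed-effect) weights."""
    weights = [1.0 / v for v in variances]
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    return sum(w * (y - pooled) ** 2 for w, y in zip(weights, effects))


def i_squared(q, df):
    """I^2 = (Q - df) / Q * 100%, truncated at 0 when Q < df."""
    if q <= 0:
        return 0.0
    return max(0.0, (q - df) / q) * 100.0


# Overlapping interpretation bands as quoted in the text (Cochrane handbook).
BANDS = [
    (0, 40, "non-impactful"),
    (30, 60, "moderate"),
    (50, 90, "substantial"),
    (75, 100, "considerable"),
]


def i_squared_labels(i2):
    """Return every band that contains i2; the bands intentionally overlap."""
    return [name for lo, hi, name in BANDS if lo <= i2 <= hi]


if __name__ == "__main__":
    # Hypothetical two-study example, not data from the review.
    q = cochran_q([0.1, 0.3], [0.01, 0.01])
    print(round(q, 6), round(i_squared(q, df=1), 6))
    # The heterogeneity reported for the included studies (98.15%).
    print(i_squared_labels(98.15))
```

Returning every matching band, rather than a single label, keeps the overlap in the published guidance visible instead of silently picking one category.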

Reported screen time was extracted from each study. Reporting of screen time varied across studies: some studies reported total daily screen time, whereas others reported screen time during specific periods (e.g., weekday versus weekend). Screen time could be reported as a cumulative total across all screen-based devices or as a device-specific estimate, such as the number of hours per day spent watching television or playing video games. Contextual information on how screen time was measured (i.e., time of day, device, weekday versus weekend) was extracted from each study. Means, standard deviations (SD), and sample sizes (N) at baseline and post-intervention were extracted for each treatment group. When a 95% confidence interval was reported, the SD was calculated as \(SD = \sqrt{N} \times \frac{\text{upper limit} - \text{lower limit}}{3.92}\). When an interquartile range (IQR) was reported, the SD was calculated as \(SD = \frac{\text{upper quartile} - \text{lower quartile}}{1.95}\). When a standard error (SE) was reported, the SD was calculated as \(SD = \sqrt{N} \times SE\). Within- and between-group change scores were extracted, if presented. Discrete data were extracted as the number of events and the total sample size within each group at baseline and post-intervention. Odds ratios and relative risks with the corresponding 95% confidence intervals were extracted for discrete outcomes, where presented. When outcomes were reported at multiple timepoints, each measurement was recorded. Data were extracted for the overall sample and for each subgroup when presented.
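The confidence-interval and standard-error conversions above can be sketched as two small helpers. This is illustrative only: the function names are invented here, and the divisor 3.92 (2 × 1.96) assumes a large-sample normal interval. The Cochrane Handbook notes that for small samples it should be replaced by twice the corresponding t value with N − 1 degrees of freedom. The example group is hypothetical.

```python
import math


def sd_from_ci(lower, upper, n):
    """SD of a group from the 95% CI of its mean: sqrt(N) * (upper - lower) / 3.92.

    3.92 = 2 * 1.96 assumes a large-sample normal interval.
    """
    return math.sqrt(n) * (upper - lower) / 3.92


def sd_from_se(se, n):
    """SD of a group from the standard error of its mean: sqrt(N) * SE."""
    return math.sqrt(n) * se


if __name__ == "__main__":
    # Hypothetical group: mean screen time 2.0 h/day, 95% CI 1.8 to 2.2, N = 100.
    print(round(sd_from_ci(1.8, 2.2, 100), 3))
    # Hypothetical group: SE of the mean 0.1 h/day, N = 100.
    print(sd_from_se(0.1, 100))
```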

Data analysis

The standardized difference in means (SDM) was calculated for each observation. Observations were then averaged within each study to create a single SDM per study, and these study-level SDMs were pooled in a random-effects meta-analysis using Comprehensive Meta-Analysis (version 3) software. All effects were coded so that a positive value represents a beneficial impact of the intervention on screen time (e.g., a decrease in screen time for the treatment group compared to the control group), and a negative value indicates either 1) a greater reduction in screen time in the control group than in the intervention group, or 2) less screen time at baseline than post-intervention. Meta-regressions were used to analyze the association between behavior change techniques, study-level characteristics, and intervention effectiveness. Meta-regression applies the techniques of simple regression, in which outcome data are analyzed at the subject level, to the collection of identified studies, so that outcome data are analyzed at the study level [36]. Meta-regressions were used to explore whether the number and type of behavior change clusters (i.e., Goals, Feedback, and Planning; Knowledge and Consequences; Behavioral Repetition and Practice; Social Comparison), theoretical frameworks (e.g., SCT), intervention components (e.g., intervention delivery agent, intervention recipient), and study-level characteristics (e.g., sample size, duration) were associated with increased intervention effectiveness. For the present review, the dependent variable was intervention effectiveness, measured with the SDM, and the covariates of interest were the behavior change techniques, theoretical frameworks, intervention components, and study-level characteristics described above. The meta-analysis suite (meta) in Stata (version 16) was used to conduct the meta-regression analyses.
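The pipeline above (one SDM per observation, averaged within each study, then pooled under a random-effects model) can be sketched as follows. This is a simplified illustration under several assumptions, not a reproduction of Comprehensive Meta-Analysis: the SDM is computed from post-intervention means only with a pooled SD and signed so that less screen time in the treatment group is positive; within-study averaging uses an assumed correlation `r` between observations (r = 1 is the conservative choice); and pooling uses the DerSimonian-Laird method-of-moments estimator of the between-study variance. All function names and numbers are hypothetical.

```python
import math


def sdm_post(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Standardized difference in means at post-intervention.

    Positive = less screen time in the treatment group than in the control
    group (the review's 'beneficial' direction). Returns (d, variance of d).
    """
    pooled_sd = math.sqrt(
        ((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / (n_t + n_c - 2)
    )
    d = (mean_c - mean_t) / pooled_sd
    var_d = (n_t + n_c) / (n_t * n_c) + d ** 2 / (2 * (n_t + n_c))
    return d, var_d


def average_within_study(effects, variances, r=1.0):
    """Average several observations from one study into a single SDM.

    r is an assumed correlation between the observations; r = 1 is the
    conservative choice because the averaged variance is not shrunk.
    """
    m = len(effects)
    mean = sum(effects) / m
    var = sum(variances)
    for i in range(m):
        for j in range(m):
            if i != j:
                var += r * math.sqrt(variances[i] * variances[j])
    return mean, var / m ** 2


def random_effects_dl(effects, variances, z=1.96):
    """DerSimonian-Laird random-effects summary: (mu, lower, upper, tau2)."""
    w = [1.0 / v for v in variances]
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sw - sum(wi ** 2 for wi in w) / sw
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0  # between-study variance
    w_re = [1.0 / (v + tau2) for v in variances]      # random-effects weights
    mu = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    se = math.sqrt(1.0 / sum(w_re))
    return mu, mu - z * se, mu + z * se, tau2


if __name__ == "__main__":
    # Hypothetical studies (hours/day), not data from the review.
    study_a = [sdm_post(2.0, 1.0, 50, 2.5, 1.0, 50), sdm_post(1.8, 1.1, 50, 2.1, 1.2, 50)]
    study_b = [sdm_post(3.0, 1.5, 200, 3.1, 1.5, 200)]
    studies = [
        average_within_study([d for d, _ in s], [v for _, v in s])
        for s in (study_a, study_b)
    ]
    mu, lo, hi, tau2 = random_effects_dl([d for d, _ in studies], [v for _, v in studies])
    print(f"SDM = {mu:.3f}, 95% CI {lo:.3f} to {hi:.3f}, tau^2 = {tau2:.4f}")
```

Averaging within a study before pooling, as described in the text, keeps a study with many reported outcomes or timepoints from contributing more than one effect to the summary.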

Availability of data and materials

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

Results

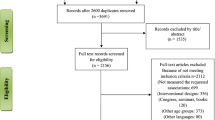

The original systematic search strategy identified 11,949 articles. After removing duplicates (n = 1,969), 9,980 articles were included in the initial title and abstract screen. Of these, 598 studies were retained for full-text eligibility screening, of which 287 were eligible for data extraction and 216 were included in the systematic review. Of these 216 articles, 186 provided quantitative data that could be extracted and used in the meta-analysis. The updated search performed in July 2021 identified an additional 3,580 articles, of which 2,846 were duplicates, leaving 734 articles for the title and abstract screen. Of these 734 articles, 82 were included in the full-text eligibility screening, and an additional 18 studies provided quantitative data for the meta-analysis. The meta-analysis included a final sample of 204 studies. Reasons for excluding individual articles are available from the authors upon request. Study characteristics for the articles included in the meta-analysis are presented in Additional file 4. Figure 1 depicts the PRISMA flow chart of the initial screening process. Figure 2 depicts the PRISMA flow chart for the updated search performed in July 2021.

Study characteristics

Articles were published between 1998 and 2021. Nearly half of the studies took place in North America (k = 92, 45.1%) [37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123,124,125,126], with Europe (k = 49, 24.0%) [127,128,129,130,131,132,133,134,135,136,137,138,139,140,141,142,143,144,145,146,147,148,149,150,151,152,153,154,155,156,157,158,159,160,161,162,163,164,165,166,167,168,169,170,171,172,173,174,175], Oceania (k = 34, 16.7%) [176,177,178,179,180,181,182,183,184,185,186,187,188,189,190,191,192,193,194,195,196,197,198,199,200,201,202,203,204,205,206,207,208,209], Asia (k = 17, 8.3%) [210,211,212,213,214,215,216,217,218,219,220,221,222,223,224,225,226], South America (k = 11, 5.4%) [227,228,229,230,231,232,233,234,235,236,237], and Africa (k = 1, 0.5%) [238] also represented. There was considerable heterogeneity across the studies (\(I^2\) = 98.15%). Of the 204 articles included in the review, 147 studies (72%) self-identified as “randomized,” 22 studies (11%) provided information on allocation concealment, and 36 studies (18%) indicated that the participants or the outcome assessors (research staff) were blind to intervention group assignment. Of the included studies, 55 (27%) were rated “poor” quality, 136 (67%) “fair” quality, and only 13 (6%) “good” quality.

The average frequency represents the average across all studies included in the meta-analysis (k = 204). Intervention duration varied across studies (median = 24 weeks, IQR = 11 to 52 weeks). Interventions longer than 53 weeks (average frequency = 17%) were less common than interventions < 12 weeks (average frequency = 40%) and between 13–52 weeks (average frequency = 43%). Sample size also varied considerably (n = 6 to n = 35,157; median = 317, IQR = 102 to 699), and 67 studies were self-identified pilot studies. Given the considerable range in the continuous sample size variable, a categorical sample size variable based on the interquartile range was created (n < 95, n = 96–312, n = 313–696, and n > 697).

The variation in study-level and intervention characteristics by child age category is presented in Table 1. Interventions delivered in schools were most common among adolescents 13 years and older (frequency = 86%). Interventions delivered by teachers were most commonly reported in studies with children 6 years and older (frequency = 45%). Nearly all interventions (97%) used a subjective measure to quantify screen time. Interventions delivered to the parent only were most common in studies with children between 0 and 5 years old (frequency = 48%). The Social Comparison cluster was most common among studies with children 13 years and older (frequency = 93%). The Knowledge and Consequences cluster was most common among studies with children between the ages of 6 and 12 years (frequency = 97%). The Behavioral Repetition and Practice cluster was most common among studies with children 6 years and older (frequency = 84%). The Goals, Feedback, and Planning cluster was most common among studies with children 13 years or older (frequency = 87%).

The overall random-effects summary demonstrated a small, positive impact of screen time interventions compared to controls (SDM = 0.116, 95CI 0.08 to 0.15). The impact of study-level characteristics on intervention effectiveness is presented in Table 2. The largest treatment effects were observed in interventions delivered to children 12 years old or younger (k = 24, SDM = 0.209, 95CI 0.05 to 0.37). Shorter studies (< 12 weeks, SDM = 0.151, 95CI 0.0 to 0.23; 13–52 weeks, SDM = 0.113, 95CI 0.07 to 0.16) were associated with larger intervention effects than studies > 53 weeks in duration (SDM = 0.061, 95CI -0.03 to 0.15). As sample size increased, the magnitude of intervention effectiveness decreased (n < 95, SDM = 0.298, 95CI 0.20 to 0.39 vs. n > 697, SDM = 0.054, 95CI 0.02 to 0.09). Interventions delivered by research staff (k = 35, SDM = 0.300, 95CI 0.16 to 0.44) and interventions delivered by other individuals, such as community members (k = 61, SDM = 0.149, 95CI 0.09 to 0.21), demonstrated a significant positive impact on children’s screen time.

Intervention characteristics

Estimates of intervention effectiveness by the presence or absence of each behavior change technique are presented in Table 3. Of the 21 potential behavior change techniques, the 3 most frequently included were information on the behavior-health link (k = 168), instruction (k = 145), and social support (k = 134). Behavioral contracts (k = 13), prompting cues (k = 18), and motivational interviewing (k = 22) were the least frequently included behavior change techniques (see Table 3). Studies did not use behavior change techniques in isolation: on average, interventions included 8.0 different behavior change techniques (range 0 to 18 of the 21 techniques). Using the Hierarchical Behavior Change Technique Taxonomy [34], 4 clusters of co-occurring behavior change strategies were identified: 1) Goals, Feedback, and Planning, 2) Social Comparison, 3) Knowledge and Consequences, and 4) Behavioral Repetition and Practice. A study was counted toward a cluster if it used at least one of that cluster’s individual behavior change techniques. The Knowledge and Consequences cluster was the most common cluster (k = 186), whereas the Behavioral Repetition and Practice cluster was the least common (k = 148). Including the Goals, Feedback, and Planning cluster was associated with the largest impact on intervention effectiveness (k = 160, SDM = 0.145, 95CI 0.11 to 0.18) compared to studies that did not include this cluster (k = 44, SDM = 0.001, 95CI -0.11 to 0.11; test of difference SDM = 0.154, 95CI 0.07 to 0.24). Inclusion of the Social Comparison, Behavioral Repetition and Practice, or Knowledge and Consequences clusters was not associated with greater intervention effectiveness compared to interventions that did not include the respective cluster (see Table 3).
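One common way to estimate a difference in effect between studies with and without a cluster is a meta-regression on a 0/1 indicator. The sketch below is a hedged illustration of that general technique, not a reproduction of the Stata analysis or of the reported test of difference. It fits weighted least squares with weights \(1/(v_i + \tau^2)\) and treats \(\tau^2\) as supplied by the caller (an assumption; in practice it would be estimated, for example by REML). With a binary covariate, the slope equals the difference between the weighted mean SDMs of the two groups. The function name and data are hypothetical.

```python
import math


def meta_regression(effects, variances, x, tau2, z=1.96):
    """Weighted least-squares meta-regression of study SDMs on one covariate.

    Weights are 1 / (v_i + tau2); tau2 is supplied by the caller here (an
    assumption). With a 0/1 covariate, the slope equals the difference between
    the weighted mean SDMs of the two groups.
    """
    w = [1.0 / (v + tau2) for v in variances]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, effects))
    slope = sxy / sxx
    se = math.sqrt(1.0 / sxx)  # standard error of the slope
    return {
        "intercept": y_bar - slope * x_bar,  # fitted SDM when x = 0
        "slope": slope,
        "se": se,
        "ci": (slope - z * se, slope + z * se),
        "z": slope / se,
    }


if __name__ == "__main__":
    # Hypothetical studies: x = 1 if the Goals, Feedback, and Planning cluster was used.
    sdm = [0.05, 0.00, 0.20, 0.15, 0.25]
    var = [0.010, 0.020, 0.015, 0.010, 0.030]
    has_cluster = [0, 0, 1, 1, 1]
    fit = meta_regression(sdm, var, has_cluster, tau2=0.005)
    print(f"difference = {fit['slope']:.3f}, 95% CI {fit['ci'][0]:.3f} to {fit['ci'][1]:.3f}")
```

The same function accepts a continuous covariate (for example, log sample size), which is one way to model the trend across sample size categories instead of comparing subgroups.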

Follow-up analyses were conducted to examine how study-level characteristics of intervention duration, sample size, and intervention delivery agent modified the impact of including the Goals, Feedback, and Planning cluster on intervention effectiveness (see Table 4). As intervention duration increased from < 12 to > 53 weeks, the influence of having the Goals, Feedback, and Planning behavioral cluster dampened (SDM = 0.182, 95CI 0.12 to 0.25 versus SDM = 0.108, 95CI 0.05 to 0.17). The inclusion of the Goals, Feedback, and Planning cluster amplified the magnitude of effect for studies < 12 weeks (test of difference SDM = 0.200, 95CI 0.02 to 0.38) and > 53 weeks (test of difference SDM = 0.244, 95CI 0.04 to 0.45) in duration compared to studies that did not include this cluster.

Interventions delivered by research staff that included the Goals, Feedback, and Planning cluster were significantly associated with reductions in children’s screen time (k = 28, SDM = 0.341, 95CI 0.18 to 0.50). Interventions delivered by healthcare professionals that included the Goals, Feedback, and Planning cluster demonstrated a significant, positive impact on screen time compared to interventions delivered by healthcare professionals without the Goals, Feedback, and Planning cluster (test of difference SDM = 0.500, 95CI 0.21 to 0.79). Sample size attenuated the impact of the Goals, Feedback, and Planning cluster, with small studies (n < 95) driving the magnitude of effect (SDM = 0.306, 95CI 0.20 to 0.41) and tapering off as sample size increased (n > 697; SDM = 0.062, 95CI 0.02 to 0.11). Across studies of the same sample size, those that included the Goals, Feedback, and Planning cluster demonstrated larger intervention effects compared to studies that did not include this cluster. However, this effect was only significant when sample sizes were between 313–696 participants (test of difference SDM = 0.184, 95CI 0.03 to 0.34).

Visual inspection of the contour-enhanced funnel plot suggested small-study effects consistent with publication bias (see Fig. 3). Egger’s test indicated significant small-study effects (p < 0.001). The trim-and-fill analysis estimated that 58 studies were missing due to publication bias; including the imputed studies reduced the overall SDM from 0.116 (95CI 0.08 to 0.15) to 0.007 (95CI -0.04 to 0.05).
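Egger’s regression test, used above, can be sketched as an ordinary least-squares regression of the standardized effect (SDM / SE) on precision (1 / SE). An intercept far from zero suggests funnel-plot asymmetry. This is an illustrative implementation with a hypothetical function name and made-up data, not the software used in the review. It returns the t statistic and its k − 2 degrees of freedom; a two-sided p-value could then be obtained from a t distribution, for example `2 * scipy.stats.t.sf(abs(t), df)` if SciPy is available.

```python
import math


def egger_test(effects, ses):
    """Egger's regression test for small-study effects.

    Regresses the standardized effect (SDM / SE) on precision (1 / SE) by
    ordinary least squares and returns (intercept, SE of intercept, t, df).
    """
    k = len(effects)
    x = [1.0 / s for s in ses]                  # precision
    y = [e / s for e, s in zip(effects, ses)]   # standardized effect
    x_bar = sum(x) / k
    y_bar = sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    df = k - 2
    s2 = sum(r ** 2 for r in resid) / df        # residual variance
    se_int = math.sqrt(s2 * (1.0 / k + x_bar ** 2 / sxx))
    return intercept, se_int, intercept / se_int, df


if __name__ == "__main__":
    # Hypothetical data: smaller studies (larger SE) report larger effects.
    sdm = [0.50, 0.375, 0.30, 0.30]
    se = [0.50, 0.25, 0.20, 0.10]
    b0, se_b0, t, df = egger_test(sdm, se)
    print(f"intercept = {b0:.3f} (SE {se_b0:.3f}), t = {t:.2f} on {df} df")
```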

Discussion

The current meta-analysis found a small, positive overall effect of screen time interventions, compared to controls, for reducing children’s screen time. The magnitude and direction of effects in the current meta-analysis are consistent with prior meta-analyses of behavioral interventions to reduce children’s screen time [11, 13, 14, 239]. The current study uniquely contributes to the literature by including children from birth through 18 years and by identifying which behavior change techniques and study-level characteristics are associated with intervention effectiveness. The finding that the presence of goal-setting strategies, along with study-level characteristics (i.e., sample size, duration, and intervention delivery agent), is associated with larger improvements in children’s screen time has important implications for the content and design of interventions.

To optimize intervention effectiveness and maximize resources, an understanding of the “active ingredients” in behavioral interventions to reduce children’s screen time is warranted. We found that study characteristics such as smaller sample sizes and shorter intervention durations were associated with larger effects. Previous meta-analyses have shown that the magnitude of intervention effectiveness decreased as sample size increased and that interventions with shorter durations (< 7 months) are more effective at reducing children’s screen time than interventions with longer durations [11, 240]. Collectively, these studies suggest that delivering a behavioral intervention under “ideal” conditions may be a primary ingredient in obtaining statistically significant improvements in children’s screen time. Our findings align with recent reviews suggesting that as interventions progress from the pilot stage to a larger, well-powered trial, there is a corresponding drop in the magnitude of intervention effects [241]. Features such as small sample sizes and short intervention durations, which are characteristic of pilot studies, may introduce bias into the interpretation of an intervention’s effectiveness [241]. Consequently, the conclusions drawn from these pilot studies can affect decisions regarding the scalability and generalizability of the intervention.

Although intervention context and delivery are important, intervention content (i.e., specific behavior change techniques) can modify intervention effectiveness. Behavioral interventions that included techniques such as goal setting, goal review, and self-monitoring had larger effects than interventions that did not include these techniques. These findings are consistent with a recent review that identified goal setting, positive reinforcement, and family social support as active ingredients in behavioral interventions targeting reductions in children’s sedentary behavior [240]. Some techniques, such as goal setting, may be effective in reducing both children’s sedentary time and screen time. Yet not all sedentary time involves screens. Thus, different behavioral strategies may be required to target these often conflated and overlapping behavioral constructs.

The inclusion of the Goals, Feedback, and Planning cluster was the behavioral strategy most strongly associated with enhanced intervention effectiveness. Yet, there was variability across interventions in how this cluster of behavior change techniques was delivered. For example, Dennison et al. [53] had participants identify alternative activities to television viewing, whereas studies by Gorin et al. [73] and Morgan et al. [195] used a combination of goal-setting and self-monitoring to reduce children’s screen time. Thus, it is unclear whether some individual behavior change techniques, such as goal review, may be more effective at reducing children’s screen time than other techniques, such as barrier identification. Further, it is unclear whether there is a linear association between the number of individual behavior change techniques incorporated in an intervention and intervention effectiveness. Most studies (88%) included the Goals, Feedback, and Planning cluster in combination with another behavior change cluster, indicating that this cluster was not used in isolation. Goal setting may catalyze behavior change by serving as the “initial step” in the behavior change process: the starting point from which actionable steps can be taken toward behavior change.

There are a variety of hypothesized mechanisms relating goal setting to behavior change. Some authors [242] suggest motivation is the link between goal setting and behavior. Without motivation, individuals will not set goals and make plans to modify their behavior. Other authors [243] suggest goal setting should be paired with a complementary behavior change technique, such as feedback, to create a feed-forward mechanism that synergistically contributes to behavior change. A recent meta-analysis suggested that goal setting, in combination with feedback, behavioral repetition and practice, instruction, or modeling did not increase intervention effectiveness compared to interventions that included goal setting as the sole behavior change strategy [243]. However, the combination of goal setting with self-monitoring was found to strengthen the effect of the intervention [243]. Although goal setting is intertwined with other behavioral strategies, such as feedback and modeling, not all pairings of complementary behavior change techniques are equal. The cyclical nature of goal setting and self-monitoring may reflect the dynamic and ongoing process of behavior change that is not reflected when goal setting is paired with other behavior change techniques. Future studies should attempt to disentangle the additive effects of complementary behavior change techniques in relation to their impact on the effectiveness of behavioral interventions.

The current study examined the interaction between intervention content and one domain of intervention context at a time (i.e., duration, sample size, or intervention delivery agent). Among studies that included the Goals, Feedback, and Planning cluster, the magnitude of treatment effects decreased significantly as intervention duration increased from < 12 to > 53 weeks. Among these same studies, interventions delivered by research staff were associated with the largest treatment effects, and treatment effects decreased in magnitude as sample size increased. In the absence of the Goals, Feedback, and Planning cluster, the associations of intervention duration, delivery agent, and sample size with intervention effectiveness were non-significant.
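The stratified pattern described above can be illustrated with a short sketch. It is not the authors’ analysis: it assumes a DerSimonian-Laird random-effects estimator, uses invented study data (`STUDIES`), and pools separately within each combination of cluster presence and sample-size band as a simplified stand-in for an interaction term in a meta-regression. The names `dersimonian_laird`, `size_band`, and `pooled_by_stratum` are hypothetical.

```python
import math
from collections import defaultdict


def dersimonian_laird(effects, variances):
    """Random-effects pooled SMD via the DerSimonian-Laird estimator.

    Returns (pooled_estimate, standard_error, tau2)."""
    k = len(effects)
    w = [1.0 / v for v in variances]  # inverse-variance (fixed-effect) weights
    sw = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sw
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))  # Cochran's Q
    c = sw - sum(wi * wi for wi in w) / sw
    tau2 = max(0.0, (q - (k - 1)) / c) if k > 1 else 0.0  # between-study variance
    w_re = [1.0 / (v + tau2) for v in variances]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, effects)) / sum(w_re)
    return pooled, math.sqrt(1.0 / sum(w_re)), tau2


# Hypothetical studies: (SMD, variance of SMD, includes Goals/Feedback/Planning?, n).
# These numbers are invented for illustration and are NOT data from this meta-analysis.
STUDIES = [
    (0.40, 0.040, True, 40), (0.32, 0.035, True, 60),
    (0.15, 0.010, True, 300), (0.10, 0.008, True, 500),
    (0.12, 0.045, False, 50), (0.10, 0.040, False, 70),
    (0.11, 0.012, False, 250), (0.09, 0.009, False, 450),
]


def size_band(n, cutoff=95):
    """Hypothetical two-level sample-size band; cutoff mirrors the n < 95 split in the abstract."""
    return "n < 95" if n < cutoff else "n >= 95"


def pooled_by_stratum(studies):
    """Pool separately within each (cluster present?, sample-size band) stratum."""
    strata = defaultdict(lambda: ([], []))
    for smd, var, has_cluster, n in studies:
        effects, variances = strata[(has_cluster, size_band(n))]
        effects.append(smd)
        variances.append(var)
    return {key: dersimonian_laird(e, v) for key, (e, v) in strata.items()}


if __name__ == "__main__":
    for (has_cluster, band), (est, se, tau2) in sorted(pooled_by_stratum(STUDIES).items()):
        label = "with cluster" if has_cluster else "without cluster"
        print(f"{label:16s} {band:8s} SMD={est:.3f} (SE {se:.3f}, tau2={tau2:.3f})")
```

With these invented inputs, the pooled SMD falls sharply from the smaller to the larger sample-size band when the cluster is present but barely changes when it is absent, which is the shape of the interaction reported above. A full meta-regression would instead estimate this as a single cluster-by-sample-size coefficient.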

Behavioral interventions are complex, and the decision of which behavioral strategies to use may depend on factors such as who the intervention is being delivered to and what resources are available to deliver it. As researchers develop interventions, strategies that are feasible to deliver to small samples may be impractical to deliver to larger samples. For example, strategies such as motivational interviewing and barrier identification may require intense, one-on-one contact between intervention personnel and participants. Further, strategies such as setting goals, providing graded tasks, or establishing a behavioral contract are often tailored to an individual’s needs. Screen use, including its timing, its amount, and the type of device used, is highly variable within an individual. Thus, highly tailored behavioral strategies may lead to larger improvements in screen time at the individual level. However, in studies delivered in group settings or with larger samples, these strategies may need to be adapted. Strategies such as social support and role modeling may be incorporated into larger trials because of the accountability and comparison inherently linked with them. The decision to modify the behavioral strategies, or the “ingredients” of the intervention, may result in a corresponding change in its effectiveness. As interventions are scaled, researchers should explore how changes in an intervention’s behavioral strategies or “ingredients” affect its effectiveness.

The current study is among the first to identify the behavioral strategies and study-level characteristics associated with the effectiveness of interventions to reduce children’s screen time. This meta-analysis was guided by a widely used taxonomy of behavior change techniques [15, 34], included 204 studies, and included screen time from multiple devices as an outcome. However, the current meta-analysis is not without its limitations. We acknowledge that this review protocol was not registered a priori with the PROSPERO systematic review trial registry. However, the PROSPERO database was searched to identify whether similar reviews were registered to avoid unintended duplication.

There were too few studies to examine subgroup outcomes stratified by measurement tool: almost all interventions (97%) measured screen time via self- or parent-report. These findings are consistent with a recent review reporting that no articles used an objective, device-based tool to measure screen time in children 6 years old or younger [244]. Interventions that objectively measured screen time were associated with larger, albeit non-significant, effects compared with interventions that measured screen time via self- or parent-report. This difference in the magnitude of effectiveness between measurement tools may reflect self-report or recall bias. New technology, such as passive mobile sensing, may improve the ability to objectively monitor screen time [245, 246]. As objective measures of screen time are developed and disseminated to researchers, future studies may explore how the precision of the measurement tool is associated with intervention effectiveness.
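A larger but non-significant difference is what one would expect when one subgroup contributes only a few studies, because its pooled estimate carries a wide standard error. The sketch below shows the standard comparison of two independent subgroup estimates (for two subgroups, the between-subgroup Q statistic equals the square of this z). The pooled SMDs and standard errors are invented for illustration, and `subgroup_difference` is a hypothetical helper, not the authors’ code.

```python
import math


def subgroup_difference(est_a, se_a, est_b, se_b):
    """Two-sided z-test for the difference between two independent pooled SMDs.

    Returns (difference, z, p_value)."""
    diff = est_a - est_b
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)  # variances add for independent subgroups
    z = diff / se_diff
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value under a normal approximation
    return diff, z, p


if __name__ == "__main__":
    # Hypothetical inputs: a few device-based studies (wide SE) vs many report-based studies.
    diff, z, p = subgroup_difference(0.30, 0.10, 0.10, 0.05)
    print(f"difference={diff:.2f}, z={z:.2f}, p={p:.3f}")
```

With these inputs the device-based estimate is three times larger, yet the two-sided p-value is about 0.07, because the sparse subgroup’s standard error dominates the variance of the difference.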

The current meta-analysis highlights the influence of intervention delivery agents and intervention duration: interventions delivered by individuals with more expertise or over shorter durations were associated with larger effects than interventions delivered by individuals with less expertise or over longer durations. Our results suggest that larger treatment effects are observed in smaller studies, whose intervention delivery, duration, and setting are often tightly controlled. These smaller studies are often “pilot studies,” a primary function of which is to inform decisions about scaling up an intervention [241]. During the transition from small “pilot” interventions to larger efficacy trials, changes in intervention delivery may be accompanied by a corresponding drop in the magnitude of intervention effectiveness [241]. These seemingly small decisions can introduce bias when interpreting the effectiveness of the larger intervention. Decisions to modify the components of a pilot study when it is scaled often arise from funding constraints or the logistical requirements of collecting data from and delivering an intervention to a larger sample [247]. Yet before making such a decision, researchers may want to carefully consider the consequences of tinkering with an intervention that showed promise in the pilot phase. Researchers can begin with “the end in mind” and design pilot study protocols that will be mirrored and implemented in the larger trial. If we intend for the results obtained in small studies to inform and generalize to larger trials, then we need to design such studies with an eye toward scalability.

As the technological landscape continues to evolve rapidly, the way children and adolescents interact with technology will continue to change. Rather than simply designing interventions to reduce “screen time,” which can apply to any electronic, screen-based activity, future researchers may consider designing targeted, device-specific interventions. Further, increased access to and use of screens can also change how interventions to reduce screen time are delivered. Researchers are no longer constrained to in-person settings but can use mHealth or mobile platforms to deliver an intervention. Thus, the setting or medium through which an intervention is delivered may modify its effectiveness. Although mHealth interventions may increase the accessibility of a behavioral intervention, the benefit of using an electronic device to reduce screen time may be offset by the need to interact with the device in order to receive the intervention material. Future researchers may consider exploring whether using technology to deliver an intervention aimed at reducing screen time diminishes or amplifies its effectiveness.

Finally, the current study found a small, positive effect of interventions to reduce children’s screen time, yet it is unknown whether the effect is clinically meaningful. International recommendations suggest children and adolescents (5–17 years old) engage in \(\le\) 2 h per day of screen time [5]. However, these guidelines were based on perceived population-level associations between screen time and other negative obesogenic behaviors, such as physical inactivity, and rates of overweight and obesity. Clinical meaningfulness is often judged by the public health impact of an intervention or the average improvement in a behavior across a large group of individuals. Although small improvements at the population level may be beneficial, there is considerable within-person variability in screen time, including which devices are used, when they are most frequently used, and how much screen time is accumulated. Identifying for whom and at what times screen time is most problematic may yield larger improvements in screen time at the person level. As the science moves forward, researchers should consider whether they are designing public health interventions or person-level interventions.
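One way to make the question of clinical meaningfulness concrete is to back-transform the standardized mean difference into raw units by multiplying it by the standard deviation of the outcome. The sketch below applies this to the pooled estimate and 95% CI reported in the abstract (SDM = 0.116, 0.08 to 0.15). The standard deviations of daily screen time it loops over are hypothetical assumptions, not values from the included studies, and `smd_to_minutes` is an illustrative name.

```python
def smd_to_minutes(smd, sd_minutes):
    """Approximate raw difference in min/day implied by an SMD: SMD x SD of screen time."""
    return smd * sd_minutes


# Pooled estimate and 95% CI reported in the abstract.
ESTIMATES = {"point estimate": 0.116, "lower 95% CI": 0.08, "upper 95% CI": 0.15}

# Hypothetical standard deviations of children's daily screen time (min/day); assumptions only.
ASSUMED_SDS = [60.0, 90.0, 120.0]

if __name__ == "__main__":
    for sd in ASSUMED_SDS:
        row = ", ".join(
            f"{label}: {smd_to_minutes(smd, sd):.1f}" for label, smd in ESTIMATES.items()
        )
        print(f"SD = {sd:.0f} min/day -> {row} min/day")
```

Under an assumed SD of 90 min/day, for instance, the point estimate corresponds to roughly 10 min/day less screen time, a figure that can then be weighed against the 2 h per day recommendation. Because the conversion scales with the SD, the same SMD implies a larger absolute change in populations whose screen time varies more.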

Overall, behavioral interventions to reduce children’s screen time appear effective. As interventions are scaled, it is important to determine whether the catalyzing effect of specific behavioral strategies on reducing children’s screen time remains significant. Smaller studies, which have the advantage of stronger internal validity in terms of sample selection and delivery agent, may produce larger effect sizes. The factors that are highly controlled and that contribute to internal validity in smaller studies are the same factors that reduce the ecological validity of these studies. The decision to tinker with these components when scaling smaller studies makes it untenable to assume that the effects documented in small studies will generalize to larger trials. If the purpose of a pilot study is to inform the decision to translate the intervention to larger trials, researchers should design pilot interventions with an emphasis on scalability and generalizability. Neglecting or minimizing the importance of scalability in the pilot design phase will continue to produce a literature base showing that, for a small number of people, under specific conditions (i.e., intervention setting, duration, delivery agent), and with certain behavioral strategies, interventions can be effective. However, the public health implications of such a narrow purview are likely to be minimal. If our goal as public health researchers is to improve the health of the population, we need to broaden our thinking during the early stages of intervention development to use our resources effectively and improve health outcomes for the maximum number of individuals.

Availability of data and materials

The datasets generated and analyzed during the current study are available from the corresponding author on reasonable request.

References

Tremblay MS, Aubert S, Barnes JD, Saunders TJ, Carson V, Latimer-Cheung AE, et al. Sedentary Behavior Research Network (SBRN) - terminology consensus project process and outcome. Int J Behav Nutr Phys Act. 2017;14(1):75.

Houghton S, Hunter SC, Rosenberg M, Wood L, Zadow C, Martin K, Shilton T. Virtually impossible: limiting Australian children and adolescents daily screen based media use. BMC Public Health. 2015;15(5):1–11.

AAP Council on Communications and Media. Media and Young Minds. Pediatrics. 2016;138(5):1–8. https://doi.org/10.1542/peds.2016-2591.

Tremblay MS, Chaput JP, Adamo KB, et al. Canadian 24-Hour Movement Guidelines for the Early Years (0–4 years): an Integration of Physical Activity, Sedentary Behaviour, and Sleep. BMC Public Health. 2017;17:874. https://doi.org/10.1186/s12889-017-4859-6.

Tremblay MS, Carson V, Chaput JP, Gorber SC, Dinh T, Duggan M, et al. Canadian 24-hour movement guidelines for children and youth: an integration of physical activity, sedentary behaviour, and sleep. Appl Physiol Nutr Metab. 2016;41(6 Suppl 3):S311–27. https://doi.org/10.1139/apnm-2016-0151.

Jackson DM, Djafarian K, Stewart J, Speakman JR. Increased television viewing is associated with elevated body fatness but not with lower total energy expenditure in children. Am J Clin Nutr. 2009;89(4):1031–6.

Fang K, Mu M, Liu K, He Y. Screen time and childhood overweight/obesity: a systematic review and meta-analysis. Child Care Health Dev. 2019;45(5):744–53.

Engberg E, Figueiredo RAO, Rounge TB, Weiderpass E, Viljakainen H. Heavy screen use on weekends in childhood predicts increased body mass index in adolescence: a three-year follow-up study. J Adolesc Health. 2020;66(5):559–66.

Mayne SL, Virudachalam S, Fiks AG. Clustering of unhealthy behaviors in a nationally representative sample of U.S. children and adolescents. Prev Med. 2020;130:105892.

Marsh S, Ni Mhurchu C, Maddison R. The non-advertising effects of screen-based sedentary activities on acute eating behaviours in children, adolescents, and young adults. A systematic review. Appetite. 2013;71:259–73.

Wu L, Sun S, He Y, Jiang B. The effect of interventions targeting screen time reduction: a systematic review and meta-analysis. Medicine (Baltimore). 2016;95(27):e4029.

Biddle SJ, O’Connell S, Braithwaite RE. Sedentary behaviour interventions in young people: a meta-analysis. Br J Sports Med. 2011;45(11):937–42.

Ramsey Buchanan L, Rooks-Peck CR, Finnie RKC, Wethington HR, Jacob V, Fulton JE, et al. Reducing recreational sedentary screen time: a community guide systematic review. Am J Prev Med. 2016;50(3):402–15.

Maniccia DM, Davison KK, Marshall SJ, Manganello JA, Dennison BA. A meta-analysis of interventions that target children’s screen time for reduction. Pediatrics. 2011;128(1):e193–210.

Abraham C, Michie S. A taxonomy of behavior change techniques used in interventions. Health Psychol. 2008;27(3):379–87.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:n71.

Friedrich RR, Polet JP, Schuch I, Wagner MB. Effect of intervention programs in schools to reduce screen time: a meta-analysis. J Pediatr (Rio J). 2014;90(3):232–41.

Dobbins M, Husson H, DeCorby K, LaRocca RL. School-based physical activity programs for promoting physical activity and fitness in children and adolescents aged 6 to 18. Cochrane Database Syst Rev. 2013;2013(2):CD007651. https://doi.org/10.1002/14651858.CD007651.pub2.

Egan CA, Webster CA, Beets MW, Weaver RG, Russ L, Michael D, et al. Sedentary time and behavior during school: a systematic review and meta-analysis. Am J Health Educ. 2019;50(5):283–90.

McMichan L, Gibson AM, Rowe DA. Classroom-based physical activity and sedentary behavior interventions in adolescents: a systematic review and meta-analysis. J Phys Act Health. 2018;15(5):383–93.

Jones M, Defever E, Letsinger A, Steele J, Mackintosh KA. A mixed-studies systematic review and meta-analysis of school-based interventions to promote physical activity and/or reduce sedentary time in children. J Sport Health Sci. 2020;9(1):3–17.

Borrelli B, Tooley EM, Scott-Sheldon LAJ. Motivational interviewing for parent-child health interventions: a systematic review and meta-analysis. Pediatr Dent. 2015;37.

Schmidt ME, Haines J, O’Brien A, McDonald J, Price S, Sherry B, et al. Systematic review of effective strategies for reducing screen time among young children. Obesity (Silver Spring). 2012;20(7):1338–54.

Downing KL, Hnatiuk JA, Hinkley T, Salmon J, Hesketh KD. Interventions to reduce sedentary behaviour in 0-5-year-olds: a systematic review and meta-analysis of randomised controlled trials. Br J Sports Med. 2018;52(5):314–21.

Beets MW, Beighle A, Erwin HE, Huberty JL. After-school program impact on physical activity and fitness: a meta-analysis. Am J Prev Med. 2009;36(6):527–37.

Shin Y, Kim SK, Lee M. Mobile phone interventions to improve adolescents’ physical health: a systematic review and meta-analysis. Public Health Nurs. 2019;36(6):787–99.

Campbell K, Waters E, O’Meara S, Summerbell C. Interventions for preventing obesity in childhood: a systematic review. Obes Rev. 2001;2:149–57.

van de Kolk I, Verjans-Janssen SRB, Gubbels JS, Kremers SPJ, Gerards S. Systematic review of interventions in the childcare setting with direct parental involvement: effectiveness on child weight status and energy balance-related behaviours. Int J Behav Nutr Phys Act. 2019;16(1):110.

Nguyen S, Hacker AL, Henderson M, Barnett T, Mathieu ME, Pagani L, et al. Physical activity programs with post-intervention follow-up in children: a comprehensive review according to categories of intervention. Int J Environ Res Public Health. 2016;13(7):664.

Nally S, Carlin A, Blackburn NE, Baird JS, Salmon J, Murphy MH, et al. The effectiveness of school-based interventions on obesity-related behaviours in primary school children: a systematic review and meta-analysis of randomised controlled trials. Children (Basel). 2021;8(6):489.

Lewis L, Povey R, Rose S, Cowap L, Semper H, Carey A, et al. What behavior change techniques are associated with effective interventions to reduce screen time in 0–5 year olds? A narrative systematic review. Prev Med Rep. 2021;23:101429.

Martin KB, Bednarz JM, Aromataris EC. Interventions to control children’s screen use and their effect on sleep: a systematic review and meta-analysis. J Sleep Res. 2021;30(3):e13130.

Blackburn NE, Wilson JJ, McMullan II, Caserotti P, Gine-Garriga M, Wirth K, et al. The effectiveness and complexity of interventions targeting sedentary behaviour across the lifespan: a systematic review and meta-analysis. Int J Behav Nutr Phys Act. 2020;17(1):53.

Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, et al. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med. 2013;46(1):81–95.

National Heart, Lung, and Blood Institute. Study quality assessment tools: NHLBI; 2021. Available from: https://www.nhlbi.nih.gov/health-topics/study-quality-assessment-tools. Accessed 26 July 2021.

Borenstein M, Hedges LV, Higgins JPT, Rothstein HR. Introduction to meta-analysis. 1st ed. Chichester: Wiley; 2009.

Adams EL, Marini ME, Stokes J, Birch LL, Paul IM, Savage JS. INSIGHT responsive parenting intervention reduces infant’s screen time and television exposure. Int J Behav Nutr Phys Act. 2018;15(1):24.

Anand S, Davis AD, Ahmed R, Jacobs R, Xie C, Hill A, Sowden J, Atkinson S, Blimkie C, Brouwers M, Morrison K, de Koning L, Gerstein H, Yusuf S. A family-based intervention to promote healthy lifestyles in an aboriginal community in Canada. Can J Public Health. 2007;98(6):447–52.

Birken CS, Maguire J, Mekky M, Manlhiot C, Beck CE, Degroot J, et al. Office-based randomized controlled trial to reduce screen time in preschool children. Pediatrics. 2012;130(6):1110–5.

Brotman LM, Dawson-McClure S, Huang KY, Theise R, Kamboukos D, Wang J, et al. Early childhood family intervention and long-term obesity prevention among high-risk minority youth. Pediatrics. 2012;129(3):e621–8.

Brown B, Harris KJ, Heil D, Tryon M, Cooksley A, Semmens E, et al. Feasibility and outcomes of an out-of-school and home-based obesity prevention pilot study for rural children on an American Indian reservation. Pilot Feasibility Stud. 2018;4:129.

Buscemi J, Odoms-Young A, Stolley MR, Schiffer L, Blumstein L, Clark MH, et al. Comparative effectiveness trial of an obesity prevention intervention in EFNEP and SNAP-ED: primary outcomes. Nutrients. 2019;11(5):1012.

Byrd-Bredbenner C, Martin-Biggers J, Povis GA, Worobey J, Hongu N, Quick V. Promoting healthy home environments and lifestyles in families with preschool children: HomeStyles, a randomized controlled trial. Contemp Clin Trials. 2018;64:139–51.

Canavera M, Sharma M, Murnan J. Development and pilot testing a social cognitive theory-based intervention to prevent childhood obesity among elementary students in rural Kentucky. Int Q Community Health Educ. 2008;29(1):57–70.

Cespedes EM, Horan CM, Gillman MW, Gortmaker SL, Price S, Rifas-Shiman SL, et al. Participant characteristics and intervention processes associated with reductions in television viewing in the High Five for Kids study. Prev Med. 2014;62:64–70.

Chen JL, Guedes CM, Lung AE. Smartphone-based healthy weight management intervention for Chinese American adolescents: short-term efficacy and factors associated with decreased weight. J Adolesc Health. 2019;64(4):443–9.

Cong Z, Feng D, Liu Y, Esperat MC. Sedentary behaviors among Hispanic children: influences of parental support in a school intervention program. Am J Health Promot. 2012;26(5):270–80.

Contento IR, Koch PA, Lee H, Sauberli W, Calabrese-Barton A. Enhancing personal agency and competence in eating and moving: formative evaluation of a middle school curriculum–choice, control, and change. J Nutr Educ Behav. 2007;39(5 Suppl):S179–86.

Contento IR, Koch PA, Lee H, Calabrese-Barton A. Adolescents demonstrate improvement in obesity risk behaviors after completion of choice, control & change, a curriculum addressing personal agency and autonomous motivation. J Am Diet Assoc. 2010;110(12):1830–9.

Cronk CE, Hoffmann RG, Mueller MJ, Zerpa-Uriona V, Dasgupta M, Enriquez F. Effects of a culturally tailored intervention on changes in body mass index and health-related quality of life of Latino children and their parents. Am J Health Promot. 2011;25(4):e1–11.

Crouter SE, de Ferranti SD, Whiteley J, Steltz SK, Osganian SK, Feldman HA, et al. Effect on physical activity of a randomized afterschool intervention for inner city children in 3rd to 5th grade. PLoS One. 2015;10(10):e0141584.

Cullen KW, Thompson D, Boushey C, Konzelmann K, Chen TA. Evaluation of a web-based program promoting healthy eating and physical activity for adolescents: teen choice: food and fitness. Health Educ Res. 2013;28(4):704–14.

Dennison BA, Russo TJ, Burdick PA, Jenkins PL. An intervention to reduce television viewing by preschool children. Arch Pediatr Adolesc Med. 2004;158:170–6.

Dickin KL, Hill TF, Dollahite JS. Practice-based evidence of effectiveness in an integrated nutrition and parenting education intervention for low-income parents. J Acad Nutr Diet. 2014;114(6):945–50.

Dunton GF, Lagloire R, Robertson T. Using the RE-AIM framework to evaluate the statewide dissemination of a school-based physical activity and nutrition curriculum: “Exercise Your Options.” Am J Health Promot. 2009;23(4):229–32.

Eagle TF, Gurm R, Smith CA, Corriveau N, DuRussell-Weston J, Palma-Davis L, et al. A middle school intervention to improve health behaviors and reduce cardiac risk factors. Am J Med. 2013;126(10):903–8.

Early GJ, Cheffer ND. Motivational interviewing and home visits to improve health behaviors and reduce childhood obesity: a pilot study. Hisp Health Care Int. 2019;17(3):103–10.

Epstein LH, Paluch RA, Kilanowski CK, Raynor HA. The effect of reinforcement or stimulus control to reduce sedentary behavior in the treatment of pediatric obesity. Health Psychol. 2004;23(4):371–80.

Escobar-Chaves SL, Markham CM, Addy RC, Greisinger A, Murray NG, Brehm B. The Fun Families Study: intervention to reduce children’s TV viewing. Obesity (Silver Spring). 2010;18(Suppl 1):S99–101.

Essery EV, DiMarco NM, Rich SS, Nichols DL. Mothers of preschoolers report using less pressure in child feeding situations following a newsletter intervention. J Nutr Educ Behav. 2008;40(2):110–5.

Fitzgibbon ML, Stolley MR, Schiffer L, Van Horn L, Kaufer Christoffel K, Dyer A. Two-year follow-up results for Hip-Hop to Health Jr.: a randomized controlled trial for overweight prevention in preschool minority children. J Pediatr. 2005;146(5):618–25.

Fitzgibbon ML, Stolley MR, Schiffer L, Van Horn L, Kaufer Christoffel K, Dyer A. Hip-Hop to Health Jr for Latino preschool children. Obesity. 2006;14(9):1616–25.

Fitzgibbon ML, Stolley MR, Schiffer LA, Braunschweig CL, Gomez SL, Van Horn L, et al. Hip-Hop to Health Jr. obesity prevention effectiveness trial: postintervention results. Obesity (Silver Spring). 2011;19(5):994–1003.

Fitzgibbon ML, Stolley MR, Schiffer L, Kong A, Braunschweig CL, Gomez-Perez SL, et al. Family-based hip-hop to health: outcome results. Obesity. 2013;21(2):274–83.

Folta SC, Kuder JF, Goldberg JP, Hyatt RR, Must A, Naumova EN, et al. Changes in diet and physical activity resulting from the Shape Up Somerville community intervention. BMC Pediatr. 2013;13:157.

Ford BS, McDonald TE, Owens AS, Robinson TN. Primary care interventions to reduce television viewing in African-American children. Am J Prev Med. 2002;22(2):106–9.

Foster GD, Sherman S, Borradaile KE, Grundy KM, Vander Veur SS, Nachmani J, et al. A policy-based school intervention to prevent overweight and obesity. Pediatrics. 2008;121(4):e794–802.

French SA, Gerlach AF, Mitchell NR, Hannan PJ, Welsh EM. Household obesity prevention: Take Action–a group-randomized trial. Obesity (Silver Spring). 2011;19(10):2082–8.

French SA, Sherwood NE, JaKa MM, Haapala JL, Ebbeling CB, Ludwig DS. Physical changes in the home environment to reduce television viewing and sugar-sweetened beverage consumption among 5- to 12-year-old children: a randomized pilot study. Pediatr Obes. 2016;11(5):e12–5.

French SA, Sherwood NE, Veblen-Mortenson S, Crain AL, JaKa MM, Mitchell NR, et al. Multicomponent obesity prevention intervention in low-income preschoolers: primary and subgroup analyses of the NET-Works randomized clinical trial, 2012–2017. Am J Public Health. 2018;108(12):1695–706.

Fulkerson JA, Friend S, Horning M, Flattum C, Draxten M, Neumark-Sztainer D, et al. Family home food environment and nutrition-related parent and child personal and behavioral outcomes of the healthy Home Offerings via the Mealtime Environment (HOME) Plus program: a randomized controlled trial. J Acad Nutr Diet. 2018;118(2):240–51.

Gentile DA, Welk G, Eisenmann JC, Reimer RA, Walsh DA, Russell DW, et al. Evaluation of a multiple ecological level child obesity prevention program: switch what you do, view, and chew. BMC Med. 2009;7:49.

Gorin A, Raynor H, Chula-Maguire K, Wing R. Decreasing household television time: a pilot study of a combined behavioral and environmental intervention. Behav Interv. 2006;21(4):273–80.

Gortmaker SL, Cheung LW, Peterson KE, Chornitz G, Cradle JH, Dart H, Fox MK, Bullock RB, Sobol AM, Colditz G, Field AE, Laird N. Impact of a school-based interdisciplinary intervention on diet and physical activity among urban primary school children: eat well and keep moving. Arch Pediatr Adolesc Med. 1999;153:975–83.

Gortmaker SL, Peterson K, Wiecha J, Sobol AM, Dixit S, Fox MK, Laird N. Reducing obesity via a school-based interdisciplinary intervention among youth: Planet Health. Arch Pediatr Adolesc Med. 1999;153:409–18.

Haines J, McDonald J, O’Brien A, Sherry B, Bottino CJ, Schmidt ME, et al. Healthy habits, happy homes: randomized trial to improve household routines for obesity prevention among preschool-aged children. JAMA Pediatr. 2013;167(11):1072–9.

Hull PC, Buchowski M, Canedo JR, Beech BM, Du L, Koyama T, et al. Childhood obesity prevention cluster randomized trial for Hispanic families: outcomes of the healthy families study. Pediatr Obes. 2018;13(11):686–96.

Jamerson T, Sylvester R, Jiang Q, Corriveau N, DuRussel-Weston J, Kline-Rogers E, et al. Differences in cardiovascular disease risk factors and health behaviors between black and non-black students participating in a school-based health promotion program. Am J Health Promot. 2017;31(4):318–24.

Johnson DB, Birkett D, Evens C, Pickering S. Statewide intervention to reduce television viewing in WIC clients and staff. Am J Health Promot. 2005;19(6):418–21.

Johnston BD, Huebner CE, Anderson ML, Tyll LT, Thompson RS. Healthy Steps in an integrated delivery system: child and parent outcomes at 30 months. Arch Pediatr Adolesc Med. 2006;160:793–800.

Jones D, Hoelscher DM, Kelder SH, Hergenroeder A, Sharma SV. Increasing physical activity and decreasing sedentary activity in adolescent girls–the Incorporating More Physical Activity and Calcium in Teens (IMPACT) study. Int J Behav Nutr Phys Act. 2008;5:42.

Keita AD, Risica PM, Drenner KL, Adams I, Gorham G, Gans KM. Feasibility and acceptability of an early childhood obesity prevention intervention: results from the healthy homes, healthy families pilot study. J Obes. 2014;2014:378501.

Kelder S, Hoelscher DM, Barroso CS, Walker JL, Cribb P, Hu S. The CATCH Kids Club: a pilot after-school study for improving elementary students’ nutrition and physical activity. Public Health Nutr. 2005;8(2):133–40.

Killough G, Battram D, Kurtz J, Mandich G, Francis L, He M. Pause-2-Play: a pilot school-based obesity prevention program. Rev Bras Saude Mater Infant. 2010;10(3):303–11.

King MH, Lederer AM, Sovinski D, Knoblock HM, Meade RK, Seo DC, et al. Implementation and evaluation of the HEROES initiative: a tri-state coordinated school health program to reduce childhood obesity. Health Promot Pract. 2014;15(3):395–405.

Knowlden AP, Sharma M, Cottrell RR, Wilson BR, Johnson ML. Impact evaluation of Enabling Mothers to Prevent Pediatric Obesity through Web-Based Education and Reciprocal Determinism (EMPOWER) randomized control trial. Health Educ Behav. 2015;42(2):171–84.

Kong AS, Burks N, Conklin C, Roldan C, Skipper B, Scott S, et al. A pilot walking school bus program to prevent obesity in Hispanic elementary school children: role of physician involvement with the school community. Clin Pediatr (Phila). 2010;49(10):989–91.

Kong A, Buscemi J, Stolley MR, Schiffer LA, Kim Y, Braunschweig CL, et al. Hip-Hop to Health Jr. randomized effectiveness trial: 1-year follow-up results. Am J Prev Med. 2016;50(2):136–44.

Ling J, Robbins LB, Zhang N, Kerver JM, Lyons H, Wieber N, et al. Using Facebook in a healthy lifestyle intervention: feasibility and preliminary efficacy. West J Nurs Res. 2018;40(12):1818–42.

Lumeng JC, Miller AL, Horodynski MA, Brophy-Herb HE, Contreras D, Lee H, et al. Improving self-regulation for obesity prevention in head start: a randomized controlled trial. Pediatrics. 2017;139(5):e20162047.

Lynch BA, Gentile N, Maxson J, Quigg S, Swenson L, Kaufman T. Elementary school-based obesity intervention using an educational curriculum. J Prim Care Community Health. 2016;7(4):265–71.

McGarvey E, Keller A, Forrester M, Williams E, Seward D, Suttie DE. Feasibility and benefits of a parent-focused preschool child obesity intervention. Am J Public Health. 2004;94(9):1490–5.

Mendelsohn AL, Dreyer BP, Brockmeyer CA, Berkule-Silberman SB, Huberman HS, Tomopoulos S. Randomized controlled trial of primary care pediatric parenting programs: effect on reduced media exposure in infants, mediated through enhanced parent-child interaction. Arch Pediatr Adolesc Med. 2011;165(1):42–8.

Mendoza JA, Baranowski T, Jaramillo S, Fesinmeyer MD, Haaland W, Thompson D, et al. Fit 5 Kids TV reduction program for Latino preschoolers: a cluster randomized controlled trial. Am J Prev Med. 2016;50(5):584–92.

Mouttapa M, Corliss C, Bryars T, Khatib DA, Napoli J, Patterson D, et al. A preliminary evaluation of a cost-effective, in-class physical activity and nutrition education intervention for 3rd through 6th grade students. Health Behav Policy Rev. 2016;3(3):248–58.

Natale RA, Lopez-Mitnik G, Uhlhorn SB, Asfour L, Messiah SE. Effect of a child care center-based obesity prevention program on body mass index and nutrition practices among preschool-aged children. Health Promot Pract. 2014;15(5):695–705.

Neumark-Sztainer DR, Friend SE, Flattum CF, Hannan PJ, Story MT, Bauer KW, et al. New Moves-preventing weight-related problems in adolescent girls: a group-randomized study. Am J Prev Med. 2010;39(5):421–32.

Ostbye T, Krause KM, Stroo M, Lovelady CA, Evenson KR, Peterson BL, et al. Parent-focused change to prevent obesity in preschoolers: results from the KAN-DO study. Prev Med. 2012;55(3):188–95.

Paradis G, Levesque L, Macaulay AC, Cargo M, McComber A, Kirby R, et al. Impact of a diabetes prevention program on body size, physical activity, and diet among Kanien’keha:ka (Mohawk) children 6 to 11 years old: 8-year results from the Kahnawake Schools Diabetes Prevention Project. Pediatrics. 2005;115(2):333–9.

Patrick K, Calfas KJ, Norman GJ, Zabinski MF, Sallis JF, Rupp J, Covin J, Cella J. Randomized controlled trial of a primary care and home-based intervention for physical activity and nutrition behaviors: PACE+ for adolescents. Arch Pediatr Adolesc Med. 2006;160:128–36.

Pbert L, Druker S, Barton B, Olendzki B, Andersen V, Persuitte G, et al. Use of a FITLINE to support families of overweight and obese children in pediatric practices. Child Obes. 2016;12(1):33–43.

Peterson KE, Spadano-Gasbarro JL, Greaney ML, Austin SB, Mezgebu S, Hunt AT, et al. Three-year improvements in weight status and weight-related behaviors in middle school students: the Healthy Choices Study. PLoS One. 2015;10(8):e0134470.

Robinson TN. Behavioural treatment of childhood and adolescent obesity. Int J Obes. 1999;23:S52–7.

Robinson TN, Killen JD, Matheson DM, Pruitt LA, Owens AS, Flint-Moore NM, et al. Dance and reducing television viewing to prevent weight gain in African-American girls: the Stanford GEMS pilot study. Ethn Dis. 2003;13:65–7.

Robinson TN, Borzekowski DLG. Effects of the SMART classroom curriculum to reduce child and family screen time. J Commun. 2006;56(1):1–26.

Robinson TN, Matheson DM, Kraemer HC, Wilson DM, Obarzanek E, Thompson NS, et al. A randomized controlled trial of culturally tailored dance and reducing screen time to prevent weight gain in low-income African American girls: Stanford GEMS. Arch Pediatr Adolesc Med. 2010;164(11):995–1004.

Saelens BE, Sallis JF, Wilfley DE, Patrick K, Cella JA, Buchta R. Behavioral weight control for overweight adolescents initiated in primary care. Obes Res. 2002;10(1):22–32.

Sanders W, Parent J, Forehand R. Parenting to reduce child screen time: a feasibility pilot study. J Dev Behav Pediatr. 2018;39(1):46–54.

Schwartz RP, Vitolins MZ, Case LD, Armstrong SC, Perrin EM, Cialone J, et al. The YMCA Healthy, Fit, and Strong Program: a community-based, family-centered, low-cost obesity prevention/treatment pilot study. Child Obes. 2012;8(6):577–82.

Spruijt-Metz D, Nguyen-Michel ST, Goran MI, Chou CP, Huang TT. Reducing sedentary behavior in minority girls via a theory-based, tailored classroom media intervention. Int J Pediatr Obes. 2008;3(4):240–8.

St George SM, Wilson DK, Schneider EM, Alia KA. Project SHINE: effects of parent-adolescent communication on sedentary behavior in African American adolescents. J Pediatr Psychol. 2013;38(9):997–1009.

Story M, Sherwood NE, Himes JH, Davis M, Jacobs DR, Cartwright Y, et al. An after-school obesity prevention program for African-American girls: the Minnesota GEMS pilot study. Ethn Dis. 2003;13:54–64.

Taveras EM, Blackburn K, Gillman MW, Haines J, McDonald J, Price S, et al. First steps for mommy and me: a pilot intervention to improve nutrition and physical activity behaviors of postpartum mothers and their infants. Matern Child Health J. 2011;15(8):1217–27.

Taveras EM, Gortmaker SL, Hohman KH, Horan CM, Kleinman KP, Mitchell K, et al. Randomized controlled trial to improve primary care to prevent and manage childhood obesity: the High Five for Kids study. Arch Pediatr Adolesc Med. 2011;165(8):714–22.

Todd MK, Reis-Bergan MJ, Sidman CL, Flohr JA, Jameson-Walker K, Spicer-Bartolau T, et al. Effect of a family-based intervention on electronic media use and body composition among boys aged 8–11 years: a pilot study. J Child Health Care. 2008;12(4):344–58.

Tomayko EJ, Prince RJ, Cronin KA, Adams AK. The Healthy Children, Strong Families intervention promotes improvements in nutrition, activity and body weight in American Indian families with young children. Public Health Nutr. 2016;19(15):2850–9.

Trost SG, Tang R, Loprinzi PD. Feasibility and efficacy of a church-based intervention to promote physical activity in children. J Phys Act Health. 2009;6:741–9.

Tucker SJ, Ytterberg KL, Lenoch LM, Schmit TL, Mucha DI, Wooten JA, et al. Reducing pediatric overweight: nurse-delivered motivational interviewing in primary care. J Pediatr Nurs. 2013;28(6):536–47.

Whaley SE, McGregor S, Jiang L, Gomez J, Harrison G, Jenks E. A WIC-based intervention to prevent early childhood overweight. J Nutr Educ Behav. 2010;42(3 Suppl):S47–51.

Whittemore R, Jeon S, Grey M. An internet obesity prevention program for adolescents. J Adolesc Health. 2013;52(4):439–47.

Williamson DA, Anton SD, Champagne C, Han H, Lewis L, Martin C, et al. Wise Mind Project: a school-based environmental approach for preventing weight gain in children. Obesity. 2007;15(4):906–17.

Wofford L, Froeber D, Clinton B, Ruchman E. Free afterschool program for at-risk African American children: findings and lessons. Fam Community Health. 2013;36(4):299–310.

Zimmerman FJ, Ortiz SE, Christakis DA, Elkun D. The value of social-cognitive theory to reducing preschool TV viewing: a pilot randomized trial. Prev Med. 2012;54(3–4):212–8.

Haire-Joshu D, Schwarz C, Jacob R, Kristen P, Johnston S, Quinn K, et al. Raising Well at Home: a pre-post feasibility study of a lifestyle intervention for caregivers and their child with obesity. Pilot Feasibility Stud. 2020;6:149.

Bickham DS, Hswen Y, Slaby RG, Rich M. A preliminary evaluation of a school-based media education and reduction intervention. J Prim Prev. 2018;39(3):229–45.

Walton K, Filion AJ, Gross D, Morrongiello B, Darlington G, Randall Simpson J, et al. Parents and Tots Together: pilot randomized controlled trial of a family-based obesity prevention intervention in Canada. Can J Public Health. 2016;106(8):e555–62.

Aittasalo M, Jussila AM, Tokola K, Sievanen H, Vaha-Ypya H, Vasankari T. Kids Out; evaluation of a brief multimodal cluster randomized intervention integrated in health education lessons to increase physical activity and reduce sedentary behavior among eighth graders. BMC Public Health. 2019;19(1):415.

Bäcklund C, Sundelin G, Larsson C. Effects of a 2-year lifestyle intervention on physical activity in overweight and obese children. Adv Physiother. 2011;13(3):97–109.

Bjelland M, Bergh IH, Grydeland M, Klepp K, Andersen LF, Anderssen SA, et al. Changes in adolescents’ intake of sugar-sweetened beverages and sedentary behavior: results at 8 month mid-way assessment of the HEIA study - a comprehensive, multi-component school-based randomized trial. Int J Behav Nutr Phys Act. 2011;8(63):1–11.

Breslin G, Brennan D, Rafferty R, Gallagher AM, Hanna D. The effect of a healthy lifestyle programme on 8–9 year olds from social disadvantage. Arch Dis Child. 2012;97(7):618–24.

Busch V, De Leeuw JR, Zuithoff NP, Van Yperen TA, Schrijvers AJ. A controlled health promoting school study in the Netherlands: effects after 1 and 2 years of intervention. Health Promot Pract. 2015;16(4):592–600.

Centis E, Marzocchi R, Di Luzio R, Moscatiello S, Salardi S, Villanova N, et al. A controlled, class-based multicomponent intervention to promote healthy lifestyle and to reduce the burden of childhood obesity. Pediatr Obes. 2012;7(6):436–45.

Chin A Paw MJ, Singh AS, Brug J, van Mechelen W. Why did soft drink consumption decrease but screen time not? Mediating mechanisms in a school-based obesity prevention program. Int J Behav Nutr Phys Act. 2008;5:41.

Davoli AM, Broccoli S, Bonvicini L, Fabbri A, Ferrari E, D’Angelo S, et al. Pediatrician-led motivational interviewing to treat overweight children: an RCT. Pediatrics. 2013;132:e1236–46.

De Coen V, De Bourdeaudhuij I, Vereecken C, Verbestel V, Haerens L, Huybrechts I, et al. Effects of a 2-year healthy eating and physical activity intervention for 3-6-year-olds in communities of high and low socio-economic status: the POP (Prevention of Overweight among Pre-school and school children) project. Public Health Nutr. 2012;15(9):1737–45.

Ezendam NP, Brug J, Oenema A. Evaluation of the Web-based computer-tailored FATaintPHAT intervention to promote energy balance among adolescents: results from a school cluster randomized trial. Arch Pediatr Adolesc Med. 2012;166(3):248–55.

Fassnacht DB, Ali K, Silva C, Goncalves S, Machado PP. Use of text messaging services to promote health behaviors in children. J Nutr Educ Behav. 2015;47(1):75–80.

Gonzalez-Jimenez E, Cañadas GR, Fernandez-Castillo R, Canadas GA. Analysis of the life-style and dietary habits of a population of adolescents. Nutr Hosp. 2013;28(6):1937–42.

Grydeland M, Bergh IH, Bjelland M, Lien N, Andersen LF, Ommundsen Y, et al. Intervention effects on physical activity: the HEIA study - a cluster randomized controlled trial. Int J Behav Nutr Phys Act. 2013;10(17):1–13.

Handel MN, Larsen SC, Rohde JF, Stougaard M, Olsen NJ, Heitmann BL. Effects of the Healthy Start randomized intervention trial on physical activity among normal weight preschool children predisposed to overweight and obesity. PLoS One. 2017;12(10):e0185266.

Harrison M, Burns CF, McGuinness M, Heslin J, Murphy NM. Influence of a health education intervention on physical activity and screen time in primary school children: ‘Switch Off-Get Active.’ J Sci Med Sport. 2006;9(5):388–94.

Kipping RR, Payne C, Lawlor DA. Randomised controlled trial adapting US school obesity prevention to England. Arch Dis Child. 2008;93(6):469–73.

Kipping RR, Howe LD, Jago R, Campbell R, Wells S, Chittleborough CR, et al. Effect of intervention aimed at increasing physical activity, reducing sedentary behaviour, and increasing fruit and vegetable consumption in children: active for Life Year 5 (AFLY5) school based cluster randomised controlled trial. BMJ. 2014;348:g3256.

Kobel S, Wirt T, Schreiber A, Kesztyus D, Kettner S, Erkelenz N, et al. Intervention effects of a school-based health promotion programme on obesity related behavioural outcomes. J Obes. 2014;2014:476230.

Nyberg G, Sundblom E, Norman A, Bohman B, Hagberg J, Elinder LS. Effectiveness of a universal parental support programme to promote healthy dietary habits and physical activity and to prevent overweight and obesity in 6-year-old children: the Healthy School Start Study, a cluster-randomised controlled trial. PLoS One. 2015;10(2):e0116876.

Nyberg G, Norman A, Sundblom E, Zeebari Z, Elinder LS. Effectiveness of a universal parental support programme to promote health behaviours and prevent overweight and obesity in 6-year-old children in disadvantaged areas, the Healthy School Start Study II, a cluster-randomised controlled trial. Int J Behav Nutr Phys Act. 2016;13:4.

O’Dwyer MV, Fairclough SJ, Knowles Z, Stratton G. Effect of a family focused active play intervention on sedentary time and physical activity in preschool children. Int J Behav Nutr Phys Act. 2012;9(117):1–13.

Plachta-Danielzik S, Pust S, Asbeck I, Czerwinski-Mast M, Langnase K, Fischer C, et al. Four-year follow-up of school-based intervention on overweight children: the KOPS study. Obesity. 2007;15(12):3159–69.

Plachta-Danielzik S, Landsberg B, Lange D, Seiberl J, Muller MJ. Eight-year follow-up of school-based intervention on childhood overweight–the Kiel Obesity Prevention Study. Obes Facts. 2011;4(1):35–43.

Puder JJ, Marques-Vidal P, Schindler C, Zahner L, Niederer I, Burgi F, et al. Effect of multidimensional lifestyle intervention on fitness and adiposity in predominantly migrant preschool children (Ballabeina): cluster randomised controlled trial. BMJ. 2011;343:d6195.

Rito AI, Carvalho MA, Ramos C, Breda J. Program Obesity Zero (POZ)–a community-based intervention to address overweight primary-school children from five Portuguese municipalities. Public Health Nutr. 2013;16(6):1043–51.

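Serra-Paya N, Ensenyat Solé A, Blanco NA. Intervención multidisciplinar y no competitiva en el ámbito de la salud pública para el tratamiento del sedentarismo, el sobrepeso y la obesidad infantil: Programa NEREU [Multidisciplinary, non-competitive public health intervention for the treatment of sedentary behavior, overweight, and childhood obesity: the NEREU Program]. Apunts Educació Física i Esports. 2014;117:7–22.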

Simon C, Schweitzer B, Oujaa M, Wagner A, Arveiler D, Triby E, et al. Successful overweight prevention in adolescents by increasing physical activity: a 4-year randomized controlled intervention. Int J Obes (Lond). 2008;32(10):1489–98.

Singh AS, Chin A Paw MJ, Brug J, Van Mechelen W. Dutch obesity intervention in teenagers: effectiveness of a school-based program on body composition and behavior. Arch Pediatr Adolesc Med. 2009;163(4):309–17.

Slootmaker SM, Chinapaw MJ, Seidell JC, van Mechelen W, Schuit AJ. Accelerometers and Internet for physical activity promotion in youth? Feasibility and effectiveness of a minimal intervention [ISRCTN93896459]. Prev Med. 2010;51(1):31–6.

Tuominen PPA, Husu P, Raitanen J, Kujala UM, Luoto RM. The effect of a movement-to-music video program on the objectively measured sedentary time and physical activity of preschool-aged children and their mothers: a randomized controlled trial. PLoS One. 2017;12(8):e0183317.

Van Grieken A, Renders CM, Veldhuis L, Looman CWN, Hirasing RA, Raat H. Promotion of a healthy lifestyle among 5-year-old overweight children: health behavior outcomes of the ‘Be active eat right’ study. BMC Public Health. 2014;14(59):1–13.

Van Stralen MM, de Meij J, Te Velde SJ, Van Der Wal MF, Van Mechelen W, Knol DL, et al. Mediators of the effect of the JUMP-in intervention on physical activity and sedentary behavior in Dutch primary schoolchildren from disadvantaged neighborhoods. Int J Behav Nutr Phys Act. 2012;9(131):1–12.

Verbestel V, De Coen V, Van Winckel M, Huybrechts I, Maes L, De Bourdeaudhuij I. Prevention of overweight in children younger than 2 years old: a pilot cluster-randomized controlled trial. Public Health Nutr. 2014;17(6):1384–92.

Verloigne M, Bere E, Van Lippevelde W, Maes L, Lien N, Vik FN, et al. The effect of the UP4FUN pilot intervention on objectively measured sedentary time and physical activity in 10–12 year old children in Belgium: the ENERGY-project. BMC Public Health. 2012;12:805.

Vik FN, Lien N, Berntsen S, De Bourdeaudhuij I, Grillenberger M, Manios Y, et al. Evaluation of the UP4FUN intervention: a cluster randomized trial to reduce and break up sitting time in European 10–12-year-old children. PLoS One. 2015;10(3):e0122612.

Wadolowska L, Hamulka J, Kowalkowska J, Ulewicz N, Hoffmann M, Gornicka M, et al. Changes in sedentary and active lifestyle, diet quality and body composition nine months after an education program in Polish students aged 11–12 years: report from the ABC of healthy eating study. Nutrients. 2019;11(2):331.

Willis TA, George J, Hunt C, Roberts KP, Evans CE, Brown RE, et al. Combating child obesity: impact of HENRY on parenting and family lifestyle. Pediatr Obes. 2014;9(5):339–50.

Willis TA, Roberts KP, Berry TM, Bryant M, Rudolf MC. The impact of HENRY on parenting and family lifestyle: a national service evaluation of a preschool obesity prevention programme. Public Health. 2016;136:101–8.