Abstract

Background

The integration of Objective Structured Clinical Examinations (OSCEs) within the professional pharmacy program contributes to assessing the readiness of pharmacy students for Advanced Pharmacy Practice Experiences (APPEs) and real-world practice.

Methods

In a study conducted at an Accreditation Council for Pharmacy Education (ACPE)-accredited Doctor of Pharmacy professional degree program, 69 students in their second professional year (P2) were engaged in OSCEs. These comprised 3 stations: best possible medication history, patient education, and healthcare provider communication. These stations were aligned with Entrustable Professional Activities (EPAs) and Ability Statements (AS). The assessment aimed to evaluate pharmacy students’ competencies in key areas such as ethical and legal behaviors, general communication skills, and interprofessional collaboration.

Results

The formulation of the OSCE stations highlighted the importance of aligning the learning objectives of the different stations with EPAs and AS. The evaluation of students’ ethical and legal behaviors, the interprofessional general communication, and collaboration showed average scores of 82.6%, 88.3%, and 89.3%, respectively. Student performance on communication-related statements exceeded 80% in all 3 stations. A significant difference (p < 0.0001) was found between the scores of the observer and the SP evaluator in stations 1 and 2, while comparable results (p = 0.426) were shown between the observer and the HCP evaluator in station 3. Additionally, a discrepancy among the observers’ assessments was detected across the 3 stations. The study shed light on challenges encountered during OSCE implementation, including faculty involvement, resource constraints, and the necessity for consistent evaluation criteria.

Conclusions

This study highlights the importance of refining OSCEs to align with EPAs and AS, ensuring a reliable assessment of pharmacy students’ clinical competencies and their preparedness for professional practice. It emphasizes the ongoing efforts needed to enhance the structure, content, and delivery of OSCEs in pharmacy education. The findings serve as a catalyst for addressing identified challenges and advancing the effectiveness of OSCEs in accurately evaluating students’ clinical readiness.

Background

The Objective Structured Clinical Examinations (OSCEs) were first described by Harden et al. in 1975 as a means to objectively assess the clinical competence of medical students in a safe practice environment using standardized medical case scenarios that simulate real cases [1]. In 1988, Harden further refined the definition of OSCEs as “an approach to the assessment of clinical competence in which the components of competence are assessed in a planned or structured way with the attention being paid to the objectivity of the examination” [2]. Compared to other assessments of learning in practice, OSCEs are generally more objective and less biased, considering the input of several examiners along with the use of different evaluation rubrics in the process [3].

Historically, pharmacy programs have mostly relied on standardized exams in the formative assessment of students’ learning outcomes. However, the shift of pharmacy education from the product to the patient has required the adoption of competency-based assessments such as OSCEs that objectively appraise students’ hands-on clinical competence, critical thinking, teamwork, problem-solving and communication skills, among other required competencies in patient care [4, 5]. Accordingly, OSCEs are being increasingly used by pharmacy programs as formative and summative assessments of students’ application of knowledge and their readiness for practice and teamwork [6, 7]. Furthermore, OSCEs have become part of the licensing examination for pharmacists in Canada and have been recommended for inclusion in competency-based learning and assessment by several societies and accreditation agencies [4, 8].

Standard 24 of the 2016 accreditation standards of the Accreditation Council for Pharmacy Education (ACPE) requires that “the college or school develops, resources, and implements a plan to assess attainment of educational outcomes to ensure that graduates are prepared to enter practice” [9]. ACPE provides guidance documents for the competencies and skills that students should demonstrate at each level of knowledge, including the domains and Ability Statements (AS) that are central to the preparation of pharmacy students prior to their advanced pharmacy practice experiences (APPEs) [10]. In addition, the core Entrustable Professional Activities (EPAs), published by the American Association of Colleges of Pharmacy (AACP), define the “essential activities and tasks that all new pharmacy graduates must be able to perform without direct supervision upon entering practice” [11]. AS and EPAs are matched with the educational outcomes of the Center for the Advancement of Pharmacy Education (CAPE) 2013 and the Pharmacists’ Patient Care Process (PPCP) [12]. While both AS and EPAs share the common objective of ensuring that pharmacy graduates are prepared for practice, they differ in their approach and focus. AS delineate the knowledge, skills, attitudes and abilities expected from pharmacy graduates and typically serve as a guide for curriculum development. EPAs, in contrast, focus specifically on the practical tasks and responsibilities that pharmacy graduates should be entrusted to perform autonomously, and they serve as the basis for competency assessment in evaluating students’ work-readiness.

Despite the widespread acceptance of EPAs in pharmacy education, their integration in learning and assessment practices remains limited and inconsistent [13]. For instance, it is argued that EPAs are professional responsibilities and should not be used to assess performance in a classroom setting because such assessment requires direct and multiple observations of the learner performing the EPA without supervision [14]. Yet, there are reports of EPAs being successfully implemented in experiential pharmacy settings and OSCEs [15–17]. Accordingly, EPAs were reported as reliable tools to assess pharmacy students’ performance in their first professional year, either as part of introductory pharmacy practice experiences (IPPEs) or in OSCEs [16, 17]. Nonetheless, there remains a need to evaluate the use of EPAs in later stages of pharmacy education, particularly nearing graduation [18].

Accordingly, the aim of this study was to describe the development and implementation of a pharmacy OSCE with a focus on EPAs and AS, and report the student performance on competencies related to APPE- and practice-readiness such as ethical and legal behaviors, general communication skills, and interprofessional collaboration.

Methods

Description of the educational activity and setting

The Doctor of Pharmacy (Pharm.D.) program at the Lebanese American University (LAU) School of Pharmacy spans six years and is accredited by ACPE. It is a 201-credit-hour program including 61 didactic and 15 experiential courses that cover a diverse range of areas including biomedical, pharmaceutical, social, behavioral, administrative, and clinical sciences. Experiential education, which comprises students’ internships in hospitals, community pharmacies and other healthcare settings, is integrated throughout the program. Successful completion of all the required courses is mandatory for graduation.

In 2019, a school-based OSCEs workgroup implemented a plan for longitudinal simulation activities in professional years 1 (P1), 2 (P2) and 3 (P3). The OSCEs blueprint is based on the core EPAs of AACP and the pre-APPE Performance Domains and Abilities of the 2016 ACPE standards guidance document [8,9,10]. All OSCEs were conducted in LAU’s Clinical Simulation Center. The cohort included all student pharmacists with a P2 standing who were qualified to progress into P3, i.e., carrying no more than one P2 didactic course to P3. The LAU School of Pharmacy OSCE is not yet a high-stakes exam. However, a student who misses or does not pass the OSCE sits for a make-up OSCE.

There were 3 different OSCE stations: station 1 for best possible medication history; station 2 for patient education; and station 3 for healthcare provider (HCP) communication. Station 1 focused on eliciting comprehensive medical and medication histories that are essential elements of patient care. Students engaged with a simulated scenario involving a patient admitted to the emergency department with severe headache and were tasked with gathering pertinent information to guide subsequent clinical decisions. At station 2, students were tasked with patient education, particularly counseling on the appropriate use of anticoagulant medication, while fostering patient understanding and compliance [see supplementary material 1]. Station 3 was dedicated to refining communication with HCPs and practicing clinical decision-making within a collaborative framework. In this station, students reviewed a patient chart, reconciled the discharge medications, and engaged in dialogue with physicians to propose and discuss recommended interventions. This station underscores the importance of effective interprofessional communication and teamwork in optimizing patient outcomes.

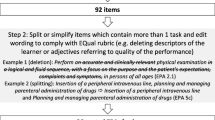

OSCE stations were mapped to the AS (Table 1) and EPAs (Table 2). The 3 stations were found to map to 6 out of the 11 AS and to 8 out of the 15 EPAs. Specifically, station 1 addressed AS2 (basic patient assessment) and EPA1 (collect patient information). Station 2 focused on AS8 (patient education) and EPA11 (patient and HCP education). Station 3 addressed AS7 (general communication abilities) and EPA6 (interprofessional collaboration). Of note, AS6 (ethics, professional, and legal behavior), AS7 and EPA6 were assessed in all 3 stations. AS pertaining to patient safety, pharmaceutical calculations, drug information analysis, health and wellness, and insurance/prescription drug coverage were not aligned with the competencies evaluated in the stations and were consequently not assessed further in this study. Similarly, the EPAs associated with identifying patients at risk for prevalent diseases in a population, maximizing the appropriate use of medications, ensuring patient immunization against vaccine-preventable diseases, utilizing evidence-based information for patient care advancement, overseeing pharmacy operations for a designated work shift, fulfilling medication orders, and crafting a written plan for continuous professional development were not aligned with the competencies evaluated in the stations. Consequently, these EPAs were excluded from further assessment in this study.
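The station mapping described above can be pictured as a small lookup structure. The following is only an illustrative sketch: it encodes the primary AS and EPA named in the text for each station, plus the three items assessed in every station. The complete mapping (6 AS and 8 EPAs) is given in Tables 1 and 2 and is not reproduced here; the names `STATION_PRIMARY`, `CROSS_CUTTING`, `items_for_station`, and `coverage_pct` are hypothetical and are not part of the study's tooling.

```python
# Illustrative sketch only: encodes the primary AS/EPA per station as named
# in the text. The full mapping lives in Tables 1 and 2 of the article.
STATION_PRIMARY = {
    1: {"AS": "AS2", "EPA": "EPA1"},    # best possible medication history
    2: {"AS": "AS8", "EPA": "EPA11"},   # patient education
    3: {"AS": "AS7", "EPA": "EPA6"},    # HCP communication
}

# Items the text reports as assessed in all 3 stations.
CROSS_CUTTING = {"AS6", "AS7", "EPA6"}


def items_for_station(station: int) -> set:
    """Return the AS/EPA labels named in the text for one station."""
    primary = STATION_PRIMARY[station]
    return {primary["AS"], primary["EPA"]} | CROSS_CUTTING


def coverage_pct(mapped: int, total: int) -> float:
    """Share of a framework covered by the blueprint, in percent."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100.0 * mapped / total, 1)


if __name__ == "__main__":
    for station in sorted(STATION_PRIMARY):
        print(station, sorted(items_for_station(station)))
    # Counts reported in the text: 6 of 11 AS and 8 of 15 EPAs.
    print("AS coverage (%):", coverage_pct(6, 11))
    print("EPA coverage (%):", coverage_pct(8, 15))
```

With the counts reported in the text, the blueprint covers a little over half of each framework, which is consistent with the later recommendation to broaden the stations to additional EPAs.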

Each station was mapped to a major AS and EPA in consultation with faculty content experts based on AS and EPA guiding documents. For each station, students interacted with a standardized patient (SP) and/or an HCP. In each station, assessment was performed by a faculty observer and other evaluators, either SP or HCP, depending on the activities and nature of the station. Debriefing was thereafter performed in small groups, during which students shared their feedback on the strengths and weaknesses of their experience and suggested areas for improvement.

Students were evaluated by a faculty member who was present in each station as an observer, in addition to the SP in stations 1 and 2 and the standardized HCP in station 3. The HCP is best portrayed by a faculty member who is well-versed in medical terms for optimal interaction, considering that this is the only station where the discussion is not conducted with a patient. The observer was considered an objective evaluator who filled the checklist for the interaction between the HCP and the student, similar to other stations. Grading rubrics and checklists were used to assess students’ clinical competencies, communication skills and attitude. Checklists for stations 1 and 2 were completed by the faculty observer and the SP evaluator, while the station 3 checklist was completed by the faculty observer and the HCP evaluator. Thus, students’ performance was assessed using two evaluation forms completed for each student per station. In addition, students’ scores per AS and EPA were computed as a direct assessment method to evaluate students’ achievements and their readiness for APPEs and practice, respectively.

Each station is modeled to mimic a real clinic setting and has a duration of 15 min. A coordinator is assigned to proctor and oversee the timing. Students are provided with a guide map that outlines the sequence of the 3 designated stations, to be completed consecutively within a 45-minute timeframe. Every student rotates through an identical set of 3 stations, each featuring the exact same scenarios. Students are directed to spend up to 4 min reading the initial scenario, which is placed on a table outside the station. This scenario is accompanied by a concise patient chart and a brief outline of the tasks that the student must complete during the subsequent 10-minute interaction inside the station. During this interaction, students engage with either an SP or an HCP, depending on the theme of the station. If time allows, the evaluator may offer feedback to the student before the student exits the station at the end of the 15 min. Prior to the cumulative OSCEs, faculty delivered orientation sessions to both students and SPs, shared generic checklists, and engaged them in mock OSCEs to simulate scenarios in preparation for the actual assessments. Of note, the SPs are recruited by the LAU Clinical Simulation Center and trained by school faculty in preparation for the pharmacy-based OSCEs.

In preparation for the cumulative OSCEs at the end of P2, students were exposed to formative simulation sessions that are integrated in designated P1 and P2 courses (professional communication, dosage forms, select pharmacotherapeutics, and pharmaceutical care and dispensing). These are aimed at advancing students’ knowledge, clinical skills and professional attitudes including professional communication, disease screening, medication preparation, dose calculation, and patient education and counseling. Students are assessed through grading rubrics and checklists. OSCEs are integrated into the summer experiential education course in the P2 year. The assessment of students’ performance in OSCEs was included as an evaluation component of this course.

Statistical methods

To report student performance on competencies related to APPE- and practice-readiness, data were collected during the OSCEs, entered and tabulated anonymously in an Excel spreadsheet, and analyzed using SPSS® v27, 2022. The evaluation rubrics and checklists were mapped to EPAs and AS. Grades were calculated for AS and EPAs. A grade of 70% or higher on an AS or EPA was considered passing. Analysis of variance (one-way ANOVA) was used to compare students’ scores between stations and across evaluators. The t-test was used to compare scores between evaluators (SP and HCP). All values are expressed as means. Differences were considered significant when the p value was lower than the a priori threshold of 0.05.
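The analysis in this study was run in SPSS. Purely as an illustration of the quantities involved, the sketch below computes the 70% pass decision, a t statistic for two evaluators scoring the same students, and a one-way ANOVA F statistic using only the Python standard library. Two points are assumptions rather than details stated in the text: the t-test is treated as paired (the text does not say whether a paired or independent test was used), and all function names are hypothetical. The p value itself would be read from the t or F distribution with the returned degrees of freedom, which a statistics package provides.

```python
from math import sqrt
from statistics import mean, stdev

PASS_THRESHOLD = 70.0  # percent; passing cut point stated in the text


def is_passing(score_pct: float) -> bool:
    """A grade of 70% or higher on an AS or EPA counts as passing."""
    return score_pct >= PASS_THRESHOLD


def paired_t_statistic(scores_a, scores_b):
    """t statistic and degrees of freedom for paired scores.

    Assumption: both evaluators scored the same students in the same order.
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    n = len(diffs)
    return mean(diffs) / (sd / sqrt(n)), n - 1


def one_way_anova_f(groups):
    """F statistic with between- and within-group degrees of freedom."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or n_total <= k:
        raise ValueError("need at least 2 groups and more scores than groups")
    grand_mean = sum(sum(g) for g in groups) / n_total
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    if ss_within == 0:
        raise ValueError("within-group variance is zero")
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Made-up scores for demonstration only; not study data.
    observer = [80, 90, 70, 85]
    sp_evaluator = [60, 75, 65, 70]
    print(paired_t_statistic(observer, sp_evaluator))
    print(one_way_anova_f([[1, 2, 3], [2, 3, 4], [3, 4, 5]]))
```

The scores in the usage block are invented solely to exercise the functions and carry no relation to the reported results.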

The study was approved by the LAU Institutional Review Board and granted exempt status under the code number LAU.SOP.HM2.12/Sep/2023. Access to any identifiable data was limited to the investigators, and data documents were stored within locked locations. Security codes were assigned to computerized records, with names replaced by codes to ensure that data were analyzed without revealing the identity of the participants.

Results

In total, 69 P2 students completed the three-station OSCEs. The evaluation of ethical and legal behaviors (mapped to AS6), the interprofessional general communication (AS7), and collaboration (EPA6) revealed average scores of 82.6%, 88.3%, and 89.3%, respectively, each above the 70% cut point across all stations. It is worth noting that the lowest student scores were observed in station 3, where students were evaluated by an HCP (Fig. 1).

Based on the direct assessment of student APPE- and practice-readiness per station, the observer ratings were satisfactory in station 3 for AS7 (78%) and EPA6 (67%), as opposed to suboptimal performance in stations 1 and 2 for AS2-basic patient assessment (22%), EPA1-collect patient information (22%), AS8-patient education (29%) and EPA11-patient and HCP education (33%). This indicates a discrepancy in the observer assessments across the 3 stations. A significant difference (t-test, p < 0.0001) was detected between the scores of the observer and the SP evaluator in stations 1 (EPA1: observer 22% vs. SP evaluator 88%; EPA11: observer 33% vs. SP evaluator 83%) and 2 (AS8: observer 29% vs. SP evaluator 67%). Scores in station 3 (p = 0.426) were comparable between the observer and the HCP evaluator, implying a higher level of agreement in their evaluations (Fig. 2).

The majority of the SP and HCP evaluators’ scores on communication-related statements in each of the OSCE stations exceeded 80% (Table 3).

However, the statement “summarized information back when appropriate” received scores below or equal to 70% in all stations, suggesting an area that may require improvement. For the statement “voiced empathy for patient situation/problem,” the scores given by the HCP evaluators in station 3 were significantly lower (32.6%) compared to the scores provided by the SP evaluators in stations 1 (81.8%) and 2 (86.9%) (p < 0.00001). Similarly, for the statement “served as a patient advocate,” the HCP scores in station 3 (55%) were significantly lower than the SP scores in stations 1 (78.26%) and 2 (85.51%) (p < 0.00001). Furthermore, in the statement “the evaluator would be willing to recommend a patient to see this student pharmacist,” the HCP scores in station 3 (60.87%) were significantly lower than the SP scores in station 1 (78.9%) and 2 (84.8%) (p = 0.00014).

The OSCEs coordinator received suggestions during the debriefing sessions. Those included: adjusting the allocated time for each station based on the task, simplifying the case scenario to enhance focus on the main station goal, clearly highlighting the theme of each station on the opening scenario at the entrance of each station, integrating role play into the preparatory orientation for each station, and ensuring key information is included on both the grading checklist and the patient case scenario.

Discussion

OSCEs have become more commonly utilized in pharmacy programs as a way to assess students’ practice competencies and as a capstone exam at the end of P2 to assess students’ APPE-readiness [19]. Moreover, OSCEs have been aligned with pharmacy curricula [20], pharmacotherapeutics courses [21], program educational outcomes to assess APPE-readiness [19], and general pharmacy practice competencies such as critical thinking, patient care process and communication skills [22–23].

Since the release of the report on the core EPAs by the 2015–2016 AACP Academic Affairs Standing Committee, EPAs have been considered a new approach to define and assess pharmacy practice skills that marks a shift from time-based to outcome-based learning [11, 24, 25]. EPAs are expected to be tasks that are observable, measurable, and require professional training for execution [26]. Various applications of EPAs have been proposed, yet they all converge to prepare graduates for professional practice [26–29] and to serve as a guide to document student performance and progression in the program [15, 16, 30–33].

This study details the integration of EPAs and AS within an OSCE, serving as a transitional step for pharmacy students as they progress towards their APPEs and practical experiences. It further reports on the inclusion of ACPE’s pre-APPE ability statements to help identify essential competencies for APPE-readiness. The study findings reveal a congruence of competencies between EPAs and AS. The 3-station OSCE model included 8 of the 15 EPAs, selected by the preceptors who were involved in developing the OSCEs. The method of selecting core EPAs adheres to practices documented in the literature. In this approach, the clinical faculty determine the significance of specific EPAs for pharmacy practice and subsequently incorporate them into the selection process [33]. Furthermore, the activities featured in each of the OSCE stations are comparable to those used by Bellottie et al., who identified specific activities tailored to pre-APPE core domains as a foundational framework for designing pharmacy practice laboratory exercises [34]. In a recent pilot OSCE test, core competency domains and case scenarios were created through a literature review, brainstorming by researchers, and consensus from external experts, to evaluate its appropriateness as a tool for assessing Korean pharmacy students’ clinical pharmacist competency for APPEs [35].

The incorporation of OSCEs at the end of the P2 year, along with the integration of simulation sessions within specific pharmacy courses in P1 and P2, further enhances the preparation of students for their IPPEs in P3. Furthermore, the implementation of the pharmacists’ patient care process (PPCP) model during P2 plays a pivotal role in promoting students’ readiness for real-world practice scenarios [36]. These strategic program integrations are designed to advance students’ knowledge and to refine clinical skills and professional attitudes such as professional communication, disease screening, medication preparation, dose calculation, and patient education and counseling techniques. While IPPEs are progressively laid out throughout the first 3 professional years (P1, P2, P3), P3 IPPEs focus specifically on clinical practice (480 h), alongside community and hospital practice (490 h). This provides extensive hands-on experiences in various pharmacy practice settings where student performance is assessed via direct preceptor observation.

In this study, students displayed competence in various aspects of AS and EPAs. High scores in ethical and legal behavior (AS6) reflected their proficiency in demonstrating related principles. Similarly, a strong average score of 87% across all 3 stations in general communication abilities (AS7) showcased adeptness in effectively communicating with patients and HCPs. Additionally, high evaluation scores in interprofessional collaboration across all stations signify a notable level of competence in working collaboratively with other healthcare team members. The LAU Health Science and Nutrition programs pioneered the implementation of interprofessional education (IPE) in the region, contributing significantly to students’ readiness for interprofessional practice [37]. Nevertheless, consistently lower scores in AS6, AS7, and EPA6, particularly those given by HCP evaluators in station 3, suggest a need for refining evaluation criteria and additional targeted training.

The implementation of OSCEs may present numerous challenges and is restricted by the availability of resources [38]. From our perspective, the execution of OSCEs has been linked to various challenges concerning faculty involvement as observers or evaluators, particularly in connection with faculty workload and resource constraints. This underscores the importance of developing user-friendly grading rubrics and checklists, and discussing content alignment with course coordinators [19]. In this research, the elevated scores on communication-related statements across all 3 stations validate students’ proficiency in communication skills, achieved through purposeful and focused practice throughout the program. In effect, the observers have most likely served as members of the OSCE development team, thereby establishing a robust consensus on the incorporation of EPAs and students’ expectations. This compares to efforts described in the literature about the design and evaluation of a program implemented to prepare clinical faculty members to use EPAs for teaching and assessment in experiential education [39]. Similarly, preceptor development programs were reported to be effective in establishing a standardized understanding of the level of entrustability and in helping ensure that preceptors consistently assign levels of engagement to EPA-based tasks performed by students during their experiential rotations [30]. Another challenge is the limited number of faculty trained in planning and delivering the OSCEs. Institutions should invest in professional development initiatives to train faculty and preceptors on simulation-based education and assessment.

Moreover, the substantial agreement in the students’ evaluations between observers and HCP evaluators in station 3 can be attributed to the fact that both are faculty members. However, the observed inconsistency in scores between the observer and the SP evaluator in stations 1 and 2 could be attributed to differences in evaluators’ perspectives and overestimation of student performance by the SP, which suggests a need for better SP training on performance-based assessment. Evidence from a six-year experience suggests that the use of SPs produced accurate simulations and reliable evaluation of student performance [40]. Yet, SPs could not replace pharmacist evaluators for entry-to-practice certification purposes and were suggested to be better suited to an educational context [41]. When OSCE scores between faculty and SPs were compared, the SP global assessment scores were significantly higher than faculty scores, suggesting that increased experience within the station and familiarity with the role contribute to enhanced performance [42]. It is important to note that SPs assessed student communication skills. From this perspective, the SPs may have been assessing students’ empathy, patience, and caring attitude, whereas the observer was mainly focused on ensuring that required information and questions were covered from a clinical point of view. These results underscore the importance of consistency for a reliable assessment. It may be beneficial to further explore the factors contributing to the discrepancies observed and implement measures to enhance the reliability and validity of the evaluations in all stations. Continuous evaluation and refinement can lead to more accurate assessments of student performance and facilitate targeted interventions for improvement. So far, based on the OSCE findings and students’ debriefing, curricular enhancements have been implemented in formative simulations (embedded in courses) and in future OSCE stations. For instance, improvement was introduced to the patient education activity in the Dispensing Laboratory course and to the interprofessional communication activity in one of the six courses of the pharmacotherapeutics series.

Limitations

The authors acknowledge the following study limitations: (1) the design of OSCEs incorporated 3 stations, and to enhance validity, a greater number of stations is recommended; (2) the reported dataset spans a year, and it would be valuable to accumulate additional data over a longer period to discern more defined patterns in student performance; (3) the performance of students in real-world situations may not be entirely mirrored by their performance on the OSCEs, which may question the level or extent of entrustability; (4) checklists were used to assess students’ performance at each station, but the assessment did not incorporate the evaluators’ judgment of the level of entrustment, indicating an area for improvement.

Conclusion

The study findings emphasize the importance of implementing a consistent and reliable process in OSCEs used in assessing the readiness of pharmacy students for Advanced Pharmacy Practice Experiences (APPEs) and real-world practice. It is imperative to explore the contributing factors to observed discrepancies and implement measures that enhance the reliability and validity of evaluations across all stations. The continuous evaluation and refinement process holds the potential to yield more accurate assessments of student performance, enabling targeted interventions for improvement. This study pioneers the application of EPAs and AS as a framework for developing and implementing OSCEs at the P2-P3 level, aiming to assess pharmacy students’ APPE- and practice-readiness. Aligning specific EPAs and AS with learning objectives in various stations, coupled with dedicated evaluation rubrics, has been a key aspect. Despite this progress, ongoing efforts are necessary to enhance the structure, content, and delivery of OSCEs, ensuring broader coverage of EPAs for sustained improvement. Future studies should aim to broaden the scope of OSCE stations to encompass additional EPAs and incorporate levels of entrustment as a scale for evaluation.

Data availability

All data generated or analyzed during this study are included in this published article. Additional material is available from the corresponding author on reasonable request.

Abbreviations

- AACP: American Association of Colleges of Pharmacy
- ACPE: Accreditation Council for Pharmacy Education
- APPE: Advanced Pharmacy Practice Experience
- AS: Ability Statement
- EPA: Entrustable Professional Activity
- HCP: Healthcare Professional
- IPE: Interprofessional Education
- IPPE: Introductory Pharmacy Practice Experience
- OSCE: Objective Structured Clinical Examination
- P: Pharmacy Professional Year
- PPCP: Pharmacists’ Patient Care Process
- SP: Standardized Patient

References

Harden RM, Stevenson M, Downie WW, Wilson GM. Assessment of clinical competence using objective structured examination. Br Med J. 1975;1:447.

Harden RM. What is an OSCE? Med Teach. 1988;10(1):19–22.

Rushforth HE. Objective structured clinical examination (OSCE): review of literature and implications for nursing education. Nurse Educ Today. 2007;27(5):481–90.

Shirwaikar A. Objective structured clinical examination (OSCE) in pharmacy education - a trend. Pharm Pract (Granada). 2015;13(4):627.

Croft H, Gilligan C, Rasiah R, Levett-Jones T, Schneider J. Current trends and opportunities for competency assessment in pharmacy education–a literature review. Pharmacy. 2019;7(2):67.

Fulford M, Souza JM, et al. Are you CAPE-A.B.L.E.? Center for the Advancement of Pharmacy Education: An Assessment Blueprint for Learning Experiences. 2014. https://www.aacp.org/sites/default/files/2017-10/Assessment%20CAPE%20Paper-%20Final%2011.pdf. Accessed October 22, 2023.

Kristina SA, Wijoyo Y. Assessment of pharmacy students’ clinical skills using objective structured clinical examination (OSCE): a literature review. Sys Rev Pharm. 2019;10(1):55–60.

Accreditation Council for Pharmacy Education. Guidance for the accreditation standards and key elements for the professional program in pharmacy leading to the doctor of pharmacy degree (guidance for standards 2016). https://www.acpe-accredit.org/pdf/GuidanceforStandards2016FINAL.pdf. Accessed October 30, 2023.

Accreditation Council for Pharmacy Education. Accreditation standards and guidelines for the professional program in pharmacy leading to the doctor of pharmacy degree (Standards 2016). Standards2016FINAL2022.pdf (acpe-accredit.org). Accessed October 20, 2023.

Entrustable professional activities (EPAs). Appendix 1: core entrustable professional activities for new pharmacy graduates. American Association of Colleges of Pharmacy. https://www.aacp.org/sites/default/files/2017-10/Appendix1CoreEntrustableProfessionalActivities%20%281%29.pdf. Accessed October 20, 2023.

Haines ST, Pittenger AL, Stolte SK, et al. Core entrustable professional activities for new pharmacy graduates. Am J Pharm Educ. 2017;81(1):S2.

Haines ST, Gleason BL, Kantorovich A, et al. Report of the 2015-16 Academic Affairs Standing Committee. Am J Pharm Educ. 2016;80(4):57.

Abeyaratne C, Galbraith K. A review of entrustable professional activities in pharmacy education. Am J Pharm Educ. 2023;87(3):ajpe8872.

Persky AM, Fuller KA, ten Cate O. True entrustment decisions regarding entrustable professional activities happen in the workplace, not in the classroom setting. Am J Pharm Educ. 2021;85(5):8536.

Smith C, Stewart R, Smith G, Anderson HG, Baggarly S. Developing and implementing an entrustable professional activity assessment for pharmacy practice experiences. Am J Pharm Educ. 2020;84(9):ajpe7876.

Rhodes LA, Marciniak MW, McLaughlin J, Melendez CR, Leadon KI, Pinelli NR. Exploratory analysis of entrustable professional activities as a performance measure during early pharmacy practice experiences. Am J Pharm Educ. 2019;83(2):6517.

Beckett RD, Gratz MA, Marwitz KK, Hanson KM, Isch J, Robison HD. Development, validation, and reliability of a P1 objective structured clinical examination assessing the national EPAs. Am J Pharm Educ. 2023;87(6):100054. https://doi.org/10.1016/j.ajpe.2023.

Michael M, Griggs AC, Shields IH, Sadighi M, Hernandez J, Chan C, et al. Improving handover competency in preclinical medical and health professions students: establishing the reliability and construct validity of an assessment instrument. BMC Med Educ. 2021;21:518.

Ruble MJ, Serag-Bolos ES, Wantuch GA, Dell KA, Cole JD, Noble MB, et al. Evolution of a capstone exam for third-year doctor of pharmacy students. Curr Pharm Teach Learn. 2023;15:599–606.

Kirton SB, Kravitz L. Objective structured clinical examinations (OSCEs) compared with traditional assessment methods. Am J Pharm Educ. 2011;75(6):111.

Martin RD, Ngo N, Silva H, Coyle WR. An objective structured clinical examination to assess competency acquired during an introductory pharmacy practice experience. Am J Pharm Educ. 2020;84(4):7625.

Black EP, Jones M, Jones M, Williams H, Julian E, Wilson DR. Validation of longitudinal progression examinations for prediction of APPE readiness. Am J Pharm Educ. 2023;87(12):100137.

McLaughlin JE, Khanova J, Scolaro K, Rodgers PT, Cox WC. Limited predictive utility of admissions scores and objective structured clinical examinations for APPE performance. Am J Pharm Educ. 2015;79(6):84.

Pittenger AL, Chapman SA, Frail CK, Moon JY, Undeberg MR, Orzoff JH. Entrustable professional activities for pharmacy practice. Am J Pharm Educ. 2016;80(4):57.

Shorey S, Lau TC, Lau ST, Ang E. Entrustable professional activities in health care education: a scoping review. Med Educ. 2019;53(8):766–77.

Elmes AT, Schwartz A, Tekian A, Jarrett JB. Evaluating the quality of the core entrustable professional activities for new pharmacy graduates. Pharmacy (Basel). 2023;11(4):126.

Westein MPD, de Vries H, Floor A, Koster AS, Buurma H. Development of a postgraduate community pharmacist specialization program using CanMEDS competencies, and entrustable professional activities. Am J Pharm Educ. 2019;83(6):6863.

Carraccio C, Englander R, Gilhooly J, Mink R, Hofkosh D, Barone MA, et al. Building a framework of entrustable professional activities, supported by competencies and milestones, to bridge the educational continuum. Acad Med. 2017;92(3):324–30.

Kanmaz TJ, Culhane NS, Berenbrok LA, Jarrett J, Johanson EL, Ruehter VL, et al. A curriculum crosswalk of the core entrustable professional activities for new pharmacy graduates. Am J Pharm Educ. 2020;84(11):8077.

Frenzel JE, Richter LM, Hursman AN, Viets JL. Assessment of preceptor understanding and use of levels of entrustment. Curr Pharm Teach Learn. 2021;13(9):1121–6.

Jarrett JB, Berenbrok LA, Goliak KL, Meyer SM, Shaughnessy AF. Entrustable professional activities as a novel framework for pharmacy education. Am J Pharm Educ. 2018;82:6256.

Jarrett JB, Goliak KL, Haines ST, Trolli E, Schwartz A. Development of an entrustment-supervision assessment tool for pharmacy experiential education using stakeholder focus groups. Am J Pharm Educ. 2022;86(1):8523.

Haines ST, Pittenger AL, Gleason BL, Medina MS, Neely S. Validation of the entrustable professional activities for new pharmacy graduates. Am J Health-Syst Pharm. 2018;75(23):1922–9.

Bellottie GD, Kirwin J, Allen RA, Anksorus HN, Bartelme KM, Bottenberg MM, et al. Suggested pharmacy practice laboratory activities to align with pre-APPE domains in the doctor of pharmacy curriculum. Curr Pharm Teach Learn. 2018;10(9):1303–20.

Song YK, Chung EK, Lee YS, Yoon JH, Kim H. Objective structured clinical examination as a competency assessment tool of students’ readiness for advanced pharmacy practice experiences in South Korea: a pilot study. BMC Med Educ. 2023;23(1):231.

Nasser SC, Chamoun N, Kuyumjian YM, Dimassi H. Curricular integration of the pharmacists’ patient care process. Curr Pharm Teach Learn. 2021;13:1153–9.

Farra A, Zeenny R, Nasser S, Asmar N, Milane A, Bassil M, et al. Implementing an interprofessional education programme in Lebanon: overcoming challenges. East Mediterr Health J. 2018;24(9):914–21.

Cusimano MD, Cohen R, Rucker W, Murnaghan J, Kodama R, Reznick R. A comparative analysis of the costs of administration of an OSCE. Acad Med. 1994;69(7):571–6.

Rivkin A, Rozaklis L, Falbaum S. A program to prepare clinical pharmacy faculty members to use entrustable professional activities in experiential education. Am J Pharm Educ. 2020;84(9):ajpe7897.

Vu NV, Barrows HS, Marcy ML, Verhulst SJ, Colliver JA, Travis T. Six years of comprehensive, clinical, performance-based assessment using standardized patients at the Southern Illinois University School of Medicine. Acad Med. 1992;67(1):42–50.

Austin Z, O’Byrne C, Pugsley JA, Munoz LQ. Development and validation processes for an objective structured clinical examination (OSCE) for entry-to-practice certification in pharmacy: the Canadian experience. Am J Pharm Educ. 2003;67(3).

Barrickman AL, Galvez-Peralta M, Maynor L. Comparison of pharmacy student, evaluator, and standardised patient assessment of Objective Structured Clinical Examination (OSCE) performance. Pharm Educ. 2023;23(1):314–20.

Acknowledgements

Not applicable.

Funding

Not applicable.

Author information

Contributions

SN and RK contributed equally as co-first authors. SN, RK, HM, and LK conceptualized the initial research idea. SN, RK, and LK contributed to the design of the study. SN, ReK, and CA collected the data. SN, RK, LK, and ReK analyzed and interpreted the data. SN, LK, RK, HM, and CA drafted the original manuscript. SN, LK, IB, RK, and HM critically reviewed and edited the final manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was reviewed and approved by the Lebanese American University Institutional Review Board and granted exempt status under the code number (LAU.SOP.HM2.12/Sep/2023). All methods were performed in accordance with the relevant guidelines and regulations. Waiver of informed consent was granted by the Lebanese American University IRB for this study, as it constituted an internal school assessment exempt from IRB review.

Consent for publication

The authors report no conflict of interest and give their consent for publication.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Nasser, S.C., Kanbar, R., Btaiche, I.F. et al. Entrustable professional activities-based objective structured clinical examinations in a pharmacy curriculum. BMC Med Educ 24, 436 (2024). https://doi.org/10.1186/s12909-024-05425-y
