Abstract

Background

Teaching is an important professional skill for physicians, and providing feedback is an important part of teaching. Medical students can practice their feedback skills by giving each other peer feedback. Therefore, we developed a peer feedback training in which students observed a peer who modelled the use of good feedback principles. Students then elaborated on the modelled feedback principles through peer discussion. This combination of peer modelling and discussion of the modelled feedback principles was expected to enhance emulation of the feedback principles compared to (1) peer modelling alone and (2) discussing the feedback principles without prior modelling.

Methods

In a quasi-experimental study design, 141 medical students were allocated, through random assignment of their workgroups, to one of three training conditions: peer modelling plus discussion (MD), non-peer modelled example (NM) or peer modelling without discussion (M). Before and after the training, they commented on papers written by peers. These comments served as pre- and post-measures of peer feedback. The comments were coded into different functions and aspects of peer feedback. Non-parametric Kruskal-Wallis tests were used to test for between-group differences in these functions and aspects at pre- and post-measure.

Results

Before the training, there were no significant between-group differences in feedback functions and aspects. After the training, the MD-condition gave significantly more positive peer feedback than the NM-condition. However, no other functions or aspects were significantly different between the three conditions, mainly because the within-group interquartile ranges were large.

Conclusions

The large interquartile ranges suggest that students differed substantially in the effort they put into giving peer feedback. Therefore, additional incentives may be needed to motivate students to give good feedback. Teachers could emphasise the utility value of peer feedback as an important professional skill and the importance of academic altruism and professional accountability in the peer feedback process. Such incentives may convince more students to put more effort into giving peer feedback.

Background

Teaching is regarded internationally as an important physician skill [1, 2]. Medical students can learn this skill by teaching their peers [3,4,5,6], which can be operationalised as near-peer teaching when the teaching student is more advanced than the learning student, or as same-level teaching when students have no developmental differences [7, 8]. One form of same-level peer teaching is giving peer feedback, which has been implemented in medical education to assess, e.g., professional behaviour [9], teaching skills [10] and communication skills [11]. Giving peer feedback allows students to develop professional teaching skills, such as evaluating performance, justifying evaluations and helping others improve [12,13,14]. It may also improve their own learning performance [15,16,17,18]. Therefore, peer feedback can be used to prepare medical students for their future teaching role as physicians.

However, research shows that students need feedback training in order to give good peer feedback [14, 19,20,21]. Several training methods have been developed for this purpose [22,23,24,25,26], but one method that has rarely been studied is peer modelling. Modelling in general means that a person (e.g. a teacher) demonstrates how to perform a certain task or behaviour [27]. In peer modelling, these persons are fellow students. Peer modelling has been researched for various learning tasks [28,29,30] and has been shown to be effective for improving, e.g., writing skills [31, 32]. However, to our knowledge only one study has investigated the effect of peer modelling on giving peer feedback [33]. In that experimental study, students either observed peer models who demonstrated a text review strategy or practiced the same review strategy immediately. Students then emulated the review strategy either individually or in pairs. After observing the peer models, students who emulated the review strategy in pairs used more features of the review strategy in their own peer reviews than students who emulated it individually. In contrast, after practicing the review strategy right away, students who emulated it in pairs used fewer features of the review strategy than students who emulated it individually. In other words, there was a significant interaction effect between type of instruction (peer modelling or practice) and follow-up activity (emulation in pairs or individually). This interaction effect suggested that observing peer modelling was only effective when followed by emulation in pairs.

Theoretically, this effect can be explained by the cognitive elaboration that occurs when students discuss new knowledge with fellow students [34, 35]. Peer discussion is a powerful form of active learning that can lead to co-construction of knowledge, the formation of new ideas [36, 37] and the development of elaborate mental models [38]. In the case of peer modelling, students may elaborate more deeply on what peer models demonstrate when they discuss the modelled performance with each other. As a consequence, they may emulate more features of the modelled performance.

In sum, medical students can practice future teaching skills by giving peer feedback. Modelling good feedback principles may be an effective method to teach students how to give peer feedback, especially when modelling is followed by peer discussion in order to elaborate on the modelled feedback principles. However, this hypothesis has not been tested yet in medical education.

Therefore, we investigated the effect of peer modelling followed by peer discussion of the modelled feedback principles on students’ emulation of the feedback principles. We expected that peer discussion would increase elaboration on the feedback principles and emulation of these principles. As a consequence, we hypothesised that peer modelling plus discussion would lead to more emulation of the feedback principles than either peer modelling alone or peer discussion of the feedback principles without previous modelling of these principles.

Methods

Design and setting

This study used a quasi-experimental, between-groups, pre-/post-measure design with three conditions: peer modelling plus discussion (MD), non-peer modelled example (NM), or peer modelling without discussion (M). The pre- and post-measures consisted of annotated peer feedback comments. The study took place in a Dutch medical school while students were writing their bachelor thesis (in the Netherlands, medical school starts at the bachelor level, when students are approximately 18 years old). In this particular medical school, students give peer feedback on multiple occasions throughout their undergraduate bachelor curriculum. For instance, in year 1 they role-play consultations in the role of patient, doctor or observer and give peer feedback to the doctor. In addition, they peer review academic writing assignments in their first and second year of study. Peer feedback is therefore implemented consistently throughout the curriculum.

This study took place in the third (and final) year of the bachelor program. The bachelor thesis was a ‘Critical Appraisal of a Topic’ (CAT): a structured synthesis of research literature based on a clinical question, including a literature search strategy, a critical appraisal of the literature and an explanation of the clinical application of the results [39]. Students wrote their CAT-paper as part of a research project that lasted several months. They were supervised by PhD students and by communication teachers who taught academic writing. The communication teachers also taught sessions in which students learned how to peer review each other’s papers, and the training took place during these sessions. In these sessions, the teachers explained the importance of peer feedback by emphasising that it is an important academic and professional skill. Moreover, they discussed with students that the process of providing feedback would develop their own abilities as academic communicators [18]. Students were required to peer review CAT-papers of other students before and after these sessions.

Participants

All students who started their bachelor thesis were invited to participate in the study through an informed consent letter, which they could sign if they wished to participate; participating students thus gave written informed consent. In total, 245 students (mean age 21.0 years, SD = 1.4) signed this letter, and complete data were obtained for 141 of them.

Materials

One of the communication teachers selected a CAT-paper, written by a student from the previous year, that needed improvement. The teacher wrote annotated feedback comments in the second chapter of this paper. Informed by literature on good feedback principles [25, 40,41,42], these comments contained evaluations of performance (i.e. explicit or implicit comments on the quality of the text), explanations for these evaluations and suggestions for improvement. For instance, one of the comments was: ‘It is clear that it’s the final bit of information for the section – but I think that you could give a little more information, or tie it all together somehow. Maybe summarise a few main points?’ This comment contained a positive evaluation that was explained, and two suggestions for revision. Table 1 contains further examples of how the feedback principles were modelled. Specific attention was paid to including positive evaluations in the feedback, because the communication teachers noted that students often forgot to give positive peer feedback.

After the text with annotated comments had been written, the first author (FvB) used it to write a video script for the peer model. In this script, the model explained which parts of the text she reviewed and which thoughts came to mind while she was reviewing them. The script also described which comments she wrote in the paper. The script was used to record a video in which a professional actress played the peer model. The video, filmed and edited by a video expert, was approximately 7 min long. It used a split screen: one half showed the actress reviewing the text and the other half showed the computer screen on which she typed her feedback. On that screen, the student’s paper was shown in the same electronic learning environment (ELO) that students worked in, and the actress’s feedback comments appeared as she typed them.

The communication teachers received a lesson plan and PowerPoint slides for the feedback training. The lesson plan was the same for all teachers with the exception of the experimental treatment (MD, NM, or M). The first author (FvB) discussed the lesson plan individually with each teacher without stating the research hypothesis. The lesson plan started with the teacher explaining Hattie and Timperley’s (2007) three principles of feedback: feed-up, feed-back and feed-forward. To illustrate feed-up, the teacher showed a part of the scoring rubric that would be used to assess students’ CAT-papers. The teacher then explained the good feedback principles, i.e. that feedback should contain an evaluation, an explanation for the evaluation and a suggestion for improvement [42]. The teachers then gave additional tips: to give both positive feedback and points for improvement, to focus on helping, and to give concrete suggestions or solutions. The remainder of the lesson plan was specific to each experimental treatment and is explained under ‘Study procedure’.

Study procedure

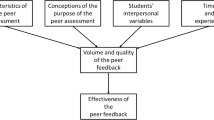

A schematic overview of the experimental procedure can be found in Fig. 1. Before the training, students were instructed to write the first chapter of their CAT-paper and to upload this chapter to the ELO. Within the ELO, each student was assigned randomly and non-anonymously to two other students for peer review. Thus, each student performed and received two peer reviews. Students gave peer feedback in the ELO by writing annotated comments in their peers’ draft papers. They could consult an assessment rubric in the ELO, but were not required to use the rubric to give feedback.
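The text does not describe how the ELO generated these review assignments. Purely as an illustration, the Python sketch below shows one hypothetical scheme that satisfies the stated constraint that every student both performs and receives exactly two reviews: shuffle the students, then let each student review the next two students in the shuffled circle. The function `assign_reviewers`, its parameters and the example names are our own assumptions, not features of the ELO.

```python
import random


def assign_reviewers(students, reviews_per_student=2, seed=None):
    """Map each reviewer to the peers whose papers they review.

    Hypothetical circular-shift scheme (not the ELO's documented algorithm):
    after a random shuffle, the student at position i reviews the students at
    positions i+1, ..., i+k (mod n). Every student therefore gives and
    receives exactly k reviews and never reviews their own paper.
    """
    n = len(students)
    if n <= reviews_per_student:
        raise ValueError("need more students than reviews per student")
    order = list(students)
    random.Random(seed).shuffle(order)
    return {
        order[i]: [order[(i + offset) % n] for offset in range(1, reviews_per_student + 1)]
        for i in range(n)
    }


if __name__ == "__main__":
    # Fictitious names; assignments are non-anonymous, so reviewers see who they review.
    pairs = assign_reviewers(["Ann", "Bas", "Cor", "Dirk", "Eva"], seed=1)
    for reviewer, reviewees in pairs.items():
        print(reviewer, "reviews", ", ".join(reviewees))
```

A circular shift is only one way to meet the two-given, two-received constraint; drawing reviewers independently at random would not guarantee that every student receives exactly two reviews.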

After completing the peer reviews on the first chapter, students were instructed to upload the second chapter of their CAT-paper into the ELO and to bring their laptop to a subsequent workgroup session. These workgroups were assigned randomly to the three experimental conditions: peer modelling plus discussion (MD), non-peer modelled example (NM) and peer modelling without discussion (M). In the MD-condition, students observed the video of the peer model and the teachers paused the video three times to discuss the modelled feedback principles (i.e. evaluation, explanation for evaluation and suggestions for improvement). Students were instructed to identify these principles in the feedback and to discuss why these were good feedback principles. The M-condition followed the same procedure, except that the video was not paused for peer discussion. In the NM-condition, students did not observe the video, but instead read a hand-out of the paper with the annotated feedback comments. This was the same paper with the same feedback comments as in the video. Reading the feedback comments was followed by the same discussion as in the MD-condition, i.e. students identified the good feedback principles in the comments and discussed why these were good feedback principles.

After these experimental treatments, all students were instructed to peer review one of their peers’ second chapters on their laptops by writing annotated comments. They worked in silence and were instructed to complete both of their peer reviews after the workgroup session.

Analyses of the peer feedback

The peer feedback comments that students wrote before and after the training served as pre- and post-measures of peer feedback. The comments were coded using an existing framework for coding peer feedback [42]. This framework distinguishes four functions of feedback: analysis, evaluation, explanation and revision. ‘Analysis’ means feedback aimed at understanding the text, whereas ‘evaluation’ refers to implicit and explicit quality judgments. ‘Explanation’ contains arguments that support an evaluation, and ‘revision’ means suggestions for improvement. We further divided ‘evaluation’ into positive evaluation and negative evaluation, and ‘explanation’ into explanation for evaluation and explanation for revision. The feedback coding framework [42] also distinguishes three aspects of feedback: content, structure and style. ‘Content’ refers to feedback on the content of the text, e.g. its relevance, argumentation and clarity. ‘Structure’ refers to the internal consistency of the text, and ‘style’ to the use of language, grammar and spelling. Thus, the final coding framework contained nine measures of peer feedback: six functions and three aspects.
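To make the nine measures concrete, the Python sketch below tallies them for a single student. It rests on an assumption that the text does not state explicitly: that each coded segment receives one function code and one aspect code, as suggested by combined categories such as ‘revision of style’ in the Results. The names `FUNCTIONS`, `ASPECTS` and `tally_measures` are illustrative only.

```python
# Six feedback functions and three feedback aspects from the coding framework.
FUNCTIONS = (
    "analysis",
    "positive evaluation",
    "negative evaluation",
    "explanation for evaluation",
    "explanation for revision",
    "revision",
)
ASPECTS = ("content", "structure", "style")


def tally_measures(coded_segments):
    """Count the nine peer-feedback measures for one student's comments.

    Assumes (hypothetically) that every coded segment is a (function, aspect)
    pair, e.g. ("revision", "style") for a suggested rewording.
    """
    counts = {label: 0 for label in FUNCTIONS + ASPECTS}
    for function, aspect in coded_segments:
        if function not in FUNCTIONS or aspect not in ASPECTS:
            raise ValueError(f"unknown code: {function!r}, {aspect!r}")
        counts[function] += 1
        counts[aspect] += 1
    return counts


if __name__ == "__main__":
    segments = [("revision", "style"), ("analysis", "content"), ("revision", "content")]
    print(tally_measures(segments))
```

Under this assumption, each student contributes nine counts per measurement occasion, which are the values compared between conditions.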

The first author (FvB) and a second coder, who was unaware of the research hypothesis, independently coded the functions and aspects in the peer feedback of four randomly selected students in iterative rounds. They coded the feedback of one student, compared their results, discussed differences in interpretation and made a list of coding agreements. They then coded the feedback of the next student, and so on. After the fourth student, they had reached satisfactory inter-observer agreement (Cohen’s kappa = 0.87). The second coder then coded the remainder of the peer feedback.
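For readers less familiar with this agreement statistic: Cohen’s kappa corrects the observed proportion of agreement p_o for the agreement p_e expected by chance from each coder’s label frequencies, κ = (p_o - p_e) / (1 - p_e). The minimal Python sketch below computes it; the example labels are invented for illustration and are not the study’s coding data, and `cohens_kappa` is our own name.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders who labelled the same segments."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must label the same non-empty list of segments")
    n = len(coder_a)
    # Observed agreement: share of segments given the same label by both coders.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement: products of the coders' label proportions, summed over labels.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


if __name__ == "__main__":
    a = ["revision", "revision", "analysis", "positive evaluation"]
    b = ["revision", "analysis", "analysis", "positive evaluation"]
    print(round(cohens_kappa(a, b), 3))  # 0.636
```

In this toy example the coders agree on 3 of 4 segments (p_o = 0.75), but chance agreement is 0.3125, so kappa is considerably lower than raw agreement.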

Following Field [43], the assumption of normality was checked with Kolmogorov-Smirnov tests and by inspecting the frequency distributions of the coded peer feedback. The Kolmogorov-Smirnov tests were performed on the nine post-measures within each experimental condition, resulting in 27 tests (9 post-measures times 3 conditions). Twenty-three of these tests were significant (p = .04 or lower). Four tests were non-significant: negative evaluation in the M-condition, D(59) = 0.09, p = .20; explanation for revision in the M-condition, D(59) = 0.10, p = .20; content in the MD-condition, D(36) = 0.12, p = .20; and style in the NM-condition, D(46) = 0.11, p = .19. Visual inspection revealed a positive skew (i.e. skew to the right) for all dependent variables. This confirmed that the data were not normally distributed. Therefore, non-parametric Kruskal-Wallis tests were used to test for significant between-group differences in the pre- and post-measures of peer feedback.
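The Kruskal-Wallis test ranks all observations together and asks whether the mean ranks differ between groups, which is why it does not require normally distributed data. The Python sketch below computes the H statistic with the standard tie correction, which matters for count data such as numbers of comments per student, where tied values are common. With three conditions the reference chi-square distribution has two degrees of freedom, for which the p-value has the closed form exp(-H/2); applied to H(2) = 6.33 from the Results, this gives p ≈ .042, consistent with the reported p = .04. This is an illustration written for this text, not the authors’ SPSS analysis, and the function names are our own.

```python
import math


def average_ranks(values):
    """1-based ranks in which tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # mean of positions i..j, shifted to 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic with the usual correction for ties."""
    if len(groups) < 2 or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups")
    data = [x for g in groups for x in g]
    n = len(data)
    ranks = average_ranks(data)
    # Sum over groups of (rank sum squared / group size).
    weighted, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        weighted += rank_sum**2 / len(g)
        start += len(g)
    h = 12 * weighted / (n * (n + 1)) - 3 * (n + 1)
    # Tie correction: 1 - sum(t^3 - t) / (n^3 - n) over sets of tied values.
    tie_counts = {}
    for x in data:
        tie_counts[x] = tie_counts.get(x, 0) + 1
    correction = 1 - sum(t**3 - t for t in tie_counts.values()) / (n**3 - n)
    if correction == 0:
        raise ValueError("all observations are identical")
    return h / correction


def p_value_three_groups(h):
    """Chi-square survival function with df = 2, which reduces to exp(-H / 2)."""
    return math.exp(-h / 2)


if __name__ == "__main__":
    print(round(kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9]), 4))  # 7.2
    print(round(p_value_three_groups(6.33), 3))  # 0.042
```

The closed-form p-value applies only to df = 2; with more groups, a general chi-square survival function would be needed.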

Results

We obtained complete feedback data for 141 participants (MD-condition: n = 36; NM-condition: n = 46; M-condition: n = 59). Table 2 (pre-measure) and Table 3 (post-measure) give an overview of all types of peer feedback that students gave. As Table 3 shows, the most prevalent type of feedback after the intervention was revision of style (e.g. ‘Maybe you could remove one or two commas out of this sentence, so it might have a smoother flow’). This type of feedback occurred 1545 times, accounting for 27.18 % of all peer feedback provided. The next most frequent type was analysis of content (e.g. ‘What is Optiflow therapy?’), which occurred 754 times (13.26 % of all feedback). The third most frequent type was revision of content (e.g. ‘Maybe you can explain what reduction actually entails’), which was given 702 times (12.35 % of all feedback). Together, these three types accounted for 52.79 % of the peer feedback, i.e. more than half. Thus, students commented predominantly on style issues and on clarification of the text.

The Kruskal-Wallis tests (see Table 4) revealed no significant between-group differences in peer feedback before the feedback training. In contrast, one significant difference emerged after the training, in positive evaluation, H(2) = 6.33, p = .04. Pairwise comparisons corrected for multiple comparisons (Bonferroni) showed that students in the MD-condition gave more positive evaluation than students in the NM-condition, p = .04. Positive evaluation did not differ significantly between the NM-condition and the M-condition, p = .62, or between the MD-condition and the M-condition, p = .43. Table 5 shows the median feedback scores on the post-measure, including the interquartile ranges (IQRs). As that table shows, the IQRs were large, meaning that the amount of peer feedback varied substantially between students.
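The two quantities used above can be illustrated briefly. Under the Bonferroni correction, each raw pairwise p-value is multiplied by the number of comparisons (three, for three conditions) and capped at 1; the IQR is the distance between the first and third quartiles. The Python sketch below is illustrative only: it implements the adjustment rule, not the pairwise test to which the adjustment was applied, and because quartile definitions differ between software packages, its IQRs need not match those reported in Table 5. The input values and function names are hypothetical.

```python
import statistics


def bonferroni(p_values):
    """Bonferroni-adjusted p-values: each raw p times the number of tests, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def median_and_iqr(scores):
    """Median and interquartile range (Q3 - Q1) of one condition's scores.

    Uses Python's 'inclusive' quartile method; other packages may use
    different quartile definitions and give slightly different IQRs.
    """
    q1, median, q3 = statistics.quantiles(scores, n=4, method="inclusive")
    return median, q3 - q1


if __name__ == "__main__":
    # Hypothetical raw p-values for the three pairwise comparisons.
    print(bonferroni([0.013, 0.21, 0.40]))
    # Hypothetical counts of positive evaluations in one condition.
    print(median_and_iqr([0, 1, 2, 2, 3, 5, 9, 14]))
```

A large IQR relative to the median, as in the hypothetical counts above, is the pattern that makes between-group differences hard to detect with rank-based tests.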

Discussion

Teaching and communicating clearly are important physician skills [1, 2]. Students can practice these skills by giving peer feedback. Therefore, we developed a training in which a peer model demonstrated on video how to give peer feedback, after which students discussed the good feedback principles that she modelled. Since peer discussion can enhance cognitive elaboration [34, 35], we expected peer modelling plus discussion of the modelled feedback principles to have a beneficial effect on students’ use of the feedback principles compared to (1) only observing the peer model or (2) discussing the feedback principles without observing the peer model. However, except for positive evaluation, we found no significant differences between the three conditions in the amount of peer feedback that students gave. Positive evaluation was provided significantly more often in the MD-condition than in the NM-condition.

One reason for the lack of significant effects was that the amount of peer feedback varied greatly between students, suggesting that some students put considerably more effort into giving feedback than others. This notion is supported by a previous study in which students reported large differences in the quality of the peer feedback they provided and received. Students reported giving more peer feedback than they received, delays in receiving peer feedback until the day of the submission deadline, and hearing from other students that they were too busy to give peer feedback. Students also reported that peers should be held accountable for giving good feedback. Moreover, students who were committed to giving good feedback seemed to be driven by ‘academic altruism’, an altruistic motivation to provide good peer feedback [44].

It is also possible that students’ motivation to give peer feedback varied depending on how much they valued the peer feedback task. According to expectancy-value theory, the perceived utility value of a task promotes engagement with that task [45]. This mechanism may also apply in contexts in which students give peer feedback [46]. That is, engagement with peer feedback may depend on how important students find peer feedback for developing their own skills. A high perceived utility value has been found to be positively associated with performance [47]. Therefore, students who value the utility of giving peer feedback may also give better feedback. This may explain why some students gave more and better feedback than others.

The finding that the MD-condition gave significantly more positive feedback than the NM-condition may be explained by the fact that the peer model explicitly demonstrated how to give positive feedback and that students in the MD-condition elaborated on this principle. Students in the NM-condition did not see the demonstration of positive feedback, although they could read the positive comments in the hand-out. We should also remind the reader that the teachers encouraged students to give positive feedback. This principle may therefore have been more salient than the other modelled principles. The possible effect of modelling on positive feedback can be seen as a desirable outcome, as feedback should encourage motivation and self-esteem [48, 49], provided that it is aimed at the task and not the person [50].

Our findings show that students generally provided more positive than negative peer feedback, which is in line with previous studies [23, 51]. Students may feel uncomfortable giving negative peer feedback because they wish to maintain good social relationships with their peers [14]. Although this seems understandable, it has also been argued that ‘balancing rules’ for positive and negative feedback, such as the sandwich method, can harm the authenticity of feedback processes and put too much focus on feedback messages instead of using feedback for improvement [52]. Therefore, although positive feedback should be encouraged, the added value of ‘balancing rules’ is debatable.

Although the purpose of this study was not to compare pre- and post-intervention frequencies of peer feedback, these frequencies may provide useful information for designing peer feedback training. In both the pre- and post-measure, the most frequent type of feedback was revision of style. In general, revision of style consisted of simple, local (i.e. sentence-level) suggestions for revision. However, high-quality peer review is characterised by a balanced mix of feedback on both global and local text issues [20]. Therefore, educators may want to focus peer feedback training more on global-level feedback in order to improve the quality of peer feedback.

A question that can be raised is whether the outcomes would have been different for a different learning task. Providing feedback on academic writing requires different skills than providing feedback on clinical tasks. For instance, peer review requires the skill to distinguish global and local writing issues [20] and to detect problems in the style, structure and content of writing [42]. By comparison, feedback on, for instance, a clinical consultation may require the skill of detecting problems in building a trusting relationship with the patient, structuring a consultation and dealing with patients’ emotions [53]. These are very different skills.

Also, students may attribute more utility value to learning tasks that they perceive as more clinically or professionally relevant than academic writing. This appears to be an unresearched area. To our knowledge, the criteria ‘evaluation’, ‘explanation’ and ‘suggestion’ (or similar criteria) have only been used to analyse written peer feedback on academic writing [54,55,56,57] or concept maps [58]. This is why we applied the feedback training to a writing task.

However, to our knowledge there is no research examining the quality of peer feedback provided on tasks other than written tasks. For instance, one study investigated the effect of expert and peer feedback on ratings of students’ communication skills, but not the peer feedback that students provided [11]. In a review of peer feedback during collaborative learning, no studies were found in which faculty evaluated the quality of feedback [9]. Likewise, a recent scoping review on feedback for early career professionals reports no findings on feedback quality [59]. Therefore, peer feedback seems not to have been analysed in learning tasks other than written tasks, although the criteria that can be used to analyse feedback seem to be generic [25, 40,41,42]. This may call for new research into peer feedback in other learning tasks as well, especially in professional learning tasks that students find relevant.

Limitations

There are some limitations to this study. First, not all students who gave informed consent fully completed the peer feedback assignment. A possible reason for this is that students gave peer feedback at later time points, outside of the approved ELO. Still, it should be noted that the majority of the students did give peer feedback in the ELO.

Second, due to the online setting of the peer feedback process, face-to-face feedback dialogue between peers was not possible. Feedback dialogue prevents a unilateral transmission of feedback from the provider to the receiver and can therefore lead to a better shared understanding of the feedback and facilitate acting upon the feedback [60, 61]. However, research shows that students do not frequently engage in online feedback dialogue after giving and receiving peer feedback [62]. Therefore, a future challenge for online peer feedback may be to promote online feedback dialogue between students.

Third, we did not collect feedback from the students on the training or on their perceptions of feedback in general. This would have provided more insight into why the present results were found. Future research should provide more explanatory evidence on why certain effects do or do not occur.

Conclusions

Although modelling good feedback principles and discussing these modelled principles may increase students’ use of positive feedback, students also differ substantially in the amount of peer feedback that they give each other. This suggests that additional incentives are needed to motivate students to give peer feedback. Such an incentive could be to provide feedback training in learning tasks that students perhaps see as more clinically relevant. A further incentive may be to emphasise the utility value of peer feedback as preparation for future teaching practice. Another incentive could be to stress the importance of altruism and professional accountability in the peer feedback process. Future interventions may focus on such incentives in order to encourage high-quality peer feedback. Perhaps the next step is to add an explanation of the importance and relevance of feedback training and apply the feedback training model to a clinical subject.

Availability of data and materials

The anonymised dataset and SPSS syntax are available from the corresponding author upon reasonable request.

Abbreviations

- CAT: Critical Appraisal of a Topic
- ELO: Electronic Learning Environment
- M: Peer modelling without discussion
- MD: Peer modelling plus discussion
- NM: Non-peer modelled example

References

CanMEDS. CanMEDS 2015 Physician Competency Framework. Ottawa: Royal College of Physicians and Surgeons of Canada; 2015 [Available from: http://canmeds.royalcollege.ca/uploads/en/framework/CanMEDS%202015%20Framework_EN_Reduced.pdf].

GMC. Outcomes for graduates. London: General Medical Council; 2018 [Available from: https://www.gmc-uk.org/-/media/documents/outcomes-for-graduates-a4-6_pdf-78952372.pdf].

Rees EL, Quinn PJ, Davies B, Fotheringham V. How does peer teaching compare to faculty teaching? A systematic review and meta-analysis. Med Teach. 2016;38(8):829–37.

Ten Cate O, Durning S. Peer teaching in medical education: Twelve reasons to move from theory to practice. Med Teach. 2007;29(6):591–9.

Dandavino M, Snell L, Wiseman J. Why medical students should learn how to teach. Med Teach. 2007;29(6):558–65.

Amorosa JMH, Mellman LA, Graham MJ. Medical students as teachers: How preclinical teaching opportunities can create an early awareness of the role of physician as teacher. Med Teach. 2011;33(2):137–44.

Ten Cate O. Perspective Paper / Perspektive: Peer teaching: From method to philosophy. Zeitschrift für Evidenz, Fortbildung und Qualität im Gesundheitswesen. 2017;127–128:85–7.

Ten Cate O, Durning S. Dimensions and psychology of peer teaching in medical education. Med Teach. 2007;29(6):546–52.

Lerchenfeldt S, Mi M, Eng M. The utilization of peer feedback during collaborative learning in undergraduate medical education: A systematic review. BMC Med Educ. 2019;19(1):321.

Rees EL, Davies B, Eastwood M. Developing students’ teaching through peer observation and feedback. Perspect Med Educ. 2015;4(5):268–71.

Krause F, Schmalz G, Haak R, Rockenbauch K. The impact of expert- and peer feedback on communication skills of undergraduate dental students - a single-blinded, randomized, controlled clinical trial. Patient Educ Couns. 2017;100(12):2275–82.

Van Popta E, Kral M, Camp G, Martens RL, Simons PRJ. Exploring the value of peer feedback in online learning for the provider. Educ Res Rev. 2017;20:24–34.

Topping K. Peer assessment between students in colleges and universities. Rev Educ Res. 1998;68(3):249–76.

Burgess AW, Roberts C, Black KI, Mellis C. Senior medical student perceived ability and experience in giving peer feedback in formative long case examinations. BMC Med Educ. 2013;13(1):79.

Cho K, MacArthur C. Learning by reviewing. J Educ Psychol. 2011;103(1):73–84.

Li L, Liu X, Steckelberg AL. Assessor or assessee: How student learning improves by giving and receiving peer feedback. Br J Educ Technol. 2010;41(3):525–36.

Lu J, Law N. Online peer assessment: effects of cognitive and affective feedback. Instr Sci. 2012;40(2):257–75.

Lundstrom K, Baker W. To give is better than to receive: The benefits of peer review to the reviewer’s own writing. J Second Lang Writing. 2009;18(1):30–43.

Van Zundert MJ, Sluijsmans DMA, Van Merriënboer JJG. Effective peer assessment processes: Research findings and future directions. Learn Instr. 2010;20(4):270–9.

Chang CY-H. Two decades of research in L2 peer review. J Writing Res. 2016;8(1):81–117.

McConlogue T. Making judgements: Investigating the process of composing and receiving peer feedback. Stud High Educ. 2015;40(9):1495–506.

Alqassab M, Strijbos J-W, Ufer S. Training peer-feedback skills on geometric construction tasks: Role of domain knowledge and peer-feedback levels. Eur J Psychol Educ. 2018;33(1):11–30.

Sluijsmans DMA, Brand-Gruwel S, Van Merriënboer JJG. Peer assessment training in teacher education: Effects on performance and perceptions. Assess Eval Higher Educ. 2002;27(5):443–54.

Gielen M, De Wever B. Structuring peer assessment: Comparing the impact of the degree of structure on peer feedback content. Comput Human Behav. 2015;52:315–25.

Gielen S, Peeters E, Dochy F, Onghena P, Struyven K. Improving the effectiveness of peer feedback for learning. Learn Instr. 2010;20(4):304–15.

Wichmann A, Funk A, Rummel N. Leveraging the potential of peer feedback in an academic writing activity through sense-making support. Eur J Psychol Educ. 2018;33(1):165–84.

Van Gog T, Rummel N. Example-based learning: Integrating cognitive and social-cognitive research perspectives. Educ Psychol Rev. 2010;22(2):155–74.

Schunk DH, Hanson AR. Peer models: Influence on children’s self-efficacy and achievement. J Educ Psychol. 1985;77(3):313–22.

Schunk DH, Hanson AR, Cox PD. Peer-model attributes and children’s achievement behaviors. J Educ Psychol. 1987;79(1):54–61.

Braaksma MAH, Rijlaarsdam G, van den Bergh H, van Hout-Wolters BHAM. Observational learning and its effects on the orchestration of writing processes. Cogn Instr. 2004;22(1):1–36.

Zimmerman BJ, Kitsantas A. Acquiring writing revision and self-regulatory skill through observation and emulation. J Educ Psychol. 2002;94(4):660–8.

Braaksma MAH, Rijlaarsdam G, van den Bergh H. Observational learning and the effects of model-observer similarity. J Educ Psychol. 2002;94(2):405–15.

Van Steendam E, Rijlaarsdam G, Sercu L, Van den Bergh H. The effect of instruction type and dyadic or individual emulation on the quality of higher-order peer feedback in EFL. Learn Instr. 2010;20(4):316–27.

Slavin RE, Hurley EA, Chamberlain A. Cooperative learning and achievement: Theory and research. In: Miller GE, Reynolds WM, editors. Handbook of psychology: Educational psychology. Vol. 7. Hoboken: Wiley; 2003. pp. 177–98.

O’Donnell AM. The role of peers and group learning. In: Winne PH, Alexander PA, editors. Handbook of educational psychology. Mahwah: Erlbaum; 2006. pp. 781–802.

Chi MTH. Active-constructive-interactive: A conceptual framework for differentiating learning activities. Topics Cogn Sci. 2009;1(1):73–105.

Chi MTH, Wylie R. The ICAP Framework: Linking cognitive engagement to active learning outcomes. Educ Psychol. 2014;49(4):219–43.

Webb NM, Troper JD, Fall R. Constructive activity and learning in collaborative small groups. J Educ Psychol. 1995;87(3):406–23.

Fetters L, Figueiredo EM, Keane-Miller D, McSweeney DJ, Tsao C-C. Critically Appraised Topics. Pediatr Phys Ther. 2004;16(1):19–21.

Hattie J, Timperley H. The power of feedback. Rev Educ Res. 2007;77(1):81–112.

Kulhavy RW, Stock WA. Feedback in written instruction: The place of response certitude. Educ Psychol Rev. 1989;1(4):279–308.

Van den Berg I, Admiraal W, Pilot A. Designing student peer assessment in higher education: analysis of written and oral peer feedback. Teach High Educ. 2006;11(2):135–47.

Field A. Discovering statistics using SPSS. 3rd ed. London: SAGE; 2009. 779 p.

Cartney P. Exploring the use of peer assessment as a vehicle for closing the gap between feedback given and feedback used. Assess Eval High Educ. 2010;35(5):551–64.

Wigfield A, Eccles JS. Expectancy–value theory of achievement motivation. Contemp Educ Psychol. 2000;25(1):68–81.

Huisman B, Saab N, Van Driel J, Van Den Broek P. A questionnaire to assess students’ beliefs about peer-feedback. Innov Educ Teach Int. 2019:1–11.

Hulleman CS, Durik AM, Schweigert SB, Harackiewicz JM. Task values, achievement goals, and interest: An integrative analysis. J Educ Psychol. 2008;100(2):398–416.

Nicol DJ, Macfarlane-Dick D. Formative assessment and self‐regulated learning: a model and seven principles of good feedback practice. Stud High Educ. 2006;31(2):199–218.

Evans C. Making sense of assessment feedback in higher education. Rev Educ Res. 2013;83(1):70–120.

Kluger AN, DeNisi A. The effects of feedback interventions on performance: A historical review, a meta-analysis, and a preliminary feedback intervention theory. Psychol Bull. 1996;119(2):254–84.

van Blankenstein FM, Truțescu G-O, van der Rijst RM, Saab N. Immediate and delayed effects of a modeling example on the application of principles of good feedback practice: A quasi-experimental study. Instr Sci. 2019.

Molloy E, Ajjawi R, Bearman M, Noble C, Rudland J, Ryan A. Challenging feedback myths: Values, learner involvement and promoting effects beyond the immediate task. Med Educ. 2019.

Engerer C, Berberat PO, Dinkel A, Rudolph B, Sattel H, Wuensch A. Specific feedback makes medical students better communicators. BMC Med Educ. 2019;19(1):51.

Huisman B, Saab N, van Driel J, van den Broek P. Peer feedback on academic writing: Undergraduate students’ peer feedback role, peer feedback perceptions and essay performance. Assess Eval High Educ. 2018;43(6):955–68.

Zhang F, Schunn CD, Baikadi A. Charting the routes to revision: An interplay of writing goals, peer comments, and self-reflections from peer reviews. Instr Sci. 2017;45(5):679–707.

Gielen M, De Wever B. Scripting the role of assessor and assessee in peer assessment in a wiki environment: Impact on peer feedback quality and product improvement. Comput Educ. 2015;88:370–86.

Voet M, Gielen M, Boelens R, De Wever B. Using feedback requests to actively involve assessees in peer assessment: effects on the assessor’s feedback content and assessee’s agreement with feedback. Eur J Psychol Educ. 2018;33(1):145–64.

Camarata T, Slieman TA. Improving student feedback quality: A simple model using peer review and feedback rubrics. J Med Educ Curricular Dev. 2020;7.

Mattick K, Brennan N, Briscoe S, Papoutsi C, Pearson M. Optimising feedback for early career professionals: A scoping review and new framework. Med Educ. 2019;53(4):355–68.

Boud D, Molloy E. Rethinking models of feedback for learning: The challenge of design. Assess Eval High Educ. 2013;38(6):698–712.

Nicol D. From monologue to dialogue: Improving written feedback processes in mass higher education. Assess Eval High Educ. 2010;35(5):501–17.

Filius RM, de Kleijn RAM, Uijl SG, Prins FJ, van Rijen HVM, Grobbee DE. Strengthening dialogic peer feedback aiming for deep learning in SPOCs. Comput Educ. 2018;125:86–100.

Acknowledgements

The authors would like to thank Anouk Bakker for coding the peer feedback data.

Funding

No external funding was acquired for this study.

Author information

Contributions

FvB led the experiment and the data analysis and wrote the manuscript. JS contributed to the study design and to the teaching content used; he has read and approved the final manuscript. NS contributed to the conception and design of the study and to the interpretation of the data; in addition, she contributed to writing the manuscript and has read and approved the final manuscript. PS contributed to analysing and interpreting the data, performing the statistical analyses and writing the manuscript; he has read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was approved by the Leiden University Graduate School of Teaching Research Ethics Committee (reference number: IREC_ICLON 2017-17). All participants gave written informed consent to participate in the study.

Consent for publication

All the participants in this study signed a letter in which they gave informed consent to analyse their peer feedback and to publish the results of these analyses. In the letter, they were informed about how their peer feedback would be coded, where this data would be stored and who had access to the data.

Competing interests

No competing interests are declared for this study.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

van Blankenstein, F.M., O’Sullivan, J.F., Saab, N. et al. The effect of peer modelling and discussing modelled feedback principles on medical students’ feedback skills: a quasi-experimental study. BMC Med Educ 21, 332 (2021). https://doi.org/10.1186/s12909-021-02755-z

DOI: https://doi.org/10.1186/s12909-021-02755-z