Abstract

Purpose

Exercise is efficacious for people living after a cancer diagnosis. However, implementation of exercise interventions in real-world settings is challenging. Implementation outcomes are defined as ‘the effects of deliberate and purposive actions to implement new treatments, practices, and services’. Measuring implementation outcomes is a practical way of evaluating implementation success. This systematic review explores the implementation outcomes of exercise interventions evaluated under real-world conditions for cancer care.

Methods

Using PRISMA guidelines, an electronic database search of Medline, PsycINFO, CINAHL, Web of Science, SPORTDiscus, Scopus and the Cochrane Central Register of Controlled Trials was conducted for studies published between January 2000 and February 2020. The Moving through Cancer registry was hand-searched. The Implementation Outcomes Framework guided data extraction. Inclusion criteria were adult populations with a cancer diagnosis. Efficacy studies were excluded.

Results

Thirty-seven articles that described 31 unique programs met the inclusion criteria. Implementation outcomes commonly evaluated were feasibility (unique programs n = 17, 54.8%) and adoption (unique programs n = 14, 45.2%). Interventions were typically delivered in the community (unique programs n = 17, 58.6%), in groups (unique programs n = 14, 48.3%) and supervised by a qualified health professional (unique programs n = 14, 48.3%). Implementation outcomes infrequently evaluated were penetration (unique programs n = 1, 3.2%) and sustainability (unique programs n = 1, 3.2%).

Conclusions

Exercise studies need to measure and evaluate implementation outcomes under real-world conditions. Robust measurement and reporting of implementation outcomes can help to identify what strategies are essential for successful implementation of exercise interventions.

Implications for cancer survivors

Understanding how exercise interventions can be successfully implemented is important so that people living after a cancer diagnosis can derive the benefits of exercise.


Background

Cancer is a leading cause of disease burden worldwide. In 2020, 19.2 million new cases of cancer and 9.9 million cancer-related deaths occurred globally [1]. Cancer rates are projected to rise steadily in the coming decades, in part due to population growth, ageing and more people surviving a cancer diagnosis because of improvements in early detection and treatment advances [2, 3].

Exercise is important in addressing the sequelae of disease and the impacts of a cancer diagnosis, as demonstrated by a robust efficacy evidence base of systematic reviews, meta-analyses and meta-reviews [4,5,6,7,8,9,10,11]. High-quality or ‘level one’ evidence, as gathered through systematic reviews and meta-analyses, informs the development of clinical practice guidelines (CPGs). CPGs are evidence-based statements that include recommendations to optimise patient care [12]. In 2019, the American College of Sports Medicine (ACSM) updated its evidence-based guidance on exercise testing, prescription and delivery for cancer survivors. The resulting consensus statement provides exercise prescription recommendations for common cancer-related health outcomes, including depression, fatigue and quality of life [13]. The ACSM is one of many organisations worldwide that recommend exercise be incorporated into routine care for people with cancer [14,15,16,17].

The development of CPGs, whilst fundamental to informing evidence-based care, is unlikely to directly change clinical practice [18]. To facilitate the implementation of their consensus statement, ACSM published additional resources describing how implementation can be fostered [19] and created the Moving through Cancer registry to connect people with cancer to local exercise services [20]. This signifies greater attention to translating research findings into practice and moving beyond demonstrating exercise efficacy for different cancer types.

Most research that establishes the efficacy of health interventions is conducted in tightly controlled research settings, focusing on internal validity [21, 22]. Efficacy studies exclude many potential participants in an attempt to recruit a homogeneous sample. Such studies are often well funded and have access to the resources needed to deliver the evidence-based intervention, health program or innovation (hereafter ‘intervention’) with high fidelity to the described study protocol. Further, research staff often take part in extensive training to deliver the intervention [23, 24]. These conditions rarely reflect those under which an intervention is implemented in healthcare settings, where staff may have limited time to instruct patients during clinical consultations, inadequate training to prescribe exercise or insufficient physical space to establish an exercise intervention [25]. It is common for efficacious interventions to fail in practice [26] or to have reduced clinical impact when replicated at scale to reach more of the population for which they are intended [27, 28]. Pragmatic study designs seek to address these issues by answering the question “Does this intervention work under usual conditions?” [29]. That is, they seek to reflect population diversity in study samples and to explore whether it is realistic to implement the intervention. Despite the growth in studies of exercise and cancer in recent years, relatively little is known about the outcomes of exercise interventions when implemented using pragmatic study designs, or about the ‘external validity’ of how best to implement and evaluate exercise interventions in practice [22].

Proctor and colleagues [30] have developed an Implementation Outcomes Framework to evaluate implementation success. If implementation is successful, the proposed theory of change suggests this contributes to desired clinical or health service outcomes (e.g., a safe, efficient service that successfully addresses patient symptomology). Evaluating the outcomes of implementation efforts can also reduce the risk of incorrectly concluding that an intervention is ineffective, when in fact, poor implementation may be the most significant contributor to failure [30, 31]. Implementation science frameworks that evaluate implementation outcomes may therefore be useful to determine whether failure is due to the intervention or the implementation process [32, 33]. Proctor and colleagues [30] define eight implementation outcomes for this purpose: acceptability, adoption, appropriateness, cost, feasibility, fidelity, penetration and sustainability.

The Implementation Outcomes Framework was used to inform the outcomes of interest for this review. The aim of this review was to examine the implementation outcomes that are evaluated under real-world conditions when exercise interventions are implemented for the care of people diagnosed with cancer.

Table 1 provides a description of how the implementation outcomes were operationalised in this study.

Methods

Protocol and registration

This review was registered in the PROSPERO database (CRD42019123791) and conducted in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement [34].

The search strategy was developed in consultation with a librarian experienced in systematic reviews. First, the search strategy of a recent meta-review that summarised the efficacy of exercise in cancer was replicated and augmented with additional search terms for exercise (e.g., physical activity) [5]. Second, this search was combined with terms derived from the Implementation Outcomes Framework (e.g., adoption, acceptability) [35]. Finally, the reference lists of relevant articles and the Moving through Cancer program registry were screened to identify potentially relevant studies [20, 36, 37]. The Moving through Cancer registry was selected for screening because it is a comprehensive, publicly accessible database of established exercise interventions for people diagnosed with cancer and supports the implementation of the ACSM recommendations. Details of the search strategy are provided in Supplementary Table 1.

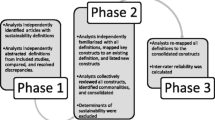

An electronic database search of Medline, PsycINFO, CINAHL, Web of Science, SPORTDiscus, Scopus and the Cochrane Central Register of Controlled Trials was conducted for the period January 2000 to 6 February 2020. Two reviewers (LC, JR) independently completed the title and abstract screening and the full-text review. Disagreements were resolved through discussion until consensus was reached; where agreement could not be reached, a third reviewer (EZ) was available to inform the final decision. Covidence software was used to manage the screening and data extraction process [38].

Definition of terms

Physical activity is defined as “any bodily movement produced by skeletal muscles that requires energy expenditure” [39]. Exercise is “a subset of physical activity that is planned, structured, and repetitive and has as a final or an intermediate objective the improvement or maintenance of physical fitness” [39].

Inclusion and exclusion criteria

The inclusion and exclusion criteria for this review are summarised in Table 2. All types of physical activity and/or exercise (for example, aerobic, resistance, yoga, tai chi, Pilates, high-intensity interval training) were included. No restrictions were placed on how exercise was delivered (for example, supervised or unsupervised; home-based or community/hospital-based settings; group or individual classes; face-to-face or virtual [online/video]). Studies at translational stages up to and including efficacy studies were excluded; as such, only studies described as effectiveness or implementation/dissemination studies were included. Definitions used to categorise studies are supplied in Supplementary Table 2.

Data extraction and quality assessment

A data extraction tool was developed with reference to the published literature [41]. One author (LC) extracted data on: study type (effectiveness or implementation/dissemination study), implementation outcome, the level at which the implementation outcome was measured (patient, provider, intervention, organisation or a combination) and the exercise intervention composition and setting [19]. The Consensus on Exercise Reporting Template (CERT template) provides reporting recommendations and was used to detail the composition of exercise interventions [42].

Study quality was assessed using one of two tools. The Joanna Briggs Institute (JBI) suite of Critical Appraisal Tools was used to assess quality in quantitative and qualitative studies, with the relevant JBI tool selected for each study based on its design [43]. The Mixed Methods Appraisal Tool was used to critically appraise studies that described a mixed-methods design [44]. The outcomes of the quality assessment are provided in Supplementary Table 3. An independent compliance check of data extraction and quality assessment was completed by two authors (NR, EZ) for 10% of the included studies.

Data synthesis and analysis

The Implementation Outcomes Framework guided the initial data synthesis [30]. Data were extracted, collated and analysed based upon the eight implementation outcomes. Quantitative and qualitative results were extracted and analysed concurrently and integrated to produce the final synthesis. Descriptive statistics and frequencies (using the total possible number of outcomes as the denominator) were calculated to synthesise the study type and the total number of implementation outcomes explored in the included studies.
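As an illustration of the frequency calculation described above, the Python sketch below counts how many unique programs evaluated each of the eight implementation outcomes. It assumes a hypothetical data format, a mapping from a program identifier to the set of outcomes that program evaluated, and assumes the denominator is the number of unique programs. Under that assumption it reproduces the percentages reported in the Results, for example 17 of 31 programs evaluating feasibility (54.8%); the synthetic input only mirrors those counts and is not the extracted data.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# The eight implementation outcomes defined by Proctor and colleagues.
OUTCOMES = (
    "acceptability", "adoption", "appropriateness", "cost",
    "feasibility", "fidelity", "penetration", "sustainability",
)


def outcome_frequencies(
    program_outcomes: Dict[str, Iterable[str]],
) -> Dict[str, Tuple[int, float]]:
    """Return {outcome: (n programs, % of programs)} for each outcome.

    Assumed input (hypothetical format): program identifier -> outcomes it
    evaluated. The denominator is the number of unique programs.
    """
    total = len(program_outcomes)
    # set() ensures an outcome is counted at most once per program.
    counts = Counter(o for outs in program_outcomes.values() for o in set(outs))
    return {o: (counts[o], round(100 * counts[o] / total, 1)) for o in OUTCOMES}


# Synthetic input that mirrors the reported counts (17 and 14 of 31 programs).
programs = {f"program-{i}": set() for i in range(31)}
for i in range(17):
    programs[f"program-{i}"].add("feasibility")
for i in range(14):
    programs[f"program-{i}"].add("adoption")

freqs = outcome_frequencies(programs)
print(freqs["feasibility"])  # (17, 54.8)
print(freqs["adoption"])     # (14, 45.2)
```

Using unique programs rather than articles as the denominator prevents a program described in several publications from being counted more than once.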

Results

Search results

A total of 7123 articles were identified through the database search. After de-duplication, 4563 articles remained and 11 additional citations were identified through the manual search of reference lists and the Moving through Cancer exercise program registry [45,46,47,48,49,50,51,52,53,54,55]. After full text screening, 37 articles were included in the final review, which represented 31 unique programs. Descriptive statistics reported within the manuscript reflect outcomes for unique programs. Figure 1 presents a flow diagram for the results. Supplementary Table 4 provides a list of studies that were excluded after full text review and reasons for exclusion.
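Because 37 articles described 31 unique programs, article-level data need to be merged before program-level frequencies are calculated. The sketch below shows one way to do this. The record layout (article, program, outcomes) is hypothetical, and the merging rule, counting a program as having evaluated an outcome if any of its articles did, is our assumption rather than a rule stated in the review.

```python
from typing import Dict, Iterable, Set, Tuple

# Hypothetical record layout: (article_id, program_id, outcomes reported)
ArticleRecord = Tuple[str, str, Iterable[str]]


def collapse_to_programs(records: Iterable[ArticleRecord]) -> Dict[str, Set[str]]:
    """Merge article-level records into one entry per unique program.

    Assumption: a program evaluated an outcome if ANY of its articles did,
    so outcomes are combined with a set union.
    """
    programs: Dict[str, Set[str]] = {}
    for _article_id, program_id, outcomes in records:
        programs.setdefault(program_id, set()).update(outcomes)
    return programs


# Invented records, for illustration only.
records = [
    ("article-1", "program-A", ["feasibility"]),
    ("article-2", "program-A", ["adoption", "cost"]),  # second paper on the same program
    ("article-3", "program-B", ["feasibility"]),
]
programs = collapse_to_programs(records)
print(len(programs))                  # 2
print(sorted(programs["program-A"]))  # ['adoption', 'cost', 'feasibility']
```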

Table 3 provides a summary of the characteristics of the studies that met the inclusion criteria.

A collated summary of the included studies is provided in Table 4 and highlights the diversity in study design and composition of exercise interventions. Most interventions (n = 26, 89.7%) included a combination of aerobic, resistance and stretching exercises. Interventions were most often delivered to people with any cancer type (n = 16, 55.2%), using a group-based structure (n = 14, 48.3%), supervised by a qualified health professional (physiotherapist, exercise physiologists) (n = 14, 48.3%) and based in a community setting (n = 17, 58.6%). Of the 58.6% of programs that were based in the community, 27.6% (n = 8) were in specialist exercise clinics and 24.1% (n = 7) were in fitness centres and 6.9% (n = 2) used a combination of specialist clinics and fitness centres. Definitions for the settings are supplied in Table 3.

The results for each implementation outcome and study type are summarised in Table 5. The most common implementation outcomes assessed were feasibility (n = 17, 54.8%) and adoption (n = 14, 45.2%) of exercise interventions. The most common classification was effectiveness study (n = 15, 48.4%).

The results are expanded upon in Supplementary Table 5 and below.

Acceptability

Six studies reported on the acceptability of exercise interventions for people with cancer, measured at the patient level [53, 67, 70, 73, 76, 77]. Patient satisfaction (variously defined as enjoying the program or finding it useful/valuable) was generally high, with five studies reporting acceptability levels above 80% [53, 70, 73, 76, 77]. None of the included studies reported on the acceptability of exercise interventions at the healthcare professional level.

Adoption

Fourteen studies reported on exercise intervention adoption [49, 50, 55, 56, 58, 61, 63, 65, 69, 74,75,76,77, 81]. Nine studies assessed qualitative barriers and enablers to intervention adoption (refer to Supplementary Table 5) but did not measure adoption [50, 55, 56, 61, 69, 74,75,76, 81]. Four studies explored uptake by organisations [49, 63, 65, 77] and one study assessed both organisational uptake and qualitative barriers to adoption [58]. Of the five studies that measured adoption, two reported the percentage of organisations nationally that had adopted exercise oncology programs: 60% of hospitals in Belgium had adopted programs, and 18% of YMCAs in the United States delivered a specific program (i.e., Livestrong at the YMCA). The remaining three studies that measured organisational adoption reported only the raw number of organisations delivering a program, without reference to the total number of possible delivery organisations (e.g., 40 sites across Australia). None of the identified studies reported overall program uptake rates by healthcare providers, such as the total number of professionals referring patients to exercise.
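The distinction drawn above, between an adoption rate and a raw count of sites, can be made explicit in analysis code. In the hypothetical helper below, a rate is returned only when the total number of eligible organisations (or providers) is known; otherwise the function returns None rather than a misleading figure. The function name and inputs are illustrative assumptions, and the example numbers are invented, not taken from the included studies.

```python
from typing import Optional


def adoption_rate(adopting: int, eligible: Optional[int]) -> Optional[float]:
    """Percentage of eligible organisations (or providers) delivering a program.

    Returns None when the eligible total is unknown, because a raw count of
    adopting sites cannot be expressed as a rate without a denominator.
    """
    if eligible is None:
        return None
    if eligible <= 0 or not 0 <= adopting <= eligible:
        raise ValueError("expected eligible > 0 and 0 <= adopting <= eligible")
    return round(100 * adopting / eligible, 1)


# Invented example figures, for illustration only.
print(adoption_rate(12, 20))    # 60.0: count reported with a known denominator
print(adoption_rate(40, None))  # None: raw count of sites, denominator unreported
```

The same calculation applies to provider-level adoption (e.g., referring clinicians out of all eligible clinicians), which none of the included studies reported.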

Appropriateness

Thirteen studies reported on the appropriateness of exercise interventions [45, 48, 51, 53, 56, 57, 62, 67, 68, 74, 76, 79, 80], representing 11 unique programs. Six studies [45, 51, 56, 57, 62, 74] reported that appropriateness was established by testing the efficacy of the exercise intervention in the target population (in a previous efficacy trial). Five studies [48, 67, 68, 79, 80] reported using multiple data sources (including a literature review, reference to established models of care and/or review of barriers and enablers) to establish appropriateness, with only two of these studies directly engaging with program staff through the development phase [48, 80]. Two studies stated a phased approach to implementation (a pilot period completed prior to full intervention roll-out) was undertaken to establish appropriateness of the intervention [53, 76].

Cost

Twelve studies reported on costs associated with implementation [45, 48, 56, 60, 61, 63, 65,66,67, 72, 74, 75], representing 11 unique programs. Two studies estimated intervention implementation costs in the set-up year (e.g., purchase of computers and equipment, cleaning, personnel) at $US44,821 and $US46,213, respectively [45, 67]. One study reported the implementation cost to be approximately $350 per participant [74]. Four studies reported that philanthropic donations were used to support the ongoing organisational costs of the exercise intervention [61, 63, 65, 75]. Hybrid funding models subsidised the costs of intervention use, combining fee-for-service (an upfront, set cost per session) and subsidised costs (total session costs offset through donations or sponsorship) [48, 56, 60, 63, 65, 66, 72, 75]. Studies from the United States and Canada were the only ones to report on costs, which were measured as direct healthcare costs.

Feasibility

Twenty-one studies reported on the feasibility of delivering interventions, operationalised as attendance and/or attrition rates for the exercise interventions [45,46,47,48,49,50,51,52, 54, 59, 62,63,64,65,66,67, 70,71,72, 77, 78], representing 17 unique programs. Attrition rates ranged from 22 to 56% across nine studies, with program discontinuation measured at time points between 12 weeks and 6 months. The mean attrition rate for exercise interventions was 38.4% (n = 7) [46, 47, 50, 52, 59, 63, 64, 67, 77]. Attendance rates ranged from 30 to 83% across 16 studies, with a mean attendance rate of 63.7% (n = 15) [45, 46, 48, 49, 51, 54, 62, 65,66,67, 70,71,72, 77, 78].
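The feasibility measures above can be expressed as simple proportions. The sketch below assumes that attrition is the share of enrolled participants who discontinued and that attendance is the share of offered sessions that were attended; these definitions, the function names and the example values are assumptions, as the included studies defined the measures in varying ways. It also shows why the choice between an unweighted mean of study-level rates and a mean weighted by sample size matters, since the pooling method is not specified here.

```python
from statistics import mean
from typing import Sequence, Tuple


def attrition_rate(enrolled: int, discontinued: int) -> float:
    """Percentage of enrolled participants who discontinued the program."""
    return 100 * discontinued / enrolled


def attendance_rate(sessions_offered: int, sessions_attended: int) -> float:
    """Percentage of offered sessions that were attended."""
    return 100 * sessions_attended / sessions_offered


def pooled_means(rates: Sequence[float], sample_sizes: Sequence[int]) -> Tuple[float, float]:
    """Return (unweighted mean, sample-size-weighted mean) of study-level rates."""
    unweighted = mean(rates)
    weighted = sum(r * n for r, n in zip(rates, sample_sizes)) / sum(sample_sizes)
    return round(unweighted, 1), round(weighted, 1)


# Invented study-level figures, for illustration only.
rates = [attrition_rate(50, 11), attrition_rate(200, 112)]  # 22.0% and 56.0%
print(pooled_means(rates, [50, 200]))  # (39.0, 49.2)
```

With these invented numbers the two means differ by about 10 percentage points, so stating which pooling method was used would aid comparison across reviews.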

Fidelity

Six studies reported that aspects of fidelity were monitored with reference to a documented, pre-planned exercise and cancer protocol [45,46,47,48, 54, 65]. Fidelity is typically measured by comparing the original protocol with what is delivered, in terms of: 1) adherence to the protocol; 2) the dose or amount of the program delivered (e.g., frequency, duration), with consideration of the core components that establish intervention effectiveness; and 3) the quality of program delivery [82]. One study measured both adherence and quality, reporting that football coaches' adherence to the documented protocol was approximately 76% and that program quality was achieved through staff training [45]. A further five studies reported that the quality of program delivery was achieved through staff training and/or certification to deliver the program as prescribed [46,47,48, 54, 65]. No studies were identified that monitored the amount of program delivered relative to the pre-planned protocol.
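Two of the fidelity dimensions described above, adherence and dose, can in principle be quantified from session-level delivery records. The sketch below is a minimal illustration assuming a hypothetical record that logs, for each session, the protocol's planned core components and minutes alongside what was actually delivered; the record fields and function name are assumptions. Quality of delivery is usually rated separately (e.g., by an observer) and is not modelled here.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple


@dataclass
class SessionRecord:
    """Hypothetical per-session delivery log compared against the protocol."""
    components_planned: int    # core components the protocol specifies
    components_delivered: int  # components delivered as specified
    minutes_planned: int
    minutes_delivered: int


def fidelity_summary(sessions: Iterable[SessionRecord]) -> Tuple[float, float]:
    """Return (adherence %, dose %) pooled across all logged sessions."""
    sessions = list(sessions)
    adherence = 100 * sum(s.components_delivered for s in sessions) / sum(
        s.components_planned for s in sessions
    )
    # Dose can exceed 100% if sessions ran longer than planned.
    dose = 100 * sum(s.minutes_delivered for s in sessions) / sum(
        s.minutes_planned for s in sessions
    )
    return round(adherence, 1), round(dose, 1)


# Invented delivery log, for illustration only.
log = [SessionRecord(5, 4, 60, 60), SessionRecord(5, 5, 60, 45)]
print(fidelity_summary(log))  # (90.0, 87.5)
```

Pooling across sessions, rather than averaging per-session percentages, gives longer or more component-heavy sessions proportionally more weight; either choice is defensible if reported.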

Penetration

One study reported on exercise intervention penetration, defined as the number of patients referred to the intervention relative to the total eligible patient population [67]. This study, which evaluated the implementation of an exercise intervention for people diagnosed with breast cancer, reported that 53% of eligible patients were referred to the program [67].

Sustainability

One study reported on the sustainability of the exercise intervention within the organisational setting [64]. The authors also collected secondary outcome data about sustainability at the patient level, defined as whether the exercise sustained (> 12 months) the desired health outcomes for the patient [64]. Sustaining the program as part of normal organisational operations was attributed to addressing common challenges people diagnosed with cancer face in being active. This included providing tailored exercise by trained staff and establishing a not-for-profit entity to provide these services for free in the community [64]. The secondary outcome identified that the exercise intervention was effective in sustaining improvements to quality of life for patients [64].

Quality assessment

The quality of the included studies varied (refer to Supplementary Table 3). Studies were generally downgraded because they were not sufficiently powered to allow confident inferences about whole populations and/or did not document possible differences between groups arising from participants lost to follow-up. Further, many (64.5%, n = 6) of the studies classified as implementation studies were descriptive, with no objective measure of the implementation outcomes.

Discussion

This review identifies that exercise interventions are being implemented for people diagnosed with cancer using pragmatic study designs, but there is no consensus about how successful implementation should be defined, measured and reported. Measuring implementation outcomes using an established framework can generate new knowledge in this area by conceptualising and defining what constitutes success [33]. To the best of our knowledge, this is the first systematic review to explore implementation outcomes in exercise and cancer using the Implementation Outcomes Framework [30]. The included studies represent diverse interventions delivered across different settings and for various cancer types. For example, interventions involving yoga, sport, and aerobic and resistance exercise were identified. These interventions were delivered in community or hospital settings, and program eligibility (based on cancer diagnosis) ranged from any cancer type to a single, specific cancer type. Most studies used a quasi-experimental design to test the effectiveness of the intervention, with descriptive designs more common in studies classified as implementation studies. The implementation outcomes most frequently assessed in the eligible studies were feasibility and adoption. Fidelity of intervention delivery was infrequently reported, and the true cost of implementation remains relatively unknown. Penetration and sustainability were the least frequently assessed implementation outcomes.

More than half of the included programs (n = 17, 54.8%) measured feasibility. Feasibility may have been measured more often than other implementation outcomes because of its interdependence with the clinical outcomes of exercise interventions (e.g., patients must adhere to the intervention to derive the desired clinical effect) and its ease of collection (e.g., staff can record attendance). It was also one of the few implementation outcomes explored at the patient level, through patient attendance and/or attrition rates, although factors at other levels can influence this outcome (e.g., resources provided by the organisation or the expertise of healthcare providers). Almost half the studies in this review were classified as effectiveness studies. Effectiveness studies typically focus on patient outcomes [83], which may explain the emphasis on patient-level outcomes in the included studies. Whilst outside the scope of this review, future studies should explore the feasibility of exercise interventions for other stakeholders, such as those in non-clinical roles [84]; for example, health administrators who fund exercise interventions and policy makers who shape the strategic policy environment in cancer care. Feasibility of exercise programs for program co-ordinators has been explored in the Canadian setting [84]; however, more research is needed. Successful implementation involves multiple stakeholders, and exercise services that appear feasible for patients may not be feasible for funders or policy makers. Measuring feasibility at these levels would also improve consistency with Proctor’s definition of feasibility, which suggests measurement at the provider, organisation or setting level [30].

Some aspects of adoption were evaluated in the included studies, including the barriers and enablers that impact implementation and organisational uptake rates. Despite this, no studies were identified that measured overall adoption rates by healthcare providers. Measuring the proportion of healthcare providers that adopt the intervention could provide better insights into referral patterns through identifying who is making (and not making) referrals. Further, only the study by Rogers and colleagues [74] applied an implementation science framework to collate the adoption barriers and enablers. Implementation science frameworks can guide the comprehensive compilation of factors that influence implementation [32]. Subsequent research should build on the work of Rogers and colleagues to identify and test the effectiveness (and cost) of different strategies that can mitigate common implementation barriers. This may include the effectiveness of different implementation strategies that can facilitate systematic, routine referral by healthcare providers. A recently validated questionnaire completed by healthcare providers may assist in identifying relevant strategies specific to cancer and exercise [85].

Including a cost evaluation for these strategies would address another gap identified through this review. No studies were identified that measured the cost of implementation strategies. Providing this information would enable policymakers to make informed decisions about the sustainable funding of exercise interventions. Further, evidence suggests implementation strategies, such as staff training, can increase the likelihood of successful implementation [86, 87]. Implementation strategies are the actions undertaken to bring about the change that produces the desired implementation outcome [88]. Conceptually, within implementation research, they sit between the intervention and the outcome and are the focus of empirical testing [89]. Most of the articles categorised as implementation studies in this review were descriptive and did not empirically test implementation strategies. Further, of the 37 included articles, only three were randomised controlled trials (representing 2 unique programs), and all were described as effectiveness trials. Whilst the utility of randomised controlled trials for implementation research is contested [90], there is a need for implementation studies that use experimental designs to rigorously test strategies [91].

Another important finding of this review was that fidelity is infrequently measured, with the quality of program delivery the most frequently assessed component. Whilst accurately measuring fidelity is a challenge [82], it typically considers compliance with the intervention protocol and adaptations to this protocol (based on the setting or population). Compliance with the intervention protocol was difficult to establish. Most programs (n = 25, 80.6%) in this review were tailored, which is recommended at the individual level to ensure exercise programs are suitable for participants [13]. What remains unclear is the extent and type of tailoring of intervention components, and whether tailoring extended to significant changes that could be considered ‘adaptations’ to the core elements of the program (consistent with Proctor’s definition). Without this information it is difficult to accurately measure the fidelity of program delivery. Future studies should report in more detail how tailoring alters an intervention, and should specify whether these changes amount to significant program adaptations and how they affect fidelity of delivery. For example, Beidas and colleagues reported three changes to their program (training staff, adding a program co-ordinator and implementing phone call reminders to increase uptake) [56]; these changes formed part of a barrier and enabler analysis but were not related back to an implementation outcome such as fidelity.

A major finding of this review relates to the later stages of implementation. Very few studies evaluated penetration and sustainability, indicating limited knowledge about how exercise interventions are continued after initial implementation efforts cease. Evidence suggests that many interventions are not sustained, or only parts of an intervention are sustained [40]. This can contribute to resource waste and delivery of ineffective interventions. More research is needed to investigate how interventions are integrated within organisational activities and sustained over time. This is particularly important given that sustaining interventions is a dynamic process that requires repeated and continued attention [92].

This review was guided by the Implementation Outcomes Framework. Other studies in exercise and cancer have used similar outcome frameworks to explore the translation potential of community-based exercise interventions [36], interventions for a specific cancer type (breast cancer) [93, 94] and the sustainability of interventions [95]. Like Jankowski and colleagues [95], our review confirmed a paucity of research exploring organisational-level factors that affect the sustainability of exercise interventions. However, our review extends current knowledge beyond identifying adoption barriers and enablers [93] and organisational uptake rates [36] by exploring overall adoption rates among healthcare providers. Additionally, previous research has produced conflicting results regarding reach and the representativeness of study participants relative to the broader population [93, 94]. The one study in this review that measured penetration found differences in intervention reach between patients who were and were not referred to the intervention [67], suggesting possible referral bias. Furthermore, despite the gaps in measuring and reporting implementation outcomes, effectiveness/implementation study protocols that plan to incorporate these outcomes were identified through the screening process [96,97,98]. This suggests researchers are recognising the value of measuring implementation success using established outcome frameworks [30, 99]. This type of research will support the translation of research findings into practice, as advocated by the ACSM and other international health organisations.

This review is not without limitations. First, it was challenging to capture all relevant studies because of inconsistent terminology. For example, in cancer care settings exercise may be included within a rehabilitation program; however, we did not include rehabilitation as a search term because of its generic nature. Several strategies were employed to overcome inconsistencies in terminology, including hand-searching the Moving through Cancer exercise program registry. Second, it was difficult to delineate between efficacy and effectiveness studies. An existing categorisation was used to define studies [100]; however, some studies described by their authors as pragmatic employed methods synonymous with efficacy studies and were therefore excluded. Further, there is a lack of quality assessment tools designed specifically for implementation study designs, so some standard quality assessment items were not applicable to the eligible studies. Third, we excluded studies of people specifically receiving end-of-life care, as distinct from long-term maintenance therapies. Finally, this review identified relatively few unique exercise interventions that were exclusively categorised as either effectiveness or implementation studies. In some cases, a single program was evaluated at multiple time points, leading to multiple publications on the same program. As such, caution should be used when drawing conclusions from these findings.

The review results suggest exercise interventions may be successfully implemented; however, relatively little information is published about how successful implementation is defined, measured and reported. This review examined all of Proctor et al.'s implementation outcomes. Future work should build on this review by investigating each implementation outcome in greater detail and across all levels of implementation (such as the healthcare provider, organisation and policy levels). Currently, few data exist to: 1) quantify how many providers are adopting exercise interventions; 2) identify what proportion of the total eligible patient population is being referred to interventions; 3) define the total cost of implementation (including the cost of implementation strategies); and 4) understand how to sustain interventions over time. These outcomes become more valuable as we shift attention to the implementation strategies used in practice. Measures should also be augmented with qualitative data about how these outcomes were achieved. This is particularly evident for feasibility, where outcomes varied despite a high level of measurement. Further work is required to understand how some interventions achieved higher attendance and lower attrition; the actions that led to these outcomes should then be considered for replication in future implementation efforts. To conclude, measuring and evaluating implementation outcomes in cancer and exercise offers enormous potential to help conceptualise what constitutes ‘implementation success’. It paves the way to develop, and subsequently test, causal relationships between the exercise interventions, the strategies or tools used during implementation and the outcomes achieved [101]. Only then will researchers in exercise and cancer begin to unpack the implementation process and explain ‘how and why’ implementation was successful.
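Items 1) and 2) in the paragraph above are proportions. As a minimal sketch, assuming adoption is counted as the share of eligible healthcare providers who refer patients to an intervention and penetration as the share of eligible patients who are referred (one common way of operationalising Proctor et al.'s definitions, not a method prescribed by this review), both could be computed as follows. The class, function names and counts are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServiceSnapshot:
    """Hypothetical counts for one service over a fixed reporting period."""
    eligible_providers: int   # providers expected to refer to the intervention
    referring_providers: int  # providers who made at least one referral
    eligible_patients: int    # patients meeting the intervention's eligibility criteria
    referred_patients: int    # eligible patients actually referred


def proportion(numerator: int, denominator: int) -> float:
    """Return numerator / denominator after basic sanity checks."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    if not 0 <= numerator <= denominator:
        raise ValueError("numerator must lie between 0 and the denominator")
    return numerator / denominator


def adoption_rate(s: ServiceSnapshot) -> float:
    # Assumed provider-level definition: referring / eligible providers.
    return proportion(s.referring_providers, s.eligible_providers)


def penetration_rate(s: ServiceSnapshot) -> float:
    # Assumed patient-level definition: referred / eligible patients.
    return proportion(s.referred_patients, s.eligible_patients)


snapshot = ServiceSnapshot(eligible_providers=40, referring_providers=12,
                           eligible_patients=500, referred_patients=85)
print(f"adoption: {adoption_rate(snapshot):.1%}")        # adoption: 30.0%
print(f"penetration: {penetration_rate(snapshot):.1%}")  # penetration: 17.0%
```

Reporting the numerator and the denominator alongside each rate, rather than the percentage alone, would make such outcomes easier to compare across programs.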

Availability of data and materials

All data generated or analysed during this study are included in this published article and its supplementary information files.

Abbreviations

- ACSM: American College of Sports Medicine
- CERT: Consensus on Exercise Reporting Template
- CPGs: Clinical practice guidelines
- JBI: Joanna Briggs Institute
- PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses

References

World Health Organization. Global Cancer Observatory. France; 2020. https://gco.iarc.fr/. Accessed 4 Jan 2020

Bluethmann S, Mariotto A, Rowland J. Anticipating the “silver tsunami”: prevalence trajectories and comorbidity burden among older cancer survivors in the United States. Cancer Epidemiol Biomark Prev. 2016;25(7):1029–36 https://doi.org/10.1158/1055-9965.

Siegel R, Miller K, Jemal A. Cancer statistics, 2019. CA Cancer J Clin. 2019;69(1):7–34 https://doi.org/10.3322/caac.21551.

Bourke L, Smith D, Steed L, et al. Exercise for men with prostate cancer: a systematic review and meta-analysis. Eur Urol. 2016;69(4):693–703 https://doi.org/10.1016/j.eururo.2015.10.047.

Fuller J, Hartland M, Maloney L, et al. Therapeutic effects of aerobic and resistance exercises for cancer survivors: a systematic review of meta-analyses of clinical trials. Br J Sports Med. 2018;52(20):1311 https://doi.org/10.1136/bjsports-2017-098285.

Cormie P, Zopf E, Zhang X, et al. The impact of exercise on cancer mortality, recurrence, and treatment-related adverse effects. Epidemiol Rev. 2017;39(1):71–92 https://doi.org/10.1093/epirev/mxx007.

Juvet L, Thune I, Elvsaas I, et al. The effect of exercise on fatigue and physical functioning in breast cancer patients during and after treatment and at 6 months follow-up: a meta-analysis. Breast. 2017;33:166–77 https://doi.org/10.1016/j.breast.2017.04.003.

Meneses-Echávez J, González-Jiménez E, Ramírez-Vélez R. Effects of supervised exercise on cancer-related fatigue in breast cancer survivors: a systematic review and meta-analysis. BMC Cancer. 2015;15(1):77 https://doi.org/10.1186/s12885-015-1069-4.

Craft L, VanIterson E, Helenowski I, et al. Exercise effects on depressive symptoms in cancer survivors: a systematic review and meta-analysis. Cancer Epidemiol Biomark Prev. 2011;21(1):3–19 https://doi.org/10.1158/1055-9965.epi-11-0634.

Mishra S, Scherer R, Snyder C, et al. Exercise interventions on health-related quality of life for people with cancer during active treatment. Cochrane Database Syst Rev. 2012;15(8) https://doi.org/10.1002/14651858.CD008465.pub2.

Speck RM, Courneya KS, Mâsse LC, Duval S, Schmitz KH. An update of controlled physical activity trials in cancer survivors: a systematic review and meta-analysis. J Cancer Surviv. 2010;4(2):87–100 https://doi.org/10.1007/s11764-009-0110-5.

Institute of Medicine Committee on Standards for Developing Trustworthy Clinical Practice Guidelines. In: Graham R, Mancher M, Miller Wolman D, Greenfield S, Steinberg E, editors. Clinical practice guidelines we can trust. Washington (DC): National Academies Press (US); 2011.

Campbell K, Winters-Stone K, Wiskemann J, et al. Exercise guidelines for cancer survivors: consensus statement from international multidisciplinary roundtable. Med Sci Sports Exerc. 2019;51(11):2375–90 https://doi.org/10.1249/mss.0000000000002116.

Cormie P, Atkinson M, Bucci L, Cust A, Eakin E, Hayes S, et al. Clinical Oncology Society of Australia position statement on exercise in cancer care. Med J Aust. 2018;209(4):184–7 https://doi.org/10.5694/mja18.00199.

Segal R, Zwaal C, Green E, Tomasone JR, Loblaw A, Petrella T, et al. Exercise for people with cancer: a clinical practice guideline. Curr Oncol. 2017;24(1):40–6 https://doi.org/10.3747/co.24.3376.

Hayes S, Newton R, Spence R, et al. The exercise and sports science Australia position statement: exercise medicine in cancer management. J Sci Med Sport. 2019;22(11):1175–99 https://doi.org/10.1016/j.jsams.2019.05.003.

Campbell A, Stevinson C, Crank H. The BASES expert statement on exercise and cancer survivorship. J Sports Sci. 2012;30(9):949–52 https://doi.org/10.1080/02640414.2012.671953.

Cabana M, Rand C, Powe N, et al. Why don’t physicians follow clinical practice guidelines? A framework for improvement. JAMA. 1999;282(15):1458–65 https://doi.org/10.1001/jama.282.15.1458.

Schmitz K, Campbell A, Stuiver M, et al. Exercise is medicine in oncology: engaging clinicians to help patients move through cancer. CA Cancer J Clin. 2019;69(6):468–84 https://doi.org/10.3322/caac.21579.

Exercise is Medicine. Moving through cancer. 2019. https://www.exerciseismedicine.org/support_page.php/moving-through-cancer/. Accessed 25 Nov 2019.

Brownson R, Fielding J, Green L. Building capacity for evidence-based public health: reconciling the pulls of practice and the push of research. Annu Rev Public Health. 2018;39(1):27–53 https://doi.org/10.1146/annurev-publhealth-040617-014746.

Green L. Making research relevant: If it is an evidence-based practice, where's the practice-based evidence? Fam Pract. 2008;25(suppl_1):i20–i4 https://doi.org/10.1093/fampra/cmn055.

Bauer M, Damschroder L, Hagedorn H, et al. An introduction to implementation science for the non-specialist. BMC Psychol. 2015;3(1):32 https://doi.org/10.1186/s40359-015-0089-9.

Singal A, Higgins P, Waljee A. A primer on effectiveness and efficacy trials. Clin Transl Gastroenterol. 2014;5(1):e45 https://doi.org/10.1038/ctg.2013.13.

Czosnek L, Rankin N, Zopf E, Richards J, Rosenbaum S, Cormie P. Implementing exercise in healthcare settings: the potential of implementation science. Sports Med. 2019;50(1):1–14 https://doi.org/10.1007/s40279-019-01228-0.

May C, Johnson M, Finch T. Implementation, context and complexity. Implement Sci. 2016;11(1):141 https://doi.org/10.1186/s13012-016-0506-3.

McCrabb S, Lane C, Hall A, Milat A, Bauman A, Sutherland R, et al. Scaling-up evidence-based obesity interventions: a systematic review assessing intervention adaptations and effectiveness and quantifying the scale-up penalty. Obes Rev. 2019;20(7):964–82 https://doi.org/10.1111/obr.12845.

Welsh B, Sullivan C, Olds D. When early crime prevention goes to scale: a new look at the evidence. Prev Sci. 2010;11(2):115–25 https://doi.org/10.1007/s11121-009-0159-4.

Thorpe KE, Zwarenstein M, Oxman AD, Treweek S, Furberg CD, Altman DG, et al. A pragmatic-explanatory continuum indicator summary (PRECIS): a tool to help trial designers. J Clin Epidemiol. 2009;62(5):464–75 https://doi.org/10.1016/j.jclinepi.2008.12.011.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health Ment Health Serv Res. 2011;38(2):65–76 https://doi.org/10.1007/s10488-010-0319-7.

Linnan L, Steckler A. Process evaluation for public health interventions and research: an overview. In: Process evaluation for public health interventions and research. San Francisco: Jossey-Bass; 2002. p. 1–23.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):53 https://doi.org/10.1186/s13012-015-0242-0.

Shepherd HL, Geerligs L, Butow P, Masya L, Shaw J, Price M, et al. The elusive search for success: defining and measuring implementation outcomes in a real-world hospital trial. Front Public Health. 2019;7:293 https://doi.org/10.3389/fpubh.2019.00293.

Moher D, Liberati A, Tetzlaff J, Altman DG, The PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097 https://doi.org/10.1371/journal.pmed.1000097.

Gerke D, Lewis E, Prusaczyk B, et al. Implementation outcomes. St. Louis: Washington University; 2017. https://sites.wustl.edu/wudandi/. Accessed 30 Oct 2018

Covington K, Hidde M, Pergolotti M, et al. Community-based exercise programs for cancer survivors: a scoping review of practice-based evidence. Support Care Cancer. 2019;27(12):4435–50 https://doi.org/10.1007/s00520-019-05022-6.

Santa Mina D, Sabiston C, Au D, et al. Connecting people with cancer to physical activity and exercise programs: a pathway to create accessibility and engagement. Curr Oncol. 2018;25(2):14–162 https://doi.org/10.3747/co.25.3977.

Covidence. Better systematic review management. Melbourne; 2020. https://www.covidence.org/. Accessed 4 Jan 2020

Caspersen C, Powell K, Christenson G. Physical activity, exercise, and physical fitness: definitions and distinctions for health-related research. Public Health Rep. 1985;100(2):126–31.

Lennox L, Maher L, Reed J. Navigating the sustainability landscape: a systematic review of sustainability approaches in healthcare. Implement Sci. 2018;13(1):27 https://doi.org/10.1186/s13012-017-0707-4.

Matthews L, Kirk A, MacMillan F, Mutrie N. Can physical activity interventions for adults with type 2 diabetes be translated into practice settings? A systematic review using the RE-AIM framework. Transl Behav Med. 2014;4(1):60–78 https://doi.org/10.1007/s13142-013-0235-y.

Slade S, Cup E, Feehan L, et al. Consensus on exercise reporting template (CERT): modified delphi study. Phys Ther. 2016;96(10):1514–24 https://doi.org/10.2522/ptj.20150668.

Moola S, Munn Z, Tufanaru C, et al. Chapter 7: Systematic reviews of etiology and risk. In: Aromataris E, Munn Z, editors. Joanna Briggs Institute reviewer's manual. The Joanna Briggs Institute; 2017.

Hong Q, Fàbregues S, Bartlett G, et al. The mixed methods appraisal tool (MMAT) version 2018 for information professionals and researchers. Educ Inf. 2018;34(4):285–91 https://doi.org/10.3233/EFI-180221.

Bjerre E, Brasso K, Jørgensen A, et al. Football compared with usual care in men with prostate cancer (FC prostate community trial): a pragmatic multicentre randomized controlled trial. Sports Med. 2018;49(1):145–58 https://doi.org/10.1007/s40279-018-1031-0.

Brown J, Hipp M, Shackelford D, et al. Evaluation of an exercise-based phase program as part of a standard care model for cancer survivors. Transl J ACSM. 2019;4(7):45–54 https://doi.org/10.1249/TJX.0000000000000082.

Cheifetz O, Park Dorsay J, Hladysh G, MacDermid J, Serediuk F, Woodhouse LJ. CanWell: meeting the psychosocial and exercise needs of cancer survivors by translating evidence into practice. Psycho-Oncology. 2014;23(2):204–15 https://doi.org/10.1002/pon.3389.

Culos-Reed N, Dew M, Zahavich A, et al. Development of a community wellness program for prostate cancer survivors. Transl J ACSM. 2018;3(13):97–106 https://doi.org/10.1249/tjx.0000000000000064.

Irwin M, Cartmel B, Harrigan M, et al. Effect of the Livestrong at the YMCA exercise program on physical activity, fitness, quality of life, and fatigue in cancer survivors. Cancer. 2017;123(7):1249–58 https://doi.org/10.1002/cncr.30456.

Kimmel G, Haas B, Hermanns M. The role of exercise in cancer treatment: bridging the gap. Transl J ACSM. 2016;1(17):152–8 https://doi.org/10.1249/tjx.0000000000000022.

Mackenzie M, Carlson L, Ekkekakis P, et al. Affect and mindfulness as predictors of change in mood disturbance, stress symptoms, and quality of life in a community-based yoga program for cancer survivors. Evid Based Complement Alternat Med. 2013;2013:419496–13 https://doi.org/10.1155/2013/419496.

Noble M, Russell C, Kraemer L, Sharratt M. UW WELL-FIT: the impact of supervised exercise programs on physical capacity and quality of life in individuals receiving treatment for cancer. Support Care Cancer. 2012;20(4):865–73 https://doi.org/10.1007/s00520-011-1175-z.

Rajotte E, Yi J, Baker K, et al. Community-based exercise program effectiveness and safety for cancer survivors. J Cancer Surviv. 2012;6(2):219–28 https://doi.org/10.1007/s11764-011-0213-7.

Santa Mina D, Au D, Brunet J, et al. Effects of the community-based Wellspring cancer exercise program on functional and psychosocial outcomes in cancer survivors. Curr Oncol. 2017;24(5):284–94 https://doi.org/10.3747/co.23.3585.

Wurz A, Capozzi L, Mackenzie M, Danhauer S, Culos-Reed N. Translating knowledge: a framework for evidence-informed yoga programs in oncology. Int J Yoga Therapy. 2013;23(2):85–90 https://doi.org/10.17761/ijyt.23.2.5533m8376l3q4484.

Beidas R, Paciotti B, Barg F, et al. A hybrid effectiveness-implementation trial of an evidence-based exercise intervention for breast cancer survivors. J Natl Cancer Inst Monogr. 2014;2014(50):338–45 https://doi.org/10.1093/jncimonographs/lgu033.

Bjerre E, Petersen T, Jørgensen A, et al. Community-based football in men with prostate cancer: 1-year follow-up on a pragmatic, multicentre randomised controlled trial. PLoS Med. 2019;16(10):e1002936 https://doi.org/10.1371/journal.pmed.1002936.

Bultijnck R, Van Ruymbeke B, Everaert S, et al. Availability of prostate cancer exercise rehabilitation resources and practice patterns in Belgium: results of a cross-sectional study. Eur J Cancer Care. 2018;27(1):e12788 https://doi.org/10.1111/ecc.12788.

Cheifetz O, Dorsay J, MacDermid J. Exercise facilitators and barriers following participation in a community-based exercise and education program for cancer survivors. J Exerc Rehabil. 2015;11(1):20–9 https://doi.org/10.12965/jer.150183.

Culos-Reed N, Dew M, Shank J, et al. Qualitative evaluation of a community-based physical activity and yoga program for men living with prostate cancer: Survivor perspectives. Glob Adv Health Med. 2019;8:2164956119837487 https://doi.org/10.1177/2164956119837487.

Dalzell M, Smirnow N, Sateren W, et al. Rehabilitation and exercise oncology program: translating research into a model of care. Curr Oncol. 2017;24(3):e191–e8 https://doi.org/10.3747/co.24.3498.

Dolan L, Barry D, Petrella T, et al. The cardiac rehabilitation model improves fitness, quality of life, and depression in breast cancer survivors. J Cardiopulm Rehabil Prev. 2018;38(4):246–52 https://doi.org/10.1097/hcr.0000000000000256.

Haas B, Kimmel G. Model for a community-based exercise program for cancer survivors: taking patient care to the next level. J Oncol Pract. 2011;7(4):252–6 https://doi.org/10.1200/JOP.2010.000194.

Haas B, Kimmel G, Hermanns M, et al. Community-based FitSTEPS for Life exercise program for persons with cancer: 5-year evaluation. J Oncol Pract. 2012;8(6):320–4 https://doi.org/10.1200/JOP.2012.000555.

Heston A, Schwartz A, Justice-Gardiner H, et al. Addressing physical activity needs of survivors by developing a community-based exercise program: Livestrong® at the YMCA. Clin J Oncol Nurs. 2015;19(2):213–7 https://doi.org/10.1188/15.CJON.213-217.

Kirkham A, Klika R, Ballard T, et al. Effective translation of research to practice: hospital-based rehabilitation program improves health-related physical fitness and quality of life of cancer survivors. J Natl Compr Cancer Netw. 2016;14(12):1555–62 https://doi.org/10.6004/jnccn.2016.0167.

Kirkham A, Van Patten C, Gelmon K, et al. Effectiveness of oncologist-referred exercise and healthy eating programming as a part of supportive adjuvant care for early breast cancer. Oncologist. 2018;23(1):105–15 https://doi.org/10.1634/theoncologist.2017-0141.

Kirkham A, Bland K, Wollmann H, et al. Maintenance of fitness and quality-of-life benefits from supervised exercise offered as supportive care for breast cancer. J Natl Compr Cancer Netw. 2019;17(6):695–702 https://doi.org/10.6004/jnccn.2018.7276.

Leach H, Danyluk J, Culos-Reed N. Design and implementation of a community-based exercise program for breast cancer patients. Curr Oncol. 2014;21(5):267–71 https://doi.org/10.3747/co.21.2079.

Leach H, Danyluk J, Nishimura K, et al. Evaluation of a community-based exercise program for breast cancer patients undergoing treatment. Cancer Nurs. 2015;38(6):417–25 https://doi.org/10.1097/ncc.0000000000000217.

Leach H, Danyluk J, Nishimura K, et al. Benefits of 24 versus 12 weeks of exercise and wellness programming for women undergoing treatment for breast cancer. Support Care Cancer. 2016;24(11):4597–606 https://doi.org/10.1007/s00520-016-3302-3.

Marker R, Cox-Martin E, Jankowski C, et al. Evaluation of the effects of a clinically implemented exercise program on physical fitness, fatigue, and depression in cancer survivors. Support Care Cancer. 2018;26(6):1861–9 https://doi.org/10.1007/s00520-017-4019-7.

Muraca L, Leung D, Clark A, Beduz MA, Goodwin P. Breast cancer survivors: taking charge of lifestyle choices after treatment. Eur J Oncol Nurs. 2011;15(3):250–3 https://doi.org/10.1016/j.ejon.2009.12.001.

Rogers L, Goncalves L, Martin M, et al. Beyond efficacy: a qualitative organizational perspective on key implementation science constructs important to physical activity intervention translation to rural community cancer care sites. J Cancer Surviv. 2019;13(4):537–46 https://doi.org/10.1007/s11764-019-00773-x.

Santa Mina D, Alibhai S, Matthew A, et al. Exercise in clinical cancer care: a call to action and program development description. Curr Oncol. 2012;19(3):9–144 https://doi.org/10.3747/co.19.912.

Santa Mina D, Au D, Auger L, et al. Development, implementation, and effects of a cancer center's exercise-oncology program. Cancer. 2019;125(19):3437–47 https://doi.org/10.1002/cncr.32297.

Sherman K, Heard G, Cavanagh K. Psychological effects and mediators of a group multi-component program for breast cancer survivors. J Behav Med. 2010;33(5):378–91 https://doi.org/10.1007/s10865-010-9265-9.

Speed-Andrews A, Stevinson C, Belanger L, et al. Predictors of adherence to an Iyengar yoga program in breast cancer survivors. Int J Yoga. 2012;5(1):3–9 https://doi.org/10.4103/0973-6131.91693.

Swenson K, Nissen M, Knippenberg K, et al. Cancer rehabilitation: outcome evaluation of a strengthening and conditioning program. Cancer Nurs. 2014;37(3):162–9 https://doi.org/10.1097/NCC.0b013e318288d429.

Van Gerpen R, Becker B. Development of an evidence-based exercise and education cancer recovery program. Clin J Oncol Nurs. 2013;17(5):539–43 https://doi.org/10.1188/13.CJON.539-543.

Dennett A, Peiris C, Shields N, et al. Exercise therapy in oncology rehabilitation in Australia: a mixed-methods study. Asia Pac J Clin Oncol. 2016;13(5):e515–e27 https://doi.org/10.1111/ajco.12642.

Brownson R, Colditz G, Proctor E. Dissemination and implementation research in health: translating science to practice. 2nd ed. New York: Oxford University Press; 2017.

Bauer MS, Kirchner J. Implementation science: what is it and why should I care? Psychiatry Res. 2019;283:112376 https://doi.org/10.1016/j.psychres.2019.04.025.

Santa Mina D, Petrella A, Currie KL, et al. Enablers and barriers in delivery of a cancer exercise program: the Canadian experience. Curr Oncol. 2015;22(6):374–84 https://doi.org/10.3747/co.22.2650.

Nadler MB, Bainbridge D, Fong AJ, Sussman J, Tomasone JR, Neil-Sztramko SE. Moving Cancer Care Ontario’s exercise for people with cancer guidelines into oncology practice: using the theoretical domains framework to validate a questionnaire. Support Care Cancer. 2019;27(6):1965–8 https://doi.org/10.1007/s00520-019-04689-1.

Baker R, Camosso-Stefinovic J, Gillies C, Shaw EJ, Cheater F, Flottorp S, et al. Tailored interventions to address determinants of practice. Cochrane Database Syst Rev. 2015;4 https://doi.org/10.1002/14651858.CD005470.pub3.

Forsetlund L, Bjørndal A, Rashidian A, Jamtvedt G, O'Brien MA, Wolf FM, et al. Continuing education meetings and workshops: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2009;2 https://doi.org/10.1002/14651858.CD003030.pub2.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the expert recommendations for implementing change (ERIC) project. Implement Sci. 2015;10(1):21 https://doi.org/10.1186/s13012-015-0209-1.

Smith JD, Li DH, Rafferty MR. The implementation research logic model: a method for planning, executing, reporting, and synthesizing implementation projects. Implement Sci. 2020;15(1):84 https://doi.org/10.1186/s13012-020-01041-8.

Palinkas LA, Aarons GA, Horwitz S, Chamberlain P, Hurlburt M, Landsverk J. Mixed method designs in implementation research. Admin Pol Ment Health. 2011;38(1):44–53 https://doi.org/10.1007/s10488-010-0314-z.

Wolfenden L, Foy R, Presseau J, Grimshaw JM, Ivers NM, Powell BJ, et al. Designing and undertaking randomised implementation trials: guide for researchers. BMJ. 2021;372:m3721 https://doi.org/10.1136/bmj.m3721.

Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci. 2013;8(1):117 https://doi.org/10.1186/1748-5908-8-117.

Pullen T, Bottorff J, Sabiston C, et al. Utilizing RE-AIM to examine the translational potential of Project MOVE, a novel intervention for increasing physical activity levels in breast cancer survivors. Transl Behav Med. 2018;9(4):646–55 https://doi.org/10.1093/tbm/iby081.

White S, McAuley E, Estabrooks P, et al. Translating physical activity interventions for breast cancer survivors into practice: an evaluation of randomized controlled trials. Ann Behav Med. 2009;37(1):10–9 https://doi.org/10.1007/s12160-009-9084-9.

Jankowski CM, Ory MG, Friedman DB, Dwyer A, Birken SA, Risendal B. Searching for maintenance in exercise interventions for cancer survivors. J Cancer Surviv. 2014;8(4):697–706 https://doi.org/10.1007/s11764-014-0386-y.

Eakin E, Hayes S, Haas M, et al. Healthy living after cancer: a dissemination and implementation study evaluating a telephone-delivered healthy lifestyle program for cancer survivors. BMC Cancer. 2015;15(1):992 https://doi.org/10.1186/s12885-015-2003-5.

Cormie P, Lamb S, Newton R, et al. Implementing exercise in cancer care: study protocol to evaluate a community-based exercise program for people with cancer. BMC Cancer. 2017;17(1):103 https://doi.org/10.1186/s12885-017-3092-0.

McNeely M, Sellar C, Williamson T, et al. Community-based exercise for health promotion and secondary cancer prevention in Canada: protocol for a hybrid effectiveness-implementation study. BMJ Open. 2019;9(9):e029975 https://doi.org/10.1136/bmjopen-2019-029975.

Glasgow R, Vogt T, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–7 https://doi.org/10.2105/ajph.89.9.1322.

Indig D, Lee K, Grunseit A, Milat A, Bauman A. Pathways for scaling up public health interventions. BMC Public Health. 2017;18(1):68 https://doi.org/10.1186/s12889-017-4572-5.

Lewis C, Boyd M, Walsh-Bailey C, et al. A systematic review of empirical studies examining mechanisms of implementation in health. Implement Sci. 2020;15(1):21 https://doi.org/10.1186/s13012-020-00983-3.

National Institute for Health Research. Guidance on applying for feasibility studies; 2017. p. 1. https://www.nihr.ac.uk/documents/nihr-research-for-patient-benefit-rfpb-programme-guidance-%20%20on-applying-for-feasibility-studies/20474#Definition_of_feasibility_vs._pilot_studies. Accessed 10 Jan 2021

Gartlehner G, Hansen RA, Nissman D, et al. Criteria for distinguishing effectiveness from efficacy trials in systematic reviews. Agency for Healthcare Research and Quality (US). 2006; (12). https://www.ncbi.nlm.nih.gov/books/NBK44024/. Accessed 10 Jan 2021.

National Institutes of Health. Dissemination and implementation research in health (R01 clinical trial optional). https://grants.nih.gov/grants/guide/pa-files/PAR-19-274.html#:~:text=Implementation%20research%20is%20defined%20as,outcomes%20and%20benefit%20population%20health. Accessed 10 Jan 2021.

Acknowledgements

Not applicable.

Funding

SR is funded by an NHMRC Early Career Fellowship (APP1123336). The funding body had no role in the study design, data collection, data analysis, interpretation or manuscript development.

Author information

Contributions

PC, SR, LC, NR, EZ and JR developed the review concept and design. LC and JR completed article screening with EZ. LC completed data analysis with review from EZ and NR. The first draft of the manuscript was written by LC. All authors commented on previous versions of the manuscript and provided critical review. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

PC is the Founder and Director of EX-MED Cancer Ltd., a not-for-profit organisation that provides exercise medicine services to people with cancer. PC is the Director of Exercise Oncology EDU Pty Ltd., a company that provides fee-for-service training courses to upskill exercise professionals in delivering exercise to people with cancer.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Supplementary Table 1. Search Strategy.

Additional file 3: Supplementary Table 3. Quality Assessment.

Additional file 4: Supplementary Table 4. Excluded Studies.

Additional file 5: Supplementary Table 5. Summary of results: Implementation Outcomes.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Czosnek, L., Richards, J., Zopf, E. et al. Exercise interventions for people diagnosed with cancer: a systematic review of implementation outcomes. BMC Cancer 21, 643 (2021). https://doi.org/10.1186/s12885-021-08196-7

DOI: https://doi.org/10.1186/s12885-021-08196-7