Abstract

We present analytical results for all master integrals for massless three-point functions, with one off-shell leg, at four loops. Our solutions were obtained using differential equations and direct integration techniques. We review the methods and provide additional details.

1 Introduction

Form factors are important quantities in quantum chromodynamics (QCD), \(\mathcal{N}=4\) super Yang–Mills theory, and other theories. In the simplest cases, a single operator is inserted in a matrix element between two massless states, and all propagating particles are massless. Such form factors can be constructed from vertex diagrams with two legs on the light cone, \(p_1^2=p_2^2=0\), such that the corresponding Feynman integrals depend on one mass scale, \(q^2=(p_1+p_2)^2\). In this paper, we consider such integrals in dimensional regularization, where \(d=4-2\epsilon \) is the number of space-time dimensions used to regularize ultraviolet, soft, and collinear divergences.

Two-loop corrections to form factors were computed more than 30 years ago [1,2,3,4]. The first three-loop result was presented in Ref. [5] and later confirmed in Ref. [6]. Analytic results for the three-loop form factor integrals were presented in Ref. [7]. In Ref. [8], the results of Ref. [7] were used to compute form factors at three loops up to order \(\epsilon ^2\), i.e., transcendental weight 8, as a preparation for future four-loop calculations. These integrals and form factors have been confirmed in Ref. [9].

Indeed, four-loop calculations have taken place since then. The first analytical results for the four-loop form factors were obtained for the quark form factor in QCD in the large-\(N_c\) limit, where only planar diagrams contribute [10], and for the fermionic contributions [11]. All the planar master integrals for the massless four-loop form factors were evaluated in [12]. The \(n_f^2\) results were obtained in [13], and the complete contribution from color structure \((d_F^{abcd})^2\) was evaluated in [14] and confirmed in [15]. For the quark and gluon form factors, all corrections with three or two closed fermion loops were calculated in [16, 17], respectively, including also the singlet contributions. The fermionic corrections to quark and gluon form factors in four-loop QCD were evaluated in [18]. The four-loop \(\mathcal{N}=4\) SYM Sudakov form factor was analyzed in [19] and analytically evaluated in [20]. The complete analytical evaluation of the quark and gluon form factors in four-loop QCD was presented in [21]. The four-loop corrections to the Higgs–bottom vertex within massless QCD were evaluated in [22].

In these calculations, our two competing groups applied two methods of evaluating master integrals: the method of differential equations and the evaluation by integrating over Feynman parameters. The first one was applied in [10, 11, 13, 14], and the second one in [12, 15,16,17]. Our two groups then joined forces and applied both methods in collaboration [18, 20,21,22]. The solutions for the four-loop master integrals were a crucial building block for these form factor calculations. In the above references, complete weight 8 information has been given only for a subset of the non-planar master integrals. The main purpose of the current paper is to provide full results also for the remaining master integrals.

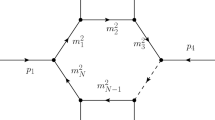

In general, four-loop form factors with one off-shell and two massless legs can involve integrals belonging to 100 reducible and irreducible top-level topologies with 12 lines, as shown in Fig. 1, or sub-topologies thereof. In this work, we present analytical solutions for the \(\epsilon \) expansion of all master integrals in these topologies. The results are given in terms of zeta values and multiple zeta values (MZVs), and are complete at least up to and including weight 8, as required for N\({}^4\)LO calculations.

The remainder of this paper is organized as follows. In Sect. 2, we describe how we applied the method of differential equations. In a subsection, we describe peculiarities of using integration by parts (IBP) to perform reduction to master integrals. In Sect. 3, we describe how we applied the method of analytical integration over Feynman parameters. In a subsection, we discuss a dedicated reduction scheme for integrals with many dots. In Sect. 4, we comment on the explicit solutions for the master integrals that we provide in the ancillary files. In Sect. 5, we compare the two basic methods that we used.

2 Evaluation with differential equations

2.1 Two-leg off-shell integrals, reduction to \(\epsilon \)-form

The four-loop form-factor Feynman integrals that we evaluated have the following form:

where \(D_{i}\) are propagators and/or numerators raised to some integer powers \(\nu _i\) (indices). For the calculations presented in this section, we choose the last six indices for numerators, while the first 12 indices can be positive, i.e., they can correspond to propagators. For example, for one of the most complicated diagrams for four-loop form factors, the propagators and numerators can be chosen as

where \(p_1\) and \(p_2\) denote the outgoing momenta of the two massless legs.

According to the strategy of IBP reduction, which was discovered more than 40 years ago [23, 24], the evaluation of integrals of a given family can be reduced to the evaluation of the corresponding master integrals. In the next subsection, we describe how we did this in the case of four-loop form-factor integrals.

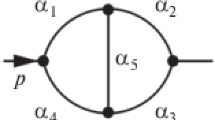

Let us now turn to the method of differential equations [25,26,27,28]. Since \(p_1^2=p_2^2=0\), the integrals of our family depend only on one variable \(q^2=(p_1+p_2)^2\), which we often set to \(-1\) in intermediate expressions, since this dependence is easily recovered from dimensional analysis (namely, \(G_{\nu _1,\ldots ,\nu _{18}} \propto \left( q^2\right) ^{2d-\sum \nu _i}\)). In order to make use of the differential equations method, we follow the approach of Ref. [29]. We consider the family of the same topology as in Fig. 2, now assuming that \(p_2^2=xq^2\), and derive the differential system in the variable x. In what follows, we will use the terms two-scale and one-scale master integrals to refer to the master integrals of the family with generic x and with \(x=0\), respectively. At the point \(x=1\), we have \(p_2^2=q^2\), and we can assume not only that \(p_1^2=0\) but also that \(p_1=0\), as can be clearly seen, for example, from the Feynman parametric representation. The corresponding propagator-type master integrals were evaluated in an \(\epsilon \) expansion more than 10 years ago [30] and are known even up to weight 12 [31]. The idea is that the differential equations allow us to transfer data from the simple point \(x=1\) to the desired point \(x=0\). The more involved IBP reduction of the family with \(p_2^2\ne 0\) appears to be a fair price for the advantages of the differential equations method. The system of differential equations for the vector of master integrals \({\varvec{j}}\) has the usual form of

\[ \partial _x {\varvec{j}} = M(\epsilon ,x)\,{\varvec{j}}, \]

where \(M(\epsilon ,x)\) is a matrix, rational in x and \(\epsilon \).

For the family in Fig. 2, the size of the system (the number of two-scale master integrals) is as large as 374, but even larger systems appear in other families. While not immediately obvious, a far more important characteristic of the complexity is the position of singular points in x. Since our final results for the one-scale master integrals involve only non-alternating MZV sums, one might speculate that the only singular points of the emerging differential systems are \(x=0,\ 1,\ \infty \). And indeed, this is the case for many families that we considered. However, a few systems also contained singularities at other points. In particular, the system for the family in Fig. 2 contained singularities for

Systems for other families contained only some of these singularities.

Note that the singularity at \(x=1/4\) is especially troublesome as it lies on the segment [0, 1], exactly on the integration path of the evolution operator connecting the point of interest, \(x=0\), and the point \(x=1\), where the boundary conditions are fixed. The general solution of the differential system does have a branch point at \(x=1/4\). From physical and technical arguments, this point cannot be a branch point of the specific solution on the first sheet (but it is a branch point on other sheets). This requirement provides yet another check of the correctness of our procedure. We may check the absence of a branch point by comparing the results obtained by shifting the integration contour slightly up and down from the real axis, which corresponds to the change \(x\rightarrow x+i0\) and \(x\rightarrow x-i0\), respectively. As the coefficients of the differential system are all real, those two prescriptions are related by complex conjugation. Therefore, the absence of a branch point at \(x=1/4\) can be established by checking that either of these two prescriptions leads to real-valued results. For definiteness, we will assume that the integration contour is shifted up.

In order to reduce the system to \(\epsilon \) form, we use the algorithm of Refs. [28, 32] (see also Sect. 8 in Ref. [33]) as implemented in Libra [34]. We had to introduce algebraic letters

In this way, we reduce the system to the following form:

where

and \(S_k\) are some constant matrices. Note that there is no variable simultaneously rationalizing \(x_1,\ x_2\), and \(x_3\), as they correspond to more than three square-root branching points: \(\{0,\infty ,4,1/4\}\) (see Ref. [32]). However, it appears that the weights depending on \(x_1,\ x_2\), and \(x_3\) never appear together in one iterated integral. More precisely, the iterated integrals which appear in our results fall into one (or a few) of the following four families:

1. those containing letters in the alphabet \(\{w_1,w_2,w_3\}\),
2. those containing letters in the alphabet \(\{w_1,w_3,w_6\}\),
3. those containing letters in the alphabet \(\{w_1,w_3,w_4,w_7\}\),
4. those containing letters in the alphabet \(\{w_1,w_3,w_5,w_8\}\).

The integrals of the first two families are readily expressed via Goncharov’s polylogarithms with indices \(0,\pm 1\) (for the second family, we have to pass to \(x_1\)). For the integrals of the third family, we pass to the variable \(y_3=\frac{\sqrt{3}}{2x_3}\). When x varies from 0 to 1, \(y_3\) also varies from 0 to 1. Taking into account that

we obtain the result for the integrals of the third family in terms of Goncharov’s polylogarithms with indices \(0,\pm 1,\pm i\sqrt{3}\).

The last family is the most complicated. We introduce the variable \(y_2=P_8=\frac{1}{2}+i x_2\). Taking into account our prescription \(x\rightarrow x+i0\), we establish that \(y_2\) follows the path C depicted in Fig. 3 when x varies from 0 to 1.

We replace this path by the equivalent path \(C^\prime \) depicted in the same figure. Since

we obtain the result for the iterated integrals of this family in terms of Goncharov’s polylogarithms with indices \(0,1,e^{\pm i\pi /3}, 1/2\) and argument \(e^{i\pi /3}\). We can normalize the argument to 1 by using a homogeneity property of polylogarithms (as usual, we must exercise a certain care when dealing with polylogarithms with trailing zeros).
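
For orientation, the scaling property of Goncharov’s polylogarithms is commonly stated as follows. This is the standard textbook form, written in the \(G({\varvec{a}}|x)\) notation and with an auxiliary scale \(\lambda \) introduced here for illustration; its presentation may differ from the authors' own equation:

\[ G(\lambda a_1,\ldots ,\lambda a_n\,|\,\lambda x) = G(a_1,\ldots ,a_n\,|\,x), \qquad a_n\ne 0,\ \lambda \ne 0, \]

so that, choosing \(\lambda =1/x\),

\[ G(a_1,\ldots ,a_n\,|\,x) = G\!\left( \frac{a_1}{x},\ldots ,\frac{a_n}{x}\,\Big |\,1\right) , \qquad a_n\ne 0. \]

With \(x=e^{i\pi /3}\), the indices \(0,1,e^{\pm i\pi /3},1/2\) are mapped to \(0,\ e^{-i\pi /3},\ 1,\ e^{-2i\pi /3},\ \frac{1}{2}e^{-i\pi /3}\). The restriction \(a_n\ne 0\) is the reason for the care with trailing zeros: since \(G(0,\ldots ,0\,|\,x)=\ln ^n x/n!\) is not scale invariant, trailing zeros are typically extracted with shuffle relations before rescaling.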

Finally, the integrals of the fourth family are expressed via Goncharov’s polylogarithms with indices \(0,1,e^{-i\pi /3}, e^{-2i\pi /3}, \frac{1}{2}e^{-i\pi /3}\) and unit argument.

To summarize, we have the following correspondence:

- Families 1 and 2: integrals are expressed via \(G({\varvec{a}}|1)\) with \(a_k\in \{0,\pm 1\}\) (alternating MZVs).
- Family 3: integrals are expressed via \(G({\varvec{a}}|1)\) with \(a_k\in \{0,\pm 1,\pm i\sqrt{3}\}\).
- Family 4: integrals are expressed via \(G({\varvec{a}}|1)\) with \(a_k\in \{0,1,e^{-i\pi /3},e^{-2i\pi /3}, \frac{1}{2}e^{-i\pi /3}\}\).

Note that the polylogarithms for the fourth family are not real-valued, so, as we explained above, the check of “real-valuedness” of the integrals from the fourth family provides a nontrivial check of our setup.

After obtaining the coefficients of the \(\epsilon \) expansion of all one-scale master integrals in terms of the above-mentioned polylogarithms, we used the PSLQ algorithm [35] to express these coefficients in terms of simple, non-alternating MZVs.
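
The recognition step can be illustrated on a small scale. The following is a minimal sketch, not the authors' setup: it assumes the mpmath library and uses a single weight-3 constant, \(\mathrm{Li}_3(-1)\), as a stand-in for a polylogarithm value with indices in \(\{0,\pm 1\}\). The helper name `recognize` and the chosen basis are hypothetical.

```python
from mpmath import mp, polylog, zeta, pi, log, pslq

mp.dps = 40  # working precision in decimal digits

def recognize(value, basis):
    """Search for integers c with c[0]*value + sum(c[i+1]*basis[i]) = 0.

    Returns the integer vector (normalized so that c[0] > 0), or None
    if PSLQ finds no relation that involves `value`.
    """
    relation = pslq([value] + list(basis), maxcoeff=10**4, maxsteps=10**5)
    if relation is None or relation[0] == 0:
        return None
    if relation[0] < 0:
        relation = [-c for c in relation]
    return relation

# Stand-in for a numerically evaluated polylogarithm: Li_3(-1).
value = polylog(3, -1)

# Candidate weight-3 constants; only zeta(3) should survive.
basis = [zeta(3), pi**2 * log(2), log(2)**3]

relation = recognize(value, basis)
print(relation)
```

The known identity \(\mathrm{Li}_3(-1)=-\frac{3}{4}\zeta _3\) corresponds to the integer relation \(4\,\mathrm{Li}_3(-1)+3\,\zeta _3=0\), with vanishing coefficients for the constants involving \(\ln 2\). At weight 8 the basis is much larger, so considerably higher working precision is needed for a reliable fit.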

2.2 IBP reduction of two-scale integrals

There are many public and private codes to perform IBP reduction. In this work, we applied the public code FIRE [36, 37] and the private code Finred by A. von Manteuffel. The IBP reduction of one-scale four-loop form-factor integrals is rather complicated, and the IBP reduction of two-scale integrals when applying the method of differential equations is even more complicated. An important point is to reveal a minimal set of master integrals. It is also important to find a basis such that the only denominators in IBP reductions are either of the form \(a d+b\), where d is the space-time dimension, and a and b are rational numbers, or simple polynomials depending only on kinematic invariants and/or masses. Otherwise, we refer to factors in denominators as bad. To get rid of bad denominators, i.e., to turn to a basis in which no bad denominators appear, one can apply the public code described in Ref. [38] (see also Ref. [39]).

The presence of bad denominators can significantly complicate the IBP reduction. In some cases, it is not possible to get rid of bad denominators. Two examples of such a situation were found in Ref. [40] in the context of five-loop massless propagators. It turned out that there was a hidden relation involving four master integrals with 11 positive indices from four partially overlapping sectors.

For four-loop massless form factors, a similar situation takes place at the level of nine positive indices. This hidden relation is relevant for two one-scale families, one of which is the family corresponding to the graph of Fig. 2,

where all terms from lower sectors (of levels less than nine) are omitted, and dots in the indices mean that the last six indices are zero. The complete relation can be found in a file attached to this paper. We have derived this relation by running FIRE with two different options (with no_presolve and without it; this option turns off partial solving of IBPs before index substitutions and thus leads to a reduction in another direction), so that it is clear that this relation is a consequence of IBP relations. This relation has previously been depicted diagrammatically in [15], where it had been derived with Finred, using integration-by-parts identities generated from seed integrals in a common parent topology.

Using the same strategy, we have derived a hidden relation also for the two-scale integrals of this family. A file with this relation is also attached to the paper. It has the same form as Eq. (2.10) at level nine, but the contribution from lower levels is different and depends on x. In fact, this relation should transform into the corresponding relation in the one-scale case in the limit \(x \rightarrow 0\), but to see this explicitly is more complicated in comparison to the derivation described above. In each of the two cases, the additional relation is used to reduce the number of the master integrals. In the new basis, all the bad denominators successfully disappear.

Let us mention, for completeness, that relations between a current set of the master integrals can be revealed with the help of various symmetries. This procedure is usually included in codes that solve IBP relations. An explicit example, together with a discussion of various ways of looking for extra relations between master integrals, can be found in [41] in the context of the master integrals needed for the computation of the lepton anomalous magnetic moment at three loops.

For the IBP reduction of one-scale integrals, both our groups applied modular arithmetic (for early discussions of such techniques, see e.g. [42, 43]). One of our groups used the private code Finred by A. von Manteuffel, which was the first code to solve IBP relations with the help of modular arithmetic, and another group used FIRE. For the IBP reduction of two-scale integrals appearing within the method of differential equations, we applied FIRE, also with modular arithmetic. We first performed rational reconstruction to transition from modular arithmetic to rational numbers. Then after fixing d or x, we ran Thiele reconstruction [44] to obtain a rational function of the other variable. Since we have a good basis, the denominators factor into a function of d and a function of x. Hence, the worst possible denominator of the coefficients of the master integrals, i.e., the least common multiple of all occurring denominators, is obtained by multiplying the univariate factors. Knowing the worst denominator, we could multiply the functions being reconstructed by it and perform an iterative Newton–Newton reconstruction [44], i.e., apply two Newton reconstructions with respect to two variables.
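
The first step of this chain, rational reconstruction of a number from its image modulo a prime, can be sketched as follows. This is a generic textbook variant based on the extended Euclidean algorithm, not the implementation used in FIRE or Finred; the function names and the prime \(2^{31}-1\) are illustrative choices.

```python
from fractions import Fraction
from math import gcd, isqrt

def rational_reconstruct(image, modulus):
    """Find n/d with n = image*d (mod modulus) and |n|, |d| <= sqrt(modulus/2).

    Runs the extended Euclidean algorithm on (modulus, image) and stops
    at the first remainder below the bound. Returns None on failure.
    """
    bound = isqrt(modulus // 2)
    r_prev, r_cur = modulus, image % modulus
    t_prev, t_cur = 0, 1
    # Invariant: r_cur = t_cur * image (mod modulus).
    while r_cur > bound:
        q = r_prev // r_cur
        r_prev, r_cur = r_cur, r_prev - q * r_cur
        t_prev, t_cur = t_cur, t_prev - q * t_cur
    if t_cur == 0 or abs(t_cur) > bound or gcd(r_cur, abs(t_cur)) != 1:
        return None
    return Fraction(r_cur, t_cur)  # Fraction moves the sign to the numerator

def image_mod(fraction, modulus):
    """Image of a rational number in the prime field Z/modulus."""
    inverse = pow(fraction.denominator, -1, modulus)
    return fraction.numerator * inverse % modulus

prime = 2**31 - 1
for target in (Fraction(-7, 12), Fraction(355, 113), Fraction(0)):
    assert rational_reconstruct(image_mod(target, prime), prime) == target
```

When the numerator or denominator exceeds the bound, the reconstruction fails or returns a wrong candidate. In practice one therefore combines images from several primes (Chinese remainder theorem) until the reconstructed value stabilizes.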

3 Evaluation by integration over Feynman parameters

3.1 Finite integrals, analytical integration

Perhaps the most straightforward way to solve a Feynman integral is the direct integration of its Feynman parametric representation. What we wish to obtain is the Laurent expansion of the integral in the regulator \(\epsilon \). We find it convenient to work with integrals which are finite for \(\epsilon \rightarrow 0\), such that we can expand the integrand in \(\epsilon \) and then perform the integrations for the Laurent coefficients. It has been shown in [45, 46] that one can always express an arbitrary (divergent) Feynman integral as a linear combination of a basis of “quasi-finite” integrals, which have convergent Feynman parameter integrations for \(\epsilon \rightarrow 0\). Requiring also the \(\Gamma \) pre-factor involving the superficial degree of divergence to be finite, one can also choose completely finite integrals for \(\epsilon \rightarrow 0\) [9]. In this construction, the finite integrals may live in higher dimensions and may have “dots,” i.e., higher powers of propagators (see [47, 48] for generalizations of quasi-finite integrals). A systematic list of such finite integrals can be obtained easily with the program Reduze 2 [49]. Expressing a divergent Feynman integral in terms of a basis of finite integrals, all poles in \(\epsilon \) become explicit in the coefficients of this rewriting. The explicit linear relations needed to express an integral in terms of finite basis integrals are obtained from dimension-shift identities and integration-by-parts reductions, which will be discussed in more detail below.
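
The strategy of expanding a finite integrand in \(\epsilon \) and integrating coefficient by coefficient can be illustrated with a toy example. The sketch below is not one of the integrals of this paper: it assumes the one-dimensional integral \(\int _0^1 dx\, x^{-\epsilon }(1-x)^{-\epsilon }=\Gamma (1-\epsilon )^2/\Gamma (2-2\epsilon )\), which is finite at \(\epsilon =0\), and it integrates numerically with mpmath rather than analytically.

```python
from mpmath import mp, quad, log, gamma, pi, factorial

mp.dps = 25  # working precision in decimal digits

def expansion_coefficient(n):
    """Coefficient of eps**n: the integral of (-ln x - ln(1-x))**n / n!."""
    def integrand(x):
        return (-(log(x) + log(1 - x))) ** n / factorial(n)
    return quad(integrand, [0, 1])

# Integrate the first three coefficients of the expanded integrand.
coefficients = [expansion_coefficient(n) for n in range(3)]

def closed_form(eps):
    """Exact result for the toy integral, valid for eps < 1."""
    return gamma(1 - eps) ** 2 / gamma(2 - 2 * eps)

# Compare the truncated series with the closed form at a small eps.
eps = mp.mpf("0.01")
truncated = sum(c * eps**n for n, c in enumerate(coefficients))
print(coefficients)
print(truncated, closed_form(eps))
```

Expanding the closed form gives \(1+2\epsilon +(4-\zeta _2)\epsilon ^2+\mathcal {O}(\epsilon ^3)\), so the transcendental weight of the coefficients grows with the order in \(\epsilon \), as in the full calculation.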

The integrands of the finite integrals can easily be expanded in \(\epsilon \). In general, the integrations of the coefficients can lead to complicated special mathematical functions and may be difficult to perform. A given Feynman parametric representation for some Feynman integral can have the property of “linear reducibility.” For linearly reducible integrals, there exists an order of integrations such that each integration can be performed in terms of multiple polylogarithms in an algorithmic way. Thanks to the algorithms of [50,51,52] and their implementation in HyperInt [53], a suitable order of integration can be determined with a polynomial reduction algorithm. If a representation is not linearly reducible, it is sometimes still possible to perform a rational transformation of the Feynman parameters such that the resulting new parametrization is linearly reducible. For integrals resulting in elliptic polylogarithms or more complicated structures, no linearly reducible representation exists. Currently, no algorithm is known to determine unambiguously whether a linearly reducible parameterization exists for a given Feynman integral.

In our case, we have been able to find linearly reducible parametric representations for almost all topologies, with the only exception being two trivalent (top-level) topologies depicted as the last two entries in figure 1 of [15]. For these two topologies, the method of differential equations allows us to obtain the solutions from \(\epsilon \)-factorized differential equations and integrations in terms of multiple polylogarithms, as explained in Sect. 2. We cannot exclude the possibility that a linearly reducible representation could also be found for these topologies. We emphasize that in practice, direct integrations allowed us to derive complete analytical solutions through to transcendental weight 7 for all Feynman integrals, even in those topologies which are not linearly reducible. For the latter, this was achieved by a suitable choice of basis integrals and a high-precision numerical evaluation plus constant fitting for a single remaining integral [54]. The key observation was that certain Feynman integrals involve only the \(\mathcal {F}\) polynomial but not the \(\mathcal {U}\) polynomial at leading order in \(\epsilon \), and the \(\mathcal {F}\) polynomial alone could be rendered linearly reducible in all cases.

We performed the parametric integrations for the finite integrals with the Maple program HyperInt. While straightforward in principle, the integration generates a large number of terms at intermediate stages. Performance challenges arise due to bookkeeping tasks and greatest common divisor computations to combine coefficients of the same multiple polylogarithm. In order to obtain complete information at a given transcendental weight, depending on the choice of basis integrals, one needs a different number of terms in the epsilon expansion, and each such term may require significantly different amounts of computational resources. Usually, the first term in the \(\epsilon \) expansion is relatively inexpensive to compute, and with increasing order, the computational complexity increases substantially. For this reason, we usually start by trying out an over-complete list of candidate integrals and compute the leading term(s) of their \(\epsilon \) expansion. We then select basis integrals, whose \(\epsilon \) expansion starts with high weight and which performed well in terms of run times for the computation of the leading term(s) in \(\epsilon \). By inserting the basis change into form factor expressions, we check for unwanted weight drops due to our choice of basis integrals. For our basis choice, more difficult topologies start to contribute only at relatively high weight.

To perform the integrations at higher weight, in some cases we used a parallelized HyperInt setup on compute nodes with hundreds of gigabytes (GB) of main memory and weeks of run time. In this way, we were able to analytically calculate all Feynman integrals to weight 6, all but one to weight 7 (with the last one guessed from numerical data), and a large number of integrals to weight 8. In all cases, we checked our results with precise numerical evaluations using the program FIESTA [55, 56]. The numerical evaluations were performed for our finite integrals, which allowed for better computational performance than generic integrals.

3.2 IBP reduction of dotted integrals

Our finite integrals typically have dots and are defined in \(d=d_0-2\epsilon \) dimensions, where the reference dimension \(d_0\) in many cases is larger than 4, \(d_0=6,8,\ldots \). In order to express them in terms of integrals with \(d_0=4\) dimensions, we exploit dimension-shift identities as described e.g. in [57], which also introduces dotted integrals. In particular, we employ dimension-increasing shifts which introduce four additional dots for a four-loop integral. We establish the relation between the basis of finite integrals and a conventional basis through integration-by-parts identities, where the particular challenge lies in the reduction of the dotted integrals.

The reduction scheme is based on integration-by-parts relations in the Lee–Pomeransky representation [58,59,60]. Defining the (twisted) Mellin transform of a function \(f(x_1,\ldots ,x_N)\) as

and normalizing the integral (2.1) according to \( I_{\nu _1,\ldots ,\nu _{18}} = {\mathcal {N}}\tilde{I}_{\nu _1,\ldots ,\nu _{18}} /{\Gamma ((L+1)d/2-\nu )} \), one has

Here, \(\mathcal {U}\) and \(\mathcal {F}\) are the first and second Symanzik polynomials, respectively, \(\nu =\sum \nu _i\), \(N=18\), \(L=4\), and \(\mathcal {N}\) is a normalization constant which is not relevant in the following.

The Mellin transform (3.1) has the properties

with multi-index notation such that \(\varvec{\nu }=(\nu _1,\ldots ,\nu _N)\), \(\varvec{e}_i=(0,\ldots ,0,1,0,\ldots ,0)\) has a nonzero entry at position i, and \(\partial _i\equiv \partial /\partial x_i\). From this, it is easy to see how insertions of \(x_i\) and \(\partial _i\) translate to shifts of propagator powers. We use the shift operators

A differential operator P consisting of powers of \(x_i\) and \( \partial _i\) which annihilates \(\mathcal {G}^{-d/2}\),

generates from \(\mathcal {M}\{ P \mathcal {G}^{-d/2}\} = 0\) via the substitutions

a shift relation. In fact, every shift relation is generated in this way [60].
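
The mechanism can be made explicit in a one-variable sketch. The normalization below, with a factor \(1/\Gamma (\nu )\), is an assumption made here for illustration and may differ from the convention of the defining equation (3.1):

\[ \mathcal {M}\{f\}(\nu ) = \frac{1}{\Gamma (\nu )}\int _0^\infty dx\, x^{\nu -1} f(x). \]

Using \(\Gamma (\nu +1)=\nu \Gamma (\nu )\) for the first identity and integration by parts (with vanishing boundary terms) for the second, one finds

\[ \mathcal {M}\{x f\}(\nu ) = \nu \,\mathcal {M}\{f\}(\nu +1), \qquad \mathcal {M}\{\partial _x f\}(\nu ) = -\mathcal {M}\{f\}(\nu -1). \]

Under this assumption, an insertion of \(x\) raises the index by one and produces a factor \(\nu \), while a derivative lowers the index by one. A polynomial differential operator that annihilates the integrand therefore turns into a linear relation between integrals with shifted indices.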

To construct annihilators, we make ansätze of the form

In the following, we will restrict ourselves to at most second-order derivatives in P. The functions \(c_0(x_1,\ldots ,x_N)\), \(c_i(x_1,\ldots ,x_N)\), and \(c_{ij}(x_1,\ldots ,x_N)\) are polynomials in the Feynman parameters \(x_i\) and are determined such that (3.8) is fulfilled, which requires

Since the expressions in brackets are explicitly known, one can interpret this equation as a syzygy constraint for the unknown functions \(c_0\), \(c_i\), and \(c_{ij}\). Such syzygies can be determined algorithmically. Once the functions \(c_0\), \(c_i\), and \(c_{ij}\) are known, one can obtain the desired shift relations for generic \(\nu _1,\ldots ,\nu _N\) from (3.8) by replacing the Feynman parameters \(x_1,\ldots ,x_N\) with shift operators \(\hat{1}^+,\ldots ,\hat{N}^+\) in the arguments of \(c_0\), \(c_i\), and \(c_{ij}\):

These equation “templates” are then applied to “seed integrals” with non-negative integer insertions for the \(\nu _i\), followed by a standard “Laporta”-style reduction of these identities for the specific loop integrals. For the latter, we use the modular arithmetic and rational reconstruction methods available in Finred.

We compute the syzygies sector by sector, aiming at the reduction of integrals without irreducible numerators. While we found that annihilators of linear order in the derivatives are insufficient in some cases, annihilators of second order (involving also the \(c_{ij}\)) allowed us to generate the desired reductions. Instead of computing complete syzygy modules, we restrict ourselves in the construction of the \(c_0\), \(c_i\), and \(c_{ij}\) to a maximal degree in the Feynman parameters and employ linear algebra methods (see also [48, 61]) implemented in Finred for their computation.
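
The idea of a bounded-degree ansatz solved by linear algebra can be sketched on a toy example. The following code is an illustration only and does not reflect the Finred implementation: it assumes SymPy, restricts itself to first-order operators, and uses the hypothetical two-parameter polynomial \(\mathcal {G}=x_1+x_2+x_1x_2\) instead of a four-loop one.

```python
import sympy as sp

x1, x2, d = sp.symbols("x1 x2 d")

# Toy polynomial (an assumption for illustration): U = x1 + x2, F = x1*x2.
G = x1 + x2 + x1 * x2

# Bounded-degree ansatz: constant c0, and c1, c2 of degree <= 1.
a0 = sp.Symbol("a0")
alpha = sp.symbols("alpha0:3")
beta = sp.symbols("beta0:3")
monomials = [sp.Integer(1), x1, x2]
c0 = a0
c1 = sum(a * m for a, m in zip(alpha, monomials))
c2 = sum(b * m for b, m in zip(beta, monomials))

# (c0 + c1*d/dx1 + c2*d/dx2) G^(-d/2) = G^(-d/2-1) * constraint,
# so the operator is an annihilator iff `constraint` vanishes identically.
constraint = sp.expand(
    c0 * G - sp.Rational(1, 2) * d * (c1 * sp.diff(G, x1) + c2 * sp.diff(G, x2))
)

# Each monomial coefficient gives one linear equation for the unknowns.
equations = sp.Poly(constraint, x1, x2).coeffs()
unknowns = [a0, *alpha, *beta]
solution = sp.solve(equations, unknowns, dict=True)[0]

c0_sol, c1_sol, c2_sol = (sp.factor(c.subs(solution)) for c in (c0, c1, c2))
print(c0_sol, c1_sol, c2_sol)

# Direct check: apply the operator to G^(-d/2) and divide by G^(-d/2).
f = G ** (-d / 2)
residual = sp.expand(
    (c0_sol * f + c1_sol * sp.diff(f, x1) + c2_sol * sp.diff(f, x2)) / f
)
print(residual)
```

At this low degree, the only solution is the one-parameter family proportional to \((1+x_1)\partial _1-(1+x_2)\partial _2\), which annihilates any function of \(\mathcal {G}\). Annihilators with a nonzero \(c_0\) require a higher degree in the ansatz, and second-order operators add the unknowns \(c_{ij}\) in the same way.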

The fact that we may ignore numerators for the sector in which we construct the annihilator deserves a comment. Linear annihilators produce at most a single decrementing shift operator in each term, such that no numerators will be produced for seed integrals without numerators. This is no longer the case in the presence of a second-order \((\hat{i}^-)^2\) contribution, which can indeed lead to a subsector integral with a numerator. Interestingly, keeping also the subsector identities for a given sector, all of these auxiliary integrals can be eliminated without additional effort.

In practice, we note that for subsectors with fewer lines, integrals with rather large numbers of dots need to be reduced. On the other hand, the identities produced by the annihilator method can be reduced rather quickly. For the present calculation, we chose to reconstruct full reduction identities with full d dependence and rational numbers as coefficients, which required a large number of samples in some cases and thus non-negligible computational effort. We found this approach attractive with regard to workflow considerations, since it decoupled our experiments with different types of basis changes from the computation of the reductions. By storing intermediate reductions of integrals at the level of finite field samples and by reconstructing symbolic expressions only after assembling the desired linear combinations of integrals (e.g., for a specific basis change from finite field to conventional integrals), one can work with a considerably smaller number of samples and decrease the computational effort.

4 Results in electronic files

We provide analytical results for the complete set of all massless, four-loop, three-point master integrals with one off-shell leg at https://www.ttp.kit.edu/preprints/2023/ttp23-034/. Please see the file README for details regarding the employed conventions and for a description of the various files.

Our analytical results for the vertex integrals with one off-shell leg are given as Laurent expansions in \(\epsilon \) and allow quantities to be computed at least up to and including weight 8, in many cases up to and including weight 9. Results obtained by the method of differential equations are given in a uniformly transcendental (UT) basis (strictly speaking, the UT property is a conjecture at higher orders in \(\epsilon \)). The complete set of all master integrals is given in terms of finite integrals, which allows for easier numerical checks. In addition, we provide mappings to a more conventional “Laporta basis,” determined by a generic ordering of integrals.

We also provide auxiliary expressions for the vertex integrals with two off-shell legs, which we used to employ the method of differential equations. In particular, we define basis integrals, which lead to \(\epsilon \)-factorized differential equations. Moreover, we also provide the differential equations themselves.

For the calculation of some (physical) quantity to weight 8 using some non-UT basis, it may seem that one needs information from higher-order terms in the \(\epsilon \) expansion that are not provided here. To work around this problem, we introduced tags in the expansions representing specific unknown higher weight contributions. By expanding to sufficiently high order in \(\epsilon \) and making sure that these tags drop out in the final result, one can still use such an alternative basis.

5 Conclusion

We presented solutions for all four-loop master integrals contributing to massless vertex functions with one off-shell leg. Our results for the Laurent expansion in the dimensional regulator \(\epsilon \) are given in terms of regular and multiple zeta values and are complete up to and including at least transcendental weight 8. We provide concise definitions of all master integrals, their analytical solutions, basis transformations, and further auxiliary expressions in electronic files that can be downloaded from https://www.ttp.kit.edu/preprints/2023/ttp23-034/.

We employed two methods to obtain these results: one based on differential equations for topologies with an additional off-shell leg, and one based on direct parametric integrations of finite integrals. In a large number of cases, we employed both methods to compute integrals in the same topology. Moreover, almost all integrals were checked up to weight 7 by such redundant calculations. For the weight 6 and weight 7 contributions, we also had nontrivial checks available from the cusp and collinear anomalous dimensions extracted from the poles of different form factors. Various weight 8 contributions have been obtained using only one of the two described methods; we checked those against precise numerical evaluations with FIESTA.

We observed that it can be computationally rather inexpensive to compute lower weight contributions in the parametric integration approach, once one has a linearly reducible representation available. Furthermore, a suitable basis choice can avoid contributions from more complicated topologies at lower weight. For that reason, the weight 7 contributions could essentially be obtained from direct integrations. At weight 8, the situation is different. For two particularly complicated top-level topologies we could not even find a suitable starting point, that is, a linearly reducible representation. Moreover, for a number of other topologies, we were not successful with direct integrations due to the high computational resource demands.

Remarkably, in all these challenging cases, the differential equation approach worked well and allowed us to obtain analytical solutions. Despite the fact that one generalizes the problem by taking one of the light-like legs off-shell, the power of the method outweighed this potential drawback in practice. As a bonus, the method allowed us to arrive at uniformly transcendental basis integrals, and one can obtain results also at even higher transcendental weight if needed.
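
The link between a canonical basis and uniform transcendentality can be illustrated schematically. The following is a generic sketch of an \(\epsilon\)-form system, not the specific system solved in this work; the variable \(x\), the singular points \(x_k\), and the constant matrices \(A_k\) are placeholders introduced for illustration:

\[
\partial_x \vec{J}(x,\epsilon) = \epsilon \sum_k \frac{A_k}{x-x_k}\, \vec{J}(x,\epsilon), \qquad
\vec{J}(x,\epsilon) = \sum_{n\ge 0} \epsilon^n \vec{J}^{(n)}(x)
\quad\Longrightarrow\quad
\partial_x \vec{J}^{(n)}(x) = \sum_k \frac{A_k}{x-x_k}\, \vec{J}^{(n-1)}(x).
\]

Each order in \(\epsilon\) is obtained from the previous one by a single integration over a logarithmic kernel, so every additional order raises the transcendental weight by exactly one. In such a setup, pushing the expansion to higher weight only requires further iterations of the same step.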

Data availability

This manuscript has associated data in a data repository. [Authors’ comment: please see section 4.]

References

G. Kramer, B. Lampe, Two jet cross-section in \(e^+ e^-\) annihilation. Z. Phys. C 34, 497 (1987). https://doi.org/10.1007/BF01679868

T. Matsuura, W.L. van Neerven, Second order logarithmic corrections to the Drell–Yan cross section. Z. Phys. C 38, 623 (1988). https://doi.org/10.1007/BF01624369

T. Matsuura, S.C. van der Marck, W.L. van Neerven, The calculation of the second order soft and virtual contributions to the Drell–Yan cross-section. Nucl. Phys. B 319, 570 (1989). https://doi.org/10.1016/0550-3213(89)90620-2

T. Gehrmann, T. Huber, D. Maître, Two-loop quark and gluon form-factors in dimensional regularisation. Phys. Lett. B 622, 295 (2005). https://doi.org/10.1016/j.physletb.2005.07.019. arXiv:hep-ph/0507061

P.A. Baikov, K.G. Chetyrkin, A.V. Smirnov, V.A. Smirnov, M. Steinhauser, Quark and gluon form factors to three loops. Phys. Rev. Lett. 102, 212002 (2009). https://doi.org/10.1103/PhysRevLett.102.212002. arXiv:0902.3519

T. Gehrmann, E.W.N. Glover, T. Huber, N. Ikizlerli, C. Studerus, Calculation of the quark and gluon form factors to three loops in QCD. JHEP 06, 094 (2010). https://doi.org/10.1007/JHEP06(2010)094. arXiv:1004.3653

R.N. Lee, V.A. Smirnov, Analytic epsilon expansions of master integrals corresponding to massless three-loop form factors and three-loop g-2 up to four-loop transcendentality weight. JHEP 02, 102 (2011). https://doi.org/10.1007/JHEP02(2011)102. arXiv:1010.1334

T. Gehrmann, E.W.N. Glover, T. Huber, N. Ikizlerli, C. Studerus, The quark and gluon form factors to three loops in QCD through to \(\mathcal{O}(\epsilon ^2)\). JHEP 11, 102 (2010). https://doi.org/10.1007/JHEP11(2010)102. arXiv:1010.4478

A. von Manteuffel, E. Panzer, R.M. Schabinger, On the computation of form factors in massless QCD with finite master integrals. Phys. Rev. D 93, 125014 (2016). https://doi.org/10.1103/PhysRevD.93.125014. arXiv:1510.06758

J.M. Henn, A.V. Smirnov, V.A. Smirnov, M. Steinhauser, A planar four-loop form factor and cusp anomalous dimension in QCD. JHEP 05, 066 (2016). https://doi.org/10.1007/JHEP05(2016)066. arXiv:1604.03126

J.M. Henn, A.V. Smirnov, V.A. Smirnov, M. Steinhauser, R.N. Lee, Four-loop photon quark form factor and cusp anomalous dimension in the large-\(N_c\) limit of QCD. JHEP 03, 139 (2017). https://doi.org/10.1007/JHEP03(2017)139. arXiv:1612.04389

A. von Manteuffel, R.M. Schabinger, Planar master integrals for four-loop form factors. JHEP 05, 073 (2019). https://doi.org/10.1007/JHEP05(2019)073. arXiv:1903.06171

R.N. Lee, A.V. Smirnov, V.A. Smirnov, M. Steinhauser, The \(N_f^2\) contributions to fermionic four-loop form factors. Phys. Rev. D 96, 014008 (2017). https://doi.org/10.1103/PhysRevD.96.014008. arXiv:1705.06862

R.N. Lee, A.V. Smirnov, V.A. Smirnov, M. Steinhauser, Four-loop quark form factor with quartic fundamental colour factor. JHEP 02, 172 (2019). https://doi.org/10.1007/JHEP02(2019)172. arXiv:1901.02898

A. von Manteuffel, E. Panzer, R.M. Schabinger, Cusp and collinear anomalous dimensions in four-loop QCD from form factors. Phys. Rev. Lett. 124, 162001 (2020). https://doi.org/10.1103/PhysRevLett.124.162001. arXiv:2002.04617

A. von Manteuffel, R.M. Schabinger, Quark and gluon form factors to four-loop order in QCD: the \(N_f^3\) contributions. Phys. Rev. D 95, 034030 (2017). https://doi.org/10.1103/PhysRevD.95.034030. arXiv:1611.00795

A. von Manteuffel, R.M. Schabinger, Quark and gluon form factors in four loop QCD: the \(N_f^2\) and \(N_{q\gamma } N_f\) contributions. Phys. Rev. D 99, 094014 (2019). https://doi.org/10.1103/PhysRevD.99.094014. arXiv:1902.08208

R.N. Lee, A. von Manteuffel, R.M. Schabinger, A.V. Smirnov, V.A. Smirnov, M. Steinhauser, Fermionic corrections to quark and gluon form factors in four-loop QCD. Phys. Rev. D 104, 074008 (2021). https://doi.org/10.1103/PhysRevD.104.074008. arXiv:2105.11504

R.H. Boels, T. Huber, G. Yang, The Sudakov form factor at four loops in maximal super Yang–Mills theory. JHEP 01, 153 (2018). https://doi.org/10.1007/JHEP01(2018)153. arXiv:1711.08449

R.N. Lee, A. von Manteuffel, R.M. Schabinger, A.V. Smirnov, V.A. Smirnov, M. Steinhauser, The four-loop \(\mathcal{N}=4\) SYM Sudakov form factor. JHEP 01, 091 (2022). https://doi.org/10.1007/JHEP01(2022)091. arXiv:2110.13166

R.N. Lee, A. von Manteuffel, R.M. Schabinger, A.V. Smirnov, V.A. Smirnov, M. Steinhauser, Quark and gluon form factors in four-loop QCD. arXiv:2202.04660

A. Chakraborty, T. Huber, R.N. Lee, A. von Manteuffel, R.M. Schabinger, A.V. Smirnov et al., The \(Hb\bar{b}\) vertex at four loops and hard matching coefficients in SCET for various currents. arXiv:2204.02422

K. Chetyrkin, F. Tkachov, Integration by parts: the algorithm to calculate beta functions in 4 loops. Nucl. Phys. B 192, 159 (1981). https://doi.org/10.1016/0550-3213(81)90199-1

F. Tkachov, A theorem on analytical calculability of four loop renormalization group functions. Phys. Lett. B 100, 65 (1981). https://doi.org/10.1016/0370-2693(81)90288-4

A. Kotikov, Differential equations method: new technique for massive Feynman diagrams calculation. Phys. Lett. B 254, 158 (1991). https://doi.org/10.1016/0370-2693(91)90413-K

T. Gehrmann, E. Remiddi, Differential equations for two loop four point functions. Nucl. Phys. B 580, 485 (2000). https://doi.org/10.1016/S0550-3213(00)00223-6. arXiv:hep-ph/9912329

J.M. Henn, Multi-loop integrals in dimensional regularization made simple. Phys. Rev. Lett. 110, 251601 (2013). https://doi.org/10.1103/PhysRevLett.110.251601. arXiv:1304.1806

R.N. Lee, Reducing differential equations for multiloop master integrals. JHEP 04, 108 (2015). https://doi.org/10.1007/JHEP04(2015)108. arXiv:1411.0911

J.M. Henn, A.V. Smirnov, V.A. Smirnov, Evaluating single-scale and/or non-planar diagrams by differential equations. JHEP 03, 088 (2014). https://doi.org/10.1007/JHEP03(2014)088. arXiv:1312.2588

P.A. Baikov, K.G. Chetyrkin, Four loop massless propagators: an algebraic evaluation of all master integrals. Nucl. Phys. B 837, 186 (2010). https://doi.org/10.1016/j.nuclphysb.2010.05.004. arXiv:1004.1153

R.N. Lee, A.V. Smirnov, V.A. Smirnov, Master integrals for four-loop massless propagators up to transcendentality weight twelve. Nucl. Phys. B 856, 95 (2012). https://doi.org/10.1016/j.nuclphysb.2011.11.005. arXiv:1108.0732

R.N. Lee, A.A. Pomeransky, Normalized Fuchsian form on Riemann sphere and differential equations for multiloop integrals. arXiv:1707.07856

A. Blondel et al., Standard model theory for the FCC-ee Tera-Z stage, in Mini Workshop on Precision EW and QCD Calculations for the FCC Studies: Methods and Techniques, CERN, Geneva, Switzerland, January 12–13, 2018 (CERN, Geneva, 2019). https://doi.org/10.23731/CYRM-2019-003. arXiv:1809.01830

R.N. Lee, Libra: a package for transformation of differential systems for multiloop integrals. Comput. Phys. Commun. 267, 108058 (2021). https://doi.org/10.1016/j.cpc.2021.108058. arXiv:2012.00279

S. Arno, D.H. Bailey, H.R.P. Ferguson, Analysis of PSLQ, an integer relation finding algorithm. Math. Comput. 68(225), 351 (1999)

A.V. Smirnov, FIRE5: a C++ implementation of Feynman Integral REduction. Comput. Phys. Commun. 189, 182 (2015). https://doi.org/10.1016/j.cpc.2014.11.024. arXiv:1408.2372

A.V. Smirnov, F.S. Chuharev, FIRE6: Feynman Integral REduction with Modular Arithmetic. Comput. Phys. Commun. 247, 106877 (2020). https://doi.org/10.1016/j.cpc.2019.106877. arXiv:1901.07808

A.V. Smirnov, V.A. Smirnov, How to choose master integrals. Nucl. Phys. B 960, 115213 (2020). https://doi.org/10.1016/j.nuclphysb.2020.115213. arXiv:2002.08042

J. Usovitsch, Factorization of denominators in integration-by-parts reductions. arXiv:2002.08173

A. Georgoudis, V. Gonçalves, E. Panzer, R. Pereira, A.V. Smirnov, V.A. Smirnov, Glue-and-cut at five loops. JHEP 09, 098 (2021). https://doi.org/10.1007/JHEP09(2021)098. arXiv:2104.08272

A.V. Smirnov, V.A. Smirnov, FIRE4, LiteRed and accompanying tools to solve integration by parts relations. Comput. Phys. Commun. 184, 2820 (2013). https://doi.org/10.1016/j.cpc.2013.06.016. arXiv:1302.5885

A. von Manteuffel, R.M. Schabinger, A novel approach to integration by parts reduction. Phys. Lett. B 744, 101 (2015). https://doi.org/10.1016/j.physletb.2015.03.029. arXiv:1406.4513

T. Peraro, Scattering amplitudes over finite fields and multivariate functional reconstruction. JHEP 12, 030 (2016). https://doi.org/10.1007/JHEP12(2016)030. arXiv:1608.01902

M. Abramowitz, I.A. Stegun, Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Dover, New York, ninth Dover printing, tenth GPO printing edn. (1964)

E. Panzer, On hyperlogarithms and Feynman integrals with divergences and many scales. JHEP 03, 071 (2014). https://doi.org/10.1007/JHEP03(2014)071. arXiv:1401.4361

A. von Manteuffel, E. Panzer, R.M. Schabinger, A quasi-finite basis for multi-loop Feynman integrals. JHEP 02, 120 (2015). https://doi.org/10.1007/JHEP02(2015)120. arXiv:1411.7392

R.M. Schabinger, Constructing multi-loop scattering amplitudes with manifest singularity structure. Phys. Rev. D 99, 105010 (2019). https://doi.org/10.1103/PhysRevD.99.105010. arXiv:1806.05682

B. Agarwal, S.P. Jones, A. von Manteuffel, Two-loop helicity amplitudes for \(gg \rightarrow ZZ\) with full top-quark mass effects. JHEP 05, 256 (2021). https://doi.org/10.1007/JHEP05(2021)256. arXiv:2011.15113

A. von Manteuffel, C. Studerus, Reduze 2–distributed Feynman integral reduction. arXiv:1201.4330

F. Brown, The Massless higher-loop two-point function. Commun. Math. Phys. 287, 925 (2009). https://doi.org/10.1007/s00220-009-0740-5. arXiv:0804.1660

F. Brown, On the periods of some Feynman integrals. arXiv:0910.0114

E. Panzer, Feynman integrals and hyperlogarithms, Ph.D. thesis, Humboldt U., Berlin, Inst. Math (2015). arXiv:1506.07243

E. Panzer, Algorithms for the symbolic integration of hyperlogarithms with applications to Feynman integrals. Comput. Phys. Commun. 188, 148 (2015). https://doi.org/10.1016/j.cpc.2014.10.019. arXiv:1403.3385

B. Agarwal, A. von Manteuffel, E. Panzer, R.M. Schabinger, Four-loop collinear anomalous dimensions in QCD and N=4 super Yang–Mills. Phys. Lett. B 820, 136503 (2021). https://doi.org/10.1016/j.physletb.2021.136503. arXiv:2102.09725

A.V. Smirnov, FIESTA 4: optimized Feynman integral calculations with GPU support. Comput. Phys. Commun. 204, 189 (2016). https://doi.org/10.1016/j.cpc.2016.03.013. arXiv:1511.03614

A.V. Smirnov, N.D. Shapurov, L.I. Vysotsky, FIESTA5: numerical high-performance Feynman integral evaluation. arXiv:2110.11660

R.N. Lee, Calculating multi-loop integrals using dimensional recurrence relation and \(D\)-analyticity. Nucl. Phys. Proc. Suppl. 205–206, 135 (2010). https://doi.org/10.1016/j.nuclphysbps.2010.08.032. arXiv:1007.2256

R.N. Lee, A.A. Pomeransky, Critical points and number of master integrals. JHEP 11, 165 (2013). https://doi.org/10.1007/JHEP11(2013)165. arXiv:1308.6676

R.N. Lee, Modern techniques of multi-loop calculations, in Proceedings, 49th Rencontres de Moriond on QCD and High Energy Interactions: La Thuile, Italy, March 22–29, 2014, pp. 297–300 (2014). arXiv:1405.5616

T. Bitoun, C. Bogner, R.P. Klausen, E. Panzer, Feynman integral relations from parametric annihilators. Lett. Math. Phys. 109, 497 (2019). https://doi.org/10.1007/s11005-018-1114-8. arXiv:1712.09215

R.M. Schabinger, A new algorithm for the generation of unitarity-compatible integration by parts relations. JHEP 01, 077 (2012). https://doi.org/10.1007/JHEP01(2012)077. arXiv:1111.4220

J.A.M. Vermaseren, Axodraw. Comput. Phys. Commun. 83, 45 (1994). https://doi.org/10.1016/0010-4655(94)90034-5

D. Binosi, L. Theussl, JaxoDraw: A Graphical user interface for drawing Feynman diagrams. Comput. Phys. Commun. 161, 76 (2004). https://doi.org/10.1016/j.cpc.2004.05.001. arXiv:hep-ph/0309015

Acknowledgements

AvM and RMS gratefully acknowledge Erik Panzer for related collaborations. This research was supported by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) under Grant No. 396021762-TRR 257 “Particle Physics Phenomenology after the Higgs Discovery” and by the National Science Foundation (NSF) under Grant No. 2013859 “Multi-loop amplitudes and precise predictions for the LHC.” The work of AVS and VAS was supported by the Russian Science Foundation under Agreement No. 21-71-30003 (IBP reduction) and by the Ministry of Education and Science of the Russian Federation as part of the program of the Moscow Center for Fundamental and Applied Mathematics under Agreement No. 075-15-2022-284 (numerical checks of results for the master integrals with FIESTA). The work of RNL was supported by the Russian Science Foundation, Grant No. 20-12-00205. We acknowledge the High Performance Computing Center at Michigan State University for computing resources. The diagram in Fig. 2 was drawn with the help of Axodraw [62] and JaxoDraw [63].

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Funded by SCOAP3. SCOAP3 supports the goals of the International Year of Basic Sciences for Sustainable Development.

About this article

Cite this article

Lee, R.N., von Manteuffel, A., Schabinger, R.M. et al. Master integrals for four-loop massless form factors. Eur. Phys. J. C 83, 1041 (2023). https://doi.org/10.1140/epjc/s10052-023-12179-2

DOI: https://doi.org/10.1140/epjc/s10052-023-12179-2