Abstract

Parton distribution functions (PDFs) are an essential ingredient for theoretical predictions at colliders. Since their exact form is unknown, their handling and delivery for practical applications relies on approximate numerical methods. We discuss the implementation of PDFs based on a global interpolation in terms of Chebyshev polynomials. We demonstrate that this allows for significantly higher numerical accuracy at lower computational cost compared with local interpolation methods such as splines. Whilst the numerical inaccuracy of currently used local methods can become a nontrivial limitation in high-precision applications, in our approach it is negligible for practical purposes. This holds in particular for differentiation and for Mellin convolution with kernels that have end point singularities. We illustrate our approach for these and other important numerical operations, including DGLAP evolution, and find that they are performed accurately and fast. Our results are implemented in the C++ library ChiliPDF.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Theoretical predictions at hadron colliders require parton distribution functions (PDFs), which describe the partonic content of the colliding hadrons. PDFs are nonperturbative objects and their exact form is unknown, such that their handling and delivery in practical applications requires approximate numerical methods. Currently available tools use local interpolation over a finite grid of function values, such as splines. For example, the LHAPDF library [1], which has de facto become the standard interface with which PDFs are provided to the community, implements a cubic spline interpolation.

In this paper, we demonstrate a different approach for the numerical representation of PDFs, which is based on a global, high-order interpolation using Chebyshev polynomials, and which allows for significantly higher numerical accuracy at lower computational cost than local interpolation methods. We have implemented this approach in the C++ library ChiliPDF,Footnote 1 which is used for most of the numerical demonstrations in the following. We think, however, that the methods presented in our paper are of interest beyond their implementation in a specific software package.

There is a long history of using families of polynomials for handling PDFs or related quantities. Without any claim to completeness, let us mention a few examples. To express PDFs in terms of their Mellin moments, Bernstein polynomials were proposed in Ref. [2] and Jacobi polynomials in Ref. [3]. An explicit solution of the evolution equations was obtained in Refs. [4, 5] by expanding PDFs and splitting functions in Laguerre polynomials. More references and discussion can be found in Refs. [6,7,8]. To compute analytically the complex Mellin moments of parton luminosities, Refs. [9, 10] used an expansion in Chebyshev polynomials. The latter also appear in the parameterization of initial conditions in the PDF fits of Refs. [11,12,13,14]. We find that all these methods differ quite significantly from each other and from the method to be presented in this work.

Let us emphasize that we are not concerned here with the question of how best to parameterize input PDFs that are fitted to data, nor with the associated systematic error or bias due to the choice of parameterization. For our purposes, we consider the fitted input PDFs to be known “exactly”. Our method could be used to address this parameterization issue as well, but we leave this for future exploration.

What we are concerned with is the numerical implementation and handling of PDFs in practical applications, for instance when using PDFs evolved to some scale to obtain quantitative predictions from analytic cross section formulae or with Monte-Carlo event generators. The interpolation of PDFs comes with an inherent inaccuracy that is of purely numerical origin, similar to numerical integration errors. Such inaccuracies should at the very least be small compared to uncertainties due to physics approximations such as the perturbative expansion. But ideally, one would like such inaccuracies to be negligible or at least small enough to be of no practical concern. We will show that this goal can indeed be achieved with Chebyshev interpolation.

Several observations lead us to believe that the performance of available local interpolation methods is becoming insufficient. Generically, the relative accuracy of the interpolation provided by LHAPDF is expected (and found to be) in the ballpark of \(10^{-3}\) to \(10^{-4}\). For high-precision predictions this is already close – perhaps uncomfortably close – to the desired percent-level theoretical precision. Moreover, PDFs typically appear in the innermost layer of the numerical evaluation, whose output is then further processed. For example, they enter at the maximally differential level, which is subject to subsequent numerical integrations. Each step comes with some loss of numerical precision, which means the innermost elements should have a higher numerical accuracy than what is desired for the final result. If an integration routine becomes sensitive to numerical PDF inaccuracies, its convergence and hence the computational cost can suffer greatly, because the integrator can get distracted (or even stuck) trying to integrate numerical noise. We have explicitly observed this effect for quadrature-based integrators, which for low-dimensional integrations are far superior to Monte-Carlo integrators, but whose high accuracy and fast convergence strongly depends on the smoothness of the integrand. Another issue is that with increasing perturbative order, the convolution kernels in cross section formulae become more and more steep or strongly localized. This requires a higher numerical accuracy of the PDF, because its convolution with such kernels is sensitive not just to its value but also to its derivatives or its detailed shape. In Ref. [15], it was indeed observed that the limited numerical accuracy of PDFs provided by LHAPDF can cause instabilities in the final result.

Many applications require an accurate and fast execution of nontrivial operations on PDFs, such as taking Mellin moments, computing Mellin convolutions with various kernels, taking derivatives, and so forth. A prominent example is DGLAP evolution, which has been extensively studied in the literature, see e.g. Refs. [16,17,18,19,20], and for which there is a variety of codes that solve the evolution equations either via Mellin moments [21,22,23] or directly in x space [24,25,26,27,28]. The latter require in particular the repeated Mellin convolution of PDFs with splitting functions, in addition to interpolation in x.

Another important application is the computation of beam functions and similar quantities, which typically appear in resummation formulae. In multiscale problems, beam functions can depend on additional dynamic variables [29,30,31,32,33,34,35,36,37], in which case their evaluation necessitates fast on-the-fly evaluation of Mellin convolutions and in some cases their subsequent DGLAP evolution. A further area is the calculation of subleading power corrections, where the first and second derivatives of PDFs with respect to x are explicitly required [38,39,40,41,42,43,44]. With cubic splines, the first and second derivative respectively correspond to quadratic and linear interpolation and hence suffer from a poor accuracy. Numerical instabilities in derivatives computed from spline interpolants were for instance reported in Ref. [45].

Last but not least, for double parton distributions [46] or other multi-dimensional distribution functions, local interpolation methods become increasingly cumbersome due to the large number of variables and even larger number of distributions. By contrast, our global interpolation approach allows for a controllable numerical accuracy with reasonable memory and runtime requirements. This will be discussed in future work.

Clearly, there is always a trade-off between the numerical accuracy of a method and its computational cost, and the accuracy of different methods should be compared at similar computational cost (or vice versa). In our case, the primary cost indicator is the number of points on the interpolation grid, which controls both the number of CPU operations and the memory footprint.Footnote 2 As a result of its low polynomial order, local spline interpolation has an accuracy that scales rather poorly with the grid size, so that even a moderate increase in precision requires a substantial increase in computational effort. This can be (partially) offset by improving the performance of the implementation itself, see for example Refs. [28, 47]. Such improvements can be made for any given method, but they do not change the accuracy scaling of the method itself.

Global interpolation approximates a function over its domain by a single, high-order polynomial. On an equispaced grid, this leads to large oscillations near the edges of the interpolation interval, such that the interpolant never converges and may in fact diverge exponentially. This is well known as Runge’s phenomenon [48]. It is often misinterpreted as a problem of polynomial interpolation in general, which may be one reason why local interpolation methods such as splines tend to be preferred. What is perhaps not sufficiently appreciated [49] is the fact that Runge’s phenomenon is caused by the use of an equidistant grid and can be completely avoided by using a non-equidistant grid that clusters the grid points toward the edges of the interval. An example for this is Chebyshev interpolation, which leads to a well-convergent approximation, i.e., one that can be made arbitrarily precise by increasing the number of interpolation points. Moreover, approximation with Chebyshev polynomials on a finite interval is closely related to and thus as reliable as the Fourier series approximation for periodic functions. We find that Chebyshev interpolation for PDFs has an excellent accuracy scaling. As we will show, it easily outperforms local spline interpolation by many orders of magnitude in accuracy for the same or even lower number of grid points.

In the next section, we provide some mathematical background on Chebyshev interpolation as we require it. In Sect. 3, we present the global Chebyshev interpolation of PDFs used in ChiliPDF and compare its accuracy with local spline interpolation. We also discuss methods for estimating the numerical accuracy, as well as the basic operations of taking derivatives and integrals of PDFs. In Sects. 4 and 5, we describe the implementation and accurate evaluation of Mellin convolutions and of DGLAP evolution with ChiliPDF. We conclude in Sect. 6. In an appendix, we discuss the performance of different Runge–Kutta algorithms for solving the evolution equations.

2 Chebyshev interpolation

In this section, we give a brief account of Chebyshev interpolation, i.e. the interpolation of a function that is discretized on a grid of Chebyshev points. This includes the topics of differentiation, integration, and of estimating the interpolation accuracy. A wealth of further information and mathematical background can be found in Ref. [50].Footnote 3

Throughout this section, we consider functions of a variable t restricted to the interval \([-1, 1]\). The relation between t and the momentum fraction x of a PDF is specified in Sect. 3.

2.1 Chebyshev polynomials

The Chebyshev polynomials of the first and second kind, \(T_k(t)\) and \(U_k(t)\), are defined by

for integer \(k \ge 0\). They are related by differentiation as \(\mathrm {d}T_k (t) / \mathrm {d}t = k \,U_{k-1} (t)\), and they are bounded by \(|T_k(t)| \le 1\) and \(|U_{k}(t)| \le k+1\). The relations

hold for both \(V = T\) and \(V = U\). They show that \(T_k(t)\) and \(U_k(t)\) are indeed polynomials, which may not be immediately obvious from Eq. (1). Both families of polynomials form an orthogonal set, i.e. for \(k, m \ge 0\) they satisfy

where \(\alpha _0 = 2\) and \(\alpha _k = 1\) otherwise.

For given N, the Chebyshev points are given by

They form a descending series from \(t_0 = 1\) to \(t_N = -1\) and satisfy the symmetry property \(t_{N-j} = - t_j\). The polynomial \(T_N(t)\) assumes its maxima \(+1\) and minima \(-1\) at the Chebyshev points. We call the set of Chebyshev points a Chebyshev grid. Using Eq. (1) and expressing sines and cosines as complex exponentials, one readily derives the discrete orthogonality relations

where \(\beta _0 = \beta _N = 1/2\) and \(\beta _j = 1\) otherwise.

Notice that the density of Chebyshev points increases from the center toward the end points of the interval \([-1, 1]\). This feature is crucial to avoid Runge’s phenomenon for equispaced interpolation grids, as discussed in the introduction.

2.2 Chebyshev interpolation and Chebyshev series

We now consider the approximation of a function f(t) by a finite sum of Chebyshev polynomials \(T_{k}(t)\). Using \(T_k(t_j) = T_j(t_k)\) and the first relation in Eq. (5), one finds that the sum

with the interpolation coefficients

satisfies

In other words, \(p_N(t)\) is the unique polynomial of order N that equals the function f(t) at the \(N+1\) Chebyshev points \(t_0, \ldots , t_{N}\). We therefore call \(p_N(t)\) the Chebyshev interpolant.

A sufficiently smooth function f(t) can be expanded in the Chebyshev series

whose series coefficients

readily follow from the first orthogonality relation in Eq. (3). Substituting \(t = \cos \theta \) and using Eq. (1), we recognize in Eqs. (9) and (10) the Fourier cosine series for the function \(F(\theta ) = f(\cos \theta )\), which is periodic and even in \(\theta \). The Chebyshev series on the interval \([-1, 1]\) is thus nothing but the Fourier series of a periodic function in disguise, with the same excellent convergence properties for \(N\rightarrow \infty \). The Chebyshev series \(f_N(t)\) is not immediately useful in practice, because its coefficients can only be obtained by explicitly carrying out the integral in Eq. (10). The Chebyshev interpolant \(p_N(t)\), however, can be computed very efficiently (see below) and only requires evaluating \(f(t_j)\) at the \(N+1\) Chebyshev points. The key property of interpolating in the Chebyshev points is that the resulting interpolation coefficients \(c_k\) approach the series coefficients \(a_k\) in the limit \(N\rightarrow \infty \). Their precise relation can be found in [50, chapter 4]. It is therefore guaranteed that \(p_N(t)\) approaches \(f_N(t)\) and thus f(t) for \(N\rightarrow \infty \).

2.3 Interpolation accuracy

How accurately \(p_N(t)\) approximates the function f(t) depends on the smoothness of f and its derivatives. We give here a convergence statement that is useful for the interpolation of PDFs. For typical parameterizations, input-scale PDFs are analytic functions of the momentum fraction x for \(0< x <1\), but nonanalytic at \(x = 1\), where they behave like \((1-x)^\beta \) with noninteger \(\beta \). We anticipate that this corresponds to a behavior like \((1+t)^\beta \) at \(t= -1\) when we map an x interval onto a Chebyshev grid in t.

Suppose that on the interval \([-1,1]\) the function f and its derivatives up to \(f^{(\nu - 1)}\) with \(\nu \ge 1\) are Lipschitz continuous,Footnote 4 and that the derivative \(f^{(\nu )}\) is of bounded variation V. We recall that a function F(t) is Lipschitz continuous on \([-1,1]\) if there exists a constant C such that \(|F(s) - F(t)| \le C \,|s - t|\) for all \(s, t \in [-1,1]\). A differentiable function F(t) is of bounded variation V on \([-1,1]\) if the integral

is finite, where in the second step we have split the interval \([-1,1]\) into K subintervals with boundaries \(t_0, \ldots , t_{K+1}\) such that on each subinterval \(F'(t)\) has a definite sign. Under these conditions, one has

for all \(N > \nu \) and all \(t \in [-1,1]\). We note that the Chebyshev series has a similar convergence property, which is obtained by replacing \(p_N(t)\) with \(f_N(t)\) and 4V with 2V in Eq. (12).

For the example of a function \(f(t) = (1+t)^{n + \delta }\) with integer \(n \ge 1\) and \(0< \delta < 1\), the above statement holds with \(\nu = n\). The function and its derivatives up to \(f^{(n-1)}\) are Lipschitz continuous on \([-1,1]\). The nth derivative is proportional to \((1+t)^{\delta }\) and not Lipschitz continuous but of bounded variation. The \((n+1)\)st derivative is proportional to \((1+t)^{-1+\delta }\) and thus not of bounded variation, because it diverges at \(t= -1\).

It is important to note that Eq. (12) provides a bound on the maximal absolute interpolation error anywhere in the interval. Often the absolute interpolation error will be much smaller over most of the interval. In the vicinity of a point where f(t) goes to zero, the relative error can still remain large, and the convergence \(p_N(t) / f(t) \rightarrow 1\) for \(N \rightarrow \infty \) is in general not uniform over the full interval. We will indeed see this for the interpolation of PDFs close to zero crossings or to the end point \(x=1\).

2.4 Barycentric formula

A simple and efficient way to compute the Chebyshev interpolant is given by the barycentric formula

where \(t_j\) denotes again the Chebyshev points, and the barycentric basis functions are given by

\(b_j(t)\) is a polynomial of order N, although this is not evident from Eq. (14). The number of operations for evaluating the barycentric formula scales like N. The formula is found to be numerically stable in the interpolation interval. We note that it is not stable for extrapolating the function f(t) outside this interval [50, chapter 5].

The representation given by Eqs. (13) and (14) is a special case of the barycentric formula for the polynomial L(u) of order N that interpolates a function f(u) given on a set of \(N+1\) distinct points \(u_0, \ldots , u_N\,\):

with basis functions

\(L_N\) is called the Lagrange polynomial for the pairs of values \(\{ u_j, f(u_j) \}\). We will again use Eq. (15) below. The simple form of Eq. (14) comes from the fact that the Chebyshev points yield the very simple weights \(\lambda _i = \beta _i \,(-1)^i\,2^{n-1}/n\).

2.5 Differentiation

Given the Chebyshev interpolant \(p_N(t)\) for a function f(t), one can approximate the derivative \(f'(t) = \mathrm {d}f(t)/ \mathrm {d}t\) by the derivative \(p'_N(t)\). Note that in general \(f'\) is not equal to \(p'_N\) at the Chebyshev points. Obviously, one cannot compute the exact values of \(f'(t_j)\) from the function values \(f(t_j)\) on the grid.

The derivative \(p'_N(t)\) is a polynomial of degree \(N-1\) and thus also a polynomial of degree N (with vanishing coefficient of \(t^N\)). It is therefore identical to its own Chebyshev interpolant of order N, so we can compute it on the full interval \([-1,1]\) by the barycentric formula

To obtain the values of \(p'_N(t_j)\), we take the derivative of Eq. (6) using \(T'{\!}_k = k \,U_{k-1}\). The resulting discrete sums are easily evaluated using Eq. (1) and expressing the sine function in terms of complex exponentials. We then obtain the relation

with \(D_{0 0} = - D_{N N} = (2 N^2 + 1) /6\) and

Note that the matrix multiplication (18) maps a vector \(f(t_k) = \text { const}\) onto the zero vector, as it must be because the derivative of a constant function is zero.

Higher derivatives of the Chebyshev interpolant \(p_N(t)\) can be computed by repeated multiplication of \(f(t_k)\) with the differentiation matrix \(D_{j k}\) and subsequent application of the barycentric formula. Since each derivative reduces the degree of the interpolating polynomial by 1, the accuracy of approximating \(f^{(n)}_{}(t)\) by \(p^{(n)}_N(t)\) gradually degrades with increasing n. This loss of accuracy can be accounted for by choosing a sufficiently large order N to start with.

2.6 Integration

Using Eq. (6) together with

one readily obtains the integration rule

with weights

This is known as Clenshaw–Curtis quadrature. A detailed discussion of its accuracy (and comparison with Gauss quadrature) can be found in Ref. [50, chapter 19] and Ref. [51].

2.7 Interpolation without end points

The interpolant (6) is a sum over Chebyshev polynomials \(T_k\). Another interpolant can be obtained from the polynomials \(U_{k}\), namely

with coefficients

Using the second relation in Eq. (5) together with the equality \(\sin \theta _j\, U_{k-1}(t_j) = \sin \theta _k \, U_{j-1}(t_k)\), one finds that

This means that \(q_{N-2}(t)\) interpolates f(t) on the same Chebyshev points as \(p_{N}(t)\), with the exception of the interval end points. Correspondingly, \(q_{N-2}\) has polynomial degree \(N-2\) rather than N, as is evident from Eq. (23). We thus have an alternative approximation for f(t), which can be computed from the same discretized function values as those needed for computing \(p_N(t)\). For sufficiently large N, one may expect that \(q_{N-2}\) approximates f(t) only slightly less well than \(p_N(t)\), given that its polynomial degree is only smaller by two units. We may hence use \(|\,q_{N-2}(t) - p_{N}(t)|\) as a conservative estimate for the interpolation error \(|f(t) - p_{N}(t)|\).Footnote 5

To evaluate \(q_{N-2}(t)\), we can again use a barycentric formula, namely

The corresponding basis functions

can easily be obtained from Eq. (14) by comparing the general expressions (15) and (16) for the sets of points \(t_j\) with \(j=0, \ldots N\) or \(j=1, \ldots N-1\). We note that using the barycentric formula (26) in the full interval \([-1,1] = [t_N, t_0]\) involves an extrapolation from the interval \([t_{N-1}, t_1]\) on which \(q_{N-2}(t)\) interpolates f(t). For sufficiently large N, the extrapolation is however very modest, because \(t_{N-1} - t_{N} = t_0 - t_1 \approx \pi ^2 / (2 N^2)\).

Formulae for differentiation and integration of f(t) that use the approximant \(q_{N-2}\) are easily derived in analogy to the formulae that use \(p_N\). For differentiation, one obtains

and

with

For integration, one uses

to obtain an open integration rule

with weights

This is well known as Fejér’s second rule, see e.g. Ref. [52].Footnote 6

Using Eq. (32) to estimate the error of Clenshaw–Curtis integration (21) is similar to Gauss–Kronrod quadrature [53, 54], where for a grid with \(2 N+1\) points one has an integration rule of order \(3 N + 1\) and a Gauss rule of order \(2 N - 1\), where the latter uses a subset of the points and is used to estimate the integration uncertainty.Footnote 7 The difference in polynomial orders between the two rules is hence larger in this case than for the pair of Clenshaw–Curtis and Fejér rules, so that one may expect the error estimate in the latter case to be closer to the actual error. We shall come back to this in Sect. 3.4.

Given the Chebyshev grid (4) with \(N+1\) points for even N, one might also think of estimating the integration or interpolation accuracy by using the subgrid \(t_0\), \(t_2, \ldots t_{N-2}\), \(t_{N}\), which has \(N/2 + 1\) points and is again a Chebyshev grid. For the grids and functions we will consider in this work, this would however give a gross overestimate of the actual error, because the interpolant with \(N/2 + 1\) points is significantly worse than the one with \(N + 1\) points. By contrast, using interpolation without the end points of the original \(N + 1\) point grid, we obtain error estimates that are rather reliable, as will be shown in Sect. 3.4.

3 Chebyshev interpolation of PDFs

3.1 Interpolation strategy

To interpolate a parton distribution function f(x) in the momentum fraction x, we interpolate the function \({\tilde{f}}(x) = x f(x)\) in the variable \(u = \ln x\). For a given minimum momentum fraction \(x_0\), the interval \([x_0, 1]\) is thus mapped onto the interval \([u_0, 0]\). For reasons discussed below, we usually split the x interval into a few subintervals. On each subinterval, we perform a linear transformation from \(u = \ln x\) to \(t \in [-1,1]\) and introduce a Chebyshev grid in t, which is used to interpolate the function as described in Sect. 2. To specify the full grid, which is a conjunction of k subgrids, we use the notation

where the \(x_i\) are the subinterval boundaries and \(n_i = N_i + 1\) is the number of Chebyshev points for subgrid i. We will refer to this as an \((n_1, n_2, \ldots , n_k)\)-point grid. Note that adjacent subgrids share their end points, so that the total number of grid points is \(n_\mathrm {pts}= \sum _i n_i - (k - 1)\).

To be specific, let us consider one subgrid with \(N+1\) points \(u_0, \ldots , u_N\), which is mapped by a linear transform onto the Chebyshev grid \(t_0, \ldots , t_N\) given by Eq. (4). The corresponding grid points in x are \(x_i = e^{u_i}\). We can then interpolate the PDF using the barycentric formula

where

Here we have used that the form of the barycentric basis functions (14) remains unchanged under a linear transform of the interpolation variable. Formulae analogous to Eq. (35) can be used to interpolate functions derived from PDFs, such as their derivatives (see Sect. 3.3) or Mellin convolutions of PDFs with an integral kernel (see Sect. 4).

One reason to use subgrids concerns error propagation. As seen in Eq. (35), the interpolation of \({\tilde{f}}\) at a certain value x involves the values of \({\tilde{f}}\) on all grid points in the interpolation interval, although the weight of points close to x is higher than the weight of points far away. In an x region where \({\tilde{f}}\) is much smaller than its maximum in the interval, numerical errors from regions of large \({\tilde{f}}\) can strongly affect the interpolation accuracy. This is not much of an issue in our tests below, where \({\tilde{f}}\) is computed from an analytic expression, but it does become important when interpolating a PDF that has been evolved to a higher scale and is thus affected by numerical errors from the solution of the DGLAP equations.

So on one hand, the accuracy depends on the behavior of the interpolated function on each subgrid. On the other hand, using multiple subgrids for a fixed total number \(n_\mathrm {pts}\) of points decreases the polynomial degree of the interpolant on each subgrid, and the accuracy quickly degrades when the polynomial degree becomes too small. Hence, for given \(n_\mathrm {pts}\) and \(x_0\), there is a certain range for the number of subgrids that give the best performance for typical PDFs. We find that the optimum is to take 2 or 3 subgrids for the values of \(n_\mathrm {pts}\) and \(x_0\) used in the following.

To study the accuracy of Chebyshev interpolation for typical PDFs, we consider a number of representative test functions, covering a broad range of shapes and analytic forms,

where \(y(x) = 1 - 2 \sqrt{x}\) and \(T_k\) denotes the Chebyshev polynomials defined in Eq. (1). These functions correspond to PDFs at the input scales of several common PDF sets. Specifically, we have \(f_1 = {\bar{u}}\) at NNLO for ABMP16 [55], \(f_2 = g\) at LO for MMHT2014 [14], \(f_3 = g\) at NLO for HERAPDF2.0 [56], and \(f_4 = d_v\) at NLO for JR14 [57]. We have studied several other functions, including \(x f(x) \propto x^{-0.7} \,(1-x)^{9.2}\), which decreases very steeply and behaves like a typical gluon density at high scale. The results shown in the following are representative of this more extended set of functions.

Throughout this work, the relative numerical accuracy of interpolation for a given quantity is obtained as

where the exact result is evaluated using the analytic form of the functions in Eq. (37). In most plots, we also show the exact result itself as a thick black line, which is solid (dashed) when the result is positive (negative). The relative accuracy for other numerical operations is obtained in full analogy to Eq. (38).

3.2 Interpolation accuracy and comparison with splines

We now compare Chebyshev interpolation with local interpolation. As a prominent example for a method widely used in high-energy physics calculations, we take the interpolation provided by the LHAPDF library [1], which has become a standard interface for accessing parton densities. LHAPDF offers linear and cubic splines in either x or \(\ln x\). We use cubic splines in \(\ln x\), which is the default in LHAPDF and gives the most accurate results among these options.Footnote 8 For brevity, we refer to these as “L-splines” in the following. Since spline interpolants come in a wide variety, we also consider the cubic splines used by the Interpolation command of Mathematica (versions 11 and 12) and refer to them as “M-splines”. For M-splines, both the first and second derivative of the interpolant are continuous, whilst for L-splines only the first derivative is continuous but the second is not.

The interpolation grids used in LHAPDF depend on the PDF set and cover a wide range in the minimum momentum fraction \(x_0\) and in the total number of grid points \(n_\mathrm {pts}\), as shown in Table 1 for several common PDF sets. The resulting average density of points per decade in x is \(\rho = - n_\mathrm {pts}/ \log _{10} (x_0)\) and essentially determines the numerical accuracy of the spline interpolation. For the sake of comparison, we use the grids with the smallest and the largest density among common PDF sets, which happen to be the MMHT2014 grid (\(n_\mathrm {pts}= 64\) with \(\rho = 10.7\)) and the HERAPDF2.0 grid (\(n_\mathrm {pts}= 199\) with \(\rho = 33.1\)).

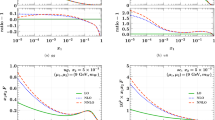

Relative interpolation accuracy (38) for the sample PDFs \(f_1(x)\) and \(f_3(x)\) in Eq. (37). The spline interpolants (green and blue) use the low-density grid with \(n_\mathrm {pts}= 64\). The Chebyshev interpolation (red) uses the grid \([10^{-6}, 0.2, 1]_{(32,\,32)}\), which has \(n_\mathrm {pts}= 63\). Here and in similar plots, the exact result that is being interpolated is shown in black (and dashed where the result is negative)

As Fig. 1, but for denser grids. The spline interpolants (green and blue) use the high-density grid with \(n_\mathrm {pts}= 199\). The Chebyshev interpolation (red) uses the grid \([10^{-6}, 0.2, 1]_{(40,\,32)}\), which has \(n_\mathrm {pts}= 71\)

In Fig. 1 we compare the spline interpolation on the low-density grid (MMHT2014 grid with \(n_\mathrm {pts}= 64\)) with Chebyshev interpolation on a (32, 32)-point grid, which has nearly the same total number of points (\(n_\mathrm {pts}= 63\)). The M-splines turn out to be more accurate than the L-splines, but this comes at the expense of them being more complex to construct. The Chebyshev interpolation achieves a significantly higher accuracy by several orders of magnitude than either of the splines. This reflects that, contrary to splines, Chebyshev interpolation uses polynomials of a high degree. In Fig. 2, we compare splines on the high-density grid (HERAPDF2.0 grid with \(n_\mathrm {pts}= 199\)) with Chebyshev interpolation on a (40, 32)-point grid with a total of \(n_\mathrm {pts}= 71\). Here, even with less than half the number of points, the Chebyshev interpolation achieves several orders of magnitude higher accuracy. This also highlights that the interpolation accuracy scales much better with the number of points for Chebyshev interpolation than for splines.

In Fig. 3 we compare the accuracy of interpolation on a single Chebyshev grid with the accuracy obtained with the two subgrids used in Fig. 2. We see that for the same total number of points, interpolation on two subgrids is more accurate, as anticipated above.

We observe in Figs. 1, 2 and 3 that the relative accuracy varies with x for Chebyshev interpolation somewhat more than it does for splines. In fact, the absolute accuracy of Chebyshev interpolation varies much less with x, as can be seen by comparing Fig. 3 with Fig. 4. The opposite holds for splines, where the relative accuracy shows less variation than the absolute one. This reflects that Chebyshev interpolation is “global” over the full interpolation interval, whilst splines are quite “local” (although the continuity conditions for neighboring splines lead to some correlation over larger distances in x).

As is seen in Figs. 1, 2 and 3, the relative accuracy degrades in the limit \(x \rightarrow 1\) for both splines and Chebyshev interpolation. This is not surprising, because in this limit the PDFs in Eq. (37) approach zero. Moreover, they behave like \((1 - x)^\beta \) with noninteger \(\beta \) and are hence nonanalytic at \(x=1\). This behavior cannot be accurately reproduced by interpolating polynomials in the vicinity of \(x=1\), whatever their degree. We emphasize that this problem concerns the relative interpolation accuracy. As seen in Fig. 4, the absolute error of interpolation does remain small for x up to 1, as is expected from Eq. (12). This is sufficient for many practical purposes, including the evaluation of convolution integrals that appear in cross sections or evolution equations. If high relative accuracy is required at large x, one needs to use subgrids with a sufficient number of points tailored to the region of interest.

Relative interpolation accuracy for the sample PDFs \(f_2(x)\) and \(f_4(x)\) in Eq. (37). The L-splines (green) use the high-density grid with \(n_\mathrm {pts}= 199\). The Chebyshev interpolations use grids with \(n_\mathrm {pts}= 71\), either with a single subgrid (blue) or with two subgrids \([10^{-6}, 0.2, 1]_{(40,\,32)}\) (red)

As Fig. 3, but showing the absolute instead of relative interpolation accuracy for xf(x), i.e. the absolute difference between the interpolated and exact results

3.3 Differentiation and integration

We now turn to Chebyshev interpolation for derivatives of the function \({\tilde{f}}(x) = x f(x)\). We use the barycentric formula (17) and its analog for the second derivative, multiplying of course with the Jacobian for the variable transformation from t to x. For comparison, we also take the numerical derivative \(( {\tilde{f}}_{\text {sp}}(x+h) - {\tilde{f}}_{\text {sp}}(x-h) ) / 2h\) of the L-spline interpolant \({\tilde{f}}_{\text {sp}}(x)\). We use a variable step size \(h = 10^{-4} \, x\), having verified that the result remains stable when decreasing h even further. The second derivative of \({\tilde{f}}_{\text {sp}}\) is evaluated in an analogous way.

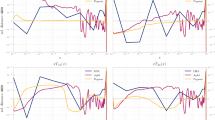

Relative accuracy of the first derivative (top) and second derivative (bottom) for Chebyshev interpolation (red) and numerical differentiation of L-splines (green). The grids used on the left have lower \(n_\mathrm {pts}\) as in Fig. 1, and those on the right have higher \(n_\mathrm {pts}\) as in Fig. 2

In Fig. 5 we consider the quantities

and the accuracy of evaluating them using the two methods just described. We see that with Chebyshev interpolants, a significantly higher accuracy is obtained. This is not surprising, since the polynomials approximating the derivatives are of a high degree in that case, whereas with cubic splines, one locally has a quadratic polynomial for the first derivative and a linear approximation for the second derivative. For the low-density grid with \(n_\mathrm {pts}= 64\), the L-splines in fact give errors around \(10\%\) for the first and \(100\%\) for the second derivative in parts of the x range. We note that on each Chebyshev grid, the absolute accuracy of the derivatives (not shown here) has a milder variation in x than the relative one, following the pattern we saw in Fig. 4 for xf(x) itself.

Relative accuracy of the truncated Mellin moments (40) with \(x_0 = 10^{-9}\) and with \(j=2\) (top) or \(j=10\) (bottom), for \(f_2(x)\) (left) or \(f_4(x)\) (right). We use Chebyshev grids with \(k=1\) to 4 subgrids and \(n_\mathrm {pts}/k\) points per subgrid, namely \([x_0, 1]\) (blue), \([x_0, 0.2, 1]\) (red), \([x_0, 10^{-3}, 0.5, 1]\) (green), and \([x_0, 10^{-6}, 10^{-3}, 0.5, 1]\) (yellow). The results for different \(n_\mathrm {pts}\) are connected by lines to guide the eye

To explore the accuracy of numerical integration, we consider the truncated Mellin moments

where the lower integration limit is the lowest point \(x_0\) of the grid. In Fig. 6, we show the dependence of the relative accuracy on the total number of grid points when using 1 to 4 subgrids of equal size. The advantage of using subgrids is clearly seen, especially for high moment index j, which emphasizes the large x region of the integrand. We find that taking 2 or 3 subgrids gives best results for a wide range of j. The disadvantage of using interpolants with lower polynomial degree becomes the dominant limiting factor with 4 or more subgrids.

3.4 Estimating the numerical accuracy

Let us now take a closer look at methods to estimate the numerical accuracy of interpolation or integration with Chebyshev polynomials. An obvious option is to re-compute the quantity of interest with an increased number of points. However, the examples in Fig. 6 show that the accuracy is not a strictly decreasing function of \(n_\mathrm {pts}\), and we see fluctuations with local minima and maxima as \(n_\mathrm {pts}\) varies by about 10 units. For a reliable estimate, one should therefore take a sufficiently large increase in \(n_\mathrm {pts}\). Increasing \(n_\mathrm {pts}\) in several steps provides an additional way of ensuring that the estimate is trustworthy.

The procedure just described can give sound accuracy estimates and is what we will adopt for assessing the accuracy of DGLAP evolution in Sect. 5.3. However, since the result must be evaluated multiple times on increasingly dense grids, this procedure can be computationally expensive, especially when the cost scales more than linearly with the number of grid points. This is indeed the case of DGLAP evolution, where the evaluation of convolution integrals involves \(n_\mathrm {pts}\times n_\mathrm {pts}\) matrices.

An alternative with much less computational overhead is to estimate the accuracy from the difference between Chebyshev interpolation and interpolation on the same grid without the end points, as described in Sect. 2. This does not require any additional function evaluations. We compare this estimate with the actual interpolation accuracy in Fig. 7. In the subinterval for large x, the estimate is very close to the true error, whilst in the subinterval for small to intermediate x it somewhat overestimates the actual numerical error. The amount of overestimation is highest close to the interval limits, which is not surprising, because this is where the two interpolation methods differ most. The corresponding comparison for differentiation is shown in Fig. 8 and follows the same pattern. We also note that the quality of the estimate for other sample PDFs is as good as or even better than in the examples shown here.

Comparison of the true relative interpolation accuracy (red) and its estimate (blue) obtained from the difference between interpolation on the Chebyshev grid with and without its end points. The (40, 32)-point grid used is the same as in Fig. 2

Comparison of true (red) and estimated (blue) interpolation accuracy as in Fig. 7, but for the first and second derivative of the sample PDF \(f_3(x)\)

Comparison of the relative integration accuracy (solid) and its estimate (dashed) for Clenshaw–Curtis (red) and Gauss–Kronrod (green) quadrature. The considered integrals are the truncated Mellin moments \(M_i(j)\) of the test functions \(f_i(x)\) with \(i=2,3\). For both methods we use two subgrids \([10^{-9}, 0.2, 1]_{(41,\,31)}\) with the appropriate Chebyshev or Gauss–Kronrod points for the integration variable \(\ln x\). In the accuracy estimates, the results for a given integration rule are first added for the two subintervals, and then the absolute difference between the involved higher-order and lower-order rules is taken

The analog for integration of the previous method is to estimate the accuracy of Clenshaw–Curtis integration from the difference to the Fejér quadrature rule.Footnote 9 In Fig. 9, we compare this estimate with the actual numerical error for the Mellin moment (40). We find that it tends to overestimate the actual error by two to three orders of magnitude, which is more than what we saw for interpolation. For comparison, we also show the relative accuracy obtained with the widely used Gauss–Kronrod rule, using the same two subintervals and the same number of grid points per subinterval as for Clenshaw–Curtis. As is commonly done, the accuracy estimate for Gauss–Kronrod is obtained from the difference between the nominal Gauss–Kronrod rule and the lower-order Gauss rule on a subset of the grid points. We see that the Gauss–Kronrod rule has the highest accuracy. In practical applications, the true result is however not known, and an integrator is only as good as its accuracy estimation. Here, the accuracy estimate for Gauss–Kronrod is much worse than our estimate for Clenshaw–Curtis.Footnote 10 As discussed at the end of Sect. 2, this is not unexpected given the comparison of polynomials orders, but it is interesting to see that the quantitative effect can be as pronounced as in our examples. We also note that the quantitative differences between the integration methods vary significantly with the shape of the integrands, as is evident from the two panels in Fig. 9.

Overall, as far as numerical accuracy estimates are concerned, using interpolation, differentiation, or integration without the end points of a Chebyshev grid can be considered as an inexpensive estimate of reasonable quality. It is not an alternative to the high-quality estimate one can achieve by a stepwise increase in the number of grid points. Its biggest advantage is thus to provide a fast and reliable indicator whether the accuracy of a result is sufficient or calls for a dedicated, more expensive investigation.

4 Mellin convolution

In this section, we consider the Mellin convolution of a PDF with an integral kernel, such as a DGLAP splitting function, a beam-function matching kernel, or a hard-scattering coefficient. In terms of the scaled PDF \({\tilde{f}}(x) = x f(x)\), we wish to compute

Here, K(z) is the scaled kernel, given e.g. by \(K(z) = z \,P(z)\) for a DGLAP splitting function P(z).Footnote 11

Let us see how Eq. (41) can be evaluated in a discretized setting. For simplicity, we first consider a single Chebyshev grid for the range \(x_0 \le x \le 1\) as described in Sect. 3.1. The convolution \((K\otimes {{\tilde{f}}})(x)\) is a function of x over the same domain as \({{\tilde{f}}}(x)\). Hence, we can interpolate it in complete analogy to \({{\tilde{f}}}(x)\) itself. For this purpose, we only need to evaluate Eq. (41) at the grid points \(x_i\),

where in the last step we used the barycentric formula (35) to interpolate \({\tilde{f}} (x_i/z)\) under the integral. Introducing the kernel matrix

we can compute the function values of \(K\otimes {{\tilde{f}}}\) on the grid by a simple matrix multiplication,

Importantly, the matrix \(K_{i j}\) only depends on the given kernel and grid but not on \({{\tilde{f}}}\). It can thus be pre-computed once using standard numerical integration routines.

In general, K(z) is a distribution rather than an ordinary function. Restricting ourselves to plus and delta distributions, we write

where \(K_\text {sing}(z)\) is the singular part of the kernel and contains plus distributions. The function \(K_\text {reg}(z)\) contains at most an integrable singularity at \(z=1\), for instance powers of \(\ln (1-z)\). The separation between \(K_{\text {sing}}\) and \(K_{\text {reg}}\) is not unique and may be adjusted as is convenient. The convolution (41) can now be written as

where

can be evaluated analytically if the separation between \(K_{\text {sing}}\) and \(K_{\text {reg}}\) is chosen appropriately. Due to the explicit subtraction of \({{\tilde{f}}}(x)\) in the first integral in Eq. (46), the plus prescription in \(K_{\text {sing}}\) can now be omitted, because

From the definition (47) one readily finds that \(K_d(x)\) diverges like \(\ln ^{n+1}(1-x)\) for \(x\rightarrow 1\) if K(z) contains a term \({\mathcal {L}}_n(1-z)\), where we write

for the logarithmic plus distribution of degree n. However, a PDF vanishes much faster for \(x\rightarrow 1\) than \(K_d(x)\) diverges, such that the product \(K_d(x) \, {\tilde{f}}(x)\) tends to zero in that limit.

Applying Eq. (46) to the evaluation of the \(K_{ij}\) matrix in Eq. (43), we have

where \(u_i\) and \(u_j\) are grid points in u, and where we have changed the integration variable to \(v = \ln z\). The plus prescription in \(K_{\text {sing}}\) can again be omitted, because the term in square brackets vanishes at least linearly in v for \(v \rightarrow 0\). Hence, all integrals can be evaluated numerically, for which we use an adaptive Gauss–Kronrod routine.

Given that \(x_N = 1\), the matrix element \(K_{N \,N}\) is ill defined if K contains a plus distribution, because \(K_d(x)\) diverges for \(x\rightarrow 1\) in that case. This does not present any problem: we already noticed that \(K_d(x) \, {\tilde{f}}(x)\rightarrow 0\) for \(x\rightarrow 1\), which translates to \(K_{N \,N} \,{\tilde{f}}_N = 0\) in the discretized version. This term may hence be omitted in the matrix multiplication (44), along with all other terms \(K_{i N} \,{\tilde{f}}_N\).

It is not difficult to generalize the preceding discussion to the case of several subgrids in u, each of which is mapped onto a Chebyshev grid. One then has a distinct set of barycentric basis functions for each subgrid. If \(u_j\) and \(u_i - v\) are not on the same subgrid, then the basis function \(b_j(u_i - v)\) in Eq. (50) must be set to zero. As a consequence, the matrix \(K_{i j}\) has blocks in which all elements are zero. Let us, however, note that \(K_{i j}\) always has nonzero elements below the diagonal \(i=j\) and is not an upper triangular matrix.

We now study the numerical accuracy of this method relative to the exact result, which we obtain by the direct numerical evaluation of the convolution integral (46). For comparison, we also show the accuracy of the result obtained by approximating \({\tilde{f}}(x)\) in Eq. (46) with its L-splines interpolant and performing the convolution integral numerically. All explicit numerical integrals in this study are performed at sufficiently high precision to not influence the results.

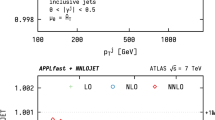

Relative accuracy of Mellin convolutions. The red curves correspond to Chebyshev interpolation for both the PDF and the convolution result, as described in the text. The green curves are obtained by performing the numerical convolution integral for the PDF interpolated with L-splines. Details about the convolution kernels are given in the text. On the left, we use the same grids as in Fig. 1, with \(n_\mathrm {pts}= 63\) for Chebyshev interpolation and \(n_\mathrm {pts}= 64\) for L-splines. On the right, we use the Chebyshev grid \([10^{-9}, 0.2, 1]_{(40, 32)}\) with \(n_\mathrm {pts}= 71\) and for L-splines a grid with \(n_\mathrm {pts}= 150\) (corresponding to the NNPDF3.1 grid in Table 1)

In Fig. 10, we show the results of this exercise for the convolution of our sample PDFs \(f_i(x)\) with kernels that have different singular behavior at the end point \(z=1\). The kernel in the top row is the leading-order DGLAP splitting function \(P_{g g}\), which contains a term \({\mathcal {L}}_0 (1-z)\). The kernel in the second row is \(\ln ^4 (1-z)\), which is the most singular term in the three-loop splitting functions \(P_{g q}\) and \(P_{q g}\) [63, 64]. In the bottom row, we take \({\mathcal {L}}_5(1-z)\) as kernel, which appears in the \(\hbox {N}^{3}\)LO corrections to the rapidity spectrum of inclusive Higgs production in pp collisions [15]. In all cases, the accuracy of our Chebyshev based method is several orders of magnitude higher than the one obtained when interpolating PDFs with L-splines and then performing the convolution integral.

The numerical inaccuracies for the convolution with \({\mathcal {L}}_5 (1-z)\) using L-splines are in the percent range over a wide range of x and much higher than those for the other kernels we looked at. This is in line with the results of Ref. [15], which finds that the convolution of \({\mathcal {L}}_5(1-z)\) with PDFs from the LHAPDF library can lead to considerable numerical instabilities. The PDFs used in that work are those of the NNPDF3.0 analysis [65], and for the sake of comparison we use the corresponding grid for spline interpolation in the plots on the right of Fig. 10.

An alternative method to evaluate the convolution is to use the Chebyshev interpolant of \({{\tilde{f}}}\) and perform the convolution integral itself numerically, analogous to what we did with L-splines above. This avoids the additional interpolation of \((K\otimes {{\tilde{f}}})\) that happens in the above kernel matrix method. The numerical accuracy of this alternative method differs slightly from the accuracy of the kernel matrix method, but it is of the same order of magnitude. Hence, the additional interpolation of \((K\otimes {{\tilde{f}}})\) does not introduce a significant penalty in accuracy beyond that of interpolating \({{\tilde{f}}}\) itself. This makes the kernel matrix method far more attractive, as it avoids the evaluation of a numerical integral for each desired \({{\tilde{f}}}\) and x.

5 DGLAP evolution

5.1 Numerical solution of DGLAP equations

In this section, we present our approach to the numerical solution of the DGLAP evolution equations [66,67,68]. Up to order \(\alpha _s^{n+1}\), they read

where \(P^{(m)}\) is the splitting function at order m. For notational simplicity, we suppress the indices for the parton type and the associated sum on the right-hand side. To solve Eq. (51), we discretize the scaled PDFs \({\tilde{f}}(x) = x f(x)\) on a Chebyshev grid and evaluate the Mellin convolutions on the right-hand side as discussed in Sect. 4. The integro-differential equation (51) then turns into a coupled system of ordinary differential equations (ODEs),

where \({\tilde{f}}_{i}(\mu ) = {\tilde{f}}(x_i,\mu )\) and \({\widetilde{P}}_{i j}^{(m)}\) is the kernel matrix for \(K(z) \equiv z P^{(m)}(z)\) as defined in Eq. (43). This system of ODEs can be solved numerically using the standard Runge–Kutta algorithm, which is described in more detail in Appendix A. This formulation as a linear system of ODEs by discretization in x is common to many approaches that solve the DGLAP equations in x space [17,18,19, 25,26,27]. By using the Chebyshev grid for the discretization in x, the resulting evolved PDF can then be Chebyshev interpolated.

The Runge–Kutta algorithm uses a discretization of the evolution variable t (not to be confused with the argument of Chebyshev polynomials in Sect. 2). It is advantageous if the function multiplying \({\tilde{f}}\) on the right-hand side of the evolution equation depends only weakly on t, because this tends to give a uniform numerical accuracy of evolution with a fixed step size in t. We therefore evolve in the variable

instead of \(\ln \mu ^2\). For the running coupling at order n, we write

and use a Runge–Kutta routine (see the end of Appendix A) to solve for \(\alpha _s^{-1}\) as a function of \(\ln \mu ^2\). At leading order, the DGLAP equation (51) then becomes

with no explicit t dependence on the right-hand side. Using \(\alpha _s = e^{-t}\), we have at NLO

where an explicit t dependence appears in the two-loop terms with \(\beta _1\) and \(P^{(1)}\). The corresponding equations at NNLO and higher are easily written down.

The pattern of mixing between quarks, antiquarks, and gluons under DGLAP evolution is well known. Its structure at NNLO is given in Refs. [63, 69] and remains the same at higher orders. To reduce mixing to a minimum, we work in the basis formed by

where \(q^\pm = q \pm {\bar{q}}\). Contrary to the often-used flavor nonsinglet combinations \(u + d - 2 s\), \(u + d + s - 3 c\), etc., the differences between consecutive flavors in Eq. (57) are less prone to a loss of numerical accuracy due to rounding effects in regions where the strange and heavy-quark distributions are much smaller than their counterparts for u and d quarks. Note that at NNLO, the combination \(\varSigma ^-\) has a different evolution kernel than the flavor differences in the second line of Eq. (57). This is due to graphs that have a t channel cut involving only gluons.

We implement the unpolarized splitting functions at LO and NLO in their exact analytic forms. For the unpolarized NNLO splitting functions, we use the approximate expressions given in Refs. [63, 69], which are constructed from a functional basis containing only the distributions \({\mathcal {L}}_0(1-z)\), \(\delta (1-z)\) and polynomials in z, 1/z, \(\ln z\), and \(\ln (1-z)\). With these parameterizations, the numerical evaluation of the kernel matrices in all channels takes about as long for \(P^{(2)}\) as it does for \(P^{(1)}\).Footnote 12

Both \(\alpha _s\) and the PDFs depend on the number \(n_F\) of quark flavors that are treated as light and included in the \(\overline{\text {MS}}\) renormalization of these quantities. For the conversion between \(\alpha _s\) for different \(n_F\), we use the matching conditions in Ref. [70] at the appropriate order. For the transition between PDFs with different \(n_F\), we implement the matching kernels given in Ref. [71], which go up to order \(\alpha _s^2\). We have verified that they agree with the independent calculation in Ref. [72]. The Mellin convolutions of matching kernels with PDFs are also evaluated as described in Sect. 4.

5.2 Validation

To validate our DGLAP evolution algorithm, we compare our results with the benchmark tables in section 1.33 of Ref. [73] and section 4.4 of Ref. [74]. These tables contain PDFs evolved to the scale \(\mu = 100 \,\mathrm {GeV}\) from initial conditions given in analytic form at \(\mu _0 = \sqrt{2} \,\,\mathrm {GeV}\). Evolution is performed at LO, NLO and NNLO, either at fixed \(n_F = 4\) or with a variable number of flavors \(n_F = 3 \dots 5\) and flavor transitions at scales \(\mu _{c}\) and \(\mu _{b}\). The default choice is \(\mu _c = m_c = \sqrt{2} \,\mathrm {GeV}\) and \(\mu _b = m_b = 4.5 \,\mathrm {GeV}\). We compare with the LO and NLO results in Ref. [73] and with the NNLO results in Ref. [74]. The latter are obtained with the same parameterized NNLO splitting functions that we use. Furthermore, the parameterization in eq. (3.5) of Ref. [22] is used for the two-loop matching kernel for the transition from a gluon to a heavy quark. We also use this parameterization for the sake of the present comparison, noting that visible differences with the benchmark results appear when we use the exact analytic form for this kernel instead.

The benchmark tables were obtained using two programs, HOPPET [25] and QCD-Pegasus [22], both run with high-precision settings. The former solves the evolution equations in x space, whilst the latter uses the Mellin moment technique. The tables give results for the evolved PDFs at 11 values of x between \(10^{-7}\) and 0.9. The results are given with five significant digits, with the exception of several sea-quark combinations at \(x=0.9\), which are very small and are given to only four significant digits.

As specified in Ref. [73], evolution with HOPPET was performed by using seventh-order polynomials for the interpolation on multiple x grids spanning the interval \([10^{-8}, 1]\) with a total of \(n_\mathrm {pts}= 1,\!170\). A uniform grid in \(\ln \mu ^2\) was used, with 220 points between \(\mu = \sqrt{2} \,\,\mathrm {GeV}\) and \(1000 \,\mathrm {GeV}\). The results were verified to be stable within a \(10^{-5}\) relative error for \(x<0.9\) by comparing them with the results of the same program with half the number of points on both the x and the \(\mu \) grids. Furthermore, they were compared with the results obtained with QCD-Pegasus.

For the comparison with our approach, we use a Chebyshev grid with 3 subgrids \([10^{-8}, 10^{-3}, 0.5, 1]_{(24,\,24,\,24)}\) which has \(n_\mathrm {pts}= 70\). The DGLAP equations are solved using a Runge–Kutta algorithm with the DOPRI8 method (see Appendix A) and a maximum step size in t of \(h = 0.1\). This amounts to about 12 Runge–Kutta steps from the starting scale to \(\mu = 100 \,\mathrm {GeV}\). Our results for the benchmark numbers (rounded as stated above) remain the same if we take 40 instead of 24 points for each subgrid in x. They also remain unchanged if we use the same Runge–Kutta method with maximum step size \(h = 0.3\) or \(h = 0.02\).

We compare our results with the numbers reported in tables 2, 3, 4 of Ref. [73] and in tables 14 and 15 of Ref. [74], which cover unpolarized evolution both with fixed \(n_F=4\) and with variable \(n_F=3 \ldots 5\). Whilst the tables also give results for evolution with different scales \(\mu _{\text {r}}\) in \(\alpha _s\) and \(\mu _{\text {f}}\) in the PDFs, we always set \(\mu _{\text {r}} = \mu _{\text {f}}\). We agree with all benchmark numbers,Footnote 13 except for those given in our Tables 2 and 3. In all cases where we differ, the differences can be attributed to the benchmark results and fall into two categories:

-

1.

The benchmark tables contain a number of entries, marked by an asterisk, for which the results of the two used codes differ in the sixth digit and give different numbers when rounded to five digits. The number with the smaller modulus is then given in the tables. In several cases, our result agrees with number with the larger modulus and in this sense agree with the benchmark results.

-

2.

We differ from the benchmark numbers in five more cases. For the two cases at NNLO, the benchmark results have typographical errors in the exponent. In the other three cases, we differ by one unit in the fifth digit. We contacted the authors of the benchmark tables, who confirmed that indeed their respective codes agree with our numbers [75].

5.3 Numerical accuracy

We now study the numerical accuracy of our method in some detail. We use \(x_0 = 10^{-7}\) and 3 subgrids. The evolution equations are solved with the DOPRI8 method (see Appendix A). To assess the accuracy, we compare three settings with different numbers of grid points and different Runge–Kutta steps h:

-

1.

\([10^{-7}, 10^{-2}, 0.5, 1]_{(24,\,24,\,24)}\) (\(n_\mathrm {pts}= 70\)) and \(h=0.1\),

-

2.

\([10^{-7}, 10^{-2}, 0.5, 1]_{(40,\,40,\,40)}\) (\(n_\mathrm {pts}= 118\)) and \(h=0.1\),

-

3.

\([10^{-7}, 10^{-2}, 0.5, 1]_{(40,\,40,\,40)}\) (\(n_\mathrm {pts}= 118\)) and \(h=0.004\).

To estimate the numerical error due to the discretization in x, we take the difference between settings 1 and 2, whereas the error due to the Runge–Kutta algorithm is estimated from the difference between settings 2 and 3. For the combined error, we take the difference between settings 1 and 3. Note that these estimates correspond to the accuracy of setting 1. We use the same initial conditions at \(\mu _0 = \sqrt{2} \,\,\mathrm {GeV}\) as in the benchmark comparison described in the previous subsection. Starting at \(n_F=3\), heavy flavors are added at \(m_c = \sqrt{2} \,\,\mathrm {GeV}\), \(m_b = 4.5 \,\mathrm {GeV}\), and \(m_t = 175 \,\mathrm {GeV}\). In the remainder of this section, we always evolve and match at NNLO.

Relative numerical accuracy of NNLO DGLAP evolution and flavor matching from \(\mu _0 = \sqrt{2} \,\,\mathrm {GeV}\) and \(n_F = 3\) to \(\mu = 100 \,\mathrm {GeV}\) and \(n_F = 5\). We use the PDFs defined in Refs. [73, 74]. The top row shows quark, antiquark, and gluon distributions (with \(f_{\text {sea}} \in \{ {\bar{u}}, {\bar{d}}, s, {\bar{s}}, c, {\bar{c}}, b, {\bar{b}} \}\)), and the bottom row shows the differences \(q - {\bar{q}}\)

As Fig. 11, but for evolution and flavor matching up to \(\mu = 10 \,\mathrm {TeV}\) and \(n_F = 6\)

The combined discretization and Runge–Kutta accuracy for evolution to \(\mu = 100 \,\mathrm {GeV}\) and \(\mu = 10 \,\mathrm {TeV}\) is shown in Figs. 11 and 12, respectively, both for the individual parton flavors g, q, \({\bar{q}}\), and for the valence combinations \(q^- = q - {\bar{q}}\). The relative accuracy is better than \(10^{-7}\) up to \(x \le 0.8\), and much better than that for smaller x. The same holds when we evolve to \(\mu = 1.01 \,m_c\) or \(\mu = 1.01 \,m_b\), where the charm or bottom distributions are very small. The relative accuracy of \(u_v\) and \(d_v\) increases towards small x. This reflects the strong decrease of these distributions in the small-x limit, as we already observed and explained in Sect. 3. The combinations \(s^-\), \(c^-\), \(b^-\), and \(t^-\) show less variation at small x, and so does their relative accuracy.

In Fig. 13 we show examples for the separate errors due to discretization and the Runge–Kutta algorithm. With our settings, the overall numerical accuracy is entirely determined by the discretization in x, whilst the inaccuracy due to the Runge–Kutta algorithm can be neglected. The Runge–Kutta accuracy for \(u_v\) is in fact determined by the machine precision except for very large x, as signaled by the noisy behavior of the error curve.

Relative accuracy of NNLO DGLAP evolution at fixed \(n_F = 5\) from \(\mu _0 \rightarrow \mu _1 \rightarrow \mu _0\) (left) and from \(\mu _1 \rightarrow \mu _0 \rightarrow \mu _1\) (right). The scales are \(\mu _0 = 2.25 \,\mathrm {GeV}\) and \(\mu _1 = 1 \,\mathrm {TeV}\), and the DOPRI8 method with maximum step size \(h = 0.1\) is used to solve the evolution equations. The same grid in x is used as for the benchmark comparison in Sect. 5.2

Relative accuracy of different Runge–Kutta methods as a function of the total number \(N_{\text {fc}} = s \,N_{\text {steps}}\) of calls to the function F(t, y) in Eq. (58). The accuracy is evaluated for the truncated Mellin moment M(j) of the sample PDF \(f_1(x)\), evolved from \(t_0 = 0.7\) to \(t_f = 1.7\). The lines connecting the points correspond to a fitted power law \((N_{\text {fc}})^{-p}\) with p reported in the legend

It is often found that backward evolution, i.e. evolution from a higher to a lower scale, is numerically unstable.Footnote 14 The structure of the DGLAP equations is such that the relative uncertainties (physical or numerical) of PDFs become larger when one evolves from a scale \(\mu _1\) to a lower scale \(\mu _0\). This property is shared by other renormalization group equations in QCD, including the one for \(\alpha _s(\mu )\). To which extent it leads to numerically unreliable results is, however, a separate question.

To study backward evolution within our method, we perform the following exercise. We start with the input PDFs of the benchmark comparison, evolve them with \(n_F = 4\) from \(\sqrt{2} \,\,\mathrm {GeV}\) to \(m_b / 2 = 2.25 \,\mathrm {GeV}\) and match to \(n_F = 5\) at that point.Footnote 15 The result is taken as initial condition for \(n_F = 5\) evolution from \(\mu _0 = 2.25 \,\mathrm {GeV}\) to \(\mu _1 = 1 \,\mathrm {TeV}\) (step 1). The result of step 1 is evolved down to \(\mu _0\) (step 2), and the result of step 2 is again evolved up to \(\mu _1\) (step 3). We thus have two quantities that are sensitive to the accuracy of backward evolution:

-

the difference between the output of step 2 and the input to step 1, which corresponds to the evolution path \(\mu _0 \rightarrow \mu _1 \rightarrow \mu _0\),

-

the difference between the output of step 3 and the input to step 2, corresponding to the evolution path \(\mu _1 \rightarrow \mu _0 \rightarrow \mu _1\).

The relative accuracy for the two evolution paths is shown in Fig. 14 for the DOPRI8 method with maximum step size \(h = 0.1\). In both cases, we find very high accuracy, in some cases near machine precision as signaled by the noisy behavior of the curves. Relative errors tend to be larger for the evolution path \(\mu _0 \rightarrow \mu _1 \rightarrow \mu _0\), but they are all below \(10^{-8}\) for \(x \le 0.9\) (except in the vicinity of zero crossings). Note that a high accuracy is also obtained for the small combinations \(s^-\), \(c^-\), and \(b^-\), which are induced by evolution at order \(\alpha _s^3\).

We have also repeated our exercise with the widely-used RK4 method and \(h = 0.03\), which requires approximately the same number of function calls as DOPRI8 with \(h = 0.1\) (see the appendix for more detail). This yields relative errors that are orders of magnitude larger for both evolution paths, but still below \(10^{-5}\) for \(x \le 0.9\) and away from zero crossings. This shows the significant benefit of using Runge–Kutta methods with high order for DGLAP evolution. Nevertheless, even with the standard RK4 method, we find no indication for numerical instabilities of backward evolution in our setup.

Finally, we note that a Runge–Kutta method with less than the 13 stages of DOPRI8 can be useful if one needs to perform evolution in many small steps, for instance when computing jet cross sections with \(\mu \sim p_T\) for a dense grid in the transverse jet momentum \(p_T\). Setting \(h \sim 0.03\), we find the DOPRI6 method with its 8 stages to be well suited for such situations.

6 Conclusions

We have shown that a global interpolation using high-order Chebyshev polynomials allows for an efficient and highly accurate numerical representation of PDFs. Compared with local interpolation methods on equispaced grids, such as splines, our method can reach much higher numerical accuracies whilst keeping the computational cost at a comparable or reduced level.

Not only interpolation, but also differentiation and integration of PDFs are numerically accurate and computationally simple in our approach. The same holds for the Mellin convolution of a PDF with an integral kernel, which can be implemented as a simple multiplication with a pre-computed matrix. In particular, high accuracy is retained if the kernel has a strong singularity at the integration end point, such as \(\bigl [ \ln ^5 (1-z) / (1-z) \bigr ]_+\) or \(\ln ^4(1-z)\). Even for such demanding applications, it is possible to achieve a numerical accuracy below \(10^{-6}\) with only about 60 to 70 grid points. Hence, with our method, numerical inaccuracies become safely negligible for practical physics calculations involving PDFs. If desired, the inaccuracy caused by the interpolation can be estimated with modest additional computational effort by using interpolation on the same grid without the end points. This yields an estimate that is appropriately conservative, but not as overly conservative as the estimate we obtain with Gauss–Kronrod quadrature in the case of integration.

By combining our Chebyshev-based interpolation with a Runge–Kutta method of high order, we obtain a very accurate implementation of DGLAP evolution. Using 70 grid points in x and evolving from \(\mu = 1.41 \,\mathrm {GeV}\) to \(\mu = 10 \,\mathrm {TeV}\), we find relative errors below \(10^{-7}\) for x between \(10^{-7}\) and 0.8. We successfully tested our implementation against the benchmark evolution tables in Refs. [73, 74]. Performing backward evolution with our method, we find no indications for any numerical instabilities.

Let us briefly point out differences between our implementation of Chebyshev interpolation and the use of Chebyshev polynomials in parameterizations of PDFs. Perhaps most striking is that the order of the highest polynomial used is much larger in our case. There are several reasons for this. First, our approach aims at keeping uncertainties due to interpolation small compared to physics uncertainties. By contrast, as argued in [13], a PDF parameterization need not be much more accurate (relative to the unknown true form) than the uncertainty resulting from fitting the PDFs to data. Second, as we have seen, polynomials up to order 20 or 40 can be handled with ease in our method, whereas PDF determinations naturally tend to limit the number of parameters to be fitted to data. Third, the detailed functional forms used in the PDF parameterizations Refs. [11,12,13,14] at a fixed scale \(\mu \) differ from each other and from the form (35) we use for interpolation at any scale \(\mu \). It may be interesting to further investigate the impact of these differences on the number of polynomials required for a satisfactory description of PDFs.

Our approach is implemented in the C++ library ChiliPDF, which is still under development and which we plan to eventually make public. To give a sense of its current performance, we note that the evaluation of the interpolated PDF takes less than a microsecond. With the settings in Sect. 5.2, NNLO DGLAP evolution from \(\mu = 1.41 \,\mathrm {GeV}\) to \(\mu = 100 \,\mathrm {GeV}\) at \(n_F = 4\) takes on the order of 10 to 20 milliseconds.Footnote 16 If significantly faster access to evolved PDFs is needed, it may be preferable to pre-compute the \(\mu \) dependence and use interpolation in both x and \(\mu \). This can easily be done using the techniques we have described.

To conclude, let us mention additional features that are already available in ChiliPDF but not discussed here: (i) polarized evolution up to NNLO for longitudinal and up to NLO for transverse and linear polarizations, with flavor matching up to NLO for all cases, (ii) combined QCD and QED evolution of PDFs with kernels up to \({\mathcal {O}}(\alpha _s \,\alpha )\) and \({\mathcal {O}}(\alpha ^2)\), with QED flavor matching up to \({\mathcal {O}}(\alpha )\), and (iii) evolution and flavor matching of double parton distributions \(F(x_1, x_2, \mathbf {y}\,; \mu _1, \mu _2)\). For the latter, accuracy goals are typically lower than for computations involving PDFs, but the possibility to work with a low number of grid points is crucial for keeping memory requirements manageable. This will be described in a future paper.

Data Availability Statement

This manuscript has no associated data or the data will not be deposited. [Authors’ comment: There is no associated data for this paper.]

Notes

Chebyshev Interpolation Library for PDFs.

Of course, the inherent complexity of a method matters as well. For instance, more complex and thereby more accurate splines tend to have a higher computational cost per grid point. However, these are secondary effects we will not focus on.

The first chapters of this book are available on https://people.maths.ox.ac.uk/trefethen/ATAP.

The following corresponds to theorem 7.2 in Ref. [50], with the condition of absolute continuity being replaced by the stronger condition of Lipschitz continuity, which we find sufficient for our purpose and easier to state.

To be precise, since \(p_N(t)\) is more accurate than \(q_{N-2}(t)\), the difference \(|\,q_{N-2}(t) - p_{N}(t)|\) is actually an estimate of the accuracy of \(q_{N-2}(t)\) and thus a conservative estimate for the accuracy of \(p_N(t)\).

This paper is also available at http://www.sam.math.ethz.ch/~waldvoge/Papers/fejer.html.

An integration rule is of order p if polynomials up to order p are integrated exactly. For odd N, the order of the Gauss–Kronrod rule is increased from \(3 N + 1\) to \(3 N + 2\), because odd polynomials are correctly integrated to zero for symmetry reasons [54]. Likewise, the order of the quadrature rules (21) and (32) for even N is increased from N to \(N+1\) and from \(N-2\) to \(N-1\), respectively.

Technically, we generate LHAPDF data files for the functions in Eq. (37) and then run the LHAPDF interpolation routines with the option logcubic. The LHAPDF data files are generated with double precision to avoid any artificial loss of numerical accuracy due to the intermediate storage step.

We always refer to what is known in the literature as Fejér’s second rule, given in Eq. (32).

Expert readers will know that it is in fact not uncommon for Gauss–Kronrod integrators to have overly conservative accuracy estimates.

We recall that \(h_1 = h_2 \otimes h_3\) implies \({\tilde{h}}_1 = {\tilde{h}}_2 \otimes {\tilde{h}}_3\) with \({\tilde{h}}_i(z) = z h_i(z)\).

Using the approximate, parameterized NNLO splitting functions is a matter of convenience and for compatibility with the benchmark results below. It is also possible to use the exact expressions, since the kernel matrices are evaluated only once.

See, however, Ref. [76] for an early study that concluded the contrary.

Note that by matching at \(\mu = m_b / 2\), we obtain nonzero values for the b and \({\bar{b}}\) distributions.

These timings are on a recent desktop or laptop computer with an Intel® Core™ i5 or i7 processor. We expect that with a dedicated performance tuning, the code can still be made faster.

Note that F(t, y) is vector valued in the discretized DGLAP equation (52). Its evaluation accounts for the bulk of the computational cost of evolution.

References

A. Buckley, J. Ferrando, S. Lloyd, K. Nordström, B. Page, M. Rüfenacht et al., LHAPDF6: parton density access in the LHC precision era. Eur. Phys. J. C 75, 132 (2015). https://doi.org/10.1140/epjc/s10052-015-3318-8arXiv:1412.7420

F.J. Yndurain, Reconstruction of the deep inelastic structure functions from their moments. Phys. Lett. B 74, 68 (1978). https://doi.org/10.1016/0370-2693(78)90062-X

G. Parisi, N. Sourlas, A simple parametrization of the \(Q^2\) dependence of the quark distributions in QCD. Nucl. Phys. B 151, 421 (1979). https://doi.org/10.1016/0550-3213(79)90448-6

W. Furmanski, R. Petronzio, A method of analyzing the scaling violation of inclusive spectra in hard processes. Nucl. Phys. B 195, 237 (1982). https://doi.org/10.1016/0550-3213(82)90398-4

R. Kobayashi, M. Konuma, S. Kumano, FORTRAN program for a numerical solution of the nonsinglet Altarelli–Parisi equation. Comput. Phys. Commun. 86, 264 (1995). https://doi.org/10.1016/0010-4655(94)00159-YarXiv:hep-ph/9409289

J. Chyla, J. Rames, On methods of analyzing scaling violation in deep inelastic scattering. Z. Phys. C 31, 151 (1986). https://doi.org/10.1007/BF01559606

J. Blümlein, M. Klein, G. Ingelman, R. Rückl, Testing QCD scaling violations in the HERA energy range. Z. Phys. C 45, 501 (1990). https://doi.org/10.1007/BF01549682

V.G. Krivokhizhin, S.P. Kurlovich, R. Lednicky, S. Nemecek, V.V. Sanadze, I.A. Savin et al., Next-to-leading order QCD analysis of structure functions with the help of Jacobi polynomials. Z. Phys. C 48, 347 (1990). https://doi.org/10.1007/BF01554485

M. Bonvini, S. Forte, G. Ridolfi, Soft gluon resummation of Drell–Yan rapidity distributions: theory and phenomenology. Nucl. Phys. B 847, 93 (2011). https://doi.org/10.1016/j.nuclphysb.2011.01.023arXiv:1009.5691

M. Bonvini, S. Marzani, Resummed Higgs cross section at \(\text{ N}^{3}\)LL. JHEP 09, 007 (2014). https://doi.org/10.1007/JHEP09(2014)007arXiv:1405.3654

J. Pumplin, Parametrization dependence and \(\Delta \chi ^2\) in parton distribution fitting. Phys. Rev. D 82, 114020 (2010). https://doi.org/10.1103/PhysRevD.82.114020arXiv:0909.5176

A. Glazov, S. Moch, V. Radescu, Parton distribution uncertainties using smoothness prior. Phys. Lett. B 695, 238 (2011). https://doi.org/10.1016/j.physletb.2010.11.025arXiv:1009.6170

A.D. Martin, A.J.T.M. Mathijssen, W.J. Stirling, R.S. Thorne, B.J.A. Watt, G. Watt, Extended parameterisations for MSTW PDFs and their effect on lepton charge asymmetry from W decays. Eur. Phys. J. C 73, 2318 (2013). https://doi.org/10.1140/epjc/s10052-013-2318-9arXiv:1211.1215

L.A. Harland-Lang, A.D. Martin, P. Motylinski, R.S. Thorne, Parton distributions in the LHC era: MMHT 2014 PDFs. Eur. Phys. J. C 75, 204 (2015). https://doi.org/10.1140/epjc/s10052-015-3397-6arXiv:1412.3989

F. Dulat, B. Mistlberger, A. Pelloni, Differential Higgs production at \(\text{ N}^{3}\)LO beyond threshold. JHEP 01, 145 (2018). https://doi.org/10.1007/JHEP01(2018)145arXiv:1710.03016

M. Miyama, S. Kumano, Numerical solution of \(Q^2\) evolution equations in a brute force method. Comput. Phys. Commun. 94, 185 (1996). https://doi.org/10.1016/0010-4655(96)00013-6arXiv:hep-ph/9508246

P.G. Ratcliffe, A matrix approach to numerical solution of the DGLAP evolution equations. Phys. Rev. D 63, 116004 (2001). https://doi.org/10.1103/PhysRevD.63.116004arXiv:hep-ph/0012376

C. Pascaud, F. Zomer, A fast and precise method to solve the Altarelli–Parisi equations in x space. arXiv:hep-ph/0104013

M. Dasgupta, G. Salam, Resummation of the jet broadening in DIS. Eur. Phys. J. C 24, 213 (2002). https://doi.org/10.1007/s100520200915arXiv:hep-ph/0110213

NNPDF Collaboration, L. Del Debbio, S. Forte, J.I. Latorre, A. Piccione, J. Rojo, Neural network determination of parton distributions: the Nonsinglet case. JHEP 03, 039 (2007). https://doi.org/10.1088/1126-6708/2007/03/039. arXiv:hep-ph/0701127

S. Weinzierl, Fast evolution of parton distributions. Comput. Phys. Commun. 148, 314 (2002). https://doi.org/10.1016/S0010-4655(02)00584-2arXiv:hep-ph/0203112

A. Vogt, Efficient evolution of unpolarized and polarized parton distributions with QCD-PEGASUS. Comput. Phys. Commun. 170, 65 (2005). https://doi.org/10.1016/j.cpc.2005.03.103arXiv:hep-ph/0408244

A. Candido, F. Hekhorn, G. Magni, EKO: evolution kernel operators. arXiv:2202.02338

A. Cafarella, C. Coriano, M. Guzzi, Precision studies of the NNLO DGLAP evolution at the LHC with CANDIA. Comput. Phys. Commun. 179, 665 (2008). https://doi.org/10.1016/j.cpc.2008.06.004arXiv:0803.0462

G.P. Salam, J. Rojo, A Higher Order Perturbative Parton Evolution Toolkit (HOPPET). Comput. Phys. Commun. 180, 120 (2009). https://doi.org/10.1016/j.cpc.2008.08.010arXiv:0804.3755

M. Botje, QCDNUM: fast QCD evolution and convolution. Comput. Phys. Commun. 182, 490 (2011). https://doi.org/10.1016/j.cpc.2010.10.020arXiv:1005.1481

V. Bertone, S. Carrazza, J. Rojo, APFEL: a PDF evolution library with QED corrections. Comput. Phys. Commun. 185, 1647 (2014). https://doi.org/10.1016/j.cpc.2014.03.007arXiv:1310.1394

V. Bertone, APFEL++: a new PDF evolution library in C++. PoS DIS2017, 201 (2018). https://doi.org/10.22323/1.297.0201. arXiv:1708.00911

M. Procura, W.J. Waalewijn, L. Zeune, Resummation of double-differential cross sections and fully-unintegrated parton distribution functions. JHEP 02, 117 (2015). https://doi.org/10.1007/JHEP02(2015)117arXiv:1410.6483

G. Lustermans, J.K.L. Michel, F.J. Tackmann, W.J. Waalewijn, Joint two-dimensional resummation in \(q_{T}\) and 0-jettiness at NNLL. JHEP 03, 124 (2019). https://doi.org/10.1007/JHEP03(2019)124arXiv:1901.03331

G. Lustermans, J.K.L. Michel, F.J. Tackmann, Generalized threshold factorization with full collinear dynamics. arXiv:1908.00985