Abstract

The frequency domain spline adaptive filter (FDSAF) effectively reduces the computational complexity of spline adaptive filtering. However, the FDSAF algorithm cannot suppress non-Gaussian impulsive noise. To suppress non-Gaussian impulsive noise while keeping a comparable running time, this paper develops a frequency domain spline adaptive filter based on the maximum correntropy criterion (MCC), called the frequency domain maximum correntropy criterion spline adaptive filter (FDSAF-MCC). The bound on the learning rate for convergence of the proposed algorithm is also studied. Simulations verify the effectiveness of the proposed algorithm in suppressing non-Gaussian impulsive noise: compared with the existing frequency domain spline adaptive filter, the proposed algorithm performs better.

Introduction

Because certain hardware components in dedicated electronic circuits are inherently nonlinear, nonlinear modeling and system identification are important. The power amplifier (PA), for example, is typically operated in its nonlinear region to improve power efficiency. The nonlinear spline adaptive filter (SAF) is widely used in nonlinear modeling because of its simple structure. The basic SAF architectures include the Wiener spline filter [1], the Hammerstein spline filter [2], and the Sandwich 1 and Sandwich 2 SAF [3]. The steady-state performance of SAF has been analyzed in [4,5]. Other work, building on the Wiener spline filter, improves the SAF algorithm through the choice of cost function. Guan and Li proposed a normalized SAF algorithm (SAF-NLMS) that improves the stability of SAF [6]. Liu et al. proposed a sign normalized least mean square (SNLMS) algorithm based on SAF and added a variable step-size scheme, giving SAF-VSS-SNLMS [7]. Introducing momentum into stochastic gradient descent yields SAF-ARC-MMSGD [8], which targets impulsive environments. The weight update of the normalized subband spline adaptive filter is derived from the principle of minimum disturbance [9]. Introducing the maximum versoria criterion (MVC) into the nonlinear spline adaptive filter yields SAF-MVC-VMSGD [10]. The logarithmic hyperbolic cosine (LHC) has been used as a cost function for nonlinear system identification [11,12]. Building on the LHC cost function, the exponential hyperbolic cosine function (EHCF) [13] and the generalized hyperbolic secant [14] have been proposed as cost functions for adaptive filtering. All of these SAF-type algorithms operate in the time domain, so their computational complexity grows with the filter order. To address this problem, the frequency domain spline adaptive filter (FDSAF) was proposed [15]; it effectively reduces the computational complexity.

However, FDSAF is derived by minimising the squared instantaneous error, so it cannot suppress non-Gaussian impulsive noise. The robustness of the maximum correntropy criterion (MCC) has been demonstrated both in combination with adaptive filtering [14,16,17,18] and in combination with spline adaptive filtering [19,20,21]. To suppress non-Gaussian impulsive noise while keeping a comparable running time, this paper develops a frequency domain maximum correntropy criterion spline adaptive filter (FDSAF-MCC).

Results

Several experiments are carried out to verify the performance of the proposed FDSAF-MCC in non-Gaussian environments. Performance is measured by the mean square error (MSE) in decibels, \(\mathrm{MSE} = 10 \log_{10} [e(k)]^2\).
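As a minimal illustration of this metric, the sketch below converts squared errors to decibels after averaging over trials. The function name `mse_db`, the averaging over trials, and the small offset inside the logarithm are assumptions made for the example, not details given in the paper.

```python
import numpy as np

def mse_db(errors):
    """Average the squared error over trials (axis 0) and convert to dB."""
    errors = np.atleast_2d(np.asarray(errors, dtype=float))
    mse = np.mean(errors ** 2, axis=0)
    return 10.0 * np.log10(mse + 1e-30)  # tiny offset avoids log10(0)

# Two hypothetical trials with three error samples each.
e = np.array([[0.1, 0.01, 0.001],
              [0.1, 0.01, 0.001]])
curve = mse_db(e)  # one value in dB per time index
```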

Non-Gaussian noise models are usually classified into heavy-tailed non-Gaussian noise (e.g., alpha-stable, Laplace, Cauchy) and light-tailed non-Gaussian noise (e.g., binary, uniform). This paper focuses on heavy-tailed noise. Figure 1 compares the probability density functions of several heavy-tailed noise models. Compared with the other models, alpha-stable noise has heavier tails and a sharper peak, so an alpha-stable distribution is used in the experiments. An alpha-stable distribution is described by four parameters: the characteristic exponent \(\alpha \in \left( 0,2\right]\), a stability index that determines the strength of the impulses; the symmetry parameter \(\beta \in \left[ -1,1 \right]\); the dispersion parameter \(\iota >0\); and the location parameter \(\varrho\) [22]. The distribution can be expressed as

where

In this paper, the parameters of the alpha-stable distribution are set to \(\alpha =1.6, \beta = 0, \iota = 0.05, \varrho = 0\). An independent white Gaussian noise v(n) is also added to the output of the unknown system, with a signal-to-noise ratio (SNR) of 30 dB. In the adaptive system, the frequency domain weight is initialized as \({\mathbf {w}}_F(0)=FFT [1, 0, \ldots , 0]^T \in {\mathbb {R}}^{2M\times 1}\). The spline knots are initialized as \({\mathbf {G}}(0)= [-2.2, -2.0, \ldots , 2.0, 2.2]^T\), with interval \(\Delta x = 0.2\). The input signal x(n) is a stochastic process uniformly distributed in \([-1, 1]\), and 100,000 samples are used. The MSE curves are obtained from independent Monte Carlo trials. The experimental parameters are listed in Table 1.
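For readers who wish to generate noise of this kind, the following sketch draws symmetric (\(\beta = 0\), \(\varrho = 0\)) alpha-stable samples with the Chambers-Mallows-Stuck method. It is an illustration under stated assumptions rather than the generator used in the experiments: the function name is hypothetical, and the dispersion \(\iota\) is assumed to relate to the scale by \(\sigma = \iota^{1/\alpha}\), which corresponds to a characteristic function \(\exp(-\iota |t|^{\alpha})\).

```python
import numpy as np

def symmetric_alpha_stable(alpha, dispersion, size, rng):
    """Chambers-Mallows-Stuck sampler for beta = 0 and zero location."""
    v = rng.uniform(-np.pi / 2, np.pi / 2, size)   # uniform angle
    w = rng.exponential(1.0, size)                 # unit-mean exponential
    x = (np.sin(alpha * v) / np.cos(v) ** (1.0 / alpha)
         * (np.cos((1.0 - alpha) * v) / w) ** ((1.0 - alpha) / alpha))
    scale = dispersion ** (1.0 / alpha)            # assumed dispersion-to-scale map
    return scale * x

rng = np.random.default_rng(0)
# Parameters quoted in the text: alpha = 1.6, dispersion = 0.05.
noise = symmetric_alpha_stable(1.6, 0.05, 100_000, rng)
```

For \(\alpha = 2\) the sampler reduces to a Gaussian with variance \(2\iota\), which gives a quick sanity check of the scaling assumption.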

Experiment 1

In Experiment 1, the nonlinear system to be identified consists of a linear FIR filter followed by a nonlinear spline curve. The transfer function of the linear FIR filter is

The control points of the nonlinear spline curve are set to \({\mathbf {g}}^* = [-2.2, -2.0, \ldots , -0.8, -0.91, 0.42, -0.01, -0.1, 0.1, -0.15, 0.58, 1.2, 1.0, 1.2, \ldots , 2.0, 2.2]^T\) [23].

Figure 2 shows the MSE curves for different values of the kernel size \(\delta\), in an environment without non-Gaussian noise. As \(\delta\) increases, the convergence performance of the MSE curve improves. However, once \(\delta\) reaches a certain value, the MSE curve no longer changes. In the subsequent experiments, \(\delta =6\) is used.

Figure 3 compares the MSE curves of FDSAF, the proposed FDSAF-MCC, and SAF-MCC in an environment without non-Gaussian noise. The three algorithms converge consistently, but the running time of FDSAF-MCC is 0.13309 ms, shorter than that of FDSAF (0.13661 ms) and SAF-MCC (0.5064 ms).

Figure 4 shows the FDSAF-MCC algorithm tracking the weights of the FIR filter in a non-Gaussian noise environment, and Fig. 5 shows it tracking the spline knots in the same environment. In both cases the proposed algorithm tracks well.

Figure 6 compares the MSE curves of FDSAF, the proposed FDSAF-MCC, and SAF-MCC in a non-Gaussian noise environment. The FDSAF algorithm converges poorly and its MSE curve oscillates randomly, whereas the proposed FDSAF-MCC and SAF-MCC algorithms converge better.

Figure 7 compares the MSE curves of FDSAF, the proposed FDSAF-MCC, and SAF-MCC under non-Gaussian noise with a sudden change. The proposed FDSAF-MCC and SAF-MCC algorithms again converge better: after the sudden change, the MSE curves of FDSAF-MCC and SAF-MCC converge, whereas the MSE curve of FDSAF diverges.

Experiment 2

In Experiment 2, the system consists of a 7th-order linear FIR sub-system and a saturated nonlinear sub-system. The 7th-order linear FIR sub-system is

The saturated nonlinearity is described by

where p is the input of the nonlinearity and f(p) is its output.

Figure 8 compares the MSE curves of FDSAF, the proposed FDSAF-MCC, and SAF-MCC in an impulsive noise environment. The FDSAF algorithm converges poorly because it cannot suppress impulsive noise. The proposed FDSAF-MCC algorithm converges better than the SAF-MCC algorithm.

Figure 9 compares the MSE curves of FDSAF, the proposed FDSAF-MCC, and SAF-MCC under impulsive noise with a sudden change. The FDSAF and SAF-MCC algorithms converge poorly; FDSAF cannot cope with a sudden change in non-Gaussian noise. The proposed FDSAF-MCC algorithm shows a convergence trend, but its convergence after the sudden change is not very good.

Experiment 3

To further verify the convergence performance under non-Gaussian noise and/or a sudden change, this experiment compares FDSAF and FDSAF-MCC for different input signals. The input signal x(n) is generated by

where \(a\in [0,1)\) is a correlation coefficient that determines the correlation between x(n) and \(x(n-1)\), and \(\xi (n)\) is a white Gaussian stochastic process with zero mean and unit variance. When \(a=0\), the input x(n) is white noise; when a is close to 1, x(n) is colored noise. In this experiment, the cases \(a = 0\) and \(a = 0.9\) are considered.
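A short sketch of such an input generator is given below. It assumes the first-order recursion \(x(n) = a\,x(n-1) + \sqrt{1-a^2}\,\xi (n)\), which is the form commonly used in the SAF literature and keeps the variance of x(n) equal to one; the function name `colored_input` is hypothetical.

```python
import numpy as np

def colored_input(a, n_samples, rng):
    """First-order autoregressive input (assumed form, unit variance)."""
    xi = rng.standard_normal(n_samples)    # white Gaussian, zero mean, unit variance
    gain = np.sqrt(1.0 - a * a)            # keeps var[x] = 1 in steady state
    x = np.empty(n_samples)
    prev = 0.0
    for n in range(n_samples):
        prev = a * prev + gain * xi[n]
        x[n] = prev
    return x

x_white = colored_input(0.0, 100_000, np.random.default_rng(0))
x_colored = colored_input(0.9, 100_000, np.random.default_rng(0))
```

With \(a = 0\) the recursion returns the white sequence unchanged, and with \(a = 0.9\) the correlation between consecutive samples is close to 0.9.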

Figure 10 compares the MSE curves of FDSAF and the proposed FDSAF-MCC for white and colored inputs, with the two algorithms compared at the same value of a. For both \(a = 0\) (white input) and \(a = 0.9\) (colored input), the proposed FDSAF-MCC algorithm converges better than the FDSAF algorithm.

Figure 11 compares the MSE curves of FDSAF and the proposed FDSAF-MCC for different a under non-Gaussian noise with a sudden change. For the same a, the proposed FDSAF-MCC algorithm converges better than the FDSAF algorithm.

Methods

FDSAF-MCC filtering

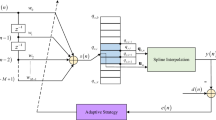

The basic structure of the SAF, shown in Fig. 12, is the cascade of a linear adaptive filter and a nonlinear cubic CR-spline interpolation function [23]. The structure of the frequency domain maximum correntropy criterion spline adaptive filter (FDSAF-MCC) is shown in Fig. 13. Frequency domain adaptive filtering (FDAF) is used for the linear module of the SAF [24]. In this scheme, filtering and parameter updating are performed once every M instants. Let the length of the data buffer equal the length of the FIR filter weight, M. The input signal x(n) is stored in the data buffer \({\mathbf {x}}(k)\) every M samples, where k denotes the data buffer index in the time domain: \({\mathbf {x}}(k) = [x(kM + 1), x(kM + 2), \ldots , x(kM + M)]^T\). For optimal efficiency, the \(50\%\) overlap-save method is used, taking the FFT of \({\mathbf {x}}(k)\) and \({\mathbf {x}}(k-1)\) at instant \(n = kM + M\)

where \(FFT[\cdot ]\) denotes the FFT of a vector and \({\tilde{k}}\) denotes the index in the frequency domain. The FIR filtered output \({\mathbf {s}}(k)\) can be calculated by

where \({\mathbf {s}}(k)\) contains the M output elements from instant \(n = kM + 1\) to \(n = kM + M\), \(IFFT[\cdot ]\) denotes the IFFT of a vector, \({\mathbf {w}}_F({\tilde{k}})\) is the FIR filter weight in the frequency domain, and \(\odot\) denotes the Hadamard (element-wise) product.
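The overlap-save step can be illustrated with a few lines of NumPy. The sketch below assumes that the 2M-point FFT is taken over the previous block followed by the current block and that the last M samples of the inverse FFT are kept, as in standard frequency domain adaptive filtering; the function name and the test weights are hypothetical.

```python
import numpy as np

def overlap_save_block(x_prev, x_curr, w_F):
    """Return the M valid FIR outputs for the current block (50% overlap-save)."""
    M = x_curr.size
    x_F = np.fft.fft(np.concatenate([x_prev, x_curr]))  # 2M-point FFT of both blocks
    s_full = np.fft.ifft(x_F * w_F).real                # circular convolution
    return s_full[M:]                                   # last M samples are alias-free

M = 4
w = np.array([0.5, -0.2, 0.1, 0.05])                    # hypothetical FIR weights
w_F = np.fft.fft(np.concatenate([w, np.zeros(M)]))      # zero-padded to length 2M
x = np.random.default_rng(0).uniform(-1, 1, 3 * M)

x_prev = np.zeros(M)
blocks = []
for x_curr in x.reshape(-1, M):
    blocks.append(overlap_save_block(x_prev, x_curr, w_F))
    x_prev = x_curr
s = np.concatenate(blocks)

reference = np.convolve(x, w)[: x.size]                 # direct time-domain filtering
```

The block-wise output `s` matches the direct convolution `reference`, while each block costs only two FFTs of length 2M instead of M inner products of length M.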

As shown in Fig. 13, the nonlinear spline interpolation consists of two procedures, table look-up and interpolation. The look-up table (LUT) is made up of \(N+1\) control points (knots) defined as \({\mathbf {G}}_j=[g_{x,j},g_{y,j}]^T\), \((j=0,1,\dots ,N)\). The subscripts x and y denote abscissa and ordinate, respectively. The abscissas are uniformly distributed with an interval \(\Delta x\). The LUT procedure calculates the spline interval index \(i_j\) and the local abscissa \(u_j\) from \(s(kM+j)\) at instant \(n=kM+j\),

where \(\lfloor \cdot \rfloor\) denotes the floor operator. The normalized abscissas \(u_j\) and the corresponding local spline knots \(g_{i,j}\) of all \(s(kM + j)\) in the data buffer are then vectorized. \({\mathbf {u}}_j = [u_j^3 , u_j^2 , u_j , 1]^T\) is the local abscissa vector derived from \(s(kM + j)\). The normalized abscissas \(u_j\) are collected in the matrix \({\mathbf {U}}(k) \in {\mathbb {R}}^{4\times M}\), which is

In a similar way, \(\dot{{\mathbf {u}}}_j = [3u_j^2 , 2u_j , 1 , 0]^T\) is the derivative vector of the local abscissa. We denote by \(\dot{{\mathbf {U}}}(k) \in {\mathbb {R}}^{4\times M}\) the derivative matrix of the normalized abscissas, which can be expressed as

We denote by \({\mathbf {G}}(k) \in {\mathbb {R}}^{4\times M}\) the spline knot matrix, which can be described by

where \({\mathbf {g}}_{i,j}=[g_{i,j},g_{i,j+1},g_{i,j+2},g_{i,j+3}]^T\). After FIR filtering in the frequency domain, the intermediate variable \({\mathbf {s}}(k)\) enters the spline interpolation, which produces the output signal \({\mathbf {y}}(k)\) from instant \(n=kM+1\) to \(n=kM+M\). This can be written as

Here the spline interpolation function is denoted by \(\varphi (\cdot )\), \(y(kM + j)\) is the output of the spline filter at instant \(n = kM + j\), \((\cdot )_{ii}\) denotes the column vector formed from the diagonal elements of a matrix, \(sum_r(\cdot )\) denotes the column vector obtained by summing a matrix by rows, and \(sum_c(\cdot )\) denotes the row vector obtained by summing a matrix by columns.

Cubic spline curves mainly include the B-spline [25] and the Catmull-Rom (CR) spline [26]. Because the CR-spline passes through all of its control points, it may perform much better than the B-spline in local approximation [27]. Therefore, only the CR-spline is considered in this paper. The basis matrix \({\mathbf {C}}\) is

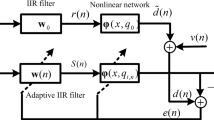
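The table look-up and interpolation can be sketched as follows. The code is an illustration under stated assumptions: it uses the standard Catmull-Rom basis matrix, a 0-based span index with an offset chosen so that the identity knots \([-2.2, \ldots , 2.2]\) reproduce the input, and hypothetical names (`spline_forward`, `g`, `dx`). The output is computed as the column sum of \({\mathbf {U}} \odot ({\mathbf {C}}{\mathbf {G}})\), which equals the diagonal of \({\mathbf {U}}^T{\mathbf {C}}{\mathbf {G}}\).

```python
import numpy as np

# Standard Catmull-Rom basis matrix; it multiplies [u^3, u^2, u, 1].
C = 0.5 * np.array([[-1.0,  3.0, -3.0,  1.0],
                    [ 2.0, -5.0,  4.0, -1.0],
                    [-1.0,  0.0,  1.0,  0.0],
                    [ 0.0,  2.0,  0.0,  0.0]])

def spline_forward(s, g, dx):
    """Return spline output y and slope dy/ds for each element of s.

    g holds the knot ordinates on a uniform abscissa grid centred at zero;
    s is assumed to stay inside the range covered by the knots.
    """
    s = np.asarray(s, dtype=float)
    z = s / dx
    fl = np.floor(z)
    u = z - fl                                             # local abscissa in [0, 1)
    i = fl.astype(int) + (g.size - 1) // 2 - 1             # 0-based span index (assumed offset)
    i = np.clip(i, 0, g.size - 4)
    U = np.stack([u**3, u**2, u, np.ones_like(u)])         # 4 x M
    U_dot = np.stack([3 * u**2, 2 * u, np.ones_like(u), np.zeros_like(u)])
    G = g[i[None, :] + np.arange(4)[:, None]]              # 4 x M local knots
    CG = C @ G
    y = np.sum(U * CG, axis=0)                             # diag(U^T C G)
    dy = np.sum(U_dot * CG, axis=0) / dx                   # derivative w.r.t. s
    return y, dy

g = np.linspace(-2.2, 2.2, 23)        # identity initialization, dx = 0.2
s = np.linspace(-1.9, 1.9, 50)
y, dy = spline_forward(s, g, 0.2)
```

Because the CR-spline reproduces straight lines, the identity initialization gives `y` equal to `s` and a unit slope, which is a convenient check that the index offset and the basis matrix are consistent.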

FDSAF-MCC adaptive

Based on the structure of FDSAF-MCC nonlinear system identification shown in Fig. 14, we derive the parameter updating rules. The error \({\mathbf {e}}(k)\) can be written as

where \(e(kM + j) = d(kM + j)-y(kM + j)\) at instant \(n=kM+j\), and \({\mathbf {d}}(k) = [d(kM + 1), d(kM + 2),\ldots , d(kM + M)]^T\) is the desired output.

A maximum correntropy cost function [19,20,21], which is insensitive to impulsive noise, is given by

where \(\delta >0\) is the kernel size and \(\sqrt{2\pi }\delta\) is the normalization factor. Taking the derivative of Eq. (17) with respect to \({\mathbf {e}}(k)\) gives the result denoted by \({\mathbf {e}}_d(k)\).
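The effect of this derivative can be illustrated numerically. The sketch below assumes a Gaussian kernel, so that each error is multiplied by \(\exp (-e^2/(2\delta ^2))\), and it absorbs the constant normalization factor into the learning rate; both the function name and this simplification are assumptions made for the example.

```python
import numpy as np

def mcc_weighted_error(e, delta):
    """Gaussian-kernel weighting: errors much larger than delta are attenuated."""
    e = np.asarray(e, dtype=float)
    return np.exp(-e**2 / (2.0 * delta**2)) * e

e = np.array([0.1, 1.0, 50.0])            # the last entry mimics an impulse
e_d = mcc_weighted_error(e, delta=6.0)    # delta = 6 as used in the experiments
```

Small errors pass almost unchanged, whereas the impulse of 50 is reduced to a negligible value, so it hardly affects the parameter update. As \(\delta\) grows, the weighting tends to one and the update approaches the squared-error case.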

where \({\mathbf {e}}_d(k)=[e_d(kM + 1),e_d(kM + 2),\ldots ,e_d(kM + M)]^T\). Because the spline interpolation involves no FFT, the parameter update of the spline knots is the same as in the time domain SAF. To match the data block description in FDSAF-MCC, the parameter update is expressed in vectorized form. The derivative of J(k) with respect to \({\mathbf {g}}_{i,j}\) [15] is

The derivative of J(k) with respect to \({\mathbf {G}}(k)\) can then be expressed in vectorized form as

Therefore, the parameter updating of the spline knots matrix can be expressed as

where \(\mu _g\) is the learning rate for updating \({\mathbf {G}}(k)\). The derivative of J(k) with respect to \({\mathbf {w}}(k)\) is

where we denote the back propagation error by \(e_s (kM + j) = e_d(kM + j){\dot{\varphi }}(s(kM + j))\), which can be vectorized as

where \({\mathbf {e}}_s (k) = [e_s (kM + 1), e_s (kM + 2),\ldots , e_s (kM + M)]^T\). With reference to Eq. (14), the vector \({\dot{\varphi }}(k)\) can be rewritten as

Following the procedure of FDSAF-MCC, the back propagation error vector is transformed into the frequency domain by

where \({\mathbf {0}} = [0, 0,\ldots , 0]^T \in {\mathbb {R}}^{M\times 1}\) is a zero vector of length M and \({\mathbf {e}}_F({{\tilde{k}}}) \in {\mathbb {R}}^{2M\times 1}\) is the back propagation error vector in the frequency domain. The gradient vector \(\Delta {\mathbf {w}}(k)\) is computed in the frequency domain as

The superscript H denotes the Hermitian transpose. The vector \(IFFT[{\mathbf {e}}_F({{\tilde{k}}}) \odot {\mathbf {x}}^H_F ({{\tilde{k}}})]\) has length 2M, and the actual gradient vector consists of its first M elements. Finally, the frequency domain weight \({\mathbf {w}}_F\) is updated by

where \(\mu _w\) is the learning rate for updating \({\mathbf {w}}_F\) and \({\mathbf {0}} = [0, 0,\ldots , 0]^T \in {\mathbb {R}}^{M\times 1}\) is a zero vector of length M.

FDSAF-MCC brief

To explain the FDSAF-MCC algorithm more clearly, a brief summary is given in Algorithm 1.
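The steps described in this section can also be sketched in NumPy. The code below is an illustration under stated assumptions, not the authors' implementation: the function name `fdsaf_mcc`, the step sizes, the kernel size, the test system (a short FIR filter followed by `tanh`), and the Bernoulli-Gaussian impulses are all hypothetical; the Catmull-Rom basis matrix is the standard one; the normalization constant of the kernel is absorbed into the learning rates; and the span index uses a 0-based offset.

```python
import numpy as np

C = 0.5 * np.array([[-1, 3, -3, 1], [2, -5, 4, -1],
                    [-1, 0, 1, 0], [0, 2, 0, 0]], dtype=float)  # CR basis

def fdsaf_mcc(x, d, M=8, dx=0.2, mu_w=0.01, mu_g=0.05, delta=1.0):
    """Block-wise identification sketch; returns outputs, FIR weights, knots."""
    g = np.arange(-11, 12) * dx                    # 23 knots, identity spline
    w_F = np.fft.fft(np.r_[1.0, np.zeros(2 * M - 1)])
    offset = (g.size - 1) // 2 - 1                 # 0-based span offset (assumed)
    rows = np.arange(4)[:, None]
    x_prev = np.zeros(M)
    y_all = np.zeros(x.size)
    for k in range(x.size // M):
        blk = slice(k * M, (k + 1) * M)
        # Linear module: overlap-save filtering, keep the last M samples.
        x_F = np.fft.fft(np.r_[x_prev, x[blk]])
        s = np.fft.ifft(x_F * w_F).real[M:]
        # Table look-up: span index i and local abscissa u for every sample.
        fl = np.floor(s / dx)
        u = s / dx - fl
        i = np.clip(fl.astype(int) + offset, 0, g.size - 4)
        U = np.stack([u**3, u**2, u, np.ones(M)])
        U_dot = np.stack([3 * u**2, 2 * u, np.ones(M), np.zeros(M)])
        idx = i[None, :] + rows                    # 4 x M knot indices
        CG = C @ g[idx]
        y = np.sum(U * CG, axis=0)                 # spline output
        phi_dot = np.sum(U_dot * CG, axis=0) / dx  # spline slope w.r.t. s
        # MCC-weighted error (Gaussian kernel, constants absorbed in mu).
        e = d[blk] - y
        e_d = np.exp(-e**2 / (2 * delta**2)) * e
        # Knot update; add.at accumulates repeated indices within the block.
        np.add.at(g, idx, mu_g * (C.T @ U) * e_d[None, :])
        # Weight update: correlate back-propagated error with the input,
        # keep the first M lags, and return to the frequency domain.
        e_F = np.fft.fft(np.r_[np.zeros(M), e_d * phi_dot])
        grad = np.fft.ifft(e_F * np.conj(x_F)).real[:M]
        w_F = w_F + mu_w * np.fft.fft(np.r_[grad, np.zeros(M)])
        x_prev = x[blk]
        y_all[blk] = y
    return y_all, np.fft.ifft(w_F).real[:M], g

rng = np.random.default_rng(0)
n = 20000
x = rng.uniform(-1, 1, n)
w_true = np.array([0.6, -0.4, 0.25, -0.15, 0.1])       # hypothetical system
d_clean = np.tanh(np.convolve(x, w_true)[:n])
impulses = (rng.random(n) < 0.01) * 10.0 * rng.standard_normal(n)
d = d_clean + 0.01 * rng.standard_normal(n) + impulses
y, w_hat, g_hat = fdsaf_mcc(x, d)
mse_start = np.mean((d_clean[:200] - y[:200]) ** 2)
mse_end = np.mean((d_clean[-2000:] - y[-2000:]) ** 2)
```

In this sketch the error against the clean output is smaller at the end of the run than at the start, and the impulses have little influence because the kernel weighting shrinks any error much larger than \(\delta\) before it reaches either update.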

Convergence performance

The convergence of the linear module and of the nonlinear module is considered separately. According to the cost function used in the parameter update, \(\Vert e(k+1)\Vert ^2 < \Vert e(k)\Vert ^2\) must be satisfied during filtering. We take the first-order Taylor series expansion of \(\Vert e(k+1)\Vert ^2\)

where h.o.t. denotes the higher-order terms. Ignoring them and requiring \(\Vert e(k+1)\Vert ^2 < \Vert e(k)\Vert ^2\) gives

The learning rate \(\mu _g\) for the spline knots must therefore satisfy the bound

Similarly, we take the Taylor series expansion of \(\Vert e(k+1)\Vert ^2\) about \({\mathbf {w}}(k)\) at instant k

Ignoring the higher-order terms (h.o.t.) and requiring \(\Vert e(k+1)\Vert ^2 < \Vert e(k)\Vert ^2\), we obtain

Conclusion

This paper proposed a new frequency domain maximum correntropy criterion spline adaptive filter (FDSAF-MCC) that suppresses non-Gaussian impulsive noise while keeping a comparable running time. Instead of the least mean square (LMS) criterion, the new algorithm employs the maximum correntropy criterion (MCC) as its cost function. Three experiments verified the effectiveness of the proposed algorithm in suppressing impulsive noise. Compared with the existing frequency domain spline adaptive filtering algorithm, the proposed algorithm is more robust against alpha-stable noise. The proposed algorithm suppresses alpha-stable noise well in the environments considered above, but it may converge poorly when suppressing light-tailed noise. It is therefore necessary to explore a more appropriate cost function and to construct a filtering algorithm that suppresses light-tailed noise. In future work, we will address the light-tailed noise model within the frequency domain spline adaptive filtering framework.

References

1. Liu, C. & Zhang, Z. Set-membership normalised least m-estimate spline adaptive filtering algorithm in impulsive noise. Electron. Lett. 54, 393–395 (2018).

2. Liu, C., Zhang, Z. & Tang, X. Sign normalised Hammerstein spline adaptive filtering algorithm in an impulsive noise environment. Neural Process. Lett. 50, 477–496 (2019).

3. Scarpiniti, M., Comminiello, D., Parisi, R. & Uncini, A. Novel cascade spline architectures for the identification of nonlinear systems. IEEE Trans. Circuits Syst. I Regular Papers 62, 1825–1835 (2015).

4. Scarpiniti, M., Comminiello, D., Scarano, G., Parisi, R. & Uncini, A. Steady-state performance of spline adaptive filters. IEEE Trans. Signal Process. 64, 816–828 (2015).

5. Liu, C., Zhang, Z. & Tang, X. Steady-state performance for the sign normalized algorithm based on Hammerstein spline adaptive filtering. In 2019 International Conference on Control, Automation and Information Sciences (ICCAIS), 1–4 (IEEE, 2019).

6. Guan, S. & Li, Z. Normalised spline adaptive filtering algorithm for nonlinear system identification. Neural Process. Lett. 46, 595–607 (2017).

7. Liu, C., Zhang, Z. & Tang, X. Sign normalised spline adaptive filtering algorithms against impulsive noise. Signal Process. 148, 234–240 (2018).

8. Yang, L., Liu, J., Yan, R. & Chen, X. Spline adaptive filter with arctangent-momentum strategy for nonlinear system identification. Signal Process. 164, 99–109 (2019).

9. Wen, P., Zhang, J., Zhang, S. & Qu, B. Normalized subband spline adaptive filter: Algorithm derivation and analysis. Circuits Syst. Signal Process. 40, 2400–2418 (2021).

10. Guo, W. & Zhi, Y. Nonlinear spline adaptive filtering against non-Gaussian noise. Circuits Syst. Signal Process. 1–18. https://doi.org/10.1007/s00034-021-01798-3 (2021).

11. Yu, T., Li, W., Yu, Y. & de Lamare, R. C. Robust spline adaptive filtering based on accelerated gradient learning: Design and performance analysis. Signal Process. 183, 107965 (2021).

12. Patel, V., Bhattacharjee, S. S. & George, N. V. A family of logarithmic hyperbolic cosine spline nonlinear adaptive filters. Appl. Acoust. 178, 107973 (2021).

13. Kumar, K., Pandey, R., Bhattacharjee, S. S. & George, N. V. Exponential hyperbolic cosine robust adaptive filters for audio signal processing. IEEE Signal Process. Lett. 28, 1410–1414 (2021).

14. Bhattacharjee, S. S., Kumar, K. & George, N. V. Nearest Kronecker product decomposition based generalized maximum correntropy and generalized hyperbolic secant robust adaptive filters. IEEE Signal Process. Lett. 27, 1525–1529 (2020).

15. Yang, L., Liu, J., Zhang, Q., Yan, R. & Chen, X. Frequency domain spline adaptive filters. Signal Process. 177, 107752 (2020).

16. Shi, L. & Lin, Y. Convex combination of adaptive filters under the maximum correntropy criterion in impulsive interference. IEEE Signal Process. Lett. 21, 1385–1388 (2014).

17. Chen, B., Xing, L., Liang, J., Zheng, N. & Principe, J. C. Steady-state mean-square error analysis for adaptive filtering under the maximum correntropy criterion. IEEE Signal Process. Lett. 21, 880–884 (2014).

18. Radhika, S. & Chandrasekar, A. Maximum correntropy criteria adaptive filter with adaptive step size. In 2018 IEEE International Conference on Computational Intelligence and Computing Research (ICCIC), 1–4 (IEEE, 2018).

19. Peng, S., Wu, Z., Zhang, X. & Chen, B. Nonlinear spline adaptive filtering under maximum correntropy criterion. In TENCON 2015-2015 IEEE Region 10 Conference, 1–5 (IEEE, 2015).

20. Wang, W., Zhao, H., Zeng, X. & Doğançay, K. Steady-state performance analysis of nonlinear spline adaptive filter under maximum correntropy criterion. IEEE Trans. Circuits Syst. II Express Briefs 67, 1154–1158 (2019).

21. Wu, Z., Peng, S., Chen, B. & Zhao, H. Robust Hammerstein adaptive filtering under maximum correntropy criterion. Entropy 17, 7149–7166 (2015).

22. Weng, B. & Barner, K. E. Nonlinear system identification in impulsive environments. IEEE Trans. Signal Process. 53, 2588–2594 (2005).

23. Scarpiniti, M., Comminiello, D., Parisi, R. & Uncini, A. Nonlinear spline adaptive filtering. Signal Process. 93, 772–783 (2013).

24. Shynk, J. J. et al. Frequency-domain and multirate adaptive filtering. IEEE Signal Process. Mag. 9, 14–37 (1992).

25. Zhao, J., Zhang, H. & Zhang, J. A. Generalized maximum correntropy algorithm with affine projection for robust filtering under impulsive-noise environments. Signal Process. 172, 107524 (2020).

26. Zhang, J.-F. & Qiu, T.-S. A robust correntropy based subspace tracking algorithm in impulsive noise environments. Digit. Signal Process. 62, 168–175 (2017).

27. Comminiello, D. & Príncipe, J. C. Adaptive Learning Methods for Nonlinear System Modeling (Butterworth-Heinemann, 2018).

Acknowledgements

This work was funded by the Science and Technology on Electromechanical Dynamic Control Laboratory, China (Grant No. 6142601200605), and the National Natural Science Foundation of China (Grant No. U20B2040).

Author information

Contributions

Y.Z. managed the project and designed the experiments. W.G. conducted the experiments. W.G. and K.F. analyzed the data and wrote the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guo, W., Zhi, Y. & Feng, K. Frequency domain maximum correntropy criterion spline adaptive filtering. Sci Rep 11, 24048 (2021). https://doi.org/10.1038/s41598-021-01863-6

DOI: https://doi.org/10.1038/s41598-021-01863-6