Abstract

Microservice architecture (MSA) denotes an increasingly popular architectural style in which business capabilities are wrapped into autonomously developable and deployable software components called microservices. Microservice applications are developed by multiple DevOps teams, each owning one or more services. In this article, we explore how DevOps teams in small and medium-sized organizations (SMOs) cope with MSA and how they can be supported. Through a secondary analysis of an exploratory interview study comprising six cases, we show that the organizational and technological complexity resulting from MSA poses particular challenges for SMOs. As results of the secondary analysis, we identify two challenge areas: building and maintaining a common architectural understanding, and dealing with deployment technologies. We apply model-driven engineering to address these challenges. Specifically, to support DevOps teams of SMOs in coping with these challenges, we present a model-driven workflow based on LEMMA, the Language Ecosystem for Modeling Microservice Architecture. To implement the workflow, we extend LEMMA with the functionality to (i) generate models from API documentation; (ii) reference remote models owned by other teams; (iii) generate deployment specifications; and (iv) generate a visual representation of the overall architecture. We validate the model-driven workflow and our extensions to LEMMA through a case study showing that the added functionality can bring efficiency gains for DevOps teams. In the future, we plan to conduct further empirical research in the field to develop best practices for applying our workflow in SMOs so as to maximize efficiency.


Introduction

Microservice architecture (MSA) is a novel architectural style for service-based software systems with a strong focus on loose functional, technical, and organizational coupling of services [54]. In a microservice architecture, services are tailored to distinct business capabilities and executed as independent processes. The adoption of MSA is expected to increase an application’s scalability, maintainability, and reliability [54]. It is frequently employed to decompose monolithic applications for which such quality attributes are critical [9].

MSA fosters the adoption of DevOps practices because it promotes (i) bundling microservices in self-contained deployment units for continuous delivery; and (ii) delegating responsibility for a microservice to a single team composed of members with heterogeneous professional backgrounds [4, 53]. Conway’s Law [15] is a determining factor in DevOps-based MSA engineering. It states that the structure of a system reflects the communication structure of the organization that develops it. Thus, to achieve loose coupling and autonomy of microservices, it is also crucial to divide the responsibility for the development and deployment of microservices among autonomous DevOps teams [53]. As a result, MSA engineering leads to a distributed development process in which several teams create coherent services of the same software system in parallel.

While various larger enterprises like Netflix, Spotify, or Zalando regularly report on their successful adoption of MSA, only a few experience reports (e.g., [12]) describe how microservices combined with DevOps can be successfully implemented in small and medium-sized development organizations (SMOs) with fewer than 100 developers involved. Such SMOs typically lack the resources in terms of employees, knowledge, and experience to directly apply large-scale process models such as Scrum at Scale [14, 77].

To support SMOs in bridging the gap between available resources and required effort for a successful adoption of DevOps-based MSA engineering, we (i) investigate the characteristics of small- and medium-scale microservice development processes; and (ii) propose means to reduce complexity and increase productivity in DevOps-based MSA engineering within SMOs. More precisely, the contributions of our article are threefold. First, we identify challenges of SMOs in DevOps-based MSA engineering by analyzing a data set of an exploratory qualitative study and linking it with existing empirical knowledge. Second, we employ model-driven engineering (MDE) [13] to introduce a workflow for coping with the previously identified challenges in DevOps-based MSA engineering for SMOs. Third, we present and validate extensions to LEMMA (Language Ecosystem for Modeling Microservice Architecture), which is a set of Eclipse-based modeling languages and model transformations for MSA engineering [63] enabling sophisticated modeling support for the workflow.

The remainder of this article is organized as follows. In Section “Background”, we describe the microservice architecture style in detail, particularly with respect to the design, development, and operation stages. In addition, we explain organizational aspects that result from the use of microservices. Section “Language Ecosystem for Modeling Microservice Architecture” introduces LEMMA as a set of modeling languages and tools that address the MDE of MSA. In Section “DevOps-Related Challenges in Microservice Architecture Engineering of SMOs”, we analyze a dataset from an exploratory interview study in SMOs to identify challenge areas that DevOps teams in SMOs face when engineering MSA. Based on these challenge areas, we present a model-driven workflow in Section “A Model-Driven Workflow for Coping with DevOps-Related Challenges in Microservice Architecture Engineering” and describe the extensions of LEMMA that support the workflow. In this regard, Section “Derivation of Microservice Models from API Documentations” presents means to derive LEMMA models from API documentation, Section “Assembling a Common Architecture Model from Distributed Microservice Models” presents extensions to the LEMMA languages to assemble individual microservice models, Section “Visualization of Microservice Architecture Models” describes additions to create a visual representation of microservice models, Section 6.5 presents means to specify deployment infrastructure, and Section “Generating Code From Distributed Deployment Infrastructure Models” elaborates on the ability to generate infrastructure code. We validate our contributions to LEMMA in Section “Validation”. Section “Discussion” discusses the model-driven workflow and LEMMA components with respect to their application in DevOps teams of SMOs. We present related research in Section “Related Work”. The article ends with a conclusion and an outlook on future work in Section “Conclusion and Future Work”.

Background

This section provides background on the MSA approach and its relation to the DevOps paradigm. It details special characteristics in the design, development, operation, and organization of microservice architectures and their realization.

General

MSA is a novel approach towards the design, development, and operation of service-based software systems [54]. To this end, MSA promotes decomposing the architecture of complex software systems into services, i.e., loosely coupled software components that interact by means of predefined interfaces and are composable to synergistically realize coarse-grained business logic [25].

Compared to other approaches for architecting service-based software systems, e.g., SOA [25], MSA puts a strong emphasis on service-specific independence. This independence distinguishes MSA from other approaches w.r.t. the following features [53, 54, 64]:

- Each microservice in a microservice architecture focuses on the provisioning of a single distinct capability for functional or infrastructure purposes.
- A microservice is independent of all other architecture components regarding its implementation, data management, testing, deployment, and operation.
- A microservice is fully responsible for all aspects related to its interaction with other architecture components, ranging from the determination of communication protocols over data and format conversions to failure handling.
- Exactly one team is responsible for a microservice and has full accountability for the service’s design, development, and deployment.

Starting from the above features, the adoption of MSA may improve quality attributes [39] such as (i) scalability, as new instances of microservices that cover highly demanded functionality can be started in a targeted manner; (ii) maintainability, as microservices are seamlessly replaceable with alternative implementations; and (iii) reliability, as MSA delegates responsibility for robustness and resilience to microservices [19, 20, 54]. Additionally, MSA fosters DevOps and agile development, because its single-team ownership calls for heterogeneous team composition and the constrained scope of microservices fosters their evolvability [17, 80].

Despite its potential for positively impacting the aforementioned quality attributes of a software architecture and its implementation, MSA also introduces complexity to both development processes and operation [17, 73, 79]. Consequently, practitioners in SMOs perceive the successful adoption of MSA as complex [9]. Challenges that must be addressed in MSA adoption are spread across all stages of the engineering process and thus concern the design, development, and operation of the architecture. Furthermore, MSA imposes additional demands on the organization of the engineering process.

Design Stage

A frequent design challenge in MSA engineering concerns the decomposition of an application domain into microservices, each with a suitable functional granularity [32, 73]. Too coarse-grained microservices forfeit the aforementioned benefits of MSA in terms of service-specific independence. Too fine-grained microservices, on the other hand, may require an inefficiently high amount of communication and thus network traffic at runtime [46]. Although approaches such as Domain-driven Design (DDD) [26] exist to support the systematic decomposition and granularity determination of a microservice architecture [54], their perceived complexity hampers widespread adoption in practice [9, 28].

A further peculiarity of microservice design stems from MSA’s omission of explicit service contracts [57]. In contrast to SOA, MSA considers the API of a microservice its implicit contract [85], thereby delegating API management concerns, e.g., API versioning, to microservices [73]. Consequently, microservices must ensure their compatibility with possible consumers and also inform them about possible interaction requirements. Furthermore, implicit microservice contracts foster ad hoc communication, which increases runtime complexity and the occurrence of cyclic interaction relationships [78].
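To make the risk of cyclic interaction relationships more tangible, the following Java sketch checks a service call graph for cycles using a depth-first search. It assumes, purely for illustration, that the interactions of an application are available as a map from each service to the services it calls; the service names are hypothetical and not taken from any of the studied systems.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Minimal sketch: detects cyclic interactions in a hypothetical service call graph. */
public class InteractionCycles {

    /** Returns one cycle (first and last element equal) or an empty list if there is none. */
    public static List<String> findCycle(Map<String, List<String>> calls) {
        Set<String> finished = new HashSet<>(); // services whose callees are fully explored
        for (String service : calls.keySet()) {
            List<String> cycle = visit(service, calls, finished, new ArrayList<>());
            if (!cycle.isEmpty()) {
                return cycle;
            }
        }
        return List.of();
    }

    private static List<String> visit(String service, Map<String, List<String>> calls,
                                      Set<String> finished, List<String> path) {
        int index = path.indexOf(service);
        if (index >= 0) {
            // Back edge: the service is already on the current call path.
            List<String> cycle = new ArrayList<>(path.subList(index, path.size()));
            cycle.add(service);
            return cycle;
        }
        if (finished.contains(service)) {
            return List.of();
        }
        path.add(service);
        for (String callee : calls.getOrDefault(service, List.of())) {
            List<String> cycle = visit(callee, calls, finished, path);
            if (!cycle.isEmpty()) {
                return cycle;
            }
        }
        path.remove(path.size() - 1);
        finished.add(service);
        return List.of();
    }

    public static void main(String[] args) {
        // Hypothetical interactions: order -> payment -> customer -> order
        Map<String, List<String>> calls = Map.of(
                "order", List.of("payment"),
                "payment", List.of("customer"),
                "customer", List.of("order"));
        System.out.println(findCycle(calls));
    }
}
```

Which service the reported cycle starts with depends on the iteration order of `Map.of`; in this example, the reported cycle always passes through the order, payment, and customer services.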

Development Stage

In contrast to monolithic applications, which rely on a holistic yet vendor-dependent technology stack [19], microservice architectures foster technology heterogeneity [54]. Specifically, due to the increase in service-specific independence, each microservice may employ those technologies that best fit a certain capability. Typical technology variation points [60] comprise programming languages, databases, communication protocols, and data formats. However, technology heterogeneity imposes a greater risk of technical debt, additional maintenance costs, and steeper learning curves, particularly for new members of a microservice team [78].

Operation Stage

MSA usually requires a sophisticated deployment and operation infrastructure consisting of, e.g., continuous delivery systems as well as container and orchestration platforms to cope with MSA’s emphasis on maintainability and reliability [80]. In addition, microservices often rely on further infrastructure components such as service discoveries, API gateways, or monitoring solutions [5], which cause additional administration and maintenance effort. Consequently, microservice operation involves a variety of different technical components, thereby resulting in a significant complexity increase compared to monolithic applications [73].

Furthermore, technology heterogeneity also affects microservice operation w.r.t. technology variation points like deployment and infrastructure technologies [60]. The latter in particular also involve independent decision-making by microservice teams. For example, some infrastructure technologies, e.g., for increasing performance or resilience, directly target a single microservice [5]. Hence, teams are essentially free to choose suitable solutions based on criteria such as compatibility with existing microservice implementations or available experience.

Organizational Aspects

The use of MSA requires a compatible organizational structure, i.e., following Conway’s law, a structure that corresponds to the communication principle of microservices. This results in the need for separate teams, each of which is fully responsible for one or more services (cf. Section “General”). The requirement that a team should cover the entire software lifecycle of its microservices inevitably leads to the need for cross-functional teams. To ensure collaboration between teams, large companies such as Netflix or Spotify usually use established large-scale agile process models [18], e.g., the Scaled Agile Framework (SAFe) [66], Scrum at Scale [77], or the Spotify Model [71]. Establishing such a form of organization and achieving organizational alignment may require upfront effort [54].

Thus, MSA fosters DevOps practices, which can result in lower costs and an accelerated pace of product increments [53]. To this end, it is critical to foster a collaborative culture within and across teams to promote integration and collaboration among team members with different professional backgrounds [49].

A key enabler of a collaborative culture is the extensive automation of manual tasks to prevent the manifestation of inter-team and extra-team silos [49]. Specifically, it relieves people from personal accountability for a task and may thus help in reducing existing animosities of team members with different professional backgrounds [45].

Another pillar of a collaborative culture is knowledge sharing following established formats and guidelines [49]. It aims to mitigate the occurrence of insufficient communication, which can be an impediment in both MSA and DevOps [17, 65].

Language Ecosystem for Modeling Microservice Architecture

In previous work, we developed LEMMA [60, 63]. LEMMA is a set of Eclipse-based modeling languages and model transformations that aims to mitigate the challenges in MSA engineering (cf. Section “Background”) by means of MDE [13].

To this end, LEMMA refers to the notion of architecture viewpoint [40] to support stakeholders in MSA engineering in organizing and expressing their concerns towards a microservice architecture under development. More specifically, LEMMA clusters four viewpoints on microservice architectures. Each viewpoint targets at least one stakeholder group in MSA engineering, and comprises one or more stakeholder-oriented modeling languages.

The modeling languages enable the construction of microservice architecture models and their composition by means of an import mechanism. As a result, LEMMA allows reasoning about coherent parts of a microservice architecture [40], e.g., to assess quality attributes and technical debt of microservices [62] or perform DevOps-oriented code generation [61].

The following paragraphs summarize LEMMA’s approach to microservice architecture model construction and processing.

Microservice Architecture Model Construction

Figure 1 provides an overview of LEMMA’s modeling languages, their compositional dependencies and the addressed stakeholders in MSA engineering.

Overview of LEMMA’s modeling languages, their compositional dependencies and addressed stakeholders. Arrow semantics follow those of UML for dependency specifications [55]

LEMMA’s Domain Data Modeling Language [63] allows model construction in the context of the domain viewpoint on a microservice architecture. It thus addresses the concerns of domain experts and microservice developers. First, the language aims to mitigate the complexity of DDD (cf. Section “Background”) by defining a minimal set of modeling concepts for the construction of domain concepts, i.e., data structures and list types, and the assignment of DDD patterns, e.g., Entity or Value Object [26]. Additionally, it integrates validations to ensure the semantically correct usage of the patterns. Second, the language permits underspecification in DDD-based domain model construction [59], thereby facilitating model construction for domain experts. Microservice developers may later resolve underspecification to enable automated model processing [61]. All other LEMMA modeling languages depend on the Domain Data Modeling Language (cf. Fig. 1) because it provides them with a Java-aligned type system [63], given Java’s predominance in service programming [9, 67].
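As an illustration of pattern-specific validation, the following Java sketch checks two rules on a deliberately simplified domain model: an Entity must declare an identifier, and a Value Object must not. The record types and both rules are assumptions for this example; they reproduce neither LEMMA's metamodel nor its actual set of validations, and the sketch is written in Java rather than in LEMMA's own modeling notation.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal sketch of pattern-specific validation on a simplified, hypothetical domain model. */
public class DomainPatternValidation {

    enum Pattern { ENTITY, VALUE_OBJECT }

    record Field(String name, String type, boolean identifier) {}

    record Structure(String name, Pattern pattern, List<Field> fields) {}

    /** Applies two illustrative DDD rules and returns one message per violation. */
    static List<String> validate(List<Structure> structures) {
        List<String> violations = new ArrayList<>();
        for (Structure s : structures) {
            long identifiers = s.fields().stream().filter(Field::identifier).count();
            if (s.pattern() == Pattern.ENTITY && identifiers == 0) {
                violations.add(s.name() + ": an Entity needs an identifier field");
            }
            if (s.pattern() == Pattern.VALUE_OBJECT && identifiers > 0) {
                violations.add(s.name() + ": a Value Object must not declare an identity");
            }
        }
        return violations;
    }

    public static void main(String[] args) {
        List<Structure> model = List.of(
                new Structure("ParkingSpace", Pattern.ENTITY, List.of(
                        new Field("id", "long", true),
                        new Field("location", "Address", false))),
                new Structure("Address", Pattern.VALUE_OBJECT, List.of(
                        new Field("street", "string", false))),
                new Structure("Booking", Pattern.ENTITY, List.of(
                        new Field("start", "date", false))));
        validate(model).forEach(System.out::println);
        // prints: Booking: an Entity needs an identifier field
    }
}
```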

LEMMA’s Service Modeling Language [63] addresses the concerns of microservice developers (cf. Fig. 1) in the service viewpoint on a microservice architecture. One goal of the Service Modeling Language is to make the APIs of microservices explicit (cf. Section “Background”) while keeping their definition as concise as possible based on built-in language primitives. That is, the language provides developers with targeted modeling concepts for the definition of microservices, their interfaces, operations, and endpoints. LEMMA service models may import LEMMA domain models to identify the responsibility of a microservice for a certain portion of the application domain [54] and to type operation parameters with domain concepts.
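The following Java sketch illustrates how typing operation parameters with imported domain concepts enables a simple consistency check: every parameter type must be either built-in or resolvable via an import alias. The `alias::Concept` reference notation, the record types, and the set of built-in types are assumptions made for this sketch and do not reflect LEMMA's concrete syntax or implementation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Minimal sketch: checks that operation parameters are typed with imported domain concepts. */
public class ServiceTypeResolution {

    record Parameter(String name, String typeRef) {}

    record Operation(String name, List<Parameter> parameters) {}

    record Microservice(String name, List<Operation> operations) {}

    /** Illustrative subset of built-in types that need no import. */
    static final Set<String> BUILT_IN = Set.of("string", "int", "long", "boolean");

    /**
     * Returns every parameter whose type is neither built-in nor a concept of an imported
     * domain model. Imports map an alias to the names of the concepts it provides; domain
     * types are referenced as "alias::Concept" (a notation assumed for this sketch).
     */
    static List<String> unresolved(Microservice service, Map<String, Set<String>> imports) {
        List<String> problems = new ArrayList<>();
        for (Operation operation : service.operations()) {
            for (Parameter parameter : operation.parameters()) {
                String ref = parameter.typeRef();
                if (BUILT_IN.contains(ref)) {
                    continue;
                }
                int separator = ref.indexOf("::");
                boolean resolved = separator > 0 && imports
                        .getOrDefault(ref.substring(0, separator), Set.of())
                        .contains(ref.substring(separator + 2));
                if (!resolved) {
                    problems.add(operation.name() + "." + parameter.name() + " -> " + ref);
                }
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> imports = Map.of("domain", Set.of("Booking", "Customer"));
        Microservice bookingService = new Microservice("BookingService", List.of(
                new Operation("createBooking", List.of(
                        new Parameter("booking", "domain::Booking"),
                        new Parameter("token", "string"),
                        new Parameter("invoice", "domain::Invoice")))));
        System.out.println(unresolved(bookingService, imports));
        // prints: [createBooking.invoice -> domain::Invoice]
    }
}
```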

LEMMA’s Technology Modeling Language [60] considers technology to constitute a dedicated architecture viewpoint [38] that frames the concerns of technology-savvy stakeholders in MSA engineering, i.e., microservice developers and operators (cf. Fig. 1). The Technology Modeling Language enables those stakeholder groups to construct and apply technology models. A LEMMA technology model modularizes information targeting a certain technology relevant to microservice development and operation, e.g., programming languages, software frameworks, or deployment technologies. Furthermore, it integrates a generic metadata mechanism based on technology aspects [60]. Technology aspects may, for example, cover annotations of software frameworks. LEMMA service and operation models depend on LEMMA technology models (cf. Fig. 1) and import them to apply the contained technology information to, e.g., modeled microservices and containers. In particular, LEMMA’s Technology Modeling Language aims to cope with technology heterogeneity in MSA engineering (cf. Section “Background”) by making technology decisions explicit [74].
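To illustrate the idea of technology aspects, the following Java sketch represents a technology model as a set of named aspects, each restricted to certain kinds of model elements, and checks whether an aspect may be applied to a given element. The element kinds, the technology model `WebFramework`, and its aspects (named after typical framework annotations) are hypothetical and only approximate how such constraints could be expressed.

```java
import java.util.EnumSet;
import java.util.Map;
import java.util.Optional;
import java.util.Set;

/** Minimal sketch: technology aspects that may only be applied to certain model elements. */
public class TechnologyAspects {

    enum ElementKind { MICROSERVICE, OPERATION, PARAMETER, CONTAINER }

    record Aspect(String name, Set<ElementKind> applicableTo) {}

    record TechnologyModel(String name, Map<String, Aspect> aspects) {}

    /** Returns an error message if applying the aspect to an element of the given kind is invalid. */
    static Optional<String> checkApplication(TechnologyModel technology, String aspectName,
                                             ElementKind kind) {
        Aspect aspect = technology.aspects().get(aspectName);
        if (aspect == null) {
            return Optional.of(technology.name() + " declares no aspect " + aspectName);
        }
        if (!aspect.applicableTo().contains(kind)) {
            return Optional.of(aspectName + " is not applicable to elements of kind " + kind);
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // Hypothetical technology model mirroring two framework annotations.
        TechnologyModel framework = new TechnologyModel("WebFramework", Map.of(
                "Async", new Aspect("Async", EnumSet.of(ElementKind.OPERATION)),
                "PathVariable", new Aspect("PathVariable", EnumSet.of(ElementKind.PARAMETER))));
        System.out.println(checkApplication(framework, "Async", ElementKind.OPERATION));
        System.out.println(checkApplication(framework, "Async", ElementKind.CONTAINER));
        System.out.println(checkApplication(framework, "Retry", ElementKind.OPERATION));
    }
}
```

The first check succeeds (an empty `Optional`), whereas the second and third report a wrongly targeted and an undeclared aspect, respectively.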

LEMMA’s Operation Modeling Language [63] addresses the concerns of microservice operators (cf. Fig. 1) w.r.t. the operation viewpoint in MSA engineering. To this end, the language integrates primitives for the concise modeling of microservice containers, infrastructure nodes, and technology-specific configuration. To model the deployment of microservices, LEMMA operation models import LEMMA service models and assign modeled microservices to containers. Additionally, it is possible to express the dependency of containers on infrastructure nodes such as service discoveries or API gateways [5]. By providing microservice operators with a dedicated modeling language we aim to cope with operation challenges in MSA engineering (cf. Section “Background”). First, the Operation Modeling Language defines a unified syntax for the modeling of heterogeneous operation nodes of a microservice architecture. Second, it is flexibly extensible with support for operation technologies, e.g., for microservice monitoring or security, leveraging LEMMA technology models (cf. Fig. 1). Third, operation models may import other operation models, e.g., to compose the models of different microservice teams to centralize specification and maintenance of shared infrastructure components such as service discoveries and API gateways.
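The composition of operation models can be sketched in Java as follows, assuming a simplified representation in which a platform team's operation model defines shared infrastructure nodes and a microservice team's model imports it. The check reports container dependencies that no visible infrastructure node satisfies; the team names, node names, and technology placeholders are hypothetical, and only direct (non-transitive) imports are considered.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

/** Minimal sketch: team-specific operation models importing shared infrastructure nodes. */
public class OperationModelComposition {

    record InfrastructureNode(String name, String technology) {}

    record Container(String name, String deployedService, List<String> dependsOn) {}

    record OperationModel(List<String> importedTeams, List<Container> containers,
                          List<InfrastructureNode> nodes) {}

    /**
     * Returns container dependencies of the given team that are satisfied neither by the team's
     * own infrastructure nodes nor by those of directly imported operation models.
     */
    static List<String> missingDependencies(String team, Map<String, OperationModel> modelsByTeam) {
        OperationModel model = modelsByTeam.get(team);
        Set<String> visibleNodes = new HashSet<>();
        model.nodes().forEach(node -> visibleNodes.add(node.name()));
        for (String importedTeam : model.importedTeams()) {
            OperationModel imported = modelsByTeam.get(importedTeam);
            if (imported != null) {
                imported.nodes().forEach(node -> visibleNodes.add(node.name()));
            }
        }
        List<String> missing = new ArrayList<>();
        for (Container container : model.containers()) {
            for (String dependency : container.dependsOn()) {
                if (!visibleNodes.contains(dependency)) {
                    missing.add(container.name() + " -> " + dependency);
                }
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        OperationModel platform = new OperationModel(List.of(), List.of(), List.of(
                new InfrastructureNode("discovery", "ServiceDiscoveryTech"),
                new InfrastructureNode("gateway", "ApiGatewayTech")));
        OperationModel booking = new OperationModel(List.of("platform"), List.of(
                new Container("bookingContainer", "BookingService",
                        List.of("discovery", "gateway", "tracing"))), List.of());
        Map<String, OperationModel> models = Map.of("platform", platform, "booking", booking);
        System.out.println(missingDependencies("booking", models));
        // prints: [bookingContainer -> tracing]
    }
}
```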

Microservice Architecture Model Processing

LEMMA relies on the notion of intermediate model representation [41] to facilitate the processing of constructed models. To this end, LEMMA integrates a set of intermediate metamodels and intermediate model transformations. The intermediate metamodels define the concepts to which the elements of an intermediate model for a LEMMA model conform. An intermediate model transformation is then responsible for the automated derivation of an intermediate model from a given input LEMMA model.

This approach to model processing yields several benefits. First, intermediate metamodels decouple modeling languages from model processors. Consequently, languages can evolve independently from processors as long as the intermediate metamodels remain stable. For example, it becomes possible to introduce syntactic sugar in the form of additional shorthand notations for language constructs. Second, intermediate metamodels enable the incorporation of language semantics into intermediate models so that model processors need not anticipate them. For instance, LEMMA allows modeling of default protocols for communication types within technology models. In case a service model does not explicitly determine a protocol, e.g., for a microservice, the default protocol of the service’s technology model applies implicitly. The intermediate transformation, which converts a service model into its intermediate representation, makes the default protocol explicit. Thus, model processors can directly rely on this information and need not determine the effective protocol for a microservice themselves.
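The default protocol example can be sketched in Java as a transformation from a source element, whose protocol may be omitted, to an intermediate element, whose protocol is always explicit. The record types, the technology model `Protocols`, and the protocol names are illustrative assumptions rather than LEMMA's intermediate metamodel.

```java
import java.util.Map;
import java.util.Optional;

/** Minimal sketch: an intermediate transformation that makes implicit default protocols explicit. */
public class DefaultProtocolResolution {

    enum CommunicationType { SYNCHRONOUS, ASYNCHRONOUS }

    /** Technology model fragment declaring a default protocol per communication type. */
    record Technology(String name, Map<CommunicationType, String> defaultProtocols) {}

    /** Source model element: the protocol may be left unspecified. */
    record ModeledService(String name, CommunicationType type, Optional<String> protocol,
                          Technology technology) {}

    /** Intermediate model element: the effective protocol is always explicit. */
    record IntermediateService(String name, CommunicationType type, String protocol) {}

    static IntermediateService toIntermediate(ModeledService service) {
        String effective = service.protocol()
                // Fall back to the technology model's default for this communication type.
                .or(() -> Optional.ofNullable(
                        service.technology().defaultProtocols().get(service.type())))
                .orElseThrow(() -> new IllegalStateException(
                        "No protocol for " + service.name() + " and no default in "
                                + service.technology().name()));
        return new IntermediateService(service.name(), service.type(), effective);
    }

    public static void main(String[] args) {
        Technology protocols = new Technology("Protocols", Map.of(
                CommunicationType.SYNCHRONOUS, "rest",
                CommunicationType.ASYNCHRONOUS, "mqtt"));
        ModeledService implicit = new ModeledService("BookingService",
                CommunicationType.SYNCHRONOUS, Optional.empty(), protocols);
        ModeledService explicit = new ModeledService("NotificationService",
                CommunicationType.ASYNCHRONOUS, Optional.of("amqp"), protocols);
        System.out.println(toIntermediate(implicit).protocol()); // prints: rest
        System.out.println(toIntermediate(explicit).protocol()); // prints: amqp
    }
}
```

A processor consuming `IntermediateService` instances can read `protocol()` directly and never needs to consult the technology model itself.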

Next to intermediate model representations, LEMMA also provides a model processing framework, which facilitates the implementation of Java-based model processors, e.g., for microservice developers without a strong background in MDE. To this end, the framework leverages the Inversion of Control (IoC) design approach [42] and realizes it based on the Abstract Class pattern [72] and Java annotations [30]. In addition, the framework implements the Phased Construction model transformation design pattern [47]. That is, the framework consists of several phases, including phases for model validation and code generation. To implement a phase as part of a model processor, developers need to provide an implementation of a corresponding abstract framework class, e.g., AbstractCodeGenerationModule, and augment the implementation with a phase-specific annotation, e.g., @CodeGenerationModule. At runtime, model processors pass control over the program flow to the framework. The framework then (i) parses all given intermediate LEMMA models; (ii) transforms them into object graphs, which abstract from a concrete modeling technology; and (iii) invokes the processor-specific phase implementations with the object graphs. As a result, the added complexities of MDE w.r.t. model parsing and the construction of Abstract Syntax Trees as instantiations of language metamodels [13] remain opaque to model processor developers. Moreover, LEMMA’s model processing framework provides means to develop model processors as standalone executable Java applications. This characteristic is crucial for the integration of model processors into continuous integration pipelines [43], which constitute a component in DevOps-based MSA engineering [6, 9].
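The following Java sketch mimics the described principle in a strongly simplified form: processor developers subclass abstract phase classes, mark them with phase-specific annotations, and hand control to a small framework method that invokes the phases in a fixed order. The names `AbstractCodeGenerationModule` and `@CodeGenerationModule` echo those mentioned above, but all signatures, the `ModelGraph` type, and the validation phase types are assumptions; LEMMA's actual framework API differs and additionally parses intermediate models.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Minimal, simplified sketch of IoC-based phased model processing (not LEMMA's real API). */
public class PhasedProcessingSketch {

    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.TYPE)
    @interface ValidationModule {}

    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.TYPE)
    @interface CodeGenerationModule {}

    /** Technology-independent object graph: service name -> operation names. */
    record ModelGraph(Map<String, List<String>> services) {}

    abstract static class AbstractValidationModule {
        abstract List<String> validate(ModelGraph model);
    }

    abstract static class AbstractCodeGenerationModule {
        abstract Map<String, String> generate(ModelGraph model); // file name -> content
    }

    /** The "framework" owns the control flow: validation first, then code generation. */
    static Map<String, String> run(ModelGraph model, List<Object> modules) {
        for (Object module : modules) {
            if (module.getClass().isAnnotationPresent(ValidationModule.class)
                    && module instanceof AbstractValidationModule validator) {
                List<String> errors = validator.validate(model);
                if (!errors.isEmpty()) {
                    throw new IllegalArgumentException(String.join("; ", errors));
                }
            }
        }
        Map<String, String> files = new TreeMap<>();
        for (Object module : modules) {
            if (module.getClass().isAnnotationPresent(CodeGenerationModule.class)
                    && module instanceof AbstractCodeGenerationModule generator) {
                files.putAll(generator.generate(model));
            }
        }
        return files;
    }

    /** Processor-specific phase implementations supplied by the processor developer. */
    @ValidationModule
    static class OperationsPresent extends AbstractValidationModule {
        @Override
        List<String> validate(ModelGraph model) {
            List<String> errors = new ArrayList<>();
            model.services().forEach((service, operations) -> {
                if (operations.isEmpty()) {
                    errors.add(service + " declares no operations");
                }
            });
            return errors;
        }
    }

    @CodeGenerationModule
    static class InterfaceGenerator extends AbstractCodeGenerationModule {
        @Override
        Map<String, String> generate(ModelGraph model) {
            Map<String, String> files = new TreeMap<>();
            model.services().forEach((service, operations) -> {
                StringBuilder source = new StringBuilder("public interface " + service + " {\n");
                operations.forEach(op -> source.append("    void ").append(op).append("();\n"));
                files.put(service + ".java", source.append("}\n").toString());
            });
            return files;
        }
    }

    public static void main(String[] args) {
        ModelGraph model = new ModelGraph(
                Map.of("BookingService", List.of("createBooking", "cancelBooking")));
        run(model, List.of(new OperationsPresent(), new InterfaceGenerator()))
                .forEach((file, content) -> System.out.println(file + ":\n" + content));
    }
}
```

The processor classes never call each other; the `run` method decides when each phase executes, which is the essence of the inversion of control described above.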

Figure 2 illustrates the interplay of intermediate model transformations, and the implementation and execution of model processors with LEMMA.

Figure 2 comprises two compartments.

The first compartment shows the structure of intermediate model transformations with LEMMA based on the example of a service model constructed with LEMMA’s Service Modeling Language. The service model imports a variety of domain models and technology models constructed with LEMMA’s Domain Data Modeling Language and Technology Modeling Language, respectively. As a preparatory step, the service model is transformed into its intermediate representation by means of LEMMA’s Intermediate Service Model Transformation. Similarly, each imported domain model is transformed into a corresponding intermediate domain model leveraging LEMMA’s Intermediate Domain Model Transformation. In doing so, the transformation algorithm restores the existing import relationships between service models and domain models for their derived intermediate representations. However, the algorithm does not invoke intermediate transformations on technology models imported by service models. Instead, the applied technology information becomes part of intermediate service models so that model processors can directly access it. Therefore, LEMMA treats technology models and model processors as conceptual units. A model processor for a certain technology must be aware of the semantics of the elements in its technology model and be capable of interpreting their application, e.g., within service models.
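The handling of imports during the intermediate transformation can be approximated by the following Java sketch: imports of domain models are redirected to the derived intermediate domain models, whereas imported technology models are not transformed and are merely recorded as inlined. The `.xmi` target extension, the model file names, and the record types are assumptions for illustration only.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal sketch: deriving an intermediate service model while restoring its domain imports. */
public class IntermediateImportRewriting {

    enum ImportKind { DOMAIN, TECHNOLOGY }

    record Import(String alias, String path, ImportKind kind) {}

    record ServiceModel(String path, List<Import> imports) {}

    record IntermediateServiceModel(String path, List<Import> imports,
                                    List<String> inlinedTechnologies) {}

    /** Hypothetical file naming convention: replace the last file extension with ".xmi". */
    static String intermediatePath(String path) {
        return path.replaceFirst("\\.[^./]+$", ".xmi");
    }

    static IntermediateServiceModel transform(ServiceModel model) {
        List<Import> imports = new ArrayList<>();
        List<String> inlined = new ArrayList<>();
        for (Import imp : model.imports()) {
            if (imp.kind() == ImportKind.DOMAIN) {
                // Domain models get their own intermediate model; the import now points to it.
                imports.add(new Import(imp.alias(), intermediatePath(imp.path()), ImportKind.DOMAIN));
            } else {
                // Technology models are not transformed; their applied information is inlined.
                inlined.add(imp.alias());
            }
        }
        return new IntermediateServiceModel(intermediatePath(model.path()), imports, inlined);
    }

    public static void main(String[] args) {
        ServiceModel booking = new ServiceModel("models/booking.services", List.of(
                new Import("domain", "models/booking.data", ImportKind.DOMAIN),
                new Import("java", "models/java.technology", ImportKind.TECHNOLOGY)));
        System.out.println(transform(booking));
    }
}
```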

The second compartment of Fig. 2 concerns model processing. A LEMMA model processor constitutes an implementation that conforms to LEMMA’s model processing framework, which thus provides the processor with capabilities for model parsing and phase-oriented model processing. Typical results from processing service models comprise (i) executable microservice code; (ii) shareable API specifications, e.g., based on OpenAPI; (iii) event schemata, e.g., for Apache Avro; and (iv) static complexity and cohesion metrics applicable to MSA [3, 8, 24, 35, 37].
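As an example of metric computation on modeled services, the following Java sketch derives two simple structural counts per service from a dependency map: the number of other services that depend on it and the number of services it depends on. These fan-in and fan-out counts are chosen for illustration and are not necessarily among the metrics cited above; the input map is a hypothetical stand-in for information a processor could extract from intermediate service models.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;
import java.util.TreeSet;

/** Minimal sketch: simple coupling counts derived from modeled service dependencies. */
public class ServiceCouplingMetrics {

    record Coupling(long consumers, long providers) {}

    /** For each service, counts the other services depending on it and those it depends on. */
    static Map<String, Coupling> coupling(Map<String, Set<String>> dependsOn) {
        Set<String> services = new TreeSet<>(dependsOn.keySet());
        dependsOn.values().forEach(services::addAll); // include pure providers
        Map<String, Coupling> result = new TreeMap<>();
        for (String service : services) {
            long consumers = dependsOn.entrySet().stream()
                    .filter(e -> !e.getKey().equals(service) && e.getValue().contains(service))
                    .count();
            long providers = dependsOn.getOrDefault(service, Set.of()).stream()
                    .filter(p -> !p.equals(service))
                    .count();
            result.put(service, new Coupling(consumers, providers));
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, Set<String>> dependsOn = Map.of(
                "order", Set.of("payment", "customer"),
                "payment", Set.of("customer"));
        coupling(dependsOn).forEach((service, c) -> System.out.println(
                service + " consumers=" + c.consumers() + " providers=" + c.providers()));
        // prints:
        // customer consumers=2 providers=0
        // order consumers=0 providers=2
        // payment consumers=1 providers=1
    }
}
```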

DevOps-Related Challenges in Microservice Architecture Engineering of SMOs

In this section, we present an empirical analysis of microservice development processes (cf. Section “Organizational Aspects”) in SMOs with the goal of identifying SMO-specific challenges in microservice engineering. For this purpose, we perform a secondary analysis [36] of transcribed qualitative interviews from one of our previous works [75]. Our analytical procedure specifically aims to identify challenges and obstacles during the development process.

Study Design

The study from which the dataset emerged is a comparative multi-case study [84]. The aim of the study was to gain exploratory insights into the development processes of SMOs. To this end, in-depth interviews were conducted on-site in 2019 with five software architects, each from a different company, and afterwards transcribed. The interviews were semi-structured and covered the areas of (i) applied development process; (ii) daily routines; (iii) meeting formats; (iv) tools; (v) documentation; and (vi) knowledge management. Participants were recruited from our research group’s existing contacts with SMOs. Furthermore, we constrained participant selection to software architects at professional or senior level, and to SMOs that develop microservice systems with 100 or fewer people.

Dataset

As depicted in Table 1, the dataset includes transcripts and derived paraphrases covering six different cases (Column C) of microservice development processes in SMOs. In total, we conducted five in-depth interviews (Column I) with software architects, with interview I4 covering two cases.

As shown in Table 1, we classify the cases as greenfield (new development from scratch), templated greenfield (new development based on a legacy system), and migration (transformation of a monolithic legacy system into an MSA-based system) (Column Type). We further categorize each development process by the domain of the microservice application under development (Column Domain). The number of microservices present in the application at the time of the interview (Column #Services) and the numbers of people (Column #Ppl) and teams (Column #Teams) involved vary from case to case. Case 3 is a special case: although there are only two official teams, short-term teams are formed depending on the scope of customization needed per customer. This results in up to five teams working on the application simultaneously at certain points in time. In all cases, the interviewees stated that they apply the Scrum framework [68] for internal team organization. By contrast, in no case did the collaboration across teams follow a particular formal methodology or model (cf. Section “Organizational Aspects”). In addition, all interviewees reported that they strive for a DevOps culture [21] in their SMOs. A detailed description of the cases can be found in our previous work [75].

Analytical Procedure

For the analysis of the dataset, we used the Constant Comparison method [70]. That is, we rescreened the existing paraphrases and marked the challenges and solutions that our interviewees described with corresponding codes for challenges, obstacles, and solutions. We then compared the coded statements across all cases and combined similar statements into higher-level challenges.

Study Results and Challenges

Our analysis of the dataset revealed several challenges common to all cases. Comparable to other empirical studies, e.g., [81] or [34], our participants reported high technical complexity and high training effort in a microservice development process compared to a monolithic approach. Other challenges we discovered that are in line with existing literature, e.g., [29], concern the slicing of the business domain into individual microservices and the most suitable granularity of a microservice (cf. Section “Background”).

In the following, we elaborate in more detail on two challenge areas (CAs) that we found to be of particular concern for SMOs adopting a DevOps culture.

CA1: Developing, Communicating, and Stabilizing a Common Architectural Understanding

Developing a common understanding of the architecture components of an application is essential for developing software in an organization that follows the DevOps paradigm [6]. In particular, this includes an understanding of the goals and communication relationships of architecture components. The interviewees also consider a shared understanding of the architecture among those involved in development to be an important prerequisite for granting teams autonomy and trust.

For cases C2, C4, and C5 (cf. Table 1), which each comprise approx. ten people and two to three teams, the practices to achieve this understanding are Scrum Dailies [68] and regular developer meetings about the current status of the architecture. However, when more people are involved, achieving a common understanding is reported to be very challenging. For cases C1, C3, and C6, system development initially started with fewer people, and as the software product became successful, more people and teams were added. Regarding this development and the common architectural understanding, I1 states that “From one agile team to multiple agile teams is a huge leap, you have to regularly adapt and question the organization. [...] you need a common understanding of the architecture and a shared vision of where we want to go [...], we are working on that every day and I don’t think we’ll ever be done.”

A strategy that we observed in C1, C3, and C6 to create this common architectural understanding is the introduction of new meeting formats. However, this contradicts a key aspect of the DevOps culture, namely to minimize coordination across teams as much as possible [6]. Our interviewees also experience the resulting problem: the more people and teams are involved in exchanging knowledge to develop an architectural understanding, the more time-consuming the exchange becomes. In the case of C6, this has led to the discontinuation of comprehensive knowledge exchanges due to the excessive time involved. The teams now meet at the cross-team level only to discuss technologies, e.g., a particular authentication framework or a new programming language. We interpret this development as a step towards the introduction of horizontal knowledge exchange formats such as Guilds in the Spotify Model [71]. As a result, C6 is currently challenged with building a common understanding of the architecture solely through these technology-focused discussions. This problem area is also evident in the data of other empirical studies. For example, Bogner et al. [9] report on the creation of numerous development guidelines by a large development organization to enforce a common architectural understanding. However, developing guidelines requires that architecture decisions, technology choices, and use cases are documented [33], a practice we encountered only at C4 and C5.

In terms of technical documentation, the teams in all six cases use Swagger to document the microservices’ APIs. Other documentation, such as a wiki system or UML diagrams, is either not used or not kept up to date. In almost all cases, access to the API documentation is not regulated centrally but is instead provided by the respective team upon explicit request, e.g., by e-mail. Only C3 has extensive, organization-wide technical documentation, as described by I3: “Swagger is a good tool, but of course this is not completely sufficient, which is why we have an area where the entire concept of the IT platform [...] is explained. We also have a few tutorials.”

Summarizing CA1, we suspect that SMOs are particularly affected by the challenge of establishing a common architectural understanding as part of a successful DevOps culture. This may be due to a mostly volatile organization in which the number of developers and software features often grows as development progresses, as well as the reported difficult transition from a single agile team to multiple ones. Documenting architecture decisions, deriving appropriate guidelines, and providing accessible technical documentation are key factors for an efficient development process. These factors become more relevant the more teams and developers are involved [50] and are therefore often not considered by SMOs early in the development process.

CA2: Complexity of Deployment Techniques and Tools

A recurrent challenge we identified is how to handle the operation of microservice applications within the development process. While the literature, e.g., [53], recommends cross-functional teams following the DevOps paradigm for implementing microservice architectures, in each of the researched cases we found specialized units for operating microservices instead of operators being part of the microservice teams. In C1, C2, and C6, we encountered entire teams solely dedicated to operational aspects. In all cases, the development process included a handover of developed services to these specialized units for operating the microservice application. Although most interviewees were aware that this contradicts the ownership principle of microservices (cf. Section “General”), and all of them stated that they try to establish a pure DevOps approach without specialized teams, the effort to learn the basics of the necessary operational aspects is perceived as high. In this regard, I2 comments: “The complexity (note: of cloud-based deployment platforms) is already very, very high, you know. I would say that each of these functions in such a platform is a technology in itself that you have to learn.” In contrast to operations, the SMOs succeed in including other professions, such as UI/UX, in their cross-functional teams. Our data indicates two main reasons for this difference. First, operational technologies are inherently complex, which creates a high hurdle for learning them and integrating them into the microservice development process. Second, a DevOps approach would require transferring this knowledge not only into specialized units but into each individual microservice team.

Summarizing CA2, deployment and operation in the studied SMOs are not the responsibility of the teams that own the respective microservices. This seems to be due to the complexity of operation technologies and the associated learning effort. This might be a particular issue for SMOs because of their challenging environment, in which few resources are available to substitute for, e.g., a colleague who needs to learn an operation technology.

Case Study

In this section, we present a case study that we use in the following sections to illustrate and validate our model-driven workflow (cf. Section “A Model-Driven Workflow for Coping with DevOps-Related Challenges in Microservice Architecture Engineering”) for addressing the challenges identified in Section “DevOps-Related Challenges in Microservice Architecture Engineering of SMOs”. We decided to use a case study to show the applicability of our approach because non-disclosure agreements prevent us from presenting it in the context of the explored SMO cases (cf. Section “Dataset”). Therefore, we selected an open source microservice architecture as case study, which matches the design and implementation of the explored SMO cases w.r.t. the scope of our approach. More precisely, the case study (i) employs Swagger for API documentation (cf. Section “Study Results and Challenges”), (ii) uses synchronous and asynchronous communication, (iii) is mainly based on the Java programming language, and (iv) comprises a number of software components that matches the smaller SMOs in our qualitative analysis (cf. Section “Dataset”).

The case study is based on a fictional insurance company called Lakeside Mutual [76]. The application serves to exemplify different API patterns and DDD for MSA. It comprises several micro-frontends [58], i.e., semi-independent frontends that invoke backend functionality, and microservices centered around the insurance sector, e.g., customer administration, risk management, and customer self-administration functions. The application’s source code and documentation are publicly available on GitHub.Footnote 7

Figure 3 depicts the architectural design of the Lakeside Mutual application. Overall, it consists of five functional backend microservices, each of which is aligned with a micro-frontend.

Except for the Risk Management Server, all microservices are implemented in JavaFootnote 8 using the Spring framework.Footnote 9 A micro-frontend communicates with its aligned microservice using RESTful HTTP [27]. Additionally, the Risk Management Client and Risk Management Server communicate via gRPC. For internal service-to-service communication, the software system relies on synchronous RESTful HTTP as well as on asynchronous AMQP messaging over an ActiveMQ message broker. The Customer Management Backend and Customer Core services also provide API documentation generated with Swagger.Footnote 10

Besides the functional microservices, the Lakeside Mutual application also uses infrastructural microservices. The Eureka Server implements a Service Registry [64] to enable loose coupling between microservices and their different instances. For monitoring purposes, the Spring Boot Admin service provides a monitoring interface for the health status of individual services and the overall application.

A Model-Driven Workflow for Coping with DevOps-Related Challenges in Microservice Architecture Engineering

This section proposes a model-driven workflow based on LEMMA (cf. Section “Language Ecosystem for Modeling Microservice Architecture”) to cope with the challenges identified in Section “DevOps-Related Challenges in Microservice Architecture Engineering of SMOs”. More precisely, the workflow provides a common architectural understanding of a microservice application (cf. Challenge CA1 in Section “Study Results and Challenges”) and reduces the complexity of deploying and operating microservice architectures (Challenge CA2).

In the following subsections, we first present the design of the workflow (cf. Section “LEMMA-Based Workflow for Coping with DevOps Challenges”). Next, we describe the components that we have added to LEMMA to support the workflow. These components include (i) interoperability bridges between OpenAPI and LEMMA models (CA1; cf. Section “Derivation of Microservice Models from API Documentations”); (ii) an extension to the Service Modeling Language that allows the import of remote models (CA1; cf. Section “Assembling a Common Architecture Model from Distributed Microservice Models”); (iii) a model processor to visualize microservice architectures (CA1; cf. Section “Visualization of Microservice Architecture Models”); (iv) an enhancement of the Operation Modeling Language (CA2; cf. Section “Enhancing Distributed Microservice Models with Deployment Infrastructure Models”); and (v) code generators for microservice deployment and operation (CA2; cf. Section “Generating Code from Distributed Deployment Infrastructure Models”).

We present these prototypical components in detail. They include deriving models from API documentation (cf. Section “Derivation of Microservice Models from API Documentations”) and assembling microservice models into an architecture model (cf. Section “Assembling a Common Architecture Model from Distributed Microservice Models”) as a means to build a common architectural understanding (CA1). They also comprise means to enrich microservice models with deployment infrastructure models (cf. Section “Enhancing Distributed Microservice Models with Deployment Infrastructure Models”) so that SMOs can handle operational aspects more easily (CA2).

To ensure replicability of our results, we provide a GitHub repositoryFootnote 11 that contains documentation on how to set up LEMMA and the presented extensions. It further contains all generated artifacts as well as the sources and scripts to rerun the generation. Finally, it includes a manually constructed set of LEMMA models that represents all Java-based microservices of the Lakeside Mutual case study (cf. Section “Case Study”).

LEMMA-Based Workflow for Coping with DevOps Challenges

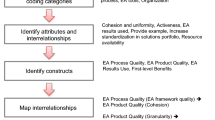

Figure 4 shows the conceptual elements and their relationships that underlie the design of our LEMMA-based workflow for coping with the DevOps challenge areas (cf. Section “DevOps-Related Challenges in Microservice Architecture Engineering of SMOs”).

Overview of the concepts within the workflow and their interrelationships represented as a UML class diagram [55]

An Organization includes multiple DevOps Teams, each responsible for one or more Microservices (cf. Section “Organizational Aspects”). The sum of all microservices forms the Microservice Application that is developed by the organization. Associated with a microservice is a corresponding documentation of its interfaces (API Documentation). For each microservice owned by it, the team constructs a Set of LEMMA Views as a model representation (cf. Section “Language Ecosystem for Modeling Microservice Architecture”). The sum of all LEMMA models forms an Architecture Model which describes the system’s architecture. This model can be used by the organization, e.g., to gain insight into existing dependencies between the microservices involved.
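To make the relationships of Fig. 4 concrete, the following minimal Python sketch encodes them as plain data classes. All class and attribute names are our own illustrative choices and are not part of LEMMA; the two "sum" relationships from the text are expressed as derived collections.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class LemmaViews:
    """Model representation of one microservice (domain, service, operation view)."""
    domain_model: str
    service_model: str
    operation_model: str


@dataclass
class Microservice:
    name: str
    api_documentation: str  # location of the service's API documentation
    views: LemmaViews


@dataclass
class DevOpsTeam:
    name: str
    microservices: list[Microservice] = field(default_factory=list)


@dataclass
class Organization:
    teams: list[DevOpsTeam] = field(default_factory=list)

    def microservice_application(self) -> list[Microservice]:
        """The application is the sum of all microservices owned by all teams."""
        return [ms for team in self.teams for ms in team.microservices]

    def architecture_model(self) -> list[LemmaViews]:
        """The architecture model is the sum of the LEMMA views of all microservices."""
        return [ms.views for ms in self.microservice_application()]
```

Keeping the architecture model as a derived view, rather than a separately maintained artifact, mirrors the idea that it emerges from the teams' individual models.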

Based on the conceptual elements and their relationships, Fig. 5 shows our model-driven workflow for DevOps-based microservices development in SMOs as a UML activity diagram [55].

Proposed workflow for DevOps teams for model-driven microservices development represented as a UML activity diagram [55]

We depict the workflow from the perspective of a single DevOps team including all steps required for the development of a new microservice. When incremental changes are made to individual aspects of a microservice, only the steps affected by the changes need to be performed.

The process starts with the planning of the development. The team decides whether to follow a code-first or model-first approach. We support both variants to allow the teams autonomy according to the DevOps paradigm [6].

Code-First Approach Here, the team first implements the microservice consisting of structure and behavior. Based on the finished implementation, the team creates an API Documentation, which can be done manually or automatically with tools such as Swagger.Footnote 12 Using the API documentation, a LEMMA domain model and a LEMMA service model are automatically derived (cf. Section “Derivation of Microservice Models from API Documentations”) and, if necessary, refined by the team. In parallel, the team creates a LEMMA operation model, since the information required for this kind of model cannot be derived from the API documentation (cf. Section “Derivation of Microservice Models from API Documentations”).

Model-First Approach Alternatively, the team can decide to first model the structure and operation of the microservice using LEMMA. In the subsequent implementation activity, the structural aspects can be generated based on the previously constructed models and only the manual implementation of the behavior is necessary (cf. Section “Language Ecosystem for Modeling Microservice Architecture”).

Regardless of which of the two approaches was chosen, in the end LEMMA domain, service, and operation models are available and describe the Dev and Ops aspects of the microservice under development.

The operation model is then used to Generate a Deployment Specification for a container-based environment which mitigates the complexity of the operation (cf. Section “Generating Code from Distributed Deployment Infrastructure Models”). The team refines this specification as needed and then deploys the microservice. In parallel, the models generated during the workflow are sent to a central model repository and made available to the entire organization where they can be used by other teams to gain insight and a common understanding of the application’s architecture, e.g., by visualizing its structure.
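The two branches of the workflow and their common continuation can be summarized as an ordered list of activities. The following Python sketch is only an illustration: the activity labels paraphrase the text rather than reproduce the labels of Fig. 5, and activities that run in parallel in the activity diagram are listed sequentially for simplicity.

```python
from __future__ import annotations
from enum import Enum


class Approach(Enum):
    CODE_FIRST = "code-first"
    MODEL_FIRST = "model-first"


def workflow_steps(approach: Approach) -> list[str]:
    """Return the activities for developing a new microservice, in order."""
    if approach is Approach.CODE_FIRST:
        steps = [
            "Implement structure and behavior",
            "Create API documentation",
            "Derive LEMMA domain and service models",
            "Refine generated models",
            "Model operation",  # cannot be derived from API documentation
        ]
    else:
        steps = [
            "Model domain, services, and operation",
            "Generate structural code",
            "Implement behavior",
        ]
    # Both branches converge: domain, service, and operation models now exist.
    steps += [
        "Generate deployment specification",
        "Refine specification and deploy",
        "Publish models to central model repository",
    ]
    return steps
```

When only individual aspects of a microservice change, a team would execute just the affected subset of these activities.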

Based on the use of model transformations and code generation steps, we argue that the workflow can be applied with almost the same resources as the current development processes of the individual DevOps teams that we explored as cases in the empirical study (cf. Section “DevOps-Related Challenges in Microservice Architecture Engineering of SMOs”). This applies to both the code-first and the model-first approach. At its core, the code-first approach relies on the same development steps as the non-model-based processes in the individual teams, i.e., implementing the structure and behavior of a microservice, so that even teams without experience in MDE can adopt the workflow in a non-invasive way. Besides the actual implementation, the workflow provides a service’s description in the form of LEMMA viewpoint models, which can serve as a basis for communication and knowledge transfer to create a common architectural understanding (CA1; cf. Section “Study Results and Challenges”) in the organization. This can be used to, e.g., accelerate verbal coordination processes between teams, improve the documentation, or identify microservice bad smells [78]. In addition, by using LEMMA operation models and generating deployment specifications, it becomes easier for the teams of an SMO to address the Ops aspects themselves without passing on the responsibility for deployment to another unit (CA2; cf. Section “Study Results and Challenges”). This enables teams to follow the ownership principle of MSA (cf. Section “General”).

Derivation of Microservice Models from API Documentations

To enable the model-driven workflow with sophisticated modeling support by LEMMA, we extended the ecosystem with the ability to derive LEMMA data and service models from API documentation. In particular, our extension targets API documentation that conforms to the OpenAPI SpecificationFootnote 13 (OAS) [56]. OAS defines a standardized format for describing RESTful APIs. One of the most popular tools implementing OAS is Swagger, which was used by all SMOs in the qualitative study (cf. Section “DevOps-Related Challenges in Microservice Architecture Engineering of SMOs”).

The transformation of OAS files into LEMMA files can be classified as an interoperability issue in which OAS models are to be converted into LEMMA models. We therefore applied the interoperability bridge process proposed by Brambilla et al. [10]. Figure 6 shows the applied interoperability bridge process.

First, using the Swagger parsing framework,Footnote 14 an OAS-conformant API model in the YAML [7] or JSON [22] format is converted into an in-memory API Model. We then perform three model transformations in which the information from the API Model is transformed into a Domain Data Model, a Service Model, and a Technology Model, each of which corresponds to its respective LEMMA metamodel (cf. Section “Language Ecosystem for Modeling Microservice Architecture”). Table 2 describes which OAS objectsFootnote 15 are mapped to which LEMMA model kind.

To serialize the in-memory LEMMA models as files, we extended LEMMA with extractors [10] for technology, service, and data models.

Listing 1 and Listing 2 illustrate the application of the process.

Listing 1 shows an excerpt of the API documentation file of the Customer Core microservice from the case study (cf. Section “Case Study”). In detail, the listing presents the OAS description for an HTTP GET request on the path cities/{postalCode} (Lines 2 and 3). This includes, e.g., the unique id getCitiesForPostalCodeUsingGET (Line 6) of the operation, the incoming parameters (Lines 8–14), and the information that a response returns an object based on the CitiesResponseDto schema (Lines 15–20). The excerpt shows only the response for HTTP status code 200 (Line 16). OAS also offers the possibility to define responses for other status codes, e.g., HTTP status code 404, but these are currently not considered in the transformation to LEMMA in our prototypical implementation.

Listing 2 shows the LEMMA service model automatically transformed from the CustomerCore OAS model in Listing 1. First, the results of the other transformations are imported into the service model. This includes the previously transformed LEMMA domain data model customerCore.data resulting from the OAS schemas (Lines 1 and 2), which contains all data structures such as CitiesResponseDto, and the technology model OpenApi.technology (Line 3), which contains, e.g., the OpenAPI-specific primitive data types and the media types used in the CustomerCore OAS model. Line 5 enables the OpenApi technology for the com.lakesidemutual.customercore.CustomerCore microservice, whose definition starts in Lines 6 and 7. The microservice comprises an interface named cityReferenceDataHolder, which was derived from the associated tag in the OAS model (Line 8). The interface consists of the operation getCitiesForPostalCodeUsingGET, named after the OAS operationId (Lines 18–22). The operation’s comment section (Lines 9–14) is populated with the summary information from OAS. The OAS path is added as an endpoint (Line 15), and the operation is classified as an HTTP GET request (Line 17). The OAS response associated with HTTP status code 200 is modeled as an OUT parameter named returnValue (Lines 20 and 21).
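The service-related part of this mapping can be illustrated on a parsed OAS document. The following Python sketch is not the Java-based transformation of our extension; it only reproduces the mapping described above (tag to interface, operationId to operation name, summary to comment, path to endpoint, HTTP method, 200 response to an OUT parameter returnValue) into plain dictionaries instead of LEMMA syntax. The camel-case rule for deriving interface names from tags is an assumption, and input parameters are omitted.

```python
from __future__ import annotations

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def to_camel_case(tag: str) -> str:
    """Assumed naming rule: 'city-reference-data-holder' -> 'cityReferenceDataHolder'."""
    parts = [p for p in tag.replace("_", "-").split("-") if p]
    return parts[0] + "".join(p[:1].upper() + p[1:] for p in parts[1:])


def response_type(response: dict) -> str | None:
    """Name of the schema referenced by a response, if any."""
    schema = response.get("schema")  # Swagger 2.0 layout
    if schema is None:  # OpenAPI 3.x layout: content -> media type -> schema
        for media_type in response.get("content", {}).values():
            schema = media_type.get("schema")
            break
    if not schema or "$ref" not in schema:
        return None
    return schema["$ref"].rsplit("/", 1)[-1]  # '#/definitions/X' -> 'X'


def derive_interfaces(oas: dict) -> dict[str, list[dict]]:
    """Group OAS operations into interfaces, one interface per (first) tag."""
    interfaces: dict[str, list[dict]] = {}
    for path, path_item in oas.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:  # skip e.g. path-level 'parameters'
                continue
            interface = to_camel_case(operation.get("tags", ["default"])[0])
            ok = operation.get("responses", {}).get("200", {})  # only status 200
            returns = response_type(ok)
            interfaces.setdefault(interface, []).append({
                "name": operation["operationId"],
                "comment": operation.get("summary", ""),
                "endpoint": path,
                "http_method": method.upper(),
                "out_parameter": ("returnValue", returns) if returns else None,
            })
    return interfaces
```

Rendering these dictionaries as LEMMA service model text would be a separate, purely syntactic step.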

Assembling a Common Architecture Model from Distributed Microservice Models

Microservices can interact with and depend on each other to realize coarse-grained functionality [54]. In the case study (cf. Section “Case Study”), such a relationship is found between the microservices Customer Management Backend and Customer Core. Such dependencies cannot be derived from an API documentation, since its purpose is to describe the provided interface of a service and not the invocation of functionality provided by other architecture components. However, these dependencies are essential to be able to assemble and assess an architecture model and to raise a common architectural understanding across the whole organization (cf. Section “Study Results and Challenges”). Therefore, within the workflow (cf. Section “LEMMA-Based Workflow for Coping with DevOps Challenges”), the dependencies should be added manually by the teams in the LEMMA models. This can be done during the Model Services activity when using the model-first approach and during the Refine Generated Models activity when using the code-first approach.

However, LEMMA service models were originally only able to depend on other LEMMA service models that are accessible in the local file system. Therefore, to allow teams to express interaction dependencies with the microservices of other teams, we have extended LEMMA with external service imports. Listing 3 shows the service model of the Customer Management Backend microservice from the case study, whose imports illustrate both alternatives. The syntax for importing locally accessible service files is shown in Lines 3 and 4. Alternatively, the import in Lines 6–8 exemplifies the mechanism for external imports.

As soon as the Eclipse IDE detects such an external import in the model, it offers a quickfix that automatically downloads the referenced file and, if it is OAS-compliant API documentation, starts a corresponding transformation to LEMMA (see Section “Microservice Architecture Model Processing”). This also makes it possible to model a dependency to a service of another team, even if this team does not yet provide its own model but only API documentation.
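The decision behind the quickfix can be sketched as follows. The Python code assumes that external imports are identified by an http(s) URI and that an OAS document is recognized by its top-level openapi (OpenAPI 3.x) or swagger (Swagger 2.0) field; the action names are hypothetical, and the actual download is left out.

```python
from __future__ import annotations
import json
from urllib.parse import urlparse


def is_external_import(uri: str) -> bool:
    """Assumption: http(s) URIs denote external imports, anything else a local file."""
    return urlparse(uri).scheme in ("http", "https")


def is_oas_document(text: str) -> bool:
    """OpenAPI 3.x documents declare a top-level 'openapi' field,
    Swagger 2.0 documents a top-level 'swagger' field."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        # Not JSON: treat it as YAML and look for the field at the top level.
        return any(line.startswith(("openapi:", "swagger:")) for line in text.splitlines())
    return isinstance(data, dict) and ("openapi" in data or "swagger" in data)


def import_action(uri: str, downloaded_text: str | None = None) -> str:
    """Decide how to handle an import (action names are illustrative)."""
    if not is_external_import(uri):
        return "resolve-local"
    if downloaded_text is None:
        return "download"
    # Downloaded OAS documentation is transformed; a LEMMA model is used directly.
    return "transform-oas-to-lemma" if is_oas_document(downloaded_text) else "use-lemma-model"
```

This distinction is what allows a team to depend on a service of another team that only publishes API documentation.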

Since LEMMA models are textual [63] and the extension makes it possible to import external sources, the model files of the different teams can be managed centrally as an architecture model by a version control system such as Git and thus be integrated into CI/CD pipelines, e.g., by a Git hookFootnote 16 that copies the models to a central model repository with each release of the microservice.
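The copy step that such a hook or CI job could perform is sketched below in Python. The function name publish_models and the layout of one directory per microservice in the central repository are hypothetical; the file extensions are those of the model files used in this article’s listings. Git itself offers no release-specific hook, so how the script is triggered (e.g., by a CI job on tagged commits) is left open.

```python
from __future__ import annotations
import shutil
from pathlib import Path

# Extensions of the LEMMA model files appearing in this article's listings.
MODEL_SUFFIXES = {".data", ".services", ".operation", ".technology"}


def publish_models(service_repo: Path, central_repo: Path, service_name: str) -> list[Path]:
    """Copy all LEMMA model files of one microservice into its folder of a central repository."""
    target = central_repo / service_name
    target.mkdir(parents=True, exist_ok=True)
    copied: list[Path] = []
    for model in sorted(service_repo.rglob("*")):
        if model.is_file() and model.suffix in MODEL_SUFFIXES:
            destination = target / model.name  # files with equal names overwrite each other
            shutil.copy2(model, destination)
            copied.append(destination)
    return copied
```

Committing the central repository after the copy would then make the new model versions available to all teams.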

Visualization of Microservice Architecture Models

To enable visualization of the architecture using LEMMA (cf. Section “LEMMA-Based Workflow for Coping with DevOps Challenges”), we have developed the LEMMA Visualizer.Footnote 17 It transforms several LEMMA intermediate service models (cf. Section “Microservice Architecture Model Processing”) into a single graphical representation using a model-to-text transformation [13]. In its current form, the visualizer is a standalone executable JAR file that takes the paths to several service models as input and a target path for the generated visualization as command-line arguments. The steps of the transformation are depicted in Fig. 7.

First, one or more intermediate service model files are passed to the LEMMA Model Processor (cf. Section “Microservice Architecture Model Processing”). These are converted to their in-memory representation and then processed. Using the JGraphT framework [51], we create a directed graph that we populate with the microservices found during the processing of the intermediate models as vertices and any existing imports of other services as edges. Microservices that neither are imported nor import another service, and thus have no edge, are added as isolated vertices. Then we use JGraphT’s DOTExporter to convert the graph into a textual representation based on the DOT language.Footnote 18 During the export, we enrich the DOT representation with attributes that describe the appearance of the vertices and edges for the later visualization, e.g., we add coloring and describe vertex shapes. Finally, we use GraphViz [23] to generate an image of the graph’s DOT representation that represents the system architecture in the form of a box-and-line diagram as a Portable Network Graphics (.png) file. The visualizer supports different levels of detail for displaying the attributes of microservice vertices. A resulting architecture image that shows the functional microservices of the case study is shown in Fig. 8. To generate the image, the visualizer was configured to render with a detail level that shows interfaces but not operations.
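The graph construction and DOT export can be illustrated without JGraphT. The following Python sketch is a stand-in for the Java implementation, not a port of it: it takes a mapping from each microservice to the services it imports, builds vertices and directed edges, keeps isolated vertices, and writes DOT with simple appearance attributes. Apart from the Customer Management Backend to Customer Core dependency from the case study, the service names are hypothetical.

```python
from __future__ import annotations


def build_graph(imports: dict[str, set[str]]) -> tuple[set[str], set[tuple[str, str]]]:
    """Vertices are microservices; an edge (a, b) means that a imports b."""
    vertices = set(imports)  # services without imports become isolated vertices
    edges: set[tuple[str, str]] = set()
    for source, targets in imports.items():
        for target in targets:
            vertices.add(target)
            edges.add((source, target))
    return vertices, edges


def to_dot(vertices: set[str], edges: set[tuple[str, str]], fill_color: str = "lightblue") -> str:
    """Export the graph as DOT, enriched with attributes for its appearance."""
    lines = ["digraph architecture {",
             f'  node [shape=box, style=filled, fillcolor="{fill_color}"];']
    for vertex in sorted(vertices):
        lines.append(f'  "{vertex}";')
    for source, target in sorted(edges):
        lines.append(f'  "{source}" -> "{target}";')
    lines.append("}")
    return "\n".join(lines)
```

The resulting text could be rendered with the Graphviz command line, e.g., `dot -Tpng architecture.dot -o architecture.png`.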

Generated visualization of the architecture of the case study (cf. Section “Case Study”)

Enhancing Distributed Microservice Models with Deployment Infrastructure Models

In this subsection, we elaborate on the creation of an operation model (cf. Section “Case Study”) that complements the previously created data and service models to form a complete set of LEMMA views describing a microservice (cf. Section “LEMMA-Based Workflow for Coping with DevOps Challenges”). The operation model is constructed using LEMMA’s Operation Modeling Language (OML; cf. Section “Microservice Architecture Model Construction”). This approach specifically addresses the Operation and Deployment Stages of MSA (cf. Sections “Operation Stage” and “Development Stage”) and therefore addresses CA2 (cf. Section “Study Results and Challenges”) by providing functionality for describing the deployment of microservice models, including their dependencies on infrastructural services, e.g., API gateways, service discoveries, and databases. Additionally, OML abstracts from concrete technology-specific deployment configurations and thus reduces the overall complexity of deploying a microservice application.

To enable DevOps teams in SMOs to take full ownership of their respective services, which removes the need for specialized teams dedicated to operating the whole microservice application (cf. Section “DevOps-Related Challenges in Microservice Architecture Engineering of SMOs”), we have extended OML with means to import other operation models as nodes and thus to nest operation specifications. That is, teams do not have to maintain individual models for infrastructure microservices but can use the new mechanism to import the operation model, e.g., of a Eureka service discovery (cf. Section “Case Study”), from a central model repository (cf. Section “Assembling a Common Architecture Model from Distributed Microservice Models”).

OML now enables the DevOps team to describe the deployment of a microservice and all necessary dependencies. Listing 4 shows an excerpt of the operation model for the deployment of the CustomerCore microservice. Lines 1 and 2 of the listing import the customerCore.services model derived from the service’s OpenAPI specification (cf. Section “Derivation of Microservice Models from API Documentations”). The following Lines 3–6 import the technology for service deployment. The container_base technology model uses DockerFootnote 19 and KubernetesFootnote 20 for service deployment. Lines 7 and 8 illustrate the new possibility to import other operation models as nodes by importing the eureka.operation model, which describes the deployment of a service discovery based on the EurekaFootnote 21 technology.

Lines 10 and 11 assign the technology to the CustomerCoreContainer (Line 12). The container runs the deployed microservices and clusters deployment-relevant information, e.g., dependencies on infrastructural components such as databases, service-specific configurations, and protocol-specific endpoints. For this purpose, Lines 12–14 create the CustomerCoreContainer and assign the Kubernetes deployment technology, which is imported from the container_base technology model. The deployment of the CustomerCore microservice via the container is shown in Lines 15 and 16. The following Lines 17–25 show the dependency on the ServiceDiscovery imported from the eureka.operation model. In detail, Line 19 starts the service-specific configuration of the CustomerCore microservice by specifying the eurekaUri, which configures the dependency on the ServiceDiscovery. The CustomerCore microservice exposes its functionality via a REST endpoint (Lines 21–23).

Besides modeling the deployment of microservice-specific configurations, OML also enables the DevOps team to specify infrastructural components’ deployment, e.g., service discoveries and databases. Listing 5 describes the deployment of the ServiceDiscovery. Lines 1–3 import the containerbase.technology and eureka.technology models. The models include the specification of the technology used for the deployment of the ServiceDiscovery. Lines 4 and 5 import the CustomerCore operation model (cf. Listing 4) because the CustomerCore microservice uses the service discovery. Lines 7 and 8 assign the imported technology to the ServiceDiscovery.
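Since Listing 4 imports eureka.operation and Listing 5 in turn imports the CustomerCore operation model, operation model imports can be mutual. The following Python sketch shows one way a tool could resolve such nested imports without looping forever: a depth-first traversal with a visited set. The import lists and file names are illustrative (the technology model name is normalized to container_base.technology), and the algorithm is our own choice rather than LEMMA’s actual resolution strategy.

```python
from __future__ import annotations


def resolve_imports(root: str, imports_of: dict[str, list[str]]) -> list[str]:
    """Return all models reachable from root in depth-first preorder, each exactly once.

    The visited set guards against cycles such as
    customerCore.operation <-> eureka.operation.
    """
    order: list[str] = []
    visited: set[str] = set()
    stack = [root]
    while stack:
        model = stack.pop()
        if model in visited:
            continue
        visited.add(model)
        order.append(model)
        # Push in reverse so that imports are visited in declaration order.
        stack.extend(reversed(imports_of.get(model, [])))
    return order
```

A cycle thus only means that a model is encountered a second time and skipped, not that resolution fails.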

Line 9 starts the actual specification of the ServiceDiscovery, which uses the imported Eureka technology. The following Line 10 contains the dependency to the CustomerCoreContainer, specified in Listing 4. The service-specific configuration of the ServiceDiscovery is set via the assignment of default values in Lines 12–16. Lines 13 and 14 set the actual hostname and port of the service.

Overall, LEMMA’s OML enables the DevOps team to construct operation models that specify the deployment of microservices and their dependencies on the microservice application’s infrastructural components. The operation models consist of the concepts of containers and infrastructure nodes. Containers (cf. Listing 4) specify the deployment of microservices, whereas infrastructure nodes contain the configuration for infrastructural components, e.g., API gateways, databases, and service discoveries (cf. Listing 5).
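The distinction between the two concepts can be mirrored in a small Python data structure. The following sketch is purely illustrative: the attribute names are hypothetical, the hostname and port values in the usage are invented, and the consistency check (every container dependency must refer to a known infrastructure node) is our own addition rather than a documented OML validation.

```python
from __future__ import annotations
from dataclasses import dataclass, field


@dataclass
class InfrastructureNode:
    """Configuration of an infrastructural component, e.g., a service discovery."""
    name: str
    technology: str
    defaults: dict[str, str] = field(default_factory=dict)


@dataclass
class Container:
    """Deployment of one or more microservices."""
    name: str
    technology: str
    deployed_services: list[str]
    depends_on: list[str] = field(default_factory=list)
    endpoints: dict[str, str] = field(default_factory=dict)


def unresolved_dependencies(containers: list[Container],
                            nodes: list[InfrastructureNode]) -> list[tuple[str, str]]:
    """Return (container, dependency) pairs that do not refer to a known node."""
    known = {node.name for node in nodes}
    return [(c.name, dep) for c in containers for dep in c.depends_on if dep not in known]
```

For example, a CustomerCoreContainer depending on a ServiceDiscovery node would be reported as unresolved until the operation model of the service discovery is imported.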

Generating Code from Distributed Deployment Infrastructure Models

In Section “Enhancing Distributed Microservice Models with Deployment Infrastructure Models”, we introduced OML as a means to describe the deployment of a service-based software system. In this subsection, we contribute a code generation pipeline for creating deployment-related artifacts from operation models using LEMMA’s Model Processor (cf. Section “Microservice Architecture Model Processing”). As depicted in Fig. 9, the code generation pipeline consists of two consecutive stages.

The first stage of the code generation pipeline consists of a model-to-model transformation [13] transforming an operation model into an intermediate operation model in the sense of LEMMA’s intermediate model processing (cf. Section “Microservice Architecture Model Processing”).

The second stage of the code generation pipeline deals with the creation of the deployment-relevant artifacts. Based on an intermediate operation model, the code generators already included in LEMMA (cf. Section “Language Ecosystem for Modeling Microservice Architecture”) provide a variety of different functionalities that are usually bound to a specific technology model. As already shown in Listing 4 and Listing 5, the described operation models both use the container_base technology model.
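The binding of code generators to technology models can be pictured as a registry that the second stage consults for each container. The following Python sketch is a simplified illustration under our own assumptions: the first stage is reduced to copying the containers, the registry and generator names are hypothetical, and the generated contents are placeholders.

```python
from __future__ import annotations
from typing import Callable

Artifact = tuple[str, str]  # (relative path, file content)
GENERATORS: dict[str, list[Callable[[dict], Artifact]]] = {}


def generator_for(technology_model: str):
    """Register a code generator for containers that use a given technology model."""
    def register(fn: Callable[[dict], Artifact]) -> Callable[[dict], Artifact]:
        GENERATORS.setdefault(technology_model, []).append(fn)
        return fn
    return register


def to_intermediate(operation_model: dict) -> dict:
    """Stage 1 (model-to-model): here simply a copy of the modeled containers."""
    return {"containers": [dict(c) for c in operation_model.get("containers", [])]}


@generator_for("container_base")
def dockerfile_generator(container: dict) -> Artifact:
    return (f"{container['name']}/Dockerfile", f"# Dockerfile for {container['name']}\n")


@generator_for("container_base")
def kubernetes_generator(container: dict) -> Artifact:
    return (f"{container['name']}/k8s.yaml", f"# Kubernetes files for {container['name']}\n")


def generate(operation_model: dict) -> dict[str, str]:
    """Stage 2: run every generator registered for a container's technology model."""
    artifacts: dict[str, str] = {}
    for container in to_intermediate(operation_model)["containers"]:
        for gen in GENERATORS.get(container["technology_model"], []):
            path, content = gen(container)
            artifacts[path] = content
    return artifacts
```

Containers whose technology model has no registered generator simply yield no artifacts in this sketch.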

The container_base model clusters a technology stack suited for service-based software systems with a focus on container technologies [44] such as Docker, Docker Compose, and Kubernetes. Listing 6 shows an excerpt of this technology model. Line 1 specifies the name of the model. Lines 2–7 describe the deployment technologies of the model, in this particular case Kubernetes. Additionally, Kubernetes supports the operation environments golang, python3, and openjdk by default.

The second part of Listing 6 (Lines 8–13) defines operation aspects for further specifying service deployment. Lines 10 and 11 define the Dockerfile aspect, which can be applied to containers in operation models. The aspect consists of a single attribute named content, which holds the actual content of the Dockerfile. Furthermore, the content attribute carries the property mandatory, so it can only be configured a single time per container.

The Container Base Code Generator (CBCG)Footnote 22 supports the container_base technology model (cf. Listing 6) to derive a set of deployment-related artifacts such as build scripts, Dockerfiles, and Kubernetes configurations. Furthermore, the CBCG integrates with existing microservice configuration files by extending them with operation-specific entries, e.g., service discovery addresses, as necessary.

Listing 7 shows a Dockerfile generated by the CBCG from the operation model in Listing 4. The Dockerfile contains a basic configuration consisting of a Docker image deduced from the modeled operation environment. In Lines 2–15 several artifacts are copied into the image. Line 17 configures the port 8110 on which the microservice is started. Finally, Lines 18–20 define the entrypoint of the Docker image to compile and run the microservice.

While the CBCG is able to create a basic Dockerfile, the OML also integrates with LEMMA’s aspect mechanism so that it becomes possible to leverage the Dockerfile aspect from the container_base technology model (cf. Listing 6) to customize Dockerfile generation.
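The interplay between the generated default and the Dockerfile aspect can be sketched as follows. This Python function is not the CBCG: it assumes a prebuilt JAR file instead of compiling the service inside the image, the mapping from the openjdk operation environment to the openjdk:11 base image is hypothetical, and other environments are deliberately not handled.

```python
from __future__ import annotations

# Hypothetical mapping from a modeled operation environment to a Docker base image.
BASE_IMAGES = {"openjdk": "openjdk:11"}


def generate_dockerfile(environment: str, port: int, jar_path: str,
                        dockerfile_aspect: str | None = None) -> str:
    """Return Dockerfile content for a Java microservice container."""
    if dockerfile_aspect is not None:
        # A Dockerfile aspect set in the operation model overrides the default.
        return dockerfile_aspect
    if environment not in BASE_IMAGES:
        raise ValueError(f"no base image known for environment {environment!r}")
    return "\n".join([
        f"FROM {BASE_IMAGES[environment]}",
        "WORKDIR /app",
        f"COPY {jar_path} service.jar",
        f"EXPOSE {port}",
        'ENTRYPOINT ["java", "-jar", "service.jar"]',
    ]) + "\n"
```

Letting the aspect replace the whole default keeps the generator simple; a finer-grained merge of aspect content and generated instructions would be an alternative design.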

In addition to Dockerfiles, the CBCG may also generate Kubernetes deployment files. In general, a derived Kubernetes file consists of two parts, which concern the deployment and the service configuration. The deployment part shown in Listing 8 groups the configuration of the Kubernetes podFootnote 23 to which a microservice gets deployed. Line 1 defines the apiVersion of the Kubernetes file. Line 2 sets the configuration kind to Deployment. Lines 5–7 assign a name to the deployment, i.e., customercorecontainer. Line 8 configures the Kubernetes deployment, specifically the number of replicas to be created for the deployment.
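To illustrate the structure of such a deployment part, the following Python sketch builds a Kubernetes Deployment manifest as a dictionary. It uses the apps/v1 API, which may differ from the apiVersion in Listing 8; the image name, the app label convention, and the function render_deployment are hypothetical.

```python
import json


def render_deployment(name: str, image: str, port: int, replicas: int = 1) -> dict:
    """Build a Kubernetes Deployment manifest as a plain dictionary."""
    labels = {"app": name}  # hypothetical labeling convention
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # apps/v1 requires a selector that matches the pod template labels
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                    }],
                },
            },
        },
    }


deployment = render_deployment("customercorecontainer", "customercore:latest", 8110)
# kubectl also accepts JSON manifests, so this output can be applied as-is
print(json.dumps(deployment, indent=2))
```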

Listing 9 contains the service part of the Kubernetes file, which configures how the microservice is exposed. Lines 1 and 2 specify the apiVersion and the configuration kind of the Kubernetes file, i.e., kind: Service, followed by the name assignment in Lines 4–6. Lines 9–11 then expose the microservice via port 8110.
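The service part can be sketched analogously. The Python function render_service below is hypothetical; it assumes that the pods carry the label app=<name> and omits the service type, so Kubernetes would default to ClusterIP, which may differ from Listing 9.

```python
from __future__ import annotations

import json


def render_service(name: str, port: int, target_port: int | None = None) -> dict:
    """Build a Kubernetes Service manifest exposing the pods of a microservice."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            # Assumes the pods carry the label app=<name>
            "selector": {"app": name},
            "ports": [{
                "protocol": "TCP",
                "port": port,
                # Forward to the same container port unless stated otherwise
                "targetPort": target_port if target_port is not None else port,
            }],
        },
    }


service = render_service("customercorecontainer", 8110)
print(json.dumps(service, indent=2))
```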

In addition to the deployment-related generated artifacts, LEMMA’s code generation pipeline also supports the extension of existing service configurations. For this purpose, we implemented additional code generators for technologies like MongoDB,Footnote 24 MariaDB,Footnote 25 Zuul, and Eureka.
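Extending an existing configuration, rather than overwriting it, can be sketched as a merge over key=value entries. The Python sketch below assumes a hypothetical merge policy in which keys already set by developers are kept and only missing keys are appended; it recognizes only key=value lines and is not how LEMMA’s generators are implemented.

```python
from __future__ import annotations


def extend_properties(existing: str, generated: dict[str, str]) -> str:
    """Append generated entries to an existing .properties file.

    Hypothetical merge policy: keys the developers already set are kept,
    only missing keys are added. Only key=value lines are recognized.
    """
    present = set()
    for line in existing.splitlines():
        stripped = line.strip()
        # Skip blank lines and .properties comments
        if not stripped or stripped.startswith(("#", "!")):
            continue
        if "=" in stripped:
            present.add(stripped.split("=", 1)[0].strip())
    additions = [f"{key}={value}" for key, value in generated.items() if key not in present]
    body = existing.rstrip("\n")
    lines = ([body] if body else []) + additions
    return "\n".join(lines) + "\n" if lines else ""
```

For example, extending "server.port=9000" with generated values for server.port and spring.data.mongodb.host keeps the developer’s port and only appends the MongoDB host entry.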

Listing 10 contains a variety of configuration options for Spring-based microservice implementations. The spring.application.name and server.port options in Lines 1 and 2 are derived from the modeled microservice’s name and its specified endpoint in the LEMMA models. Lines 3–7 result from the Eureka configuration shown in Listing 4 and configure the endpoints for connecting to the Eureka service discovery.
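The derivation of such options from model information can be sketched in Python as follows. The property keys are standard Spring Boot and Spring Cloud Netflix Eureka client settings, but which entries Listing 10 actually contains is not reproduced here; the service name, the Eureka URL, and the function names are hypothetical.

```python
from __future__ import annotations


def spring_properties(service_name: str, port: int,
                      eureka_url: str | None = None) -> dict[str, str]:
    """Derive Spring Boot configuration entries from modeled service information."""
    props = {
        "spring.application.name": service_name,  # from the modeled service name
        "server.port": str(port),                 # from the modeled endpoint
    }
    if eureka_url is not None:
        # Spring Cloud Netflix Eureka client settings for service discovery
        props["eureka.client.serviceUrl.defaultZone"] = eureka_url
        props["eureka.client.register-with-eureka"] = "true"
        props["eureka.client.fetch-registry"] = "true"
    return props


def to_properties(props: dict[str, str]) -> str:
    """Serialize entries in key=value form, preserving insertion order."""
    return "".join(f"{key}={value}\n" for key, value in props.items())


config = spring_properties("customer-core", 8110, "http://eureka-server:8761/eureka/")
print(to_properties(config))
```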

Validation

In this section, we validate the LEMMA extensions that implement the workflow (cf. Section “A Model-Driven Workflow for Coping with DevOps-Related Challenges in Microservice Architecture Engineering”). To enable replicability of our results, we provide a validation package on GitHub.Footnote 26 To make the validation feasible, we first manually reconstructed LEMMA models of the functional backend and infrastructure microservices of Lakeside Mutual (cf. Section “Case Study”) using a systematic process [62]. This step was necessary because the backend and infrastructure microservices of Lakeside Mutual are implemented in Java and not modeled with LEMMA. In detail, our reconstruction comprises all four Java-based functional microservices and the infrastructure microservices Eureka Server and Spring Boot Admin (cf. Section “Case Study”).

In addition, we retrieved the current API documentation of Lakeside Mutual by putting the architecture into operation and triggering the generation of the documentation with prepared REST requests. As a result, we obtained the current API documentation of Lakeside Mutual’s Customer Core and Customer Management Backend (cf. Fig. 3 in Section “Case Study”), which are the two components for which the application provides API documentation.

We then performed the individual generation steps of our workflow (cf. Section “A Model-Driven Workflow for Coping with DevOps-Related Challenges in Microservice Architecture Engineering”) based on our reconstructed LEMMA models and the case study’s API documentation. Table 3 summarizes the results of applying our workflow in terms of the Lines of Code (LoC) metric.
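Since LoC values depend on how lines are counted, the following Python sketch makes one possible counting rule explicit: non-blank lines that are not pure single-line comments. This rule is an assumption, not necessarily the one used for Table 3; block comments are not handled, and the model file extensions (.data, .services, .operation) are assumed for illustration.

```python
from __future__ import annotations

from pathlib import Path


def count_loc(text: str, comment_prefixes: tuple[str, ...] = ("//",)) -> int:
    """Count lines that are neither blank nor pure single-line comments."""
    return sum(
        1
        for line in text.splitlines()
        if line.strip() and not line.strip().startswith(comment_prefixes)
    )


def loc_per_extension(root: Path,
                      extensions: tuple[str, ...] = (".data", ".services", ".operation"),
                      ) -> dict[str, int]:
    """Sum the LoC of all model files below root, grouped by file extension."""
    totals = {ext: 0 for ext in extensions}
    for path in root.rglob("*"):
        if path.is_file() and path.suffix in totals:
            totals[path.suffix] += count_loc(path.read_text(encoding="utf-8"))
    return totals
```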

As Table 3 shows, using the OAS-conform API documentation, we were able to generate 171 and 174 LoC of LEMMA Domain Data and Service files for the Customer Core and the Customer Management Backend microservices, respectively. Although the generated models and the reconstructed LEMMA models contain the same interface operations and parameters, the reconstructed models comprise more LoC. This is because the manual models contain, e.g., operation-related specifications and technology-related annotations for databases, which cannot be generated since the API documentation provides no information on them.
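The information that such a generator can draw from OAS-conform documentation can be illustrated with the following Python sketch, which extracts operations, their parameters, and schema names from a parsed OpenAPI document. Operations would roughly correspond to interface operations in a service model and schemas to data structures in a domain model. This is not LEMMA’s generator; the function names are hypothetical, and parameters given only as $ref are skipped for brevity.

```python
from __future__ import annotations

HTTP_METHODS = ("get", "put", "post", "delete", "options", "head", "patch", "trace")


def extract_operations(spec: dict) -> list[tuple[str, str, str, list[str]]]:
    """List (operationId, HTTP method, path, parameter names) per API operation."""
    operations = []
    for path, item in spec.get("paths", {}).items():
        # Parameters declared on the path item apply to all of its operations;
        # parameters referenced via $ref carry no name here and are skipped
        shared = [p["name"] for p in item.get("parameters", []) if "name" in p]
        for method in HTTP_METHODS:
            if method not in item:
                continue
            op = item[method]
            own = [p["name"] for p in op.get("parameters", []) if "name" in p]
            op_id = op.get("operationId", f"{method}{path}")
            operations.append((op_id, method.upper(), path, shared + own))
    return operations


def extract_schema_names(spec: dict) -> list[str]:
    """Names of data schemas (OpenAPI 3 components, or Swagger 2 definitions)."""
    schemas = spec.get("components", {}).get("schemas") or spec.get("definitions", {})
    return sorted(schemas)
```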

Regarding the generation of deployment specifications, we were able to generate 285 lines of infrastructure code for Docker and Kubernetes from the reconstructed operation models of the functional microservices. Teams can abstract from technology-specific infrastructure code and, in combination with LEMMA’s source code generators such as the Java Base Generator [61], generate directly executable and deployable stubs of their services.

Discussion

The model-driven workflow presented in Section “A Model-Driven Workflow for Coping with DevOps-Related Challenges in Microservice Architecture Engineering” addresses the previously identified challenge areas (cf. Section “DevOps-Related Challenges in Microservice Architecture Engineering of SMOs”). In detail, the workflow provides means to establish a common architectural understanding in an organization that scales to multiple teams for the first time (CA1) and to cope with the complexity of operational aspects in microservice engineering (CA2).

We argue that by documenting the architecture in a centralized manner (cf. Sections “Derivation of Microservice Models from API Documentations” and “Assembling a Common Architecture Model from Distributed Microservice Models”), combined with the ability to visualize it (cf. Section “Visualization of Microservice Architecture Models”), teams and higher-level stakeholders, such as project sponsors, have a good basis for sharing knowledge and gaining insight into each other’s development artifacts through the inherent abstraction property of the models [48]. Box-and-line diagrams, in particular, have the advantage that people can more easily grasp relations between concepts [13].

Another added value of our approach is the ability to seamlessly integrate deployment specifications into architecture models as LEMMA operation models, from which deployment configurations for heterogeneous deployment technologies, i.e., Docker and Kubernetes, can be derived (cf. Section “Generating Code from Distributed Deployment Infrastructure Models”). In this regard, Combemale et al. [13] underline the added value of models for abstracting complexity in the deployment process, which makes the process more manageable. However, although the deployment technologies supported by our workflow constitute de-facto standards [69], LEMMA does limited justice to the heterogeneous technology landscape of cloud providers. In particular, we do not specifically address cloud-based deployment platforms such as AWSFootnote 27 or Azure.Footnote 28 LEMMA is presumably able to support such technologies through specific technology models (cf. Section “Microservice Architecture Model Construction”). In the future, we plan to address this limitation by providing LEMMA technology models and code generators for languages targeting the Infrastructure as Code [52] paradigm, e.g., Terraform [11]. As a result, LEMMA would support model-based deployment to a variety of cloud-based deployment platforms.

To implement and take advantage of the LEMMA-based workflow, team members need to learn and use LEMMA as a new technology. As the validation (cf. Section “Validation”) indicates, the generation facilities of LEMMA can significantly increase the efficiency of teams. However, we need further empirical evaluation in practice (cf. Section “Conclusion and Future Work”) to assess more accurately in which cases the efficiency gains from better documentation, accessible architectural understanding, and generation of deployment specifications outweigh the effort required to learn LEMMA, and in which cases they do not.

An important factor on which the efficiency of the workflow depends is the organization-wide agreement on the level of detail of the models shared between teams. For example, if a very high level of detail is agreed upon, i.e., including as much information from the source code in the models as possible, as we did in the reconstruction of the case study (cf. Table 3), the DevOps teams must refine generated artifacts more extensively, which results in higher effort. This can be exemplified by the Customer Core Service (cf. Section “Validation”), whose reconstructed model contains considerably more LoC, e.g., regarding technologies, than the generated model. In contrast, if the organization agrees on a low level of detail that, e.g., only considers technology-agnostic domain, service, and operation models (cf. Section “Language Ecosystem for Modeling Microservice Architecture”), very few adjustments to the generated models are necessary. Our experience in performing the validation (cf. Section “Validation”) also supports this: although we were familiar with both LEMMA and the source code of the case study, the manual reconstruction was a tedious and time-consuming task of several hours compared to the automatic generation of the models.

A technical limitation of the LEMMA-based workflow is its unidirectional artifact creation. Changes to the models currently have to be made by the team owning the corresponding microservice. However, to further extend a shared understanding of the architecture and to follow the DevOps characteristic of minimizing communication efforts [21], it would be beneficial if other teams or stakeholders could request changes to other teams’ services directly via the shared models, e.g., to add an attribute to an interface operation.

Related Work

In the following, we describe related work from the areas of service and operation modeling, comparable qualitative studies, and workflows for DevOps-oriented development of microservice architectures in the context of model-driven software engineering.

MSA Service Modeling Terzić et al. [82] present MicroBuilder, a tool that enables the modeling and generation of microservices. At its core, MicroBuilder comprises the MicroDSL modeling language. Like LEMMA, MicroDSL is based on the Eclipse Modeling Framework. Unlike LEMMA, however, MicroBuilder is closely tied to Java and Spring as specific technologies, so that the MicroDSL metamodel would have to be adapted for new technologies. MicroBuilder also addresses only the role of the developer and neglects stakeholders such as domain experts or operators. In addition, MicroBuilder does not address MSA’s characteristic of involving multiple teams in the development process. Another model-based approach called MicroART [31] is provided by Granchelli et al. MicroART contains a DSL called MicroARTDSL, which aims to capture architecture information. The purpose of MicroART is to recover microservice architectures through static and dynamic analysis. As such, MicroART can support organizations in building a common architectural understanding, similar to the visualization we proposed in Section “Visualization of Microservice Architecture Models”. However, MicroART does not provide a model-based workflow for the teams and lacks LEMMA’s rich ecosystem, which comprises means to also model and generate domain data, operational aspects, and different technologies. Alshuqayran et al. [1] present MiSAR, an empirically derived approach for generating architectural models of microservice applications. Like LEMMA, MiSAR leverages MDE and provides a metamodel centered around the microservice concept. It includes concepts for, e.g., interfaces, operations, and service types. However, MiSAR primarily focuses on reconstructing models from existing source code and does not provide a concrete syntax aligned with the metamodel like LEMMA’s textual notation. In addition, MiSAR has a single cohesive metamodel for operation and service modeling. LEMMA, on the other hand, supports separate model kinds derived from the roles in the MSA engineering process and also includes means for modeling domain data.

Qualitative Study Bogner et al. [9] describe a study related to our qualitative empirical analysis (cf. Section “DevOps-Related Challenges in Microservice Architecture Engineering of SMOs”) that includes 14 interviews with software architects. In contrast to our analysis, Bogner et al. do not focus on the challenges in the workflows of organizations, but on the technologies used and on software quality aspects. Another interview study was conducted by Haselböck et al. [34], focusing on software design aspects such as the sizing of microservices. A questionnaire-based study on bad smells in MSA was conducted by Taibi et al. [78]. The study touches on organizational aspects and is included in our argumentation of the challenges (cf. Section “Study Results and Challenges”), but due to its design as a questionnaire, it does not consider the development process as a whole.

Development Workflows In the context of our proposed workflow (cf. Section “LEMMA-Based Workflow for Coping with DevOps Challenges”), there are several large-scale agile process models or methodologies that can foster the development of MSA by multiple DevOps teams. Examples include Scrum at Scale [77], the Spotify Model [71], and SAFe [66] (cf. Section “Organizational Aspects”). However, these approaches generally only become viable when an organization involves 50 or more developers [18], and are therefore not suitable for SMOs facing the challenge of initially scaling their small organization from one to two or three teams. In addition, the aforementioned approaches address development at an organizational level and do not address development practices. Therefore, we expect our proposed workflow (cf. Section “LEMMA-Based Workflow for Coping with DevOps Challenges”) to integrate well with these large-scale approaches.