Abstract

Purpose

In December 2019, the Covid-19 pandemic began in the world. To reduce mortality, in addiction to mass vaccination, it is necessary to massify and accelerate clinical diagnosis, as well as creating new ways of monitoring patients that can help in the construction of specific treatments for the disease.

Objective

In this work, we propose rapid protocols for clinical diagnosis of COVID-19 through the automatic analysis of hematological parameters using evolutionary computing and machine learning. These hematological parameters are obtained from blood tests common in clinical practice.

Method

We investigated the best classifier architectures. Then, we applied the particle swarm optimization algorithm (PSO) to select the most relevant attributes: serum glucose, troponin, partial thromboplastin time, ferritin, D-dimer, lactic dehydrogenase, and indirect bilirubin. Then, we assessed again the best classifier architectures, but now using the reduced set of features. Finally, we used decision trees to build four rapid protocols for Covid-19 clinical diagnosis by assessing the impact of each selected feature. The proposed system was used to support clinical diagnosis and assessment of disease severity in patients admitted to intensive and semi-intensive care units as a case study in the city of Paudalho, Brazil.

Results

We developed a web system for Covid-19 diagnosis support. Using a 100-tree random forest, we obtained results for accuracy, sensitivity, and specificity superior to 99%. After feature selection, results were similar. The four empirical clinical protocols returned accuracies, sensitivities and specificities superior to 98%.

Conclusion

By using a reduced set of hematological parameters common in clinical practice, it was possible to achieve results of accuracy, sensitivity, and specificity comparable to those obtained with RT-PCR. It was also possible to automatically generate clinical decision protocols, allowing relatively accurate clinical diagnosis even without the aid of the web decision support system.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Motivation and problem characterization

The world that emerged after the Second World War was marked by a rapid process of globalization. An interconnected world has emerged, totally connected both by advanced means of transport, such as airplanes and ships, and by means of information and communication technologies. Trade necessarily integrates nations and intensifies the movement of people across the globe. However, from the point of view of Epidemiology, a fully connected world is also a world more susceptible to several threats, including health threats, such as epidemics and pandemics (Crooks et al. 2018, Prior et al. 2019). The pathways through which international trade flows are also the pathways used by infectious disease vectors.

In December 2019, in the city of Wuhan, China, the most critical outbreak in the last hundred years began: Coronavirus Disease 2019 (Covid-19), transmitted by the SARS-CoV2 virus, a virus of zoonotic origin until then unknown, present in bats and pangolins (Barbosa et al. 2021b, Ciotti et al. 2019; de Lima et al. 2020; Rothan and Byrareddy 2020; Wu et al. 2020). SARS-CoV-2, when compared to its predecessors, proved to be much more resistant and infectious. The most common symptoms are fever, dry cough, and tiredness (Ciotti et al. 2019, de Lima et al. 2020, Gomes et al. 2020a, Rothan and Byrareddy 2020, Wu et al. 2020). Pain and discomfort, sore throat, diarrhea, conjunctivitis, headache, loss of taste or smell, rash on the skin, or discoloration of the fingers or toes may also appear (Cascella et al. 2020; Ciotti et al. 2019; Peeri et al. 2020; Rothan and Byrareddy 2020; Wang et al. 2020b; Wu et al. 2020). Its severe symptoms are difficulty in breathing or shortness of breath, pain or pressure in the chest, and loss of speech or movement (Cascella et al. 2020, Ciotti et al. 2019, de Lima et al. 2020, Gomes et al. 2020a, Peeri et al. 2020, Rothan and Byrareddy 2020, Wang et al. 2020b, Wu et al. 2020). Despite the lower lethality, the virus spreads very quickly, producing a large volume of deaths and leaving sequels that are often permanent (Cascella et al. 2020; de Lima et al. 2020; Peeri et al. 2020; Wang et al. 2020b). Due to their high rate of contagion, public health system resources are rapidly depleted (de Lima et al. 2020). The Covid-19 pandemic is one of the biggest health crises in decades. In March 2021, SARS-CoV had already infected almost 130 million people, more than seventy million of whom recovered, while almost 3 million died (Organization 2021). In this context, it is not enough to invest in the opening of new hospital beds for the treatment of patients. It is necessary to have tests that guarantee fast and reliable diagnoses; specific treatments to decrease the lethality of the disease; efficient and low-cost vaccines applied to a considerable portion of the population; and social isolation and quarantine policies to seek to control the disease vector while vaccines and specific treatments are not available for Covid-19.

Several studies have sought to highlight the nature of Covid-19 as a disease that affects the cardiovascular system (Chatterjee et al. 2020; Fan et al. 2020; Gao et al. 2020; Liu et al. 2020; Tan et al. 2020; Zheng et al. 2020). Coronaviruses, such as SARS-CoV and SARS-CoV-2, have the angiotensin-converting zinc metallopeptidase 2 (ACE2), an enzyme present in the cell membranes of the arteries, heart, lungs and other organs as a functional receptor. ACE2 is involved in cardiac function, hypertension and diabetes (Turner et al. 2004). The MERS-CoV and SARS-CoV coronaviruses can cause acute myocarditis and heart failure (Zheng et al. 2020). Some of the impacts of coronaviruses on the cardiovascular system are increased blood pressure and increased levels of troponin I (hs-cTnI) (Zheng et al. 2020). Covid-19 patients may also develop lymphopenia, i.e., low level of lymphocytes (Fan et al. 2020; Liu et al. 2020; Tan et al. 2020); and leukopenia, i.e., few leukocytes. COVID-19 patients may also experience decreased hemoglobin levels, absolute lymphocyte count (ALC), and absolute monocyte count (AMC) (Fan et al. 2020). Patients who have developed severe forms of the disease have significantly higher levels of Interleukin-6 and D-dimer than patients who have developed a moderate form of COVID-19 (Gao et al. 2020). Therefore, considering that COVID-19 is a disease that affects blood parameters, hematological tests can be used to help diagnose the disease.

Given that the result of the RT-PCR test can take hours or even days, given the pandemic situation that increases the demand for tests with the high speed of contamination, clinical diagnosis assumes a fundamental role in determining the treatment and the type of care correct for mild, moderate and severe cases. In this sense, the observation of complementary clinical parameters, such as the hematological parameters obtained from common tests in clinical practice, takes on an important role. Several works have been using machine learning techniques to diagnosis diseases by analyzing hematological parameters automatically (Barbosa et al. 2021b, Guncar et al. 2018; Luo et al. 2016, Tannerˇ et al. 2008). Blood tests are commonly used during medical screening. The most common blood tests, like complete blood count, bilirubin, serum glucose, C-reactive protein, urea, and others, are easily available at low-cost compared with other diagnosis methods. Therefore, intelligent systems can be used to automatically analyze hematological parameters and use them to support COVID-19’s clinical diagnosis and to suggest appropriate patient care (Barbosa et al. 2021b).

In this work we propose two main applications to support the clinical diagnosis of COVID-19 based on hematological parameters: (1) rapid diagnosis based on automatic analysis by an intelligent web system based on particle swarm optimization and random forests; (2) a tool to build clinical analysis protocols, to be used in contexts where the intelligent rapid diagnosis system is not available. In the first approach, an intelligent web system was developed to support decision making. This system was put into operation in the city of Paudalho, Brazil, to support the clinical diagnosis and assessment of the severity of the disease in patients admitted to intensive and semi-intensive care units, in the year 2020. In the second approach, we used decision trees to infer which hematological parameters would be statistically more relevant for clinical diagnosis. In the particular case of COVID-19, the most relevant hematological parameters were ferritin and prothrombin partial time. From these results, these hematological parameters can be inserted as a new medical protocol in the investigation of suspected cases of COVID-19. Finally, the results of general accuracy, sensitivity, and specificity were quite high for both approaches, demonstrating that rapid diagnosis is possible using only well-known and low-cost clinical exams, with the potential support of an intelligent decision support system. The high correlation between ferritin, prothrombin time and a positive diagnosis for COVID-19 in symptomatic patients also points to the need for more research on treatments to combat early clotting and the main symptoms of the disease.

Related works

Several studies emphasize the importance of hematological parameters to support COVID-19 clinical diagnosis. Some of them point to the relevance of using hematological analysis as an indicative of the severity degree of COVID-19. Fan et al. (2020) analyzed hematological parameters of 69 patients with COVID -19. The study was conducted with subjects from the National Center for Infectious Diseases (NCID) in Singapore. 65 of these patients underwent complete blood count (CBC) on the day of admission. 13.4% of patients needed intensive care unit (ICU) care, especially the elderly. During the first exams, 19 patients had leukopenia and 24 had lymphopenia, with 5 cases classified as severe (Absolute Lymphocyte Count (ALC) < 0.5 × 109/L). The study also pointed out that patients who needed to be admitted to the ICU had lower ALC and a higher rate of Lactate Dehydrogenase (LDH). These data indicated that monitoring these hematological parameters can help to identify patients who need assistance in the ICU. The authors found that the patients who were in the ICU had a significant decrease in their hemoglobin levels, ALC and Absolute Monocyte Count (AMC) levels, when compared to the non-ICU group. ICU patients also tend to develop neutrophilia. The platelet count did not prove to be a factor for discrimination between the type of hospitalization.

Gao et al. (2020) assessed hematological characteristics of 43 patients at Fuyang Second People’s Hospital. The patients had diagnosis confirmed by the COVID-19 ground truth test, the fluorescent reverse transcription-polymerase chain reaction (RT-PCR). They were divided into two groups: the moderate group with 28 patients, and the severe group with 15 patients. The groups have no significant difference in age and sex, and both were evaluated by routine blood tests and blood biochemistry parameters. Thereby, from statistical tests, the study noted that the levels of glucose (GLU), C-reactive protein (CRP), interleukin-6 (IL-6), thrombin time (TT), fibrinogen (FIB), and D-dimer were significantly higher in the severe group than in the mild group. Performing this analysis with ROC curves, the authors pointed out that the best indicators for predicting severity were IL-6 and d-D combined, with AUC of 0.840, specificity of 96.4%, and sensitivity of 93.3%. These results indicate that patients with severe conditions would have abnormal coagulation.

Liu et al. (2020) reported that lymphopenia and inflammatory cytokine storm are abnormalities commonly found in other infections caused by coronavirus, such as SARS-Cov and MERS-Cov. With that in mind, they studied 40 patients with positive RT-PCR for COVID-19 at Wuhan Union Hospital. The information provided was epidemiological, demographic, clinical manifestations, and laboratory tests. Similar to the previous study, patients were divided into two groups: mild patients, with symptoms such as epidemiological history, fever or respiratory symptoms, and abnormalities in imaging tests; the second group with severe patients, patients should additionally have symptoms such as shortness of breath, oxygen saturation < 93%, respiratory > 30 times/min, or PaO2/FiO2 < 300 mmHg. A total of 27 patients were classified in the first group, while 13 were classified in the second. The study reported that levels of fibrinogen, D-dimer, total bilirubin, aspartate transaminase, alanine transaminase, lactate dehydrogenase, creatine kinase, C-reactive protein (CRP), ferritin, and serum amyloid A protein were significantly higher in the severe group. Futhermore, most severe patients presented lymphopenia, while white blood cells and neutrophils counts were higher.

These studies have pointed out that hematological parameters can be indicators of the risk factors and degree of severity of COVID-19. The identification of these clinical parameters can be essential to optimize clinical care for each group of patients. In this sense, the development of intelligent systems based on blood tests is useful. Faced with the pandemic scenario, in which most hospitals are full, decision support systems can facilitate clinical management. Thus, it can increase the assertiveness in the treatment for each case and, consequently, the number of lives saved.

Regarding the use of intelligent systems to support COVID-19 diagnosis, Saba and Khan (2021) present a collection of 14 chapters focused on COVID-19 solutions. From these chapters, we highlight the following works related to our proposal: Khan et al. (2021) present a review of the state-of-the-art of the intelligent solutions to support COVID-19 image diagnosis by applying deep learning methods over x-ray thoracic images and computerized tomography x-ray images. Hassan et al. (2021) made a selective assessment of the latest research articles relevant to the applications of artificial intelligence and machine learning (ML) techniques from the databases of the Web of Science, Scopus, and Google Scholar, using keywords of coronavirus, artificial intelligence, and ML. The authors analyzed the use of computed tomography (CT) imaging, X-ray, and magnetic resonance imaging (MRI) and their applications COVID-19 pandemics. Karimi et al. (2021) and Nourbakhsh (2021) present reviews of the state-of-the-art of the use of computational intelligence, on computerized tomography x-ray imaging to support COVID-19 diagnosis and progress evaluation.

Fei et al. (2021) highlighted the applications of modern digital technology, statistical methods, data platforms and data integration systems to improve diagnosis and treatment of diseases in clinical research and novel epidemiologic tools to tackle infection source problems, such as finding Patient Zero in the spread of epidemics. The authors concluded that analyzing and interpreting COVID-19 big data is an incredibly challenging task that requires a multi-disciplinary effort to continuously create more effective methodologies and powerful tools to transfer data information into knowledge that enables informed decision making.

Pani et al. (2022) present a large collection of intelligent solutions in the fight against COVID-19 regarding disease prediction (Pandit et al. 2022; Torcate et al. 2022), temporal and spatiotemporal forecasting (Biswas and Dash 2022; da Silva et al. 2022; Pandit et al. 2022), and intelligent diagnosis on computerized tomography x-ray imaging (Adetunji et al. 2022c; de Santana et al. 2022; Majhi et al. 2022; Pandit et al. 2022), thoracic x-ray imaging (Adetunji et al. 2022c; Majhi et al. 2022; Pandit et al. 2022; Usharani 2022), electrical impedance tomography imaging (Wolff et al. 2022), signals and symptoms (Adetunji et al. 2022a,d; Pandit et al. 2022; Peter et al. 2022; Tripathi et al. 2022), molecular analysis (Adetunji et al. 2022c; Pani et al. 2022; Sharma et al. 2022), and hematological parameters (Torcate et al. 2022) based on ML (shallow and deep algorithms) Adetunji et al. (2022b, c, e) and swarm intelligence Usharani (2022).de Santana et al. (2022) proposed an automatic system for COVID-19 diagnosis using ML techniques and CT X-ray images named IKONOS-CT, an intelligent system dedicated to provide a binary classification, differentiating COVID-19 patients from non- COVID-19 ones. For classification tasks, the authors performed 25 experiments for the following classifiers: multilayer perceptron (MLP), support vector machines (SVM), random tree and random forest, and Bayesian networks. The best overall performance was reached using Haralick as feature extractor and SVM with polynomial kernel of exponent 3. The authors found the following results: accuracy of 96.994% (1.375), sensitivity/recall of 0.952 (0.024), and specificity of 0.987 (0.014). The authors claim that, by using a computationally low-cost method based on the Haralick texture features extractor, it was possible to achieve high diagnosis performance. These experimental results point out that an effective path for COVID-19 diagnosis is composed by the combination of artificial intelligence and human-based clinical analysis.

Torcate et al. (2022) wanted to make predictions regarding treatment and assessments of severity of patients with and without COVID-19 based on blood tests. The authors used hematological data from patients who attended the units of the Brazilian public healthcare system in the city of Paudalho in 2020. Their objective was to analyze intelligent classifiers able to make hospitalization predictions considering three scenarios: regular ward, semi-intensive care unit, and intensive care unit, corresponding to mild (non-critical), moderate, and serious cases. The results obtained in the experiments show that the classifiers managed to perform better with the database balanced with the SMOTE method, and the 100-tree random forest demonstrated the best potential to perform the predictions for regular ward (sensitivity of 0.730, and specificity of 0.913), as for the semi-intensive care unit (sensitivity of 0.890, and specificity of 0.875), and intensive care unit (sensitivity of 0.640, and specificity of 0.947). The authors intended to assist health professionals in decision making, aiming to streamline the process of directing patients to the most suitable care units for each patient.

Guncar et al. (2018) proposed a system based on ML for analyzing blood tests andˇ predicting hematological diseases. Their database was acquired between the years 2005 and 2015 at the University Medical Center of Ljubljana. In this case, 43 diseases and 181 clinical parameters or features were selected to generate a first model (SBA-HEM181). In addition to it, a second model with 61 parameters was also developed (SBA-HEM061). The selection criteria were based on the frequency of use. Regarding the missing values (about 75%), the authors filled in with median values for each attribute. As classification methods, the authors tested classic approaches, such as SVM, Naive Bayes, and random forest. The simulations were repeated 10 times using tenfold cross validation. Finally, the models SBA-HEM181 and SBA-HEM061 reached an accuracy of 57% considering all the diseases chosen. By restricting the prediction to five classes, the systems achieved an accuracy of 88% and 86%, respectively, when using random forest for classification. This study also pointed to the possibility of effectively detecting diseases through blood tests using classic intelligent classifiers.

Barbosa et al. (2021b) proposed an intelligent system to support COVID-19 diagnosis using blood exams. Heg.IA was developed in a context where it was necessary and urgent to develop a diagnostic support system that would provide rapid results with high sensitivity and high specificity. In this context, blood tests have some advantages. First, they are commonly used during medical screening. In addition, blood tests are cheaper and less time consuming than other diagnostic methods, making the system more accessible. By combining these blood test results with analysis based on artificial intelligence, the authors built a relatively robust, efficient and easily available system for diagnosing COVID-19. The intelligent system was built using a 5644-subjects public database from the Hospital Israelita Albert Einstein, Brazil (Kaggle 2020). The authors also optimized the system to reduce the number of tests needed, based on its relevance to describing the diagnostic problem and its price and availability worldwide, especially in low-income communities. First, an automatic selection of exams was performed using the particle swarm optimization method. Then, the authors manually selected some exams with the objective of reaching an ideal combination of price, time and number of procedures. This procedure resulted in 24 blood tests, which can be delivered in up to 1 h. As computational classification can be performed in milliseconds, with the 24 blood test results, a technician can obtain diagnostic results relatively quickly. The user just needs to fill the electronic form with these 24 blood test results. Using classic Bayesian networks, the system returned high diagnostic performance: 95.159% (0.693) overall accuracy, kappa index of 0.903 (0.014), sensitivity of 0.968 (0.007), accuracy of 0.938 (0.010), and specificity of 0.936 (0.010). If compared to methods based on deep learning, the proposed system also reduces the computational cost. Although the study achieved very high results, the set of selected tests did not considered all the exams indicated by subsequent recommendations from the Brazilian Ministry of Health when dealing with COVID-19 patients (Brazilian Ministry of Health Guidelines for the diagnosis and treatment of COVID-192020), since these exams were not established as mandatory for the context of clinical diagnosis of COVID-19 in the time of the publication.

Barbosa et al. (2021a) propose the Heg.IA web system, a solution to optimize the diagnosis of COVID-19 through the automatic analysis of blood tests using machine learning. The system aims to support decision-making regarding the diagnosis of COVID-19 and the indication of admission to a regular ward, semi-ICU or ICU based on the decision of a random forest architecture with 90 trees. To this end, healthcare professionals can enter 41 hematological parameters from common blood tests and arterial blood gases into the system. Then the proposed intelligent system provides a diagnostic report. The Heg.IA web system employs blood tests to support the diagnosis of COVID-19. The machine learning method was trained using the database provided by Hospital Israelita Albert Einstein located in São Paulo, Brazil. The database is formed by information from 5644 patients.

Among them, 559 patients were diagnosis with COVID-19 by RT-PCR with DNA sequencing and identification and additional laboratory tests during a visit to the hospital. For each patient, the database has more than one hundred laboratory tests like blood counts and urine test. From this database we set a new one that contains only 41 blood tests recommended by the Brazilian Ministry of Health when dealing with COVID-19 patients. The system achieved good results both for the diagnosis of COVID-19 and for the recommendation of hospitalization. To support the clinical diagnosis of COVID-19, the solution achieved an accuracy of 92.891% (0.851), kappa index of 0.858 (0.017), sensitivity of 0.936 (0.011), precision of 0.923 (0.011), specificity of 0.921 (0.012), and area under ROC curve of 0.984 (0.003). Regarding the indication for hospitalization, the system provided high performance: accuracy above 99% and kappa, sensitivity, specificity, area under ROC curve, and precision above 0.99. Using a method with low computational cost, based on classical decision trees, the authors obtained a high diagnostic performance. The authors claim that the Heg.IA system could be a way to overcome the unavailability of tests in the context of COVID-19. The work of Barbosa et al. (2021a) is a significant improvement of the proposal of Barbosa et al. (2021b).

Similarly, Soares et al. (2020) use a method based on artificial intelligence to identify COVID-19 through blood tests. As Barbosa et al. (2021b) and Barbosa et al. (2021a) did, they used the database from the Hospital Israelita Albert Einstein. However, since the database has many missing data, they chose to include only the subjects that had most of the data. This procedure reduced the dataset from 5644 to 599 samples. By using Support Vector Machines as a classifier and SMOTE Boost technique to perform oversampling, they achieved average specificity of 85.98%, negative predictive value (NPV) of 94.92%, average sensitivity of 70.25%, and positive predictive value (PPV) of 44.96%.

Martinez-Velazquez et al. (2021) present a solution for detecting COVID-19 infections exclusively on the basis of self-reported symptoms. The motivation of the authors was providing a relatively inexpensive and easy to deploy solution at either an individual or population scale. The authors trained and tested a large set of machine learning models to detect COVID-19 based exclusively on symptoms and signs, similar to the process of anamnesis assessment. Martinez-Velazquez et al. (2021) evaluated 15 different classifiers: decision tree (DT), perceptron neural network (NN), and support vector machine (SVM), random forest (RF), and a voting classifier. The best model was a voting ensemble of a random forest and a decision tree. This model was able to reach a mean area under the ROC curve of 0.728, a sensitivity of 0.752, a specificity of 0.609, and a precision of 0.660. The best classifier among those reported in this study presents a sensitivity and precision higher than health professionals’ anamnesis; however, the specificity is lower. The immediate benefit of adopting such an ML-powered assessment approach is to maximize the use of the limited available RT-PCR tests, to find more COVID-19 positive infections within the community by identifying a higher ratio of actual positive cases (higher sensitivity).

The intelligent systems based on blood tests may play a key role in the process of diagnosing COVID-19, since many studies are confirming evidences of how this disease affects hematological parameters. Positive cases can be forwarded for results confirmation through RT-PCR, computerized tomography scans and/or radiography (Gomes et al. 2020a, b).

Negri et al. (2020) have been using elevated D-dimer as a predictor of severity and mortality in COVID-19 patients. They also observed that COVID-19 patient autopsies revealed thrombi in the microvasculature, suggesting that hypercoagulability is a prominent feature of organ failure. In this context, Negri et al. (2020) performed a clinical study involving 27 COVID-19 patients admitted to Sirio-Libanes Hospital in São Paulo, Brazil. They treated these patients with heparin in therapeutic doses tailored to clinical severity.

Following the steps of (Negri et al. 2020), several studies confirmed the importance of D-dimer as severity predictor and specific treatment based on anticoagulants as capable to reduce COVID-19 mortality and improve prognosis (Chatterjee et al. 2020, Menezes-Rodrigues et al. 2020, Sahu and Agrawal 2020, Shi et al. 2020, Tang et al. 2020, Viecca et al. 2020, Wang et al. 2020c). Klok et al. (2020) findings also state that COVID-19 may predispose patients to both venous and arterial thromboembolism due to excessive inflammation, hypoxia, immobilization, and diffuse intravascular coagulation. The authors’ findings are strongly suggestive of increasing the prophylaxis towards high-prophylactic doses. For Long et al. (2020) and Panigada et al. (2020), D-dimer and prothrombin time are most significant indicators of severe COVID-19 cases and poor prognosis due to hypercoagulation hypercoagulability together with severe inflammatory states. Other clinical studies confirm evidences to this finding and point out to partial thromboplastin time as other important biomarker (Connors and Levy 2020; Iba et al. 2020; Liao et al. 2020; Pavoni et al. 2020; Spiezia et al. 2020; Wright et al. 2020).

For Gómez-Pastora et al. (2020), as ferritin can be actively secreted at the site of infection, it is possible that ferritin can take on other functions in addition to its classic role as an iron storage protein. Ferritin has been shown to be a signaling molecule and direct mediator of the immune system (Rosário et al. 2013). Complex feedback mechanisms may exist between ferritin and cytokines in the control of pro-inflammatory and anti-inflammatory mediators, as cytokines can induce ferritin expression. Ferritin can also induce the expression of pro- and anti-inflammatory cytokines (Rosário et al. 2013). However, the pathogenic role of ferritin during inflammation remains unclear (Kernan and Carcillo 2017). For Gómez-Pastora et al. (2020), it is necessary to investigate the structure of plasma ferritin in patients with COVID-19.

For Vargas-Vargas and Cortés-Rojo (2020), there is a strong evidence supporting the hypothesis that ferritin levels might be a crucial factor influencing the severity of COVID-19. Lin et al. (2020) found an association between hyperferritinemia and disease severity in patients with COVID-19. Lin et al. (2020) conducted a retrospective study on 147 confirmed COVID-19 patients in Changsha, China. The overall proportion of severe disease was 16.32% (24/147). The severe patients had higher levels of serum ferritin than the non-severe patients. Multivariate logistic regression analysis indicated that the serum ferritin level on admission was an independent risk factor for disease severity in COVID-19 patients. Other studies agree on the importance of serum ferritin as a COVID-19 biomarker (Bataille et al. 2020; Cheng et al. 2020; Dahan et al. 2020; Kappert et al. 2020; Tural Onur et al. 2021).

The general objective of this work is to propose a solution to support the clinical diagnosis of COVID-19 through the introduction of rapid protocols based on the automatic analysis of hematological parameters using machine learning. Hematological parameters are obtained from common blood tests in clinical practice: the complete blood count and biochemical tests. These are low-cost tests that are already mandatory to support clinical diagnosis. The rapid protocols are based on two approaches: (a) the introduction of the Heg.IA web tool in clinical diagnostic practice, through the adoption of diagnostic suggestion reports based on the analysis of hematological parameters using machine learning and swarm intelligence; (b) the generation of rapid protocols to support clinical diagnosis by using offline decision trees. In this work, we present an improved version of the web system Heg.IA, developed by Barbosa et al. (2021a). In turn, the work of Barbosa et al. (2021a) is an improvement of the workstation solution developed by Barbosa et al. (2021b). In this newer version, we introduced the functionality of generating offline protocols based on decision trees, to support clinical diagnosis without having the Heg.IA system available. The motivation was to make clinical diagnosis fast and accurate, previously supported by machine learning, but without the need for a web application. Furthermore, the database used in this updated version was the result of a pilot project applied in the public health system of the city of Paudalho, Pernambuco, Brazil, during the years 2020 and 2021, while using the older application version. The database is composed of 6215 records obtained from electronic medical records collected from 2019 to 2020. In Paudalho, the Heg.IA system was used not only to support the diagnosis of COVID-19, but also to monitor the progress of moderate and severe cases of COVID-19 in ICU and semi-ICU. The version of the Heg.IA web proposed in this work also has the functionality of predicting the hospitalization of symptomatic patients with COVID-19 (ICU or semi-ICU) based on hematological parameters. These results, however, were published by Torcate et al. (2022).

Materials and methods

Proposed method

In this work, we propose two approaches of rapid protocols to support the clinical diagnosis of COVID-19 based on hematological parameters: (1) rapid diagnosis based on automatic analysis by an intelligent web system based on particle swarm optimization and random forests; (2) rapid diagnosis by statistical inference by decision trees. In the first approach, we developed an intelligent web system for decision COVID-19 diagnostic support: Heg.IA web. This system was based on a standalone solution based on Windows and Linux PCs, Heg.IA (Barbosa et al. 2021b). The system started operating in the city of Paudalho, Brazil, as a prototype and has been used to support clinical diagnosis and assessment of disease severity in patients admitted to intensive and semi-intensive care units in public health units in the city since 2020.

These approaches are called clinical diagnosis support protocols because they are proposed: (a) as part of the clinical decision-making process, integrating the currently existing processes in the form of an intelligent decision support system using machine learning; or (b) as an application of decision trees to automatically build rules for evaluation of hematological parameters and clinical decision making by the human specialist, i.e., the medical professional.

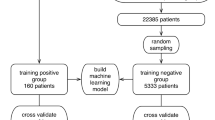

In this approach, we used a knowledge base composed of 6215 patient records seen in health units in the city. The records were represented by age and up to 43 hematological parameters. We do not use biological sex because it is not relevant to the COVID-19 diagnostic problem. Using the particle swarm optimization method, with 20 individuals evolving in 50 generations, we reduced the dimension of the attribute vectors from 43 to 8 statistically more relevant attributes. Both the original knowledge base (43 features) and the simplified knowledge base (8 features) was used to build machine learning models based on the following architectures: multilayer perceptron neural networks (MLP), support vector machines (SVM), naive Bayes classifier, Bayesian network, decision trees, and random forests. The diagram in Fig. 1 shows a summary of this solution. A general method was proposed: patients with characteristic symptoms of COVID-19 are referred to a health unit and are evaluated by a medical team. This team orders blood tests. After obtaining the results, the health professional accesses Heg.IA web. On the website, he must login. Then he can enter the results of the patient’s blood tests. Upon completion, the system will generate a report with a positive or negative diagnosis for COVID-19, in addition to the hospitalization forecast. This report can be printed and used by the medical team to define the final clinical conduct.

General method proposed: The system can be used in two different scenarios. The first scenario consists of using the system as a diagnostic support tool. In this case, patients with characteristic symptoms of COVID-19 are referred to a health unit and are evaluated by a medical team. This team orders blood tests. After obtaining the results, the health professionals access the HegIA web. On the website, they must login. Then these health professionals can enter the results of the patient’s blood tests. Upon completion, the system will generate a report with a positive or negative diagnosis for COVID-19, in addition to the hospitalization forecast. This report can be printed and used by the medical team to define the final clinical conduct. The second scenario consists of using the system to build clinical analysis protocols, in case of absence of the automatic analysis solution. In this case, the physician can generate a protocol document. In this way, the document can guide the request for blood tests, optimizing the time of collection and results. Finally, the medical team will be able to define the clinical management.

In the second approach, we use decision trees to find the most significant relationships between hematological parameters and decision making, i.e., whether COVID-19 is positive or not. This process was used to infer that hematological parameters are statistically more relevant for clinical diagnosis without the support of an intelligent system.

All experiments were performed with 30 repetitions of tenfold cross-validation, resulting in 300 computational experiments. As metrics of quality, we use accuracy, sensitivity, specificity, and area under the ROC curve.

Database

The city of Paudalho is located 38 km from the coastal capital Recife, State of Pernambuco, Brazil, has a semi-arid climate (Vilar and Medeiros 2019) and is inserted in the Atlantic Forest biome. It has a territorial area of 269,651 km2 and its population was around 51,357 in 2010 (last census conducted in Brazil). It is estimated that in 2020 it had 56,933 inhabitants (IBGE 2010). Its demographic density of 185.06 inhabitants/km2 places it among the 40 most populated municipalities in the state.

Some social determinants of health provide a more functional view of the municipality in question. Directly related to health care, the municipality has 21 SUS health establishments, encompassing primary, secondary and tertiary care (IBGE 2010). SUS is an acronym for “Unique Public Health System,” the Brazilian public health system. It presents only 31.5% of households with adequate sanitation (position 132 in the State of Pernambuco) (IBGE 2010) and infant mortality rate of 13.55/1000 live births (IBGE 2010).

This retrospective study used the medical records of patients provided by the Health Secretariat of the city of Paudalho as a database. All procedures for this research were approved by the Research Ethics Committee at the Federal University of Pernambuco under number CAAE 34932020.3.0000.5208.

We used 6215 records of patients seen in outpatient clinics, emergency rooms and the emergency department of SUS in the city in question, from August 30, 2019 to August 17, 2020. Of these records, 57.61% were women (3581) and 0.27% were newborns (17) and did not have their gender identified. The mean age was 41.79 (22.94) years. Among men, 4.54% tested positive for COVID-19 and proportionally the most affected age group was over 90 years, where 20% of patients were victims of the disease. Among the women, 3.88% had the diagnosis of COVID-19 confirmed, with 11.86% of the patients aged 50 to 60 years and 11.88% of the age group 80 to 90 years tested positive.

The graph in Fig. 2 shows the demographic stratification by sex and age of all records used in this study. We highlight the positive cases of COVID-19 from male (COVID-19 M) and female (COVID-19 F) patients using the colors blue and red, whilst the total of male and female patients are associated to orange and green, respectively. Table 1 shows the demographic details of the database, in which 2617 records are related to males, whilst 3581 are females; from these records, 119 (4.54%) are males positive for COVID-19 and 139 (3.88%) are females with COVID-19 as well, in a total of 258 COVID-19 patients distributed among moderate and severe COVID-19 cases.

Demographic stratification by sex and age of all records used in this study. We highlight the positive cases of COVID-19 from male (COVID-19 M) and female (COVID-19 F) patients using the colors blue and red, whilst the total of males and females patients are associated to orange and green, respectively.

Since not all 6215 records correspond to medical records with tests that cover all the hematological parameters provided for in this work, the database has several missing values. To fill in these missing values, we used mean predictors. For each class (positive or negative for COVID-19), the sample mean for each attribute was calculated. This mean was used as a predictor for the missing values in the attribute with missing value in the vector of attributes of the corresponding class. The total of medical records with blood tests considered incomplete does not exceed 30% of the data set.

To perform this research, we initially selected 41 hematological parameters. These hematological parameters correspond to blood exams recommended by the Ministry of Health of Brazil as an initial clinical approach and part of the COVID-19 diagnostic process (Brazilian Ministry of Health Guidelines for the diagnosis and treatment of COVID-192020). Therefore, considering that health centers must already perform these tests, there is no monetary loss or time spent on additional tests. On the contrary, the diagnostic process can be optimized with the system proposed here. The list of 41 hematological parameters is shown in Fig. 3. The Complete Blood Count (CBC) with differential comprises 20 of these hematological parameters, while arterial blood gas analysis includes 9 hematological parameters. The remaining 12 exams are those of total, indirect, and direct bilirubin; serum glucose; lipase dosage; urea; D-dimer; lactic dehydrogenase; C-reactive protein (CRP); creatinine; and partial thromboplastin time (PTT); and prothrombin time activity from coagulogram. To this 41-item list, we added patient’s age and a redundant lymphocytes parameter. This additional lymphocyte item corresponds to a different electronic patient record specification that used to categorize atypical lymphocytes but was abandoned since the beginning of 2020.

Feature selection

The database constructed during the pre-processing was submitted to feature selection using Particle Swarm Optimization (PSO) (Kennedy and Eberhart 1995; Poli et al. 2007; Wang et al. 2007). As objective function, we employed a simple decision tree to guide the optimization process. We set the algorithm to 20 individuals and 500 iterations. The goal of the attribute selection is to find the most significant exams for classification tasks and to reduce the number of required exams for diagnostic support.

We chose the PSO algorithm because it is a well-established search and optimization algorithm with few optimization parameters to be defined (Kennedy and Eberhart 1995; Poli et al. 2007; Wang et al. 2007). The PSO algorithm was created taking inspiration from the behavior of flocks of birds (Kennedy and Eberhart 1995; Poli et al. 2007; Wang et al. 2007). These flocks of birds were modeled as dynamic systems where there is a global leader, who guides the flock in a direction that optimizes a given measure of performance, and local leaders. While the global leader governs the overall dynamics of the flock of birds, local leaders are defined as those who perform best among their neighbors. Each bird is modeled using pairs of spatial position and velocity vectors. Throughout the evolution of the system, it is possible for a local leader to become a global leader, as it is also possible for leaders to become simple members of the flock of birds. Each bird represents a candidate for solving the problem of maximizing or minimizing a given objective function. In the case of maximization problems, the candidates for the solution that correspond to the global maximum and local minimums are the respective global and local leaders. Figure 4 illustrates the metaphor that inspires the PSO algorithm. PSO algorithm uses a population of randomly generated particles. In this approach, each particle corresponds to randomly generated solution and have an associated velocity and position. For each particle, each position vector is binary: 1’s and 0’s corresponds to the presence or the absence of one of the 43 features in the process of training and testing the decision tree classifier associated to the objective function. The output of the objective function is the overall accuracy of a tenfold cross validation training process. In this objective function, a decision tree is used as classifier. We employed meta-heuristic libraries developed in Java for Weka data mining platform (Gnanambal et al. 2018). We adopted the following feature selection methods: individual weight of 0.34, inertia weight of 0.33, mutation probability of 0.01, report frequency of 20, social weight of 0.33 (Bratton and Kennedy 2007, Kennedy and Eberhart 1995, Poli et al. 2007, Van den Bergh and Engelbrecht 2004).

The particle swarm optimization (PSO) algorithm is based on the behavior of flocks of birds. Each bird is modeled by a position vector and a velocity vector. Thus, the movement of a bird in search of resources is governed by a global leader and local leaders whose performance is defined by an objective function. In the problem of selection of the most significant exams, we use a decision tree as an objective function, with the overall accuracy of the training and testing process by tenfold cross-validation being returned as output. Each bird is a candidate for solving the problem of maximizing accuracy. Position vectors are binary, where each coordinate corresponds to the presence or absence of one of the 43 attributes.

The feature selection implementation resulted two databases: the original database with 43 attributes, SARS-CoV-2, and the dimension-reduced database, SARS-CoV-2 (PSO). Age was not selected: it appeared as not statistically relevant according to PSO selection. The selected exams/features were the following: serum glucose, indirect bilirubin, partial thromboplastin time, lactic dehydrogenase, lipase dosage, D-dimer, ferritin, and troponin.

Classification

Multilayer perceptron

Multilayer perceptron (MLP) consists of a generalization of the Perceptron proposed by Franklin Rosenblatt in 1958. Perceptron is the model is the simplest form of a neural network, being able to deal with linearly separable problems. Multilayer perceptron networks, on the other hand, have several interconnected neurons (or nodes), arranged in layers: the input layer, the hidden layers, and the output layer. The input layer only has the network input vector, which is passed on to the next layer. Then, each node in the next layer modifies these input values through non-linear activation functions, generating output signals. In addition, the network nodes are connected by weights, which scales these output signals. Finally, the superposition of several non-linear functions allows the mapping of the input vector to the output vector. As MLPs can have one or multiple hidden layers, this process can be repeated several times, depending on the selected architecture (Barbosa et al. 2020; Gardner and Dorling 1998; Lerner et al. 1994; Phung et al. 2005).

Through the proper selection of activation functions and synaptic weights, an MLP is able to approximate the inputs at the desired outputs. This search and adjustment of parameters is called the training process. MLPs learn in a supervised manner. During this process, errors between the actual and desired outputs are calculated. These errors are used to adjust the network (Gardner and Dorling 1998).

In order to adjust these weights, the backpropagation algorithm is the most computationally straightforward and common algorithm. It occurs in two phases: the forward and backward propagation. In the first step, the initial network weights are set to small random values. Then, this first input vector is propagated through the network to obtain an output. This actual output is compared with the desired one, and the error is calculated. In the second phase, the backward propagation, the error signal is propagated back through the network and the connection weights are updated, aiming to minimize the overall error. These steps can be repeated until the overall error is satisfactory (Haykin 2001).

MLPs and other artificial neural networks architectures are commonly used in support diagnosis applications (Naraei et al. 2016), e.g., liver disease diagnosis (Abdar et al. 2018), heart diasese diagnosis (Hasan et al. 2017), breast cancer diagnosis over breast thermography (de Vasconcelos et al. 2018; Pereira et al. 2020a,b,c; Rodrigues et al. 2019; Santana et al. 2020, 2018) and mammography images (Cordeiro et al. 2016, 2017, Cruz et al. 2018, de Lima et al. 2014, 2016, Lima et al. 2015, Silva and Santana 2020), for recognition of intracranial epileptic seizures (Raghu and Sriraam 2017), and multiple sclerosis diagnosis support (Commowick et al. 2018).

Support vector machines

Support vector machines (SVM) were created by Vladimir Vapnik and Alexey Chervonenkis (Boser et al. 1992; Cortes and Vapnik 1995) in 1963. Their main purpose is to build a linear decision surface, called a hyperplane. The idea is that this hyperplane should be able to separate classes in the best possible way. The optimal hyperplane is found when the margin of separation between it and a given nearest point is maximum (Haykin 2001).

SVM classifier are known for its good generalization performance. They are employed in several healthcare applications, such as breast cancer diagnosis using thermography and mammography (Cordeiro et al. 2016, 2017, Cruz et al. 2018, de Lima et al. 2014, 2016, de Vasconcelos et al. 2018, Lima et al. 2015, Pereira et al. 2020b, Santana et al. 2020, Silva and Santana 2020), diabetes mellitus diagnosis (Barakat et al. 2010), heart valve diseases (Çomak et al. 2007) and pulmonary infections detection (Yao et al. 2011), and also diagnosis of pulmonary cancer (Sun et al. 2013). However, its performance varies depending on the problems complexity. The type of the machine varies with the type of kernel used to build the optimal hyperplane. Table 2 shows the kernel functions used in this study: the polynomial and RBF kernels. For the first case, it was tested exponents of value 1 (linear kernel), 2, and 3.

Decision trees

Decision trees are sequential models, which combine several simple tests. They can be understood as a series of questions with “yes” and “no” answers. These tests can be the comparison of a value with a threshold or a categorical attribute compared to a set of possibilities, for instance. Thus, when analyzing the data with these tests, the decision trees will guide to a certain class in classification problems, or to a continuous value, in cases of regression problems. In this way, a decision tree is built with certain questions, called nodes. Essentially, there are four types of nodes: root, parent, child, and leaf. Starting at the root node, an instance is classified. Then, the outcome for this instance is determined ad the process continues through the tree. In addition, one node may connect to another, establishing a parent–child relationship, in which a parent node generates a child node. Finally, the terminal nodes of the tree are the leaf nodes, and they represent the final decision, that is, the predicted class or value. There are several types of decision trees, depending on the tree structure. The most popular ones are random tree and random forest. Both of them were tested in this study by using multiple configuration parameters (Kotsiantis 2013; Podgorelec et al. 2002).

Random tree uses a tree built by a stochastic process. This method considers only a few randomly selected features in each node of the tree Geurts et al. (2006).

In contrast, random forest is a model made up of many decision trees. In this case, a set of trees is built and their votes are combined to classify an instance, by means of the majority vote. Each decision tree uses a subset of attributes randomly selected from the original set of attributes (Breiman, 2001).

Bayesian network and naive Bayes classifier

Bayesian classifiers are based on Bayes’ decision theory. Among the most popular Bayes’ classifiers are naive Bayes and Bayes Nets, also known as Bayesian networks. Bayesian networks describe the probability distribution over a set of variables. They represent, in a simple way, the causal relationships of the variables of a system using Graph Theory, where the variables are the nodes, and the arcs identify the relationships between the variables. In the learning process, it is necessary to calculate the probability distributions and to identify the network structure. Learning the network structure can be considered an optimization problem, where the quality measure of a network structure needs to be maximized (Bouckaert 2008; Cheng and Greiner 1999).

On the other hand, the Naive Bayes classifier is a simple model that considers that the domain variables are conditionally independent, that is, one characteristic is not related to the other. Its learning is done in an inductive way, presenting a set of training data and calculating the conditional probability of each attribute, given a class. Naive Bayes needs to estimate few configuration parameters (Bouckaert 2008; Cheng and Greiner 2001).

Parameters settings of the classifiers

All experiments were performed using the Weka Java library in 30 runs. During training, we used the k-fold cross-validation method with k = 10 to split the set (Jung and Hu 2015). Therefore, in each run the training was performed 10 times, with 9 parts of the set being used for training and 1 part used for validation. It is worth mentioning that this method guarantees that the validation set does not participate in the training. Thus, we were able to verify the performance of the model against external training data and tenfold cross validation. The experiments were made by using the following methods:

-

Naive Bayes classifier;

-

Bayes net;

-

Multilayer perceptron: one hidden layer, for 20, 50, and 100 hidden neurons;

-

Support vector machines: we tested the following kernels, for the configuration parameter C varying for 0.01, 0.1, 1, and 10: linear kernel, polynomial kernel with degree varying for 2, 3, 4, and 5; and Radial Basis Function (RBF) kernel, with γ of 0.01, 0.25 and 0.5;

-

Decision trees: J48 and random tree; random forests, for 10, 20, 30, 40, 50, 60, 70, 80, 90, and 100 trees.

Metrics

We chose the following metrics to evaluate the performance of diagnostic tests: accuracy, sensitivity, specificity, and the area under ROC curve. Accuracy is the probability that the test will provide correct results, that is, be positive in sick patients and negative in healthy patients. In other words, it is the probability of the true positives and true negatives among all the results. The sensitivity is the rate of true positives and indicates the classifier ability to detect correctly people with COVID-19. Specificity is the capacity of classifying healthy patients as negatives. It is the rate of true negatives. Finally, the area under the ROC curve is a measure of a classifier’s discriminating ability. That is, given two classes — a sick individual and a non-sick individual—chosen at random, the area below the ROC curve that indicates a probability of the latter being correctly classified. If the classifier cannot discriminate between these two separately, an area under a curve is equal to 0.5. When this value is the next 1, it indicates that the classifier is able to discriminate these two cases (Hand 2009).

These metrics allow to discriminate between the target condition and health, in addition to quantifying the diagnostic exactitude (Borges 2016). The accuracy, sensitivity, and specificity can be calculated as following:

where TP is the true positives, TN is the true negatives, FP is the false positives, and FN, the false negatives. TPR and TNR are the true positive and the true negative rates, respectively (Fawcett 2006).

An area under the ROC curve (AUC) is a measure of a classifier’s discriminative ability. The ROC curve is produced by calculating and plotting the true positive rate (TPR) against the false-positive rate (FPR) for a single classifier at a variety of thresholds. Given two classes chosen at random, the area below the ROC curve indicates a probability of the latter being correctly classified. If the classifier cannot discriminate between these two separately, an area under a curve is equal to 0.5. When this value is the next 1, it indicates that the classifier is able to discriminate these two cases. Mathematically (Fawcett 2006):

The classification methods used in the COVID-19 detection task considering vectors of hematological parameters were compared using the following metrics: accuracy, sensitivity, specificity, and area under the ROC curve. Metrics are compared using the sample average and standard error (format: average (standard error)) and boxplots. Two classification methods are considered equivalent according to a given metric if their box plots match, i.e., similarity between median, lower and upper quartiles, and outliers. By means of the qualitative and quantitative comparison of the box plots, it is not necessary to assume a certain probability distribution and, therefore, it is not necessary to use tests of normality or tests of hypotheses that assume normality of the data. The classifiers are first compared considering the most clinically relevant criteria: sensitivity, specificity and area under the ROC curve. Then, they are compared considering box plots of sensitivity and specificity. Furthermore, for the final decision, we selected two of them with best performances according to boxplots and mean values, and performed the paired t-test. We computed p-values and considered a p-value less than or to 0.05 as significant.

Protocol proposals

In this subsection, we present protocol proposals to support the clinical diagnosis of COVID-19 from the hematological parameters obtained clinically from the complete blood count and biochemical tests. Clinical diagnosis support protocols are composed of empirical rules applied to clinical examinations. Our hypothesis is that decision trees, as they are techniques to support decision-making based on rules, can be used both to explain the functioning of intelligent systems to support clinical diagnosis, and to automatically build protocols for clinical diagnosis.

In decision trees, it is natural that one or more attributes dominate the decision process, creating dominant branches in the trees. Seeking to detail the role of each attribute, that is, of each hematological parameter in the construction of the clinical diagnosis, we subsequently decided to build new decision trees by removing the dominant attribute from the previous tree. The process of building new decision trees is interrupted when the diagnostic accuracy drops below an acceptable value. In this way, each decision tree expresses a rapid clinical decision protocol where a hematological parameter is dominant.

Heg.IA web application

After selecting the best classifier, the Heg.IA web system was developed. It can be accessed through the link: https://hegia.ufpe.br/welcome. Its front-end was developed using the library React.js. This library is based on pure JavaScript. It is open source and used to create user interfaces, more specifically, single page application (SPA) web platforms. As for data access and manipulation of application state, we used the Redux-Saga structure, a powerful tool that allows us to manage masterfully asynchronous queries, receiving API data, and trigger actions to the application of state safely and easily to maintain. Furthermore, our back-end was developed in Python (version 3.7.7). Only the random forest classifier was implemented in this final solution. Figure 5 illustrates how Random Forest was be applied as a classifier for the COVID-19 rapid diagnosis proposal we suggest in this work.

On the initial screen, it is possible to visualize a brief description of the intelligent system, as well as the supporters of this initiative: The Federal University of Pernambuco (UFPE) and the Department of Biomedical Engineering at UFPE. To get to know the members of the project’s development team and their respective functions, it is possible to access the “About” option on the top menu of the screen. The options “Login” and “Consult” are also available. For the “Login” option, health professionals, especially medical laboratory professionals and nurses, will be able to access their private account or register a new account, in cases of first access. In the Consult option, it is possible to view the report with the diagnosis for a specific patient, as long as the user has the patient’s personal locator.

After logging into the system, the user can register a patient or view the complete history of registered patients. In the case of a new registration, personal information such as full name, ID, date of birth, telephone, sex, and full home address will be requested. In the following, the user will be directed to the screen shown in Fig. 6. In this screen, the results of the complete blood count (CBC) with differential must be entered. The units and reference values are available next to each of the hematological parameters. After filling in the CBC, the user will be directed to the screens for the other blood tests and arterial blood gas tests, as shown in Figs. 7 and 8. Thus, the list of tests required to make the predictions will be complete. The user can then check the hematological parameters entered to be sure that everything is correct. If he realizes that he made a typo, he can go back to the previous steps and correct it.

Additional serological tests screen: additional tests, such as total, direct, and indirect bilirubin, can be inserted in this screen. In addition, serum glicose, dosage lipase, urea, PTT, D-dimer, lactic dehydrogenase, prothrombin time, CRP, and creatinine results can also be included here. The units and reference values can be viewed next to each hematological parameter.

Finally, the report will be available immediately, similarly to that shown in Fig. 9. The diagnostic report will indicate the positive or negative diagnosis for COVID-19. Hospitalization predictions are also reported, indicating the best type of hospitalization for the patient: regular ward, semi-ICU or ICU. Information on accuracy, kappa index, sensitivity, and specificity of the determination of each of these scenarios are also available, in order to assist the physician’s decision making. In addition to viewing the report, it is also possible to print it.

Results screen: In this screen it is possible to view the patient’s diagnostic report. In the report, the diagnosis for COVID-19 is available, as well as the hospitalization predictions, indicating whether the patient should be admitted to the regular ward, semi-intensive care unit, or to the ICU. Information on accuracy, kappa index, sensitivity, and specificity of the determination of each of these scenarios are also available, in order to assist the physician’s decision making. In addition to viewing the report, it is also possible to print it.

Results

The results of this research are organized in three parts: in the first part, we present the investigation of the best classification architecture for the original dataset and for the version with the reduced number of attributes through feature selection based on the PSO algorithm. The most suitable classifier was used in the implementation of the COVID-19 diagnostic support web system, Heg.IA web. In the second part, we present the use of decision trees as an alternative to build humanly intelligible models to support clinical diagnosis. These models, although less sophisticated, are important for the clinician to have a better understanding of the hematological parameters that are not only more prevalent in the diagnosis, but which can also be important for monitoring the clinical status of patients with COVID-19. Finally, in the third part, we present the prototype of the Heg.IA web system, in operation in the city of Paudalho, Brazil, since June 2020.

Evaluation of classifiers to support the diagnosis of COVID-19

We investigated the best classifier architectures for classifying patterns of hematological parameters. We investigate multilayer perceptron artificial neural networks, support vector machines, Bayesian networks, Naive Bayes classifiers, simple decision trees, and random forests. We studied the behavior of these classification architectures for the 6215 patient records, 258 of which were positive for COVID-19, mostly moderate and severe cases of COVID-19.

Table 3 shows the average and standard error for accuracy, sensitivity, specificity, and area under the ROC curve, taking into account the original dataset, with all 43 attributes. Looking at Table 3, we can see that Bayes Net’s performance is optimal (nearly 1.0000 or 100.00%) for all metrics, with standard error less than 0.0001. The results for Naive Bayes and the three MLP configurations are also considered close to optimal, with the sample means of all metrics greater than 99%, but with sample standard errors much higher than those of Bayes net, at least 100 times greater for AUC and specificity. For the Bayes net, as described on Table 3, sensitivity, specificity, and AUC reached 0.9997 (0.0007), 1.0000 (0.0000), and 1.0000 (0.0000), respectively.

Analyzing the results for the SVMs, we notice that the accuracy values are all greater than 95%. The results for the RBF kernel are similar and stable, concentrated around 95% for C = 0.01. For RBF kernel with G = 0.25 and C = 0.1, sensibility, specificity, and AUC reached 0.9998 (0.0005), 1.000 (0.000), and 0.9999 (0.0003), respectively. For the linear kernel, the accuracy results vary around 99% with less than 1% variation, with higher C values (C = 0.1,1, and 10).

Table 3 also shows that the accuracy results for Random Tree correspond to good classification results and that there was no bias due to base imbalance: the sensitivity was 0.999 (0.001), the specificity was 0.98 (0.03), and the AUC was 0.99 (0.02). Considering the accuracy, the best results were those obtained with 40, 50, 60, 70, 80, 90, and 100 trees. For the last case, sensitivity, specificity, and AUC reached 1.000 (0.000), 0.998 (0.008), and 1.000 (0.000), respectively.

Figures 10 and 11 illustrate the sensitivity and specificity values for the database with all 43 attributes, respectively. For building the boxplots, only one example of each type of classifier that presented a good performance was used, since many of them presented similar results. The selected examples are highlighted in the Table 3.

When we analyze the results of the box plots in Figs. 10 and 11, we can see that the sensitivity and specificity results are quite accurate, with results above 0.9 in both metrics. Considering the sensitivity boxplots, we can see that the selected classifiers have similar performance, difficult to distinguish visually. On the other hand, when analyzing the specificity plots, we see the presence of outliers for the random tree and MLP classifiers.

In order to distinguish the best classifier among those tested, we performed the paired t-test, aiming to compare the random forest and Bayes network, which presented the highest mean values of AUC, and other metrics above 99.9%. In this way, a p-value of 0.000000346 was found, using the accuracy values. It can be interpreted that there is a statistically significant difference between these two classifiers, and, therefore, the random forest with 100 trees classifier was chosen, considering that it has a higher average accuracy.

The feature selection method based on the PSO algorithm returned the following eight attributes that were statistically most relevant for classification: serum glucose, lactic dehydrogenase, lipase dosage, partial thromboplastin time, troponin, ferritin, D-dimer, and indirect bilirubin. Table 4 shows the average and standard error for accuracy, sensitivity, specificity, and area under the ROC curve results, taking into account these 8-feature PSO dimension-reduced dataset. In addition, Figs. 12 and 11 present the sensitivity and specificity behavior according to the set of classifiers we adopted herein this work.

Table 4 presents detailed results as sample average and standard error regarding accuracy, sensitivity, specificity, and AUC. In a similar way to the classification using all the extracted attributes, the sensitivity of the selected settings showed incredibly good results, as can be seen in Fig. 12. In addition, the mean specificity also remained close to 1.000. According to the Table 3, the best results were obtained with the Bayes Net classifier, with all metrics concentrated at 100% out of 1. The other classifiers also showed average accuracy above 95%. Among them, SVMs with C = 0.001 presented the worst performance. In contrast, for experiments with SVMs and C equal to 0.1, the results for the linear kernels, polynomial of degrees 2 and 3, and RBF with γ of 0.25 and 0.5, were significantly improved, all of them in the range of 99.9%. For C equal to 1.0, all results were considerably improved for all settings, including degrees of 4 and 5. For C equal to 10.00, there was no noticeable improvement compared to C equal to 1.00. Table 4 shows that, in this database with the 8 statistically most relevant hematological parameters, all tested SVM configurations were robust to high database imbalance only for C equal to 1.0 and 10.0, according to values of specificity and AUC, with grade 4 and 5 polynomial kernels being the least robust configurations. In addition, Table 4 details that Random Forests and the J48 decision tree are well suited to support the diagnosis of COVID-19 using the eight selected hematological parameters: accuracy of 99.98% (0.05), sensitivity of 0.998 (0.006), and maximum specificity and AUC with sample standard error less than 0.0001, for classification using random forest with 100 trees (Fig. 13).

Humanly intelligible models to support the clinical diagnosis of COVID-19

Figure 14 shows the J48 decision tree trained on the 8-feature dataset: serum glucose, partial thromboplastin time, troponin, lipase dosage, lactic dehydrogenase, ferritin, indirect bilirubin, and D-dimer. Training was done using 10- fold cross validation. The result shows that, for all 6215 records, the accuracy was 99.9839%, the sensitivity, specificity, and AUC were maximum. Only a single instance was misclassified: a negative record for COVID-19 out of the total of 5457 negatives was classified as positive. All COVID-19 positive records were classified correctly.

Clinical decision protocol based on J48 decision tree trained on the 8-feature dataset: serum glucose, partial thromboplastin time, troponin, lipase dosage, lactic dehydrogenase, ferritin, indirect bilirubin, and D-dimer. Training was done using 10- fold cross validation. Reduced partial thromboplastin time (< 22) is associated to symptomatic COVID-19 case. The accuracy was 99.9839%, the sensitivity, specificity, and AUC were maximum. We had 1 false positive.

To assess the influence of the other 7 hematological parameters on the diagnosis of COVID-19, we retrained the J48 decision tree after removing the partial thromboplastin time. Training was done using tenfold cross validation. Figure 15 illustrates the resulting decision-making protocol, where only the following hematological parameters are statistically relevant: ferritin, troponin, lipase dosage, and serum glucose. The accuracy obtained was 99.8552%, sensitivity of 0.999, specificity of 0.985, and AUC of 0.985. We had 5 false positives and 4 false negatives.

Clinical decision protocol based on J48 decision tree trained on the 7-feature dataset. Only the following were statistically relevant: lipase dosage, ferritin, troponin, and serum glucose. Training was done using tenfold cross validation. Lipase dosage was dominant in this decision protocol. The accuracy was 99.8552%, sensitivity of 0.999, specificity of 0.985, and AUC of 0.985. We had 5 false positives and 4 false negatives.

By removing the partial thromboplastin time and lipase dosage, we can assess the importance of the other 6 hematological parameters. We train a J48 decision tree again using tenfold cross validation. The results are shown in Fig. 16. The hematological parameters considered most relevant this time were: ferritin, troponin, D-dimer, and serum glucose. The accuracy obtained was 99.8713%, with a sensitivity of 0.999, specificity of 0.981, and AUC of 0.990. We get 3 false positives and 5 false negatives.

Clinical decision protocol based on J48 decision tree trained on the 6-feature dataset. Only the following were statistically relevant: ferritin, troponin, D-dimer, and serum glucose. Training was done using tenfold cross validation. The accuracy obtained was 99.8713%, with a sensitivity of 0.999, specificity of 0.981, and AUC of 0.990. We get 3 false positives and 5 false negatives.

We retrained a J48 decision tree removing the partial thromboplastin time, ferritin, and lipase dosage from the database. The training was done using tenfold cross validation. The decision protocol is shown in Fig. 17. All 5 remaining hematological parameters were considered relevant in the diagnostic decision: serum glucose, D-dimer, troponin, lactic dehydrogenase, and indirect bilirubin. The accuracy obtained was 99.7908%, with a sensitivity of 0.998, specificity of 0.955, and AUC of 0.982. We got 1 false positive and 12 false negatives.

Clinical decision protocol based on J48 decision tree trained on the 5-feature dataset. All remaining features were statistically relevant: serum glucose, D-dimer, troponin, lactic dehydrogenase, and indirect bilirubin. Training was done using tenfold cross validation. The accuracy obtained was 99.7908%, with a sensitivity of 0.998, specificity of 0.955, and AUC of 0.982. We got 1 false positive and 12 false negatives.

Discussion

Evaluation of classifiers to support the diagnosis of COVID-19

Given that the best results were obtained with random forests and these classifiers are sufficiently robust to noisy data (possible typing errors of hematological parameters and missing data that need to be statistically estimated), we decided to adopt a Random Forest with 100 trees to implement the Heg.IA web for COVID-19 diagnosis support. Another advantage of Random Forests and decision trees in general is their low computational cost of processing, although the memory consumption can be considerable. Also, sorting with decision trees does not require input attributes to be rescheduled through normalization or standardization, a necessary preprocessing stage when we have to work with neural networks, support vector machines or Bayesian methods. Considering the 100-tree random forest, specificity, sensitivity, and AUC reached 1.0000 (0.0000), 0.9983 (0.0079), and 1.0000 (0.0000), respectively.

These results show that the 43 attributes (age and 42 hematological attributes) adopted in this work can be used to diagnose patients with symptoms of COVID-19 with a precision close to that obtained with RT-PCR, as shown in Table 3, for the results with Random Forests. Therefore, the Heg.IA web system can be an important aid for the development of a rapid protocol for the clinical diagnosis of COVID-19, providing more certainty for clinical practice. These results also show that these 42 hematological parameters, given the high accuracy, sensitivity, specificity, and AUC values obtained, comparable to those obtained with RTPCR, can be very important for monitoring COVID-19-positive patients.

Furthermore, the results also show that the eight hematological parameters chosen by the PSO algorithm are very important for the clinical diagnosis of COVID-19 in symptomatic patients, reaching results comparable to those obtained with RT-PCR, the gold standard method for the diagnosis of COVID-19. Thereby, these hematological parameters are strongly related to COVID-19 itself, and should not only be part of COVID-19 clinical diagnosis protocols, but also protocols for patient follow-up and assessment of patient progress and severity of disease, as the review of the state of the art demonstrates.

The change in serum glucose by COVID-19 may explain the fact that diabetes is considered a comorbidity that can increase the chances of worsening the patient’s death by COVID-19. Indirect bilirubin is formed by the breakdown of red blood cells and is then transported to the liver. Bilirubin can be related to several complications in the blood, changes in heart function and liver problems. This, in turn, may be strongly related to how the chances of aggravation and death in patients with heart disease and liver disease may increase. Troponin alterations, also considered statistically relevant in this research, also show how patients with heart disease can have their chances of worsening the disease. D-dimer is one of the breakdown products of fibrin, a protein that is involved in clot formation. Therefore, when there are changes in the coagulation process, it is possible that there is a greater amount of circulating D-dimer. Lactic dehydrogenase is an enzyme present in all cells, but in greater concentration in the lungs, heart, skeletal muscle and liver. High levels of lactic dehydrogenase in patients with COVID-19 increase the chances of aggravation of the infection and, therefore, the probability of death. Lipase is an enzyme that is part of the digestive process. It works by breaking down fat molecules to be more easily absorbed by the intestine. The lipase test is a clinical analysis that helps identify changes in the levels of this enzyme in the individual’s body. High levels of lipase are associated with acute pancreatitis. Several studies show that COVID-19 also affects the pancreas in moderate and severe cases. State-of-the-art investigations show that the occurrence of pancreatitis at different levels is reasonably common in patients positive for COVID-19 and may be a highly important clinical occurrence in intubated patients.