Abstract

Teaching people clever heuristics is a promising approach to improve decision-making under uncertainty. The theory of resource rationality makes it possible to leverage machine learning to discover optimal heuristics automatically. One bottleneck of this approach is that the resulting decision strategies are only as good as the model of the decision problem that the machine learning methods were applied to. This is problematic because even domain experts cannot give complete and fully accurate descriptions of the decisions they face. To address this problem, we develop strategy discovery methods that are robust to potential inaccuracies in the description of the scenarios in which people will use the discovered decision strategies. The basic idea is to derive the strategy that will perform best in expectation across all possible real-world problems that could have given rise to the likely erroneous description that a domain expert provided. To achieve this, our method uses a probabilistic model of how the description of a decision problem might be corrupted by biases in human judgment and memory. Our method uses this model to perform Bayesian inference on which real-world scenarios might have given rise to the provided descriptions. We applied our Bayesian approach to robust strategy discovery in two domains: planning and risky choice. In both applications, we find that our approach is more robust to errors in the description of the decision problem and that teaching the strategies it discovers significantly improves human decision-making in scenarios where approaches ignoring the risk that the description might be incorrect are ineffective or even harmful. The methods developed in this article are an important step towards leveraging machine learning to improve human decision-making in the real world because they tackle the problem that the real world is fundamentally uncertain.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The decisions we have to make in the real world are too complex and too diverse for us to make them all with the same strategy. People therefore need different decision strategies for different types of decisions (Gigerenzer & Todd, 1999; Simon, 1956; Todd & Gigerenzer, 2012). While people often have good heuristics for certain types of decisions (Todd & Gigerenzer, 2012), they often lack good decision strategies for other situations. This negatively affects people’s decisions about their finances, health, and education (O’Donoghue and Rabin, 2015). The resulting mistakes can have devastating consequences for the individuals, families, organizations, and society at large. One way to address this problem is to increase people’s decision-making literacy. This idea is currently being advocated for as a public policy intervention under the name of boosting (Hertwig and Grüne-Yanoff, 2017). One approach to boosting is to discover clever heuristics for the types of decisions that people struggle with and teach them to people (Hafenbrädl et al., 2016; Gigerenzer & Todd, 1999). Recent work has built on the definition of optimal heuristics for human decision-making by (Lieder and Griffiths, 2020) to develop machine learning methods for discovering clever heuristics for human decision-making (Callaway et al., 2018a; Callaway, Gul et al., 2018; Lieder et al., 2017; Krueger et al., 2022; Callaway et al., 2022b; Skirzyński et al., 2021; Consul et al., 2022) as well as intelligent cognitive tutors that teach them to people (Callaway et al., 2022a; Consul et al., 2022) and AI-generated decision aids that guide people through the application of the discovered strategies (Becker et al., 2022). Here, we use the term “heuristic” in the broad sense of “any decision strategy that uses only a subset of all potentially relevant information and is not guaranteed to always yield the optimal solution.”

Automatic strategy discovery methods compute strategies that achieve the best possible trade-off between the quality of the resulting decisions and the amount of effort that people have to expend to reach them (Callaway et al., 2018a; Callaway, Gul et al., 2018; Lieder et al., 2017; Consul et al., 2022; Krueger et al., 2022). This optimization is performed on a model of the environment in which the decision strategies are to be used. This approach works well when the model of the decision environment is accurate. However, when there is a mismatch between the model and reality, the discovered strategy can perform arbitrarily poorly in the real world. To illustrate this problem, let us consider a hypothetical application of automatic strategy discovery to improving how credit officers decide which mortgage applications to approve. In this case, the decision strategy might take the form of a decision tree, such as “If the applicant’s credit score is at least 740, then approve the application. Else, if the applicant’s credit score is at least 580, then approve the application if the applicant’s disposable income is at least x% of the requested amount”.Footnote 1 The model of the decision environment would have to include which information is available to the credit officer (e.g., the price of the house the applicant is planning to buy, the mortgage’s interest rates, the applicant’s credit score, income, and savings), which outcomes of granting the mortgage would have in different scenarios (e.g., housing prices increase/decrease by x%), how probable those scenarios are, and how the outcomes the bank would experience in each of those scenarios (e.g., not getting their money back because the borrower goes bankrupt) depend on the available information. Building such a model requires estimates of the probabilities of various events (e.g., a collapse of the housing market) that have to be obtained from domain experts (e.g., credit officers). In the following, we will refer to such estimates as descriptions of the environment.

One important obstacle to applying automatic strategy discovery to improve human decision-making is that our models of the real-world scenarios in which people have to make decisions will usually be at least somewhat inaccurate (model-misspecification). For instance, prior to the subprime mortgage crisis, most credit officers’ descriptions of the housing market would have severely underestimated the risk that housing prices might drop as precipitously as they subsequently did (Demyanyk and Van Hemert, 2011). If extant automatic strategy discovery methods had been naively applied to the estimates of those domain experts, then they would most likely have recommended heuristics that pay too little attention to information about the applicant’s ability to pay back their mortgage if their house were to lose most of its value. Strategy discovery methods therefore have to be robust to the ways in which the description they are applied to might be wrong. Concretely, in this example, a robust strategy method should produce a heuristic for evaluating mortgage applications that works not only if the credit officers’ estimates were correct but also if those estimates were distorted by fallibility of human judgment that arises from limitations of human memory, systematic errors in people’s judgments (Tversky and Kahneman, 1974), limited information, and fundamental uncertainty about the future (Hertwig et al., 2019). Therefore, a robust strategy discovery method might have recommended a heuristic that pays more attention to the applicant’s income, savings, and credit score than would be necessary if the credit officers’ descriptions of the housing market were correct.

The main contribution of this article is to propose a general machine learning method for discovering clever heuristics that is robust to errors in the description of the decision problem (e.g., deciding which mortgage applications to approve and which to decline). Unlike previous methods, our new method yields good decision strategies even when the description of the decision problem is biased and incomplete (see Fig. 1). The basic idea is to find the heuristic that works best in expectation over all possible realities that could have given rise to the likely incomplete and partially incorrect description provided by a human expert. The resulting uncertainty about what the world might be like is handled using Bayesian inference. Our approach computes the heuristics that performs best in expectation over all possible worlds that might have given rise to the provided specification. In our example, this would include scenarios in which the risk that housing prices might drop is higher than the provided estimate, as well as scenarios in which it is lower. We thereby provide a first proof-of-concept for leveraging machine learning to discover heuristics that are robust to our uncertainty about the environment. We evaluate our new method in simulations and behavioral experiments across two domains: route planning and multi-alternative risky choice. In each case, we apply our machine learning method to derive adaptive decision strategies from incorrect descriptions of multiple decision problems and teach people to apply the automatically discovered strategies. In each case, people who were taught the strategies discovered by our new robust method made significantly better decisions than people who were either taught no strategies or strategies discovered by previous methods. Our findings suggest that our method is robust to common systematic and non-systematic errors in people’s descriptions of different decision problems. The discovered heuristics tend to work well in the true environment, even when the model was derived from a biased description of limited experience. This will be important for future efforts to derive clever heuristics from people’s descriptions of the decisions they face in the real world. Moreover, our definition of rational strategies for robust decision-making has implications for the debate about human rationality. Our theory and methods provide a starting point for understanding the robustness of human decision-making, modeling how people learn robust decision strategies, and recreating this robustness in machines.

The general idea of robust strategy discovery. The goal is to discover heuristics that perform well in the real world when given only people’s biased descriptions. To achieve this, we explicitly model how cognitive biases distort domain expert’s descriptions of the scenarios in which people have to make decisions. Metaphorically speaking, the difference between those descriptions and the real-world scenarios they are meant to capture is akin to the difference between the maps that early explorers like Columbus used and the territory that those maps were meant to depict

The structure for this paper is as follows: We start by introducing the theoretical and technical background for automatic strategy discovery (Section 2). We then introduce our general new approach to making strategy discovery methods robust to uncertainty and model misspecification (Section 3). The following sections apply this general approach to the domains of planning (Section 4 and Section 5) and multi-alternative risky choice (Section 6 and Section 7), respectively. For each domain, we first apply our computational approach to discover robust decision strategies (Section 4 and Section 6) and then conduct experiments to test if teaching them to people is a viable approach to improve human decision-making (Section 5 and Section 7). In both cases, our approach succeeds to help people make better decisions when the true environment is partially unknown. We close with a discussion of directions for future work on understanding and improving human decision-making (Section 8).

Background

Our approach to improving human decision-making through robust strategy discovery builds on the theory of resource rationality, machine learning methods for automatic strategy discovery, empirical methods for assessing human decision-making, intelligent cognitive tutors, and previous findings about people’s cognitive biases. Here, we therefore briefly introduce each of these concepts in turn.

Resource Rationality

Lieder and Griffiths (2020) recently introduced a new theory of bounded rationality that provides a mathematical definition of optimal heuristics for human decision-making. Unlike previous normative theories, such as expected utility theory (von Neumann and Morgenstern, 1944), it takes into account that people’s time and cognitive resources are bounded. Its prescriptions for good decision-making (Lieder and Griffiths, 2020; Lieder et al., 2017; Krueger et al., 2022; Callaway et al., 2022b) thus, at least sometimes, resemble simple fast-and-frugal heuristics (Gigerenzer & Todd, 1999).

Building on the notion of bounded optimality from artificial intelligence (Russell and Subramanian, 1994), the theory of resource rationality states that people should make optimal use of their finite computational resources. These computational resources are modeled as a set of elementary information processing operations. Each of these operations has a cost that reflects how much computational resources it requires. Those operations are assumed to be the building blocks of people’s cognitive strategies. To be resource-rational, a planning strategy has to achieve the optimal tradeoff between the expected return of the resulting decision and the expected cost of the planning operation it will perform to reach that decision. Both depend on the structure of the environment. Concretely, Lieder and Griffiths (2020) define the extent to which using the cognitive strategy h in an environment E constitutes effective use of the limited computational resources of the agent’s brain B as the strategy’s resource rationality

where \(u(\text {result})\) is the agent’s subjective utility u of the outcomes (\(\text {result}\)) of the choices made by the heuristic h, and \(\text {cost}(t_h,\rho )\) denotes the total opportunity cost of investing the cognitive resources \(\rho\) used or blocked by the heuristic h for the duration \(t_h\) of its execution. Both the result of applying the heuristic and its execution time depend on the situation in which it is applied. The expected value (\(\mathbb {E}\)) weighs the utility and cost for each possible situation by their posterior probability given the environment E, the situation s the decision-maker finds themselves in, and the cognitive capacities of the decision-maker (B).

The brain’s computational limitations and uncertainty about the environment limit how effective people’s decision strategies can be. That is, the brain can only execute some strategies (\(H_B\)) but not others, and the extent to which people can adapt to their environment is constrained by the limited data d that they have about the environment and which situations they will encounter (Lieder and Griffiths, 2020). Under these constraints, the resource-rational heuristic is

Automatic Strategy Discovery

Equation 2 specifies a criterion that optimal heuristics must meet. But it does not directly tell us what those optimal heuristics are. Finding out what those optimal heuristics are is known as strategy discovery.

Given a model of the environment, the resource-rational heuristic \(h^\star\) for an agent with the computational resources B can be computed by reformulating the definition of the resource-rational heuristic as the solution to a metalevel Markov decision process (MDP) and applying methods from dynamic programming or reinforcement learning to compute its optimal policy (Callaway et al., 2018a; Callaway, Gul et al., 2018; Lieder et al., 2017; Krueger et al., 2022; Callaway et al., 2022b). This approach models the decision process as a series of computations that can be chosen one by one. Each computation updates the person’s beliefs about the returns of alternative courses of action. Rules for selecting computations correspond to alternative decision strategies.

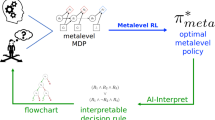

As illustrated in Fig. 2a, the basic idea of this approach is to use a metalevel MDP to model the decision problems that people face and the cognitive architecture that they have available to solve them, and then apply a reinforcement learning method to approximate its optimal policy. Formally, a metalevel MDP (see Fig. 2b) is a four-tuple \(M_{\text {meta}}=(\mathcal {B}, \mathcal {C}, T_{\text {meta}}, r_{\text {meta}})\) comprising the set of possible beliefs \(\mathcal {B}\) that the agent can have, the set of computational primitives \(\mathcal {C}\), a probabilistic model \(T_{\text {meta}}(b,c,b')\) of how possible computations c might update the belief state (e.g., from b to \(b'\)), and the metalevel reward function \(r_{\text {meta}}\) which encodes the cost of computations \(c \in \mathcal {C}\) and the utility of the action chosen when deliberation is terminated. In this formal framework, cognitive strategies correspond to metalevel policies (\(\pi _{\text {meta}}: \mathcal {B} \mapsto \mathcal {C}\)) that specify which computation will be performed in a given belief state.

Automatic strategy discovery. a Illustration of the general approach. We discover optimal decision strategies by modeling the decision problems people face as metalevel Markov decision processes (MDPs) and solving them using reinforcement learning methods. The discovered optimal strategies can then be taught to people using intelligent cognitive tutors. b Illustration of a metalevel MDP. A metalevel MDP is characterized by its set of belief states (B), the computations (C) which represent deliberation, and move an agent from one belief state to another belief state. Computations incur costs, and provide rewards (R) upon termination of deliberation

Methods for Assessing Human Decision-Making and Measuring People’s Decision Strategies

To find out which heuristics people use to make decisions, some decision scientists conduct experiments in which they measure which pieces of information participants acquire at which point in their decision-making process (Payne et al., 1993). These experiments initially conceal all information about the choices behind opaque boxes. To reveal the information underneath a box, the participant must click it. This requirement renders each click indicative of the elementary information processing operation that incorporates the revealed information into the decision. By reporting the sequence of clicks that a participant makes, these methods thereby yield insights into the underlying decision strategies.

We have recently extended this approach to sequential decision problems that require planning (Callaway et al., 2022b; Jain et al., in press). Our Mouselab-MDP paradigm (see Fig. 3) shows the participant a map of an environment where each location harbors an occluded positive or negative reward. To find out which path to take, the participant must click on the locations they consider visiting to uncover their rewards. Each of these clicks is recorded and interpreted as the reflection of one elementary planning operation. The cost of planning is externalized as a fee that people have to pay for each click. People can stop planning and start navigating through the environment at any time. However, once they have started to move through the environment, they cannot resume planning. The participant has to follow one of the paths along the arrows to one of the outermost nodes.

Illustration of the Mouselab-MDP paradigm. Rewards are revealed by clicking with the mouse before selecting a path using the keyboard. This figure shows one concrete task you can create using this paradigm. Many other tasks can be created by varying the size and layout of the environment, the distributions the rewards are drawn from, and the cost of clicking

This experimental task allows us to measure the extent to which a person’s decision-making is resource-rational using the measure of resource rationality specified in Eq. 1. In this task, for a given trial, \(u(\textit{result})\) is the sum of rewards along the chosen path. \(\text {cost}(t_h,\rho )\) is the number of clicks the participant made because their opportunity cost is assumed to be $1 per click. The resource-rationality score \(\text {RR}\) is then measured as the difference of the average result and the average cost of clicking across all the trials a participant goes through.

Intelligent Cognitive Tutors

Early work found that teaching people economic principles for arriving at the best possible decision had limited success at improving their decisions in the real world (Larrick, 2002). This is likely because economic principles ignore crucial constraints on human decision-making (i.e., limited time and bounded cognitive resources). The exhaustive planning that would be required to apply those principles to the real world would waste virtually all of a person’s time on the very first decision (e.g., “Should I get up or go back to sleep?”), thereby depriving them of the opportunities afforded by later decisions. In contrast, resource-rational heuristics allocate people’s limited time and bounded cognitive resources in such a way that they earn the highest possible sum of rewards across the very long series of decisions that constitute life. This is why teaching resource-rational heuristics might improve people’s decisions in large and complex real-world problems where exhaustive planning would either be impossible or take a disproportionately large amount of time that could be better spent on other things.

In some of our prior work, we have built on automatic strategy discovery methods to develop intelligent tutors that teach people the optimal planning strategies for a given environment (Lieder et al., 2019; Callaway et al., 2022a; Consul et al., 2022). Most of the tutors let people practice planning in the Mouselab-MDP paradigm and gave them immediate feedback on each planning operation that they chose to perform. Callaway et al. (2022a) found that participants learned to use the automatically discovered strategies, remembered them, and used them in larger and more complex environments with a similar structure. Furthermore, Callaway et al. (2022a) also found that participants were able to transfer the taught strategy to a naturalistic planning task. These findings suggest that automatic strategy discovery can be used to improve human decision-making if the discovered strategies are well-adapted to the real-world situations where people might use them. Finally, Consul et al. (2022) found that showing people video demonstrations of the click sequences made by the optimal strategy is also highly effective. Here, we build on this finding to develop cognitive tutors that teach automatically discovered strategies by demonstrating them to people.

Biases, Uncertainty, and Ignorance

Existing strategy discovery methods assume that the environment is completely known. But this is rarely the case for decision problems in the real world. On the contrary, decision-making in the real-world is characterized by fundamental uncertainty about the structure of the environment because the real world is very complex and even domain experts have only limited information about its structure (Hertwig et al., 2019). Research on judgment and decision-making has revealed a long list of systematic errors that people make when judging the probabilities of possible outcomes (Kahneman et al., 1982). For instance, people’s estimates of the probability that an event will occur are known to be biased by how easily it comes to mind (Tversky and Kahneman, 1973) and how easily an event comes to mind is affected by several biases in human memory. For example, when a person has experienced an event that was extremely bad or extremely good they remember it much more easily than an equally common event that is more neutral (Madan et al., 2014). Furthermore, recent events come to mind more easily than distant events (Deese and Kaufman, 1957) and people tend to underestimate the frequency of rare events in decisions from experience (Hertwig et al., 2004). As a consequence of these biases, people’s descriptions of real-world environments tend to be incomplete and biased. Modern methods for eliciting estimates from domain experts (Garthwaite et al., 2005) ask experts in such a way that the expert’s answers are not overly distorted by his or her biases, but even the best elicitation methods cannot eliminate the influence of cognitive biases entirely. Moreover, even if these methods succeeded at eliciting the expert’s true beliefs, those beliefs would still be distorted by the biases in how the expert formed those beliefs.

Robust Strategy Discovery

The definition of resource-rational heuristics in Eq. 2 integrates out our uncertainty about the true environment E and the situations that decision-makers might find themselves in. If we ignored this uncertainty and instead used an expert’s description \(d=(d_s,d_E)\) of the environment (\(d_E\)) and the situation (\(d_s\)), then we could approximate the resource-rational heuristic by

The danger of that approach is that the expert’s description of the environment (e.g., the stock market) could be wrong.

Even if we ask domain experts (e.g., investment bankers), their answers could be incomplete or biased in ways that suggest strategies that ignore critical information. For instance, the formal and informal models that led to the excessive risk taking that caused the global financial crisis did not consider that the housing market might collapse, and very few financial experts would have anticipated that a pandemic might cause a global recession in 2020. Overlooking the possibility of such significant extreme events is very dangerous (Taleb, 2007). It is therefore imperative to model and account for the ways in which people’s descriptions of a decision environment might be incorrect. We refer to these discrepancies as model-misspecification. The main contribution of this article is to propose a general method for discovering clever heuristics that are robust to model-misspecification. The basic idea is to find the heuristic that is most resource-rational in expectation over all possible true environments that could have given rise to the likely incomplete and partially incorrect description provided by a human expert. The following three subsections describe the three key steps of this process: (i) formulating a model of the ways in which people’s descriptions might be wrong (Section 3.1), (ii) using this model to infer which true environment might have given rise to this description (Section 3.2), and (iii) training strategy discovery methods (Section 3.3) on potential true environments.

Modeling Model Misspecification

People’s descriptions d of a given environment e vary depending on what they have experienced, which parts of their experience they remember, and how they interpret it. Each of these three aspects varies considerably across different people. We therefore model the process giving rise to a description d as a probabilistic generative model \(P(d,e)=P(e)\cdot P(d|e)\). This model combines two parts: a broad prior distribution P(e) over which environments might be possible and the likelihood function P(d|e) that specifies how likely a given environment e is to give rise to a possible description d. This likelihood function expresses the probability with which different types of model misspecification might occur and what their consequences might be. One way to develop such a likelihood function is to model how likely different aspects of the environment will (not) be observed (\(P(\mathbf {o}|e)\)), what a person who has made those observations (\(\mathbf {o}\)) is likely to remember (\(P(\mathbf {m}|\mathbf {o})\)), and how a person might describe the environment based on their memories (\(P(d | \mathbf {m})\)). The first component will reflect the fact that rare events are unlikely to be experienced—a fact known to cause the underestimation of extreme events in decisions from experience (Hertwig et al., 2004). The latter two elements can be informed by the extension literature on biases in human memory, such as memory biases in favor of extreme events (Madan et al., 2014), and biases in human judgment (Kahneman et al., 1982). The three components can then be combined into a model of model misspecification, that is \(P(d|e) = \sum _{\mathbf {o},\mathbf {m}} P(d|\mathbf {m}) \cdot P(m|\mathbf {o} \cdot P(o|e)) ;\) we will use a simple version of this approach in “4” and “5”. Alternatively, one can empirically estimate P(d|e) by having people interact with a known environment and modeling their descriptions as a function of the true environment; we will use this approach in “6” and “7” (see “6.2.1”).

Performing Bayesian Inference on the True Environment to Compute Resource-Rational Heuristics

According to Eq. 2, the optimal heuristic given a description d achieves the best possible cost-benefit trade-off in expectation across all possible environments. In this expectation, the heuristic’s resource rationality in each possible environment e is weighted by the posterior probability of that environment given the description d. This suggests that strategy discovery methods can be made robust by applying them to samples from the posterior distribution P(E|d) instead of applying them to the description d itself. Therefore, our solution proceeds in two steps:

-

1.

Estimate \(\mathbf {P(E|d)}\). We use Bayesian inference to get a probability distribution over the possible true environments. The posterior distribution over possible environments is

$$\begin{aligned} P(E=e|d) = \frac{P(E=e)\cdot P(D=d|e)}{P(D=d)}, \end{aligned}$$(4)where \(P(E=e)\) is the prior distribution over possible environments, and the likelihood function \(P(D=d|e)\) is a probabilistic model of model misspecification.

-

2.

Apply strategy discovery methods to samples from the posterior distribution. To generate a training set that encourages robust solutions, we independently sample training environments from the posterior distribution, that is \(\{ e_{k} \}_{k=1}^{N} \sim P(E|d).\) Given sufficiently many samples from the posterior distribution, standard reinforcement learning methods can be used to approximate the resource-rational heuristic \(h\star\) defined in Eq. 2 by the policy that maximizes the average return across the MDPs defined by the sampled environments \(\{ e_{k} \}_{k=1}^{N}\).

Methods for (Robust) Strategy Discovery

In this work, we created a robust and a non-robust version of each of the three strategy discovery methods presented in the remainder of this section: deep recurrent Q-learning, deep meta-reinforcement learning, and Bayesian metalevel policy search. The robust versions are trained on the posterior distribution over the possible true environment given the description, whereas the non-robust versions are trained directly on the description or the decision problem. These two approaches approximate the resource-rational heuristic defined in Eq. 2 and the non-robust heuristic defined in Eq. 3, respectively. In the subsequent sections, we will evaluate the policies found by those six methods against each other and against a random policy, which always samples uniformly from the set of available computations.

The neural-network-based methods (i.e., deep recurrent Q-networks and meta reinforcement learning) were implemented using TensorFlow (version 1.12) (Abadi et al., 2015) in Python. For these methods, the Adam optimizer (Kingma & Ba, 2019) was used for training. Furthermore, for these approaches, we employed early stopping (Zhang and Yu, 2005) to prevent overfitting. Hyperparameter selection was carried out following the common principles and standard approaches (Smith, 2018), and the best model was stored for evaluation.

Bayesian Metalevel Policy Search (BMPS)

According to rational metareasoning, an optimal metalevel policy is one that chooses the action that maximizes the value of computation (VOC) at each step (Russell et al., 1991):

where VOC(c,b) is the expected increase in utility on performing computation c in belief state b compared to taking a decision immediately.

The calculations of expected values of various possible computations can be arbitrarily hard. Therefore, the Bayesian metalevel policy search (BMPS) method (Callaway, Gul et al., 2018) attempts to approximate the VOC by a linear combination of information-theoretic features:

where the weights \(\{ w_{i} \}_{i=1}^{3}\) are constrained to lie on the probabilistic simplex and \(w_{4} \in [1, h]\), such that h is an upper bound on the number of performed computations. The weights are learned by using Bayesian Optimization (Mockus, 2012) with the expected return as an objective function.

Consequently, we obtain an approximately optimal policy that selects computations which maximize \(\widehat{\text {VOC}}\):

The following sections describe the methods we used as baselines for our new robust BMPS method; therefore, readers who are primarily interested in the results may wish to skip them.

Deep Recurrent Q-learning

Recent advances in deep reinforcement learning have led to great performance in planning tasks and sequential decision problems (Arulkumaran et al., 2017). The standard deep Q-network (DQN) (Mnih et al., 2013) architecture assumes that the state of the environment is fully observable. However, this is not the case for the robust strategy discovery problem, where the structure of the true environment is unknown. On such, so-called partially observable tasks, the performance of neural networks can be improved by adding recurrent layers to the neural network (Hausknecht & Stone, 2015; Narasimhan et al., 2015). We therefore use the resulting deep recurrent Q-network (DRQN) architecture as one of the models for comparison (Hausknecht & Stone, 2015).

Here, we represented the current state of the environment as a stack of two matrices. Each entry of the first matrix encoded whether the corresponding node had already been inspected or not. For the inspected nodes, the entries of the second matrix were the nodes’ rewards. For the uninspected nodes, the entries of the second matrix were zero. This representation allowed us to treat input as an array and pass it into a convolutional neural network(CNN) which formed the initial layers of our DRQN model. The model architecture consisted of four convolutional layers, which had 32, 64, 128, and 200 filters respectively. The outputs from convolutional layers were passed into a long short-term memory (LSTM) network layer with 200 units (Hochreiter & Schmidhuber, 1997). The activations of this layer were then passed on to a fully connected layer that outputs \(Q_{\text {meta}}\) of the state predicted by the network for each possible computation. Action selection was performed in an epsilon-greedy fashion, that is, the action with the highest activation in the output layer was selected with probability \(1-\varepsilon\) and otherwise the action was selected uniformly at random. The action output was either the number of the node to be clicked on next or the operation that terminates planning and selects the path with the highest expected value according to the current belief state (\(\bot\)).

We also used the additional training utilities like experience replay buffer as used in (Hausknecht & Stone, 2015). Experience buffers enable the learning algorithm to use the seen episodes multiple times while training, and Epsilon-Greedy action selection helps address the exploration-exploitation trade-off.

The pseudocode of our algorithm is provided in Algorithm 2 in Appendix 1. We selected hyperparameters following the standard practices for hyperparameter optimization (Smith, 2018); the resulting values are shown in Table 1 in Appendix 1 and were then used in the simulations reported below.

Deep Meta Reinforcement Learning

Even though the incorporation of a recurrent network makes the DRQN more flexible than the DQN, its architecture and training paradigm only make it suitable for discovering strategies in a single environment. Our aim, however, is to discover robust strategies that perform well across multiple potential true environments. We therefore need learning methods that can incorporate information from multiple training environments. One such method is the deep meta-reinforcement learning (Deep Meta-RL) (Wang et al., 2016). Deep Meta-RL learns to quickly adjust its strategy to the structure of the environment it is.

Our Deep Meta-RL method uses a neural network architecture that is similar to the DRQN architecture (see Fig. 4). The main difference is that the action taken and reward obtained in the previous time step serve as additional inputs to the state of the LSTM in the next time step (Wang et al., 2016). This enables the network to identify whether its current strategy is working or if it needs to be changed, hence increasing the adaptability of the method. The belief state that was an input to the CNN encoder was represented in that same manner as described in the DRQN section above. The CNN encoder had three convolutional layers with 32, 128, and 264 filters, respectively. The LSTM layer had 400 units.

Here, we trained this network architecture with the Asynchronous Actor-Critic Agent (A3C) Algorithm (Mnih et al., 2016). The hyperparameters we used are listed in Table 2 in Appendix 1.

Binz et al. (2022) have recently used a similar approach to discover heuristics for choosing between two alternatives based on multiple attributes.

Discovering Robust Planning Strategies by Modelling Biases in People’s Descriptions of the Environment

To evaluate our general approach to robust strategy discovery, we first apply it to the domain in which automatic strategy discovery has been studied most extensively so far: planning in the Mouselab-MDP paradigm. Concretely, we create two sets of benchmark problems based on two different tasks. For each set, we evaluate the robust versions of the three strategy discovery methods described in Section 3.3 against their non-robust counterparts and a metalevel policy that chooses planning operations randomly. We start by briefly describing how we applied our general approach to robust strategy discovery to the domain of planning, and then present benchmark problems and how well our methods performed on them. We found that Bayesian inference on the structure of the true environment significantly increases the robustness of all strategy discovery methods (Section 4.2). Moreover, we were able to replicate these findings on a second set of more complex benchmark problems (see Appendix 3).

Application of Robust Strategy Discovery to Planning Problems

To evaluate how good our approach is at discovering planning strategies, we applied it to a metalevel MDP model (Section 4.1.2) of the Mouselab-MDP planning paradigm (Section 4.1.1).

Modeling Planning Tasks and Planning Strategies

As it is not possible to observe human planning directly, the underlying cognitive processes must be inferred from their behavior. This makes it difficult to study what strategies they discover, learn, and use. Process-tracing paradigms, such as the Mouselab paradigm (Payne et al., 1988), present participants with tasks that make their behavior highly diagnostic of their unobservable cognitive strategies. The Mouselab-MDP paradigm (Callaway et al., 2017) is a process-tracing paradigm for measuring how people plan. An example Mouselab-MDP environment used in this study is shown in Fig. 5a. In these environments, participants are tasked to select one of several possible paths through a spatial environment, where each location harbors a reward. The participant’s goal is to maximize the sum of the rewards along the chosen path. All the rewards are initially concealed, but the participant can uncover them by clicking on the locations. Critically, each click has a cost of \(\$1\). Thus, the participant has to trade the cost of collecting information off against the value of the collected information for making a better decision.

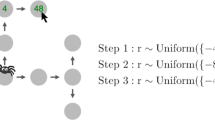

a An example environment of the Mouselab-MDP paradigm as shown to participants. b Alternative representation of an example environment as a grid of three node types. The node values are independently sampled from a uniform distribution (U) with high (H; \(U(\{-48,-24,24,48\})\)), medium (M; \(U(\{-8,-4,4,8\})\)), and low (L; \(U(\{-2,-1,1,2\}\)) variance

Discovering (Robust) Planning Strategies by Solving Metalevel MDPs

Discovering Planning Strategies by Solving Metalevel MDPs

As described in Section 2.2, the problem of deciding how to plan in the Mouselab-MDP paradigm can be modelled as a metalevel MDP. The set of possible beliefs \(\mathcal {B}\) consists of belief states b that represent the belief about the values underlying the all the nodes. The belief about the value of a node is represented by a probability distribution if the node is unobserved and the observed value if the node is observed. In our experiments, we use uniform distributions over a set of values. Thus, the belief state \(b^{(t)}\) at a time t can be represented as (\(R_1^{(t)}, ... R_K^{(t)}\)) where \(R_K^{(t)}\) represents the set of values \(X_k\) the node K can take such that \(b^{(t)}(X_k = x)) = U(x;R_K^{(t)})\) is the probability mass function of a discrete uniform distribution over the set \(R_K^{(t)}\). The set of computations \(\mathcal {C} = \{c_1, c_2, ... c_K, \perp \}\), where \(c_k\) is the click reveals the value of the node k and \(\perp\) terminates planning and selects the path with the highest expected sum of rewards according to the current belief state. The transition function \(T_{\text {meta}}(b^{(t)},c,b^{(t+1)})\) of how the computation \(c_k\) might update the belief state (e.g., from \(b^{(t)}\) to \(b^{(t+1)}\)). Performing computation \(c_k\) sets \(R_k^{(t+1)}\) to {x} with probability \(\frac{1}{|R_k^{(t+1)}|}\) and the metalevel reward function \(r_{\text {meta}}\) which encodes the cost of computations \(c \in \mathcal {C}\) and the utility of the action chosen when computation is terminated. \(r_{meta}(b^{(t)}, c) = -\lambda\) for \(c \in \{c_1, c_2, ... c_k\}\) and \(r_{meta}((R_1, R_2, ..., R_k), \perp ) = \underset{t \in T}{\max } \sum _{k \in t} \frac{1}{|R_k|} \cdot \sum _{x \in R_k}x\) where T is the set of all paths \(\varvec{t}\). In this formal framework, planning strategies correspond to metalevel policies (\(\pi _{\text {meta}}: \mathcal {B} \mapsto \mathcal {C}\)) that specify which computation will be performed in a given belief state. To find out the solution to the metalevel MDP, we use the strategy discovery methods described in Section 3.3.

Applying the Bayesian Approach to Discovering Robust Planning Strategies from Erroneous Descriptions

To discover robust planning strategies, we applied the general robust strategy discovery approach described in Section 3 to descriptions of planning tasks. That is, we perform Bayesian inference on the structure of the true environment given a description and then train our strategy discovery methods on samples from the posterior distribution over planning tasks (see Appendix 2).

In this case, the environment and its description are specified in terms of how variable the possible rewards are at the different locations along the possible routes one could plan (for more detail, see Section 4.2.1).

Strategy Discovery Methods

We discover (robust) planning strategies by solving the metalevel MDPs (Section 4.1.2) of deciding how to plan using the methods described in Section 3.3. In addition to the standard versions of these four learning methods, we also create the corresponding robust versions. To achieve robustness, we train each method on samples from the posterior distribution over possible true environments.

The BMPS policy is defined in terms of features that depend on assumptions about the structure of the environment. Therefore, the robust version of BMPS additionally performs online inference about the structure of the environment during the execution of the BMPS policy. We refer to the resulting version of BMPS as robust BMPS.

Robust Bayesian Metalevel Policy Search (BMPS)

In general, the robustness of the strategy discovery methods is achieved by the usage of the posterior distribution over the possible true environments. BMPS makes use of the posterior distribution by using it in its feature computation step.

The features of the BMPS method rely on a model of the environment. The standard version of the BMPS method would run on a model description d. We introduce a robust version of the BMPS method that performs online inference on the environment (E) based on the description (d) and the belief state (b) that the agent has formed by interacting with the environment, that is, \(\mathrm {E}_{E|d,b}\left[ \widehat{\text {VOC}}_{E}(b,c;\mathbf{w} ) \right]\). Critically, this approximation becomes computationally expensive when there are many possible environments. Therefore, we approximate this expected value by the normalized weighted average across the smallest set of possible environments \(\mathcal {E}_{\text {min}}\) whose combined posterior probability given the current belief state exceeds a threshold \(p_{\text {thresh}}\).

For the purpose of our experiments, we set \(p_{\text {thresh}} = 0.99\).

Evaluation on the Flight Planning Task

The Flight Planning game is a three-step sequential decision-making task that we implemented using the Mouselab-MDP paradigm (see Fig. 5a). In this game, participants plan the route of an airplane across a network of airports. Figure 5b illustrates the statistical structure of one of the Mouselab-MDP task environments we used in this study. This environment is motivated to capture the sequential nature of decision-making in real life.

Benchmarks

To create the first set of benchmarks for robust strategy discovery, we build a dataset comprising 66 (e, d, p)-triplets where the environment e is a version of the task illustrated in Fig. 5, the description d is generated according to the model of model misspecification described below, and the probability \(p=P(E=e)\cdot P(d|e)\) specifies the relative frequency of this pair in the set of benchmarks. The descriptions and the environments are \(3~\times ~3\) matrices where each entry is either L, M, or H (see Fig. 5). Those entries specify whether the variance of the reward distribution at the corresponding location is low (L), medium (M), or high (H). There are 36 equally probable true environments (\(P(E=e)=1/36\)) and they all share the property that each row contains one high (H), one medium (M), and one low (L) variance node. Moreover, the arrangement of node types is the same in the bottom two rows.

For each true environment e, our model of model misspecification yields 2 possible descriptions d—one generated according to the recency effect (Deese and Kaufman, 1957) and the other from the underestimation of rare events (Hertwig et al., 2004). For six such environments, the resulting descriptions from the two biases turned out to be the same.

What makes these benchmark problems difficult is that many environments can give rise to the same specification. As a result, each description d could have plausibly been generated from 11 different true environments.

Model of Model Misspecification

As proof of concept, we worked with two admittedly simplistic models of how the recency effect and the underestimation of rare events affect the way a person would describe a 3-step Mouselab-MDP environment they experienced, represented by \(P(d|e, m_{\text {recency}})\) and \(P(d|e, m_{\text {underestimation}})\), respectively. Figure 6 illustrates these two models.

In brief, the first model incorporates the recency effect in remembering experiences based on how far away in the past they occurred. This model always misremembers the rewards in the first two steps from the starting point as having been identical to the reward in the last step (top row). This is because of the order in which the nodes are walked upon after the participants stop clicking, making the first two steps the less recent ones, whereas the other incorporates the bias in representing rare events. This model underestimates the frequency of rare events by always remembering the rare event in each column as the most frequent one.

For simplicity, we assume that model misspecification arises half of the time from the recency bias and half of the time from the underestimation of the frequency of rare events. We therefore model the probability that a person with experience in an environment e will describe it by the description d as

These assumptions merely serve as a placeholder for a more realistic model of model misspecification to be developed in future work. The contribution of this article is the general approach that combines the Bayesian inversion of such a model with automatic strategy discovery methods.

Simulation Results

We found that robust and non-robust strategy discovery methods discovered qualitatively different types of planning strategies that achieved lower levels of resource rationality.

Figure 7 compares the behavior of two strategies that were discovered by the most-robust method versus the least-robust strategy discovery method, respectively. The strategies are compared in two different scenarios. In scenario A, where the true environment matches the model, both strategies make similar clicks. However, in scenario B, when the true environment differs from the model, the strategy discovered by the non-robust method fails to uncover the high-variance nodes because it inflexibly follows the approach that would have been optimal if the description were correct. In contrast, the robust strategy quickly adapts to the discrepancy between the model and the true environment and collects all the most valuable information. Intuitively, the click sequences generated by this robust strategy seem to illustrate the simple rule of thumb “Find and inspect the large positive or negative outcome of each row. Then choose a path that collects the large positive outcomes and avoids the large negative outcomes.” Critically, the planning strategy discovered with the robust method performs well in both environments, whereas the strategy discovered with the non-robust strategy fails when the true environment does not match the description.

Comparison of the planning strategies discovered by a robust method (BMPS with Bayesian inference) versus a non-robust method (DRQN without Bayesian inference). Example A illustrates the strategies in the specified environment. Example B illustrates their behavior in a different environment that could have given rise to the same description

As shown in Fig. 8, we found that the BMPS method with Bayesian inference on the true environment achieved an almost perfect relative robustness score (\(\rho _{\text {rel}}=0.99\), absolute score = 53.25) and outperformed all the other methods (all \(p<.0001\)). The second-best method was meta-RL with Bayesian inference on the true environment (\(\rho _{\text {rel}}=0.91\), absolute score = 49.16). The addition of Bayesian inference on the true environment significantly improved the robustness of all methods: It improved the resource rationality of the decision strategies discovered by BMPS from \(42.47 \pm 0.41\) to \(53.25 \pm 0.42\) (\(t(47914)=-34.78\), \(p<.001\); effect size \(d=.318\)) and had similar effects for the meta-RL method (\(35.54 \pm 0.43\) vs. \(49.16\pm 0.41\); \(t(47894)=-44.60\), \(p<.001\), \(d=.408\)) and the DRQN method (\(35.75 \pm 0.42\) vs. \(46.26 \pm 0.40\); \(t(47894)=-35.38\), \(p<.001\), \(d=.323\)).

Discussion

We developed an approach for making automatic strategy discovery robust to model-misspecification and evaluated its performance on a set of benchmark problems. We found that Bayesian inference on the true environment that might have led to a given biased description significantly increases the robustness of automatic strategy discovery. Moreover, we were able to replicate this finding on a second set of more complex benchmark problems with a different model of model misspecification (see Appendix 11). This suggests that our approach to robust strategy discovery will likely be beneficial in other environments as well. Furthermore, both evaluations showed that BMPS is a more robust strategy discovery method than previous approaches based on neural networks. These findings suggest that combining BMPS with Bayesian inference is a promising approach to discovering planning strategies that are robust to all the ways in which the real world might differ from our descriptions. This approach might make it possible to improve human planning even when we cannot be sure which planning problems they might face in the real world. In the next section, we test this hypothesis by conducting a large online experiment.

Improving Human Planning

How people plan determines which choices they make. Previous research suggests that people sometimes fail to consider some of the most important potential consequences of their choices (O’Donoghue and Rabin, 1999; Jain et al., 2019; Taleb, 2007). The resulting choices can have devastating consequences for people’s physical, mental, and financial well-being and society at large. If people’s reliance on suboptimal planning strategies is partially responsible for this problem, then teaching near-optimal planning strategies might be a way to ameliorate such problems. To explore whether teaching the heuristics discovered by our robust strategy discovery methods might be a viable approach to improving human decision-making, we leveraged the robust strategy discovery methods introduced above to develop a robust version of the cognitive tutor introduced by (Lieder et al., 2019). This tutor uses our most-robust and best-performing strategy discovery method—the robust BMPS method—to discover a robust planning strategy from a given description of the environment and then teaches it to people by showing them video demonstrations of its planning behavior.

To evaluate how beneficial it is for people to be trained by the robust tutor, we conducted a behavioral experiment with the three-step sequential decision problem introduced in Section 4.2. That is, for each participant, we sampled one of the 66 benchmark problems according to their respective probabilities; for instance, the benchmark problem \((e_k,d_k,p_k)\) would be sampled with probability \(p_k\). The participant was assigned the role of the novice who is being trained by the robust cognitive tutor and then tested on the true environment \(e_k\). Critically, the cognitive tutor does not know the true environment \(e_k\), but only the usually erroneous description \(d_k\). The robust tutor infers what the true environment might be given the description (\(P(E|d_k)\)), derives the optimal strategy from this probabilistic knowledge, and then demonstrates it on environments sampled from its posterior distribution over possible environments (\(P(E|d_k)\)). To find out what aspects of the robust tutor contribute to the effective learning, we compared it to two conditions where no tutor was provided and a condition with a Non-Robust Tutor. The Non-Robust Tutor was based on the best-performing (non-robust) standard machine learning algorithm (non-robust DRQN, which assumes that \(d_k\) is the true environment).Footnote 2

Methods

Participants We recruited 357 participants on Amazon Mechanical Turk (average age 36.4 years, range: 18–77 years; 216 female). Participants were paid $1.20 plus a performance-dependent bonus (average bonus $1.54). The average duration of the experiment was 15.0 min. Participants were randomly assigned to the control condition without tutoring and without practice (89 participants), the control condition without tutoring but with practice (87 participants), the experimental condition with the non-robust cognitive tutor (94 participants), or the experimental condition with the robust cognitive tutor (87 participants).

Materials The experimental task was the Flight Planning game described in Section 4.2. As illustrated in Fig. 5, participants are tasked to select one of nine possible routes from the starting position at the bottom to one of the final destinations at the top. Each location harbors a reward. The participant’s goal is to maximize the sum of the rewards along the chosen path. All the rewards are initially concealed, but the participant can uncover them by clicking on the locations. Critically, each click has a cost of \(\$1\). Thus, the participant has to trade the cost of collecting information off against the value of the collected information for making a better decision.

Procedure We conducted a between-subjects experiment with four groups. For each participant, we first randomly selected one of the 66 benchmark problems described in Section 4.2.1. The participant was not shown the description itself. Instead, the usually inaccurate description of the selected benchmark was used to generate the trials of the training blocks. In contrast, the trials of the subsequent test block were generated according to the benchmark’s true environment.

The experiment was structured into instructions, 5 rounds of playing the Flight Planning game in environments sampled from the prior distribution (see Section 4.2.1), a quiz that tested the participant’s understanding of the game, a training block (except in the control condition without tutoring and without practice) and a test block in which the participant played the Flight Planning game in the benchmark’s true environment. The instructions described the Flight Planning game in general and did not mention the description or potential differences between the practice trials and the training block versus the test block. In the two experimental conditions, the training block comprised 10 tutor demonstrations. Each tutor demonstrated the strategy that it had derived from the usually inaccurate description. In the control condition with practice and without demonstrations, the training block comprised 10 trials of playing the Flight Planning game in the environment specified by the benchmark’s inaccurate description. There was no training block in the control condition without tutoring and without practice.

To motivate participants to pay close attention to these demonstrations, they were told that their bonus would depend on correctly answering a quiz about the demonstrated strategy and were given the option to review the demonstrations before moving on to the quiz.

Each demonstration (see Fig. 7a-c in the training block of the two experimental conditions started from a different fully occluded instance of the task illustrated in Fig. 5a. In the non-robust tutor condition, the spatial layout of the rewards was generated according to the inaccurate description. In the robust tutor condition, the spatial layout of the rewards was sampled from the posterior distribution P(e|d) and each round used a different sample. The demonstration then showed the participant the first click that the automatically discovered strategy would make and the reward that it revealed. After a 1.1-s delay, the demonstration showed the second click that the strategy would make based on the outcome of the first click. This continued until the strategy decided to terminate planning. At this point, the participant was shown the sequence of moves that the strategy would choose, and the rewards collected along the way.

In the first experimental condition, the demonstrations showed the strategies that the DRQN method without Bayesian inference derived from the potentially misspecified models (Non-Robust Tutor). The strategy learned by the Non-Robust Tutor always clicks on the same three nodes that should have high variance according to the description of the environment in all demonstrations, regardless of what their values are (see Fig. 7b and d). In the second experimental condition, the demonstrations showed the strategies discovered by BMPS with Bayesian inference (Robust Tutor). When a high variance node is not in its expected location, then these robust strategies continue to search for it until they find it (see Fig. 7a and c). In the Non-Robust Tutor, all demonstrations were performed on the reward structure specified by the model, as illustrated in Fig. 7b. In contrast, the reward structures in the Robust Tutor were sampled from the posterior distribution over the true environment given the model specification; thus, some demonstrations were performed on environments that differed from the model as illustrated in Fig. 7c.

To ensure high data quality, we applied two pre-determined exclusion criteria. We excluded the 4% of participants who affirmed that they had not paid attention to the instructions or had not tried to achieve a high score in the task. We excluded 12% of the remaining participants who did not make a single click on more than half of the test trials because not clicking is highly indicative of speeding through the experiment without engaging with the task.

Results

Applying the Shapiro-Wilk test for normality, we find that the scores are normally distributed for all the conditions: control condition without tutor demonstrations and without practice (\(W(68)=0.97\), \(p=.086\)), control condition without tutor demonstration but with practice (\(W(77)=0.118\), \(p=.002\)), the non-robust tutor condition (\(W(86)=0.98\), \(p=.100\)) and the robust tutor condition (\(W(85)=0.99\), \(p=.564\)). ANOVA test showed that the three groups differed significantly in their resource-rationality score on the test trials (\(F=4.5\), \(p=.004\)). Planned pair-wise comparisons confirmed that teaching people strategies discovered by the robust method significantly improved their resource-rationality score (41.6 points/trial) compared to the control condition without practice (33.5 points/trial, \(t(153)=3.28\), \(p<.001\), \(d=.528\)) and the control condition with practice (36.2 points/trial, \(t(162)=2.08\), \(p=.0193\), \(d=.326\)). In contrast, teaching strategies discovered by the non-robust method failed to improve people’s resource rationality (33.1 points/trial) when compared to the control condition without practice (\(t(154)=0.0\), \(p=.498\), \(d=0.001\)) and the control condition with practice (\(t(154)=-1.03\), \(p=.153\), \(d=0.161\)), and also led to significantly lower resource-rationality scores than teaching strategies discovered by the robust method (\(t(171)=-3.49\), \(p<.001\), \(d=.533\)). Each person’s resource-rationality score in the Mouselab-MDP task is the sum of the rewards they collected minus the cost of their clicks. We can therefore interpret it as a measure of how well their strategy trades off the quality of the resulting decisions with the cost of decision-making. To make the scores more interpretable, we compute each group’s resource-rationality quotient (\(\frac{\text {RR}_{\text {people}}}{\text {RR}_{h^\star }}\) where \(\text {RR}_{\text {people}}\) is the group’s average score). As shown in Fig. 9, teaching people strategies discovered by the robust method brought their resource rationality closer to that of the best possible heuristic for the true environment. Concretely, people’s resource-rationality quotient increased from 47.5% in the control condition without practice to 75.1% in the robust tutor condition and to only 56.7% in the control condition with practice and 56.8% in the non-robust tutor condition.

These differences in resource rationality reflect differences in the underlying planning strategies. Inspecting the planning strategies that participants used in the test block showed that participants who had been taught by the robust tutor inspected the values of all the most informative high-variance nodes on 62.0% of the trials, whereas participants in the non-robust tutor condition or the control condition did so significantly less often (41.3% and \(46.7\%\), respectively, \(\chi ^2(2) = 117.3\), \(p<.001\)).

Discussion

The findings of our behavioral experiment showed that our new robust strategy discovery method (robust BMPS) can allow us to improve human decision-making in cases where two non-robust off-the-shelf machine learning methods failed. Because we compared BMPS with Bayesian inference against a non-robust machine learning method without Bayesian inference, we cannot be sure which proportion of this improvement was due to performing Bayesian inference on the structure of the environment versus using BMPS. The results shown in Fig. 8 suggest that more than half of the improvement was due to performing Bayesian inference. Future work could test this assumption by running an experiment with a factorial design that manipulates the use of Bayesian inference on the structure of the environment separately from the reinforcement learning algorithm trained on the samples from the posterior distribution (BMPS vs. DRQN).

Our experiment made the simplifying assumption that the utility participants derive from their decision is proportional to the number of points they earned in the task, even though the utility of money is nonlinear (Kahneman and Tversky, 1979). For a more detailed discussion of this issue, see Section 8. Another limitation of the present work is that it assumed a perfect model of the biases in the generation of model specifications. Furthermore, our assumptions about the cognitive biases were very simplistic. Another limitation is that the task used in the experiment is rather artificial. To address these shortcomings, the following two sections evaluate our approach on a more realistic problem, namely deriving investment strategies from erroneous descriptions provided by people. In that application, the cognitive biases are those of real people, and our method has to rely on a model of what those biases might be. Section 6 describes how we applied our robust strategy discovery approach to this problem. Section 7 reports a behavioral experiment in which we tested whether the risky choice strategies discovered in this way can improve people’s ability to make decisions under risk.

Discovering Robust Heuristics for Multi-alternative Risky Choice

In the previous sections, we introduced a robust machine learning method for deriving resource-rational decision strategies from potentially biased and incomplete descriptions of the decision problems to be solved. The robustness of strategy discovery methods is especially important for improving people’s decisions in scenarios where the stakes are high, and the environment is highly uncertain (Hertwig et al., 2019). A prime example of this kind of real-life decisions is investing in the stock market. Previous research has found that people make a number of systematic errors when deciding whether and how to invest in the stock market (Benartzi and Thaler, 1995; Hirshleifer, 2015). The stock market is highly unpredictable, and even experienced investors tend to overlook important eventualities. Therefore, the decision strategies that traders use have to be robust to our uncertainty about what the stock market is really like (Taleb, 2007). It has been argued that simple heuristics can help people make better financial decisions precisely because they are more robust to such uncertainties than more complex decision procedures (Neth et al., 2014; Lo, 2019). Previous work has developed machine learning methods for discovering resource-rational heuristics for risky choice (Lieder et al., 2017; Gul et al., 2018; Krueger et al., 2022). Here, we extend these approaches to a slightly more realistic formulation of risky choice and make them robust to model misspecification.

To capture some challenges of applying our approach to this real-world problem, we first collected people’s descriptions of how likely investments are to yield positive or negative returns of different magnitudes directly from people who had first-hand experience with those investments. We use these descriptions to create a naturalistic set of benchmark problems for discovering robust strategies for making decisions under risk (Section 6.1). We then extend and apply our Bayesian robust strategy discovery approach to this problem in Section 6.2 and evaluate it on the new benchmarks in Section 6.3.

Realistic Benchmarks for the Discovery of Robust Strategies for Risky Choice

To construct a challenging, yet tractable, set of benchmark problems for the robust discovery of risky choice strategies, we chose to work within the widely used Mouselab paradigm for studying risky choice (see Section 6.1.1). To make this paradigm more realistic, we base the distribution of its payoffs on stock market returns (Section 6.1.3). Critically, we base our benchmark problem’s on the generally erroneous descriptions of real people. To obtain those descriptions, we conducted an experiment in which participants first repeatedly chose between a number of investments and then described the distributions of their returns (Section 6.1.4). The resulting benchmark problems are publicly available.

Multi-alternative Risky Choice in the Mouselab Paradigm as a Model of Investment Decisions

Deciding which stock to invest in, like many other real-life decisions, entails choosing between several alternatives based on their multiple attributes. Although perusing the attributes is informative, one also cannot know exactly how well the investment will play out. The decision-maker can increase their chances of making a good decision by collecting more information, but collecting information takes time and effort. Therefore, the decision-maker has to find a good tradeoff between the quality of their decision and its costs. The experimental paradigm known as multi-alternative risky choice captures all of these important aspects of decision-making in the real world (Payne, 1976). In this paradigm, participants choose between multiple risky prospect based on what their payoffs would be in several possible scenarios (see Fig. 10). To make an informed decision, the participant can inspect what the payoffs of different gambles (columns) are in different scenarios (rows) by clicking on the corresponding cell of the payoff matrix for a certain fee. Participants can additionally inspect how likely each scenario is to occur.

Screenshot of the Mouselab paradigm for studying multi-alternative risky choice. Each trial of the paradigm consists of a series of options to choose from, a set of outcomes which can occur for each option, and their corresponding probabilities of occurring. Participants can reveal the outcomes or their probabilities by clicking on the gray cells and paying a cost

Formally, each trial presents a choice among G gambles (see Fig. 10). After the participant has chosen a gamble, one of K event occurs. The payoff of gamble g in the case of the kth event is given by the entry \(v_{k,g}\) of the payoff matrix V. \(\mathbf {p} = [p_{1},\ldots ,p_{k}]\) are the probabilities of the k possible events, where \(p_k\) represents the probability of the \(k^{\text {th}}\) outcome occurring. In our experiments, \(\mathbf {p}\) is sampled from a symmetric Dirichlet distribution. We will vary the distribution parameter \(\alpha\) to model two different situations. A small \(\alpha\) leads to what we call the high dispersion case, wherein one outcome is much more likely than the others. A large \(\alpha\) results in the low dispersion case, wherein the probabilities of the outcomes lie close to each other.

Initially, the values of V and \(\mathbf{p}\) are unknown. To reveal them, the participant has to click on the corresponding cell on the screen. Each click is costly. The participant’s goal is to maximize the expected payoff minus the cost of clicking. Here, we focus on the special case where in each environment e all payoffs \(V_{k,g}\) are independently drawn from the same distribution \(\pi _e\). Moreover, we assume that this distribution is a piece-wise uniform distribution of the form \(\pi _e(x)=\sum _{i=1}^{10} \pi _{e,1}\cdot \text {Uniform}(x; [-100; -80]) + \pi _{e,2}\cdot \text {Uniform}(x; [-80; -60]) + \cdots + \pi _{e,10}\cdot \text {Uniform}(x; [80; 100])\). We can therefore represent any given payoff distribution by a list of 10 numbers \(\pi _{e,1},\cdots ,\pi _{e,10}\) where \(\pi _{e,i}\) is the probability that a payoff falls into the ith bin of that distribution. For instance, \(\pi _{e,1}\) is the probability that the payoff is between $\(-100\) and $\(-80\).

We assume that different risky choice environments differ in the payoff distribution (\(\pi _e\)), the dispersion of the outcome probabilities (\(\alpha _e\)), and the cost of information (\(\lambda _e\)). We can therefore represent each multi-alternative risky choice environment e by a triplet \((\pi _e,\alpha _e,\lambda _e)\).

Problem Formulation

The problem we set out to solve was to develop a machine learning method that can derive resource-rational strategies for multi-alternative risky choice from inaccurate descriptions of payoff distribution. That is, we assume that the payoff distribution \(\pi _e\) of the environment e is unknown and has to be estimated from expert testimony. Therefore, the strategy discovery method only has access to a usually inaccurate description of the true environment that we will refer to as \(d=(\pi _d, \alpha _d, \lambda _d)\), where \(\pi _d=(\pi _{d,1},\cdots ,\pi _{d,10})\) is a list of estimates of the corresponding probabilities of the true environment (i.e., \(\pi _{e,1},\cdots ,\pi _{e,10}\)) and \(\alpha _d\) and \(\lambda _d\) are estimates of the dispersion of the even probabilities (\(\alpha _e\)) and the cost of information (\(\lambda _e\)). For simplicity, we assume that only the description of the payoff distribution (\(\pi _d\)) can be inaccurate, whereas the other two components of the description are known to be accurate. To make this problem formulation more precise, we will now formulate a set of benchmark problems. Each benchmark problem will include a true payoff distribution and a human-generated description of that distribution. The following two sections specify the true payoff distributions and its descriptions, respectively.

Defining Realistic Payoff Distributions for the Risky Choice Problems

To investigate the importance of robustness for investment decisions in the real world, we chose to study decision problems whose payoff distributions mimic those of real-world investments in different kinds of stocks. Since the early 1900s, it was widely believed that stock returns followed the normal distribution. Mandelbrot (1963) pioneered the re-examination of the return distribution in terms of non-normal stable distributionsFootnote 3. Fama (1965) concluded that the distribution of monthly returns belonged to a non-normal member of the stable class of distributions. Various alternative classes of distributions have since been suggested. Examples include the Student’s t-distribution (Blattberg and Gonedes, 1974), the more general class of hyperbolic distributions (Eberlein and Keller, 1995) and the mixture of Gaussians (Kon, 1984). While there does not seem to be a fundamental theory that can suggest a distributional model of stock returns, it is now generally acknowledged that empirical return distributions are skewed and leptokurtic.

In this work, we mainly focus on heavy-tailed distributions because it is in these settings that people’s biases have the potential to be highly costly. We work with the class of stable distributions since they have been shown to be able to capture heavy tails and skewness. Additionally, they are supported by the generalized Central Limit Theorem, which states that stable laws are the only possible limit distributions for properly normalized and centered sums of independent, identically distributed random variables (Borak et al., 2005). One argument that has often been levelled against their usage is that they have infinite variance. However, real data would never have that property since it is bounded. To combat this as well as to make the task of eliciting biases easier, we truncate the distribution between fixed values, that is we assume that stock returns are bounded between -100% and +100%. Concretely, we created 9 stable distributions with varying riskiness and discretized them into 10 bins as shown in Fig. 11. The skewness parameter \(\eta\) was kept negative to obtain thick left tails. The probability of each bin was set to be the difference in cumulative distribution functions of its upper and lower bounds except for the edge bins, which took the mass for the truncated tails as well. Therefore, each of these distributions can be represented by a list of 10 probabilities \(\pi _{e,1},\cdots ,\pi _{e,10}\) that sum to 1. We will use the nine different distributions to define 9 different types of environments that differ in their payoff distributions.

Eliciting Biased Descriptions of Payoff Distributions

To complete the specification of our benchmark problems, we complement the risky choice problems entailed by the 9 payoff distributions shown in Fig. 11 by people’s descriptions of those distributions. To obtain those descriptions, we conducted the online experiment described in the following paragraphs.

Participants We recruited 60 participants on Amazon Mechanical Turk (average age 38.0 years, range: 20–69 years; 36 male, 23 female, 1 other). Participants were paid $1.50 plus a performance-dependent bonus (average bonus $0.96). The average duration of the experiment was 21.1 min.