Abstract

When making decisions, people often overlook critical information or are overly swayed by irrelevant information. A common approach to mitigate these biases is to provide decision-makers, especially professionals such as medical doctors, with decision aids, such as decision trees and flowcharts. Designing effective decision aids is a difficult problem. We propose that recently developed reinforcement learning methods for discovering clever heuristics for good decision-making can be partially leveraged to assist human experts in this design process. One of the biggest remaining obstacles to leveraging the aforementioned methods for improving human decision-making is that the policies they learn are opaque to people. To solve this problem, we introduce AI-Interpret: a general method for transforming idiosyncratic policies into simple and interpretable descriptions. Our algorithm combines recent advances in imitation learning and program induction with a new clustering method for identifying a large subset of demonstrations that can be accurately described by a simple, high-performing decision rule. We evaluate our new AI-Interpret algorithm and employ it to translate information-acquisition policies discovered through metalevel reinforcement learning. The results of three large behavioral experiments showed that providing the decision rules generated by AI-Interpret as flowcharts significantly improved people’s planning strategies and decisions across three different classes of sequential decision problems. Moreover, our fourth experiment revealed that this approach is significantly more effective at improving human decision-making than training people by giving them performance feedback. Finally, a series of ablation studies confirmed that our AI-Interpret algorithm was critical to the discovery of interpretable decision rules and that it is ready to be applied to other reinforcement learning problems. We conclude that the methods and findings presented in this article are an important step towards leveraging automatic strategy discovery to improve human decision-making. The code for our algorithm and the experiments is available at https://github.com/RationalityEnhancement/InterpretableStrategyDiscovery.

Similar content being viewed by others

Explore related subjects

Find the latest articles, discoveries, and news in related topics.Avoid common mistakes on your manuscript.

1 Introduction

Human decision-making is plagued by many systematic errors that are known as cognitive biases (Gilovich et al., 2002; Tversky & Kahneman, 1974). To mitigate these biases, professionals, such as medical doctors, can be given decision aids such as decision trees and flowcharts, that guide them through a decision process that considers the most important information (Hafenbrädl et al., 2016; Laskey & Martignon, 2014; Martignon, 2003). To be practical in real-life, the strategies suggested by decision aids have to be simple (Gigerenzer, 2008; Gigerenzer & Todd, 1999) and mindful of the decision-maker’s valuable time and the constraints on what people can and cannot do to arrive at a decision (Lieder & Griffiths, 2020). Previous research has identified a small set of simple heuristics that satisfy these criteria and work well for specific decisions (Gigerenzer, 2008; Gigerenzer & Todd, 1999; Lieder & Griffiths, 2020). In principle, this approach could be applied to help people in a wide range of different situations but discovering clever strategies is very difficult. Our recent work suggests that this problem can be solved by leveraging machine learning to discover near-optimal strategies for human decision-making automatically (Lieder et al., 2018, 2019, 2020; Lieder & Griffiths, 2020) (see Sect. 2). Equipped with an automatically discovered decision strategy, we may tackle many real-world problems for which there are no existing heuristics, but which are nevertheless crucial for practical applications. This includes designing decision aids for choosing between multiple alternatives (e.g., investment decisions; see Fig. 2) as well as strategies for planning a sequence of actions (e.g., a road trip, a project, or the treatment of a medical illness). For instance, a strategy for planning a road trip would help people to decide which potential destinations and pit stops to collect more information about depending on what they already know and to recognize when they have done enough planning.

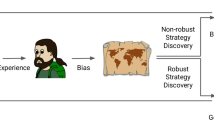

One of the biggest remaining challenges is to formulate the discovered strategies in such a way that people can readily understand and apply them. This is especially problematic when strategies are discovered in the form of complex stochastic black-box policies. Here, we address this problem by developing an algorithm that approximates complex decision-making policies discovered through reinforcement learning by simple human-interpretable rules. To achieve this, we first cluster a large number of demonstrations of the complex policy and then find the largest subset of those clusters that can be accurately described by simple, high-performing decision rules. We induce those rules using Bayesian imitation learning (Silver et al., 2019). As illustrated in Fig. 1, the resulting algorithm may be incorporated into the reinforcement learning framework for automatic strategy discovery, enabling us to automatically discover flowcharts that people can follow to arrive at better decisions. We evaluated the interpretability of the decision rules our method discovered for three different three-step decision problems in large-scale online experiments. In each case people understood the rules discovered by our method and were able to successfully apply them. Importantly, another large-scale experiment revealed that the flowcharts generated by our machine learning powered approach are more effective at improving human decision-making than the status quo (i.e., training people by giving them feedback on their performance and telling them what the right decision would have been when they make a mistake).

Our framework for improving human decision-making through automatic strategy discovery (Lieder et al., 2019, 2020). As illustrated in the upper row, the approach starts with modeling the decision problems people face in everyday life and how they can make those decisions in the framework of metalevel MDPs (Callaway et al., 2018b, 2019; Griffiths et al., 2019; Lieder et al., 2017, 2018, 2019, 2020; Lieder & Griffiths, 2020). The optimal algorithm for human decision-making can be discovered by computing the optimal metalevel policy through metalevel reinforcement learning (Callaway et al., 2018a; Lieder et al., 2017). The contribution of this paper is to develop an algorithm called AI-Interpret that translates the resulting metalevel policies into flowcharts that people can follow to make better decisions

We start with describing the background of our approach in Sect. 2 and present our problem statement in Sect. 3. Section 4 focuses on related work. In Sect. 5, we introduce a new approach to interpretable RL—AI-Interpret—along with a pipeline for generating decision aids through automatic strategy discovery. In Sect. 6, using behavioral experiments, we demonstrate that decision aids designed with the help of automatic strategy discovery and AI-Interpret can significantly improve human decision-making. The results in Sect. 7 show that AI-Interpret was critical to this success. We close by discussing potential real-world applications of our approach and directions for future work.

2 Background

In this section, we define the formal machinery that the methods presented in this article are based on. We start with the basic framework for describing decision problems (i.e., Markov Decision Processes). We then proceed to present a formalism for the problem of deciding how to decide (i.e., metalevel Markov Decision Processes). The possible solutions to the problem of deciding how to decide are known as decision strategies. Our approach to improving human decision making through automatic strategy discovery rests on a mathematical definition of what constitutes an optimal strategy for human decision-making known as resource-rationality. We introduce this theory in the third part of this Background section. Then, we briefly review existing methods for solving metalevel MDPs and move to the topic of imitation learning to describe the family of methods that our algorithm for interpretable RL belongs to. Afterwards, we define disjunctive normal form formulas which constitute the formal output of our algorithm. We finish with describing a baseline imitation learning method we built on.

2.1 Modeling sequential decision problems

In general, AI-Interpret considers reinforcement learning policies defined on finite Markov Decision Processes. A Markov Decision Process (MDP) is a formal framework for modeling sequential decision problems. In a sequential decision problem an agent (repeatedly) interacts with its environment. In each of a potential long series of interactions the agent observes the state of the environment and then selects an action that changes the state of the environment and generates a reward.

Definition 1

(Markov decision process) A Markov decision process (MDP) is a finite process that satisfies the Markov property (each state in the process is independent of the history). It is represented by a tuple \(({\mathcal {S}},{\mathcal {A}},{\mathcal {T}}, {\mathcal {R}}, \gamma\)) where \({\mathcal {S}}\) is a set of states; \({\mathcal {A}}\) is a set of actions; \({\mathcal {T}}(s,a,s') = {\mathbb {P}}(s_{t+1}=s'\mid s_t = s, a_t = a)\) for \(s\ne s'\in {\mathcal {S}}, a\in {\mathcal {A}}\) is a state transition function; \(\gamma \in (0,1)\) is a discount factor; \({\mathcal {R}}:{\mathcal {S}}\rightarrow {\mathbb {R}}\) is a reward function.

Note that \({\mathcal {R}}\) could be also represented as a function dependent on state-action pairs \({\mathcal {R}}:{\mathcal {S}}\times {\mathcal {A}}\rightarrow {\mathbb {R}}\). Policy \(\pi : {\mathcal {S}}\rightarrow {\mathcal {A}}\) denotes a deterministic function that controls agent’s behavior in an MDP and a nondeterministic \(\pi : {\mathcal {S}}\rightarrow Prob({\mathcal {A}})\) defines a probability distribution over the actions. The cumulative return of a policy is a sum of its discounted rewards, i.e. \(G_t^{\pi }=\sum _{i=t}^{\infty }\gamma ^t r_t\) for \(\gamma \in [0,1]\).

2.2 Modeling the problem of deciding how to decide as a metalevel MDP

Computing the optimal policy for a given MDP corresponds to planning a sequence of actions. Optimal planning quickly becomes intractable as the number of states and the number of time steps increase. Therefore, people and agents with performance-limited hardware more generally, have to resort to approximate decision strategies. Decision strategies differ in which computations they perform in which order depending on the problem and the outcomes of previous computations. Each computation may generate new information that allows the agent to update its beliefs about how good or bad alternative courses of action might be. Here, we focus on the case where the transition matrix T is known and the computations reveal the rewards r(s, a) of taking action a in state s. In this context, the agent’s belief \(b_t\) at time t can be represented by a probability distribution \(P\left( {\mathbf {R}} \right)\) on the entries \({\mathbf {R}}_{s,a}=r(s,a)\) of the reward matrix \({\mathbf {R}}\). Computation is costly because the agent’s time and computational resources are limited. The problem of deciding which computations should be performed in which order can be formalized as a metalevel Markov Decision Process (Hay et al., 2012; Griffiths et al., 2019). A metalevel decision process is a Markov Decision Process (see Definition 1) where the states are beliefs and the actions are computations.

Definition 2

(Metalevel Markov decision process) A metalevel MDP (Hay et al., 2012; Griffiths et al., 2019) is a finite process represented by a 4-tuple \(({\mathcal {B}},{\mathcal {C}},{\mathcal {T}}_{meta}, {\mathcal {R}}_{meta})\) where \({\mathcal {B}}\) is a set of beliefs; \({\mathcal {C}}\) is a set of computational primitives; \({\mathcal {T}}_{meta}(b,c,b') = {\mathbb {P}}(b_{t+1}=b'\mid b_t = b, c_t = c)\) is a belief transition function; \({\mathcal {R}}_{meta}:{\mathcal {C}}\cup \{\perp \}\rightarrow {\mathbb {R}}\) is a reward function which captures the cost of computations in \({\mathcal {C}}\) and the utility of the optimal course of actions after terminating the computations with \(\perp\).

Analogically to the previous case, \(\pi _{meta}:{\mathcal {B}}\rightarrow {\mathcal {C}}\) is a deterministic metalevel policy that controls how the agent is making computations, and \(\pi _{meta}:{\mathcal {B}}\rightarrow Prob({\mathcal {C}})\) defines a probability distribution over the computations. One can think of metalevel policies as mathematical descriptions of decision strategies that a person could use to choose between multiple alternatives or plan a sequence of actions.

2.3 Resource-rationality

Our approach to discovering decision strategies that people can use to make better choices is rooted in the theory of resource-rationality (Lieder & Griffiths, 2020). Its basic idea is that people should use cognitive strategies that make the best possible use of their finite time and bounded cognitive resources. The optimal decision strategy critically depends on the structure of the environment E that the decision maker is interacting with and the computational resources afforded by their brain B. These two factors jointly determine the quality of the decision that would result from using a potential decision strategy h and how costly it would be to apply this strategy. The resource-rationality of a strategy is the net benefit of using a decision strategy minus its cost.

Definition 3

(Resource-rationality) The resource-rationality (RR) of using the decision strategy h in the environment E is

where \(s_0=(o,b_0)\) comprises what the agent’s observations about the environment (o) and its internal state \(b_0\), \(u(\text {result})\) is the agent’s utility of the outcomes of the decisions that the strategy h might make, and \(cost(t_h, \rho )\) denotes the total opportunity cost of investing the cognitive resources \(\rho\) used or blocked by strategy h until it terminates deliberation at time \(t_h\). Expectations are taken with respect to the posterior probability distribution of possible results given the environment E and the agent’s observations o about its current state.

Note that the execution time and the possible results of the strategy depend on the situation in which it is applied.

Definition 4

(Resource-rational strategy) A strategy \(h^\star\) is said to be resource-rational for the environment E under the limited computational resources that are available to the agent if

that is when \(h^*\) maximizes the value of resource-rationality among all the strategies \(H_B\) that the agent can execute.

Discovering resource-rational strategies can be expressed as a problem of finding the optimal policy for a metalevel MDP where the states represent the agent’s beliefs, the actions represent the agent’s computations, and the rewards are inherited from the costs of computations and the value of terminating under the current belief state. It is possible to solve for this strategy using dynamic programming (Callaway et al., 2019) and reinforcement learning (Callaway et al., 2018a; Lieder et al., 2017).

2.4 Solving metalevel MDPs

Computing effective decision strategies and modeling the problem of which decision procedure people should use entails using exact or approximate MDP-solvers on metalevel MDPs. Exact methods for solving MDPs, such as dynamic programming, can be applied to small metalevel MDPs. But as the size of the environment increases, dynamic programming quickly becomes intractable. Therefore, the primary methods for solving metalevel MDPs are reinforcement learning (RL) algorithms that approximate the optimal metalevel policy \(\pi ^*_{meta}\) through trial and error (Callaway et al., 2018a; Lieder et al., 2017; Kemtur et al., 2020). The primary shortcoming of most methods for solving (metalevel) MDPs is that the resulting policies are very difficult to interpret. Our primary contribution is to develop a method that makes them interpretable. To achieve this, our method performs imitation learning.

2.5 Imitation learning

Imitation learning (IL) is the problem of finding a policy \({\hat{\pi }}\) that mimicks transitions provided in a dataset of trajectories \({\mathcal {D}}=\{(s_i,a_i)\}_{i=1}^{M}\) where \(s_{i}\in {\mathcal {S}}, a_i\in {\mathcal {A}}\) (Osa et al., 2018). Unlike canonical applications of IL that focus on imitating behavioral policies, our application focuses on metalevel policies for selecting computations.

2.6 Boolean logics

The formal output of the algorithm we introduce is a logical formula in disjunctive normal form (DNF).

Definition 5

(Disjunctive normal form) Let \(f_{i,j}, h: {\mathcal {X}}\rightarrow \{0,1\}, i,j\in {\mathbb {N}}\) be binary-valued functions (predicates) on domain \({\mathcal {X}}\). We say that h is in disjunctive normal form if the following property is satisfied:

and \(\forall i,j_1\ne j_2,\ f_{i,j_1} \ne f_{i,j_2}\).

Equality 3 defines that a predicate is in DNF if it is a disjunction of conjunctions and every predicate appears only once in each conjunction.

2.7 Logical program policies

Logical Program Policies (LPP) is a Bayesian imitation learning method that given a set of demonstrations \({\mathcal {D}}=\{(s_i,a_i)\}_{i=1}^{M}\), outputs a posterior distribution over logical formulas in disjunctive normal form (DNF; see Definition 5) that best describe the generated data. For that purpose, the authors restrict the considered set of solutions to formulas \(\{h_1(s,a),\ldots,h_n(s,a)\}\) defined in a domain-specific language (DSL)—a set of predicates \(f_{i}(s,a):{\mathcal {S}}\times {\mathcal {A}}\rightarrow \{0,1\}\), called (simple) programs. Programs are understood as feature detectors which assign truth values to state-action pairs, and formulas over programs are the titular logical programs. To find the best K logical programs \(h^{\text {MAP}}_i\), the authors employ maximum a posteriori estimation (MAP). Each program \(h^{\text {MAP}}_i\) induces a stochastic policy

which is a uniform distribution over all actions a for which \(h^{\text {MAP}}_i\) is true in state s. The program-level policy \({\hat{\pi }}\) integrates out the uncertainty about the possible programs and selects the action

Importantly for our application, the formulas which constitute the program-level policy come in disjunctive-normal form. To obtain them, the set of demonstrated state-action pairs \((s_i,a_i)\in {\mathcal {D}}\) is used to automatically create binary feature vectors \(v_i^+=\langle f_1(s_i,a_i),\ldots,f_m(s_i,a_i)\rangle\) and binary feature vectors \(v_i^{-}=\langle f_1(s_i,a_j), \ldots, f_m(s_i,a_j)\rangle,\ j\ne i\). The \(v_i^{+}\) vectors serve as as positive examples and the \(v_i^{-}\) vectors, which describe non-demonstrated actions in observed states, as negative examples. After applying an off-the-shelf decision tree induction method (e.g. Pedregosa et al., 2011; Valiant, 1985) on all \(v_i^+\) s and \(v_i^{-}\)s, the formulas are extracted from the tree by treating each path leading to a positive decision as a conjunction of predicates. Considering all positive decision paths as an alternative results in a DNF formula. Further in the text, the notation \(LPP({\mathcal {D}})\) will stand for the formula generated by Logical Program Policies method on the set of demonstrations \({\mathcal {D}}\).

3 Problem definition and proposed solution

The main goal of the presented research is to develop a method that takes in a model of the environment and a model of people’s cognitive architecture and returns a verbal or graphical description of an effective decision strategy that people will understand and use to make better decisions. This problem statement goes beyond the standard formulation of interpretable AI by requiring that when people are given an “interpretable” description of a decision strategy they can execute that strategy themselves. Because of that, we employ a novel algorithm evaluation approach in our work, which has not been utilized in standard interpretablility research. Concretely, we measure how much the generated descriptions boost the performance of human decision makers in simulated sequential decision problems.

Our approach to discovering human-interpretable decision strategies comprises three steps: (1) formalizing the problem of strategy discovery as a metalevel MDP (see Fig. 2, Sect. 2.2), (2) developing reinforcement learning methods for computing optimal metalevel policies (see Sect. 2.4), and (3) describing the learned policies by simple and human-interpretable flowcharts. In previous work, we proposed solutions to the first two sub-problems. First, we conceptualized the strategy discovery as a problem in the realm of metalevel reinforcement learning (Lieder et al., 2017, 2018; Callaway et al., 2018a; Griffiths et al., 2019) as described in Sect. 2.2. Second, we introduced methods to find optimal policies for metalevel MDPs. Our Bayesian Metalevel Policy Search (BMPS) algorithm (Callaway et al., 2018a, 2019; Lieder & Griffiths, 2020) relies on the notion of the value of computation VOC. VOC is the expected improvement in decision quality that can be achieved by performing computation c in belief state b (and continuing optimally) minus the cost of the optimal sequence of computations. BMPS approximates VOC(c, b) by a linear combination of features that are estimated from the original metalevel MDP through agent’s interaction with the environment. Knowing that \(\pi ^*={{\,\mathrm{arg\,max}\,}}_{c\in {\mathcal {C}}} VOC(c,b)\), by approximating VOC, BMPS finds a near-optimal metalevel policy. Apart from BMPS, we also developed a dynamic programming method for finding exact solutions for fairly basic metalevel MDPs (Callaway et al., 2020). In this work, we turn to the third sub-problem: constructing simple human-interpretable descriptions of the automatically discovered decision strategies. Our goal is to develop a systematic method for transforming complex, black-box policies into simple human-interpretable flowcharts that allow people to approximately follow the near-optimal decision strategy expressed by the black-box policy. As a proof of concept, we study this problem in the domain of planning.

Formalizing the optimal algorithms for human decision-making in the real-world as the solution to a metalevel MDP. a) Illustration of a real-life decision-problem and an efficient heuristic decision strategy for making such decisions. In this example, the goal is to choose between multiple investment options based on several attributes. Critically, such decision problems and the optimal strategies for making such decisions can be formalized in the computational framework of metalevel MDPs illustrated in Panel b. The decision-maker’s goal can be modelled as maximizing the expected value of the chosen option minus the time cost of making the decision (Lieder & Griffiths, 2020). The expected subjective value of an alternative a given the acquired information \(B_T\) at the time of the decision (T) can be modelled as a weighted sum of its scores on several attributes (e.g., \(\mathbb {E} \left[ U(a) |B_T \right] = 0.4 \cdot \text {Returns}(a) -0.2\cdot \text {ManagementCharges(a)} - 0.3 \cdot \text {LockIn(a)} + 0.05 \cdot \text {IsMarketLinked}\) where the weights reflect the investor’s preferences). To estimate the alternatives’ subjective values, the decision-maker has to perform computations C by acquiring information (e.g., the management charges for a given investment) and updating its beliefs (B) accordingly. Each computation has a cost (\(\text {cost}(B_T,C_t)\)). The optimal decision strategy maximizes the expected subjective value of the final decision minus the cumulative cost of the decision operations that had to be performed to reach that decision. The sequence of computations suggested in Panel a) is a demonstration of a decision strategy that exploits the decision-maker’s preferences and prior knowledge about the distribution of attribute values to minimize the amount of effort required to identify the best option with high probability. This is achieved by using the most important attribute (Returns) to decide which alternatives to eliminate, which alternative to examine more closely, and when to stop looking for better alternatives. b) Illustration of a metalevel MDP (see Definition 2). A metalevel MDP is a Markov Decision Process where actions are computations (C) and states encode the agent’s beliefs (B). The rewards for computations (\(R_1, R_2, \ldots\)) measure the cost of computation and the reward for terminating deliberation (\(R_T\)) is the expected return for executing the plan that is best given the current belief state (\(B_T\)). Discovering the optimal strategies corresponds to computing the optimal metalevel policy, which achieves an optimal trade-off between decision quality and computational cost

To handle an arbitrary type of an RL policy, thereby generalizing beyond metalevel RL, and simplify the creation of flowcharts, we propose to use imitation learning with DNF formulas. Imitation learning can be applied to arbitrary policies. Moreover, the demonstrations it needs may be gathered by executing the policy either in a simulator or in the real world. Obtaining a DNF formula, in turn, allows one to describe the policy by a decision tree. This representation is a strong candidate for interpretable descriptions of procedures (Gigerenzer, 2008; Hafenbrädl et al., 2016) and may be easily transformed to a flowchart. Formally, we start with a set of demonstrations \({\mathcal {D}}=\{(s_i,a_i\}_{i=1}^{M}\) generated by policy \(\pi\), and denote \(I(f)=\sum _{i=1}^M \mathbb {1}_{\pi _f(s_i)=a_i}\) as the number of demonstrations that the policy induced by formula f can imitate properly. Let’s denote the set of all DNF formulas defined in a domain-specific language \({\mathcal {L}}\) whose longest conjunction is of length d as \(DNF({\mathcal {L}},d)\). Let’s also assume that policy \(\pi _a\) is \(\alpha\)-similar to policy \(\pi _b\) if the expected return of \(\pi _a\): \({\mathbb {E}}(G_0^{\pi _a})\) constitutes at least \(\alpha\) of the expected return of \(\pi _b\), i.e. \({\mathbb {E}}(G_0^{\pi _a}) / {\mathbb {E}}(G_0^{\pi _b}) \ge \alpha\). We denote \(F_{\alpha }^{d,{\mathcal {L}}}=\{f\in DNF({\mathcal {L}}, d): \frac{{\mathbb {E}}(G_0^{\pi _f})}{{\mathbb {E}}(G_0^{\pi })} \ge \alpha \}\) as the set of all DNF formulas with conjunctions smaller than d predicates that induce policies \(\alpha\)-similar to \(\pi\). We would like to find a formula \(f^*\) that belongs to \(F_{\alpha }^{d,{\mathcal {L}}}\) for arbitrary \(\alpha\) and d, maximizing the number of demonstrations that \(\pi _{f^*}\) can imitate, that is

To approximate the optimization process above-specified, we develop a general method for creating descriptions of RL policies: the Adaptive Imitation-Interpretation algorithm (AI-Interpret). Our algorithm captures the essence of the input policy by finding its simpler representation (small d) which performs almost as well (small \(\alpha\)). To accomplish that, AI-Interpret builds on the Logical Program Policies (LPP) method by Silver et al. (2019). Similarly to the latter, AI-Interpret accepts a set of demonstrations of the policy \({\mathcal {D}}\) and \({\mathcal {L}}\), a domain-specific language (DSL) of predicates which captures features of states that could be encountered and actions that could be taken in the environment under consideration. AI-Interpret uses the constructed predicates to separate the set of demonstrations into clusters. Doing so, enables it to consider increasingly smaller sets of demonstrations and employ LPP in a structured search for simple logical formulas in \(F_{\alpha }^{d,{\mathcal {L}}}\). To improve human decision making, we use AI-Interpret in our strategy discovery pipeline (see Fig. 1). After modeling the planning problem as a metalevel MDP, using RL algorithms to compute its optimal policy, gathering this policy’s demonstrations, and creating a custom DSL, the pipeline uses AI-Interpret to find a set of candidate formulas. The formulas are transformed into decision trees, and then visualized as flowcharts that people can follow to execute the strategy in real life.

4 Related work

Strategy discovery Historically, discovering strategies that people can use to make better decisions and developing training programs and decision aids that help people execute such strategies was a manual process that relied exclusively on human expertise (Hafenbrädl et al., 2016; Laskey & Martignon, 2014; Martignon, 2003). Recent work has been increasingly more concerned with discovering human decision strategies automatically (Binz & Endres, 2019; Callaway et al., 2018a, 2018b, 2019; Kemtur et al., 2020; Lieder et al., 2017, 2018) and with using cognitive tutors (Lieder et al., 2019; Lieder et al., 2020). In our previous studies on this topic (Binz & Endres, 2019; Callaway et al., 2018a, 2018b, 2019; Kemtur et al., 2020; Lieder et al., 2017, 2018), we enabled automatic discovery of optimal decision strategies through leveraging reinforcement learning. In technical terms, we formalized decision strategies that make the best possible use of the decision-maker’s precious time and finite computational resources (Lieder & Griffiths, 2020) within the framework of metalevel MDPs (see Fig. 2 and Sect. 2.2). This approach, however, led to stochastic black-box metalevel policies whose behavior can be idiosyncratic.

Visual representations of RL Policies Most approaches to interpretable AI in the domain of reinforcement learning try to describe the behavior of RL policies visually. Lam et al. (2020), for instance, employed search tree descriptions. The search trees had a graphical representation which contained current and expected states, win probabilities for certain actions, and connections between the two. They were learned similarly as in AlphaZero (Silver et al., 2018), through Q function optimization and self-play, and by additionally learning the dynamics model. Lam et al. (2020) studied an interactive, sparse-reward game Tug of War and showed how domain experts may utilize search trees to verify policies for that game. Annasamy and Sycara (2019) proposed the i-DQN algorithm that learned a policy alongside its interpretation in form of images with highlighted elements representative to the decision-making. They achieved that by constraining the latent space to be reconstructible through key-stores, vectors encoding the model’s global attention over multiple states and actions. Their tests point out that inverting the key-stores provides insights into the features learned by the RL model. RL policies represented visually in other ways, for instance as attention or saliency maps, can be also found in Mott et al. (2019), Atrey et al. (2019), Greydanus et al. (2018), Puri et al. (2019), Iyer et al. (2018), Yau et al. (2020).

Decision trees Visual representations may also take a descriptive form of a decision tree, an idea we explored in this paper. For example, Liu et al. (2019) approximated a neural policy using an on-line mimic learner algorithm that returns Linear Model U-Trees (LMUT). LMUT represent Q-functions for a given MDP in a regression decision tree structure (Breiman et al., 1984) and are learned using the stochastic gradient descent algorithm. By extracting rules from the learned LMUT, it is possible to comprehend action decisions for a given state considering conditions that are imposed on its feature-representation. Silva et al. (2020) went even further and introduced RL function approximation technique performed via differentiable decision trees. Their method outperformed neural networks after both were learned with gradient descent, and returned deterministic descriptions that were more interpretable than networks or rule lists. Similar approaches to mimic neural networks with tree structures were presented in Bastani et al. (2018), Alur et al. (2017), Che et al. (2016), Coppens et al. (2019), Jhunjhunwala (2019), Krishnan et al. (1999).

Imitation learning Alternative approaches attempt to describe policies in formalisms other than decision trees. In any case, however, the interpretability methods often employ imitation learning. Notably, the Programmatically Interpretable Reinforcement Learning (PIRL) method, proposed by Verma et al. (2018) combines deep RL with an efficient program policy search. PIRL defines a domain-specific language of atomic statements with an imposed syntax and allows describing neural network-based policies with programs. These programs mimic the policies and may be found using imitation learning methods. Experiments in TORCS car-racing environment (Wymann et al., 2015) showed that this approach can learn well-performing if-else programs (Verma et al., 2018). Araki et al. (2019) proposed to represent policies as finite-state automata (FSA) and introduced a method to derive interpretable transition matrices out of FSA. In their framework, Araki et al. (2019) used expert trajectories to (i) learn an MDP modeling actions in the environment and (ii) learn transitions in an FSA of logical expressions, to then perform value iteration over both. The authors showed how their method succeeds in multiple navigation and manipulation tasks by beating standard value iteration and a convolutional network. Similar approaches that aim to learn descriptions via imitation learning were introduced in Bhupatiraju et al. (2018), Penkov and Ramamoorthy (2019), Verma (2019), Verma et al. (2019).

5 Algorithm for interpretability

In this section, we introduce Adaptive Imitation-Interpretation (AI-Interpret), an algorithm that transforms the policy learned by a reinforcement learning agent into a human-interpretable form (see Sect. 3). We begin with explaining what contribution AI-Interpret makes and then provide a birds-eye view of how its components—LPP and clustering—work together to produce human-interpretable descriptions. Afterwards, we detail our approach and present a heuristic method for choosing the number of demonstration-clusters used by AI-Interpret. In the last part of this section, we analyze the whole pipeline that uses the introduced algorithm to automatically discover interpretable planning strategies.

5.1 Technical contribution

The technical novelty of our algorithm lies in (a) giving the user control over the tradeoff between the complexity of the output policy’s description and the performance of the strategy it conveys; and (b) handling cases when the created DSL is insufficient to imitate the whole set of demonstrations, saving time-consuming fine-tuning of the DSL. We enable these components by approximating the optimization from Eq. 6. Note that neither of them is available with the baseline imitation method we employed.

Firstly, in the original formulation of LPP, the user cannot control the final form of the output other than specifying its DSL, which needs to be optimized to work well with LPP. Similarly, it is unclear how well the policy induced by LPP performs in the environment in question, and how it compares to the policy that is being interpreted. In consequence, LPP generates solutions of limited interpretability.

Secondly, LPP’s performance is highly sensitive to how well the set of domain predicates is paired with the set of demonstrations. Sometimes, this sensitivity prevents the algorithm from finding any solution, even though \(DNF({\mathcal {L}},d)\) is non-empty for some \(d\in {\mathbb {N}}\). Formally, the set of demonstrations \({\mathcal {D}}\) is equally divided into two disjoint sets \({\mathcal {D}}_1, {\mathcal {D}}_2\) where one is used for learning programs \(h_1,h_2,\ldots,h_n\), and the other gives an unbiased estimate of the likelihood \({\mathbb {P}}({\mathcal {D}}\mid h_i)\propto {\mathbb {P}}({\mathcal {D}}_2\mid h_i)\). If the predicates are too specific or too general, many, if not all the considered programs, could generate a likelihood of 0 for \({\mathcal {D}}_2\), given that they were chosen to account well for the data in \({\mathcal {D}}_1\). It can also happen that no formula can be found for \({\mathcal {D}}_1\) itself because the dataset \({\mathcal {D}}\) contains very rare examples of the policy’s behavior (rarely encountered state-action pairs) that the predicates cannot explain. For example, consider a situation where the demonstrations describe a market-trading policy. The birttleness of the market might result in capturing an idiosyncratic behavior of the policy, one which is very local and rather rare. This could break the results for LPP. It could either be because the set of predicates cannot capture the idiosyncratic behavior or that this behavior is used for estimating the likelihood of the programs. Despite the modeler’s prior knowledge, considerable optimization might be needed to obtain a set of acceptable predicates for which neither of those issues arise.

5.2 Overview of the algorithm

To enable innovations mentioned in the previous subsection, we introduce an adaptive manipulation of the dataset \({\mathcal {D}}\). Algorithm 1 revolves around LPP, but outputs an approximate solution even in situations in which LPP would not be able to find one.

Figure 3 depicts a diagram with the workflow of AI-Interpret. The computation starts with a set of demonstrations, and a domain specific language of predicates that describe the environment under consideration. The algorithm turns each of the demonstrations into a binary vector with one entry for each predicate, and with this data uses LPP to find a maximum posterior DNF formula that best explains the demonstrations. Contrary to the vanilla LPP, however, it does not stop after an unsuccessful attempt at interpretation that finds no solution or a solution that does not meet the input constraints (specified by d and \(\alpha\), among others). Instead, it searches for a subset of demonstrations that can be described by an appropriate interpretable decision rule. Concretely, AI-Interpret clusters the binary vectors into J separate sets and simultaneously assigns each a heuristic value. Intuitively, this value describes how simple it is to incorporate the demonstrations of that cluster into the final interpretable description. It then successively removes the clusters with the lowest values until LPP finds a MAP formula that is consistent with all of the remaining demonstrations and abides by the specification provided by the constraints. In this way, AI-Interpret finds a description f tihat belongs to \(F_{\alpha }^{d,{\mathcal {L}}}\) and is nearly optimal for the maximization of \(I(\cdot )\).

Flowchart of the AI-Interpret algorithm. Demonstrations are turned into feature vectors using the Domain Specific Language of predicates, and then clustered into sets encompassing some type of the policy’s behavior. The clusters are then ordered based on how interpretable they are. The LPP method tries to iteratively construct a logical formula that imitates the policy on the demonstrations and meets the input criteria. After every failed iteration, AI-Interpret removes the least interpretable cluster and the process repeats

It is important to note that the algorithm we propose can be combined with any imitation learning method that can measure the quality of the clusters’ descriptions. As such, AI-Interpret may be viewed as a general approach to finding simple policies that successfully imitate the essence of potentially incoherent set of demonstrations and to solving optimization problems similar to this in Eq. 6. In this way, AI-Interpret is able of handling sets that include some idiosyncratic or overly complicated demonstrations which do not require capturing. Moreover, by preforming imitation learning AI-Interpret can be applied to all kinds of RL policies, e.g. flat, hierarchical, metalevel, meta, etc.

5.3 Adaptive imitation-interpretation

In this section we describe the algorithm in more detail. Firstly, AI-Interpret accepts a set of parameters that affect the final quality and interpretability of the result. Secondly, it takes four important steps (see steps 2, 3, 7 and 9) that need to be elaborated on.

We begin with a short explication of the parameters. Note that as it was stated in Sect. 2.7, a logical formula f induces a policy \(\pi _f\) which assumes a uniform distribution over all the actions a accepted by f in state s, that is \(\pi _{f}(a\mid s) = \frac{1}{\left| \{a: f(s,a) = 1\}\right| }\). While describing the parameters, we will refer to this policy as the interpretable policy.

Aspiration value \(\alpha\) Parameter \(\alpha\) specifies a threshold on the expected return ratio. For an interpretable policy \(\pi _f\) to be accepted as a solution the ratio between its estimate of the expected return and the estimated expected return of the demonstrated policy has to be at least \(\alpha\)–\(\delta\) (see the tolerance parameter below).

Number of rollouts L Parameter L is a case-dependent parameter that specifies how many times to initialize a new state and run the interpretable policy in order to reliably estimate its expected return \({\mathbb {E}}(G_0^{\pi _f})\) (within the bounds specified by the tolerance parameter, see below). L should be chosen according to the problem.

Tolerance \(\delta\) The parameter \(\delta\) allows the user to express how much better a more complex decision rule would have to perform than a simpler rule to be preferable. Formally, the return ratio \(r_2\) of the simplified strategy is considered to be significantly better than the return ratio \(r_1\) of another strategy if \(r_2 - r_1 > \delta\).

Mean reward m The mean reward of policy \(\pi\) is what the interpretable policy’s return tries to match in expectation. The maximum deviation from m is controlled by the aspiration value, whereas the expected return of the interpretable policy is calculated by performing rollouts. Mean reward \(m\approx {\mathbb {E}}(G_0^{\pi })\).

Maximum size d Parameter d sets an upper bound on the size of conjunctions in formulas returned by AI-Interpret. In equivalent terms, the tree that is a graphical representation of the algorithm’s output is required to have the depth of at most d nodes. The size of the conjunctions (or depth of the tree) is a proxy for interpretability. Decreasing the depth parameter d can force the solution to use fewer predicates; this can make the formula less accurate but more interpretable. Increasing the depth may allow the method to use more predicates; this could result in overfitting and a decline of interpretability. Alongside the aspiration value and given a DSL, maximum size defines \(F_{\alpha }^{d,{\mathcal {L}}}\) from our formal definition of the problem.

Number of clusters N The number of clusters N determines how coarsely to divide the demonstrations in \({\mathcal {D}}\) based on the similarity of their predicate values. A proper division enables selecting a subset \({\mathcal {D}}_{sub}\subseteq {\mathcal {D}}\) such that \({\mathcal {D}}_{sub}\) guarantees a high probability of being captured with existing predicates, and lowers the chance of the validation set \({\mathcal {D}}_2\subset {\mathcal {D}}_{sub}\) being largely different from \({\mathcal {D}}_1\subset {\mathcal {D}}_{sub},\ {\mathcal {D}}_1\cap {\mathcal {D}}_2=\emptyset\).

Cut-size for the clusters X If a cluster contains less than X% of the demonstrations, then AI-Interpret will disregard it (see step 4). Choosing representative clusters allows to remove the outliers. Since X could be in fact kept fixed for virtually any problem, it is treated as a hyperparameter and does not constitute the input to the algorithm.

Train and formula-validation set split S Another hyperparameter of our method defines how to divide the set of demonstrations \({\mathcal {D}}\) to find a formula using one subset and compute its likelihood using the second subset. The split is applied to each cluster separately. Similarly to the cut-size, it could be kept fixed irrespective of the problem under consideration and hence does not constitute the input to AI-Interpret. Splitting is performed in each iteration by randomly dividing the clusters into train and validation sets and then using the sum of all train sets and the sum of all validation sets as inputs to LPP.

Now we move to the explanation of the steps taken by the algorithm and start with the isolated case of step 7. In the original formulation of LPP, the authors take all the actions that were not taken in a demonstration (s, a) to serve as the negative examples \((s,a'),\ a'\ne a\). Since in our problem we do have access to the policy, we use a more conservative method and select only the state-action pairs which are sub-optimal with respect to \(\pi\). This helps the algorithm find more accurate solutions.

In step 2, the algorithm uses feature vectors corresponding to predicate values and clusters them into separate subsets. It is done through hierarchical clustering with the UPGMA method (Michener & Sokal, 1957), as this method captures the intuition that there may exists a core of predicates which evaluate to the same value for the demonstrations forming the cluster, and that there might also exist irrelevant predicates making up the noise. The elements of the cluster identified by hierarchical clustering are hence well poised to capture different sub-behaviors of the demonstrated policy.

With step 3 the algorithm measures which of the clusters are indeed well described with the predicates. The Bayesian heuristic value of a cluster (Definition 6) is defined as the MAP estimate of its interpretable description found by Logical Program Policies, weighted by the size of the cluster relative to the size of the whole set. The larger the value, the more similar behavior is encompassed by the elements of the cluster, and the more representative it is. Note, that through step 3 (and after applying the cut-size in step 4) it becomes possible to rank order the clusters. In case of a failure in interpreting the policy with existing examples, the cluster with the lowest rank can be removed—see step 21. In this way, the algorithm may disregard a set of demonstrations that are not described with existing predicates as well as the others, and continue with the remaining ones.

In step 9, our algorithm uses the LPP method to extract formulas of progressively larger conjunctions, up to size d specified by the user. It then selects formulas which are not significantly worse than other found ones (according to the tolerance parameter, see step 15), and eventually chooses the formula with the fewest predicates (step 16). This allows our algorithm to consider all decision rules that could be generated for the same (incomplete) demonstration dataset, and return the best and the simplest among them.

The solution is output as soon as the expected reward of the interpreted policy is close enough to the expected reward of the original policy (step 17). If that never happens, the algorithm concludes that the set of predicates is insufficient to satisfy the input constraints.

Definition 6

(Bayesian heuristic value) For a subset \({\mathcal {C}}\subseteq {\mathcal {D}}\) extracted from a dataset of demonstrations \({\mathcal {D}}\) the Bayesian heuristic value of this set is given by:

5.4 Choosing the number of clusters

In this section, we introduce a heuristic that helps to narrow down the list of candidates for parameter N of the algorithm, that is the number of clusters.

In more detail, we adapt the popular elbow heuristic. Our version of this heuristic (see Procedure 1) allows to choose a subset of values for the number of clusters, by specifying how fine-grained the clustering is required to be to most drastically change the Clustering Value (see Definition 7). The Clustering Value of N, CV(N, X), is the sum of Bayesian heuristic values (Definition 6) of all the clusters found by hierarchical clustering with size is at least X% of the whole set. Practically, we use the same X that serves as the cut-size hyperparameter for AI-Interpret, see Sect. 5.3. A leap in the values of CV conveys that the clusters are relatively big and much better described in terms of the predicates as they were for a coarser clustering. We search for an elbow in the Clustering Values because we would like the clusters to be maximally distinct while keeping their number as small as possible. Finally, the heuristic returns a set of candidate elbows since a priori the granularity of the data revealed by the predicates is unknown.

Definition 7

(Clustering value) We define the Clustering Value function \(CV:{\mathbb {N}}\times [0,1]\rightarrow {\mathbb {R}}\) as

where V stands for the Bayesian heuristic value function, N denotes the number of clusters \(C_1,\ldots,C_N\) identified by hierarchical clustering on dataset \({\mathcal {D}}\), that is \(\bigcup _{i=1}^N{\mathcal {C}}_i={\mathcal {D}}\) and \(C_i\cap C_j=\emptyset\) for \(i\ne j\), and X stands for the cut-size value for the size of clusters measured proportionally to \({\mathcal {D}}\).

Procedure 1

(Elbow heuristic) To decide the number of clusters, fix the cut-size hyperparameter X and evaluate the Clustering Value function CV on the set of m candidate values \(N_1,\ldots,N_m\). Identify K values \(N_{i_1},\ldots,N_{i_K}\) for which \(CV(N_{i_j},X)-CV(N_{i_{j}-1},X),\ j\le K\) is the largest, i.e. identify the elbows. The elbows heuristically identify clustering solutions for which the elements within each cluster are similar to one another, can be appropriately described by the predicates, and convey that clusters are reasonably large.

If the value does not ever increase substantially, then the predicates do not capture the general structure of the data. An example of how to use the elbow heuristic is shown in Fig. 4.

5.5 Pipeline for interpretable strategy discovery

To go from a problem statement to an interpretable description of a strategy that solves that problem we take three main steps: (1) formulate the problem in formal terms, (2) discover the optimal strategy using this formulation, (3) interpret the discovered strategy. The first two steps can be broken down to sub-problems that our previous work has already solved (Callaway et al., 2018a, 2018b, 2019) and which we have described in Sect. 3. The last step is feasible through AI-Interpret. We show a pseudo-code which implements the pipeline for automatic discovery of interpretable planning strategies in Algorithm 2.

Our pipeline starts with modeling the problem as a metalevel MDP and then solving it to obtain the optimal policy. To use AI-Interpret, the found policy is used to generate a set of demonstrations. We also create a DSL of predicates that is used to provide an interpretable description of this policy. We then establish the input to AI-Interpret. The mean reward of the metalevel policy is extracted directly from its Q function taking the maximum at the initial state. The number of clusters N is identified automatically by the elbow heuristic (Procedure 1). Since the elbow heuristic returns K candidates for N, our pipeline outputs a set of K possible interpretable descriptions, each for a different clustering. Having a dataset of candidate interpretable descriptions (decision trees) output by the pipeline, one may use background knowledge or a pre-specified criterion to choose the most interpretable tree. Criteria include, but are not limited to, choosing the tree with the least amount of nodes, the interpretability ratings of human judges, or the performance of people who are assisted by alternative decision trees. Our method of extracting the final result is detailed in Sect. 6.2.

6 Improving human decision-making

Having developed a computational pipeline for discovering high-performing and easily-comprehensible decision rules, we now evaluate whether this approach meets our criteria for interpretability. As we mentioned in Sect. 3, our evaluation introduces a new standard to the field of interpretable RL by studying how the descriptions affect human decision makers. As a proof-of-concept, we test our approach on three types of planning tasks that are challenging for people (Callaway et al., 2018b; Lieder et al., 2020). The central question is whether we can support decision-makers in the process of planning by providing them with flowcharts discovered through our computational pipeline for interpretable strategy discovery (see Fig. 1). To achieve that, we perform one large behavioral experiment for each of the three types of tasks and a fourth experiment in which we evaluate our approach to improving human decision-making against conventional training. We find that our approach allows people to largely understand the automatically discovered strategies and use them to make better decisions. Importantly, we also find that our new approach to improving human decision-making is more effective than conventional training.

6.1 Planning problems

Human planning can be understood as a series of information gathering operations that update the person’s beliefs about the relative goodness of alternative courses of actions (Callaway et al., 2020). Planning a road trip, for instance, involves gathering information about the locations one might visit, estimating the value of alternative trips, and deciding when to stop planning and execute the best plan found so far. The Mouselab-MDP paradigm (Callaway et al., 2017) is a computer-based task that emulates these kinds of planning problems (see Fig. 5). It asks people to choose between multiple different paths, each of which involves a series of steps. To choose between those paths, people can gather information about how much reward they will receive for visiting alternative locations by clicking on the corresponding location. Since people’s time is valuable, gathering this information is costly (each click costs $1) but it can also improve the quality of their decisions. Therefore, a good planning strategy has to focus the decision-maker’s attentions on the most valuable pieces of information.

To test our approach to improving human decision-making, we rely on three route-planning tasks that were designed to capture important aspects of why it is difficult for people to make good decisions in real-life (Callaway et al., 2018b). For instance, the first task captures that certain steps that are very valuable in the long-run (e.g., filing taxes) are often unrewarding in the short-run whereas activities that are rewarding in the short-run (e.g., watching cat videos on YouTube) often have little value in the long-run. The three tasks have been previously used to study how people plan (Callaway et al., 2017, 2018b), to train people how to make more far-sighted decisions (Lieder et al., 2019, 2020), and to compare the effectiveness of different ways to improve human decision-making (Lieder et al., 2020).

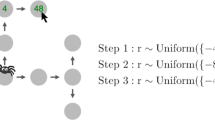

The route planning problems we presented to our participants used the tree environment illustrated in Fig. 5. The node at the bottom of this tree served as the starting node and was connected to 3 other nodes, each of those was connected to one additional node, and finally each of these single connections led to 2 further nodes. We will call this a 3-1-2 structure and refer to the nodes that can be reached in 1, 2, and 3 steps as level 1, level 2, and level 3, respectively. Callaway et al. (2018b) defined the three environments we are using in terms of the distribution of rewards for nodes on each level. These environments differ in that the uncertainty about the rewards either increases, decreases, or stays the same from each step to the next. They created three discrete sequences of discrete uniform distributions, further called variance structures. Building on these structures, we defined three types of environments. Within each type of an environment, the rewards of all nodes at the same level were drawn from the same discrete uniform distribution. As shown below, what distinguishes the environments is the assignment of reward distributions to levels (read as level : support):

-

1.

Increasing variance structure environments where

\(1: \lbrace -4, -2, 2, 4\rbrace,\ 2: \lbrace -8, -4, 4, 8\rbrace,\ 3:\lbrace -48, -24, 24, 48\rbrace\)

-

2.

Decreasing variance structure environments where

\(1: \lbrace -48, -24, 24, 48\rbrace,\ 2: \lbrace -8, -4, 4, 8\rbrace,\ 3: \lbrace -4, -2, 2, 4\rbrace\)

-

3.

Constant variance structure environments where

\(1: \lbrace -10, -5, 5, 10\rbrace,\ 2: \lbrace -10, -5, 5, 10\rbrace,\ 3: \lbrace -10, -5, 5, 10\rbrace\)

Discovering a resource-rational planning strategy corresponds to computing the optimal policy of a metalevel MDP that is exponentially more complex that the acting domain itself. In fact, the number of belief states for all our planning problems equals \(4^{12}\), that is over 16 million. This shows that optimizing a policy for Mouselab-MDP is in reality a very difficult problem. Moreover, creating a description of the optimal policy also poses scalability issues. In particular, the standard symbolic approaches that derive rules from the Q-table of the policy would result in overwhelmingly large set of rules if they accounted for even a fraction of the original metalevel belief state space.

Prior research on the constant and increasing variance environments indicates that tasks in the Mouselab-MDP paradigm come as a challenge to many people (Callaway et al., 2018b; Lieder et al., 2020). In the case of the constant variance structure, even extensive practice is not sufficient for participants to arrive at nearly-optimal strategies (Callaway et al., 2018b). We aimed to help people adopt good approximations to those strategies by the virtue of showing them a decision aid for playing a game defined in the world of Mouselab MDPs. To evaluate our method’s potential for helping people make better decisions in this game, we designed a series of online experiments with real people. In each, participants were making decisions with versus without the support of an interpretable flowchart. In the game we were studying, participants helped a monkey to climb up a tree through a path that enables it to get the highest possible reward, see Fig. 5. Their decisions regarded uncovering the hidden nodes in search of such paths, knowing that each uncovered reward takes some money away from the monkey ($1).

6.2 Designing decision aids with AI-interpret and automatic strategy discovery

To apply our interpretable strategy discovery pipeline (see Fig. 1) to the benchmark problems described in Sect. 6.1, we modeled the optimal planning strategy for each of the three types of sequential decision problems as the solution to a metalevel MDP (see Definition 2) as it was previously done by Callaway et al. (2018b). The belief state of the metalevel MDP corresponds to which rewards have been observed at which locations. The computational actions of the metalevel MDP correspond to the clicks people make to reveal additional information. The cost of those computations is the fee that participants are charged for inspecting a node. We obtained the optimal metalevel policies for the three metalevel MDPs using the simplest approach we had at our disposal, that is dynamic programming method developed by Callaway et al. (2018b). It was distinct from the original algorithm by being able to handle much larger state space typical for metalevel MDPs.

Afterwards, we employed our pipeline for automatic discovery of interpretable strategies from Algorithm 2. First, we generated three sets of 64 demonstrations by running the optimal metalevel policies on their respective metalevel MDPs. Then, we established a novel domain specific language (DSL) \({\mathcal {L}}\) to allow AI-Interpret to describe the demonstrated planning policies in logical sentences via LPP. Our DSL \({\mathcal {L}}\) supplied LPP with the basic building blocks (“words”) for describing the Mouselab environment and the demonstrated information gathering operations. In more detail, \({\mathcal {L}}\) comprised six types of predicates:

-

PRED(b, c) describes the node evaluated by computation c in belief state b,

-

AMONG(PREDS(b, c)) checks if the node evaluated by c in state b satisfies the predicate or a conjunction of predicates PREDS of the type as above,

-

\(AMONG\_PRED(b,c,list)\) checks if the node evaluated by c in state b belongs to list and possesses a special characteristic as the only one in that set (not used on its own),

-

\(AMONG(PREDS,\ AMONG\_PRED)(b,c)\) checks if in belief state b the node evaluated by c satisfies the predicate \(AMONG\_PRED\) among the nodes satisfying the predicate or a conjunction of predicates PREDS,

-

\(ALL(PREDS,\ AMONG\_PRED)(b,c)\) checks if in belief state b all the nodes which satisfy the predicate(s) PREDS also satisfy \(AMONG\_PRED\),

-

\(GENERAL\_PRED(b,c)\) detects whether some feature is present in belief state b.

A probabilistic context-free grammar with 14 base PRED predicates, 15 \(GENERAL\_PRED\) predicates, and 12 \(AMONG\_PRED\) predicates generated \({\mathcal {L}}\) according to the above-mentioned types. This resulted in a set containing a total of 14,206 elements. More information on our DSL can be found in the Supplementary Material. The predicates found in the flowcharts we used for the benchmark problems were of the following types:

\(GENERAL\_PRED,\ AMONG(PREDS)\) and \(AMONG(PREDS,\ AMONG\_PRED)\).

Other parameters necessary to employ AI-Interpret in the search of interpretable descriptions comprised number of rollouts, aspiration value, tolerance, maximum size, number of clusters, mean reward of the expert policy. Preliminary runs performed to establish the expected return of the optimal metalevel policy or of policies which behaved similarly, revealed that \(L=100{,}000\) is the number of rollouts appropriate for all the studied problems. The aspiration value \(\alpha\) was fixed at 0.7 and the tolerance parameter \(\delta\) was equal 0.025. The maximum depth d had a limit value of 5 and the number of clusters N was chosen by the elbow heuristic employed in Algorithm 2. Eventually, N was set to 18 for the increasing and constant variance environments and set to 23 for the decreasing variance environment. Clusters were created based on the output of the UPGMA hierarchical clustering with \(l_1\) distance and average linkage function (Michener and Sokal, 1957). We extracted the mean rewards of the optimal policies by inspecting their Q function in the initial belief state \(b_0\). We also used 2 hyperparameters. To reject the outliers, both in the elbow heuristic and the algorithm, any cluster whose size was less than \(X=2.5\%\) of the whole set of demonstrations was disregarded. The split S for the demonstration set to validate the formulas in each iteration was equal to 0.7.

Applying AI-Interpret with this DSL and parameters to the demonstrations induced the formulas that were most likely to have generated the selected demonstrations. These formulas were subject to inductive constraints of our DSL and the simplicity required by the listed parameters. The formal output of our pipeline (Algorithm 2) comprised a set of \(K=4\) decision trees defined in terms of logical predicates. We chose one output per decision problem by selecting the tree with the fewest nodes, breaking ties in favor of the decision tree with the lowest depth. To obtain fully comprehensible decision aids we turned those decision trees into human-interpretable flowcharts by manually translating their logical predicates into natural language questions. A translation of the \(GENERAL\_PRED\) predicates depended on the particular characteristic they were capturing. For example, a predicate is_previous_observed_max was translated as “Was the previously observed value a 48?”. The translation of AMONG predicates was constructed based on the following prototype: “Is this node/it PRED AMONG_PRED”, “Is this node/it PRED and PRED” or “Is this node/it AMONG_PRED among PRED nodes”. For instance, among(not(is_observed), has_largest_depth) was translated as “Is it on the highest level among unobserved nodes?”. The flowcharts led to two possible high-level decisions, which we named “Click it” and “Don’t click it”. The termination decision was reached when all the possible actions led to “Don’t click it” decision. The flowcharts were eventually polished in the pilot experiments by asking participants for their semantic preferences and by incorporating comments that they submitted.

By applying this procedure to the three types of sequential decision problems described above, we obtained the three flowcharts shown in Fig. 6. The flowchart for the increasing variance environment (Fig. 6a), advises people to inspect nodes of the third level until they uncover the best possible reward (\(+48\)) and then suggests to stop planning and take action. Despite being simpler, this strategy performs almost as well as the optimal metalevel policy that it imitates (39.17 points/episode vs. 39.97 points/episode). The flowchart for the decreasing variance environment (Fig. 6b) allows people to move after clicking all level 1 nodes. This strategy is also simpler that the optimal metalevel policy that it imitates, and performs almost as well (28.47 points/episode vs. 30.14 points/episode). The flowchart for the constant variance environment (Fig. 6c) instructs a person to click level 1 or 2 nodes that lie on the path with the highest expected return until observing a reward of \(+10\). Then, it suggests to click the two level 3 nodes that are above the \(+10\), and then either get back to clicking on level 1 or 2 or, if the best path is a path that passes through the \(+10\), stop planning and take action. In this case we also note that this strategy is simpler than the optimal metalevel policy that it imitates and that it once again performs almost as well (7.03 points/episode vs. 9.33 points/episode).

6.3 Evaluation in behavioral experiments

We evaluated the interpretability of the decision aids designed with the help of automatic strategy discovery and AI-Interpret in a series of 4 behavioral experiments. In the first three experiments we evaluated whether the flowcharts generated by our approach were able to improve human decision-making in the Mouselab-MDP environments with increasing variance, decreasing variance, and constant variance, respectively. In the last experiment we investigated for which environments our approach is more effective than the standard educational method of giving people feedback on their actions.

In each experiment participants were posed a series of sequential decision problems using the interface illustrated in Fig. 5. In each round, the participant’s task was to collect the highest possible sum of rewards, further called the score, by moving a monkey up a tree along one of the six possible paths. Nodes in the tree harbored positive or negative rewards and were initially occluded; they could be made visible by clicking on them for a fee of $1 or moving on top of them after planning. The participant’s score was the sum of the rewards along their chosen path minus the fees they had paid to collect information.

Our experiments focused on two outcome measures: the expected score and the click agreement. The expected score is the sum of revealed rewards on the most promising path in the round right before the participant started to move, minus the cost of his or her clicks. This is true because the expected reward of an occluded node was 0 in all of the chosen decision problems. The fact that the expected score is equal to the value of the termination operation of the corresponding metalevel MDP, makes it the most principled performance metric we could choose. It is also the most reliable measure of the participant’s decision quality because it is their expected performance across all possible environments that are consistent with the observed information. The total score, by contrast, includes additional noise due to the rewards underneath unobserved nodes. Our second outcome measure, the click agreement, quantifies a person’s understanding of the conveyed strategy by measuring how many of his or her clicks or consistent versus inconsistent with that strategy. When the participant clicked a node for which the flowchart said “Click it” this was considered a consistent click. When the participant clicked a node that the flowchart evaluated as “Don’t click it” this was considered as an inconsistent click. We defined the click agreement as the proportion of consistent clicks in relation to all performed clicks, that is

When people made fewer clicks than the flowchart suggested, then the difference between the number of clicks made by the strategy shown in the flowchart and the participant’s number of clicks was counted towards the number of inconsistent clicks. The number of clicks made by the strategy was estimated by its average number of clicks across 1000 simulations.

Differences in the click agreement between participants belonging to separate experimental groups are indicative of differences in understanding the strategy. If one group has significantly higher click agreement, it means that people belonging to that group know the strategy better than people in the other group(s). Our goal was to show that the click agreement for participants who were assisted with our flowcharts was significantly higher than the click agreement for participants not using such an assistance. This would not only show that flowcharts are interpretable, but it would also rule out the possibility that people already knew the strategy or were able to discover it themselves. Since the flowcharts our method finds convey near-optimal strategies, higher click agreement is correlated with higher performance. However, it is unclear what level of understanding is sufficient for participants to benefit from the strategy. To show that the flowcharts are not only interpretable, but interpretable enough to increase people’s performance, we additionally measured our participants’ expected score.

6.3.1 Experiment 1: improving planning in environments with increasing variance

In the first experiment, we evaluated whether the flowchart presented in Fig. 6a can improve people’s performance in sequential decision problems where the uncertainty about the reward increases from each step to the next.

Procedure Each participant was randomly assigned to either a control condition in which no strategy was taught or an experimental condition in which a flowchart conveyed the strategy. The control condition consisted of an introduction to the Mouselab-MDP paradigm including three exploration trials, a quiz to test the understanding of the instructions, ten test trials, and a short survey. We instructed participants to maximize their score using their own strategy and incentivized them by communicating to pay an undefined score-based bonus. After the experiment, they received 2 cents for each virtual dollar they had earned in the game. The experimental condition consisted of an introduction to the Mouselab-MDP paradigm including one exploration trial, an introduction to flowcharts and their terminology including two practice trials, a quiz to test the understanding of the instructions, ten test trials, and a short survey. The practice and test trials displayed a flowchart next to the path-planning problem. The flowchart used in the practice block did not convey a reward enhancing strategy to avoid a training effect, whereas the one in the test block did. We instructed participants to act according to the displayed flowchart. Specifically, participants were asked to first click all nodes for which following the flowchart led to the “Click it” decision and to then move the agent (a monkey) along the path with the largest sum of revealed rewards. To incentivize participants, they were told to receive a bonus depending on how well they followed the flowchart and they received a bonus that was proportional to their click agreement score.

Participants We recruited 172 people on Amazon Mechanical Turk (average age: 37.9 years, range: 18–69 years; 85 female). Each participant received a compensation of $0.15 plus a performance based bonus of up to $0.65. The mean duration of the experiment was 10.3 min. On average, participants needed 1.4 attempts to pass the quiz. Because not clicking is highly indicative of speeding through the experiment without engaging with the task, we excluded 15 participants (i.e., 8.72%) who did not perform any click in the test block. This yielded 78 participants for the control condition and 79 participants for the experimental condition.

Results In addition to a significant Shapiro-Wilk test for normality (\(W = .94, p < .001\)), we observed that the distribution of click agreements is highly left-skewed. Due to this reason we report the median values instead of the mean values for the click agreement and use the non-parametric Mann–Whitney U-test for statistical comparisons. The median click agreement was 46.6% \((M= 46.9\%, SD = 25.8\%)\) in the control condition and 74.1% \((M= 67.0\%, SD = 28.2\%)\) in the experimental condition (see Fig. 7a). The proportion of people who achieved the click agreement above 80% increased from 9% without the flowchart to 48% with the flowchart. Similarly, the proportion of participants who achieved the click agreement above 50% increased from 46 to 70%. A two-sided Mann–Whitney U-test revealed that the click agreements in the experimental condition were significantly higher than in the control condition \((U = 1773.5,\ p < .001)\). Thus, participants confronted with the flowchart followed its intended strategy more often than participants without the flowchart.

Automatic discovered flowchart for the increasing variance environment is interpretable and improves planning: a Proportion of people arranged in five equal sized bins based on the click agreement. b Proportion of people arranged in five equal sized bins based on the average expected score. The error bars enclose 95% confidence intervals. Orange bars represent the control group and blue bars represent the experimental group in Experiment 1

The distribution of the mean score was non-normal due to the Shapiro-Wilk test (\(W = .77, p < .001\)), hence we used the median values and the Mann–Whitney U-test again. The median expected score per trial in the control condition was \(33.25 \; (M= 28.41, SD = 13.33)\) and \(36.80 \; (M= 34.47, SD = 11.85)\) in the experimental condition. This corresponds to 83.2% and 92.1% of the score of the optimal strategy, respectively. A two-sided test showed that the expected scores in the experimental condition were significantly higher than in the control condition \((U = 2110,\ p < .001)\). Thus, the flowchart positively affected people’s planning strategies as participants assisted by the flowchart revealed more promising paths before moving than participants who acted at their own discretion.

In total, participants both understood the strategy conveyed by the flowchart (higher click agreement) and used it, which increased their scores (higher expected rewards).

6.3.2 Experiment 2: improving planning in environments with decreasing variance

In the second experiment, we evaluated whether the flowchart presented in Fig. 6b can improve people’s performance in environments where the uncertainty about the reward decreases from each step to the next.

Procedure The experimental procedure used in this study was identical to the one presented for Experiment 1 (see Sect. 6.3.1) except that the task used the decreasing variance environment instead of the increasing variance environment and that participants in the experimental condition where correspondingly shown the flowchart in Fig. 6b.

Participants We recruited 152 people on Amazon Mechanical Turk (average age 36 years, range: 20–65 years; 62 female). Each participant received a compensation of $0.50 plus a performance based bonus up to $0.50. The mean duration of the experiment was 8.2 min. The participants needed 1.7 attempts to pass the quiz on average. We excluded 6 participants (3.95%) who did not perform any click in the test block. This resulted in 70 participants for the control condition and 76 participants for the experimental condition.

Results Similarly to Experiment 1, Shapiro–Wilk tests revealed that the dependent variables in Experiment 2 were not normally distributed (\(W = .94, p < .001\) for the click-agreement and \(W = .98, p = .019\) for the expected scores). Hence, we report the median values and the results of the Mann–Whitney U-test here as well. The median click agreement in the control condition was \(44.7\% \; (M= 48.6\%, SD = 19.8\%)\) and \(65.7\% \; (M= 70.5\%, SD = 24.0\%)\) in the experimental condition (see Fig. 8a). The proportion of people who achieved the click agreement above 80% increased from 8.57% without the flowchart to 36.84% with the flowchart. Similarly, the proportion of participants who achieved the click agreement above 50% increased from 35.71 to 81.57%. Participants confronted with the flowchart followed its intended strategy significantly more often than participants without the flowchart \((U = 1221.0,\ p < .001)\).

Automatic discovered flowchart for the decreasing variance environment is interpretable: a Proportion of people arranged in five equal sized bins based on the click agreement. b Proportion of people arranged in five equal sized bins based on the average expected score. The error bars enclose 95% confidence intervals. Orange bars represent the control group and blue bars represent the experimental group in Experiment 2

The median expected score per trial was \(22.85 \; (M= 22.75, SD = 8.18)\) in the control condition and \(24.45 \; (M= 24.02, SD = 7.38)\) in the experimental condition. This corresponds to 75.8% and 81.1% of the score of the optimal strategy, respectively. Although the difference between the experimental condition and the control condition was not statistically significant (\((U = 2384.0,\ p = .140)\)), higher click agreement was significantly correlated with higher expected score (\(r(144) = .36, p < .001\)).