Abstract

We propose a geometric theory of non-equilibrium thermodynamics, namely geometric thermodynamics, using our recent developments of differential-geometric aspects of entropy production rate in non-equilibrium thermodynamics. By revisiting our recent results on geometrical aspects of entropy production rate in stochastic thermodynamics for the Fokker–Planck equation, we introduce a geometric framework of non-equilibrium thermodynamics in terms of information geometry and optimal transport theory. We show that the proposed geometric framework is useful for obtaining several non-equilibrium thermodynamic relations, such as thermodynamic trade-off relations between the thermodynamic cost and the fluctuation of the observable, optimal protocols for the minimum thermodynamic cost and the decomposition of the entropy production rate for the non-equilibrium system. We clarify several stochastic-thermodynamic links between information geometry and optimal transport theory via the excess entropy production rate based on a relation between the gradient flow expression and information geometry in the space of probability densities and a relation between the velocity field in optimal transport and information geometry in the space of path probability densities.

1 Introduction

A geometric interpretation of thermodynamics originates from the geometric picture of the thermodynamic potential proposed by W. Gibbs in equilibrium thermodynamics and chemical thermodynamics [1]. In non-equilibrium thermodynamics, second-order thermodynamic fluctuations around the equilibrium or steady state have been studied [2,3,4,5,6]. A differential geometry for equilibrium thermodynamics has been proposed by Weinhold [7] and Ruppeiner [8] by considering the fluctuation around the equilibrium state, and the length called thermodynamic length in Weinhold geometry has been proposed to quantify the dissipated availability [9]. Because this geometry for equilibrium thermodynamics is based on the second-order fluctuation of entropy, its generalization [10, 11] has been regarded as information geometry [12, 13], which is the differential geometry for the Fisher metric [14].

In recent years, differential geometry for non-equilibrium thermodynamics, especially for stochastic thermodynamics [15, 16] and non-equilibrium chemical thermodynamics [17, 18], has been used to investigate mathematical properties of entropy production for non-equilibrium transitions and fluctuations around non-equilibrium steady states [11, 19,20,21,22,23,24,25,26,27,28,29,30,31,32]. Because stochastic thermodynamics is based on stochastic processes [33] such as the Fokker–Planck equation [34], differential geometry for non-equilibrium thermodynamics is related to information geometry [12, 13] and optimal transport theory [35, 36].

In this paper, we summarize our recent development of differential geometry for non-equilibrium thermodynamics [22, 24, 25, 29, 31, 37,38,39,40,41,42,43,44,45] and propose several relations between these studies by focusing on non-equilibrium dynamics of the Fokker–Planck equation. Because entropy production for the Fokker–Planck equation can be discussed from the viewpoint of both information geometry and optimal transport theory, these relations provide links between information geometry and optimal transport theory. Our proposed geometrical framework for non-equilibrium thermodynamics, namely geometric thermodynamics, offers a new perspective on links between information geometry and optimal transport theory [46,47,48,49] and the unification of non-equilibrium thermodynamic geometry.

2 Fokker–Planck equation and stochastic thermodynamics

2.1 Setup

We consider the time evolution of a probability density described by the (over-damped) Fokker–Planck equation. Let \(t \in {\mathbb {R}}\) and \({\varvec{x}} \in {\mathbb {R}}^d\) (\(d \in {\mathbb {N}}\)) be time and the d-dimensional position, respectively. The probability density of \({\varvec{x}}\) at time t will be denoted by \(P_t({\varvec{x}})\), which satisfies \(P_t({\varvec{x}}) \ge 0\) and \(\int d{\varvec{x}} P_t({\varvec{x}}) = 1\). The Fokker–Planck equation is given by the following continuity equation,

\[\partial _t P_t({\varvec{x}}) = - \nabla \cdot \left( \varvec{\nu }_t({\varvec{x}}) P_t({\varvec{x}}) \right) , \quad \varvec{\nu }_t({\varvec{x}}) = \mu \left( {\varvec{F}}_t({\varvec{x}}) - T \nabla \ln P_t({\varvec{x}}) \right) . \tag{1}\]

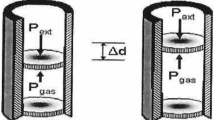

Here, \(\varvec{\nu }_t({\varvec{x}}) \in {\mathbb {R}}^d\) and \({\varvec{F}}_t({\varvec{x}}) \in {\mathbb {R}}^d\) are the vector functions at position \({\varvec{x}}\), \(\mu \in {\mathbb {R}}_{>0}\) and \(T \in {\mathbb {R}}_{>0}\) are positive constants, and \(\nabla \cdot \) and \(\nabla \) are the divergence and the gradient operators, respectively. Physically, the Fokker–Planck equation is used to describe the time evolution of the probability density of an over-damped Brownian particle. For Brownian motion, \(\mu \), T, and \({\varvec{F}}_t({\varvec{x}})\) physically represent the mobility of the Brownian particle, the temperature of the medium scaled by the Boltzmann constant, and the force on the Brownian particle, respectively [33]. The vector field \(\varvec{\nu }_t({\varvec{x}})\) is called the mean local velocity because it quantifies the ensemble average of the Brownian particle’s velocity in \({\varvec{x}}\) at time t [16].

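This Fokker–Planck equation corresponds to the over-damped Langevin equation, which describes the position of the Brownian particle \({\varvec{X}}(t) \in {\mathbb {R}}^d\) at time t, that is

\[\dot{{\varvec{X}}}(t) = \mu {\varvec{F}}_t({\varvec{X}}(t)) + \sqrt{2\mu T} \varvec{\xi } (t). \tag{2}\]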

Here, \(\dot{{\varvec{X}}}(t)\) is the time derivative of position \({\varvec{X}}(t)\), and \((\varvec{\xi } (t))_i = {\xi }_i (t)\) \((i \in \{1, 2, \ldots , d \})\) is the white Gaussian noise that satisfies \(\langle {\xi }_i (t) {\xi }_j (t')\rangle = \delta _{ij} \delta (t-t')\) and \(\langle {\xi }_i (t) \rangle = 0\), where \(\langle \cdot \rangle \), \(\delta _{ij}\), and \(\delta (t-t')\) stand for the ensemble average, the Kronecker delta, and the delta function, respectively \((j \in \{1, 2, \ldots , d \})\). The product of \(\sqrt{2\mu T}\) and \(\varvec{\xi } (t)\) is given by the Itô integral. Mathematically, this correspondence between the Fokker–Planck equation and the over-damped Langevin equation indicates that these two descriptions provide the same transition probability density from position \({\varvec{X}}(\tau )= {\varvec{x}}_{\tau }\) to position \({\varvec{X}}(\tau +dt)= {\varvec{x}}_{\tau +dt}\) during the positive infinitesimal time interval \(dt>0\). The transition probability density from \({\varvec{x}}_{\tau }\) to \({\varvec{x}}_{\tau +dt}\) is given by the Onsager–Machlup theory [33],

\[{\mathbb {T}}_{\tau }({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) = \frac{1}{(4 \pi \mu T dt)^{d/2}} \exp \left[ - \frac{\Vert {\varvec{x}}_{\tau +dt} - {\varvec{x}}_{\tau } - \mu {\varvec{F}}_{\tau }({\varvec{x}}_{\tau }) dt \Vert ^2}{4 \mu T dt} \right] , \tag{3}\]

where \(\Vert \cdot \Vert \) stands for the \(L^2\) norm, and the transition probability density satisfies \(\int d{\varvec{x}}_{\tau +dt} {\mathbb {T}}_{\tau }({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau })=1\) and \({\mathbb {T}}_{\tau }({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) \ge 0\). Let \({\mathcal {X}}_{\tau }\) be the random variable corresponding to state \({\varvec{x}}_{\tau }\). The joint probability of \({\mathcal {X}}_{\tau +dt}\) and \({\mathcal {X}}_{\tau }\) being in \({\varvec{x}}_{\tau +dt}\) and \({\varvec{x}}_{\tau }\) is defined as

\[{\mathbb {P}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) = {\mathbb {T}}_{\tau }({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) P_{\tau }({\varvec{x}}_{\tau }), \tag{4}\]

which satisfies \(\int d {\varvec{x}}_{\tau +dt} d{\varvec{x}}_{\tau } {\mathbb {P}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) =1\) and \({\mathbb {P}} ({\varvec{x}}_{\tau +dt} , {\varvec{x}}_{\tau }) \ge 0\). This joint probability \({\mathbb {P}}\) is called the forward path probability density because it is the probability of the forward path from time \(t=\tau \) to time \(t= \tau +dt\).
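The correspondence between the over-damped Langevin equation and the Fokker–Planck equation can be illustrated numerically. The following is a minimal sketch, not part of the original analysis: it assumes a one-dimensional harmonic force \(F(x) = -kx\) and a Gaussian initial density (both illustrative choices), integrates the Langevin equation with the Euler–Maruyama scheme, and compares the empirical variance with the variance \(s(t)\) of the Gaussian solution of the Fokker–Planck equation, which obeys \(ds/dt = 2\mu (T - ks)\). All function names and parameter values are hypothetical.

```python
import math
import random


def simulate_variance(mu=1.0, T=1.0, k=2.0, s0=2.0, dt=0.005,
                      steps=200, n_particles=5000, seed=0):
    """Euler-Maruyama integration of dX = mu*F(X) dt + sqrt(2 mu T) dW
    with the assumed force F(x) = -k x. Returns the empirical variance."""
    rng = random.Random(seed)
    noise_std = math.sqrt(2.0 * mu * T * dt)  # std of the Gaussian increment
    xs = [rng.gauss(0.0, math.sqrt(s0)) for _ in range(n_particles)]
    for _ in range(steps):
        xs = [x - mu * k * x * dt + noise_std * rng.gauss(0.0, 1.0) for x in xs]
    mean = sum(xs) / n_particles
    return sum((x - mean) ** 2 for x in xs) / n_particles


def exact_variance(t, mu=1.0, T=1.0, k=2.0, s0=2.0):
    """Variance of the Gaussian Fokker-Planck solution: ds/dt = 2 mu (T - k s)."""
    s_eq = T / k
    return s_eq + (s0 - s_eq) * math.exp(-2.0 * mu * k * t)


empirical = simulate_variance()
analytic = exact_variance(t=200 * 0.005)
print(f"empirical variance {empirical:.4f}, Fokker-Planck variance {analytic:.4f}")
```

The two values should agree up to sampling noise and the \(O(dt)\) discretization error of the scheme.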

2.2 Entropy production rate

We introduce stochastic thermodynamics [15, 16], which is a framework for non-equilibrium thermodynamics described by a stochastic process such as the Fokker–Planck equation. In stochastic thermodynamics, the entropy production rate is introduced as a measure of thermodynamic dissipation [34]. The entropy production rate is defined as follows.

Definition 1

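For the Fokker–Planck equation (1), the entropy production rate at time \(\tau \) is defined as

\[\sigma _{\tau } = \frac{1}{\mu T} \int d{\varvec{x}} \Vert \varvec{\nu }_{\tau }({\varvec{x}}) \Vert ^2 P_{\tau }({\varvec{x}}). \tag{5}\]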

Remark 1

This entropy production rate is non-negative by definition, and its non-negativity \(\sigma _{\tau } \ge 0\) is known as the second law of thermodynamics [16].

Remark 2

The entropy production rate is regarded as the sum of the entropy changes in the heat bath and the system [16]. If we assume that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity, the entropy production rate can be rewritten as

where we used Eq. (1), \(\int d {\varvec{x}} P_{\tau } ({\varvec{x}})( \partial _{\tau } \ln {P}_{\tau } ({\varvec{x}})) =\partial _{\tau } \int d {\varvec{x}} P_{\tau } ({\varvec{x}}) = 0\), and \(\int d {\varvec{x}} \nabla \cdot ( \varvec{\nu }_{\tau } ({\varvec{x}}) P_{\tau } ({\varvec{x}}) \ln P_{\tau } ({\varvec{x}}) )=0\) because of the assumption that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. The term

is the time derivative of the differential entropy [50], which is regarded as the entropy change of the system. The term

is the heat dissipation rate and \(- {\dot{Q}}_{\tau }/ T\) is regarded as the entropy change of the heat bath. Thus, the entropy production rate is given by the sum of the entropy changes in the heat bath and the system,

Its non-negativity \(\sigma _{\tau } \ge 0\) provides the Clausius inequality for the Fokker–Planck equation,

which is an expression of the second law of thermodynamics.
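The decomposition in Remark 2 can be checked in closed form for a simple example. The sketch below is an illustration under stated assumptions, not the paper's own computation: it takes a one-dimensional harmonic force \(F(x) = -kx\) and a Gaussian density \(P_{\tau } = {\mathcal {N}}(0, s)\), for which \(\nu _{\tau }(x) = \mu (T/s - k)x\). It further assumes the standard expressions \(\sigma _{\tau } = (\mu T)^{-1} \int dx\, \nu _{\tau }^2 P_{\tau }\) and \({\dot{Q}}_{\tau } = - \int dx\, F_{\tau } \nu _{\tau } P_{\tau }\), with the sign chosen so that \(-{\dot{Q}}_{\tau }/T\) is the entropy change of the heat bath. The function name is hypothetical.

```python
import math


def entropy_balance(s, mu=1.0, T=1.0, k=2.0):
    """Entropy production rate, system entropy rate and heat rate for
    P = N(0, s) and the assumed force F(x) = -k x (one dimension)."""
    c = mu * (T / s - k)              # mean local velocity nu(x) = c * x
    sigma = c * c * s / (mu * T)      # (1/(mu T)) * E[nu^2], using E[x^2] = s
    s_dot = 2.0 * mu * (T - k * s)    # variance dynamics of the Gaussian solution
    entropy_rate = s_dot / (2.0 * s)  # d/dt of the entropy 0.5*ln(2*pi*e*s)
    heat_rate = k * c * s             # Q_dot = -E[F * nu] (assumed sign convention)
    return sigma, entropy_rate, heat_rate


for s in (0.2, 0.5, 2.0):
    sigma, entropy_rate, heat_rate = entropy_balance(s)
    print(f"s={s}: sigma={sigma:.4f}, dS/dt - Q_dot/T={entropy_rate - heat_rate:.4f}")
```

At \(s = T/k\) the density is the equilibrium one, so all three rates vanish; away from it the entropy production rate is strictly positive.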

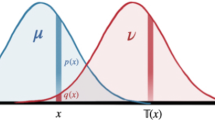

2.3 Kullback–Leibler divergence and entropy production rate

We introduce an expression of the entropy production rate in terms of the Kullback–Leibler divergence, which was discussed in the context of the fluctuation theorem [16, 51,52,53]. We assume that the parity of state \({\varvec{x}}_{\tau }\) is even; in other words, the sign of \({\varvec{x}}_{\tau }\) does not change under the time reversal transformation. Let \({\mathbb {P}}^{\dagger } ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })\) be the backward path probability density defined as \({\mathbb {P}}^{\dagger } ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })= {\mathbb {T}}_{\tau } ({\varvec{x}}_{\tau } \mid {\varvec{x}}_{\tau +dt}) P_{\tau +dt}({\varvec{x}}_{\tau +dt})\). Now, we consider the Kullback–Leibler divergence between \({\mathbb {P}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })\) and \({\mathbb {P}}^{\dagger } ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })\) defined as

\[D_\textrm{KL}({\mathbb {P}} \Vert {\mathbb {P}}^{\dagger }) = \int d{\varvec{x}}_{\tau +dt} d{\varvec{x}}_{\tau } {\mathbb {P}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) \ln \frac{{\mathbb {P}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })}{{\mathbb {P}}^{\dagger } ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })}. \tag{11}\]

The entropy production rate \(\sigma _{\tau }\) is given by this Kullback–Leibler divergence as follows.

Lemma 1

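The entropy production rate \(\sigma _{\tau }\) is given by

\[\sigma _{\tau } = \lim _{dt \rightarrow 0} \frac{D_\textrm{KL}({\mathbb {P}} \Vert {\mathbb {P}}^{\dagger })}{dt}. \tag{12}\]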

Proof We rewrite the Kullback–Leibler divergence \(D_\textrm{KL}(\mathbb {P} \Vert \mathbb {P}^{\dagger })\) as

where \(O(dt^2)\) means the term that satisfies \(\lim _{dt \rightarrow 0} O(dt^2)/dt=0\) and we used \(\int d\varvec{x}_{\tau } \mathbb {P} (\varvec{x}_{\tau +dt}, \varvec{x}_{\tau }) = P_{\tau +dt} (\varvec{x}_{\tau +dt})\) and \(\int d \varvec{x}_{\tau +dt} \partial _{\tau '} P_{\tau '} (\varvec{x}_{\tau +dt}) \mid _{\tau ' =\tau +dt} =0\). From Eq. (3), we obtain

where \(\circ \) stands for the Stratonovich integral defined as \((\varvec{x}_{\tau +dt} - \varvec{x}_{\tau } ) \circ \varvec{\nu }_{\tau } (\varvec{x}_{\tau }) =(\varvec{x}_{\tau +dt} - \varvec{x}_{\tau } ) \cdot \varvec{\nu }_{\tau } ([\varvec{x}_{\tau } + \varvec{x}_{\tau +dt}]/2)\), O(dt) means the term that satisfies \(\lim _{dt \rightarrow 0} O(dt)=0\) and \(O( \Vert \varvec{x}_{\tau +dt} - \varvec{x}_{\tau } \Vert ^2 )\) is the higher order term which satisfies \(\int d\varvec{x}_{\tau +dt } \mathbb {T}_{\tau }(\varvec{x}_{\tau +dt} \mid \varvec{x}_{\tau }) O( \Vert \varvec{x}_{\tau +dt} - \varvec{x}_{\tau } \Vert ^2 )= O(dt)\). Because the Gaussian integral provides

we obtain

Remark 3

The Kullback–Leibler divergence is always non-negative \(D_\textrm{KL}({\mathbb {P}} \Vert {\mathbb {P}}^{\dagger }) \ge 0\) and zero if and only if \({\mathbb {P}}={\mathbb {P}}^{\dagger }\). Thus, \(\sigma _{\tau }= 0\) if and only if \({\mathbb {P}}={\mathbb {P}}^{\dagger }\). Physically, \({\mathbb {P}}={\mathbb {P}}^{\dagger }\) means the reversibility of stochastic dynamics, and \(\sigma _{\tau }= 0\) means that the system is in equilibrium.

Remark 4

The time integral of the entropy production rate \(\Sigma (\tau ';\tau ) = \int _{\tau }^{\tau '} dt \sigma _{t}\) is called the entropy production from time \(t=\tau \) to time \(t=\tau '\). Lemma 1 implies that the Kullback–Leibler divergence \(D_\textrm{KL}({\mathbb {P}} \Vert {\mathbb {P}}^{\dagger })\) is equivalent to the entropy production from time \(t=\tau \) to \(t=\tau +dt\) up to \(O(dt^2)\),

\[\Sigma (\tau +dt;\tau ) = \sigma _{\tau } dt + O(dt^2) = D_\textrm{KL}({\mathbb {P}} \Vert {\mathbb {P}}^{\dagger }) + O(dt^2), \tag{17}\]

where \(O(dt^2)\) means a term that satisfies \(\lim _{dt \rightarrow 0} O(dt^2)/dt =0\).

Remark 5

Because the Fokker–Planck equation describes a Markov process, the increments are independent and the results in this paper can be generalized to the entire path from \(t=0\) to \(t = \tau \). Thus, the results for the entropy production rate \(\sigma _{\tau }\) in this paper can be generalized to the entropy production \(\Sigma (\tau ;0)\) based on the expression,

where \(\hat{{\mathbb {P}}}\) and \(\hat{{\mathbb {P}}}^{\dagger }\) are the forward and backward path probability densities for the entire path \(\Gamma = ({\varvec{x}}_{Ndt}, \ldots ,{\varvec{x}}_{2dt},{\varvec{x}}_{dt}, {\varvec{x}}_{0})\) with \(Ndt =\tau \), defined as \(\hat{{\mathbb {P}}}(\Gamma ) = \prod _{i=1}^{N} {\mathbb {T}}_{(i-1)dt} ({\varvec{x}}_{idt} \mid {\varvec{x}}_{(i-1)dt}) P_{0} ({\varvec{x}}_0)\) and \(\hat{{\mathbb {P}}}^{\dagger }(\Gamma ) = \prod _{i=1}^{N} {\mathbb {T}}_{(i-1)dt} ({\varvec{x}}_{(i-1)dt} \mid {\varvec{x}}_{idt}) P_{\tau } ({\varvec{x}}_{\tau })\), respectively. The Kullback–Leibler divergence is defined as \(D_\textrm{KL}(\hat{{\mathbb {P}}} \Vert \hat{{\mathbb {P}}}^{\dagger })= \int d \Gamma \hat{{\mathbb {P}}}(\Gamma ) \ln [\hat{{\mathbb {P}}}(\Gamma )/\hat{{\mathbb {P}}}^{\dagger }(\Gamma )]\).

This link between the entropy production rate and the Kullback–Leibler divergence in Lemma 1 leads to an information-geometric interpretation of the entropy production rate.
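The statement of Lemma 1 can be probed numerically in a linear example. The sketch below is illustrative only and rests on several assumptions: a one-dimensional harmonic force \(F(x) = -kx\), a Gaussian density \(P_{\tau } = {\mathcal {N}}(0, s)\), and the Gaussian short-time transition density with mean \(x_{\tau } + \mu F(x_{\tau }) dt\) and variance \(2 \mu T dt\). Under these assumptions both \({\mathbb {P}}\) and \({\mathbb {P}}^{\dagger }\) are zero-mean bivariate Gaussians in \((x_{\tau +dt}, x_{\tau })\), so their Kullback–Leibler divergence has a closed form that can be compared with \(\sigma _{\tau } dt\), where \(\sigma _{\tau } = \mu (T - ks)^2/(sT)\) for this example. All names are hypothetical.

```python
import math


def kl_gauss2(cov_p, cov_q):
    """D_KL(P || Q) for zero-mean bivariate Gaussians with 2x2 covariances."""
    (p11, p12), (p21, p22) = cov_p
    (q11, q12), (q21, q22) = cov_q
    det_p = p11 * p22 - p12 * p21
    det_q = q11 * q22 - q12 * q21
    # trace(cov_q^{-1} cov_p) from the explicit 2x2 inverse
    trace = (q22 * p11 - q12 * p21 - q21 * p12 + q11 * p22) / det_q
    return 0.5 * (trace - 2.0 + math.log(det_q / det_p))


def forward_backward_cov(s, dt, mu=1.0, T=1.0, k=2.0):
    """Covariances of (x_{tau+dt}, x_tau) under the forward and backward
    path densities for the assumed linear force F(x) = -k x."""
    a = 1.0 - mu * k * dt          # x_{tau+dt} = a * x_tau + noise
    D = 2.0 * mu * T * dt          # variance of the noise increment
    s_next = a * a * s + D         # variance of P_{tau+dt}
    cov_forward = ((s_next, a * s), (a * s, s))
    cov_backward = ((s_next, a * s_next), (a * s_next, a * a * s_next + D))
    return cov_forward, cov_backward


mu, T, k, s = 1.0, 1.0, 2.0, 2.0
sigma = mu * (T - k * s) ** 2 / (s * T)
for dt in (1e-2, 1e-3, 1e-4):
    cov_f, cov_b = forward_backward_cov(s, dt, mu, T, k)
    print(f"dt={dt}: D_KL/dt={kl_gauss2(cov_f, cov_b) / dt:.5f}, sigma={sigma:.5f}")
```

The ratio \(D_\textrm{KL}/dt\) approaches \(\sigma _{\tau }\) as \(dt\) decreases, with a deviation that shrinks linearly in \(dt\).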

3 Information geometry and entropy production

3.1 Projection theorem and entropy production

We discuss an information-geometric interpretation of the entropy production based on the projection theorem [13], which is obtained for a general Markov jump process in Ref. [25]. The entropy production can be understood in terms of the information-geometric projection onto the backward manifold defined as follows.

Definition 2

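Let \({\mathbb {Q}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })\) be a probability density that satisfies \({\mathbb {Q}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) \ge 0\) and \(\int d{\varvec{x}}_{\tau +dt} d{\varvec{x}}_{\tau } {\mathbb {Q}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) =1\). The set of probability densities

\[{\mathcal {M}}_\textrm{B} ({\mathbb {P}}) = \left\{ {\mathbb {Q}} \; \middle| \; {\mathbb {Q}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) = {\mathbb {T}}_{\tau }({\varvec{x}}_{\tau } \mid {\varvec{x}}_{\tau +dt}) Q_{\tau +dt}({\varvec{x}}_{\tau +dt}), \; Q_{\tau +dt}({\varvec{x}}_{\tau +dt}) = \int d{\varvec{x}}_{\tau } {\mathbb {Q}} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) \right\} \tag{19}\]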

is called the backward manifold.

Remark 6

\({\mathcal {M}}_\textrm{B} ({\mathbb {P}})\) depends on \({\mathbb {P}}\) because \({\mathcal {M}}_\textrm{B} ({\mathbb {P}})\) depends on \({\mathbb {T}}_{\tau }\). \({\mathbb {T}}_{\tau }\) is given by the function of \({\mathbb {P}}\) such that \({\mathbb {T}}_{\tau } ( {\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) = {\mathbb {P}}({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })/ [\int d {\varvec{x}}_{\tau +dt} {\mathbb {P}}({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })]\) and \({\mathbb {T}}_{\tau } ( {\varvec{x}}_{\tau } \mid {\varvec{x}}_{\tau +dt})\) is given by the change of variables.

Remark 7

\({\mathbb {P}}^{\dagger } \in {\mathcal {M}}_\textrm{B}({\mathbb {P}})\) because \({\mathbb {P}}^{\dagger } ( {\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })={\mathbb {T}}_{\tau }({\varvec{x}}_{\tau } \mid {\varvec{x}}_{\tau +dt}) P_{\tau +dt}({\varvec{x}}_{\tau +dt})\) and \(P_{\tau +dt}({\varvec{x}}_{\tau +dt})= \int d {\varvec{x}}_{\tau } {\mathbb {P}}^{\dagger } ( {\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })\).

This backward path probability density \({\mathbb {P}}^{\dagger }\) is given by the information-geometric projection from \({\mathbb {P}}\) onto \({\mathcal {M}}_\textrm{B}({\mathbb {P}})\). This information-geometric projection is formulated based on the following generalized Pythagorean theorem.

Lemma 2

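For any \({\mathbb {Q}} \in {\mathcal {M}}_\textrm{B}({\mathbb {P}})\), the generalized Pythagorean theorem

\[D_\textrm{KL}({\mathbb {P}} \Vert {\mathbb {Q}}) = D_\textrm{KL}({\mathbb {P}} \Vert {\mathbb {P}}^{\dagger }) + D_\textrm{KL}({\mathbb {P}}^{\dagger } \Vert {\mathbb {Q}}) \tag{20}\]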

holds.

Proof

\({\mathbb {Q}} \in {\mathcal {M}}_\textrm{B}({\mathbb {P}})\) is given by \({\mathbb {Q}} ( {\varvec{x}}_{\tau + dt}, {\varvec{x}}_{\tau }) = {\mathbb {T}}_{\tau }({\varvec{x}}_{\tau } \mid {\varvec{x}}_{\tau +dt}) Q_{\tau + dt} ({\varvec{x}}_{\tau + dt})\) where \(Q_{\tau + dt} ({\varvec{x}}_{\tau + dt}) = \int d {\varvec{x}}_{\tau } {\mathbb {Q}} ({\varvec{x}}_{\tau + dt}, {\varvec{x}}_{\tau })\). Thus,

where we used \(\int d {\varvec{x}}_{\tau +dt}{\mathbb {T}}_{\tau }({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) = 1\), \(\int d {\varvec{x}}_{\tau }{\mathbb {T}}_{\tau }({\varvec{x}}_{\tau } \mid {\varvec{x}}_{\tau +dt}) = 1\), and \(P_{\tau +dt} ({\varvec{x}}_{\tau +dt})/ Q_{\tau + dt} ({\varvec{x}}_{\tau + dt}) = {\mathbb {P}}^{\dagger } ({\varvec{x}}_{\tau + dt},{\varvec{x}}_{\tau })/ {\mathbb {Q}} ({\varvec{x}}_{\tau + dt},{\varvec{x}}_{\tau })\). \(\square \)

Remark 8

The generalized Pythagorean theorem Eq. (20) can be rewritten as

Information-geometrically, Eq. (22) implies that the m-geodesic between two points \({\mathbb {P}}\) and \({\mathbb {P}}^{\dagger }\) is orthogonal to the e-geodesic between two points \({\mathbb {P}}^{\dagger }\) and \({\mathbb {Q}}\) [12]. The definition of the m-geodesic between two points \({\mathbb {P}}\) and \({\mathbb {P}}^{\dagger }\) is given by \((1- \theta ) {\mathbb {P}} + \theta {\mathbb {P}}^{\dagger }\), where \(\theta \) is an affine parameter \(\theta \in [0,1]\). The definition of the e-geodesic between two points \({\mathbb {P}}^{\dagger }\) and \({\mathbb {Q}}\) is also given by \((1- \theta ) \ln {\mathbb {P}}^{\dagger } + \theta \ln {\mathbb {Q}}\).

The orthogonality based on the generalized Pythagorean theorem leads to the projection theorem, which provides a minimization problem of the Kullback–Leibler divergence. Thus, Lemma 2 implies that the entropy production rate can be obtained from a minimization problem of the Kullback–Leibler divergence.

Theorem 3

The entropy production rate \(\sigma _{\tau }\) is given by the minimization problem,

The entropy production \(\Sigma (\tau +dt;\tau )\) is also given by the minimization problem,

Proof

From Lemma 2, Eq. (20) implies

because \(D_\textrm{KL}({\mathbb {P}}^{\dagger } \Vert {\mathbb {Q}}) \ge 0\) and \({\mathbb {P}}^{\dagger } \in {\mathcal {M}}_\textrm{B}({\mathbb {P}})\). By combining Eqs. (12) and (17) with Eq. (25), we obtain Eqs. (23) and (24), respectively. \(\square \)

Thus, Theorem 3 implies that the entropy production \(\Sigma (\tau +dt;\tau )\) can be obtained from the information-geometric projection onto the backward manifold \({\mathcal {M}}_\textrm{B}({\mathbb {P}})\). This result is helpful in estimating the entropy production \(\Sigma (\tau +dt;\tau )\) numerically by calculating the optimization problem to minimize the Kullback–Leibler divergence \(D_\textrm{KL}({\mathbb {P}}\Vert {\mathbb {Q}})\).
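The projection picture can be illustrated with a small numerical experiment. The sketch below is an assumption-laden illustration rather than a general estimator: it uses a one-dimensional harmonic force \(F(x) = -kx\), a Gaussian \(P_{\tau } = {\mathcal {N}}(0, s)\), and the Gaussian short-time transition density, and it searches only over the Gaussian members \({\mathbb {Q}}({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) = {\mathbb {T}}_{\tau }({\varvec{x}}_{\tau } \mid {\varvec{x}}_{\tau +dt}) Q_{\tau +dt}({\varvec{x}}_{\tau +dt})\) with \(Q_{\tau +dt} = {\mathcal {N}}(0, q)\). It checks that the minimizer of \(D_\textrm{KL}({\mathbb {P}} \Vert {\mathbb {Q}})\) over \(q\) is the variance of \(P_{\tau +dt}\), that is \({\mathbb {Q}} = {\mathbb {P}}^{\dagger }\), and that the generalized Pythagorean relation holds along the family. All names are hypothetical.

```python
import math


def kl_gauss2(cov_p, cov_q):
    """D_KL(P || Q) for zero-mean bivariate Gaussians with 2x2 covariances."""
    (p11, p12), (p21, p22) = cov_p
    (q11, q12), (q21, q22) = cov_q
    det_p = p11 * p22 - p12 * p21
    det_q = q11 * q22 - q12 * q21
    trace = (q22 * p11 - q12 * p21 - q21 * p12 + q11 * p22) / det_q
    return 0.5 * (trace - 2.0 + math.log(det_q / det_p))


def kl_gauss1(var_p, var_q):
    """D_KL between zero-mean one-dimensional Gaussians."""
    return 0.5 * (var_p / var_q - 1.0 + math.log(var_q / var_p))


mu, T, k, s, dt = 1.0, 1.0, 2.0, 2.0, 1e-3
a = 1.0 - mu * k * dt           # linear drift factor of the transition density
D = 2.0 * mu * T * dt           # noise variance of the transition density
s_next = a * a * s + D          # variance of P_{tau+dt}
cov_forward = ((s_next, a * s), (a * s, s))   # order: (x_{tau+dt}, x_tau)


def backward_manifold_cov(q):
    """Covariance of Q(x', x) = T(x | x') Q'(x') with Q' = N(0, q)."""
    return ((q, a * q), (a * q, a * a * q + D))


# Grid search over the marginal variance q of the candidate Q.
grid = [s_next * (0.5 + 0.0005 * i) for i in range(2001)]
q_best = min(grid, key=lambda q: kl_gauss2(cov_forward, backward_manifold_cov(q)))
kl_min = kl_gauss2(cov_forward, backward_manifold_cov(q_best))
kl_backward = kl_gauss2(cov_forward, backward_manifold_cov(s_next))

# Pythagorean check for an arbitrary member of the family (q = 1.0).
q_test = 1.0
pythagoras_gap = (kl_gauss2(cov_forward, backward_manifold_cov(q_test))
                  - kl_backward - kl_gauss1(s_next, q_test))
print(f"q_best={q_best:.6f}, s_next={s_next:.6f}, min KL={kl_min:.6e}")
print(f"Pythagorean gap={pythagoras_gap:.2e}")
```

Here \(D_\textrm{KL}({\mathbb {P}}^{\dagger } \Vert {\mathbb {Q}})\) reduces to the divergence between the marginals of \({\varvec{x}}_{\tau +dt}\) because \({\mathbb {P}}^{\dagger }\) and \({\mathbb {Q}}\) share the same conditional density.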

3.2 Interpolated dynamics and Fisher information

We can consider not only the m-geodesic between \({\mathbb {P}}\) and \({\mathbb {P}}^{\dagger }\) in Lemma 2 but also the e-geodesic between \({\mathbb {P}}\) and \({\mathbb {P}}^{\dagger }\). This geodesic can be discussed in terms of the interpolated dynamics, which were essentially introduced in Refs. [40, 54]. By considering this interpolation, we obtain an expression of the entropy production rate by the Fisher metric, which provides a trade-off relation between the entropy production rate and the fluctuation of any observable. We start with the definition of interpolated dynamics as follows.

Definition 3

Dynamics described by the following continuity equation are called interpolated dynamics for two vector fields \(\varvec{\nu }_t ({\varvec{x}}) =\mu ({\varvec{F}}_{t}({\varvec{x}}) - T \nabla \ln P_{t}({\varvec{x}}))\) and \(\varvec{\nu }'_t ({\varvec{x}}) \in {\mathbb {R}}^d\),

where \(\theta \in [0,1]\) is an interpolation parameter.

Remark 9

The corresponding over-damped Langevin equation of interpolated dynamics is given by

Definition 4

The path probability density of interpolated dynamics for two vector fields \(\varvec{\nu }_{\tau } ({\varvec{x}}_{\tau }) =\mu ({\varvec{F}}_{\tau }({\varvec{x}}_{\tau }) - T \nabla \ln P_{\tau }({\varvec{x}}_{\tau }))\) and \(\varvec{\nu }'_{\tau } ({\varvec{x}}_{\tau })\) is defined as

Remark 10

The parameter \(\theta \) quantifies the difference between interpolated dynamics and the original Fokker–Planck dynamics (1) because \(\theta =0\) provides the transition probability density \({\mathbb {T}}^{0}_{\tau ; \varvec{\nu }'_{\tau }}({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) = {\mathbb {T}}_{\tau }({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) \) and the forward path probability density \({\mathbb {P}}^{0}_{\varvec{\nu }'_{\tau }}={\mathbb {P}}\) of the original Fokker–Planck dynamics \(\partial _t P_t({\varvec{x}}) = - \nabla \cdot (\varvec{\nu }_t({\varvec{x}}) P_t({\varvec{x}}))\).

Remark 11

Because the path probability density is given by

the parameter \(\theta \) can be regarded as a theta coordinate system for the exponential family in information geometry [13]. By neglecting O(dt), \(\ln {\mathbb {P}}^{\theta }_{\varvec{\nu }'_{\tau }}({\varvec{x}}_{\tau +dt},{\varvec{x}}_{\tau })\) can be rewritten as

which implies that \({\mathbb {P}}^{\theta }_{\varvec{\nu }'_{\tau }}\) gives the e-geodesic between two points \({\mathbb {P}}^{0}_{\varvec{\nu }'_{\tau }}={\mathbb {P}}\) and \({\mathbb {P}}^{1}_{\varvec{\nu }'_{\tau }}\).

We next consider the backward path probability density \({\mathbb {P}}^{\dagger }\) in terms of interpolated dynamics. The backward path probability density \({\mathbb {P}}^{\dagger }\) can be regarded as \({\mathbb {P}}^{1}_{-\varvec{\nu }_{\tau }}\) as follows.

Lemma 4

The backward path probability density \({\mathbb {P}}^{\dagger }({\varvec{x}}_{\tau +dt},{\varvec{x}}_{\tau })\) is given by

Proof

The backward path probability density \({\mathbb {P}}^{\dagger }({\varvec{x}}_{\tau +dt},{\varvec{x}}_{\tau })\) is calculated as

where we used \(\ln [P_{\tau +dt} ({\varvec{x}}_{\tau +dt}) /P_{\tau }({\varvec{x}}_{\tau })] = ({\varvec{x}}_{\tau + dt}-{\varvec{x}}_{\tau }) \cdot \nabla \ln P_{\tau }({\varvec{x}}_{\tau }) + O(dt)\). \(\square \)

Lemma 4 implies that the path probability density of interpolated dynamics \({\mathbb {P}}^{\theta }_{-\varvec{\nu }_{\tau }}\) gives the e-geodesic between \({\mathbb {P}}\) and \({\mathbb {P}}^{\dagger }\).

We discuss an information-geometric interpretation of the entropy production based on \({\mathbb {P}}^{\theta }_{- \varvec{\nu }_{\tau }}\). To discuss it, we introduce the following lemma proposed in Ref. [55].

Lemma 5

Let \(\theta \in [0,1]\) and \(\theta ' \in [0,1]\) be two interpolation parameters. The Kullback–Leibler divergence between \({\mathbb {P}}^{\theta }_{\varvec{\nu }_{\tau }'}\) and \({\mathbb {P}}^{\theta '}_{\varvec{\nu }_{\tau }'}\) is given by

Proof

The quantity \(\ln [{\mathbb {T}}^{\theta }_{\tau ;\varvec{\nu }_{\tau }'}({\varvec{x}}_{\tau +dt}\mid {\varvec{x}}_{\tau } )/{\mathbb {T}}^{\theta '}_{\tau ;\varvec{\nu }_{\tau }'}({\varvec{x}}_{\tau +dt}\mid {\varvec{x}}_{\tau } )]\) is calculated as

Thus, the Kullback–Leibler divergence is calculated as

\(\square \)

Remark 12

Let \(g_{\theta (\varvec{\nu }_{\tau }') \theta (\varvec{\nu }_{\tau }')}({\mathbb {P}})\) be the Fisher information of the interpolation parameter \(\theta \) for \({\mathbb {P}}^{\theta }_{\varvec{\nu }_{\tau }'}\) defined as

Information-geometrically, this Fisher information \(g_{\theta (\varvec{\nu }_{\tau }') \theta (\varvec{\nu }_{\tau }')} ({\mathbb {P}})\) can be regarded as a particular Riemannian metric called the Fisher metric [13] at point \({\mathbb {P}}\). If we consider \({\mathbb {P}}^{\theta }_{\varvec{\nu }_{\tau }'} ={\mathbb {P}}^{0}_{\varvec{\nu }_{\tau }'}={\mathbb {P}}\) and \({\mathbb {P}}^{\theta '}_{\varvec{\nu }_{\tau }'} = {\mathbb {P}}^{\Delta \theta }_{\varvec{\nu }_{\tau }'}\) in Lemma 5, the Fisher metric is given by

Based on Lemma 5, we obtain an information-geometric interpretation of the entropy production, which was essentially obtained in Refs. [40, 54].

Theorem 6

Let \(\theta \in [0,1]\) and \(\theta ' \in [0,1]\) be any interpolation parameters. The entropy production rate \(\sigma _{\tau }\) for original Fokker–Planck dynamics (1) is given by

The entropy production \(\Sigma (\tau +dt; \tau )\) for original Fokker–Planck dynamics (1) is also given by

In terms of the Fisher information, the entropy production \(\Sigma (\tau +dt; \tau )\) for original Fokker–Planck dynamics (1) is given by half of the Fisher metric,

Proof

From Lemma 5, the Kullback–Leibler divergence \(D_\textrm{KL}({\mathbb {P}}_{- \varvec{\nu }_{\tau }}^{\theta }\Vert {\mathbb {P}}_{- \varvec{\nu }_{\tau }}^{\theta '})\) is calculated as

Thus, Eqs. (40) and (41) hold. If we consider \({\mathbb {P}}_{- \varvec{\nu }_{\tau }}^{\theta } = {\mathbb {P}}_{- \varvec{\nu }_{\tau }}^{0} ={\mathbb {P}}\) and \({\mathbb {P}}_{- \varvec{\nu }_{\tau }}^{\theta '} = {\mathbb {P}}_{- \varvec{\nu }_{\tau }}^{\Delta \theta }\) for Eq. (43) and use Eq. (38), we obtain Eq. (42). \(\square \)

Remark 13

The entropy production can be regarded as half of the Fisher metric, and thus the entropy production can also be a particular Riemannian metric of differential geometry. Based on the Fisher metric, we can introduce the square of the line element \(ds^2_\textrm{path}\) defined as \(ds^2_\textrm{path} = g_{\theta (-\varvec{\nu }_{\tau }) \theta (-\varvec{\nu }_{\tau })} ({\mathbb {P}}) d\theta ^2 = 2 \Sigma (\tau +dt ;\tau ) d\theta ^2 +O(dt^2)\), where \(\theta \) is the interpolation parameter for \({\mathbb {P}}_{- \varvec{\nu }_{\tau }}^{\theta }\) and this line element is introduced on the corresponding e-geodesic.
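The relation between the entropy production and the Fisher metric can be illustrated in a linear example. The sketch below is a hedged illustration, not the paper's construction: it assumes that the interpolated transition density is the Gaussian short-time density with drift \(\mu {\varvec{F}}_{\tau } + \theta (\varvec{\nu }'_{\tau } - \varvec{\nu }_{\tau })\), which reduces to the original dynamics at \(\theta = 0\), and it takes \(\varvec{\nu }'_{\tau } = -\varvec{\nu }_{\tau }\), a one-dimensional force \(F(x) = -kx\), and \(P_{\tau } = {\mathcal {N}}(0, s)\). Under these assumptions the divergence between two interpolated path densities equals \((\theta - \theta ')^2 \sigma _{\tau } dt\), and a finite-difference estimate of the Fisher information of \(\theta \) gives \(2 \sigma _{\tau } dt\). All names are hypothetical.

```python
import math


def kl_gauss2(cov_p, cov_q):
    """D_KL(P || Q) for zero-mean bivariate Gaussians with 2x2 covariances."""
    (p11, p12), (p21, p22) = cov_p
    (q11, q12), (q21, q22) = cov_q
    det_p = p11 * p22 - p12 * p21
    det_q = q11 * q22 - q12 * q21
    trace = (q22 * p11 - q12 * p21 - q21 * p12 + q11 * p22) / det_q
    return 0.5 * (trace - 2.0 + math.log(det_q / det_p))


mu, T, k, s, dt = 1.0, 1.0, 2.0, 2.0, 1e-3
c = mu * (T / s - k)            # mean local velocity nu(x) = c * x
sigma = c * c * s / (mu * T)    # entropy production rate of the example
D = 2.0 * mu * T * dt           # noise variance of the transition density


def interpolated_cov(theta):
    """Covariance of (x_{tau+dt}, x_tau) under the interpolated path density,
    assuming drift mu*F + theta*(nu' - nu) with nu' = -nu."""
    a_theta = 1.0 + (-mu * k - 2.0 * theta * c) * dt
    return ((a_theta * a_theta * s + D, a_theta * s), (a_theta * s, s))


def kl_theta(theta, theta_prime):
    return kl_gauss2(interpolated_cov(theta), interpolated_cov(theta_prime))


d_theta = 0.1
fisher = 2.0 * kl_theta(0.0, d_theta) / d_theta ** 2  # finite-difference estimate
print(f"KL(0,1)={kl_theta(0.0, 1.0):.6e}, sigma*dt={sigma * dt:.6e}")
print(f"Fisher estimate={fisher:.6e}, 2*sigma*dt={2.0 * sigma * dt:.6e}")
```

In this linear Gaussian example the divergence is exactly quadratic in \(\theta - \theta '\), so the finite-difference estimate does not depend on the step \(\Delta \theta \).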

3.3 Thermodynamic uncertainty relations

The link between the entropy production rate and the Fisher metric leads to thermodynamic trade-off relations between the entropy production rate and the fluctuation of any observable. A particular case of thermodynamic trade-off relations was proposed as the thermodynamic uncertainty relations [56, 57]. In Refs. [24, 40, 43, 58,59,60], several links between the Cramér–Rao bound and generalizations of the thermodynamic uncertainty relations have been discussed. Here, we newly propose a generalization of the thermodynamic uncertainty relations based on the fact that the entropy production is regarded as half of the Fisher information in Theorem 6. To obtain the proposed thermodynamic uncertainty relation, we start with the Cramér–Rao bound.

Lemma 7

Let \(R({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) \in {\mathbb {R}}\) be any function of states \({\varvec{x}}_{\tau +dt} \in {\mathbb {R}}^d\) and \({\varvec{x}}_{\tau } \in {\mathbb {R}}^d\). The Fisher metric \(g_{\theta (\varvec{\nu }_{\tau }') \theta (\varvec{\nu }_{\tau }')}({\mathbb {P}})\) is bounded by the Cramér–Rao bound as follows,

where \(|_{\theta =0}\) stands for substitution \(\theta =0\), \({\mathbb {E}}_{{\mathbb {P}}^{\theta }_{ \varvec{\nu }_{\tau }'}} [R]\) is the expected value defined as

and the deviation \(\Delta _{{\mathbb {P}}^{\theta }_{\varvec{\nu }_{\tau }'}} R ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })\) is defined as \(\Delta _{{\mathbb {P}}^{\theta }_{\varvec{\nu }_{\tau }'}} R ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) = R ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) - {\mathbb {E}}_{{\mathbb {P}}^{\theta }_{\varvec{\nu }_{\tau }'}} [R] \).

Proof

The Fisher metric \(g_{\theta (\varvec{\nu }_{\tau }') \theta (\varvec{\nu }_{\tau }')}({\mathbb {P}})\) is calculated as

From the Cauchy–Schwarz inequality, we obtain the Cramér–Rao bound,

where we used \(\int d {\varvec{x}}_{\tau +dt} d {\varvec{x}}_{\tau } \partial _{\theta } {\mathbb {P}}^{\theta }_{\varvec{\nu }_{\tau }'} ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) =0\). \(\square \)

By plugging Eq. (42) into the Cramér–Rao bound, the proposed generalized thermodynamic uncertainty relation, which is a trade-off relation between the entropy production rate \(\sigma _{\tau }\) and the fluctuation of any observable \(\textrm{Var} [R]={\mathbb {E}}_{{\mathbb {P}}}[(\Delta _{{\mathbb {P}}} R )^2]\), can be obtained as follows.

Proposition 8

Let \(R({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) \in {\mathbb {R}}\) be any function of states \({\varvec{x}}_{\tau +dt} \in {\mathbb {R}}^d\) and \({\varvec{x}}_{\tau } \in {\mathbb {R}}^d\) such that \( {\mathbb {T}}_{\tau } ({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) R({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) \rightarrow 0\) at infinity of \({\varvec{x}}_{\tau +dt}\). The entropy production rate \(\sigma _{\tau }\) is bounded by the generalized thermodynamic uncertainty relation,

where \(\textrm{Var} [R]\) is the variance defined as \(\textrm{Var} [R] ={\mathbb {E}}_{{\mathbb {P}}}[(\Delta _{{\mathbb {P}}} R )^2]\) and \({\mathcal {J}} [\varvec{\tilde{R}}] \) is the generalized current defined as

Here, \(\nabla _{ {\varvec{x}}}\) is a gradient operator for \({\varvec{x}} \in {\mathbb {R}}^d\).

Proof

By plugging \({\mathbb {P}}^{\theta }_{\varvec{\nu }_{\tau }'}= {\mathbb {P}}_{-\varvec{\nu }_{\tau }}^{\theta }\) into Lemma 7, we obtain

where we used \({\mathbb {P}}_{-\varvec{\nu }_{\tau }}^{0}={\mathbb {P}}\). From Eq. (42), we obtain \(g_{\theta (-\varvec{\nu }_{\tau }) \theta (-\varvec{\nu }_{\tau })}({\mathbb {P}}) = 2 \sigma _{\tau } dt + O(dt^2)\). \( \partial _{\theta } {\mathbb {E}}_{{\mathbb {P}}_{-\varvec{\nu }_{\tau }}^{\theta }} [R] \Bigg |_{\theta =0}\) is calculated as

where we used \(\int d {\varvec{x}}_{\tau +dt} \nabla _{ {\varvec{x}}_{\tau +dt}} [{\mathbb {T}}_{\tau } ({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) R ({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau })]=0\) because of the assumption \( {\mathbb {T}}_{\tau } ({\varvec{x}}_{\tau +dt} \mid {\varvec{x}}_{\tau }) R({\varvec{x}}_{\tau +dt}, {\varvec{x}}_{\tau }) \rightarrow 0\) at infinity of \({\varvec{x}}_{\tau +dt}\). From \(\left( \partial _{\theta } {\mathbb {E}}_{{\mathbb {P}}^{\theta }_{-\varvec{\nu }_{\tau }}} [R] \right) ^2\Bigg |_{\theta =0} = 4 ({\mathcal {J}} [\varvec{\tilde{R}}])^2 (dt)^2\) and \(g_{\theta (-\varvec{\nu }_{\tau }) \theta (-\varvec{\nu }_{\tau })}({\mathbb {P}}) = 2 \sigma _{\tau } dt + O(dt^2)\), Eq. (51) can be rewritten as Eq. (48). \(\square \)

Remark 14

In Ref. [58], a special case of the proposed thermodynamic uncertainty relation (48) was discussed for

where \({\varvec{w}} ({\varvec{x}}_{\tau }) \in {\mathbb {R}}^d\) is any function of \({\varvec{x}}_{\tau }\). In this case, we obtain \(\varvec{\tilde{R}} ({\varvec{x}}_{\tau }) = {\varvec{w}} ({\varvec{x}}_{\tau }) +O(dt)\) and \(\textrm{Var} [R]/dt = 2 \mu T \int d{\varvec{x}}_{\tau } \Vert {\varvec{w}} ({\varvec{x}}_{\tau }) \Vert ^2 P_{\tau }({\varvec{x}}_{\tau })+O(dt)\). Thus, the generalized thermodynamic uncertainty relation Eq. (48) can be rewritten as

This result can also be easily obtained from the Cauchy–Schwarz inequality as follows,
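The special case in Remark 14 can be checked by direct quadrature. The sketch below is illustrative and makes explicit assumptions: the bound is taken in the Cauchy–Schwarz form \(\sigma _{\tau } \ge (\int dx\, w \nu _{\tau } P_{\tau })^2 / (\mu T \int dx\, w^2 P_{\tau })\), which follows from \(\textrm{Var} [R]/dt = 2 \mu T \int dx\, w^2 P_{\tau }\) when the generalized current is \(\int dx\, w \nu _{\tau } P_{\tau }\), and the example is one-dimensional with \(F(x) = -kx\) and \(P_{\tau } = {\mathcal {N}}(0, s)\). The weight functions \(w\) are arbitrary illustrative choices, and all names are hypothetical.

```python
import math


def gaussian_average(f, s, n=20001, width=10.0):
    """Trapezoidal estimate of E[f(x)] for x ~ N(0, s)."""
    half = width * math.sqrt(s)
    h = 2.0 * half / (n - 1)
    total = 0.0
    for i in range(n):
        x = -half + i * h
        weight = 0.5 if i in (0, n - 1) else 1.0
        total += weight * f(x) * math.exp(-x * x / (2.0 * s))
    return total * h / math.sqrt(2.0 * math.pi * s)


mu, T, k, s = 1.0, 1.0, 2.0, 2.0
c = mu * (T / s - k)


def nu(x):
    """Mean local velocity of the assumed example, nu(x) = c * x."""
    return c * x


sigma = gaussian_average(lambda x: nu(x) ** 2, s) / (mu * T)


def tur_bound(w):
    """Lower bound on sigma from the weight function w (assumed form)."""
    current = gaussian_average(lambda x: w(x) * nu(x), s)
    second_moment = gaussian_average(lambda x: w(x) ** 2, s)
    return current ** 2 / (mu * T * second_moment)


weights = {
    "x": lambda x: x,
    "x^3": lambda x: x ** 3,
    "sin x": math.sin,
    "1 + x": lambda x: 1.0 + x,
}
bounds = {name: tur_bound(w) for name, w in weights.items()}
for name, bound in bounds.items():
    print(f"w = {name}: bound = {bound:.4f} <= sigma = {sigma:.4f}")
```

The bound is saturated when \(w\) is proportional to \(\nu _{\tau }\), which is the case \(w(x) = x\) in this example.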

4 Optimal transport theory and entropy production

4.1 \(L^2\)-Wasserstein distance and minimum entropy production

We next discuss a relation between the \(L^2\)-Wasserstein distance in optimal transport theory [36] and the entropy production. We start with the Benamou–Brenier formula [61], which gives the definition of the \(L^2\)-Wasserstein distance.

Definition 5

Let \(P({\varvec{x}})\) and \(Q({\varvec{x}})\) be probability densities at the position \({\varvec{x}} \in {\mathbb {R}}^d\) that satisfy \(\int d{\varvec{x}} P ({\varvec{x}}) = \int d{\varvec{x}} Q ({\varvec{x}})=1\), \(P ({\varvec{x}}) \ge 0\) and \(Q({\varvec{x}}) \ge 0\), where the second-order moments \(\int d{\varvec{x}} \Vert {\varvec{x}} \Vert ^2 P ({\varvec{x}})\) and \(\int d{\varvec{x}} \Vert {\varvec{x}} \Vert ^2 Q ({\varvec{x}})\) are finite. Let \(\Delta \tau \ge 0\) be a non-negative time interval. The \(L^2\)-Wasserstein distance between probability densities P and Q is defined as

where the infimum is taken among all paths \((\varvec{v}_t (\varvec{x}), \mathcal {P}_t (\varvec{x}) )_{\tau \le t \le \tau + \Delta \tau }\) satisfying the continuity equation

with the boundary conditions
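Written out in the notation of this definition, the three displays take the standard Benamou–Brenier form below. This is a reconstruction from the surrounding text, not a quotation. The prefactor \(\Delta \tau \) is an assumption, chosen so that the value does not depend on the duration of the path and so that the bound \(\Sigma \ge [{\mathcal {W}}_2]^2/(\mu T \Delta \tau )\) quoted in Remark 21 follows directly.

\[ {\mathcal {W}}_2(P, Q) = \sqrt{ \inf _{(\varvec{v}_t, \mathcal {P}_t)} \Delta \tau \int _{\tau }^{\tau +\Delta \tau } dt \int d{\varvec{x}} \, \Vert \varvec{v}_t ({\varvec{x}}) \Vert ^2 \mathcal {P}_t ({\varvec{x}}) }, \]

\[ \partial _t \mathcal {P}_t ({\varvec{x}}) = - \nabla \cdot \left( \varvec{v}_t ({\varvec{x}}) \mathcal {P}_t ({\varvec{x}}) \right) , \]

\[ \mathcal {P}_{\tau } ({\varvec{x}}) = P({\varvec{x}}), \qquad \mathcal {P}_{\tau +\Delta \tau } ({\varvec{x}}) = Q({\varvec{x}}). \]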

Remark 15

This definition of the \(L^2\)-Wasserstein distance is consistent with the definition used in the Monge–Kantorovich problem [35]. The definition in the Monge–Kantorovich problem is as follows. Let \(\Pi ({\varvec{x}}, {\varvec{x}}')\) be the joint probability density at positions \({\varvec{x}} \in {\mathbb {R}}^d\) and \({\varvec{x}}' \in {\mathbb {R}}^d\) that satisfies \(\Pi ({\varvec{x}}, {\varvec{x}}') \ge 0\), \(\int d{\varvec{x}}' {\Pi } ({\varvec{x}}, {\varvec{x}}') =P ({\varvec{x}})\), and \(\int d{\varvec{x}} {\Pi } ({\varvec{x}}, {\varvec{x}}') =Q ({\varvec{x}}')\). The \(L^2\)-Wasserstein distance is also defined as

Remark 16

The \(L^2\)-Wasserstein distance is a distance [35], since it is symmetric \({\mathcal {W}}_2(P,Q)= {\mathcal {W}}_2(Q, P)\), non-negative \({\mathcal {W}}_2(P, Q)\ge 0\), and zero \({\mathcal {W}}_2(P, Q)= 0\) if and only if \(P=Q\). The triangle inequality \({\mathcal {W}}_2(P, Q) \le {\mathcal {W}}_2(P, P') + {\mathcal {W}}_2( P', Q)\) for any probability density \(P'({\varvec{x}})\) with a finite second-order moment is satisfied.
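The properties listed in Remark 16 can be checked numerically in one dimension, where the optimal coupling is the monotone (quantile) coupling and \([{\mathcal {W}}_2(P,Q)]^2 = \int _0^1 du \, (F_P^{-1}(u) - F_Q^{-1}(u))^2\). The following is a minimal sketch under that assumption; the Gaussian test densities, the function names, and the midpoint rule are illustrative choices, not part of the original text.

```python
from math import sqrt
from statistics import NormalDist


def w2_gaussian_closed_form(m_p, s_p, m_q, s_q):
    """Closed-form W2 between the 1D Gaussians N(m_p, s_p^2) and N(m_q, s_q^2)."""
    return sqrt((m_p - m_q) ** 2 + (s_p - s_q) ** 2)


def w2_quantile(p, q, n=20000):
    """W2 in 1D from the quantile coupling, with a midpoint rule in u on (0, 1)."""
    total = 0.0
    for i in range(n):
        u = (i + 0.5) / n
        total += (p.inv_cdf(u) - q.inv_cdf(u)) ** 2
    return sqrt(total / n)


# Illustrative densities (assumptions for this sketch).
P = NormalDist(mu=0.0, sigma=1.0)
Q = NormalDist(mu=2.0, sigma=0.5)
R = NormalDist(mu=1.0, sigma=2.0)

w_pq = w2_quantile(P, Q)
w_qp = w2_quantile(Q, P)
w_pr = w2_quantile(P, R)
w_rq = w2_quantile(R, Q)
exact_pq = w2_gaussian_closed_form(0.0, 1.0, 2.0, 0.5)

print(w_pq, exact_pq)          # numerical value vs. closed form
print(w_pq <= w_pr + w_rq)     # triangle inequality through R
```

The quadrature slightly underestimates the tails of the quantile function, so the numerical value agrees with the closed form only up to the discretization error.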

Based on the Benamou–Brenier formula, we can consider the minimum entropy production for the Fokker–Planck equation in terms of the \(L^2\)-Wasserstein distance. This link between the \(L^2\)-Wasserstein distance and the entropy production was initially pointed out in the field of the optimal transport theory (for example, in Refs. [36, 62]). After that, it was also discussed in the context of stochastic thermodynamics [19, 63]. This link has been recently revisited in terms of thermodynamic trade-off relations such as the thermodynamic speed limit [31, 64] and the thermodynamic uncertainty relation [40]. The decomposition of the entropy production rate based on the optimal transport theory has also been proposed in Refs. [31, 39].

For a stochastic process evolving according to the Fokker–Planck equation, the entropy production \(\Sigma (\tau + \Delta \tau ; \tau )\) for a fixed initial probability density \(P_{\tau }\) and a fixed final probability density \(P_{\tau +\Delta \tau }\) is bounded by the \(L^2\)-Wasserstein distance as follows. This result is regarded as a thermodynamic speed limit [31, 64], which is a trade-off relation between the finite time interval \(\Delta \tau \) and the entropy production \(\Sigma (\tau + \Delta \tau ; \tau )\).

Lemma 9

The entropy production \(\Sigma (\tau + \Delta \tau ; \tau )\) for fixed probability densities \(P_{\tau }\) and \(P_{\tau +\Delta \tau }\) is bounded by

The entropy production rate \(\sigma _{\tau }\) is also bounded by

Proof

From the definition of the \(L^2\)-Wasserstein distance, we obtain Eq. (61)

because the time evolution of \(P_t( {\varvec{x}})\) with boundary conditions \(P_{\tau }({\varvec{x}})\) and \(P_{\tau + \Delta t}({\varvec{x}})\) is described by the Fokker–Planck equation \(\partial _{t } P_{t } ({\varvec{x}}) = - \nabla \cdot (\varvec{\nu }_{t} ({\varvec{x}}) P_{t} ({\varvec{x}}))\), which is a kind of continuity equation. By using \(\Delta \tau = \Delta t\), we obtain \([{\mathcal {W}}_2(P_{\tau } , P_{\tau + \Delta t})]^2 \le \mu T (\Delta t)^2 (\sigma _{\tau } + O(\Delta t))\), and thus Eq. (62) holds. \(\square \)

By considering the geometry of the \(L^2\)-Wasserstein distance and introducing the \(L^2\)-Wasserstein path length, we can obtain another lower bound on the entropy production, which is tighter than Eq. (61). This bound was proposed as a tighter version of the thermodynamic speed limit in Ref. [31]. The \(L^2\)-Wasserstein path length is defined as follows.

Definition 6

Let \(t \in {\mathbb {R}}\) and \(s \in {\mathbb {R}}\) indicate time. For a fixed trajectory of probability density \((P_{t})_{ \tau \le t \le \tau +\Delta \tau }\), the Wasserstein path length from time \(t=\tau \) to time \(t=\tau +\Delta \tau \) is defined as

Remark 17

We can obtain \({\mathcal {L}}(\tau +\Delta \tau ; \tau ) \ge {\mathcal {W}}_2(P_{\tau } , P_{\tau + \Delta \tau })\) by using the triangle inequality of the \(L^2\)-Wasserstein distance. Thus, the \(L^2\)-Wasserstein distance \({\mathcal {W}}_2(P_{\tau } , P_{\tau + \Delta \tau })\) can be regarded as the minimum Wasserstein path length, that is, the length of the geodesic between two points \(P_{\tau }\) and \(P_{\tau + \Delta \tau }\).

Remark 18

In terms of the Wasserstein path length, Eq. (62) can be rewritten as

Theorem 10

The entropy production \(\Sigma (\tau + \Delta \tau ; \tau )\) is bounded by

This lower bound on the entropy production is tighter than the bound Eq. (61) as follows,

Proof

From the Cauchy–Schwarz inequality, we obtain

where we used \(\partial _{s} {\mathcal {L}}_{s} \ge 0\). From Eq. (66), we obtain Eq. (67) as follows,

From the inequality \({\mathcal {L}}(\tau +\Delta \tau ; \tau ) \ge {\mathcal {W}}_2(P_{\tau } , P_{\tau + \Delta \tau })\) and Eq. (67), we obtain Eq. (68). \(\square \)
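The chain of bounds in Theorem 10 can be illustrated on a case where every quantity is available in closed form. The sketch below assumes a one-dimensional harmonic potential force \(F(x) = -kx\) and a Gaussian initial density, so that \(P_t\) stays Gaussian with mean \(m_t\) and standard deviation \(s_t\), the mean local velocity is linear in \(x\), and \(\int dx \, \nu _t^2 P_t = {\dot{m}}_t^2 + {\dot{s}}_t^2\). The parameter values and helper names are illustrative assumptions.

```python
from math import exp, sqrt

# Illustrative parameters (assumptions, not taken from the text).
MU, T, K = 1.0, 1.0, 1.0         # mobility, temperature, stiffness of F(x) = -K x
M0, S0 = 2.0, 0.5                # initial mean and standard deviation
TAU, DTAU, N = 0.0, 1.0, 2000    # initial time, duration, number of time steps


def mean(t):
    return M0 * exp(-MU * K * t)


def std(t):
    return sqrt(T / K + (S0 ** 2 - T / K) * exp(-2.0 * MU * K * t))


def speed_sq(t):
    """Second moment of the mean local velocity: (dm/dt)^2 + (ds/dt)^2."""
    m, s = mean(t), std(t)
    dm = -MU * K * m
    ds = MU * (T - K * s ** 2) / s
    return dm ** 2 + ds ** 2


def trapezoid(f, a, b, n):
    h = (b - a) / n
    return h * (0.5 * f(a) + sum(f(a + i * h) for i in range(1, n)) + 0.5 * f(b))


# Entropy production, Wasserstein path length, and Wasserstein distance.
entropy_production = trapezoid(speed_sq, TAU, TAU + DTAU, N) / (MU * T)
path_length = trapezoid(lambda t: sqrt(speed_sq(t)), TAU, TAU + DTAU, N)
w2 = sqrt((mean(TAU + DTAU) - mean(TAU)) ** 2 + (std(TAU + DTAU) - std(TAU)) ** 2)

bound_path = path_length ** 2 / (MU * T * DTAU)   # path-length bound
bound_w2 = w2 ** 2 / (MU * T * DTAU)              # Wasserstein-distance bound

print(entropy_production, bound_path, bound_w2)
```

In this example the relaxation path is curved in the \((m, s)\) plane and the speed is not constant in time, so both inequalities are strict.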

4.2 Geometric decomposition of entropy production rate

Based on Eq. (66), we can obtain a decomposition of the entropy production rate into two non-negative parts, namely the housekeeping entropy production rate and the excess entropy production rate. This decomposition was substantially obtained in Ref. [31], and discussed in Refs. [39, 40] from the viewpoint of the thermodynamic uncertainty relation.

Here, we define the housekeeping entropy production rate and the excess entropy production rate based on Eq. (66).

Definition 7

The excess entropy production rate is defined as

and the housekeeping entropy production rate is defined as

Remark 19

The excess entropy production rate is non-negative by definition, \(\sigma ^\textrm{ex}_{\tau } \ge 0\). The housekeeping entropy production rate is non-negative \(\sigma ^{\textrm{hk}}_{\tau } \ge 0\) because of Eq. (66). The entropy production rate is decomposed as \(\sigma _{\tau } = \sigma ^{\textrm{ex}}_{\tau } + \sigma ^\textrm{hk}_{\tau }\).

Remark 20

The excess entropy production rate becomes zero \(\sigma ^{\textrm{ex}}_{\tau } = 0\) if and only if the system is in the steady-state \(\partial _t P_t ({\varvec{x}})=0\), or equivalently \(P_{\tau } = P_{\tau + dt}\) for an infinitesimal time interval dt. The decomposition of the entropy production rate such that the excess entropy production rate becomes zero in the steady state is not unique, and another example of the decomposition of the entropy production rate has been obtained in the study of the steady-state thermodynamics [65]. Our definitions of the excess entropy production rate and the housekeeping entropy production rate are generally different from the excess entropy production rate and the housekeeping entropy production rate proposed in Ref. [65]. We discussed this difference in Ref. [40].

Remark 21

The thermodynamic speed limit can be tightened by using the excess entropy production rate as follows.

The housekeeping entropy production rate does not contribute to the thermodynamic speed limit, and the lower bound becomes tighter if \(\sigma ^\textrm{hk}_{t}= 0\). The lower bound \(\Sigma (\tau +\Delta \tau ; \tau ) = [{\mathcal {W}}_2(P_{\tau }, P_{\tau + \Delta \tau })]^2 /(\mu T \Delta \tau )\) is achieved when

and \(\sigma ^{\textrm{hk}}_{t} = 0\) for \(\tau \le t \le \tau + \Delta \tau \). This implies that the geodesic in the space of the \(L^2\)-Wasserstein distance is related to the optimal protocol that minimizes the entropy production in a finite time. The condition \(\sigma ^{\textrm{hk}}_{t} = 0\) is related to the condition of the potential force as discussed below. Thus, if we want to minimize the entropy production in a finite time, the probability density \(P_t\) should be changed along the geodesic in the space of the \(L^2\)-Wasserstein distance by the potential force [31].

To discuss a physical interpretation of this decomposition, we focus on another expression of the optimal protocol proposed in Ref. [61].

Lemma 11

The \(L^2\)-Wasserstein distance is given by

Here, \(\varvec{\nu }^*_t ({\varvec{x}}) \in {\mathbb {R}}^d\) is a vector field, namely an optimal mean local velocity, that satisfies

with a potential \(\phi _t ({\varvec{x}}) \in {\mathbb {R}}\) and a time evolution of \(P_t({\varvec{x}})\) that connects \(P_{\tau }({\varvec{x}})\) and \(P_{\tau + \Delta \tau }({\varvec{x}})\).

Proof

Using the method of Lagrange multipliers, the optimization problem in Eq. (56) with the constraint \(\partial _t P_t({\varvec{x}}) = - \nabla \cdot (\varvec{\nu }^*_t ({\varvec{x}})P_t({\varvec{x}}) )\) can be solved by the calculus of variations for \((P_t)_{\tau<t< \tau +\Delta \tau }\) and \((\varvec{\nu }^*_t)_{\tau \le t< \tau +\Delta \tau }\),

with the Lagrangian

where \(\partial _{P_t({\varvec{x}})}\) and \(\partial _{(\varvec{\nu }^*_t({\varvec{x}}))_i}\) stand for functional derivatives, \((\varvec{\nu }^*_t({\varvec{x}}))_i\) stands for i-th component of \(\varvec{\nu }^*_t({\varvec{x}})\), and \(\phi _t ({\varvec{x}})\) is the Lagrange multiplier. The variations \(\partial _{(\varvec{\nu }^*_t({\varvec{x}}))_i}{\mathbb {L}} (\{P_t \}, \{\varvec{\nu }^*_t \}, \{ \phi _t \}) =0\) and \(\partial _{P_t({\varvec{x}})}{\mathbb {L}} (\{P_t \}, \{\varvec{\nu }^*_t \}, \{ \phi _t \}) =0\) in Eq. (79) are calculated as \(\varvec{\nu }^*_t ({\varvec{x}}) = \nabla \phi _t ({\varvec{x}})\) and \(\partial _t \phi _t ({\varvec{x}}) = \Vert \varvec{\nu }^*_t ({\varvec{x}}) \Vert ^2/2 - (\nabla \phi _t ({\varvec{x}}) \cdot \varvec{\nu }^*_t ({\varvec{x}}))= - \Vert \nabla \phi _t ({\varvec{x}}) \Vert ^2/2\), respectively. \(\square \)
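The variational step can be made explicit with one possible form of the Lagrangian. The overall factor \(1/2\) and the sign convention for the multiplier \(\phi _t\) are assumptions, chosen so that the stationarity conditions reproduce the two results stated in the proof.

\[ {\mathbb {L}} (\{P_t \}, \{\varvec{\nu }^*_t \}, \{ \phi _t \}) = \int _{\tau }^{\tau +\Delta \tau } dt \int d{\varvec{x}} \left[ \frac{\Vert \varvec{\nu }^*_t ({\varvec{x}}) \Vert ^2}{2} P_t ({\varvec{x}}) + \phi _t ({\varvec{x}}) \left( \partial _t P_t ({\varvec{x}}) + \nabla \cdot ( \varvec{\nu }^*_t ({\varvec{x}}) P_t ({\varvec{x}}) ) \right) \right] . \]

Integrating by parts in \({\varvec{x}}\) and in \(t\), with \(P_{\tau }\) and \(P_{\tau +\Delta \tau }\) held fixed and \(P_t\) vanishing at infinity, moves the derivatives onto \(\phi _t\):

\[ {\mathbb {L}} = \int _{\tau }^{\tau +\Delta \tau } dt \int d{\varvec{x}} \left[ \frac{\Vert \varvec{\nu }^*_t \Vert ^2}{2} - \partial _t \phi _t - \nabla \phi _t \cdot \varvec{\nu }^*_t \right] P_t + (\text {terms fixed by the boundary conditions}). \]

The two stationarity conditions then read

\[ \partial _{(\varvec{\nu }^*_t)_i} {\mathbb {L}} = \left[ (\varvec{\nu }^*_t)_i - \partial _{x_i} \phi _t \right] P_t = 0, \qquad \partial _{P_t} {\mathbb {L}} = \frac{\Vert \varvec{\nu }^*_t \Vert ^2}{2} - \partial _t \phi _t - \nabla \phi _t \cdot \varvec{\nu }^*_t = 0, \]

which give \(\varvec{\nu }^*_t = \nabla \phi _t\) wherever \(P_t > 0\) and \(\partial _t \phi _t = - \Vert \nabla \phi _t \Vert ^2/2\), in agreement with the expressions quoted in the proof.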

From this optimal protocol, the excess entropy production rate and the housekeeping entropy production rate can be regarded as a potential contribution and a non-potential contribution to the entropy production rate, respectively. This fact is given by the following theorem.

Theorem 12

Let \(\phi _{\tau }({\varvec{x}}) \in {\mathbb {R}}\) be the potential that satisfies

where \(\varvec{\nu }^*_{\tau }({\varvec{x}}) \) is the optimal mean local velocity defined as \(\varvec{\nu }^*_{\tau }({\varvec{x}}) = \nabla \phi _{\tau }({\varvec{x}})\), and \(\partial _{\tau } P_{\tau }({\varvec{x}}) =- \nabla \cdot ( \varvec{\nu }_{\tau } ({\varvec{x}}) P_{\tau } ({\varvec{x}}) )\) is the Fokker–Planck equation with the mean local velocity \(\varvec{\nu }_{\tau } ({\varvec{x}}) = \mu ( {\varvec{F}}_{\tau } ({\varvec{x}}) - T \nabla \ln P_{\tau }({\varvec{x}}) )\). We assume that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. The excess entropy production rate is given by

and the housekeeping entropy production rate is given by

Proof

From Lemma 11, the excess entropy production rate is calculated as

where \(\varvec{\nu }_{\tau }^*({\varvec{x}})\) is the optimal mean local velocity that satisfies \(\varvec{\nu }_{\tau }^*({\varvec{x}}) = \nabla \phi _{\tau } ({\varvec{x}})\) and \(\partial _{\tau } P_{\tau } ({\varvec{x}}) =- \nabla \cdot ( \varvec{\nu }_{\tau }^*({\varvec{x}}) P_{\tau } ({\varvec{x}}) )\). Here, the condition of Eq. (78) is not needed in the definition of the excess entropy production rate because Eq. (84) does not include the time evolution of \(\phi _{\tau } ({\varvec{x}})\). By combining the Fokker–Planck equation \(\partial _{\tau } P_{\tau } ({\varvec{x}}) =- \nabla \cdot ( \varvec{\nu }_{\tau } ({\varvec{x}}) P_{\tau } ({\varvec{x}}) )\) with the condition \(\partial _{\tau } P_{\tau } ({\varvec{x}}) = -\nabla \cdot ( \varvec{\nu }_{\tau }^*({\varvec{x}}) P_{\tau } ({\varvec{x}}) )\), we obtain Eq. (81). We can calculate the quantity \(\int d{\varvec{x}} \varvec{\nu }_{\tau }^*({\varvec{x}}) \cdot (\varvec{\nu }_{\tau }({\varvec{x}}) - \varvec{\nu }_{\tau }^*({\varvec{x}})) P_{\tau } ({\varvec{x}})\) as follows.

where we used \(\nabla \cdot ( (\varvec{\nu }_{\tau } ({\varvec{x}}) - \varvec{\nu }^*_{\tau } ({\varvec{x}}) ) P_{\tau } ({\varvec{x}}) ) =0\) in Eq. (81), and \(\int d{\varvec{x}} \nabla \cdot [ \phi _{\tau } ({\varvec{x}}) (\varvec{\nu }_{\tau }({\varvec{x}}) - \varvec{\nu }_{\tau }^*({\varvec{x}})) P_{\tau } ({\varvec{x}})] = 0\) because of the assumption that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. Thus, the housekeeping entropy production rate is calculated as

\(\square \)

Remark 22

If we introduce the inner product \(\langle {\varvec{a}}, {\varvec{b}} \rangle _{P_{\tau }/(\mu T)} =\int d{\varvec{x}} [ {\varvec{a}} ({\varvec{x}}) \cdot {\varvec{b}} ({\varvec{x}}) ] P_{\tau }({\varvec{x}})/(\mu T)\) for \({\varvec{a}}({\varvec{x}}) \in {\mathbb {R}}^d\) and \({\varvec{b}}({\varvec{x}}) \in {\mathbb {R}}^d\), the entropy production rate, the excess entropy production rate, and the housekeeping entropy production rate are given by \(\sigma _{\tau } = \langle \varvec{\nu }_{\tau }, \varvec{\nu }_{\tau } \rangle _{P_{\tau }/(\mu T)}\), \(\sigma _{\tau }^{\textrm{ex}} = \langle \varvec{\nu }^*_{\tau } , \varvec{\nu }^*_{\tau } \rangle _{P_{\tau }/(\mu T)}\), and \(\sigma _{\tau }^{\textrm{hk}} = \langle \varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau } , \varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau } \rangle _{P_{\tau }/(\mu T)}\), respectively. Thus, the decomposition \(\sigma _{\tau } =\sigma ^{\textrm{ex}}_{\tau } + \sigma ^{\textrm{hk}}_{\tau }\) can be regarded as the Pythagorean theorem

where \(\varvec{\nu }^*_{\tau }({\varvec{x}})\) is orthogonal to \(\varvec{\nu }_{\tau }({\varvec{x}}) - \varvec{\nu }^*_{\tau }({\varvec{x}})\) because of \(\langle \varvec{\nu }^*_{\tau } , \varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau } \rangle _{P_{\tau }/(\mu T)}=0\). Because the orthogonality is based on \(\varvec{\nu }^*_{\tau } ({\varvec{x}}) = \nabla \phi _{\tau } ({\varvec{x}})\) and \(\nabla \cdot ( (\varvec{\nu }_{\tau } ({\varvec{x}}) - \varvec{\nu }_{\tau }^* ({\varvec{x}}) ) P_{\tau } ({\varvec{x}}) ) =0\), this decomposition is related to the Helmholtz–Hodge decomposition, and the mean local velocity is given by \(\varvec{\nu }_{\tau }({\varvec{x}}) =\nabla \phi _{\tau } ({\varvec{x}}) + {\varvec{v}}_{\tau } ({\varvec{x}})\) for \({\varvec{v}}_{\tau } ({\varvec{x}}) \in {\mathbb {R}}^d\) that satisfies \(\nabla \cdot ({\varvec{v}}_{\tau } ({\varvec{x}})P_{\tau } ({\varvec{x}}) ) =0\). The decomposition of the entropy production rate based on \(\nabla \cdot ({\varvec{v}}_{\tau } ({\varvec{x}})P_{\tau } ({\varvec{x}}) ) =0\) was discussed in Ref. [66] without considering optimal transport theory.

Remark 23

Let us consider the case that a force \({\varvec{F}}_{\tau }({\varvec{x}})\) is a potential force \({\varvec{F}}_{\tau }({\varvec{x}}) = -\nabla U_{\tau }({\varvec{x}})\) where \(U_{\tau }({\varvec{x}}) \in {\mathbb {R}}\) is a potential. In this case, the mean local velocity is given by \(\varvec{\nu }_{\tau } ( {\varvec{x}}) = \nabla (- \mu U_{\tau }({\varvec{x}}) - \mu T \ln P_{\tau } ({\varvec{x}}) )\) and \(\phi _{\tau }({\varvec{x}})\) can be \(\phi _{\tau }({\varvec{x}}) = - \mu U_{\tau }({\varvec{x}}) - \mu T \ln P_{\tau } ({\varvec{x}})\). Thus, we obtain \(\varvec{\nu }_{\tau }({\varvec{x}}) = \varvec{\nu }^*_{\tau }({\varvec{x}})\), \(\sigma ^{\textrm{ex}}_{\tau } = \sigma _{\tau }\) and \(\sigma ^{\textrm{hk}}_{\tau } = 0\) for a potential force. This fact implies that the excess entropy production rate and the housekeeping entropy production rate quantify contributions of a potential force and a non-potential force to the entropy production rate, respectively.
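The orthogonal decomposition of Remarks 22 and 23 can be checked on a small two-dimensional example. The sketch assumes an isotropic Gaussian density \(P = N({\varvec{0}}, s^2 I)\) and a force \({\varvec{F}}({\varvec{x}}) = -k {\varvec{x}} + \omega J {\varvec{x}}\), where \(J\) is the rotation by \(90^{\circ }\). The radial part of the mean local velocity is then a gradient, while the rotational part \({\varvec{v}} = \mu \omega J {\varvec{x}}\) satisfies \(\nabla \cdot ({\varvec{v}} P) = 0\). All names and parameter values are illustrative assumptions.

```python
from math import sqrt

# Illustrative parameters (assumptions); S is the standard deviation of P.
MU, T, K, OMEGA, S = 1.0, 1.0, 1.0, 0.7, 1.5

# 3-point Gauss-Hermite rule for a standard normal variable (exact up to degree 5).
NODES = (-sqrt(3.0), 0.0, sqrt(3.0))
WEIGHTS = (1.0 / 6.0, 2.0 / 3.0, 1.0 / 6.0)

RADIAL = MU * (T / S ** 2 - K)   # coefficient of the gradient part


def nu(x, y):
    """Mean local velocity mu * (F - T grad ln P)."""
    return (RADIAL * x - MU * OMEGA * y, RADIAL * y + MU * OMEGA * x)


def nu_star(x, y):
    """Gradient part: grad phi with phi = RADIAL * (x^2 + y^2) / 2."""
    return (RADIAL * x, RADIAL * y)


def rest(x, y):
    """Non-gradient part nu - nu_star."""
    vx, vy = nu(x, y)
    gx, gy = nu_star(x, y)
    return (vx - gx, vy - gy)


def inner(a, b):
    """Inner product <a, b> weighted by P / (mu T), by tensor-product quadrature."""
    total = 0.0
    for zx, wx in zip(NODES, WEIGHTS):
        for zy, wy in zip(NODES, WEIGHTS):
            x, y = S * zx, S * zy
            ax, ay = a(x, y)
            bx, by = b(x, y)
            total += wx * wy * (ax * bx + ay * by)
    return total / (MU * T)


sigma = inner(nu, nu)
sigma_ex = inner(nu_star, nu_star)
sigma_hk = inner(rest, rest)
cross = inner(nu_star, rest)

print(sigma, sigma_ex + sigma_hk, cross)
```

Setting `OMEGA = 0.0` recovers the potential-force case of Remark 23, where the housekeeping part vanishes.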

Based on the expression in Theorem 12, we also obtain a thermodynamic uncertainty relation for the excess entropy production rate [39, 40], which was substantially obtained in Refs. [24, 67] for the entropy production rate.

Theorem 13

Let \(r({\varvec{x}}) \in {\mathbb {R}}\) be any time-independent function of \({\varvec{x}}\in {\mathbb {R}}^d\). We assume that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. The entropy production rate is bounded by

where \({\mathbb {E}}_{P_{\tau }} (r)\) is the expected value defined as

Proof

The quantity \(\partial _{\tau } {\mathbb {E}}_{P_{\tau }} (r)\) is calculated as

where we used \(\int d {\varvec{x}} \nabla \cdot (\varvec{\nu }^*_{\tau } ({\varvec{x}}) P_{\tau }({\varvec{x}}) r({\varvec{x}}))=0\) because of the assumption that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. From the Cauchy–Schwarz inequality, we obtain

By combining Eq. (91) with \(\sigma _{\tau } \ge \sigma _{\tau }^{\textrm{ex}}\), we obtain Eq. (88). \(\square \)
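As a quick sanity check of Theorem 13, the bound can be evaluated for two simple observables. The sketch assumes a one-dimensional Gaussian \(P_{\tau } = N(m, s^2)\) driven by the potential force \(F(x) = -kx\), for which \(\sigma ^{\textrm{ex}}_{\tau } = \sigma _{\tau } = ({\dot{m}}^2 + {\dot{s}}^2)/(\mu T)\). The observables \(r(x) = x\) and \(r(x) = x^2\), and all numerical values, are illustrative assumptions.

```python
# Illustrative parameters (assumptions, not taken from the text).
MU, T, K = 1.0, 1.0, 1.0   # mobility, temperature, stiffness of F(x) = -K x
M, S = 2.0, 0.5            # instantaneous mean and standard deviation of P_tau

dm = -MU * K * M                   # time derivative of the mean
ds = MU * (T - K * S ** 2) / S     # time derivative of the standard deviation

# Potential force: the excess rate equals the full entropy production rate.
sigma_ex = (dm ** 2 + ds ** 2) / (MU * T)

# r(x) = x: E[r] = M and |grad r|^2 = 1.
bound_linear = dm ** 2 / (MU * T * 1.0)

# r(x) = x^2: E[r] = M^2 + S^2 and E[|grad r|^2] = 4 (M^2 + S^2).
d_mean_r = 2.0 * M * dm + 2.0 * S * ds
grad_sq = 4.0 * (M ** 2 + S ** 2)
bound_quadratic = d_mean_r ** 2 / (MU * T * grad_sq)

print(sigma_ex, bound_linear, bound_quadratic)
```

Neither observable saturates the bound here, because neither \(\nabla r\) is proportional to the full velocity field \({\dot{m}} + ({\dot{s}}/s)(x - m)\).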

Remark 24

The weaker inequality \(\sigma _{\tau } \ge [\partial _{\tau } {\mathbb {E}}_{P_{\tau }} (r)]^2/[ \mu T \int d{\varvec{x}} \Vert \nabla r({\varvec{x}}) \Vert ^2P_{\tau }({\varvec{x}})]\) can be regarded as the thermodynamic uncertainty relation Eq. (54) for \({\varvec{w}}({\varvec{x}}) = \nabla r({\varvec{x}})\) where the generalized current is calculated as \({\mathcal {J}}[\nabla r({\varvec{x}})] =\partial _{\tau } {\mathbb {E}}_{P_{\tau }} (r)\). Thus, this result is also regarded as a consequence of the Cramér–Rao bound for the path probability density. In the context of optimal transport theory, a mathematically equivalent inequality, namely the Wasserstein–Cramér–Rao bound, was also proposed in Ref. [68].

5 Thermodynamic links between information geometry and optimal transport

5.1 Gradient flow and information geometry in space of probability densities

In terms of the excess entropy production rate, we can obtain a thermodynamic link between information geometry in the space of probability densities and optimal transport theory. To discuss this thermodynamic link, we start with the definition of the pseudo energy \(U_{t}^*({\varvec{x}})\) and the pseudo canonical distribution \(P^{\textrm{pcan}}_t ({\varvec{x}})\) proposed in Ref. [40].

Definition 8

Let \(\phi _t ({\varvec{x}}) \in {\mathbb {R}}\) be a potential which provides the optimal mean local velocity \(\varvec{\nu }^*_{t} ({\varvec{x}}) = \nabla \phi _t ({\varvec{x}}) \in {\mathbb {R}}^d\) for an infinitesimal time such that \(\lim _{\Delta t \rightarrow 0} [{\mathcal {W}}_2 (P_t, P_{t+\Delta t})]^2/(\Delta t)^2 = \int d {\varvec{x}}\Vert \varvec{\nu }^*_{t} ({\varvec{x}}) \Vert ^2 P_t({\varvec{x}})\). The pseudo energy \(U_{t}^*({\varvec{x}}) \in {\mathbb {R}}\) is defined as

and the pseudo canonical distribution \(P^{\textrm{pcan}}_t ({\varvec{x}})\) is defined as

which is a probability density that satisfies \(P^{\textrm{pcan}}_t ({\varvec{x}}) \ge 0\) and \(\int d {\varvec{x}} P^{\textrm{pcan}}_t ({\varvec{x}}) =1\).
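Written out, a form of these two definitions that is consistent with Remark 23 (where \(\phi _{\tau } = - \mu U_{\tau } - \mu T \ln P_{\tau }\) for a potential force) and with the normalization constant used in the proof of Proposition 14 is the following. It is a reconstruction under those two constraints, not a quotation.

\[ U^*_t ({\varvec{x}}) = - \frac{\phi _t ({\varvec{x}})}{\mu } - T \ln P_t ({\varvec{x}}), \qquad P^{\textrm{pcan}}_t ({\varvec{x}}) = \frac{\exp \left[ - U^*_t ({\varvec{x}})/T \right] }{\int d{\varvec{x}}' \exp \left[ - U^*_t ({\varvec{x}}')/T \right] }. \]

With this form, \(\nabla \ln [P_t ({\varvec{x}})/P^{\textrm{pcan}}_t ({\varvec{x}})] = \nabla \ln P_t ({\varvec{x}}) + \nabla U^*_t ({\varvec{x}})/T = - \nabla \phi _t ({\varvec{x}})/(\mu T)\), so the gradient of the log-ratio is proportional to the optimal mean local velocity.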

Remark 25

The pseudo energy can be defined for a non-potential force \({\varvec{F}}_t({\varvec{x}})\) that satisfies \(\nabla \cdot ( ({\varvec{F}}_t({\varvec{x}})+\nabla U^*_t ({\varvec{x}}) ) P_t({\varvec{x}})) = 0\). Thus, the pseudo energy \(U^*_t ({\varvec{x}})\) is not generally unique. If a force \({\varvec{F}}_t({\varvec{x}})\) is given by a potential force \({\varvec{F}}_t({\varvec{x}}) = -\nabla U_t ({\varvec{x}})\), a potential energy can trivially be a pseudo energy \(U_{t}^*({\varvec{x}}) = U_t ({\varvec{x}})\).

The time evolution of \(P_{t}({\varvec{x}})\) is given by a gradient flow expression. The concept of the gradient flow originates from the Jordan–Kinderlehrer–Otto scheme [62]. We rewrite the gradient flow expression in Ref. [69] by using a functional derivative of the Kullback–Leibler divergence for general Markov jump processes [43]. The following proposition is a special case of a gradient flow expression [43] for the Fokker–Planck equation.

Proposition 14

The time evolution of \(P_{t}({\varvec{x}})\) under the Fokker–Planck equation is described by a gradient flow expression,

where \(D_\textrm{KL}(P_t\Vert P^{\textrm{pcan}}_t)\) is the Kullback–Leibler divergence defined as

and \({\textsf{D}} [\cdot ]\) stands for the weighted Laplacian operator defined as

Proof

The functional derivative \(\partial _{P_t({\varvec{x}})} D_\textrm{KL} (P_t \Vert P^{\textrm{pcan}}_t)\) is calculated as

and its gradient is calculated as

where we used \(\nabla \left[ \int d{\varvec{x}} \exp \left[ - U_{t}^*({\varvec{x}})/T\right] \right] =0\). Thus, the optimal mean local velocity provides Eq. (94) as follows,

\(\square \)

Remark 26

The Kullback–Leibler divergence is calculated as \(D_\textrm{KL}(P_t\Vert P^{\textrm{pcan}}_t)= \int d{\varvec{x}} P_t({\varvec{x}})\ln [P_t({\varvec{x}})/P^\textrm{pcan}_t({\varvec{x}})]\) by using the normalization of the probability \(\int d{\varvec{x}} P_t({\varvec{x}}) =\int d{\varvec{x}} P^{\textrm{pcan}}_t({\varvec{x}}) =1\). Note that the functional derivative for \( \int d{\varvec{x}} P_t({\varvec{x}})\ln [P_t({\varvec{x}})/P^\textrm{pcan}_t({\varvec{x}})] \) is different from the functional derivative for Eq. (95), and the functional derivative \(\partial _{P_t({\varvec{x}})} D_\textrm{KL} (P_t \Vert P^{\textrm{pcan}}_t) = \ln [ P_t({\varvec{x}})/P^{\textrm{pcan}}_t({\varvec{x}})]\) is defined for Eq. (95).

Remark 27

If a force is given by a potential force \({\varvec{F}}_t ({\varvec{x}}) =- \nabla U({\varvec{x}})\) with a time-independent potential energy \(U({\varvec{x}})\in {\mathbb {R}}\), a pseudo energy becomes a potential energy \(U^*_t({\varvec{x}})=U({\varvec{x}})\) and a pseudo canonical distribution \(P^{\textrm{pcan}}_t({\varvec{x}})\) becomes an equilibrium distribution \(P^{\textrm{pcan}}_t({\varvec{x}}) = P^\textrm{eq}({\varvec{x}})\), which satisfies the condition that \(P_t({\varvec{x}}) \rightarrow P^{\textrm{eq}}({\varvec{x}})\) in the limit \(t \rightarrow \infty \). In this case, the gradient flow expression Eq. (94) is given by \(\partial _t P_t ({\varvec{x}}) = {\textsf{D}} [ \partial _{P_t({\varvec{x}})} D_\textrm{KL} (P_t \Vert P^{ \textrm{eq}})]\) which describes a relaxation to an equilibrium distribution \(P^{\textrm{eq}}({\varvec{x}})\).

Remark 28

If a pseudo canonical distribution is given by a time-independent distribution \(P^\textrm{pcan}_t (\varvec{x}) =P^\textrm{st} (\varvec{x})\), the Fokker–Planck equation can be rewritten as the heat equation,

or equivalently,

where \(\textrm{div}_{P_t} \) is the operator defined as

with \(\sqrt{|g|}= P_t (\varvec{x})\). Because the operator \(\textrm{div}_{\sqrt{| g |}}\) is a generalization of the divergence operator for non-Euclidean space with the absolute value of the determinant of the metric tensor |g|, the Fokker–Planck equation may be regarded as a kind of diffusion equation for \(\partial _{P_t(\varvec{x})} D_\textrm{KL} (P_t \Vert P^\textrm{st})= \ln P_t(\varvec{x}) - \ln P^\textrm{st} (\varvec{x})\). As a consequence of the diffusion process, we may obtain \(\partial _{P_t(\varvec{x})} D_\textrm{KL} (P_t \Vert P^\textrm{st}) = \ln P_t(\varvec{x}) - \ln P^\textrm{st} (\varvec{x}) \rightarrow 0\) in the limit \(t \rightarrow \infty \), which implies the relaxation to a steady state \(P_t(\varvec{x}) \rightarrow P^\textrm{st}(\varvec{x})\).

Based on the gradient flow expression (94), we obtain the following expression of the excess entropy production rate discussed in Ref. [40].

Theorem 15

We assume that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. The excess entropy production rate is given by

Proof

The excess entropy production rate is calculated as

where we used \(\int d {\varvec{x}} \nabla \cdot ( \partial _{P_{\tau }({\varvec{x}})} D_\textrm{KL} (P_{\tau } \Vert P^\textrm{pcan}_{\tau }) (\nabla \phi _{\tau } ({\varvec{x}})) P_{\tau } ({\varvec{x}}) )=0\) because of the assumption that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. \(\square \)

Remark 29

If the pseudo canonical distribution does not depend on time \(P^\textrm{pcan}_{\tau }({\varvec{x}}) = P^{\textrm{st}}({\varvec{x}})\), the non-negativity of the excess entropy production rate is related to the monotonicity of the Kullback–Leibler divergence \( \partial _{\tau } D_\textrm{KL} (P_{\tau } \Vert P^{\textrm{st}}) \le 0\), where \(P^{\textrm{st}}({\varvec{x}})\) is a steady-state distribution that satisfies \(\partial _{\tau } P^{\textrm{st}}({\varvec{x}}) = 0\). Because \(\sigma ^{\textrm{ex}}_{\tau }=0\) if and only if the system is in a steady state \(P_{\tau }({\varvec{x}}) = P^{\textrm{st}}({\varvec{x}})\), the excess entropy production rate can be a Lyapunov function and this monotonicity \(\partial _{\tau } D_\textrm{KL} (P_{\tau } \Vert P^{\textrm{st}}) \le 0\) gives the relaxation to a steady-state distribution \(P_{\tau }({\varvec{x}}) \rightarrow P^\textrm{st}({\varvec{x}})\) in the limit \(\tau \rightarrow \infty \).

Remark 30

This expression of the excess entropy production rate in terms of the Kullback–Leibler divergence provides a link between optimal transport theory and information geometry. As discussed in Ref. [41], the excess entropy production rate can be expressed using the dual coordinate systems for the Kullback–Leibler divergence. By using the dual coordinate systems with an affine transformation, the Kullback–Leibler divergence between P and Q is given by \(D_\textrm{KL}(P\Vert Q) = \varphi ({\eta }_{P} ({\varvec{x}}) ) + \psi ({\theta }_Q ({\varvec{x}})) - \int d {\varvec{x}} {\eta }_P ({\varvec{x}}) \theta _Q ({\varvec{x}})\), where \(\eta _P ({\varvec{x}}) =P({\varvec{x}})- P^{\textrm{st}}({\varvec{x}})\) and \(\theta _Q ({\varvec{x}})= \ln Q({\varvec{x}}) - \ln P^\textrm{st}({\varvec{x}})\) are the eta and theta coordinate systems that satisfy \(\eta _{P^{\textrm{st}}} ({\varvec{x}})= \theta _{P^{\textrm{st}}} ({\varvec{x}})=0\), and \(\varphi ({\eta }_{P} ({\varvec{x}}) ) =D_\textrm{KL}(P\Vert P^{\textrm{st}})\) and \(\psi (\theta _Q ({\varvec{x}})) =D_\textrm{KL}(P^{\textrm{st}}\Vert Q)\) are the dual convex functions, respectively. Thus, if the pseudo canonical distribution does not depend on time \(P^{\textrm{pcan}}_{\tau }({\varvec{x}}) = P^\textrm{st}({\varvec{x}})\), the excess entropy production rate is given by \(\sigma ^{\textrm{ex}}_{\tau } = - \partial _{\tau } \varphi ({\eta }_{P_{\tau }} ({\varvec{x}}) )\).

Remark 31

The relaxation to an equilibrium distribution \(P^\textrm{eq}({\varvec{x}})\) for a time-independent potential force \({\varvec{F}}_{\tau }({\varvec{x}}) = - \nabla U({\varvec{x}})\) was discussed from the viewpoint of information geometry based on the expression of the entropy production rate \(\sigma ^\textrm{ex}_{\tau } = \sigma _{\tau } = - \partial _{\tau } D_\textrm{KL} (P_{\tau } \Vert P^{\textrm{eq}})\) in Refs. [30, 70, 71].
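The identity \(\sigma _{\tau } = - \partial _{\tau } D_\textrm{KL} (P_{\tau } \Vert P^{\textrm{eq}})\) of Remark 31 can be verified numerically in a case where both sides are known in closed form. The sketch assumes a one-dimensional harmonic potential \(U(x) = kx^2/2\) with a Gaussian initial density, so that \(P_{\tau } = N(m_{\tau }, s_{\tau }^2)\) and \(P^{\textrm{eq}} = N(0, T/k)\). The time derivative is taken by a central finite difference. Parameter values and function names are illustrative assumptions.

```python
from math import exp, log, sqrt

# Illustrative parameters (assumptions, not taken from the text).
MU, T, K = 1.0, 1.0, 1.0     # mobility, temperature, stiffness of U(x) = K x^2 / 2
M0, S0 = 2.0, 0.5            # initial mean and standard deviation
S_EQ = sqrt(T / K)           # standard deviation of the equilibrium distribution


def mean(t):
    return M0 * exp(-MU * K * t)


def std(t):
    return sqrt(T / K + (S0 ** 2 - T / K) * exp(-2.0 * MU * K * t))


def kl_to_equilibrium(t):
    """D_KL( N(m_t, s_t^2) || N(0, T/K) ) for 1D Gaussians."""
    m, s = mean(t), std(t)
    return log(S_EQ / s) + (s ** 2 + m ** 2) / (2.0 * S_EQ ** 2) - 0.5


def entropy_production_rate(t):
    """sigma_t = ((dm/dt)^2 + (ds/dt)^2) / (mu T) for the Gaussian solution."""
    m, s = mean(t), std(t)
    dm = -MU * K * m
    ds = MU * (T - K * s ** 2) / s
    return (dm ** 2 + ds ** 2) / (MU * T)


t, h = 0.3, 1e-5
minus_dkl_dt = -(kl_to_equilibrium(t + h) - kl_to_equilibrium(t - h)) / (2.0 * h)
sigma = entropy_production_rate(t)

print(minus_dkl_dt, sigma)
```

The two printed numbers agree up to the finite-difference error, and the divergence decreases monotonically along the relaxation.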

Near steady state, the Fisher metric for a probability density is also related to the entropy production rate. A thermodynamic interpretation of the Fisher metric was discussed in Ref. [11] as a generalization of the Weinhold geometry [7] or the Ruppeiner geometry [8, 10] in a stochastic system near equilibrium. We also examined this Fisher metric for a far-from-equilibrium system in Refs. [22, 24, 29]. To discuss a thermodynamic interpretation of the Fisher metric, we start with the definition of the Fisher information of time for a probability density.

Definition 9

Let \(P_{t} ({\varvec{x}})\) be a probability density of \({\varvec{x}} \in {\mathbb {R}}^d\). The Fisher information of time is defined as

The positive square root \(v_\textrm{info}(t) = \sqrt{ ds^2/ dt^2}\) is called the intrinsic speed.

Remark 32

The Fisher information of time is given by the Taylor expansion of the Kullback–Leibler divergence as follows.

If we consider a time-dependent parameter \(\theta \in {\mathbb {R}}\), the Fisher information of time is given by

where \(g_{\theta \theta }(P_{t})\) is the Fisher metric defined as \(g_{\theta \theta } (P_{t})= \int d {\varvec{x}} P_{t} ({\varvec{x}}) ( \partial _{\theta } \ln P_{t}({\varvec{x}}))^2\). Thus, the intrinsic speed \(v_\textrm{info}(t)= \sqrt{ ds^2/ dt^2}\) means the speed on the manifold of the probability simplex, where the metric is given by the Fisher metric. This differential geometry is well discussed in information geometry [12].

Remark 33

The relaxation to a steady state can be discussed in terms of the monotonicity of the intrinsic speed (or the monotonicity of the Fisher information of time) \(\partial _t v_\textrm{info}(t) \le 0\, \, (\textrm{or}~\partial _t (v_\text {info}(t))^2 \le 0)\), which is valid if a force \({\varvec{F}}_t({\varvec{x}})\) is time-independent. When a force \({\varvec{F}}_t({\varvec{x}})\) depends on time, the upper bound on \(\partial _t (v_\textrm{info} (t))^2\) cannot be zero. The upper bound on \(\partial _t (v_\textrm{info} (t))^2\) for the general case \(\partial _t {\varvec{F}}_t({\varvec{x}}) \ne {\varvec{0}}\) was discussed in Ref. [24].

If a pseudo canonical distribution is given by a time-independent steady-state distribution \(P^{\textrm{pcan}}_{\tau } = P^{\textrm{st}}\), the Fisher information of time is related to the excess entropy production rate. This was discussed in Ref. [29] for the entropy production rate with a general rate equation on chemical reaction networks. For the Fokker–Planck equation, we propose the following new relation between the Fisher information of time and the excess entropy production rate as a counterpart of the result in Ref. [29].

Proposition 16

We assume that a pseudo canonical distribution does not depend on time \(P^{\textrm{pcan}}_{\tau } ({\varvec{x}}) = P^{\textrm{st}} ({\varvec{x}})\). We assume that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. Let \(\eta _{P_t} ({\varvec{x}}) = P_t({\varvec{x}})- P^{\textrm{st}} ({\varvec{x}})\) be a difference from a steady-state distribution. The Fisher information of time is given by

where \(O(\eta _{P_t}^3)\) stands for \(O(\eta _{P_t}^3)/(\eta _{P_t} ({\varvec{x}}) )^2 \rightarrow 0\) in the limit \(P_t \rightarrow P^{\textrm{st}}\).

Proof

Let \(\theta _{P_t} ({\varvec{x}}) = \ln P_t({\varvec{x}})- \ln P^{\textrm{st}}({\varvec{x}})\) be the theta coordinate that satisfies \(\theta _{P^{\textrm{st}}}({\varvec{x}})=0\). The Fisher information of time is given by

where we used \(\int d {\varvec{x}} \nabla \cdot ( (\nabla \theta _{P_t} ({\varvec{x}}) ) P_t ({\varvec{x}}) [ \partial _t \theta _{P_t} ({\varvec{x}})]) =0\) because of the assumption that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. The excess entropy production rate is given by

where we used \(\int d {\varvec{x}} \nabla \cdot ((\nabla \theta _{P_t} ({\varvec{x}})) P_t ({\varvec{x}}) \theta _{P_t} ({\varvec{x}}) )=0\) because of the assumption that \(P_{\tau } ({\varvec{x}})\) decays sufficiently rapidly at infinity. Thus, we obtain

\(\square \)

Remark 34

Because the excess entropy production rate is defined in terms of the \(L^2\)-Wasserstein distance \(\sigma _{\tau }^{\textrm{ex}} = [{\mathcal {W}}_2 (P_{\tau }, P_{\tau +dt})]^2/( \mu T dt^2)\) up to O(dt), Eq. (108) implies a relation between the \(L^2\)-Wasserstein distance and the Fisher information of time near steady state \((v_\textrm{info}(\tau ))^2 = - \partial _{\tau } [{\mathcal {W}}_2 (P_{\tau }, P_{\tau +dt})]^2/( 2\mu T dt^2)\) up to \(O(\eta _{P_t}^3)\) and O(dt).
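The near-steady-state character of this relation can be seen in a simple example. The sketch assumes a one-dimensional Gaussian relaxing under the force \(F(x) = -kx\), for which the Fisher information of time is \(({\dot{m}}^2 + 2 {\dot{s}}^2)/s^2\) and \(\sigma ^{\textrm{ex}}_{t} = ({\dot{m}}^2 + {\dot{s}}^2)/(\mu T)\), and compares \((v_\textrm{info}(t))^2\) with \(-\partial _t \sigma ^{\textrm{ex}}_{t}/2\) for a small and a large initial deviation `eps` from the steady state. The function name `ratio` and all parameter values are illustrative assumptions.

```python
from math import exp, sqrt

# Illustrative parameters (assumptions, not taken from the text).
MU, T, K = 1.0, 1.0, 1.0   # mobility, temperature, stiffness of F(x) = -K x


def ratio(eps, t=0.1, h=1e-4):
    """Return (v_info)^2 / (-0.5 * d sigma_ex / dt) for initial deviation eps."""
    m0, s0 = eps, sqrt(T / K) + eps

    def moments(u):
        m = m0 * exp(-MU * K * u)
        s = sqrt(T / K + (s0 ** 2 - T / K) * exp(-2.0 * MU * K * u))
        return m, s, -MU * K * m, MU * (T - K * s ** 2) / s

    def excess_epr(u):
        _, _, dm, ds = moments(u)
        return (dm ** 2 + ds ** 2) / (MU * T)

    _, s, dm, ds = moments(t)
    fisher = (dm ** 2 + 2.0 * ds ** 2) / s ** 2   # Fisher information of time
    half_decay = -0.5 * (excess_epr(t + h) - excess_epr(t - h)) / (2.0 * h)
    return fisher / half_decay


near = ratio(1e-3)   # close to the steady state
far = ratio(0.5)     # far from the steady state

print(near, far)
```

The ratio approaches one as `eps` shrinks and departs from one for a large deviation, which is the behaviour expected from a relation that holds only up to higher-order terms in the deviation.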

Remark 35

We discussed a relation between the Fisher information and the excess entropy production rate proposed by Glansdorff and Prigogine near steady state in Ref. [38] from the viewpoint of the Glansdorff–Prigogine criterion for stability [5, 6, 72, 73]. We remark that the definition of the excess entropy production rate by Glansdorff and Prigogine is slightly different from the definition based on \(L^2\)-Wasserstein distance in this paper.

Remark 36

As discussed in Ref. [41], the expression of \(\sigma ^{\textrm{ex}}_{t} = - \int d {\varvec{x}} [\partial _t \eta _{P_t} ({\varvec{x}}) ]\theta _{P_t} ({\varvec{x}})\) in Eq. (110) implies that the time derivative of the eta coordinate system \(\partial _t \eta _{P_t}({\varvec{x}})\) corresponds to the thermodynamic flow and the theta coordinate \(-\theta _{P_t}({\varvec{x}})\) corresponds to the conjugated thermodynamic force, respectively. The expression of the Fisher information of time in terms of the thermodynamic flow and the conjugated thermodynamic force \((v_\textrm{info}(t))^2 = \int d {\varvec{x}} [\partial _t \eta _{P_t} ({\varvec{x}})][ \partial _t \theta _{P_t} ({\varvec{x}})]\) has been substantially obtained in Ref. [22]. The gradient of the thermodynamic force \(\nabla \theta _{P_t} ({\varvec{x}})\) is also regarded as the thermodynamic force because the gradient is given by the linear combination of the thermodynamic force at position \({\varvec{x}}+ \Delta {\varvec{x}}\) and position \({\varvec{x}}\) for the infinitesimal distance \(\Delta {\varvec{x}}\). The quantity \(\mu T P^{\textrm{st}} ({\varvec{x}})\) is also regarded as the Onsager coefficient near equilibrium because Eq. (110) is the quadratic function of the thermodynamic force \(\nabla \theta _{P_t} ({\varvec{x}})\) with proportionality coefficient \(\mu T P^{\textrm{st}} ({\varvec{x}})\). The gradient flow expression of the Fokker–Planck equation Eq. (94) is given by the weighted Laplacian operator Eq. (96) where this weight is regarded as the Onsager coefficient near equilibrium. Based on the quadratic expression in Eq. (110), we can also consider a geometry where the weight of the Onsager coefficient is a metric. We used the weight of the generalized Onsager coefficient in Ref. [29] to define the excess entropy production rate for general Markov processes based on optimal transport theory, and discussed a geometric interpretation of the excess entropy production rate.

By using the Fisher information of time, the information-geometric speed limit discussed in Refs. [11, 22, 24, 29] can be obtained in parallel with the derivation of the thermodynamic speed limit Eq. (68). The information-geometric speed limit provides a lower bound on the quantity \(\int _{\tau }^{\tau +\Delta \tau } dt (v_\textrm{info}(t))^2\). The quantity \(\int _{\tau }^{\tau +\Delta \tau } dt (v_\textrm{info}(t))^2\) can be regarded as the thermodynamic cost because this quantity is related to the change of the excess entropy production rate \(\int _{\tau }^{\tau +\Delta \tau } dt (v_\textrm{info}(t))^2 = (\sigma ^{\textrm{ex}}_{\tau }- \sigma ^{\textrm{ex}}_{\tau +\Delta \tau }) /2 + O(\eta _{P_t}^3)\) near steady state by using Eq. (108).

Theorem 17

The quantity \(\int _{\tau }^{\tau +\Delta \tau } dt (v_\textrm{info}(t))^2\) is bounded by

where \({\mathcal {D}}(P_{\tau }, P_{\tau + \Delta \tau })\) is twice the Bhattacharyya angle \(\zeta ^{\textrm{B}}\) defined as

Proof

From the Cauchy–Schwarz inequality, we obtain

Thus, the tighter lower bound is obtained as

To solve the minimization of \(\int _{\tau }^{\tau +\Delta \tau } dt (v_\textrm{info}(t))^2\) under the constraint \(\int d {\varvec{x}} P_{t} ({\varvec{x}})=1\) with fixed \(P_{\tau }\) and \(P_{\tau + \Delta \tau }\), we consider the Euler–Lagrange equation

for \(\tau< t < \tau + \Delta \tau \) with the Lagrangian

The Euler–Lagrange equation can be rewritten as

whose general solution is given by \(\sqrt{ P_t({\varvec{x}})} =\alpha ({\varvec{x}}) \cos ( \sqrt{\phi }/2 (t- \beta ({\varvec{x}})))\) for \(\alpha ({\varvec{x}}) \in {\mathbb {R}}\) and \(\beta ({\varvec{x}}) \in {\mathbb {R}}\). Imposing the constraint \(\int d {\varvec{x}} P_{t} ({\varvec{x}})=1\) with fixed \(P_{\tau }\) and \(P_{\tau + \Delta \tau }\) on this solution provides the optimal solution that minimizes \(\int _{\tau }^{\tau +\Delta \tau } dt (v_\textrm{info}(t))^2\) under the constraint,

where the normalization of the probability is satisfied for \(\tau \le t \le \tau + \Delta \tau \),

Thus, the weaker lower bound is calculated as

\(\square \)

Remark 37

\({\mathcal {D}}(P_{\tau }, P_{\tau + \Delta \tau })\) is regarded as the geodesic distance on the surface of a hyper-sphere of radius 2. This interpretation of the Bhattacharyya angle is related to the fact that information geometry can be regarded as the geometry of a hyper-sphere surface of radius 2, because the square of the line element is obtained from the Fisher metric as \(ds^2 =\int d{\varvec{x}} (2 d\sqrt{P_t({\varvec{x}})})^2\) with the constraint \(\int d{\varvec{x}} (\sqrt{P_t({\varvec{x}})})^2 =1\). The Bhattacharyya angle \(\zeta ^{\textrm{B}}\) is given by the inner product of unit vectors on the hyper-sphere, \(\cos \zeta ^{\textrm{B}} = \int d{\varvec{x}} (\sqrt{P_{\tau }({\varvec{x}})} \sqrt{P_{\tau + \Delta \tau }({\varvec{x}})})\).
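The hyper-sphere picture can be checked numerically on a discrete state space. The following is a minimal sketch, not part of the original derivation. It assumes that the bound of Theorem 17 takes the form \({\mathcal {D}}^2/\Delta \tau \), which is what the Cauchy–Schwarz step combined with the geodesic minimization gives. It also assumes that the optimal path is the great-circle interpolation of \(\sqrt{P_t}\). The distributions `p0`, `p1`, the interval `delta_tau` and all function names are hypothetical choices made for illustration.

```python
import numpy as np

def bhattacharyya_angle(p, q):
    """zeta_B = arccos(sum_i sqrt(p_i * q_i)) for discrete distributions."""
    return np.arccos(np.clip(np.sum(np.sqrt(p * q)), -1.0, 1.0))

def geodesic_path(p, q, s):
    """Great-circle interpolation of sqrt(P) between sqrt(p) and sqrt(q), s in [0, 1].

    Requires p != q so that the angle is nonzero.
    """
    zeta = bhattacharyya_angle(p, q)
    root = (np.sin((1.0 - s) * zeta) * np.sqrt(p)
            + np.sin(s * zeta) * np.sqrt(q)) / np.sin(zeta)
    return root ** 2

def linear_path(p, q, s):
    """Straight-line mixture of the two distributions, used only for comparison."""
    return (1.0 - s) * p + s * q

def cost(path, p, q, delta_tau, n_steps=2000):
    """Finite-difference estimate of the time integral of v_info(t)^2.

    Uses v_info^2 = 4 * sum_i (d sqrt(P_i) / dt)^2, the discrete analogue of
    ds^2 = sum_i (2 d sqrt(P_i))^2.
    """
    s_grid = np.linspace(0.0, 1.0, n_steps + 1)
    roots = np.array([np.sqrt(path(p, q, s)) for s in s_grid])
    dt = delta_tau / n_steps
    d_root = np.diff(roots, axis=0) / dt
    return float(np.sum(4.0 * np.sum(d_root ** 2, axis=1)) * dt)

p0 = np.array([0.7, 0.2, 0.1])
p1 = np.array([0.1, 0.3, 0.6])
delta_tau = 2.0

D = 2.0 * bhattacharyya_angle(p0, p1)   # twice the Bhattacharyya angle
bound = D ** 2 / delta_tau              # assumed form of the lower bound
cost_geodesic = cost(geodesic_path, p0, p1, delta_tau)
cost_linear = cost(linear_path, p0, p1, delta_tau)
```

In this sketch `cost_geodesic` agrees with `bound` up to discretization error, while the linear mixture path gives a strictly larger value, as expected for a non-geodesic path.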

Remark 38

The quantity \(\int _{\tau }^{\tau +\Delta \tau } dt v_\textrm{info}(t)\) is called the thermodynamic length, proposed in Ref. [11] as a generalization of the result in Ref. [9]. The thermodynamic length is bounded from below as \(\int _{\tau }^{\tau +\Delta \tau } dt v_\textrm{info}(t) \ge {\mathcal {D}}(P_{\tau }, P_{\tau + \Delta \tau })\) for the fixed initial distribution \(P_{\tau }\) and the fixed final distribution \(P_{\tau + \Delta \tau }\). The minimization of the thermodynamic length near equilibrium for a large time interval \(\Delta \tau \) is related to an optimal protocol that minimizes the quadratic cost representing an observable fluctuation [11, 20, 74].

From the Cramér–Rao bound, the intrinsic speed is also related to the speed of the observable. From the viewpoint of thermodynamics, this fact was discussed in Ref. [24] for a time-independent observable, and in Ref. [26] for a time-dependent observable.

Definition 10

Let \(r({\varvec{x}}) \in {\mathbb {R}}\) be a time-independent observable, i.e., \(\partial _t r({\varvec{x}})=0\). The speed of the observable \(v_r(t)\) is defined as

where \({\mathbb {E}}_{P_t} [r]= \int d{\varvec{x}} r({\varvec{x}}) P_t({\varvec{x}})\) and \(\textrm{Var}_{P_t} [r]= {\mathbb {E}}_{P_t} [ (\Delta r)^2]\) with \(\Delta r({\varvec{x}}) = r({\varvec{x}})- {\mathbb {E}}_{P_t} [r]\).

Remark 39

The speed of the observable can be regarded as the degree of the expected value’s change \(\mid \partial _t {\mathbb {E}}_{P_t} [r] \mid \), which is normalized by its standard deviation \(\sqrt{\textrm{Var}_{P_t} [r]}\).

Lemma 18

For any \(r({\varvec{x}}) \in {\mathbb {R}}\), the speed of the observable \(v_r(t)\) is generally bounded by the intrinsic speed \(v_\textrm{info}(t)\),

Proof

The Fisher information of time \([v_\textrm{info}(t)]^2\) is the Fisher metric for the parameter \(\theta =t\). As discussed in Lemma 7, the Cramér–Rao bound for the parameter \(\theta =t\) is given by

where we used the Cauchy–Schwarz inequality and \(\int d{\varvec{x}} \partial _t P_t({\varvec{x}})=0\). By taking the square root of each side, we obtain the bound stated in Lemma 18. \(\square \)
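The bound of Lemma 18 can be illustrated on a discrete state space. The sketch below assumes the forms suggested by Remark 39 and by the Fisher information of time, namely \(v_r(t) = \mid \partial _t {\mathbb {E}}_{P_t} [r] \mid / \sqrt{\textrm{Var}_{P_t} [r]}\) and \((v_\textrm{info}(t))^2 = \sum _i (\partial _t P_t(i))^2 / P_t(i)\). The exponentially tilted path `tilted`, its parameters and the random observables are hypothetical test data, not taken from the paper.

```python
import numpy as np

def intrinsic_speed(p, dp):
    """v_info = sqrt(sum_i (dP_i/dt)^2 / P_i), square root of the Fisher information of time."""
    return float(np.sqrt(np.sum(dp ** 2 / p)))

def observable_speed(r, p, dp):
    """v_r = |d/dt E[r]| / sqrt(Var[r]) for a time-independent observable r."""
    mean = np.sum(r * p)
    var = np.sum((r - mean) ** 2 * p)
    return float(abs(np.sum(r * dp)) / np.sqrt(var))

def tilted(weights, g, t):
    """Hypothetical test path with P_t(i) proportional to weights_i * exp(t * g_i)."""
    unnorm = weights * np.exp(t * g)
    return unnorm / unnorm.sum()

rng = np.random.default_rng(0)
weights = rng.random(6) + 0.1
g = rng.normal(size=6)

t, h = 0.3, 1e-5
p = tilted(weights, g, t)
# Central finite difference for the time derivative of the distribution.
dp = (tilted(weights, g, t + h) - tilted(weights, g, t - h)) / (2 * h)

v_info = intrinsic_speed(p, dp)
speeds = [observable_speed(rng.normal(size=6), p, dp) for _ in range(100)]
v_g = observable_speed(g, p, dp)  # r = g is proportional to the score of this path
```

For this particular path the score \(\partial _t \ln P_t\) equals \(g - {\mathbb {E}}_{P_t}[g]\), so the observable `g` saturates the bound, while generic observables move strictly more slowly than `v_info`.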

We propose here that the intrinsic speed \(v_\textrm{info}(t)\) also provides an upper bound on the excess entropy production rate. This fact was essentially pointed out in Refs. [29, 38].

Proposition 19

The excess entropy production rate \(\sigma ^{\textrm{ex}}_t\) is bounded as follows.

where \(\theta _{P_t}({\varvec{x}})\) is the theta coordinate system defined as \(\theta _{P_t}({\varvec{x}}) = \ln P_t({\varvec{x}}) - \ln P^{\textrm{pcan}}_t ({\varvec{x}})\).

Proof

The excess entropy production rate is given by

where we used \(\int d{\varvec{x}} \partial _t P_t({\varvec{x}})=0\). From the Cauchy–Schwarz inequality, we obtain

By taking the square root of each side, we obtain Eq. (125). \(\square \)

Remark 40

Proposition 19 implies that the excess entropy production rate \(\sigma ^{\textrm{ex}}_t\) is generally bounded by the intrinsic speed \(v_\textrm{info}(t)\). From the bound (125), the excess entropy production rate vanishes, \(\sigma ^{\textrm{ex}}_t=0\), if the intrinsic speed vanishes, \(v_\textrm{info}(t)=0\). This result is consistent with the fact that the excess entropy production rate is zero in a steady state.

5.2 Excess entropy production and information geometry in space of path probability densities

Here we propose that the excess entropy production rate, which is given by the \(L^2\)-Wasserstein distance in optimal transport theory, can also be obtained from the projection theorem in the space of path probability densities, analogously to the entropy production rate. This projection theorem for the excess entropy production rate was essentially obtained in Ref. [44] for the general Markov process. This result also gives another link between information geometry in the space of path probability densities and optimal transport theory.

We start with the expressions of the entropy production rate, the excess entropy production rate and the housekeeping entropy production rate by the Kullback–Leibler divergence between the path probability densities of interpolated dynamics \({\mathbb {P}}_{\varvec{\nu }_{\tau }'}^{\theta }\) defined in Definition 4.

Proposition 20

The entropy production rate \(\sigma _{\tau }\), the excess entropy production rate \(\sigma _{\tau }^{\textrm{ex}}\) and the housekeeping entropy production rate \(\sigma _{\tau }^{\textrm{hk}}\) for original Fokker–Planck dynamics (1) are given by

where \(\varvec{\nu }^*_{\tau }({\varvec{x}})\) is the optimal mean local velocity defined as \(\varvec{\nu }^*_{\tau }({\varvec{x}}) = \nabla \phi _{\tau }({\varvec{x}})\), and \(\varvec{\nu }_{\tau } ({\varvec{x}})\) is the mean local velocity \(\varvec{\nu }_{\tau } ({\varvec{x}}) = \mu ( {\varvec{F}}_{\tau } ({\varvec{x}}) - T \nabla \ln P_{\tau }({\varvec{x}}) )\) for the original Fokker–Planck equation \(\partial _{\tau } P_{\tau }({\varvec{x}}) =- \nabla \cdot ( \varvec{\nu }_{\tau } ({\varvec{x}}) P_{\tau } ({\varvec{x}}) )\).

Proof

For any \({\varvec{v}} ({\varvec{x}}) \in {\mathbb {R}}^d\) and \({\varvec{v}}' ({\varvec{x}}) \in {\mathbb {R}}^d\), we obtain

Thus, the Kullback–Leibler divergence is calculated as

Therefore, by plugging \(({\varvec{v}}, {\varvec{v}}')=(\varvec{\nu }_{\tau }, {\varvec{0}})\), \(({\varvec{v}}, {\varvec{v}}')=(\varvec{\nu }_{\tau }, \varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau })\), \(({\varvec{v}}, {\varvec{v}}')=(\varvec{\nu }_{\tau }^*, {\varvec{0}})\), \(({\varvec{v}}, {\varvec{v}}')=(\varvec{\nu }_{\tau }, \varvec{\nu }^*_{\tau })\), \(({\varvec{v}}, {\varvec{v}}')=(\varvec{\nu }_{\tau }- \varvec{\nu }_{\tau }^*, {\varvec{0}})\) into Eq. (133), we obtain

\(\square \)

Remark 41

Proposition 20 implies that the decomposition \(\sigma _{\tau } = \sigma ^\textrm{ex}_{\tau }+ \sigma ^\textrm{hk}_{\tau }\) originates from the generalized Pythagorean theorems

which is consistent with the Pythagorean theorem in Remark 22.
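At the level of velocity fields, the same decomposition can be seen from an orthogonality relation. The following worked step is a sketch under two assumptions that are not restated in this section. First, that the three rates take the standard quadratic forms \(\sigma _{\tau } = (\mu T)^{-1}\int d{\varvec{x}} \Vert \varvec{\nu }_{\tau } \Vert ^2 P_{\tau }\), \(\sigma ^\textrm{ex}_{\tau } = (\mu T)^{-1}\int d{\varvec{x}} \Vert \varvec{\nu }^*_{\tau } \Vert ^2 P_{\tau }\) and \(\sigma ^\textrm{hk}_{\tau } = (\mu T)^{-1}\int d{\varvec{x}} \Vert \varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau } \Vert ^2 P_{\tau }\). Second, that \(P_{\tau }\) decays sufficiently rapidly at infinity so that boundary terms vanish.

\[
\begin{aligned}
\int d{\varvec{x}}\, P_{\tau }\, \varvec{\nu }^*_{\tau } \cdot (\varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau })
&= \int d{\varvec{x}}\, P_{\tau }\, \nabla \phi _{\tau } \cdot (\varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau }) \\
&= - \int d{\varvec{x}}\, \phi _{\tau }\, \nabla \cdot \left[ P_{\tau } (\varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau }) \right] = 0, \\
\int d{\varvec{x}}\, \Vert \varvec{\nu }_{\tau } \Vert ^2 P_{\tau }
&= \int d{\varvec{x}}\, \Vert \varvec{\nu }^*_{\tau } \Vert ^2 P_{\tau }
 + \int d{\varvec{x}}\, \Vert \varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau } \Vert ^2 P_{\tau } .
\end{aligned}
\]

The first two lines use \(\varvec{\nu }^*_{\tau } = \nabla \phi _{\tau }\), integration by parts and \(\nabla \cdot [ P_{\tau } (\varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau }) ] = 0\). The last line follows by expanding \(\Vert \varvec{\nu }^*_{\tau } + (\varvec{\nu }_{\tau } - \varvec{\nu }^*_{\tau }) \Vert ^2\) and dropping the vanishing cross term. Dividing by \(\mu T\) then gives \(\sigma _{\tau } = \sigma ^\textrm{ex}_{\tau }+ \sigma ^\textrm{hk}_{\tau }\) under the stated assumptions.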

Based on the projection theorem for the Pythagorean theorem in Remark 41, we obtain expressions of the excess entropy production rate and the housekeeping entropy production rate by the minimization problem of the Kullback–Leibler divergence.

Proposition 21

We assume that \(P_{\tau }({\varvec{x}}_{\tau })\) decays sufficiently rapidly at infinity. The excess entropy production rate and the housekeeping entropy production rate are given by

where \({\mathcal {M}}_\textrm{ZD} ({\mathbb {P}})\) is the zero-divergence manifold defined as

and \({\mathcal {M}}_\textrm{G} ({\mathbb {P}})\) is the gradient manifold defined as

Proof

Let \(\varvec{\nu }^*_{\tau }({\varvec{x}}_{\tau })= \nabla \phi _{\tau } ({\varvec{x}}_{\tau })\) be the optimal mean local velocity. For any \({\mathbb {P}}^{1}_{{\varvec{v}}} \in {\mathcal {M}}_\textrm{ZD} ({\mathbb {P}})\), we obtain the generalized Pythagorean theorem,

where we used Eq. (133) and

because \(\nabla \cdot [ P_{\tau } ({\varvec{x}}_{\tau }) (\varvec{\nu }_{\tau }({\varvec{x}}_{\tau }) -\varvec{\nu }^*_{\tau }({\varvec{x}}_{\tau }) - {\varvec{v}}({\varvec{x}}_{\tau })) ]= 0\) and \(P_{\tau }({\varvec{x}}_{\tau })\) decays sufficiently rapidly at infinity. Because \(D_\textrm{KL}({\mathbb {P}}^{1}_{\varvec{\nu }_{\tau }-\varvec{\nu }^*_{\tau }} \Vert {\mathbb {P}}^{1}_{{\varvec{v}}} ) \ge 0\), we obtain \(\inf _{{\mathbb {Q}} \in {\mathcal {M}}_\textrm{ZD} ({\mathbb {P}}) } D_\textrm{KL}({\mathbb {P}}\Vert {\mathbb {Q}}) = D_\textrm{KL}({\mathbb {P}}\Vert {\mathbb {P}}^{1}_{\varvec{\nu }_{\tau }-\varvec{\nu }^*_{\tau }} )\) and Eq. (139) from the generalized Pythagorean theorem Eq. (143).

For any \({\mathbb {P}}^{1}_{\nabla r} \in {\mathcal {M}}_\textrm{G} ({\mathbb {P}})\), we obtain the generalized Pythagorean theorem,

where we used Eq. (133) and

because \(\nabla \cdot [ P_{\tau } ({\varvec{x}}_{\tau }) (\varvec{\nu }_{\tau }({\varvec{x}}_{\tau })-\varvec{\nu }^*_{\tau }({\varvec{x}}_{\tau })) ]= 0\) and \(P_{\tau }({\varvec{x}}_{\tau })\) decays sufficiently rapidly at infinity. Because \( D_\textrm{KL}({\mathbb {P}}^{1}_{\varvec{\nu }^*_{\tau }} \Vert {\mathbb {P}}^{1}_{\nabla r} ) \ge 0\), we obtain \(\inf _{{\mathbb {Q}} \in {\mathcal {M}}_\textrm{G} ({\mathbb {P}}) } D_\textrm{KL}({\mathbb {P}}\Vert {\mathbb {Q}}) = D_\textrm{KL}({\mathbb {P}}\Vert {\mathbb {P}}^{1}_{\varvec{\nu }^*_{\tau }} )\) and Eq. (140) from the generalized Pythagorean theorem Eq. (145). \(\square \)

Remark 42

Because \({\mathbb {P}}^1_{{\varvec{0}}} \in {\mathcal {M}}_\textrm{G}({\mathbb {P}})\) and \({\mathbb {P}}^1_{{\varvec{0}}} \in {\mathcal {M}}_\textrm{ZD}({\mathbb {P}})\), the path probability \({\mathbb {P}}^1_{{\varvec{0}}}\) is on the intersection of two manifolds \({\mathcal {M}}_\textrm{G}({\mathbb {P}})\) and \({\mathcal {M}}_\textrm{ZD}({\mathbb {P}})\).

Remark 43

The path probability density \({\mathbb {P}}^{\theta }_{\varvec{\nu }^*_{\tau }}\) corresponds to the e-geodesic between \({\mathbb {P}}\) and \({\mathbb {P}}^{1}_{\varvec{\nu }^*_{\tau }}\). The path probability density \({\mathbb {P}}^{\theta }_{\varvec{\nu }_{\tau }- \varvec{\nu }^*_{\tau }}\) corresponds to the e-geodesic between \({\mathbb {P}}\) and \({\mathbb {P}}^{1}_{\varvec{\nu }_{\tau }-\varvec{\nu }^*_{\tau }}\).