Abstract

This paper investigates the problem of finite-time stability (FTS) for a class of delayed genetic regulatory networks with reaction-diffusion terms. In order to fully utilize the system information, a linear parameterization method is proposed. Firstly, by applying Lagrange’s mean-value theorem, the linear parameterization method transforms the nonlinear system into a linear one with time-varying bounded uncertain terms. Secondly, a new generalized convex combination lemma is proposed to relate the bounded uncertainties to their boundaries. Thirdly, sufficient conditions that ensure FTS are established by means of Lyapunov-Krasovskii theory, the convex combination technique, Jensen’s inequality and linear matrix inequalities. Finally, simulation results illustrate the validity of the theoretical results.

Introduction

With deepening research on biological networks, notable results on neural networks, gene networks and related models have been produced in recent years [1,2,3,4,5,6]. In particular, genetic regulatory networks (GRNs) are a powerful tool for studying cell recognition, metabolism and signal transduction during the growth and reproduction of organisms, and they have attracted wide attention from researchers in biomedicine and bioengineering [1, 2]. Two phenomena are common in gene regulation. The first is hysteresis (time delay) caused by the slow conduction of gene regulation [7]. The second is reaction-diffusion, caused by the nonuniform concentration distribution of cell components in various regions, which means that the changes of mRNA and protein concentrations in both time and space must be considered. In recent years, a number of results have been presented on these dynamic characteristics of GRNs [8,9,10,11,12].

Since P. Dorato proposed finite-time stability (FTS) theory in 1961 to describe system performance and state trajectories over a short time interval [13], the study of FTS has attracted wide attention in various fields. Lyapunov theory is a fundamental and widely applicable tool for studying the dynamic characteristics of complex systems, and a large number of results have been built on it [14,15,16,17,18,19]. It is therefore convenient and effective to use Lyapunov theory to study the FTS of GRNs. Several results on FTS analysis of delayed GRNs with reaction-diffusion terms (DGRNs-RDTs) have been proposed in [10,11,12, 20]. By applying a secondary delay-partition approach that divides the time-delay interval into two subintervals, FTS conditions of DGRNs-RDTs are established in [10]. The FTS problem for uncertain DGRNs-RDTs is analyzed by using reconstructed uncertainties in [11, 20]. In [12], FTS criteria of DGRNs-RDTs that depend on the delay and the reaction-diffusion terms are established based on a Lyapunov-Krasovskii functional (LKF) with quad-slope integrations. The common goal of the above works is to obtain stability criteria with low conservatism, which is also the purpose of this paper.

To reduce the conservativeness of stability criteria, delay information is often used to construct different LKFs, from which different stability criteria are obtained. For example, by introducing a fraction of the time delay, a novel stability result is obtained in [21, 22]. In [10, 23], several stability criteria are proposed by nonuniformly decomposing the whole delay interval into multiple subintervals. In [24], following the routine of dynamic programming, a multiple dynamic contraction mapping idea is combined with homeomorphism theory, and a nonuniformly weighting-delay-based method is developed to analyze the stability of neural networks. In the above works, the stability of the system is analyzed by constructing a complex LKF. An alternative and equally effective route is to construct a simple LKF that contains the system information as completely as possible. In addition, the use of the slope information of the regulatory function plays an important role in reducing conservativeness, as shown in [25]. Currently, the slope information of the nonlinear regulatory function is used in FTS analysis by transforming it into a state-dependent inequality such as \((G_1x-F(x))(G_2x-F(x))\le 0\). Namely, only the minimum slope matrix \(G_1\) and the maximum slope matrix \(G_2\) of the nonlinear regulatory function are used in [10,11,12, 20]. To address this, we make fuller use of the nonlinear regulatory function and construct an LKF with low complexity. The deterministic information of the nonlinear regulatory function is converted into polytope information by a linear parameterization method, which increases the utilization of system information in the analysis.

The main contributions of this paper can be summarized as follows: (i) the nonlinear DGRNs-RDTs are transformed into an equivalent linear system with time-varying bounded uncertainties based on the proposed linear parameterization method; (ii) a new generalized convex combination lemma is proposed to deal with the multiple bounded uncertainties; (iii) an FTS criterion for DGRNs-RDTs is established.

The remainder of this paper is organized as follows. In Sect. 2, the problem description and some preliminaries, assumptions and definitions are introduced. In Sect. 3, two main results are proposed, i.e., a linear parameterization method and sufficient FTS conditions for DGRNs-RDTs. In Sect. 4, two numerical examples are given to illustrate the validity of the theoretical results. Conclusions are drawn in Sect. 5.

Problem formulation and preliminaries

Consider the following DGRNs-RDTs:

where \(i\in \mathfrak {I}_n=\{1,2, \dots , n\}\); \(\tilde{\mathfrak {O}}_{i}(t,\varepsilon )\) and \(\tilde{\mathfrak {H}}_{i}(t,\varepsilon )\) are the mRNA and protein concentrations of the i-th node at time t, respectively; \(\varepsilon =(\varepsilon _1, \varepsilon _2,\dots ,\varepsilon _m)^{T}\in \varOmega \subset {\mathbb {R}}^{m}\) is the space variable; \(\varOmega =\{\varepsilon : |\varepsilon _\curlyvee |\le M_\curlyvee , \curlyvee \in \mathfrak {I}_m\}\) is a compact set in \(\mathbb {R}^{m}\) with smooth boundary \(\partial \varOmega \); \(M_\curlyvee >0\) is a constant; \(K_{i_\curlyvee }>0\) and \(K^*_{i_\curlyvee }>0\) are the diffusion coefficients; \(d_i\) is the translation rate; \(a_i\) and \(c_i\) are the degradation rates; and \(b_{ij}\) is defined as follows:

\(q_{i}=\varSigma _{j\in \mathfrak {Z}_{i}} \alpha _{ij}\) stands for the basal metabolic rate, \(\mathfrak {Z}_{i}\) is the set of repressors of gene i, \(f_{j}(s)=(\frac{s}{\beta _{j}})^{\lambda _{j}}/(1+(\frac{s}{\beta _{j}})^{\lambda _{j}})\) denotes the Hill feedback regulation function, where \(\beta _{j}>0\) and \(\lambda _{j}>0\) are constants; \(\mathfrak {A}(t)\) and \(\mathfrak {L}(t)\) are time-varying delays satisfying:

where \(\tilde{\mathfrak {A}}\), \(\tilde{\mathfrak {L}}\), \(\mu _{\mathfrak {A}}\) and \(\mu _{\mathfrak {L}}\) are non-negative real constants.

Let

be the equilibrium point of DGRNs-RDTs (1). The equilibrium \((\mathfrak {O}^*(\varepsilon ),\mathfrak {H}^*(\varepsilon ))\) can be shifted to the origin through the following transformations

Then, DGRNs-RDTs (1) is converted into a compact matrix form:

where \(A=\mathrm {diag}(a_{1},a_{2},\dots ,a_{n})\), \(C=\mathrm {diag}(c_{1},c_{2},\dots ,c_{n})\), \(B=[b_{ij}]\in \mathbb {R}^{n\times n}\), \(D=\mathrm {diag}(d_{1},d_{2},\dots ,d_{n})\), \(K_{\curlyvee }=\mathrm {diag}(K_{1_\curlyvee },K_{2_\curlyvee },\dots ,K_{n_\curlyvee })\), \(K^*_{\curlyvee }=\mathrm {diag}(K^*_{1_\curlyvee },K^*_{2_\curlyvee },\dots ,K^*_{n_\curlyvee })\), \(\mathfrak {O}(t,\varepsilon )=\mathrm {col}(\mathfrak {O}_{1}(t,\varepsilon ),\mathfrak {O}_{2}(t,\varepsilon ),\dots ,\mathfrak {O}_{n}(t,\varepsilon ))\), \(\mathfrak {H}(t,\varepsilon )=\mathrm {col}(\mathfrak {H}_{1}(t,\varepsilon ),\mathfrak {H}_{2}(t,\varepsilon ),\dots ,\mathfrak {H}_{n}(t,\varepsilon ))\), \(F(\bar{\mathfrak {H}}(z,\varepsilon ))=\mathrm {col}(F_{1}(\bar{\mathfrak {H}}_1(z,\varepsilon )),F_{2}(\bar{\mathfrak {H}}_2(z,\varepsilon )),\dots ,F_{n}(\bar{\mathfrak {H}}_n(z,\varepsilon )))\), and \(F_{j}(\bar{\mathfrak {H}}_{j}(t-\mathfrak {A}(t),\varepsilon ))=f_{j}(\tilde{\mathfrak {H}}_{j}(t-{\mathfrak {A}}(t),\varepsilon ))-f_{j}(\mathfrak {H}^*_{j}(\varepsilon ))\).

Assumption 1

[12] The DGRNs-RDTs (3) satisfy the following Dirichlet boundary conditions and initial conditions:

where \(h=\max \{\tilde{\mathfrak {A}},\tilde{\mathfrak {L}}\}\), \(\phi (t,\varepsilon )\), \(\varphi (t,\varepsilon )\in C^{1}([-h,0]\times \varOmega ,\mathbb {R}^n)\), and \(C^1([-h,0]\times \varOmega ,\mathbb {R}^n)\) denotes the Banach space of continuously differentiable functions from \([-h,0]\times \varOmega \) to \(\mathbb {R}^n\), with the norm defined as

Assumption 2

The activation function \(f_{j}(\cdot )\) is a monotonically nondecreasing Hill feedback regulation function, and satisfies the following condition (see [26, 27]):

for any distinct \(\chi _{1}, \chi _{2}\in \mathbb {R}\), where \(g_{j1}\) and \(g_{j2}\) are nonnegative constants.
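As a quick numerical illustration of the slope bounds in Assumption 2, the following Python sketch evaluates the Hill function of Sect. 2 with \(\beta _j=1\), \(\lambda _j=2\) (the values used in the numerical examples) and checks that its slope stays within \([0, 0.65]\). It assumes that condition (4) bounds the difference quotient \((f_j(\chi _1)-f_j(\chi _2))/(\chi _1-\chi _2)\) between \(g_{j1}\) and \(g_{j2}\); the helper names `hill`, `hill_slope` and `difference_quotient` are illustrative and do not come from the paper.

```python
import math


def hill(s, beta=1.0, lam=2.0):
    """Hill function f(s) = (s/beta)^lam / (1 + (s/beta)^lam) for s >= 0."""
    r = (s / beta) ** lam
    return r / (1.0 + r)


def hill_slope(s, beta=1.0, lam=2.0):
    """Derivative f'(s) = lam * r / (s * (1 + r)^2), r = (s/beta)^lam, for s > 0."""
    r = (s / beta) ** lam
    return lam * r / (s * (1.0 + r) ** 2)


def difference_quotient(x1, x2):
    """Slope of the secant through (x1, f(x1)) and (x2, f(x2)), x1 != x2."""
    return (hill(x1) - hill(x2)) / (x1 - x2)


# Scan the slope on a grid of positive concentrations (grid choice is arbitrary).
grid = [k / 1000.0 for k in range(1, 10001)]
max_slope = max(hill_slope(s) for s in grid)

print("largest slope on the grid:", max_slope)
print("secant slope between 1.3 and 0.2:", difference_quotient(1.3, 0.2))
```

For these parameter values the slope \(2s/(1+s^2)^2\) peaks at \(s=1/\sqrt{3}\) with value \(3\sqrt{3}/8\approx 0.6495\), which is consistent with the upper bound \(g_{j2}=0.65\) chosen in the examples.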

Definition 1

[28] For given positive constants \(c_1\), \(c_2\) and T, the trivial solution of DGRNs-RDTs (3) is finite-time-stable, if

for

Remark 1

Under the Dirichlet boundary conditions and the Lipschitz condition on \(f_j(\cdot )\), the existence of the equilibrium point \((\mathfrak {O}^*(\varepsilon ),\mathfrak {H}^*(\varepsilon ))\) can be derived by using fixed point theory (see [29, 30]).

Remark 2

Under normal circumstances, the concentrations inside and outside the cell are inconsistent. To study GRNs more realistically, it is necessary to consider the influence of concentration changes during the movement of genes. Many results have been proposed on DGRNs-RDTs [10,11,12, 20, 31, 32]. This paper studies the DGRNs-RDTs (1), which account for the gradients of the mRNA and protein concentrations through the terms \(\frac{\partial }{\partial \varepsilon _{\curlyvee }}(K_{i_\curlyvee }\frac{\partial \tilde{\mathfrak {O}}_{i}(t, \varepsilon )}{\partial \varepsilon _\curlyvee })\) and \(\frac{\partial }{\partial \varepsilon _{\curlyvee }}(K^*_{i_\curlyvee }\frac{\partial \tilde{\mathfrak {H}}_{i}(t, \varepsilon )}{\partial \varepsilon _\curlyvee })\). In addition, the lower bound \(g_{j1}\) of the slope of the nonlinear regulation function is set to 0 in [11, 12, 20, 31, 32]. In this paper, the slope of the regulation function is only required to satisfy condition (4), which allows a more general treatment of the stability problem.

Main results

In this section, the nonlinear DGRNs-RDTs (3) are transformed into an equivalent linear system with bounded uncertainties, and a new generalized convex combination lemma is proposed. Then, an FTS criterion for the new linear model is established under Dirichlet boundary conditions in terms of LMIs.

A linear parameterization approach

In order to handle the nonlinearity in DGRNs-RDTs (3), we propose the following linear parameterization method based on Lagrange’s mean-value theorem (LMVT).

By the LMVT, there exists a variable \(\xi _{j}(t,\varepsilon )\ge 0\) between \(\tilde{\mathfrak {H}}_{j}(t-\mathfrak {L}(t),\varepsilon )\) and \(\mathfrak {H}^{*}_{j}(\varepsilon )\), \(j\in \mathfrak {I}_n\), such that

where \(f'_j(\xi _{j}(t,\varepsilon ))=\theta _{j}(t,\varepsilon )\).

According to Assumption 2 and the definition of the derivative, it follows that \(g_{j1}\le \theta _{j}(t,\varepsilon )\le g_{j2}\) for all \(t\ge 0\) and \(j\in \mathfrak {I}_n\). The variables \(\theta _{j}(t,\varepsilon )\) are treated as uncertainties in the rest of this paper.
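The mean-value step can be written out explicitly. The display below is a sketch rather than a reproduction of the paper's numbered equation; it assumes that the shifted state is \(\mathfrak {H}_{j}(z,\varepsilon )=\tilde{\mathfrak {H}}_{j}(z,\varepsilon )-\mathfrak {H}^{*}_{j}(\varepsilon )\), where \(z\) stands for the delayed time argument, and it suppresses the arguments of \(\xi _j\) and \(\theta _j\):

\[
F_{j}(\bar{\mathfrak {H}}_{j}(z,\varepsilon ))
= f_{j}(\tilde{\mathfrak {H}}_{j}(z,\varepsilon ))-f_{j}(\mathfrak {H}^{*}_{j}(\varepsilon ))
= f'_{j}(\xi _{j})\,\bigl(\tilde{\mathfrak {H}}_{j}(z,\varepsilon )-\mathfrak {H}^{*}_{j}(\varepsilon )\bigr)
= \theta _{j}\,\mathfrak {H}_{j}(z,\varepsilon ),
\qquad g_{j1}\le \theta _{j}\le g_{j2}.
\]

Stacking the n components gives \(F=\theta _\varepsilon (t)\,\mathfrak {H}\) with a diagonal matrix \(\theta _\varepsilon (t)\), so the nonlinear term \(BF\) becomes \(B\theta _\varepsilon (t)\mathfrak {H}\). The identity is exact: no higher-order terms are discarded, and all nonlinearity is absorbed into the bounded coefficient \(\theta _j\).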

For notational simplicity, we write \(\theta _{\varepsilon _{j}}(t)\), \(\mathfrak {O}_\varepsilon (t)\) and \(\mathfrak {H}_\varepsilon (t)\) in place of \(\theta _{j}(t,\varepsilon )\), \(\mathfrak {O}(t,\varepsilon )\) and \(\mathfrak {H}(t,\varepsilon )\). Then, DGRNs-RDTs (3) can be transformed into

where \(\theta _\varepsilon (t)= \mathrm {diag}(\theta _{\varepsilon _{1}}(t), \theta _{\varepsilon _{2}}(t), \dots , \theta _{\varepsilon _{n}}(t))\).

By the linear parameterization method above, the nonlinear GRN is transformed into an equivalent linear one with bounded uncertain terms.

Remark 3

Linearization refers to finding a linear approximation of a nonlinear function at a fixed point or equilibrium point \(x_0\). The most widely used linearization method is the Taylor series expansion: the function is expanded at the fixed point \(x_0\) and the higher-order terms are ignored, which gives a linear function of the increment, \(f(x)-f(x_0)=(\frac{d(f(x))}{dx})_{x_0}(x-x_0)\), as in [33]. This method requires the variable to stay in a small range near \(x_0\), and the resulting linear function changes with the choice of fixed point. The linear parameterization method above instead transforms the nonlinear regulation function into an equivalent linear function with an uncertain coefficient, which satisfies the principle of superposition. It uses the information of the equilibrium point but places no requirement on how far the state variable moves from the equilibrium point. The proposed method is therefore not a linearization in the strict sense; it converts the nonlinear function into a linear one more accurately, and it better reflects the slope information of the nonlinear function. The linear parameterization method is the key point of this paper and underpins the subsequent FTS analysis.

Remark 4

Improving the utilization of system information can effectively reduce conservativeness. As shown in [25], the activation function is divided into two parts to improve the utilization of its slope information, thereby reducing the conservativeness of the stability criterion. In this paper, in order to increase the utilization of system information, the nonlinear regulation function is transformed into equation (5) by the linear parameterization method. That is, the slope information of the regulation function is converted into boundary information of the uncertainties. Then, the \(2^n\) boundary matrices \(G = \mathrm {diag}(g_{1s_1},g_{2s_2},\dots ,g_{ns_n})\) \((s_j\in \mathfrak {I}_2)\) are used in place of the upper and lower bound matrices in [10,11,12, 25]. Therefore, based on the linear parameterization method, a more accurate feasible region of the FTS conditions can be obtained by using these \(2^n\) boundary matrices.

Lemma 1

For a diagonal matrix \(\sigma = \mathrm {diag}(\tilde{\sigma }_1,\tilde{\sigma }_2,\dots ,\tilde{\sigma }_n)\) with \(u_{j1}\le \tilde{\sigma }_j\le u_{j2}\), \(j\in \mathfrak {I}_n\), and any constant matrices \(\tilde{A}\), \(\tilde{B}\) and \(\tilde{C}\) of appropriate dimensions, the following inequality holds:

where \(U_{s_1,s_2, \dots , s_n}=\mathrm {diag}(u_{1s_1},u_{2s_2},\dots ,u_{ns_n})\), \(s_j\in \mathfrak {I}_2\). That is, \(U_{s_1,s_2, \dots , s_n}\) ranges over the \(2^n\) matrices formed by all combinations of the upper and lower bounds of the entries \(\tilde{\sigma }_j\) of the matrix \(\sigma \).

Proof

The above lemma is equivalent to

if and only if

where \(\sigma _{j}\) and \(U_{js_j}\) are diagonal matrices in \(\mathbb {R}^{n\times n}\) with \(\tilde{\sigma }_j\) and \(u_{js_j}\), respectively, in the j-th diagonal position and 0 elsewhere.

The “only if” part follows immediately from \(u_{j1}\le \tilde{\sigma }_j\le u_{j2}\), \(j\in \mathfrak {I}_n\). Now we show the “if” part.

According to the convex combination lemma [34] and \(u_{j1}\le \tilde{\sigma }_j\le u_{j2}\), there exist nonnegative \(\mathfrak {X}_{j1}\) and \(\mathfrak {X}_{j2}\) satisfying \(\mathfrak {X}_{j1}+\mathfrak {X}_{j2}=1\) such that \(\tilde{\sigma }_j=\mathfrak {X}_{j1}u_{j1}+\mathfrak {X}_{j2}u_{j2}\), \(j\in \mathfrak {I}_n\).
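For concreteness, the weights can be given in closed form. Assuming \(u_{j1}<u_{j2}\) (if the two bounds coincide, any pair of weights summing to one works), one admissible choice is

\[
\mathfrak {X}_{j1}=\frac{u_{j2}-\tilde{\sigma }_j}{u_{j2}-u_{j1}},\qquad
\mathfrak {X}_{j2}=\frac{\tilde{\sigma }_j-u_{j1}}{u_{j2}-u_{j1}},
\]

which both lie in \([0,1]\), sum to one, and satisfy \(\mathfrak {X}_{j1}u_{j1}+\mathfrak {X}_{j2}u_{j2}=\frac{u_{j1}u_{j2}-\tilde{\sigma }_ju_{j1}+\tilde{\sigma }_ju_{j2}-u_{j1}u_{j2}}{u_{j2}-u_{j1}}=\tilde{\sigma }_j\).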

Multiply both sides of inequality (8) by \(\mathfrak {X}_{11}\) for \(s_1=1\) and by \(\mathfrak {X}_{12}\) for \(s_1=2\), and add the results. Then, we can derive \(\varTheta _{s_{2},\dots ,s_{n}} :=\mathfrak {X}_{11}\varOmega _{1,s_{2} ,\dots , s_{n}}+\mathfrak {X}_{12}\varOmega _{2, s_{2} ,\dots , s_{n}}<0\), that is,

Likewise, we can get \(\varTheta _{s_{3},\dots ,s_{n}} :=\mathfrak {X}_{21}\varTheta _{1,s_{3} ,\dots , s_{n}}+\mathfrak {X}_{22}\varTheta _{2,s_{3} ,\dots , s_{n}}<0\), that is,

Continuing in this way, we ultimately obtain \(\tilde{A}+\sum \nolimits _{j=1}^n \tilde{B}^{T}\sigma _j\tilde{C}<0\), which completes the proof. \(\square \)

Remark 5

The lemma above is a new convex combination result for multiple bounded uncertain parameters. Specifically, the convex combination lemma in [34] is the special case of the above lemma obtained for \(n=1\) (so that \(j=1\)), with \(\mathfrak {X}_{j1}\), \(\tilde{B}^{T}U_{j1}\tilde{C}\) and \(\tilde{B}^{T}U_{j2}\tilde{C}\) denoted by \(\alpha \), \(X_1\) and \(X_2\), respectively. That is, \(\tilde{A}+\alpha X_1+(1-\alpha )X_2<0\) if and only if \(\tilde{A}+ X_1<0\) and \(\tilde{A}+X_2<0\). Therefore, the lemma above generalizes the corresponding result in [34] to the case of n bounded uncertainties.
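The vertex idea behind Lemma 1 can be illustrated with a small Python sketch that enumerates the \(2^n\) boundary matrices and checks a matrix inequality at each of them. Several things here are assumptions made for illustration only: the matrices `A`, `B`, `C` are hypothetical, the expression is symmetrized as \(\tilde{A}+\tilde{B}^{T}\sigma \tilde{C}+(\tilde{B}^{T}\sigma \tilde{C})^{T}\) so that negative definiteness is well defined, and the function names are not from the paper.

```python
from itertools import product
import random


def vertices(lower, upper):
    """Return all 2^n tuples (u_{1 s_1}, ..., u_{n s_n}) with s_j in {1, 2}."""
    return list(product(*zip(lower, upper)))


def sym_affine(A, B, C, sigma):
    """A + B^T diag(sigma) C + (B^T diag(sigma) C)^T as nested lists.

    The symmetrization is an assumption of this sketch.
    """
    n = len(A)
    M = [[sum(B[k][i] * sigma[k] * C[k][j] for k in range(len(sigma)))
          for j in range(n)] for i in range(n)]
    return [[A[i][j] + M[i][j] + M[j][i] for j in range(n)] for i in range(n)]


def is_negative_definite(S):
    """Check S < 0 by attempting a Cholesky factorization of -S (S symmetric)."""
    n = len(S)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = -S[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                L[i][i] = s ** 0.5
            else:
                L[i][j] = s / L[j][j]
    return True


# Hypothetical data: n = 2 uncertainties with bounds 0 and 0.65 (as in Example 2).
A = [[-2.0, 0.0], [0.0, -2.0]]
B = [[1.0, 0.5], [0.0, 1.0]]
C = [[0.5, 0.0], [0.2, 0.3]]
lower, upper = [0.0, 0.0], [0.65, 0.65]

# Step 1: the inequality is checked only at the 2^n vertex matrices.
vertex_ok = all(is_negative_definite(sym_affine(A, B, C, v))
                for v in vertices(lower, upper))

# Step 2: spot-check that it then also holds for sampled interior values.
rng = random.Random(0)
samples = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
           for _ in range(200)]
interior_ok = all(is_negative_definite(sym_affine(A, B, C, s)) for s in samples)

print(len(vertices(lower, upper)), vertex_ok, interior_ok)
```

Because the expression is affine in the entries of \(\sigma \), feasibility at all vertices carries over to every point of the box, which is why only finitely many inequalities need to be checked. The number of vertices grows as \(2^n\), so the enumeration is practical only for moderate n.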

FTS analysis for DGRNs-RDTs

For notational ease, set

Theorem 1

For given constants \(\tilde{\mathfrak {A}}\), \(\tilde{\mathfrak {L}}\), \(\mu _{\mathfrak {A}}\) and \(\mu _{\mathfrak {L}}\) satisfying (2), and positive scalars \(\rho \), \(c_1\), \(c_2\) and T, the DGRNs-RDTs (6) are finite-time stable under Assumptions 1 and 2 if there exist real symmetric positive definite matrices \(Q_\iota \), diagonal positive definite matrices \(J_\varsigma \), \(Y_\varsigma \), and matrices \(\hat{H}_\varsigma \) of appropriate dimensions \((\iota \in \mathfrak {I}_{10}, \varsigma \in \mathfrak {I}_2)\) such that the following LMIs hold:

where

Proof

Define an LKF candidate for DGRNs-RDTs (6) as:

where

Computing the derivative of \(V(t, {\mathfrak {O}, \mathfrak {H}})\) along the trajectories of DGRNs-RDTs (6), we obtain

with the specific derivatives as follows:

Firstly, by applying Lemma 3 in [32], Green’s formula and Assumption 1, we derive

where \(\tilde{\varPhi }_{11}(t)=l_{1}J_1B\theta _\varepsilon (t)l^{T}_{6}+l_7Y_1B\theta _\varepsilon (t)l^{T}_6\).

Then, based on the second inequality in (10), and according to the reciprocally convex technique in [35] and the Wirtinger-type integral inequality in [36], we obtain

In addition, based on Jensen’s inequality in [36] and the Wirtinger-type integral lemma in [37], one can derive

Therefore, substituting (12)-(17) into (11), we obtain

where

According to the formula (18), we have

where \(\tilde{\varPi }_1(\mathfrak {A}(t),\mathfrak {L}(t))=\hat{\varPi }_1(\mathfrak {A}(t),\mathfrak {L}(t)) {-}\rho l_1J_1l^{T}_1{-}\rho l_{9}J_2l^{T}_{9}\).

Clearly, \(\tilde{\varPhi }_{11}(t)\), \(\varPhi _4(\mathfrak {A}(t),\mathfrak {L}(t))\) and \(\varPhi _5(\mathfrak {A}(t),\mathfrak {L}(t))\) depend on \(\mathfrak {A}(t)\), \(\mathfrak {L}(t)\) and the diagonal matrix \(\theta _\varepsilon (t)\) composed of n time-varying bounded uncertain terms. Then, applying Lemma 1 to inequality (9) and using inequality (2), we can derive

and

Integrating both sides of inequality (21) from 0 to t, with \(t\in [0,T]\), we obtain

From the Gronwall inequality in [10], we derive \( V(T, \mathfrak {O}, \mathfrak {H})\le e^{\rho T}V(0,\mathfrak {O}_\varepsilon (0),\mathfrak {H}_\varepsilon (0)). \) Note that \( V(0,\mathfrak {O}_\varepsilon (0),\mathfrak {H}_\varepsilon (0)) =\sum _{i=1}^5V_i(0,\mathfrak {O}_\varepsilon (0),\mathfrak {H}_\varepsilon (0)) \le \lambda _{1}\Vert \phi (t)\Vert ^{2}_{h}+\lambda _{2}\Vert \varphi (t)\Vert ^{2}_{h} \le (\lambda _{1}+\lambda _{2})(\Vert \phi (t)\Vert ^{2}_{h}+\Vert \varphi (t)\Vert ^{2}_{h})\).
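The Gronwall step can be spelled out as follows. This is a sketch that assumes inequality (21) has the form \(\frac{\partial }{\partial t}V(t,\mathfrak {O},\mathfrak {H})<\rho V(t,\mathfrak {O},\mathfrak {H})\), as suggested by the \(-\rho \) terms in \(\tilde{\varPi }_1\); writing \(V(t)\) for short, the argument is

\[
V(t)\le V(0)+\rho \int _{0}^{t}V(s)\,\mathrm {d}s
\quad \Longrightarrow \quad
V(t)\le e^{\rho t}V(0),\qquad t\in [0,T].
\]

Equivalently, \(\frac{\mathrm {d}}{\mathrm {d}t}\bigl(e^{-\rho t}V(t)\bigr)=e^{-\rho t}\bigl(\dot{V}(t)-\rho V(t)\bigr)\le 0\), so \(e^{-\rho t}V(t)\) is nonincreasing on \([0,T]\). Setting \(t=T\) gives the bound \(V(T)\le e^{\rho T}V(0)\) used above.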

Then, we get

and

Now, based on inequality (24), we can get that

Therefore, the DGRNs-RDTs (6) are finite-time stable according to Definition 1 and inequalities (9)-(10). The proof is completed. \(\square \)

Remark 6

In this paper, the slope information of the regulatory function is used more fully than through the fixed lower bound matrix \(G_1=\mathrm {diag}(g_{11}, g_{21},\dots , g_{n1})\) and upper bound matrix \(G_2=\mathrm {diag}(g_{12}, g_{22},\dots , g_{n2})\) in [10,11,12, 25]. In detail, compared with these two slope matrices, the number of applicable slope matrices is increased to \(2^{n}\) by taking all combinations of the upper and lower bounds of the n uncertain terms, \(G_{s_1,s_2,\dots ,s_n}=\mathrm {diag}(g_{1s_1}, g_{2s_2},\dots , g_{ns_n}),\ s_j\in \{1,2\}\). That is, condition (9) represents \(2^n\) inequalities. Although the proposed method increases the computational burden, it yields a more accurate feasible region for the FTS criterion.

Remark 7

As shown in [12], the fourth-order integral term reflects the system state information more completely. Therefore, the same fourth-order integral term \(\int _{\varOmega }\int ^{0}_{-\tilde{\mathfrak {A}}}\int ^{0}_{\lambda }\int ^{0}_{\alpha }\int ^{t}_{t+\nu }\frac{\partial \mathfrak {O}^{T}_\varepsilon (s)}{\partial s}Q_9{\frac{\partial \mathfrak {O}_\varepsilon (s)}{\partial s}}\mathrm {d}s\mathrm {d}\nu \mathrm {d}\alpha \mathrm {d}\lambda \mathrm {d}\varepsilon \) is introduced into the LKF in this paper. However, unlike the LKF in [12], the term \(\int _{\varOmega }\int ^{t}_{t-\sigma (t)}f^{T}(p(s,x))Q_5 f(p(s,x))\mathrm {d}s\mathrm {d}\varepsilon \) is removed, because the information of \(f(\cdot )\) has been transformed into information on the uncertainties and the system states. The LKF is thus simpler while still reflecting the system information. In addition, the slope information of the nonlinear regulation function is used more completely and flexibly through \(G_{s_1,s_2, \dots , s_n}\) than through the fixed form \((G_1x-F(x))(G_2x-F(x))\le 0\). Therefore, a less conservative FTS criterion is obtained by utilizing more information of the GRNs.

Numerical example

To demonstrate the effectiveness of the theoretical results, we consider two numerical examples.

Example 1

Consider the following DGRNs-RDTs (3):

Assume \(\beta _{1}=1\), \(\lambda _{1}=2\), \(f_{1}(s)=\frac{s^2}{1+s^2}\), \(g_{11}=0.1\), \(g_{12}=0.65\), \(M_1=1\), \({\rho =0.001}\), \(\mu _{\mathfrak {A}}=\mu _{\mathfrak {L}}=2\), \(c_1=0.0878\), \(c_2=8\), \(T=20\), and the initial conditions are \(\phi _\varepsilon (t)=\varphi _\varepsilon (t)=1.3\).

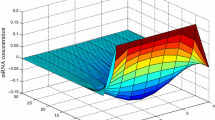

Fig. 1 The trajectory of mRNA concentration \(\mathfrak {O}_\varepsilon (t)\) for DGRNs-RDTs (3)

Then, using the YALMIP toolbox for MATLAB, we can verify that the FTS conditions (9)-(10) are feasible for \(\tilde{\mathfrak {A}}=\tilde{\mathfrak {L}}\in {(0,\ 1.5696]}\). Furthermore, a set of feasible solutions of conditions (9)-(10) is listed as follows:

Next, when \(\mathfrak {A}(t)=\mathfrak {L}(t)=0.5\), the state trajectories of DGRNs-RDTs (3) are shown in Figures 1 and 2. They show that the concentration trajectories of mRNA and protein gradually converge to the zero equilibrium point within \(T=20\) s, which indicates that the theoretical results of this paper are valid.
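A trajectory of this kind can be reproduced qualitatively with a simple explicit finite-difference scheme. The Python sketch below is only an illustration under loudly stated assumptions: the model is taken to be the scalar system \(\partial \mathfrak {O}/\partial t=K\,\partial ^2\mathfrak {O}/\partial \varepsilon ^2-a\mathfrak {O}+bF(\mathfrak {H}(t-\mathfrak {A}))\), \(\partial \mathfrak {H}/\partial t=K^*\,\partial ^2\mathfrak {H}/\partial \varepsilon ^2-c\mathfrak {H}+d\,\mathfrak {O}(t-\mathfrak {L})\) on \([-M_1,M_1]\) with zero Dirichlet boundary values, the equilibrium is taken at the origin so that \(F=f_1\), and the values of `a`, `b`, `c`, `d`, `K`, `K_star` are hypothetical placeholders rather than the parameters of Example 1. Only \(M_1=1\), the delay 0.5, the initial value 1.3, \(T=20\) and the Hill function are taken from the text.

```python
def hill(s):
    """Hill function with beta = 1 and lambda = 2, as in the examples."""
    return s * s / (1.0 + s * s)


def simulate(a=1.0, b=-0.5, c=1.0, d=1.0, K=0.1, K_star=0.1,
             delay=0.5, T=20.0, M=1.0, nx=21, dt=0.01, init=1.3):
    """Explicit Euler in time, central differences in space.

    Returns two lists of spatial profiles (mRNA, protein) for t = 0, dt, ..., T.
    All rate and diffusion parameters are hypothetical defaults.
    """
    dx = 2.0 * M / (nx - 1)
    # Stability restriction of the explicit scheme for the diffusion terms.
    assert max(K, K_star) * dt / dx ** 2 <= 0.5
    lag = int(round(delay / dt))
    steps = int(round(T / dt))
    # Constant history on [-delay, 0]; boundary nodes are held at zero.
    row0 = [0.0] + [init] * (nx - 2) + [0.0]
    O = [row0[:] for _ in range(lag + 1)]
    H = [row0[:] for _ in range(lag + 1)]
    for _ in range(steps):
        o, h = O[-1], H[-1]
        o_del, h_del = O[-1 - lag], H[-1 - lag]  # states one delay earlier
        o_new, h_new = [0.0] * nx, [0.0] * nx
        for i in range(1, nx - 1):
            lap_o = (o[i + 1] - 2.0 * o[i] + o[i - 1]) / dx ** 2
            lap_h = (h[i + 1] - 2.0 * h[i] + h[i - 1]) / dx ** 2
            o_new[i] = o[i] + dt * (K * lap_o - a * o[i] + b * hill(h_del[i]))
            h_new[i] = h[i] + dt * (K_star * lap_h - c * h[i] + d * o_del[i])
        O.append(o_new)
        H.append(h_new)
    return O[lag:], H[lag:]


if __name__ == "__main__":
    mrna, protein = simulate()
    print("max |mRNA| at T:", max(abs(v) for v in mrna[-1]))
    print("max |protein| at T:", max(abs(v) for v in protein[-1]))
```

With these placeholder parameters both concentrations decay towards zero over the horizon, which mirrors the qualitative behaviour described for Figures 1 and 2; it does not reproduce the figures themselves. An explicit scheme is chosen for brevity, and an implicit or spectral solver would be preferable for finer grids.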

Example 2

We consider the DGRNs-RDTs (3) with the following parameters:

Assume \(\beta _{j}=1\), \(\lambda _{j}=2\), \(f_{j}(s)=\frac{s^2}{1+s^2}\), \(g_{j1}=0\), \(g_{j2}=0.65\), \(M_j=1\), \(\rho =0.002\), \(\mu _{\mathfrak {A}}=\mu _{\mathfrak {L}}=2\), \(c_1=0.0878\), \(c_2=8\), \(T=10\), \(\tilde{\mathfrak {A}}=\tilde{\mathfrak {L}}\in (0,\ 1.068]\), \(j\in \mathfrak {I}_2\).

Fig. 2 The trajectory of protein concentration \(\mathfrak {H}_\varepsilon (t)\) for DGRNs-RDTs (3)

Using the YALMIP toolbox for MATLAB, we verify that the FTS criterion (9)-(10) is feasible for the numerical example above. In addition, the FTS conditions in [12] are also found to be feasible for this system.

Compared with the two slope matrices used in [12], the proposed linear parameterization method yields four boundary matrices from the two time-varying bounded uncertain terms generated by the two regulation functions \(F_{1}(s)\) and \(F_{2}(s)\).

However, when \(\tilde{\mathfrak {A}}=\tilde{\mathfrak {L}}\in (0,\ 1.152]\) and all other parameters of the above model remain the same, the FTS conditions (9)-(10) proposed in this paper are still solvable, whereas the corresponding conditions proposed in [12] are infeasible, which indicates that the stability criterion in [12] cannot verify stability for the considered system.

The results of this example demonstrate the effectiveness of the theoretical results in this paper, and show that the proposed stability criterion is less conservative than the conditions in [12] and allows a larger upper bound on the time delay.

Conclusion

A linear parameterization method is proposed to study the finite-time stability (FTS) of delayed genetic regulatory networks with reaction-diffusion terms. The main contributions of this paper are as follows. (1) Based on the proposed linear parameterization method, the nonlinear system is transformed into an equivalent linear one with time-varying bounded uncertain terms. (2) The slope information of the regulatory function is transformed into boundary information of the uncertain terms, so that this information can be used more fully and flexibly, and a new generalized convex combination lemma with multiple bounded uncertain parameters is proposed. (3) A stability criterion that guarantees FTS is established based on the proposed lemma. Extending the proposed method to state estimation and control problems (see [3, 5, 8]) is a topic for further research.

References

Rao X, Chen X, Shen H, Ma Q, Li G, Tang Y, Pena MJ, York WS, Frazier TP, Lenaghan SC et al (2019) Gene regulatory networks for lignin biosynthesis in switchgrass (Panicum virgatum). Plant Biol J 17(3):580–593

Swarup V, Hinz FI, Rexach JE, Noguchi K, Toyoshiba H, Oda A, Hirai K, Sarkar A, Seyfried NT, Cheng C et al (2019) Identification of evolutionarily conserved gene networks mediating neurodegenerative dementia. Nat Med 25(1):152–164

Tan G, Wang Z (2020) Generalized dissipativity state estimation of delayed static neural networks based on a proportional-integral estimator with exponential gain term. IEEE Trans Circuits Syst II: Express Briefs https://doi.org/10.1109/TCSII.2020.2998300

Xia Y, Wang J (1998) A general methodology for designing globally convergent optimization neural networks. IEEE Trans Neural Netw 9(6):1331–1343

Ding S, Wang Z (2020) Event-triggered synchronization of discrete-time neural networks: a switching approach. Neural Netw 125:31–40

Ding S, Wang Z, Rong N (2020) Intermittent control for quasisynchronization of delayed discrete-time neural networks. IEEE Trans Cybern. https://doi.org/10.1109/TCYB.2020.3004894

Zhang X, Wu L, Zou J (2016) Globally asymptotic stability analysis for genetic regulatory networks with mixed delays: an M-matrix-based approach. IEEE/ACM Trans Comput Biol Bioinform 13(1):135–147

Zhang X, Han Y, Wu L, Wang Y (2018) State estimation for delayed genetic regulatory networks with reaction-diffusion terms. IEEE Trans Neural Netw Learn Syst 29(2):299–309

Zou C, Wang X (2020) Robust stability of delayed Markovian switching genetic regulatory networks with reaction-diffusion terms. Comput Math Appl 79(4):1150–1164

Wang W, Dong Y, Zhong S, Shi K, Liu F (2019) Secondary delay-partition approach to finite-time stability analysis of delayed genetic regulatory networks with reaction-diffusion terms. Neurocomputing 359:368–383

Wang W, Dong Y, Zhong S, Liu F (2019) Finite-time robust stability of uncertain genetic regulatory networks with time-varying delays and reaction-diffusion terms. Complexity 2019:1–18

Fan X, Zhang X, Wu L, Shi M (2017) Finite-time stability analysis of reaction-diffusion genetic regulatory networks with time-varying delays. IEEE/ACM Trans Comput Biol Bioinf 14(4):868–879

Dorato P (1961) Short time stability in linear time-varying systems. In: Proceedings of the IRE International Convention Record, New York, pp 83–87

Liu L, Liu Y, Chen A, Chen P (2020) Integral barrier Lyapunov function-based adaptive control for switched nonlinear systems. Sci China Inform Sci 63(3):212–225

Liu L, Liu Y, Li D, Tong S, Wang Z (2020) Barrier Lyapunov function-based adaptive fuzzy FTC for switched systems and its applications to resistance-inductance-capacitance circuit system. IEEE Trans Cybern 50(8):3491–3502

Sun Q, Han R, Zhang H, Zhou J, Guerrero JM (2015) A multiagent-based consensus algorithm for distributed coordinated control of distributed generators in the energy internet. IEEE Trans Smart Grid 6(6):3006–3019

Sun Q, Zhang Y, He H, Ma D, Zhang H (2017) A novel energy function-based stability evaluation and nonlinear control approach for energy internet. IEEE Trans Smart Grid 8(3):1195–1210

Sun Q, Fan R, Li Y, Huang B, Ma D (2019) A distributed double-consensus algorithm for residential we-energy. IEEE Trans Ind Inf 15(8):4830–4842

Wang R, Sun Q, Ma D, Liu Z (2019) The small-signal stability analysis of the droop-controlled converter in electromagnetic timescale. IEEE Trans Sustain Energy 10(3):1459–1469

Zhou J, Xu S, Shen H (2011) Finite-time robust stochastic stability of uncertain stochastic delayed reaction-diffusion genetic regulatory networks. Neurocomputing 74(17):2790–2796

Gouaisbaut F, Peaucelle D (2006) Delay-dependent stability analysis of linear time delay systems. In: Proceedings of the 6th IFAC Workshop Time-Delay Syst, Aquila, Italy, Jul, pp 10–12

Mou S, Gao H, Lam J, Qiang W (2008) A new criterion of delay-dependent asymptotic stability for Hopfield neural networks with time delay. IEEE Trans Neural Netw 19(3):532–535

Zhang X, Han Q (2009) New Lyapunov–Krasovskii functionals for global asymptotic stability of delayed neural networks. IEEE Trans Neural Netw 20(3):533–539

Zhang H, Liu Z, Huang G, Wang Z (2010) Novel weighting-delay-based stability criteria for recurrent neural networks with time-varying delay. IEEE Trans Neural Netw 21(1):91–106

Wang Y, Xia Y, Zhou P, Duan D (2017) A new result on H\(\infty \) state estimation of delayed static neural networks. IEEE Trans Neural Netw Learn Syst 28(12):3096–3101

Yu T, Liu J, Zeng Q, Wu L (2019) Dissipativity-based filtering for switched genetic regulatory networks with stochastic disturbances and time-varying delays. IEEE/ACM Trans Comput Biol Bioinform. https://doi.org/10.1109/TCBB.2019.2936351

Ali MS, Gunasekaran N, Ahn CK, Shi P (2018) Sampled-data stabilization for fuzzy genetic regulatory networks with leakage delays. IEEE/ACM Trans Comput Biol Bioinform 15(1):271–285

He S, Liu F (2010) Robust finite-time stabilization of uncertain fuzzy jump systems. Int J Innov Comput I 6(9):3853–3862

Wang L, Xu D (2003) Global exponential stability of Hopfield reaction-diffusion neural networks with time-varying delays. Sci China Ser F 6:466–474

Hohn ME, Li B, Yang W (2015) Analysis of coupled reaction-diffusion equations for RNA interactions. J Math Anal Appl 425(1):212–233

Fan X, Xue Y, Zhang X, Ma J (2017) Finite-time state observer for delayed reaction-diffusion genetic regulatory networks. Neurocomputing 227:18–28

Zou C, Wei X, Zhang Q, Zhou C (2018) Passivity of reaction-diffusion genetic regulatory networks with time-varying delays. Neural Process Lett 47(3):1115–1132

Leith DJ, Leithead WE (2000) Survey of gain-scheduling analysis and design. Int J Control 73(11):1001–1025

Liu Z, Zhang H (2010) Delay-dependent stability for systems with fast-varying neutral-type delays via a PTVD compensation. Acta Autom Sin 36(1):147–152

Park PG, Ko JW, Jeong C (2011) Reciprocally convex approach to stability of systems with time-varying delays. Automatica 47(1):235–238

Park P, Lee WI, Lee SY (2015) Auxiliary function-based integral inequalities for quadratic functions and their applications to time-delay systems. J Franklin Inst 352(4):1378–1396

Seuret A, Gouaisbaut F (2013) Wirtinger-based integral inequality: application to time-delay systems. Automatica 49(9):2860–2866

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 61973070, the Liaoning Revitalization Talents Program under Grant XLYC1802010, and in part by SAPI Fundamental Research Funds under Grant 2018ZCX22.

Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Xiao, S., Wang, Z. Stability analysis of genetic regulatory networks via a linear parameterization approach. Complex Intell. Syst. 8, 743–752 (2022). https://doi.org/10.1007/s40747-020-00245-1

DOI: https://doi.org/10.1007/s40747-020-00245-1