Abstract

In this paper, we focus on the numerical solution of the non-linear Volterra integral equation of the first kind. We start by converting it to a second-kind Volterra equation, and we state assumptions that give a sufficient condition for the existence and uniqueness of the solution. The same assumptions are used in both the analytical and the numerical study, which keeps the two analyses compatible. Then, by applying the block-by-block method, we transform the integral equation into a non-linear system. This system gives a discrete numerical solution that converges to the analytical solution. The approximate solution is computed using Newton’s method, and the numerical examples demonstrate the efficiency of the proposed method compared with the Nyström method.

1 Introduction

Volterra integral equations are at the core of applied mathematics. Recently, it has been observed that models of the coronavirus based on Volterra equations perform better than the existing models based on differential equations, since Volterra integral equations make it possible to monitor the initial reproduction number and thus to determine the incubation period of the virus and adjust the preventive measures (Fodor et al. 2020). Interest in these equations is also due to the tremendous progress made in computer science, and partly to the complexity of the computational models in which integral equations are used. Deep neural networks, for instance, play a major role in applications of artificial intelligence, from faceprinting and self-driving cars to automatic flight. Beyond medicine and networks, these equations are equally important in other fields such as physics, nuclear energy and dynamics (Tricomi 1985; Wazwaz 2011).

There are different types of Volterra integral equations, such as the non-linear weakly singular equation of the second kind analyzed in Micula (2020). We also mention some models that have emerged recently, such as Fredholm and Volterra integro-differential equations, Fredholm integral equations and Volterra–Fredholm equations (Ghiat et al. 2020; Ghiat and Guebbai 2018; Ghiat et al. 2021; Touati et al. 2019). The important question is how to find a solution to these equations and, in the first place, whether a solution exists. Finding the exact solution is difficult and sometimes impossible. Thus, many mathematicians have resorted to numerical approaches, developing computational methods that produce an approximation converging to the exact solution (Karamov et al. 2021; Deepa et al. 2022; Dehbozorgi and Maleknejad 2021; Fawze et al. 2021; Micula and Cattani 2018; Micula 2015).

In this paper, our objective is to focus on a specific numerical method, called block-by-block, to find an approximate solution u, an unknown function satisfying the following integral equation:

$$\begin{aligned} \int _0^x K(x,t,u(t))\,dt=f(x), \quad x \in [0,X], \end{aligned}$$ (1)

where f is a given function in the Banach space \(C^1[0,X]\) and the kernel \(K \in C^1([0,X]^2 \times {\mathbb {R}})\). This equation is called the non-linear Volterra equation of the first kind.

In the article Brunner (1997), entitled ‘1896–1996: one hundred years of Volterra integral equations of the first kind’, it is noted that equation (1) first appeared in 1887 (Jaëck 2018). It is the result of six papers presented by Volterra and also appears in his book (Volterra 2005). Many researchers have followed this path: in 1959, the equation entered the world of economics (Kantorovich and Gorkov 1959) through Solow, who developed a capital equation and received the Nobel Prize for it (Solow 1969). In 1960, it was used to model heat transfer between solids and gases (Levinson 1960), and in 1996 it was used in dynamic systems of the economy (Hritonenko and Yatsenko 1996; Muftahov et al. 2017), notably for the life span of certain equipment. In the last decade, other applications have appeared in areas such as energy (Karamov et al. 2021; Markova et al. 2021), engineering (Solodusha and Bulatov 2021), studies of the rapid spread and mutation of the coronavirus (Gao et al. 2022; Noeiaghdam et al. 2021; Giorno and Nobile 2022), biology (Brauer 1976; Halpea et al. 2021) and neural networks (Jafarian et al. 2022).

We note that the analytical study was carried out by Linz in his book (Linz 1987); therefore, equation (1) has a unique solution under certain conditions. Several research papers address the numerical solution of (1), using approaches such as Petryshyn’s fixed point theorem (Deepa et al. 2022), the Taylor collocation method (De Bonis et al. 2022), homotopy perturbation (Fawze et al. 2021), a direct operational scheme (Dehbozorgi and Maleknejad 2021), the hp-version collocation method (Nedaiasl et al. 2019), a wavelet method (Micula and Cattani 2018), an iterative method (Micula 2015), etc.

In this article, our attention is on the numerical solution of (1) based on the block-by-block method. In Kasumo and Moyo (2020), the authors constructed a numerical method using the block-by-block method. They built it in two main steps: linearization of equation (1), followed by discretization with the fourth-order block-by-block method. In this manuscript, we start with the discretization and then linearize by Newton’s method. We compare this method with the classical method based on the quadrature scheme described in the book of Linz (1987). We also prove that the proposed approximate solution converges to the exact solution.

This article is organized as follows. In Sect. 2, we reformulate our equation in an equivalent form of the second kind and state hypotheses that guarantee the existence and uniqueness of the solution. In Sect. 3, we describe our numerical process and explain how it works to obtain a discretized system. We present theorems that show the existence and uniqueness of the solution of the new system and the convergence of the approximate solution. Finally, in Sect. 4, we test our method on numerical examples and compare it with the Nyström method.

2 Main problem

The direct numerical treatment of equation (1) can create a problem of non-regularity, because the first-kind version of the Volterra equation is an ill-posed problem. For this reason, it cannot be treated in this form. The idea is to find an equation equivalent to (1) that is easier to handle. To this end, Linz (1987) proposed differentiating it. So, we assume that the kernel is differentiable with respect to x, which allows us to rewrite our equation in a new form. Hence, our new problem is: find u, the solution of the following Volterra equation of the second kind

$$\begin{aligned} K(x,x,u(x))+\int _0^x \dfrac{\partial K}{\partial x}(x,t,u(t))\,dt=f'(x), \quad x \in [0,X], \end{aligned}$$ (2)

where \(f',f \in C^0[0,X]\) and \(K\), \(\dfrac{\partial K}{\partial x} \in C^0 \bigg ( [0,X]^2 \times {\mathbb {R}}\bigg )\).
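The passage from the first-kind to the second-kind form can be checked numerically on a toy problem. The sketch below assumes the differentiated equation reads \(K(x,x,u(x))+\int _0^x \frac{\partial K}{\partial x}(x,t,u(t))\,dt=f'(x)\); the kernel, the solution \(u(t)=t\) and the helper names (`simpson`, `first_kind_residual`, `second_kind_residual`) are illustrative choices that do not come from the paper.

```python
def simpson(g, a, b, m=200):
    """Composite Simpson rule on [a, b] with an even number m of panels."""
    if m % 2:
        m += 1
    h = (b - a) / m
    total = g(a) + g(b)
    for j in range(1, m):
        total += (4 if j % 2 else 2) * g(a + j * h)
    return total * h / 3


# Hypothetical test problem (not taken from the paper):
# K(x, t, u) = (1 + x - t) * (u + u^3), exact solution u(t) = t.
def K(x, t, u):
    return (1 + x - t) * (u + u**3)


def dK_dx(x, t, u):
    # Partial derivative of K with respect to its first argument x.
    return u + u**3


def u_exact(t):
    return t


def f(x):
    # f(x) = integral over [0, x] of K(x, t, t) dt, computed by hand.
    return x**2 / 2 + x**3 / 6 + x**4 / 4 + x**5 / 20


def f_prime(x):
    return x + x**2 / 2 + x**3 + x**4 / 4


def first_kind_residual(x):
    """Residual of the first-kind equation at x for the exact solution."""
    return simpson(lambda t: K(x, t, u_exact(t)), 0.0, x) - f(x)


def second_kind_residual(x):
    """Residual of the differentiated (second-kind) equation at x."""
    integral = simpson(lambda t: dK_dx(x, t, u_exact(t)), 0.0, x)
    return K(x, x, u_exact(x)) + integral - f_prime(x)


for x in (0.25, 0.5, 1.0):
    print(x, first_kind_residual(x), second_kind_residual(x))
```

Both residuals should be at the level of the quadrature error, which illustrates that the same function u satisfies the two forms of the equation.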

So, we assume that the kernel satisfies the following hypothesis.

The condition (1) is called the Lipschitz condition, and the condition (3) is called the lower-Lipschitz condition, first proposed by Linz to ensure the uniqueness of the solution of (2) (see Linz 1987).

Before moving to the numerical framework, we must ensure the existence and uniqueness of the solution of (1) in \(C^0[0,X]\). For this reason, we present the next theorem without proof, because it has already been shown in Linz (1987). We recall that \(C^0[0,X]\) is the Banach space equipped with the following norm

$$\begin{aligned} \Vert u\Vert _{C^0[0,X]}=\underset{0 \le x \le X}{\max }\ \mid u(x)\mid . \end{aligned}$$

Theorem 1

Under the hypothesis (H), the equation (1) has a unique solution in the Banach space \(C^0[0,X]\).

We can now present the numerical framework. In the following section, we explain all the necessary and basic steps used in the construction of our proposed numerical method.

3 Block-by-block method

One of the most popular ways to solve this non-linear Volterra equation is the Nyström method. However, it has a clear drawback: the calculation cannot be started without choosing \(u_0\), which in most cases is an arbitrary choice, i.e., it is not the approximate solution of u at the starting point 0. To begin, for \(n \ge 1\), we define \(\Delta _n\), the uniform subdivision of the interval [0, X], by

$$\begin{aligned} \Delta _n=\left\{ x_i=ih, \; 0 \le i \le n, \; h=\dfrac{X}{n}\right\} . \end{aligned}$$

Then, we propose to solve this problem using a new method which computes the solution on each subdivision \([x_i,x_{i+1}]\) for \(0 \le i \le n\) separately, one interval at a time. This method is known as block-by-block. Each subdivision \([x_i,x_{i+1}]\) is divided into three sub-intervals: \([x_i,x_{i1}]\), \([x_{i1},x_{i2}]\) and \([x_{i2}, x_{i+1}]\) such that \(x_{i1}=x_i+\frac{h}{3}\) and \(x_{i2}=x_i+\frac{2h}{3}\). Therefore, our goal is to find the approximate solution at the points \(x_{i1}\), \(x_{i2}\) and \(x_{i+1}\).

The block-by-block method is a generalization of the well-known implicit Runge–Kutta method for ordinary differential equations. The idea is quite general and easily understood. As we mentioned earlier, the approximate solution is calculated at the points \(x_{i1}\), \(x_{i2}\) and \(x_{i+1}\).
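The node layout described above can be sketched as follows, assuming the uniform grid \(x_i = ih\) with \(h = X/n\). The function name `block_nodes` is illustrative, and the loop runs over \(i = 0,\dots ,n-1\) so that the last block ends at X.

```python
def block_nodes(X, n):
    """Return one tuple (x_i, x_i1, x_i2, x_ip1) per block of [0, X].

    Assumes a uniform grid with step h = X / n; each block [x_i, x_i + h]
    is split into three sub-intervals of length h / 3.
    """
    h = X / n
    blocks = []
    for i in range(n):
        x_i = i * h
        blocks.append((x_i, x_i + h / 3, x_i + 2 * h / 3, x_i + h))
    return blocks


# Example: four blocks on [0, 1]; each block contributes three unknowns,
# located at the last three entries of its tuple.
for block in block_nodes(1.0, 4):
    print(block)
```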

So, for \(x=x_{i1}\), we have

and for \(x=x_{i2}\), we obtain

finally, for \(x=x_{i+1}\), we get

First of all, we recall the Pouzet-type numerical integration scheme given by (9.53)-(9.55) page 154 in Linz (1987)

where

Now, we replace the integral in (5) by the integration scheme (6), to obtain for k=3

for k=2

and for k=1

From the quadrature interpolation, we obtain

Finally, in each subdivision of the interval \([x_i,x_{i+1}]\), we get the following non-linear system of dimension 3:

where \(u_{i1}\), \(u_{i2}\) and \(u_{i+1}\) are the approximation of \(u(x_{i1}), u(x_{i2})\) and \(u(x_{i+1})\), respectively. We write the last system in another form

Before starting the convergence study of this new method, we need to verify that the approximate system (13) has a unique solution. Therefore, we introduce the following theorem.

Theorem 2

For \(h < \dfrac{3\theta }{11 L}\) small enough and under the hypothesis (H), the system (13) has a unique solution in \([x_i, x_{i+1}]\) for \( 0 \le i \le n\).

Proof

We fix i and let \(U_i=(u_{i1}, u_{i2},u_{i+1})\) be a vector of \({\mathbb {R}}^3\), where \({\mathbb {R}}^3\) is equipped with the following norm

We also introduce the vector \(S(i)=(S_{i1}, S_{i2}, S_{i+1})\). We define two non-linear functionals \(\psi \) and \(\rho \) by

So, the system (13) is equivalent to

For any i fixed and for each subdivision \([x_i, x_{i+1}]\) we define the sequences \(\{U_i^p\}_{ p \in {\mathbb {N}}}\) by

Also, we define the sequences \(\{\sigma _{i} ^{p}\}_{p \in {\mathbb {N}}}\) by

It is clear that \(\underset{q=0}{\overset{p}{\sum } }\sigma _{i}^{q}=U_{i}^{p}\). Then, we prove that \(U_{i}^{p}\) converges to \(U_{i}\).

For all \(p \ge 1\), we have

According to the hypothesis (H), (5) and (7), we get the following

So, we obtain

Consequently,

which gives

By recurrence, we obtain

Assuming that h is small enough such that \(\dfrac{11\,L h}{3 \theta } <1\), the series \( \underset{q\ge 1}{\sum }\ \bigg (\dfrac{11\,L h}{3 \theta } \bigg )^{q}\) is convergent. Therefore, \( \sum \nolimits _{q=0}^{p} \sigma _i^{q}\) is convergent. So, \(\underset{ p \rightarrow +\infty }{\lim } U_i^{p}=U_i\).

It remains to check that this limit satisfies our system.

From the system (16), we have

Since \(K\) and \( \dfrac{\partial K}{\partial x} \) are continuous, \(\rho \) and \(\phi \) are continuous functionals,

which gives that

Now, let us prove that the system has a unique solution.

Let \(\{U_i\}_{0\le i \le n}\) and \(\{V_i\}_{0\le i \le n}\) be two solutions of the system (16). For i fixed and according to the hypothesis (H), we get

After calculations and simplifications, we obtain

Since \(\dfrac{11 L h}{3 \theta } < 1\), we get the result. \(\square \)

The system (16) is a non-linear system of size 3 in each subdivision \([x_i, x_{i+1}]\). To solve it, we apply Newton’s method, whose convergence is shown in the book of Argyros (2004).
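As an illustration of this step, here is a minimal sketch of Newton’s method for a small non-linear system \(F(U)=0\). It is not the authors’ MATLAB implementation: the Jacobian is approximated by forward differences, the linear solve is a plain Gaussian elimination, and the 3×3 system `toy_system` is a hypothetical stand-in for the block system.

```python
import math


def solve_linear(A, b):
    """Gaussian elimination with partial pivoting for a small dense system."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-14:
            raise ValueError("singular Jacobian")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def numerical_jacobian(F, U, eps=1e-7):
    """Forward-difference approximation of the Jacobian of F at U."""
    F0 = F(U)
    n = len(U)
    J = [[0.0] * n for _ in range(n)]
    for j in range(n):
        Up = list(U)
        Up[j] += eps
        Fj = F(Up)
        for i in range(n):
            J[i][j] = (Fj[i] - F0[i]) / eps
    return J


def newton(F, U0, tol=1e-12, max_iter=50):
    """Newton iteration U <- U - J(U)^{-1} F(U), stopped on the residual."""
    U = list(U0)
    for _ in range(max_iter):
        FU = F(U)
        if max(abs(v) for v in FU) < tol:
            return U
        delta = solve_linear(numerical_jacobian(F, U), [-v for v in FU])
        U = [u + d for u, d in zip(U, delta)]
    raise RuntimeError("Newton iteration did not converge")


def toy_system(U):
    # Hypothetical 3x3 system standing in for the unknowns (u_i1, u_i2, u_{i+1}).
    u1, u2, u3 = U
    return [u1 + 0.1 * u2**2 - 1.0,
            u2 + 0.1 * math.sin(u3) - 0.5,
            u3 + 0.1 * u1 * u2 - 0.2]


solution = newton(toy_system, [0.0, 0.0, 0.0])
print(solution, toy_system(solution))
```

In a block-by-block solver, `toy_system` would be replaced by the residual of the three discretized equations of the current block, with the values from the previous blocks held fixed.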

3.1 Convergence of method

In this section, we study the convergence of the approximate solution obtained from the block-by-block method. To this end, we define the modulus of continuity \(\omega _0(h,.)\) as

and the local consistency error in each \([x_i,x_{i+1}]\) by

Our numerical method is consistent if

Let us define the following errors for \(0 \le i \le n\),

and

Theorem 3

Let \( \theta > \dfrac{11 L h}{3}\) and \( {{ {\tilde{L}}}}=\min \bigg ( \dfrac{1}{h(3+4c_1)},\dfrac{1}{h(1+4c_2)}\bigg )\). Then,

Therefore,

Proof

For n large enough and \( 0 \le i \le n\), we have

The hypothesis (H) implies that

From the second equation of the system (13)

From hypothesis ( H), we get

Using the fact \(\dfrac{11 L h}{3} < \theta \), which implies that \( \dfrac{5\,L h}{9} < \theta \), we obtain

Substituting (29) in (27), we get

where \(c_2=\dfrac{5}{4}+\dfrac{36 L h}{91 \theta -45L h }\), \(c_1=\dfrac{23}{8}+\dfrac{56 L h}{18 \theta -10 L h}\) and \(c_3=1+\dfrac{9 L h}{9 \theta -5L h }\).

From the third equation of the system (13)

then,

So, we get

We have \( {{ {\tilde{L}}}}=\min \bigg ( \dfrac{1}{h(3+4c_1)},\dfrac{1}{h(1+4c_2)}\bigg )\). We get \({{ {\tilde{L}}}} \le \dfrac{1}{h(3+4c_1)} \) which gives \(1-{{ {\tilde{L}}}}h (\dfrac{3}{4}+c_1) \ge \dfrac{3}{4}\). Also, \({{ {\tilde{L}}}} \le \dfrac{1}{h(1+4c_2)} \) which gives \(1-{{ {\tilde{L}}}}h (c_2+\dfrac{1}{4}) \ge \dfrac{1}{4}\). So, we get

Applying Gronwall’s lemma (Linz 1987), we get

and we have for \(0 \le i \le n\),

So,

As a result,

Substituting (37) and (38) in (29), we get

\(\square \)

Now, we give the theorem which establishes the order of convergence of our numerical technique.

Theorem 4

1. If \( \dfrac{\partial K}{\partial x} \in C^0([0,X]^2 \times {\mathbb {R}}, {\mathbb {R}})\) and \(u \in C^1[0,X]\), we have

$$\begin{aligned} {\bar{\varepsilon }}_i \le \dfrac{h}{4} \; \rho \bigg (1+\dfrac{c_3+1}{\theta }\bigg )^{i}, \quad 0 \le i \le n. \end{aligned}$$

2. If \( \dfrac{\partial K}{\partial x} \in C^2([0,X]^2 \times {\mathbb {R}}, {\mathbb {R}})\) and \(u \in C^3[0,X]\), we obtain

$$\begin{aligned} {\bar{\varepsilon }}_i \le \dfrac{h^3}{3}\; {\bar{\rho }}\bigg (1+\dfrac{c_3+1}{\theta }\bigg )^{i}, \quad 0 \le i \le n, \end{aligned}$$

where \(\rho \) and \({\bar{\rho }}\) are positive constants.

Proof

For \(n\ge 1\), we define \(\pi _{n,1}\) and \(\pi _{n,2}\), two piecewise interpolations of orders 1 and 2, respectively. So, for all \(x \in [ x_i+\lambda _k \dfrac{h}{3}, x_i+\lambda _k {h}]\)

and for all \(x \in [ x_i, x_{i+1}]\)

Therefore, the consistency errors (24)-(26) are equivalent to

Then,

1. If \( \dfrac{\partial K}{\partial x} \in C^0([0,X]^2 \times {\mathbb {R}}, {\mathbb {R}})\) and \(u \in C^1[0,X]\), the consistency error (39) has the following upper bound

$$\begin{aligned} \mid \delta _i(h,x_i+\lambda _k h)\mid \le \dfrac{h}{4} {\bar{\upsilon }}_k, \end{aligned}$$ (40)

where

$$\begin{aligned} {\bar{\upsilon }}_k=\lambda _k \; \omega _0\bigg ( \dfrac{\partial K}{\partial x}(x_i+\lambda _k h,.,u)\bigg )+ L \omega _0(u,h).\end{aligned}$$

So, using (40), the estimation (36) is given as

$$\begin{aligned} {\bar{\varepsilon }}_i \le \bigg (1+\dfrac{c_3+1}{\theta }\bigg )^{i-1} \bigg [\dfrac{h}{4}\varrho +\dfrac{c_3+1}{\theta }\bar{\varepsilon _0}\bigg ], \end{aligned}$$

where \(\varrho =\dfrac{1}{\theta }{\bar{\upsilon }}_1 +\dfrac{9\,L h}{ 9 \theta ^2 -5\,L h }{\bar{\upsilon }}_2+ {\bar{\upsilon }}_3.\) Furthermore, by applying (40) and the definitions of the consistency errors (24)-(26),

$$\begin{aligned} \bar{\varepsilon _0} \le \dfrac{h}{4}\rho . \end{aligned}$$

We obtain

$$\begin{aligned} {\bar{\varepsilon }}_i \le \dfrac{h}{4}\; \rho \bigg (1+\dfrac{c_3+1}{\theta }\bigg )^{i}. \end{aligned}$$

2. If \( \dfrac{\partial K}{\partial x} \in C^2([0,X]^2 \times {\mathbb {R}}, {\mathbb {R}})\) and \(u \in C^3[0,X]\), then by the interpolation error theorem (see Endre and David 2003, page 287) the consistency error (39) has the following upper bound

$$\begin{aligned} \underset{ 1 \le k \le 3}{\max }\ \mid \delta _i(h,x_i+\lambda _k h)\mid&\le \Theta \lambda _k^3 \;\dfrac{h^3}{3} + \dfrac{L h}{4 } \left| u\left( x_i+\lambda _k \dfrac{h}{3}\right) -\pi _{n,2}\left( u\left( x_i+\lambda _k \dfrac{h}{3}\right) \right) \right| \nonumber \\&\le \dfrac{h^3}{3} {\upsilon }_k, \end{aligned}$$ (41)

where

$$\begin{aligned} \Theta =\underset{ 0 \le x,t \le X}{\max }\ \left| \dfrac{\partial ^3 K}{\partial t^2 \partial x}(x,t,u(t))\right| , \end{aligned}$$

and

$$\begin{aligned} {\upsilon }_k=\Theta \lambda _k^3 + \dfrac{L \lambda _k (1-\lambda _k)(2-\lambda _k) h}{12}\left| u^{(3)} \left( x_i+\lambda _k \dfrac{h}{3}\right) \right| . \end{aligned}$$

Then, (36) has the following estimation

$$\begin{aligned} {\bar{\varepsilon }}_i \le \bigg (1+\dfrac{c_3+1}{\theta }\bigg )^{i-1} \bigg [\dfrac{h^3}{3}{\bar{\varrho }}+\dfrac{c_3+1}{\theta }\bar{\varepsilon _0}\bigg ], \end{aligned}$$

where \({\bar{\varrho }}=\dfrac{1}{\theta }{\upsilon }_1 +\dfrac{9\,L h}{ 9 \theta ^2 -5\,L h }{\upsilon }_2+{\upsilon }_3.\) Also, we have

$$\begin{aligned} \bar{\varepsilon _0} \le \dfrac{h^3}{3} {\bar{\rho }}. \end{aligned}$$

In addition,

$$\begin{aligned} {\bar{\varepsilon }}_i \le \dfrac{h^3}{3}{\bar{\rho }}\bigg (1+\dfrac{c_3+1}{\theta }\bigg )^{i}. \end{aligned}$$

\(\square \)
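The orders h and \(h^3\) in Theorem 4 can be compared with measured errors through the standard observed-order formula \(p \approx \log (e_1/e_2)/\log (h_1/h_2)\). The sketch below only illustrates that formula; the function name `observed_order` and the synthetic error model \(e = 2h^3\) are assumptions and are not results from the paper.

```python
import math


def observed_order(h_coarse, err_coarse, h_fine, err_fine):
    """Estimate p such that err is roughly C * h**p, from two (h, err) pairs."""
    return math.log(err_coarse / err_fine) / math.log(h_coarse / h_fine)


# Synthetic errors following err = 2 * h**3 (illustrative, not measured data).
h1, h2 = 0.1, 0.05
p = observed_order(h1, 2 * h1**3, h2, 2 * h2**3)
print(p)  # close to 3 for this synthetic model
```

With measured maximum errors for two values of n, the same function gives an empirical check of whether the observed rate is close to the predicted one.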

4 Numerical examples

We give two numerical examples to illustrate the efficiency and accuracy of the method presented. In the following examples, we calculate \(u_i\) according to the scheme (16). First, we define the discrete error as

where \(u_i^{\nu }\) is the solution of the system (16) obtained by Newton’s method. In both examples, we choose the initial point of Newton’s method as \(u_0^{0}=f(0)\).

Consider the following equation

where the exact solution is \(u(x)=\log (x+1)\).

In addition, we have the lower-Lipschitz coefficient \(\theta =\dfrac{1}{11+\log (2)}\) and the Lipschitz coefficient \(L=\dfrac{1}{9}\). So, the convergence condition \(\theta > \dfrac{11\,L h}{3}\) is satisfied for \(h < \dfrac{3\theta }{11 L}\).

Consider another equation

with the exact solution \(u(x)=\sin (x)\). In addition, we have the lower-Lipschitz coefficient \(\theta =\dfrac{1}{3}\) and the Lipschitz coefficient \(L=1+9e^1+1\). So, the convergence condition \(\theta > \dfrac{11L h}{3}\) is satisfied for \(h < \dfrac{3\theta }{11 L}\).
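The step-size restriction \(h < \dfrac{3\theta }{11L}\) can be evaluated directly from the coefficients quoted for the two examples. In the sketch below, the values of \(\theta \) and L are those stated above, while the interval length `X = 1.0` and the helper names `max_step` and `min_subintervals` are assumptions made only for illustration.

```python
import math


def max_step(theta, L):
    """Upper bound 3*theta / (11*L) on h from the condition theta > 11*L*h/3."""
    return 3.0 * theta / (11.0 * L)


def min_subintervals(X, theta, L):
    """Smallest n such that h = X / n is strictly below the bound."""
    return math.floor(X / max_step(theta, L)) + 1


# Coefficients quoted in the text for the two examples.
theta_1, L_1 = 1.0 / (11.0 + math.log(2.0)), 1.0 / 9.0
theta_2, L_2 = 1.0 / 3.0, 1.0 + 9.0 * math.e + 1.0

X = 1.0  # assumed interval length, not stated in this section
for name, theta, L in (("example 1", theta_1, L_1), ("example 2", theta_2, L_2)):
    print(name, max_step(theta, L), min_subintervals(X, theta, L))
```

Under these assumptions the bound is roughly 0.21 for the first example and roughly 0.0034 for the second, so the second condition calls for a much finer grid than the first.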

The tables report the error between the numerical and exact solutions at all points \(x_i\). The errors were computed with MATLAB, applying the method proposed in this article and comparing the results with those of the Nyström method.

In each row of the table, we choose n, the number of subdivisions of the interval [0, X], and in each column a number of Newton iterations \({\nu }\). As n and \({\nu }\) increase, the error of the block-by-block method approaches zero faster than that of the Nyström method, which supports the efficiency of the method proposed in this paper.

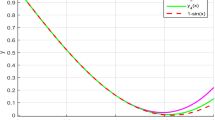

To further clarify the difference between the errors of the two methods, we have plotted the error curves, where the first plot refers to equation (42) and the second to equation (43). The two plots show a large difference between the two methods: the error of the block-by-block method is practically zero.

Block-by-block and Nyström error of Eq. (42) with \(n=10\)

Block-by-block and Nyström error of Eq. (43) with \(n=10\)

In both graphs, the x-axis shows the discretization nodes. The error curve of the block-by-block method is drawn in blue and lies almost exactly on the x-axis, indicating that the error of our method is very close to zero compared with the Nyström error.

5 Conclusion

In this paper, we have focused on finding a numerical solution to the non-linear Volterra integral equation of the first kind. We did not focus on the analytical study because it has already been carried out in the book of Linz (1987).

First, we imposed a condition on the kernel so that the first-kind equation can be converted to a second-kind equation, because dealing directly with the first-kind equation leads to the following problem: a small change in the input causes a huge change in the results, which is what we call an ill-posed problem. We also stated assumptions that keep the numerical and analytical studies consistent.

As for the numerical solution, we apply the block-by-block method, in which discretization precedes linearization, the reverse of the order usually followed for this type of equation. One of the advantages of this method is that it is not necessary to know the value of the solution at the initial point, i.e., we choose the initial point only in iteration \(i=0\) by \(u_0^{0}=f(0)\). Then, in every iteration i we put \(u_{i0}^{\nu +1}=u_{(i-1)0}^{\nu }\), \(u_{i1}^{\nu +1}=u_{(i-1)1}^{\nu }\) and \(u_{i}^{\nu +1}=u_{(i-1)}^{\nu }\). Moreover, the method transforms the equation on each division \([x_i,x_{i+1}]\) into a non-linear system of dimension three, and thus finds the solution at three different points \(x_{i1}\), \(x_{i2}\) and \(x_{i+1}\) in each division \([x_i,x_{i+1}]\). To solve this system, we apply Newton’s method (Wazwaz 2011). In the literature on the numerical treatment of non-linear Volterra equations there are two essential steps: linearization and discretization. A numerical treatment that starts with the discretization of the equation leads to a non-linear algebraic system. This system is solved by Newton’s method, and the best choice of initial point is \(u_0=f(0)\) (see Ghiat et al. 2021; Ghiat and Guebbai 2018; Ghiat et al. 2020; Touati et al. 2019; Deepa et al. 2022; Linz 1987).

We have presented theorems that establish the convergence of the numerical solution to the exact solution. In the numerical examples, this method performed better than the established quadrature method.

Data availability statement

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

Argyros IK (2004) Newton methods. Nova Science Publishers Inc, New York

Brauer F (1976) Constant rate harvesting of populations governed by Volterra Integral Equations. J Math Anal Appl 56:27

Brunner H (1997) 1896–1996: one hundred years of Volterra integral equations of the first kind. Appl Numer Math 24:83–93

De Bonis MC, Laurita C, Sagaria V (2022) A numerical method for linear Volterra integral equations on infinite intervals and its application to the resolution of metastatic tumor growth models. Appl Numer Math 172:475–496. https://doi.org/10.1016/j.apnum.2021.10.015

Deepa A, Kumarb A, Abbasc S, Rabbanid M (2022) Solvability and numerical method for non-linear Volterra integral equations by using Petryshyn’s fixed point theorem. Int J Nonlinear Anal Appl 13(1):1–28. https://doi.org/10.22075/ijnaa.2021.22858.2422

Dehbozorgi R, Maleknejad K (2021) Direct operational vector scheme for first-kind nonlinear volterra integral equations and its convergence analysis. Mediterr J Math 18–31. https://doi.org/10.1007/s00009-020-01686-1

Endre S, David FM (2003) An introduction to numerical analysis. Cambridge University Press, Cambridge

Fawze A, Juma’a B, Al Hayani W (2021) Homotopy perturbation technique to solve nonlinear systems of Volterra integral equations of 1st kind. J Artif Intell Soft Comput Res 2(1):19–26

Fodor Z, Katz SD, Kovacs TG (2020) Why integral equations should be used instead of differential equations to describe the dynamics of epidemics, arXiv preprint

Gao W, Veeresha P, Cattani C, Baishya C, Baskonus HM (2022) Modified predictor-corrector method for the numerical solution of a fractional-order SIR model with 2019-nCoV. Fractal Fract 6:92. https://doi.org/10.3390/fractalfract6020092

Ghiat M, Guebbai H (2018) Analytical and numerical study for an integro-differential nonlinear volterra equation with weakly singular kernel. Comput Appl Math 37(14):4661–4974. https://doi.org/10.1016/j.amc.2013.12.046

Ghiat M, Guebbai H, Kurulay M, Segni S (2020) On the weakly singular integro-differential nonlinear Volterra equation depending in acceleration term. Comput Appl Math 39(2):206. https://doi.org/10.1007/s40314-020-01235-2

Ghiat M, Bounaya MC, Lemita S, Aissaoui MZ (2021) On a nonlinear integro-differential equation of Fredholm type. Int J Math Comput Sci 13(12):197. https://doi.org/10.1504/IJCSM.2021.10036905

Giorno V, Nobile AG (2022) A numerical method for linear Volterra integral equations on infinite intervals and its application to the resolution of metastatic tumor growth models. Appl Math Comput 422:126993. https://doi.org/10.1016/j.amc.2022.126993

Halpea IS, Parajdi GL, Precup R (2021) On the controllability of a system modeling cell dynamics related to Leukemia. Symmetry 13:1867. https://doi.org/10.3390/sym13101867

Hritonenko N, Yatsenko Yu (1996) Modeling and optimization of the lifetime of technologies. Kluwer Academic Publishers, Dordrecht

Jaëck F (2018) Calcul différentiel et intégral adapté aux substitutions par Volterra. Hist Math

Jafarian A, Rezaei R, Golmankhaneh A (2022) On solving fractional higher-order equations via artificial neural networks. Iran J Sci Technol Trans A Sci. https://doi.org/10.1007/s40995-021-01254-6

Kantorovich L, Gorkov L (1959) Investment and technical progress. In: K.J. Arrow, S. Karlin, P. Suppes (Eds.), Mathematical Methods in the Social Sciences. Dokl Akad Nauk SSSR 129:73

Karamov DN, Sidorov DN, Muftahov IR, Zhukov AV, Liu F (2021) Optimization of isolated power systems with renewables and storage batteries based on nonlinear Volterra models for the specially protected natural area of lake Baikal. J Phys Conf Ser 012037

Kasumo K, Moyo E (2020) Approximate solutions of nonlinear Volterra integral equations of the first kind. Appl Math Sci 14(18):867–880. https://doi.org/10.12988/ams.2020.914288

Levinson N (1960) A Nonlinear Volterra Equation Arising in the Theory of Super fluidity. J Math Anal Appl 129:1–11

Linz P (1987) Analytical and Numerical Methods for Volterra Equations. Society for Industrial Mathematics

Markova E, Sidler I, Solodusha S (2021) Integral models based on Volterra equations with prehistory and their applications in energy. Mathematics 9:1127. https://doi.org/10.3390/math9101127

Micula S (2020) A numerical method for weakly singular nonlinear Volterra integral equations of the second kind. Symmetry 12(11):1862. https://doi.org/10.3390/sym12111862

Micula S (2015) A fast converging iterative method for Volterra integral equations of the second kind with delayed arguments. Fixed Point Theory 16(2):371–380

Micula S, Cattani C (2018) On a numerical method based on wavelets for Fredholm-Hammerstein integral equations of the second kind. Math Methods Appl Sci 141(18):9103–9115. https://doi.org/10.1002/mma.495219

Muftahov I, Tynda T, Sidorov D (2017) Numeric solution of Volterra integral equations of the first kind with discontinuous kernels. J Comput Appl Math 313:119–128

Nedaiasl K, Dehbozorghi R, Maleknejad K (2019) Hp-version collocation method for a class of nonlinear Volterra integral equations of the first kind. Appl Numer Math 150:452–477. https://doi.org/10.1016/j.apnum.2019.10.006

Noeiaghdam S, Micula S, Nieto JJ (2021) A novel technique to control the accuracy of a nonlinear fractional order model of COVID-19: application of the CESTAC method and the CADNA library. Mathematics 9:1321. https://doi.org/10.3390/math9121321

Solodusha S, Bulatov M (2021) Integral equations related to Volterra series and inverse problems: elements of theory and applications in Heat power engineering. Mathematics 9:1905. https://doi.org/10.3390/math916190

Solow RM (1969) On some functional equations arising in analysis of single-commodity economic model. Stanford University Press, 89–104

Touati S, Lemita S, Ghiat M, Aissaoui MZ (2019) Solving a non-linear Volterra-Fredholm integro-differentail equation with weakly singular kernels. Fasciculi Math 62:155–168

Tricomi FG (1985) Integral equations. Dover Publications, New York

Volterra V (2005) Theory of functionals and of integral and integro-differential equations. Dover Publications, Mineola, New York

Wazwaz AM (2011) Linear and nonlinear integral equations: methods and applications. Springer

Acknowledgements

We are very grateful to the reviewers for their valuable time and proposed corrections to improve our paper.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Ghiat, M., Tair, B., Ghuebbai, H. et al. Block-by-block method for solving non-linear Volterra integral equation of the first kind. Comp. Appl. Math. 42, 67 (2023). https://doi.org/10.1007/s40314-023-02212-1

DOI: https://doi.org/10.1007/s40314-023-02212-1