Abstract

Introduction

Cost-effectiveness analyses typically ignore healthcare system resource constraints. Ophthalmology is affected by resource constraints because of increasing disease prevalence and the use of resource-intensive treatments. This study evaluated the impact of resource constraints on the cost-effectiveness of faricimab 6 mg, compared with aflibercept 2 mg and ranibizumab biosimilar 0.5 mg, for treating wet age-related macular degeneration (wAMD) or diabetic macular oedema (DMO) over a 5-year horizon.

Methods

A microsimulation model estimated the impact of resource constraints on patient visits, delays, costs and quality-adjusted life-year (QALY) losses due to treatment delays at a typical UK National Health Service eye hospital treating 1500 patients with wAMD and 500 patients with DMO. Patient characteristics, treatment regimens and treatment intervals were informed using published literature and expert opinion. Resource constraint was represented by limiting the number of available intravitreal injection appointments per week, with growing demand caused by rising disease prevalence. The model compared outcomes across three scenarios; each scenario involved treating all patients with one of the three treatments.

Results

Over 5 years, in a resource-constrained hospital, compared with aflibercept, faricimab use resulted in the avoidance of 12,596 delays, saved £15,108,609 (GBP) in costs and avoided the loss of 60.06 QALYs. Compared with ranibizumab biosimilar, faricimab use resulted in the avoidance of 18,910 delays, incurred £2,069,088 in extra costs and avoided the loss of 105.70 QALYs, resulting in an incremental cost-effectiveness ratio of £19,574/QALY.

Conclusions

Accounting for resource constraints in health economic evaluation is crucial. Emerging therapies that are more durable and require less frequent clinic visits can reduce treatment delays, leading to improved QALY outcomes and reduced burden on healthcare systems. Faricimab reduced the number of delayed injections, leading to improved QALY outcomes for patients in a healthcare system with resource constraints. Faricimab is cost-saving when compared with aflibercept and cost-effective when compared with ranibizumab biosimilar.

Graphical Abstract


Why carry out this study?

In ophthalmology, it may be particularly important to consider the impact of resource constraints on patients and health systems when assessing the value of new treatments.

This case study presents a patient-level simulation model that estimates the number of patient visits, delays, treatment costs and lost quality-adjusted life years (QALYs) associated with delayed treatments in patients with wet age-related macular degeneration (wAMD) or diabetic macular oedema (DMO).

What was learned from this study?

Results from this case study showed that using faricimab reduced the number of delays compared with using aflibercept or ranibizumab biosimilar, leading to improved QALY outcomes for patients with wAMD or DMO in a healthcare system with resource constraints.

Emerging therapies that are more durable and require less frequent clinic visits, such as faricimab, can reduce treatment delays, leading to net cost savings from the healthcare payer perspective and improved QALY outcomes for patients with wAMD or DMO.

Digital Features

This article is published with digital features, including a graphical abstract, to facilitate understanding of the article. To view digital features for this article, go to https://doi.org/10.6084/m9.figshare.26218133.

Introduction

In many healthcare systems, cost-effectiveness and budget impact analyses of a new treatment compared with the existing treatment options (standard of care) are the cornerstones of the health technology assessment (HTA) decision-making process. Decisions on whether new treatments are reimbursed by payers are typically based on affordability and budgetary considerations. Although these analyses address comparative efficiency, the impact of resource constraints in a healthcare system on the implementation of new technologies is often not part of the decision-making process and has been largely ignored [1]. Healthcare resources, such as beds, doctors and nurses, are constrained, but most economic evaluations assume all resources required by a new technology and existing treatment options are immediately available following the adoption of the new technology, with the potential impact of resource constraints not routinely captured in economic evaluations. Failing to consider resource constraints may lead to low levels of uptake or impracticality of implementing a new technology. In addition, not considering resource constraints could lead to inefficient use of overall healthcare resources, resulting in long waiting lists and suboptimal population health outcomes.

While many disease areas can be affected by resource constraints, ophthalmology is one of the areas that is most affected because of an increasing prevalence of retinal conditions and increasing utilisation of resource-intensive treatments [2, 3]. Beyond the published waiting-list figures, there is a large hidden backlog of follow-up appointments, because the published figures only include patients waiting for a first appointment. Permanent harm, such as avoidable visual loss from delays to care, is ninefold more likely to happen in follow-up patients than in new patients [4]. It is estimated that every month approximately 22 people in the UK suffer severe or permanent sight loss due to delays caused by the capacity challenges the UK National Health Service (NHS) currently faces [5]. NHS England revealed that there were 551 reports of sight loss due to delayed appointments between 2019 and 2023 in the National Reporting and Learning System [6].

Wet age-related macular degeneration (wAMD) and diabetic macular oedema (DMO) are both chronic and debilitating conditions with a substantial impact on the quality of life. The prevalence of late age-related macular degeneration (AMD) in the UK is 2.4% among people aged ≥ 50 years and 4.8% among people aged ≥ 65 years [7]. Over time, about one in 15 people with diabetes may develop DMO [8]. With an ageing population and increasing prevalence of diabetes, a key prognostic factor, the incidence of AMD and DMO is predicted to rise substantially [9, 10]. Intravitreal therapy, the first-line treatment option for AMD and DMO, requires anti-vascular endothelial growth factor (anti-VEGF) injections as frequently as every 4 weeks and regular eye examinations at a specialist eye clinic for many years [11, 12]. This imposes a considerable burden on specialist eye clinics, which are subject to considerable resource constraints (such as the limited number of injection appointments that are available for patients, especially in the post-COVID-19 era). Between 2009 and 2019, a single tertiary care hospital in the UK saw an 11-fold increase in the number of anti-VEGF injections administered, totalling 44,924 injections delivered in 2019. This number is predicted to reach 83,000 in the year 2029 [13]. The increasing demand for anti-VEGF injections tests NHS resources and contributes to delays in initiating and providing treatments, ultimately resulting in poor vision outcomes [14].

Currently, four agents are recommended by the UK National Institute for Health and Care Excellence (NICE) as treatment options for AMD and DMO: ranibizumab [15, 16], aflibercept [17, 18], brolucizumab [19, 20] and faricimab [12, 21]. Brolucizumab was excluded from the present case study because it may be used as a second-line therapy, whereas the other three agents are first-line therapies [20]. Unlike the other agents, faricimab is a combined-mechanism medication with effects on both VEGF-A and angiopoietin-2. Although the efficacy of anti-VEGF agents is well established, the majority of patients with AMD and DMO treated with ranibizumab and aflibercept need intravitreal injections every 4 to 8 weeks. Dosing intervals may be extended to 12 weeks for some patients being treated with ranibizumab and to 16 weeks for some patients being treated with aflibercept, but the proportion of these patients is relatively low. With faricimab, the dosing interval may be extended to 16 weeks in > 60% of patients.

Given that delays in treatment are known to lead to vision loss in AMD and DMO [22, 23], it is essential to ensure injections can be provided promptly, especially in the context of resource constraints. Early real-world evidence suggests a promising effect of faricimab on reducing resource use and relieving resource constraints [24, 25]. In the NICE productivity initiative, faricimab was recognised as a productivity opportunity because it is associated with a reduction in the number of both clinical appointments and outpatient injections [26]. Emerging sight-saving therapies such as faricimab, associated with more durable effects and reduced resource use (i.e. longer intervals between injections), can help to address the negative impact of resource constraints on vision outcomes due to potential treatment delays. However, traditional economic evaluation methods used in HTA, which ignore the impact of resource constraints, are unlikely to capture the true value of more durable therapies within the reality of the healthcare system.

New approaches to modelling treatments which alleviate the strain on resource-constrained systems may be required. This study aims to quantify the extent of resource constraints and their impact on the cost-effectiveness of treatments for wAMD and DMO as a case study, using an individual-level simulation model.

Methods

An individual-level simulation model was developed in Microsoft Excel (Microsoft Corp., Redmond, WA, USA) to estimate the impact of limited resources in the ophthalmology service at a hypothetical NHS eye hospital. Patients and their required visits to the hospital were simulated based on pre-specified model inputs, including patient characteristics, treatment intervals, hospital capacity and maximum allowed delay time. The details of these inputs are described in the following sections. The model used a weekly time cycle during which all patient-hospital interactions were simulated (see section Booking Schedule for details). The model measured the number of patient visits, the number and duration of delays, the cost of treatment for wAMD and DMO and the loss of quality-adjusted life years (QALYs) due to delayed treatments. The model simulated and compared the outcomes across three hypothetical scenarios; in each scenario, all patients are treated with one of the three treatments, namely faricimab, aflibercept or ranibizumab biosimilar. To the best of our knowledge, this is the first cost-effectiveness study of pharmacological treatments for wAMD and DMO that has considered both demand and supply constraints.

Patient Characteristics

The model simulated a cohort of 1500 patients with wAMD and 500 patients with DMO, representing the number of patients at a typical NHS eye hospital, over a 5-year time horizon. New patients were added to the model based on a prespecified annual growth rate of 2.7% for wAMD and 2.6% for DMO [27, 28]. Based on a study conducted by Giocanti-Auregan and colleagues, 36% of patients with wAMD and 48% of patients with DMO would have bilateral disease [29].

Treatment Intervals

Treatment intervals were informed using data from randomised controlled trials and long-term studies of faricimab, aflibercept and ranibizumab [30–36], other literature [17, 18] and expert opinion obtained from a series of extensive discussions with an NHS ophthalmologist, Dr. Robin Hamilton, who is a consultant at Moorfields Eye Hospital, London (UK).

All patients were assumed to be on a treat-and-extend regimen for all treatments. Treatment intervals for faricimab were based on the TENAYA and LUCERNE trials for wAMD [31] and the YOSEMITE and RHINE trials for DMO [36]. For DMO, treatment frequency for aflibercept and ranibizumab biosimilar was assumed to be the same as that for ranibizumab, informed by the TREX-DME study [34]. This was because the proportion of patients on a 12-week interval in the ranibizumab TREX-DME study (43.0%) was similar to that in the aflibercept VIOLET study (40.8%) [30]. This assumption was validated by a clinical expert. In the case of wAMD, the intervals for aflibercept and ranibizumab were informed using the ARIES trial [35] and the trial by Kertes et al. [33], respectively.

Table 1 presents the treatment regimen and interval input data used in the model. With faricimab, four doses at a 4-week interval were given to patients during the loading period for both wAMD and DMO; with aflibercept and ranibizumab biosimilar, three doses or five doses were given to patients with wAMD or DMO, respectively, at a 4-week interval. The model further assumed that, following the loading phase, 22% of patients treated for wAMD with faricimab would reach an 8-week injection interval, 15% would reach a 12-week interval and 63% would reach a 16-week interval; meanwhile, 22% of patients treated for DMO with faricimab would reach an 8-week interval, 16% would reach a 12-week interval and 62% would reach a 16-week interval. With aflibercept, 57% of patients treated for wAMD would be on an 8-week treatment interval, 17% would be on a 12-week interval and 26% would be on a 16-week interval; and 28% of patients treated for DMO would be on a 4-week treatment interval, 29% would be on an 8-week interval and 43% would be on a 12-week interval. For ranibizumab biosimilar, 26% of patients with wAMD would be on a 4-week interval, 31% would be on an 8-week interval and 43% would be on a 12-week interval.
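To illustrate how the interval mix above translates into injection demand, the following minimal sketch computes a share-weighted number of injections per treated eye per year in the maintenance phase. It is not the authors' Excel model: the function name, the 52-week year and the steady-state simplification (no loading doses, no delays, no bilateral disease) are assumptions made for illustration only.

```python
# Maintenance-phase interval mix (interval in weeks -> share of patients),
# taken from the proportions stated in the text.
INTERVAL_MIX = {
    ("faricimab", "wAMD"): {8: 0.22, 12: 0.15, 16: 0.63},
    ("faricimab", "DMO"): {8: 0.22, 12: 0.16, 16: 0.62},
    ("aflibercept", "wAMD"): {8: 0.57, 12: 0.17, 16: 0.26},
    ("aflibercept", "DMO"): {4: 0.28, 8: 0.29, 12: 0.43},
    ("ranibizumab biosimilar", "wAMD"): {4: 0.26, 8: 0.31, 12: 0.43},
}

WEEKS_PER_YEAR = 52  # assumption: a 52-week year


def expected_injections_per_year(mix):
    """Share-weighted injections per eye per year (illustrative steady state)."""
    return sum(share * WEEKS_PER_YEAR / weeks for weeks, share in mix.items())


for (drug, indication), mix in INTERVAL_MIX.items():
    # Each mix should account for all patients.
    assert abs(sum(mix.values()) - 1.0) < 1e-9
    rate = expected_injections_per_year(mix)
    print(f"{drug:<24}{indication:<6}{rate:.2f}")
```

Under these simplifications the ordering in wAMD (faricimab lowest, ranibizumab biosimilar highest) mirrors the direction of the injection counts reported in the Results, although the absolute values are not comparable with the simulated totals.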

It is worth noting that, for faricimab in DMO, the proportions of patients requiring treatment every 4 weeks and every 8 weeks were combined, and all were assumed to be on an 8-week treatment interval in order to minimise model complexity. The same assumption applied for aflibercept in wAMD. An alternative assumption, where all patients on 4- and 8-week treatment intervals were assumed to be on a 4-week treatment interval, was tested in a scenario analysis. All patients were assumed to be receiving treatment throughout the entire model horizon of 5 years, meaning no patients would discontinue treatment. Treatment discontinuation was modelled as a scenario analysis.

Resource Constraint

An explicit resource constraint was represented by setting a limit on the number of intravitreal injection appointments per week, with growing demand from rising disease prevalence. Clinic capacity was calculated as the product of the number of injection sessions held each week and the number of patient visits that can be provided in each session. Based on expert opinion, 20 four-hour injection sessions were an appropriate estimate for weekly capacity. However, NHS staff typically work 44 weeks instead of 52 weeks a year. Therefore, 20 sessions were reduced to 17 to account for staff holidays. Within each 4-hour injection session, 16 injections can be delivered [37]. It was assumed that 77.6% of injection capacity was allocated to patients with wAMD and DMO at this hypothetical NHS eye hospital to allow for capacity use for other health conditions [13]. This capacity was used to determine when demand exceeded capacity, in which case the patient visit was either delayed or provided as an out-of-hours (OOH) service.
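The capacity arithmetic described above can be reproduced in a few lines. This is a sketch using only the figures stated in the text; the variable names are illustrative, and whether the model rounds the final weekly figure to a whole number of slots is not stated, so it is left unrounded here.

```python
# Inputs stated in the text.
NOMINAL_SESSIONS_PER_WEEK = 20   # four-hour injection sessions (expert opinion)
WORKING_WEEKS_PER_YEAR = 44      # typical NHS staff working weeks
WEEKS_PER_YEAR = 52
INJECTIONS_PER_SESSION = 16
SHARE_FOR_WAMD_AND_DMO = 0.776   # share of capacity offered to wAMD and DMO

# 20 sessions scaled by 44/52 gives about 16.9, which the text rounds to 17.
effective_sessions = round(
    NOMINAL_SESSIONS_PER_WEEK * WORKING_WEEKS_PER_YEAR / WEEKS_PER_YEAR
)

# Weekly routine injection slots available to wAMD and DMO patients.
weekly_slots = effective_sessions * INJECTIONS_PER_SESSION * SHARE_FOR_WAMD_AND_DMO

print(effective_sessions)        # 17
print(round(weekly_slots, 1))    # 211.1
```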

Out-of-Hours Appointment and Treatment Delays

Based on baseline patient characteristics and treatment intervals, modelled patients were placed on a waiting list for an available clinic appointment until the maximum allowed waiting time was reached, after which an OOH appointment was provided at the hospital. A delay occurred when the demand for clinic visits in a given week exceeded the available routine capacity. Because initial assessment and treatment visits are generally considered to be high priority and delays should ideally be avoided, it was assumed in the model base case that the maximum allowed delay was 2 weeks for first appointments for wAMD (as per NICE’s commissioning guidance [38]), 4 weeks for wAMD follow-up appointments and DMO first appointments and 6 weeks for DMO follow-up appointments. This assumption has been validated by a clinical expert. In addition, the impact of shorter allowable delays (i.e. 1 week for first appointments for wAMD, 3 weeks for wAMD follow-up appointments and DMO first appointments, 5 weeks for DMO follow-up appointments) has been tested using scenario analyses.

The cost of OOH appointments was assumed to be twofold higher than that of routine appointments. A scenario analysis was conducted assuming the cost of an OOH appointment was threefold higher than that of a routine appointment.

Booking Schedule

A key assumption of the model was that the clinic maintained a booking schedule containing the week of the next visit for every patient treated by the clinic. The model used the booking schedule to track and schedule each patient’s next clinic visit. During the initiation phase, every patient under the care of the clinic was assigned to a slot in the booking schedule based on their assigned treatment and regimen, as well as the clinic’s capacity. As the simulation progressed, the booking schedule determined the events that would occur during each week. After a patient completed a clinic visit, they were rebooked to a new slot in the schedule. The schedules diverged across the three treatment scenarios because patients were on different treatment intervals.

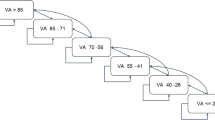

The approach to scheduling patient visits is illustrated in Fig. 1. When a patient was simulated and passed to the booking method of the clinic, the initial step involved determining the patient’s target booking week. This is the week in which the visit ought to take place based on the patient’s regimen. The next step was to check whether there was capacity in the target week to provide this patient visit; if so, the booking would be assigned a slot within routine hours. If the capacity limit was reached in that week, then the visit could either be conducted OOH or it could be delayed to a later week. If the limit on waiting times had not been reached, then the booking scheduler moved to the next week and repeated the above process. If the limit on waiting times had been reached, the booking was assigned an OOH visit. OOH slots were assumed to be unlimited.
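The booking logic described above can be sketched as a short routine. This is one reading of the text rather than the authors' Excel implementation: the class and method names are hypothetical, and the choice to place the OOH visit in the last week checked is an assumption.

```python
from collections import defaultdict


class Clinic:
    """Illustrative booking schedule with a weekly routine-capacity limit."""

    def __init__(self, weekly_capacity):
        self.weekly_capacity = weekly_capacity
        self.routine = defaultdict(int)  # week -> routine slots already booked
        self.ooh = defaultdict(int)      # week -> out-of-hours visits (unlimited)

    def book(self, target_week, max_delay_weeks):
        """Book one visit and return (week, kind, delay_in_weeks)."""
        # Try the target week first, then each later week up to the waiting limit.
        for delay in range(max_delay_weeks + 1):
            week = target_week + delay
            if self.routine[week] < self.weekly_capacity:
                self.routine[week] += 1
                return week, "routine", delay
        # Waiting limit reached with no routine slot: provide an OOH visit.
        week = target_week + max_delay_weeks
        self.ooh[week] += 1
        return week, "ooh", max_delay_weeks


# Toy example: capacity of 2 per week, five visits all due in week 10,
# maximum allowed delay of 1 week.
clinic = Clinic(weekly_capacity=2)
results = [clinic.book(target_week=10, max_delay_weeks=1) for _ in range(5)]
for r in results:
    print(r)
```

In this toy run the first two visits take routine slots in week 10, the next two are delayed by 1 week into week 11, and the fifth is provided out of hours once the waiting limit is reached.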

Resource Use and Costs

Ophthalmology clinic visits were categorised into types intended to broadly capture differences in the resources required. Resources associated with a baseline assessment (i.e. treatment initiation) included a first-time consultation, optical coherence tomography, fundus fluorescein angiography and drug acquisition and administration costs. All subsequent appointments were assumed to involve a follow-up consultation, optical coherence tomography and drug acquisition and administration costs. The costs of a baseline assessment and a follow-up appointment were £576.4 and £383.8 (GBP), respectively. The list prices for faricimab, aflibercept and ranibizumab biosimilar were £857.0, £816.0 and £523.5, respectively. Unit costs were sourced from the National Schedule of NHS Costs [39], and drug acquisition costs were based on prices listed in the British National Formulary [40]. No discounting was applied because of the short time horizon in the model. Costs associated with vision loss were not included in the case study.

Health-Related Quality of Life

The impact of treatment delays was measured in QALYs by combining visual acuity and associated utility, based on the assumption that treatment delays would lead to worsened visual acuity as well as delayed treatment benefit [41]. It was assumed that a treatment delay of 1 week would lead to a vision loss of 0.23 Early Treatment Diabetic Retinopathy Study (ETDRS) letters and to the loss of a further 0.12 ETDRS letters of vision gain that would have been achieved had the treatment been given in time, a combined change of 0.35 letters per week of delay. Based on the algorithm (model 1 + 2) published by Hodgson and colleagues [42], which used a regression model to relate visual acuity to utility, we conservatively assumed that a change of five ETDRS letters is associated with a utility change of 0.0160, the smallest change in utility value between any two visual acuity health states. The total utility decrement due to treatment delays was calculated as the product of the number of delays, the estimated average delay duration and the utility change associated with a 0.35 change in ETDRS letters.
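The utility calculation described above can be written out as a short worked example. The constants come from the text; the function name and the example inputs (1000 delays averaging 2 weeks) are hypothetical and are not results from the model. How the model converts this decrement into QALYs over time is not described here, so the sketch stops at the total utility decrement.

```python
# Constants stated in the text.
LETTERS_LOST_PER_WEEK = 0.23         # vision loss per week of delay (ETDRS letters)
LETTERS_GAIN_FORGONE_PER_WEEK = 0.12 # vision gain missed per week of delay
UTILITY_PER_FIVE_LETTERS = 0.0160    # utility change per five ETDRS letters

letters_per_week = LETTERS_LOST_PER_WEEK + LETTERS_GAIN_FORGONE_PER_WEEK  # 0.35
utility_per_week_of_delay = letters_per_week * UTILITY_PER_FIVE_LETTERS / 5


def total_utility_decrement(n_delays, mean_delay_weeks):
    """Number of delays x average delay duration x utility change per week."""
    return n_delays * mean_delay_weeks * utility_per_week_of_delay


print(round(utility_per_week_of_delay, 5))  # 0.00112

# Hypothetical inputs, for illustration only.
example = total_utility_decrement(n_delays=1000, mean_delay_weeks=2.0)
print(round(example, 2))  # 2.24
```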

Outcomes

Total number of clinic attendances, total number of injections given, total cost and total QALY loss due to treatment delays were estimated for scenarios in which all patients were treated with each treatment. Analysis results were reported using incremental costs, incremental cost-effectiveness ratio (ICER) and net monetary benefit (NMB) for one scenario with all patients treated using faricimab versus all patients treated with aflibercept and for another scenario with all patients treated with faricimab versus all patients treated with ranibizumab biosimilar.

Uncertainty

Scenario analyses were performed to assess the impact of key parameters on the base case results (Table 2). The purpose of scenario analyses was to explore different assumptions in demand- and supply-side factors within the system. On the supply side, this involved changes in appointment delays; on the demand side, it included adjustments to intervals between injections, discontinuation of treatment or an increase in the number of patients accessing the service. A scenario that assumed the absence of capacity constraints allowed a comparison of the base case with a standard cost-effectiveness model, which does not explicitly consider capacity constraints. We explored uncertainties in cost and utility values by testing alternative costs and utility data sources and assumptions.

Ethical Approval

This article is based on previously conducted studies and does not contain any new studies with human participants or animals performed by any of the authors.

Results

Base Case

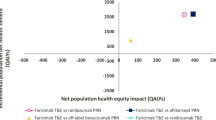

As estimated by the model, for 2000 simulated patients with wAMD or DMO over a 5-year period, treating all patients with faricimab instead of aflibercept reduced the number of injections by 15,975 (− 20.81%). In the comparison between faricimab and ranibizumab biosimilar, the reduction was 20,824 (− 25.56%). Additionally, using faricimab eliminated almost all delays compared with using aflibercept (99.97% reduction) and ranibizumab biosimilar (99.99% reduction) (see Fig. 2 and Table 3). The use of faricimab was dominant (total cost savings of £15,108,609, 60.06 QALYs gained) when compared with aflibercept (NMB £16,309,863). Compared with ranibizumab biosimilar, faricimab had an incremental cost of £2,069,088 and provided 105.70 additional QALYs. This yielded an ICER of £19,574 per QALY gained (NMB: £45,011), which is below the NICE cost-effectiveness threshold of £20,000–£30,000 per QALY gained. Cost results are graphically represented in Fig. 3.

Fig. 2 Number of injections per year. The figure shows the number of injections estimated for each year in the model, with linear interpolation between the years. Values between the discrete annual time points should be interpreted with caution.
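As a check on the base-case arithmetic, the sketch below recomputes the ICER and NMB from the rounded incremental costs and QALYs reported above. The function names are illustrative, and the willingness-to-pay value of £20,000 per QALY is an assumption (the lower bound of the NICE range); the text does not state which threshold was used for the reported NMB. Because the inputs are rounded, small differences from the reported values are expected.

```python
WTP_PER_QALY = 20_000  # assumption: lower bound of the NICE threshold range (GBP)


def icer(delta_cost, delta_qaly):
    """Cost per QALY gained, or None when the new treatment dominates."""
    if delta_cost <= 0 and delta_qaly > 0:
        return None  # cheaper and more effective: no ratio is reported
    if delta_qaly <= 0:
        raise ValueError("ICER is not meaningful without a QALY gain")
    return delta_cost / delta_qaly


def nmb(delta_cost, delta_qaly, wtp=WTP_PER_QALY):
    """Net monetary benefit: monetised QALY gain minus incremental cost."""
    return wtp * delta_qaly - delta_cost


# Faricimab versus ranibizumab biosimilar (reported: ICER 19,574; NMB 45,011).
print(round(icer(2_069_088, 105.70)))   # about 19,575
print(round(nmb(2_069_088, 105.70)))    # about 44,912

# Faricimab versus aflibercept (reported: dominant; NMB 16,309,863).
print(icer(-15_108_609, 60.06))         # None, i.e. dominant
print(round(nmb(-15_108_609, 60.06)))   # about 16,309,809
```

The recomputed values fall within rounding distance of the reported ones, which is consistent with a £20,000 threshold having been applied to unrounded QALY differences.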

Scenario Analyses

A summary of results from scenario analyses that tested different model assumptions is presented in Table 4. Overall, results show that faricimab is still dominant compared with aflibercept in all scenarios and is cost-effective compared with ranibizumab in all but two scenarios. Faricimab is most cost-saving and provides the highest NMB when there is no capacity constraint and all patients can be treated in time. The lowest NMB was observed in a scenario where the most conservative treatment interval assumption was applied to faricimab in DMO. In such a scenario, faricimab still reduced almost all treatment delays compared with aflibercept and ranibizumab biosimilar, but the gain in QALYs and savings in OOH appointments were not enough to compensate for the increased drug acquisition cost when compared with ranibizumab biosimilar.

Discussion

Interpretation of Results

In this study, we evaluated how capacity constraints affect the cost and effectiveness of pharmacological therapies for the treatment of wAMD and DMO. Economic evaluation methods typically focus on budget constraints, overlooking the quantification of the broader impact of multiple health system capacity limitations on the implementation process [44, 45]. The results of the present study showed that switching to faricimab from aflibercept or ranibizumab biosimilar reduces the number of injections that patients required and therefore reduces the number of delayed appointments. The cost and QALY impact of faricimab were dependent on the assumed constraints present in the system, such as the number of available clinic appointments, allowed treatment delay and increase in demand over time. The results remained consistent even when incorporating demand-side determinants, such as treatment discontinuation.

Model Uncertainty

In the literature, treatments with a lower discontinuation rate result in increased costs but also a gain in terms of QALYs because of prolonged efficacy [46]. In our analysis, we conservatively adopted the same assumption as used in other economic evaluations, where the same discontinuation rate is applied for all alternatives and discontinuation does not lead to loss of QALYs for patients [47,48,49]. The observed outcomes in the scenario with discontinuation are explained by the fact that including it leads to a decrease in overall demand, thereby easing the burden on the healthcare system. Consequently, fewer injections are needed for all therapies. This decrease in the number of injections also means that the benefit of avoiding treatment delays is smaller, given fewer patients have to wait for an appointment. On the cost side, compared with ranibizumab biosimilar, there are savings because the average number of injections per patient drops more noticeably. However, compared with aflibercept, the average number of injections for the two treatments becomes more similar as time progresses, which results in smaller cost savings.

In several prior modelling studies, analyses have focused on the recommended dosage stated on the labels. This may not always align with clinical practice, where physicians individualise treatment intervals based on disease activity [46]; also, baseline characteristics of patients in the real world differ from those of patients in clinical trials. Conversely, some analyses based on claims databases reveal minimal differences between treatment alternatives; for example, a study comparing the use of aflibercept and ranibizumab in wAMD showed no significant variation in the average number of injections administered [50]. Concerning this, some authors note that, in real-world settings, the intervals of administration and average number of injections often vary from those in pivotal studies, partly because of resource constraints and limited capacity to implement complete treatment protocols [46, 50,51,52].

In this analysis, we incorporated alternative administration frequencies, altering the treatment intervals applied in the base case. For faricimab in DMO and aflibercept in wAMD, the proportions of patients requiring treatment every 4 weeks and every 8 weeks were combined in the base case; all of these patients were assumed to be on an 8-week treatment interval in order to minimise model complexity. Scenario 2 altered this assumption and assumed all patients on 4- or 8-week treatment intervals would be on a 4-week treatment interval. This conservative scenario deliberately increased the clinic capacity used for treating patients with DMO with faricimab and patients with wAMD with aflibercept. Shorter administration intervals could lead to system overload from increased treatment demand, ultimately causing more OOH appointments and more delays in attendances. In this scenario, faricimab was associated with reduced cost savings and NMB compared with aflibercept, but avoided more QALY losses due to delays than estimated in the base case. Compared with ranibizumab biosimilar, using faricimab avoided 105.70 lost QALYs as a result of reducing almost all treatment delays that would have happened if patients were treated with ranibizumab biosimilar; nevertheless, this difference did not compensate for the higher cost of faricimab, leading to a negative net monetary benefit and an ICER above the NICE £20,000 to £30,000 per QALY threshold for cost-effectiveness.

In a scenario with an increase in the annual growth rate in the number of new patients treated from 2.70% to 5.40%, the results did not change substantially from the base case. In the world with faricimab, more QALYs were generated because of a larger number of delays avoided. However, expanding treatment to more patients may incur higher costs. Despite the advantages of interventions that require fewer injections, an overall rise in the number of injections because of an increase in new patients and reduced administration intervals in the induction phase could offset the QALY gains.

Reducing maximum waiting times by 1 week (priority 1: 1 week for wAMD initial visits; priority 2: 3 weeks for wAMD follow-up visits and DMO initial visits; priority 3: 5 weeks for DMO follow-up visits) imposes higher strain on system capacity. In such a case, more patients are treated using OOH capacity because there is a greater emphasis on completing treatment in time. This means more savings associated with using faricimab because this agent reduces the need for OOH appointments, but there is a smaller incremental gain in QALYs for faricimab because there are fewer delays. As a result, the intervention becomes dominant compared with the other two treatments.

The findings suggest that the cost-effectiveness of faricimab increases in the presence of greater system capacity constraints. However, it is important to interpret these results in the context of this study, which is not an exhaustive cost-effectiveness analysis but rather a case study focused on how capacity constraints can alter cost-effectiveness outcomes. Empirical studies have shown that, when capacity constraints are a critical aspect of the evaluated system, incorporating these elements into models considerably affects cost-effectiveness results. This, in turn, can lead to different resource allocation decisions, ultimately influencing clinical practice [53].

Comparison Against Previous Studies

No studies incorporating capacity constraints into the cost-effectiveness analysis of a treatment for wAMD and DMO in ophthalmology were found. However, one previous study assessed the impact of different approaches to organising and delivering glaucoma patient services at a major public hospital [54]. The study’s analysis went beyond treatment pathways to include service delivery aspects such as follow-up times and booking cycles [54].

In other disease areas, studies have integrated factors that have an impact on resource constraints into economic evaluations [55,56,57]. In oncology, resource constraints have been quantified in various ways—for example, through the capacity to perform biopsy and other testing [58], through drug wastage during the implementation of a new intervention [59], in relation to a changing patient population [60] and in relation to patient adherence [61]. A major difference between these studies and our study is that these previous studies incorporated a consideration for resource constraints as part of a complete cost-effectiveness analysis that explicitly models the clinical effectiveness of health interventions. In contrast, our model assumes that all interventions are equally effective in preserving patients’ vision, and that the only difference in effectiveness is due to delayed injections resulting from resource constraints. Both these earlier studies and our study have shown that the inclusion of constraints in the economic evaluation will modify or affect the cost-effectiveness of a new health technology. Resource constraints that limit implementation can make a new technology less cost-effective, causing interventions that are cost-effective to become wasteful in the short term [62].

Study Limitations

Like all simulation models, this model may not fully represent real-world clinical situations; therefore, results should be interpreted with caution. This study has several limitations, primarily because of its reliance on the quality and certainty of available evidence. Detailed national statistics on capacity constraints in practices administering injections for patients with wAMD and DMO are not available; therefore, certain assumptions had to be made. The capacity constraints incorporated in the model were selected in consultation with a clinical expert, yet there may be other constraints not captured in the model that affect the cost-effectiveness of treatments for wAMD or DMO. The model time horizon was sufficiently long to fully capture all future costs and benefits of the technologies, and it did not require lengthy extrapolation given the potential for future drug cost changes and care process modifications that could alter system constraints and introduce uncertainty. Discontinuation rates were based on those used in previous HTAs [17, 18], but comparability is limited by varying definitions across studies. Although the reduction in QALYs due to early treatment discontinuation was not included, this was unlikely to substantially change the study conclusions given that the same discontinuation rate was assumed across all treatments. This scenario analysis used an annual discontinuation rate and did not account for possible differences in administration frequencies, as has been suggested in the literature [63]. Additionally, treatment switching was not modelled because the choice of subsequent therapy remains largely arbitrary owing to limited evidence on treatment sequences, number of injections and subsequent effectiveness. Finally, drug acquisition costs applied in the case study were calculated based on the list price of treatments without considering the true costs for the NHS based on patient access schemes; therefore, the estimated cost savings associated with faricimab may deviate from those occurring in the real-world setting.

Recommendations for Future Research

More information is required to improve the functionality of the model in capturing the supply- and demand-side dynamics of newer treatments with different mechanisms of action, such as faricimab, in the wAMD and DMO population. It is important to be able to calibrate the model as more clinical and real-world evidence emerges regarding treatment schedules, the relationship between injection frequency and clinical outcomes, and treatment sequences.

Future economic evaluation of treatments for wAMD and DMO should aim to include capacity constraints; their inclusion provides valuable additional information beyond cost-effectiveness. Moreover, for any economic evaluation of new healthcare interventions, it will be pertinent to consider if and how the new technology could affect health system capacity and how this will affect the cost-effectiveness of the new intervention. This information is critical for guiding resource allocation decisions and facilitating implementation strategies among payers and providers.

Conclusion

This study demonstrated that it is crucial to integrate the influence of resource constraints into economic evaluations, especially in disease areas like ophthalmology, in which constrained resources have been shown to reduce the real-world health benefits of treatment. Integrating these constraints into cost-effectiveness modelling has the potential to reshape the decision-making process, providing additional information on the impact of a new technology on the healthcare system’s capacity and offering a more realistic and sustainable basis for decision-making.

The use of faricimab to treat patients addresses resource constraints by reducing injection frequency and minimising delays, with notable benefits in this simulation exercise. These benefits include net cost savings from the healthcare payer perspective when compared with aflibercept, and improved QALY outcomes for patients with wAMD and DMO. As shown here, implementing faricimab broadly in clinical practice not only streamlines resource utilisation but also enhances patient outcomes by minimising delays and injections in a resource-constrained health system. Although ranibizumab biosimilar is the least costly option in terms of drug acquisition costs, in the presence of resource constraints, it is associated with considerable QALY losses due to treatment delays; in this case, faricimab is cost-effective compared with ranibizumab biosimilar.
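As a worked check of the comparison with ranibizumab biosimilar, the incremental cost-effectiveness ratio (ICER) can be recomputed from the rounded 5-year figures reported in the Abstract (£2,069,088 extra cost and 105.70 QALY losses avoided). The symbols \(\Delta C\) and \(\Delta E\) are introduced here for illustration only.

```latex
\[
\text{ICER}
  = \frac{\Delta C}{\Delta E}
  = \frac{\pounds 2{,}069{,}088}{105.70\ \text{QALYs}}
  \approx \pounds 19{,}575\ \text{per QALY}
\]
```

This agrees with the reported £19,574/QALY to within rounding of the published inputs. No ratio is calculated against aflibercept because, in that comparison, faricimab both costs less and avoids more QALY losses.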

Data Availability

The parameter inputs used in the model were identified from sources in the public domain. All parameters are reported in the article.

References

Thokala P, Dixon S, Jahn B. Resource modelling: the missing piece of the HTA jigsaw? Pharmacoeconomics. 2015;33(3):193–203.

Gale R, Cox O, Keenan C, Chakravarthy U. Health technology assessment of new retinal treatments; the need to capture healthcare capacity issues. Eye. 2022;36(12):2236–8.

NHS England. Consultant-led referral to treatment waiting times data 2022–23. 2023. https://www.england.nhs.uk/statistics/statistical-work-areas/rtt-waiting-times/rtt-data-2022-23/. Accessed 22 July 2024.

Royal College of Ophthalmologists. Public Accounts Committee inquiry—managing NHS backlogs & waiting times: Royal College of Ophthalmologists response 2022. https://www.rcophth.ac.uk/wp-content/uploads/2022/11/RCOphth-response-to-Public-Accounts-Committee-inquiry-Managing-NHS-backlogs-and-waiting-times-Nov-2022.pdf. Accessed 22 July 2024.

Royal College of Ophthalmologists. Workforce Census 2018. https://www.rcophth.ac.uk/wp-content/uploads/2020/05/RCOphth-Workforce-Census-2018.pdf. Accessed 22 July 2024.

Association of Optometrists. NHS backlog emergency forcing people to go private or risk permanent sight loss, latest survey finds. 2023. https://www.aop.org.uk/our-voice/media-centre/press-releases/2023/11/23/nhs-backlog-emergency-forcing-people-to-go-private-or-risk-permanent-sight-loss-latest-survey-finds. Accessed 22 July 2024.

National Institute for Health and Care Excellence. Clinical Knowledge Summaries (CKS): macular degeneration—age-related. 2022. https://cks.nice.org.uk/topics/macular-degeneration-age-related/. Accessed 22 July 2024.

National Eye Institute. Diabetic Retinopathy. 2024. https://www.nei.nih.gov/learn-about-eye-health/eye-conditions-and-diseases/diabetic-retinopathy. Accessed 22 July 2024.

Holman N, Forouhi N, Goyder E, Wild S. The Association of Public Health Observatories (APHO) diabetes prevalence model: estimates of total diabetes prevalence for England, 2010–2030. Diabet Med. 2011;28(5):575–82.

Li JQ, Welchowski T, Schmid M, Mauschitz MM, Holz FG, Finger RP. Prevalence and incidence of age-related macular degeneration in Europe: a systematic review and meta-analysis. Br J Ophthalmol. 2020;104(8):1077–84.

National Institute for Health and Care Excellence. Age-related macular degeneration [NG82]. 2018. https://www.nice.org.uk/guidance/ng82. Accessed 22 July 2024.

National Institute for Health and Care Excellence. Faricimab for treating diabetic macular oedema [TA799]. 2022. https://www.nice.org.uk/guidance/ta799. Accessed 22 July 2024.

Chopra R, Preston GC, Keenan TDL, et al. Intravitreal injections: past trends and future projections within a UK tertiary hospital. Eye (Lond). 2022;36(7):1373–8.

Holz FG, Tadayoni R, Beatty S, et al. Multi-country real-life experience of anti-vascular endothelial growth factor therapy for wet age-related macular degeneration. Br J Ophthalmol. 2015;99(2):220–6.

National Institute for Health and Care Excellence. Ranibizumab and pegaptanib for the treatment of age-related macular degeneration [TA155]. 2008. https://www.nice.org.uk/guidance/ta155. Accessed 22 July 2024.

National Institute for Health and Care Excellence. Ranibizumab for treating diabetic macular oedema [TA274]. 2013. https://www.nice.org.uk/guidance/ta274. Accessed 22 July 2024.

National Institute for Health and Care Excellence. Aflibercept solution for injection for treating wet age-related macular degeneration [TA294]. 2013. https://www.nice.org.uk/guidance/ta294. Accessed 22 July 2024.

National Institute for Health and Care Excellence. Aflibercept for treating diabetic macular oedema [TA346]. 2015. https://www.nice.org.uk/guidance/ta346. Accessed 22 July 2024.

National Institute for Health and Care Excellence. Brolucizumab for treating wet age-related macular degeneration [TA672]. 2021. https://www.nice.org.uk/guidance/ta672. Accessed 22 July 2024.

National Institute for Health and Care Excellence. Brolucizumab for treating diabetic macular oedema [TA820]. 2022. https://www.nice.org.uk/guidance/ta820. Accessed 22 July 2024.

National Institute for Health and Care Excellence. Faricimab for treating wet age-related macular degeneration [TA800]. 2022. https://www.nice.org.uk/guidance/ta800. Accessed 22 July 2024.

Lim JH, Wickremasinghe SS, Xie J, et al. Delay to treatment and visual outcomes in patients treated with anti-vascular endothelial growth factor for age-related macular degeneration. Am J Ophthalmol. 2012;153(4):678–86.e2.

Im JH, Jin Y-P, Chow R, Dharia RS, Yan P. Delayed anti-VEGF injections during the COVID-19 pandemic and changes in visual acuity in patients with three common retinal diseases: a systematic review and meta-analysis. Surv Ophthalmol. 2022. https://doi.org/10.1016/j.survophthal.2022.08.002.

Penha FM, Masud M, Khanani ZA, Thomas M, Fong RD, Smith K, et al. Review of real-world evidence of dual inhibition of VEGF-A and ANG-2 with faricimab in NAMD and DME. Int J Retina Vitreous. 2024;10(1):5.

Narayan A, Watson S. Faricimab: the solution to treatment resistant neovascular age-related macular degeneration? A real-world study. Royal College of Ophthalmologists Annual Congress, 25–28 May 2023; Birmingham.

National Institute for Health and Care Excellence. Supporting the health and care system in improving productivity. 2023. https://www.nice.org.uk/productivity. Accessed 22 July 2024.

Haider S, Thayakaran R, Subramanian A, et al. Disease burden of diabetes, diabetic retinopathy and their future projections in the UK: cross-sectional analyses of a primary care database. BMJ Open. 2021;11(7):e050058.

Owen CG, Jarrar Z, Wormald R, Cook DG, Fletcher AE, Rudnicka AR. The estimated prevalence and incidence of late stage age related macular degeneration in the UK. Br J Ophthalmol. 2012;96(5):752–6.

Giocanti-Auregan A, Tadayoni R, Grenet T, et al. Estimation of the need for bilateral intravitreal anti-VEGF injections in clinical practice. BMC Ophthalmol. 2016;16:142.

Garweg JG, Štefanickova J, Hoyng C, et al. Dosing regimens of intravitreal aflibercept for diabetic macular edema beyond the first year: VIOLET, a prospective randomized trial. Adv Ther. 2022;39(6):2701–16.

Heier JS, Khanani AM, Quezada Ruiz C, et al. Efficacy, durability, and safety of intravitreal faricimab up to every 16 weeks for neovascular age-related macular degeneration (TENAYA and LUCERNE): two randomised, double-masked, phase 3, non-inferiority trials. Lancet. 2022;399(10326):729–40.

Hirano T, Toriyama Y, Takamura Y, et al. Outcomes of a 2-year treat-and-extend regimen with aflibercept for diabetic macular edema. Sci Rep. 2021;11(1):4488.

Kertes PJ, Galic IJ, Greve M, et al. Efficacy of a treat-and-extend regimen with ranibizumab in patients with neovascular age-related macular disease: a randomized clinical trial. JAMA Ophthalmol. 2020;138(3):244–50.

Payne JF, Wykoff CC, Clark WL, et al. Long-term outcomes of treat-and-extend ranibizumab with and without navigated laser for diabetic macular oedema: TREX-DME 3-year results. Br J Ophthalmol. 2021;105(2):253–7.

Souied EH, Holz FG, Hykin PG, et al. Efficacy of intravitreal aflibercept treat-and-extend regimen over 2 years for neovascular age-related macular degeneration: ARIES study. Invest Ophthalmol Vis Sci. 2020;61(7):4210.

Wykoff CC, Abreu F, Adamis AP, et al. Efficacy, durability, and safety of intravitreal faricimab with extended dosing up to every 16 weeks in patients with diabetic macular oedema (YOSEMITE and RHINE): two randomised, double-masked, phase 3 trials. Lancet. 2022;399(10326):741–55.

MacEwen C, Davis A, Chang L. Ophthalmology: GIRFT Programme National Specialty Report. 2019. https://gettingitrightfirsttime.co.uk/wp-content/uploads/2019/12/OphthalmologyReportGIRFT19P-FINAL.pdf. Accessed 22 July 2024.

Royal College of Ophthalmologists. Commissioning Guidance: Age Related Macular Degeneration Services. 2024. https://www.rcophth.ac.uk/resources-listing/commissioning-guidance-age-related-macular-degeneration-services/. Accessed 22 July 2024.

NHS England. 2021/22 National Cost Collection Data Publication. 2023. https://www.england.nhs.uk/publication/2021-22-national-cost-collection-data-publication/. Accessed 22 July 2024.

National Institute for Health and Care Excellence. British National Formulary. 2024. https://bnf.nice.org.uk/. Accessed 22 July 2024.

Loewenstein A, Berger A, Daly A, et al. Save our Sight (SOS): a collective call-to-action for enhanced retinal care across health systems in high income countries. Eye. 2023. https://doi.org/10.1038/s41433-023-02540-w.

Hodgson R, Reason T, Trueman D, et al. Challenges associated with estimating utility in wet age-related macular degeneration: a novel regression analysis to capture the bilateral nature of the disease. Adv Ther. 2017;34(10):2360–70.

Czoski-Murray C, Carlton J, Brazier J, Young T, Papo NL, Kang HK. Valuing condition-specific health states using simulation contact lenses. Value Health. 2009;12(5):793–9. https://doi.org/10.1111/j.1524-4733.2009.00527.x.

van Baal P, Morton A, Severens JL. Health care input constraints and cost effectiveness analysis decision rules. Soc Sci Med. 2018;200:59–64.

Brennan A, Chick SE, Davies R. A taxonomy of model structures for economic evaluation of health technologies. Health Econ. 2006;15(12):1295–310.

Ferrante N, Ritrovato D, Bitonti R, Furneri G. Cost-effectiveness analysis of brolucizumab versus aflibercept for the treatment of neovascular age-related macular degeneration (nAMD) in Italy. BMC Health Serv Res. 2022;22(1):573.

Ming J, Zhang Y, Xu X, et al. Cost–effectiveness analysis of intravitreal aflibercept in the treatment of diabetic macular edema in China. J Compar Eff Res. 2019;9(3):161–75.

Yanagi Y, Takahashi K, Iida T, et al. Cost-effectiveness analysis of ranibizumab biosimilar for neovascular age-related macular degeneration in Japan. Ophthalmol Ther. 2023;12(4):2005–21.

Yu JS, Carlton R, Agashivala N, Hassan T, Wykoff CC. Brolucizumab vs aflibercept and ranibizumab for neovascular age-related macular degeneration: a cost-effectiveness analysis. J Manag Care Spec Pharm. 2021;27(6):743–52.

Kemp-Casey A, Pratt N, Ramsay E, Roughead EE. Using post-market utilisation analysis to support medicines pricing policy: an Australian case study of aflibercept and ranibizumab use. Appl Health Econ Health Policy. 2019;17:411–7.

Carrasco J, Pietsch GA, Nicolas MP, Koerber C, Bennison C, Yoon J. Real-world effectiveness and real-world cost-effectiveness of intravitreal aflibercept and intravitreal ranibizumab in neovascular age-related macular degeneration: systematic review and meta-analysis of real-world studies. Adv Ther. 2020;37(1):300–15.

Maniadakis N, Konstantakopoulou E. Cost effectiveness of treatments for diabetic retinopathy: a systematic literature review. Pharmacoeconomics. 2019;37(8):995–1010.

Standfield LB, Comans TA, Scuffham PA. An empirical comparison of Markov cohort modeling and discrete event simulation in a capacity-constrained health care setting. Eur J Health Econ. 2017;18(1):33–47.

Crane GJ, Kymes SM, Hiller JE, Casson R, Martin A, Karnon JD. Accounting for costs, QALYs, and capacity constraints: using discrete-event simulation to evaluate alternative service delivery and organizational scenarios for hospital-based glaucoma services. Med Decis Making. 2013;33(8):986–97.

Wright SJ, Newman WG, Payne K. Quantifying the impact of capacity constraints in economic evaluations: an application in precision medicine. Med Decis Making. 2022;42(4):538–53.

Hontelez JA, Chang AY, Ogbuoji O, de Vlas SJ, Barnighausen T, Atun R. Changing HIV treatment eligibility under health system constraints in sub-Saharan Africa: investment needs, population health gains, and cost-effectiveness. AIDS. 2016;30(15):2341–50.

Jahn B, Pfeiffer KP, Theurl E, Tarride JE, Goeree R. Capacity constraints and cost-effectiveness: a discrete event simulation for drug-eluting stents. Med Decis Making. 2010;30(1):16–28.

Vanderlaan BF, Broder MS, Chang EY, Oratz R, Bentley TG. Cost-effectiveness of 21-gene assay in node-positive, early-stage breast cancer. Am J Manag Care. 2011;17(7):455–64.

Delea TE, Amdahl J, Chit A, Amonkar MM. Cost-effectiveness of lapatinib plus letrozole in her2-positive, hormone receptor-positive metastatic breast cancer in Canada. Curr Oncol. 2013;20(5):e371–87.

Garrison LP Jr, Veenstra DL. The economic value of innovative treatments over the product life cycle: the case of targeted trastuzumab therapy for breast cancer. Value Health. 2009;12(8):1118–23.

McCowan C, Wang S, Thompson AM, Makubate B, Petrie DJ. The value of high adherence to tamoxifen in women with breast cancer: a community-based cohort study. Br J Cancer. 2013;109(5):1172–80.

Wright SJ, Newman WG, Payne K. Accounting for capacity constraints in economic evaluations of precision medicine: a systematic review. Pharmacoeconomics. 2019;37(8):1011–27.

Bakri SJ, Karcher H, Andersen S, Souied EH. Anti–vascular endothelial growth factor treatment discontinuation and interval in neovascular age-related macular degeneration in the United States. Am J Ophthalmol. 2022;242:189–96.

Authorship

All authors made substantial contributions to the work, drafted or revised the manuscript critically for important intellectual content, approved the version to be published and agreed to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Medical Writing/Editorial Assistance

Editorial support was provided by Karl Read who is an Editorial Consultant at Putnam. Putnam has received funding from F. Hoffmann-La Roche Ltd.

Funding

The study and Rapid Service Fee were funded by F. Hoffmann-La Roche Ltd, which is the manufacturer of faricimab.

Author information

Contributions

Tara Li: conceptualisation; methodology; formal analysis; writing—original draft, review, and editing. Vladislav Berdunov: conceptualisation; methodology; writing—original draft, review and editing. Robin Hamilton: conceptualisation; clinical expert input; writing—review and editing. Yaneth Gil Rojas: formal analysis; writing—original draft, review and editing. Christian Bührer: conceptualisation; methodology; writing—review and editing. Oliver Cox: conceptualisation; methodology; writing—review and editing. Roelien Postema: conceptualisation; writing—review and editing. Marloes Bagijn: conceptualisation; methodology; writing—review and editing.

Ethics declarations

Conflict of Interest

Tara Li, Vladislav Berdunov, Yaneth Gil Rojas, and Roelien Postema are employees of Putnam, which has received funding from F. Hoffmann-La Roche Ltd. Christian Bührer, Oliver Cox, and Marloes Bagijn are employees of F. Hoffmann-La Roche Ltd. Robin Hamilton has received financial support for consultancy from F. Hoffmann-La Roche Ltd, travel and speaker honoraria and being on advisory boards for F. Hoffmann-La Roche Ltd, Bayer, Teva, and Biogen, and research grants from F. Hoffmann-La Roche Ltd, Bayer, Perspectum, and Quantel.

Ethical Approval

This article is based on previously conducted studies and does not contain any new studies with human participants or animals performed by any of the authors.

Additional information

Prior presentation: The manuscript is based on work that has been previously presented at a poster session of the ISPOR Europe 2023 Conference (Copenhagen): https://doi.org/10.1016/j.jval.2023.09.673.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, which permits any non-commercial use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc/4.0/.

About this article

Cite this article

Li, T., Berdunov, V., Hamilton, R. et al. Economic Assessment in Resource-Constrained Systems: Individual-Level Simulation Model in Wet Age-Related Macular Degeneration and Diabetic Macular Oedema. Ophthalmol Ther (2024). https://doi.org/10.1007/s40123-024-00999-8

DOI: https://doi.org/10.1007/s40123-024-00999-8