Abstract

Many physical phenomena can be modelled through nonlocal boundary value problems whose boundary conditions involve integral terms. In this work, we propose a numerical algorithm for solving a class of parabolic integrodifferential equations subject to nonlocal boundary conditions. The algorithm combines the second-order Crank–Nicolson scheme for the temporal discretization with the Legendre–Chebyshev pseudo-spectral method (LC–PSM) for the spatial discretization. The approach is based on a Galerkin formulation and Legendre polynomials. Stability and convergence results are established. Numerical tests are presented to support the theoretical results and to demonstrate the accuracy and effectiveness of the proposed method.


1 Introduction

In recent decades, the theory of integrodifferential equations has been investigated extensively and has become a very active research area. Its study ranges from theoretical questions of solvability and well-posedness to analytical and numerical methods for obtaining solutions. A strong motivation for studying partial integrodifferential equations is that they serve as mathematical models for many problems in physics, mechanics, biology and other sciences.

In this work, we are concerned with the numerical solution of the following parabolic integrodifferential equation:

with the initial condition

subject to integral boundary conditions

where \(\Lambda \) and J denote the space domain \((-1,1)\) and the time interval \([0,T]\) with \(T>0\), respectively. The functions \(a,f,u_0,K_1\) and \(K_2\) are given. Assume that the kernel a in the integral term of Eq. (1.1) is bounded, namely

Integrodifferential equations of the form (1.1), and similar variants, arise in the mathematical modelling of many physical phenomena and engineering problems. Examples include nonlocal reactive flows in porous media [8, 9], heat transfer in materials with memory [13, 17], viscoelasticity [7, 19], gas diffusion [18] and the spatio-temporal spread of epidemics [21].

Considerable work has been done on nonlocal boundary value problems, from both the numerical and the theoretical points of view. Theoretical studies of these problems usually encounter difficulties caused by the integral terms in the boundary conditions. This has led researchers to modify and improve the classical methods to overcome this issue (see, e.g., [2, 3, 12, 16]).

On the other hand, integrodifferential equations are usually too complicated to be solved analytically, so numerical methods are needed to obtain approximate solutions. Many efforts have been made to design efficient numerical approaches for differential and integrodifferential equations with nonlocal boundary conditions. In [15], Merad and Martín-Vaquero presented a computational study of two-dimensional hyperbolic integrodifferential equations with purely integral conditions. They proved the existence and uniqueness of the solution and proposed a numerical approach based on the Galerkin method. The authors of [11] used a reproducing kernel approach to solve parabolic and hyperbolic integrodifferential equations subject to integral and weighted integral conditions. More recently, Bencheikh et al. [1] implemented a numerical method based on operational matrices of orthonormal Bernstein polynomials to approximate the solution of a parabolic integrodifferential equation with purely nonlocal integral conditions. The problem considered in this paper was studied in [10], where the authors proved the existence and uniqueness of the solution by the energy inequality method. For its numerical resolution, they presented an algorithm based on the superposition principle. In that algorithm, the original nonlocal problem is replaced by three auxiliary standard boundary value problems, which are solved by a finite difference method.

Among numerical methods, spectral and pseudo-spectral methods [4, 20] have gained increasing popularity for many types of problems. In the context of spectral methods, Legendre approximations have been widely used. The Legendre–Galerkin spectral method has been shown to be computationally efficient and highly accurate, with an exponential rate of convergence for smooth solutions. Many papers have been devoted to spectral methods for problems with classical boundary conditions. Surprisingly, however, few authors have addressed the implementation and analysis of spectral methods for problems with nonlocal boundary conditions [6].

The primary aim of this paper is to analyze and implement the Legendre–Chebyshev pseudo-spectral method for the numerical resolution of a class of parabolic integrodifferential equations subject to nonlocal boundary conditions. The proposed approach is based on a Galerkin formulation and uses Legendre polynomials as a basis for the spatial discretization. This is followed by a temporal discretization with the trapezoidal (Crank–Nicolson) rule. The method is both efficient and accurate. The numerical experiments show that LC–PSM achieves better accuracy than the finite difference approach of [10], with less computational time.

This paper is organized as follows. In the next section, we briefly describe how to implement the Legendre–Chebyshev pseudo-spectral method for discretizing the parabolic integrodifferential equation (1.1). In Sect. 3, we first recall some lemmas and results related to spectral methods, and then establish the stability and convergence of the method in the \(L^2\)-norm. In Sect. 4, we provide numerical tests to confirm the effectiveness and robustness of LC–PSM. Finally, in Sect. 5, we summarize the main features of our method and outline some possible extensions.

2 Legendre–Galerkin spectral method

In the following subsections, we briefly describe how to implement the Legendre–Chebyshev pseudo-spectral method to approximate the solution of the nonlocal boundary value problem considered in this paper. As a starting point, we write the nonlocal problem (1.1)–(1.3) in weak form: find \(u : J \rightarrow H^1(\Lambda )\) such that for any \(v \in H^1(\Lambda )\)

where the functional \({\mathcal {K}}(\cdot ,\cdot )\) is defined as follows:

Here and in what follows, we use the notation \((\cdot ,\cdot )\) to denote the \(L^2\)-inner product and \(\Vert \cdot \Vert \) for the induced norm on the space \(L^2(\Lambda )\). Denote by \(H^m(\Lambda )\) the standard Sobolev space with norm and semi-norm denoted by \(\Vert \cdot \Vert _m\) and \(\vert \cdot \vert _m\), respectively. Solvability of the above variational problem is addressed in the following theorem [10].

Theorem 2.1

Assume that \(a_0\) satisfies (1.4). Then the variational problem (2.1) admits a unique weak solution in \(L^2(J;H^1(\Lambda ))\).

2.1 Space discretization: LC–PSM

Let \({\mathbb {P}}_N(\Lambda )\) be the space of all algebraic polynomials of degree at most N. Denote by \(I_N^C: C(\bar{\Lambda }) \rightarrow {\mathbb {P}}_N(\Lambda )\) the interpolation operator at the Chebyshev–Gauss–Lobatto points \(\xi _i = \cos \left( \frac{i\pi }{N} \right) ,\ 0\le i \le N\), defined by
\[ (I_N^C v)(\xi _i) = v(\xi _i), \qquad 0\le i \le N. \]

Based on the above weak formulation, we pose the semi-discrete Legendre–Chebyshev Galerkin scheme: find \(u_N : J \rightarrow {\mathbb {P}}_N(\Lambda )\) such that for any \(v \in {\mathbb {P}}_N(\Lambda )\)

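Let \(L_k\) be the Legendre polynomial of degree k, defined by the three-term recurrence
\[ L_0(x)=1,\qquad L_1(x)=x,\qquad (k+1)\,L_{k+1}(x)=(2k+1)\,x\,L_k(x)-k\,L_{k-1}(x),\quad k\ge 1. \]

We recall that the Legendre polynomials are mutually orthogonal in \(L^2(\Lambda )\), namely
\[ (L_j,L_k)=\frac{2}{2k+1}\,\delta _{jk}. \]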
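As a quick numerical check of the Legendre recurrence and the orthogonality relation, the following Python sketch evaluates \(L_0,\ldots ,L_N\) by the three-term recurrence. It then forms the Gram matrix \((L_j,L_k)\) with an \((N+1)\)-point Gauss–Legendre rule, which is exact for polynomials of degree up to \(2N+1\). It assumes NumPy. The helper `legendre_values` is hypothetical and is not part of the method described in this paper.

```python
import numpy as np


def legendre_values(N, x):
    """Evaluate L_0, ..., L_N at the points x via the three-term recurrence."""
    x = np.asarray(x, dtype=float)
    P = np.zeros((N + 1,) + x.shape)
    P[0] = 1.0
    if N >= 1:
        P[1] = x
    for k in range(1, N):
        # (k+1) L_{k+1} = (2k+1) x L_k - k L_{k-1}
        P[k + 1] = ((2 * k + 1) * x * P[k] - k * P[k - 1]) / (k + 1)
    return P


N = 8
xq, wq = np.polynomial.legendre.leggauss(N + 1)  # exact up to degree 2N+1
P = legendre_values(N, xq)
gram = (P * wq) @ P.T  # gram[j, k] approximates (L_j, L_k) in L^2(-1, 1)
expected = np.diag(2.0 / (2 * np.arange(N + 1) + 1))
print(np.allclose(gram, expected))  # True
```

The same routine also confirms \(L_k(\pm 1)=(\pm 1)^k\). This is the property that makes combinations such as \(L_k-L_{k+2}\) vanish at both endpoints.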

For a positive integer N, we define, following [5],

The following lemma is the key technique in our algorithm.

Lemma 2.2

[22] For two integers \(j,k \in {\mathbb {N}}\), we denote

Then, for \(0 \le j,k \le N-2\)

and

Elementary linear algebra arguments show that

Consequently, the numerical solution \(u_N\) of (2.3) can be expanded in terms of \(\left( \varphi _k \right) _{k=0}^N\) with time-dependent coefficients, namely

Inserting (2.5) into (2.3) and taking \(v=\varphi _j, 0\le j \le N\), we obtain the following system of ODEs

where

with initial conditions

Denote

Then, the initial value problem (2.6) and (2.7) can be written in matrix formulation as follows:

The coefficients \(m_{jk}\) and \(p_{jk}\) are given by Lemma 2.2. The entries of the matrix \({\mathbf {Q}}\) are determined by the values \(\varphi _j(\pm 1)\). Since \(\varphi _j(\pm 1) = 0\) for \(0\le j \le N-2\), all entries of \({\mathbf {Q}}\) vanish except those in the last two rows, which are

2.2 Full discretization scheme

For time advancing, we use the second-order Crank–Nicolson scheme to discretize the differential system (2.8). For a given positive integer M, we define the time step \(\Delta t = \frac{T}{M}\) and set \(t_i = i\Delta t\), \(i=0,\ldots ,M\). We denote by \(\alpha _k^i\) and \(A_k^i\) the approximations of \(\alpha _k(t_i)\) and \(A_k(t_i)\), respectively.
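The exact form of the system (2.8) and of the recursive system is not reproduced in this version of the text. The following Python sketch therefore assumes a generic semi-discrete form \(\mathbf{M}\alpha '(t)+\mathbf{S}\alpha (t)=\mathbf{B}\int _0^t a(t-s)\alpha (s)\,ds+F(t)\). Here `mass`, `stiff` and `couple` are hypothetical matrices standing for \(\mathbf{M}\), \(\mathbf{S}\), \(\mathbf{B}\). Crank–Nicolson averaging is applied at consecutive time levels, and the memory integral is approximated by the composite trapezoidal rule on the same grid. This is one common way to retain second order, and not necessarily the discretization used in the paper. The scalar test problem, with exact solution \(e^{-t}\) and kernel \(a(s)=2s^2\), was constructed for illustration only.

```python
import numpy as np


def crank_nicolson_memory(mass, stiff, couple, kernel, F, y0, T, steps):
    """Hypothetical sketch: Crank-Nicolson for
        mass y'(t) + stiff y(t) = couple * int_0^t a(t - s) y(s) ds + F(t),
    with the memory integral approximated by the composite trapezoidal rule
    on the uniform grid t_i = i * dt.
    """
    dt = T / steps
    t = np.linspace(0.0, T, steps + 1)
    n = len(y0)
    Y = np.zeros((steps + 1, n))
    Y[0] = y0
    a0 = kernel(0.0)
    # Matrix acting on the unknown y^{i+1}; it is the same at every step.
    A_lhs = mass / dt + stiff / 2 - (dt / 4) * a0 * couple
    mem_old = np.zeros(n)  # the memory term vanishes at t_0 = 0
    for i in range(steps):
        # Trapezoidal weights of the known nodes t_0..t_i for the integral up to t_{i+1}.
        w = np.full(i + 1, dt)
        w[0] = dt / 2
        known = couple @ ((w * kernel(t[i + 1] - t[: i + 1])) @ Y[: i + 1])
        rhs = ((mass / dt - stiff / 2) @ Y[i]
               + 0.5 * (known + mem_old)
               + 0.5 * (F(t[i + 1]) + F(t[i])))
        Y[i + 1] = np.linalg.solve(A_lhs, rhs)
        # Full trapezoidal memory term at t_{i+1}, endpoint contribution included.
        mem_old = known + (dt / 2) * a0 * (couple @ Y[i + 1])
    return t, Y


# Usage: scalar test with exact solution y(t) = exp(-t) and kernel a(s) = 2 s^2,
# for which F(t) = -(2 t^2 - 4 t + 4 - 4 exp(-t)).
I1 = np.eye(1)
kern = lambda s: 2.0 * np.asarray(s) ** 2
F = lambda s: np.array([-(2 * s**2 - 4 * s + 4 - 4 * np.exp(-s))])


def final_error(steps):
    t, Y = crank_nicolson_memory(I1, I1, I1, kern, F, np.array([1.0]), 1.0, steps)
    return abs(Y[-1, 0] - np.exp(-t[-1]))


e1, e2 = final_error(100), final_error(200)
print(f"errors: {e1:.2e}, {e2:.2e}, ratio = {e1 / e2:.2f}")
```

Halving \(\Delta t\) should divide the error at \(t=T\) by roughly four, as expected for a second-order scheme.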

The fully discrete LC–PSM/CN scheme for (1.1)–(1.3) leads to the following recursive algebraic system:

where

The above algebraic system can be solved by either direct or iterative methods. One option is the QR factorization method, which is accurate and easy to implement.
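Assume the problem is linear with time-independent coefficients and \(\Delta t\) is fixed. Then the matrix on the left-hand side of the recursive system does not change from one time level to the next. Its QR factorization can therefore be computed once and reused at every step. The following minimal sketch assumes NumPy. `QRSolver` and `back_substitution` are hypothetical helper names, and the random matrix merely stands in for the actual system matrix.

```python
import numpy as np


def back_substitution(R, y):
    """Solve the upper-triangular system R x = y."""
    n = R.shape[0]
    x = np.zeros(n)
    for i in range(n - 1, -1, -1):
        x[i] = (y[i] - R[i, i + 1:] @ x[i + 1:]) / R[i, i]
    return x


class QRSolver:
    """Factor A = QR once and reuse the factors for many right-hand sides."""

    def __init__(self, A):
        self.Q, self.R = np.linalg.qr(A)

    def solve(self, b):
        # A x = b  <=>  R x = Q^T b, because Q is orthogonal.
        return back_substitution(self.R, self.Q.T @ b)


rng = np.random.default_rng(0)
A = rng.standard_normal((6, 6)) + 6.0 * np.eye(6)  # stand-in for the system matrix
solver = QRSolver(A)
for step in range(3):  # e.g. successive time levels with new right-hand sides
    b = rng.standard_normal(6)
    x = solver.solve(b)
    print(step, np.linalg.norm(A @ x - b))
```

Factoring once costs \(O(N^3)\). Each later step then needs only \(O(N^2)\) work for \(\mathbf{Q}^T b\) and the back substitution.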

3 Error analysis

In this section, we derive an \(L^2\)-error estimate for \(e_N(t) = u_N(t)- u(t)\). To this end, we first recall, in the next subsection, some lemmas that are needed for the error analysis.

3.1 Preliminaries

Now, we introduce two projection operators and their approximation properties. First, let \(P_N : L^2(\Lambda ) \rightarrow {\mathbb {P}}_N(\Lambda )\) be the \(L^2\)-orthogonal projection, namely

We also define the operator \(P^1_N : H^1(\Lambda ) \rightarrow {\mathbb {P}}_N(\Lambda )\) such that

From the definition of \(P_N^1\), one can obtain

Next, we give the approximation property of the projection operator \(P_N^{1}\) and the interpolation operator \(I_N^C\).

Lemma 3.1

[14] If \( v \in H^r(\Lambda )\) with \(r \ge 1\), then the following estimate holds

where C is a positive constant independent of N.

Lemma 3.2

[14] Let \( v \in H^1(\Lambda )\). There exists a positive constant C independent of N such that

Moreover, if \(v \in H^r(\Lambda )\) for \(r \ge 1\), then the following estimate holds

where C is a positive constant independent of N.
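The interpolation estimate itself is not reproduced in this version of the text. The following Python sketch only illustrates the behaviour it describes: for a function of finite Sobolev regularity, the \(L^2\)-error of the Chebyshev–Gauss–Lobatto interpolant decays algebraically in N. It assumes NumPy and uses `numpy.polynomial.chebyshev.chebfit` with \(N+1\) nodes and degree N, which reduces to exact interpolation. The test function \(|x|^3\) and the helper names are illustrative choices, not taken from the paper.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb
from numpy.polynomial import legendre as leg


def cgl_points(N):
    """Chebyshev-Gauss-Lobatto points xi_i = cos(i*pi/N), i = 0..N."""
    return np.cos(np.pi * np.arange(N + 1) / N)


def cgl_interpolation_error(v, N, nquad=400):
    """L^2(-1,1) error of the degree-N interpolant of v at the CGL points."""
    xi = cgl_points(N)
    coef = cheb.chebfit(xi, v(xi), N)  # N+1 nodes, degree N: interpolation
    xq, wq = leg.leggauss(nquad)  # Gauss-Legendre rule for the unweighted norm
    return np.sqrt(np.sum(wq * (v(xq) - cheb.chebval(xq, coef)) ** 2))


v = lambda x: np.abs(x) ** 3  # limited smoothness at x = 0
Ns = [8, 16, 32, 64]
errs = [cgl_interpolation_error(v, N) for N in Ns]
rates = [np.log(errs[k] / errs[k + 1]) / np.log(2) for k in range(len(Ns) - 1)]
for N, e in zip(Ns, errs):
    print(f"N = {N:3d}   L2 error = {e:.3e}")
print("observed rates:", np.round(rates, 2))
```

The printed rates are empirical. They indicate algebraic rather than exponential decay, but the sketch is not a verification of the constants in the lemma.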

Remark 3.3

Under the assumptions of Lemma 3.2, the approximation property (3.3) yields the following inequality

Next, we recall a basic estimate that will be used later in our proofs.

Lemma 3.4

[5] Let \({\mathcal {K}}(\cdot ,\cdot )\) be defined by (2.2), and assume that \(K_1,K_2 \in L^2(\Lambda )\). Then, for any \(w,v \in H^1(\Lambda )\), the following estimate holds

3.2 Error estimates

In this subsection, we consider the stability and convergence of the semi-discrete approximation (2.3). We first state a Gronwall-type inequality that will be used in the proof of our main results.

Lemma 3.5

Let E(t) and H(t) be two non-negative integrable functions on \([0,T]\) satisfying

where \(C_1,C_2 \in {\mathbb {R}}^+\). Then there exists a constant \(C>0\) such that

Proof

For a non-negative function E(t), changing the order of integration gives

Hence, inequality (3.7) of Lemma (3.5) becomes

Now, applying the standard Gronwall inequality yields the desired estimate (3.8). \(\square \)
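Inequality (3.7) itself is not reproduced in this version of the text. Suppose, as is typical when a memory term is integrated in time, that its right-hand side contains the iterated integral \(\int _0^t\int _0^s E(\tau )\,d\tau \,ds\). The change in the order of integration used in the proof is then the following elementary identity. It holds for any non-negative integrable E and \(0\le t\le T\):

\[ \int _0^t\!\!\int _0^s E(\tau )\,d\tau \,ds = \int _0^t\Big (\int _\tau ^t ds\Big )E(\tau )\,d\tau = \int _0^t (t-\tau )\,E(\tau )\,d\tau \le T\int _0^t E(\tau )\,d\tau . \]

The iterated integral is thus bounded by a single time integral whose constant is enlarged by the factor T. This is the form to which the standard Gronwall inequality applies.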

Theorem 3.6

Let \( u_0 \in H^1(\Lambda )\) and \(f \in C^1\left( 0,T;H^1(\Lambda )\right) \), then the solution \(u_N(t)\) of (2.3) satisfies

Proof

Let \(t\in J\). Setting \(v = u_N(t)\) in (2.3), we obtain

We now estimate the terms on the right-hand side of (3.10). For the first term \(I_1\), we use hypothesis (1.4) and then apply the Cauchy–Schwarz and Young inequalities.

Next, we estimate \(I_2\) by combining the Cauchy–Schwarz and Young inequalities with the approximation property (3.5).

The estimate of \(I_3\) is an immediate consequence of Lemma 3.4, namely

Combining these estimates and choosing \(0<\varepsilon <1\) yields

Integrating both sides of (3.14) from 0 to t, we obtain

where

Thanks to the Gronwall-type inequality of Lemma 3.5, we get

Since \(u_N(0) = I_N^C u_0 = (I_N^C u_0 - u_0) + u_0\), the approximation properties (3.3) and (3.5) give \( \Vert u_N(0) \Vert \le C\Vert u_0 \Vert _1 \). The desired result then follows. \(\square \)

Let u(t) and \(u_N(t)\) be the solutions of (2.1) and (2.3), respectively, and denote

Then, we have the following estimate.

Lemma 3.7

Assume that \(u \in C^1\left( 0,T;H^r(\Lambda )\right) ,r \ge 2\), then the following estimate holds

where C is a positive constant independent of N.

Proof

For a fixed \(t\in J\), it follows from (2.1), (2.3) and (3.1) that \(\theta _N(t)\) satisfies the following error equation for all \(v\in {\mathbb {P}}_N(\Lambda )\):

Setting \(v = \theta _N(t)\) in (3.17), we obtain

where

Now, we estimate the terms on the right-hand side of (3.18) using a standard procedure. For the term \(I_1\), we apply the Cauchy–Schwarz and Young inequalities and take (1.4) into account:

Similarly, for \(I_2\) we obtain

By virtue of approximation property (3.2), we bound \(I_2\) as follows

For the term \(I_3\)

Similarly,

To estimate the term \(I_5\), we use Lemma 3.4. Setting \(w = \theta _N(t) + \rho _N(t) \) and \( v = \theta _N(t)\) in (3.6) yields

Using the triangle inequality,

Hence, by Lemma 3.1, one obtains

In view of the above estimates, inequality (3.18) becomes

By taking \(\varepsilon \) sufficiently small and integrating (3.26) over (0, t), we obtain

where

The Gronwall-type inequality of Lemma 3.5 implies

Taking into account

and approximation results (3.2) and (3.4), we obtain

Inserting (3.30) into (3.27) yields

for all \(0 < t \le T\), which is the desired result. \(\square \)

We are now in a position to state our main result concerning the convergence of the semi-discrete approximation (2.3).

Theorem 3.8

Let u(t) and \(u_N(t)\) be the solutions of (2.1) and (2.3), respectively. If \(u \in C^1\left( 0,T;H^r(\Lambda )\right) \) with \(r \ge 2\), then the following error estimate holds:

where C is a positive constant independent of N.

Proof

Using the triangle inequality, we have

With the aid of Lemmas 3.2 and 3.7, for all \(t\in J\), we obtain

This completes the proof. \(\square \)

4 Numerical experiments

In this section, we carry out several numerical experiments to verify the efficiency and accuracy of the proposed LC–PSM, and we compare our results with those obtained by other methods.

Example 4.1

In this first test problem, the following parabolic integrodifferential equation is considered

where \(f(x,t) = -(x^2-x-2)(-3e^{-t}-4t+2t^2+4)-2e^{-t}\) and \(u_0(x) = x^2 -x -2\).

The exact solution to the above integrodifferential problem is given as

Figure 1 presents the computational results obtained by applying (LC–PSM) to the above test problem, where the profiles of exact and approximate solutions as well as the absolute error are plotted.

The numerical results in Fig. 1 show that the approximate solution agrees very well with the exact solution. This confirms that LC–PSM is an accurate and efficient method for the numerical resolution of nonlocal boundary value problems of parabolic integrodifferential type.

For comparison, Tables 1 and 2 compare our computational results with those obtained in [10]. The proposed LC–PSM gives more accurate solutions with less CPU time than the finite difference scheme used in [10].

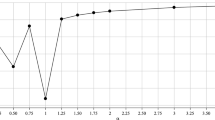

A \(L^2\)-norm versus N. B Pointwise absolute errors with \(N = 20, \Delta t = 10^{-2}\) for Example (4.2) at \(t = 1\)

Example 4.2

To examine the spatial discretization, we consider in this example a test problem whose analytic solution has limited regularity. Let us consider the following problem:

The exact solution is given as the following:

We first choose a time step small enough that the temporal discretization error is negligible, and let the polynomial degree N vary. Table 3 shows the errors in the \(L^2\) and \(L^{\infty }\)-norms at \(t=1\). Going down the table, one observes increasing accuracy until the temporal discretization error becomes dominant.

To examine the theoretical result, we plot in Fig. 2 the \(L^2\)-error versus N on a log scale, together with reference lines of decay rates \(N^{-2}\) and \(N^{-4}\). As expected, the \(L^2\)-error of LC–PSM for this example decays at a rate between \(N^{-3}\) and \(N^{-4}\). This supports the result established in Theorem 3.8, since \(u \in H^3(\Lambda )\) and \(u \notin H^4(\Lambda )\).
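The decay rate read off a log-scale plot such as Fig. 2 is the negative slope of a straight line through the points \((\log N, \log \text{error})\). The following minimal sketch assumes NumPy, and the helper name `fitted_decay_rate` is hypothetical. The data below are synthetic, generated from \(3N^{-3.5}\), and are not the entries of Table 3.

```python
import numpy as np


def fitted_decay_rate(Ns, errors):
    """Return p from a least-squares fit log(error) = log(c) - p * log(N)."""
    slope, _intercept = np.polyfit(np.log(Ns), np.log(errors), 1)
    return -slope


# Synthetic data (not the values of Table 3): errors generated from 3 * N**-3.5.
Ns = np.array([8, 16, 32, 64, 128])
errors = 3.0 * Ns.astype(float) ** -3.5
print(round(fitted_decay_rate(Ns, errors), 6))  # 3.5
```

In practice, the fit should only include values of N for which the spatial error dominates. Once the temporal error takes over, the error curve flattens and the fitted slope underestimates the spatial rate.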

5 Conclusions

In this paper, we have implemented and analyzed a spectral method for a class of parabolic integrodifferential equations subject to nonlocal boundary conditions of Neumann type. For the spatial discretization, we used the Legendre spectral method based on a Galerkin formulation. For the temporal discretization, we used the second-order Crank–Nicolson finite difference scheme. A rigorous error analysis in the \(L^2\)-norm has been carried out for the proposed method, and the numerical examples support the theoretical results. Moreover, a comparison with a fully finite difference scheme shows that the presented method is computationally superior and requires less CPU time. Other higher-order methods could be used for time integration to improve the accuracy of the full discretization. The stability and convergence of such combinations remain open questions.

In future work, we plan to investigate space–time spectral methods for this class of problems and for other challenging models, such as nonlocal boundary value problems in two dimensions and fractional integrodifferential problems.

References

Bencheikh, A.; Chiter, L.; Li, T.: Solving parabolic integro-differential equations with purely nonlocal conditions by using the operational matrices of Bernstein polynomials. J. Nonlinear Sci. Appl. 11(5), 624–634 (2018)

Boumaza, N.; Gheraibia, B.: On the existence of a local solution for an integro-differential equation with an integral boundary condition. Bol. Soc. Math. Mex. 26, 521–534 (2020)

Bouziani, A.; Mechri, B.: The Rothe’s method to a parabolic integrodifferential equation with a nonclassical boundary conditions. Int. J. Stoch. Anal. 2010, 1–16 (2010)

Canuto, C.; Hussaini, M.Y.; Quarteroni, A.; Zang, T.A.: Spectral Methods: Evolution to Complex Geometries and Applications to Fluid Dynamics. Springer, Berlin (2007)

Chattouh, A.; Saoudi, K.: Legendre–Chebyshev pseudo-spectral method for the diffusion equation with non-classical boundary conditions. Moroc. J. Pure Appl. Anal. 6(2), 303–317 (2020)

Chattouh, A.; Saoudi, K.: Error analysis of Legendre–Galerkin spectral method for a parabolic equation with Dirichlet-type non-local boundary conditions. Math. Model. Anal. 26(2), 287–303 (2021)

Christensen, R.M.: Theory of Viscoelasticity. Academic Press, New York (1971)

Cushman, J.H.; Ginn, T.R.: Nonlocal dispersion in media with continuously evolving scales of heterogeneity. Transp. Porous Media 13(1), 123–138 (1993)

Dagan, G.: The significance of heterogeneity of evolving scales to transport in porous formations. Water Resour. Res. 30(12), 3327–3336 (1994)

Dehilis, S.; Bouziani, A.; Oussaeif, T.: Study of solution for a parabolic integrodifferential equation with the second kind integral condition. Int. J. Anal. Appl. 16(4), 569–593 (2018)

Fardi, M.; Ghasemi, M.: Solving nonlocal initial-boundary value problems for parabolic and hyperbolic integro-differential equations in reproducing kernel Hilbert space. Numer. Methods Partial Differ. Equ. 33(1), 174–198 (2017)

Guezane-Lakoud, A.; Belakroum, D.: Time-discretization schema for an integrodifferential Sobolev type equation with integral conditions. Appl. Math. Comput. 218(9), 4695–4702 (2012)

Gurtin, M.E.; Pipkin, A.C.: A general theory of heat conduction with finite wave speed. Arch. Ration. Mech. Anal. 31(2), 113–126 (1968)

Ma, H.P.: Chebyshev–Legendre spectral viscosity method for nonlinear conservation laws. SIAM J. Numer. Anal. 35(3), 869–892 (1998)

Merad, A.; Martin-Vaquero, J.: A Galerkin method for two-dimensional hyperbolic integro-differential equation with purely integral conditions. Appl. Math. Comput. 291, 386–394 (2016)

Merad, A.; Bouziani, A.; Ozel, C.; Kiliçman, A.: On solvability of the integrodifferential hyperbolic equation with purely nonlocal conditions. Acta Math. Sci. 35(3), 1–9 (2015)

Miller, R.K.: An integro-differential equation for rigid heat conductors with memory. J. Math. Anal. Appl. 66, 313–332 (1978)

Raynal, M.: On some nonlinear problems of diffusion. Lect. Notes Math. 737, 251–266 (1979)

Renardy, M.: Mathematical analysis of viscoelastic flows. Ann. Rev. Fluid Mech. 21, 21–36 (1989)

Shen, J.; Tang, T.; Wang, L.L.: Spectral Methods: Algorithms, Analysis and Applications. Springer, Heidelberg (2011)

Thieme, H.R.: A model for the spatial spread of an epidemic. J. Math. Biol. 4(4), 337–351 (1977)

Zhao, T.; Li, C.; Zang, Z.; Wu, Y.: Chebyshev–Legendre pseudo-spectral method for the generalised Burgers–Fisher equation. Appl. Math. Model. 36(3), 1046–1056 (2012)

Funding

This work has been partially supported by Ministry of Higher Education and Scientific Research of Algeria, under PRFU project N: C00L03UN400120210001.


Ethics declarations

Conflict of interest

The author declares that he has no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chattouh, A. Numerical solution for a class of parabolic integro-differential equations subject to integral boundary conditions. Arab. J. Math. 11, 213–225 (2022). https://doi.org/10.1007/s40065-022-00371-3
