Abstract

High-entropy alloys (HEAs) have attracted much attention for laser additive manufacturing, due to their superb mechanical properties. However, their industry application is still hindered by the high entry barriers of design for additive manufacturing and the limited performance library of HEAs. In most machine learning methods used to predict the properties of HEAs, their processing paths are not clearly distinguished. To overcome these issues, in this work, a novel deep neural network architecture is proposed that includes HEA manufacturing routes as input features. The manufacturing routes, i.e., as-cast and laser additive manufactured samples, are transformed into the One-Hot encoder. This makes the samples in the dataset provide better directivity and reduces the prediction error of the model. Data augmentation with conditional generative adversarial networks is employed to obtain some data samples with a distribution similar to that of the original data. These additional added data samples overcome the shortcoming of the limited performance library of HEAs. The results show that the mean absolute error value of the prediction is 44.6, which is about 27% lower than that using traditional neural networks in this work. This delivers a new path to discover chemical compositions suitable for laser additive manufactured HEAs, which is of universal relevance for assisting specific additive manufacturing processes.

Similar content being viewed by others

Introduction

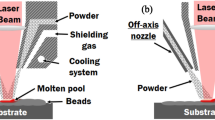

The birth of the concept of high-entropy alloys (HEAs) offers unprecedented freedom for designing high-performance alloys.1,2 This provides a promising strategy to break the long-standing shackles of compositional design in search of high-performance metallic materials. HEAs are composed of multi-principal elements, and differ from conventional alloys. They have attracted interest in potential structural applications due to their outstanding mechanical properties.3,4,5 New routes, e.g., fabrication methods, composition modifications, or a combination of both,6,7 remain necessary to obtain HEAs with an excellent combination of properties. Among the routes, laser-related additive manufacturing is a disruptive manufacturing technology to make three-dimensional objects. Therefore, in recent years, laser additive manufactured HEAs have attracted considerable interest in both science and engineering. However, there is still a challenge to establish microstructure–performance linkages in additive manufactured HEAs. One of the most important and well-studied performance metrics for the evaluation of additive manufactured alloys is the Vickers hardness.8,9 For conventional alloys with specific compositions, there is still a wide variation in hardness values measured experimentally.10 The trial-and-error method remains one of the most common approaches to overcome difficulties in the selection for structural applications.11 On the other hand, considering the existence of multiple elements and the wide range of element concentrations, the composition space of HEAs tends to be much wider than that of conventional alloys. The presence of some expensive elements, e.g., tantalum12 and hafnium,13 directly increases the cost of HEAs, making complete exploration of all compositions of HEAs difficult and expensive. Some computational methods, e.g., the calculated phase diagram,14 density functional theory,15,16,17 molecular dynamics,18,19 and other methods,20 have accelerated the understanding of microstructure–performance linkages in HEAs. It is still difficult to quickly develop high-performance HEAs prepared using laser additive manufacturing, considering both the extensive unexplored space of compositions for HEAs and unacceptable costs for modeling and simulation.

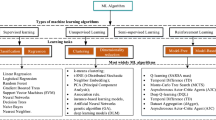

Throughout the past few years, machine learning (ML) algorithms, e.g., deep learning (DL),21,22 have gradually gained popularity and attracted the attention of many people in the field of materials design. The algorithms can perform nonlinear fitting between input and output data, construct complex connections and rules, and predict material properties.23,24,25 Based on the available experimental data of HEAs, Uttam et al.'s work26 shows how to forecast the yield strength of HEAs at the desired temperature utilizing the random forest (RF) regressor model. Islam et al.'s work27 provides a neural network (NN) technique for predicting phases. A variety of ML algorithms have been used in Yegi et al.'s work,28 including support vector machine (SVM) classifiers, artificial neural networks (ANN), logistic regression and decision trees for HEA phase prediction, with ANN showing a higher level of accuracy than the others. This shows that combining deep neural networks (DNN) and conditional generative adversarial networks (CGAN) can increase the accuracy of NNs in forecasting the phase of high-entropy alloys in Lee et al.'s work.29 In the area of hardness of HEAs, Chen et al.'s work30 shows a material design approach that incorporates ML models with experimental design techniques for obtaining HEAs with large hardness in the Al-Co-Cr-Cu-Fe-Ni model system. Based on RF models, the hardness of HEAs with a single solid solution phase can be better predicted than by physically modelled solid solution hardening in Huang et al.'s work.31 Chen et al.'s work32 shows that an SVM model utilized to predict the enhanced hardness is proposed to promote the design of HEAs. It shows that a model based on the RF regression model is built to provide predictions about the hardness and ultimate tensile strength of complex concentrated alloys in Jie et al.'s work.33 It shows that ML has been used to develop the solid-solution strengthening model for HEAs, and the model shows superior prediction performance when predicting solid-solution hardness and strength in Wen et al.'s work.34 Chang et al.'s work35 shows that an ANN model can be used to predict the hardness of nonequimolar AlCoCrFeMnNi, combined with a simulated annealing algorithm, aiming at optimal prediction results. Debnath et al.’s work36 shows that several ANN models are designed to predict the hardness and Young’s modulus of HEAs with the input features of 11 chemical elements. Mahmoud et al.’s research37 shows that employing ANN models for HEA phase and hardness prediction utilizing chemical composition as the input features is possible, and that the accuracy of the ANN model to predict hardness is higher than that of other ML regression models. This shows that the hardness of refractory HEAs can be forecast with input features, such as the melting temperature, shear modulus, and mixed entropy of alloys in Uttam et al.'s work.38 The use of ML to predict the properties of HEAs shows significant potential, and its development is crucial. For hardness prediction models, the most commonly used input features are elemental parameters, electronic parameters, thermodynamic characteristics, physical properties, chemical characteristics, and simulations of hand-designed parameters. However, different manufacturing routes yield different hardness values for HEAs made up of the same element group and composition. ML models have not included manufacturing routes as part of the input features in the prior literature.

Here, a DNN architecture that includes HEA manufacturing routes as input features is proposed. The manufacturing routes are transformed into the One-Hot encoder, which is merged with elemental parameters, electronic parameters, thermodynamic parameters, and so on. This makes the samples in the dataset provide better directivity and reduces the prediction error of the model. In addition, data augmentation with CGAN is employed, aiming to obtain some data samples with a distribution similar to that of the original data. These additional data samples can further improve the accuracy of the hardness prediction model. The impact and significance of the eigenvalues are analyzed through the hardness prediction results.

Methods

Data Collection

Data collection was carried out using the published literature.39,40,41,42,43 In previous studies,39,40,41,42,43 a large number of Vicker hardness values for HEAs fabricated by casting and laser additive manufacturing were collected. After preprocessing the data, it takes the average hardness value when the values are similar in the literature for HEAs of the same composition and discards the literature data with large deviations (relative error > 10%). Furthermore, heat-treated and laser-remelted hardness values were removed to eliminate the influence of experimental errors and process treatments on the predicted hardness of the DL model. Overall, there are 324 HEAs in the dataset, of which 209 are as-cast and 115 are laser additive manufactured.

The feature parameters commonly used for ML to predict the hardness of HEAs are melting temperature, valence electron concentration, mixing enthalpy, mixing entropy, atomic size difference, and electronegativity difference, which can indirectly affect the hardness of HEAs due to the formation of phases. The characteristic parameters relevant to the mechanical properties are selected as the shear modulus and the differences in shear modulus, as shown in Table I.44,45,46,47

DNN

The DNN is derived from the traditional ANN by expanding the number of neurons and widening the number of hidden layers, which possess better feature extraction and nonlinear fitting ability. In this study, the feature parameters in Table I are inserted into the input layer of the NN, and the fabrication methods of the HEAs are also put into the input layer together. As the fabricated methods are discrete variables, they need to be transformed into a One-Hot encoder and then merged with other feature parameters. As shown in Fig. 1, the One-Hot encoder '01' represents the fabricated method as 'as-cast', and '10' denotes the fabricated method as 'laser additive manufactured'.

CGAN

Generative adversarial networks (GANs)48 are DNN models for adversarial training that consist of mutually independent data generators and data discriminators. The generator randomly collects noisy variables conforming to a Gaussian distribution and obtains the generated data through complex nonlinear variations of the generative network. The input of the discriminator consists of the generated data and the real data, and the judgment of the data source is completed after the training of the discriminative network. After a large amount of adversarial training, the discriminator is unable to judge the source of the input data, i.e., the distribution of the generated data is consistent with the real data, and, finally, the purpose of dataset enhancement is achieved. The objective function is shown as:

where \(E\) is the expected value of the objective function, \(x_{{}}\) the true data, \({\text{z}}_{{}}\) the random noise variable, \(P_{{{\text{data}}}}\) the true sample distribution, \(P_{z}\) the false sample distribution generated by the generator, \(G(z)\) the false sample output by the generator, \(D(x)\) the probability that the true data output by the discriminator model is true, and \(D(G(z))\) the probability that the generated data output by the discriminator model is true.

A GAN lacks efficiency due to the high model freedom, high randomness, and uncontrollability of generated data. The CGAN49 is based on the traditional GAN by labeling the data with the HEA manufacturing routes, so that the generated data are constrained and the generator accepts additional category information to generate data-designated casting or laser additive manufacturing, which improves the efficiency of the model. The objective function is:

where \(P_{data} (x)\) is the output of the discriminator after adding the manufacturing method label, and \(P_{data} (x)\) refers to the output of the generator after adding the manufacturing method label. The output structure diagram is shown in Fig. 2.

DL

The DL model used to predict values of Vickers hardness for HEAs is a linear regression model, so the last layer of output is selected as a linear output. Thus, the loss function is chosen to be the mean square error (MSE), as shown in:

where \(y_{i}\) is the true value and \(\hat{y}_{i}\) is the predicted value. The backpropagation algorithm is utilized to train the NN, which involves comparing the actual value to the predicted value and propagating the error back to the previous layer, iterating and updating the weight and bias parameters. The process is then repeated until the output error decreases below a particular criterion. Due to the different ranges of each feature, the input must be normalized by the Pandas library, which can speed up the learning of the DNN, as indicated in:

where \(X_{{{\text{new}}}}\) denotes the normalized data, \(X_{\min ,i}\) is the minimum value, and \(X_{\max ,i}\) is the maximum value. The normalization makes eigenvalues range from − 1 to 1. With the DNN, the expanded number of layers and the increased number of neurons impose a higher computational burden on the network's training, while gradient disappearances and gradient explosions are more likely to occur, influencing the training of the model. The approaches of learning rate decay, dropout, and batch normalization are used to optimize the training process in this work. Table II summarizes the hyperparameters.

Results and Discussions

Data Analysis

Redundant and irrelevant features enhance the training difficulty in the DL process. Therefore, feature analysis and feature dimensionality reduction based on Pearson correlation coefficients are presented in:50

where \(X_{i}\) and \(Y_{i}\) denote the two input feature values, \(\overline{X}\) and \(\overline{Y}\) represent the mean values of the two input features, and \(n\) is the sample number. In total, correlation values range from − 1 to 1, with 0 indicating no correlation, 1 denoting a significant positive correlation, and − 1 representing a significant negative correlation. Figure 3 shows the correlation values computed from the features.

This means that there is no significant correlation between the two features, as shown in Fig. 3, since the values of the members are distributed between − 0.69 and 0.70. Additionally, the matrix values do not contain a zero value, indicating that a correlation exists between any two features. The valence electron concentration shows a positive correlation with the shear modulus and a negative correlation with the melting temperature, which means that, when the valence electron concentration increases, the shear modulus increases and the melting temperature decreases. Generally, all features can be utilized as inputs to a DL model, and no features are redundant or irrelevant.

DNN Results

The dataset is usually divided into three parts in the DL process: training, validation, and testing. The test set does not appear in the model training process and is only used for the final evaluation of the model. This study utilizes the Keras framework to construct DL models, dividing the dataset into a 60% training set, 20% validation set, and 20% test set. With the gradient descent method, the training and validation sets are combined to train the NN. To obtain the optimal model with the appropriate hyperparameters, 4-fold cross-validation is performed to ensure that each data point in the training set and validation set is trained and validated. The performance of the model is evaluated by the R2 score and mean absolute error (MAE), as shown in:

where \(y_{i}\) is the true value, \(\hat{y}_{i}\) is the predicted value, and \(\overline{y}\) is the mean value.

DL-A is trained by directly inputting the element parameters, thermodynamic characteristics, and physical characteristics into the NN, and the result is shown in Fig. 4a. DL-B is trained by transforming the fabricated methods into the One-Hot encoder, merging it with other feature values and feeding it into the NN, as illustrated in Fig. 4b.

Figure 4a and b shows that, as the number of training epochs increases, the error of both models decreases. Due to the addition of a regularization strategy such as dropout to the training process, training accuracy is sacrificed to improve validation accuracy, resulting in a smaller validation loss than training loss. The training and validation curves of DL-A exhibit a larger oscillation trend in Fig. 4a, the final training result does not show convergence, and a similar result was obtained in the study of Uttam et al.38 As a result of different manufacturing paths, the HEAs with the same element group and composition show different hardness values, which means that the label values differ. However, their eigenvalues are the same, i.e., the mixing enthalpy, mixing entropy, atomic size difference, etc. DL is an algorithm that delivers data and answers to the DL model, and the model searches for the pattern between the data and the answers. It is important to note that the same input features correspond to multiple numerical labels of hardness, which affect the performance of the model during backpropagation and cause up and down oscillations in error. Mahmoud et al.'s research37 also proposed that the manufacturing routes of HEAs should be taken into consideration when predicting the hardness by utilizing DL. Compared with DL-A, the training and validation curves of DL-B tend to oscillate less and eventually converge in Fig. 4b. In DL-B, the manufacturing routes are transformed into the One-Hot encoder and merged to the feature values, and all the samples correspond uniquely to the numerical labels of hardness, which eliminates the mistake of multiple numerical labels for the same input features. Therefore, the training curve of DL-B is smoother than that of DL-A, and the loss value on the validation set is less than that of DL-A. The training performance for DL-B is superior to that of DL-A.

The data samples in the test set are input into DL-A and DL-B to test the final outcome of the models, and the results are shown in Fig. 4c and d. Figure 4c shows the prediction results of DL-A, while Fig. 4d shows the outcome of DL-B. DL-A does not distinguish between the manufacturing methods, so all the samples in the test set are represented in blue. The manufacturing methods are separated in DL-B, where red samples are as-cast and blue samples are laser additive manufactured. According to the prediction results, the MAE value for the hardness of DL-A is 61.4, and the MAE value of DL-B is 47.4. The MAE value of DL-B is lower than that of DL-A. The R2 score is calculated by comparing the value of the mean value as a benchmark with the prediction error to determine whether it is larger or smaller than the mean benchmark error, so the value should be as close to 1 as possible. The R2 values for DL-A and DL-B are 0.71 and 0.84, respectively. This indicates that DL-B outperforms DL-A on the test set. This shows that, when using DL to predict the hardness value of HEAs, there need to be distinctions made in manufacturing methods. Not all data samples can be fed directly into a model for training.

CGAN Results

The CGAN model for data augmentation is built by adding the manufacturing routes of HEAs (data labels) to the generator and discriminator. The architecture can generate two different types of data and is trained by the existing 324 data samples. First, n noisy samples are randomly extracted from the Gaussian distributed data, \(P_{z} (z)\), and the generated data are obtained after feeding them into the generative network. Then, n samples with labels are randomly taken from the real data, \(P_{{{\text{data}}}} (x)\), and input to the discriminator network together with the generated data, and the discriminator parameters are updated according to the network loss values. After the discriminant network parameters are updated and kept unaltered, n samples are randomly obtained from the noise distribution again and input to the generative network, and the generator parameters are updated based on the network loss values. The training network parameters are shown in Table III.

In previous studies, the number of samples manufactured by as-cast far exceeded the number made by laser additive manufacturing. Additionally, the number of samples influences the prediction performance of the model; the more samples that are collected, the more features the model learns from them. Thus, CGAN-based data augmentation can enhance the number of samples, which can improve the model's accuracy. After training the model, the accuracy of the discriminator to distinguish real data and generated data is shown in Fig. 5.

Data samples of HEAs are produced for both manufacturing routes with the generator. The result in Fig. 5 shows that the discriminator's judgment on generated data tends to increase as the number of epochs increases, while the judgment on real data tends to decrease. When the number of trainings reaches 630, the discriminator's accuracy for real data is 0.48 and that for generated data is 0.49, which are both approximately 0.50. As the amount of training continues to rise, the disparity between the discriminator's accuracy for real data and that for generated data increases. This indicates that the generator and the discriminator are close to Nash equilibrium when the training times reach 630, and the discriminator is unable to determine whether the source of the input data is the generated data or the real data, i.e., the generated data are very similar to the real data.

The generated data were processed and analyzed. The generated dataset contains some very similar data samples, so only one set will be retained, and the rest will be discarded. Simultaneously, the generated data samples have been compared to the data samples in the test set, and samples in the generated data that are very similar to those in the test set were eliminated, preventing the leak of the test set resulting in model cheating. In summary, 67 generated data points were obtained from the two manufacturing methods, including 41 from as-cast and 26 from laser additive manufacturing. Figure 6 shows the distribution of the generated data.

As shown in Fig. 6, the radar graph illustrates the distribution of the eight input features and hardness for the real data and the CGAN-generated data. Figure 6a shows the true data distribution, which includes both casting and laser additive manufacturing. The data generated by the CGAN for the two methods are plotted in Fig. 6b depending on the distribution of the eight design parameters. These generated data were obtained via the generator rather than by random extraction from the original real data. Thus, these generated data are not the same as the original actual data. As depicted in Fig. 6, the generated and real data exhibit similar distribution trends, and the values of each feature have been taken within the range of the real data values, indicating that the two are similar. To increase the number of samples within the training set, these generated data were added to the training set. The hardness values of HEAs are predicted by DNN trained on 4-fold cross validation. Figure 7 shows the overall training model, and Fig. 8 shows the result of CGAN and DNN.

As shown in Fig. 8a, the CGAN and DNN training curve is smoother than the DNN training curve. The increased number of samples allows the model to extract and learn features from more data, while more samples make the average error of the model tend to be less sensitive. Nevertheless, in comparison to the training error of the DNN, there is less tendency to further reduce the final training error of the CGAN and DNN. Figure 8b shows the model performance on the test set, and Table III lists the assessment metrics for the final three models.

The results show that the prediction of the DNN model (DL-B) with the addition of the manufacturing route labels as the One-Hot encoder is significantly better than that of the normal DNN (DL-A), due to the better directionality and attribution of the samples. There will be no cases where the same input feature values correspond to different hardness values, causing the model to extract features inaccurately. The MAE value of DL-A is 61.4, and the R2 score is 0.71, while the MAE value of DL-B is 47.4 and the R2 score is 0.84. For the CGAN and DNN model, the MAE value is 44.6, and the R2 score is 0.85. Table IV presents the results in this work and in related publications. Among the models, different evaluation methods have been adopted, but they can still provide an indicative role.

The improvement effect is smaller compared with the DL-B model because the number of generated data samples is short. The CGAN has certain training sample requirements, and adequate data are required to achieve network training convergence. A Nash equilibrium can only be achieved by optimizing the hyperparameters continuously when the data volume is limited. Furthermore, in this case, the generated sample size is expanded; however, it does not significantly increase the diversity of the samples and is close to a simple replication. Since the generated samples with highly similar distributions to the test set are removed, the number of samples available for data augmentation is small, which leads to a slight improvement of the DNN model for HEA hardness prediction. However, Lee et al.'s work29 shows that employing CGAN and DNN can substantially improve the ability to predict HEA phases. As it utilizes 989 samples as the basis for the generation, the number and diversity of the produced samples are guaranteed by sufficient real data. Therefore, data augmentation by CGAN on a smaller dataset has limited improvement on the model prediction performance of DNN.

Relative Significance of Input Features

To verify the importance of the 8 feature values, single factor evaluation experiments are conducted by the CGAN-DNN architecture. For a total of eight experiments, one input feature is removed from each experiment, and the remaining feature values are fed into the neural network for training to observe the degree of change in the average error of the predicted hardness values for the HEAs. Figure 9 shows the variation in error across the eight studies, indicating that each of the eight parameters increases the hardness value error. Compared to other eigenvalues, mixing entropy and valence electron concentration tend to have a more significant effect on the error in predictions. As shown in the high entropy effect, the increase in the mixing entropy value can reduce the Gibbs free energy of HEAs, facilitating stable solid solution phases to form. A similar trend relationship exists between phase and hardness,51,52 with the transition from face-centered cubic to body-centered cubic corresponding to a transition from low to high hardness. It shows that the more severe lattice distortion that the body-centered cubic structure exhibits allows in more solid solution strengthening of it, which enhances the hardness. In accordance with the Hume-Rothery rules, the stability of a solid solution is impacted by the number of valence electrons per atom, and the phase of an alloy can be determined by element valence. The results suggest that mixing entropy and the valence electron concentration regulate the phase formation of HEAs to influence their hardness indirectly. Although the bond distances of different atom sizes vary, making the lattice distortion more severe to boost the hardness, electronic reaction impacts the bond strength of elemental atoms to regulate the underlying mechanical properties of HEAs.53 Furthermore, different lattice architectures display different atomic radii of the same atomic species, so as opposed to atomic size differences, charge transferring what produces the alteration in atomic-level pressure is the primary reason for solid solution strength.54 It suggests that the simple parameters related to electronegativity differences, entropy, and radius, although not always decisive, are strongly indicative in design of advanced HEAs for laser additive manufacturing. Thus, an ideal combination among such parameters can harden and strengthen laser additive manufactured HEAs.

Conclusion

The industry application of additively manufactured HEAs is hindered by the high entry barriers of design for additive manufacturing and the limited performance library of HEAs. In this work, to overcome these issues, a novel deep neural network architecture is proposed that includes high-entropy alloy manufacturing routes as input features, i.e., as-cast and laser additive manufactured samples. The manufacturing routes are transformed into the One-Hot encoder, which is merged with various physical parameters. This makes the samples in the dataset provide better directivity and reduces the prediction error of the model. Data augmentation with conditional generative adversarial networks has been employed to obtain some data samples with a distribution similar to that of the original data. These additional added data samples overcome the shortcoming of the limited performance library of HEAs. Based on the ML results, the simple parameters related to electronegativity differences, entropy, and radius, although not always decisive, are strongly indicative in the design of advanced HEAs for laser additive manufacturing. This work delivers a new path to discover chemical compositions suitable for laser additive manufactured HEAs, which is of universal relevance in assisting the design of HEAs for specific additive manufacturing processes.

References

Z.Y. Rao, P.-Y. Tung, R.W. Xie, Y. Wei, H.B. Zhang, A. Ferrari, T.P.C. Klaver, F. Körmann, P.T. Sukumar, A.K. da Silva, Y. Chen, Z.M. Li, D. Ponge, J. Neugebauer, O. Gutfleisch, S. Bauer, and D. Raabe, Science 378, 78 (2022).

Q.S. Pan, L.X. Zhang, R. Feng, Q.H. Lu, K. An, A.C. Chuang, J.D. Poplawsky, P.K. Liaw, and L. Lu, Science 374, 984 (2021).

Z.Z. Li, S.T. Zhao, R.O. Ritchie, and M.A. Meyers, Prog. Mater. Sci. 102, 296 (2019).

P. Sathiyamoorthi, and H.S. Kim, Prog. Mater. Sci. 123, 100709 (2022).

Y.S. Tian, W.Z. Zhou, Q.B. Tan, M.X. Wu, S. Qiao, G.L. Zhu, A.P. Dong, D. Shu, and B.D. Sun, Trans. Nonferrous Metal. Soc. China 32, 3487 (2022).

J.W. Pegues, M.A. Melia, R. Puckett, S.R. Whetten, N. Argibay, and A.B. Kustas, Addit. Manuf. 37, 101598 (2021).

C.X. Han, J.Q. Zhi, Z. Zeng, Y.S. Wang, B. Zhou, J. Gao, Y.X. Wu, Z.Y. He, X.M. Wang, and S.W. Yu, Appl. Surf. Sci. 623, 157108 (2023).

H. Dobbelstein, E.L. Gurevich, E.P. George, A. Ostendorf, and G. Laplanche, Addit. Manuf. 24, 386 (2018).

S.Y. Zhang, B. Han, T.M. Zhang, Y.H. Chen, J.L. Xie, Y. Shen, L. Huang, X.W. Qin, Y.B. Wu, and K.J. Pu, Intermetallics 159, 107939 (2023).

N. Khatavkar, S. Swetlana, and A.K. Singh, Acta Mater. 196, 295 (2020).

A. Jahan, M.Y. Ismail, S.M. Sapuan, and F. Mustapha, Mater. Des. 31, 696 (2010).

W.Y. Huo, H. Zhou, F. Fang, X.F. Zhou, Z.H. Xie, and J.Q. Jiang, J. Alloys Compd. 735, 897 (2018).

H. Dobbelstein, E.L. Gurevich, E.P. George, A. Ostendorf, and G. Laplanche, Addit. Manuf. 25, 252 (2019).

O.N. Senkov, J.D. Miller, D.B. Miracle, and C. Woodward, Calphad 50, 32 (2015).

S.Q. Wang, B.L. Xu, W.Y. Huo, H.C. Feng, X.F. Zhou, F. Fang, Z.H. Xie, J.K. Shang, and J.Q. Jiang, Appl. Catal. B 313, 121472 (2022).

W.Y. Huo, S.Q. Wang, F.J. Dominguez-Gutierrez, K. Ren, L. Kurpaska, F. Fang, S. Papanikolaou, H.S. Kim, and J.Q. Jiang, Mater. Res. Lett. 11, 713 (2023).

X. Wang, X.F. Li, H.Q. Xie, T.W. Fan, L. Zhang, K.Y. Li, Y.K. Cao, X.H. Yang, B. Liu, and P.K. Bai, J. Mater. Res. Technol. 23, 1130 (2023).

L. Xie, P. Brault, A.-L. Thomann, and J.-M. Bauchire, Appl. Surf. Sci. 285B, 810 (2013).

Q.W. Guo, H. Hou, Y. Pan, X.L. Pei, Z. Song, P.K. Liaw, and Y.H. Zhao, Mater. Des. 231, 112050 (2023).

P. Zhang, S.X. Wang, Z.Y. Lin, X.J. Yue, Y.R. Gao, S.T. Zhang, and H.J. Yang, Vacuum 211, 111939 (2023).

S. Wang, D. Li, and J. Xiong, Trans. Nonferrous Metal. Soc. China 33, 518 (2023).

Z.H. Li, L. Qin, B.S. Guo, J.P. Yuan, Z.G. Zhang, W. Li, and J.W. Mi, Acta Metall. Sin. Eng. Lett. 35, 115 (2021).

J.F. Durodola, Prog. Mater. Sci. 123, 100797 (2022).

M.E. Haque, and K.V. Sudhakar, Int. J. Fatig. 23, 1 (2001).

I. Mohanty, D. Bhattacharjee, and S. Datta, Comput. Mat. Sci. 50, 2331 (2011).

U. Bhandari, M.R. Rafi, C.Y. Zhang, and S.Z. Yang, Mater. Today Commun. 26, 101871 (2021).

N. Islam, W.J. Huang, and H.L. Zhuang, Comput. Mater. Sci. 150, 230 (2018).

Y.V. Krishna, U.K. Jaiswal, and M.R. Rahul, Scr. Mater. 197, 113804 (2021).

S.Y. Lee, S. Byeon, H.S. Kim, Y.H. Jin, and Y.S. Lee, Mater. Des. 197, 109260 (2021).

C. Wen, Y. Zhang, C.X. Wang, D.Z. Xue, Y. Bai, S. Antonov, L.H. Dai, T. Lookman, and Y.J. Su, Acta Mater. 170, 109 (2019).

X.Y. Huang, C. Jin, C. Zhang, H. Zhang, and H.W. Fu, Mater. Des. 211, 110177 (2021).

C. Yang, C. Ren, Y.F. Jia, G. Wang, M.J. Li, and W.C. Lu, Acta Mater. 222, 117431 (2022).

J. Xiong, S.-Q. Shi, and T.-Y. Zhang, J. Mater. Sci. Technol. 87, 133 (2021).

C. Wen, C.X. Wang, Y. Zhang, S. Antonov, D.Z. Xue, T. Lookman, and Y.J. Su, Acta Mater. 212, 116917 (2021).

Y.-J. Chang, C.-Y. Jui, W.J. Lee, and A.-C. Yeh, JOM 71, 3433 (2019).

B. Debnath, A. Vinoth, M. Mukherjee, S. Datta, and I.O.P. Conf, Ser. Mater. Sci. Eng. 912, 052021 (2020).

M. Bakr, J. Syarif, and I.A.T. Hashem, Mater. Today Commun. 31, 103407 (2022).

U. Bhandari, C.Y. Zhang, C.Y. Zeng, S.M. Guo, and S.Z. Yang, Crystals 11, 46 (2021).

Z.U. Arif, M.Y. Khalid, A.A. Rashid, E. ur Rehman, and M. Atif, Opt. Laser Technol. 145, 107447 (2022).

Z.U. Arif, M.Y. Khalid, E. ur Rehman, S. Ullah, and M. Atif, J. Manuf. Proc. 68B, 225 (2021).

C.K.H. Borg, C. Frey, J. Moh, T.M. Pollock, S. Gorsse, D.B. Miracle, O.N. Senkov, B. Meredig, and J.E. Saal, Sci. Data 7, 430 (2020).

S. Gorsse, M.H. Nguyen, O.N. Senkov, and D.B. Miracle, Data Brief 21, 2664 (2018).

F.Y. Tian, L.K. Varga, N.X. Chen, J. Shen, and L. Vitos, Intermetallics 58, 1 (2015).

S.S. Fang, X.S. Xiao, L. Xia, W.H. Li, and Y.D. Dong, J. Non-Cryst. Sol. 321, 120 (2003).

Y. Zhang, Y.J. Zhou, J.P. Lin, G.L. Chen, and P.K. Liaw, Adv. Eng. Mater. 10, 534 (2008).

C.T. Liu, Int. Metall. Rev. 29, 168 (1984).

J.H. Zhu, P.K. Liaw, and C.T. Liu, Mater. Sci. Eng. A 239–240, 260 (1997).

I. Goodfellow, J. Pouget-Abadie, M. Mirza, B. Xu, D. Warde-Farley, S. Ozair, A. Courville, and Y. Bengio, Commun. ACM 63, 139 (2020).

M. Mirza, S. Osindero, arXiv (2014) preprint arXiv: 1411.1784. https://doi.org/10.48550/arXiv.1411.1784.

W.H. Zhu, W.Y. Huo, S.Q. Wang, X. Wang, K. Ren, S.Y. Tan, F. Fang, Z.H. Xie, and J.Q. Jiang, J. Mater. Res. Technol. 18, 800 (2022).

W.-R. Wang, W.-L. Wang, S.-C. Wang, Y.-C. Tsai, C.-H. Lai, and J.-W. Yeh, Intermetallics 26, 44 (2012).

J.Y. He, W.H. Liu, H. Wang, Y. Wu, X.J. Liu, T.G. Nieh, and Z.P. Lu, Acta Mater. 62, 105 (2014).

H.T. Zhang, H.D. Fu, X.Q. He, C.S. Wang, L. Jiang, L.Q. Chen, and J.X. Xie, Acta Mater. 200, 803 (2020).

H.S. Oh, S.J. Kim, K. Odbadrakh, W.H. Ryu, K.N. Yoon, S. Mu, F. Körmann, Y. Ikeda, C.C. Tasan, D. Raabe, T. Egami, and E.S. Park, Nat. Commun. 10, 2090 (2019).

Acknowledgements

The work was supported by Natural Science Foundation of the Jiangsu Higher Education Institutions of China (21KJB430014), National Natural Science Foundation of China (52171110), and Jiangsu Province Natural Science Foundation, China (BK20220428). Also, support was acknowledged from the European Union Horizon 2020 Research and Innovation Program (857470), from the European Regional Development Fund via the Foundation for Polish Science, International Research Agenda PLUS program (MAB PLUS/2018/8), and the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIP) (NRF-2021R1A2C3006662, NRF-2022R1A5A1030054). For the purpose of Open Access, the authors have applied a CC-BY public copyright license to any Author Accepted Manuscript (AAM) version arising from this submission.

Funding

Natural Science Foundation of the Jiangsu Higher Education Institutions of China (21KJB430014), National Natural Science Foundation of China (52171110), Jiangsu Province Natural Science Foundation, China (BK20220428), European Union Horizon 2020 Research and Innovation Program (857470), European Regional Development Fund via the Foundation for Polish Science, International Research Agenda PLUS program (MAB PLUS/2018/8), and the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIP) (NRF-2021R1A2C3006662, NRF-2022R1A5A1030054).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhu, W., Huo, W., Wang, S. et al. Machine Learning-Based Hardness Prediction of High-Entropy Alloys for Laser Additive Manufacturing. JOM 75, 5537–5548 (2023). https://doi.org/10.1007/s11837-023-06174-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11837-023-06174-x