Abstract

We propose a greedy variational method for decomposing a non-negative multivariate signal as a weighted sum of Gaussians, which, borrowing the terminology from statistics, we refer to as a Gaussian mixture model. Notably, our method has the following features: (1) It accepts multivariate signals, i.e., sampled multivariate functions, histograms, time series, images, etc., as input. (2) The method can handle general (i.e., ellipsoidal) Gaussians. (3) No prior assumption on the number of mixture components is needed. To the best of our knowledge, no previous method for Gaussian mixture model decomposition simultaneously enjoys all these features. We also prove an upper bound, which cannot be improved by a global constant, for the distance from any mode of a Gaussian mixture model to the set of corresponding means. For mixtures of spherical Gaussians with common variance \(\sigma ^2\), the bound takes the simple form \(\sqrt{n}\sigma \). We evaluate our method on one- and two-dimensional signals. Finally, we discuss the relation between clustering and signal decomposition, and compare our method to the baseline expectation maximization algorithm.

Similar content being viewed by others

1 Introduction

Mixtures of Gaussians are often used in clustering to fit a probability distribution to some given sample points. In this work we are concerned with the related problem of approximating a non-negative but otherwise arbitrary signal by a sparse linear combination of potentially anisotropic Gaussians. Our interest in this problem stems mainly from its applications in transmission electron microscopy (TEM), where it is common to express the reconstructed 3D image as a linear combination of Gaussians [5,6,7,8]. Consequently, because the projection of a Gaussian is a Gaussian, TEM projection data can be treated as 2D images made up of linear combinations of Gaussians [14].

Methods for sparse decomposition of multivariate signals as Gaussian mixture models (GMMs) may be considered in two classes. The first class contains methods based on the expectation maximization algorithm that is commonly used for fitting a GMM to a point-cloud and adapt it to input data in the form of multivariate signals [7, 8]. The second class is the class of greedy variational methods [4, 5, 9]. The proposed method, which belongs to the latter class, is similar to—and is inspired by—both [9] and [5]. It is a continuously parameterized analogue of orthogonal matching pursuit where at each iteration the \(L^2\)-norm of the error is non-increasing. The significance of the proposed method, and what distinguishes it from earlier work on GMM decomposition of signals, is that

-

1.

The resulting GMM may contain ellipsoidal Gaussians. This allows for a sparser representation than what could be achieved with a GMM only consisting of spherical Gaussians.

-

2.

The number of Gaussians does not need to be set before-hand.

We are not aware of previous methods for GMM decomposition of signals that enjoys both of these properties at the same time.

We complement our algorithm with a theorem (Theorem 1) that upper bounds the distance from a local maximum of a GMM to the set of mean vectors. This provides theoretical support for our initialization of each new mean vector at a maximum of the residual. We remark that Theorem 1 could also be of interest in its own right. Indeed the number of modes of Gaussian mixtures has been investigated previously [1, 3], but the authors of this paper are not aware of any existing quantitative bounds on the distance from a mode of a GMM to its mean vectors in the multivariate setting.

The rest of this paper is organized as follows. In Sect. 2 we define the GMM decomposition problem. Section 3 contains a description of the proposed algorithm together with numerical examples. Section 4 is devoted to theoretical questions; in particular, we state and prove the afore-mentioned upper bound. Finally, in Sect. 5 we provide a conclusion.

2 Problem statement

For \(x_0\in \mathbb {R}^n\) and \(\Sigma \in \mathbb {R}^{n\times n}\) symmetric non-negative definite we define \(g(x_0, \Sigma )\) as the Gaussian density in n dimensions with mean vector \(x_0\) and covariance matrix \(\Sigma ^{2}\), i.e.,

where \(C_{\Sigma }\) is a normalizing factor, ensuring that \(\left|g(x_0,\Sigma )\right|_1\) \(=1\). Further, by a GMM we mean a linear combination of the formFootnote 1

The problem we consider is to construct an algorithm with the following properties. Given input in the form of a non-negative signal \(d\in \mathbb {R}^{k_1\times \dots \times k_n}\), where \(k_i\) is the number of grid points along the i:th variable, the output should be a list of GMM parameters, i.e., it is a list \((a^*_m, x_m^*, \Sigma ^*_m)_{m=1}^M\) of weights, mean vectors and square roots of covariance matrices. The output should be such that

-

1.

the residual

$$\begin{aligned} r:=d- \sum _{m=1}^Ma^*_mg(x^*_m,\Sigma ^*_m) \end{aligned}$$has a small \(L^2\)-norm. (We remark that any sufficiently regular non-negative function can be uniformly approximated arbitrarily well using GMMs; see Sect. 4.)

-

2.

the approximation is sparse, i.e., the number of Gaussians M in the sum should ideally be as small as possible given the \(L^2\)-norm of the residual.

3 Proposed method

In each iteration of our algorithm, a new Gaussian is added to the GMM by a procedure that corresponds to one iteration of a continuously parameterized version of matching pursuit (MP), c.f. [10]: The starting guess \(x_0\) for the mean vector is defined to be a global maximum of \(r'\), which is a smoothed version of the current residual r. Likewise \(\Sigma _0\) is set to be a square root of the matrix of second-order moments of \(\frac{r''}{|r''|_1}\), where \(r''\) is r restricted to a neighborhood of \(x_0\). The initial weight \(a_0\) is given by projecting the Gaussian atom defined by \((x_0, \Sigma _0)\) onto the residual, i.e., it is given as the global minimum of the convex objective \(a\rightarrow |r-ag(x_0, \Sigma _0)|_2^2, a\ge 0\).

We found that this vanilla MP type of approach was by itself not capable of producing a good approximation. Because of this, we update the already obtained Gaussians in a way that bears some resemblance to the projection step of orthogonal MP (OMP) [13]: Starting from \((a_0, x_0, \Sigma _0)\), the parameters defining the most recently added Gaussian are updated by minimizing the \(L^2\)-norm of the residual.Footnote 2 The final step of an outer iteration is to simultaneously adjust all Gaussians in the current GMM, again by minimizing the \(L^2\)-norm of the residual. We propose to use the L-BFGS-B [2] method with non-negativity constraints on the weights for all of the three afore-mentioned minimization problems. Our algorithm runs until some user-specified stopping criterion is met and is summarized in pseudo-code in Algorithm 1. Our implementation of Algorithm 1 is in Python. The source code and consequent examples below are available upon request.

We now assess the space and time complexity of the proposed method in terms of the final number M of Gaussians used and the dimension n of the input signal. Let \(N_{i}\) denote the number of parameters of i Gaussians, so \(N_i = \mathcal {O}(in^2)\). The most demanding step of a single outer iteration of the proposed algorithm is the simultaneous adjustment of all Gaussians. If this step is done using L-BFGS-B, this amounts to a cost of \(\mathcal {O}(N_i)\), both in terms of space and time [2]. Summing over the M iterations we hence find the space and time requirements of the proposed method to be \(\mathcal {O}(M^2n^2)\).

3.1 Main numerical results

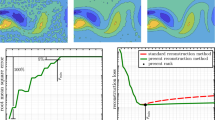

The theoretical results that our algorithm relies on are valid for any dimension. However, motivated by TEM data acquisition, we limit our examples up to 2D, which is sufficient for recovering the 3D structure [14]. As proof of concept for the proposed method, we ran our algorithm on small toy examples in 1D and 2D. We refer to the examples as Experiment 1–Experiment 3, see Figs. 1, 2, 3. A clean signal \(d_\text {clean}\) was generated by discretizing GMMs with parameters as in Table 1, 2, 3 to the grids \(\left\{ y_k = -10 + \frac{20k}{1000}\right\} _{k=0}^{1000}\) and

\(\big \{y_{k,\ell } = \big (-10 + \frac{20k}{65}, -10 + \frac{20\ell }{65}\big )\big \}_{k,\ell =0}^{64}\) in the 1D and 2D experiments, respectively. From this signal, noisy data were then generated as \(d = d_\text {clean} + \epsilon \) where \(\epsilon \) denotes white Gaussian noise with standard deviation \(\sigma _\text {noise}\). The latter was chosen so that SNR = 20, where \( \text {SNR} := 10\log _{10}\frac{\text {Var}\left( d_\text {clean}\right) }{\sigma ^2_\text {noise}}. \) As stopping criterion we used \(\text {SNR}_\text {stop} \ge 20\), where \( \text {SNR}_\text {stop} := 10\log _{10}\frac{\text {Var}\left( d_\text {est}\right) }{\text {Var}\left( d-d_\text {est}\right) }, \) and where \(d_{est} := \sum _ma_m^*g(x_m^*,\Sigma _m^*)\). In all minimization sub-procedures we used the L-BFGS-B method with non-negativity constraints on the weights and ran it until convergence. Hyper-parameters \(\tau _1\) and \(\tau _2\) (introduced in line 5 and 7 in Algorithm 1) should ideally be chosen based on the amount of noise in the data; however, we found that the exact values were not so important in our toy examples, and we used the ad-hoc chosen values \(\tau _1=10\) and \(\tau _2=20\). We leave a more careful sensitivity analysis with respect to these parameters to future studies. The total run-time was a few minutes on a computer with an Intel Pentium CPU running at 2.90GHz, using \(\sim 8\) GB of RAM. The final results of our experiments are tabulated in Table 1, 2, 3 and are plotted in Figure 1, 2, 3. Intermediate results for the 2D experiments are plotted in Figure 4, 5.

Remark 1

The number of modes of a GMM is not necessarily equal to the number of means. Indeed, there might be more modes than means [1]. Alternatively, there could be fewer modes than means, for example, in Experiment 2, a low-amplitude Gaussian and high-amplitude Gaussian are close to each other, and there is only one mode within the vicinity of both means. Our method successfully recovered the parameters of both Gaussians. There is also a mode at the center dominantly formed by the superposition of the tails of three anisotropic Gaussians, where our algorithm did not introduce a spurious Gaussian. Similar performance is observed in Experiment 3. We attribute this to the greedy nature of the algorithm where a predefined number of Gaussians is not enforced in the decomposition.

3.2 Clustering and comparison to expectation maximization

As one of the main techniques in unsupervised learning, clustering seeks to subdivide data into groups based on some similarity measure. A common approach in clustering is based on Gaussian mixtures. In this approach, one is given a point-cloud \(P\subset \mathbb {R}^n\) and the goal is to find a GMM f such that the probability that P is a sample from f is maximal among all GMMs with a prescribed number of components.

There are many ways of transforming a point-cloud into a signal and vice-versa. Thus one may use the method proposed in this paper for GMM-based clustering, and conversely, one may use methods from clustering in order to decompose a signal into a GMM. Below we illustrate both directions of this “problem transformation” with some numerical examples.

3.2.1 Clustering via signal decomposition

A sample point-cloud P of size \(10^5\) was drawn from a normalized version of the GMM in Experiment 3 above. Then a signal d was generated from P by computing a histogram based on the 2D grid used in the previous experiments. Results from the expectation maximization (EM) algorithm and the proposed method applied to P and d, respectively, are shown in Fig. 6. The two methods resulted in approximately the same likelihood of P given the computed GMMs.

3.2.2 Signal decomposition via clustering

A noisy signal d was generated as in Experiment 3 described in Sect. 3.1, except that the GMM was normalized before discretization. We then associated a point-cloud P to the noisy signal, following the methodology in [7]. More precisely, P had \(\left\lfloor {C^{-1}d(y)}\right\rfloor \) points at grid-point y, where the constant \(C := 10^{-5}\sum _yd(y)\) was chosen so that P had roughly \(10^5\) points in total. Using P we estimated a GMM with the EM algorithm. The performance of EM depended on the random initialization. A typical result using EM applied to P, along with the result from the proposed method applied to d, is shown in Fig. 7. We remark that it is possible that a better result with EM may be obtained using some other procedure for generating the point-cloud from the given signal.

4 Theoretical considerations

The main theoretical contribution of our paper is Theorem 3.4, which says that a mode of a GMM cannot lie too far away from the set of means. This theorem generalizes the 1D result that any mode will lie within one standard deviation away from some mean, and provides theoretical support for our way of initializing each iteration of our method. Before turning to this theorem, we need three lemmas. In the first two lemmas we compute some integrals over the n-sphere of certain polynomials, and in the third lemma we provide expressions for the gradient and Hessian of a GMM. We also prove, in Proposition 6, that any sufficiently nice non-negative function may be approximated in \(L^\infty \) to any desired accuracy by a GMM.

4.1 Location of modes of Gaussian mixtures

Lemma 1

where \(C_k := \int _{S^{n-1}}\left( e_1, y\right) ^k dy\).

Proof

Omitted due to space constraints. \(\square \)

Lemma 2

Let A be a symmetric \(n\times n\) matrix. Then

Proof

Let \(\uplambda _i, i=1,\dots , n\) be the eigenvalues of A and take \(U\in \text {O}(n)\) s.t. \(A=U^TDU\), \(D:=\text {diag}\left( \uplambda _1,\dots ,\uplambda _n\right) \).

\(\square \)

Lemma 3

The gradient and Hessian of a Gaussian mixture modelFootnote 3

\(a_m>0, \Sigma _m>0,\) are given by

Proof

Since this is a standard result, we omit the straightforward proof. \(\square \)

Theorem 1

Consider a Gaussian mixture model in n dimensions

\(a_m>0, \Sigma _m>0.\) Let \(\sigma _{m,\max }\) and \(\sigma _{m,\min }\) be the maximal and minimal eigenvalues of \(\Sigma _m\). Let \(x'\) be a local maximum of f. Then there exists an index m such that

Proof

Since \(x'\) is a local maximum we have \(Hf(x') \le 0\), i.e., \(\left( y, Hf(x')y\right) \le 0, y\in \mathbb {R}^n\). We integrate the last inequality over the unit-sphere and make use of Lemma 3 to conclude:

\(A_m(x',y):= \left[ \left( \Sigma _m^{-2}\left( x'-x_m\right) \right) \left( \Sigma _m^{-2}\left( x'-x_m\right) \right) ^T - \Sigma _m^{-2}\right] \). Hence there exist some index m such that

which leads to

We apply Lemma 1 and Lemma 2 and obtain:

Now \(C_2> 0\) since \(C_2\) is an integral of a continuous non-negative function that is not everywhere zero. Hence \( \left| \Sigma _m^{-2}\left( x'-x_m\right) \right| ^2 \le \text {Tr}\left( \Sigma _m^{-2}\right) . \) Note that \( \sigma _{m,\max }^{-4}\left| x'-x_m\right| ^2 \le \left| \Sigma _m^{-2}\left( x'-x_m\right) \right| ^2 \) and that \( \text {Tr}\left( \Sigma _m^{-2}\right) \) \( \le n\sigma _{m,\min }^{-2}. \) So \( \sigma _{m,\max }^{-4}\left| x'-x_m\right| ^2 \le n\sigma _{m,\min }^{-2}, \) and the claim of the theorem follows. \(\square \)

Corollary 4

Let f be a mixture of spherical Gaussians with common variance \(\sigma ^2\), i.e.,

If \(x'\) is local maximum of f, then there is an index m such that \( |x'-x_m| \le \sqrt{n}\sigma .\)

Proof

This is an immediate consequence of Theorem 1. \(\square \)

Proposition 5

The bound in Theorem 1 cannot be improved by a constant, i.e., for any \(\delta >0\) there exist a GMM f such that some mode \(x'\) of f satisfies

for all mean vectors \(x_m\) of f.

Proof

We explicitly construct a family \(\{f_\epsilon \}_\epsilon \) of functions that satisfy the statement of this proposition. For \(\epsilon \) such that \(\min \left( \delta ,\sqrt{n}\sigma \right)> \epsilon >0\) let \(f_\epsilon \) be the 2n component n-dimensional spherical GMM with common variance \(\sigma ^2\), common amplitude a and with means at \(\pm (\sqrt{n}\sigma - \epsilon ) e_{i}\), for \(i=1,2,\dots ,n\). We shall prove that \(f_\epsilon \) has a mode in the origin, and thus it has a mode at distance \(\sqrt{n}\sigma - \epsilon \) from the set of means of \(f_\epsilon \).Footnote 4 Lemma 3 implies

By symmetry \(\sum _{m}x_m=0\), so \(f_\epsilon \) has a critical point in the origin. Hence we are done if we prove that \(Hf_\epsilon (0) < 0\). Again by Lemma 3:

Hence \(Hf_\epsilon (0) < 0\). \(\square \)

4.2 Function approximation by Gaussian mixtures

The ability of GMMs to approximate functions in \(L^p\) spaces has been investigated previously, see, e.g., [12] where it is noted that any probability density function may be approximated in the sense of \(L^1\) by GMMs. For completeness, we here give a proof of the density of GMMs in the \(L^\infty \)-norm. The proof relies on the machinery of quasi-interpolants [11].

Proposition 6

Let u be a twice-differentiable and compactly supported non-negative function on \(\mathbb {R}^n\) such that u and all its partial derivatives up to order two are bounded. Then u may be approximated in \(L^\infty \) arbitrarily well by Gaussian mixtures.

Proof

Let \(g = g(0, \Sigma )\) be an n-dimensional centered Gaussian density function with non-degenerate covariance matrix \(\Sigma ^2\). As is noted on page 50 in [11], g generates an approximate quasi-interpolant of order 2. This follows from the observation that f satisfies both Condition 2.15 in [11, p. 33], with moment order \(N=2\), and Condition 2.12 in [11, p. 32], with decay order K, for all \(\mathbb {Z}\ni K > n\). Hence Theorem 2.17 in [11, p. 35] is applicable and implies that for any twice-differentiable real-valued function u on \(\mathbb {R}^n\) such that u and all its partial derivatives up to order two are bounded, one has that for any \(\epsilon >0\) that there exists a \(\mathcal {D} = \mathcal {D}(\epsilon )>0\) such that for all h, \(\left|u - \mathcal {M}_{h,\mathcal {D}}u\right|_\infty \) is bounded from the above by \( c \mathcal {D}h^2\max _{|\alpha |=2}|\partial ^\alpha u|_\infty + \epsilon \left( |u|_\infty + \sqrt{\mathcal {D}}h|\nabla u|_\infty \right) \le A \left( c\mathcal {D}h^2+ \epsilon + \sqrt{\mathcal {D}}h\right) , \)

where \(A:= \sqrt{n}\max _{0 \le |\alpha | \le 2} |\partial ^\alpha u|_\infty \), c is a constant independent of u, h and \(\mathcal {D}\) and the quasi-interpolant \(\mathcal {M}_{h,\mathcal {D}}u(x)\) is defined by

Note that the weights u(hm) are non-negative since u is non-negative, and that the compact support of u allows us to restrict the domain of summation in (1) to a finite subset of \(\mathbb {Z}^n\). Hence \(\mathcal {M}_{h,\mathcal {D}}u(x)\) is a GMM. Now for a given \(\epsilon >0\) we let \(\epsilon ' := \frac{\epsilon }{2A}\) and pick \(\mathcal {D}>0\) such that:

Next we take h small enough, so that \(A \left( c\mathcal {D}h^2 + \sqrt{\mathcal {D}}h\right) \le \epsilon /2\), and conclude that \(\left|u - \mathcal {M}_{h,\mathcal {D}}u\right|_\infty \le \epsilon .\) \(\square \)

5 Conclusion

Motivated primarily by applications in TEM, we have developed a new algorithm for decomposing a non-negative multivariate signal as a sum of Gaussians with full covariances. We have tested it on 1D and 2D data. Moreover, we have also proved an upper bound for the distance from a local maximum of a GMM to the set of its mean vectors. This upper bound provides motivation for a key step in our method, namely the initialization of each new Gaussian at the maximum of the residual. Finally we remark that while we have only tested the proposed method on functions sampled on uniform grids, it is straightforward to extend the method to handle input data in the form of multivariate functions sampled on non-uniform grids.

Availability of data and material

Upon request

Notes

We do not require the GMM to be normalized, i.e., we do not require that \(\sum _{m=1}^Ma_m=1\).

This step is not crucial for the performance of the algorithm, but was empirically found to improve the final data fit.

In the interest of readability we have abused notation and absorbed the normalizing constants \(C_{\Sigma _m}\) into the weights \(a_m\).

Numerical experiments suggest that \(f_\epsilon \) has a mode in the origin also for \(\epsilon =0\). A proof of this (if it is true) would however need an argument different from the one given here, since \(Hf_0(0) = 0\).

References

Amendola, C., Engstrom, A. and Haase, C.: Maximum number of modes of gaussian mixtures. arXiv preprint arXiv:1702.05066, 2017

Byrd, R.H., Lu, P., Nocedal, J., Zhu, C.: A limited memory algorithm for bound constrained optimization. SIAM J. Sci. Comput. 16(5), 1190–1208 (1995)

Carreira-Perpinan, M.A.: Mode-finding for mixtures of Gaussian distributions. Technical report, Dept. of Computer Science, University of Sheffield, UK, 2000

Fatma, K.B. and Cetin, A.E.: Design of gaussian mixture models using matching pursuit. IEEE-EURASIP Workshop on Nonlinear Signal and Image Processing, 1999

Jonic, S., Sorzano, C.O.S.: Coarse-graining of volumes for modeling of structure and dynamics in electron microscopy: Algorithm to automatically control accuracy of approximation. IEEE J. Sel. Topics Signal Process. 10(1), 161–173 (2016)

Jonic, S. and Sorzano, C.O.S.: Versatility of approximating single-particle electron density maps using pseudoatoms and approximation-accuracy control. BioMed Research International (2016)

Joubert, P.: A Bayesian approach to initial model inference in cryo-electron microscopy. PhD thesis, Georg-August University School of Science, 2016

Kawabata, T.: Gaussian-input Gaussian mixture model for representing density maps and atomic models. J. Struct. Biol. 203, 1–16 (2018)

Keriven, N., Bourrier, A., Gribonval, R., Perez, P.: Sketching for large-scale learning of mixture models. Inf. Inference 7(3), 447–508 (2018)

Mallat, S.G., Zhang, Z.: Matching pursuits with time-frequency dictionaries. IEEE Trans Signal Process 41(12), 3397–3415 (1993)

Mazya V. G., and Schmidt G.: Approximate approximations. Number 141. American Mathematical Soc., 2007

Nestoridis, V., Schmutzhard, S., Stefanopoulos, V.: Universal series induced by approximate identities and some relevant applications. J. Approx. Theory 163(12), 1783–1797 (2011)

Pati, Y.C., Rezaiifar, R. and Krishnaprasad, P.S.: Orthogonal matching pursuit: Recursive function approximation with applications to wavelet decomposition. In Proceedings of 27th Asilomar conference on signals, systems and computers, pages 40–44. IEEE, 1993

Zickert, G.: Analytic and data-driven methods for 3D electron microscopy. PhD thesis, KTH Royal Institute of Technology, 2020

Acknowledgements

The authors thank Ozan Öktem and Pär Kurlberg for fruitful discussions on this work.

Funding

Open access funding provided by Royal Institute of Technology. G.Z. acknowledges support by the Swedish Foundation of Strategic Research under Grant AM13-004.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest

Code availability

Upon request.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zickert, G., Yarman, C.E. Gaussian mixture model decomposition of multivariate signals. SIViP 16, 429–436 (2022). https://doi.org/10.1007/s11760-021-01961-y

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11760-021-01961-y