Abstract

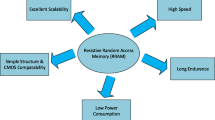

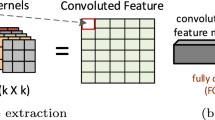

Processing large-scale datasets on traditional computing systems is critically bottlenecked in performance and efficiency by the “power wall” and “memory wall” problems. To address these problems, processing-in-memory (PIM) architectures bring computation logic into or near memory, alleviating the bandwidth limitations of data transfer. NAND-like spintronics memory (NAND-SPIN) is a promising type of magnetoresistive random-access memory (MRAM) with low write energy and high integration density, and it can be used to perform in-memory computation efficiently. In this study, we propose a NAND-SPIN-based PIM architecture for efficient convolutional neural network (CNN) acceleration. A straightforward data mapping scheme is exploited to improve parallelism while reducing data movement. Benefiting from the characteristics of NAND-SPIN and the in-memory processing architecture, experimental results show that the proposed approach achieves ∼2.6× speedup and ∼1.4× improvement in energy efficiency over state-of-the-art PIM solutions.
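As a purely illustrative aside, the sketch below shows one common way that bitwise in-memory accelerators evaluate the multiply-accumulate at the core of a convolution: operands are split into bit planes, each pair of planes is combined with a bitwise AND, and the set bits are counted and weighted by powers of two. The abstract does not state which in-memory operations the proposed architecture uses, so this decomposition is an assumption, and the name `bitplane_dot`, the `bits` parameter, and the unsigned 4-bit operands are hypothetical choices made for the example.

```python
def bitplane_dot(acts, weights, bits=4):
    """Dot product of unsigned integers using only AND, bit count, and shifts.

    Assumption for illustration: every value fits in `bits` unsigned bits.
    """
    total = 0
    for i in range(bits):
        # Pack bit i of every activation into one integer (one "memory row").
        a_plane = sum(((a >> i) & 1) << k for k, a in enumerate(acts))
        for j in range(bits):
            # Pack bit j of every weight into one integer in the same way.
            w_plane = sum(((w >> j) & 1) << k for k, w in enumerate(weights))
            # AND the two rows, count the ones, and weight the count by 2^(i+j).
            total += bin(a_plane & w_plane).count("1") << (i + j)
    return total


if __name__ == "__main__":
    acts = [3, 5, 7, 1]
    weights = [2, 4, 6, 8]
    # Compare against the ordinary integer dot product.
    print(bitplane_dot(acts, weights), sum(a * w for a, w in zip(acts, weights)))
```

In such a scheme the AND of two rows would be carried out inside the memory array, so only the small bit counts, rather than the operands themselves, need to leave the memory.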

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China (Grant Nos. 62072019, 62004011, 62171013), the Joint Funds of the National Natural Science Foundation of China (Grant No. U20A20204), and the State Key Laboratory of Computer Architecture (Grant No. CARCH201917).

Cite this article

Zhao, Y., Yang, J., Li, B. et al. NAND-SPIN-based processing-in-MRAM architecture for convolutional neural network acceleration. Sci. China Inf. Sci. 66, 142401 (2023). https://doi.org/10.1007/s11432-021-3472-9

DOI: https://doi.org/10.1007/s11432-021-3472-9