Abstract

Purpose

Systematic reviews evaluating and comparing the measurement properties of outcome measurement instruments (OMIs) play an important role in OMI selection. Earlier overviews of review quality (2007, 2014) revealed substantial concerns about adherence to scientific standards. This overview aimed to investigate whether the quality of recent systematic reviews of OMIs lives up to the current scientific standards.

Methods

One hundred systematic reviews of OMIs published from June 1, 2021 onwards were randomly selected through a systematic literature search performed on March 17, 2022 in MEDLINE and EMBASE. The quality of systematic reviews was appraised by two independent reviewers. An updated data extraction form was informed by the earlier studies, and results were compared to these earlier studies’ findings.

Results

A quarter of the reviews had an unclear research question or aim, and in 22% of the reviews the search strategy did not match the aim. Half of the reviews had an incomprehensive search strategy, because relevant search terms were not included. In 63% of the reviews (compared to 41% in 2014 and 30% in 2007) a risk of bias assessment was conducted. In 73% of the reviews (some) measurement properties were evaluated (58% in 2014 and 55% in 2007). In 60% of the reviews the data were (partly) synthesized (42% in 2014 and 7% in 2007); evaluation of measurement properties and data syntheses was not conducted separately for subscales in the majority. Certainty assessments of the quality of the total body of evidence were conducted in only 33% of reviews (not assessed in 2014 and 2007). The majority (58%) did not make any recommendations on which OMI (not) to use.

Conclusion

Despite clear improvements in risk of bias assessments, measurement property evaluation and data synthesis, specifying the research question, conducting the search strategy and performing a certainty assessment remain poor. To ensure that systematic reviews of OMIs meet current scientific standards, more consistent conduct and reporting of systematic reviews of OMIs is needed.

Plain English summary

Instruments that measure health outcomes are important for making treatment decisions and understanding diseases. Systematic reviews are used to compare different instruments and help select the best one for a specific situation. Previous studies have shown that the quality of these reviews can vary and may not always meet scientific standards. Since then, new tools and methods have been developed to help systematic review authors in improving the quality of their work. This study looked into the quality of recent systematic reviews of instruments. The study identified important improvements over time. For example, risk of bias is more often evaluated, and the data is analyzed in a better way. However, the study also shows that there are still areas that need improvement. These include formulating a clear research question, and creating a comprehensive search strategy. Ongoing efforts are needed to improve the quality of systematic reviews of instruments. This can be achieved by developing new and accessible resources.

Introduction

Outcome measurement instruments (OMIs) are used to evaluate the impact of disease and treatment [1,2,3]. When many different OMIs that measure similar constructs are available [1, 4, 5], the choice for an OMI depends on various aspects, including its quality (i.e., the sufficiency of measurement properties) [6]. Systematic reviews in which the measurement properties of OMIs are critically evaluated and compared are important tools for the selection of an OMI [4], for example in core outcome sets used in research projects or clinical practice [7]. With these systematic reviews, gaps in knowledge about the measurement properties of OMIs can also be identified.

Only well-designed, well-conducted, and comprehensively reported systematic reviews can provide a complete and balanced overview of the measurement properties of OMIs [4]. High-quality systematic reviews have: a well-defined research question; a comprehensive search strategy in multiple databases; independent abstract and full-text article selection; a risk of bias assessment of included studies; a systematic evaluation and syntheses of the results; and a certainty assessment of the body of evidence [8].

Previous overviews appraising the quality of systematic reviews of OMIs identified major limitations in the search strategy, the risk of bias assessment, and the evaluation and synthesis of the measurement properties’ results [9, 10]. These limitations prevent systematic reviews from providing a complete and unbiased overview of the measurement properties of OMIs. This has consequences for knowledge users, who rely on the findings of these systematic reviews and might select suboptimal OMIs to use in their research or clinical practice [11]. This in turn affects the measurements conducted on patients, which might be invalid and unreliable, and could even lead to incorrect healthcare decisions.

Various methodologies and practical tools have been developed to guide authors in conducting high-quality systematic reviews of OMIs [4, 12, 13]. The methodology and tools developed by the COSMIN (COnsensus-based Standards for the selection of health Measurement INstruments) initiative are the most comprehensive and most widely used (Fig. 1) [14]. Since the most recent overview that assessed the quality of systematic reviews of OMIs, published in 2016 [10], the COSMIN guideline for systematic reviews has been developed [4] and the COSMIN risk of bias checklist has been updated [15, 16]. Other methodologies and tools for critical appraisal of OMIs have also been developed and updated since then [12, 17]. When reading or reviewing such systematic reviews, even those that claim to have used these guidelines, we often observe flaws in the design, conduct, and reporting. The aim of this overview of reviews was therefore to investigate whether the quality of recent systematic reviews of OMIs lives up to the current scientific standards. As a secondary aim, we explored which aspects have notably improved over time.

Methods

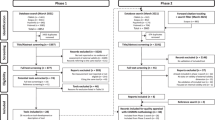

The study protocol was registered in the PROSPERO database, number CRD42022320675 [18]. There were no important deviations from the protocol. The study was reported according to the preferred reporting items for overviews of reviews (PRIOR) statement [19]. Consistent with the previous overview [10], we randomly selected 100 out of 136 most recent systematic reviews from the COSMIN database of systematic reviews [20]. These reviews were identified while updating the COSMIN database through a systematic literature search performed on March 17, 2022 in MEDLINE (through PubMed) and EMBASE (through www.embase.com), and concerned systematic reviews of OMIs published from June 1, 2021 onwards. The search strategy consisted of search terms for systematic reviews, search terms for OMIs, and a validated search filter for measurement properties [21]. The full search strategy can be found in Supplementary File 1. Table 1 contains inclusion and exclusion criteria for the COSMIN database [20]. We defined systematic reviews of OMIs as peer-reviewed studies with a systematic search in at least one electronic database which aimed to summarize evidence on the measurement properties of all OMIs of interest to the review.

Eligibility for inclusion in the COSMIN database was determined by one reviewer (IS). All reviewers confirmed that each review appraised in the current study complied with the inclusion and exclusion criteria. If a review was selected from the COSMIN database that should have been excluded (false-positive), this review was replaced by a randomly selected new review after confirming exclusion by a third reviewer (LM).
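
The selection-and-replacement procedure described above can be sketched in a few lines of Python. This is an illustration only: the source does not report how the random draw was implemented, so the seed, the integer review identifiers, and the names `draw_sample` and `is_false_positive` are all assumptions made for the example.

```python
import random


def draw_sample(candidate_ids, sample_size, is_false_positive, seed=2022):
    """Randomly select reviews, replacing any confirmed false positives.

    candidate_ids: identifiers of all candidate reviews (136 in the study).
    is_false_positive: hypothetical callback returning True when a review
        should have been excluded (in the study, confirmed by a third reviewer).
    seed: arbitrary value that makes the draw reproducible (an assumption).
    """
    rng = random.Random(seed)
    pool = list(candidate_ids)
    rng.shuffle(pool)  # walking a shuffled list is a draw without replacement

    selected = []
    for review_id in pool:
        if len(selected) == sample_size:
            break
        if is_false_positive(review_id):
            continue  # skipped; the next review in random order replaces it
        selected.append(review_id)
    return selected


# Hypothetical example: 136 candidates, with reviews 5 and 17 as false positives.
sample = draw_sample(range(1, 137), 100, lambda rid: rid in {5, 17})
print(len(sample))
```

Fixing the seed is a design choice that lets a second reviewer reproduce the same draw; any documented randomization method would serve the same purpose.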

A study-specific data extraction form (Supplementary File 2) was developed to appraise the quality of systematic reviews of OMIs, which includes both methodological quality and reporting quality, two aspects that cannot be considered separately when appraising the quality of published OMI systematic reviews. The data extraction form was based on criteria used in previous studies [9, 10], which were updated for this study. The data extraction form contained items on the key elements of the review (i.e., construct, population, type of OMI, and measurement properties of interest), search strategy, eligibility criteria, article selection, data extraction, risk of bias assessment, evaluation of measurement properties, data synthesis, certainty assessment, presentation of results, instrument recommendation, and elements of open science. Specifically, the appropriateness of the search for the construct, population, type of OMI and measurement properties was based on published search filters [21, 23], search terms found at blocks.bmi-online.nl, and the reviewers’ own knowledge. For each item, two independent reviewers extracted information on whether this was done/reported in the included reviews. No attempts were made to verify information with study authors. Reviewers also noted any major methodological and reporting flaws for each of these aspects.

The data extraction form was pilot tested with six OMI systematic reviews [24,25,26,27,28,29] by two independent reviewers (different pairs of EE, CT, and LM). A subsequent update was done after training the other reviewers, who were instructed to extract data for one of these six reviews [25]. Discrepancies were discussed during two 90-min Zoom meetings intended to standardize the data extraction process. After these meetings, the data extraction form and instructions on how to appraise each systematic review were finalized, and five pairs of reviewers were formed (EE&JP/IS, LM&DO, CT&IA, KH&KM, AC&OA). Each reviewer pair subsequently appraised the quality of 18–19 systematic reviews independently. Reviews were not appraised by a reviewer who was a co-author or had a potential conflict of interest. Discrepancies between the pair of reviewers were resolved through discussion. Appraisals of reviewers were descriptively synthesized by review counts and a qualitative comparison of the results was made to the results of previous studies [9, 10], if possible.
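
As a rough illustration of the paired appraisal and descriptive synthesis described above, the sketch below flags items on which two reviewers disagree and then tallies the consensus ratings as percentages of reviews. The review identifiers, the item name `risk_of_bias`, the rating labels, and both function names are hypothetical; the actual items and response options are those of the data extraction form in Supplementary File 2.

```python
from collections import Counter


def find_discrepancies(ratings_a, ratings_b):
    """Return the (review, item) keys that the two reviewers rated differently."""
    return sorted(key for key in ratings_a if ratings_a[key] != ratings_b.get(key))


def synthesize(consensus, item):
    """Percentage of reviews per rating for one item of the extraction form."""
    ratings = [rating for (_, it), rating in consensus.items() if it == item]
    counts = Counter(ratings)
    return {rating: round(100 * n / len(ratings)) for rating, n in counts.items()}


# Hypothetical independent appraisals of four reviews on a single item.
reviewer_a = {
    ("R1", "risk_of_bias"): "yes",
    ("R2", "risk_of_bias"): "no",
    ("R3", "risk_of_bias"): "yes",
    ("R4", "risk_of_bias"): "unclear",
}
reviewer_b = dict(reviewer_a)
reviewer_b[("R2", "risk_of_bias")] = "yes"

discrepancies = find_discrepancies(reviewer_a, reviewer_b)

# Discrepancies are settled by discussion; the outcome here is made up.
consensus = dict(reviewer_a)
consensus[("R2", "risk_of_bias")] = "yes"

summary = synthesize(consensus, "risk_of_bias")
print(discrepancies, summary)
```

Keeping the two reviewers' ratings separate until consensus is reached makes it possible to report where disagreement occurred as well as the final counts.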

Results

Characteristics of the 100 systematic reviews are presented in Table 2. Half of the included reviews focused on patient-reported outcomes, 30% focused on non-patient-reported outcomes, and 20% on a combination of both. The aspect of health of the construct of interest in the reviews was mostly functional status (62%), symptom status (56%), and/or general health perceptions (36%). Reviews focused on a variety of populations, such as children and (older) adults with a variety of diseases and conditions. Questionnaires (77%), clinical rating scales (41%), and/or performance-based tests (24%) were the OMI types most often included.

Syntheses of the quality appraisal of the 100 systematic reviews of OMIs [24,25,26,27,28,29,30,31,32,33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77,78,79,80,81,82,83,84,85,86,87,88,89,90,91,92,93,94,95,96,97,98,99,100,101,102,103,104,105,106,107,108,109,110,111,112,113,114,115,116,117,118,119,120,121,122,123] are presented in Table 3. Supplementary File 2 contains the completed data extraction form, whereas Supplementary File 3 contains the data from Table 3 in comparison with the results of the two previous studies [9, 10].

Key elements

Only 11% of the reviews had a title that included all four key elements (i.e., construct, population, type of OMI, and measurement properties of interest) and the fact that it concerned a systematic review. Titles of the remaining reviews often made no reference to measurement property evaluation. In 47% of the reviews, the title omitted at least two key elements and/or the fact that it concerned a systematic review. The term ‘scoping review’ was used in 7% of the reviews. In 45% of the reviews all four key elements were included in the aim, whereas in 18% of the reviews at least two key elements were missing from the aim. A major flaw often identified in the aim was that it was unclear or vague, for example by stating that the aim was “to discuss validity” [121], or “to provide information about frailty instruments” [94].

Search strategy

In 78% of the reviews the search strategy matched the research aim. When there was a mismatch between the aim and the search strategy, often the aim was to identify all available OMIs, whereas search terms for measurement properties were included. Hence, only OMIs with evidence for the measurement properties were identified.

Only 27% of the reviews had a search strategy with appropriate search terms for all four key elements: construct, population, OMI type, and measurement properties. Search terms for OMI type were not appropriate for 40% of the reviews because relevant synonyms or search terms were not included. Search terms for measurement properties were deemed inappropriate for 34% of the reviews.

The number of databases searched ranged from 1–14, with a median of 4. MEDLINE was searched in 98% of the included reviews, whereas EMBASE was searched in 56%. Only 66% of the reviews performed reference checking of included articles.

Eligibility criteria and article selection

In 75% of the reviews the eligibility criteria were clearly defined, and in 83% the eligibility criteria matched the research aim. Mismatches often concerned that the aim was to identify all available or used OMIs, whereas eligibility criteria included that the study should report on measurement properties, hence resulting in including only OMIs that were validated to at least some extent. In 42% of the reviews other notable eligibility criteria were used, such as only including OMIs that were reported in at least a certain number of articles, only including validation studies of original OMIs or certain (language) versions, excluding studies of low quality, or excluding OMIs that were described in previously published systematic reviews.

Abstract selection was (partly) done by at least two independent reviewers in 65% of the reviews and full-text selection in 69%, compared to 41% and 38%, respectively, in 2014. Data extraction was (partly) done by at least two independent reviewers in 42%, compared to 25% in both 2014 and 2007. In most other cases it was unclear whether two independent reviewers were involved.

Risk of bias assessment

The methodological quality (i.e., risk of bias) of the studies was evaluated in 63% of the reviews, compared to 41% in 2014 and 30% in 2007. In 62% of those reviews, the quality assessment was done by at least two reviewers independently. For 33% of the reviews this was unclear.

Measurement property evaluation

In 73% of the reviews (some) measurement properties of the included OMIs were evaluated, compared to 58% in 2014 and 55% in 2007. This means that in these reviews a judgement was made about the sufficiency of the measurement properties, rather than providing only the results of measurement properties. For those reviews in which (some) measurement properties were evaluated, (a reference to) criteria for measurement properties were provided in 81% of the reviews; in 19% of the reviews it was not clear on what criteria judgements were based. In those reviews in which measurement properties were evaluated and that included multidimensional OMIs, only 18% evaluated each subscale separately. In 22% of the reviews the evaluation of measurement properties was (partly) done by at least two independent reviewers.

Data synthesis and certainty assessment

Data synthesis, in which results from multiple studies on the same OMI were combined, was (partly) performed in 60% of the reviews, compared to 42% in 2014 and 7% in 2007. In those reviews in which data synthesis was performed and that included multidimensional OMIs, synthesis was performed for each subscale separately in only 13% of the cases. Methods for data synthesis were clearly described in 47% of the reviews. In 84% of the reviews data synthesis was performed for each measurement property separately. Data synthesis was performed by at least two independent reviewers for 18% of the reviews.

In 33% of the reviews, a certainty assessment was done in which the quality of the evidence was graded. Quality of the evidence was graded by at least 2 independent reviewers in 27% of the reviews.

Results and instrument recommendation

A flowchart was provided in 96% of the reviews, often with reasons for excluding full texts (85% vs. 55% in 2014). In 86% of the reviews, the included instruments were in accordance with the inclusion criteria. In 72% of the reviews, the results of (some) measurement properties were reported as raw data.

In almost half of the reviews (42%) recommendations on which instrument (not) to use were made. In 25% of the reviews, recommendations were made for each construct of interest. In 62% of the reviews the recommendations made were consistent with the evidence appraisal.

A summary of the main results with recommendations for future OMI systematic reviews is provided in Table 4.

Discussion

This overview of reviews aimed to investigate whether the quality of recent systematic reviews of OMIs lives up to the current scientific standards and which aspects have notably improved over time. Compared to previous studies [9, 10], we found marked improvements in the conduct of risk of bias assessments, evaluation of measurement properties, and performance of formal data syntheses. Despite this, further improvements in these areas are necessary, as well as with respect to the research question and search strategy.

A quarter of the reviews included in this study had an unclear research question or aim, for example with respect to the population of interest, the measurement properties that were evaluated, or the type of OMIs that were included. Including the four key elements, analogous to the PICO (population, intervention, comparison, outcome) format in systematic reviews of interventions [4, 8, 125], helps to formulate a well-defined research question and facilitates the development of an appropriate search strategy. Without a clear research question, it is not possible to assess the comprehensiveness of the search strategy.

Almost three-quarters of the reviews had an inappropriate or incomprehensive search strategy, often because inappropriate search terms for OMI type or measurement properties were included. It is preferred not to use search terms for OMI type to avoid missing any studies; however, if search terms are needed because of too many results, a search filter exists for PROMs [23]. A highly sensitive search filter also exists for measurement properties [21], but it was used in only 14 reviews. While searching both MEDLINE and EMBASE is recommended as a minimum by Cochrane [126], almost half of the reviews included in this study did not search EMBASE. Similarly, whilst reference checking is recommended [126], this was not reported by a third of the reviews. Through reference checking, one can also confirm the comprehensiveness of the search strategy: if many relevant articles were found through reference checking, the search was probably not comprehensive and important studies may have been missed [126].
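
The reasoning about reference checking as a check on search comprehensiveness can be made concrete with a small calculation: the share of all included articles that were identified only through reference checking. The sketch below is illustrative; the function name and the article identifiers are hypothetical, and the source gives no numerical cut-off for what counts as "many", so interpreting the resulting share remains a matter of judgement.

```python
def reference_check_share(found_by_search, found_by_reference_check):
    """Share of included articles that only reference checking identified."""
    search = set(found_by_search)
    extra = set(found_by_reference_check) - search  # missed by the database search
    total = len(search | extra)
    if total == 0:
        raise ValueError("no included articles")
    return len(extra) / total


# Hypothetical review: 8 included articles came from the database search;
# reference checking surfaced 4 articles, 2 of which the search had missed.
from_search = ["a1", "a2", "a3", "a4", "a5", "a6", "a7", "a8"]
from_references = ["a7", "a8", "a9", "a10"]

share = reference_check_share(from_search, from_references)
print(f"{share:.0%} of included articles were found only by reference checking")
```

A high share suggests that the database search terms should be revisited before the review is finalized.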

In almost half of the reviews poorly justified eligibility criteria were used, e.g., only including OMIs in a certain language, or excluding OMIs that were included in previous systematic reviews. Such unintuitive eligibility criteria might negatively impact the inclusion of relevant studies or OMIs, hampering a complete synthesis of the body of available evidence. The number of reviews in which article selection and data extraction was conducted by at least 2 independent reviewers increased compared to previous overviews [9, 10].

Whilst a marked increase in the number of reviews that included a risk of bias assessment was found (63% currently compared to 41% in 2014 and 30% in 2007 [9, 10]), opportunities for improvement remain. Evaluating risk of bias in empirical studies on measurement properties is important, because results might not be valid if a study is biased. For example, relevant items might be missing in a PROM if patients were not involved in its development, or the reliability of an OMI might be underestimated if the time interval between test and retest is (too) long. The COSMIN risk of bias checklist [16] or tool [15], both specifically developed for this purpose, was used in 47 reviews. Other risk of bias tools reported in the reviews [43, 70, 82, 101, 120] included, for example, the QUADAS-2 [127], QAREL [128], ROBINS-I [129], and Newcastle–Ottawa quality assessment scale [130]. These tools were, however, not specifically developed to assess the methodological quality of empirical measurement property studies and may not identify important sources of bias.

The number of reviews in which measurement properties were formally evaluated has notably increased since 2007 (73% currently compared to 58% in 2014 and 55% in 2007 [9, 10]). In 14 reviews, however, it was not clear which criteria were used. In several reviews, authors mistakenly used risk of bias or certainty assessment ratings as a measure of OMI quality. However, these ratings refer to the quality of the study and the quality of the evidence, respectively, and not to the quality of the OMI (i.e., its measurement properties).

A clear increase in the number of reviews in which a data synthesis was performed was also observed (60% currently compared to 42% in 2014 and 7% in 2007 [9, 10]). However, the methods for data synthesis were often unclearly described, and a certainty assessment of the body of evidence was conducted in only a third of the reviews. The publication of the COSMIN guideline for systematic reviews of PROMs [4] in 2018 may have increased the number of reviews in which a data synthesis was performed. This guideline details how to synthesize multiple studies on the same measurement property for the same OMI, although more guidance might be necessary.

Each subscale in a multidimensional instrument should be considered a separate instrument as it represents a unique construct with measurement properties often varying between subscales [4]. However, we observed that few studies separately evaluated measurement properties or conducted an evidence synthesis at the subscale level. By not evaluating each subscale separately, a review therefore presents an incomplete picture of the measurement properties for the given scale.

Less than half of the reviews made recommendations about which OMI (not) to use. The conclusions of systematic reviews will be used by other researchers and clinicians who need to select an OMI for their purpose, although the selection of the most appropriate OMI may depend on the context and situation. Clear, evidence-based recommendations on which OMI (not) to use will help others in their OMI selection and contribute to the standardization of OMIs.

Although two-thirds of the reviews purport to include an evaluation of content validity, there is doubt over the thoroughness of these evaluations. Whilst 25 reviews reported application of the COSMIN guideline for evaluating content validity, only 13 appear to have applied it correctly. One of the steps in the assessment of content validity according to the COSMIN guideline is the evaluation of the content by reviewers themselves. This step was often lacking. Other flaws included not distinguishing between development and content validity studies, and only conducting a risk of bias assessment without evaluating the content validity of the OMI.

Other major flaws that we observed in some reviews were confusing the quality of the study (i.e., risk of bias) with the quality of the OMI (i.e., its measurement properties) or making recommendations based on certainty assessment rather than the sufficiency of measurement properties.

Towards high quality OMI systematic reviews

Systematic reviews of OMIs are difficult to conduct, and this study shows that the availability of methodology and tools that guide authors in the conduct of their systematic review does not translate automatically into high-quality systematic reviews. Besides more and better resources, behavioral change techniques [131], implementation strategies, and knowledge translation activities are needed to improve systematic review quality. Several of these have recently been developed or are being considered. First, the COSMIN guideline for systematic reviews has recently been updated and made more user-friendly to better facilitate reviewers [132]. Second, a newly developed animated video explains the key steps of conducting a systematic review of OMIs (available at https://www.cosmin.nl/). Third, a reporting guideline for OMI systematic reviews has recently been developed [133], and knowledge translation activities have been implemented to increase its uptake. Last, a course on how to conduct OMI systematic reviews is being developed to educate reviewers more thoroughly. To alert systematic review authors to the various tools available, an automated email can be sent to authors registering their review in PROSPERO. PROSPERO is a database for registering systematic reviews of health related outcomes [134], and although less than half of the included reviews reported prospective registration, such an email alert might increase the uptake of tools and improve the quality of future OMI systematic reviews.

Limitations

An important limitation of the current study is the potential subjectivity in appraising the quality of systematic reviews. We attempted to use a rigorous and standardized data extraction process, in which we pilot tested and improved the data extraction form, provided training to reviewers who were already experts in systematic reviews of OMIs, and assigned systematic reviews to reviewer pairs who independently appraised their quality and reached consensus about any discrepancies. However, because of large variations in the systematic reviews included, some degree and variation of subjective judgement in appraising the quality of systematic reviews could not be avoided. Second, some of the included reviews might not have been systematic reviews by definition, as the inclusion criteria were not stringent in that respect. We decided to include a review if at least one measurement property was evaluated (i.e., some degree of judgement was made about the sufficiency of a measurement property, as opposed to only providing an overview of the measurement properties). Third, we were unable to compare all quality aspects historically, because not all aspects were rated in the studies conducted in 2014 and 2007 [9, 10]. Compared to the previous studies, the current appraisal is the most comprehensive, and new elements were added, such as inclusion of key elements in the title, specification of criteria for measurement properties, evaluation of subscales, and assessment of certainty. Fourth, we randomly selected 100 recent reviews that fulfilled the eligibility criteria, out of a set of 136 reviews that were identified while updating the COSMIN database [20]. Our aim was not to include all available systematic reviews but rather to appraise and compare the quality of a random sample of the most recently published reviews with a set of reviews published respectively 8 and 15 years ago. We believe that the inclusion of additional reviews would not have altered our findings. 
Lastly, the appraisal of the reviews’ quality was hampered by poor reporting, for example with respect to the process of data synthesis or the number of independent reviewers involved in each of the steps of the review process. The recently developed PRISMA-COSMIN for OMIs reporting guideline could improve the reporting of OMI systematic reviews [133]. Although the current study is not a one-to-one baseline assessment of reporting aspects required by PRISMA-COSMIN for OMIs, most reporting items have been included in the current quality appraisal. Because our aim was to assess whether the quality of recent systematic reviews lived up to the current scientific standards, including reporting quality, we have not contacted the authors of the included systematic reviews to provide additional information.

Conclusion

In conclusion, this overview of 100 reviews published after June 2021 found, compared to previous overviews of reviews, a clear improvement in the number of OMI systematic reviews that conducted a risk of bias assessment, evaluated the measurement properties of included OMIs, and conducted a data synthesis. However, room for improvement in these areas remains. Improvements regarding the research question and search strategy are urgently needed, as more than half of the reviews likely missed important studies. To ensure that systematic reviews of OMIs meet current scientific standards, more consistent conduct and reporting of systematic reviews of OMIs is needed.

Data availability

All data supporting the findings of this study are available within the paper and its Supplementary Information.

References

Devlin, N. J., & Appleby, J. (2010). Getting the most out of PROMs. Putting health outcomes at the heart of NHS decision-making. The King’s Fund.

Fitzpatrick, R., Davey, C., Buxton, M. J., & Jones, D. R. (1998). Evaluating patient-based outcome measures for use in clinical trials. Health Technology Assessment. https://doi.org/10.3310/hta2140

Greenhalgh, J. (2009). The applications of PROs in clinical practice: What are they, do they work, and why? Quality of Life Research, 18, 115–123.

Prinsen, C. A., Mokkink, L. B., Bouter, L. M., Alonso, J., Patrick, D. L., De Vet, H. C., & Terwee, C. B. (2018). COSMIN guideline for systematic reviews of patient-reported outcome measures. Quality of Life Research, 27(5), 1147–1157.

Mokkink, L. B., Prinsen, C. A., Bouter, L. M., de Vet, H. C., & Terwee, C. B. (2016). The COnsensus-based standards for the selection of health measurement instruments (COSMIN) and how to select an outcome measurement instrument. Brazilian Journal of Physical Therapy, 20, 105–113.

Mokkink, L. B., Terwee, C. B., Patrick, D. L., Alonso, J., Stratford, P. W., Knol, D. L., Bouter, L. M., & de Vet, H. C. (2010). The COSMIN study reached international consensus on taxonomy, terminology, and definitions of measurement properties for health-related patient-reported outcomes. Journal of Clinical Epidemiology, 63(7), 737–745.

Prinsen, C. A., Vohra, S., Rose, M. R., Boers, M., Tugwell, P., Clarke, M., Williamson, P. R., & Terwee, C. B. (2016). How to select outcome measurement instruments for outcomes included in a “core outcome set”–a practical guideline. Trials, 17(1), 1–10.

Higgins, J. P. T., Thomas, J., Chandler, J., Cumpston, M., Li, T., Page, M. J., & Welch, V. A. (Eds.). (2022). Cochrane handbook for systematic reviews of interventions version 6.3 (updated February 2022). Cochrane.

Mokkink, L. B., Terwee, C. B., Stratford, P. W., Alonso, J., Patrick, D. L., Riphagen, I., Knol, D. L., Bouter, L. M., & de Vet, H. C. (2009). Evaluation of the methodological quality of systematic reviews of health status measurement instruments. Quality of Life Research, 18(3), 313–333.

Terwee, C. B., Prinsen, C., Garotti, M. R., Suman, A., De Vet, H., & Mokkink, L. B. (2016). The quality of systematic reviews of health-related outcome measurement instruments. Quality of Life Research, 25(4), 767–779.

McKenna, S. P., & Heaney, A. (2021). Setting and maintaining standards for patient-reported outcome measures: Can we rely on the COSMIN checklists? Journal of Medical Economics, 24(1), 502–511.

OMERACT. (2021). The OMERACT handbook for establishing and implementing core outcomes in clinical trials across the spectrum of rheumatologic conditions. OMERACT.

Aromataris, E., & Munn, Z. (2020). JBI manual for evidence synthesis. JBI.

COSMIN. (2023). Retrieved from www.cosmin.nl.

Mokkink, L. B., Boers, M., van der Vleuten, C., Bouter, L. M., Alonso, J., Patrick, D. L., De Vet, H. C., & Terwee, C. B. (2020). COSMIN risk of bias tool to assess the quality of studies on reliability or measurement error of outcome measurement instruments: A Delphi study. BMC Medical Research Methodology, 20(1), 1–13.

Mokkink, L. B., De Vet, H. C., Prinsen, C. A., Patrick, D. L., Alonso, J., Bouter, L. M., & Terwee, C. B. (2018). COSMIN risk of bias checklist for systematic reviews of patient-reported outcome measures. Quality of Life Research, 27(5), 1171–1179.

Aromataris, E., & Munn, Z. (2020). JBI manual for evidence synthesis. JBI.

Elsman, E. B., Mokkink, L. B., Butcher, N. J., Offringa, M., & Terwee, C. B. (2022). The reporting and methodological quality of systematic reviews of health-related outcome measurement instruments. PROSPERO.

Gates, M., Gates, A., Pieper, D., Fernandes, R. M., Tricco, A. C., Moher, D., Brennan, S. E., Li, T., Pollock, M., Lunny, C., Sepulveda, D., McKenzie, J. E., Scott, S. D., Robinson, K. A., Matthias, K., Bougioukas, K. I., Fusar-Poli, P., Whiting, P., Moss, S. J., & Hartling, L. (2022). Reporting guideline for overviews of reviews of healthcare interventions: Development of the PRIOR statement. BMJ, 378, e070849.

COSMIN. (2022). COSMIN database of systematic reviews. Retrieved January 30, 2022, from www.cosmin.nl/tools/database-systematic-reviews/.

Terwee, C. B., Jansma, E. P., Riphagen, I. I., & de Vet, H. C. (2009). Development of a methodological PubMed search filter for finding studies on measurement properties of measurement instruments. Quality of Life Research, 18, 1115–1123.

Wilson, I. B., & Cleary, P. D. (1995). Linking clinical variables with health-related quality of life: A conceptual model of patient outcomes. JAMA, 273(1), 59–65.

Mackintosh, A., Casañas i Comabella, C., Hadi, M., Gibbons, E., Fitzpatrick, R., & Roberts, N. (2010). PROM group construct & instrument type filters. Retrieved May 2023, from https://cosmin.nl/wp-content/uploads/prom-search-filter-oxford-2010.pdf.

Alamrani, S., Rushton, A. B., Gardner, A., Bini, E., Falla, D., & Heneghan, N. R. (2021). Physical functioning in adolescents with idiopathic scoliosis: A systematic review of outcome measures and their measurement properties. Spine, 46(18), E985–E997.

Barni, L., Ruiz-Munoz, M., Gonzalez-Sanchez, M., Cuesta-Vargas, A. I., Merchan-Baeza, J., & Freddolini, M. (2021). Psychometric analysis of the questionnaires for the assessment of upper limbs available in their Italian version: A systematic review of the structural and psychometric characteristics. Health and Quality of Life Outcomes, 19(1), 259.

Meilani, E., Zanudin, A., & Mohd Nordin, N. A. (2022). Psychometric properties of quality of life questionnaires for patients with breast cancer-related lymphedema: A systematic review. International Journal of Environmental Research and Public Health, 19(5), 2519.

Proctor, K. B., Tison, K. H., Park, J., Scahill, L., Vickery, B. P., & Sharp, W. G. (2022). A systematic review of parent report measures assessing the psychosocial impact of food allergy on patients and families. Allergy, 77(5), 1347–1359.

Oluchi, S. E., Manaf, R. A., Ismail, S., Kadir Shahar, H., Mahmud, A., & Udeani, T. K. (2021). Health related quality of life measurements for diabetes: A systematic review. International Journal of Environmental Research and Public Health, 18(17), 9245.

Sellitto, G., Morelli, A., Bassano, S., Conte, A., Baione, V., Galeoto, G., & Berardi, A. (2021). Outcome measures for physical fatigue in individuals with multiple sclerosis: A systematic review. Expert Review of Pharmacoeconomics & Outcomes Research, 21(4), 625–646.

Ai, X., Yang, X., Fu, H. Y., Xu, J. M., & Tang, Y. M. (2022). Health-related quality of life questionnaires used in primary biliary cholangitis: A systematic review. Scandinavian Journal of Gastroenterology, 57(3), 333–339.

Alaqeel, S., Alfakhri, A., Alkherb, Z., & Almeshal, N. (2022). Patient-reported outcome measures in Arabic-speaking populations: A systematic review. Quality of Life Research, 31(5), 1309–1320.

Arts, J., Gubbels, J. S., Verhoeff, A. P., Chinapaw, M. J. M., Lettink, A., & Altenburg, T. M. (2022). A systematic review of proxy-report questionnaires assessing physical activity, sedentary behavior and/or sleep in young children (aged 0–5 years). International Journal of Behavioral Nutrition and Physical Activity, 19(1), 18.

Baamer, R. M., Iqbal, A., Lobo, D. N., Knaggs, R. D., Levy, N. A., & Toh, L. S. (2022). Utility of unidimensional and functional pain assessment tools in adult postoperative patients: A systematic review. British Journal of Anaesthesia, 128(5), 874–888.

Baqays, A., Zenke, J., Campbell, S., Johannsen, W., Rashid, M., Seikaly, H., & El-Hakim, H. (2021). Systematic review of validated parent-reported questionnaires assessing swallowing dysfunction in otherwise healthy infants and toddlers. Journal of Otolaryngology—Head & Neck Surgery, 50(1), 68.

Bawua, L. K., Miaskowski, C., Hu, X., Rodway, G. W., & Pelter, M. M. (2021). A review of the literature on the accuracy, strengths, and limitations of visual, thoracic impedance, and electrocardiographic methods used to measure respiratory rate in hospitalized patients. Annals of Noninvasive Electrocardiology, 26(5), e12885.

Beshara, P., Anderson, D. B., Pelletier, M., & Walsh, W. R. (2021). The reliability of the Microsoft Kinect and ambulatory sensor-based motion tracking devices to measure shoulder range-of-motion: A systematic review and meta-analysis. Sensors, 21(24), 8186.

Bik, A., Sam, C., de Groot, E. R., Visser, S. S. M., Wang, X., Tataranno, M. L., Benders, M., van den Hoogen, A., & Dudink, J. (2022). A scoping review of behavioral sleep stage classification methods for preterm infants. Sleep Medicine, 90, 74–82.

Blanco-Orive, P., del Corral, T., Martín-Casas, P., Ceniza-Bordallo, G., & López-de-Uralde-Villanueva, I. (2022). Quality of life and exercise tolerance tools in children/adolescents with cystic fibrosis: Systematic review. Medicina Clínica, 158(11), 519–530.

Bortolani, S., Brusa, C., Rolle, E., Monforte, M., De Arcangelis, V., Ricci, E., Mongini, T. E., & Tasca, G. (2022). Technology outcome measures in neuromuscular disorders: A systematic review. European Journal of Neurology, 29(4), 1266–1278.

Bru-Luna, L. M., Marti-Vilar, M., Merino-Soto, C., & Cervera-Santiago, J. L. (2021). Emotional intelligence measures: A systematic review. Healthcare, 9(12), 1696.

Bruyneel, A. V., & Dube, F. (2021). Best quantitative tools for assessing static and dynamic standing balance after stroke: A systematic review. Physiotherapy Canada, 73(4), 329–340.

Buck, B., Gagen, E. C., Halverson, T. F., Nagendra, A., Ludwig, K. A., & Fortney, J. C. (2022). A systematic search and critical review of studies evaluating psychometric properties of patient-reported outcome measures for schizophrenia. Journal of Psychiatric Research, 147, 13–23.

Chamorro-Moriana, G., Perez-Cabezas, V., Espuny-Ruiz, F., Torres-Enamorado, D., & Ridao-Fernandez, C. (2022). Assessing knee functionality: Systematic review of validated outcome measures. Annals of Physical and Rehabilitation Medicine, 65(6), 101608.

Chen, B. S., Galus, T., Archer, S., Tadic, V., Horton, M., Pesudovs, K., Braithwaite, T., & Yu-Wai-Man, P. (2022). Capturing the experiences of patients with inherited optic neuropathies: A systematic review of patient-reported outcome measures (PROMs) and qualitative studies. Graefe's Archive for Clinical and Experimental Ophthalmology, 260(6), 2045–2055.

Collins, K. C., Burdall, O., Kassam, J., Firth, G., Perry, D., & Ramachandran, M. (2022). Health-related quality of life and functional outcome measures for pediatric multiple injury: A systematic review and narrative synthesis. Journal of Trauma and Acute Care Surgery, 92(5), e92–e106.

Cuenca-Garcia, M., Marin-Jimenez, N., Perez-Bey, A., Sanchez-Oliva, D., Camiletti-Moiron, D., Alvarez-Gallardo, I. C., Ortega, F. B., & Castro-Pinero, J. (2022). Reliability of field-based fitness tests in adults: A systematic review. Sports Medicine, 52(8), 1961–1979.

Daskalakis, I., Sperelakis, I., Sidiropoulou, B., Kontakis, G., & Tosounidis, T. (2021). Patient-reported outcome measures (PROMs) relevant to musculoskeletal conditions translated and validated in the Greek language: A COSMIN-based systematic review of measurement properties. Mediterranean Journal of Rheumatology, 32(3), 200–217.

de Assis Brasil, M. L., Zakhour, S., Figueira, G. L., Pires, P. P., Nardi, A. E., & Sardinha, A. (2022). Sexuality assessment of the Brazilian population: An integrative review of the available instruments. Journal of Sex and Marital Therapy, 48(8), 757–774.

de Oliveira, M. P. B., da Silva Serrao, P. R. M., de Medeiros Takahashi, A. C., Pereira, N. D., & de Andrade, L. P. (2022). Reproducibility of assessment tests addressing body structure and function and activity in older adults with dementia: A systematic review. Physical Therapy. https://doi.org/10.1093/ptj/pzab263

de Witte, M., Kooijmans, R., Hermanns, M., van Hooren, S., Biesmans, K., Hermsen, M., Stams, G. J., & Moonen, X. (2021). Self-report stress measures to assess stress in adults with mild intellectual disabilities: A scoping review. Frontiers in Psychology, 12, 742566.

Essiet, I. A., Lander, N. J., Salmon, J., Duncan, M. J., Eyre, E. L. J., Ma, J., & Barnett, L. M. (2021). A systematic review of tools designed for teacher proxy-report of children’s physical literacy or constituting elements. International Journal of Behavioral Nutrition and Physical Activity, 18(1), 131.

Etkin, R. G., Shimshoni, Y., Lebowitz, E. R., & Silverman, W. K. (2021). Using evaluative criteria to review youth anxiety measures, part I: self-report. Journal of Clinical Child and Adolescent Psychology, 50(1), 58–76.

Fan, Y., Shu, X., Leung, K. C. M., & Lo, E. C. M. (2021). Patient-reported outcome measures for masticatory function in adults: A systematic review. BMC Oral Health, 21(1), 603.

Galan-Gonzalez, E., Martinez-Perez, G., & Gascon-Catalan, A. (2021). Family functioning assessment instruments in adults with a non-psychiatric chronic disease: A systematic review. Nursing Reports, 11(2), 341–355.

Gallo, L., Gallo, M., Augustine, H., Leveille, C., Murphy, J., Copeland, A. E., & Thoma, A. (2022). Assessing patient frailty in plastic surgery: A systematic review. Journal of Plastic, Reconstructive & Aesthetic Surgery, 75(2), 579–585.

Gard, E., Lyman, A., & Garg, H. (2022). Perinatal incontinence assessment tools: A psychometric evaluation and scoping review. Journal of Women’s Health, 31(8), 1208–1218.

Giga, L., Petersone, A., Cakstina, S., & Berzina, G. (2021). Comparison of content and psychometric properties for assessment tools used for brain tumor patients: A scoping review. Health and Quality of Life Outcomes, 19(1), 234.

Goodney, P., Shah, S., Hu, Y. D., Suckow, B., Kinlay, S., Armstrong, D. G., Geraghty, P., Patterson, M., Menard, M., Patel, M. R., & Conte, M. S. (2022). A systematic review of patient-reported outcome measures patients with chronic limb-threatening ischemia. Journal of Vascular Surgery, 75(5), 1762–1775.

Guallar-Bouloc, M., Gomez-Bueno, P., Gonzalez-Sanchez, M., Molina-Torres, G., Lomas-Vega, R., & Galan-Mercant, A. (2021). Spanish questionnaires for the assessment of pelvic floor dysfunctions in women: A systematic review of the structural characteristics and psychometric properties. International Journal of Environmental Research and Public Health, 18(23), 12858.

Gutierrez-Vilahu, L., & Guerra-Balic, M. (2021). Footprint measurement methods for the assessment and classification of foot types in subjects with Down syndrome: A systematic review. Journal of Orthopaedic Surgery and Research, 16(1), 537.

Halvorsen, M. B., Helverschou, S. B., Axelsdottir, B., Brondbo, P. H., & Martinussen, M. (2023). General measurement tools for assessing mental health problems among children and adolescents with an intellectual disability: A systematic review. Journal of Autism and Developmental Disorders, 53(1), 132–204.

Huang, E. Y., & Lam, S. C. (2021). Review of frailty measurement of older people: Evaluation of the conceptualization, included domains, psychometric properties, and applicability. Aging Medicine, 4(4), 272–291.

Kitamura, K., van Hooff, M., Jacobs, W., Watanabe, K., & de Kleuver, M. (2022). Which frailty scales for patients with adult spinal deformity are feasible and adequate? A systematic review. The Spine Journal, 22(7), 1191–1204.

Kusi-Mensah, K., Nuamah, N. D., Wemakor, S., Agorinya, J., Seidu, R., Martyn-Dickens, C., & Bateman, A. (2022). Assessment tools for executive function and adaptive function following brain pathology among children in developing country contexts: A scoping review of current tools. Neuropsychology Review, 32(3), 459–482.

Lambert, K., & Stanford, J. (2022). Patient-reported outcome and experience measures administered by dietitians in the outpatient setting: Systematic review. Canadian Journal of Dietetic Practice and Research, 83(1), 11.

Le, C. Y., Truong, L. K., Holt, C. J., Filbay, S. R., Dennett, L., Johnson, J. A., Emery, C. A., & Whittaker, J. L. (2021). Searching for the holy grail: A systematic review of health-related quality of life measures for active youth. Journal of Orthopaedic & Sports Physical Therapy, 51(10), 478–491.

Lear, M. K., Lee, E. B., Smith, S. M., & Luoma, J. B. (2022). A systematic review of self-report measures of generalized shame. Journal of Clinical Psychology, 78(7), 1288–1330.

Levasseur, M., Lussier-Therrien, M., Biron, M. L., Dubois, M. F., Boissy, P., Naud, D., Dubuc, N., Coallier, J. C., Calve, J., & Audet, M. (2022). Scoping study of definitions and instruments measuring vulnerability in older adults. Journal of the American Geriatrics Society, 70(1), 269–280.

Lim-Watson, M. Z., Hays, R. D., Kingsberg, S., Kallich, J. D., & Murimi-Worstell, I. B. (2022). A systematic literature review of health-related quality of life measures for women with hypoactive sexual desire disorder and female sexual interest/arousal disorder. Sexual Medicine Reviews, 10(1), 23–41.

Liu, X., McNally, T. W., Beese, S., Downie, L. E., Solebo, A. L., Faes, L., Husain, S., Keane, P. A., Moore, D. J., & Denniston, A. K. (2021). Non-invasive instrument-based tests for quantifying anterior chamber flare in uveitis: A systematic review. Ocular Immunology and Inflammation, 29(5), 982–990.

Lodge, M. E., Moran, C., Sutton, A. D. J., Lee, H. C., Dhesi, J. K., Andrew, N. E., Ayton, D. R., Hunter-Smith, D. J., Srikanth, V. K., & Snowdon, D. A. (2022). Patient-reported outcome measures to evaluate postoperative quality of life in patients undergoing elective abdominal surgery: A systematic review. Quality of Life Research, 31(8), 2267–2279.

Long, M., Stansfeld, J. L., Davies, N., Crellin, N. E., & Moncrieff, J. (2022). A systematic review of social functioning outcome measures in schizophrenia with a focus on suitability for intervention research. Schizophrenia Research, 241, 275–291.

Luck-Sikorski, C., Rossmann, P., Topp, J., Augustin, M., Sommer, R., & Weinberger, N. A. (2022). Assessment of stigma related to visible skin diseases: A systematic review and evaluation of patient-reported outcome measures. Journal of the European Academy of Dermatology and Venereology, 36(4), 499–525.

Madso, K. G., Flo-Groeneboom, E., Pachana, N. A., & Nordhus, I. H. (2021). Assessing momentary well-being in people living with dementia: A systematic review of observational instruments. Frontiers in Psychology, 12, 742510.

Marshall, A. N., Root, H. J., Valovich McLeod, T. C., & Lam, K. C. (2022). Patient-reported outcome measures for pediatric patients with sport-related injuries: A systematic review. Journal of Athletic Training, 57(4), 371–384.

Marsico, P., Meier, L., van der Linden, M. L., Mercer, T. H., & van Hedel, H. J. A. (2022). Psychometric properties of lower limb somatosensory function and body awareness outcome measures in children with upper motor neuron lesions: A systematic review. Developmental Neurorehabilitation, 25(5), 314–327.

Master, H., Coleman, G., Dobson, F., Bennell, K., Hinman, R. S., Jakiela, J. T., & White, D. K. (2021). A narrative review on measurement properties of fixed-distance walk tests up to 40 meters for adults with knee osteoarthritis. Journal of Rheumatology, 48(5), 638–647.

Menegol, N. A., Ribeiro, S. N. S., Okubo, R., Gulonda, A., Sonza, A., Montemezzo, D., & Sanada, L. S. (2022). Quality assessment of neonatal pain scales translated and validated to Brazilian Portuguese: A systematic review of psychometric properties. Pain Management Nursing, 23(4), 559–565.

Mlynczyk, J., Abramowicz, P., Stawicki, M. K., & Konstantynowicz, J. (2022). Non-disease specific patient-reported outcome measures of health-related quality of life in juvenile idiopathic arthritis: A systematic review of current research and practice. Rheumatology International, 42(2), 191–203.

Nascimento, D., Carmona, J., Mestre, T., Ferreira, J. J., & Guimaraes, I. (2021). Drooling rating scales in Parkinson’s disease: A systematic review. Parkinsonism & Related Disorders, 91, 173–180.

Ngwayi, J. R. M., Tan, J., Liang, N., Sita, E. G. E., Obie, K. U., & Porter, D. E. (2021). Systematic review and standardised assessment of Chinese cross-cultural adapted hip patient reported outcome measures (PROMs). PLoS ONE, 16(9), e0257081.

Nolet, P. S., Yu, H., Cote, P., Meyer, A. L., Kristman, V. L., Sutton, D., Murnaghan, K., & Lemeunier, N. (2021). Reliability and validity of manual palpation for the assessment of patients with low back pain: A systematic and critical review. Chiropractic & Manual Therapies, 29(1), 33.

Orth, Z., & van Wyk, B. (2021). Measuring mental wellness among adolescents living with a physical chronic condition: A systematic review of the mental health and mental well-being instruments. BMC Psychology, 9(1), 176.

Overman, M. J., Leeworthy, S., & Welsh, T. J. (2021). Estimating premorbid intelligence in people living with dementia: A systematic review. International Psychogeriatrics, 33(11), 1145–1159.

Parati, M., Ambrosini, E. B., Gallotta, M., Dalla Vecchia, L. A., Ferriero, G., & Ferrante, S. (2022). The reliability of gait parameters captured via instrumented walkways: A systematic review and meta-analysis. European Journal of Physical and Rehabilitation Medicine, 58(3), 363–377.

Pereira, T., & Lousada, M. (2023). Psychometric properties of standardized instruments that are used to measure pragmatic intervention effects in children with developmental language disorder: A systematic review. Journal of Autism and Developmental Disorders, 53(5), 1764–1780.

Phillips, S. M., Summerbell, C., Ball, H. L., Hesketh, K. R., Saxena, S., & Hillier-Brown, F. C. (2021). The validity, reliability, and feasibility of measurement tools used to assess sleep of pre-school aged children: A systematic rapid review. Frontiers in Pediatrics, 9, 770262.

Piscitelli, D., Ferrarello, F., Ugolini, A., Verola, S., & Pellicciari, L. (2021). Measurement properties of the gross motor function classification system, gross motor function classification system-expanded & revised, manual ability classification system, and communication function classification system in cerebral palsy: A systematic review with meta-analysis. Developmental Medicine and Child Neurology, 63(11), 1251–1261.

Pizzinato, A., Liguoro, I., Pusiol, A., Cogo, P., Palese, A., & Vidal, E. (2022). Detection and assessment of postoperative pain in children with cognitive impairment: A systematic literature review and meta-analysis. European Journal of Pain, 26(5), 965–979.

Ploumen, R. L. M., Willemse, S. H., Jonkman, R. E. G., Nolte, J. W., & Becking, A. G. (2023). Quality of life after orthognathic surgery in patients with cleft: An overview of available patient-reported outcome measures. Cleft Palate-Craniofacial Journal, 60(4), 405–412.

Qian, M., Shi, Y., Lv, J., & Yu, M. (2021). Instruments to assess self-neglect among older adults: A systematic review of measurement properties. International Journal of Nursing Studies, 123, 104070.

Ramanathan, D., Chu, S., Prendes, M., & Carroll, B. T. (2021). Validated outcome measures and postsurgical scar assessment instruments in eyelid surgery: A systematic review. Dermatologic Surgery, 47(7), 914–920.

Ratti, M. M., Gandaglia, G., Alleva, E., Leardini, L., Sisca, E. S., Derevianko, A., Furnari, F., Mazzoleni Ferracini, S., Beyer, K., Moss, C., Pellegrino, F., Sorce, G., Barletta, F., Scuderi, S., Omar, M. I., MacLennan, S., Williamson, P. R., Zong, J., MacLennan, S. J., … Cornford, P. (2022). Standardising the assessment of patient-reported outcome measures in localised prostate cancer: A systematic review. European Urology Oncology, 5(2), 153–163.

Roopsawang, I., Zaslavsky, O., Thompson, H., Aree-Ue, S., Kwan, R. Y. C., & Belza, B. (2022). Frailty measurements in hospitalised orthopaedic populations age 65 and older: A scoping review. Journal of Clinical Nursing, 31(9–10), 1149–1163.

Roos, M., Dagenais, M., Pflieger, S., & Roy, J. S. (2022). Patient-reported outcome measures of musculoskeletal symptoms and psychosocial factors in musicians: A systematic review of psychometric properties. Quality of Life Research, 31(9), 2547–2566.

Roth, E. M., Lubitz, C. C., Swan, J. S., & James, B. C. (2020). Patient-reported quality-of-life outcome measures in the thyroid cancer population. Thyroid, 30(10), 1414–1431.

Rymer, J. A., Narcisse, D., Cosiano, M., Tanaka, J., McDermott, M. M., Treat-Jacobson, D. J., Conte, M. S., Tuttle, B., Patel, M. R., & Smolderen, K. G. (2022). Patient-reported outcome measures in symptomatic, non-limb-threatening peripheral artery disease: A state-of-the-art review. Circulation: Cardiovascular Interventions, 15(1), e011320.

Sabah, S. A., Hedge, E. A., Abram, S. G. F., Alvand, A., Price, A. J., & Hopewell, S. (2021). Patient-reported outcome measures following revision knee replacement: A review of PROM instrument utilisation and measurement properties using the COSMIN checklist. BMJ Open, 11(10), e046169.

Salas, M., Mordin, M., Castro, C., Islam, Z., Tu, N., & Hackshaw, M. D. (2022). Health-related quality of life in women with breast cancer: A review of measures. BMC Cancer, 22(1), 66.

Schulz, J., Vitt, E., & Niemier, K. (2022). A systematic review of motor control tests in low back pain based on reliability and validity. Journal of Bodywork and Movement Therapies, 29, 239–250.

Schuttert, I., Timmerman, H., Petersen, K. K., McPhee, M. E., Arendt-Nielsen, L., Reneman, M. F., & Wolff, A. P. (2021). The definition, assessment, and prevalence of (human assumed) central sensitisation in patients with chronic low back pain: A systematic review. Journal of Clinical Medicine, 10(24), 5931.

Shakshouk, H., Tkaczyk, E. R., Cowen, E. W., El-Azhary, R. A., Hashmi, S. K., Kenderian, S. J., & Lehman, J. S. (2021). Methods to assess disease activity and severity in cutaneous chronic graft-versus-host disease: A critical literature review. Transplantation and Cellular Therapy, 27(9), 738–746.

Slavych, B. K., Zraick, R. I., & Ruleman, A. (2021). A systematic review of voice-related patient-reported outcome measures for use with adults. Journal of Voice. https://doi.org/10.1016/j.jvoice.2021.09.032

Smeele, H. P., Dijkstra, R. C. H., Kimman, M. L., van der Hulst, R., & Tuinder, S. M. H. (2022). Patient-reported outcome measures used for assessing breast sensation after mastectomy: Not fit for purpose. Patient, 15(4), 435–444.

Strong, A., Arumugam, A., Tengman, E., Roijezon, U., & Hager, C. K. (2022). Properties of tests for knee joint threshold to detect passive motion following anterior cruciate ligament injury: A systematic review and meta-analysis. Journal of Orthopaedic Surgery and Research, 17(1), 134.

Su, Y., Cochrane, B. B., Yu, S. Y., Reding, K., Herting, J. R., & Zaslavsky, O. (2022). Fatigue in community-dwelling older adults: A review of definitions, measures, and related factors. Geriatric Nursing, 43, 266–279.

Thoen, A., Steyaert, J., Alaerts, K., Evers, K., & Van Damme, T. (2023). A systematic review of self-reported stress questionnaires in people on the autism spectrum. Review Journal of Autism and Developmental Disorders, 10(2), 295–318.

Tinti, S., Parati, M., De Maria, B., Urbano, N., Sardo, V., Falcone, G., Terzoni, S., Alberti, A., & Destrebecq, A. (2022). Multi-dimensional dyspnea-related scales validated in individuals with cardio-respiratory and cancer diseases: A systematic review of psychometric properties. Journal of Pain and Symptom Management, 63(1), 46–58.

Tu, J. Y., Jin, G., Chen, J. H., & Chen, Y. C. (2022). Caregiver burden and dementia: A systematic review of self-report instruments. Journal of Alzheimer’s Disease, 86(4), 1527–1543.

Ullrich, P., Werner, C., Abel, B., Hummel, M., Bauer, J. M., & Hauer, K. (2022). Assessing life-space mobility: A systematic review of questionnaires and their psychometric properties. Zeitschrift für Gerontologie und Geriatrie, 55(8), 660–666.

Valenti, G. D., & Faraci, P. (2022). Instruments measuring fatalism: A systematic review. Psychological Assessment, 34(2), 159–175.

van Dokkum, N. H., Reijneveld, S. A., de Best, J., Hamoen, M., Te Wierike, S. C. M., Bos, A. F., & de Kroon, M. L. A. (2022). Criterion validity and applicability of motor screening instruments in children aged 5–6 years: A systematic review. International Journal of Environmental Research and Public Health, 19(2), 781.

van Krugten, F. C. W., Feskens, K., Busschbach, J. J. V., Hakkaart-van Roijen, L., & Brouwer, W. B. F. (2021). Instruments to assess quality of life in people with mental health problems: A systematic review and dimension analysis of generic, domain- and disease-specific instruments. Health and Quality of Life Outcomes, 19(1), 249.

van Raath, M. I., Chohan, S., Wolkerstorfer, A., van der Horst, C., Limpens, J., Huang, X., Ding, B., Storm, G., van der Hulst, R., & Heger, M. (2021). Treatment outcome measurement instruments for port wine stains: A systematic review of their measurement properties. Dermatology, 237(3), 416–432.

Wang, C., Chen, H., Qian, M., Shi, Y., Zhang, N., & Shang, S. (2022). Balance function in patients with COPD: A systematic review of measurement properties. Clinical Nursing Research, 31(6), 1000–1013.

Wang, X., Cao, X., Li, J., Deng, C., Wang, T., Fu, L., & Zhang, Q. (2021). Evaluation of patient-reported outcome measures in intermittent self-catheterization users: A systematic review. Archives of Physical Medicine and Rehabilitation, 102(11), 2239–2246.

Wen, H., Yang, Z., Zhu, Z., Han, S., Zhang, L., & Hu, Y. (2022). Psychometric properties of self-reported measures of health-related quality of life in people living with HIV: A systematic review. Health and Quality of Life Outcomes, 20(1), 5.

Wessels, M. D., van der Putten, A. A. J., & Paap, M. C. S. (2021). Inventory of assessment practices in people with profound intellectual and multiple disabilities in three European countries. Journal of Applied Research in Intellectual Disabilities, 34(6), 1521–1537.

Wiitavaara, B., & Florin, J. (2022). Content and psychometric evaluations of questionnaires for assessing physical function in people with arm-shoulder-hand disorders: A systematic review of the literature. Disability and Rehabilitation, 44(24), 7575–7586.

Wohlleber, K., Heger, P., Probst, P., Engel, C., Diener, M. K., & Mihaljevic, A. L. (2021). Health-related quality of life in primary hepatic cancer: A systematic review assessing the methodological properties of instruments and a meta-analysis comparing treatment strategies. Quality of Life Research, 30(9), 2429–2466.

Wu, J. W., Pepler, L., Maturi, B., Afonso, A. C. F., Sarmiento, J., & Haldenby, R. (2022). Systematic review of motor function scales and patient-reported outcomes in spinal muscular atrophy. American Journal of Physical Medicine and Rehabilitation, 101(6), 590–608.

Yanez Touzet, A., Bhatti, A., Dohle, E., Bhatti, F., Lee, K. S., Furlan, J. C., Fehlings, M. G., Harrop, J. S., Zipser, C. M., Rodrigues-Pinto, R., Milligan, J., Sarewitz, E., Curt, A., Rahimi-Movaghar, V., Aarabi, B., Boerger, T. F., Tetreault, L., Chen, R., Guest, J. D., … AO Spine RECODE-DCM Steering Committee. (2022). Clinical outcome measures and their evidence base in degenerative cervical myelopathy: A systematic review to inform a core measurement set (AO Spine RECODE-DCM). BMJ Open, 12(1), e057650.

Zanin, E., Aiello, E. N., Diana, L., Fusi, G., Bonato, M., Niang, A., Ognibene, F., Corvaglia, A., De Caro, C., Cintoli, S., Marchetti, G., Vestri, A., & Italian Working Group on Tele-Neuropsychology. (2022). Tele-neuropsychological assessment tools in Italy: A systematic review on psychometric properties and usability. Neurological Sciences, 43(1), 125–138.

Guyatt, G. H., Oxman, A. D., Vist, G. E., Kunz, R., Falck-Ytter, Y., Alonso-Coello, P., & Schünemann, H. J. (2008). GRADE: An emerging consensus on rating quality of evidence and strength of recommendations. BMJ, 336(7650), 924–926.

Munn, Z., Stern, C., Aromataris, E., Lockwood, C., & Jordan, Z. (2018). What kind of systematic review should I conduct? A proposed typology and guidance for systematic reviewers in the medical and health sciences. BMC Medical Research Methodology, 18, 1–9.

Lefebvre, C., Glanville, J., Briscoe, S., Featherstone, R., Littlewood, A., Marshall, C., Metzendorf, M.-I., Noel-Storr, A., Paynter, R., Rader, T., Thomas, J., & Wieland, L. (2022). Chapter 4: Searching for and selecting studies. In J. Higgins et al. (Eds.), Cochrane handbook for systematic reviews of interventions version 6.3 (updated February 2022). Cochrane.

Whiting, P. F., Rutjes, A. W., Westwood, M. E., Mallett, S., Deeks, J. J., Reitsma, J. B., Leeflang, M. M., Sterne, J. A., Bossuyt, P. M., & QUADAS-2 Group. (2011). QUADAS-2: A revised tool for the quality assessment of diagnostic accuracy studies. Annals of Internal Medicine, 155(8), 529–536.

Lucas, N. P., Macaskill, P., Irwig, L., & Bogduk, N. (2010). The development of a quality appraisal tool for studies of diagnostic reliability (QAREL). Journal of Clinical Epidemiology, 63(8), 854–861.

Sterne, J. A., Hernán, M. A., Reeves, B. C., Savović, J., Berkman, N. D., Viswanathan, M., Henry, D., Altman, D. G., Ansari, M. T., & Boutron, I. (2016). ROBINS-I: A tool for assessing risk of bias in non-randomised studies of interventions. BMJ, 355, i4919.

Wells, G., Shea, B., O’Connell, D., Peterson, J., Welch, V., Losos, M., & Tugwell, P. (2014). Newcastle-Ottawa quality assessment scale cohort studies. University of Ottawa.

Matvienko-Sikar, K., Hussey, S., Mellor, K., Byrne, M., Clarke, M., Kirkham, J. J., Kottner, J., Quirke, F., Saldanha, I. J., & Smith, V. (2024). Using behavioral science to increase core outcome set use in trials. Journal of Clinical Epidemiology, 168, 111285.

Mokkink, L. B., Elsman, E. B. M., & Terwee, C. B. COSMIN guideline 2.0 for systematic reviews of patient-reported outcome measures. Submitted for publication.

Elsman, E. B. M., Mokkink, L. B., Terwee, C. B., Beaton, D., Gagnier, J. J., Tricco, A. C., Baba, A., Butcher, N. J., Smith, M., Hofstetter, C., Aiyegbusi, O. L., Berardi, A., Farmer, J., Haywood, K. L., Krause, K. R., Markham, S., Mayo-Wilson, E., Mehdipour, A., Ricketts, J., Szatmari, P., Touma, Z., Moher, D., & Offringa, M. Guideline for reporting systematic reviews of outcome measurement instruments (OMIs): PRISMA-COSMIN for OMIs 2024. Accepted for publication.

Schiavo, J. H. (2019). PROSPERO: An international register of systematic review protocols. Medical Reference Services Quarterly, 38(2), 171–180.

Funding

The authors declare that no funds, grants, or other support were received during the preparation of this manuscript.

Author information

Contributions

CB conceptualized and designed the study. Material preparation was performed by EE. Data extraction was performed by EE, LM, OA, AC, KH, KMS, DO, JP, ISM, and CB. Data analysis was performed by EE. The first draft of the manuscript was written by EE and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose. Drs. Terwee and Mokkink are the founders of COSMIN.

Ethical approval

This is a methodological study for which no ethical approval is required. There were no individual participants included in the study.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Elsman, E.B.M., Mokkink, L.B., Abma, I.L. et al. Methodological quality of 100 recent systematic reviews of health-related outcome measurement instruments: an overview of reviews. Qual Life Res (2024). https://doi.org/10.1007/s11136-024-03706-z
