Abstract

Optical sensors mounted on uncrewed aerial vehicles (UAVs) are typically pointed straight downward to simplify structure-from-motion and image processing. High horizontal and vertical image overlap during UAV missions effectively leads to each object being measured from a range of different view angles, resulting in a rich multi-angular reflectance dataset. We propose a method to extract reflectance data, and their associated distinct view zenith angles (VZA) and view azimuth angles (VAA), from UAV-mounted optical cameras, enhancing plant parameter classification compared to standard orthomosaic reflectance retrieval. A standard (nadir) and a multi-angular, 10-band multispectral dataset were collected for maize using a UAV on two different days. Reflectance data were grouped by VZA and VAA (on average 2594 spectra/plot/day for the multi-angular data, 890 spectra/plot/day for nadir flights only, and 13 spectra/plot/day for a standard orthomosaic), serving as predictor variables for leaf chlorophyll content (LCC), leaf area index (LAI), green leaf area index (GLAI), and nitrogen balanced index (NBI) classification. Results consistently showed higher accuracy using grouped VZA/VAA reflectance compared to the standard orthomosaic data. Pooling all reflectance values across viewing directions did not yield satisfactory results. Performing multiple flights to obtain a multi-angular dataset did not improve performance over a multi-angular dataset obtained from a single nadir flight, highlighting the sufficiency of a single nadir flight. Our openly shared code (https://github.com/ReneHeim/proj_on_uav) facilitates access to reflectance data from pre-defined VZA/VAA groups, benefiting cross-disciplinary and agricultural scientists in harnessing the potential of multi-angular datasets.

Introduction

In agriculture, plant breeding, and crop science, structural and biochemical plant traits are collected and analyzed to understand and improve crop performance, yield, and overall crop quality (Herrmann & Berger, 2021). Mapping fields with remote sensing technologies, such as uncrewed aerial vehicles (UAVs), has been shown to reduce sampling time and to produce good estimates of plant traits at the field scale in comparison to random manual sampling. For example, to predict yield and productivity, the leaf area index (LAI) is a widely applied measure in vegetation remote sensing (Fang et al., 2019; Hilty et al., 2021). There is a strong relationship between LAI, green LAI (GLAI), plant nitrogen and chlorophyll content (Gitelson et al., 2014). Plants with higher LAI tend to have higher nitrogen and chlorophyll content, which can result in increased photosynthesis and plant productivity. Optical remote sensing methods have proven useful for obtaining key biophysical variables such as LAI, leaf chlorophyll content (LCC), and leaf nitrogen content (LNC), as the interaction of radiation with vegetation depends on its structural and biochemical properties (Asner et al., 2003; Gitelson et al., 2014; Houborg & Boegh, 2008; Schaepman et al., 2005; Schaepman-Strub et al., 2006; Schlemmer et al., 2013).

When using optical UAV remote sensing in crop research, it has become standard to collect plant trait information with sensors facing straight down. However, this habit limits the information that can be gained from a UAV mission. Several studies have suggested including imagery from different viewing angles to better capture the three-dimensional structure of plants (Asner et al., 1998; Burkart et al., 2015; He et al., 2020; Li et al., 2021a, 2021b, 2021c; Roosjen et al., 2018). Burkart et al. (2015) were among the first to use a UAV and a non-imaging spectrometer to study angular effects and found significant variations in various spectral vegetation indices. They highlighted the necessity of considering angular effects in optical sensors when assessing vegetation.

The prediction of plant canopy properties, such as LAI, canopy height, and canopy clumping, has been improved by Roosjen et al. (2017, 2018) using a 2D imaging system to collect a multi-angular and a nadir dataset. They harnessed the high overlap between adjacent images, which results in objects on the ground being viewed in multiple different images. By inversion of the PROSAIL radiative transfer model (Jacquemoud et al., 2009), they showed that multi-angular data performed better than inversion based on nadir data for estimating LAI and LCC. In a study by Li et al. (2021b), RGB data at close range were collected using a custom-built buggy at view zenith angles of 0° and 45°, resulting in a multi-angular dataset for estimating wheat (Triticum aestivum L.) leaf parameters. The multi-angular data yielded improved results by allowing more plant parts to be represented by a denser and more detailed point cloud. Another study (He et al., 2020) utilized close-range hyperspectral data obtained with a handheld spectroradiometer. The data were grouped based on view zenith angles (VZA) ranging from −60° to +60°, aiming to estimate LAI in winter wheat. Results indicated the red-edge region's pivotal role in LAI estimation when viewed from the nadir direction, with predictive ability decreasing as the view zenith angle increased. Multi-angular data have thus been recognized as a promising resource in precision agriculture.

The previously mentioned studies often used handheld spectrometers and small terrestrial vehicles to capture multi-angular data. However, using aerial imagery from UAVs promises a less labor-intensive and more holistic collection of data. The standard flight plan for UAV missions usually captures neighboring images with high overlap to allow for effective scene reconstruction by structure-from-motion. This results in a set of images where each point/pixel on the ground is seen from up to 40 different viewing angles (Roosjen et al., 2017). The range of reflectance values from the associated combination of VZAs (0°–90°) and view azimuth angles (0°–360° or −180°–180°) depends on the field of view of the camera system and the heading of the UAV. Both can be controlled with a gimbal mounted on the UAV and by setting a heading direction in the mission planning software. However, even when the camera is facing downward, only the center pixel captures reflectance signals at 0° VZA (i.e., nadir position). The VZA of the other pixels in the scene increases with the distance from the pixel to the image center. Different combinations of VZA and view azimuth angles (VAA) result in different intensities and shapes of the associated spectral signal (Burkart et al., 2015; Escadafal & Huete, 1991).

This variation of intensity and shape complicates the generation of maps by structure-from-motion (Hardin & Jensen, 2011; Seifert et al., 2019). Therefore, off-nadir viewing angles are usually removed or corrected for in airborne remote sensing. In the case of structure-from-motion algorithms used for UAV image processing, the algorithms typically select the most nadir-facing pixels for the final orthomosaic (Hardin & Jensen, 2011). While the removal of oblique angle information simplifies photogrammetric processes, the reflectance information from such observation angles is also removed and can no longer be used to analyze plant canopies and their biochemical and structural properties (Roosjen et al., 2018; Roth et al., 2018).

Despite some studies using multi-angular datasets, the current standard in UAV remote sensing is still to use photogrammetrically orthorectified image products that are blended from the entire range of images collected during the UAV mission. During image blending, pixels that are part of the overlapping areas of two or more individual images are chosen based on different selection rules. Commonly, a weighted average of the pixel values in the overlapping areas is used to avoid transition lines; alternatively, maximum or minimum intensity blending gives preference to the highest or lowest intensity in the overlap areas (Agisoft LLC, 2022). However, concerns have been raised that blending of pixels potentially changes the original values from the raw images (Wang et al., 2020). None of these blending methods provides the option to select values that were derived from a specific range of VZAs or VAAs. Yet selecting specific viewing angles might be relevant for tasks such as estimating LAI. It is also known that leaf orientation differs across plant species and has a drastic influence on the reflectance signal (Müller-Linow et al., 2015). Therefore, it would be useful to select specific and optimal VZA-VAA angle combinations to capture plant canopies and leaves from an intentional perspective. Ultimately, current standards in UAV photogrammetry workflows result in a single orthomosaic which is used to extract plant trait information. Inevitably, this reduces the wealth of observation angles to a single observation per pixel, thus discarding possibly relevant data.

The purpose of this study is to investigate the added value of moving beyond the standard UAV image processing approach. First, a method is presented to effectively leverage all available reflectance data, incorporating both the VZA and VAA of the captured imagery. The method allows selection and extraction of sample areas based on a target coordinate. This way, informative reflectance data are not discarded during orthomosaic generation. Next, the method is applied in a case study on maize (Zea mays). For this purpose, multispectral reflectance data were collected on two consecutive days to classify LAI, GLAI, LCC, and a proxy metric for leaf nitrogen, the nitrogen-balanced index (NBI). Current knowledge is advanced by showing that multi-angular reflectance data can also be used for classification problems and not only for radiative transfer modelling, as shown in previous studies. The first research hypothesis states that (i) incorporating distinct VZA and VAA reflectance groups will improve classification compared to the standard orthomosaic approach. Further, it is hypothesized that (ii) a standard nadir flight already provides sufficient viewing angles for an accurate classification, without the need for additional flights to further increase the range of viewing angles. Before testing the hypotheses, it is assessed which ground sampling distance is most suitable for the analysis.

To our knowledge, no previous study has provided such a detailed assessment of a multi-angular, multispectral UAV reflectance dataset while openly providing the analysis and method for editing, reuse, and interdisciplinary transfer. The available code will allow users to extract reflectance signatures from all available viewing angles contained in their data.

Methods

Experimental site

In September 2020, a multivariate dataset was collected for a 4-hectare maize field (Zea mays) on the experimental farm Bottelare (Lat = 50.95746, Lon = 3.76629) of Ghent University in East Flanders, Belgium (Fig. 1). This field had a loamy sand soil with low organic content and moderate to high permeability (www.geopunt.be). The region has a temperate oceanic climate with no dry season, warm summers, and a frost-free period of approximately 199 days. The mean temperature is recorded as 11.0 °C, the average elevation is ~ 60 m, and precipitation is about 786 mm per year. The maize was at a late reproductive growth stage and close to maturity. A nitrogen treatment trial was established in this field. The nitrogen treatment caused variations in NBI, LAI, GLAI, and LCC. The details of the nitrogen experiment were confidential but are not required to answer the research questions of this study.

Fig. 1 Experimental site (Zea mays) in Bottelare, Belgium (BD72/Belgian Lambert 72 EPSG::31370). The white points show the 26 sampling points from which ground reference data was collected. From all pixels within a 2 m radius of these points, multispectral reflectance signals were extracted. Checkerboard patterns indicate ground control points that were used for georeferencing remotely sensed data.

To select the sample sites within the nitrogen experiment, we first classified the different plots into low, medium, and high growth vigor based on the Enhanced Vegetation Index (Huete et al., 2002) and the Normalized Difference Vegetation Index (Rouse et al., 1974), which were extracted from an initial flight mission to survey the site. We then selected 26 sampling points, spread evenly across the different growth vigor classes. From each of these points, a range of reference data and remote sensing signals was collected as outlined below. A precision GNSS (R8s, Trimble, Sunnyvale, California, United States) was used to identify each sampling point precisely in the field.

Ground reference data

For each sampling point, the NBI, LCC, LAI and GLAI were measured from the three plants closest to the established plot coordinate. The sampling was conducted on September 9 and 10, 2020 and serves as ground reference data for the UAV campaign.

The LCC and NBI were measured at 30 different points on different upper leaf surfaces for the top, middle, and lower third along the vertical axis of the three sampled plants using a Dualex leafclip sensor (Force A, Orsay, France). For the analysis, the lower-third measurements were discarded as it turned out that most leaves were fully senescent at this late growth stage. This resulted in 60 measurements per sampling point (3 plants × (10 upper canopy measurements + 10 middle canopy measurements)), or 1560 measurements across the 26 sampling points. For maize, Cerovic et al. (2012) confirmed a direct linear response of the sensor to LCC and a direct equivalence between Dualex units and total chlorophyll content in μg cm−2.

On the same plants, the leaf length (LL), leaf width (LW) and leaf greenness of all leaves were measured. Greenness was estimated as the percentage of the leaf that was still green. Three different colleagues rated the leaf greenness in the field. A sub-sample of 30 leaves was used to assess the individual rating bias of each colleague, and the average rating of all three colleagues was used to calibrate the overall field ratings.

From the LW and LL measurements, leaf area (LA) was calculated using an allometric relationship between LL and LW in maize (LA = LL × LW × 0.75) according to multiple studies (e.g., Mario et al., 2018). In addition, the correctness of the calculation was confirmed by measuring LW, LL, and the LA of 20 leaves from the mid- and top canopy sections, using a leaf surface area meter (LI-3100C, LI-COR Biosciences GmbH, Germany). The density of the maize plants was measured by counting the number of maize plants along a 2 m intra-row distance and the average of three inter-row distances was measured as well. Then, all LA measurements were summed and related to planting density to yield LAI. Similarly, GLAI was estimated by multiplying the LA with the greenness and was then related to plant density.
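The leaf-area calculation described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the study's own code: leaf dimensions are assumed to be in centimetres, planting density is assumed to be derived from a plant count along a row segment and the mean inter-row distance, and LAI is assumed to be the mean leaf area per plant multiplied by plants per square metre. All function names and example values are hypothetical.

```python
def leaf_area_cm2(length_cm, width_cm, shape_factor=0.75):
    """Allometric maize leaf area: LA = LL x LW x 0.75."""
    return length_cm * width_cm * shape_factor


def plant_density_per_m2(plants_counted, row_length_m, inter_row_m):
    """Plants per square metre from an intra-row count and the row spacing."""
    return plants_counted / (row_length_m * inter_row_m)


def leaf_area_index(leaves, n_plants, density_per_m2, use_greenness=False):
    """LAI (or GLAI if use_greenness=True).

    leaves: iterable of (length_cm, width_cm, green_fraction) for all
            leaves of the sampled plants (green_fraction between 0 and 1).
    """
    total_cm2 = 0.0
    for length_cm, width_cm, green_fraction in leaves:
        area = leaf_area_cm2(length_cm, width_cm)
        if use_greenness:
            area *= green_fraction  # keep only the green share of the leaf
        total_cm2 += area
    mean_area_m2 = total_cm2 / n_plants / 10_000.0  # cm2 -> m2 per plant
    return mean_area_m2 * density_per_m2


# Hypothetical example: 3 plants with 10 identical leaves each,
# 15 plants counted along 2 m of row, 0.75 m between rows.
leaves = [(80.0, 10.0, 0.5)] * 30
density = plant_density_per_m2(15, 2.0, 0.75)
lai = leaf_area_index(leaves, n_plants=3, density_per_m2=density)
glai = leaf_area_index(leaves, n_plants=3, density_per_m2=density, use_greenness=True)
print(lai, glai)
```

Keeping the greenness weighting as a switch makes it explicit that GLAI differs from LAI only by the green fraction applied to each leaf.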

UAV data

Multispectral aerial imagery was collected to derive all potential VZAs and VAAs and their associated reflectance data. To confirm that plant canopy spectral patterns in the data are robust, multispectral imagery on two consecutive days was collected (2020-09-06, 3.18 pm–5.09 pm and 2020-09-07, 9.38 am–11.15 am). Flights were performed with a Matrice 600 Pro uncrewed aerial vehicle (DJI, Nanshan, Shenzhen, China) equipped with a MicaSense RedEdge Dual multispectral camera system (MicaSense Inc, Seattle, USA). The camera was mounted on a Gremsy T3V2 gimbal (Gremsy, Ho Chi Minh City, Vietnam). The multispectral camera has a horizontal and vertical field of view of 47.2° and 35.4°, respectively. It has ten spectral bands, with central wavelengths at 444, 475, 531, 560, 650, 668, 705, 717, 740, and 842 nm and is connected to a downwelling light sensor (DLS2, MicaSense Inc, Seattle, USA). At the mission altitude of 60 m, the theoretical ground sampling distance (i.e., distance between the centers of two adjacent pixels) was 4.17 cm.
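The theoretical ground sampling distance follows from the pinhole camera relation between flight altitude, pixel pitch, and focal length. The sketch below is illustrative only: the pixel pitch (3.75 µm) and focal length (5.4 mm) are assumed values for this type of camera and are not stated in the text; only the 60 m altitude comes from the mission description.

```python
def ground_sampling_distance_cm(altitude_m, pixel_pitch_um, focal_length_mm):
    """GSD = altitude x pixel pitch / focal length (pinhole model, nadir view)."""
    gsd_m = altitude_m * (pixel_pitch_um * 1e-6) / (focal_length_mm * 1e-3)
    return gsd_m * 100.0  # metres -> centimetres


# ASSUMED sensor parameters (not given in the text): 3.75 um pitch, 5.4 mm lens
gsd_cm = ground_sampling_distance_cm(altitude_m=60.0,
                                     pixel_pitch_um=3.75,
                                     focal_length_mm=5.4)
print(round(gsd_cm, 2))
```

Under these assumed parameters the result agrees with the 4.17 cm reported for the 60 m mission altitude.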

The first flight on each day had the cameras in nadir position. Due to the camera/lens specifications, the VZA range in nadir position is limited to between 0° and a maximum of 23.6°. To collect a wider range of VZAs and VAAs, two additional flights were performed each day with the view angle set manually to 20° (Table 1). Across all three flights, the flight path directions crossed each other to ensure that the sun was at different azimuth directions relative to the camera (S1 and S2).

Conditions were not the same during the flights. The flights were conducted in the afternoon on the first day and in the morning on the second day. The first day was not optimal for reflectance data collection due to intermittent cloud cover and changing illumination. The second day had close to optimal lighting conditions. The difference in flight time between days resulted in different illumination directions caused by different sun azimuth angles (SAA). On the first day, the sun illuminated the maize field from the south-west direction (2020-09-06 = 212°–242° SAA). This means that sun rays were parallel to the rows between plots. On the second day the sun was south-east of the field, meaning that illumination was perpendicular to the rows (2020-09-07 = 109°–133°). The weather conditions and cloud cover also affected the proportion of direct and diffuse irradiance, with less direct irradiance on the first day and more on the second (Table 2).

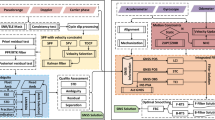

Six custom-built, near-Lambertian gray-scale radiometric calibration targets were placed in the field during data acquisition (see Daniels et al. (2023) for more information). The reflectance of each panel was measured using a micro-spectrometer in the range of 350 to 810 nm (Ocean ST VIS, Ocean Optics, Orlando, USA). After the flights, the raw data had to be pre-processed before the multi-angular reflectance data could be extracted. This entailed a brief screening for blurry images, relative radiometric calibration, and geo-referencing. For more details, see the flow chart in Fig. 2 and the further explanations given below.

Fig. 2 Flowchart showing the image pre-processing after multispectral images were collected. The photogrammetry process is displayed, followed by the scripted workflow of the reflectance and view angle retrieval. The file names (e.g. 02_filter_…py) are the same as in the online repository from where the method can be downloaded.

Initially, multispectral raw digital numbers (DN) were converted to reflectance using the empirical line method, based on the custom-built gray-scale panels and the micro-spectrometer reference measurements (Aasen et al., 2018). Vignetting effects, row gradient effects, and lens distortion were removed using a Python script (Daniels et al., 2023; https://github.com/micasense) that was integrated into a custom scripted workflow (https://github.com/ReneHeim/proj_on_uav), also developed in the Python programming language (Van Rossum & Drake, 2009). The radiometrically calibrated and corrected imagery was processed using Agisoft Metashape 1.8.3.14331 (Agisoft LLC, St. Petersburg, Russia). Example spectra can be examined in the supplementary data section (S3–S6).
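The empirical line method amounts to a per-band linear fit between the digital numbers observed over the calibration panels and their reference reflectance. The following is a minimal sketch of that idea using an ordinary least-squares fit; the function names and the panel values are hypothetical, and the published workflow may differ in detail.

```python
def fit_empirical_line(panel_dn, panel_reflectance):
    """Least-squares fit of reflectance = gain * DN + offset for one band."""
    n = len(panel_dn)
    mean_dn = sum(panel_dn) / n
    mean_rf = sum(panel_reflectance) / n
    sxx = sum((d - mean_dn) ** 2 for d in panel_dn)
    sxy = sum((d - mean_dn) * (r - mean_rf)
              for d, r in zip(panel_dn, panel_reflectance))
    gain = sxy / sxx
    offset = mean_rf - gain * mean_dn
    return gain, offset


def dn_to_reflectance(dn, gain, offset):
    """Apply the fitted line to a raw digital number."""
    return gain * dn + offset


# Hypothetical mean DN over six gray-scale panels in one band and the
# matching reference reflectance from the spectrometer measurements.
panel_dn = [4000, 9000, 19000, 29000, 39000, 49000]
panel_reflectance = [0.05, 0.10, 0.20, 0.30, 0.40, 0.50]

gain, offset = fit_empirical_line(panel_dn, panel_reflectance)
print(dn_to_reflectance(24000, gain, offset))
```

The fit is repeated independently for each of the ten bands, since gain and offset depend on the band's sensitivity and the illumination at acquisition time.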

Image alignment was grouped for the first and second day to limit stochastic errors to a single alignment process. For both days, pre-configured image alignment settings from the Agisoft Metashape Professional User Manual version 1.8 (Agisoft LLC, 2022) were used with slight modifications as reported in the supplementary data section (S7). The sparse point cloud was improved by optimizing the reconstruction uncertainty, the projection accuracy, and the reprojection error (Over et al., 2021). Then, image locations were optimized based on ground control points and according to best practices in UAV remote sensing (Harwin et al., 2015). The exact locations of the ground control points were logged with a GNSS receiver at an accuracy of 0.015 m. Dense point clouds and digital elevation models (DEMs) were produced under quality settings reported in the supplementary material (S7). For the pixel centers to match exactly in further processing, the DEM and orthomosaic were superimposed by matching the orthomosaic boundaries with the DEM boundaries (Fig. 2, see photogrammetry box). Both the orthomosaic and the DEM were exported as GeoTIFF files at ground sampling distances (GSD) of 20 cm, 50 cm, and 100 cm. As the information of each individual pixel in the overlapping areas of adjacent images is lost in the orthomosaic due to blending, all individual orthophotos were exported using the Belgian Lambert 72 (EPSG::31370) coordinate system. Compared to the orthomosaic, the orthophotos still contain all available, not yet blended pixels and were the basis for the calculation of VZA and VAA (Fig. 2). For the calculation of VZA and VAA, the camera positions (xcam, ycam, zcam) were exported as an ASCII file from Agisoft Metashape. Finally, the standard orthomosaic was also exported to be used for a comparison with the presented multi-angular data extraction approach.

The standard orthomosaic data extraction resulted in 13 reflectance spectra per plot (n = 26) and day (n = 2) for both flight patterns (i.e., nadir alone and nadir combined with two oblique flights). Our custom-developed data extraction resulted in 890 reflectance spectra per plot and day for the nadir flight (N) and 2594 reflectance spectra per plot and day for the nadir flight combined with the two oblique flights (NOO). These numbers resulted from a resampled ground sampling distance of 100 cm and a radius of 200 cm around the center of each plot. If a smaller ground sampling distance is used, the number of extracted reflectance spectra increases (e.g., > 1 billion data points at a ground sampling distance of 10 cm). All processing was performed on a Windows 10 (64 bit) operating system, using an Intel(R) Core(TM) i9-10850K CPU @ 3.60 GHz, 3600 MHz, 10 Core(s), 20 Logical Processor(s), an NVIDIA GeForce RTX 3060 Ti 8 GB graphics card, and 64 GB of installed memory.

Calculating VZA and VAA

Once all photogrammetry products were exported from Metashape (i.e., digital elevation model, orthomosaic, orthophotos, camera positions), they were converted, in Python, into tabular data so that each row of the table contained a single pixel value. For each pixel, the image coordinate, the world coordinate in longitude and latitude, the associated reflectance signal, and the elevation information were extracted from the image EXIF data. Eqs. 1 and 2 were modified from Roosjen et al. (2017) to yield the VZA and VAA in degrees:

where xcam, ycam, zcam and xpix, ypix, zpix represent the longitude, latitude and altitude of the camera and the pixel, respectively.
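The geometry behind both angles can be sketched in a few lines. This is a minimal illustration and not necessarily identical to Eqs. 1 and 2: it assumes that camera and pixel positions are given in a projected metric coordinate system (such as Belgian Lambert 72), that the VZA is the angle between the vertical and the line from the pixel to the camera, and that the VAA is the direction of the camera as seen from the pixel, measured clockwise from north. The function names and the azimuth convention are assumptions.

```python
import math


def view_zenith_angle(x_cam, y_cam, z_cam, x_pix, y_pix, z_pix):
    """Angle (degrees) between the vertical and the pixel-to-camera line."""
    horizontal = math.hypot(x_cam - x_pix, y_cam - y_pix)
    vertical = z_cam - z_pix
    return math.degrees(math.atan2(horizontal, vertical))


def view_azimuth_angle(x_cam, y_cam, x_pix, y_pix):
    """Direction of the camera seen from the pixel, in degrees within [0, 360).

    ASSUMED convention: 0 = north (+y), 90 = east (+x), clockwise.
    For a pixel exactly below the camera the azimuth is undefined;
    atan2(0, 0) returns 0 in that case.
    """
    return math.degrees(math.atan2(x_cam - x_pix, y_cam - y_pix)) % 360.0


# Hypothetical example: camera 60 m above the ground and 60 m east of the pixel
vza = view_zenith_angle(60.0, 0.0, 60.0, 0.0, 0.0, 0.0)
vaa = view_azimuth_angle(60.0, 0.0, 0.0, 0.0)
print(vza, vaa)
```

Using `atan2` rather than `atan` avoids a division by zero for pixels at camera height and resolves the azimuth quadrant without extra case distinctions.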

The resulting tabular data contained reflectance values for 10 multispectral bands and the associated VZA and VAA values. As the resulting data exceeded multiple gigabytes, they were managed using the Apache Feather file format (Apache Software Foundation, Delaware, USA). This format allows high data volume compression and fast reading and writing speeds. To associate each listed reflectance value with the LAI, GLAI, NBI, and LCC reference values, pixels within a radius of 200 cm from the logged sampling coordinates were selected. To perform the pixel selection at high computational efficiency, the pixel list was first converted into a k-dimensional binary search tree, in which the data in each node is a k-dimensional point in space. This facilitates the look-up of sampling points and the definition of sampling areas.
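A radius search on a k-d tree can be sketched as follows. This is an illustrative example that assumes SciPy's `KDTree` as the tree implementation and projected metric pixel coordinates; the helper name and the synthetic 1 m pixel grid are hypothetical and do not come from the study's data.

```python
import numpy as np
from scipy.spatial import KDTree


def pixels_within_radius(pixel_xy, sample_xy, radius_m=2.0):
    """For each sampling point, return the indices of pixels within radius_m."""
    tree = KDTree(pixel_xy)  # built once, then reused for all sampling points
    return tree.query_ball_point(sample_xy, r=radius_m)


# Hypothetical pixel centres on a 1 m grid and one sampling point
xs, ys = np.meshgrid(np.arange(0.0, 10.0), np.arange(0.0, 10.0))
pixel_xy = np.column_stack([xs.ravel(), ys.ravel()])
sample_xy = np.array([[5.0, 5.0]])

hits = pixels_within_radius(pixel_xy, sample_xy)
print(len(hits[0]))  # number of pixel centres within 2 m of the sampling point
```

Building the tree once and querying it for all sampling points avoids computing the distance from every pixel to every sampling point, which matters once the pixel table reaches millions of rows.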

Eventually, a smaller data frame, containing ground reference values for LAI, GLAI, NBI, LCC, VAA and VZA, and the associated reflectance in the 10 multispectral bands was used to perform the classification analysis. All four plant parameters were categorized, based on their quantiles, into three classes: high, medium, and low.
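The quantile-based categorization can be illustrated with a short sketch. It assumes that the three classes are separated at the tertiles of each trait, which is one plausible reading of "based on their quantiles"; the function name, the choice of the inclusive quantile method, and the example values are hypothetical.

```python
import statistics


def tertile_classes(values):
    """Label each value as 'low', 'medium' or 'high' using tertile cut points."""
    lower, upper = statistics.quantiles(values, n=3, method="inclusive")
    labels = []
    for value in values:
        if value <= lower:
            labels.append("low")
        elif value <= upper:
            labels.append("medium")
        else:
            labels.append("high")
    return labels


# Hypothetical LAI values for nine sampling points
lai_values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
lai_classes = tertile_classes(lai_values)
print(lai_classes)
```

Cutting at quantiles rather than at fixed thresholds yields classes of roughly equal size, which keeps the classification problem balanced.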

Machine learning model and statistical analysis

Reflectance data were prepared to compare the classification performance of the standard data extraction approach from the orthomosaic, the full reflectance data extraction from a single nadir flight (N), and the full reflectance data extraction from a nadir flight combined with two oblique angle flights (NOO). The input data for each tested classification approach are the reflectance values across 10 multispectral bands. To evaluate the best performing VZA and VAA groups, VZAs were split into balanced groups of reflectance values (see Table 2; VZA Groups). The VAAs were split into backscattering (0°–60°, 300°–360°), forward scattering (120°–240°), and side scattering (60°–120°, 240°–300°).
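The assignment of a VAA to one of the three scattering groups can be written as a small helper. The sketch below uses the angle ranges given above; the function name and the treatment of the interval boundaries (half-open intervals, so that 60° counts as side scattering) are assumptions. Angles given on a −180° to 180° scale are wrapped to 0°–360° first.

```python
def vaa_group(vaa_deg):
    """Map a view azimuth angle (degrees) to a scattering group."""
    vaa = vaa_deg % 360.0  # wrap e.g. -30 to 330
    if vaa < 60.0 or vaa >= 300.0:
        return "back"      # 0-60 and 300-360
    if 120.0 <= vaa < 240.0:
        return "forward"   # 120-240
    return "side"          # 60-120 and 240-300


for angle in (10.0, -30.0, 90.0, 180.0, 270.0):
    print(angle, vaa_group(angle))
```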

To pre-select classifiers that perform well on the data, the automated machine learning tool “Tree-based pipeline optimization tool (TPOT)” was run (Olson et al., 2016). It explores thousands of possible pipelines consisting of pre-processing algorithms and hyperparameters of various classifiers within the algorithmic families of naïve Bayes, recursive partitioning, support vector machines, and others (Olson et al., 2016). TPOT was run across all stratification levels and the VZA/VAA reflectance groups. Eventually, the Random Forest (Breiman, 2001) and Extra Trees (Geurts et al., 2006) classifiers were selected to compare the classification performance between the standard orthomosaic approach and the other two full data extraction approaches. The Extra Trees classifier performed slightly (but significantly) better across all four parameters (i.e., LAI, GLAI, LCC, and NBI), and its results are therefore the ones reported in this paper. To assess model performance, f1-scores are used. The f1-score is a measure of the effectiveness of a classification model and can range from 0 to 1 (or 0% to 100%). It is calculated as the harmonic mean of precision and recall, where a score of 1 represents the best possible performance and a score of 0 indicates the worst. Error matrices are provided as supplementary material (S8–S15). The algorithms were trained using a repeated stratified cross-validation approach with 5 folds and 3 repeats. Therefore, the final f1-score was an average of 15 iterations, with each iteration having an unseen 20% subset for testing. This hold-out set is not truly independent, but as the aim was data exploration and feature analysis and not to build a general model, the approach seemed reasonable.
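The evaluation scheme (5 folds, 3 repeats, 15 f1-scores in total) can be sketched with scikit-learn. This is a minimal illustration on synthetic data, not the study's pipeline: the feature matrix stands in for 10-band reflectance, the class separation is artificial, the hyperparameters are library defaults, and macro averaging of the f1-score is an assumption because the averaging mode is not stated in the text.

```python
import numpy as np
from sklearn.ensemble import ExtraTreesClassifier
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score

# Synthetic stand-in for 10-band reflectance spectra in three trait classes
rng = np.random.default_rng(0)
n_per_class, n_bands = 60, 10
X = np.vstack([
    rng.normal(loc=0.2 + 0.1 * k, scale=0.05, size=(n_per_class, n_bands))
    for k in range(3)
])
y = np.repeat(["low", "medium", "high"], n_per_class)

# 5 stratified folds repeated 3 times -> 15 train/test splits of 80%/20%
cv = RepeatedStratifiedKFold(n_splits=5, n_repeats=3, random_state=0)
model = ExtraTreesClassifier(n_estimators=100, random_state=0)

# ASSUMPTION: macro-averaged f1 across the three classes
scores = cross_val_score(model, X, y, cv=cv, scoring="f1_macro")
print(len(scores), scores.mean())
```

Stratification keeps the share of low, medium, and high samples the same in every fold, so each of the 15 test subsets reflects the overall class balance.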

The influence of the ground sampling distance (GSD; 20 cm, 50 cm, and 100 cm) on the classification of plant parameters was additionally evaluated. A Kruskal–Wallis test (McKight & Najab, 2010) revealed a statistically significant difference in classification accuracy (f1-score) between at least two groups (chi-squared = 362.38, df = 2, p < 2.2e-16). A pairwise comparison using the Wilcoxon rank sum test (McKight & Najab, 2010) with continuity correction and a Bonferroni p-value adjustment found that the f1-scores differed significantly between all three GSDs (20 cm vs. 100 cm, p < 2e-16; 50 cm vs. 100 cm, p = 4.1e-06; 50 cm vs. 20 cm, p < 2e-16). Therefore, results are reported based on a GSD of 100 cm, as this provided the highest classification accuracies and, conveniently, the lowest computer memory requirements. It was also tested whether selecting only plant pixels results in more accurate classification. For this purpose, a thresholding procedure (Otsu, 1979) based on EVI and NDVI was used to separate the green plant pixels from the soil pixels. However, differences in classification accuracies were marginal, so data without segmentation were used.
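The testing sequence described above (an overall Kruskal–Wallis test followed by pairwise rank-sum tests with Bonferroni adjustment) can be sketched with SciPy. The example is illustrative only: the f1-scores are synthetic, the helper name is hypothetical, and the Mann–Whitney U test is used as SciPy's equivalent of the Wilcoxon rank sum test with continuity correction.

```python
from itertools import combinations

import numpy as np
from scipy.stats import kruskal, mannwhitneyu


def compare_groups(groups):
    """groups: dict mapping a group name to a sequence of f1-scores."""
    h_stat, p_overall = kruskal(*groups.values())
    pairs = list(combinations(groups, 2))
    pairwise = {}
    for a, b in pairs:
        _, p = mannwhitneyu(groups[a], groups[b], alternative="two-sided")
        pairwise[(a, b)] = min(1.0, p * len(pairs))  # Bonferroni adjustment
    return h_stat, p_overall, pairwise


# Synthetic f1-scores for three ground sampling distances (hypothetical values)
rng = np.random.default_rng(1)
f1_by_gsd = {
    "20 cm": rng.normal(0.70, 0.02, 50),
    "50 cm": rng.normal(0.75, 0.02, 50),
    "100 cm": rng.normal(0.80, 0.02, 50),
}

h_stat, p_overall, pairwise = compare_groups(f1_by_gsd)
print(h_stat, p_overall)
for pair, p_adj in pairwise.items():
    print(pair, p_adj)
```

Multiplying each pairwise p-value by the number of comparisons (three here) is the Bonferroni adjustment; it is conservative but simple to reason about.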

Results

Plant trait summary statistics

Across all 26 plots, LAI, GLAI, LCC, and NBI had a mean of 3.6, 2.6, 38 μg cm−2, and 44.3 μg cm−2, respectively. LCC and NBI were highly positively correlated (r = 0.892), and so were LAI and GLAI (r = 0.911). The biochemical traits (LCC, NBI) however showed no correlation with the structural traits (LAI, GLAI). (Please refer to Table 3 for descriptive statistics on each plant parameter).

Effect of days and VZA/VAA groups on classification accuracy

A one-way ANOVA was performed to compare the effect of different days on the classification accuracy. Across all plant traits and VZA/VAA groups, the second day (2020-09-07) performed significantly better (f1-score = 80.4%; F(1, 446) = 22.7, p = 2.6 × 10−6) compared to the first day (2020-09-06; f1-score = 77.5%).

On average, on both days, the datasets with the categorized VZA/VAA groups performed markedly better (Day 1 = 75.2%–82.3% and Day 2 = 77.9%–84.5%) than the standard orthomosaic approach (Day 1 = 70.2% and Day 2 = 69%) or when all VAA groups are combined into a single predictor (64.2% and 64.5%).

Effect of flight type (nadir versus nadir + oblique) on classification accuracy

With a sampling radius of 2 m and a GSD of 100 cm, 13 reflectance spectra were collected in the orthomosaic approach, whereas 890 spectra were available for a standard (nadir) flight, and 2593 spectra when the oblique flights were added. When the 13 spectra from the standard orthomosaic approach were used for classifying all four plant traits, across both days, an overall f1-score of 69.6% was reached. When all the available reflectance spectra were used, classification accuracies of 78.6% (N, 890 spectra) and 79.3% (NOO, 2593 spectra) were achieved. While there is no significant difference between the N and NOO approach, both clearly outperform the standard orthomosaic approach. For both flight types, N and NOO, it was observed that lower VZAs result in a more balanced dataset (i.e., having similar amounts of reflectance spectra for each available VAA; Fig. 3c and d).

Fig. 3 Distribution of view zenith angles (VZA) per view azimuth angle (VAA) group (a, b) and distribution of VAAs per VZA group (c, d). Plots on the left half (a, c) show data collected from a single nadir flight (N). Plots on the right half (b, d) show data collected from all 3 flights together (nadir, oblique 20°, oblique 20°; NOO). The N flight resulted in a smaller VZA range and total amount of collected pixels compared to the NOO flights.

In the following, more details on the classification results for each individual plant trait are provided. All reported results were confirmed using one-way ANOVA tests.

Classification of LAI and GLAI

For the data obtained with the single nadir flight, the classification based on the orthomosaic approach shows accuracies between 67 and 69% across both days for LAI (Fig. 4a). When all data from all VZA and VAA angles were included, the model performance was slightly lower, with f1-scores of 63% on the first and 65% on the second day (All VZA, Fig. 4). However, if data with VZA and VAA for a specific range were included, the performance of all models was markedly higher, ranging between 74 and 89% (all scattering directions, Fig. 4a). Pooling all VZA and VAA reflectance signals into a single group increases signal heterogeneity and therefore can lead to lower classification accuracies.

Fig. 4 Classification f1-scores for leaf area index (LAI) according to the extra trees algorithm (ET). The upper plot (a) shows results that were derived from a single nadir flight (N). The lower plot (b) shows the results originating from the dataset where two oblique flights were added to the nadir flight (NOO). Shapes (circle and triangle) differentiate between the two flight days.

Averaged over the azimuth directions, the second day provided significantly higher f1-scores (forward scattering = 84.1%, back scattering = 85.6%, side scattering = 80.3%) than the first day (forward scattering = 80.0%, back scattering = 76.8%, side scattering = 81.4%). The back scattering direction especially differed between both days, being less accurate on the first day. The overall irradiance intensity was lower on the first day, deteriorating the signal-to-noise ratio on that day.

When the data from the non-nadir flights are included (NOO), very similar model results emerge (Fig. 4b). With the additional data of the camera tilted at 20°, the range of VZAs increases (0°–50°), but still few data were collected with VZAs above 40° (Fig. 3b). The more extreme the VZA, the less stable the coverage of VAAs (Fig. 3c, d). When comparing the f1-scores that were achieved using the orthomosaic (75%–76%) and by pooling all VZA and VAA groups (66%–67%), it can be observed that, again, the distinct VZA/VAA groups deliver higher f1-scores on both days. Overall, f1-scores drop with increasing VZA. A one-way ANOVA revealed that there was no statistically significant difference in f1-scores between the nadir and the multi-flight dataset (F(1, 110) = 0.31, p = 0.58).

As expected from the high Pearson correlation coefficient between LAI and GLAI (r = 0.911), the results of GLAI (Fig. 5) are in line with those of LAI. A one-way ANOVA revealed that there was no statistically significant difference in f1-scores between the nadir and the multi-flight dataset (F(1, 110) = 0.15, p = 0.69).

Fig. 5 Classification f1-scores for green leaf area index (GLAI) according to the extra trees algorithm (ET). The upper plot (a) shows results that were derived from a single nadir flight (N). The lower plot (b) shows the results originating from the dataset where two oblique flights were added to the nadir flight (NOO). Shapes (circle and triangle) differentiate the two flight days

Classification of LCC and NBI

For the LCC (Fig. 6) and NBI (Fig. 7) data, very similar trends were observed. Again, for the nadir flight data, models in which all VZA and VAA observations were pooled, or in which the orthomosaic was used for data extraction, performed worse than those in which distinct VZA/VAA groups were used as predictor variables. For LCC estimation from the nadir flights, only a few f1-scores from the most extreme VZA groups (21°–35° and 25°–50°) were as low as the orthomosaic f1-scores. For LCC, the first day provided lower classification accuracies (78.4%) than the second day (80.5%), as already observed for the structural plant traits, although the difference was not significant. For NBI, the first day was significantly worse (76.0%) than the second day (78.9%). The VZA groups closest to nadir performed best on both days. However, on the second day, the VZA group 19°–25° was among the three best-performing groups for LCC and NBI. Still, the overall trend of extreme VZA groups performing worse than close-to-nadir groups holds true.
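Because all comparisons above rest on the f1-score, a short sketch of the metric may help. For each class, f1 is the harmonic mean of precision and recall, which equals 2TP / (2TP + FP + FN). The class labels below are hypothetical, and the unweighted (macro) average across classes is an assumption, since the averaging scheme is not stated in this section.

```python
def f1_per_class(y_true, y_pred):
    """Return {class: f1} computed from true/false positives and false negatives."""
    scores = {}
    for cls in sorted(set(y_true) | set(y_pred)):
        tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
        fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
        fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores[cls] = 2 * tp / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class f1-scores."""
    scores = f1_per_class(y_true, y_pred)
    return sum(scores.values()) / len(scores)


# Hypothetical class labels for a trait binned into three levels.
y_true = ["low", "low", "mid", "mid", "high", "high"]
y_pred = ["low", "mid", "mid", "mid", "high", "low"]
print(f1_per_class(y_true, y_pred))
print(round(macro_f1(y_true, y_pred), 3))
```

A score of 1.0 means every class is predicted without false positives or false negatives; the metric penalizes both kinds of error, which makes it more informative than plain accuracy when class sizes differ.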

Fig. 6 Classification f1-scores for leaf chlorophyll content (LCC) according to the extra trees algorithm (ET). The upper plot (a) shows results that were derived from a single nadir flight (N). The lower plot (b) shows the results originating from the dataset where two oblique flights were added to the nadir flight (NOO). Shapes (circle and triangle) differentiate the two flight days

Fig. 7 Classification f1-scores for nitrogen balanced index (NBI) according to the extra trees algorithm (ET). The upper plot (a) shows results that were derived from a single nadir flight (N). The lower plot (b) shows the results originating from the dataset where two oblique flights were added to the nadir flight (NOO). Shapes (circle and triangle) differentiate the two flight days

As for the azimuth directions, on the first day for LCC and NBI, side scattering and forward scattering performed best (forward scattering = 79.8%, back scattering = 77.8%, side scattering = 82.2%). No significant difference was observed between side scattering and forward scattering; however, side scattering differed significantly from back scattering. Back scattering, on the other hand, did not differ significantly from forward scattering.

On the second day, when flight conditions were optimal, side scattering (80.6%) demonstrated a lower accuracy compared to forward scattering (83.7%) and back scattering (83.6%) for both LCC and NBI. Notably, back scattering played a more influential role in overall accuracies on that second day, contrasting its less relevant role on the first day when the sun azimuth angle and lighting conditions were suboptimal. The similarities in classification accuracies between both biochemical parameters are supported by a positive Pearson correlation coefficient (r = 0.892). Most importantly, a one-way ANOVA revealed that there was no statistically significant difference in f1-scores (N = 76.9% and NOO = 77.9%) between the nadir and the multi-flight dataset (F(1, 110) = 0.23, p = 0.64).

Analysis of feature importance

To further support the classification results, it was investigated whether feature importance is robust across both days and weather conditions. As the overall pattern of the relative importance did not change across all four plant parameters, relative feature importance was reported for both sampling days and only for the nadir data in the main body of the text (Figs. 8 and 9). The visualization for both days of the NOO data can be found as supplementary data (S16 and S17). For the nadir-only data, on the first day, the near-infrared band (NIR) (842 nm, Fig. 8) was most important for the structural plant parameters (LAI and GLAI). This was true for most observation angles. The NIR band was also most relevant for the classification of LAI and GLAI when the standard orthomosaic data was used. This was the case across all dates and datasets (N and NOO). Interestingly, in a few cases on the second day, the NIR band had a high relevance for the classification of NBI.

Fig. 8 Relative feature importance for the nadir-only dataset, the extra trees classifier and the first flight day. Each subplot is subset according to the view zenith angle group (top line labels) and view azimuth angle group (bottom line labels). Bar colors indicate plant parameters. The last two plots show the relative importance for the case when all groups are pooled (all vza, all vaa) and the orthomosaic dataset

Fig. 9 Relative feature importance for the nadir-only dataset, the extra trees classifier and the second flight day. Each subplot is subset according to the view zenith angle group (top line labels) and view azimuth angle group (bottom line labels). Bar colors indicate plant parameters. The last two plots show the relative importance for the case when all groups are pooled (all vza, all vaa) and the orthomosaic dataset

The multispectral sensor that was used provided three different red edge bands (705 nm, 717 nm, 740 nm). Across all observation angles, days, and datasets, the red edge band at 740 nm, which is closest to the NIR band, tends to follow the importance patterns of the NIR band. The two red edge bands that are closer to the red band at 668 nm behave more like the red band itself: the bands at 705 nm and 717 nm tend to be more important for the classification of the biochemical parameters (i.e., LCC and NBI) than for the structural plant parameters (i.e., LAI and GLAI). This seems true across all VZAs and VAAs. In the standard orthomosaic approach, or when all observation angles are pooled, the differences between structural and biochemical parameters become less obvious in that spectral region.

When looking at the bands in the visible region, the blue and the red bands often show a higher relative importance than the green bands. This pattern especially stands out in the data collected on the second day. From the first blue band to the last NIR band, the relative importance starts high, drops in the green region, climbs slightly in the red region, and then increases sharply towards the NIR band. Overall, trends were very similar across both days, confirming the robustness of the method.

Discussion

This study contributes to the existing knowledge of multi-angular reflectance datasets by conducting a comprehensive analysis on maize (Zea mays). Improved classification of LAI, GLAI, LCC, and NBI was achieved through the use of specific view zenith and azimuth angle reflectance groups. To enhance accessibility to the presented multi-angular UAV reflectance data extraction method, we provide the coded framework that can also be used to reproduce the results (https://github.com/ReneHeim/proj_on_uav).

We demonstrated that harnessing all reflectance values from a single nadir flight significantly enhances the classification of biochemical and structural plant traits. However, additional flights and a tilted camera potentially add valuable data for different crops and/or phenotypic stages. Varied observation angles can capture relevant prediction phenomena, such as plant disease symptoms on the lower leaf surface of upright leaves or hidden leaf biomass not visible from above.

Unlocking multi-angular data for classification analysis

For orthomosaic creation, standard photogrammetry software selects the single observation closest to nadir (Hardin & Jensen, 2011; Roth et al., 2018; Seifert et al., 2019). By capturing images with high horizontal and vertical overlap, pixels are sampled multiple times and appear in two or more different images. Therefore, it is possible to blend adjacent images into a single orthomosaic. This blending procedure implies that only a single pixel value from all available values is retained. The blending mode, whose options vary with the commercial software that is used, dictates which pixel value is retained (e.g., average, minimum, or maximum).
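The information loss caused by blending can be illustrated with a small sketch. The reflectance values, view zenith angles, and mode names below are hypothetical and do not correspond to the options of any specific photogrammetry package; the point is only that every mode collapses several angular observations of a ground pixel into one number.

```python
from statistics import mean

# Hypothetical NIR reflectance of one ground pixel seen in five overlapping images,
# paired with the view zenith angle (degrees) of each observation.
observations = [
    (2.0, 0.42),
    (8.0, 0.44),
    (15.0, 0.47),
    (22.0, 0.51),
    (31.0, 0.55),
]


def blend(observations, mode):
    """Collapse all observations of a pixel into one orthomosaic value."""
    values = [reflectance for _, reflectance in observations]
    if mode == "average":
        return mean(values)
    if mode == "min":
        return min(values)
    if mode == "max":
        return max(values)
    if mode == "closest_to_nadir":
        # Keep the observation with the smallest view zenith angle.
        return min(observations, key=lambda obs: obs[0])[1]
    raise ValueError(f"unknown blending mode: {mode}")


for mode in ("average", "min", "max", "closest_to_nadir"):
    print(mode, round(blend(observations, mode), 3))
```

Whichever mode is chosen, four of the five observations and their viewing geometry are discarded, which is the information the presented method retains.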

This investigation illustrates that using a subset of VZA/VAA combinations as input to classification models, specifically a relatively homogeneous subset that is still significantly larger than the dataset available from an orthomosaic, leads to a pronounced improvement in model performance. This notable improvement in plant trait modeling raises the question of whether, and under what circumstances, the conventional orthomosaic approach should take precedence in the analysis of spectral UAV data. A comparable approach was demonstrated by Roosjen et al. (2018), who found that incorporating simulated multi-angular reflectance data from a potato field, obtained by a frame-camera mounted on a UAV, led to enhanced estimations of LAI and LCC compared to using solely nadir data. Their improvements using measured data, however, were not significantly better than the standard approach. This is not the case in the present study, where the model accuracy improvements found with simulated data can be confirmed with measured field data. Thus, harvesting multi-angular information from UAV-based frame-cameras can contribute to more accurate plant trait estimations. This aspect makes the presented approach, aimed at unlocking the complete spectrum of available reflectance data, particularly attractive for operational use.

However, when using multi-angular reflectance data, one should consider at which phenotypic stage the data is collected. In the present study, data was collected on two different days, one with optimal weather and lighting conditions and one with suboptimal conditions. On the optimal day, reflectance data recorded in the backscattering direction (i.e., the sun being behind the observer) was most accurate in classifying our structural and biochemical plant traits. This is in line with previous studies (Roosjen et al., 2017; Verrelst et al., 2012) and is explained by the fact that reflectance from the backscattering direction is less influenced by shadows and more sensitive to small signal variations that are often caused by structural plant traits such as the leaf area index (LAI). While the improved prediction of LAI by reflectance data from the backscattering direction is well described, Roosjen et al. (2017) suggested that more research should investigate the effect of different view azimuth directions on the prediction of biochemical plant traits. Radiative transfer simulations have shown that in the visible domain of the electromagnetic spectrum (VIS; 400–700 nm) canopy reflectance is mostly influenced by chlorophyll a + b content in the backscattering direction (Jacquemoud et al., 2009). Here, these results are confirmed with actual measurements, as the backscattering reflectance resulted in the most accurate prediction for leaf chlorophyll content as well as for the highly correlated nitrogen balanced index.

Weather and illumination can have a strong effect on the prediction accuracy. On the flight day when weather and illumination were suboptimal, the backscattering direction dropped in accuracy and the side scattering direction provided the highest accuracies. Unfortunately, the illumination direction was also different on that day. Sun rays were entering the maize field in parallel to larger maintenance rows and perpendicular to the smaller rows that were separating the plots. It is known that tassels have a higher reflectance intensity compared to healthy leaves (Viña et al., 2004). Also, they protrude above the leaf canopy. Highly variable illumination conditions in combination with a moderate sun zenith angle (Table 1) might have cast shadows from adjacent plots into the sampling plots, negating the positive effect of backscattering reflectance. As reflectance in the side scattering direction is a more homogeneous mix of forward and back scattering reflectance, this azimuth direction might have a normalizing effect on the reflectance, thus leading to better predictions when illumination conditions are difficult to correct with the empirical line method. Especially in maize, where the tassels have a stronger reflectance intensity than a healthy leaf, the predictive differences between VAAs must be investigated at different growth stages. Investigating the influence of viewing geometry on other plant traits (Cotrozzi et al., 2020) would also be an interesting follow-up study. Any follow-up study might want to consider the hotspot effect (Jones & Vaughan, 2010). Reflected light in this area has strong specular characteristics, thus any change in viewing or illumination direction can result in strong signal variation. Conversely, examining reflectance data from the perimeter of the hotspot is associated with enhanced signal quality. This is attributed to the diminished impact of shadowing effects, resulting in a more pronounced first-order scattering. Consequently, this leads to an enriched representation of subtle variations in reflectance (Verrelst et al., 2012).

This study consistently achieved optimal results with VZA groups near nadir, outperforming the orthomosaic alone. Simulation studies by Dorigo et al. (2007), Duan et al. (2014), and Roosjen et al. (2018) emphasized that increased and well-distributed viewing angles improved LAI and LCC estimations. Indeed, VZAs up to 30° enhance the estimation of the presented plant traits compared to using solely close-nadir data from the orthomosaic. However, this study observed a decline in classification performance with more extreme VZAs, potentially due to the underrepresentation of such angles in the dataset. Beyond 30° VZA, VAAs were sparsely sampled, and such imbalance is known to adversely affect classification results because many machine learning algorithms assume balanced data (Krawczyk, 2016). In September 2020, the maize was in a late reproductive growth stage with a well-developed and closed canopy, making extreme viewing angles likely less informative. Additionally, reflectance intensities, particularly in the NIR region (750 nm–1300 nm), increase with rising VZA (Jones & Vaughan, 2010). A comparison of angle-dependent reflectance variation in the NIR region before and after the tasseling stage could provide valuable insights. Also, the higher the VZA, the less intense the irradiance (Jones & Vaughan, 2010), thus the signal-to-noise ratio decreases. Still, this study distinguishes itself by showcasing advancements over the classic orthomosaic method. Employing real measurements and a machine learning approach, this study demonstrates the potential for improving LAI and LCC classification with distinct VZA groups.

Our method leverages a broader, yet precisely defined, range of UAV-captured reflectance data, substantially improving plant parameter classification in comparison to the traditional orthomosaic approach. Performance was notably inferior when all viewing angles were employed for classification, as the inclusion of ever more observation directions introduced redundant information and noise, as highlighted by Weiss et al. (2000). This ultimately leads to increased uncertainty in the parameter retrieval. However, given the variability in blending modes and software solutions for orthomosaic generation, further studies are needed to compare plant parameter predictions between the classic orthomosaic approach and our proposed method. Wang et al. (2020) already expressed a general concern about the impact of blending modes, noting inconsistent patterns in pixel values that deviated from their original values. Thus, when optimizing commercial photogrammetry software, incorporating a blending option to selectively choose specific view angles could prove beneficial. This is particularly relevant since the optimal view angle for plant parameter analysis may vary depending on the canopy type.

Feature importance

The selected spectral bands crucial for classification in our study align with well-established knowledge of the relationships between the red edge (RE), red, and near-infrared (NIR) reflectance and structural and biochemical plant traits (Curran & Milton, 1983; Curran et al., 1991; Daughtry et al., 2000; Gitelson et al., 1996; Gitelson et al., 2014). The NIR band at 842 nm, and the red bands at 650 nm and 668 nm, were consistently relevant in our analysis. The red edge, spanning approximately 680 to 750 nm and marking the transition between the red and NIR wavelengths, exhibited higher significance in classifying biochemical parameters compared to structural plant parameters. These findings, supported both empirically and mechanistically in the literature, strengthen the robustness of our presented results.

Consequences of ground sampling distance to data handling

The presented approach generates substantial data volumes, necessitating user consideration for efficient handling. Future optimization could include features allowing users to selectively choose specific VZAs or VAAs, enhancing computational efficiency. In our case, with a best ground sampling distance of 100 cm, a system with less than 32 GB of RAM and a commercial-grade graphics adapter could manage the data. However, testing at a GSD of 10 cm approached the computing environment's limits. Weiss et al. (2000) emphasized the need for a pixel size sufficient to observe heterogeneous pixels accurately. While the code was optimized and parallelized, further enhancements in computational efficiency and accessibility are possible.
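The jump in computational demand between the two GSDs follows from simple geometry: the number of ground pixels covering a fixed area grows with the inverse square of the GSD. The sketch below illustrates this for a hypothetical 1 ha field; the field size and function names are assumptions, and the result is per band and per viewing direction, so the total volume of the multi-angular dataset is larger still.

```python
def pixels_per_field(area_m2, gsd_cm):
    """Number of ground pixels needed to cover `area_m2` at a given GSD."""
    pixels_per_metre = 100.0 / gsd_cm
    return round(area_m2 * pixels_per_metre ** 2)


def scaling_factor(gsd_coarse_cm, gsd_fine_cm):
    """Factor by which the pixel count grows when refining the GSD."""
    return (gsd_coarse_cm / gsd_fine_cm) ** 2


field_area_m2 = 10_000.0  # hypothetical 1 ha field
for gsd in (100, 10):
    print(gsd, "cm ->", pixels_per_field(field_area_m2, gsd), "pixels")
print("growth factor:", scaling_factor(100, 10))
```

Refining the GSD by a factor of ten therefore multiplies the number of pixels, and with it memory and processing time, by roughly one hundred.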

Conclusion

This study introduces a method (https://github.com/ReneHeim/proj_on_uav) designed to extract multi-angular reflectance data from multispectral UAV imagery. The case study on maize illustrates that specific view zenith angle (VZA) and view azimuth angle (VAA) groups significantly enhance the classification of biochemical and structural plant parameters, surpassing the standard orthomosaic approach. The enhanced classification accuracy is attributable not solely to the selection of specific viewing angles but also to the methodology's capability to augment the data volume by over 50 times compared to the conventional orthomosaic approach, achievable already through a single UAV mission. This highlights the effectiveness of utilizing the presented method, especially in combination with data-intensive machine learning methods. This marks a departure from previous studies that harnessed deterministic modeling approaches. The applicability of this method in enhancing classification approaches with multi-angular datasets in other disciplines warrants further investigation. It is essential to assess whether the inclusion of additional viewing angles contributes meaningful information to the associated research question. For example, a completely closed and planophile canopy may not present sufficient openings for extra viewing angles to be informative. Conversely, in erectophile canopy types, optical signals may more readily penetrate the leaf layers, offering potentially valuable insights. The utility of this approach extends beyond the classification of biochemical and structural plant traits. This approach offers potential across diverse fields, such as evaluating the spectral variability hypothesis in plant ecology, detecting objects in urban and natural settings, and diagnosing biotic and abiotic stress within forestry and agricultural contexts, among others. Should additional cases corroborate these and prior findings regarding the effectiveness of multi-angular remote sensing, it becomes imperative to reconsider existing methodologies in photogrammetric processing.

References

Aasen, H., Honkavaara, E., Lucieer, A., & Zarco-Tejada, P. (2018). Quantitative remote sensing at ultra-high resolution with UAV spectroscopy: A review of sensor technology, measurement procedures, and data correction workflows. Remote Sensing, 10(7), 1091. https://doi.org/10.3390/rs10071091

Agisoft LLC. (2022). Agisoft Metashape User Manual: Professional Edition, Version 1.8.

Asner, G. P., Braswell, B. H., Schimel, D. S., & Wessman, C. A. (1998). Ecological research needs from multiangle remote sensing data. Remote Sensing of Environment, 63(2), 155–165. https://doi.org/10.1016/S0034-4257(97)00139-9

Asner, G. P., Scurlock, J. M. O., & Hicke, J. A. (2003). Global synthesis of leaf area index observations: Implications for ecological and remote sensing studies. Global Ecology and Biogeography, 12(3), 191–205. https://doi.org/10.1046/j.1466-822X.2003.00026.x

Breiman, L. (2001). Random forests. Machine Learning, 45, 5–32. https://doi.org/10.1023/A:1010933404324

Burkart, A., Aasen, H., Alonso, L., Menz, G., Bareth, G., & Rascher, U. (2015). Angular dependency of hyperspectral measurements over wheat characterized by a novel UAV based goniometer. Remote Sensing, 7(1), 725–746. https://doi.org/10.3390/rs70100725

Cerovic, Z. G., Masdoumier, G., Ghozlen, N. B., & Latouche, G. (2012). A new optical leaf-clip meter for simultaneous non-destructive assessment of leaf chlorophyll and epidermal flavonoids. Physiologia Plantarum, 146(3), 251–260. https://doi.org/10.1111/j.1399-3054.2012.01639.x

Cotrozzi, L., Peron, R., Tuinstra, M. R., Mickelbart, M. V., & Couture, J. J. (2020). Spectral phenotyping of physiological and anatomical leaf traits related with maize water status. Plant Physiology, 184(3), 1363–1377. https://doi.org/10.1104/pp.20.00577

Curran, P. J., Dungan, J. L., Macler, B. A., & Plummer, S. E. (1991). The effect of a red leaf pigment on the relationship between red edge and chlorophyll concentration. Remote Sensing of Environment, 35(1), 69–76. https://doi.org/10.1016/0034-4257(91)90066-F

Curran, P. J., & Milton, E. J. (1983). The relationships between the chlorophyll concentration, LAI and reflectance of a simple vegetation canopy. International Journal of Remote Sensing, 4(2), 247–255. https://doi.org/10.1080/01431168308948544

Daniëls, L., Audenaert, K., Wieme, J., Eeckhout, E., & Maes, W. H. (2023). Comparing different radiometric calibration methods for remote sensing in an agricultural context. Remote Sensing, 15(11), 2909. https://doi.org/10.3390/rs15112909

Daughtry, C. (2000). Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sensing of Environment, 74(2), 229–239. https://doi.org/10.1016/S0034-4257(00)00113-9

Dorigo, W. A., Zurita-Milla, R., de Wit, A. J. W., Brazile, J., Singh, R., & Schaepman, M. E. (2007). A review on reflective remote sensing and data assimilation techniques for enhanced agroecosystem modeling. International Journal of Applied Earth Observation and Geoinformation, 9(2), 165–193. https://doi.org/10.1016/j.jag.2006.05.003

Duan, S.-B., Li, Z.-L., Wu, H., Tang, B.-H., Ma, L., Zhao, E., & Li, C. (2014). Inversion of the PROSAIL model to estimate leaf area index of maize, potato, and sunflower fields from unmanned aerial vehicle hyperspectral data. International Journal of Applied Earth Observation and Geoinformation, 26, 12–20. https://doi.org/10.1016/j.jag.2013.05.007

Escadafal, R., & Huete, A. (1991). Influence of the viewing geometry on the spectral properties (high resolution visible and NIR) of selected soils from Arizona. Proceedings of the 5th International Colloquium, Physical Measurements and Signatures in Remote Sensing, 1, 401–404.

Fang, H., Baret, F., Plummer, S., & Schaepman-Strub, G. (2019). An overview of global leaf area index (LAI): Methods, products, validation, and applications. Reviews of Geophysics, 57(3), 739–799. https://doi.org/10.1029/2018RG000608

Geurts, P., Ernst, D., & Wehenkel, L. (2006). Extremely randomized trees. Machine Learning, 63, 3–42. https://doi.org/10.1007/s10994-006-6226-1

Gitelson, A. A., Merzlyak, M. N., & Lichtenthaler, H. K. (1996). Detection of red edge position and chlorophyll content by reflectance measurements near 700 nm. Journal of Plant Physiology, 148(3–4), 501–508. https://doi.org/10.1016/S0176-1617(96)80285-9

Gitelson, A. A., Peng, Y., Arkebauer, T. J., & Schepers, J. (2014). Relationships between gross primary production, green LAI, and canopy chlorophyll content in maize: Implications for remote sensing of primary production. Remote Sensing of Environment, 144, 65–72. https://doi.org/10.1016/j.rse.2014.01.004

Hardin, P. J., & Jensen, R. R. (2011). Small-scale unmanned aerial vehicles in environmental remote sensing: Challenges and opportunities. GIScience & Remote Sensing, 48(1), 99–111. https://doi.org/10.2747/1548-1603.48.1.99

Harwin, S., Lucieer, A., & Osborn, J. (2015). The impact of the calibration method on the accuracy of point clouds derived using unmanned aerial vehicle multi-view stereopsis. Remote Sensing, 7(9), 11933–11953. https://doi.org/10.3390/rs70911933

He, L., Ren, X., Wang, Y., Liu, B., Zhang, H., Liu, W., Feng, W., & Guo, T. (2020). Comparing methods for estimating leaf area index by multi-angular remote sensing in winter wheat. Scientific Reports, 10(1), 13943. https://doi.org/10.1038/s41598-020-70951-w

Herrmann, I., & Berger, K. (2021). Remote and proximal assessment of plant traits. Remote Sensing, 13(10), 1893.

Hilty, J., Muller, B., Pantin, F., & Leuzinger, S. (2021). Plant growth: The What, the How, and the Why. New Phytologist, 232(1), 25–41. https://doi.org/10.1111/nph.17610

Houborg, R., & Boegh, E. (2008). Mapping leaf chlorophyll and leaf area index using inverse and forward canopy reflectance modeling and SPOT reflectance data. Remote Sensing of Environment, 112(1), 186–202. https://doi.org/10.1016/j.rse.2007.04.012

Huete, A., Didan, K., Miura, T., Rodriguez, E. P., Gao, X., & Ferreira, L. G. (2002). Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sensing of Environment, 83(1–2), 195–213. https://doi.org/10.1016/S0034-4257(02)00096-2

Jacquemoud, S., Verhoef, W., Baret, F., Bacour, C., Zarco-Tejada, P. J., Asner, G. P., François, C., & Ustin, S. L. (2009). PROSPECT+SAIL models: A review of use for vegetation characterization. Remote Sensing of Environment, 113, S56–S66. https://doi.org/10.1016/j.rse.2008.01.026

Jones, H. G., & Vaughan, R. A. (2010). Remote sensing of vegetation: Principles, techniques, and applications. Oxford University Press.

Krawczyk, B. (2016). Learning from imbalanced data: Open challenges and future directions. Progress in Artificial Intelligence, 5(4), 221–232. https://doi.org/10.1007/s13748-016-0094-0

Li, L., Mu, X., Qi, J., Pisek, J., Roosjen, P., Yan, G., Huang, H., Liu, S., & Baret, F. (2021). Characterizing reflectance anisotropy of background soil in open-canopy plantations using UAV-based multiangular images. ISPRS Journal of Photogrammetry and Remote Sensing, 177, 263–278. https://doi.org/10.1016/j.isprsjprs.2021.05.007

Li, M., Shamshiri, R. R., Schirrmann, M., & Weltzien, C. (2021b). Impact of camera view angle for estimating leaf parameters of wheat plants from 3d point clouds. Agriculture, 11(6), 563. https://doi.org/10.3390/agriculture11060563

Li, W., Jiang, J., Weiss, M., Madec, S., Tison, F., Philippe, B., Comar, A., & Baret, F. (2021). Impact of the reproductive organs on crop BRDF as observed from a UAV. Remote Sensing of Environment, 259, 112433. https://doi.org/10.1016/j.rse.2021.112433

Mario, C., Isabel, P., & Sosdito, E. M. (2018). Maize leaf area estimation in different growth stages based on allometric descriptors. African Journal of Agricultural Research, 13(4), 202–209. https://doi.org/10.5897/AJAR2017.12916

McKight, P. E., & Najab, J. (2010). Kruskal-Wallis test. The Corsini Encyclopedia of Psychology. https://doi.org/10.1002/9780470479216.corpsy0491

Müller-Linow, M., Pinto-Espinosa, F., Scharr, H., & Rascher, U. (2015). The leaf angle distribution of natural plant populations: assessing the canopy with a novel software tool. Plant Methods. https://doi.org/10.1186/s13007-015-0052-z

Olson, R. S., Urbanowicz, R. J., Andrews, P. C., Lavender, N. A., Kidd, L. C., & Moore, J. H. (2016). Automating biomedical data science through tree-based pipeline optimization. In G. Squillero & P. Burelli (Eds.), Applications of evolutionary computation: 19th European Conference, EvoApplications 2016, Porto, Portugal, March 30 – April 1, 2016, Proceedings, Part I (pp. 123–137). Springer. https://doi.org/10.1007/978-3-319-31204-0_9

Otsu, N. (1979). A threshold selection method from gray-level histograms. IEEE Transactions on Systems, Man, and Cybernetics, 9(1), 62–66. https://doi.org/10.1109/TSMC.1979.4310076

Over, J.-S.R., Ritchie, A.C., Kranenburg, C.J., Brown, J.A., Buscombe, D., Noble, T., Sherwood, C.R., Warrick, J.A., & Wernette, P.A. (2021). Processing coastal imagery with Agisoft Metashape Professional Edition, Version 1.6—Structure from motion workflow documentation. https://doi.org/10.3133/ofr20211039

Roosjen, P. P. J., Brede, B., Suomalainen, J. M., Bartholomeus, H. M., Kooistra, L., & Clevers, J. G. P. W. (2018). Improved estimation of leaf area index and leaf chlorophyll content of a potato crop using multi-angle spectral data—potential of unmanned aerial vehicle imagery. International Journal of Applied Earth Observation and Geoinformation, 66, 14–26. https://doi.org/10.1016/j.jag.2017.10.012

Roosjen, P., Suomalainen, J., Bartholomeus, H., Kooistra, L., & Clevers, J. (2017). Mapping reflectance anisotropy of a potato canopy using aerial images acquired with an unmanned aerial vehicle. Remote Sensing, 9(5), 417. https://doi.org/10.3390/rs9050417

Roth, L., Aasen, H., Walter, A., & Liebisch, F. (2018). Extracting leaf area index using viewing geometry effects—a new perspective on high-resolution unmanned aerial system photography. ISPRS Journal of Photogrammetry and Remote Sensing, 141, 161–175. https://doi.org/10.1016/j.isprsjprs.2018.04.012

Rouse, J. W., Haas, R. H., Schell, J. A., & Deering, D. W. (1974). Monitoring vegetation systems in the Great Plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite Symposium. Washington, pp. 309–317.

Schaepman, M. E., Koetz, B., Schaepman-Strub, G., & Itten, K. I. (2005). Spectrodirectional remote sensing for the improved estimation of biophysical and -chemical variables: Two case studies. International Journal of Applied Earth Observation and Geoinformation, 6, 271–282. https://doi.org/10.1016/j.jag.2004.10.012

Schaepman-Strub, G., Schaepman, M. E., Painter, T. H., Dangel, S., & Martonchik, J. V. (2006). Reflectance quantities in optical remote sensing—definitions and case studies. Remote Sensing of Environment, 103(1), 27–42. https://doi.org/10.1016/j.rse.2006.03.002

Schlemmer, M., Gitelson, A., Schepers, J., Ferguson, R., Peng, Y., Shanahan, J., & Rundquist, D. (2013). Remote estimation of nitrogen and chlorophyll contents in maize at leaf and canopy levels. International Journal of Applied Earth Observation and Geoinformation, 25, 47–54. https://doi.org/10.1016/j.jag.2013.04.003

Seifert, E., Seifert, S., Vogt, H., Drew, D., van Aardt, J., Kunneke, A., & Seifert, T. (2019). Influence of drone altitude, image overlap, and optical sensor resolution on multi-view reconstruction of forest images. Remote Sensing, 11(10), 1252. https://doi.org/10.3390/rs11101252

Van Rossum, G., & Drake, F. L. (2009). Python 3 reference manual. CreateSpace.

Verrelst, J., Romijn, E., & Kooistra, L. (2012). Mapping vegetation density in a heterogeneous river floodplain ecosystem using pointable CHRIS/PROBA data. Remote Sensing, 4(9), 2866–2889. https://doi.org/10.3390/rs4092866

Viña, A., Gitelson, A. A., Rundquist, D. C., Keydan, G., Leavitt, B., & Schepers, J. (2004). Monitoring maize (Zea mays L.) phenology with remote sensing. Agronomy Journal, 96(4), 1139–1147. https://doi.org/10.2134/agronj2004.1139

Wang, X., Silva, P., Bello, N. M., Singh, D., Evers, B., Mondal, S., Espinosa, F. P., Singh, R. P., & Poland, J. (2020). Improved accuracy of high-throughput phenotyping from unmanned aerial systems by extracting traits directly from orthorectified images. Frontiers in Plant Science, 11, 587093. https://doi.org/10.3389/fpls.2020.587093

Weiss, M., Baret, F., Myneni, R. B., Pragnère, A., & Knyazikhin, Y. (2000). Investigation of a model inversion technique to estimate canopy biophysical variables from spectral and directional reflectance data. Agronomie, 20(1), 3–22. https://doi.org/10.1051/agro:2000105

Acknowledgements

RH, the UAV, and the sensors were funded through the SMAC (Simultaneous Measurement of leaf Area and Chlorophyll) project, which is supported by the BOF Starting Grant at Ghent University (BOF/STA/201909/004). This research was partially supported by funds of the Federal Ministry of Food and Agriculture (BMEL) based on a decision of the Parliament of the Federal Republic of Germany. The Federal Office for Agriculture and Food (BLE) provided coordinating support for digitalization in agriculture as the funding organization, grant number 28DE104A18. RH was partially funded by the Deutsche Forschungsgemeinschaft (DFG, German Research Foundation) - 521313940. Thanks to Brechtje and Christophe for the pleasant and helpful hours in the laboratory.

Funding

Open Access funding enabled and organized by Projekt DEAL. This work was funded by Bijzonder Onderzoeksfonds UGent, BOF/STA/201909/004, Wouter Maes, and the Bundesanstalt für Landwirtschaft und Ernährung, 28DE104A18.

Author information

Contributions

WM and RH conceived and designed the experiments; WM and RH performed the experiments; RH and NO analyzed the data; MCVL and KS contributed reagents, materials, and analysis tools; RH, WM, IG, and NO wrote the paper. IG provided language editing. All authors provided comments to improve the manuscript.

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Heim, R.H.J., Okole, N., Steppe, K. et al. An applied framework to unlocking multi-angular UAV reflectance data: a case study for classification of plant parameters in maize (Zea mays). Precision Agric 25, 1751–1775 (2024). https://doi.org/10.1007/s11119-024-10133-0

DOI: https://doi.org/10.1007/s11119-024-10133-0