Abstract

Four Bushnell Nature View HD time-lapse cameras (Bushnell, Overland Park, KS, USA) were installed in a soybean field to track the response of soybean plants to changing weather conditions. The purpose was to determine whether visible spectroscopy can provide useful data for tracking crop condition and, if so, whether game and trail time-lapse cameras can serve as reliable crop sensing and monitoring devices. Images were taken with the installed cameras at 30-min intervals between July 22 and August 1, 2015. Using RGBExcel, a software application developed in-house, image data from the R (red), G (green), and B (blue) bands were exported to Microsoft Excel for further processing and analysis. Daytime adjusted green red index (AGRI) data for the plants, computed from the R and G data, were plotted against the time of image acquisition and also regressed against selected weather parameters. The time series showed a rise-and-fall trend with daily peaks around 13:00, while the regressions showed the following decreasing order of correlation with the weather variables: log of solar radiation > log of soil surface temperature > log of air temperature > log of soil temperature at 50-mm depth > log of relative humidity. Despite some low correlations, the results demonstrate the potential of game and trail cameras with time-lapse capability to track changes in crop vegetation response under varying conditions. The resulting data could be used to develop models that aid precision agriculture applications, which can be explored further in future studies.


Introduction

Precision agriculture for crop management requires adequate information about the crop, both over time and across the field, in order to provide timely, precise, and variable treatment. This is particularly true where crop response or performance varies because of variability in soil texture and nutrient availability across a field, and where precision (variable-rate) irrigation management is required. The goal of irrigation is to supplement plant water needs that are only partially and unevenly met by precipitation during the growing season. The target soil moisture condition is field capacity. Irrigation scheduling replenishes depleted soil water in a timely manner, preventing soil moisture from falling to the crop stress point. Consumptive soil water depletion is mainly due to evapotranspiration (ET), the combined process of evaporation from the soil and transpiration from the crop. The ET rate increases as air temperature increases and relative humidity decreases. The cumulative daily ET is tracked from the previous irrigation event to determine when to irrigate next.
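The checkbook-style bookkeeping described above can be sketched as follows. This is an illustrative sketch, not a method used in this study: the function name, the assumptions that rainfall offsets ET one-for-one and that water above field capacity drains away, and the 15-mm trigger deficit are all hypothetical choices.

```python
from datetime import date, timedelta


def schedule_irrigation(daily_et_mm, daily_rain_mm, start, trigger_deficit_mm):
    """Return the dates on which the running soil-water deficit reaches the trigger.

    Hypothetical checkbook-style sketch: the deficit starts at zero (soil at
    field capacity just after irrigation), grows by each day's ET, and is
    reduced by rainfall. It never drops below zero because water above field
    capacity is assumed to drain away.
    """
    deficit_mm = 0.0
    irrigation_dates = []
    for day, (et, rain) in enumerate(zip(daily_et_mm, daily_rain_mm)):
        deficit_mm = max(0.0, deficit_mm + et - rain)
        if deficit_mm >= trigger_deficit_mm:
            irrigation_dates.append(start + timedelta(days=day))
            deficit_mm = 0.0  # assume the irrigation refills the profile to field capacity
    return irrigation_dates


# Hypothetical inputs: six days of 6 mm/day ET with 10 mm of rain on the third day.
et = [6.0] * 6
rain = [0.0, 0.0, 10.0, 0.0, 0.0, 0.0]
print(schedule_irrigation(et, rain, date(2015, 7, 22), trigger_deficit_mm=15.0))
# [datetime.date(2015, 7, 26)]
```

Without the rain, the running deficit in this toy example would reach the 15-mm trigger on July 24 instead of July 26.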

Because the growing crop responds to the soil and meteorological conditions to which it is subjected (Hollinger and Angel 2009), crop health data can be collected alongside soil and meteorological data to establish mathematical relationships (Murthy 2004) that can be used to determine a crop’s status at a given point in time. Analysis of meteorological data can provide near real-time information about the status of a crop in terms of quality and/or quantity (Doraiswamy et al. 2003). For example, crop imagery data from the visible (RGB) and/or near-infrared (NIR) bands can be correlated with meteorological data to establish crop response to changing weather conditions for irrigation scheduling purposes. Such information can serve as an early-warning indicator or decision-support resource for proper planning and timely intervention to manage the crop efficiently (Akeh et al. 2000). Indeed, crop stress factors such as pest and disease infestations and water and nutrient deficiencies must be detected early enough to allow mitigation before major yield losses occur (Nutter et al. 2002).

Satellite and aerial remote sensing (SARS) methods are used in precision agriculture to capture crop spectral response data from which vegetation indices relevant for determining crop status can be derived (Boschetti et al. 2007). SARS deals with crop imagery covering large areas, such as a whole farm or much larger regions (Holecz et al. 2013). Ground-based proximal remote sensing (PRS), which employs image processing and analysis techniques similar to those of SARS, can instead be implemented over much smaller areas such as a field or plot. Deery et al. (2014) evaluated the potential of PRS for field-based phenotyping. They concluded that while commercial-scale pre-breeding and breeding require mature data acquisition and automated data processing to keep pace with high demand, less automation and more human involvement in data processing and analysis are acceptable for low-throughput applications. Small-area implementations can provide representative data for an entire field or can be used to monitor specific locations or networks of locations of interest (Cheng et al. 2016). For precision crop management, particularly precision irrigation, images must be provided frequently so that the farmer can respond quickly (Seelan et al. 2003; Toureiro et al. 2017). Frequent image acquisition yields time series data that can be used to build models describing crop phenology throughout the growth cycle. Siachalou et al. (2015), for example, employed crop phenological information from time series data in multi-sensor mapping of crops.

Two limitations to the adoption of image-based crop sensing and monitoring for precision farm management are the cost of the instrumentation and image processing software and the high level of expertise required (Seelan et al. 2003). For these and other reasons, many successful advances either remain at the research level or have had very low adoption rates among farmers. Adoption can be encouraged by reducing the cost of the technologies and by making them easily accessible and easy to learn and use. These steps can allow crop consultants, extension agents, and farmers to try small-plot applications as proof of concept and can encourage the adoption of relevant practices.

Recent efforts to develop low-cost image processing and analysis techniques have been pursued in the Precision Application Technology Lab in the College of Agriculture, Engineering, and Technology, Arkansas State University. These efforts were strengthened by the creation of a software application, RGBExcel (Larbi 2016a), and its later version, RGB2X (Larbi 2016b). The application extracts image data from digital images (regardless of the camera used for acquisition and of the file format) and exports them to Microsoft Excel for further processing and analysis. Studies using this software include a foundational paper on implementing standard image processing techniques in Microsoft Excel (Larbi 2016c, 2018) and a study comparing processed image data from five cameras representing a range of commercial color camera options on the market (Vong and Larbi 2016). Since Excel has over 750 million users worldwide, this approach could become highly accessible globally once the RGB2X software is made available. Other applications, such as Get RGB (Johnson n.d.), also convert images to RGB and alpha data; however, RGB2X has the advantage of converting multiple files at a time, which adds convenience and speed.
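To illustrate the kind of band-by-band export that RGBExcel and RGB2X perform, the sketch below writes the R, G, and B values of a pixel grid to separate CSV files that Excel can open, one cell per pixel. It is a hypothetical stand-in, not the RGBExcel implementation: decoding JPEG files would require an imaging library such as Pillow, so the sketch assumes the pixels have already been decoded into rows of (R, G, B) tuples, and the function names and file-naming scheme are illustrative.

```python
import csv
from pathlib import Path


def split_bands(pixels):
    """Split rows of (R, G, B) tuples into three 2-D lists keyed 'R', 'G', 'B'."""
    return {
        name: [[px[i] for px in row] for row in pixels]
        for i, name in enumerate(("R", "G", "B"))
    }


def export_bands_to_csv(pixels, out_dir, stem):
    """Write each band as its own CSV matrix and return {band: path}."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    paths = {}
    for name, band in split_bands(pixels).items():
        path = out_dir / f"{stem}_{name}.csv"
        with path.open("w", newline="") as f:
            csv.writer(f).writerows(band)
        paths[name] = path
    return paths


def export_many(images, out_dir):
    """Batch export: `images` maps a file stem to its already-decoded pixel grid."""
    return {stem: export_bands_to_csv(px, out_dir, stem) for stem, px in images.items()}
```

For a 2 × 2 grid such as `[[(10, 200, 30), (12, 180, 40)], [(90, 100, 60), (80, 95, 70)]]`, the G file holds the rows `200,180` and `100,95`, ready for cell formulas in Excel. Handling many images in one call mirrors the batch conversion advantage described above.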

In the present study, the potential utility of a low-cost game and trail time-lapse camera for monitoring crop status was explored. Since the camera can be configured to capture images at a user-defined time interval, little expertise is required to set it up. The purpose was to demonstrate the utility of visible spectroscopy in providing useful data for tracking crop condition and to determine whether game and trail time-lapse cameras could serve as reliable crop sensing and monitoring devices. The specific objectives were:

1. To test the potential utility of game and trail time-lapse cameras for automatic monitoring of soybean plant response to changing environmental conditions;
2. To demonstrate the utility of Microsoft Excel for image processing and analysis; and
3. To establish relationships between the processed image data and corresponding weather parameters.

Achieving the above objectives will provide simple tools that can be adopted by crop consultants, extension agents, and farmers as initial steps towards wide scale adoption of precision agriculture practices.

Materials and methods

In this preliminary observational study, four Bushnell Nature View HD game and trail time-lapse cameras (Bushnell, Overland Park, KS, USA) (Fig. 1) were installed in an experimental soybean field to capture day and night images of the crop over time. Each camera captured 8 MP full-color still images (1–3 images per trigger) as well as 1280 × 720 pixel high-definition video with audio, with a programmable clip length of 1 to 60 s. A field scan time-lapse mode captured images at preset intervals of 1 to 60 min. Each camera had an embedded temperature sensor with a range of −20 to 60 °C. Each captured image was stamped with the camera name, air temperature (in both °F and °C), and the date and time of capture.

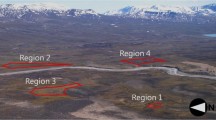

The experimental field (latitude 35.83868, longitude −90.66634) was located at the Arkansas State University Farm Complex (ASU Farm) in Jonesboro, AR, USA. A soybean plot that was not under any particular experiment at the time of the study was used; thus, the cameras were not placed to compare crop responses to any treatments. The cameras (referred to as Cam 1, Cam 2, Cam 3, and Cam 4) were installed on mounts as shown in Fig. 2 at the four corners of a rectangular area in the plot (Fig. 3). A nearby weather station, ASU WS, which was used as a reference, was located about 210 m from the farthest camera (Cam 1). Cam 1 and Cam 2 were intended to monitor plants near the north boundary of the plot, while Cam 3 and Cam 4 monitored plants near the middle of the plot. The camera locations are summarized in Fig. 3.

With the cameras overlooking the plants from a height of about 2.4 m, each camera captured vertical (nadir) images covering a ground area of 1.88 m × 1.32 m. This height was selected to capture reasonable sections of at least two rows of soybean plants. The cameras also needed to be easily accessible for retrieving images and replacing batteries using a 910-mm two-tier heavy-duty step ladder. Since natural illumination was used for imaging, the camera height was not expected to influence the intensity of reflected light detected from the target plants.
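The relationship between camera height and ground coverage can be checked with simple geometry. The sketch below assumes a nadir-pointing camera with an ideal, distortion-free pinhole lens; this is an assumption, not a specification of the Bushnell camera. It back-calculates the angular field of view implied by the reported 1.88 m × 1.32 m footprint at 2.4 m and projects the footprint to another (hypothetical) mounting height.

```python
import math


def field_of_view_deg(footprint_m, height_m):
    """Full angular field of view implied by a ground footprint seen from directly above."""
    return math.degrees(2 * math.atan((footprint_m / 2) / height_m))


def footprint_at_height(fov_deg, height_m):
    """Ground footprint along one image axis for a given field of view and height."""
    return 2 * height_m * math.tan(math.radians(fov_deg) / 2)


HEIGHT_M = 2.4  # camera height reported in the text
fov_long = field_of_view_deg(1.88, HEIGHT_M)
fov_short = field_of_view_deg(1.32, HEIGHT_M)
print(f"Implied FOV: {fov_long:.1f} deg x {fov_short:.1f} deg")

# Hypothetical taller mount: the footprint scales linearly with height.
print(f"At 3.0 m: {footprint_at_height(fov_long, 3.0):.2f} m x "
      f"{footprint_at_height(fov_short, 3.0):.2f} m")
```

Under these assumptions the implied field of view is roughly 43° by 31°, and raising the camera to 3.0 m would enlarge the footprint to about 2.35 m × 1.65 m, covering more rows at the cost of fewer pixels per leaf.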

Images were taken with the installed cameras between July 22 and August 1, 2015. Daytime images were color images, while nighttime images were monochrome infrared (IR) images. Figure 4 shows daytime images obtained from Cam 1 on July 22 at 16:00 (left), July 27 at 16:00 (middle), and July 31 at 12:00 (right), 2015. Because of occasional strong winds, the actual field of view of the cameras at the time of capture shifted slightly within the general area being monitored, but this shift was not considered to affect the results significantly. Some images contained the shadow of the instrumentation, but illumination compensation applied during image processing eliminated this defect. Figure 5 shows a nighttime image obtained from Cam 1 on July 22 at 22:00. Because the nighttime images were single-band monochrome images (unsuitable for the multispectral image processing performed) and appeared overexposed to IR light, they were not considered useful in this study. However, their average pixel value was explored to identify potential future uses.

The air temperature stamped on the images at 30-min intervals was plotted over time to track the changing environmental conditions. Image data from the R (red), G (green), and B (blue) bands of the images were extracted and exported to Microsoft Excel for processing and analysis, using the RGBExcel software application (Larbi 2016a). Average pixel value of the nighttime images was obtained and plotted against time from dusk to dawn. For each daytime image, an adjusted green red index (AGRI) image dataset was generated based on the equation

where IG and IR are the signal intensities (pixel values) in the G and R bands, respectively. Next, the background (mainly soil) was removed using a thresholding technique: pixels with values outside the range 0.40 ≤ AGRI < 0.48 (i.e., the AGRI range for plant pixels) were set to zero, and those within the range were retained. The average AGRI of the remaining pixels was then calculated and plotted against the date and time of image collection.
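The background-removal and averaging steps can be sketched as follows. Because the AGRI equation itself is not reproduced here, the sketch takes an already-computed AGRI image (a 2-D list of floats) as input; the function names are hypothetical, while the 0.40 ≤ AGRI < 0.48 bounds are those given in the text.

```python
def remove_background(agri, low=0.40, high=0.48):
    """Set pixels outside low <= AGRI < high (background, mainly soil) to zero."""
    return [[v if low <= v < high else 0.0 for v in row] for row in agri]


def mean_plant_agri(agri, low=0.40, high=0.48):
    """Average AGRI over the retained (plant) pixels only, ignoring zeroed background."""
    kept = [v for row in agri for v in row if low <= v < high]
    if not kept:
        raise ValueError("no pixels fall inside the plant AGRI range")
    return sum(kept) / len(kept)


# Toy 2 x 3 AGRI image: two soil-like pixels and four plant-like pixels.
agri_image = [[0.35, 0.42, 0.47],
              [0.44, 0.55, 0.40]]
print(remove_background(agri_image))  # [[0.0, 0.42, 0.47], [0.44, 0.0, 0.4]]
print(mean_plant_agri(agri_image))
```

Averaging only the retained pixels matters: including the zeroed background would pull the mean toward zero in proportion to the amount of exposed soil, mixing canopy cover into what is meant to be a greenness measure.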

As this study was only observational and no treatments were applied to the soybean plants at the different locations, only the plants’ response to weather conditions was tracked, using the AGRI data. The AGRI data were regressed against the logs of solar radiation, air temperature, relative humidity, soil surface temperature, and soil temperature at 50-mm depth. The weather data, obtained from the ASU Farm weather station, were retrieved from the weather library at http://weatherdata.astate.edu/Main.asp. Inspection of the skewness of the weather data suggested that they exhibited logarithmic behavior; they were therefore log-transformed for further analysis so that their distributions were closer to normal.
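A minimal sketch of the skewness check and log transformation, assuming the sample-skewness formula used by Excel's SKEW function and base-10 logs; the text does not state which skewness estimator or log base was used. Dropping non-positive readings before taking logs (for example, zero solar radiation) is also an assumption of this sketch.

```python
import math


def sample_skewness(xs):
    """Adjusted Fisher-Pearson sample skewness (the formula used by Excel's SKEW)."""
    n = len(xs)
    if n < 3:
        raise ValueError("need at least three values")
    mean = sum(xs) / n
    s = math.sqrt(sum((x - mean) ** 2 for x in xs) / (n - 1))  # sample standard deviation
    if s == 0:
        raise ValueError("values are constant")
    return n / ((n - 1) * (n - 2)) * sum(((x - mean) / s) ** 3 for x in xs)


def log10_positive(xs):
    """Log-transform, dropping non-positive readings that have no logarithm."""
    return [math.log10(x) for x in xs if x > 0]


# Hypothetical right-skewed readings.
readings = [3.0, 8.0, 20.0, 45.0, 400.0]
print(round(sample_skewness(readings), 2))
print(round(sample_skewness(log10_positive(readings)), 2))
```

The choice of log base does not matter for this purpose: switching between base 10 and natural logs only rescales the values, which leaves both the skewness and the R² of a later linear fit unchanged.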

Results and discussion

The changing environmental conditions over the observation period, as portrayed by the temperature values stamped on the images, are shown in Fig. 6. Throughout this period, temperatures at the locations of Cams 2, 3, and 4 appeared similar, while that at Cam 1 was higher. This could be due to either true local temperature variations or differences between the embedded temperature sensors. The diurnal temperature variation (Lillesand et al. 2015) at all four camera locations was similar to that in the temperature data from the ASU weather station. Overall, temperatures at all four camera locations were higher than those at the ASU weather station during the day and lower during the night. To better portray this difference, the temperature difference between Cam 1 and the ASU weather station (temperature error) is shown in Fig. 7.
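The temperature error shown in Fig. 7 is a simple difference between time-matched readings. A minimal sketch, assuming the camera and station readings have already been aligned to the same timestamps; the function names and the 07:00 to 19:00 daytime window are hypothetical choices, not ones from the study.

```python
from datetime import datetime


def temperature_error(camera_c, station_c):
    """Camera-stamped minus weather-station temperature for time-matched readings."""
    return [c - s for c, s in zip(camera_c, station_c)]


def mean_error_by_period(times, errors, day_start_hour=7, day_end_hour=19):
    """Mean error for 'day' and 'night' readings using a fixed, hypothetical clock window."""
    def average(xs):
        return sum(xs) / len(xs) if xs else float("nan")

    is_day = [day_start_hour <= t.hour < day_end_hour for t in times]
    day = [e for e, d in zip(errors, is_day) if d]
    night = [e for e, d in zip(errors, is_day) if not d]
    return {"day": average(day), "night": average(night)}


# Hypothetical aligned readings (deg C).
times = [datetime(2015, 7, 22, 13, 0), datetime(2015, 7, 22, 13, 30), datetime(2015, 7, 23, 2, 0)]
errors = temperature_error([36.0, 35.0, 20.0], [33.0, 33.0, 22.5])
print(errors)                               # [3.0, 2.0, -2.5]
print(mean_error_by_period(times, errors))  # {'day': 2.5, 'night': -2.5}
```

A positive daytime mean and a negative nighttime mean would reproduce the pattern described above, in which the camera sensors read warmer than the station by day and cooler by night.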

The average pixel value of the nighttime images generally increased with both time of night and date (Fig. 8). The average values for Cams 1 and 2 (located near the boundary of the soybean plot) were similar. Likewise, the average values for Cams 3 and 4 (located near the middle of the plot) were similar. These similarities may indicate similar performance of plants in those locations in response to the prevailing conditions. However, individual comparisons of the average pixel values with the corresponding temperature readings at the same location did not show much consistency at any of the camera locations. Hence, the nighttime data were not analyzed further.

The raw R and G daytime image data were processed to obtain the AGRI image data. Figure 9a shows an example AGRI image derived from one of the Cam 1 images. Plant pixel values ranged from about 0.40 to 0.48, while values outside this range represented the background. Figure 9b shows a further enhanced image with the background (mainly soil) removed by the thresholding method described above. Some plant pixels were incorrectly removed because of high light reflection caused by the orientation of the affected leaves, but the proportion of affected pixels was considered negligible.

Daytime average AGRI values of the images with the background removed were tracked for the period of the study. Figure 10 shows the AGRI time series for Cam 1 superimposed on the corresponding air temperature data. The rise-and-fall trends in the AGRI data are quite similar to the air temperature variation, although there are some departures, suggesting that the trend was not influenced by air temperature alone. The daily AGRI peak occurred at about 13:00 each day, which roughly coincides with the time of the air temperature peak.
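Locating the daily AGRI peak amounts to finding, for each calendar day, the time of the maximum value. A minimal sketch with hypothetical readings; the function name and data layout are illustrative.

```python
from datetime import datetime


def daily_peak_times(series):
    """Map each date to the clock time of its highest value.

    `series` is an iterable of (datetime, value) pairs, e.g. mean plant AGRI per
    image. On ties, the first reading encountered is kept.
    """
    best = {}
    for ts, value in series:
        d = ts.date()
        if d not in best or value > best[d][1]:
            best[d] = (ts.time(), value)
    return {d: t for d, (t, _) in best.items()}


# Hypothetical mean AGRI values for a few daytime images.
readings = [
    (datetime(2015, 7, 22, 11, 0), 0.431),
    (datetime(2015, 7, 22, 13, 0), 0.446),
    (datetime(2015, 7, 22, 16, 0), 0.438),
    (datetime(2015, 7, 23, 12, 30), 0.440),
    (datetime(2015, 7, 23, 13, 0), 0.437),
]
for day, t in daily_peak_times(readings).items():
    print(day, t)
# 2015-07-22 13:00:00
# 2015-07-23 12:30:00
```

With 30-min imaging, the peak time can only be resolved to the nearest half hour, which is consistent with reporting the peak as "about 13:00".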

The 10-day summary AGRI data for Cam 1 are shown in Fig. 11a, while the overall daily average values for all four cameras are shown in Fig. 11b. The AGRI values from Cam 1 were the highest, while those from Cam 2 were the lowest; both locations were near the boundary of the plot. High AGRI values are indicative of low crop performance (i.e., low leaf greenness) due to some stress factor. Since the air temperature at Cam 1 was the highest throughout, the plants at this location were likely the most stressed. Conversely, the plants at the Cam 2 location were likely the least stressed; visually, they appeared slightly greener than the plants at the other locations. There was little difference between the data from Cam 3 and Cam 4, which were located in the interior of the soybean field.
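Per-camera daily averages like those in Fig. 11b, and a ranking of the cameras by AGRI, can be produced with a simple group-by. A minimal sketch; the record layout and function names are hypothetical. Since higher AGRI is read here as lower greenness, the first camera in the ranking would be the one flagged as most stressed.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def daily_means(records):
    """Group (camera, datetime, agri) records into {camera: {date: mean AGRI}}."""
    groups = defaultdict(list)
    for camera, ts, value in records:
        groups[(camera, ts.date())].append(value)
    result = defaultdict(dict)
    for (camera, day), values in groups.items():
        result[camera][day] = mean(values)
    return dict(result)


def rank_cameras(daily):
    """Order cameras from highest to lowest overall mean of their daily means."""
    overall = {cam: mean(list(days.values())) for cam, days in daily.items()}
    return sorted(overall, key=overall.get, reverse=True)


# Hypothetical records from two cameras over two days.
records = [
    ("Cam 1", datetime(2015, 7, 22, 10, 0), 0.45),
    ("Cam 1", datetime(2015, 7, 22, 14, 0), 0.47),
    ("Cam 2", datetime(2015, 7, 22, 10, 0), 0.41),
    ("Cam 1", datetime(2015, 7, 23, 10, 0), 0.46),
    ("Cam 2", datetime(2015, 7, 23, 10, 0), 0.42),
]
print(rank_cameras(daily_means(records)))  # ['Cam 1', 'Cam 2']
```

Averaging daily means, rather than all images at once, keeps days with more usable images from dominating the overall value.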

The regression of AGRI values on the logs of selected weather parameters provides some insightful relationships. The recorded values of the weather parameters represent typical values during the growing season. Figures 12, 13, 14, 15, and 16 show the relationships of average AGRI with solar radiation, air temperature, relative humidity, soil surface temperature, and soil temperature at 50-mm depth, respectively, at the Cam 1 location (left) and at all four locations (right). Similar trends, with some variation, were observed among all four camera locations, indicating consistency among the cameras in capturing crop response trends. Generally, AGRI values increased with air temperature from 20 °C to about 40 °C, soil surface temperature from about 20 °C to around 40 °C, soil temperature at 50-mm depth from around 22 °C to roughly 38 °C, and solar radiation from zero to about 1000 W/m². Increasing values of all these parameters generally represent a higher atmospheric water demand on plants, i.e., higher ET rates, leading to higher stress on the crop. On the other hand, AGRI values decreased as relative humidity increased from the mid-30s to the low 90s (%). Higher relative humidity represents a more humid atmosphere, which decreases ET rates and crop stress. The decreasing order of correlation between AGRI and the weather parameters is as follows: log of solar radiation (0.36 < R² < 0.65) > log of soil surface temperature (0.23 < R² < 0.38) > log of air temperature (0.09 < R² < 0.30) > log of soil temperature at 50-mm depth (0.09 < R² < 0.20) > log of relative humidity (0.00 < R² < 0.18). With the exception of solar radiation, there appear to be some interactions between camera location and the other weather parameters. This implies that the variation in the AGRI data was due to the combined effect of multiple parameters. Nevertheless, solar radiation played the biggest role.
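The R² ranking above comes from simple linear regressions of AGRI on each log-transformed weather variable. The sketch below computes R² for each predictor and sorts the predictors; for a one-predictor least-squares fit with an intercept, R² equals the squared Pearson correlation. The data layout, function names, and the choice to drop non-positive readings before taking logs are assumptions for illustration, and the numbers are hypothetical.

```python
import math


def r_squared(x, y):
    """Coefficient of determination of a one-predictor least-squares line."""
    n = len(x)
    if n < 3:
        raise ValueError("need at least three paired values")
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("a variable is constant")
    return sxy * sxy / (sxx * syy)


def rank_log_predictors(agri, weather):
    """Return [(name, R^2), ...] for AGRI vs log10(weather), highest R^2 first."""
    scores = {}
    for name, values in weather.items():
        # Keep AGRI/weather pairs together; skip readings with no logarithm.
        pairs = [(math.log10(w), a) for w, a in zip(values, agri) if w > 0]
        xs = [p[0] for p in pairs]
        ys = [p[1] for p in pairs]
        scores[name] = r_squared(xs, ys)
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical time-matched values for a handful of daytime images.
agri = [0.420, 0.428, 0.433, 0.441, 0.446]
weather = {
    "solar radiation": [150.0, 380.0, 560.0, 820.0, 950.0],
    "relative humidity": [78.0, 64.0, 71.0, 55.0, 60.0],
}
for name, r2 in rank_log_predictors(agri, weather):
    print(f"{name}: R^2 = {r2:.2f}")
```

Fitting each predictor separately mirrors the one-variable comparisons in Figs. 12 to 16; it cannot capture the location interactions noted above, which would require a multiple-regression model.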

An assessment of the correlations suggests that higher AGRI values could indicate higher crop stress or poorer crop health. This is because higher temperatures combined with lower relative humidity tend to increase the ET rate, which is a determining factor for irrigation scheduling. However, given their low correlations, air temperature, soil temperature at 50-mm depth, and relative humidity could be neglected, leaving only solar radiation and soil surface temperature. While solar radiation is entirely outside the farmer’s control, soil surface temperature can be partly controlled with irrigation. There are indications that other variables, most likely soil and crop variables, also account for the crop AGRI response in addition to these weather parameters. However, since soil and crop variables were not measured, it is uncertain which particular variables help explain the variability in the data from the different locations and to what extent. This can be explored further in an experimental study. Other experimental studies involving game and trail time-lapse cameras have been completed, including Larbi et al. (2017a, b).

Although the current study was only observational and the weather variables studied cannot be adjusted by the farmer, it shows the potential for the resulting time series data to be used in building models that describe crop phenology throughout the growth cycle. Such information could be used to identify different levels of susceptibility to various conditions among crop varieties and to enhance irrigation scheduling. If relevant soil and crop variables are also integrated into the models, the information derived from the crop can support timely intervention where plants show signs of high stress or other problems. Finally, time-lapse cameras can be combined with other leaf sensors to provide additional visual records of crop condition for the monitored areas.

Conclusion

This observational study has demonstrated the potential of game and trail time-lapse cameras to provide useful data for monitoring crops or studying their time-series response to changing environmental conditions. The temperatures measured by the internal temperature sensors of the four cameras showed similar trends, which were also similar to the temperature trend from a nearby weather station. Using the RGBExcel software application to extract RGB image data into Microsoft Excel, images of soybean plants captured by the cameras were successfully processed and analyzed in Excel. The average adjusted green red index (AGRI), which was used to analyze daytime crop response to weather conditions, was regressed against weather data, yielding varying coefficients of determination. Although the study was only observational and the parameters studied cannot be adjusted by the farmer, the resulting time series data can be used to build models that describe crop phenology throughout the growth cycle and could lead to better crop management. Despite some low correlations, the potential of game and trail cameras with time-lapse capability to track changes in crop vegetation has been demonstrated. Future studies should explore the effects of varied treatments, using experimental designs with plants under different treatments, to strengthen the evidence for using game and trail cameras for crop monitoring. Future studies should also consider placing cameras in different management zones, established from aerial photos or soil series maps, to reflect differences in the crop or soil. Other crops, such as a variety of cover crops grown in Arkansas, will be investigated. Additional sensors and experimental treatments will be applied to better understand the applicability and adoptability of this practice by farmers.

References

Akeh, L. E., Nnoli, N., Gbuyiro, S., Ikehua, F., & Ogunbo, S. (2000). Meteorological early warning systems (EWS) for drought preparedness and drought management in Nigeria. In D. A. Wilhite, M. V. K. Sivakumar & D. A. Wood (Eds.), Proceedings of an Expert Group Meeting on early warning systems for drought preparedness and drought management (pp. 154–167). Lisbon, Portugal: WMO.

Boschetti, M., Stroppiana, D., Giardino, C., Brivio, P. A., Vincini, M., & Frazzi, E. (2007). Proximal and remote sensing observations for precision farming application, the Citimap project: experimental design and preliminary data analysis. In Z. Bochenek (Ed.), New developments and challenges in remote sensing. Retrieved April 10, 2018, from https://pdfs.semanticscholar.org/94b7/cb5d5eb9a07f98d8650835b816d09ae863b6.pdf?_ga=2.27945724.1521863603.1523350089-1704642520.1523350089.

Cheng, T., Yang, Z., Inoue, Y., Zhu, Y., & Cao, W. (2016). Preface: Recent advances in remote sensing for crop growth monitoring. Remote Sensing, 8, 116.

Deery, D., Jimenez-Berni, J., Jones, H., Sirault, X., & Furbank, R. (2014). Proximal remote sensing buggies and potential applications for field-based phenotyping. Agronomy, 4, 349–379. https://doi.org/10.3390/agronomy4030349.

Doraiswamy, P. C., Moulin, S., Cook, P. W., & Stern, A. (2003). Crop yield assessment from remote sensing. Photogrammetric Engineering & Remote Sensing, 69(6), 665–674.

Holecz, F., Barbieri, M., Collivignarelli, F., Gatti, L., Nelson, A., Setiyono, T. D., et al. (2013). An operational remote sensing based service for rice production estimation at national scale. In Proceedings of the living planet symposium. Edinburgh, UK: ESA.

Hollinger, S. E., & Angel, J. R. (2009). Weather and crops. Illinois agronomy handbook (24th ed., pp. 1–12). Urbana, IL, USA: University of Illinois.

Johnson, Z. (n.d.). Get RGB. Retrieved January 26, 2018, from https://itg.beckman.illinois.edu/technology_development/software_development/get_rgb/.

Larbi, P. A. (2016a). RGBExcel: An RGB image data extractor and exporter for Excel processing. Signal and Image Processing: An International Journal, 7(1), 1–9.

Larbi, P. A. (2016b). RGB2X: An RGB image data extract-export tool for digital image processing and analysis in Microsoft Excel. ASABE Paper No. 162460787. St. Joseph, MI, USA: ASABE.

Larbi, P. A. (2016c). Advancing Microsoft Excel’s potential for low-cost digital image processing and analysis. ASABE Paper No. 162455503. St. Joseph, MI, USA: ASABE.

Larbi, P. A. (2018). Advancing Microsoft Excel’s potential for teaching digital image processing and analysis. Applied Engineering in Agriculture, 34(2), 263–276.

Larbi, P. A., Marbaniang, C. D., Bade, K., & Vong, C. N. (2017a). Verification of temperature sensor readings obtained from game and trail cameras used for crop monitoring. ASABE Paper No. 1700231. St. Joseph, MI, USA: ASABE.

Larbi, P. A., Vong, C. N., & Green, S. (2017b). Exploring spectral responses of different cover crops potentially beneficial to farmers in Northeast Arkansas and the Delta Region. ASABE Paper No. 1700350. St. Joseph, MI, USA: ASABE.

Lillesand, T. M., Kiefer, R. W., & Chipman, J. W. (2015). Remote sensing and image interpretation (7th ed.). Hoboken, NJ, USA: Wiley.

Murthy, V. R. K. (2004). Crop growth modeling and its applications in agricultural meteorology. In M. V. K. Sivakumar, P. S. Roy, K. Harsen & S. K. Saha (Eds.), Proceedings of the training workshop on satellite remote sensing and GIS applications in agricultural meteorology (pp. 235–261). Dehra Dun, India: WMO, IMD, CSSTEAP, IIRS, NRSA, SAC.

Nutter, F. W., Tylka, G. L., Guan, J., Moreira, A. J. D., Marett, C. C., Rosburg, T. R., et al. (2002). Use of remote sensing to detect soybean cyst nematode-induced plant stress. Journal of Nematology, 34(3), 222–231.

Seelan, S. K., Laguette, S., Casady, G. M., & Seielstad, G. A. (2003). Remote sensing applications for precision agriculture: A learning community approach. Remote Sensing of Environment, 88, 157–169.

Siachalou, S., Mallinis, G., & Tsakiri-Strati, M. (2015). A hidden Markov models approach for crop classification: Linking crop phenology to time series of multi-sensor remote sensing data. Remote Sensing, 7, 3633–3650.

Toureiro, C., Serralheiro, R., Shahidian, S., & Sousa, A. (2017). Irrigation management with remote sensing: Evaluating irrigation requirement for maize under Mediterranean climate condition. Agricultural Water Management, 184, 211–220.

Vong, C. N., & Larbi, P. A. (2016). Comparison of image data obtained with different commercial cameras for use in visible spectroscopy. ASABE Paper No. 162455510. St. Joseph, MI, USA: ASABE.

Acknowledgements

This study was carried out under the auspices of the USDA-NIFA Capacity Building Grants for Non-Land Grant Colleges of Agriculture Program, Project No. 2015-70001-23439, and the USDA-NIFA Hatch Project No. 1005836. Funding and facility support were also provided by the College of Agriculture and Technology at Arkansas State University and the Division of Agriculture at the University of Arkansas. The authors also wish to acknowledge the assistance provided by graduate students Siddhardha Manne, Kumar Bade, and Anjani Sravanthi Kaikala during data collection and processing.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Human and animal rights

The authors declare that no human participants and/or animals were involved in the study.

Informed consent

The authors declare that no informed consent was necessary.


Cite this article

Larbi, P.A., Green, S. Time series analysis of soybean response to varying atmospheric conditions for precision agriculture. Precision Agric 19, 1113–1126 (2018). https://doi.org/10.1007/s11119-018-9577-2


DOI: https://doi.org/10.1007/s11119-018-9577-2