Abstract

In this work, a multiparametric family of iterative vectorial fourth-order methods free of Jacobian matrices is proposed. A convergence analysis of this family is carried out as well as a study of its efficiency. Several numerical experiments are made in order to compare the behaviour of the proposed family with other competitive methods of the literature.

1 Introduction

Newton’s method, see [1], is one of the best-known methods and has the following structure for vectorial non-linear problems:

\[ x^{(k+1)}=x^{(k)}-\left[ F'(x^{(k)})\right] ^{-1}F(x^{(k)}),\quad k=0,1,2,\ldots , \]

where \(F:D\subseteq \mathbb {R}^n\rightarrow \mathbb {R}^n\) is a non-linear vectorial function describing the non-linear system \(F(x)=0\).

This method has quadratic convergence, and it is computationally very efficient. Many methods have been designed in an attempt to improve Newton’s convergence and its computational efficiency, see for instance [2].
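As a minimal sketch of the Newton iteration for systems, the following pure-Python code solves the linear system \(F'(x^{(k)})\,s=F(x^{(k)})\) at each step and updates \(x^{(k+1)}=x^{(k)}-s\). The helper names `solve_linear` and `newton`, the tolerance, and the two-equation test system are illustrative assumptions, not taken from the article.

```python
def solve_linear(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [bi] for row, bi in zip(A, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0.0:
            raise ValueError("singular matrix")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for i in range(n - 1, -1, -1):  # back substitution
        s = sum(M[i][c] * z[c] for c in range(i + 1, n))
        z[i] = (M[i][n] - s) / M[i][i]
    return z


def newton(F, J, x0, tol=1e-12, max_iter=50):
    """Newton's method: x <- x - J(x)^{-1} F(x), with a user-supplied Jacobian J."""
    x = list(x0)
    for _ in range(max_iter):
        step = solve_linear(J(x), F(x))
        x = [xi - si for xi, si in zip(x, step)]
        if max(abs(s) for s in step) < tol:
            break
    return x


# Hypothetical test system (not from the article): x^2 + y^2 = 2, x = y.
def F(v):
    x, y = v
    return [x * x + y * y - 2.0, x - y]


def J(v):
    x, y = v
    return [[2.0 * x, 2.0 * y], [1.0, -1.0]]


root = newton(F, J, [2.0, 0.5])
print(root)
```

The Jacobian must be supplied analytically here, which is exactly the requirement that the Jacobian-free schemes discussed in this article avoid.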

On the other hand, Newton’s method is the first optimal method for vectorial problems according to the following conjecture for vectorial methods proposed in [3].

Conjecture 1

The order of convergence of any iterative method, without memory, for solving non-linear systems cannot exceed \(2^{k_1+k_2-1}\), where \(k_1\) is the number of evaluations of the Jacobian matrix and \(k_2\) the number of evaluations of the non-linear function per iteration, and \(k_1\le k_2\). When the scheme reaches this upper bound, we say that it is optimal.

To the best of our knowledge, only one other optimal method for vectorial problems has been proposed in the literature according to the above conjecture: the family proposed in [4]. Its authors proposed a parametric family of optimal methods of order 4 with the following structure:

where \(\lambda \) and \(\psi \) are free real parameters,

The idea of using such a definition of the variable \(\nu _k\) comes from the article [5].

The iterative class (2) has a Jacobian matrix in its iterative expression, so it is not a Jacobian-free family. The aim of this work is to modify this family to obtain a new class of Jacobian-free schemes, which maintains the convergence order. In addition, we intend to make the modifications without considerably increasing the computational cost in order to obtain an efficient and competitive family compared to methods with the same characteristics.

In this article, a Jacobian-free multiparametric class of iterative methods for solving non-linear systems is presented. In Sect. 2, the parametric family is presented and an analysis of the convergence order is performed. Section 3 presents an analysis of the efficiency of the proposed family and compares it with other known methods in the literature with similar characteristics. In Sect. 4, several numerical experiments are carried out to see the behaviour of the family and to compare it with other iterative methods. The article ends with some conclusions and the list of references.

2 Design of the parametric family

The only part we need to replace to obtain a derivative-free method is the Jacobian matrix \(F'(x^{(k)})\) of the family (2). For that reason, we are going to keep the previous structure by replacing the Jacobian matrix with a divided difference operator. If we replace the matrix by a forward or backward divided difference operator, the order of convergence is not maintained, so we choose the following symmetrical divided difference operator \(\left[ x^{(k)}+rF(x^{(k)}),x^{(k)}-rF(x^{(k)});F\right] \), which has a parameter r that can be any real number different from 0.
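The symmetric operator can be sketched in code as follows. This sketch assumes the standard componentwise first-order divided difference, \([a,b;F]_{ij}=\bigl(f_i(a_1,\ldots ,a_j,b_{j+1},\ldots ,b_n)-f_i(a_1,\ldots ,a_{j-1},b_j,\ldots ,b_n)\bigr)/(a_j-b_j)\), which the article does not spell out; the function names are hypothetical. The implementation is deliberately naive and makes no attempt to minimise the number of function evaluations.

```python
def divided_difference(F, a, b):
    """First-order divided difference [a, b; F] as an n x n list of lists.

    Assumes a[j] != b[j] for every j (otherwise the quotient is undefined).
    """
    n = len(a)
    M = [[0.0] * n for _ in range(n)]
    for j in range(n):
        upper = a[: j + 1] + b[j + 1 :]  # a_1..a_j, b_{j+1}..b_n
        lower = a[:j] + b[j:]            # a_1..a_{j-1}, b_j..b_n
        Fu, Fl = F(upper), F(lower)
        h = a[j] - b[j]
        for i in range(n):
            M[i][j] = (Fu[i] - Fl[i]) / h
    return M


def symmetric_divided_difference(F, x, r=1.0):
    """[x + r F(x), x - r F(x); F] with a non-zero real parameter r."""
    if r == 0:
        raise ValueError("r must be non-zero")
    Fx = F(x)
    a = [xi + r * fi for xi, fi in zip(x, Fx)]
    b = [xi - r * fi for xi, fi in zip(x, Fx)]
    return divided_difference(F, a, b)


# Hypothetical affine test map: its divided difference is its matrix [[2, 1], [1, -3]].
def affine(v):
    return [2.0 * v[0] + v[1], v[0] - 3.0 * v[1] + 1.0]


M = symmetric_divided_difference(affine, [1.0, 2.0], r=1.0)
print(M)
```

For an affine map the operator reproduces the constant Jacobian, which makes it a convenient sanity check. Note that the construction breaks down when some component of \(F(x^{(k)})\) is exactly zero, since then the two nodes coincide in that coordinate.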

The parametric family obtained is denoted by CRTT and has the following iterative structure

where \(r\in \mathbb {R}\), \(r\ne 0\) and

We prove that the parametric family CRTT has fourth order of convergence for any non-zero real value of r and any real values of \(\lambda \) and \(\psi \).

Theorem 2

Let \(F: D \subseteq \mathbb {R}^n \longrightarrow \mathbb {R}^n\) be a sufficiently differentiable function defined in a neighbourhood D of \(\alpha \), such that \(F(\alpha )=0\). Let us also assume that \(F'(\alpha )\) is non-singular. Then, if \(x^{(0)}\) is an initial guess close enough to \(\alpha \), the sequence \(\{x^{(k)}\}\) defined by CRTT converges to \(\alpha \) with order 4, for any non-zero value of parameter r and for any values of \(\lambda \) and \(\psi \).

Proof

We first obtain the Taylor development of \( F(x^{(k)})\), \( F'(x^{(k)})\), \( F''(x^{(k)})\) and \( F'''(x^{(k)})\) around \(\alpha \), where \(e_k=x^{(k)}-\alpha \) and \(C_i=\frac{1}{i!}[F'(\alpha )]^{-1}F^{(i)}(\alpha )\), \(i=2, 3,\ldots \)

From [6], the following expansion is obtained using the Genocchi-Hermite formula:

It can be easily checked that the inverse operator of \( [x^{(k)}+rF(x^{(k)}),x^{(k)}-rF(x^{(k)});F]\) has the following expression

From expression (4) and the Taylor development of \(F(x^{(k)})\), we obtain that \(e_{y,k}=y^{(k)}-\alpha \) satisfies the following equality

To simplify the notation, we define \(Y_3\) as follows

For any \(x\in \mathbb {R}^n\), we have that \(F(x)=(f_1(x),f_2(x),\ldots , f_n(x))^T\), where \(f_i(x):\mathbb {R}^n\rightarrow \mathbb {R}\) for \(i=1,2,\ldots ,n\).

Also, it is verified that

where the developments of \( f_i(x^{(k)})\) and \( f_i(y^{(k)})\) around \(\alpha \) for \(i=1,2,\ldots ,n\) are

where \(f_i'(x)=\left( \frac{\partial f_i}{\partial x_1},\frac{\partial f_i}{\partial x_2},\ldots ,\frac{\partial f_i}{\partial x_n} \right) \) and \(f_i''(x)\) is the Hessian matrix with components \(\frac{\partial ^2 f_i}{\partial x_j \partial x_k} \), for \(j,k\in \{1,2,\ldots ,n\}\).

To simplify the notation, we can rewrite it in the following way

with \(R_i=f_i'(\alpha )\) and \(H_i=\dfrac{1}{2}f_i''(\alpha )\).

In order to obtain \(\nu _k\), we compute now \(f_i^2(x^{(k)})\) and \(f_i^2(y^{(k)})\), for \(i=1,2,\ldots ,n\):

By denoting \(P_i=R_i^TR_i\) and \(Q_i=R_i^TH_i+H_i^TR_i\), the relations (6) can be rewritten as

Since \(e_{y,k}=C_2e_k^2+Y_3e_k^3+O\left( e_k^4\right) \), it follows that \(e_{y,k}^2=C_2^2e_k^4+(C_2Y_3+Y_3C_2)e_k^5+O\left( e_{k}^6\right) .\) Substituting this relation in (7), we obtain

Denoting \(P=\sum \limits _{i=1}^nP_i\) and \(Q=\sum \limits _{i=1}^nQ_i\), and substituting the results of (7) and (8) in (5), we obtain

Since

we obtain that

In order to simplify the notation, we denote \(V_3\) as follows

obtaining then that \(\nu _k=C_2^2e_k^2+V_3e_k^3+O\left( e_k^4\right) \).

Therefore, the expression of \(K_k\) is as follows

From (10) and (11), we obtain that the expressions of \(p_k\) and \(q_k\) are

Therefore,

Therefore, from (4) and (13), the error equation is

Since

the error equation can be rewritten as

Therefore, it is proven that the members of the parametric family (3) have order of convergence 4. \(\square \)

3 Efficiency index

To compare different iterative methods, the efficiency index suggested by Ostrowski is widely used. The formula of this index is the following

\[ I=p^{1/d}, \]

where p is the order of convergence of the method and d represents the number of functional evaluations needed to perform the method per iteration.

Another classical measure of the efficiency of iterative methods is the operational efficiency index proposed by Traub, with the expression:

\[ OI=p^{1/op}, \]

where op is the number of operations, expressed in units of product, needed to calculate each iteration.

On several occasions, a combination of both is also used, called the computational efficiency index, whose expression is

\[ CI=p^{1/(d+op)}. \]

In this section, we will study these different indices for our parametric family, as well as compare the results obtained with the efficiency indices of other methods known in the literature, which are S2S from [7], CJTS5 from [8], WF6S from [9] and WZ7S from [10].
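The three indices can be evaluated directly once p, d and op are known. The sketch below assumes the usual forms \(p^{1/d}\), \(p^{1/op}\) and \(p^{1/(d+op)}\); the function names are illustrative and not from the article.

```python
def efficiency_index(p, d):
    """Ostrowski's efficiency index: order p, d functional evaluations per iteration."""
    return p ** (1.0 / d)


def operational_efficiency_index(p, op):
    """Operational efficiency index: order p, op products/quotients per iteration."""
    return p ** (1.0 / op)


def computational_efficiency_index(p, d, op):
    """Computational efficiency index combining evaluations and operations."""
    return p ** (1.0 / (d + op))


# Example: a second-order method using 2 functional evaluations per iteration.
print(efficiency_index(2, 2))  # square root of 2
```

Because all three indices tend to 1 as the cost grows, comparisons between methods are only meaningful at the same system size n.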

When working on systems of size \(n\times n\), the number of functional evaluations used in calculating F(z) is n while the number used in calculating a divided difference operator as used in the iterative method is \(n^2-n\).

In the case of the CRTT parametric family, we compute \(F(x^{(k)})\), \(F(y^{(k)})\), \(F(x^{(k)}+rF(x^{(k)}))\) and \(F(x^{(k)}-rF(x^{(k)}))\) and just one divided difference operator, so the number of functional evaluations is:

Below is a list of the number of products and quotients needed to perform the operations involved:

- Each scalar/vector product costs n.
- Each transpose vector/vector product costs n.
- Each matrix/vector product costs \(n^2\).
- Each matrix/matrix product costs \(n^3\).
- The number of quotients of a divided difference operator is \(n^2\).
- Each LU decomposition costs \(\frac{1}{3}(n^3-n)\).
- Each system resolution costs \(n^2\).

In this case, we calculate three scalar/vector products, two transpose vector/vector products, a divided difference operator, and a single LU decomposition and we solve two systems, so the number of operations is:

Then, the addition of evaluations and operations is:
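The CRTT tallies can be reproduced from the unit costs listed above. The sketch below assumes that each item named in the text is counted exactly once at its listed cost: four evaluations of F at n each, one divided difference at \(n^2-n\) evaluations and \(n^2\) quotients, three scalar/vector products, two transpose vector/vector products, one LU decomposition and two system resolutions. Under that assumption the totals simplify to \(n^2+3n\) evaluations and \(\frac{1}{3}n^3+3n^2+\frac{14}{3}n\) operations. The function name is hypothetical.

```python
def crtt_costs(n):
    """Return (evaluations, operations, total) per CRTT iteration for an n x n system."""
    evaluations = 4 * n + (n * n - n)  # four F evaluations + one divided difference
    operations = (
        3 * n                # three scalar/vector products
        + 2 * n              # two transpose vector/vector products
        + n * n              # quotients of the divided difference operator
        + (n ** 3 - n) // 3  # one LU decomposition (n^3 - n is always divisible by 3)
        + 2 * n * n          # two system resolutions
    )
    return evaluations, operations, evaluations + operations


for n in (2, 10, 100):
    print(n, crtt_costs(n))
```

The cubic term comes only from the single LU decomposition, so for large n the operation count dominates the number of functional evaluations.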

In the following, we present and compute the efficiency of the methods with which we compare the indices.

The S2S method has the following iterative expression:

There are 3 evaluations and a divided difference, so the number of functional evaluations is:

On the other hand, a divided difference operator is calculated and a single system is solved, so the number of operations is:

Thus, the total number of functional evaluations and operations is:

The iterative expression of CJTS5 is:

where \(a^{(k)}=x^{(k)}+F(x^{(k)})\) and \(b^{(k)}=x^{(k)}-F(x^{(k)})\).

This method calculates 6 functional evaluations and a divided difference, so the number of evaluations is:

On the other hand, it performs 3 scalar/vector products, a divided difference operator, and a single LU decomposition and solves 4 systems with the same matrix; therefore, the number of operations is:

The total number of evaluations and operations is:

The iterative expression of the WF6S method is as follows:

where \(w^{(k)}=x^{(k)}+F(x^{(k)})\) and \(s^{(k)}=x^{(k)}-F(x^{(k)})\).

This method calculates 5 functional evaluations and 2 divided difference operators. Then, the number of functional evaluations is:

The number of operations performed is as follows: two divided difference operators are calculated, 3 systems are solved with the same coefficient matrix, a multiplication between 2 matrices is done, a scalar matrix multiplication is done and a vector matrix multiplication is done, so the number of operations is:

The total of evaluations and operations is:

Finally, the iterative expression of the method WZ7S is:

being \(w^{(k)}=x^{(k)}+F(x^{(k)})\).

In this case, 4 evaluations of F and 5 divided difference operators are calculated, so the number of functional evaluations is:

The number of operations performed is as follows: 5 different divided difference operators are calculated and 2 different LU decompositions are performed and a system is solved with each of the decompositions. Thus, the total number of operations is:

Thus, the number of total evaluations and operations is:

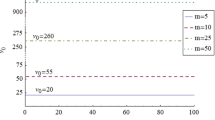

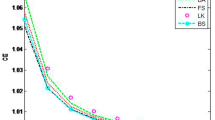

Below are some images showing the efficiency index, the operational index and the computational efficiency index of the previously mentioned methods for different sizes of the system to be solved, in order to compare the different methods.

Figure 1 illustrates the efficiency index of the different methods for different system sizes. In this figure, we can see that the methods with the highest efficiency index are CJTS5 and CRTT.

Figure 2 illustrates the operational efficiency index of the different methods for different system sizes. In this figure, we can see that for small sizes the methods CJTS5 and CRTT are not as competitive, but when the size is greater than or equal to 10, these are the two methods with the highest operational efficiency index.

Figure 3 illustrates the computational efficiency index for different system sizes. As with the other indices, the methods CJTS5 and CRTT stand out for system sizes greater than 1.

4 Numerical performance of CRTT

We compare three members of the CRTT class, namely CJF4S, TJF4S and CRTT4, obtained with \(\psi =0, \;r=1\) in all cases and \(\lambda =\{-4,\; -5, \; 0\},\) respectively, with other methods of high efficiency or order: S2S, CJTS5, WF6S and WZ7S, of orders 2, 5, 6 and 7, respectively, which were defined in the previous section. All methods are applied to the same problems. Note that CRTT4 is the member of the family with the least computational effort.

The maximum number of iterations considered is 50 and the stopping criterion is \(\left\| x^{(k+1)}-x^{(k)}\right\| < 10^{-100}\) or \( \left\| F (x^{(k+1)}) \right\| < 10^{-100}\). Each calculation was performed using Matlab R2022b with variable-precision arithmetic with a 500-digit mantissa to minimize round-off errors.
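The stopping rule can be sketched with Python's `decimal` module standing in for MATLAB's variable-precision arithmetic. The sketch assumes the Euclidean norm, which the article does not specify, and the helper names are hypothetical.

```python
from decimal import Decimal, getcontext

getcontext().prec = 500      # 500 significant digits, mirroring the 500-digit mantissa
TOL = Decimal(10) ** -100    # tolerance 10^{-100}
MAX_ITER = 50                # maximum number of iterations


def norm2(v):
    """Euclidean norm of a list of Decimal values (assumed choice of norm)."""
    return sum((c * c for c in v), Decimal(0)).sqrt()


def should_stop(x_new, x_old, F_new, tol=TOL):
    """True when the step or the residual falls below the tolerance."""
    step = norm2([a - b for a, b in zip(x_new, x_old)])
    return step < tol or norm2(F_new) < tol


# A step of size 1e-120 satisfies the criterion even if the residual does not.
tiny = Decimal("1e-120")
print(should_stop([Decimal(1)], [Decimal(1) + tiny], [Decimal(1)]))
```

With standard double precision a tolerance of \(10^{-100}\) on the step could not be resolved, which is why extended precision is needed for this criterion.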

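We also calculate the approximate computational order of convergence (ACOC), see [11], which gives a numerical approximation of the theoretical order of an iterative method:

\[ p\approx ACOC=\frac{\ln \left( \left\| x^{(k+1)}-x^{(k)}\right\| / \left\| x^{(k)}-x^{(k-1)}\right\| \right) }{\ln \left( \left\| x^{(k)}-x^{(k-1)}\right\| / \left\| x^{(k-1)}-x^{(k-2)}\right\| \right) }. \]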
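The ACOC quotient can be evaluated from the last four iterates of any method. The sketch below assumes the usual definition from [11], based on the ratio of logarithms of consecutive step norms, and uses the Euclidean norm; the function names and the synthetic test sequence are illustrative.

```python
import math


def norm2(v):
    """Euclidean norm of a list of floats."""
    return math.sqrt(sum(c * c for c in v))


def acoc(iterates):
    """ACOC from the last four iterates:
    ln(|x_{k+1}-x_k| / |x_k-x_{k-1}|) / ln(|x_k-x_{k-1}| / |x_{k-1}-x_{k-2}|).
    """
    if len(iterates) < 4:
        raise ValueError("at least four iterates are needed")
    x0, x1, x2, x3 = iterates[-4:]
    d1 = norm2([a - b for a, b in zip(x1, x0)])
    d2 = norm2([a - b for a, b in zip(x2, x1)])
    d3 = norm2([a - b for a, b in zip(x3, x2)])
    return math.log(d3 / d2) / math.log(d2 / d1)


# Synthetic sequence converging to 0 with errors e_{k+1} = e_k^2 (order 2).
sequence = [[1e-1], [1e-2], [1e-4], [1e-8], [1e-16]]
order = acoc(sequence)
print(order)
```

When consecutive steps become equal or fall below the working precision, the logarithms degenerate and the estimate is unstable, so the value should be read from iterations where the steps are still well resolved.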

4.1 Academic problem

Consider the system of equations:

By taking \(m=200,\) the initial estimate that we chose is \(x^{(0)}=\left( \frac{1}{100}, \ldots , \frac{1}{100}\right) ^T\) to obtain the solution

In Table 1, the results obtained by the different methods for the academic problem are shown. The table shows that the elements of the CRTT family take the least computational time to reach the required tolerance, being considerably less than other methods that double their ACOC. Moreover, excluding the S2S method, they require the same iterations as the other methods with the highest order of convergence.

4.2 Application to boundary value problem

We consider a particular case of a partial differential equation called the transport equation (see [12]). This case is non-linear and non-homogeneous. The equation models physical phenomena involving the movement of different entities, such as inertial moment, mass or energy, through a solid or fluid medium, under the particular conditions that exist within the medium. It is expressed by the non-differentiable partial differential equation:

for \(x \in [0,1]\), \(t \ge 0\). The boundary conditions on u(x, t) are imposed as \(u(0,t)=\frac{1}{1+t}, \; u(1,t)=\frac{1}{2+t}\) for all \(t \ge 0,\) along with the initial condition as \(u(x,0)=\frac{1}{1+x}\), for \(0 \le x \le 1.\)

Applying the method of characteristics, we have the system of ordinary differential equations:

We solve the system numerically applying in each case the trapezium method (or second-order implicit Runge-Kutta). With the first two equations, we have that \(\Delta x +\Delta t=2 \Delta s.\)

From the third equation, we form a system of \(n = 500\) equations, taking as step size \(\Delta s=(b-a)/(n-1)\). The initial approximation is obtained by applying the initial condition.

Now, we must solve the system of size \(500 \times 500\), numerically:

We take the same stopping criteria as in the academic problem. Next, we form the corresponding mesh, considering the domain

with the partition of spatial step \(\Delta x =1/(n-1)\) and time step \(\Delta t =1/(n-1),\) respectively, for the domains, from the solution of the first two equations, and we obtain the solution shown in Fig. 4.

In Table 2, the results obtained for the boundary value problem by the different methods are shown, where ‘-’ denotes lack of convergence or unstable value of the ACOC. In this table, we can see that the WZ7S method only requires a single iteration, while the rest require 3 iterations or more. We can also see that the TJF4S element of the CRTT family increases the ACOC by one unit for this problem.

The methods of the CRTT family maintain the theoretical order in very large systems, considering sizes 200 and 500. They can also compete with methods of equal or higher order, as can be seen in Tables 1 and 2.

Regarding computational effort, the methods of the CRTT family make much better use of it than the other methods.

5 Conclusion

In this work, a parametric family of Jacobian-free iterative methods with fourth-order convergence has been presented. This class has been designed from the family defined in [4], maintaining its properties while replacing the Jacobian matrix with a divided difference operator, which makes it suitable for non-differentiable problems. An efficiency study and several numerical experiments have been carried out, showing that it is a family of competitive methods compared to other known methods in the literature with similar characteristics.

Availability of data and materials

Not applicable.

References

1. Traub, J.F.: Iterative Methods for the Solution of Equations. Prentice-Hall, New York, USA (1964)

2. Amat, S., Busquier, S.: Advances in Iterative Methods for Nonlinear Equations, vol. 10. Springer, Switzerland (2016)

3. Arroyo, V., Cordero, A., Torregrosa, J.R.: Approximation of artificial satellites’ preliminary orbits: the efficiency challenge. Math. Comput. Model. 54(7), 1802–1807 (2011). https://doi.org/10.1016/j.mcm.2010.11.063

4. Cordero, A., Rojas-Hiciano, R., Torregrosa, J.R., Penkova, M.: A highly efficient class of optimal fourth-order methods for solving nonlinear systems. Numer. Algorithm. (2023). https://doi.org/10.1007/s11075-023-01631-9

5. Singh, H., Sharma, J.R., Kumar, S.: A simple yet efficient two-step fifth-order weighted-Newton method for nonlinear models. Numer. Algorithm. 93(1), 203–225 (2022). https://doi.org/10.1007/s11075-022-01412-w

6. Villalba, E.G., Hernandez, M., Hueso, J.L., Martínez, E.: Using decomposition of the nonlinear operator for solving non-differentiable problems. Math. Methods Appl. Sci. (2023). https://doi.org/10.1002/mma.9455

7. Samanskii, V.: On a modification of the Newton method. Ukr. Math. J. 19, 133–138 (1967)

8. Cordero, A., Jordán, C., Sanabria, E., Torregrosa, J.R.: A new class of iterative processes for solving nonlinear systems by using one divided differences operator. Mathematics. 7, 776 (2019). https://doi.org/10.3390/math7090776

9. Wang, X., Fan, X.: Two efficient derivative-free iterative methods for solving nonlinear systems. Algorithms. 9, 14 (2016). https://doi.org/10.3390/a9010014

10. Wang, X., Zhang, T.: A family of Steffensen type methods with seventh-order convergence. Numer. Algorithm. 62, 429–444 (2013). https://doi.org/10.1007/s11075-012-9597-3

11. Cordero, A., Torregrosa, J.R.: Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 190, 686–698 (2007). https://doi.org/10.1016/j.amc.2007.01.062

12. Boutin, B., Nguyen, T.H.T., Sylla, A., Tran-Tien, S., Coulombel, J.-F.: High order numerical schemes for transport equations on bounded domains. ESAIM: ProcS. 70, 84–106 (2021). https://doi.org/10.1051/proc/202107006

Acknowledgements

The authors would like to thank the anonymous reviewers for their suggestions and comments that have improved the final version of this manuscript.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature.

Author information

Contributions

RVRH and PTN wrote the draft version of the main manuscript text, JRT and AC wrote the revised version of the manuscript, RVRH prepared the figures, and the numerical tests have been made by RVRH and PTN. All authors reviewed the manuscript.

Ethics declarations

Ethical approval

Not applicable.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cordero, A., Rojas-Hiciano, R.V., Torregrosa, J.R. et al. Efficient parametric family of fourth-order Jacobian-free iterative vectorial schemes. Numer Algor (2024). https://doi.org/10.1007/s11075-024-01776-1

DOI: https://doi.org/10.1007/s11075-024-01776-1