Abstract

In this manuscript, a general class of Jacobian-free iterative schemes for solving systems of nonlinear equations is presented. Once its fourth-order convergence is proven, the most efficient subfamily is selected in order to carry out a qualitative study. It is proven that most elements of this family are very stable, and this is checked by means of numerical tests on several problems of different sizes. Their performance is compared with that of other known Jacobian-free iterative procedures, and they perform better in most of the results.


1 Introduction

Let \(F(x) = 0\) be a system of nonlinear equations, where \(F:D\subset \mathbb {R}^n \rightarrow \mathbb {R}^n\) and \(f_i,\) \( i = 1, 2, \ldots , n,\) are the coordinate functions of F, \(F(x) = \left( f_1(x), f_2(x), \ldots , f_n(x)\right) ^T\). Nonlinear systems are difficult to solve; the solution \(\bar{x}\) is usually obtained by linearizing the nonlinear problem or by using a fixed-point function \(G:D\subset \mathbb {R}^n \rightarrow \mathbb {R}^n\), which leads to a fixed-point iterative scheme. There are many root-finding methods for systems of nonlinear equations. The best known is Newton's second-order scheme, \(x^{(k+1)} =x^{(k)} - [F'(x^{(k)})]^{-1}F(x^{(k)})\), where \(F'(x^{(k)})\) denotes the Jacobian matrix of F evaluated at the kth iterate.
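For reference, Newton's method for systems can be sketched in a few lines of Python. This is a minimal illustration, not code from this work: the test system, the starting point and the helper `solve` (Gaussian elimination with partial pivoting, used so that the block needs no external libraries) are assumptions chosen for the example. As is usual in practice, the linear system \(F'(x^{(k)})\,s=F(x^{(k)})\) is solved instead of inverting the Jacobian matrix.

```python
import math


def solve(A, b):
    """Solve A s = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]  # augmented matrix [A | b]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    s = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s[r] = (M[r][n] - sum(M[r][c] * s[c] for c in range(r + 1, n))) / M[r][r]
    return s


def newton(F, J, x0, tol=1e-12, max_iter=50):
    """Newton's method x <- x - F'(x)^{-1} F(x); returns (x, iterations)."""
    x = list(x0)
    for k in range(1, max_iter + 1):
        s = solve(J(x), F(x))  # s = F'(x)^{-1} F(x), computed without an inverse
        x = [xi - si for xi, si in zip(x, s)]
        if max(abs(si) for si in s) < tol:
            return x, k
    return x, max_iter


# Hypothetical test system: x1^2 + x2^2 - 4 = 0, x1 - x2 = 0, root (sqrt(2), sqrt(2)).
def F(x):
    return [x[0] ** 2 + x[1] ** 2 - 4.0, x[0] - x[1]]


def J(x):
    return [[2.0 * x[0], 2.0 * x[1]], [1.0, -1.0]]


root, iterations = newton(F, J, [1.0, 2.0])
print(root, iterations, abs(root[0] - math.sqrt(2.0)))
```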

In recent decades, numerous authors have endeavored to formulate iterative procedures that surpass Newton’s scheme in terms of efficiency and higher convergence order. Many of these methods require the evaluation of the Jacobian matrix at one or more points during each iteration. However, a significant challenge associated with these approaches lies in the computation of the Jacobian matrix. In certain scenarios, this matrix may not exist, or in cases where it does, calculating it for high-dimensional problems can be prohibitively expensive or even impractical.

As a solution to this issue, some authors have explored alternatives to the Jacobian matrix, opting to replace it with a divided difference operator. The vectorial version of Steffensen's scheme (due to Samanskii, see [1, 2]) is the simplest of these alternatives. It substitutes the Jacobian matrix \(F'(x^{(k)})\) in Newton's method with a first-order divided difference:

\[x^{(k+1)}=x^{(k)}-[x^{(k)},z^{(k)};F]^{-1}F(x^{(k)}),\quad k=0,1,2,\ldots ,\]

where \(z^{(k)}=x^{(k)}+ F(x^{(k)})\), being \([\cdot ,\cdot ;F]:\Omega \times \Omega \subset \mathbb {R}^n \times \mathbb {R}^n \rightarrow \mathcal{L}(\mathbb {R}^n)\) the divided difference operator of F on \(\mathbb {R}^n\) defined as \([x,y;F](x-y)=F(x)-F(y)\), for any \(x,y \in \Omega \) (see [3]). This replacement maintains the second order of convergence, offering a practical approach to overcome the challenges associated with the computation of the Jacobian matrix.
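The following Python sketch illustrates the vectorial Steffensen scheme. It assumes one common componentwise construction of \([x,y;F]\) (each column compares two points that differ in a single coordinate), which satisfies \([x,y;F](x-y)=F(x)-F(y)\) exactly; the paper does not prescribe this particular operator. The parameter `gamma` gives \(z^{(k)}=x^{(k)}+\gamma F(x^{(k)})\), so `gamma=1` is the classical scheme; the test system, tolerances and helper names are hypothetical.

```python
def divided_difference(F, x, y):
    """First-order divided difference [x, y; F], built column by column.

    Column j compares two points that differ only in coordinate j, so the
    columns telescope and [x, y; F](x - y) = F(x) - F(y) holds exactly.
    """
    n = len(x)
    A = [[0.0] * n for _ in range(n)]
    for j in range(n):
        u = list(x[: j + 1]) + list(y[j + 1:])  # x in coordinates 0..j, y afterwards
        v = list(x[:j]) + list(y[j:])           # x in coordinates 0..j-1, y from j on
        Fu, Fv = F(u), F(v)
        for i in range(n):
            A[i][j] = (Fu[i] - Fv[i]) / (x[j] - y[j])
    return A


def solve(A, b):
    """Solve A s = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [list(row) + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    s = [0.0] * n
    for r in range(n - 1, -1, -1):
        s[r] = (M[r][n] - sum(M[r][c] * s[c] for c in range(r + 1, n))) / M[r][r]
    return s


def steffensen(F, x0, gamma=1.0, tol=1e-10, max_iter=100):
    """x <- x - [x, z; F]^{-1} F(x) with z = x + gamma * F(x) (gamma = 1: classical)."""
    x = list(x0)
    for k in range(max_iter):
        Fx = F(x)
        if max(abs(f) for f in Fx) < tol:
            return x, k
        # Assumes no component of F(x) vanishes before convergence (else x_j == z_j).
        z = [xi + gamma * fi for xi, fi in zip(x, Fx)]
        s = solve(divided_difference(F, x, z), Fx)
        x = [xi - si for xi, si in zip(x, s)]
    return x, max_iter


def F(x):  # hypothetical test system with root (1, 2)
    return [x[0] ** 2 + x[1] - 3.0, x[0] + x[1] ** 2 - 5.0]


root, iterations = steffensen(F, [1.2, 1.8])
print(root, iterations)
```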

Despite both Newton and Steffensen schemes exhibiting quadratic convergence, research has demonstrated that the stability of Steffensen’s method, as defined by its dependence on the initial estimation, is notably poorer when compared to Newton’s method. This disparity in stability has been extensively examined in [4] and [5], where it was established that, in the scalar case of \(f(x) = 0\), the stability of iterative schemes without derivatives improves when choosing \(z =x + \gamma f(x)\) for small values of \(\gamma \).

However, a drawback of directly substituting Jacobian matrices with divided differences is that, in many iterative methods, the resulting Jacobian-free scheme does not have the same order as the original one. For instance, consider the multidimensional extension of Ostrowski's fourth-order method [6, 7]

that only reaches cubic convergence when \(F'(x^{(k)})\) is replaced by the non-symmetric divided difference [x, y; F], that is,

Other fourth-order methods also fail to preserve the order of convergence, as is the case of Jarratt's scheme [8],

where \(J(x^{(k)}) = \left[ 6F'(y^{(k)}) - 2F'(x^{(k)})\right] ^{-1} \left[ 3F'(y^{(k)}) + F'(x^{(k)})\right] \), whose Jacobian-free version is

being

Similarly, Sharma's fourth-order method [9], whose iterative expression is

has, as Jacobian-free version,

Moreover, Montazeri et al. [10] designed a fourth-order scheme,

that can be transformed into a Jacobian-free method,

Finally, we consider the fifth-order iterative scheme due to Sharma and Arora [11]:

where \(H(x^{(k)}) = [F'(x^{(k)})]^{-1} F'(y^{(k)})\), whose Jacobian-free version is

being \(\bar{H}(x^{(k)}) = [x^{(k)},x^{(k)}+F(x^{(k)});F]^{-1} [y^{(k)},y^{(k)}+F(y^{(k)});F]\).

None of these Jacobian-free schemes preserves the order of convergence of its original version with Jacobian matrices. However, Amiri et al. [12] showed that, if we consider \([x, x+G(x); F]\) as an estimation of the Jacobian matrix, where \(G:D \subseteq \mathbb {R}^{n} \rightarrow \mathbb {R}^{n}\) is defined by \(G(x) = (f_1^m(x), f_2^m(x), \ldots , f_n^m(x))^T\), it is possible to obtain an approximation of order m of the Jacobian matrix of F at the point \(x\in \mathbb {R}^n \). In that work, the authors also show that the order of convergence of these iterative methods can be preserved when the Jacobian matrix is replaced by the estimation \([x, x+(f_1^m(x), f_2^m(x), \ldots , f_n^m(x))^T; F]\), for a suitable value of \(m\in \mathbb {N}\) depending on the original procedure. We denote these Jacobian-free versions of the Ostrowski (\(m=2\) and \(m=3\)), Jarratt (\(m=3\)), Sharma (\(m=2\)), Montazeri (\(m=3\)) and Sharma-Arora (\(m=2\)) schemes by \(MO_4\), \(MJ_4\), \(MS_{4}\), \(MM_4\), and \(MSA_5\), respectively.
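A small numerical illustration of the estimation of Amiri et al. is sketched below in Python, assuming the componentwise divided difference operator (each column compares two points differing in one coordinate) as a concrete choice of \([\cdot,\cdot;F]\). The test function \(p_j(x)=x_j^2-1\), the point x and the helper names are illustrative assumptions. For this diagonal system the estimate has diagonal entries \(2x_j+f_j(x)^m\), so its entrywise error with respect to the Jacobian matrix is exactly \(|f_j(x)|^m\), which shrinks quickly with m whenever \(|f_j(x)|<1\).

```python
def divided_difference(F, x, y):
    """First-order divided difference [x, y; F], built column by column."""
    n = len(x)
    A = [[0.0] * n for _ in range(n)]
    for j in range(n):
        u = list(x[: j + 1]) + list(y[j + 1:])  # x in coordinates 0..j, y afterwards
        v = list(x[:j]) + list(y[j:])           # x in coordinates 0..j-1, y from j on
        Fu, Fv = F(u), F(v)
        for i in range(n):
            A[i][j] = (Fu[i] - Fv[i]) / (x[j] - y[j])
    return A


def jacobian_estimate(F, x, m):
    """[x, x + G(x); F] with G(x) = (f_1(x)^m, ..., f_n(x)^m); needs every f_i(x) != 0."""
    w = [xi + fi ** m for xi, fi in zip(x, F(x))]
    return divided_difference(F, x, w)


def p(x):  # hypothetical test function: p_j(x) = x_j^2 - 1
    return [xj ** 2 - 1.0 for xj in x]


def jacobian_p(x):
    n = len(x)
    return [[2.0 * x[i] if i == j else 0.0 for j in range(n)] for i in range(n)]


x = [1.1, 0.9]
errors = {}
for m in (1, 2, 3):
    A, J = jacobian_estimate(p, x, m), jacobian_p(x)
    errors[m] = max(abs(A[i][j] - J[i][j]) for i in range(2) for j in range(2))
print(errors)  # for this p the error equals max_j |f_j(x)|^m up to rounding
```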

In this paper, we introduce a Jacobian-free fourth-order class of iterative methods, demonstrating superior performance compared to the mentioned Jacobian-free versions of known methods. This comparison is made in terms of efficiency and stability.

The rest of the paper is organized as follows: Sect. 2 provides essential concepts and previous results. Section 3 details the development and convergence analysis of our method. In Sect. 4, we investigate the stability of the proposed approach, and Sect. 5 presents numerical results illustrating the efficiency and convergence of our Jacobian-free scheme. The paper finishes with some conclusions and the references used.

2 Preliminary definitions

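Let us consider a sequence \(\{x^{(k)}\}_{k \ge 0}\) in \(\mathbb {R}^n\), converging to \(\bar{x}\). Then, its convergence is said to be of order p, \(p \ge 1\), if there exist \(K >0\) (\(0<K<1\) if \(p=1\)) and \(k_0 \in \mathbb {N}\) such that

\[\Vert x^{(k+1)}-\bar{x}\Vert \le K\Vert x^{(k)}-\bar{x}\Vert ^{p},\quad \forall \, k\ge k_0,\]

or

\[\Vert e^{(k+1)}\Vert \le K\Vert e^{(k)}\Vert ^{p},\quad \forall \, k\ge k_0,\]

being \(e^{(k)}=x^{(k)}-\bar{x}\).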

To rigorously prove the convergence of an iterative method, we use the notation presented in [13]. Let \(T:D\subseteq \mathbb {R}^{n} \rightarrow \mathbb {R}^{n}\) be a sufficiently Fréchet differentiable function in D. The qth derivative of T at \(v \in \mathbb {R}^{n}\), \(q \ge 1\), is the q-linear function \(T^{(q)}(v): \mathbb {R}^{n} \times \cdots \times \mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) such that \(T^{(q)}(v)(u_1,\ldots ,u_q) \in \mathbb {R}^{n}\). Let us remark that

1. \(T^{(q)}(v)(u_1,\ldots ,u_{q-1}, \cdot ) \in \mathcal{L}(\mathbb {R}^{n})\),

2. \(T^{(q)}(v)(u_{\sigma (1)},\ldots ,u_{\sigma (q)})= T^{(q)}(v)(u_1,\ldots ,u_q)\), for every permutation \(\sigma \) of \(\{1,2,\ldots ,q\}\).

From properties 1 and 2, we adopt the following notation:

(a) \(T^{(q)}(v)(u_1,\ldots ,u_{q})=T^{(q)}(v)u_1 \ldots u_{q}\),

(b) \(T^{(q)}(v)u^{q-1}T^{(p)}(v)u^p=T^{(q)}(v)T^{(p)}(v)u^{q+p-1}\).

On the other hand, for \(\bar{x}+h \in \mathbb {R}^{n}\) lying in a neighborhood of a solution \(\bar{x}\) of \(T(x)=0\), we can apply Taylor's expansion to \(T(\bar{x}+ h)\) around \(\bar{x}\); assuming that the Jacobian matrix \(T'(\bar{x})\) is nonsingular, we have

\[T(\bar{x}+h)=T'(\bar{x})\left[ h+\sum _{q=2}^{p-1}C_q h^q\right] +O(h^p),\]

where \(C_q=\dfrac{1}{q!}[T'(\bar{x})]^{-1}T^{(q)}(\bar{x})\), \(q \ge 2\). Since \(T^{(q)}(\bar{x}) \in \mathcal{L}(\mathbb {R}^{n} \times \cdots \times \mathbb {R}^{n}, \mathbb {R}^{n})\) and \([T'(\bar{x})]^{-1} \in \mathcal{L}(\mathbb {R}^{n})\), then \(C_qh^q \in \mathbb {R}^{n}\).

In addition, we can express \(T'(\bar{x}+ h)\) as

\[T'(\bar{x}+h)=T'(\bar{x})\left[ I+\sum _{q=2}^{p-1}q\,C_q h^{q-1}\right] +O(h^{p-1}),\]

where I is the identity matrix. Therefore, \(q\,C_q\,h^{q-1} \in \mathcal{L}(\mathbb {R}^{n})\). From (2), we assume

\[[T'(\bar{x}+h)]^{-1}=\left[ I+A_2h+A_3h^2+\cdots \right] [T'(\bar{x})]^{-1},\]

being

\[A_2=-2C_2,\qquad A_3=4C_2^2-3C_3,\ \ldots ,\]

obtained by forcing \(A_j\), for \(j=2,3, \ldots \), to satisfy \([T'(\bar{x}+ h)]^{-1}T^{\prime }(\bar{x}+ h)=I.\)
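In the scalar case \(n=1\) the multilinear products become ordinary products, which gives a quick sanity check of the inverse expansion. Matching powers of h in \(\left[ I+A_2h+A_3h^2+\cdots \right] \left[ I+2C_2h+3C_3h^2+\cdots \right] =I\) yields \(A_2=-2C_2\) and \(A_3=4C_2^2-3C_3\). The Python sketch below, for the hypothetical function \(T(x)=x+x^2+x^3\) with root \(\bar{x}=0\) (so that \(C_2=C_3=1\)), checks that the truncated expansion of \(1/T'(h)\) leaves an \(O(h^3)\) remainder: dividing h by 10 should divide the error by about \(10^3\).

```python
# Hypothetical scalar test function T(x) = x + x^2 + x^3 with root x_bar = 0.
d1, d2, d3 = 1.0, 2.0, 6.0          # T'(0), T''(0), T'''(0)
C2 = d2 / (2.0 * d1)                # C_q = T^(q)(x_bar) / (q! T'(x_bar))
C3 = d3 / (6.0 * d1)
A2 = -2.0 * C2
A3 = 4.0 * C2 ** 2 - 3.0 * C3


def T_prime(x):
    return 1.0 + 2.0 * x + 3.0 * x ** 2


def truncation_error(h):
    """|1/T'(h) - (1 + A2 h + A3 h^2)/T'(0)|, expected to behave like O(h^3)."""
    return abs(1.0 / T_prime(h) - (1.0 + A2 * h + A3 * h ** 2) / d1)


e1, e2 = truncation_error(1e-3), truncation_error(1e-4)
print(A2, A3, e1 / e2)  # ratio close to 10^3, consistent with an O(h^3) remainder
```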

The equation

\[e^{(k+1)}=L\,{e^{(k)}}^p+O\left( {e^{(k)}}^{p+1}\right) ,\]

where \({e^{(k)}}^p =(e^{(k)}, e^{(k)}, \ldots , e^{(k)})\), p is the order of convergence and \(L \in \mathcal{L}(\mathbb {R}^{n} \times \cdots \times \mathbb {R}^{n}, \mathbb {R}^{n})\) is a p-linear function, is called the error equation.

On the other hand, the divided difference operator of F on \(\mathbb {R}^{n}\) is a mapping \([\cdot ,\cdot ; F]: \Omega \times \Omega \subset \mathbb {R}^{n} \times \mathbb {R}^{n} \rightarrow \mathcal{L}(\mathbb {R}^{n})\) (see [3]) such that

\[[x,y;F](x-y)=F(x)-F(y),\quad \forall \, x,y\in \Omega .\]

By developing \(F ^{\prime }(x + th)\) in Taylor series around x, and replacing it in the Genocchi-Hermite formula (see [3])

\[[x,x+h;F]=\int _0^1 F'(x+th)\,dt,\]
we obtain

where \(e=x-\bar{x}\).
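In the scalar case \(n=1\), the divided difference reduces to \((f(y)-f(x))/(y-x)\) and the Genocchi-Hermite formula to \([x,y;f]=\int _0^1 f'(x+t(y-x))\,dt\). The Python sketch below checks this numerically for the hypothetical choice \(f(x)=x^3\), using Simpson's rule, which is exact here because the integrand is a quadratic polynomial in t. It is an illustration only; the vector case used in this section is not covered by it.

```python
def divided_difference_1d(f, x, y):
    """Scalar divided difference [x, y; f] = (f(y) - f(x)) / (y - x)."""
    return (f(y) - f(x)) / (y - x)


def genocchi_hermite_simpson(fprime, x, y):
    """Simpson's rule for the integral of f'(x + t (y - x)) over t in [0, 1]."""
    h = y - x
    return (fprime(x) + 4.0 * fprime(x + 0.5 * h) + fprime(y)) / 6.0


def f(x):  # hypothetical test function
    return x ** 3


def fprime(x):
    return 3.0 * x ** 2


x, y = 0.5, 2.0
dd = divided_difference_1d(f, x, y)
gh = genocchi_hermite_simpson(fprime, x, y)
print(dd, gh)  # 5.25 5.25, i.e. x^2 + x*y + y^2
```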

3 Development and convergence of the proposed methods

In this work, we present a two-step scheme:

where the parameters \(\alpha , \beta \in \mathbb {R}\) should be chosen in order to obtain fourth-order convergence, \(w^{(k)}= x^{(k)}+\beta \, F(x^{(k)}),\) \([x^{(k)},w^{(k)}; F]\) is the first-order divided difference, and the variable of the weight function G is \(\eta ^{(k)}= [x^{(k)},y^{(k)}; F]^{-1}\,[x^{(k)},w^{(k)}; F],\) which tends to the identity I as k tends to infinity. To properly describe the Taylor development of the matrix weight function, we recall the notation by Artidiello et al. in [14]:

Let \(X=\mathbb {R}^{n \times n}\) denote the Banach space of real square matrices of size \(n \times n\). Then, the matrix function \(G:X \rightarrow X\) can be defined so that its Fréchet derivatives satisfy

(a) \(G'(u)(v)=G_1uv,\; \textrm{being}\; G':X\rightarrow \mathcal {L}(X)\;\textrm{and}\; G_1 \in \mathbb {R}\),

(b) \(G''(u,v)(w)=G_2uvw,\; \textrm{where}\; G'':X \times X\rightarrow \mathcal {L}(X)\;\textrm{and}\; G_2 \in \mathbb {R}\).

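Therefore, the matrix weight function \(G(\eta ^{(k)})\) can be expanded around the identity matrix I as

\[G(\eta ^{(k)})=G(I)+G_1\left( \eta ^{(k)}-I\right) +\frac{1}{2}G_2\left( \eta ^{(k)}-I\right) ^2+O\left( \left( \eta ^{(k)}-I\right) ^3\right) ,\]

being \(G_i,\) \(i \ge 1\), real numbers.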

The following result establishes the convergence of the iterative method (4).

Theorem 1

Let \(F:D \subseteq \mathbb {R}^{n}\longrightarrow \mathbb {R}^{n}\) be sufficiently differentiable at each point of a convex set D including \(\bar{x} \in \mathbb {R}^{n}\), a solution of \(F(x)=0\). Let \(x^{(0)}\) be an initial estimation close enough to \(\bar{x}\), and let us suppose that \(F'(x)\) is continuous and nonsingular at \(\bar{x}\). Then, the sequence \(\{x^{(k)}\}_{k \ge 0}\) obtained from expression (4) converges to \(\bar{x}\) with order four if \(\alpha =1\), \(G (I)=I\) and \(G_1=1\). The error equation is

where \(C_{q}=\dfrac{1}{q!}[F'(\bar{x})]^{-1}F^{(q)}(\bar{x})\), \(q=2,3,\ldots \) and \(e^{(k)}=x^{(k)}-\bar{x}, k=0,1,\ldots \)

Proof

By using Taylor expansion of \(F(x^{(k)})\) and its derivatives around \(\bar{x}:\)

and the Genocchi-Hermite formula (see [3]), we obtain the Taylor expansion of the divided difference

and also the expansion of its inverse operator, as

We also need the following expansions of divided differences and their corresponding inverses:

and

also

and

On the other hand, the matrix variable of the weight function is

\(\eta ^{(k)}=[x^{(k)},y^{(k)}; F]^{-1}[x^{(k)},w^{(k)}; F]\) that tends to the identity I when k tends to infinity, and whose Taylor expression around I is:

and therefore

By using the previous developments, we calculate

and finally by substituting the above values in (4) we have the error equation,

In order to obtain a method of order four, we force the coefficients of \({e^{(k)}}^{i}\), \(i=1,2,3,\) to be zero. The first equation of the obtained system is

and then \(\alpha =1.\) The next term to be cancelled is

from which we get \(G (I)=I\). With these values in the third equation, we have

Then \(G_1=1\). Therefore, the solutions of the proposed system are \(\alpha =1\), \(G (I)=I\) and \(G_1=1\). Considering these values we get

which justifies the fourth-order of convergence of the proposed class. \(\square \)

We observe that when we consider a polynomial weight function \(G(\eta ^{(k)})=\eta ^{(k)}\), the resulting parametric family of schemes is a particular case of class (4) by considering \(\alpha =1\), \(G (I)=I,\) \(G_1=1\) and \(G_i=0,\) \(i\ge 2,\) that we call \(JCST_4(\beta ).\) This sub-family corresponds to expression (6) in [15]. In fact, the weight function \(G(\eta ^{(k)})\) represents a general class of methods that can be constructed by other polynomial functions, rational functions, etc. For example, another polynomial function \(G(\eta ^{(k)})=I-\eta ^{(k)}+{\eta ^{(k)}}^2\) is obtained by considering \(\alpha =1\), \(G (I)=I,\) \(G_1=1,\) \(G_2=2\) and \(G_i=0,\) \(i\ge 3\); moreover, \(G(\eta ^{(k)})=(2 \eta ^{(k)}-I) {(\eta ^{(k)})}^{-1}\) is an example of a rational function satisfying the convergence conditions set in Theorem 1.
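For weight functions that are polynomials or rational functions of the single matrix \(\eta ^{(k)}\), all terms commute, so \(G(I)\), \(G_1\) and \(G_2\) coincide with the values \(g(1)\), \(g'(1)\) and \(g''(1)\) of the scalar analogue \(g(t)\). The following Python sketch, an illustrative check rather than part of the method, estimates these values by central differences for the three weight functions mentioned above; the step `h` is an arbitrary choice. It confirms \(G(I)=I\) and \(G_1=1\) in all three cases, with \(G_2=0\), 2 and \(-2\), respectively.

```python
def g_identity(t):   # scalar analogue of G(eta) = eta
    return t


def g_quadratic(t):  # scalar analogue of G(eta) = I - eta + eta^2
    return 1.0 - t + t * t


def g_rational(t):   # scalar analogue of G(eta) = (2 eta - I) eta^{-1}
    return (2.0 * t - 1.0) / t


def expansion_at_one(g, h=1e-4):
    """Estimate G(1), G_1 = g'(1) and G_2 = g''(1) by central differences."""
    g0 = g(1.0)
    g1 = (g(1.0 + h) - g(1.0 - h)) / (2.0 * h)
    g2 = (g(1.0 + h) - 2.0 * g0 + g(1.0 - h)) / h ** 2
    return g0, g1, g2


for name, g in [("eta", g_identity),
                ("I - eta + eta^2", g_quadratic),
                ("(2 eta - I) eta^-1", g_rational)]:
    g0, g1, g2 = expansion_at_one(g)
    print(f"{name}: G(I) ~ {g0:.6f}, G_1 ~ {g1:.6f}, G_2 ~ {g2:.4f}")
```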

Among all subfamilies of (4) satisfying the convergence conditions, the best ones in terms of computational cost are those requiring the fewest inverse operators and linear systems per iteration. Under this criterion, the best subfamily is \(JCST_4(\beta )\), as it requires only two different divided difference operators and two linear systems per iteration.

Besides computational simplicity, another key aspect to be taken into account is stability, that is, the dependence of the convergence on the initial guess, which is analyzed in the next section.

4 Stability analysis

In this section, we use real multidimensional discrete dynamics tools to determine which elements of class \(JCST_4(\beta )\) present better performance in terms of the dependence of their convergence on the initial estimations used. To achieve this aim, let us recall some concepts.

Let us denote by R(x) the vectorial fixed-point rational function associated to an iterative method (or a parametric family of schemes) applied to an n-variable polynomial system \(p(x)=0\), where \(p:\mathbb {R}^n \rightarrow \mathbb {R}^n\). Let us also remark that most of the following definitions are a direct extension of those considered in complex dynamics (see, for example, [16, 17] for more explanations).

The orbit of \(x^{(0)} \in \mathbb {R}^n\) is defined as \( \left\{ x^{(0)},R(x^{(0)}),\ldots ,R^m(x^{(0)}),\ldots \right\} \). A point \(x^{*}\in \mathbb {R}^n\) is a fixed point of R if \(R(x^{*})=x^{*}\), and it is called a strange fixed point when it is not a root of \(p(x)=0\). The stability of the fixed points is characterized by a result of Robinson ([16], page 558), establishing that the character of a k-periodic point \(x^*\) depends on the eigenvalues \(\lambda _1,\lambda _2,\ldots ,\lambda _n\) of the Jacobian matrix \(R'(x^*)\). It is attracting (repelling) if all \(|\lambda _j|<1\) (\(|\lambda _j|>1\)), \(j=1,2,\ldots ,n\), and unstable or saddle if it is not repelling but at least one \(j_0\in \{1,2,\ldots ,n\}\) exists such that \(|\lambda _{j_0}|>1\). In addition, a fixed point is called hyperbolic if all the eigenvalues \(\lambda _j\) of \(R'(x^*)\) satisfy \(|\lambda _j|\ne 1\).
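These criteria can be written as a small classification routine. The Python sketch below is illustrative: it takes the eigenvalues of \(R'(x^*)\) as input (they may be complex, so moduli are used), labels a point whose eigenvalues are all numerically zero as superattracting, and uses an arbitrary tolerance `zero_tol`. For the separated-variable operator studied in this section, assuming each coordinate function depends only on \(x_j\), \(R'(x^*)\) is diagonal and its eigenvalues are simply the partial derivatives \(\partial o_j^4/\partial x_j\) at \(x^*\).

```python
def classify_fixed_point(eigenvalues, zero_tol=1e-12):
    """Classify a fixed point x* from the eigenvalues of R'(x*) (real or complex)."""
    mags = [abs(lam) for lam in eigenvalues]
    if all(m < zero_tol for m in mags):
        return "superattracting"   # all eigenvalues (numerically) zero
    if all(m < 1.0 for m in mags):
        return "attracting"
    if all(m > 1.0 for m in mags):
        return "repelling"
    if any(m > 1.0 for m in mags):
        return "saddle"
    return "non-hyperbolic"        # some |lambda| == 1 and none above 1


print(classify_fixed_point([0.5, 2.0]))  # saddle
```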

In what follows, we denote by \(Op^4(x, \beta )=(o_1^4(x, \beta ),o_2^4(x, \beta ),\ldots ,o_n^4(x, \beta ))^T\) the fixed-point function associated to \(JCST_4(\beta )\) applied to the n-dimensional quadratic polynomial system \(p(x)=0\), where

\[p(x)=\left( p_1(x),p_2(x),\ldots ,p_n(x)\right) ^T,\qquad p_j(x)=x_j^2-1,\quad j=1,2,\ldots ,n.\]

As the polynomial system has separated variables, all the coordinate functions \(o_j^4(x,\beta )\) have the same expression, with the only difference of the index \(j=1,2,\ldots ,n\). These coordinate functions of the multidimensional rational operator can be expressed as:

Some information about the stability analysis of the fixed points of \(Op^4(x,\beta )\) appears in the following results.

Theorem 2

There exist \(2^n\) superattracting fixed points of the rational function \(Op^4(x,\beta )\) associated to the family of iterative methods \(JCST_4(\beta )\), whose components are given by the roots of p(x). On the other hand, the components of the real strange fixed points of this operator are found combining the roots of p(x) and the real roots of the polynomial

depending on \( \beta \ne 0\). By considering the values \(\beta _1 \approx -3.3015\) and \(\beta _2 \approx 3.3015\) we have:

(a) If \(\beta <\beta _1\) or \(\beta >\beta _2,\) there exist four real roots of polynomial q(t), denoted by \(q_j(\beta )\), \(j=1,2,3,4.\)

(a.1) If \(\beta <-3.30338\) or \(\beta > 3.30338,\) the strange fixed points whose components are all among \(q_j(\beta )\), \(j=1,2,3,4\), are repulsive. But if at least one (but not all) of their components is 1 or \(-1,\) the strange fixed point is a saddle point.

(a.2) If \(-3.30338< \beta <\beta _1,\) the strange fixed point \((q_2(\beta ), q_2(\beta ), \ldots , q_2(\beta ))\) and its combinations with \(\pm 1\) are attractive. The remaining strange fixed points in which at least one (but not all) of the components is \(q_2(\beta )\) or \(\pm 1\) are saddle points, and the rest of the combinations are repulsive.

(a.3) If \(\beta _2< \beta <3.30338 \), the strange fixed point \((q_3(\beta ), q_3(\beta ), \ldots , q_3(\beta ))\) and its combinations with \(\pm 1\) are attractive. The remaining strange fixed points in which at least one (but not all) of the components is \(q_3(\beta )\) or \(\pm 1\) are saddle points, and the rest of the combinations are repulsive.

(b) If \(\beta \ne 0\) and \(\beta _1<\beta <\beta _2,\) there are only two real roots \(q_i(\beta )\), \(i=1,2,\) and the strange fixed points \((q_{\sigma _1}(\beta ),q_{\sigma _2}(\beta ),\ldots ,q_{\sigma _n}(\beta ))\), with \(\sigma _i \in \{1,2\}\), are repulsive. But if at least one (but not all) of the components of the strange fixed point is equal to 1 or \(-1\), it is a saddle fixed point.

Proof

To obtain the fixed points of the multidimensional rational function, we solve the equations \(o^4_j(x,\beta )=x_j\), which we simplify as:

The solutions of each equation are \(x_j=\pm 1\) and also the real roots of a polynomial that we will call q(t):

Then, the components of the fixed points will be the above solutions.

The real roots of q(t) depending on the parameter \(\beta \), \( q_j(\beta ),\) are described from the real roots \(\beta _1\) and \(\beta _2\) of the following polynomial r(t) :

whose approximate values are \(\beta _1 \approx -3.3015\) and \(\beta _2 \approx 3.3015.\)

It can be checked that at most four of the eight possible roots of q(t) are real, depending on the value of the parameter \(\beta \). If \(\beta <\beta _1\) or \(\beta >\beta _2,\) there exist four real roots of polynomial q(t), denoted by \(q_j(\beta )\), \(j=1,2,3,4,\) and if \(\beta \ne 0\) and \(\beta _1<\beta <\beta _2,\) there are only two real roots \(q_i(\beta )\), \(i=1,2.\)

Now we consider the fixed points \(x(\beta )=(q_{\sigma _1}(\beta ),q_{\sigma _2 }(\beta ),\ldots , q_{\sigma _n }(\beta ))\), \(\sigma _i \in \{1,2,3,4\}\). Their stability is given by the absolute value of the eigenvalues of the associated Jacobian matrix evaluated at them.

These eigenvalues can be expressed as:

where

Moreover, when we analyze the absolute value of the eigenvalue \(Eig_j(x_{\beta })\), we find out that most combinations among the roots \(q_j(\beta )\) give rise to repulsive strange fixed points or saddle ones. Specifically, in case that at least one of its components is \(\pm 1\) we have the following analysis. If all the other components different from \(\pm 1\) are \(q_{2}(\beta )\) when \(-3.30338<\beta <\beta _1\), or are \(q_{3}(\beta )\) with \(\beta _2<\beta <3.30338\) then they are attractors. In the rest of the cases, we have saddle points.

Finally, we analyze what happens if the roots \(\pm 1\) do not appear as components. We obtain an attracting point if all its components are \(q_{2}(\beta )\) when \(-3.30338<\beta <\beta _1\) or all its components are \(q_{3}(\beta )\) when \(\beta _2<\beta <3.30338\). In the other cases, the fixed points are repulsive or saddle.

\(\square \)

Once the existence of strange fixed points has been studied and their stability has been determined, it is necessary to analyze whether any other attracting behavior is possible, such as attracting periodic orbits or even strange attractors. This can be done through the orbits of the free critical points, if they exist.

4.1 Critical points and bifurcation diagrams

Firstly, we analyze the Jacobian matrix \({Op^4}'(x,\beta )\) of the rational function under analysis and its critical points. Let us recall that, in this context, the critical points are those points x that make all the eigenvalues of the Jacobian matrix null. When a critical point is not a solution of \(p(x)=0\), it is called a free critical point.

Theorem 3

The components of the free critical points of operator \({Op^4}(x,\beta )\), for any value of \(\beta \) except \(\beta = 0\) and \(\beta = \pm \frac{1}{2}\), are the roots \(\pm 1\) (but not all \(\pm 1\)), the real roots, \(z_i(\beta )\), \(i=1,2,3,4\), of polynomial

and also the preimages of the roots \(\pm 1\) by the operator \({Op^4}(x,\beta ),\) that is, \(z_5(\beta )=\displaystyle {\frac{-1-\beta }{\beta }}\) and \(z_6(\beta )=\displaystyle {\frac{-1+\beta }{\beta }}.\)

In the case \(\beta =\frac{1}{2},\) we have that \(z_1(\frac{1}{2})=z_5(\frac{1}{2})=-3\) and \(z_2(\frac{1}{2})=z_6(\frac{1}{2})=-1,\) and when \(\beta =-\frac{1}{2}\) we have \(z_1(-\frac{1}{2})=z_5(-\frac{1}{2})=1\) and \(z_2(-\frac{1}{2})=z_6(-\frac{1}{2})=3.\)

Proof

The proof is straightforward, as critical points are the only ones making null all the eigenvalues of the Jacobian matrix of the operator \({Op^4}(x,\beta )\), that is, those satisfying

where \( D=(2 x_j + (x_j^2-1)\beta )^2 (1 + 3 x_j^2 + 2 x_j (x_j^2-1)\beta )^2 (1 + (x_j + ( x_j^2-1) \beta )(3 x_j + ( x_j^2-1) \beta ))^2.\) \(\square \)

A classical result of Julia and Fatou (see, for example, [18]) establishes that the immediate basin of attraction of any attracting point (fixed or periodic) contains at least one critical point. So, the existence of free critical points allows for attracting behavior other than convergence to the roots, while their absence means that convergence to the roots is the only possible behavior. We can check this performance by plotting the dynamical planes of \(Op^4(x,\beta )\) for different values of \(\beta .\)

In order to make graphical representations, we work with \(n=2\), although the results can be extended to higher dimensions. Figures 1, 2 and 3 have been obtained using the routines appearing in [19] in the following way: a mesh of \(400\times 400\) points was used, the maximum number of iterations was 80, and the stopping criterion is a distance to the fixed point below a tolerance of \(10^{-3}\). In the images, the roots of \(p(x)=0\) are represented by white stars. Each point of the mesh is colored with the color of the root to which it converges; this color is brighter when fewer iterations are needed, and the point is colored black if it reaches the maximum number of iterations without converging to any of the roots.
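The procedure described above can be sketched in Python as follows. Since the coordinate functions of \(Op^4(x,\beta )\) are not reproduced here, the sketch uses Newton's method on \(p_j(x)=x_j^2-1\) as a hypothetical stand-in map; the routines of [19] are not used, and the mesh range \([-5,5]^2\) and all helper names are assumptions. Each mesh point receives the index of the root it reaches (its color) and the number of iterations (its brightness), or \(-1\) for a black point; plotting is left out.

```python
import math

# Roots of p_j(x) = x_j^2 - 1 for n = 2; their index plays the role of the color.
ROOTS = [(1.0, 1.0), (1.0, -1.0), (-1.0, 1.0), (-1.0, -1.0)]


def newton_map(x):
    """Stand-in fixed-point function: Newton's method applied to p_j(x) = x_j^2 - 1."""
    return [(xj + 1.0 / xj) / 2.0 for xj in x]


def basin_index(R, x0, roots, max_iter=80, tol=1e-3):
    """Iterate R from x0; return (root index, iterations), or (-1, ...) for a black point."""
    x = list(x0)
    for it in range(max_iter):
        for idx, r in enumerate(roots):
            if math.dist(x, r) < tol:
                return idx, it
        try:
            x = R(x)
        except ZeroDivisionError:  # e.g. a coordinate equal to 0 under the Newton stand-in
            return -1, it
    return -1, max_iter


def dynamical_plane(R, roots, lo=-5.0, hi=5.0, n_points=400, **kwargs):
    """Classify every point of an n_points x n_points mesh of [lo, hi]^2 (row 0 = top)."""
    step = (hi - lo) / (n_points - 1)
    return [[basin_index(R, (lo + j * step, hi - i * step), roots, **kwargs)
             for j in range(n_points)]
            for i in range(n_points)]


print(basin_index(newton_map, (0.5, -2.0), ROOTS))  # (1, 3): root (1, -1) after 3 iterations
```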

In Figs. 1a–d, all the points show stable behavior with fourth-order convergence. Figures 3a, b are details of Figs. 2a, b, corresponding to the values \(\beta =-3.3024 \in \, ]-3.30338, \beta _1[\) and \(\beta =3.3024 \in \,]\beta _2,3.30338[,\) intervals where the strange fixed points \((q_i(\beta ),q_i(\beta ), \ldots , q_i(\beta )),\) \(i=2,3,\) respectively, and their combinations with \(\pm 1\) are attracting points, as shown in Theorem 2. In them, a narrow band can be observed around the lines \((x,-0.66),\) \((-0.66,y)\) and (x, 0.66), (0.66, y), where the method does not converge to the roots.

As there exists at least one critical point in each basin of attraction (see [18]), the orbits of these critical points give relevant information about the stability of the rational function and, therefore, of the iterative method involved. Figure 4 presents real parameter lines showing these orbits (see Theorem 3) for \(n=2\).

In each picture of Fig. 4, a different free critical point is used as the starting point of each member of the family of iterative schemes. To plot these parameter lines we consider \(-3.5<\beta <3.5\), which includes the two intervals \(]-3.30338, \beta _1[\) and \(]\beta _2,3.30338[\) where attracting strange fixed points exist according to Theorem 2, with a mesh of \(500\times 500\) points; the interval [0, 1] is used to widen the line on which \(\beta \) is represented, allowing a better visualization. The color corresponding to each value of \(\beta \) is red if the corresponding critical point converges to one of the roots of the polynomial system, blue in case of divergence, and black in other cases. This color is also assigned to all the points of [0, 1] with the same value of the parameter. The maximum number of iterations used is 200 and the tolerance for the error estimation when the iterates tend to a fixed point is \(10^{-3}\).

The case of the critical point \((z_1(\beta ), z_1(\beta ))\) is presented in Fig. 4a (for the bidimensional case). Convergence to the roots (red color) is observed for almost all values of \(\beta \), except in two small black regions, \(-3.30338<\beta <-3.3015\) and \(3.3015<\beta <3.30338.\) We also observe darker regions corresponding to slow convergence for some parameters. In Figs. 4b–d, global convergence to the roots of the polynomial system p(x) is observed. When a critical point with different components is used, the performance of the rational function is a combination of these parameter lines. That is, the only way to get global convergence is to combine \(z_i(\beta )\), \(i=2,3,4\), among themselves.

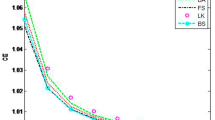

At this stage, we use Feigenbaum diagrams to analyze the bifurcations of the map associated with the family on system p(x), using each of the free critical points of the map as a starting point and observing its behavior for different ranges of \(\beta \). When the rational function is iterated on these critical points, different behaviors can be found after 500 iterations of the method for each value of \(\beta \) in a mesh of 3000 subintervals. The resulting behavior ranges from convergence to the roots to periodic orbits or even chaotic attractors.

Figure 5 corresponds to the bifurcation diagrams in the black area of the parameter line for \((z_1(\beta ),z_1(\beta ))\) with \(-4\le \beta \le 4\). In Fig. 5a it can be observed how there is a general convergence to one of the roots, but in a small interval around \(\beta =-3.3024\) and \(\beta =3.3024\) several period-doubling cascades appear, including not only periodic but also chaotic behavior (blue regions). In them, strange attractors can be found, see Fig. 5b, c respectively (visualized also in the bidimensional case, \(n=2\)).

Finally, Figs. 6a–d represent the phase space for \(\beta =-3.3024\). In them, periodic orbits appear in yellow (the elements of each orbit are linked by yellow lines). In all cases, further attracting orbits exist, with symmetric coordinates. The behavior is similar in the case of \(\beta =3.3024.\)

In general, it can be concluded that the \(JCST_4(\beta )\) iterative methods are stable on this kind of polynomial systems: attracting strange fixed points and unstable behavior appear only in very narrow intervals of \(\beta \). These conclusions are numerically checked in the following section, also for non-polynomial systems.

5 Numerical results

We begin this section by examining the feasibility of the proposed parametric class \(JCST_4(\beta )\) of iterative methods on various academic nonlinear systems. Our objective is to evaluate the performance of some members with favorable qualitative characteristics (\(\beta \in \{ \pm 1,\pm 10,\pm 100 \}\)), as well as of others with stability problems, such as \(\beta \in \{ \pm 3.3024 \}\). This selection is based on the results obtained in the preceding section. Subsequently, we compare the behavior of \(JCST_4(\beta )\) with that of other established methods on the same set of problems. We have limited the number of iterations to 1000, and the stopping criterion is \(\Vert x^{(k)}-x^{(k-1)}\Vert < {10}^{-150}\) or \(\Vert F(x^{(k)})\Vert < 10^{-150}\). To minimize round-off errors, all the computations were executed using Matlab R2022a with variable precision arithmetic with 10000 digits of mantissa.

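In each example, we use the Approximated Computational Order of Convergence (ACOC), introduced in [20] and defined as

\[p\approx ACOC=\frac{\ln \left( \Vert x^{(k+1)}-x^{(k)}\Vert /\Vert x^{(k)}-x^{(k-1)}\Vert \right) }{\ln \left( \Vert x^{(k)}-x^{(k-1)}\Vert /\Vert x^{(k-1)}-x^{(k-2)}\Vert \right) },\quad k=2,3,\ldots ,\]

which numerically estimates the theoretical order of convergence p.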
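As an illustration of the ACOC formula, the Python sketch below uses the standard `decimal` module to emulate high-precision arithmetic (300 significant digits here, an arbitrary choice far below the 10000 digits used in this section). The test problem, Newton's method on \(f(x)=x^2-2\), is a hypothetical scalar example whose theoretical order is \(p=2\); for vector iterates, the absolute values would be replaced by norms.

```python
from decimal import Decimal, getcontext

getcontext().prec = 300  # high-precision arithmetic, in the spirit of variable precision


def acoc(iterates):
    """ACOC estimates from consecutive differences of scalar iterates."""
    d = [abs(b - a) for a, b in zip(iterates, iterates[1:])]  # d[k] = |x_(k+1) - x_k|
    return [(d[k + 1] / d[k]).ln() / (d[k] / d[k - 1]).ln() for k in range(1, len(d) - 1)]


# Hypothetical check: Newton's method on f(x) = x^2 - 2, whose order is p = 2.
x = Decimal(1)
iterates = [x]
for _ in range(8):
    x = x - (x * x - 2) / (2 * x)
    iterates.append(x)

print([round(float(v), 4) for v in acoc(iterates)])  # values approaching 2
```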

We compare the proposed family, for different values of the parameter \(\beta \), with five other known methods (described in the introduction), modified by replacing their Jacobian matrices with divided differences built with power m in the auxiliary point \(x+G(x)\): \(MO_4\) with \(m=2,3,\) \(MSA_5\) and \(MS_4\) with \(m=2\), and finally \(MJ_4\) and \(MM_4\) with \(m=3.\)

Example 1

The first nonlinear system is defined by

In this example, we use the initial estimation \(x^{(0)}=(1, 2)^T\), and the solution reached is \(\bar{x} =(5, 6)^T\) in all the cases.

In Table 1, we present the values of \(\Vert x^{(k)}-x^{(k-1)}\Vert \) and \(\Vert F(x^{(k)})\Vert \), as well as the value of ACOC, and we observe that the \(JCST_4(\beta )\) schemes are generally more stable than the other methods.

Example 2

The next example is:

The initial estimation is \(x^{(0)}= (1, 4)^T\), which leads to different solutions depending on the parameter \(\beta \) used, as shown in Table 2, which contains the same kind of information as in the previous example. We can observe that the new class \(JCST_4(\beta )\) performs similarly in terms of ACOC when compared to other methods. Furthermore, both \(\Vert x^{(k)}-x^{(k-1)}\Vert \) and \(\Vert F(x^{(k)})\Vert \) demonstrate either better or similar accuracy.

Example 3

In the third example, we use a larger number of equations.

Table 3 displays the results obtained with the initial estimation \(x^{(0)}=(0.1, \ldots , 0.1)^T\). The method converges to two different solutions, \((1, \ldots , 1)^T \) and \((-1, \ldots , -1)^T\), depending on the value of the parameter. We observe that, as before, the new family \(JCST_4(\beta )\) gets an ACOC similar to that of the other methods, and that \(\Vert x^{(k)}-x^{(k-1)}\Vert \) and \(\Vert F(x^{(k)})\Vert \) show better or similar performance. Let us remark that the unstable schemes \(JCST_4(\pm 3.3024)\) need more iterations than the rest of the schemes, while the lowest number of iterations corresponds to \(MLM_5\) (\(m=2\)), although in this case the accuracy is the worst.

Example 4

Finally, the last example that we analyze is:

The numerical results are displayed in Table 4, which shows the same information as in the previous examples. The initial estimation is \(x^{(0)}= (0.75, \ldots , 0.75)^T\), reaching the solution \(\bar{x} \approx (1.1141, \ldots ,1.1141 )^T\) in all the cases shown, except for \(\beta =-3.3024\), which converges to \(\bar{x} \approx (-2.773, \ldots , -2.773)^T\).

In Table 4, we can see that the new class \(JCST_4(\beta )\) performs similarly in terms of ACOC when compared to other methods. Furthermore, both \(\Vert x^{(k)}-x^{(k-1)}\Vert \) and \(\Vert F(x^{(k)})\Vert \) demonstrate either better or similar efficiency.

6 Conclusions

In this manuscript, a general class of Jacobian-free iterative methods is designed by using matrix weight functions. Fourth-order convergence is guaranteed by several conditions on the weight function. One subfamily of this class with lower computational cost is selected in order to perform a qualitative analysis. This study has provided key information: unstable behavior appears only for a very narrow set of values of the parameter that defines the class of iterative methods. The rest of the elements are completely stable and converge only to the roots of the polynomial system. Finally, numerical tests have been performed comparing some stable and unstable members of the proposed class with some known vectorial schemes that were made Jacobian-free by means of a known and efficient technique. The obtained results confirm the stable and robust performance of the new class of iterative procedures, as well as the high accuracy of the calculations.

Data Availability

‘Not applicable’.

References

Samanskii, V.: On a modification of the Newton method. Ukr. Math. J. 19, 133–138 (1967)

Steffensen, J.F.: Remarks on iteration. Skand. Aktuarietidskr. 1, 64–72 (1933)

Ortega, J.M., Rheinboldt, W.C.: Iterative Solution of Nonlinear Equations in Several Variables. Academic Press (1970)

Chicharro, F.I., Cordero, A., Gutiérrez, J.M., Torregrosa, J.R.: Complex dynamics of derivative-free methods for nonlinear equations. Appl. Math. Comput. 219, 7023–7035 (2013)

Cordero, A., Soleymani, F., Torregrosa, J.R., Shateyi, S.: Basins of attraction for various Steffensen-type methods. J. Appl. Math. 2014(1), 539707 (2014)

Cordero, A., García-Maimó, J., Torregrosa, J.R., Vassileva, M.P.: Solving nonlinear problems by Ostrowski-Chun type parametric families. J. Math. Chem. 53, 430–449 (2015)

Ostrowski, A.M.: Solutions of Equations and Systems of Equations. Academic Press, New York, London (1966)

Jarratt, P.: Some fourth order multipoint iterative methods for solving equations. Math. Comp. 20, 434–437 (1966)

Sharma, J.R., Arora, H.: On efficient weighted-Newton methods for solving systems of nonlinear equations. Appl. Math. Comput. 222, 497–506 (2013)

Montazeri, H., Soleymani, F., Shateyi, S., Motsa, S.S.: On a new method for computing the numerical solution of systems of nonlinear equations. J. Appl. Math. 2012(1), 751975 (2012)

Sharma, J.R., Arora, H.: Efficient derivative-free numerical methods for solving systems of nonlinear equations. Comput. Appl. Math. 35, 269–284 (2016)

Amiri, A.R., Cordero, A., Darvishi, M.T., Torregrosa, J.R.: Preserving the order of convergence: low-complexity Jacobian-free iterative schemes for solving nonlinear systems. J. Comput. Appl. Math. 337, 87–97 (2018)

Cordero, A., Hueso, J.L., Martínez, E., Torregrosa, J.R.: A modified Newton-Jarratt’s composition. Numer. Algor. 55, 87–99 (2010)

Artidiello, S., Cordero, A., Torregrosa, J.R., Vassileva, M.P.: Design of high-order iterative methods for nonlinear systems by using weight function procedure. Abstr. Appl. Anal. 2015, 289029 (2015)

Behl, R., Cordero, A., Torregrosa, J.R., Bhalla, S.: A new high-order Jacobian-free iterative method with memory for solving nonlinear systems. Mathematics 9, 2122 (2021)

Robinson, R.C.: An Introduction to Dynamical Systems: Continuous and Discrete. American Mathematical Society, Providence, RI, USA (2012)

Cordero, A., Soleymani, F., Torregrosa, J.R.: Dynamical analysis of iterative methods for nonlinear systems or how to deal with the dimension? Appl. Math. Comput. 244, 398–412 (2014)

Devaney, R.L.: An Introduction to Chaotic Dynamical Systems. Advances in Mathematics and Engineering. CRC Press (2003)

Chicharro, F.I., Cordero, A., Torregrosa, J.R.: Drawing dynamical and parameters planes of iterative families and methods. Sci. World J. 2013(1), 780153 (2013)

Cordero, A., Torregrosa, J.R.: Variants of Newton’s method using fifth-order quadrature formulas. Appl. Math. Comput. 190, 686–698 (2007)

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This research was partially supported by PID2021-124577NB-I00 funded by MCIN/AEI/10.13039/501100011033 and by ‘ERDF A way of making Europe’ and ‘European Union NextGenerationEU/PRTR’.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethics approval and consent to participate

‘Not applicable’.

Consent for publication

All the authors agree with the publication of this manuscript, if accepted.

Materials availability

‘Not applicable’.

Code availability

‘Not applicable’.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Cordero, A., Jordán, C., Sanabria-Codesal, E. et al. Solving nonlinear vectorial problems with a stable class of Jacobian-free iterative processes. J. Appl. Math. Comput. (2024). https://doi.org/10.1007/s12190-024-02166-5

DOI: https://doi.org/10.1007/s12190-024-02166-5