Abstract

We consider the numerical evaluation of a class of double integrals with respect to a pair of self-similar measures over a self-similar fractal set (the attractor of an iterated function system), with a weakly singular integrand of logarithmic or algebraic type. In a recent paper (Gibbs et al. Numer. Algorithms 92, 2071–2124 2023), it was shown that when the fractal set is “disjoint” in a certain sense (an example being the Cantor set), the self-similarity of the measures, combined with the homogeneity properties of the integrand, can be exploited to express the singular integral exactly in terms of regular integrals, which can be readily approximated numerically. In this paper, we present a methodology for extending these results to cases where the fractal is non-disjoint but non-overlapping (in the sense that the open set condition holds). Our approach applies to many well-known examples including the Sierpinski triangle, the Vicsek fractal, the Sierpinski carpet, and the Koch snowflake.


1 Introduction

In this paper, we consider the numerical evaluation of integrals of the form

$$\begin{aligned} I_{\Gamma ,\Gamma } := \int _\Gamma \int _\Gamma \Phi _t(x,y)\,{\textrm{d}}\mu '(y)\,{\textrm{d}}\mu (x), \end{aligned}$$ (1)

where (see Sect. 2 for details) \(\Gamma \subset \mathbb {R}^n\) is the attractor of an iterated function system (IFS) of contracting similarities satisfying the open set condition, \(\mu \) and \(\mu '\) are self-similar (also known as “invariant” or “balanced”) measures on \(\Gamma \), and

$$\begin{aligned} \Phi _t(x,y) := {\left\{ \begin{array}{ll} |x-y|^{-t}, & t>0,\\ \log |x-y|, & t=0. \end{array}\right. } \end{aligned}$$ (2)

In the case where \(t>0\) and \(\mu '=\mu \) the integral (1) is known in the fractal analysis literature as the “t-energy”, or “generalised electrostatic energy”, of the measure \(\mu \) (see, e.g. [9, §4.3], [20, §2.5] and [19, Defn 4]). Integrals of the form (1) also arise as the diagonal entries in the system matrix in Galerkin integral equation methods for the solution of PDE boundary value problems in domains with fractal boundaries, for instance in the scattering of acoustic waves by fractal screens [6]. In such contexts, the accurate numerical evaluation of these matrix entries is crucial for the practical implementation of the methods in question.

Numerical quadrature rules for (1) were presented recently in [13] for the case where \(\Gamma \) is disjoint (see Sect. 2 for our definition of disjointness), e.g. a Cantor set in \({\mathbb {R}}\) or a Cantor dust in \({\mathbb {R}}^n\), \(n\ge 2\). The approach of [13] is to decompose \(\Gamma \) into a finite union of self-similar subsets \(\Gamma _1,\ldots ,\Gamma _M\) (each similar to \(\Gamma \)) using the IFS structure and to write \(I_{\Gamma ,\Gamma }\) as a sum of integrals over all possible pairs \(\Gamma _m\times \Gamma _n\).

Using the homogeneity properties of the integrand \(\Phi _t(x,y)\), namely that \(\Phi _t(x,y)=\tilde{\Phi }_t(|x-y|)\), where \(\tilde{\Phi }_t(r):=r^{-t}\) for \(t>0\) and \(\tilde{\Phi }_t(r):=\log {r}\) for \(t=0\), which satisfies, for \(\rho >0\),

$$\begin{aligned} \tilde{\Phi }_t(\rho r) = {\left\{ \begin{array}{ll} \rho ^{-t}\,\tilde{\Phi }_t(r), & t>0,\\ \log \rho + \tilde{\Phi }_t(r), & t=0, \end{array}\right. } \end{aligned}$$ (3)

one can show that the “self-interaction” integrals over \(\Gamma _m\times \Gamma _m\), for \(m=1,\ldots ,M\), can be expressed in terms of the original integral \(I_{\Gamma ,\Gamma }\), which allows \(I_{\Gamma ,\Gamma }\) to be written in terms of the integrals over \(\Gamma _m\times \Gamma _n\), for \(m,n=1,\ldots ,M\), with \(m\ne n\). When \(\Gamma \) is disjoint the latter integrals are regular (i.e. they have smooth integrands), so that one can obtain a representation formula for the singular integral (1) (when it converges) as a linear combination of regular integrals, which can be readily evaluated numerically (see [13, Thm 4.6], which generalises previous results for Cantor sets, e.g. [3]). In the non-disjoint case, however, distinct self-similar subsets of \(\Gamma \) may be non-disjoint, intersecting at discrete points (such as for the Sierpinski triangle, see Sect. 5.1) or at higher dimensional sets (such as for the Sierpinski carpet, see Sect. 5.3, or the Koch snowflake, see Sect. 5.4). This means that some of the integrals over \(\Gamma _m\times \Gamma _n\), for \(m\ne n\), are singular, reducing the accuracy of quadrature rules based on the representation formula of [13, Thm 4.6] (which assumes they are regular). In this paper, we remedy this, showing that in many non-disjoint cases (including those mentioned above), by decomposing \(\Gamma \) further into smaller self-similar subsets it is possible to find a finite number of “fundamental” singular integrals (including \(I_{\Gamma ,\Gamma }\) itself) that satisfy a square system of linear equations that can be solved to express \(I_{\Gamma ,\Gamma }\) purely in terms of regular integrals that are amenable to accurate numerical evaluation.

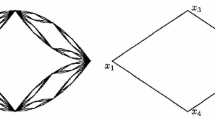

We will describe our methodology in generality in Sect. 4, but for the benefit of the reader seeking intuition, we illustrate here the basic idea for the simple case where \(\Gamma =[0,1]^2\subset {\mathbb {R}}^2\) is the unit square, \(\mu =\mu '\) is the Lebesgue measure on \({\mathbb {R}}^2\) restricted to \(\Gamma \), and \(t=1\). Despite not generally being regarded as a “fractal”, the square \(\Gamma \) can be viewed as the self-similar attractor of an iterated function system comprising four contracting similarities and can accordingly be split into a “level one” decomposition of 4 squares of side length 1/2 or a “level two” decomposition of 16 squares of side length 1/4, as illustrated in Fig. 1. Let \(I_{m,n}\) denote the integral \(\int _{\Gamma _m}\int _{\Gamma _n}|x-y|^{-1}\,{\textrm{d}}y{\textrm{d}}x\) where \(\Gamma _m\) and \(\Gamma _n\) are any of the level one squares in Fig. 1. One can then express (1) as the sum \(I_{\Gamma ,\Gamma }=\sum _{m=1}^4\sum _{n=1}^4 I_{m,n}\) of 16 singular integrals over all the pairs of level one squares, which can be categorised as follows: 4 self interactions (\(I_{1,1}\), \(I_{2,2}\) etc.), 8 edge interactions (\(I_{1,2}\), \(I_{2,1}\), \(I_{2,3}\) etc.) and 4 vertex interactions (\(I_{1,3}\), \(I_{3,1}\), \(I_{2,4}\), \(I_{4,2}\)). By symmetry, each of the integrals in each category is equal to all the others, so that

$$\begin{aligned} I_{\Gamma ,\Gamma } = 4I_{1,1} + 8I_{1,2} + 4I_{1,3}. \end{aligned}$$ (4)

Furthermore, by (3) (with \(t=1\)), combined with a change of variables, the self-interaction integral \(I_{1,1}\) can be expressed in terms of the original integral, as

$$\begin{aligned} I_{1,1} = \frac{1}{8}I_{\Gamma ,\Gamma }. \end{aligned}$$ (5)

Combining this with (4), we obtain the equation \(\frac{1}{2}I_{\Gamma ,\Gamma } = 8 I_{1,2} + 4 I_{1,3}\). To derive two further equations connecting \(I_{\Gamma ,\Gamma }\), \(I_{1,2}\) and \(I_{1,3}\), we move to the level two decomposition, extending our notation in the obvious way, so that, e.g. \(I_{12,23}\) denotes the integral \(\int _{\Gamma _{12}}\int _{\Gamma _{23}}|x-y|^{-1}\,{\textrm{d}}y{\textrm{d}}x\), where \(\Gamma _{12}\) and \(\Gamma _{23}\) are the level two squares labelled “12” and “23” in Fig. 1. Then, the edge interaction integral \(I_{1,2}\) can be written as a sum of 16 integrals over pairs of level two squares, which, after applying symmetry simplifications, gives

$$\begin{aligned} I_{1,2} = 2I_{12,21} + 2I_{12,24} + R_{1,2}, \end{aligned}$$ (6)

where \(R_{1,2} = 4I_{11,21} + 4I_{11,24} + 2I_{11,22} + 2I_{11,23}\) is a sum of regular integrals. Similarly, the vertex interaction integral \(I_{1,3}\) can be written as

$$\begin{aligned} I_{1,3} = I_{13,31} + R_{1,3}, \end{aligned}$$ (7)

where \(R_{1,3} = 4I_{13,32} + 4I_{13,33} + 4I_{12,33} + 2I_{12,34} + I_{11,33}= 4I_{11,24} + 4I_{13,33} + 4I_{12,33} + 2I_{11,23} + I_{11,33}\) is a sum of regular integrals. Furthermore, by (3), combined with a change of variables, we have that

$$\begin{aligned} I_{12,21} = \frac{1}{8}I_{1,2}, \qquad I_{12,24} = \frac{1}{8}I_{1,3}, \qquad I_{13,31} = \frac{1}{8}I_{1,3}, \end{aligned}$$ (8)

and inserting these identities into (6) and (7) gives our two sought-after equations, namely \(\frac{3}{4}I_{1,2}=\frac{1}{4}I_{1,3}+R_{1,2}\) and \(\frac{7}{8}I_{1,3}=R_{1,3}\).

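To summarise, we have shown that the triple \((I_{\Gamma ,\Gamma },I_{1,2},I_{1,3})^T\) satisfies the linear system

$$\begin{aligned} \begin{pmatrix} \frac{1}{2} & -8 & -4\\ 0 & \frac{3}{4} & -\frac{1}{4}\\ 0 & 0 & \frac{7}{8} \end{pmatrix} \begin{pmatrix} I_{\Gamma ,\Gamma }\\ I_{1,2}\\ I_{1,3} \end{pmatrix} = \begin{pmatrix} 0\\ R_{1,2}\\ R_{1,3} \end{pmatrix}, \end{aligned}$$ (9)

and solving the system gives

$$\begin{aligned} I_{\Gamma ,\Gamma } = \frac{64}{3}R_{1,2} + \frac{320}{21}R_{1,3}, \end{aligned}$$ (10)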

which is an exact formula for \(I_{\Gamma ,\Gamma }\) in terms of the seven regular integrals \(I_{11,21}\), \(I_{11,24}\), \(I_{11,22}\), \(I_{11,23}\), \(I_{13,33}\), \(I_{12,33}\) and \(I_{11,33}\), which are all amenable to accurate numerical evaluation, for instance with a product Gauss rule.
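
The worked example above can be checked numerically. The following is a minimal sketch, not the authors' implementation: it approximates the seven regular integrals with a tensor-product Gauss–Legendre rule and solves the three equations \(\frac{1}{2}I_{\Gamma ,\Gamma } = 8 I_{1,2} + 4 I_{1,3}\), \(\frac{3}{4}I_{1,2}=\frac{1}{4}I_{1,3}+R_{1,2}\) and \(\frac{7}{8}I_{1,3}=R_{1,3}\). It assumes the level two squares are labelled counterclockwise from the bottom-left corner (inferred from the regular integrals listed in the text, not read off Fig. 1), so that each regular integral is identified by the offset between the two squares in units of the side length 1/4. The function names `regular_integral` and `unit_square_energy` and the choice of 8 nodes per direction are hypothetical. The reference value \(4\log (1+\sqrt{2})+\frac{4}{3}(1-\sqrt{2})\) used for comparison comes from direct integration over the unit square and is not taken from the source.

```python
import numpy as np

def regular_integral(offset, h=0.25, n=8):
    """Tensor-product Gauss-Legendre estimate of the integral of 1/|x-y| over
    x in [0,h]^2 and y in [0,h]^2 + h*offset (offset given in units of h)."""
    nodes, weights = np.polynomial.legendre.leggauss(n)
    t = 0.5 * h * (nodes + 1.0)      # Gauss nodes mapped from [-1,1] to [0,h]
    w = 0.5 * h * weights            # correspondingly scaled weights
    x1, x2, y1, y2 = np.meshgrid(t, t, t + h * offset[0], t + h * offset[1],
                                 indexing="ij")
    w4 = np.einsum("i,j,k,l->ijkl", w, w, w, w)
    return float(np.sum(w4 / np.hypot(x1 - y1, x2 - y2)))

def unit_square_energy(n=8):
    """Return (I_GammaGamma, I_12, I_13) for t = 1 on the unit square."""
    I = lambda dx, dy: regular_integral((dx, dy), n=n)
    # Offsets assumed: I_{11,21}->(2,0), I_{11,24}->(2,1), I_{11,22}->(3,0),
    # I_{11,23}->(3,1), I_{13,33}->(2,2), I_{12,33}->(2,3), I_{11,33}->(3,3).
    R12 = 4 * I(2, 0) + 4 * I(2, 1) + 2 * I(3, 0) + 2 * I(3, 1)
    R13 = 4 * I(2, 1) + 4 * I(2, 2) + 4 * I(2, 3) + 2 * I(3, 1) + I(3, 3)
    A = np.array([[0.5, -8.0, -4.0],
                  [0.0, 0.75, -0.25],
                  [0.0, 0.0, 0.875]])
    return np.linalg.solve(A, np.array([0.0, R12, R13]))

if __name__ == "__main__":
    approx = unit_square_energy()[0]
    reference = 4 * np.log(1 + np.sqrt(2)) + 4 * (1 - np.sqrt(2)) / 3
    print(approx, reference)
```

Because every integrand is smooth on its pair of well-separated squares, a low-order product rule already gives high accuracy; the singular behaviour is handled entirely by the linear system.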

Our goal in this paper is to derive formulas analogous to (9) and (10) for more general \(\Gamma \), t, \(\mu \) and \(\mu '\). The structure of the paper is as follows. In Sect. 2, we review some preliminaries concerning self-similar fractal sets and measures. In Sect. 3, we introduce the notion of “similarity” for integrals over pairs of subsets of \(\Gamma \) and provide sufficient conditions under which it holds. In Sect. 4, we describe a general algorithm for generating linear systems of the form (9) using our notion of similarity. In Sect. 5, we apply our algorithm to a number of examples including the Sierpinski triangle, the Sierpinski carpet and the Koch snowflake. In Sect. 6, we show how our results can be combined with numerical quadrature to compute accurate numerical approximations to the integral (1) in these and other cases. Finally, as an application, in Sect. 7 we show how our algorithm can be used in the context of the “Hausdorff boundary element method” of [6] to compute acoustic scattering by non-disjoint fractal screens.

Regarding related literature, we note that a three-dimensional version of the approach described above for integration over the unit square was used to compute the gravitational force between two cubes sharing a common face in [28]. More generally, this sort of approach forms the basis of the “hierarchical quadrature” developed for singular integrals over cubical and simplicial domains by Börm and Hackbusch [5] and Meszmer [21, 22]. In the context of integration over fractals, we mention the work of Mantica [17] and Strichartz [25], where self-similarity techniques were used to derive exact formulas for integrals of polynomials over fractals. Our previous paper [13] and the current paper can be viewed as extensions of the results of [17] and [25] to singular integrands.

2 Preliminaries

Throughout the paper, we assume that \(\Gamma \) is the attractor of an iterated function system (IFS) of contracting similarities satisfying the open set condition (OSC), meaning that (see, e.g. [15])

(i) there exists \(M\in {\mathbb {N}}\), \(M\ge 2\), and a collection of maps \(\{s_1,s_2,\ldots ,s_M\}\), such that, for each \(m=1,\ldots ,M\), \(s_m:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\) satisfies

$$\begin{aligned} |s_m(x)-s_m(y)| = \rho _m|x-y|,\quad \text {for }x,y\in {\mathbb {R}}^{n}, \end{aligned}$$

for some \(\rho _m\in (0,1)\). Explicitly, for each \(m=1,\ldots ,M\), we can write

$$\begin{aligned} s_m(x) = \rho _mA_mx + \delta _m, \end{aligned}$$ (11)

for some orthogonal matrix \(A_m\in {\mathbb {R}}^{n\times n}\) and some translation \(\delta _m\in {\mathbb {R}}^n\);

(ii) \(\Gamma \) is the unique non-empty compact set such that

$$\begin{aligned} \Gamma = s(\Gamma ), \end{aligned}$$

where

$$\begin{aligned} s(E) := \bigcup _{m=1}^M s_m(E), \quad E\subset {\mathbb {R}}^{n}; \end{aligned}$$ (12)

(iii) there exists a non-empty bounded open set \(O\subset {\mathbb {R}}^{n}\) such that

$$\begin{aligned} s(O) \subset O \quad \text{ and } \quad s_m(O)\cap s_{m'}(O)=\emptyset , \quad m\ne m'\in \{1,\ldots ,M\}. \end{aligned}$$ (13)

Then, \(\Gamma \) has Hausdorff dimension \(\dim _{\text {H}}(\Gamma )=d\), where \(d\in (0,n]\) is the solution of the equation

$$\begin{aligned} \sum _{m=1}^M \rho _m^d = 1. \end{aligned}$$ (14)

We say that the IFS is disjoint (cf. [1, Defn 7.1]) if

$$\begin{aligned} s_m(\Gamma )\cap s_{m'}(\Gamma )=\emptyset , \quad m\ne m'\in \{1,\ldots ,M\}, \end{aligned}$$

which holds if and only if the open set O in (13) can be taken such that \(\Gamma \subset O\) [6].

We say that the IFS is homogeneous if \(\rho _m=\rho \), \(m=1,\ldots ,M\), for some \(\rho \in (0,1)\). In this case, the solution of (14) is \(d = \log {M}/\log {(1/\rho )}\).
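
For a non-homogeneous IFS the dimension d generally has to be found numerically. The sketch below assumes that (14) is the standard equation \(\sum _{m=1}^M\rho _m^d=1\), which is consistent with the homogeneous solution \(d=\log M/\log (1/\rho )\) stated above, and solves it by bisection. The function name `similarity_dimension` is hypothetical, and the Koch snowflake ratios used in the usage lines (one map with ratio \(1/\sqrt{3}\) and six with ratio 1/3) are the standard choice, assumed here rather than taken from the text.

```python
import math

def similarity_dimension(ratios, tol=1e-12):
    """Solve sum(rho_m**d for rho_m in ratios) = 1 for d by bisection.

    The left-hand side is strictly decreasing in d and equals M >= 2 at d = 0,
    so there is exactly one positive root."""
    f = lambda d: sum(r ** d for r in ratios) - 1.0
    lo, hi = 0.0, 1.0
    while f(hi) > 0.0:          # expand the bracket until the sign changes
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    # Homogeneous case: three maps of ratio 1/2 (Sierpinski triangle).
    print(similarity_dimension([0.5] * 3), math.log(3) / math.log(2))
    # Non-homogeneous case: assumed Koch snowflake ratios.
    print(similarity_dimension([1 / math.sqrt(3)] + [1 / 3] * 6))
```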

To describe the decomposition of \(\Gamma \) into self-similar subsets via the IFS structure, we adopt the standard index notation of [15]. For \(\ell \in {\mathbb {N}}\), let \({\Sigma }_\ell :=\{1,\ldots ,M\}^\ell \), and, for \({\textbf{m}}=(m_1,\ldots ,m_\ell )\in {\Sigma }_\ell \), let

$$\begin{aligned} \Gamma _{\textbf{m}} := s_{\textbf{m}}(\Gamma ), \quad \text {where}\quad s_{\textbf{m}} := s_{m_1}\circ s_{m_2}\circ \cdots \circ s_{m_\ell }. \end{aligned}$$

When working with examples, we shall typically write \(\Gamma _{(m_1,m_2,\ldots ,m_\ell )}\) as simply \(\Gamma _{m_1m_2\ldots m_\ell }\) to make the notation more compact (as we did in Sect. 1).

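For each \({\textbf{m}}=(m_1,\ldots ,m_\ell )\in {\Sigma }_{\ell }\), \(s_{\textbf{m}}\) is a contracting similarity of the form

$$\begin{aligned} s_{\textbf{m}}(x) = \rho _{\textbf{m}}A_{\textbf{m}}x + \delta _{\textbf{m}}, \end{aligned}$$

where (with the convention that an empty product equals 1)

$$\begin{aligned} \rho _{\textbf{m}} := \prod _{i=1}^\ell \rho _{m_i}, \qquad A_{\textbf{m}} := A_{m_1}A_{m_2}\cdots A_{m_\ell }, \qquad \delta _{\textbf{m}} := \sum _{i=1}^\ell \Big (\prod _{j=1}^{i-1}\rho _{m_j}A_{m_j}\Big )\delta _{m_i}. \end{aligned}$$ (16)

The inverse of \(s_{\textbf{m}}\) then has the representation

$$\begin{aligned} s_{\textbf{m}}^{-1}(x) = \rho _{\textbf{m}}^{-1}A_{\textbf{m}}^{-1}(x-\delta _{\textbf{m}}). \end{aligned}$$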

Setting \({\Sigma }_{{\emptyset }}:=\{{\emptyset }\}\), \(\Gamma _{{\emptyset }}:=\Gamma \) and \(s_{{\emptyset }}(x):=x\), we define \({\Sigma }:={\Sigma }_{{\emptyset }} \cup (\cup _{\ell =1}^\infty {\Sigma }_\ell )\).
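
The index notation can be made concrete with a short sketch that builds the parameters of \(s_{\textbf{m}}\) from those of the individual maps by composing (11) repeatedly. This is an illustration under stated assumptions rather than code from the paper: the function names `compose`, `apply` and `apply_inverse` are hypothetical, each map is stored as a tuple `(rho, A, delta)`, and the example IFS `square_ifs` is the four-map description of the unit square from Sect. 1, with translations chosen so that the squares are labelled counterclockwise from the bottom-left corner (an assumed labelling).

```python
import numpy as np

def compose(ifs, word):
    """Return (rho, A, delta) with s_word(x) = rho * A @ x + delta, where
    s_word = s_{m_1} o ... o s_{m_l} and word = (m_1, ..., m_l) is 1-based."""
    n = len(ifs[0][2])
    rho, A, delta = 1.0, np.eye(n), np.zeros(n)   # empty word: identity map
    for m in word:
        rho_m, A_m, delta_m = ifs[m - 1]
        # (current map) o s_m : x -> rho*A*(rho_m*A_m*x + delta_m) + delta
        delta = delta + rho * (A @ delta_m)
        A = A @ A_m
        rho = rho * rho_m
    return rho, A, delta

def apply(sim, x):
    rho, A, delta = sim
    return rho * (A @ x) + delta

def apply_inverse(sim, x):
    rho, A, delta = sim
    return A.T @ (x - delta) / rho                # A is orthogonal, so A^{-1} = A^T

# Assumed IFS for the unit square: four maps of ratio 1/2 without rotation.
square_ifs = [(0.5, np.eye(2), np.array(d))
              for d in [(0.0, 0.0), (0.5, 0.0), (0.5, 0.5), (0.0, 0.5)]]

if __name__ == "__main__":
    # Parameters of s_(1,2); with this labelling the square Gamma_12 has
    # lower-left corner delta and side length rho.
    print(compose(square_ifs, (1, 2)))
```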

Given such a \(\Gamma \), and a collection \((p_1,\ldots ,p_M)\) of positive weights (or “probabilities”) satisfying

$$\begin{aligned} \sum _{m=1}^M p_m = 1, \end{aligned}$$ (18)

there exists [15, Sections 4 & 5] a positive Borel-regular finite measure \(\mu \) supported on \(\Gamma \), unique up to normalisation, called a self-similar [23] (also known as invariant [15] or balanced [2]) measure, such that \(\mu (E)=\sum _{m=1}^M p_m \mu (s_m^{-1}(E))\) for every measurable set \(E\subset \mathbb {R}^n\). For such a measure, by [23, Thm. 2.1], the OSC (13) implies that \(\mu (s_m(\Gamma )\cap s_{m'}(\Gamma ))=0\) for each \(m\ne m'\), and as a consequence, we find that for \({\textbf{m}}=(m_1,\ldots ,m_\ell )\in {\Sigma }\), and any \(\mu \)-measurable function f,

$$\begin{aligned} \int _{\Gamma _{\textbf{m}}} f(x)\,{\textrm{d}}\mu (x) = p_{\textbf{m}}\int _{\Gamma } f(s_{\textbf{m}}(x))\,{\textrm{d}}\mu (x), \end{aligned}$$ (19)

where (again with the convention that an empty product equals 1)

$$\begin{aligned} p_{\textbf{m}} := \prod _{i=1}^\ell p_{m_i}. \end{aligned}$$

In particular,

$$\begin{aligned} \mu (\Gamma _{\textbf{m}}) = p_{\textbf{m}}\,\mu (\Gamma ). \end{aligned}$$

Example 2.1

By choosing \(p_m=\rho _m^d\) for \(m=1,\ldots ,M\) and normalising appropriately, we can obtain \(\mu ={\mathcal {H}}^d|_\Gamma \), where \({\mathcal {H}}^d\) is the d-dimensional Hausdorff measure on \({\mathbb {R}}^n\) (note that in this case, (18) holds by (14)). We recall that \({\mathcal {H}}^n\) is proportional to n-dimensional Lebesgue measure [9, §3.1].

Given an IFS attractor \(\Gamma \) and two self-similar measures \(\mu \) and \(\mu '\), with associated weights \((p_1,\ldots ,p_M)\) and \((p'_1,\ldots ,p'_M)\), we define \(t_*>0\), if it exists, to be the largest positive real number such that the integral \(I_{\Gamma ,\Gamma }\) converges for \(0\le t< t_*\). In [13, Lem. A.4], we showed that if \(\Gamma \) is disjoint, then \(t_*\) exists and is the unique positive real solution of the equation

$$\begin{aligned} \sum _{m=1}^M p_m p'_m \rho _m^{-t} = 1. \end{aligned}$$ (21)

We conjecture that the same holds for non-disjoint \(\Gamma \), under the assumption of the OSC (13). We have not yet been able to prove this conjecture in full generality. However, we shall proceed under the assumption that it holds, noting that it is well known to hold, with \(t_*=d\), in the special case where \(\mu =\mu '={\mathcal {H}}^d|_\Gamma \) for \(d=\dim _{\text {H}}(\Gamma )\) (see, e.g. [13, Corollary A.2]), which is the case of relevance for the integral equation application from [6] that we study in Sect. 7. We comment that when \(\mu =\mu '\) the quantity \(t_*\) is sometimes referred to as the “electrostatic correlation dimension” of \(\mu \) [19, Defn 6]. This and related notions of the “dimension” of a measure \(\mu \) give, amongst other things, lower bounds on the Hausdorff dimension of the support of \(\mu \) (which may be strictly smaller than that of \(\Gamma \)), and important information about the asymptotic behaviour of the Fourier transform of \(\mu \) (see, e.g. [24]).

We note that if the IFS is homogeneous, then (21) can be solved analytically to give

$$\begin{aligned} t_* = \frac{\log \left( \sum _{m=1}^M p_m p'_m\right) }{\log \rho }, \end{aligned}$$

which reduces to \(t_*=d\) in the case \(\mu =\mu '={\mathcal {H}}^d|_\Gamma \) (where \(p_m=p_m'=\rho ^d\)).
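
When the IFS is not homogeneous, \(t_*\) has to be computed numerically. The sketch below assumes that (21) has the form \(\sum _{m=1}^M p_mp'_m\rho _m^{-t}=1\); this form is an assumption here, chosen because it reproduces \(t_*=d\) for \(p_m=p'_m=\rho _m^d\) and gives a closed-form solution in the homogeneous case. The function name `t_star` is hypothetical, and the Koch snowflake ratios are the standard ones (one map of ratio \(1/\sqrt{3}\), six of ratio 1/3), again assumed.

```python
import math

def t_star(ratios, p, p_prime, tol=1e-12):
    """Solve sum(p_m * p'_m * rho_m**(-t)) = 1 for t > 0 by bisection.

    The left-hand side is increasing in t and is below 1 at t = 0 whenever
    both weight vectors are positive and sum to 1 with M >= 2."""
    g = lambda t: sum(a * b * r ** (-t) for a, b, r in zip(p, p_prime, ratios)) - 1.0
    lo, hi = 0.0, 1.0
    while g(hi) < 0.0:          # expand the bracket until the sign changes
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

if __name__ == "__main__":
    # Assumed Koch snowflake ratios with Hausdorff-measure weights p_m = rho_m**2.
    ratios = [1 / math.sqrt(3)] + [1 / 3] * 6
    weights = [r ** 2 for r in ratios]
    print(t_star(ratios, weights, weights))
    # Homogeneous IFS with unequal weights, compared with the closed form.
    p, q = [0.5, 0.3, 0.2], [1 / 3] * 3
    closed_form = math.log(sum(a * b for a, b in zip(p, q))) / math.log(0.5)
    print(t_star([0.5] * 3, p, q), closed_form)
```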

Self-similar measures sometimes possess useful symmetry properties. Let \(T:{\mathbb {R}}^n\rightarrow {\mathbb {R}}^n\) be an isometry, with \(T(x)=A_Tx+\delta _T\) for some orthogonal matrix \(A_T\) and some translation vector \(\delta _T\). We say that \(\mu \) is invariant under T if \(\mu (T(E))=\mu (E)\) for all measurable \(E\subset {\mathbb {R}}^n\). If \(\mu \) is invariant under T, then \(\mu \) is also invariant under \(T^{-1}\), the push-forward measure \(\mu \circ T^{-1}:E\mapsto \mu (T^{-1}(E))\) coincides with \(\mu \), and \(T(\Gamma )=\Gamma \), so that by [4, Thm. 3.6.1], we have that, for all measurable f,

$$\begin{aligned} \int _\Gamma f(T(x))\,{\textrm{d}}\mu (x) = \int _\Gamma f(x)\,{\textrm{d}}\mu (x). \end{aligned}$$

Remark 2.2

Determining a complete list of isometries T under which a given self-similar measure \(\mu \) is invariant, directly from the IFS \(\{s_1,\ldots ,s_M\}\) and weights \(p_1,\ldots ,p_M\), appears to be an open problem. However, for specific examples, it is often straightforward to determine the admissible T, as we demonstrate in Sect. 5 and Sect. 7. In the case \(\mu ={\mathcal {H}}^d|_\Gamma \) (see Sect. 5.1–Sect. 5.4), a necessary and sufficient condition for \(\mu \) to be invariant under T is that \(T(\Gamma )=\Gamma \), because \({\mathcal {H}}^d\) is invariant under isometries of \({\mathbb {R}}^n\). For \(\mu \ne {\mathcal {H}}^d|_\Gamma \), it is still necessary that \(T(\Gamma )=\Gamma \), but no longer sufficient (see Sect. 5.5). Generically, a self-similar measure \(\mu \) may not be invariant under any non-trivial isometries T (see Sect. 7.1).
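
For the Hausdorff measure case, one simple way to confirm a candidate isometry in practice is to check that conjugation by T maps the set of IFS maps to itself: if \(\{T\circ s_m\circ T^{-1}\}_{m=1}^M=\{s_m\}_{m=1}^M\), then \(T(\Gamma )\) is a non-empty compact set fixed by the IFS, so \(T(\Gamma )=\Gamma \) by uniqueness of the attractor. The sketch below implements this test. It is a sufficient check only, it is not a procedure taken from the paper, and the names `conjugate`, `permutes_ifs` and `square_ifs` are hypothetical; the example IFS is the four-map description of the unit square from Sect. 1 with an assumed ordering of the maps.

```python
import numpy as np

def conjugate(T, s):
    """Parameters (rho, A, delta) of T o s o T^{-1}, for an isometry
    T(x) = A_T @ x + d_T and a similarity s(x) = rho * A @ x + delta."""
    A_T, d_T = T
    rho, A, delta = s
    A_c = A_T @ A @ A_T.T
    delta_c = A_T @ delta + d_T - rho * (A_c @ d_T)
    return rho, A_c, delta_c

def permutes_ifs(T, ifs, tol=1e-12):
    """True if every conjugated map T o s_m o T^{-1} is again a map of the IFS."""
    def same(s1, s2):
        return (abs(s1[0] - s2[0]) < tol
                and np.allclose(s1[1], s2[1], atol=tol)
                and np.allclose(s1[2], s2[2], atol=tol))
    return all(any(same(conjugate(T, s), s2) for s2 in ifs) for s in ifs)

# Assumed IFS for the unit square: four maps of ratio 1/2 without rotation.
square_ifs = [(0.5, np.eye(2), np.array(d))
              for d in [(0.0, 0.0), (0.5, 0.0), (0.5, 0.5), (0.0, 0.5)]]

# Rotation by 90 degrees about the centre (1/2, 1/2): T(x) = A_T x + (1, 0).
rotation = (np.array([[0.0, -1.0], [1.0, 0.0]]), np.array([1.0, 0.0]))
# A translation, which does not map the square to itself.
shift = (np.eye(2), np.array([0.1, 0.0]))

if __name__ == "__main__":
    print(permutes_ifs(rotation, square_ifs), permutes_ifs(shift, square_ifs))
```

For measures other than Hausdorff measure the weights would also have to be matched under the induced permutation of the maps, which this sketch does not check.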

3 Similarity

We assume henceforth that \(\Gamma \) is the attractor of an IFS \(\{s_1,\ldots ,s_M\}\) of contracting similarities satisfying the OSC and that \(\mu \) and \(\mu '\) are self-similar measures on \(\Gamma \), with associated weights \((p_1,\ldots ,p_M)\) and \((p'_1,\ldots ,p'_M)\).

As mentioned in Sect. 1, our approach to deriving representation formulas for (1) will be based on decomposing the integral \(I_{\Gamma ,\Gamma }\) into sums of integrals over pairs of subsets of \(\Gamma \). For any two vector indices \({\textbf{m}},{\textbf{m}}'\in {\Sigma }\) (possibly of different lengths), we define the sub-integral

$$\begin{aligned} I_{{\textbf{m}},{\textbf{m}}'} := \int _{\Gamma _{\textbf{m}}}\int _{\Gamma _{{\textbf{m}}'}} \Phi _t(x,y)\,{\textrm{d}}\mu '(y)\,{\textrm{d}}\mu (x). \end{aligned}$$

Note that the original integral \(I_{\Gamma ,\Gamma }={I_{\emptyset ,\emptyset }}\) is included in this definition.

Central to our approach will be identifying when two sub-integrals \(I_{{\textbf{m}},{\textbf{m}}'}\) and \(I_{{\textbf{n}},{\textbf{n}}'}\) are similar, in the sense that

$$\begin{aligned} I_{{\textbf{m}},{\textbf{m}}'} = a\,I_{{\textbf{n}},{\textbf{n}}'} + b, \end{aligned}$$ (24)

for some \(a>0\) and \(b\in {\mathbb {R}}\) that we can determine explicitly in terms of the parameters of the IFS and the measures \(\mu \) and \(\mu '\).

The simplest instance of similarity occurs when \(\mu =\mu '\), in which case the symmetry of the integrand (i.e. the fact that \(\Phi _t(x,y)=\Phi _t(y,x)\)), combined with Fubini’s theorem, provides the following elementary result (which was used in the example in Sect. 1, for which \(I_{1,2}=I_{2,1}\) etc.).

Proposition 3.1

If \(\mu =\mu '\), then \(I_{{\textbf{m}},{\textbf{n}}}=I_{{\textbf{n}},{\textbf{m}}}\) for each \({\textbf{m}},{\textbf{n}}\in {\Sigma }\).

Other instances of similarity may be associated with the IFS structure (as for the derivation of (5) and (8), in the example in Sect. 1) and/or with other geometrical symmetries (as for the observation that \(I_{1,2}=I_{2,3}\) etc., in the example in Sect. 1). The following result provides sufficient conditions under which a given pair of sub-integrals \(I_{{\textbf{m}},{\textbf{m}}'}\) and \(I_{{\textbf{n}},{\textbf{n}}'}\) are similar in this manner. We recall that the notion of a self-similar measure being invariant under an isometry T was defined in Sect. 2 and that the question of determining for which isometries this holds was discussed in Remark 2.2. If no non-trivial isometries can be determined for \(\mu \) or \(\mu '\), one can always take T and \(T'\) to be the identity in the following.

Proposition 3.2

Let \({\textbf{m}},{\textbf{m}'},{\textbf{n}},{\textbf{n}'}\in {\Sigma }\). Let T and \(T'\) be isometries of \({\mathbb {R}}^n\) such that \(\mu \) and \(\mu '\) are invariant under T and \(T'\) respectively. Suppose there exists \(\varrho >0\) such that

Then,

Proof

By (19), and the invariance of \(\mu \) and \(\mu '\) with respect to T and \(T'\), we have that

Condition (25) implies that

which in turn, by (3), implies that

and combining this with (27)–(28) gives the result.\(\square \)

The following result provides an equivalent characterisation of the sufficient condition (25) in terms of the scaling factors, orthogonal matrices and translations associated with the maps \(s_{\textbf{m}},s_{\textbf{m}'},s_{\textbf{n}},s_{\textbf{n}'},T\) and \(T'\) (see (16) for the definition of the notation \(\rho _{\textbf{m}}\), \(A_{\textbf{m}}\), \(\delta _{\textbf{m}}\) etc.). We remark that a necessary and sufficient condition for (30) to hold is that

Proposition 3.3

Let \(Tx=A_Tx+\delta _T\) and \(T'x=A_{T'}x+\delta _{T'}\). Then, condition (25) holds if and only if the following three conditions are satisfied:

Proof

We first note that (25) is equivalent to

It is easy to check that (30)–(32) are sufficient for (33) (and hence for (25)). To see that they are also necessary, suppose that (33) holds. Then, taking \(x=y=0\) in (33) gives (32). Combining this with (33) gives

and taking first \(x=0\) and \(y\ne 0\), then \(x\ne 0\) and \(y= 0\) in this equation gives

from which we deduce (30). Finally, combining (30) with (34) gives

or, equivalently,

Now, we note that if B is an \(n\times n\) matrix and \(|x-By|=|x-y|\) for all \(x,y\in {\mathbb {R}}^n\), then B is the identity matrix. To prove this, suppose that B is not the identity matrix. Then, there exists \(y\ne 0\) such that \(By\ne y\), and setting \(x=By\) gives \(|x-By|=0\) and \(|x-y|\ne 0\), so that \(|x-By|\ne |x-y|\). Hence, (35) implies that

is the identity matrix, which is equivalent to (31).\(\square \)

Remark 3.4

If \(A_{{\textbf{m}}}\), \(A_{{\textbf{m}'}}\), \(A_{{\textbf{n}}}\) and \(A_{{\textbf{n}'}}\) are all equal to the identity matrix, and \(A_{T}=A_{T'}\), then condition (31) is automatically satisfied. If, further, \(\delta _T\) and \(\delta _{T'}\) are both zero, and condition (30) holds, then condition (32) reduces to

4 Algorithm for deriving representation formulas

We present our algorithm for deriving representation formulas for the integral \(I_{\Gamma ,\Gamma }\) in Algorithm 1 below. The output of the algorithm, when it terminates, is a linear system of the form

$$\begin{aligned} A\textbf{x} = B\textbf{r} + \textbf{b}, \end{aligned}$$ (38)

where \(\textbf{x}=[I_{{\textbf{m}}^{}_{s,1},{\textbf{m}}'_{s,1}},\ldots ,I_{{\textbf{m}}^{}_{s,n_s},{\textbf{m}}'_{s,n_s}}]^T\in {\mathbb {R}}^{n_s}\) is a vector of “fundamental” singular sub-integrals (the subscript \(_s\) standing for “singular”), with the original integral \(I_{{\textbf{m}}^{}_{s,1},{\textbf{m}}'_{s,1}}=I_{\Gamma ,\Gamma }\) as its first entry, \(\textbf{r}=[I_{{\textbf{m}}^{}_{r,1},{\textbf{m}}'_{r,1}},\ldots ,I_{{\textbf{m}}^{}_{r,n_r},{\textbf{m}}'_{r,n_r}}]^T\in {\mathbb {R}}^{n_r}\) is a vector of “fundamental” regular sub-integrals (the subscript \(_r\) standing for “regular”), \(A\in {\mathbb {R}}^{n_s\times n_s}\) and \(B\in {\mathbb {R}}^{n_s\times n_r}\) are matrices, and \(\textbf{b}\in {\mathbb {R}}^{n_s}\) is a vector of logarithmic terms present only in the case \(t=0\). The algorithm is based on repeated subdivision of the integration domain and the identification of similarities between the resulting sub-integrals (in the sense of (24)), and the word “fundamental” refers to a sub-integral which, when encountered in the subdivision algorithm, is not found to be similar to any other sub-integral previously encountered. Whether the algorithm terminates, and the resulting lengths \(n_s\) and \(n_r\) of the vectors \(\textbf{x}\) and \(\textbf{r}\), depends on the measures \(\mu \) and \(\mu '\), as we shall demonstrate in Sect. 5.

If the algorithm terminates, one can obtain numerical approximations to the values of the integrals in \(\textbf{x}\), including the original integral \(I_{\Gamma ,\Gamma }\), by applying a suitable quadrature rule to the regular integrals in \(\textbf{r}\), solving the system (38) and extracting the relevant entry from the solution vector \(\textbf{x}\). We discuss this in more detail in Sect. 6.

Remark 4.1

If \(\Gamma \) is disjoint, then the algorithm terminates with \(n_s=1\) and recovers the result of [13, Thm 4.6].

Remark 4.2

Our algorithm (in line 9) requires us to specify a subdivision strategy. With reference to the notation in Algorithm 1, we have considered two such strategies:

- Strategy 1: Always subdivide both \(\Gamma _{{\textbf{m}}_{s,n}}\) and \(\Gamma _{{\textbf{m}}'_{s,n}}\), i.e. take (with \(({\emptyset },m)\) interpreted as (m))

$$\begin{aligned} \mathcal {I}_n = \{({\textbf{m}}_{s,n},m)\}_{m=1}^M, \quad \mathcal {I}'_{n} = \{({\textbf{m}}'_{s,n},m)\}_{m=1}^M. \end{aligned}$$ (39)

- Strategy 2: Subdivide only the larger of \(\Gamma _{{\textbf{m}}_{s,n}}\) and \(\Gamma _{{\textbf{m}}'_{s,n}}\), i.e. take

$$\begin{aligned} {\left\{ \begin{array}{ll} \mathcal {I}_n = \{({\textbf{m}}_{s,n},m)\}_{m=1}^M \text { and } \mathcal {I}'_{n} = \{{\textbf{m}}'_{s,n}\}, &{} \text {if } {{\,\textrm{diam}\,}}(\Gamma _{{\textbf{m}}_{s,n}})>{{\,\textrm{diam}\,}}(\Gamma _{{\textbf{m}}'_{s,n}}),\\ \mathcal {I}_n = \{{\textbf{m}}_{s,n}\} \text { and } \mathcal {I}'_{n} = \{({\textbf{m}}'_{s,n},m)\}_{m=1}^M, &{} \text {if } {{\,\textrm{diam}\,}}(\Gamma _{{\textbf{m}}_{s,n}})<{{\,\textrm{diam}\,}}(\Gamma _{{\textbf{m}}'_{s,n}}),\\ \mathcal {I}_n = \{({\textbf{m}}_{s,n},m)\}_{m=1}^M\text { and } \mathcal {I}'_{n} = \{({\textbf{m}}'_{s,n},m)\}_{m=1}^M, &{} \text {if } {{\,\textrm{diam}\,}}(\Gamma _{{\textbf{m}}_{s,n}})={{\,\textrm{diam}\,}}(\Gamma _{{\textbf{m}}'_{s,n}}). \end{array}\right. } \end{aligned}$$ (40)

If the IFS is homogeneous, then the two subdivision strategies coincide, since using Strategy 2, we never encounter pairs of subsets with different diameters.
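
The two strategies can be sketched in a few lines. The code below is an illustration under stated assumptions, not the authors' implementation: indices are tuples of 1-based integers, the comparison of diameters uses \({{\,\textrm{diam}\,}}(\Gamma _{\textbf{m}})=\rho _{\textbf{m}}{{\,\textrm{diam}\,}}(\Gamma )\) so that only the products of contraction ratios are needed, and equality of diameters is tested with a relative tolerance because the products are computed in floating point. The function names are hypothetical, and the Koch snowflake ratios in the usage lines assume that \(s_1\) is the map with ratio \(1/\sqrt{3}\) and \(s_2,\ldots ,s_7\) have ratio 1/3.

```python
import math

def children(word, M):
    """All indices obtained by appending one symbol m = 1, ..., M to word."""
    return [word + (m,) for m in range(1, M + 1)]

def contraction(word, ratios):
    """Product of the contraction ratios along word (1 for the empty word)."""
    rho = 1.0
    for m in word:
        rho *= ratios[m - 1]
    return rho

def index_sets(word, word_p, ratios, strategy, rel_tol=1e-12):
    """Index sets (I_n, I'_n) for subdividing the pair (word, word_p)."""
    M = len(ratios)
    if strategy == 1:                       # always subdivide both subsets
        return children(word, M), children(word_p, M)
    r, r_p = contraction(word, ratios), contraction(word_p, ratios)
    if math.isclose(r, r_p, rel_tol=rel_tol):
        return children(word, M), children(word_p, M)
    if r > r_p:                             # subdivide only the larger subset
        return children(word, M), [word_p]
    return [word], children(word_p, M)

if __name__ == "__main__":
    koch = [1 / math.sqrt(3)] + [1 / 3] * 6     # assumed ordering of the maps
    print(index_sets((1,), (2,), koch, strategy=2))
```

The tolerance matters in practice: for the assumed Koch ratios, the product for the index (1,1) is \((1/\sqrt{3})^2\), which differs from 1/3 by rounding error, and an exact comparison would misclassify the pair as having different diameters.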

Algorithm 1. Algorithm for deriving representation formulas for the integral (1).

Remark 4.3

Our algorithm (in line 11) requires the user to be able to determine whether an integral \(I_{{\textbf{n}},{\textbf{n}'}}\) is singular or regular, i.e. whether \(\Gamma _{\textbf{n}}\) intersects \({\Gamma _{\textbf{n}'}}\) non-trivially or not. Deriving a criterion for this based solely on the indices \({\textbf{n}}\) and \({\textbf{n}'}\) and the IFS parameters appears to be an open problem. However, for the examples considered in Sect. 5, we were able to determine this by inspection on a case-by-case basis. We emphasize that one does not need to specify the type of singularity, i.e. the dimension of \(\Gamma _{\textbf{n}}\cap {\Gamma _{\textbf{n}'}}\), merely whether \(\Gamma _{\textbf{n}}\cap {\Gamma _{\textbf{n}'}}\) is empty or not.

Remark 4.4

Our algorithm (in lines 12 and 20) requires a way of checking for “similarity” of pairs of subintegrals. For this, we use Propositions 3.1–3.3, combined with a user-provided list of isometries T and \(T'\) under which \(\mu \) and \(\mu '\) are respectively invariant. Then, to verify (25) in Proposition 3.2, we use Proposition 3.3: we first check (30), then (31), then (32). As noted in Remark 3.4, in the special case where \(A_{{\textbf{m}}}\), \(A_{{\textbf{m}'}}\), \(A_{{\textbf{n}}}\) and \(A_{{\textbf{n}'}}\) are all equal to the identity matrix, \(A_{T}=A_{T'}\), and \(\delta _T\) and \(\delta _{T'}\) are both zero, it is sufficient to check (30) and then (37).

The question of how to determine the permitted isometries T and \(T'\) was discussed in Remark 2.2. If the user is not able to provide the full list of isometries for \(\mu \) and \(\mu '\), it may be that the algorithm still terminates, but does so with a larger number \(n_s\) of fundamental singular integrals than would be obtained with the full list of isometries. However, in Sect. 5.5, we provide an example where failing to specify a non-trivial isometry would lead to non-termination of the algorithm.

Remark 4.5

The matrix A (when the algorithm terminates) depends not only on the IFS \(\{s_1,\ldots ,s_M\}\) and the weights \(p_1,\ldots ,p_M\), but also on the subdivision strategy used in line 9 of the algorithm. For both subdivision strategies described in Remark 4.2, the first column of A is guaranteed to be of the form \((\alpha ,0,\ldots ,0)^T\) for some \(\alpha >0\), because \(\mu (\Gamma _m\cap \Gamma _{m'})=0\) for \(m\ne m'\). For all the examples in Sect. 5.1–Sect. 5.4, the matrix A is upper triangular, with diagonal entries that are all non-zero when \(t<t_*\), so that A is invertible when \(t<t_*\). However, the upper-triangularity of A is not guaranteed in general, as Sect. 5.5 illustrates (see in particular (47)), and proving that A is invertible whenever the algorithm terminates remains an open problem.

5 Examples

We now report the results of applying Algorithm 1 to some standard examples.

5.1 Sierpinski triangle and Hausdorff measure

We first consider the case where \(\Gamma \subset {\mathbb {R}}^2\) is the Sierpinski triangle, the attractor of the homogeneous IFS with \(M=3\) and

for which \(d=\dim _{\text {H}}(\Gamma )=\log {3}/\log 2\approx 1.59\). The first two levels of subdivision of \(\Gamma \) are illustrated in Fig. 2. We shall assume for simplicity that \(\mu =\mu '={\mathcal {H}}^d|_\Gamma \), so that \(t_*=d\). Since we are working with Hausdorff measure, as discussed in Remark 2.2, the isometries T under which \(\mu \) is invariant are precisely those for which \(T(\Gamma )=\Gamma \), which in this case are the elements of the dihedral group \(D_3\) corresponding to the symmetries of the equilateral triangle. The two subdivision strategies (39) and (40) coincide, since the IFS is homogeneous, and Algorithm 1 terminates after finding two fundamental singular sub-integrals, with \(\textbf{x}=(I_{\Gamma ,\Gamma },I_{1,2})^T\). The integral \(I_{1,2}\) captures the interaction between neighbouring subsets of \(\Gamma \) of the same size, intersecting at a point. The linear system (38) satisfied by these unknowns is as follows:

where

and

is the sum of the regular integrals arising from the decomposition of \(I_{1,2}\) into level 2 subsets. In the notation of Sect. 4, we have

and \(\textbf{r} = (I_{11,21}, I_{11,22}, I_{11,23}, I_{12,23})^T\). Then

and, solving the system, we obtain the representation formulas

5.2 Vicsek fractal and Hausdorff measure

Next, we consider the case where \(\Gamma \subset {\mathbb {R}}^2\) is the Vicsek fractal (shown in Fig. 3), the attractor of the homogeneous IFS with \(M=5\) and

with \(d=\dim _{\text {H}}(\Gamma )=\log 5/\log 3\approx 1.47\). Again, we assume that \(\mu =\mu '={\mathcal {H}}^d|_\Gamma \), so that \(t_*=d\). In this case, the isometries T under which \(\mu \) is invariant are the elements of the dihedral group \(D_4\) corresponding to the symmetries of the square. The first two levels of subdivision of \(\Gamma \) are illustrated in Fig. 3, from which it is clear that the situation is similar to that for the Sierpinski triangle of Sect. 5.1, as the only new singularities at level one are point singularities, which are similar (in the sense of (25)) to those arising at level two. Again, our two subdivision strategies coincide because the IFS is homogeneous, and Algorithm 1 terminates after finding two fundamental singular sub-integrals, with \(\textbf{x}=\left( I_{\Gamma ,\Gamma },I_{1,5}\right) ^T\). For brevity, we do not present the full linear system satisfied by these unknowns, but rather just report the matrix A of (38), which is

where

5.3 Sierpinski carpet and Hausdorff measure

Next, we consider the case where \(\Gamma \subset {\mathbb {R}}^2\) is the Sierpinski carpet, the attractor of the homogeneous IFS with \(M=8\) and

for which \(d=\dim _{\text {H}}(\Gamma )=\log {8}/\log 3\approx 1.89\). The first two levels of subdivision of \(\Gamma \) are illustrated in Fig. 4. We again assume that \(\mu =\mu '={\mathcal {H}}^d|_\Gamma \), so that \(t_*=d\). As for the previous example, the isometries T under which \(\mu \) is invariant are the elements of the dihedral group \(D_4\). Again, the two subdivision strategies (39) and (40) coincide, since the IFS is homogeneous, and now Algorithm 1 terminates after finding three fundamental singular sub-integrals, with \(\textbf{x} = (I_{\Gamma ,\Gamma }, I_{1,2}, I_{2,4})^T\). The integrals \(I_{1,2}\) and \(I_{2,4}\) capture the interaction between neighbouring subsets of the same size, intersecting along a line segment and at a point, respectively (Fig. 4). In this case, the matrix A of (38) is as follows:

where, for \(t\in [0,d)\),

5.4 Koch snowflake and Lebesgue measure

Next, we consider the case where \(\Gamma \subset {\mathbb {R}}^2\) is the Koch snowflake, the attractor of the non-homogeneous IFS with \(M=7\) and

for which \(d=\dim _{\text {H}}(\Gamma )=2\). The first three levels of subdivision of \(\Gamma \) are illustrated in Fig. 5. We assume that \(\mu \) and \(\mu '\) are both equal to the Lebesgue measure on \({\mathbb {R}}^2\), restricted to \(\Gamma \), so, again, \(t_*=d\). (As mentioned in Example 2.1, \(\mu \) is proportional to \({\mathcal {H}}^2|_\Gamma \).) The isometries T under which \(\mu \) is invariant are the elements of the dihedral group \(D_6\) corresponding to the symmetries of the hexagon. In this case, both subdivision strategies produce a terminating algorithm, but with different results.

With Strategy 1 (subdividing both subsets), Algorithm 1 terminates after finding four fundamental singular sub-integrals, with \(\textbf{x} = (I_{\Gamma ,\Gamma }, I_{1,2}, I_{2,3}, I_{11,25})^T\). The integral \(I_{1,2}\) captures the interaction between neighbouring subsets of \(\Gamma \), intersecting along a Koch curve. The integrals \(I_{2,3}\) and \(I_{11,25}\) both capture point interactions, but of different types: in \(I_{2,3}\), the two interacting subsets are the same size, while in \(I_{11,25}\), one is three times the diameter of the other. \(I_{11,25}\) arises as a new fundamental sub-integral in the subdivision of \(I_{1,2}\), but in the subdivision of \(I_{11,25}\), one obtains just one singular sub-integral, \(I_{117,255}\), which is similar to \(I_{11,25}\), so the algorithm terminates.

For brevity, we do not report the resulting linear system satisfied by \((I_{\Gamma ,\Gamma }, I_{1,2}, I_{2,3},\)\(I_{11,25})^T\), but instead present the simpler result obtained with Strategy 2 (subdividing the subset with the largest diameter), for which Algorithm 1 terminates after finding only three fundamental singular sub-integrals, with \(\textbf{x} = (I_{\Gamma ,\Gamma }, I_{1,2}, I_{2,3})^T\). With this strategy, in the subdivision of \(I_{1,2}\), we subdivide only \(\Gamma _1\), leaving \(\Gamma _2\) intact, obtaining the edge interaction sub-integrals \(I_{12,2}\) and \(I_{17,2}\), both of which are similar to \(I_{1,2}\), and the point interaction sub-integral \(I_{11,2}\), which is similar to \(I_{2,3}\). The resulting linear system is

where

and

are linear combinations of regular integrals.

Hence,

and solving the system gives

and

5.5 \(\Gamma =[0,1]\), including non-terminating examples

We now consider a class of simple one-dimensional examples that illustrates the dependence of the output of Algorithm 1 on the choice of measures \(\mu \) and \(\mu '\), and the fact that it does not always terminate.

Given \(\rho \in (0,1/2]\), consider the IFS \(\{s_1,s_2\}\) in \({\mathbb {R}}\) with \(s_1(x)=\rho x\) and \(s_2(x)=(1-\rho )x+\rho \), for which \(\Gamma =[0,1]\). The first four levels of subdivision of \(\Gamma \) are illustrated in Fig. 6 in the case \(\rho \in (0,1/2)\). Let \(\mu \) be the invariant measure on \(\Gamma \) for some weights \(p_1,p_2\in (0,1)\) with \(p_1+p_2=1\), and let \(\mu '=\mu \), so that \(I_{{\textbf{m}},{\textbf{m}'}}=I_{{\textbf{m}'},{\textbf{m}}}\) for all \({\textbf{m}},{\textbf{m}'}\in {\Sigma }\) by Proposition 3.1. For definiteness, we assume the normalisation \(\mu (\Gamma )=1\). If \(p_1=\rho \), then \(p_2=1-\rho \) and \(\mu \) is Lebesgue measure restricted to [0, 1] (recall Example 2.1), which is invariant under the operation \(T_\textrm{ref}\) of reflection with respect to the point \(x=1/2\). If \(p_1\ne \rho \), then \(\mu \) is not Lebesgue measure, and the only isometry T under which \(\mu \) is invariant is the identity.

If \(\rho =1/2\), then the IFS is homogeneous, so Strategy 1 and Strategy 2 coincide, and Algorithm 1 always terminates, for any \(\mu \), finding just 2 fundamental singular sub-integrals, \(I_{\Gamma ,\Gamma }\) and \(I_{1,2}\).

If \(\rho \in (0,1/2)\), then the IFS is inhomogeneous, so Strategy 1 and Strategy 2 differ, and the outcome of Algorithm 1 depends on the choice of strategy, and on the measure \(\mu \). We consider four cases:

- Case 1: Lebesgue measure, Strategy 1: Algorithm 1 terminates, finding 3 fundamental singular sub-integrals, \(I_{\Gamma ,\Gamma }\), \(I_{1,2}\) and \(I_{12,21}\) (see footnote 2). (\(I_{122,211}\) is similar to \(I_{2,1}=I_{1,2}\) in this case, taking \(T=T'=T_\textrm{ref}\). This is an example where, if the non-trivial isometry \(T_\textrm{ref}\) had not been identified, the algorithm would not have terminated (cf. Case 3 below).)

- Case 2: Lebesgue measure, Strategy 2: If \(\rho =\rho _*:=\frac{3-\sqrt{5}}{2}\approx 0.38\), the unique positive solution of \(\rho =(1-\rho )^2\), Algorithm 1 terminates with two fundamental singular sub-integrals \(I_{\Gamma ,\Gamma }\) and \(I_{1,2}\). (In this case, \(I_{1,21}\) is similar to \(I_{2,1}=I_{1,2}\), again taking \(T=T'=T_\textrm{ref}\).) If \(\rho \in (0,\rho _*)\cup (\rho _*,1/2)\), then Algorithm 1 terminates with four fundamental singular sub-integrals \(I_{\Gamma ,\Gamma }\), \(I_{1,2}\), \(I_{1,21}\) and \(I_{12,21}\). (The subdivision of \(I_{12,21}\) leads to \(I_{122,211}\), which is similar to \(I_{2,1}=I_{1,2}\), as in Case 1.)

- Case 3: Non-Lebesgue measure, Strategy 1: In this case, Algorithm 1 does not terminate, since we encounter an infinite sequence of fundamental singular sub-integrals

$$\begin{aligned} I_{\Gamma ,\Gamma }, I_{1,2}, I_{12,21}, I_{122,211}, I_{1222,2111},I_{12222,21111}, \ldots , \end{aligned}$$(48)

none of which is found to be similar to any other. To see this, note that for a sub-integral \(I_{{\textbf{m}},{\textbf{m}'}}\) in this sequence, with \({\textbf{m}}=(1,2,\ldots ,2)\) (k 2’s) and \({\textbf{m}'}=(2,1,\ldots ,1)\) (k 1’s), we have

$$\begin{aligned} \frac{\rho _{\textbf{m}}}{\rho _{\textbf{m}'}}=\frac{\rho (1-\rho )^k}{\rho ^k(1-\rho )} = \left( \frac{1-\rho }{\rho }\right) ^{k-1}=:R_{k}. \end{aligned}$$(49)

Then, since \(0<\rho<1-\rho <1\), the sequence \((R_k)_{k=0}^\infty \) is monotonically increasing, with \(R_k\ge 1\) for \(k\ge 1\). This implies that (29) (and hence (30)) is not satisfied by any pair of elements of the sequence (48), except for \(I_{1,2}\) and \(I_{122,211}\), for which \(R_0=1/R_2\). However, since \(\mu \) is not Lebesgue measure, \(I_{1,2}\) and \(I_{122,211}\) are not found to be similar, because \(\mu \) is not invariant under \(T_\textrm{ref}\) (so one cannot use it in Proposition 3.2), and (32) fails with \(T=T'\) the identity.

- Case 4: Non-Lebesgue measure, Strategy 2: In this case, Algorithm 1 can only terminate if \(\rho \) is a solution of a polynomial equation

$$\begin{aligned} (1-\rho )^{j}\rho ^k=1, \quad \text { or } \quad (1-\rho )^{j}=\rho ^k, \end{aligned}$$(50)

for some \(j,k\in {\mathbb {N}}_0\) with either \(j>0\) or \(k>0\). In particular, Algorithm 1 does not terminate if \(\rho \) is transcendental. To see that (50) is necessary for termination of the algorithm, we note that, in the subdivision of \(I_{1,2}\), Strategy 2 will produce pairs of subsets of \(\Gamma \) (intervals) that intersect at the point \(x=\rho \), and the lengths of the intervals in each pair will be in the ratio \(\rho (1-\rho )^j:\rho ^k(1-\rho )\) for some \(j,k\in {\mathbb {N}}_0\). For the sub-integrals associated with any two distinct pairs of such intervals to be found to be similar, the ratios of their lengths must coincide (by (29)), implying that

$$\begin{aligned} \frac{\rho (1-\rho )^{j_1}}{\rho ^{k_1}(1-\rho )}=\frac{\rho (1-\rho )^{j_2}}{\rho ^{k_2}(1-\rho )}, \qquad \text {or, equivalently, }\,\,\,\,(1-\rho )^{j_1-j_2}\rho ^{{k_2-k_1}}=1, \end{aligned}$$

for some \(j_1,k_1,j_2,k_2\in {\mathbb {N}}_0\) with either \(j_1\ne j_2\) or \(k_1\ne k_2\), giving (50). If \(\rho \) is a solution of a polynomial equation of the form (50), then Algorithm 1 may terminate, but the number of fundamental singular sub-integrals encountered will depend on \(\rho \). For instance, if \(\rho =\rho _*\), the algorithm terminates with four fundamental singular sub-integrals \(I_{\Gamma ,\Gamma }\), \(I_{1,2}\), \(I_{1,21}\) and \(I_{12,21}\) (since in this case \(I_{122,211}\) is similar to \(I_{1,21}\)). If \(\rho =\rho _{**}\approx 0.43\), defined to be the unique positive solution of \(\rho ^2=(1-\rho )^3\), or \(\rho =\rho _{***}\approx 0.32\), defined to be the unique positive solution of \(\rho =(1-\rho )^3\), the algorithm terminates with five fundamental singular sub-integrals \(I_{\Gamma ,\Gamma }\), \(I_{1,2}\), \(I_{1,21}\), \(I_{12,21}\) and \(I_{122,211}\) (since in these cases \(I_{1222,211}\) is similar to \(I_{1,2}\) and \(I_{1,21}\) respectively). For \(\rho \in (0,1/2)\setminus \{\rho _*,\rho _{**},\rho _{***}\}\), if the algorithm does terminate, it will find at least six fundamental singular sub-integrals, since then none of \(I_{\Gamma ,\Gamma }\), \(I_{1,2}\), \(I_{1,21}\), \(I_{12,21}\), \(I_{122,211}\) and \(I_{1222,211}\) are found to be similar to each other.
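The special contraction factors quoted in Cases 2 and 4, and the length ratios \(R_k\) of (49), are easy to check numerically. The following is a minimal sketch, not part of the paper's software; the helper names `bisect_root` and `length_ratio` are illustrative, and the bracketing interval \((0,1/2)\) is taken from the range of \(\rho \) considered in this section.

```python
import math


def bisect_root(g, a, b, tol=1e-14):
    """Root of g in [a, b] by bisection, assuming g(a) and g(b) differ in sign."""
    ga = g(a)
    while b - a > tol:
        c = 0.5 * (a + b)
        gc = g(c)
        if (ga < 0) == (gc < 0):
            a, ga = c, gc  # root lies in [c, b]
        else:
            b = c          # root lies in [a, c]
    return 0.5 * (a + b)


# rho_*   solves rho   = (1 - rho)^2
# rho_**  solves rho^2 = (1 - rho)^3
# rho_*** solves rho   = (1 - rho)^3
rho_star = bisect_root(lambda r: r - (1 - r) ** 2, 0.0, 0.5)
rho_2star = bisect_root(lambda r: r ** 2 - (1 - r) ** 3, 0.0, 0.5)
rho_3star = bisect_root(lambda r: r - (1 - r) ** 3, 0.0, 0.5)


def length_ratio(rho, k):
    """R_k = ((1 - rho) / rho)^(k - 1), the ratio appearing in (49)."""
    return ((1 - rho) / rho) ** (k - 1)
```

For any \(\rho \in (0,1/2)\), `length_ratio(rho, 0) * length_ratio(rho, 2)` equals 1 up to rounding, which is the reciprocal relationship between \(I_{1,2}\) and \(I_{122,211}\) used in Cases 1 and 3.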

6 Numerical quadrature and error estimates

Once Algorithm 1 has been applied, and the system (38) has been solved, producing a representation formula for the singular integral \(I_{\Gamma ,\Gamma }\) in terms of regular sub-integrals, a numerical approximation of \(I_{\Gamma ,\Gamma }\) can be obtained by applying a suitable quadrature rule to the regular sub-integrals. Let us call the resulting approximation \(Q_{\Gamma ,\Gamma }\). We discuss some possible choices of quadrature rule below. But first, we make a general comment on the error analysis of such approximations. Suppose that the quadrature rule chosen can compute each of the regular sub-integrals in the vector \(\textbf{r}\) with absolute error \(\le E\) for some \(E\ge 0\). Then, the absolute quadrature error in computing \(I_{\Gamma ,\Gamma }\) using the representation formula (38) can be bounded by

$$\begin{aligned} \left| I_{\Gamma ,\Gamma }-Q_{\Gamma ,\Gamma }\right| \le \Vert A^{-1}\Vert _\infty \Vert B\Vert _\infty E. \end{aligned}$$(51)

The constant \(\Vert A^{-1}\Vert _\infty \Vert B\Vert _\infty \) depends on the problem at hand and is expected to blow up as \(t\rightarrow t_*\). Indeed, for the examples in Sect. 5.1-Sect. 5.4 (for which \(t_*=d=\dim _{\text {H}}(\Gamma )\)) one can check that

for some constant \(C>0\), independent of t. This follows from the fact that in all these examples, the constant \(\sigma _1=O(d-t)\) as \(t\rightarrow d\), while \(\sigma _2\), \(\sigma _3\) etc. remain bounded away from zero in this limit.
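The growth of \(\Vert A^{-1}\Vert _\infty \) near the critical exponent can be observed on the one explicit matrix available in this text, namely the matrix (47) reported in the Notes for Case 1 of Sect. 5.5 (Lebesgue measure on \([0,1]\), for which \(t_*=1\)). The sketch below is illustrative only: the function names are hypothetical, the matrix B is not reproduced here so only \(A^{-1}\) is examined, and the \(3\times 3\) inverse is formed by the adjugate formula to keep the example free of dependencies.

```python
def matrix_A(rho, t):
    """Matrix (47), with omega_1 = rho^(2-t) and omega_2 = (1-rho)^(2-t)."""
    w1 = rho ** (2 - t)
    w2 = (1 - rho) ** (2 - t)
    return [[1 - w1 - w2, -2.0, 0.0],
            [0.0, 1.0, -1.0],
            [0.0, -w1 * w2, 1.0]]


def det3(A):
    (a, b, c), (d, e, f), (g, h, i) = A
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def inverse3(A):
    """Inverse of a 3x3 matrix via the adjugate (fine for this tiny system)."""
    (a, b, c), (d, e, f), (g, h, i) = A
    det = det3(A)
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]


def norm_inf(A):
    """Induced infinity norm: maximum absolute row sum."""
    return max(sum(abs(x) for x in row) for row in A)


def norm_inf_inverse(rho, t):
    return norm_inf(inverse3(matrix_A(rho, t)))
```

Evaluating `norm_inf_inverse(0.3, t)` for t = 0.5, 0.9, 0.99 shows the norm increasing as t approaches 1, while the product `(1 - t) * norm_inf_inverse(0.3, t)` stays bounded, in line with the \(1/(d-t)\) behaviour described above.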

We now return to the choice of quadrature rule for the approximation of the regular sub-integrals in \(\textbf{r}\), which are double integrals of smooth functions over pairs of self-similar subsets of \(\Gamma \) with respect to a pair of invariant measures \(\mu \) and \(\mu '\). We shall restrict our attention to tensor product quadrature rules, so that it suffices to consider methods for evaluating a single integral of a smooth function over a single self-similar subset \(\Gamma _{\textbf{m}}\) of \(\Gamma \) with respect to a single invariant measure \(\mu \). In fact, it is enough to consider the case \(\Gamma _{\textbf{m}}= \Gamma \), since the more general case can then be treated using (19). Hence, we consider quadrature rules for the evaluation of the integral

$$\begin{aligned} I[f;\mu ]:=\int _\Gamma f(x)\,{\textrm{d}}\mu (x), \end{aligned}$$(52)

for an integrand f that is smooth in a neighbourhood of \(\Gamma \). We consider three types of quadrature:

- Gauss rules: Highly accurate, but currently only practically applicable for the case \(n=1\), i.e. \(\Gamma \subset {\mathbb {R}}\) (footnote 3).

- Composite barycentre rules: Less accurate than Gauss rules, but can be applied to \(\Gamma \subset {\mathbb {R}}^n\) for \(n>1\).

- Chaos game rules: Monte-Carlo type rules which converge (in expectation) at a relatively slow but dimension-independent rate, which makes them well-suited to high-dimensional problems (large \(d=\dim _{\text {H}}(\Gamma )\)).

In the following three sections, we provide further details of these methods and any theory supporting them, before comparing their performance numerically in Sect. 7.

6.1 Gauss rules in the case \(\Gamma \subset {\mathbb {R}}\)

In general, N-point Gauss rules require the existence of a set of polynomials \(\{p_j\}_{j=0}^N\), orthogonal with respect to the measure \(\mu \). A sufficient condition for the existence of such polynomials is the positivity of the Hankel determinant, which, in the case of self-similar invariant measures, is implied by \({{\,\textrm{supp}\,}}\mu =\Gamma \) having infinitely many points [11, §1.1]. We then define the N-point Gauss rule on \(\Gamma \) as

$$\begin{aligned} Q^{\textrm{G}}_N[f;\mu ]:=\sum _{j=1}^N w_j f(x_j), \end{aligned}$$(53)

where \(x_j\), \(j=1,\ldots ,N\), are the zeros of \(p_N\). Gauss rules are interpolatory, so the weights (also known as Christoffel numbers) may be defined by \(w_j:=\int _\Gamma \ell _j(x){\textrm{d}}\mu (x)\), where \(\ell _j\) is the jth corresponding Lagrange polynomial (see, e.g. [29, (5.3)]). The weights are positive (see, for example [12, Theorem 1.46]), a property which holds for any positive measure \(\mu \).

For classical \(\mu \), a range of algorithms (see, e.g. [14, 26]) exist for efficient O(N) computation of the weights and nodes in (53). However, standard approaches involving polynomial sampling break down for singular measures [17, 18]. This presents an obstacle for the evaluation of (52) in our context of self-similar invariant measures, which are in general singular when \(\dim _{\text {H}}(\Gamma )\ne n\). However, in the special case \(n=1\), where \(\Gamma \subset {\mathbb {R}}\), this issue can be overcome by applying the stable Stieltjes technique proposed in [17, §5] (see footnote 4). It seems that a stable and efficient algorithm for the evaluation of Gauss rules for the case where \(\Gamma \subset {\mathbb {R}}^n\), \(n>1\), has not yet been developed. Hence, in this paper, we only consider Gauss rules for the case where \(\Gamma \subset {\mathbb {R}}\).

The error analysis for the Gauss rule follows the standard approach, giving the usual exponential convergence as \(N\rightarrow \infty \). In the following, \(\textrm{Hull}(\Gamma )\) denotes the convex hull of \(\Gamma \).

Theorem 6.1

Let \(\Gamma \subset {\mathbb {R}}\) be an IFS attractor, and let \(\mu \) be a self-similar measure supported on \(\Gamma \). If f is analytic in a neighbourhood of \(\textrm{Hull}(\Gamma )\subset {\mathbb {R}}\), then

$$\begin{aligned} \left| I[f;\mu ]-Q^{\textrm{G}}_N[f;\mu ]\right| \le C{\textrm{e}}^{-cN}, \qquad N\in {\mathbb {N}}, \end{aligned}$$

for some constants \(C>0\) and \(c>0\), independent of N.

Proof

Denote by \(p_{2N-1}^*\) the \(L^\infty (\textrm{Hull}(\Gamma ))\)-best approximation to f, over the space of polynomials of degree \(2N-1\). By linearity and the exactness property [11, (1.17)], we can write

Since the weights are positive, \( \sum _{j=1}^N|w_j|= \sum _{j=1}^Nw_j = \mu (\Gamma )\). The result then follows by applying classical approximation theory estimates to \(\Vert f-p_{2N-1}^*\Vert _{L^\infty (\textrm{Hull}(\Gamma ))}\), for example [29, Theorem 8.2]. \(\square \)

6.2 The composite barycentre rule

The basic idea of the composite barycentre rule (for more detail, see [13]) is to partition \(\Gamma \) into a union of self-similar subsets of approximately equal diameter, then to approximate f on each subset by its (constant) value at the barycentre of each subset. Given a maximum mesh width \(h>0\), we define a partition of \(\Gamma \) using the following set of indices:

The composite barycentre rule is then defined as

$$\begin{aligned} Q^{\textrm{B}}_h[f;\mu ]:=\sum _{{\textbf{m}}\in L_h(\Gamma )} w_{\textbf{m}}f(x_{\textbf{m}}), \end{aligned}$$(56)

where the weights and nodes are defined by \(w_{\textbf{m}}:= \mu (\Gamma _{\textbf{m}})\) and \(x_{\textbf{m}}:={\int _{\Gamma _{\textbf{m}}} x~{\textrm{d}}\mu (x)}/{\mu (\Gamma _{\textbf{m}})}\), respectively. The weights and nodes can be computed using simple formulas involving the IFS parameters of (11), as (see [13, (28)-(30)], and recall (20))

with

where \(\textrm{I}\) is the \(n\times n\) identity matrix and \(\rho _m\), \(A_m\) and \(\delta _m\), \(m=1,\ldots ,M\), are as in (11).
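To make the construction concrete, here is a minimal sketch of the composite barycentre rule for an IFS on the real line with orientation-preserving maps \(s_m(x)=\rho _m x+\delta _m\) (so \(A_m=1\)). It rests on assumptions that should be checked against [13]: the index set \(L_h(\Gamma )\) is taken to consist of those \({\textbf{m}}\) with \({{\,\textrm{diam}\,}}(\Gamma _{\textbf{m}})\le h\) whose parent has diameter greater than h; the weights are \(w_{\textbf{m}}=p_{m_1}\cdots p_{m_\ell }\mu (\Gamma )\); and the nodes are \(x_{\textbf{m}}=s_{\textbf{m}}(x_\Gamma )\), with \(x_\Gamma =(1-\sum _m p_m\rho _m)^{-1}\sum _m p_m\delta _m\), which is what self-similarity of \(\mu \) gives for the barycentre in this scalar setting. The function name and argument layout are hypothetical.

```python
def barycentre_rule(f, rho, delta, p, h, diam=1.0, mass=1.0):
    """Composite barycentre approximation of the integral of f over Gamma.

    rho, delta, p : contraction factors, translations and probability
                    weights of the maps s_m(x) = rho[m] * x + delta[m]
    h             : maximum mesh width
    diam, mass    : diam(Gamma) and mu(Gamma), supplied by the caller
    """
    # Barycentre of Gamma, from the self-similarity of mu (scalar case).
    x_gamma = (sum(pm * dm for pm, dm in zip(p, delta))
               / (1.0 - sum(pm * rm for pm, rm in zip(p, rho))))

    total = 0.0
    # Each entry represents Gamma_m through s_m(x) = scale * x + shift
    # together with its weight w_m = mu(Gamma_m).
    stack = [(1.0, 0.0, mass)]
    while stack:
        scale, shift, weight = stack.pop()
        if diam * scale <= h:
            # Small enough: one node at the barycentre s_m(x_gamma).
            total += weight * f(scale * x_gamma + shift)
        else:
            # Subdivide: s_m o s_i(x) = scale * (rho_i * x + delta_i) + shift.
            for rm, dm, pm in zip(rho, delta, p):
                stack.append((scale * rm, scale * dm + shift, weight * pm))
    return total
```

For \(\Gamma =[0,1]\) with \(\rho _1=\rho _2=1/2\), \(\delta =(0,1/2)\) and weights \((1/3,2/3)\), the rule integrates \(f(x)=x\) exactly (giving \(x_\Gamma =2/3\)), and for \(f(x)=x^2\) the error drops by a factor of about four each time h is halved.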

The error analysis of the composite barycentre rule follows a standard Taylor series approximation argument. The following is a simplified version of results in [13].

Theorem 6.2

[[13, Theorem 3.6 and Remark 3.9]] Let \(\Gamma \subset {\mathbb {R}}^n\) be an IFS attractor, and let \(\mu \) be a self-similar measure supported on \(\Gamma \).

- (i) If f is Lipschitz continuous on \(\textrm{Hull}(\Gamma )\), then

$$ \left| I[f;\mu ]-Q^{\textrm{B}}_h[f;\mu ]\right| \le Ch, \qquad h>0, $$

for some \(C>0\) independent of h.

- (ii) If f is differentiable in a neighbourhood of \(\textrm{Hull}(\Gamma )\), and its gradient is Lipschitz continuous on \(\textrm{Hull}(\Gamma )\), then

$$ \left| I[f;\mu ]-Q^{\textrm{B}}_h[f;\mu ]\right| \le Ch^2, \qquad h>0, $$

for some \(C>0\) independent of h.

If the IFS defining \(\Gamma \) is homogeneous, then h and \(h^2\) on the right-hand sides of the above estimates can be replaced by \(N^{-1/d}\) and \(N^{-2/d}\) respectively, where \(N:=|L_h(\Gamma )|\).

6.3 Chaos game quadrature

Chaos game quadrature, described, e.g. in [10, (3.22)–(3.23)] and [16, § 6.3.1], is a Monte-Carlo type approach, defined by the following procedure:

- (i) Choose some \(x_0\in \mathbb {R}^n\), e.g. \(x_0=x_\Gamma \), the barycentre of \(\Gamma \).

- (ii) Select a realisation of the sequence \(\{m_j\}_{j\in \mathbb N}\) of i.i.d. random variables taking values in \(\{1,\ldots ,M\}\) with probabilities \(\{p_1,\ldots ,p_M\}\).

- (iii) Construct the stochastic sequence \(x_j=s_{m_j}(x_{j-1})\) for \(j\in \mathbb N\).

- (iv) For a given \(N\in {\mathbb {N}}\), define the chaos game quadrature approximation by

$$\begin{aligned} Q^{\textrm{C}}_N[f;\mu ] := \frac{1}{N}\sum _{j=1}^N f(x_j). \end{aligned}$$(59)
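Steps (i) to (iv) translate almost line by line into code. The sketch below is illustrative rather than the implementation used for the experiments: the function name and signature are hypothetical, the IFS maps are passed as plain Python callables, and the measure is assumed to be normalised so that \(\mu (\Gamma )=1\), as in (59).

```python
import random


def chaos_game_rule(f, maps, p, n_points, x0, seed=None):
    """Chaos game quadrature (59): average of f along a random IFS orbit.

    maps     : list of callables s_1, ..., s_M
    p        : probability weights p_1, ..., p_M
    n_points : number N of samples
    x0       : starting point, step (i)
    seed     : optional seed, for reproducible realisations
    """
    rng = random.Random(seed)
    # Step (ii): i.i.d. indices drawn with probabilities p.
    indices = rng.choices(range(len(maps)), weights=p, k=n_points)
    x, total = x0, 0.0
    for m in indices:
        x = maps[m](x)   # step (iii): x_j = s_{m_j}(x_{j-1})
        total += f(x)
    return total / n_points  # step (iv)
```

For example, with `maps = [lambda x: x / 2, lambda x: x / 2 + 0.5]`, `p = [1/3, 2/3]` and `x0 = 2/3`, the call `chaos_game_rule(lambda x: x, maps, p, 20000, 2/3, seed=0)` returns a value close to the barycentre \(2/3\) of the corresponding measure on \([0,1]\); different seeds give different realisations.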

For continuous f, the chaos game rule (59) will converge to (52) with probability one (see the arguments in the appendix of [10]). While no error estimates were provided in [10] or [16], in the numerical experiments of [13, §6] and Sect. 7 below, convergence in expectation was observed at a rate consistent with an estimate of the form

$$\begin{aligned} {\mathbb {E}}\left[ \left| I[f;\mu ]-Q^{\textrm{C}}_N[f;\mu ]\right| \right] \le CN^{-1/2}, \qquad N\in {\mathbb {N}}, \end{aligned}$$

for some \(C>0\) independent of N.

7 Numerical results and applications

Algorithm 1 and the quadrature approximations described in Sect. 6 have been implemented in the open-source Julia code IFSIntegrals, available at www.github.com/AndrewGibbs/IFSintegrals. In this section, we present numerical results illustrating the accuracy of our approximations, comparing different quadrature approaches and applying our method in the context of a boundary element method for acoustic scattering by fractal screens.

7.1 Sierpinski triangle, Vicsek fractal, Sierpinski carpet and Koch snowflake

We first consider the application of our approach to the attractors considered in Sect. 5.1-Sect. 5.4, namely the Sierpinski triangle, Vicsek fractal, Sierpinski carpet and Koch snowflake. However, in contrast to Sect. 5.1–Sect. 5.4, where representation formulas were presented for the standard case where \(\mu =\mu '={\mathcal {H}}^d|_\Gamma \) (Lebesgue measure in the case of the Koch snowflake), to demonstrate the generality of our approach, we present numerical results for completely generic self-similar measures \(\mu \ne \mu '\), with randomly chosen probability weights \(p_m\) and \(p_m'\), as detailed in Table 1. Table 1 also documents the resulting values of \(t_*\), as computed by solving (21), as well as the numbers \(n_s\), \(n_r\), of fundamental singular and regular sub-integrals discovered by our algorithm. In all cases, our algorithm terminated, using the same subdivision strategies as in Sect. 5.1–Sect. 5.4, producing an invertible matrix A. However, for these non-standard examples, there are no nontrivial isometries under which the measures are invariant, so our algorithm took \(T=T'\) to be the identity throughout. As a result (cf. the related discussion in Remark 4.4), the linear systems (38) are larger than those obtained in the standard case documented in Sect. 5.1–Sect. 5.4, where additional symmetries of the measures could be exploited.

In Fig. 7 (solid curves), we plot the relative error in our quadrature approximation for \(I_{\Gamma ,\Gamma }\), for three values of \(t=0,0.5,1\), obtained by solving the linear system (38) obtained by Algorithm 1 (using subdivision strategy 2 for the Koch snowflake), combined with composite barycentre rule quadrature for the evaluation of the regular sub-integrals, for different values of the maximum mesh width h. In more detail, we plot errors for \(h={{\,\textrm{diam}\,}}(\Gamma )\rho ^{\ell }\), for \(\ell =0,\ldots ,\ell _{\textrm{ref}}-1\), where \(\ell _{\textrm{ref}}\) is the value of \(\ell \) used for the reference solution (which is computed using the same method). For the three homogeneous attractors, we take \(\rho =\rho _1=\ldots =\rho _M\) (the common contraction factor), while for the Koch snowflake, we take \(\rho =1/\sqrt{3}\) (the largest contraction factor). For the Sierpinski triangle, \(\ell _{\textrm{ref}}=10\); for the Vicsek fractal, \(\ell _{\textrm{ref}}=7\); and for the Sierpinski Carpet and Koch snowflake, \(\ell _{\textrm{ref}}=6\). According to our theory, we expect our method to give \(O(h^2)\) error, by Theorem 6.2(ii) and (51), and this is exactly the rate we observe in our numerical results in Fig. 7.

In Fig. 7 (dashed curves), we also show results obtained using the method of our previous paper [13, (48)], which we refer to as the “old method”. This method is accurate for disjoint IFS attractors, but is expected to perform less well for non-disjoint attractors, because it only applies self-similarity to deal with the self-interaction integrals and treats all other sub-integrals as being regular. Precisely, the old method corresponds to taking the equation corresponding to the first row in the linear system (38) obtained by Algorithm 1, solving this equation for \(I_{\Gamma ,\Gamma }\), then applying the composite barycentre rule not just to the regular sub-integrals coming from the right-hand side of (38), but also to the fundamental singular sub-integrals \(I_{{\textbf{m}}^{}_{s,i},{\textbf{m}}'_{s,i}}\), \(i=2,\ldots ,n_s\). We expect that the resulting quadrature approximation should converge to \(I_{\Gamma ,\Gamma }\) as \(h\rightarrow 0\), but at a slower rate than our new method, because of the inaccurate treatment of the singular sub-integrals. This is borne out in our numerical results in Fig. 7, with the errors for the old method being significantly larger than those for the new method. To quantify these observations, we present in Table 2 the empirical convergence rates (computed from the errors for the two smallest h values) observed for the old method for each of the three t values considered. The deviation from \(O(h^2)\) convergence is different for each example, but clearly increases as t, the strength of the singularity, increases, as one would expect.
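An empirical convergence rate of the kind reported in Table 2 can be obtained from two (mesh width, error) pairs. The sketch below assumes the standard two-point estimate, in which the error is modelled as \(Ch^{\alpha }\) and \(\alpha \) is recovered from the ratio of the two errors; the text does not spell out the formula used, so this should be read as one plausible choice, with a hypothetical function name.

```python
import math


def empirical_rate(h_coarse, err_coarse, h_fine, err_fine):
    """Estimate alpha in err ~ C * h**alpha from two (h, err) pairs."""
    return math.log(err_coarse / err_fine) / math.log(h_coarse / h_fine)
```

Applied to the errors at the two smallest values of h, a result close to 2 indicates the \(O(h^2)\) behaviour expected of the new method, while smaller values quantify the loss of accuracy of the old method.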

For all the experiments in Fig. 7, the total number of quadrature points \(N_\textrm{tot}\) grows like \(N_\textrm{tot}\approx Ch^{-2d}\) as \(h\rightarrow 0\), with the value of C depending on the number of fundamental regular sub-integrals that need to be evaluated. (Recall from Sect. 6.2 that for each regular sub-integral, we use a tensor product rule with \(N^2\) points, where \(N\approx C'h^{-d}\) for some \(C'\).) For each choice of attractor, the value of C for the new method is slightly smaller than that for the old method, because the new method takes greater advantage of similarities between regular sub-integrals. The value of \(N_\textrm{tot}\) used for the reference solutions is 1,291,401,630 for the Sierpinski triangle, 2,382,812,500 for the Vicsek fractal, 18,790,481,920 for the Sierpinski carpet and 379,046,894,100 for the Koch snowflake.

7.2 Unit interval experiments

We now consider an attractor \(\Gamma \subset {\mathbb {R}}\), so that we can investigate the performance of the Gauss quadrature discussed in Sect. 6.1. The classic example of an IFS attractor \(\Gamma \subset {\mathbb {R}}\) is the Cantor set, but since this is disjoint (in the sense of (15)), it can already be treated by our old method (of [13]). To demonstrate the efficacy of our new method for dealing with non-disjoint attractors, we consider the case where \(\Gamma =[0,1]\subset {\mathbb {R}}\), which, as discussed in Sect. 5.5 (taking \(\rho =1/2\)), is the attractor of the homogeneous IFS with \(M=2\), \(s_1(x)=x/2\) and \(s_2(x)=x/2+1/2\). We consider the case where \(\mu =\mu '\), with \(p_1=p_1'=1/3\) and \(p_2=p_2'=2/3\), so that, by (22), \(t_* = \log (9/5)/\log 2\approx 0.848\). As discussed in Sect. 5.5, for this problem, Algorithm 1 finds just two fundamental singular sub-integrals, \(I_{\Gamma ,\Gamma }\) and \(I_{1,2}\), and two fundamental regular sub-integrals, \(I_{11,21}\) and \(I_{11,22}\).
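The value of \(t_*\) quoted above can be reproduced numerically. The sketch below assumes that the defining equation for \(t_*\) (equations (21) and (22), which are not reproduced in this section) is \(\sum _{m=1}^M p_m p_m'\rho _m^{-t_*}=1\); this form is an assumption, although it is consistent with \(t_*=\log (9/5)/\log 2\) here and with \(t_*=d\) for the Hausdorff-measure examples of Sect. 5. The function name is hypothetical, and probability weights with \(M\ge 2\) are assumed, so that the left-hand side is less than 1 at \(t=0\).

```python
import math


def t_star(rho, p, p_prime, tol=1e-13):
    """Solve sum_m p_m * p'_m * rho_m**(-t) = 1 for t by bisection."""
    def g(t):
        return sum(a * b * r ** (-t) for a, b, r in zip(p, p_prime, rho)) - 1.0

    lo, hi = 0.0, 1.0
    while g(hi) < 0.0:      # g is increasing in t, so expand until bracketed
        hi *= 2.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if g(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)
```

For the example of this section, `t_star([0.5, 0.5], [1/3, 2/3], [1/3, 2/3])` agrees with \(\log (9/5)/\log 2\approx 0.848\). For a non-homogeneous IFS no closed form is available in general, which is why a root finder is used rather than a formula.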

In Fig. 8, we report relative errors for the computation of \(I_{\Gamma ,\Gamma }\) with \(t=0\) and \(t=1/2\), using Algorithm 1 combined with Gauss, composite barycentre, and chaos game quadrature for the evaluation of the regular sub-integrals. For each method, the total number of quadrature points satisfies \(N_\textrm{tot}=2N^2\), where N is the number of points used for each of the two iterated integrals in each of the two fundamental regular sub-integrals (recall that we are using tensor product rules). For the composite barycentre rule, we have \(N\approx \frac{1}{4h}\) in this case. As the reference solution, we use the result obtained using the Gauss rule with \(N=100\), which corresponds to \(N_\textrm{tot}=20,000\). For the Gauss rule, we see the expected root-exponential \(O({\textrm{e}}^{-c\sqrt{N_\textrm{tot}}})\) convergence predicted by Theorem 6.1 and (51), with \(c\approx 1.77\) and \(c\approx 2.31\) (for \(t=0\) and \(t=1/2\) respectively) in this case. As a result, the singular double integral \(I_{\Gamma ,\Gamma }\) can be computed to machine precision using \(N\approx 10\), which corresponds to \(N_\textrm{tot}\approx 200\) quadrature points. The barycentre rule is significantly less accurate, converging like \(O(h^2)=O(N^{-2})=O(N_\textrm{tot}^{-1})\), as predicted by Theorem 6.2 and (51). For the chaos game quadrature, we computed 1000 realisations, plotting both the errors for each realisation and the average error over all the realisations, which is a proxy for the expected error. The latter is observed to converge like \(O(N^{-1/2}) = O(N_\textrm{tot}^{-1/4})\), in accordance with the remarks at the end of Sect. 6.3.

Fig. 8 Comparison of the performance of the three quadrature rules presented in Sect. 6.1-Sect. 6.3, applied in the context of Algorithm 1, for the example considered in Sect. 7.2, which concerns the evaluation of singular double integrals on the unit interval \(\Gamma =[0,1]\) with respect to a self-similar measure

7.3 Application to Hausdorff BEM for acoustic scattering by fractal screens

We conclude by demonstrating how our new representation formulas and resulting quadrature rules can be used to compute the scattering of acoustic waves by fractal screens using the “Hausdorff boundary element method” (BEM) of [6]. For full details of the scattering problem and the Hausdorff BEM, we refer the reader to [6] and the references therein; here, we merely provide a brief overview.

The underlying scattering problem under consideration is the three-dimensional time-harmonic acoustic scattering of an incident plane wave \({\textrm{e}}^{{\textrm{i}}kx\cdot \vartheta }\) (for \(x=(x_1,x_2,x_3)^T\in {\mathbb {R}}^3\), wavenumber \(k>0\) and unit direction vector \(\vartheta \in {\mathbb {R}}^3\)) by a fractal planar screen \(\Gamma \times \{0\}\subset {\mathbb {R}}^3\), where \(\Gamma \subset {\mathbb {R}}^2\) is the attractor of an IFS satisfying the open set condition. Assuming that the total wave field u (which is a solution of the Helmholtz equation \(\Delta u + k^2 u=0\) in \({\mathbb {R}}^3\setminus (\Gamma \times \{0\})\)) satisfies homogeneous Dirichlet (“sound soft”) boundary conditions on the screen, it was shown in [6] that the scattering problem can be reduced to the solution of the integral equation

Here, S is the single layer boundary integral operator defined by \(S\phi (x) = \int _\Gamma \Phi (x,y)\phi (y) \,{\textrm{d}}y\) (with the integral interpreted in a suitable distributional sense), where \(\Phi (x,y):={\textrm{e}}^{{\textrm{i}}k |x-y|}/(4\pi |x-y|)\) is the fundamental solution of the Helmholtz equation in three dimensions, \(\phi \) is the unknown jump in the \(x_3\)-derivative of u across the screen and f is a known function depending on the incident wave.

The Hausdorff BEM in [6] discretises (60) using a Galerkin method with a numerical approximation space of piecewise constant functions multiplied by the Hausdorff measure \({\mathcal {H}}^d|_\Gamma \). The mesh used for the piecewise constant functions is of the same form as that used in the composite barycentre rule in Sect. 6.2—having chosen a maximum BEM mesh width \(h_\textrm{BEM}\), we partition \(\Gamma \) using the index set \(L_{h_\textrm{BEM}}(\Gamma )\) defined in (55). If we choose the natural basis for the approximation space, then assembling the Galerkin matrix involves the numerical evaluation of the integral

$$\begin{aligned} \int _{\Gamma _{\textbf{m}}}\int _{\Gamma _{\textbf{n}}}\Phi (x,y)\,{\textrm{d}}{\mathcal {H}}^d(y)\,{\textrm{d}}{\mathcal {H}}^d(x) \end{aligned}$$(61)

for all pairs of indices \({\textbf{m}},{\textbf{n}}\in L_{h_\textrm{BEM}}(\Gamma )\).

Fig. 10 Field scattered by Sierpinski triangle (top left), Vicsek fractal (top right), Sierpinski carpet (bottom left) and Koch snowflake (bottom right), for the Dirichlet screen scattering problem described in Sect. 7.3 with wavenumber \(k=50\) and incident angle \(\vartheta =(0,1,-1)/\sqrt{2}\). In each case, the screen (sketched in black) lies in the plane \(x_3=0\)

When \(\Gamma _{\textbf{m}}\) and \(\Gamma _{\textbf{n}}\) are disjoint, the integral (61) has a smooth integrand and can be evaluated using the composite barycentre rule with some maximum mesh width \(h\le h_\textrm{BEM}\), with error \(O(h^2)\) (by Theorem 6.2(ii)). When \(\Gamma _{\textbf{m}}\cap \Gamma _{\textbf{n}}\) is non-empty, the integral (61) is singular, and to evaluate it, we adopt a singularity subtraction approach, writing

$$\begin{aligned} \int _{\Gamma _{\textbf{m}}}\int _{\Gamma _{\textbf{n}}}\Phi (x,y)\,{\textrm{d}}{\mathcal {H}}^d(y)\,{\textrm{d}}{\mathcal {H}}^d(x) = \frac{1}{4\pi }\int _{\Gamma _{\textbf{m}}}\int _{\Gamma _{\textbf{n}}}|x-y|^{-1}\,{\textrm{d}}{\mathcal {H}}^d(y)\,{\textrm{d}}{\mathcal {H}}^d(x) + \int _{\Gamma _{\textbf{m}}}\int _{\Gamma _{\textbf{n}}}\Phi _*(x,y)\,{\textrm{d}}{\mathcal {H}}^d(y)\,{\textrm{d}}{\mathcal {H}}^d(x), \end{aligned}$$(62)

where \(\Phi _*(x,y):=\Phi (x,y)-(4\pi |x-y|)^{-1} = ({\textrm{e}}^{{\textrm{i}}k |x-y|}-1)/(4\pi |x-y|)\). The first integral on the right-hand side of (62) can be evaluated using the methods of this paper with \(t=1\). In more detail, if \(\Gamma _{\textbf{m}}= \Gamma _{\textbf{n}}\), this first integral will be similar to \(I_{\Gamma ,\Gamma }\), and if \(\Gamma _{\textbf{m}}\ne \Gamma _{\textbf{n}}\), it will be similar to one of the other fundamental singular sub-integrals encountered in Algorithm 1. In both cases, it can be evaluated by combining Algorithm 1 with the composite barycentre rule, again with mesh width \(h\le h_\textrm{BEM}\) and error \(O(h^2)\). The second integral on the right-hand side of (62) has a Lipschitz continuous integrand and hence can be evaluated using the composite barycentre rule directly. According to Theorem 6.2(i), the error in this approximation is guaranteed to be O(h). In fact, for disjoint homogeneous attractors, the error in evaluating this second term was proved in [13, Proposition 5.5] to be \(O(h^2)\), and experiments in [13, Figure 8(a)] suggest that the same may be true for certain non-homogeneous disjoint attractors. In Fig. 9, we present numerical results suggesting, furthermore, that the same may also be true for certain non-disjoint attractors. The plots in Fig. 9 show the relative error (against a high-order reference solution) in computing the second term in (62) using the composite barycentre rule, for the four attractors from Sect. 5.1–Sect. 5.4 and a range of wavenumbers, in the case where \(\Gamma _{\textbf{m}}=\Gamma _{\textbf{n}}=\Gamma \). This case is chosen since it represents the most difficult case, in which \(\Gamma _{\textbf{m}}\) and \(\Gamma _{\textbf{n}}\) have full overlap. For all four examples, we clearly observe \(O(h^2)\) error in the numerical results. However, we leave the theoretical justification of this observation to future work.
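When the second term of the splitting is evaluated with the composite barycentre rule, the regular kernel \(\Phi _*\) has to be sampled at pairs of nodes that may be very close or even coincide (when \(\Gamma _{\textbf{m}}=\Gamma _{\textbf{n}}\)). The sketch below shows one way to evaluate \(\Phi _*\) as a function of \(r=|x-y|\) without cancellation, using the identity \({\textrm{e}}^{{\textrm{i}}z}-1=2{\textrm{i}}\sin (z/2){\textrm{e}}^{{\textrm{i}}z/2}\) and the limiting value \({\textrm{i}}k/(4\pi )\) at \(r=0\). This rewriting and the function names are choices made for this illustration; they are not taken from the paper or its code.

```python
import cmath
import math


def phi(r, k):
    """Helmholtz fundamental solution e^{ikr} / (4 pi r), for r > 0."""
    return cmath.exp(1j * k * r) / (4 * math.pi * r)


def phi_star(r, k):
    """Regular part (e^{ikr} - 1) / (4 pi r), stable for small r >= 0."""
    if r == 0.0:
        return 1j * k / (4 * math.pi)   # limit as r -> 0
    half = 0.5 * k * r
    # e^{ikr} - 1 = 2i sin(kr/2) e^{ikr/2} avoids subtracting nearby numbers.
    return 2j * math.sin(half) * cmath.exp(1j * half) / (4 * math.pi * r)
```

With these helpers, `phi(r, k)` equals `1 / (4 * math.pi * r) + phi_star(r, k)` up to rounding for any \(r>0\), mirroring the decomposition of the kernel into its singular and Lipschitz continuous parts.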

We end the paper by presenting in Fig. 10 plots of the scattered field computed by our Hausdorff BEM solver (available at www.github.com/AndrewGibbs/IFSintegrals) for scattering by the four attractors from Sect. 5.1–Sect. 5.4. In each case, the wavenumber \(k=50\) and incident angle \(\vartheta =(0,1,-1)/\sqrt{2}\). Here, \(h_{\textrm{BEM}}={{\,\textrm{diam}\,}}(\Gamma )\rho ^{\ell _{\textrm{BEM}}}\), where \(\rho \) is as defined in Sect. 7.1 and \(\ell _{\textrm{BEM}}=5\) for the Sierpinski triangle, \(\ell _{\textrm{BEM}}=4\) for the Vicsek fractal, \(\ell _{\textrm{BEM}}=4\) for the Sierpinski Carpet, and \(\ell _{\textrm{BEM}}=8\) for the Koch snowflake, so that in each case, we are discretising with at least 5 elements per wavelength. The Galerkin BEM matrix is constructed as described above, with \(h=h_{\textrm{BEM}}\rho ^{4}\) in each case, taking advantage also of the reduced quadrature approach described in [6, Remark 5.19] (which exploits the far-field decay in \(\Phi (x,y)\) to reduce the number of quadrature points for pairs of elements \(\Gamma _{\textbf{m}}\) and \(\Gamma _{\textbf{n}}\) that are well separated).

We note that for disjoint attractors, the Hausdorff BEM is supported by a fully discrete convergence analysis (presented in [6]). A similar analysis for the case \(d=2\) (applying for instance to the Koch snowflake) will be presented in a forthcoming article [7].

Data availability

All data generated or analysed during this study are included in this published article.

Notes

A key step in the proof of this result is showing that \(\mu (\Gamma _{m'}) = p_{m'}\mu (\Gamma )\) for \({m'}=1,\ldots ,M\). To prove the latter, we first note that, by (18) and the positivity and self-similarity of \(\mu \), if E is measurable and \(\mu (E)=0\), then \(\mu (s_m^{-1}(E))=0\) for \(m=1,\ldots ,M\). In particular, since \(\mu (s_m(\Gamma )\cap s_{{m'}}(\Gamma ))=0\) for \(m\ne {m'}\), we have that \(\mu (s_m^{-1}(\Gamma _m\cap \Gamma _{m'}))=0\) for \(m\ne {m'}\). Then, using the fact that \(\mu \) is supported in \(\Gamma \), we have \(\mu (\Gamma _{m'})=\sum _{m} p_m \mu (s_m^{-1}(\Gamma _{m'})) = \sum _{m} p_m \mu (\Gamma \cap s_m^{-1}(\Gamma _{m'})) = \sum _{m} p_m \mu (s_m^{-1}(\Gamma _m\cap \Gamma _{m'})) = p_{m'} \mu (\Gamma )\), as claimed.

The matrix A arising in (38) in this case, with \(\textbf{x} = (I_{\Gamma ,\Gamma }, I_{1,2}, I_{12,21})^T\), is given by

$$\begin{aligned} A = \left( \begin{array}{ccc} 1-\omega _1-\omega _2 & -2 & 0 \\ 0 & 1 & -1 \\ 0 & -\omega _1\omega _2 & 1 \end{array}\right) , \end{aligned}\tag{47}$$

where \(\omega _1 = \rho ^{2-t}\) and \(\omega _2 = (1-\rho )^{2-t}\). Noting that \(\det (A)=(1-\omega _1-\omega _2)(1-\omega _1\omega _2)\), one can check that A is invertible for all \((\rho ,t)\in (0,1/2]\times [0,1)\).
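To make this check explicit (a short worked derivation added for illustration, using only the definitions of \(A\), \(\omega _1\) and \(\omega _2\) given here), expanding along the first column of \(A\) gives

$$\begin{aligned} \det (A) = (1-\omega _1-\omega _2)\det \left( \begin{array}{cc} 1 & -1 \\ -\omega _1\omega _2 & 1 \end{array}\right) = (1-\omega _1-\omega _2)(1-\omega _1\omega _2). \end{aligned}$$

For \(0<\rho \le 1/2\) and \(0\le t<1\), the exponent satisfies \(2-t>1\), so \(\omega _1+\omega _2 = \rho ^{2-t}+(1-\rho )^{2-t} < \rho +(1-\rho ) = 1\) and \(\omega _1\omega _2<1\). Both factors are therefore strictly positive, and \(\det (A)\ne 0\).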

There is an error in [17, Equation (32)]:

$$\begin{aligned} \sum _{m=0}^{n-1}\Gamma ^{n}_{i,m}\Gamma ^n_{i,m+1}\delta _i(r_m+r_{m+1})\quad \text { should be replaced by }\quad 2\sum _{m=0}^{n-1}\delta _i\Gamma ^n_{i,m}\Gamma ^n_{i,m+1}r_{m+1}. \end{aligned}\tag{54}$$

References

Barnsley, M., Vince, A.: Developments in fractal geometry. Bull. Math. Sci. 3, 299–348 (2013)

Barnsley, M.F., Demko, S.: Iterated function systems and the global construction of fractals. Proc. Roy. Soc. A. Math. Phys. Sci. 399, 243–275 (1985)

Bessis, D., Fournier, J., Servizi, G., Turchetti, G., Vaienti, S.: Mellin transforms of correlation integrals and generalized dimension of strange sets. Phys. Rev. A 36, 920 (1987)

Bogachev, V.I.: Measure theory (Volume 1). Springer (2007)

Börm, S., Hackbusch, W.: Hierarchical quadrature for singular integrals. Computing 74, 75–100 (2005)

Caetano, A.M., Chandler-Wilde, S.N., Gibbs, A., Hewett, D., Moiola, A.: A Hausdorff measure boundary element method for acoustic scattering by fractal screens. arXiv:2212.06594 (2022)

Caetano, A.M., Chandler-Wilde, S.N., Gibbs, A., Hewett, D.P.: Properties of IFS attractors with non-empty interiors and associated function spaces and scattering problems. In preparation

Calabrò, F., Corbo Esposito, A.: An evaluation of Clenshaw-Curtis quadrature rule for integration w.r.t. singular measures. J. Comput. Appl. Math. 229, 120–128 (2009)

Falconer, K.: Fractal geometry: Mathematical foundations and applications. Wiley, 3rd ed. (2014)

Forte, B., Mendivil, F., Vrscay, E.: Chaos games for iterated function systems with grey level maps. SIAM J. Math. Anal. 29, 878–890 (1998)

Gautschi, W.: Computational aspects of orthogonal polynomials. In: Nevai, P. (ed.) Orthogonal polynomials: Theory and Practice, NATO Adv. Sci. Inst. Ser. C Math. Phys. Sci., Kluwer Acad. Publ., Dordrecht, pp. 181–216 (1990)

Gautschi, W.: Orthogonal polynomials: computation and approximation. OUP (2004)

Gibbs, A., Hewett, D., Moiola, A.: Numerical quadrature for singular integrals on fractals. Numer. Algorithms 92, 2071–2124 (2023)

Hale, N., Townsend, A.: Fast and accurate computation of Gauss-Legendre and Gauss-Jacobi quadrature nodes and weights. SIAM J. Sci. Comput. 35, A652–A674 (2013)

Hutchinson, J.E.: Fractals and self-similarity. Indiana Univ. Math. J. 30, 713–747 (1981)

Kunze, H., La Torre, D., Mendivil, F., Vrscay, E.R.: Fractal-based methods in analysis. Springer (2011)

Mantica, G.: A stable Stieltjes technique for computing orthogonal polynomials and Jacobi matrices associated with a class of singular measures. Constr. Approx. 12, 509–530 (1996)

Mantica, G.: On computing Jacobi matrices associated with recurrent and Möbius iterated function systems. In: Proceedings of the 8th International Congress on Computational and Applied Mathematics, ICCAM-98 (Leuven), vol. 115(1-2), pp. 419–431 (2000)

Mantica, G., Vaienti, S.: The asymptotic behaviour of the Fourier transforms of orthogonal polynomials I: Mellin transform techniques. Ann. Henri Poincaré 8, 265–300 (2007)

Mattila, P.: Fourier analysis and Hausdorff dimension. CUP (2015)

Meszmer, P.: Hierarchical quadrature for multidimensional singular integrals. J. Numer. Math. 18, 91–117 (2010)

Meszmer, P.: Hierarchical quadrature for multidimensional singular integrals - part II. J. Numer. Math. 22, 33–60 (2014)

Morán, M., Rey, J.-M.: Singularity of self-similar measures with respect to Hausdorff measures. T. Am. Math. Soc. 350, 2297–2310 (1998)

Strichartz, R.S.: Self-similar measures and their Fourier transforms I. Indiana Univ. Math. J. 797–817 (1990)

Strichartz, R.S.: Evaluating integrals using self-similarity. Am. Math. Mon. 107, 316–326 (2000)

Townsend, A., Trogdon, T., Olver, S.: Fast computation of Gauss quadrature nodes and weights on the whole real line. IMA J. Numer. Anal. 36, 337–358 (2016)

Trefethen, L.N.: Is Gauss quadrature better than Clenshaw-Curtis? SIAM Rev. 50, 67–87 (2008)

Trefethen, L.N.: Ten digit problems. In: Schleicher, D., Lackmann, M. (eds.) An invitation to mathematics: from competitions to research, pp. 119–136. Springer (2011)

Trefethen, L.N.: Approximation theory and approximation practice. SIAM (2013)

Acknowledgements

AG and DH acknowledge support from the EPSRC grant EP/V053868/1 and thank the Isaac Newton Institute for Mathematical Sciences, Cambridge, for support and hospitality during the programme Mathematical theory and applications of multiple wave scattering, where work on this paper was undertaken. This work was supported by EPSRC grant no. EP/R014604/1. BM and DH gratefully acknowledge support from the LMS Undergraduate Research Bursary scheme, which funded BM on a summer research internship at UCL, during which this work was initiated.

Funding

AG and DH are funded by the EPSRC grant EP/V053868/1. BM was funded by the LMS summer research internship grant URB-2022-12.

Author information

Contributions

AG, DH and BM jointly developed the concept and theoretical results. AG produced the numerical results. DH wrote the main manuscript, with contributions from AG and BM.

Ethics declarations

Ethical approval

Not applicable

Conflict of interest

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gibbs, A., Hewett, D.P. & Major, B. Numerical evaluation of singular integrals on non-disjoint self-similar fractal sets. Numer Algor 97, 311–343 (2024). https://doi.org/10.1007/s11075-023-01705-8

DOI: https://doi.org/10.1007/s11075-023-01705-8