Abstract

We propose a variation of the forward–backward splitting method for solving structured monotone inclusions. Our method integrates past iterates and two deviation vectors into the update equations. These deviation vectors bring flexibility to the algorithm and can be chosen arbitrarily as long as they together satisfy a norm condition. We present special cases where the deviation vectors, selected as predetermined linear combinations of previous iterates, always meet the norm condition. Notably, we introduce an algorithm employing a scalar parameter to interpolate between the conventional forward–backward splitting scheme and an accelerated \(\mathcal {O}\left( \frac{1}{n^2}\right) \)-convergent forward–backward method that encompasses both the accelerated proximal point method and the Halpern iteration as special cases. The existing methods correspond to the two extremes of the allowed scalar parameter range. By choosing the interpolation scalar near the midpoint of the permissible range, our algorithm significantly outperforms these previously known methods when addressing a basic monotone inclusion problem stemming from minimax optimization.

Similar content being viewed by others

1 Introduction

We consider the problem of finding \(x\in \mathcal {H}\) such that

where \(A:\mathcal {H}\rightarrow 2^\mathcal {H}\) is a maximally monotone operator, \(C:\mathcal {H}\rightarrow \mathcal {H}\) is a cocoercive operator, \(\mathcal {H}\) is a real Hilbert space and \(2^\mathcal {H}\) denotes its power set. This monotone inclusion has optimization problems [18, 29], convex-concave saddle-point problems [13], and variational inequalities [5, 14, 38] as special cases.

The forward–backward (FB) splitting method [11, 24, 27] has been widely used to solve the monotone inclusion problem (1). The gradient method, the proximal point algorithm [30], the proximal-gradient method [16], the Chambolle–Pock method [13], the Douglas–Rachford method [18, 24], and the Krasnosel’skiĭ–Mann iteration [9, Section 5.2] can all be considered special instances of the FB method. Various attempts have been made to improve the convergence of the FB splitting algorithm by incorporating information from previous iterations. Notable examples include the heavy-ball method [28], the inertial proximal point algorithm [1, 2], and inertial FB algorithms [3, 4, 6, 7, 10, 12, 15, 25, 26], which integrate prior information into the current iteration through a momentum term.

In this paper, we propose an extension to the conventional FB algorithm that includes momentum-like terms and two deviation vectors. These deviations have the same dimension as the underlying space of the problem and serve as adjustable parameters that provide the algorithm with great flexibility. This flexibility can be exploited to control the trajectory of the iterations with the aim to enhance algorithm convergence. To guarantee convergence, we require the deviations to satisfy a safeguarding condition that restricts the norm, but not the direction, of the deviation vectors. Our safeguarding approach is similar to those in [8, 32, 33]—which indeed are special instances of our algorithm—while it distinctly contrasts with the safeguarding conditions presented in [20, 34, 37, 40] that choose between a globally convergent and locally fast methods depending on the fulfillment of their respective safeguarding conditions.

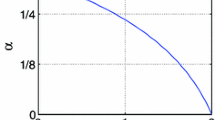

We also introduce two special cases where the deviation vectors are predetermined linear combinations of prior iteration data. This construction ensures that the safeguarding condition is met in all iterations, implying that it does not require online evaluation. The two special cases incorporate different scalar parameters controlling the behaviour of their respective algorithms. In one case, the scalar parameter \(\kappa \in (-1,1)\) regulates the momentum used in the algorithm, with \(\kappa =0\) yielding the standard FB method. This algorithm converges weakly towards a solution of the inclusion problem for all \(\kappa \) within the permitted range. In the other case, the scalar parameter \(e\in [0,1]\) acts as an interpolator between the standard FB method (\(e=0\)) and an accelerated FB method (\(e=1\)) featuring the accelerated proximal point method from [21] and the Halpern iteration analyzed in [23] as special cases. The scalar parameter e regulates the convergence rate of the squared norm of the fixed-point residual, converging as \(\mathcal {O}\left( \min \left( 1/n^{2e},1/n\right) \right) \), with \(e=1\) offering the accelerated FB method with an \(\mathcal {O}\left( 1/n^2\right) \) convergence rate, consistent with the rates in [21, 23].

We perform numerical evaluation of these two special cases on a simple skew-symmetric monotone inclusion problem arising from optimality conditions for the minimax problem \(\max _{y\in \mathbb {R}}\min _{x\in \mathbb {R}}xy\). Our findings suggest that with \(\kappa \in [0.8,0.9]\), our first special case performs an order of magnitude better than the FB method (\(\kappa =0\)) on this problem. Furthermore, by allowing \(e\in [0.4,0.5]\), we observe that our second special case outperforms the FB method (\(e=0\)) by an order of magnitude and performs several orders of magnitude better than the accelerated FB method (\(e=1\)), despite the latter’s stronger theoretical convergence guarantee.

The analysis of our base algorithm relies on a Lyapunov inequality. We derive this inequality by applying the monotonicity inequality of operator A and the cocoercivity inequality of operator C (that are referred to as interpolation conditions in the terminology of performance estimation (PEP), see for instance [31, 35, 36]), both between the last iterate and a solution of the problem, as well as the last two points generated by the algorithm. This is in contrast to the analysis conducted in [33], which restricts the use of these inequalities to only the last iteration and a solution. The inclusion of additional inequalities allows for deriving special cases such as the one that interpolates between FB splitting and accelerated FB splitting along with the associated convergence rate of \(\mathcal {O}(1/n^{2e})\) where \(e\in [0,1]\). This result is not achievable via the algorithm proposed in [33].

The paper is organized as follows. In Section 2, we establish the basic definitions and notations used throughout the paper. Section 3 contains the formal presentation of the problem and introduces our proposed algorithm that is analyzed in Section 4. In Section 5, we present several special instances of our algorithm, two of which we examine numerically in Section 6. Proofs omitted for the sake of brevity are shared in Sections 7 and 8 concludes the paper.

2 Preliminaries

The set of real numbers is denoted by \(\mathbb {R}\). \(\mathcal {H}\) denotes a real Hilbert space that is equipped with an inner product and an induced norm, respectively denoted by \( {\left\langle \cdot , \cdot \right\rangle }\) and \( {\left\| \cdot \right\| }:=\sqrt{ {\left\langle \cdot , \cdot \right\rangle }}\). \(\mathcal {M} {\left( \mathcal {H} \right) }\) denotes the set of bounded linear, self-adjoint, strongly positive operators on \(\mathcal {H}\). For \(M\in \mathcal {M} {\left( \mathcal {H} \right) }\) and all \(x,y\in \mathcal {H}\), the M-induced inner product and norm are denoted and defined by \( {\left\langle x, y \right\rangle }_M:= {\left\langle x, My \right\rangle }\) and \( {\left\| x \right\| }_M = \sqrt{ {\left\langle x, Mx \right\rangle }}\), respectively.

The power set of \(\mathcal {H}\) is denoted by \(2^\mathcal {H}\). A map \(A:\mathcal {H}\rightarrow 2^{\mathcal {H}}\) is characterized by its graph \({\text {gra}}(A) = {\left\{ {\left( x, u \right) }\in \mathcal {H}\times \mathcal {H} : u\in Ax \right\} }\). An operator \(A:\mathcal {H}\rightarrow 2^{\mathcal {H}}\) is monotone if \( {\left\langle u-v, x-y \right\rangle }\ge 0\) for all \( {\left( x, u \right) }, {\left( y, v \right) }\in {\text {gra}}(A)\). A monotone operator \(A:\mathcal {H}\rightarrow 2^{\mathcal {H}}\) is maximally monotone if there exists no monotone operator \(B:\mathcal {H}\rightarrow 2^\mathcal {H}\) such that \({\text {gra}}(B)\) properly contains \({\text {gra}}(A)\).

Let \(M\in \mathcal {M} {\left( \mathcal {H} \right) }\). An operator \(T:\mathcal {H}\rightarrow \mathcal {H}\) is said to be

-

(i)

L-Lipschitz continuous (\(L \ge 0\)) w.r.t. \( {\left\| \cdot \right\| }_M\) if

$$\begin{aligned} {\left\| Tx-Ty \right\| }_{M^{-1}}\le L {\left\| x-y \right\| }_M \qquad \text {for all } x,y\in \mathcal {H}; \end{aligned}$$ -

(ii)

\(\frac{1}{\beta }\)-cocoercive (\(\beta \ge 0\)) w.r.t. \( {\left\| \cdot \right\| }_M\) if

$$\begin{aligned} \beta {\left\langle Tx -Ty, x-y \right\rangle }\ge {\left\| Tx-Ty \right\| }_{M^{-1}}^2\qquad \text {for all } x,y\in \mathcal {H}; \end{aligned}$$ -

(iii)

nonexpansive if it is 1-Lipschitz continuous w.r.t. \( {\left\| \cdot \right\| }\).

Note that a \(\frac{1}{\beta }\)-cocoercive operator is \(\beta \)-Lipschitz continuous. This holds trivially for \(\beta =0\) and for \(\beta >0\) it follows from the Cauchy-Schwarz inequality.

3 Problem statement and proposed algorithm

We consider structured monotone inclusion problems of the form

that satisfy the following assumption.

Assumption 1

Let \(\beta \ge 0\) and \(M\in \mathcal {M} {\left( \mathcal {H} \right) }\) and assume that

-

(i)

\(A:\mathcal {H}\rightarrow 2^{\mathcal {H}}\) is maximally monotone,

-

(ii)

\(C:\mathcal {H}\rightarrow \mathcal {H}\) is \(\frac{1}{\beta }\)-cocoercive with respect to \( {\left\| \cdot \right\| }_M\),

-

(iii)

the solution set \(\text{zer}(A+C) := {\left\{ x\in \mathcal {H} : 0\in Ax+Cx \right\} }\) is nonempty.

Since operator C has a full domain and is maximally monotone as a cocoercive operator [9, Corollary 20.28], the operator \(A+C\) is also maximally monotone [9, Corollary 25.5].

We propose the following variant of FB splitting which incorporates momentum terms and deviations in order to solve the inclusion problem in (2). The algorithm has many degrees of freedom that we will specify later in this section and in the special cases found in Section 5.

At the core of the method is a forward–backward type step, found in Step 7 of Algorithm 1, which reduces to a nominal forward–backward step when \(z_n=y_n\). The update equations for the algorithm sequences \(y_n\), \(z_n\), and \(x_n\) involve linear combinations of momentum-like terms and the so-called deviations, \(u_n\) and \(v_n\). These deviations are arbitrarily chosen provided they satisfy safeguarding condition in (3), where \(\ell _{n}\) is defined in (4). When selecting the deviations, all other quantities involved in () are computable. These deviations offer a degree of flexibility that can be used to control the algorithm trajectory with the aim of improving convergence. In Section 5, we present examples of nontrivial deviations that a priori satisfy this condition, thus removing the need for online evaluation.

For the algorithm to be implementable, let alone convergent, the algorithm parameters must be constrained. For the FB step in Step 7 to be implementable, and the safeguarding step to be satisfied for some \(u_{n+1}\) and \(v_{n+1}\), we require for all \(n\in \mathbb {N}\) that \(\gamma _n\), \(\lambda _n\), \(\theta _n\), \(\hat{\theta }_n\), and \(\tilde{\theta }_n\) are strictly positive and the parameters \(\zeta _n\), \(\mu _n\), \(\alpha _n\), and \(\bar{\alpha }_n\) are non-negative. Fulfillment of these requirements allows for a trivial choice that satisfies the safeguarding condition (), namely \(u_{n+1}=v_{n+1}=0\), which results in a novel momentum-type forward–backward scheme. Additional requirements on some of these parameters, that are needed for the convergence analysis, are discussed in Section 4.

Algorithm 1 can be viewed as an extension of the algorithm in [33]. The key difference arises from the inclusion of additional monotonicity and cocoercivity inequalities (interpolation conditions) in our analysis compared to the analysis of [33]. In contrast to the analysis in [33], we utilize inequalities not only between the last iteration points and a solution but also between points generated during the last two iterations of our algorithm. This approach provides our algorithm with an additional degree of freedom, embodied by the parameter \(\mu _n\), that stems from the degree to which these extra interpolation conditions are incorporated into the analysis. This addition yields momentum-like terms in the updates, a less restrictive safeguarding condition, and the potential to derive convergence rate estimates for several involved quantities up to \(O(\frac{1}{n^2})\). Such rates are not achievable in [33] as setting \(\mu _n\) to zero reverts our algorithm to that of [33].

When \(\gamma _n=\gamma >0\) for every \(n\in \mathbb {N}\), the deviation vectors \(u_n\) and \(v_n\) can be chosen so that \(y_{n}=z_n\). In this case, Step 7 of Algorithm 1 simplifies to a FB step of the form

It is widely recognized that given appropriate selections of \(\gamma >0\), \(M\in \mathcal {M} {\left( \mathcal {H} \right) }\), A, and C, this FB step can reduce to iterations of well-known algorithms. These include the Chambolle–Pock algorithm [13], the Condat–Vũ method [17, 39], the Douglas–Rachford method [24], the Krasnosel’skiĭ–Mann iteration [9, Section 5.2], and the proximal gradient method. Consequently, Algorithm 1 can be applied to all these special cases.

3.1 Preview of special cases

This section previews some special cases of Algorithm 1, which we will explore in depth in Sections 5 and 6. Specifically, we consider cases where the sequence \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is non-decreasing and, for all \(n\in \mathbb {N}\), \(\gamma _n=\gamma >0\),

and \(u_n\) is parallel to the expression in the first norm in the \(\ell _{n}\) expression in () that contributes to the upper bound in the safeguarding condition in (). This yields \(z_n=y_n\) and as demonstrated in Section 5, with a particular choice of \(\mu _n\), Algorithm 1 becomes

that is initialized with \(y_{-1}=p_{-1}=x_0\) and \(u_0=0\). This algorithm, under the condition \(\kappa _n^2\le \zeta _{n+1}\), satisfies the safeguarding condition. As we will see later, \(\zeta _{n+1}\in [0,1]\) can be set arbitrarily (though stronger convergence conclusions can be drawn if \(\zeta _n<1\) for all \(n\in \mathbb {N}\)), indicating that as long as \(\kappa _n\in [-1,1]\), this new algorithm satisfies the safeguarding condition by design.

In Section 5, we present two special cases of this iteration that we numerically evaluate in Section 6. The first special case involves setting \(\lambda _0=1\) and, for all \(n\in \mathbb {N}\), \(\lambda _n=\lambda _0\) and \(\kappa _n=\kappa \in (-1,1)\). As shown in Section 5, the resulting algorithm can be written as

that is initialized with \(u_{-1}=u_0=0\). This algorithm converges weakly to a solution of the inclusion problem and setting \(\kappa =0\) gives \(u_n=0\) for all \(n\in \mathbb {N}\) and the algorithm reduces to the standard FB method.

The second special case is obtained by letting \(\kappa _{n}=\frac{\lambda _{n+1}-\lambda _0}{\lambda _{n+1}}\in [0,1)\) resulting, as shown in Section 5, in the algorithm:

that is initialized with \(y_{-1}=p_{-1}\). We will pay particular attention to the choice \(\lambda _n=\left( 1-\frac{\gamma \bar{\beta }}{4}\right) ^e(1+n)^e\) with \(e\in [0,1]\). The choice \(e=0\) gives the standard FB method and, as shown in Section 5.2.1, the choice \(e=1\) has the Halpern iteration analyzed in [23] and the accelerated proximal point method in [21] as special cases. By choosing an e value between the allowed extremes, we can interpolate between these methods. We show that \(\Vert p_n-y_n\Vert _M^2\) with this choice of \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) converges as \(\mathcal {O}\left( \frac{1}{n^{2e}}\right) \) (and, if \(\bar{\beta }>\beta \), as \(\mathcal {O}\left( \frac{1}{n}\right) \) for all \(e\in [0,1]\)) implying that the convergence rate can be tuned by selecting e. The requirements we will pose on the parameters in Section 4 to guarantee convergence state that \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) can grow at most linearly, meaning values of \(e>1\) are not viable and the best possible rate is \(\mathcal {O}\left( \frac{1}{n^2}\right) \) obtained by letting \(e=1\). As shown in Section 5, this case recovers the exact \(\mathcal {O}\left( \frac{1}{n^2}\right) \) rate results of the Halpern iteration in [23] and the accelerated proximal point method in [21].

In Section 6, we present numerical experiments on a simple skew-symmetric monotone inclusion problem, originating from the problem \(\max _{y\in \mathbb {R}}\min _{x\in \mathbb {R}}xy\). We find that both Algorithms 5 and 6 can significantly outperform the standard FB method and the Halpern iteration when \(\kappa \) and e are appropriately chosen.

4 Convergence analysis

In this section, we conduct a Lyapunov-based convergence analysis for Algorithm 1. In Theorem 1, we define a Lyapunov function, \(V_n\), based on the iterates generated by Algorithm 1, and present an identity that establishes a relation between \(V_{n+1}\) and \(V_n\). In Theorem 2, we introduce additional assumptions and derive a Lyapunov inequality that serves as the main tool for the convergence and convergence rate analysis in Theorem 3.

The proof of our first theorem is lengthy and only based on algebraic manipulations and is therefore deferred to Section 7. The equality in the proof is validated with symbolic calculations in

https://github.com/sbanert/incorporating-history-and-deviations.

Theorem 1

Suppose that Assumption 1 holds. Let \(x^\star \) be an arbitrary point in \(\text{zer}(A+C)\) and \(V_0= {\left\| x_0-x^\star \right\| }_M^2\), and based on the iterates generated by Algorithm 1, for all \(n\in \mathbb {N}\), let

where

and \(\ell _{n}\) given by (). Then,

holds for all \(n\in \mathbb {N}\).

The identity relation presented in Theorem 1 can provides meaningful insights on convergence (as we will see in Theorem 3) when all its constituent terms are non-negative. The non-negativity of these terms is contingent on the selection of the parameter sequences \( {\left( \zeta _n \right) }_{n\in \mathbb {N}}\), \( {\left( \gamma _n \right) }_{n\in \mathbb {N}}\), \( {\left( \mu _n \right) }_{n\in \mathbb {N}}\), and \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\). To reduce the degrees of freedom and facilitate a clearer exposition, we constrain ourselves to a non-decreasing \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\), and

for all \(n\in \mathbb {N}\). This implies \(\mu _n\ge 0\) and offers a slightly less general algorithm, yet it encompasses all special cases in Section 5. We next state our restrictions on the parameter sequences that will give rise to a meaningful Lyapunov inequality in Theorems 2 and 3.

Assumption 2

Assume that \(\varepsilon >0\), \(\epsilon _0,\epsilon _1\ge 0\), \(\lambda _0>0\), and that, for all \(n\in \mathbb {N}\), \(\mu _n=\frac{1}{\lambda _0}\lambda _n^2-\lambda _n\) and the following hold:

-

(i)

\(0\le \zeta _n\le 1-\epsilon _0\);

-

(ii)

\(\varepsilon \le \gamma _n\bar{\beta }\le 4-2\lambda _0-\varepsilon \);

-

(iii)

\(\lambda _{n+1}\ge \lambda _{n}\) and \(\gamma _n\lambda _n-\gamma _{n-1}\lambda _{n-1}\le \gamma _{n}\lambda _0-\epsilon _1\);

-

(iv)

\(\bar{\beta }\ge \beta \).

Remark 1

Assumption 2 (i) gives an upper bound for \(\zeta _n\) to be less than or equal to 1. The variable, \(\zeta _n\), multiplies \(\ell _{n-1}\) in the right-hand side of the safeguarding condition (), effectively contributing to limit the size of the ball from which the deviations \(u_n\) and \(v_n\) are selected. A consistent choice is \(\zeta _n=1-\epsilon _0\). Assumption 2 (ii) sets requirements on the relation between the initial relaxation parameter \(\lambda _0\) and the step size parameter \(\gamma _n\). An alternative expression of the upper bound is given by

implying that

This inequality will be used to bound certain algorithm parameters. Note that, similarly to in [19, 22], we can allow for a \(\gamma _n>\frac{2}{\beta }\) with the trade-off of using a relaxation parameter \(\lambda _0<1\). Assumption 2 (iii) states that \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is non-decreasing, resulting in \(\mu _n\ge 0\), and enforces a linear growth upper bound since \(\gamma _n\) is both positive and upper bounded. We will later see that our algorithm can converge as \(\mathcal {O}(\frac{1}{\lambda _n^2})\), with this upper bound resulting in a best possible convergence rate of \(\mathcal {O}(\frac{1}{n^2})\). Finally, Assumption 2 (iv) sets requirements on \(\bar{\beta }\). While the choice \(\bar{\beta }=\beta \) always works, selecting \(\bar{\beta }>\beta \) guarantees convergence of certain parameter sequences. Note that when \(\lambda _0=1\) and \(\bar{\beta }=\beta \), it follows from Assumption 2 (ii) that \(\gamma _n\le \frac{2-\varepsilon }{\beta }\), aligning with the conventional step size upper bound for forward–backward splitting.

Our convergence analysis requires that specific parameter sequences are non-negative or, in certain cases, are lower bounded by a positive number. Before showing that Assumption 2 ensures this, we provide expressions for the following sequences defined in Algorithm 1 in terms of \(\lambda _n\), \(\gamma _n\), and \(\bar{\beta }\):

Note that the final four quantities are quadratic in \(\lambda _n\).

Proposition 1

Consider the quantities defined in Algorithm 1 and suppose that Assumption 2 holds. The parameter sequences \( {\left( \theta _n \right) }_{n\in \mathbb {N}}\), \( {\left( \hat{\theta }_n \right) }_{n\in \mathbb {N}}\), \( {\left( \tilde{\theta }_n \right) }_{n\in \mathbb {N}}\), \( {\left( \frac{\tilde{\theta }_n}{\hat{\theta }_n} \right) }_{n\in \mathbb {N}}\), \( {\left( \frac{\hat{\theta }_n}{\theta _n} \right) }_{n\in \mathbb {N}}\), and \( {\left( \frac{\theta _n}{\lambda _n^2} \right) }_{n\in \mathbb {N}}\) are lower bounded by a positive constant and \( {\left( \alpha _n \right) }_{n\in \mathbb {N}}\) and \( {\left( \gamma _n(\lambda _n-\bar{\alpha }_{n+1}\lambda _{n+1})-\epsilon _1 \right) }_{n\in \mathbb {N}}\) are non-negative.

Proof

Let us first consider \(\theta _n\), \(\hat{\theta }_n\), and \(\tilde{\theta }_n\). Then Assumption 2, (12), (10), and (11) immediately imply that \(\theta _n\), \(\hat{\theta }_n\), and \(\tilde{\theta }_n\) are lower bounded by a positive constant. Moreover, since \(2-\lambda _0\gamma _n\bar{\beta }\ge \varepsilon \lambda _0>0\) by (11), we have

and since from (10), \(4-\gamma _n\bar{\beta }-2\lambda _0\ge \varepsilon >0\), we have

and

That \(\alpha _n\ge 0\) follows trivially from nonegativity of \(\mu _n\) and that \(\lambda _n>0\). Finally,

by Assumption 2 (iii).\(\square \)

In the following result, we introduce a so-called Lyapunov inequality that serves as the foundation of our main convergence results.

Theorem 2

Suppose that Assumptions 1 and 2 hold. Let \(x^\star \) be an arbitrary point in \(\text{zer}(A+C)\), and the sequences \( {\left( \ell _{n} \right) }_{n\in \mathbb {N}}\), \( {\left( V_n \right) }_{n\in \mathbb {N}}\), and \( {\left( \phi _n \right) }_{n\in \mathbb {N}}\) be constructed in terms of the iterates obtained from Algorithm 1, as per (), (7), and (8) respectively. Then, for all \(n\in \mathbb {N}\),

-

(i)

the safeguarding upper bound

$$\begin{aligned} \zeta _{n+1}\ell _{n} \ge \frac{\zeta _{n+1}\theta _n}{2} {\left\| p_n-x_n+\alpha _n(x_n-p_{n-1}) +\frac{\gamma _n\bar{\beta }\lambda _n^2}{\hat{\theta }_n}u_n - \frac{2\bar{\theta }_n}{\theta _n}v_n \right\| }_M^2\ge 0; \end{aligned}$$ -

(ii)

the term safeguarded by \(\zeta _{n+1}\ell _{n}\),

$$\begin{aligned} \left( \lambda _{n+1}+\mu _{n+1}\right) \left( \frac{\tilde{\theta }_{n+1}}{\hat{\theta }_{n+1}} {\left\| u_{n+1} \right\| }_{M}^2 + \frac{\hat{\theta }_{n+1}}{\theta _{n+1}} {\left\| v_{n+1} \right\| }_{M}^2\right) \ge 0; \end{aligned}$$ -

(iii)

\(\phi _n\ge 0\), more specifically, if \(\beta >0\):

$$\begin{aligned} \phi _n\ge \frac{\bar{\beta }}{4} {\left\| \frac{2}{\bar{\beta }}(Cy_n-Cx^\star )+M(p_n-y_n) \right\| }_{M^{-1}}^2+\frac{\bar{\beta }-\beta }{\bar{\beta }\beta } {\left\| Cy_n-Cx^\star \right\| }_{M^{-1}}^2\ge 0, \end{aligned}$$and if \(\beta =0\):

$$\begin{aligned} \phi _n\ge \frac{\bar{\beta }}{4} {\left\| p_n-y_n \right\| }_M^2\ge 0; \end{aligned}$$ -

(iv)

the Lyapunov function \(V_n\ge 0\);

-

(v)

the following Lyapunov inequality holds

$$\begin{aligned} V_{n+1}+2\gamma _n(\lambda _n-\bar{\alpha }_{n+1}\lambda _{n+1})\phi _n + (1-\zeta _n)\ell _{n-1} \le V_n. \end{aligned}$$

Proof

Theorem 2 (i). In view of () and since, by Assumption 2 and Proposition 1, \(2\mu _{n}\gamma _{n}\ge 0\), \(\theta _n>0\), and \(\zeta _{n+1}\ge 0\), the statement reduces to showing that

It follows from Step 7 of Algorithm 1 that

Combined with montonicity of A, this gives

If \(\beta =0\), C is constant, implying that the right-hand side reduces to the first term defining \(\widehat{\varphi }_n\). Therefore, \(\widehat{\varphi }_n\ge 0\) since it is constructed by adding two non-negative terms. It remains to show \(\widehat{\varphi }_n\ge 0\) when \(\beta >0\). From \(\frac{1}{\beta }\)-cocoercivity of C w.r.t. \( {\left\| \cdot \right\| }_M\), we have

Adding (14) and (15) to form \(\varphi _n\) gives

where we have used that

holds for all \(t,s\in \mathcal {H}\). Since \(\varphi _n\ge 0\) by construction and \(\bar{\beta }\ge \beta > 0\), this implies that \(\widehat{\varphi }_n\ge 0\).

Theorem 2 (ii). This follows by Assumption 2 and Proposition 1 that imply strict positiveness of \(\lambda _{n+1}+\mu _{n+1}\), \(\frac{\tilde{\theta }_{n+1}}{\hat{\theta }_{n+1}}\), and \(\frac{\hat{\theta }_{n+1}}{\theta _{n+1}}\).

Theorem 2 (iii). Recall that

as defined in (8). Since \(x^\star \in \text {zer}(A+C)\), we have \(-Cx^\star \in {Ax^\star }\), which combined with (13) and montonicity of A gives

If \(\beta =0\), C is constant and the right hand side reduces to \(\phi _n-\frac{\bar{\beta }}{4}\Vert y_n-p_n\Vert _M^2\), which is non-negative for all \(n\in \mathbb {N}\) by (21). Let us now consider \(\beta >0\). From \(\frac{1}{\beta }\)-cocoercivity of C w.r.t. \( {\left\| \cdot \right\| }_M\), we have

Construct \(\widehat{\phi }_n\) by adding (21) and (22) to get

where (19) is used in the next to last equality. The result therefore follows since \(\bar{\beta }\ge \beta >0\) and \(\widehat{\phi }_n\ge 0\) by construction.

Theorem 2 (iv). Since \(\ell _{n}\ge 0\) by Theorem 2 (i), \(\phi _n\ge 0\) by Theorem 2 (iii), and the coefficients in front of \(\phi _n\) in the definition of \(V_n\) in (7) are non-negative by Assumption 2 and Proposition 1, we conclude that \(V_n\ge 0\).

Theorem 2 (v). By Theorem 1, we have

Using this equality and () gives

Moving \(\zeta _n\ell _{n-1}\) to the other side gives the desired result. This concludes the proof.\(\square \)

This result demonstrates the feasibility of selecting \(u_{n+1}\) and \(v_{n+1}\) that meet the safeguarding condition. The obvious selection of \(u_{n+1}=v_{n+1}=0\) is always viable, but we will provide in Section 5 a nontrivial choice that consistently satisfies the condition and can enhance convergence. Furthermore, Theorem 2 introduces a valuable Lyapunov inequality that will underpin our conclusions on convergence. Before stating these convergence results, we show boundedness of certain coefficient sequences.

Lemma 1

Consider the quantities defined in Algorithm 1 and suppose that Assumption 2 holds. The sequences \( {\left( \frac{\tilde{\theta }_n}{\hat{\theta }_n} \right) }_{n\in \mathbb {N}}\), \( {\left( \frac{\lambda _n^2}{\hat{\theta }_n} \right) }_{n\in \mathbb {N}}\), \( {\left( \frac{\bar{\theta }_n}{\theta _n} \right) }_{n\in \mathbb {N}}\), as well as \( {\left( \frac{(2-\gamma _n\bar{\beta })(\lambda _n+\mu _n)}{\theta _n} \right) }_{n\in \mathbb {N}}\) are bounded.

Proof

We have \(\frac{\tilde{\theta }_n}{\hat{\theta }_n}\ge 0\) by Proposition 1 and

by (11). Further, \(\frac{\lambda _n^2}{\hat{\theta }_n}\ge 0\) by Proposition 1 and

and, since \(\gamma _n\bar{\beta }\in (0,4)\) by Assumption 2 (ii),

This completes the proof.\(\square \)

Theorem 3

Suppose that Assumptions 1 and 2 hold. Let \(x^\star \) be an arbitrary point in \(\text{zer}(A+C)\), and the sequences \( {\left( \ell _{n} \right) }_{n\in \mathbb {N}}\), \( {\left( V_n \right) }_{n\in \mathbb {N}}\), and \( {\left( \phi _n \right) }_{n\in \mathbb {N}}\) be constructed in terms of the iterates obtained from Algorithm 1, as per (), (7), and (8) respectively. Then the following hold:

-

(i)

the sequence \( {\left( V_n \right) }_{n\in \mathbb {N}}\) is convergent and \(\ell _{n}\le V_{n+1}\le \Vert x_0-x^\star \Vert _M^2\);

-

(ii)

if \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) increasing and \(\lambda _n\rightarrow \infty \) as \(n\rightarrow \infty \), then

$$\begin{aligned} {\left\| p_n-x_n+\alpha _n(x_n-p_{n-1}) +\frac{\gamma _n\bar{\beta }\lambda _n^2}{\hat{\theta }_n}u_n - \frac{2\bar{\theta }_n}{\theta _n}v_n \right\| }_M^2\le \frac{2\lambda _0\Vert x_0-x^\star \Vert _M^2}{(4-\gamma _n\bar{\beta }-2\lambda _0)\lambda _n^2}; \end{aligned}$$ -

(iii)

if \(\epsilon _0>0\), then \( {\left( \ell _{n} \right) }_{n\in \mathbb {N}}\) is summable;

-

(iv)

if \(\epsilon _0>0\), then \(\lambda _n u_n\rightarrow 0\), \(\lambda _nv_n\rightarrow 0\), and \(x_{n+1}-x_n\rightarrow 0\) as \(n\rightarrow \infty \);

-

(v)

if \(\epsilon _1>0\), then \( {\left( \phi _n \right) }_{n\in \mathbb {N}}\) is summable;

-

(vi)

if \(\epsilon _1>0\) and \(\bar{\beta }>\beta \), then \(\Vert y_n-p_n\Vert _M^2\) is summable;

-

(vii)

if \(\epsilon _0,\epsilon _1>0\), \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is bounded, and \(p_n-x_n\rightarrow 0\), \(y_n-p_n\rightarrow 0\), and \(z_n-p_n\rightarrow 0\) as \(n\rightarrow \infty \), then \(p_n\rightharpoonup x^\star \);

-

(viii)

if \(\epsilon _0,\epsilon _1>0\) and \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is constant, then \(p_n\rightharpoonup x^\star \).

Proof

We base our convergence results on

from Theorem 2.

Theorem 3 (i). Recall that the sequences \( {\left( \ell _{n} \right) }_{n\in \mathbb {N}}\), \( {\left( V_n \right) }_{n\in \mathbb {N}}\), and \( {\left( \phi _n \right) }_{n\in \mathbb {N}}\) are non-negative by Theorem 2. Additionally, by Assumption 2 (i) and Proposition 1 respectively, the quantities \(1-\zeta _n\) and \(\gamma _n(\lambda _n-\bar{\alpha }_{n+1}\lambda _{n+1})\) are non-negative for all \(n\in \mathbb {N}\); and thus, the quantity \(2\gamma _n(\lambda _n-\bar{\alpha }_{n+1}\lambda _{n+1})\phi _n + {\left( 1-\zeta _n \right) }\ell _{n-1}\) is non-negative for all \(n\in \mathbb {N}\). Therefore, by [9, Lemma 5.31] the sequence \( {\left( V_n \right) }_{n\in \mathbb {N}}\) converges and \(V_{n+1}\le V_n\) for all \(n\in \mathbb {N}\) and since \(\lambda _{n+1}\gamma _{n+1}\alpha _{n+1}\ge 0\) by Assumption 2 and Proposition 1,

Theorem 3 (ii). Theorem 2 (i) states that

where \(\ell _{n}\le \Vert x_0-x^\star \Vert _M^2\) by Theorem 3 (i). Inserting the definition of \(\theta _n\) and rearranging gives the result.

Theorem 3 (iii). That \(\epsilon _0>0\) implies that \(1-\zeta _n>\epsilon _0>0\) by Assumption 2 (i) and a telescope summation of (23) gives summability of \( {\left( \ell _{n} \right) }_{n\in \mathbb {N}}\).

Theorem 3 (iv). To show that \( {\left( \lambda _nu_n \right) }_{n\in \mathbb {N}}\) and \( {\left( \lambda _nv_n \right) }_{n\in \mathbb {N}}\) converge to 0, we note that due to (), the summability of \( {\left( \ell _{n} \right) }_{n\in \mathbb {N}}\) implies summability of

Hence, as, for all \(n\in \mathbb {N}\), by Proposition 1 and Assumption 2 the coefficients in the expression above are strictly positive, the sequences \( {\left( \lambda _nu_n \right) }_{n\in \mathbb {N}}\) and \( {\left( \lambda _nv_n \right) }_{n\in \mathbb {N}}\) must be convergent to zero.

Next, we show convergence to zero of \( {\left( x_{n+1}-x_n \right) }_{n\in \mathbb {N}}\). Since \( {\left( \ell _{n} \right) }_{n\in \mathbb {N}}\) is summable, Theorem 2 (i) implies that

is summable. Using Lemma 2 to replace the expression inside the norm above by Lemma 2 (iii) and taking the factor \(\frac{1}{\lambda _n}\) out of the norm, we get

which is a summable sequence too. Since, by Proposition 1, \(\frac{\theta _n}{2\lambda _n^2}\) is lower bounded by a positive constant and the coefficients multiplying \(\lambda _nu_n\) and \(\lambda _nv_n\) are bounded by Lemma 1, we conclude, since \(\lambda _nu_n\rightarrow 0\) and \(\lambda _nv_n\rightarrow 0\) as \(n\rightarrow \infty \), that \(x_{n+1}-x_n\rightarrow 0\) as \(n\rightarrow \infty \).

Theorem 3 (v). Proposition 1 implies that \(2\gamma _n(\lambda _n-\bar{\alpha }_{n+1}\lambda _{n+1})\ge 2\epsilon _1>0\) and a telescope summation of (23) gives summability of \( {\left( \phi _n \right) }_{n\in \mathbb {N}}\).

Theorem 3 (vi). Let \(\beta =0\). Then Theorem 2 (iii) immediately gives the result due to summability of \( {\left( \phi _n \right) }_{n\in \mathbb {N}}\). Let \(\beta >0\). Then Theorem 2 (iii) and \(\bar{\beta }>\beta \) imply that

are summable. Since

we conclude that \( {\left( \Vert p_n-y_n\Vert _M^2 \right) }_{n\in \mathbb {N}}\) is summable.

Theorem 3 (vii). We first show that \(\Vert x_n-x^\star \Vert _M^2\) converges. From Theorem 3 (i), we know that

converges. Since \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is bounded so is \(\lambda _{n+1}\gamma _{n+1}\alpha _{n+1}\) and by Theorem 3 (iii) and Theorem 3 (v) we conclude that \(2\lambda _{n+1}\gamma _{n+1}\alpha _{n+1}\phi _n + \ell _{n}\rightarrow 0\) as \(n\rightarrow \infty \). This implies that \( {\left\| x_{n+1}-x^\star \right\| }_M^2\) converges.

Now, since \(\Vert p_n-x_n\Vert _M^2\rightarrow 0\) as \(n\rightarrow \infty \) and \(\Vert x_n-x^\star \Vert \le D\) for all \(n\in \mathbb {N}\) and some \(D\in (0,\infty )\), we conclude that

as \(n\rightarrow \infty \). Therefore also \(\Vert p_n-x^\star \Vert ^2\) converges and \(p_n\) has weakly convergent subsequences. Let \((p_{n_k})_{k\in \mathbb {N}}\) be one such subsequence with weak limit point \(\bar{x}\) and construct corresponding subsequences \((y_{n_k})_{k\in \mathbb {N}}\), \((z_{n_k})_{k\in \mathbb {N}}\), and \((\gamma _{n_k})_{k\in \mathbb {N}}\). Now, Step 7 in Algorithm 1 can equivalently be written as

which is equivalent to that

The right hand side converges to 0 as \(k\rightarrow \infty \) since \(z_{n_k}-p_{n_k}\rightarrow 0\) and \(p_{n_k}-y_{n_k}\rightarrow 0\) as \(k\rightarrow \infty \) and due to Lipschitz continuity of C, the uniform positive lower bound on \(\gamma _n\) in Assumption 2 (ii), and boundedness of \(M\in \mathcal {M} {\left( \mathcal {H} \right) }\). By weak-strong closedness of the maximal monotone operator \((A+C)\) (which is maximally monotone since C has full domain) the limit point satisfies \(0\in (A+C)\bar{x}\) by [9, Proposition 20.38]. The weak convergence result now follows from [9, Lemma 2.47].

Theorem 3 (viii). In view of Theorem 3 (vii), it is enough to show that \(p_n-x_n\rightarrow 0\), \(y_n-p_n\rightarrow 0\), and \(z_n-p_n\rightarrow 0\) as \(n\rightarrow \infty \). Since \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is constant, \(\mu _n=\frac{1}{\lambda _0}\lambda _n^2-\lambda _n=0\) and \(\alpha _n=0\). Summability of \(\ell _{n}\) therefore implies through Theorem 2 (i) that

is summable. Since \(\theta _n\) is lower bounded by a positive constant due to Proposition 1 and the coefficients in front of \(u_n\) and \(v_n\) are bounded due to Lemma 1, we conclude, since \(u_n\rightarrow 0\) and \(v_n\rightarrow 0\) by Theorem 3 (iv) and Assumption 2, that \(p_n-x_n\rightarrow 0\) as \(n\rightarrow \infty \). From the \(x_n\) update,

Theorem 3 (iv), and since \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is constant, we conclude that \(p_n-z_n\rightarrow 0\) as \(n\rightarrow \infty \). Finally, from the \(y_n\) update,

and since \(u_n\rightarrow 0\), we conclude that \(y_n-x_n\rightarrow 0\), which implies that \(y_n-p_n\rightarrow 0\) as \(n\rightarrow \infty \). This concludes the proof.\(\square \)

We could derive convergence properties for other quantities involved, yet we limit our discussion to these results as they are sufficient for our needs for the special cases. Notably, the conclusion in Theorem 3 (viii) aligns with a similar result presented in the authors’ previous work [33]. This is due to \(\mu _n=0\), causing our algorithm to reduce to the one presented in that work.

5 A special case

The safeguarding condition in () typically requires the evaluation of four norms and a scalar product. However, if the vectors inside the norms are parallel, the number of norm evaluations is reduced. This section introduces an algorithm wherein we choose \(u_{n}\) and \(v_{n}\) to ensure \(y_n=z_n\) for all \(n\in \mathbb {N}\) and such that the safeguarding condition reduces to a scalar condition that is readily verified offline. The algorithm we propose is as follows:

where \(y_{-1}=p_{-1}=x_0\), \(u_0=0\), and a constant step size \(\gamma >0\) is used. With a constant step size, Assumption 2 (iii) reduces to

which gives an increasing \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) sequence that grows at most linearly in n.

Prior to presenting the convergence results for this algorithm, we specify a particular form for the sequence \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\). This form separates the growth in n from the selection of \(\lambda _0\).

Assumption 3

Let \(\lambda _0>0\). Assume that \(f:{\textrm{dom}} f\rightarrow \mathbb {R}\), with \(\mathrm {int\,dom} f\supseteq \{x\in \mathbb {R}:x\ge 0\}\), is differentiable (on the interior of its domain), concave, and non-decreasing, and satisfies \(f(0)=1\) and \(f^\prime (0)\in [0,1]\). Let, for all \(n\in \mathbb {N}\),

Proposition 2

Suppose that Assumption 3 holds, then (27) and Assumption 2 (iii) hold with \(\epsilon _1=0\). Suppose in addition that \(f^\prime (0)<1\), then there exists \(\epsilon _1>0\) such that (27) and Assumption 2 (iii) hold.

Proof

That f is non-decreasing trivially implies \(\lambda _{n}\le \lambda _{n+1}\). Concavity implies \(f^{\prime }(x)\le f^{\prime }(0)\) for all \(x\ge 0\). Therefore

and \(\lambda _{n+1}\le (2+n)\lambda _0\). Let \(a:=f^{\prime }(0)\in [0,1)\), then

and with \(\epsilon _1=(1-a)\gamma \lambda _0>0\), we get \(\lambda _{n+1}\le (2+n)\lambda _0-(n+1)\frac{\epsilon _1}{\gamma }\), as desired.\(\square \)

Example 1

Examples of functions f that satisfy Assumption 3 for which an \(\epsilon _1>0\) exists include functions that, for all \(n\in \mathbb {N}\), satisfy \(f(n)=(1+n)^e\) with \(e\in [0,1)\), \(f(n)=\frac{\log (n+2)}{\log (2)}\), and \(f(n)=1\). The choice \(f(n)=(1+n)\) requires that \(\epsilon _1=0\).

We will use this construction of \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) throughout this section and specialize Assumption 2 as follows.

Assumption 4

Assume that \(\varepsilon >0\), \(\epsilon _0\ge 0\), \(\lambda _0>0\), and that, for all \(n\in \mathbb {N}\), \(\mu _n=\frac{1}{\lambda _0}\lambda _n^2-\lambda _n\) and the following hold:

-

(i)

\(0\le \kappa _n^2\le 1-\epsilon _0\);

-

(ii)

\(\varepsilon \le \gamma \bar{\beta }\le 4-2\lambda _0-\varepsilon \);

-

(iii)

\( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is given by Assumption 3;

-

(iv)

\(\bar{\beta }\ge \beta \).

The differences to Assumption 2 are that \(\zeta _{n}\) in Assumption 2 (i) has been replaced with \(\kappa _n^2\) in Assumption 4 (i) and that Assumption 2 (iii) has been replaced with Assumption 4 (iii). If, e.g., \(\kappa _n^2\le \zeta _{n+1}\), Proposition 2 implies that Assumption 2 holds if Assumption 4 does.

We are ready to state our convergence results for the algorithm in (26).

Proposition 3

Suppose that Assumptions 1 and 4 hold. Then the following hold for (26):

-

(i)

if \(f(n)\rightarrow \infty \) as \(n\rightarrow \infty \), then

$$\begin{aligned} {\left\| p_n-x_n+\frac{\lambda _n-\lambda _0}{\lambda _n}(x_n-p_{n-1}) - \frac{2-\gamma \bar{\beta }-2\lambda _0}{4-\gamma \bar{\beta }-2\lambda _0}u_n \right\| }_M^2\le \frac{2\Vert y_0-x^\star \Vert _M^2}{(4-\gamma \bar{\beta }-2\lambda _0)\lambda _0f(n)^2}; \end{aligned}$$ -

(ii)

if \(\bar{\beta }>\beta \) and \(f^{\prime }(0)<1\), then \( {\left( \Vert p_n-y_n\Vert ^2 \right) }_{n\in \mathbb {N}}\) is summable;

-

(iii)

if \( {\left( \kappa _n^2 \right) }_{n\in \mathbb {N}}\) is upper bounded by a constant less than 1 and \(f^{\prime }(0)=0\) (i.e., \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is constant), then \(p_n\rightharpoonup x^\star \in \text{zer}(A+C)\).

Proof

We first show that the algorithm is a special case of Algorithm 1. First note that \(\gamma _n=\gamma \) implies that \(\alpha _n=\bar{\alpha }_n\) for all \(n\in \mathbb {N}\). Let

for all \(n\in \mathbb {N}\), which implies that

since \(2-\lambda _0\gamma _n\bar{\beta }>0\) by (11). Let us show by induction that this implies \(y_n=z_n\) for all \(n\in \mathbb {N}\) in Algorithm 1. Since \(y_{-1}=z_{-1}=p_{-1}=x_0\) and \(u_0=0\), we get \(y_0=z_0\). Now, assume that \(y_k=z_k\) for all \(k\in \{-1,\ldots ,n\}\), then, since \(\alpha _n=\bar{\alpha }_n\) and due to (28),

Therefore, the \(z_{n+1}\) update of Algorithm 1 can be removed and all \(z_n\) instances replaced by \(y_n\) in (26). Moreover, the \(y_n\) and \(x_n\) updates of (26) are obtained from the corresponding sequences in Algorithm 1 by inserting \(\alpha _n=\frac{\lambda _n-\lambda _0}{\lambda _n}\).

It remains to show that the \(u_{n+1}\) update satisfies the safeguarding condition. We use Theorem 2 (i), \(\alpha _n=\frac{\lambda _n-\lambda _0}{\lambda _n}\), and the equality

to conclude that

Now, since \(\lambda _{n+1}+\mu _{n+1}=\frac{\lambda _n^2}{\lambda _0}\), we conclude that if

the safeguarding condition in Algorithm 1 is satisfied. The vectors \(u_{n+1}\) and \(v_{n+1}\) are scalars times the quantity inside this norm. Therefore, the safeguarding condition reduces to the scalar condition

Inserting the quantities in (12) and \(\mu _n=\frac{1}{\lambda _0}\lambda _n^2-\lambda _n\) and multiplying by \(\frac{2}{\theta _n}>0\) gives

The left-hand side satisfies

leading to the safeguarding condition

which is satisfied by letting \(\zeta _{n+1}=\kappa _n^2\).

Using Proposition 2 and the choice \(\zeta _{n+1}=\kappa _n^2\), we conclude that Assumption 2 holds since Assumption 4 does and we can use Theorem 3 to prove convergence.

That Proposition 3 (i) holds follows from Theorem 3 (ii) since \(y_0=x_0\) and by updating the norm expression using (29).

Proposition 3 (ii) follows from Theorem 3 (vi) due to Proposition 2 that ensures \(\epsilon _1>0\).

Proposition 3 (iii) follows from Theorem 3 (viii) due to Proposition 2 and that \(\kappa _n^2=\zeta _{n+1}\) is upper bounded by a constant less than 1, which implies \(\epsilon _0>0\).\(\square \)

Remark 2

The algorithm produces points that satisfy

and Proposition 3 (ii) implies, if \(f^\prime (0)<1\) and \(\bar{\beta }>\beta \), that \(M(y_n-p_n)-C(y_n-p_n)\rightarrow 0\) as \(n\rightarrow \infty \) due to boundedness of M and Lipschitz continuity of C. Although \( {\left( p_n \right) }_{n\in \mathbb {N}}\) may not converge, it satisfies the monotone inclusion (2) in the limit. If in addition \(\Vert p_n-x^\star \Vert _M\) converges, we can conclude that \(p_n\rightharpoonup x^\star \in \text{zer}(A+C)\).

A special case of (26) that we will evaluate numerically in Section 6 is found by letting \(\lambda _0=1\), and, for all \(n\in \mathbb {N}\), \(\lambda _n=\lambda _0\), and \(\kappa _n=\kappa \in (-1,1)\). Then (26) reduces to

which, since \(x_{n+1}=p_n-u_n\), can be written as

This algorithm is previewed in Section 3.1. Since \(\kappa _n=\kappa \in (-1,1)\) for all \(n\in \mathbb {N}\) and since \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is constant, Proposition 3 (iii) ensures that this algorithm produces a \(p_n\)-sequence that converges weakly to a solution.

5.1 Alternative formulation

We can eliminate the \(x_n\) sequence in (26) and express the algorithm solely in terms of \(y_n\), \(p_n\), and \(u_n\). The algorithm becomes

with \(y_{-1}=p_{-1}=x_0\) and \(u_0=0\).

Proposition 4

The algorithms in (26) and (31) produce the same \( {\left( y_n \right) }_{n\in \mathbb {N}}\) and \( {\left( p_n \right) }_{n\in \mathbb {N}}\) sequences, provided \(p_{-1}=y_{-1}=y_0=x_0\).

Proof

We remove the \(x_n\) sequence from (26) by inserting

that comes from the \(y_n\) update, into the \(x_{n+1}\) update. This gives \(x_{n+1}\) update

Multiplying by \(\frac{\lambda _0}{\lambda _{n+1}}\) gives

The \(u_{n+1}\) update becomes

This concludes the proof.\(\square \)

5.2 Fixed-point residual convergence rate

The convergent quantity in Proposition 3 (i) may be hard to interpret. In this section, we propose a special case of (26) and (31) such that this quantity is the fixed-point residual, \(p_n-y_n\), for the forward–backward mapping. This is achieved by letting \(\kappa _n=\frac{\lambda _{n+1}-\lambda _0}{\lambda _{n+1}}\), which implies that

and that the algorithm becomes

This algorithm converges as per the following result.

Proposition 5

Suppose that Assumptions 1 and 4 hold. Then the following hold for (32):

-

(i)

if \(f(n)\rightarrow \infty \) as \(n\rightarrow \infty \), then

$$\begin{aligned} {\left\| \frac{1}{\gamma }(p_n-y_n) \right\| }_M^2\le \frac{2\Vert y_0-x^\star \Vert _M^2}{\gamma ^2(4-\gamma \bar{\beta }-2\lambda _0)\lambda _0f(n)^2}; \end{aligned}$$ -

(ii)

if \(\bar{\beta }>\beta \) and \(f^{\prime }(0)<1\), then \( {\left( \Vert p_n-y_n\Vert ^2 \right) }_{n\in \mathbb {N}}\) is summable;

-

(iii)

if \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) is constant, the algorithm reduces to relaxed forward–backward splitting and \(p_n\rightharpoonup x^\star \in \text{zer}(A+C)\).

Proof

The claims follow from Propositions 3 and 4 by showing that, for all \(n\in \mathbb {N}\): the choice \(\kappa _n=\frac{\lambda _{n+1}-\lambda _0}{\lambda _{n+1}}\) in (31) gives (32); by noting that \(\kappa _n=\frac{\lambda _{n+1}-\lambda _0}{\lambda _{n+1}}\) satisfies Assumption 4 (i) with \(\epsilon _0>0\) if f (and consequently \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\)) is bounded and with \(\epsilon _0=0\) if f (and consequently \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\)) is unbounded; and by showing that the expression inside the norm in Proposition 3 (i) satisfies

We will first show that the \(u_{n+1}\) update in (31) with \(\kappa _n=\frac{\lambda _{n+1}-\lambda _0}{\lambda _{n+1}}\), i.e.,

implies that \(u_n=\frac{\lambda _n-\lambda _0}{\lambda _n}\frac{(4-\gamma \bar{\beta }-2\lambda _0)}{2}(p_{n-1}-y_{n-1})\) for all \(n\in \mathbb {N}\). For \(n=0\), since \(p_{-1}=y_{-1}=u_0=0\), we get

For \(n\ge 1\), we use induction. Assume that \(u_n=\frac{\lambda _n-\lambda _0}{\lambda _n}\frac{(4-\gamma \bar{\beta }-2\lambda _0)}{2}(p_{n-1}-y_{n-1})\), then

since the last two terms in (34) cancel, which is what we wanted to show.

The \(y_{n+1}\) update in (31) with \(u_{n+1}\) defined in (35) inserted satisfies

which equals the \(y_{n+1}\) update in (32).

Finally, using the \(y_n\) update equation in (26), i.e, \(\frac{\lambda _0}{\lambda _n}x_n=y_n-\frac{\lambda _n-\lambda _0}{\lambda _n}y_{n-1}-u_n\) and the \(u_{n+1}\) definition in (35), we conclude that

This completes the proof.\(\square \)

One of the special cases previewed in Section 3.1 is obtained from (32) by letting \(\lambda _n=\left( 1-\frac{\gamma \bar{\beta }}{4}\right) ^e(1+n)^{e}\) for all \(n\in \mathbb {N}\). The resulting algorithm is numerically evaluated in Section 6 and enjoys the following convergence properties.

Corollary 1

Suppose that Assumptions 1 and 4 hold with \(\lambda _0=\left( 1-\frac{\gamma \bar{\beta }}{4}\right) ^e\) and let \(f(n)=(1+n)^{e}\) with \(e\in [0,1]\). Then the following hold for (32):

-

(i)

if \(e>0\), then

$$\begin{aligned} {\left\| \frac{1}{\gamma }(p_n-y_n) \right\| }_M^2\le \frac{2\Vert y_0-x^\star \Vert _M^2}{\gamma ^2(4-\gamma \bar{\beta }-2\lambda _0)\lambda _0(1+n)^{2e}}; \end{aligned}$$ -

(ii)

if \(\bar{\beta }>\beta \) and \(e<1\), then \( {\left( \Vert p_n-y_n\Vert ^2 \right) }_{n\in \mathbb {N}}\) is summable;

-

(iii)

if \(e=0\), the algorithm reduces to forward–backward splitting and \(p_n\rightharpoonup x^\star \in \text {zer}(A+C)\).

Corollary 1 (i) states that \( {\left( \Vert p_n-y_n\Vert ^2 \right) }_{n\in \mathbb {N}}\) converges as \(\mathcal {O}\left( \frac{1}{n^{2e}}\right) \). When \(\bar{\beta }>\beta \) and \(e<1\). Corollary 1 (ii) gives that \( {\left( \Vert p_n-y_n\Vert ^2 \right) }_{n\in \mathbb {N}}\) converges as \(\mathcal {O}\left( \frac{1}{n}\right) \) due to its summability. This gives a combined \(\mathcal {O}\left( \min \left( \frac{1}{n},\frac{1}{n^{2e}}\right) \right) \) convergence rate and implies tunability of the convergence rate by selecting \(e\in [0,1]\). Letting \(e=1\) implies that our algorithm, as we will see in Section 5.2.1, reduces to the accelerated proximal point method and the Halpern iteration that converge as \(O\left( \frac{1}{n^2}\right) \).

5.2.1 Accelerated proximal point method and Halpern iteration

Letting \(f(n)=1+n\) and \(\lambda _0=\left( 1-\frac{\gamma \bar{\beta }}{4}\right) \) to get \(\lambda _n=\left( 1-\frac{\gamma \bar{\beta }}{4}\right) (1+n)\), we get that the \(y_{n+1}\) update of (32) satisfies

and algorithm (32) becomes

From Corollary 1, we conclude since \(e=1\) and by letting \(\beta =\bar{\beta }\) that this algorithm converges as

By letting \(C=0\) and consequently \(\beta =0\), we arrive at the accelerated proximal point method in [21] and the \(O(\frac{1}{n^2})\) convergence rate results found in [21] is recovered by Corollary 1.

We evaluate the algorithm in (32) with \(\lambda _n\) in (37) with the different choices of e specified in the legend to the right. The upper figure shows the 100 first \(p_n\)-iterates for the different e and the lower figure shows the distance to the unique solution. The algorithm with \(e=0\) is standard forward–backward splitting (here, only the backward part is used) and the algorithm with \(e=1\) is the accelerated proximal point in [21]. For small e, we get a strictly decreasing distance to solution, while large e gives an oscillatory behavior. Our new algorithms with e chosen around the middle of the allowed range strike a good balance and achieves superior performance compared to the previously known methods

If we let \( A = 0 \), \(\bar{\beta }= \beta \), and \(\gamma \beta = 2\), the forward–backward mapping in (36) satisfies

where \((M-\frac{2}{\beta } C): = N\) is nonexpansive in the \(\Vert \cdot \Vert _M\) norm. This implies that the algorithm aims at solving the nonexpansive fixed-point equation \(y = Ny\) by iterating

which is the Halpern iteration studied in [23]. This is seen by recursively inserting \(y_n\) into the \(y_{n+1}\) update to get

which is the formulation used in [23]. From Corollary 1, we conclude that this iteration converges as

which recovers the convergence result in [23]. Interestingly, although the convergence rate is optimized by this choice of \(\lambda _n\), it does not perform very well in practice. Other choices of \( {\left( \lambda _n \right) }_{n\in \mathbb {N}}\) with slower rate guarantees can give significantly better practical performance as demonstrated in Section 6.

We evaluate the algorithm in (30) with \(\kappa \in \{-0.9,-0.8,\ldots ,0.9\}\) as specified in the legend to the right. The upper figure shows the 100 first \(p_n\)-iterates for the different \(\kappa \) and the lower figure shows the distance to the unique solution. The performance is best for \(\kappa \in \{0.8,0.9\}\) and many choices of \(\kappa \in (0,1)\) outperform the standard forward–backward splitting method that is obtained by letting \(\kappa = 0\)

6 Numerical examples

In this section, we apply our proposed algorithms on the problem \(0\in Az\), where \(z = (x,y)\) and

for all \(z\in \mathbb {R}^2\). The operator \(A:\mathbb {R}^2\rightarrow \mathbb {R}^2\) is skew-symmetric and the monotone inclusion problem \(0\in Az\) can be interpreted as an optimality condition for the minimax problem

with unique solution \(x = y = 0\). We will in particular evaluate the algorithm in (30) with different choices of \(\kappa \in (-1,1)\) and the algorithm in (32) with

for all \(n\in \mathbb {N}\) and \(e\in [0,1]\). According to Propositions 3 and 5, (30) with \(\kappa \in (-1,1)\) and (32) with \(\lambda _n\) in (37) and \(e = 0\) (corresponding to the standard FB method) converge weakly to a solution of the inclusion problem. As per Corollary 1, (32) with \(\lambda _n\) in (37) and \(e\in (0,1]\) converges in squared norm of the fixed point residual as \(O\left( \frac{1}{n^{2e}}\right) \) and when \(e<1\) and \(\bar{\beta }>\beta \), it does so with a rate of \(O\left( \frac{1}{n}\right) \).

For all our experiments, parameters \(\gamma = 0.1\) and \(\bar{\beta }= 0.001\) (which is feasible since \(C = 0\) and therefore \(\beta = 0\)) are used, and starting points \(y_{-1} = p_{-1} = y_0 = (3,3)\) and \(x_{-1} = p_{-1} = x_0 = (3,3)\) for (32) and (30) respectively.

In Fig. 1 and Table 1 we report numerical results for the algorithm in (32) with \(\lambda _n\) in (37) and \(e\in \{0,0.1,\ldots ,1\}\) and \(M = {\textrm{Id}}\). The choice \(e = 0\) gives standard forward–backward splitting and \(e = 1\) gives the accelerated proximal point method in [21]. The other choices of e gives rise to new algorithms. The figure shows that the distance to the unique solution behaves over-damped for small values of e and under-damped for large values of e. There is a sweet spot in the middle that has the right level of damping and performs significantly better than the previously known special cases with \(e = 0\) and \(e = 1\), at least for this problem.

We evaluate the algorithm in (30) with \(\kappa \in \{0.8,0.82,\ldots ,0.9\}\) as specified in the legend to the right. The upper figure shows the 100 first \(p_n\)-iterates for the different \(\kappa \) and the lower figure shows the distance to the unique solution. All these choices perform well and we go from a non-oscillatory behavior to an oscillatory behaviour within this range of \(\kappa \)

In Fig. 2 and Table 2, we report numerical results for the algorithm in (30) with \(\kappa \in \{-0.9,-0.8,\ldots ,0.9\}\) and \(M = \textrm{Id}\). The theory predicts sequence convergence towards a solution of the problem for all \(\kappa \in (-1,1)\). The choice \(\kappa = 0\) gives rise to standard forward–backward splitting and all other values of \(\kappa \) define new algorithms. The figure reveals that the performance is best for \(\kappa \in [0.8,0.9]\), significantly better than standard FB splitting with \(\kappa = 0\).

In Fig. 3 and Table 3, we provide numerical results over a finer grid of the best performing \(\kappa \). We set the range to \(\kappa \in [0.8,0.9]\) and use a spacing of 0.02. We see that for \(\kappa = 0.8\) and \(\kappa = 0.82\), the distance to solution is non-oscillatory, while it oscillates for greater values of \(\kappa \). All these choices of \(\kappa \) perform very well.

7 Deferred results and proofs

In what follows, we present some results that have been used in the previous sections along with the proof of Theorem 1 that was deferred to this section. Prior to that, we define the auxiliary parameter

which frequently appears throughout this section.

We begin by establishing some identities between the parameters defined in Algorithm 1. These identities are used several times in the proof of Theorem 1.

Proposition 6

Consider the auxiliary parameters defined in Step 2 of Algorithm 1. Then, for all \(n\in \mathbb {N}\), the following identities hold

-

(i)

\(\theta _n = {\left( 2-\gamma _n\bar{\beta } \right) }\bar{\theta }_n+\hat{\theta }_n\);

-

(ii)

\(\theta _n = 2\bar{\theta }_n+ {\left( 2-\gamma _n\bar{\beta } \right) }(\lambda _n+\mu _n)\);

-

(iii)

\(\lambda _n^2\theta _n = \hat{\theta }_{n}(\lambda _{n}+\mu _{n})-2\bar{\theta }_n^2\).

Proof

For Proposition 6 (i), from definition of \(\bar{\theta }_n\) and \(\hat{\theta }_n\), we have

which holds by definition of \(\theta _n\) in Algorithm 1. For Proposition 6 (ii) we have

For Proposition 6 (iii), after moving all terms to the left-hand side of the equality we get

where in the first equality \(\theta _n\) is substituted using Proposition 6 (i) and in the second and the third equalities, definitions of \(\bar{\theta }_n\) and \(\hat{\theta }_n\) are used, respectively.\(\square \)

We note from Proposition 6 (iii) that the assumption \(\theta _n\) > 0 in Algorithm 1 implies \(\hat{\theta }_{n}\) > 0.

The next lemma provides alternative expressions for the term inside the first norm in ().

Lemma 2

Suppose that Assumption 1 holds and consider the sequences generated by Algorithm 1. Then, for all \(n\in \mathbb {N}\), the following

-

(i)

\(p_n - (1-\alpha _n)x_n-\alpha _np_{n-1} +\frac{\gamma _n\bar{\beta }\lambda _n^2}{\hat{\theta }_n}u_n - \frac{2\bar{\theta }_n}{\theta _n}v_n\);

-

(ii)

\(p_n-\frac{2\bar{\theta }_n}{\theta _n}z_n+\frac{\tilde{\theta }_n}{\theta _n}y_n-\frac{2\lambda _n}{\theta _n}x_n-\frac{\theta ^{\prime }_n}{\theta _n}p_{n-1}+\frac{2\bar{\theta }_n\bar{\alpha }_n}{\theta _n}z_{n-1}-\frac{\tilde{\theta }_n\alpha _n}{\theta _n}y_{n-1}\);

-

(iii)

\(\frac{1}{\lambda _n} {\left( x_{n+1}-x_n \right) }+\frac{\tilde{\theta }_n}{\hat{\theta }_n}u_n+\frac{(2-\gamma _n\bar{\beta })(\lambda _n+\mu _n)}{\theta _n}v_n\).

Proof

We, first, show that Lemma 2 (ii) represents the same vector as Lemma 2 (i):

where the coefficients of the last equality are found as follows. The numerator of the coefficient of \(x_n\) reads

where in the first equality, \(\bar{\theta }_n\) is substituted from Proposition 6 (ii), and \(\tilde{\theta }_n\) and \(\alpha _n\) are substituted by their definitions in Algorithm 1. The numerator of the coefficient of \(p_{n-1}\) is

where in the first equality (38) is used, the third equality is obtained using the definition of \(\alpha _n\), and Proposition 6 (ii) is utilized in the last equality. For the numerator of \(u_n\) we get

where the first equality is obtained by substitution of the definition of \(\tilde{\theta }_n\) from Algorithm 1, and in the last equality Proposition 6 (iii) is used.

Now, we show that Lemma 2 (ii) and (iii) represent the same vector. Starting from Lemma 2 (ii), we have

In the second equality, the definition of \(x_{n+1}\) in Step 8 of Algorithm 1 is used. In the fourth equality, the definition of \(y_n\) in Step 5 and the definition of \(z_n\) in Step 6 of Algorithm 1 are used. In the last equality, the coefficient of \(x_n\) is found to be \(-\frac{1}{\lambda _n}\) by (39), the coefficient of \(p_{n-1}\) is zero by (40), the coefficient of \(v_n\) is found by Proposition 6 (ii), and for the coefficient of \(u_n\) we have

where the second equality is obtained by (41), and in the last equality the definition of \(\bar{\theta }_n\) is used. This concludes the proof.\(\square \)

7.1 Proof of Theorem 1

Proof

The only (non-trivial) divisors that will appear the proof (as well as Algorithm 1) are \(\theta _n\) and \(\hat{\theta }_{n}\). In Algorithm 1, we assume \(\theta _n>\) 0 for all \(n \in \mathbb {N}\), which by Proposition 6 (iii) (and since \(\lambda _n > 0\) and \(\mu _n \ge 0\)) implies that \(\hat{\theta }_{n}>\) 0. Therefore, there are no divisions by zero.

Let us define the following quantity

and prove the result by showing that, for all \(n\in \mathbb {N}\), it is identical to zero. By substituting \(V_{n+1}\) and \(V_n\) in (42), we get

where in the last equality we used \(\gamma _n\bar{\alpha }_{n+1} = \gamma _{n+1}\alpha _{n+1}\). Next, substituting \(\ell _{n}\) from (), and \(\phi _{n-1}\) and \(\phi _n\) from (8) on the right-hand side of the last equality above, yields

We define

and substitute it in the last equality above; and also from Step 8 of Algorithm 1, we replace \(\lambda _n(z_n-p_n)-\bar{\alpha }_n\lambda _n(z_{n-1}-p_{n-1})\) by \(x_n-x_{n+1}\). Then, we get

where in the last equality we used the identity \(2 {\left\langle a-b, c-d \right\rangle }_M+ {\left\| b-d \right\| }_M^2- {\left\| a-d \right\| }_M^2 = {\left\| b-c \right\| }_M^2- {\left\| a-c \right\| }_M^2\) for all \(a,b,c,d\in \mathcal {H}\). Now, inserting \(x_{n+1}\) from Step 8 of Algorithm 1, yields

Next, using Lemma 2 and Steps 5–6 of Algorithm 1, we replace the terms including \(u_n\) and \(v_n\) in terms of the iterates

where we used

which is obtained by substituting \(u_n\) from Step 5 into Step 6 of Algorithm 1. Next, we expand the terms on the right-hand side of the last equality above which include \(p_n\). This yields

All terms involving \(p_n\) in this expression are identically zero since their coefficients become zero. This is for most terms straightforward to show by substituting \(\theta _n\), \(\bar{\theta }_n\), \(\tilde{\theta }_n\), \(\theta ^{\prime }_n\), \(\alpha _n\), and \(\bar{\alpha }_n\) defined in Algorithm 1 into the corresponding coefficients. We show this for two coefficients for which it is less obvious. For the coefficient of \( {\left\langle p_n, p_{n-1} \right\rangle }_M\) we have

where in the first equality \(\omega _n\) is substituted from (43) and in the third equality the definition of \(\bar{\alpha }_n\) is used. For the coefficient of \( {\left\langle p_n, z_{n-1} \right\rangle }_M\)

Next, for the terms containing \(z_n\), we do a similar procedure of expanding, reordering, and recollecting the terms as we did for \(p_n\). This gives

Now, we show that all the coefficients of the terms containing \(z_n\) are identical to zero. The coefficients of \( {\left\| z_n \right\| }_M^2\) and \( {\left\langle z_n, z_{n-1} \right\rangle }_M\) are zero by Proposition 6 (iii). For the coefficient of \( {\left\langle z_n, x_n \right\rangle }_M\) we have

which is identical to zero by Proposition 6 (ii). For the coefficient of \( {\left\langle z_n, p_{n-1} \right\rangle }_M\) we have

where in the first equality, \(\theta ^{\prime }_n\) is substituted and in the third equality Proposition 6 (iii) is used and in the last equality Proposition 6 (i) is used. Therefore, all terms containing \(z_n\) can be eliminated from \(\Delta _n\) and we are left with

Now, we show that all the coefficients of the terms containing \(y_n\) are identically zero. For the coefficient of \( {\left\| y_n \right\| }_M^2\) we have

which, by Proposition 6 (iii), is identical to zero. Now, for the coefficient of \( {\left\langle y_n, x_n \right\rangle }_M\) we have

which is identically zero by Proposition 6 (i). For the coefficient of \( {\left\langle y_n, p_{n-1} \right\rangle }_M\) we have

which is equal to zero by the definition of \(\theta _n\). The equivalence of the coefficient of \( {\left\langle y_n, z_{n-1} \right\rangle }_M\) to zero follows from the definition of \(\tilde{\theta }_n\). For the coefficient of \( {\left\langle y_n, y_{n-1} \right\rangle }_M\) we have

which by Proposition 6 (iii) is identical to zero. Therefore, all the coefficients of the terms containing \(y_n\) are zero and we can eliminate those terms. The remaining terms are

We want to show that all the coefficients of the terms containing \(x_n\) are zero. For the coefficient of \( {\left\| x_n \right\| }_M^2\) we have

where in the first equality \(\tilde{\theta }_n\) and \(\alpha _n\) and in the third equality \(\hat{\theta }_n\) are substituted by their definition from Algorithm 1. For the coefficient of \( {\left\langle x_n, p_{n-1} \right\rangle }\) we have

which by Proposition 6 (ii) is zero. For the coefficient of \( {\left\langle x_n, z_{n-1} \right\rangle }\) we have

which is identically zero by Proposition 6 (ii). For the coefficient of \( {\left\langle x_n, y_{n-1} \right\rangle }\) we have

which by Proposition 6 (i) is identically zero. Now, expanding all the remaining terms, reordering and recollecting them give

We show that all the coefficients in the expression above are identically zero. Starting by the coefficient of \( {\left\| p_{n-1} \right\| }_M^2\), we have

where in the first equality \(\omega _n = -\bar{\alpha }_n\mu _n\) is used and \(\theta ^{\prime }_n\) is substituted from 38, the third equality is attained from Proposition 6 (i) and (iii), and the expression to the right-hand side of the last equality is identically zero by (44). For the coefficient of \( {\left\langle p_{n-1}, z_{n-1} \right\rangle }_M\) we have

which by Proposition 6 (i) is equal to zero. The third equality above is attained by using Proposition 6 (iii). For the coefficient of \( {\left\langle p_{n-1}, y_{n-1} \right\rangle }_M\) we have

which by Proposition 6 (ii) is identical to zero. For the coefficient of \( {\left\| z_{n-1} \right\| }_M^2\), it is straightforward to see its equivalence to zero by Proposition 6 (iii). Likewise, the coefficient of \( {\left\langle z_{n-1}, y_{n-1} \right\rangle }_M\) is identically zero by definition of \(\tilde{\theta }_n\). The coefficient of \( {\left\| y_{n-1} \right\| }_M^2\) is

which by Proposition 6 (iii) is equivalent to zero. This concludes the proof.\(\square \)

8 Conclusions

We have presented a variant of the well-known forward–backward algorithm. Our method incorporates momentum-like terms in the algorithm updates as well as deviation vectors. These deviation vectors can be chosen arbitrarily as long as a safeguarding condition that limits their size is satisfied. We propose special instances of our method that fulfill the safeguarding condition by design. Numerical evaluations reveal that these novel methods can significantly outperform the traditional forward–backward method as well as the accelerated proximal point method and the Halpern iteration, all of which are encompassed within our framework. This demonstrates the potential of our proposed methods for efficiently solving structured monotone inclusions.

References

Alvarez, F.: On the minimizing property of a second order dissipative system in Hilbert spaces. SIAM J. Control Optim. 38.4, 1102–1119 (2000). https://doi.org/10.1137/s0363012998335802

Alvarez, F., Attouch, H.: An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 9(1/2), 3–11 (2001). https://doi.org/10.1023/a:1011253113155

Apidopoulos, V., Aujol, J.-F., Dossal, C.: Convergence rate of inertial forward-backward algorithm beyond Nesterov’s rule. Math. Program. 180(1), 137–156 (2020). https://doi.org/10.1007/s10107-018-1350-9

Attouch, H., Cabot, A.: Convergence of a relaxed inertial proximal algorithm for maximally monotone operators. Math. Program. 184(1), 243–287 (2020). https://doi.org/10.1007/s10107-019-01412-0

Attouch, H., Czarnecki, M.-O., Peypouquet, J.: Coupling forward-backward with penalty schemes and parallel splitting for constrained variational inequalities. SIAM J. Optim. 21(4), 1251–1274 (2011). https://doi.org/10.1137/110820300

Attouch, H., Peypouquet, J.: The rate of convergence of Nesterov’s accelerated forward-backward method is actually faster than \(1/k^{2}\). SIAM J. Optim. 26(3), 1824–1834 (2016). https://doi.org/10.1137/15M1046095

Attouch, H., et al.: Fast convergence of inertial dynamics and algorithms with asymptotic vanishing viscosity. Math. Program. 168(1), 123–175 (2018). https://doi.org/10.1007/s10107-016-0992-8

Banert, S., et al.: Accelerated forward–backward optimization using deep learning (2021). arXiv:2105.05210v1 [math.OC]

Bauschke, H.H., Combettes, P. L.: Convex analysis and monotone operator theory in Hilbert spaces. 2nd edn. CMS Books in Mathematics. Springer, 2017. https://doi.org/10.1007/978-3-319-48311-5

Beck, A., Teboulle, M.: A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2(1), 183–202 (2009). https://doi.org/10.1137/080716542

Bruck, R.E.: An iterative solution of a variational inequality for certain monotone operators in Hilbert space. Bull. Am. Math. Soc. 81, 890–892 (1975). https://doi.org/10.1090/S0002-9904-1975-13874-2

Chambolle, A., Dossal, C.: On the convergence of the iterates of the fast iterative shrinkage/thresholding algorithm. J. Optim. Theory Appl. 166(3), 968–982 (2015). https://doi.org/10.1007/s10957-015-0746-4

Chambolle, A., Pock, T.: A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 40(1), 120–145 (2011). https://doi.org/10.1007/s10851-010-0251-1

Chen, G.H.-G., Rockafellar, R.T.: Convergence rates in forward–backward Splitting. SIAM J. Optim. 7.2, 421–444 (1997). https://doi.org/10.1137/S1052623495290179

Cholamjiak, W., Cholamjiak, P., Suantai, S.: An inertial forward–backward splitting method for solving inclusion problems in Hilbert spaces. J. Fixed Point Theory Appl. 20.1 (2018). https://doi.org/10.1007/s11784-018-0526-5

Combettes, P. L., Pesquet, J.-C.: Proximal splitting methods in signal processing. In: by Bauschke, H.H., et al. (eds.) Fixed-point algorithms for inverse problems in science and engineering. Springer New York, pp. 185–212 (2011). https://doi.org/10.1007/978-1-4419-9569-8_10

Condat, L.: A primal-dual splitting method for convex optimization involving Lipschitzian, proximable and linear composite terms. J. Optim. Theory Appl 158(2), 460–479 (2013). https://doi.org/10.1007/s10957-012-0245-9

Eckstein, J.: Splitting methods for monotone operators with applications to parallel optimization. PhD thesis. Mass. Insitute Technol. (1989). http://hdl.handle.net/1721.1/14356

Giselsson, P.: Nonlinear forward-backward splitting with projection correction. SIAM J. Optim. 31(3), 2199–2226 (2021). https://doi.org/10.1137/20M1345062

Giselsson, P., Fält, M., Boyd, S.: Line search for averaged operator iteration. In: 2016 IEEE 55th Conference on decision and control (CDC). IEEE, pp. 1015–1022 (2016). https://doi.org/10.1109/CDC.2016.7798401

Kim, D.: Accelerated proximal point method for maximally monotone operators. Math. Program. 190(1), 57–87 (2021). https://doi.org/10.1007/s10107-021-01643-0

Latafat, P., Patrinos, P.: Asymmetric forward-backward-adjoint splitting for solving monotone inclusions involving three operators. Comput. Optim. Appl. 68(1), 57–93 (2017). https://doi.org/10.1007/s10589-017-9909-6

Lieder, F.: On the convergence rate of the Halpern-iteration. Optim. Lett. 15(2), 405–418 (2021). https://doi.org/10.1007/s11590-020-01617-9

Lions, P.L., Mercier, B.: Splitting algorithms for the sum of two nonlinear operators. SIAM J. Numer. Anal. 16(6), 964–979 (1979). https://doi.org/10.1137/0716071

Lorenz, D.A., Pock, T.: An inertial forward-backward algorithm for monotone inclusions. J. Math. Imaging Vis. 51(2), 311–325 (2015). https://doi.org/10.1007/s10851-014-0523-2

Morin, M., Banert, S., Giselsson, P.: Nonlinear forward–backward splitting with momentum correction (2021). arXiv:2112.00481v4 [math.OC]

Passty G.B.: Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J. Math. Anal. Appl. 72.2, 383x390 (1979). https://doi.org/10.1016/0022-247x(79)90234-8

Polyak, B.T.: Some methods of speeding up the convergence of iteration methods. USSR Comput. Mathem. Math. Phys. 4(5), 1–17 (1964). https://doi.org/10.1016/0041-5553(64)90137-5

Raguet, H., Landrieu, L.: Preconditioning of a generalized forward–backward splitting and application to optimization on graphs. SIAM J. Imaging Sci. 8(4), 2706–2739 (2015). https://doi.org/10.1137/15m1018253

Rockafellar, R., T.: Monotone operators and the proximal point algorithm. SIAM J. Control Optim 14.5, 877–898 (1976). https://doi.org/10.1137/0314056

Ryu, E.K., et al.: Operator splitting performance estimation: tight contraction factors and optimal parameter selection. SIAM J. Optim. 30(3), 2251–2271 (2020). https://doi.org/10.1137/19M1304854

Sadeghi, H., Banert, S., Giselsson, P.: DWIFOB: A dynamically weighted inertial forward–backward algorithm for monotone inclusions (2021). arXiv:2203.00028 [math.OC]

Sadeghi, H., Banert, S., Giselsson, P.: Forward–backward splitting with deviations for monotone inclusions (2021). arXiv:2112.00776 [math.OC]

Sadeghi, H., Giselsson, P.: Hybrid acceleration scheme for variance reduced stochastic optimization algorithms (2021). arXiv:2111.06791 [math.OC]

Taylor, A.B., Hendrickx, J.M., Glineur, F.: Performance estimation toolbox (PESTO): Automated worst-case analysis of first-order optimization methods. In: 2017 IEEE 56th Annual conference on decision and control (CDC). IEEE, pp. 1278–1283 (2017). https://doi.org/10.1109/CDC.2017.8263832

Taylor, A.B., Hendrickx, J.M., Glineur, F.: Exact worst-case performance of first-order methods for composite convex optimization. SIAM J. Optim. 27(3), 1283–1313 (2017). https://doi.org/10.1137/16M108104X

Themelis, A., Patrinos, P.: SuperMann: a superlinearly convergent algorithm for finding fixed points of nonexpansive operators. IEEE Trans. Autom. Control 64(12), 4875–4890 (2019). https://doi.org/10.1109/TAC.2019.2906393

Tseng, P.: A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 38(2), 431–446 (2000). https://doi.org/10.1137/S0363012998338806

Vũ, B.C.: A splitting algorithm for dual monotone inclusions involving cocoercive operators. Adv. Comput. Math. 38(3), 667–681 (2013). https://doi.org/10.1007/s10444-011-9254-8

Zhang, J., O’Donoghue, B., Boyd, S.: Globally convergent type-I Anderson acceleration for nonsmooth fixed-point iterations. SIAM J. Optim. 30.4, 3170–3197 (2020). https://doi.org/10.1137/18M1232772

Acknowledgements

This research was partially supported by Wallenberg AI, Autonomous Systems and Software Program (WASP) funded by the Knut and Alice Wallenberg Foundation. S. Banert was partially supported by ELLIIT.

Funding

Open access funding provided by Lund University.

Author information

Authors and Affiliations

Corresponding authors

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sadeghi, H., Banert, S. & Giselsson, P. Incorporating history and deviations in forward–backward splitting. Numer Algor (2023). https://doi.org/10.1007/s11075-023-01686-8

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11075-023-01686-8

Keywords

- Forward–backward splitting

- Monotone inclusions

- Inertial algorithms

- Convergence rate

- Halpern iteration

- Deviations