Abstract

In this work, we give a tight estimate of the rate of convergence for the Halpern-iteration for approximating a fixed point of a nonexpansive mapping in a Hilbert space. Specifically, using semidefinite programming and duality, we prove that half the norm of the residuals is bounded above by the distance from the initial iterate to the closest fixed point divided by the number of iterations plus one.

1 Introduction

Let H be a Hilbert space equipped with a symmetric inner product \(\langle . , . \rangle :H \times H \rightarrow {\mathbb {R}}\). Let \(T:H \rightarrow H\) be a nonexpansive mapping and consider for fixed \(x_0\in H\) the Halpern-Iteration

\[x_{k+1} := \lambda _k x_0 + (1-\lambda _k) T(x_k), \qquad k\in {\mathbb {N}}_0, \tag{1}\]

from [5] with \(\lambda _k:= \tfrac{1}{k+2}\) for approximating a fixed point of T. Let \(\Vert x \Vert :=\sqrt{\langle x , x \rangle }\) denote the induced norm and \( Fix(T):= \{ x \in H \ : \ x=T(x) \}\) the set of fixed points of T. It is well known that, if the set Fix(T) is nonempty, then the sequence \( \{x_k\}_{k\in {\mathbb {N}}_0}\) converges to the point \(x_*\in Fix(T)\) that minimizes the distance to \(x_0\); see [17], Theorem 2, and [18] for generalizations of this remarkable property. As a consequence, the norm of the residuals \(x_k -T(x_k)\) tends to zero, i.e. \( \lim _{k \rightarrow \infty } \Vert x_k -T(x_k) \Vert =0\). Our goal here is to quantify the rate of this convergence.

A first result of this type was obtained via proof mining in [10] in normed spaces (see also [8] and [9] for further details on results in more general spaces). Here, we improve the result for the setting of Hilbert spaces. Our proof technique is not based on proof mining but on semidefinite programming, and it is strongly motivated by the recent work of Taylor et al. [15] on the worst-case performance of first-order minimization methods. Our methodology and focus here are, however, slightly different. We present two new proofs below. The first one is short and uses a parameter choice derived from the technique used in [15]. The second proof, based on semidefinite programming, is self-contained and adapts the framework of [15] to fixed point problems. The second approach can also be applied to other choices of the parameters \(\lambda _k\) and to other fixed point methods; the resulting rates are, however, in general not obvious.

After the manuscript of this work was made public in late 2017, the author (see Section 4.1 and following of [12]) and, independently, other authors (see e.g. [2, 3, 4, 6, 16]) have studied similar setups, which may all be categorized as part of the performance estimation framework.
Let us briefly sketch how our setup here and in [12] may be applied in the context of proximal point algorithms (e.g. the setup of [2, 6]) by defining the nonexpansive operator \(T:=2J-I\), where I is the identity and J is a firmly nonexpansive operator (e.g. the resolvent operator of a maximal monotone operator). Because the fixed points of J and T are the same, one may now apply the Halpern-Iteration (1) to find a fixed point of J. The (tight) convergence rate is then implied by Theorem 2.1 below.
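The reduction just described can be tried numerically. The following minimal Python sketch rests on assumptions that are not part of the original text: J is taken to be the Euclidean projection onto the box \([1,2]^2\) (a standard example of a firmly nonexpansive map), the Halpern update is taken in its usual form \(x_{k+1}=\lambda _k x_0+(1-\lambda _k)T(x_k)\) with \(\lambda _k=1/(k+2)\), and all function names are hypothetical. Since \(x-J(x)=\tfrac{1}{2}(x-T(x))\), the quantity printed first is half the residual of T at \(x_k\); the second is the distance from \(x_0\) to the closest fixed point divided by \(k+1\).

```python
import math

def project_box(x, lo=1.0, hi=2.0):
    """J: Euclidean projection onto the box [lo, hi]^n (firmly nonexpansive)."""
    return [min(max(xi, lo), hi) for xi in x]

def reflect(x):
    """T := 2J - I, which is nonexpansive and has the same fixed points as J."""
    return [2.0 * ji - xi for ji, xi in zip(project_box(x), x)]

def halpern(T, x0, iterations):
    """Run x_{k+1} = lam_k * x0 + (1 - lam_k) * T(x_k) with lam_k = 1/(k+2)."""
    x = list(x0)
    for k in range(iterations):
        lam = 1.0 / (k + 2)
        x = [lam * a + (1.0 - lam) * b for a, b in zip(x0, T(x))]
    return x

def dist(u, v):
    """Euclidean distance between two points given as lists."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

x0 = [5.0, -3.0]
k = 200
xk = halpern(reflect, x0, k)
# x - J(x) equals (x - T(x)) / 2, so this is half the residual of T at x_k.
half_residual = dist(xk, project_box(xk))
# The fixed point of J closest to x0 is the projection of x0 onto the box.
radius = dist(x0, project_box(x0))
print(half_residual, radius / (k + 1))
```

The projection is only one convenient choice of J; any resolvent that can be evaluated could be substituted for `project_box` in this sketch.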

2 Main result

Theorem 2.1

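Let \( x_0 \in H\) be arbitrary but fixed. If T has fixed points, i.e. \( Fix(T) \not = \emptyset \), then the iterates defined in (1) satisfy

\[\tfrac{1}{2} \Vert x_k -T(x_k) \Vert \le \frac{\Vert x_0-x_* \Vert }{k+1} \qquad \forall k\in {\mathbb {N}}_0, \ \forall x_*\in Fix(T). \tag{2}\]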

This bound is tight.
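As a quick numerical sanity check of the theorem, the sketch below runs the Halpern iteration for a rotation of the plane and compares \(\tfrac{1}{2}\Vert x_k-T(x_k)\Vert \) with \(\Vert x_0-x_*\Vert /(k+1)\), which is the form of the bound suggested by the abstract and by the case \(k=1\) treated later in the text. The rotation angle, the starting point and the function names are illustrative assumptions; the only fixed point of the rotation is \(x_*=0\).

```python
import math

def rotate(x, theta=2.0):
    """Rotation of the plane by theta radians: an isometry with Fix(T) = {0}."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * x[0] - s * x[1], s * x[0] + c * x[1])

def halpern_half_residuals(T, x0, n):
    """Return [0.5 * ||x_k - T(x_k)|| for k = 0, ..., n] along the Halpern iteration."""
    out, x = [], x0
    for k in range(n + 1):
        tx = T(x)
        out.append(0.5 * math.hypot(x[0] - tx[0], x[1] - tx[1]))
        lam = 1.0 / (k + 2)  # step length lambda_k = 1/(k+2)
        x = (lam * x0[0] + (1 - lam) * tx[0], lam * x0[1] + (1 - lam) * tx[1])
    return out

x0 = (1.0, 0.0)
R = math.hypot(*x0)  # distance from x0 to the fixed point 0
half_residuals = halpern_half_residuals(rotate, x0, 50)
# slack[k] = R/(k+1) - 0.5*||x_k - T(x_k)|| should be nonnegative for every k.
slack = [R / (k + 1) - r for k, r in enumerate(half_residuals)]
print(min(slack))
```

A single operator can of course only illustrate the bound, not prove it; the proofs below cover all nonexpansive mappings.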

Remark 2.1

A generalization of the Halpern-Iteration, the sequential averaging method (SAM), was analyzed in the recent paper [14], where for the first time a rate of convergence of order O(1/k) could be established for SAM. The rate of convergence in (2) is even slightly faster than the one established for the more general framework in [14] (by a factor of 4). More importantly, however, as shown by Example 3.1 below, the estimate (2) is actually tight, in the sense that for every \(k\in {\mathbb {N}}_0\) there exists a Hilbert space H and a nonexpansive operator T with some fixed point \(x_*\) such that the inequality (2) is not strict.

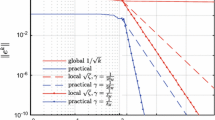

Estimate (2) refers to the step length \(\lambda _k:=1/(k+2)\). The restriction to this choice is motivated by problem (17) below in the proof based on semidefinite programming; in numerical tests for small dimensions k, these coefficients provided a better worst-case complexity than any other choice of coefficients.

Next, an elementary direct proof of Theorem 2.1 is given.

Direct proof based on a weighted sum

The iteration (1) with \(\lambda _k=1/(k+2)\) implies for \(1\le j\le k\):

By nonexpansiveness the following inequalities hold:

and

Below we reformulate the following weighted sum of (5):

Using the second relation in (3) the first terms in the summation (6) are

and using the first relation in (3) it follows for the second terms in (6)

Observe [using again the second relation in (3)] that the first term in (8)

cancels the third term in (7). Summing up the second terms in (8) for \(j=1,\ldots ,k\) we shift the summation index,

so that summing up the second terms in (7) and in (8) for \(j=1,\ldots ,k\) results in

Shifting again the index in the summation of the third terms in (8)

and summing up the first terms in (7) and the third terms in (8) for \(j=1,\ldots ,k\) gives

where the sum in the middle cancels the sum in the middle of (10) and the terms \(2{\Vert x_0-T(x_0)\Vert ^2}\) cancel as well. The only remaining terms are the first terms in (10) and (11).

Thus, inserting (9), (10), and (11) in (6) leads to

Applying the Cauchy–Schwarz inequality to the second term in (12) leads to

which may be interesting in its own right. To prove the theorem, (12) is divided by \(k+1\) and then (4) is added:

To see the last equation, the last two terms in (13) can be combined, and then a straightforward but tedious multiplication of the terms \(a:=x_k-T(x_k)\), \(b:= x_k-x_0\), \(c:= T(x_k)-x_*\), \(a+c=x_k-x_*\), and \(a+c-b =x_0-x_*\) reveals the identity.

Omitting the last term in (13) one obtains

which proves the theorem when taking square roots on both sides. \(\square \)

The above proof is somewhat unintuitive, as the choice of the weights with which the inequalities (4) and (5) are added in (6) and (13) is far from obvious. In fact, we owe the suggestion of these weights to an extremely helpful anonymous referee, who extracted them from a more complex construction in [15], which was also the basis for the initial proof of this paper based on semidefinite programming. We state this proof next since it offers a generalizable approach for analyzing fixed point iterations; for example, it is adapted to the KM iteration in the recent thesis [12], though this modification is quite technical. The proof based on semidefinite programming also led to Example 3.1 below, which shows that the rate of convergence is tight.

Proof based on semidefinite programming

Let \(x_* \in Fix(T)\). The Halpern-Iteration was stated in the form (1) to comply with existing literature. For our proof, however, it is more convenient to consider the shifted sequence \({\bar{x}}_1:= x_0\) and \({\bar{x}}_k:=x_{k-1} \ \forall k \in {\mathbb {N}}_{\not = 0 }:=\{1,2,3,\dots \} \) and to show a shifted statement

Let us define \(g(x):=\frac{1}{2}(x-T(x))\). It is well known that g is firmly nonexpansive. For sake of completeness the argument is repeated here:

Nonexpansiveness and the Cauchy–Schwarz inequality also imply \( \Vert g(x)-g(y) \Vert \le \Vert x-y \Vert \ \forall x,y\in H\). For \( k =1\) the statement (14) follows immediately since \(g(x_*)=0\) and therefore \( \tfrac{1}{2} \Vert {\bar{x}}_1 - T( {\bar{x}}_1) \Vert = \Vert g({\bar{x}}_1 ) \Vert = \Vert g({\bar{x}}_1) - g(x_*) \Vert \le \tfrac{\Vert {\bar{x}}_1-x_* \Vert }{1}\).
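The firm nonexpansiveness of \(g=\tfrac{1}{2}(I-T)\), i.e. \(\Vert g(x)-g(y)\Vert ^2\le \langle g(x)-g(y), x-y\rangle \), can also be observed numerically. The sketch below is only an illustration under assumptions: the map T (a rotation followed by the projection onto the unit ball, which is nonexpansive as a composition of nonexpansive maps), the sampling range and the function names are chosen here and do not come from the text.

```python
import math
import random

def T(x):
    """A nonexpansive map on R^2: rotate by 1 radian, then project onto the unit ball."""
    c, s = math.cos(1.0), math.sin(1.0)
    y = (c * x[0] - s * x[1], s * x[0] + c * x[1])
    n = math.hypot(y[0], y[1])
    return y if n <= 1.0 else (y[0] / n, y[1] / n)

def g(x):
    """g(x) = (x - T(x)) / 2."""
    tx = T(x)
    return (0.5 * (x[0] - tx[0]), 0.5 * (x[1] - tx[1]))

def firm_gap(x, y):
    """<g(x)-g(y), x-y> - ||g(x)-g(y)||^2; nonnegative if g is firmly nonexpansive."""
    gx, gy = g(x), g(y)
    d = (gx[0] - gy[0], gx[1] - gy[1])
    u = (x[0] - y[0], x[1] - y[1])
    return d[0] * u[0] + d[1] * u[1] - (d[0] ** 2 + d[1] ** 2)

rng = random.Random(0)  # fixed seed so the check is reproducible

def sample():
    return (rng.uniform(-3.0, 3.0), rng.uniform(-3.0, 3.0))

worst_gap = min(firm_gap(sample(), sample()) for _ in range(1000))
print(worst_gap)  # expected to be nonnegative up to rounding errors
```

For pairs of points that T maps isometrically the gap vanishes, so values at the level of rounding errors are expected rather than alarming.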

For fixed \(k\ge 2\) we first consider the differences \({\bar{x}}_j - {\bar{x}}_1\) for \( j \in \{2,\dots ,k \}\)

which inductively leads to

Let us shorten the notation slightly and define \( g_i:= g({\bar{x}}_i) \), \( R:= \Vert {\bar{x}}_1 -x_* \Vert \ge 0\), the vector \(b=(\langle g_i , {\bar{x}}_1-x_* \rangle )_{i=1}^k\), the matrices \(A:= (\langle g_i , g_j \rangle )_{i,j=1}^k\) and

Let \(b^T\) denote the transpose of b. Note that

is a Gram matrix formed from \( {\bar{x}}_1-x_*,g_1,\dots ,g_k\in H\) and is therefore symmetric and positive semidefinite. We proceed by expressing the inequalities from firm nonexpansiveness in terms of the Gram matrix. Since L often is of much lower dimension than H, this is sometimes referred to as the “kernel trick”. Keeping in mind that we can rewrite the differences \( {\bar{x}}_j - {\bar{x}}_1 = - 2 \sum _{l=1}^{j-1} \frac{l}{j} g_l\) for \(j\in \{1,\dots ,k\}\), we arrive at

Let \(e \in {\mathbb {R}}^k\) denote the vector of all ones. Then

where \(\mathrm {diag}(.)\) denotes the diagonal of its matrix argument, holds true. Hence

and

The firm nonexpansiveness inequalities \( \Vert g_i- g_j \Vert ^2 \le \langle g_i-g_j , {\bar{x}}_i-{\bar{x}}_j \rangle \) are equivalent to the component-wise inequality

Note that only \( \tfrac{k^2-k}{2}\) of these componentwise inequalities are nonredundant. From \(g_*:=g(x_*)=0\) we obtain another k inequalities, i.e. \( \Vert g_i \Vert ^2 \le \langle g_i , {\bar{x}}_i-x_* \rangle \), which translate to

Defining \(U:=I-L\), relations (15) and (16) can be shortened slightly to

and

Let \(e_k \in {\mathbb {R}}^k\) denote the k-th unit vector, \( {\mathbb {S}}^n := \{ X \in {\mathbb {R}}^{n \times n }\ | \ X=X^T \}\) denote the space of symmetric matrices and \({\mathbb {S}}_+^n := \{ X \in {\mathbb {S}}^n\ | x^TXx\ge 0 \ \forall x \in {\mathbb {R}}^n \}\) the convex cone of positive semidefinite matrices. Consider the chain of inequalities

for

where \(\mathrm {Diag}(.)\) denotes the square diagonal matrix with its vector argument on the diagonal. The first equality follows from construction, the first inequality from relaxing, and the second inequality from weak conic duality as detailed in Sect. 5. We conclude the proof by showing feasibility of \({\hat{\xi }} := \frac{1}{k^2} >0\) and

for the last optimization problem (18). First note that \({\hat{X}}= {\hat{X}}^T\) is symmetric and nonnegative. A short computation reveals that the equality

holds true: Define the diagonal matrix \( D:= \frac{1}{k} \mathrm {Diag}([1,\ldots ,k ]^T ) \in {\mathbb {R}}^{k \times k }\), together with the strict upper triangular matrix

and the bidiagonal matrix

The matrices \(U, {\hat{X}}\) and \(F({\hat{X}})\) can now be expressed as

Combining the equalities (21), \(D e_k =e_k\) and \( D^{-1} e_k =e_k\), yields

and using (22) we compute

which implies \(U F({\hat{X}}) + F({\hat{X}})U^T= 2 e_k e_k^T\) as we claimed above. Consequently

is positive semidefinite and, as a result, \( {\hat{\xi }}\) and \( {\hat{X}}\) are feasible for (18). Hence

which yields the desired result after taking the square root. \(\square \)
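Two ingredients of the proof above lend themselves to a numerical check: the representation \({\bar{x}}_j-{\bar{x}}_1=-2\sum _{l=1}^{j-1}\tfrac{l}{j}g_l\) of the shifted iterates, and the positive semidefiniteness of the Gram matrix formed from \({\bar{x}}_1-x_*,g_1,\dots ,g_k\). The sketch below does both for one example; the operator (a planar rotation with \(x_*=0\)), the value of k and all names are assumptions made for illustration, and positive semidefiniteness is only probed through random quadratic forms.

```python
import math
import random

def T(x, theta=2.0):
    """Planar rotation by theta radians (nonexpansive, fixed point x_* = 0)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * x[0] - s * x[1], s * x[0] + c * x[1])

def shifted_iterates(x0, k):
    """Return xbar_1..xbar_k (xbar_j = x_{j-1}) and g_j = (xbar_j - T(xbar_j)) / 2."""
    xs, gs, x = [], [], x0
    for j in range(1, k + 1):
        tx = T(x)
        xs.append(x)
        gs.append((0.5 * (x[0] - tx[0]), 0.5 * (x[1] - tx[1])))
        lam = 1.0 / (j + 1)  # lambda_{j-1} = 1/(j+1)
        x = (lam * x0[0] + (1 - lam) * tx[0], lam * x0[1] + (1 - lam) * tx[1])
    return xs, gs

k = 8
x0 = (1.0, 0.5)
xs, gs = shifted_iterates(x0, k)

# Check xbar_j - xbar_1 = -2 * sum_{l=1}^{j-1} (l/j) * g_l for j = 1, ..., k.
identity_error = 0.0
for j in range(1, k + 1):
    for c in range(2):
        rhs = -2.0 * sum((l / j) * gs[l - 1][c] for l in range(1, j))
        identity_error = max(identity_error, abs(xs[j - 1][c] - xs[0][c] - rhs))

# Gram matrix of the vectors xbar_1 - x_*, g_1, ..., g_k (with x_* = 0).
vectors = [x0] + gs
gram = [[u[0] * v[0] + u[1] * v[1] for v in vectors] for u in vectors]

# Probe positive semidefiniteness via random quadratic forms z^T G z.
rng = random.Random(0)
min_form = float("inf")
for _ in range(200):
    z = [rng.uniform(-1.0, 1.0) for _ in vectors]
    form = sum(z[i] * gram[i][j] * z[j] for i in range(k + 1) for j in range(k + 1))
    min_form = min(min_form, form)
print(identity_error, min_form)
```

Note that the Gram matrix here has size \(k+1\) while the underlying vectors live in the plane, so its rank is at most two.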

Remark 2.2

The matrix \({\hat{X}}\) in the above proof, which carries the weights \(j(j+1)\) used in (6), was obtained by solving (18) with YALMIP [11] in combination with the SDP solver SeDuMi [13] for small values of k. For a theoretical proof that the points \({\hat{\xi }}\) and \({\hat{X}}\) above are not only feasible but actually optimal for (18), and for a proof of tightness of the derived bound, we refer to Example 3.1 below, which was derived from a numerically obtained low-rank optimal solution of (17). More precisely, after numerically determining the optimal value of (18), a linear equation was added to (18) requiring that \((Y_2)_{kk}\) equals this value, and then the trace of \(Y_{2,2}\) was minimized with the intention of finding the optimal solution with minimum rank. This optimal solution was then used to derive Example 3.1 below, proving the tightness of (2). In fact, for any optimal solution of the SDP relaxation (17), there exists at least one Lipschitz continuous operator \({{\tilde{T}}}_k:{\mathbb {R}}^d \rightarrow {\mathbb {R}}^d\) for appropriately chosen d with some fixed point \(x_*\) such that the inequality in (18) is tight. This follows from appropriately labeling the columns of the symmetric square root of such an optimal solution and a Lipschitz extension argument. Specifically, the Kirszbraun theorem [7] allows a Lipschitz extension of an operator that is Lipschitz on a discrete set to the entire space. For further details we refer to Section 4.2 of [12].

3 Tightness and choice of step lengths

Example 3.1

We consider the following one-dimensional real example with fixed point \(x_* = 0\) and starting point \(x_0\not = 0\). Let \(k\in {\mathbb {N}}\) be given. A nonexpansive mapping proving tightness of (2) can then be set up as follows: Let \(T:{\mathbb {R}}\rightarrow {\mathbb {R}}\) be defined via

for some fixed \(k\in {\mathbb {N}}\) and \(R:= \Vert x_0-x_*\Vert _2\) with \(x_0\in {\mathbb {R}}\) and \(x_*:= 0\). Note that T satisfies \(T(x_*)=0=x_*\) and is 1-Lipschitz continuous, i.e. nonexpansive, because it is piecewise linear and continuous, and its derivative is bounded in norm by one (\(|T'|\le 1\)) wherever it exists. We will now show that applying the Halpern-Iteration results in an equality in (2) for the k-th iterate, i.e.

is satisfied. This means that the bound (2) cannot be improved without making further assumptions, as the operator above would otherwise constitute a counterexample. For the first k iterates of the Halpern-Iteration (1) we can obtain

inductively: for \(x_0=0=x_*\) there is nothing to prove. The case \(0<x_0=\Vert x_0-x_*\Vert _2 =R\) follows by using the definition of T and considering for \( j \in \{0,\dots ,k-1\}\) the iterates

which imply that

holds true. The case \(x_0<0\) follows from the operator's point symmetry, i.e. \(T(-x)=-T(x)\). This completes the proof of tightness. \(\square \)
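Tightness in one dimension can be reproduced in exact rational arithmetic. Because the displayed definition of T is not reproduced in this version of the text, the operator in the sketch below is an assumption: one odd, continuous, piecewise linear map with slopes \(\pm 1\) and \(T(0)=0\) for which the bound is attained, which may differ from the author's definition. The function names are hypothetical as well.

```python
from fractions import Fraction

def make_operator(R, k):
    """Return an odd, piecewise linear, 1-Lipschitz map with T(0) = 0 (assumed form)."""
    c = R / (k + 1)

    def T(x):
        if x > c:
            return x - 2 * c   # slope +1 to the right of c
        if x < -c:
            return x + 2 * c   # slope +1 to the left of -c
        return -x              # slope -1 on [-c, c]

    return T

def halpern_1d(T, x0, k):
    """Return the k-th Halpern iterate for lambda_j = 1/(j+2), using exact fractions."""
    x = x0
    for j in range(k):
        lam = Fraction(1, j + 2)
        x = lam * x0 + (1 - lam) * T(x)
    return x

R = Fraction(3)  # R = |x0 - x_*| with x_* = 0 and x0 = R > 0
for k in range(1, 8):
    T = make_operator(R, k)
    xk = halpern_1d(T, R, k)
    half_residual = abs(xk - T(xk)) / 2
    print(k, half_residual, R / (k + 1))  # the two values coincide for this operator
```

Using `Fraction` instead of floating point avoids any ambiguity about which linear piece of the map is active at the breakpoint \(R/(k+1)\).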

While Example 3.1 shows that the bound (2) is best possible for the Halpern-Iteration with \(\lambda _k=1/(k+2)\), the rate of convergence could be improved for this example if the values \(\lambda _k\) were chosen appropriately smaller than \(1/(k+2)\). Let us illustrate next that this is not true in general, i.e. that choosing smaller values of \(\lambda _k\) does not always provide faster convergence. Let H be a Hilbert space with a countable orthonormal Schauder basis \(\{e_j\}_{j\in {\mathbb {N}}}\) and let T be the linear operator defined by \(T(e_j)=e_{j+1}\) for \(j\in {\mathbb {N}}\). Hence the unique fixed point is \(x_*=0\). When choosing \(x_0=e_1\), then for any choice of step lengths \(\lambda _j\in [0,1]\), the k-th iterate always lies in the convex hull of \(e_1,\ldots ,e_{k+1}\), and the choice of \(\lambda _j\) minimizing the error \(\Vert x_k-x_*\Vert \) is precisely the step length \(\lambda _j=1/(j+2)\) for all \(1\le j\le k\). This step length leads to a slightly faster rate of convergence than (2) for this example, namely \(\frac{1}{2} \Vert x_k-T(x_k)\Vert \le \frac{\Vert x_0-x_*\Vert }{\sqrt{2}(k+1)}\). While this step length does not minimize the residual \(\frac{1}{2} \Vert x_k-T(x_k)\Vert \), it shows that smaller values of \(\lambda _j\) such as \(\lambda _j := \rho /(j+2)\) for all j with \(\rho \in [0,1)\) lead to larger residuals. On the other hand, larger values \(\lambda _j > 1/(j+2)\) for all j lead to larger residuals for Example 3.1.
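The shift example can be simulated in finite dimensions, since the first k iterates only involve \(e_1,\ldots ,e_{k+2}\). The sketch below is a hedged illustration: it truncates the shift to \({\mathbb {R}}^{k+2}\), indexes the step lengths as \(\lambda _j=\rho /(j+2)\) for \(j=0,\ldots ,k-1\) (an assumed indexing that matches the usual form of the iteration), and uses hypothetical function names. With \(\rho =1\) the computed value agrees with \(1/(\sqrt{2}(k+1))\).

```python
import math

def shift(x):
    """Truncated right shift on R^n: maps e_j to e_{j+1} and drops the last coordinate."""
    return [0.0] + x[:-1]

def shift_half_residual(k, rho=1.0):
    """Return 0.5 * ||x_k - T(x_k)|| for x_0 = e_1 and step lengths rho / (j + 2)."""
    n = k + 2  # large enough that the truncation is never active
    x0 = [1.0] + [0.0] * (n - 1)
    x = list(x0)
    for j in range(k):
        lam = rho / (j + 2)
        x = [lam * a + (1.0 - lam) * b for a, b in zip(x0, shift(x))]
    tx = shift(x)
    return 0.5 * math.sqrt(sum((a - b) ** 2 for a, b in zip(x, tx)))

for k in (1, 5, 25):
    print(k, shift_half_residual(k), 1.0 / (math.sqrt(2.0) * (k + 1)))

# Comparison with a smaller step length (rho = 0.5) after a single step.
print(shift_half_residual(1, rho=0.5), shift_half_residual(1))
```

The last line prints the residuals for \(\rho =0.5\) and \(\rho =1\) after one step; other values of \(\rho \) and k can be compared in the same way.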

4 Conclusions

We have derived a new and tight complexity bound for the Halpern-Iteration with coefficients chosen as \(\lambda _k= \tfrac{1}{k+2}\). The proof based on semidefinite programming can in principle be adapted for other choices of parameters and fixed point iterations, again leading to tight complexity bounds. For example, for the Krasnoselski–Mann (KM) iteration (see e.g. [1])

with some constant stepsize \(t\in [\tfrac{1}{2},1]\) a proof can be found in [12] (Theorem 4.9), where

is used to define inequalities of the form (15). However, while in practice the KM-Iteration with constant stepsize may often perform much better than the Halpern-Iteration, its worst-case complexity is an order of magnitude worse, and the convergence analysis based on semidefinite programming is considerably longer.
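The practical difference between the two methods can be seen on a simple instance. The sketch below assumes the standard form of the KM iteration, \(x_{k+1}=(1-t)x_k+tT(x_k)\), since the displayed formula is not reproduced here; the operator (a planar rotation), the stepsize \(t=\tfrac{1}{2}\) and all names are illustrative choices. On this particular instance KM converges much faster than the Halpern-Iteration, which says nothing about worst-case behavior.

```python
import math

def rotate(x, theta=2.0):
    """Planar rotation by theta radians (nonexpansive, fixed point 0)."""
    c, s = math.cos(theta), math.sin(theta)
    return (c * x[0] - s * x[1], s * x[0] + c * x[1])

def residual(T, x):
    """||x - T(x)||."""
    tx = T(x)
    return math.hypot(x[0] - tx[0], x[1] - tx[1])

def km_residuals(T, x0, n, t=0.5):
    """Residuals along x_{k+1} = (1 - t) x_k + t T(x_k) (assumed standard KM form)."""
    out, x = [], x0
    for _ in range(n + 1):
        out.append(residual(T, x))
        tx = T(x)
        x = ((1 - t) * x[0] + t * tx[0], (1 - t) * x[1] + t * tx[1])
    return out

def halpern_residuals(T, x0, n):
    """Residuals along x_{k+1} = lam_k x0 + (1 - lam_k) T(x_k), lam_k = 1/(k+2)."""
    out, x = [], x0
    for k in range(n + 1):
        out.append(residual(T, x))
        tx, lam = T(x), 1.0 / (k + 2)
        x = (lam * x0[0] + (1 - lam) * tx[0], lam * x0[1] + (1 - lam) * tx[1])
    return out

x0 = (1.0, 0.0)
km = km_residuals(rotate, x0, 50)
hp = halpern_residuals(rotate, x0, 50)
print(km[50], hp[50])
```

The KM residuals are nonincreasing for any nonexpansive T and \(t\in [0,1]\), which gives a simple consistency check for an implementation.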

References

1. Cominetti, R., Soto, J.A., Vaisman, J.: On the rate of convergence of Krasnoselskii–Mann iterations and their connection with sums of Bernoullis. Isr. J. Math. 199(2), 757–772 (2014)

2. Gu, G., Yang, J.: Optimal nonergodic sublinear convergence rate of proximal point algorithm for maximal monotone inclusion problems (2019). arXiv preprint arXiv:1904.05495

3. Gu, G., Yang, J.: On the optimal linear convergence factor of the relaxed proximal point algorithm for monotone inclusion problems (2019). arXiv preprint arXiv:1905.04537

4. Gu, G., Yang, J.: On the optimal ergodic sublinear convergence rate of the relaxed proximal point algorithm for variational inequalities (2019). arXiv preprint arXiv:1905.06030

5. Halpern, B.: Fixed points of nonexpanding maps. Bull. Am. Math. Soc. 73(6), 957–961 (1967)

6. Kim, D.: Accelerated proximal point method for maximally monotone operators (2019). arXiv preprint arXiv:1905.05149

7. Kirszbraun, M.D.: Über die zusammenziehende und Lipschitzsche Transformationen. Fund. Math. 22, 77–108 (1934)

8. Kohlenbach, U.: On quantitative versions of theorems due to FE Browder and R Wittmann. Adv. Math. 226(3), 2764–2795 (2011)

9. Kohlenbach, U., Leuştean, L.: Effective metastability of Halpern iterates in CAT (0) spaces. Adv. Math. 231(5), 2526–2556 (2012)

10. Leuştean, L.: Rates of asymptotic regularity for Halpern iterations of nonexpansive mappings. J. UCS 13(11), 1680–1691 (2007)

11. Löfberg, J.: YALMIP: a toolbox for modeling and optimization in MATLAB. In: 2004 IEEE International Symposium on Computer Aided Control Systems Design, IEEE, pp. 284–289 (2004)

12. Lieder, F.: Projection based methods for conic linear programming—optimal first order complexities and norm constrained quasi newton methods. Doctoral dissertation, Universitäts- und Landesbibliothek der Heinrich-Heine-Universität Düsseldorf (2018). https://docserv.uni-duesseldorf.de/servlets/DerivateServlet/Derivate-49971/Dissertation.pdf

13. Sturm, J.F.: Implementation of interior point methods for mixed semidefinite and second order cone optimization problems. Optim. Methods Softw. 17(6), 1105–1154 (2002)

14. Sabach, S., Shtern, S.: A first order method for solving convex bilevel optimization problems. SIAM J. Optim. 27(2), 640–660 (2017)

15. Taylor, A.B., Hendrickx, J.M., Glineur, F.: Smooth strongly convex interpolation and exact worst-case performance of first-order methods. Math. Program. 161(1–2), 307–345 (2017)

16. Ryu, E.K., Taylor, A.B., Bergeling, C., Giselsson, P.: Operator splitting performance estimation: tight contraction factors and optimal parameter selection (2018). arXiv preprint arXiv:1812.00146

17. Wittmann, R.: Approximation of fixed points of nonexpansive mappings. Arch. der Math. 58(5), 486–491 (1992)

18. Xu, H.K.: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 66(1), 240–256 (2002)

Acknowledgements

Open Access funding provided by Projekt DEAL. I would like to thank Florian Jarre for careful proofreading of this paper. I would also like to sincerely thank the anonymous referees for their constructive criticism that helped to improve the presentation of the paper and that led to the direct proof based on a weighted sum.

Ethics declarations

Conflict of interest

The author declares that he has no competing interests.

Appendix, a technical duality result


While the duality used in (17) and (18) is the well-known weak conic duality, the format of the problems (17) and (18) is quite intricate. The dual problem is therefore derived here in detail: Define the Euclidean space \({\mathbb {E}}:= {\mathbb {R}} \times {\mathbb {S}}^k \times {\mathbb {S}}^{k+1}\) and the closed convex cone \({\mathcal {K}}:={\mathbb {R}}_+ \times ({\mathbb {S}}^k \cap {\mathbb {R}}_+^{k\times k}) \times {\mathbb {S}}_+^{k+1}\). We denote the trace inner product by \(A\cdot B:= \mathrm{trace}({AB})\) for all symmetric matrices A, B. Then \({\mathcal {K}}\) is self-dual with respect to the canonical inner product \(\langle X , Y \rangle _{{\mathbb {E}}}:=x_1^Ty_1 + X_2 \cdot Y_2 + X_3 \cdot Y_3\), which we define for any

We proceed by restating problems (17) and (18) in standard form and exploit (weak) conic duality. In fact, by adding slack variables \(S\in {\mathcal {K}}\), we can write (17) equivalently as a conic optimization problem in dual standard form

for

and the linear operator \({\mathcal {A}}^*: {\mathbb {S}}^{n+1}\rightarrow {\mathbb {E}}\)

The constraint \({\mathcal {A}}^*(Y) + S ={\widetilde{C}}, S \in {\mathcal {K}}\) is a direct translation of the constraints in (17) except for the diagonal entries. Here it is used that the term \(\mathrm {diag}(Y_2 U)e^T + e \ \mathrm {diag}(U^T Y_2)^T - (Y_2U+ U^TY_2)\) has an all-zero diagonal, which is why we can “place” the constraints \(\mathrm {diag}(Y_2 U) - y_1\le 0\) on the diagonal.

Recall that the optimal value of the conic optimization problem (24) in dual standard form is upper bounded by the optimal value of the conic optimization in primal standard form

where the linear operators \({\mathcal {A}}^*: {\mathbb {S}}^{n+1}\rightarrow {\mathbb {E}}\) and \( {\mathcal {A}}: {\mathbb {E}} \rightarrow {\mathbb {S}}^{n+1}\) are adjoint, i.e. \({\mathcal {A}}\) is the unique operator that satisfies

for all \(Y\in {\mathbb {S}}^{n+1}, \ {\tilde{X}}\in {\mathbb {E}}\). The “\(Y_2\)-part” of the adjoint of the second component of \({\mathcal {A}}^*\) can be obtained from

leading to the full description of \({\mathcal {A}}\)

where, as before \(F(X) = \mathrm {Diag}(Xe) + \tfrac{1}{2} \mathrm {Diag}(\mathrm {diag}(X))-X\). This shows that we can rewrite (27) in the claimed form (18) by eliminating the (semidefinite) variable \(X_2\). This establishes the inequality in (17) and (18). \(\square \)
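One half of the self-duality used for the semidefinite block of \({\mathcal {K}}\) is the fact that \(A\cdot B=\mathrm{trace}(AB)\ge 0\) whenever A and B are positive semidefinite. The sketch below illustrates this with random Gram matrices in plain Python; the matrix size, the random seed and the helper names are assumptions for illustration and are unrelated to the specific problem data of (17) and (18).

```python
import random

def gram(rows):
    """Return P P^T for a list of row vectors P; such a matrix is positive semidefinite."""
    return [[sum(a * b for a, b in zip(u, v)) for v in rows] for u in rows]

def trace_inner(A, B):
    """Trace inner product A . B = trace(AB) of two square matrices of equal size."""
    n = len(A)
    return sum(A[i][j] * B[j][i] for i in range(n) for j in range(n))

rng = random.Random(1)  # fixed seed so the experiment is reproducible

def random_psd(n):
    """Random positive semidefinite matrix built as a Gram matrix."""
    rows = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(n)]
    return gram(rows)

values = [trace_inner(random_psd(4), random_psd(4)) for _ in range(100)]
print(min(values))  # nonnegative up to rounding errors
```

For Gram matrices \(A=PP^T\) and \(B=QQ^T\) the inner product equals the squared Frobenius norm of \(P^TQ\), which explains the sign.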

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lieder, F. On the convergence rate of the Halpern-iteration. Optim Lett 15, 405–418 (2021). https://doi.org/10.1007/s11590-020-01617-9

DOI: https://doi.org/10.1007/s11590-020-01617-9