Abstract

Multibody dynamics comprises methodologies for the design and analysis of mechanical systems, and Kalman filters are the principal methods for state estimation. Kalman filters are generally formulated for unconstrained systems whose dynamics are described by ordinary differential equations in state-space models. However, multibody systems are constrained systems, and their dynamics are commonly described by differential algebraic equations (DAEs), which comprise differential and algebraic equations; the differential equations also contain the Lagrange multipliers. Hence, multibody systems described by DAEs cannot be incorporated straightforwardly into the Kalman filter framework, and various strategies have been proposed to address this. This study develops a novel method for converting DAEs into a state-space model. A transition model of the time derivatives of the Lagrange multipliers and a Lagrange multiplier constraint vector are devised and used in the state and output equations, respectively. The continuous- and discrete-time extended Kalman filters (CEKF and DEKF) are constructed using the proposed state-space model, and state estimation is simulated on benchmark planar four- and five-bar linkages. Furthermore, system observability is demonstrated, and the sensitivity to the initial state estimates is studied. These tests demonstrate that the proposed state-space model yields observable systems and that both the CEKF and DEKF constructed with it can estimate the states over a wide range of initial conditions.


1 Introduction

1.1 Multibody dynamics and differential algebraic equations

Nonlinear computational dynamics theory has been developed for systematically deriving the equations of motion of multibody systems from the perspective of analytical mechanics [1, 2]. In the 1980s, commercial software for modeling, simulating, and animating multibody systems was developed, and multibody dynamics began to be applied in practice [3]. Since the 1990s, interest in multibody dynamics has shifted toward the deformation of bodies [4], and the theory that incorporates body deformation has developed into flexible multibody dynamics [5, 6].

The same multibody system can be modeled in independent or dependent coordinates. The number of independent coordinates equals the number of degrees of freedom of the system and is therefore minimal, which leads to high computational efficiency. However, the position of the system cannot be determined directly from independent coordinates. Therefore, studies on this subject tend to conclude that independent coordinates are not appropriate for general-purpose analysis [7]. Models of multibody system dynamics often take the form of algebraic equations, ordinary differential equations, or differential algebraic equations (DAEs) [8]. DAEs are formulated in dependent coordinates and are generally used in analysis software for multibody systems. They are advantageous because they allow the forces associated with the constraints to be calculated via the Lagrange multipliers with minimal additional effort [7].

1.2 Kalman filters and their applications to constrained systems

The Kalman filter theory was published in the 1960s [9]. The Kalman filter is a minimum-variance state observer for linear systems with Gaussian noise [10]. To handle the nonlinearities of real-world problems, state observers for nonlinear systems, such as the extended Kalman filter (EKF) and unscented Kalman filter (UKF), have been developed based on Kalman filter theory [11, 12].

When information about a system is available that the standard Kalman filter does not incorporate, such as state constraints, it can be employed to improve the filtering performance [13]. In the 2000s, Kalman filters were developed for constrained systems. Simon and Chia [14] presented a method for incorporating linear state constraints into a Kalman filter, and its effectiveness was demonstrated in a vehicle-tracking simulation. Julier and LaViola [15] categorized the previous approaches into pseudo-observation and projection methods and proposed applying the projection twice to constrain both the entire distribution and its statistics. Yang and Blasch [16] proposed a method specifically for second-order nonlinear constraints between states. For second-order constraints, there is a trade-off between reducing the approximation errors and keeping the computational cost tractable.

1.3 Combination of Kalman filters and multibody dynamics

Many approaches for combining Kalman filters and multibody dynamics have been studied over the past 20 years [17].

Cuadrado et al. [18] used the continuous-time extended Kalman filter (CEKF) to estimate the states of a multibody system. In their study, the DAEs were converted into state equations via two methods: the matrix-R method, which removes the dependent generalized coordinates and the Lagrange multipliers, and the penalty method, which eliminates the Lagrange multipliers. The observer constructed using the matrix-R method yielded better results than that constructed using the penalty method. Cuadrado et al. [19] developed real-time automotive observers based on the work presented in [18]; although good convergence was demonstrated, the computational cost was high. Pastorino et al. [20] compared the performance of Kalman filters implemented with multibody models, namely the CEKF, UKF, and spherical simplex UKF (SSUKF), using the matrix-R method to transform the DAEs into state equations. The UKF and SSUKF approximated the system nonlinearities better than the CEKF and hence achieved better accuracy, at an additional computational cost. Sanjurjo et al. [21] introduced state observers that combine multibody models with an error-state extended Kalman filter (errorEKF) and compared them with previously proposed observers, namely the discrete-time extended Kalman filter (DEKF), CEKF, and UKF. These observers were tested on four- and five-bar linkages with position and velocity sensors in several configurations and at several sampling rates. The results showed that the errorEKF was the most computationally efficient and that its computational cost was among the least affected by the increase in system size from the four- to the five-bar linkage. Sanjurjo et al. [22] extended this work by proposing two state observers for multibody models: the errorEKF with the exact Jacobian matrix of the plant (errorEKF-EJ) and the errorEKF with force estimation (errorEKF-FE). The errorEKF-FE follows the same structure as the errorEKF-EJ and additionally estimates the input forces. The performance of these observers was compared with that of the errorEKF and UKF on four- and five-bar linkages. In terms of accuracy, the errorEKF-EJ outperformed the errorEKF with a slight increase in computational time, and it achieved nearly the same accuracy as the UKF with a much shorter computational time. The errorEKF-FE was the only method that yielded reliable estimates when accelerometers were used, at a computational cost slightly higher than that of the errorEKF-EJ. Rodríguez et al. [23] presented a state-parameter-input observer for vehicle dynamics. Because vehicle models involve a considerable number of parameters, state and parameter estimation were combined to improve accuracy; this approach is referred to as the dual Kalman filter method, and the state and parameter estimators were the errorEKF-FE and UKF, respectively. Rodríguez et al. [24] also proposed an adaptive errorEKF-FE (AerrorEKF-FE), in which an adaptive technique known as the maximum likelihood method was tailored to the errorEKF-FE. The accuracy of Kalman filters depends on the noise covariance matrices, which are usually unknown; therefore, they are estimated via adaptive techniques.

Various studies on state and parameter estimation of systems based on multibody dynamics and hydraulics have recently been conducted. Jaiswal et al. [25] extended the errorEKF-EJ to hydraulically actuated systems, using a monolithic approach to couple the multibody system and the hydraulic subsystem. In their case study of a hydraulically actuated four-bar linkage, the work cycle of the system and the hydraulic pressures were accurately estimated. Khadim et al. [26] developed a state observer based on the UKF and a multiphysics model of a forestry crane; in experiments, the non-measured states were estimated with reasonable accuracy. Pyrhönen et al. [27] proposed an online algorithm for identifying the load mass of a hydraulically driven crane. The algorithm consists of two parts: first, the states are estimated using the mechanism kinematics and hydraulic system dynamics; second, the unknown load mass is estimated using inverse dynamics. Both the states and the mass were accurately estimated.

Naets et al. [28] proposed the subsystem global modal parametrization (SS-GMP) approach, which reduces the number of variables of multibody models so that real-time capable models can be obtained without a drastic loss of accuracy. The augmented DEKF (ADEKF) was used to estimate the states and inputs of the reduced multibody model; notably, this approach was not robust against incorrect initial conditions. Hagh et al. [29] applied four adaptive sigma-point Kalman filters, namely the adaptive UKF, adaptive square-root UKF, adaptive scaled spherical simplex UKF, and adaptive square-root scaled spherical simplex UKF, to a simulated rigid–flexible four-bar linkage and an experimental servo-hydraulic actuator, and examined their performance under various operating conditions. Pyrhönen et al. [30] proposed an accurate state-transition model for the DEKF framework, based on the coordinate-partitioning method and linearized using an exponential integration scheme; it was more accurate and numerically stable than forward Euler linearization. Palomba et al. [31] proposed a general theory for designing state observers based on kinematic models of multibody systems, assuming rigid bodies and negligible joint clearance. This approach yields a simple formulation and was validated through numerical and experimental tests on open- and closed-chain multibody systems, with the EKF and UKF as state observers. A two-stage approach was presented in [32], in which two observers run simultaneously: in the first stage, the states are estimated by a state observer based on kinematic models [31]; in the second stage, unknown inputs are estimated by a force observer based on dynamic models. This approach was numerically validated on a closed-chain multibody system.

Risaliti et al. [33] presented an ADEKF for flexible multibody models governed by DAEs. The penalty method was used to convert the DAEs into ordinary differential equations (ODEs), which eliminates the Lagrange multipliers from the equations but requires additional effort to select effective penalty factors. The method was applied to estimate the six wheel-center loads and the strain fields of a vehicle suspension system; all six loads and the strain fields were accurately estimated when the model incorporated accurate relationships between the estimated and measured loads. In [34], the UKF was used to estimate the states of a flexible cable, and the state estimates were then used to obtain control inputs. The cable, modeled by the absolute nodal coordinate formulation [35–37], was attached at its two ends to an unmanned aerial vehicle and a rigid body by pin joints, yielding a multibody system intended for construction inspection and disaster monitoring. Cuadrado et al. [38] used acceleration measurements to tune the EKF parameters for optical motion capture. Their study offered two key advantages: first, with a marker-based optical system, the EKF significantly improved the efficiency of motion capture and reconstruction; second, acceleration measurements from inertial sensors assisted in adjusting the tuning parameters, improving the overall reliability of the obtained accelerations. Adduci et al. [39] generalized the methodology of [33], eliminating the effort required to determine effective penalty factors. They proposed a linearization approach for DAEs that extracts the system matrices required in the DEKF scheme, together with output equations augmented by the constraint equations of the DAEs, and used both in the DEKF scheme. The method was experimentally validated on a slider-crank mechanism.
Mohammadi et al. [40] incorporated the UKF into multibody dynamics to estimate the states of flexible multibody systems. Both reference and modal coordinates were used to form the equations of motion. Because the modal coordinates have no physical meaning, a novel technique for converting sensor measurements into these non-physical coordinates was proposed. Capalbo et al. [41] presented an approach for estimating the states, inputs, and parameters of flexible structures that integrates parametric model order reduction into an augmented EKF; it was validated both numerically and experimentally. Tamarozzi et al. [42] proposed an augmented manifold differential-algebraic extended Kalman filter (AMANDA-EKF) for multibody systems, which consists of two steps: the removal of the Lagrange multipliers from the state vector through a null-space projection, followed by the solution of a constrained optimization problem.

1.4 Differences between proposed and conventional methods

Various approaches for incorporating multibody dynamics into the Kalman filter scheme have been studied. Although the dynamics of multibody systems are commonly described by DAEs, Kalman filters have been formulated for systems described by ODEs. Thus, to apply Kalman filters to multibody systems formulated as DAEs, the DAEs must be converted into ODEs.

In [18–22], the matrix-R method was used to obtain ODEs in minimal coordinates. To convert DAEs in dependent coordinates into ODEs in minimal coordinates, the constraint equations of the DAEs must be eliminated while maintaining the mathematical and physical correctness of the system. Eliminating the constraint equations requires additional effort from users. Moreover, it reduces the information that can be obtained through the Kalman filter scheme. To the best of our knowledge, the method presented in [39] is the only method that applies a Kalman filter to multibody systems described by DAEs while keeping all the variables of the DAEs, namely the generalized coordinates, generalized velocities, and Lagrange multipliers, in the state vector, where they are estimated by the Kalman filter. The Lagrange multipliers allow the forces associated with the constraints to be calculated [7], and these forces contribute to the design and strength analysis of multibody systems [43]. The Lagrange multipliers can also be calculated algebraically in post-processing from the generalized coordinates and velocities estimated by the Kalman filter. However, the errors generated in the Kalman filter scheme are amplified by this post-calculation because Kalman filters assume that the errors are independent of the states. In contrast, when the state vector includes the Lagrange multipliers, the Kalman filter estimates them directly and reduces their errors at each step, so this problem does not occur.

However, it should be noted that systems using minimal coordinates tend to be more computationally efficient than those using dependent coordinates. Thus, both dependent- and reduced-coordinate usages have their own advantages and disadvantages. For clarity, conversion methods that reduce the number of states were not considered in this study.

A feature common to the proposed method and the method in [39] is that the constraint equations of the DAEs need not be eliminated. Instead, they are exploited in the output equations as perfect measurements, thereby enforcing the kinematic constraints in the estimation schemes. Further comparisons between the proposed and conventional methods are summarized in Table 1. In [39], the states were further augmented with unknown input forces, which were assumed to follow random-walk models, and the control input was accurately estimated during validation. Input estimation is not considered in this study and will be addressed in future work. Another difference lies in the output models. The output model in [39] comprises a sensor model and a constraint vector, which is sufficient to obtain an observable system when combined with the state equations of [39]. However, observable systems were not achieved when this output model was used with the proposed state equations. Therefore, we propose a Lagrange multiplier constraint vector and employ it in the output model, thereby improving system observability. Although the method in [39] offers several advantages, it has three limitations. First, its state equations were expressed only in discrete-time form, whereas the proposed state equations can be expressed in both continuous- and discrete-time forms. Second, forward discretization schemes could not be used with its linearization approach, whereas the proposed state equations have no such limitation. Third, the linearization approach was specific to the DEKF scheme, whereas the proposed approach yields the general form of a state-space model, so any scheme formulated for state-space models is applicable to it. Therefore, the proposed method provides users with more choices.

1.5 Research objectives

This study has two primary research objectives.

The first objective is the proposal of a novel method for converting DAEs into a state-space model. We propose a transition model of the time derivatives of the Lagrange multipliers used in the proposed state equations. In addition, we propose a Lagrange multiplier constraint vector and use it in the proposed output equations, thereby improving the system observability.

The second objective is the verification of the proposed state-space model. State estimations are simulated on benchmark four- and five-bar linkages. The CEKF and DEKF are constructed using the proposed state-space model. The Popov–Belevitch–Hautus rank test [44] is employed for observability analysis. Tests with errors in the parameters and initial positions are conducted.

The remainder of this paper is organized as follows. Section 2 explains the derivation of the proposed state-space model. Section 3 describes the structures of CEKF and DEKF. Section 4 presents the verification method and the results. Finally, Sect. 5 presents the conclusions drawn from this study.

2 Novel method for converting DAEs into a state-space model

The dynamics of holonomic multibody systems can be described by DAEs that are composed of second-order differential and algebraic equations. The differential equations represent the dynamics of multibody systems, whereas the algebraic equations represent the kinematic constraints of the systems. Index-3 DAEs [7] are formulated as

where \(\mathbf{M}\) is a mass matrix, \(\mathbf{Q}\) is a generalized force vector, \(\mathbf{q}\) is a generalized coordinate vector, \(\boldsymbol{\lambda}\) is a Lagrange multiplier vector, \(\boldsymbol{\Phi}\) is a constraint vector, \(\boldsymbol{\Phi}_{\mathbf{q}}\) is a Jacobian matrix of the constraint vector with respect to the generalized coordinate vector, and \(m\) is the number of constraints. Index-1 DAEs in matrix form [7] are formulated as

where \(\boldsymbol{\gamma}\) is a vector associated with the second-order time derivative of the constraint vector.
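In the notation defined above, the standard index-3 and index-1 forms can be sketched as follows. This sketch assumes time-independent constraints, for which \(\boldsymbol{\gamma} = -\dot{\boldsymbol{\Phi}}_{\mathbf{q}}\dot{\mathbf{q}}\) follows from differentiating \(\boldsymbol{\Phi}(\mathbf{q}) = \mathbf{0}\) twice with respect to time; time-dependent constraints add further terms in \(t\).

```latex
% Index-3 DAEs: equations of motion with Lagrange multipliers plus position-level constraints
\mathbf{M}\ddot{\mathbf{q}} + \boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}}\boldsymbol{\lambda} = \mathbf{Q},
\qquad
\boldsymbol{\Phi}(\mathbf{q}) = \mathbf{0} \in \mathbb{R}^{m}

% Index-1 DAEs: constraints differentiated twice, \Phi_q \ddot{q} = \gamma
\begin{bmatrix} \mathbf{M} & \boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}} \\ \boldsymbol{\Phi}_{\mathbf{q}} & \mathbf{0} \end{bmatrix}
\begin{bmatrix} \ddot{\mathbf{q}} \\ \boldsymbol{\lambda} \end{bmatrix}
=
\begin{bmatrix} \mathbf{Q} \\ \boldsymbol{\gamma} \end{bmatrix},
\qquad
\boldsymbol{\gamma} = -\dot{\boldsymbol{\Phi}}_{\mathbf{q}}\dot{\mathbf{q}}
```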

The DAEs must be converted into a state-space model composed of the state and output equations to fit the Kalman filter scheme. In this section, we propose a method for converting the DAEs into state and output equations.

2.1 Proposed state equations

State equations are first-order ODEs that describe the dynamics of systems. The DAEs that represent the dynamics of multibody systems include both algebraic and differential equations, and the differential equations contain the Lagrange multipliers. The derivation of the proposed state equations begins with the index-1 DAEs of Eq. (2). If the mass matrix is nonsingular and the constraints are independent, so that \(\boldsymbol{\Phi}_{\mathbf{q}}\mathbf{M}^{-1}\boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}}\) is also nonsingular, the coefficient matrix in Eq. (2) can be inverted in block-matrix form; hence, the generalized acceleration and Lagrange multiplier vectors can be expressed as

where \(\mathbf{f}^{\mathrm{acc}}(\cdot)\) and \(\mathbf{f}^{\mathrm{Lag}}(\cdot)\) are continuous-time transition models of the acceleration and Lagrange multipliers, respectively. They are summarized as

Exploiting the fact that both models depend only on the generalized coordinates and velocities, we propose a transition model of the time derivatives of the Lagrange multipliers. Differentiating both sides of Eq. (5) with respect to time and substituting Eq. (4), we obtain the following:

where \(\mathbf{f}^{\mathrm{LagDyn}}(\cdot)\) is a continuous-time transition model of the time derivatives of the Lagrange multipliers. Because the generalized acceleration vector is replaced by its transition model, this model depends only on the generalized coordinates and velocities. The partial derivatives are obtained analytically, as explained in Appendix A. Although analytical differentiation is the most computationally efficient way to evaluate derivatives, it requires substantial implementation effort [45], and the resulting expressions can be complex, particularly for relative coordinates. Hence, approximations of these terms will be addressed in future research. Using the proposed transition model, we define a continuous-time transition model of the states, where the state vector is defined as

Using Eqs. (4) and (6), the proposed state equations can be formulated as

where \(\mathbf{f}^{\mathrm{cont}}(\cdot)\) is a continuous-time transition model of the states.
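The transition models can be illustrated on a deliberately small example that is not taken from this paper: a planar pendulum in natural coordinates \(\mathbf{q} = [x\ y]^{\mathrm{T}}\) with the single constraint \(x^{2} + y^{2} - L^{2} = 0\). The sketch below evaluates \(\mathbf{f}^{\mathrm{Lag}}\) and \(\mathbf{f}^{\mathrm{acc}}\) with the Schur-complement form of the block inverse, \(\boldsymbol{\lambda} = (\boldsymbol{\Phi}_{\mathbf{q}}\mathbf{M}^{-1}\boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}})^{-1}(\boldsymbol{\Phi}_{\mathbf{q}}\mathbf{M}^{-1}\mathbf{Q} - \boldsymbol{\gamma})\) and \(\ddot{\mathbf{q}} = \mathbf{M}^{-1}(\mathbf{Q} - \boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}}\boldsymbol{\lambda})\), and evaluates \(\mathbf{f}^{\mathrm{LagDyn}}\) by the chain rule \(\dot{\boldsymbol{\lambda}} = (\partial \mathbf{f}^{\mathrm{Lag}}/\partial \mathbf{q})\dot{\mathbf{q}} + (\partial \mathbf{f}^{\mathrm{Lag}}/\partial \dot{\mathbf{q}})\mathbf{f}^{\mathrm{acc}}\). Central finite differences stand in for the analytical derivatives of Appendix A; that substitution, the state ordering \([\mathbf{q}, \dot{\mathbf{q}}, \boldsymbol{\lambda}]\), and all function names are assumptions of this sketch.

```python
import numpy as np

# Hypothetical example (not from the paper): planar pendulum in natural coordinates
# q = [x, y] with one constraint Phi(q) = x^2 + y^2 - length^2 = 0.
mass, length, grav = 1.0, 1.0, 9.81

def mass_matrix(q):
    return mass * np.eye(2)

def gen_force(q, qd):
    return np.array([0.0, -mass * grav])          # gravity acting in -y

def phi_q(q):
    return np.array([[2.0 * q[0], 2.0 * q[1]]])   # constraint Jacobian, shape (1, 2)

def gamma(q, qd):
    return np.array([-2.0 * (qd @ qd)])           # Phi_q qdd = gamma for this constraint

def f_lag(q, qd):
    # Schur-complement form: lambda = (Phi_q M^-1 Phi_q^T)^-1 (Phi_q M^-1 Q - gamma)
    M, Pq = mass_matrix(q), phi_q(q)
    Minv_Q = np.linalg.solve(M, gen_force(q, qd))
    Minv_PqT = np.linalg.solve(M, Pq.T)
    return np.linalg.solve(Pq @ Minv_PqT, Pq @ Minv_Q - gamma(q, qd))

def f_acc(q, qd):
    # qdd = M^-1 (Q - Phi_q^T lambda)
    rhs = gen_force(q, qd) - phi_q(q).T @ f_lag(q, qd)
    return np.linalg.solve(mass_matrix(q), rhs)

def num_jac(fun, z, h=1e-6):
    # Central-difference Jacobian (stand-in for the analytical derivatives)
    f0 = np.atleast_1d(fun(z))
    J = np.zeros((f0.size, z.size))
    for k in range(z.size):
        dz = np.zeros_like(z)
        dz[k] = h
        J[:, k] = (np.atleast_1d(fun(z + dz)) - np.atleast_1d(fun(z - dz))) / (2.0 * h)
    return J

def f_lagdyn(q, qd):
    # Chain rule: lambda_dot = (d f_lag/dq) qd + (d f_lag/dqd) f_acc(q, qd)
    dl_dq = num_jac(lambda z: f_lag(z, qd), q)
    dl_dqd = num_jac(lambda z: f_lag(q, z), qd)
    return dl_dq @ qd + dl_dqd @ f_acc(q, qd)

def f_cont(x, n=2):
    # State x = [q, qd, lambda]; the right-hand side never reads lambda
    q, qd = x[:n], x[n:2 * n]
    return np.concatenate([qd, f_acc(q, qd), f_lagdyn(q, qd)])
```

For this pendulum, the result can be checked by hand: on a consistent state, \(\lambda = m(|\dot{\mathbf{q}}|^{2} - g y)/(2L^{2})\), and energy conservation then gives \(\dot{\lambda} = -3 m g \dot{y}/(2L^{2})\).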

2.2 Conventional output equations

The state vector defined in Eq. (7) includes dependent variables, which may result in a lack of observability. Thus, the output equations must be improved to guarantee the observability of the system. The general output equations, which relate the states to the physical quantities measured by the sensors, are expressed as

where \(\mathbf{y}^{\mathrm{sensor}}\) is an output vector containing the physical quantities measured by the sensors, and \(\mathbf{h}^{\mathrm{sensor}}(\cdot)\) is a sensor model. Because Eq. (9) contains general output equations that include only the sensor models, they are referred to as the sensor output equations in this study. Because the proposed state equations include dependent variables, many states must be measured to render the system observable, which requires many sensors. Moreover, certain states, such as the Lagrange multipliers, are difficult to measure. Hence, it is not realistic to measure all the states required to render the system observable. To solve this problem, the general output equations are augmented with the algebraic equations of the DAEs. In [13], various methods for exploiting state constraints in Kalman filter schemes were introduced.

In [39], the constraint equations of the DAEs were treated as perfect measurements (PMs) and used to augment the general output equations of Eq. (9), that is,

where \(\mathbf{h}^{\mathrm{conv}}(\cdot)\) denotes a conventional PM output model. Equation (10) expresses the output equations presented by Adduci et al. [39], which are referred to as the conventional PM output equations in this study.
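Assuming the state vector of Eq. (7) is ordered as \(\mathbf{x} = [\mathbf{q}^{\mathrm{T}}\ \dot{\mathbf{q}}^{\mathrm{T}}\ \boldsymbol{\lambda}^{\mathrm{T}}]^{\mathrm{T}}\), the conventional PM output equations and their Jacobian take the form sketched below. The constraint rows depend only on \(\mathbf{q}\), so they contribute nothing to the columns associated with \(\boldsymbol{\lambda}\).

```latex
% Conventional PM output: sensor model stacked with the constraints, treated as zero-valued measurements
\begin{bmatrix} \mathbf{y}^{\mathrm{sensor}} \\ \mathbf{0} \end{bmatrix}
= \mathbf{h}^{\mathrm{conv}}(\mathbf{x})
= \begin{bmatrix} \mathbf{h}^{\mathrm{sensor}}(\mathbf{x}) \\ \boldsymbol{\Phi}(\mathbf{q}) \end{bmatrix},
\qquad
\frac{\partial \mathbf{h}^{\mathrm{conv}}}{\partial \mathbf{x}}
= \begin{bmatrix}
\partial \mathbf{h}^{\mathrm{sensor}}/\partial \mathbf{q} & \partial \mathbf{h}^{\mathrm{sensor}}/\partial \dot{\mathbf{q}} & \partial \mathbf{h}^{\mathrm{sensor}}/\partial \boldsymbol{\lambda} \\
\boldsymbol{\Phi}_{\mathbf{q}} & \mathbf{0} & \mathbf{0}
\end{bmatrix}
```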

2.3 Proposed output equations

The output equations in Eq. (10) enforce the kinematic constraints and provide information on the dependent generalized coordinates in the estimation schemes. However, they do not provide any information on the Lagrange multipliers, and this lack of information renders the system unobservable when they are used with the proposed state equations of Eq. (8). Consequently, we propose a Lagrange multiplier constraint vector. Moving the Lagrange multiplier vector in Eq. (5) to the right-hand side, the Lagrange multiplier constraint equations are formulated as

where \(\boldsymbol{\Phi}^{\mathrm{Lag}}\) denotes a Lagrange multiplier constraint vector. We propose treating these equations as perfect measurements and augmenting the output equations of Eq. (10) with them, that is,

where \(\mathbf{h}^{\mathrm{prop}}(\cdot)\) denotes the proposed PM output model. Equation (12) expresses the output equations proposed in this study, which are referred to as the proposed PM output equations. In the Kalman filter scheme, the output equations of Eq. (12) provide information on both the dependent generalized coordinates and the Lagrange multipliers. Hence, they play an important role in improving system observability when used with the proposed state equations of Eq. (8). To verify this, a study of system observability is presented in Sect. 4.2.
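One way to see why the Lagrange multiplier constraints restore observability: because \(\mathbf{f}^{\mathrm{acc}}\) and \(\mathbf{f}^{\mathrm{LagDyn}}\) depend only on \(\mathbf{q}\) and \(\dot{\mathbf{q}}\), the columns of the state Jacobian associated with \(\boldsymbol{\lambda}\) are zero, so every \(\boldsymbol{\lambda}\) direction is an eigenvector of that Jacobian with eigenvalue 0. By the Popov–Belevitch–Hautus (PBH) test, such a direction is unobservable unless the output Jacobian has a nonzero \(\boldsymbol{\lambda}\) block. Gyroscope outputs and \(\boldsymbol{\Phi}(\mathbf{q})\) have none, whereas \(\boldsymbol{\Phi}^{\mathrm{Lag}} = \mathbf{f}^{\mathrm{Lag}}(\mathbf{q}, \dot{\mathbf{q}}) - \boldsymbol{\lambda}\) contributes \(-\mathbf{I}_{m}\). The sketch below applies the PBH rank test to a hypothetical linearized toy system with one coordinate, one velocity, and one multiplier; the matrices are illustrative values, not linearizations of the four- or five-bar models.

```python
import numpy as np

def pbh_observable(A, C, tol=1e-9):
    """PBH test: (A, C) is observable iff rank([s I - A; C]) = n for every eigenvalue s of A."""
    n = A.shape[0]
    for s in np.linalg.eigvals(A):
        test_matrix = np.vstack([s * np.eye(n) - A, C])
        if np.linalg.matrix_rank(test_matrix, tol=tol) < n:
            return False
    return True

# Hypothetical linearization with state [q, qd, lam]. The lam column of A is zero
# because the state equations do not depend on the Lagrange multiplier.
k, a, b = 4.0, 0.5, 2.0
A = np.array([[0.0, 1.0, 0.0],
              [-k,  0.0, 0.0],
              [a,   b,   0.0]])
C_sensor = np.array([[0.0, 1.0, 0.0]])              # velocity-type (gyroscope-like) sensor
C_conv = np.vstack([C_sensor, [[1.0, 0.0, 0.0]]])   # + a constraint row depending on q only
C_prop = np.vstack([C_conv, [[0.3, 0.1, -1.0]]])    # + a multiplier-constraint row, -1 on lam

for name, C in [("sensor", C_sensor), ("conventional PM", C_conv), ("proposed PM", C_prop)]:
    print(name, pbh_observable(A, C))
```

In this toy case, the sensor-only and conventional outputs fail the test at \(s = 0\), and only the output containing the multiplier-constraint row passes.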

3 Structures of state observers

As state observers of multibody systems, CEKF and DEKF [11] were employed in this study. The following explains the structures of the CEKF and DEKF.

3.1 Structure of CEKF

Using the state and output equations, the CEKF is formulated as

where \(\hat{\mathbf{x}}\) is a state estimate vector, \(\mathbf{P}\) is an error covariance matrix, \(\boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{CEKF}}\) is a covariance matrix of the system noise for the CEKF, \(\boldsymbol{\Sigma}_{\mathrm{measure}}\) is a covariance matrix of the measurement noise, \(\mathbf{y}\) is an output vector, \(\mathbf{h}(\cdot)\) is an output model, \(\mathbf{H}(\cdot)\) is a Jacobian matrix of the output model with respect to the state vector, and \(\mathbf{F}^{\mathrm{cont}}(\cdot)\) is a Jacobian matrix of the continuous-time transition model with respect to the state vector. The Jacobian matrix \(\mathbf{F}^{\mathrm{cont}}(\cdot)\) is expressed as

where \(n\) is the number of generalized coordinates. The partial derivatives are obtained analytically, as explained in Appendix A. As mentioned earlier, the analytical expressions of the partial derivatives can be complicated; hence, approximations of these terms will be investigated in future work. The UKF will also be considered, because it does not require Jacobian matrices. The output vector \(\mathbf{y}\) and output model \(\mathbf{h}(\cdot)\) are given by Eq. (9), (10), or (12), and the Jacobian matrices of the corresponding output models with respect to the state vector are, respectively,

where \(s\) is the number of physical quantities measured by sensors.
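A minimal sketch of one CEKF integration step follows, assuming the standard continuous-time EKF (Kalman–Bucy) form \(\dot{\hat{\mathbf{x}}} = \mathbf{f}^{\mathrm{cont}}(\hat{\mathbf{x}}) + \mathbf{K}(\mathbf{y} - \mathbf{h}(\hat{\mathbf{x}}))\) and \(\dot{\mathbf{P}} = \mathbf{F}^{\mathrm{cont}}\mathbf{P} + \mathbf{P}\mathbf{F}^{\mathrm{cont}\,\mathrm{T}} + \boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{CEKF}} - \mathbf{K}\mathbf{H}\mathbf{P}\) with \(\mathbf{K} = \mathbf{P}\mathbf{H}^{\mathrm{T}}\boldsymbol{\Sigma}_{\mathrm{measure}}^{-1}\). The estimate and covariance are advanced together with one RK4 step while the measurement is held constant over the step, which is an assumption of this sketch. The function names are hypothetical, and the usage example is a scalar linear system rather than a multibody model.

```python
import numpy as np

def cekf_rhs(x_hat, P, y, f, F, h, H, Sigma_sys, Sigma_meas):
    """Time derivatives of the state estimate and error covariance (continuous-time EKF)."""
    Fx, Hx = F(x_hat), H(x_hat)
    # Gain K = P H^T Sigma_meas^-1 via a linear solve (P and Sigma_meas are symmetric)
    K = np.linalg.solve(Sigma_meas, Hx @ P).T
    x_dot = f(x_hat) + K @ (y - h(x_hat))
    P_dot = Fx @ P + P @ Fx.T + Sigma_sys - K @ Hx @ P
    return x_dot, P_dot

def cekf_step(x_hat, P, y, dt, model):
    """One RK4 step of the coupled (x_hat, P) equations; y is held constant over the step."""
    k1x, k1P = cekf_rhs(x_hat, P, y, *model)
    k2x, k2P = cekf_rhs(x_hat + 0.5 * dt * k1x, P + 0.5 * dt * k1P, y, *model)
    k3x, k3P = cekf_rhs(x_hat + 0.5 * dt * k2x, P + 0.5 * dt * k2P, y, *model)
    k4x, k4P = cekf_rhs(x_hat + dt * k3x, P + dt * k3P, y, *model)
    x_new = x_hat + dt / 6.0 * (k1x + 2 * k2x + 2 * k3x + k4x)
    P_new = P + dt / 6.0 * (k1P + 2 * k2P + 2 * k3P + k4P)
    return x_new, 0.5 * (P_new + P_new.T)   # re-symmetrize to limit round-off drift

# Usage on the scalar system x' = -x, y = x with unit noise variances
model = (lambda x: -x, lambda x: np.array([[-1.0]]),
         lambda x: x, lambda x: np.array([[1.0]]),
         np.eye(1), np.eye(1))
x_hat, P = np.zeros(1), np.eye(1)
for _ in range(2000):
    x_hat, P = cekf_step(x_hat, P, np.zeros(1), 5e-3, model)
print(P[0, 0])   # approaches the Riccati solution sqrt(2) - 1
```

The time step of 5e-3 s mirrors the RK4 step used later in this study; in the scalar case, the covariance settles at the steady-state Riccati solution, which is a quick sanity check of the signs in the covariance equation.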

3.2 Structure of DEKF

To apply the DEKF, the state equations must be discretized in time. In this study, the fourth-order explicit Runge–Kutta method (RK4) was used to discretize Eq. (8), that is,

where \(\Delta t\) is a time step, \(\mathbf{f}^{\mathrm{RK4}}(\cdot)\) is the gradient vector of the RK4, explained in Appendix B, and \(\mathbf{f}^{\mathrm{disc}}(\cdot)\) is a discrete-time transition model of the states.
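A minimal sketch of the RK4 discretization \(\mathbf{f}^{\mathrm{disc}}\) with the classical RK4 weights follows. The Jacobian \(\mathbf{F}^{\mathrm{disc}}\), which the paper derives analytically in Appendix B, is approximated here by central finite differences instead; that substitution and the function names are assumptions of the sketch.

```python
import numpy as np

def f_disc(x, f_cont, dt):
    """Discrete-time transition x_{k+1} = x_k + dt * f_RK4(x_k) with classical RK4 weights."""
    k1 = f_cont(x)
    k2 = f_cont(x + 0.5 * dt * k1)
    k3 = f_cont(x + 0.5 * dt * k2)
    k4 = f_cont(x + dt * k3)
    f_rk4 = (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0   # weighted average slope over the step
    return x + dt * f_rk4

def F_disc_fd(x, f_cont, dt, h=1e-6):
    """Central-difference approximation of the Jacobian of f_disc (stand-in for Appendix B)."""
    n = x.size
    J = np.zeros((n, n))
    for k in range(n):
        e = np.zeros(n)
        e[k] = h
        J[:, k] = (f_disc(x + e, f_cont, dt) - f_disc(x - e, f_cont, dt)) / (2.0 * h)
    return J

# Usage on x' = -x: one step agrees with exp(-dt) up to the O(dt^5) local error of RK4
dt = 0.1
print(f_disc(np.array([1.0]), lambda x: -x, dt), np.exp(-dt))
```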

The DEKF comprises two stages: prediction and update. States are first predicted using transition models in the prediction stage, and the outputs are then used to improve the predicted states in the update stage. The prediction stage involves the following:

where \(\boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{DEKF}}\) is a covariance matrix of the system noise for the DEKF, and \(\mathbf{F}^{\mathrm{disc}}(\cdot)\) is a Jacobian matrix of the discrete-time transition model with respect to the state vector, as explained in Appendix B. The update stage involves the following:

where \(\mathbf{G}\) is a Kalman gain matrix.
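The two stages can be sketched as follows, assuming the standard discrete-time EKF equations: prediction \(\hat{\mathbf{x}}^{-} = \mathbf{f}^{\mathrm{disc}}(\hat{\mathbf{x}})\), \(\mathbf{P}^{-} = \mathbf{F}^{\mathrm{disc}}\mathbf{P}\mathbf{F}^{\mathrm{disc}\,\mathrm{T}} + \boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{DEKF}}\), and an update with gain \(\mathbf{G} = \mathbf{P}^{-}\mathbf{H}^{\mathrm{T}}(\mathbf{H}\mathbf{P}^{-}\mathbf{H}^{\mathrm{T}} + \boldsymbol{\Sigma}_{\mathrm{measure}})^{-1}\). For the covariance update, the sketch uses the Joseph form, a design choice of this sketch rather than of the paper, which keeps \(\mathbf{P}\) symmetric and positive semidefinite even when very small variances are assigned to the perfect-measurement rows. Function names are hypothetical.

```python
import numpy as np

def dekf_predict(x_hat, P, f_disc, F_disc, Sigma_sys):
    """Prediction stage: propagate the estimate and covariance through the discrete model."""
    Fk = F_disc(x_hat)
    return f_disc(x_hat), Fk @ P @ Fk.T + Sigma_sys

def dekf_update(x_pred, P_pred, y, h, H, Sigma_meas):
    """Update stage: correct the prediction with the measurement vector y."""
    Hk = H(x_pred)
    S = Hk @ P_pred @ Hk.T + Sigma_meas              # innovation covariance
    G = np.linalg.solve(S, Hk @ P_pred).T            # Kalman gain P H^T S^-1 (S, P symmetric)
    x_new = x_pred + G @ (y - h(x_pred))
    I_G = np.eye(P_pred.shape[0]) - G @ Hk
    P_new = I_G @ P_pred @ I_G.T + G @ Sigma_meas @ G.T   # Joseph form
    return x_new, P_new

# Scalar check: identity model, unit prior and measurement variances, measurement y = 2
x_p, P_p = dekf_predict(np.zeros(1), np.eye(1), lambda x: x, lambda x: np.eye(1), np.zeros((1, 1)))
x_u, P_u = dekf_update(x_p, P_p, np.array([2.0]), lambda x: x, lambda x: np.eye(1), np.eye(1))
print(x_u, P_u)   # gain 0.5: estimate [1.], covariance [[0.5]]
```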

3.3 Covariance matrices of system and measurement noises

For the EKFs, the optimal combination of tuning parameters (covariance matrices) differs depending on the initial conditions, and determining these parameters for each initial condition requires considerable time. In [24, 38], adaptive techniques were combined with the EKF to estimate the covariance matrices; however, this is beyond the scope of this study, and the optimal combinations of the tuning parameters were not explored. Instead, the covariance matrices are simplified as follows.

The covariance matrices of system noise for the CEKF and DEKF are respectively defined as

where \(\sigma^{\mathrm{coord}}\), \(\sigma^{\mathrm{vel}}\), \(\sigma^{\mathrm{acc}}\), \(\sigma^{\mathrm{Lag}}\), and \(\sigma^{\mathrm{LagDyn}}\) are the variances of the system noise for the coordinates, velocities, accelerations, Lagrange multipliers, and time derivatives of the Lagrange multipliers, respectively.

Three types of covariance matrices for the measurement noise are prepared corresponding to the output models. These are defined as

where \(\sigma^{\mathrm{sensor}}\), \(\sigma^{\mathrm{constr}}\), and \(\sigma^{\mathrm{LagConstr}}\) are the variances of the measurement noise for the sensors, constraints, and Lagrange multiplier constraints, respectively. In each state estimation, one of these matrices is employed, depending on the output model.

Therefore, the parametric study described herein involves determining the values of \(\sigma^{\mathrm{vel}}\), \(\sigma^{\mathrm{acc}}\), \(\sigma^{\mathrm{LagDyn}}\), \(\sigma^{\mathrm{sensor}}\), \(\sigma^{\mathrm{constr}}\), and \(\sigma^{\mathrm{LagConstr}}\) for the CEKF and \(\sigma^{\mathrm{coord}}\), \(\sigma^{\mathrm{vel}}\), \(\sigma^{\mathrm{Lag}}\), \(\sigma^{\mathrm{sensor}}\), \(\sigma^{\mathrm{constr}}\), and \(\sigma^{\mathrm{LagConstr}}\) for the DEKF.
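Reading each variance above as a scalar multiple of an identity block, with the state ordered as \([\mathbf{q}, \dot{\mathbf{q}}, \boldsymbol{\lambda}]\), is an assumption of the following sketch. Under that reading, the matrices could be assembled as in the hypothetical helpers below, where \(n\), \(m\), and \(s\) are the numbers of generalized coordinates, constraints, and sensor outputs.

```python
import numpy as np

def diag_blocks(*blocks):
    """Diagonal matrix assembled from (size, variance) pairs."""
    return np.diag(np.concatenate([np.full(size, var, dtype=float) for size, var in blocks]))

def sigma_sys_cekf(n, m, s_vel, s_acc, s_lagdyn):
    # Noise on the state derivative [qd, qdd, lambda_dot] (n + n + m entries)
    return diag_blocks((n, s_vel), (n, s_acc), (m, s_lagdyn))

def sigma_sys_dekf(n, m, s_coord, s_vel, s_lag):
    # Noise on the discrete-time state [q, qd, lambda] (n + n + m entries)
    return diag_blocks((n, s_coord), (n, s_vel), (m, s_lag))

def sigma_measure(s, m, s_sensor, s_constr=None, s_lagconstr=None):
    # Sensor-only (s), conventional PM (s + m) or proposed PM (s + m + m) outputs
    if s_lagconstr is not None and s_constr is None:
        raise ValueError("the proposed PM output also contains the constraint rows")
    blocks = [(s, s_sensor)]
    if s_constr is not None:
        blocks.append((m, s_constr))
    if s_lagconstr is not None:
        blocks.append((m, s_lagconstr))
    return diag_blocks(*blocks)

# Four-bar sizes assuming natural coordinates: n = 4, m = 3, one gyroscope (s = 1).
# The variance values are placeholders, not the tuning parameters of Tables 5 and 6.
S_sys = sigma_sys_cekf(4, 3, 1e-2, 1e-1, 1e1)
S_prop = sigma_measure(1, 3, 1e-3, 1e-8, 1e-8)
print(S_sys.shape, S_prop.shape)
```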

4 State estimations using four- and five-bar linkages

The three-simulation method [17] was employed to evaluate the accuracy of the state observers. The first simulation was conducted by numerically solving the index-1 DAEs of Eq. (2). It provided the reference values of the generalized coordinates, generalized velocities, and Lagrange multipliers, which were used to evaluate the state observers and to create the sensor outputs. Noise was generated according to [22] and added to the sensor outputs to recreate real-world noise conditions, and the same noise sequence was used throughout for a fair comparison. The second simulation, referred to as the model, was also conducted by numerically solving the index-1 DAEs; however, it included several errors, such as initial positions and parameters that differed from those of the first simulation. The third simulation was the state estimation using the state observers and included the same errors as the second simulation. In addition, the initial values of the Lagrange multipliers were set to zero to represent zero energy, a condition that cannot exist in dynamic systems.

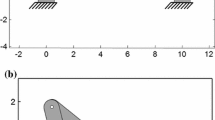

The state estimations were simulated on planar four- and five-bar linkages. The four-bar linkage with a gyroscope on its coupler is shown in Fig. 1a, where \(L_{1}\), \(L_{2}\), and \(L_{3}\) represent the lengths of the crank, coupler, and rocker, respectively, \(\theta _{0}\) is the initial angle between the \(x\)-axis and the crank, and \(X_{1}\), \(Y_{1}\), \(X_{2}\), and \(Y_{2}\) are the coordinates of the joints. The initial angle was set to \(\pi /3\) rad. The five-bar linkage with gyroscopes on its couplers is shown in Fig. 1b, where \(L_{1}\), \(L_{2}\), \(L_{3}\), and \(L_{4}\) represent the lengths of the left crank, left coupler, right coupler, and right crank, respectively, \(\theta _{01}\) and \(\theta _{02}\) are the initial angles between the \(x\)-axis and the left and right cranks, respectively, and \(X_{1}\), \(Y_{1}\), \(X_{2}\), \(Y_{2}\), \(X_{3}\), and \(Y_{3}\) are the coordinates of the joints. The initial angles were set to 0 and \(\pi \) rad, respectively. The outputs of the gyroscopes are angular velocities, denoted as \(\omega \), \(\omega _{1}\), and \(\omega _{2}\), respectively. The properties of the four- and five-bar linkages were determined according to [21] and are summarized in Tables 2 and 3, respectively. The ground element specifies the distance between the two joints attached to the ground. The four- and five-bar linkages were formulated in natural coordinates according to [7], and their generalized coordinate vectors are defined as

The gyroscope model was formulated according to [22], and the gyroscopes were installed on either the couplers or the cranks. In this study, analytical differentiation was used to obtain the partial derivatives for the four- and five-bar linkages. However, numerical differentiation may be required for more complex multibody systems; this will be addressed in our future research.
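For reference, one common way to express a planar gyroscope output in natural coordinates is to differentiate the angle of the bar spanned by two points, which gives \(\omega = (\Delta X\,\Delta\dot{Y} - \Delta Y\,\Delta\dot{X})/(\Delta X^{2} + \Delta Y^{2})\). The sketch below evaluates this expression and its analytical partial derivatives. It is an illustrative assumption, not necessarily the sensor model of [22] (which may include terms such as bias), and the function names are hypothetical.

```python
import numpy as np

def bar_angular_velocity(pa, pb, va, vb):
    """Angular velocity of a planar rigid bar from its endpoint positions and velocities."""
    dx, dy = pb - pa
    dvx, dvy = vb - va
    r2 = dx * dx + dy * dy          # squared distance between the points
    return (dx * dvy - dy * dvx) / r2

def bar_angular_velocity_grad(pa, pb, va, vb):
    """Partial derivatives of omega with respect to pa, pb, va, vb (each of length 2)."""
    dx, dy = pb - pa
    dvx, dvy = vb - va
    r2 = dx * dx + dy * dy
    w = (dx * dvy - dy * dvx) / r2
    d_rel_pos = np.array([dvy - 2.0 * dx * w, -dvx - 2.0 * dy * w]) / r2  # d omega / d(pb - pa)
    d_rel_vel = np.array([-dy, dx]) / r2                                  # d omega / d(vb - va)
    return -d_rel_pos, d_rel_pos, -d_rel_vel, d_rel_vel

# Hypothetical bar of length 2 rotating at 1.5 rad/s about its first point.
phi, length, omega = 0.3, 2.0, 1.5
pa, va = np.array([0.0, 0.0]), np.zeros(2)
pb = length * np.array([np.cos(phi), np.sin(phi)])
vb = omega * length * np.array([-np.sin(phi), np.cos(phi)])
print(bar_angular_velocity(pa, pb, va, vb))  # approximately 1.5
```

Dividing by the actual squared distance \(\Delta X^{2} + \Delta Y^{2}\) instead of \(L^{2}\) keeps the expression exact even when the estimated coordinates violate the length constraints.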

The RK4 was employed to numerically integrate the differential equations of the CEKF. The time step of the RK4 and sampling rate of the gyroscope were respectively set to \(5\times 10^{-3}\) s and 200 Hz, indicating that the gyroscope output was available at every time step. In this study, the initial value of the error covariance matrix \(\mathbf{P}_{0}\) is defined as

where the parameters \(P_{0}^{\mathrm{coord}}\), \(P_{0}^{\mathrm{vel}}\), and \(P_{0}^{\mathrm{Lag}}\) are summarized in Table 4. The tuning parameters of the CEKF and DEKF are summarized in Tables 5 and 6, respectively. As mentioned earlier, finding the optimal combination of tuning parameters is beyond the scope of this study.
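The RK4 integration of the CEKF can be sketched as follows, assuming that Eq. (13) takes the usual Kalman–Bucy form \(\dot{\hat{\mathbf{x}}} = \mathbf{f}(\hat{\mathbf{x}}) + \mathbf{G}(\mathbf{y} - \mathbf{h}(\hat{\mathbf{x}}))\) and \(\dot{\mathbf{P}} = \mathbf{F}\mathbf{P} + \mathbf{P}\mathbf{F}^{\mathrm{T}} + \boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{CEKF}} - \mathbf{P}\mathbf{H}^{\mathrm{T}}\boldsymbol{\Sigma}_{\mathrm{measure}}^{-1}\mathbf{H}\mathbf{P}\) with \(\mathbf{G} = \mathbf{P}\mathbf{H}^{\mathrm{T}}\boldsymbol{\Sigma}_{\mathrm{measure}}^{-1}\), and that each measurement is held constant over one step (plausible here because a gyroscope sample is available at every step). The function names and the scalar test case are hypothetical.

```python
import numpy as np

def cekf_rhs(x, P, y, f, F, h, H, Sigma_sys, Sigma_meas):
    """Right-hand side of the continuous-time EKF in Kalman-Bucy form (assumed)."""
    Hx = H(x)
    # Gain G = P H^T Sigma_meas^{-1}, via a linear solve (P and Sigma_meas symmetric).
    G = np.linalg.solve(Sigma_meas, Hx @ P).T
    dx = f(x) + G @ (y - h(x))
    Fx = F(x)
    # Riccati equation; G @ Hx @ P equals P H^T Sigma_meas^{-1} H P.
    dP = Fx @ P + P @ Fx.T + Sigma_sys - G @ Hx @ P
    return dx, dP

def cekf_rk4_step(x, P, y, dt, *model):
    """Advance (x, P) by one classical RK4 step, holding the measurement y fixed over dt."""
    k1x, k1P = cekf_rhs(x, P, y, *model)
    k2x, k2P = cekf_rhs(x + 0.5 * dt * k1x, P + 0.5 * dt * k1P, y, *model)
    k3x, k3P = cekf_rhs(x + 0.5 * dt * k2x, P + 0.5 * dt * k2P, y, *model)
    k4x, k4P = cekf_rhs(x + dt * k3x, P + dt * k3P, y, *model)
    x_new = x + dt / 6.0 * (k1x + 2.0 * k2x + 2.0 * k3x + k4x)
    P_new = P + dt / 6.0 * (k1P + 2.0 * k2P + 2.0 * k3P + k4P)
    return x_new, 0.5 * (P_new + P_new.T)   # re-symmetrize against round-off

# Hypothetical scalar check: a constant true state 2.0, measured directly.
model = (
    lambda x: np.zeros(1),        # f: no dynamics
    lambda x: np.zeros((1, 1)),   # F: its Jacobian
    lambda x: x.copy(),           # h: direct measurement
    lambda x: np.eye(1),          # H: its Jacobian
    np.zeros((1, 1)),             # Sigma_sys
    np.array([[0.1]]),            # Sigma_meas
)
x, P = np.zeros(1), np.eye(1)
for _ in range(2000):             # 10 s with dt = 5e-3 s, one sample per step
    x, P = cekf_rk4_step(x, P, np.array([2.0]), 5e-3, *model)
```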

In this study, two evaluation approaches were employed. The first was to analyze the L2 norms of errors in generalized coordinates, generalized velocities, and Lagrange multipliers at each time step. These values were calculated using the following equations:

where \(q_{\mathrm{e}}^{\left ( i \right )}\left ( t \right ) \equiv q^{\left ( i \right )}\left ( t \right ) - \hat{q}^{\left ( i \right )}\left ( t \right )\), \(\dot{q}_{\mathrm{e}}^{\left ( i \right )}\left ( t \right ) \equiv \dot{q}^{\left ( i \right )}\left ( t \right ) - \hat{\dot{q}}^{\left ( i \right )}\left ( t \right )\), and \(\lambda _{\mathrm{e}}^{\left ( i \right )}\left ( t \right ) \equiv \lambda ^{\left ( i \right )}\left ( t \right ) - \hat{\lambda}^{\left ( i \right )}\left ( t \right )\). The second evaluation was based on the total normalized root mean square error (NRMSE), defined as

where \(N\) is the number of data points for each state. The reference values of the generalized coordinates, generalized velocities, and Lagrange multipliers were obtained from the first simulation. Because this calculation evaluates the state observers, the estimated values were taken from the third simulation.
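Because Eqs. (23) and (24) are not reproduced here, the following is only a sketch of the two metrics under stated assumptions: the per-step error norm is the Euclidean norm over the components of one state group (e.g., all generalized coordinates), and the total NRMSE sums, over state components, the RMSE divided by the range of the reference values. The range normalization is suggested by, but not confirmed from, the max/min notation in the Abbreviations; the function names and data are hypothetical.

```python
import numpy as np

def error_l2_norms(ref, est):
    """Per-time-step L2 norm of the estimation error; ref and est have shape (N, dim)."""
    return np.linalg.norm(ref - est, axis=1)

def total_nrmse(ref, est):
    """Sum over components of RMSE / (max - min of the reference); assumed normalization.

    A component whose reference values are constant would give a zero range.
    """
    rmse = np.sqrt(np.mean((ref - est) ** 2, axis=0))
    span = ref.max(axis=0) - ref.min(axis=0)
    return float(np.sum(rmse / span))

# Hypothetical data: N = 3 samples of a two-component state group.
ref = np.array([[0.0, 0.0], [1.0, 2.0], [2.0, 4.0]])
est = ref - np.array([0.1, 0.0])   # constant offset in the first component only
```

Normalizing each component by its own range keeps states with very different magnitudes, such as coordinates and Lagrange multipliers, on a comparable scale before summation.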

4.1 State estimations and computational costs

The initial positions of the reference and estimated mechanisms of the four- and five-bar linkages are shown in Fig. 2, where \(\theta _{\mathrm{e}}\) indicates the error in the initial angle of the estimated mechanism. Because \(\theta _{\mathrm{e}}\) was set to \(\pi /6\) rad, the initial generalized coordinates of the estimated mechanism provided to the state observers differed from those of the reference mechanism. The proposed output equations were used in this test. State estimations were simulated for 10 s under these conditions, with the gyroscopes installed on the couplers.

The estimation results of the four-bar linkage for the generalized coordinates, generalized velocities, and Lagrange multipliers are shown in Figs. 3, 4, and 5, respectively. The results include the errors and 95% credible intervals (CIs) for the CEKF and DEKF. The state estimates of both the CEKF and DEKF converged to the reference values of the states, verifying the proposed state-space model. For the DEKF, the 95% CIs decreased immediately and remained small until the end of the simulation. In contrast, for the CEKF, the 95% CIs remained large. This is attributed to \(\boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{CEKF}}\) being a constant matrix containing large values. Because the ratio of \(\boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{CEKF}}\) to \(\boldsymbol{\Sigma}_{\mathrm{measure}}\) governs CEKF tuning, reducing \(\boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{CEKF}}\) requires reducing \(\boldsymbol{\Sigma}_{\mathrm{measure}}\) as well. However, if \(\boldsymbol{\Sigma}_{\mathrm{measure}}\) is made any smaller, the term \(\mathbf{PH}^{\mathrm{T}}\left ( \hat{\mathbf{x}} \right )\boldsymbol{\Sigma}_{\mathrm{measure}}^{ - 1}\mathbf{H}\left ( \hat{\mathbf{x}} \right )\mathbf{P}\) in the second equation of Eq. (13) becomes excessively large in the first step, and the state estimation diverges.
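The divergence mechanism can be illustrated with a scalar toy analogue, which is an assumption and not the system studied here: with \(\mathbf{F} = 0\), \(\mathbf{H} = 1\), \(\boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{CEKF}} = q\), and \(\boldsymbol{\Sigma}_{\mathrm{measure}} = r\), the covariance obeys \(\dot{p} = q - p^{2}/r\). For \(q = 0\), the exact solution \(p(t) = p_{0}/(1 + p_{0}t/r)\) stays positive, but when \(r\) is small the correction term \(p^{2}/r\) is so large in the first step that a single RK4 step of \(\Delta t = 5\times 10^{-3}\) s overshoots and yields a negative variance. The function name is hypothetical.

```python
def riccati_rk4_step(p, q, r, dt):
    """One classical RK4 step of dp/dt = q - p**2 / r (scalar analogue with F = 0, H = 1)."""
    def rhs(p_):
        return q - p_ * p_ / r

    k1 = rhs(p)
    k2 = rhs(p + 0.5 * dt * k1)
    k3 = rhs(p + 0.5 * dt * k2)
    k4 = rhs(p + dt * k3)
    return p + dt / 6.0 * (k1 + 2.0 * k2 + 2.0 * k3 + k4)

dt = 5e-3  # time step used in this study
for r in (1.0, 1e-2, 1e-6):
    # For q = 0 and p0 = 1, the exact value after one step is 1 / (1 + dt / r) > 0.
    p_rk4 = riccati_rk4_step(1.0, 0.0, r, dt)
    print(f"r = {r:g}: RK4 {p_rk4:.4g}, exact {1.0 / (1.0 + dt / r):.4g}")
```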

In this test, one gyroscope was installed on the coupler of the four-bar linkage, and two gyroscopes were installed on the right and left couplers of the five-bar linkage, as shown in Fig. 1. The gyroscope outputs are the angular velocities \(\omega \), \(\omega _{1}\), and \(\omega _{2}\), respectively. These angular velocities were also calculated from the estimation results of the model and the state observers and are compared with the reference sensor outputs in Fig. 6. The outputs obtained with both the CEKF and DEKF converged to the reference sensor outputs, which further verifies the proposed state-space model.

Figure 7 summarizes the computational costs, which were used to assess the real-time capability of the state observers. The state estimations were conducted using MATLAB R2024a on a computer with a 12th Gen Intel(R) Core(TM) i7-12700 processor @ 2.10 GHz and 16.0 GB of RAM. The results show that the state observers achieved real-time performance in all four cases and that the DEKF is more computationally efficient than the CEKF; this trend was also reported in [21]. However, computational costs depend strongly on the target system and the computer specifications. Hence, these results should be taken only as a reference when selecting a state observer for multibody systems.

4.2 Tests with errors in parameter and initial angle

Sensitivity to modeling errors was investigated using the four- and five-bar linkages and evaluated using the total NRMSEs defined in Eq. (24). State estimations were simulated for 100 s. High and low modeling errors were prepared [21, 22]. The low modeling error was 0.5 \(\mathrm{m/s^{2}}\) in the measured gravitational acceleration and \(\pi /32\) rad in the initial angle. The high modeling error was 1 \(\mathrm{m/s^{2}}\) in the measured gravitational acceleration and \(\pi /16\) rad in the initial angle.

The state estimates converged well to the states of the reference mechanism. The total NRMSEs are summarized in Fig. 8; in all cases, the NRMSEs for the low modeling errors are smaller than those for the high modeling errors. This test shows that the state observers constructed using the proposed state-space model are only mildly affected by errors in the parameter and initial angle.

Fig. 8 Total NRMSEs of the CEKF and DEKF with high and low modeling errors, with the gyroscopes installed on either the couplers or the cranks (low modeling error: 0.5 \(\mathrm{m/s^{2}}\) in the measured gravitational acceleration and \(\pi /32\) rad in the initial angle; high modeling error: 1 \(\mathrm{m/s^{2}}\) in the measured gravitational acceleration and \(\pi /16\) rad in the initial angle)

4.3 Demonstration of system observability

Three types of output equations are introduced in Eqs. (9), (10), and (12), of which Eq. (12) is newly derived in this study. The dependence of system observability on the choice of output equations was investigated.

Initial position errors provide a simple demonstration of system observability: if the errors are corrected by a state observer, the system can be considered observable under these conditions [22]. The four-bar linkage with the gyroscope on the coupler was employed in this test. The results of the CEKF and DEKF are presented in Fig. 9, where the errors were calculated using Eq. (23). In both cases, the state observer with the proposed output model is the only one that corrects all the initial errors. This indicates that the Lagrange multiplier constraint vector in Eq. (11) plays a significant role in the proposed output equations. Therefore, when the proposed state equations are used, the proposed output equations must be employed to obtain observable systems.

The Popov–Belevitch–Hautus (PBH) observability test [44] was conducted for further investigation. Because this test was designed for linear systems, it does not fully characterize the observability of nonlinear systems; nevertheless, it is informative when combined with the previous test. The matrix for the observability test is defined as follows:

where \(\mathbf{F}\)() is the Jacobian matrix of the continuous- or discrete-time transition model with respect to the state vector, and \(e_{i}\) denotes the eigenvalues of \(\mathbf{F}\)() at the current time step. The system is observable at that time step if the matrix defined by Eq. (25) has rank \(2n + m\) for every eigenvalue \(e_{i}\) (\(i = 1, 2, \ldots, 2n + m\)). Hence, the system is observable if the following equation is satisfied at every time step:

where rank(\(\mathbf{M}_{\mathrm{obs}}\)) denotes the rank of \(\mathbf{M}_{\mathrm{obs}}\). Because each of the \(2n + m\) eigenvalues must yield a rank of \(2n + m\), the sum of the ranks equals \((2n + m)^{2}\) when the test is passed. This test employed the four-bar linkage (\(n = 4\), \(m = 3\)) with two different sensor configurations: the gyroscope on either the coupler or the crank. Thus, the system is observable if \(r_{\mathrm{sum}} = (2\times 4 + 3)^{2} = 121\) at every time step. The time histories of \(r_{\mathrm{sum}}\) for the two configurations are shown in Figs. 10 and 11, respectively. In all cases, the state observer with the proposed output model satisfies \(r_{\mathrm{sum}} = 121\) at every time step. The results of this test also indicate that, when the proposed state equations are used, the proposed output equations must be employed to achieve observable systems.
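A minimal sketch of the rank check at one time step is given below. It assumes that \(\mathbf{M}_{\mathrm{obs}}\) in Eq. (25) stacks \(e_{i}\mathbf{I} - \mathbf{F}\) on top of the output Jacobian \(\mathbf{H}\), which is the usual PBH form; the sign of the first block does not affect the rank. The numerical rank depends on the singular-value tolerance, which deserves attention for Jacobians of nonlinear systems. The two-state damped example and the function name are hypothetical: measuring only the velocity leaves the position unobservable, so \(r_{\mathrm{sum}} = 3 < 2^{2}\).

```python
import numpy as np

def pbh_rank_sum(F, H, tol=None):
    """Sum over the eigenvalues e of F of rank([e*I - F; H]) (PBH test at one time step).

    The pair (F, H) passes the test when the sum equals dim**2, i.e. every
    stacked matrix has full column rank dim.
    """
    dim = F.shape[0]
    r_sum = 0
    for e in np.linalg.eigvals(F):           # eigenvalues counted with multiplicity
        M_obs = np.vstack([e * np.eye(dim) - F, H])
        r_sum += np.linalg.matrix_rank(M_obs, tol=tol)
    return r_sum

# Hypothetical damped system: state [position, velocity], dp/dt = v, dv/dt = -v.
F = np.array([[0.0, 1.0], [0.0, -1.0]])
r_pos = pbh_rank_sum(F, np.array([[1.0, 0.0]]))   # position measured
r_vel = pbh_rank_sum(F, np.array([[0.0, 1.0]]))   # only velocity measured
```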

Among the three output equations introduced in this study, the proposed output equations are the only ones that explicitly contain the Lagrange multipliers. They therefore provide information on the Lagrange multipliers, so that errors in the Lagrange multipliers can be corrected within the Kalman filter framework. This improves the system observability.

4.4 Tests with wide error ranges in initial generalized coordinates

The sensitivity to errors in the initial generalized coordinates of the estimated mechanism was studied using the four- and five-bar linkages and evaluated using the total NRMSEs defined in Eq. (24). The gyroscopes were installed on the couplers, and state estimations were simulated for 100 s. Two types of initial errors were prepared.

In one test, errors in the initial angles \(\theta _{\mathrm{e}}\) were assigned to the estimated mechanisms of the four- and five-bar linkages, as shown in Fig. 2. The initial generalized coordinates of the estimated mechanisms satisfied the constraint equations. The error was varied from \(-\pi \) to \(\pi \) rad in increments of \(\pi /6\) rad, and the total NRMSE was calculated for each case. The results are summarized in Fig. 12. For the four-bar linkage, the estimates converged in every case, and the trends in the total NRMSEs did not differ significantly between the CEKF and DEKF. For the five-bar linkage, however, the trends differed significantly: for small initial errors, the DEKF yielded smaller total NRMSEs, whereas for large initial errors, the CEKF did. It should also be noted that the CEKF estimate diverged for \(\theta _{\mathrm{e}} = \pi /3\) rad. When dealing with nonlinear systems, state observers can become unstable if the tuning parameters are not set properly [21, 22]. A fair discussion of the robustness of the CEKF and DEKF would therefore require properly set tuning parameters for each system and set of initial conditions; however, exploring the best tuning-parameter combination is beyond the scope of this study. Adaptive EKFs that do not require tuning have recently been proposed [24, 38]. Integrating such adaptive techniques into the proposed formulation is a promising direction and will be addressed in our future research.
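Initial coordinates that satisfy the constraint equations, as in this test, can be generated by prescribing the crank angle and intersecting the circle of radius \(L_{2}\) around the crank tip with the circle of radius \(L_{3}\) around the rocker pivot. The sketch below does this for a four-bar linkage and sweeps \(\theta _{\mathrm{e}}\) from \(-\pi \) to \(\pi \) rad in steps of \(\pi /6\) rad. The layout (crank pivot at the origin, rocker pivot at \((G, 0)\)), the ordering \(\mathbf{q} = [X_{1}, Y_{1}, X_{2}, Y_{2}]^{\mathrm{T}}\), the lengths, and the function name are assumptions, not the values in Table 2.

```python
import numpy as np

def four_bar_initial_q(theta, L1, L2, L3, G, branch=1.0):
    """Return q = [X1, Y1, X2, Y2] satisfying the three length constraints, or None.

    Hypothetical layout: crank pivot at (0, 0), rocker pivot at (G, 0).
    `branch` (+1 or -1) selects the assembly mode; keep it fixed across a sweep.
    """
    B = L1 * np.array([np.cos(theta), np.sin(theta)])   # crank tip (X1, Y1)
    D = np.array([G, 0.0])                               # rocker pivot
    BD = D - B
    d = np.linalg.norm(BD)
    if d > L2 + L3 or d < abs(L2 - L3):                  # circles do not intersect
        return None
    a = (L2**2 - L3**2 + d**2) / (2.0 * d)               # distance from B to the chord
    h = np.sqrt(max(L2**2 - a**2, 0.0))                  # half chord length
    perp = np.array([-BD[1], BD[0]]) / d
    C = B + a * BD / d + branch * h * perp               # coupler-rocker joint (X2, Y2)
    return np.concatenate([B, C])

theta0 = np.pi / 3
lengths = dict(L1=1.0, L2=2.0, L3=2.0, G=2.0)   # hypothetical crank-rocker dimensions
sweep = [four_bar_initial_q(theta0 + k * np.pi / 6, **lengths) for k in range(-6, 7)]
```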

In the other test, errors in the initial length of each rigid bar were assigned to the estimated mechanisms of the four- and five-bar linkages, as shown in Fig. 13, where \(L_{\mathrm{e}}\) represents the error. The errors were used only to calculate the initial generalized coordinates of the estimated mechanisms; the estimated mechanisms themselves were constructed using the true lengths. Consequently, in this test, the initial generalized coordinates of the estimated mechanisms did not satisfy the constraint equations. The error was varied from −1 to 1 m in increments of 0.1 m for the four-bar linkage and from −0.5 to 0.4 m in increments of 0.1 m for the five-bar linkage. The narrower range for the five-bar linkage is due to a geometric limitation: the shortest bar has a length of 0.5 m, and the five-bar linkage has no geometrically feasible configuration when \(L_{\mathrm{e}} \geq 0.5\) m. The total NRMSE was calculated for each case. The estimation results are summarized in Fig. 14; both linkages exhibit the same trend. The total NRMSE of the DEKF was lower than that of the CEKF in every case, and the convergence range of the DEKF was as wide as or wider than that of the CEKF. This trend indicates that the DEKF is more robust than the CEKF when errors in the initial length of each rigid bar are assigned to the estimated mechanisms.
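Coordinates built from perturbed lengths, as in this test, leave a nonzero residual in the constraint vector \(\boldsymbol{\Phi}(\mathbf{q})\). The sketch below evaluates the natural-coordinate length constraints of a four-bar linkage and their Jacobian \(\boldsymbol{\Phi}_{\mathbf{q}}\). The layout, coordinate ordering, parallelogram dimensions, and function names are hypothetical, and perturbing only the crank and rocker is a simplification of the procedure shown in Fig. 13.

```python
import numpy as np

def four_bar_constraints(q, L1, L2, L3, G):
    """Length constraints Phi(q), m = 3, for q = [X1, Y1, X2, Y2].

    Hypothetical layout: crank pivot at (0, 0), rocker pivot at (G, 0).
    """
    X1, Y1, X2, Y2 = q
    return np.array([
        X1**2 + Y1**2 - L1**2,                  # crank
        (X2 - X1)**2 + (Y2 - Y1)**2 - L2**2,    # coupler
        (X2 - G)**2 + Y2**2 - L3**2,            # rocker
    ])

def four_bar_constraint_jacobian(q, G):
    """Phi_q = dPhi/dq, shape (3, 4)."""
    X1, Y1, X2, Y2 = q
    return 2.0 * np.array([
        [X1, Y1, 0.0, 0.0],
        [X1 - X2, Y1 - Y2, X2 - X1, Y2 - Y1],
        [0.0, 0.0, X2 - G, Y2],
    ])

L1, L2, L3, G = 1.0, 2.0, 1.0, 2.0            # hypothetical parallelogram
q_true = np.array([0.0, 1.0, 2.0, 1.0])       # consistent configuration
q_bad = np.array([0.0, 1.1, 2.0, 1.1])        # crank and rocker lengthened by 0.1 m
print(four_bar_constraints(q_bad, L1, L2, L3, G))  # nonzero crank and rocker residuals
```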

These tests demonstrate that, although convergence depends on the system and initial conditions, both the CEKF and DEKF constructed using the proposed state-space model can estimate the states accurately over a wide range of errors in the initial generalized coordinates of the estimated mechanisms.

5 Conclusions

In this study, we developed a novel method for converting DAEs into a state-space model, where the state vector comprises generalized coordinates, generalized velocities, and Lagrange multipliers. The transition model of the time derivatives of the Lagrange multipliers was derived from index-1 DAEs and employed in the transition model of the states. The proposed state equations were successfully expressed in continuous- and discrete-time forms. As the states include dependent variables, the output equations must be improved to render the system observable. To address this problem, we proposed a Lagrange multiplier constraint vector and used it to augment the output equations.

State estimations were simulated using the benchmark four- and five-bar linkages, with the CEKF and DEKF constructed using the proposed state-space model. System observability was demonstrated for three types of output equations, and observability was investigated for each. The test demonstrated that, when the proposed state equations were used, the proposed output equations were necessary to achieve observable systems. The sensitivity to the initial state estimates was also studied. This test revealed that, when the proposed state-space model was used to construct the state observers and the RK4 was employed as the discretization scheme, both the CEKF and DEKF showed good convergence.

In future research, the proposed state-space model will be used to construct other types of Kalman filters, and their performance will be compared. Applications to input estimation, parameter identification, and control of multibody systems are also anticipated. State estimation of flexible multibody systems, approximation of partial derivatives, and integration of adaptive techniques will also be addressed.

Abbreviations

- \(e\): Eigenvalue
- \(\mathbf{F}\)(): Jacobian matrix of transition model with respect to state vector
- \(\mathbf{f}_{\mathrm{acc}}\)(): Continuous-time transition model of acceleration
- \(\mathbf{f}_{\mathrm{cont}}\)(), \(\mathbf{F}_{\mathrm{cont}}\)(): Continuous-time transition model of states and its Jacobian matrix with respect to state vector
- \(\mathbf{f}_{\mathrm{disc}}\)(), \(\mathbf{F}_{\mathrm{disc}}\)(): Discrete-time transition model and its Jacobian matrix with respect to state vector
- \(\mathbf{f}_{\mathrm{Lag}}\)(): Continuous-time transition model of Lagrange multiplier
- \(\mathbf{f}_{\mathrm{LagDyn}}\)(): Continuous-time transition model of time derivative of Lagrange multiplier
- \(\mathbf{f}_{\mathrm{RK4}}\)(): Gradient vector of Runge–Kutta method
- \(\mathbf{G}\): Kalman gain matrix
- \(\mathbf{h}\)(), \(\mathbf{H}\)(): Output model and its Jacobian matrix with respect to state vector
- \(\mathbf{h}_{\mathrm{conv}}\)(), \(\mathbf{H}_{\mathrm{conv}}\)(): Conventional perfect measurement output model and its Jacobian matrix with respect to state vector
- \(\mathbf{h}_{\mathrm{prop}}\)(), \(\mathbf{H}_{\mathrm{prop}}\)(): Proposed perfect measurement output model and its Jacobian matrix with respect to state vector
- \(\mathbf{h}_{\mathrm{sensor}}\)(), \(\mathbf{H}_{\mathrm{sensor}}\)(): Sensor model and its Jacobian matrix with respect to state vector
- \(\mathbf{I}\): Identity matrix
- \(\mathbf{k}\), \(\mathbf{K}\): Vector and matrix used for Runge–Kutta method
- \(L\): Length of rigid bar
- \(\mathbf{M}\): Mass matrix
- \(\mathbf{M}_{\mathrm{obs}}\): Matrix used for Popov–Belevitch–Hautus test of observability
- \(m\): Number of constraints
- \(n\): Number of generalized coordinates
- \(N\): Number of data points
- NRMSE: Normalized root mean square error
- \(\mathrm{NRMSE}_{\mathrm{total}}\): Total normalized root mean square error
- \(\mathbf{P}\): Error covariance matrix
- \(P^{\mathrm{coord}}\): Error variance of generalized coordinate
- \(P^{\mathrm{Lag}}\): Error variance of Lagrange multiplier
- \(P^{\mathrm{vel}}\): Error variance of generalized velocity
- \(\mathbf{Q}\): Generalized force vector
- \(q\), \(\mathbf{q}\): Generalized coordinate and its vector
- \(\mathbf{q}_{\text{four-bar}}\): Generalized coordinate vector of four-bar linkage
- \(\mathbf{q}_{\text{five-bar}}\): Generalized coordinate vector of five-bar linkage
- \(r_{\mathrm{sum}}\): Sum of ranks of matrices used for Popov–Belevitch–Hautus test of observability
- \(s\): Number of physical quantities measured by sensor
- \(t\): Time
- \(\Delta t\): Time step of numerical integration
- \(x\), \(\mathbf{x}\): State and its vector
- \(X\), \(Y\): Absolute coordinates
- \(\mathbf{y}\): Output vector
- \(\mathbf{y}_{\mathrm{sensor}}\): Output vector containing physical quantity measured by sensor
- \(\boldsymbol{\gamma}\): Vector associated with second-order time derivative of constraint equation
- \(\lambda \), \(\boldsymbol{\lambda}\): Lagrange multiplier and its vector
- \(\sigma_{\mathrm{acc}}\): Variance of system noise for generalized acceleration
- \(\sigma_{\mathrm{constr}}\): Variance of measurement noise for constraint
- \(\sigma_{\mathrm{coord}}\): Variance of system noise for generalized coordinate
- \(\sigma_{\mathrm{LagConstr}}\): Variance of measurement noise for Lagrange multiplier constraint
- \(\sigma_{\mathrm{LagDyn}}\): Variance of system noise for time derivative of Lagrange multiplier
- \(\sigma_{\mathrm{sensor}}\): Variance of measurement noise for sensor
- \(\sigma_{\mathrm{vel}}\): Variance of system noise for generalized velocity
- \(\boldsymbol{\Sigma}_{\mathrm{measure}}\): Covariance matrix of measurement noise
- \(\boldsymbol{\Sigma}_{\mathrm{measure}}^{\mathrm{conv}}\): Covariance matrix of measurement noise corresponding to conventional perfect measurement output model
- \(\boldsymbol{\Sigma}_{\mathrm{measure}}^{\mathrm{prop}}\): Covariance matrix of measurement noise corresponding to proposed perfect measurement output model
- \(\boldsymbol{\Sigma}_{\mathrm{measure}}^{\mathrm{sensor}}\): Covariance matrix of measurement noise corresponding to sensor model
- \(\boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{CEKF}}\): Covariance matrix of system noise for continuous-time extended Kalman filter
- \(\boldsymbol{\Sigma}_{\mathrm{sys}}^{\mathrm{DEKF}}\): Covariance matrix of system noise for discrete-time extended Kalman filter
- \(\boldsymbol{\Phi}\): Constraint vector
- \(\boldsymbol{\Phi}_{\mathrm{Lag}}\): Lagrange multiplier constraint vector
- \(\omega \): Angular velocity
- \(()^{(i)}\): Value associated with \(i\)-th element of vector
- \(()_{0}\): Initial value
- \(()_{\mathrm{e}}\): Error value
- \(()_{k}\): Value at time step \(k\)
- \(()_{k+1|k}\): A priori estimated value at time step \(k+1\)
- \(()_{k+1|k+1}\): A posteriori estimated value at time step \(k+1\)
- \(()_{\max}\), \(()_{\min}\): Maximum and minimum values
- \(()_{\mathbf{q}}\): Partial derivative with respect to generalized coordinate vector
- \(\hat{()}\): Estimated value
- \(\dot{()}\): Time derivative

References

Schiehlen, W.: Multibody system dynamics: roots and perspectives. Multibody Syst. Dyn. 1, 149–188 (1997). https://doi.org/10.1023/A:1009745432698

Eberhard, P., Schiehlen, W.: Computational dynamics of multibody systems: history, formalisms, and applications. J. Comput. Nonlinear Dyn. 1(1), 3–12 (2006). https://doi.org/10.1115/1.1961875

Haug, E.J.: Computer-Aided Kinematics and Dynamics of Mechanical Systems. Allyn & Bacon, Boston (1989)

Shabana, A.A.: Flexible multibody dynamics: review of past and recent developments. Multibody Syst. Dyn. 1(2), 189–222 (1997). https://doi.org/10.1023/A:1009773505418

Shabana, A.A.: Dynamics of Multibody Systems. Cambridge University Press, Cambridge (2013). https://doi.org/10.1017/CBO9781107337213

Bauchau, O.A.: Flexible Multibody Dynamics. Springer, Berlin (2011). https://doi.org/10.1007/978-94-007-0335-3

García de Jalón, J., Bayo, E.: Kinematic and Dynamic Simulation of Multibody Systems. Springer, New York (1994). https://doi.org/10.1007/978-1-4612-2600-0

Peng, H., Zhang, M., Zhang, L.: Semi-analytical sensitivity analysis for multibody system dynamics described by differential–algebraic equations. AIAA J. 59(3), 893–904 (2021). https://doi.org/10.2514/1.J059355

Kalman, R.E.: A new approach to linear filtering and prediction problems. J. Basic Eng. 82(1), 35–45 (1960). https://doi.org/10.1115/1.3662552

Rhodes, I.B.: A tutorial introduction to estimation and filtering. IEEE Trans. Autom. Control 16(6), 688–706 (1971). https://doi.org/10.1109/TAC.1971.1099833

Simon, D.: Optimal State Estimation: Kalman, H Infinity, and Nonlinear Approaches. Wiley, New York (2006). https://doi.org/10.1002/0470045345

Grewal, M.S., Andrews, A.P.: Kalman Filtering: Theory and Practice with MATLAB. Wiley, New York (2014)

Simon, D.: Kalman filtering with state constraints: a survey of linear and nonlinear algorithms. IET Control Theory Appl. 4(8), 1303–1318 (2010). https://doi.org/10.1049/iet-cta.2009.0032

Simon, D., Chia, T.L.: Kalman filtering with state equality constraints. IEEE Trans. Aerosp. Electron. Syst. 38(1), 128–136 (2002). https://doi.org/10.1109/7.993234

Julier, S.J., LaViola, J.J.: On Kalman filtering with nonlinear equality constraints. IEEE Trans. Signal Process. 55(6), 2774–2784 (2007). https://doi.org/10.1109/TSP.2007.893949

Yang, C., Blasch, E.: Kalman filtering with nonlinear state constraints. IEEE Trans. Aerosp. Electron. Syst. 45(1), 70–84 (2009). https://doi.org/10.1109/TAES.2009.4805264

Naya, M.Á., Sanjurjo, E., Rodríguez, A.J., Cuadrado, J.: Kalman filters based on multibody models: linking simulation and real world. A comprehensive review. Multibody Syst. Dyn. 58, 479–521 (2023). https://doi.org/10.1007/s11044-023-09893-w

Cuadrado, J., Dopico, D., Barreiro, A., Delgado, E.: Real-time state observers based on multibody models and the extended Kalman filter. J. Mech. Sci. Technol. 23, 894–900 (2009). https://doi.org/10.1007/s12206-009-0308-5

Cuadrado, J., Dopico, D., Perez, J.A., Pastorino, R.: Automotive observers based on multibody models and the extended Kalman filter. Multibody Syst. Dyn. 27, 3–19 (2012). https://doi.org/10.1007/s11044-011-9251-1

Pastorino, R., Richiedei, D., Cuadrado, J., Trevisani, A.: State estimation using multibody models and non-linear Kalman filters. Int. J. Non-Linear Mech. 53, 83–90 (2013). https://doi.org/10.1016/j.ijnonlinmec.2013.01.016

Sanjurjo, E., Naya, M.Á., Blanco-Claraco, J.L., Torres-Moreno, J.L., Giménez-Fernández, A.: Accuracy and efficiency comparison of various nonlinear Kalman filters applied to multibody models. Nonlinear Dyn. 88, 1935–1951 (2017). https://doi.org/10.1007/s11071-017-3354-z

Sanjurjo, E., Dopico, D., Luaces, A., Naya, M.Á.: State and force observers based on multibody models and the indirect Kalman filter. Mech. Syst. Signal Process. 106, 210–228 (2018). https://doi.org/10.1016/j.ymssp.2017.12.041

Rodríguez, A.J., Sanjurjo, E., Pastorino, R., Naya, M.Á.: State, parameter and input observers based on multibody models and Kalman filters for vehicle dynamics. Mech. Syst. Signal Process. 155, 107544 (2021). https://doi.org/10.1016/j.ymssp.2020.107544

Rodríguez, A.J., Sanjurjo, E., Pastorino, R., Naya, M.Á.: Multibody-based input and state observers using adaptive extended Kalman filter. Sensors 21(15), 5241 (2021). https://doi.org/10.3390/s21155241

Jaiswal, S., Sanjurjo, E., Cuadrado, J., Sopanen, J., Mikkola, A.: State estimator based on an indirect Kalman filter for a hydraulically actuated multibody system. Multibody Syst. Dyn. 54(4), 373–398 (2022). https://doi.org/10.1007/s11044-022-09814-3

Khadim, Q., Hagh, Y.S., Jiang, D., Pyrhönen, L., Jaiswal, S., Zhidchenko, V., Yu, X., Kurvinen, E., Handroos, H., Mikkola, A.: Experimental investigation into the state estimation of a forestry crane using the unscented Kalman filter and a multiphysics model. Mech. Mach. Theory 189, 105405 (2023). https://doi.org/10.1016/j.mechmachtheory.2023.105405

Pyrhönen, L., Jaiswal, S., Mikkola, A.: Mass estimation of a simple hydraulic crane using discrete extended Kalman filter and inverse dynamics for online identification. Nonlinear Dyn. 111, 21487–21506 (2023). https://doi.org/10.1007/s11071-023-08946-1

Naets, F., Pastorino, R., Cuadrado, J., Desmet, W.: Online state and input force estimation for multibody models employing extended Kalman filtering. Multibody Syst. Dyn. 32, 317–336 (2014). https://doi.org/10.1007/s11044-013-9381-8

Hagh, Y.S., Mohammadi, M., Mikkola, A., Handroos, H.: An experimental comparative study of adaptive sigma-point Kalman filters: case study of a rigid–flexible four-bar linkage mechanism and a servo-hydraulic actuator. Mech. Syst. Signal Process. 191, 110148 (2023). https://doi.org/10.1016/j.ymssp.2023.110148

Pyrhönen, L., Jaiswal, S., Garcia-Agundez, A., Vallejo, D.G., Mikkola, A.: Linearization-based state-transition model for the discrete extended Kalman filter applied to multibody simulations. Multibody Syst. Dyn. 57, 55–72 (2023). https://doi.org/10.1007/s11044-022-09861-w

Palomba, I., Richiedei, D., Trevisani, A.: Kinematic state estimation for rigid-link multibody systems by means of nonlinear constraint equations. Multibody Syst. Dyn. 40, 1–22 (2017). https://doi.org/10.1007/s11044-016-9515-x

Palomba, I., Richiedei, D., Trevisani, A.: Two-stage approach to state and force estimation in rigid-link multibody systems. Multibody Syst. Dyn. 39, 115–134 (2017). https://doi.org/10.1007/s11044-016-9548-1

Risaliti, E., Tamarozzi, T., Vermaut, M., Cornelis, B., Desmet, W.: Multibody model based estimation of multiple loads and strain field on a vehicle suspension system. Mech. Syst. Signal Process. 123, 1–25 (2019). https://doi.org/10.1016/j.ymssp.2018.12.024

Mikami, M., Yamamoto, T., Sugawara, Y., Takeda, M.: Motion control of mechanical systems with a cable contacting the ground. IFAC-PapersOnLine 53(2), 9045–9052 (2020). https://doi.org/10.1016/j.ifacol.2020.12.2128

Gerstmayr, J., Sugiyama, H., Mikkola, A.: Review on the absolute nodal coordinate formulation for large deformation analysis of multibody systems. J. Comput. Nonlinear Dyn. 8(3), 031016 (2013). https://doi.org/10.1115/1.4023487

Otsuka, K., Makihara, K., Sugiyama, H.: Recent advances in the absolute nodal coordinate formulation: literature review from 2012 to 2020. J. Comput. Nonlinear Dyn. 17(8), 080803 (2022). https://doi.org/10.1115/1.4054113

Zhang, B., Fan, W., Ren, H.: A universal quadrilateral shell element for the absolute nodal coordinate formulation. J. Comput. Nonlinear Dyn. 18(10), 101001 (2023). https://doi.org/10.1115/1.4062630

Cuadrado, J., Michaud, F., Lugrís, U., Pérez Soto, M.: Using accelerometer data to tune the parameters of an extended Kalman filter for optical motion capture: preliminary application to gait analysis. Sensors 21(2), 427 (2021). https://doi.org/10.3390/s21020427

Adduci, R., Vermaut, M., Naets, F., Croes, J., Desmet, W.: A discrete-time extended Kalman filter approach tailored for multibody models: state-input estimation. Sensors 21(13), 4495 (2021). https://doi.org/10.3390/s21134495

Mohammadi, M., Hagh, Y.S., Yu, X., Handroos, H., Mikkola, A.: Determining the state of a nonlinear flexible multibody system using an unscented Kalman filter. IEEE Access 10, 40237–40248 (2022). https://doi.org/10.1109/ACCESS.2022.3163304

Capalbo, C.E., De Gregoriis, D., Tamarozzi, T., Devriendt, H., Naets, F., Carbone, G., Mundo, D.: Parameter, input and state estimation for linear structural dynamics using parametric model order reduction and augmented Kalman filtering. Mech. Syst. Signal Process. 185, 109799 (2023). https://doi.org/10.1016/j.ymssp.2022.109799

Tamarozzi, T., Jiránek, P., De Gregoriis, D.: A differential-algebraic extended Kalman filter with exact constraint satisfaction. Mech. Syst. Signal Process. 206, 110901 (2024). https://doi.org/10.1016/j.ymssp.2023.110901

Wojtyra, M., Frączek, J.: Solvability of reactions in rigid multibody systems with redundant nonholonomic constraints. Multibody Syst. Dyn. 30(2), 153–171 (2013). https://doi.org/10.1007/s11044-013-9352-0

Junkins, J.L., Kim, Y.: Introduction to Dynamics and Control of Flexible Structures. AIAA Education Series. Reston (1993). https://doi.org/10.2514/4.862076

López Varela, Á., Dopico, D., Luaces Fernández, A.: Augmented Lagrangian index-3 semi-recursive formulations with projections: direct sensitivity analysis. Multibody Syst. Dyn. (2023). https://doi.org/10.1007/s11044-023-09928-2

Funding

This work was supported by the Japan Society for the Promotion of Science KAKENHI (grant numbers 21K14341, 22K18853, and 23K22945) and the Mazak Foundation.

Author information


Contributions

Conceptualization: T.O.; Methodology: T.O.; Formal analysis and investigation: T.O.; Writing—original draft preparation: T.O.; Writing—review and editing: T.O., S.D., R.K., Y.T., Y.S., Y.H., K.O., K.M.; Supervision: Y.H., K.O., K.M.; Funding acquisition: K.O., K.M.; All authors have read and agreed to the published version of the manuscript.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Taiki Okada is the primary corresponding author.

Appendices

Appendix A: Evaluations of Partial Derivatives

The formulation of the proposed method is complicated and includes several partial derivatives, whose derivations are presented below. The resulting expressions include third- and fourth-order tensors, so the order in which the tensor operations are performed must be considered. In this study, the systems used for validation are rigid multibody systems in a uniform gravitational field formulated in natural coordinates. Thus, the mass matrix \(\mathbf{M}\) and generalized force vector \(\mathbf{Q}\) are treated as constants.
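To make the role of constant \(\mathbf{M}\) and \(\mathbf{Q}\) concrete, the sketch below evaluates the Lagrange multipliers of an index-1 formulation for a hypothetical pendulum (a point mass on a massless rod pinned at the origin) in natural coordinates. It assumes the sign convention \(\mathbf{M}\ddot{\mathbf{q}} + \boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}}\boldsymbol{\lambda} = \mathbf{Q}\) and \(\boldsymbol{\Phi}_{\mathbf{q}}\ddot{\mathbf{q}} = \boldsymbol{\gamma}\), which gives \(\boldsymbol{\lambda} = \bar{\mathbf{M}}^{-1}(\boldsymbol{\Phi}_{\mathbf{q}}\mathbf{M}^{-1}\mathbf{Q} - \boldsymbol{\gamma})\) with \(\bar{\mathbf{M}} = \boldsymbol{\Phi}_{\mathbf{q}}\mathbf{M}^{-1}\boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}}\). The convention in Eq. (2) may differ in sign, \(\mathbf{f}_{\mathrm{Lag}}\)() presumably corresponds to an expression of this type, and the function names are hypothetical.

```python
import numpy as np

def lagrange_multipliers(M, Q, Phi_q, gamma):
    """lam = Mbar^{-1} (Phi_q M^{-1} Q - gamma), with Mbar = Phi_q M^{-1} Phi_q^T.

    Assumed index-1 form: M qdd + Phi_q^T lam = Q and Phi_q qdd = gamma.
    """
    Minv_PhiT = np.linalg.solve(M, Phi_q.T)   # M^{-1} Phi_q^T, shape (n, m)
    Minv_Q = np.linalg.solve(M, Q)            # M^{-1} Q, shape (n,)
    Mbar = Phi_q @ Minv_PhiT                  # shape (m, m)
    return np.linalg.solve(Mbar, Phi_q @ Minv_Q - gamma)

def pendulum_terms(q, qd, mass, g=9.81):
    """Hypothetical pendulum in natural coordinates q = [X, Y], Phi = X^2 + Y^2 - length^2."""
    X, Y = q
    M = mass * np.eye(2)                      # constant mass matrix
    Q = np.array([0.0, -mass * g])            # constant gravity force
    Phi_q = 2.0 * np.array([[X, Y]])          # shape (1, 2)
    gamma = np.array([-2.0 * (qd @ qd)])      # -(d Phi_q / dt) qd
    return M, Q, Phi_q, gamma

# Pendulum of length 0.5 m at rest, hanging straight down.
q, qd = np.array([0.0, -0.5]), np.zeros(2)
M, Q, Phi_q, gamma = pendulum_terms(q, qd, mass=2.0)
lam = lagrange_multipliers(M, Q, Phi_q, gamma)
qdd = np.linalg.solve(M, Q - Phi_q.T @ lam)   # generalized accelerations
```

At rest in the hanging position, the constraint force \(-\boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}}\boldsymbol{\lambda}\) balances gravity, so the accelerations vanish.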

There are two Jacobian matrices, \(\partial \mathbf{f}_{\mathrm{Lag}} / \partial \mathbf{q}\) and \(\partial \mathbf{f}_{\mathrm{Lag}} / \partial \dot{\mathbf{q}}\), in Eq. (6). Let \(\bar{\mathbf{M}}\) denote \(\boldsymbol{\Phi}_{\mathbf{q}}\mathbf{M}^{ - 1}\boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}}\); these Jacobians are respectively evaluated as follows:

where \(\partial \boldsymbol{\gamma} / \partial \mathbf{q} \equiv \boldsymbol{\gamma}_{\mathbf{q}}\), and \(\partial \bar{\mathbf{M}}^{ - 1} / \partial \mathbf{q} = - \bar{\mathbf{M}}^{ - 1}\left ( \partial \bar{\mathbf{M}} / \partial \mathbf{q} \right )\bar{\mathbf{M}}^{ - 1} \equiv - \bar{\mathbf{M}}^{ - 1}\bar{\mathbf{M}}_{\mathbf{q}}\bar{\mathbf{M}}^{ - 1}\). The partial derivative of \(\bar{\mathbf{M}}\) with respect to the generalized coordinate vector is defined as

where \(\partial \boldsymbol{\Phi}_{\mathbf{q}} / \partial \mathbf{q} \equiv \boldsymbol{\Phi}_{\mathbf{qq}}\) has dimension \(m \times n \times n\), and \(\bar{\mathbf{M}}_{\mathbf{q}}\) has dimension \(m \times m \times n\). The partial derivatives in Eqs. (27) and (28) are also used in the Jacobian matrix of the proposed output model, Eq. (15).
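Because index ordering is easy to get wrong with these tensors, a small numerical check can help. The sketch below is a hedged illustration, not the implementation used in this study. It assumes the convention \(\boldsymbol{\Phi}_{\mathbf{qq}}[i,j,k] = \partial(\boldsymbol{\Phi}_{\mathbf{q}})_{ij}/\partial q_k\) (differentiation index last), builds \(\bar{\mathbf{M}}_{\mathbf{q}}\) slice by slice from the product rule \(\partial\bar{\mathbf{M}}/\partial q_k = \boldsymbol{\Phi}_{\mathbf{qq},k}\mathbf{M}^{-1}\boldsymbol{\Phi}_{\mathbf{q}}^{\mathrm{T}} + \boldsymbol{\Phi}_{\mathbf{q}}\mathbf{M}^{-1}\boldsymbol{\Phi}_{\mathbf{qq},k}^{\mathrm{T}}\) with constant \(\mathbf{M}\), applies the inverse-derivative identity for \(\bar{\mathbf{M}}^{-1}\), and compares both with central finite differences. The planar double pendulum, its diagonal mass matrix, and all function names are hypothetical stand-ins, not the four- and five-bar linkages used in this study.

```python
import numpy as np

# Hypothetical stand-in system: planar double pendulum in natural coordinates
# q = [x1, y1, x2, y2] with constraints
#   Phi = [x1^2 + y1^2 - L1^2, (x2 - x1)^2 + (y2 - y1)^2 - L2^2]   (m = 2, n = 4).
M = np.diag([1.0, 1.0, 2.0, 2.0])      # hypothetical constant mass matrix
W = np.linalg.inv(M)


def Phi_q(q):
    """Constraint Jacobian, shape (m, n)."""
    x1, y1, x2, y2 = q
    dx, dy = x2 - x1, y2 - y1
    return np.array([[2 * x1, 2 * y1, 0.0, 0.0],
                     [-2 * dx, -2 * dy, 2 * dx, 2 * dy]])


def Phi_qq(q):
    """Phi_qq[i, j, k] = d(Phi_q)[i, j] / dq_k, shape (m, n, n).
    Constant here (q unused) because the constraints are quadratic."""
    H1 = np.diag([2.0, 2.0, 0.0, 0.0])
    H2 = np.array([[2, 0, -2, 0], [0, 2, 0, -2],
                   [-2, 0, 2, 0], [0, -2, 0, 2]], dtype=float)
    return np.stack([H1, H2])


def Mbar(q):
    """Mbar = Phi_q M^-1 Phi_q^T, shape (m, m)."""
    Pq = Phi_q(q)
    return Pq @ W @ Pq.T


def Mbar_q(q):
    """Slice [:, :, k] = Phi_qq[:, :, k] W Phi_q^T + Phi_q W Phi_qq[:, :, k]^T."""
    A = np.einsum("ijk,jl,pl->ipk", Phi_qq(q), W, Phi_q(q))
    return A + A.transpose(1, 0, 2)    # second term is the (i, p) transpose since W = W^T


def Mbar_inv_q(q):
    """Slice [:, :, k] = -Mbar^-1 Mbar_q[:, :, k] Mbar^-1, shape (m, m, n)."""
    Minv = np.linalg.inv(Mbar(q))
    return -np.einsum("ij,jlk,lp->ipk", Minv, Mbar_q(q), Minv)


def central_diff(fun, q, h=1e-6):
    """Central finite-difference derivative, differentiation index stacked last."""
    return np.stack([(fun(q + h * e) - fun(q - h * e)) / (2 * h)
                     for e in np.eye(q.size)], axis=-1)


q0 = np.array([0.6, -0.8, 1.3, -1.2])  # need not satisfy Phi = 0 for a derivative check
print(Mbar_q(q0).shape)                                            # (2, 2, 4)
print(np.allclose(central_diff(Mbar, q0), Mbar_q(q0), atol=1e-7))  # True
print(np.allclose(central_diff(lambda q: np.linalg.inv(Mbar(q)), q0),
                  Mbar_inv_q(q0), atol=1e-7))                      # True
```

Keeping the differentiation index last matches the \(m \times m \times n\) layout stated above, and spelling out each contraction with `np.einsum` keeps the index order explicit rather than implicit in a chain of reshapes.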

The Jacobian matrix of the continuous-time transition model in Eq. (14) contains four partial derivatives, which are evaluated as follows:

Equations (32) and (33) contain four partial derivatives that have not yet been defined. They are evaluated as follows:

where \(\partial \boldsymbol{\Phi}_{\mathbf{qq}} / \partial \mathbf{q} \equiv \boldsymbol{\Phi}_{\mathbf{qqq}}\) has dimension \(m \times n \times n \times n\), and \(\partial \boldsymbol{\gamma}_{\mathbf{q}} / \partial \mathbf{q} \equiv \boldsymbol{\gamma}_{\mathbf{qq}}\) has dimension \(m \times n \times n\). Both \(\partial \boldsymbol{\gamma}_{\mathbf{q}} / \partial \dot{\mathbf{q}}\) and \(\partial ^{2}\boldsymbol{\gamma} / \partial \dot{\mathbf{q}}^{2}\) have dimension \(m \times n \times n\). The partial derivative of \(\bar{\mathbf{M}}_{\mathbf{q}}\) with respect to the generalized coordinate vector is defined as

where \(\bar{\mathbf{M}}_{\mathbf{qq}}\) has dimension \(m \times m \times n \times n\).
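The higher-order terms can be checked the same way. The sketch below is hedged on two assumptions. First, since this appendix does not restate the definition of \(\boldsymbol{\gamma}\), it assumes the common scleronomic form \(\boldsymbol{\gamma} = -(\boldsymbol{\Phi}_{\mathbf{q}}\dot{\mathbf{q}})_{\mathbf{q}}\dot{\mathbf{q}}\), i.e. \(\gamma_i = -\sum_{j,k}\Phi_{\mathbf{qq},ijk}\,\dot q_j \dot q_k\), for which \(\partial^2\boldsymbol{\gamma}/\partial\dot{\mathbf{q}}^2 = -2\boldsymbol{\Phi}_{\mathbf{qq}}\) (dimension \(m \times n \times n\)). Second, it differentiates the product rule for \(\bar{\mathbf{M}}\) twice with constant \(\mathbf{M}\), giving four terms in \(\boldsymbol{\Phi}_{\mathbf{q}}\), \(\boldsymbol{\Phi}_{\mathbf{qq}}\), and \(\boldsymbol{\Phi}_{\mathbf{qqq}}\). The hypothetical double pendulum is reused; its constraints are quadratic, so \(\boldsymbol{\Phi}_{\mathbf{qqq}} = \mathbf{0}\) and \(\boldsymbol{\gamma}_{\mathbf{q}} = \mathbf{0}\), which means the \(\boldsymbol{\Phi}_{\mathbf{qqq}}\) terms are exercised only with zeros.

```python
import numpy as np

# Hypothetical double pendulum in natural coordinates (m = 2, n = 4). Its constraints
# are quadratic, so Phi_qq is constant and Phi_qqq = 0.
M = np.diag([1.0, 1.0, 2.0, 2.0])      # hypothetical constant mass matrix
W = np.linalg.inv(M)
H1 = np.diag([2.0, 2.0, 0.0, 0.0])
H2 = np.array([[2, 0, -2, 0], [0, 2, 0, -2], [-2, 0, 2, 0], [0, -2, 0, 2]], dtype=float)
PHI_QQ = np.stack([H1, H2])             # (m, n, n)
PHI_QQQ = np.zeros((2, 4, 4, 4))        # (m, n, n, n)


def Phi_q(q):
    x1, y1, x2, y2 = q
    dx, dy = x2 - x1, y2 - y1
    return np.array([[2 * x1, 2 * y1, 0.0, 0.0], [-2 * dx, -2 * dy, 2 * dx, 2 * dy]])


def Mbar_q(q):
    A = np.einsum("ijk,jl,pl->ipk", PHI_QQ, W, Phi_q(q))
    return A + A.transpose(1, 0, 2)     # (m, m, n)


def Mbar_qq(q):
    """(m, m, n, n): Phi_qqq_kl W Phi_q^T + Phi_qq_k W Phi_qq_l^T
    + Phi_qq_l W Phi_qq_k^T + Phi_q W Phi_qqq_kl^T."""
    T1 = np.einsum("ijkl,jr,pr->ipkl", PHI_QQQ, W, Phi_q(q))
    T2 = np.einsum("ijk,jr,prl->ipkl", PHI_QQ, W, PHI_QQ)
    return T1 + T1.transpose(1, 0, 2, 3) + T2 + T2.transpose(0, 1, 3, 2)


def gamma(qd):
    """Assumed scleronomic form: gamma_i = -sum_jk Phi_qq[i, j, k] qd_j qd_k."""
    return -np.einsum("ijk,j,k->i", PHI_QQ, qd, qd)


def gamma_qd(qd):
    """d gamma / d qd, shape (m, n); uses the symmetry of Phi_qq in j and k."""
    return -2.0 * np.einsum("ijk,k->ij", PHI_QQ, qd)


gamma_qdqd = -2.0 * PHI_QQ              # d^2 gamma / d qd^2, (m, n, n), independent of qd


def central_diff(fun, x, h=1e-6):
    return np.stack([(fun(x + h * e) - fun(x - h * e)) / (2 * h)
                     for e in np.eye(x.size)], axis=-1)


q0 = np.array([0.6, -0.8, 1.3, -1.2])
qd0 = np.array([0.4, 0.3, -0.2, 0.5])
print(Mbar_qq(q0).shape, gamma_qdqd.shape)                              # (2, 2, 4, 4) (2, 4, 4)
print(np.allclose(central_diff(Mbar_q, q0), Mbar_qq(q0), atol=1e-7))    # True
print(np.allclose(central_diff(gamma, qd0), gamma_qd(qd0), atol=1e-7))  # True
```

For constraints that are not quadratic, `PHI_QQQ` would have to be filled in and `gamma` would pick up a dependence on \(\mathbf{q}\); the contraction pattern itself stays the same.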

Appendix B: Fourth-order explicit Runge–Kutta method for discretizing the continuous-time transition model of the states in time

The continuous-time transition model of the states, \(\mathbf{f}_{\mathrm{cont}}(\cdot)\), is discretized in time using the classical fourth-order explicit Runge–Kutta method (RK4). The gradient vector of RK4, \(\mathbf{f}_{\mathrm{RK4}}(\cdot)\), is defined as

Hence, the Jacobian matrix of the discrete-time transition model of the states, \(\mathbf{F}_{\mathrm{disc}}(\cdot)\), is defined as follows:
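The discretization and its Jacobian can be sketched as follows. This is a hedged illustration of the standard construction, not necessarily the exact form used in this study. It assumes that \(\mathbf{f}_{\mathrm{RK4}}\) is the weighted average of the four stage slopes, so that \(\mathbf{x}_{k+1} = \mathbf{x}_k + \Delta t\,\mathbf{f}_{\mathrm{RK4}}(\mathbf{x}_k)\), and that \(\mathbf{f}_{\mathrm{cont}}\) depends on the state only. Differentiating every stage with the chain rule then gives \(\mathbf{F}_{\mathrm{disc}} = \mathbf{I} + \tfrac{\Delta t}{6}(\mathbf{K}_1 + 2\mathbf{K}_2 + 2\mathbf{K}_3 + \mathbf{K}_4)\), where \(\mathbf{K}_i\) is the derivative of the \(i\)-th stage slope with respect to \(\mathbf{x}_k\). The names `f_rk4`, `f_disc`, `F_disc`, and `F_cont`, and the simple-pendulum test model with \(g/l = 9.81\), are hypothetical.

```python
import numpy as np


def f_rk4(f_cont, x, dt):
    """Weighted average of the four RK4 stage slopes (assumed meaning of f_RK4)."""
    k1 = f_cont(x)
    k2 = f_cont(x + 0.5 * dt * k1)
    k3 = f_cont(x + 0.5 * dt * k2)
    k4 = f_cont(x + dt * k3)
    return (k1 + 2.0 * k2 + 2.0 * k3 + k4) / 6.0


def f_disc(f_cont, x, dt):
    """Discrete-time transition model x_{k+1} = x_k + dt * f_RK4(x_k)."""
    return x + dt * f_rk4(f_cont, x, dt)


def F_disc(f_cont, F_cont, x, dt):
    """Jacobian of f_disc with respect to x, via the chain rule through each stage.
    F_cont(x) is the Jacobian of f_cont(x)."""
    I = np.eye(x.size)
    k1 = f_cont(x)
    K1 = F_cont(x)
    x2 = x + 0.5 * dt * k1
    k2, K2 = f_cont(x2), F_cont(x2) @ (I + 0.5 * dt * K1)
    x3 = x + 0.5 * dt * k2
    k3, K3 = f_cont(x3), F_cont(x3) @ (I + 0.5 * dt * K2)
    x4 = x + dt * k3
    K4 = F_cont(x4) @ (I + dt * K3)
    return I + dt * (K1 + 2.0 * K2 + 2.0 * K3 + K4) / 6.0


# Hypothetical test model: simple pendulum, x = [angle, angular velocity], g / l = 9.81.
W2 = 9.81


def f_pend(x):
    return np.array([x[1], -W2 * np.sin(x[0])])


def F_pend(x):
    return np.array([[0.0, 1.0], [-W2 * np.cos(x[0]), 0.0]])


# Compare the analytic Jacobian with central finite differences of f_disc.
x0, dt, h = np.array([0.5, -0.2]), 0.01, 1e-6
fd = np.stack([(f_disc(f_pend, x0 + h * e, dt) - f_disc(f_pend, x0 - h * e, dt)) / (2 * h)
               for e in np.eye(2)], axis=-1)
print(np.allclose(fd, F_disc(f_pend, F_pend, x0, dt), atol=1e-8))  # True
```

Propagating the stage Jacobians alongside the stages gives a Jacobian that is exact for the discrete map, which is what the DEKF covariance update uses, at the cost of four evaluations of \(\mathbf{F}_{\mathrm{cont}}\) per step.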

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Okada, T., Dong, S., Kuzuno, R. et al. State observer of multibody systems formulated using differential algebraic equations. Multibody Syst Dyn (2024). https://doi.org/10.1007/s11044-024-09995-z


DOI: https://doi.org/10.1007/s11044-024-09995-z