Abstract

Sketch-to-image synthesis is an important task because it reduces the burden of creating a color image from scratch. Previous sketch-to-image models synthesize the image end to end, which often produces unnatural results. We instead propose a method that decomposes the problem into subproblems to generate more natural and plausible images. The method first generates an intermediate output, a semantic mask map, from the input sketch through instance and semantic segmentation at two levels: background segmentation and foreground segmentation. The background segmentation is formed based on the context of the foreground objects. Then, the foreground segmentations are sequentially added to the generated background segmentation. Finally, the resulting mask map is fed into an image-to-image translation model to generate the image. Our proposed method works with 92 distinct classes. Compared with state-of-the-art sketch-to-image models, our proposed method outperforms previous methods and generates better images.
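The abstract does not specify how the foreground segmentations are merged into the background segmentation. The following is a minimal sketch that assumes a simple painter's-style rule: each instance mask is applied in order and overwrites earlier labels at the pixels it covers. The function name `compose_semantic_map`, the list-of-lists label-map representation, and the class IDs are hypothetical illustrations. They are not the authors' implementation.

```python
from typing import List, Sequence, Tuple

# Hypothetical representation: a label map is a 2-D grid of integer class IDs,
# and a binary mask marks the pixels covered by one foreground instance.
LabelMap = List[List[int]]
BinaryMask = Sequence[Sequence[bool]]


def compose_semantic_map(
    background: LabelMap,
    instances: Sequence[Tuple[int, BinaryMask]],
) -> LabelMap:
    """Sequentially paint foreground instances onto a background label map.

    Instances later in the list overwrite earlier labels wherever their masks
    are True (an assumed occlusion rule, chosen only for illustration).
    """
    height, width = len(background), len(background[0])
    # Copy the background so the caller's map is left unchanged.
    result = [row[:] for row in background]
    for class_id, mask in instances:
        if len(mask) != height or any(len(row) != width for row in mask):
            raise ValueError("instance mask must match the background size")
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    result[y][x] = class_id
    return result


if __name__ == "__main__":
    SKY, GRASS, TREE, DOG = 1, 2, 3, 4  # hypothetical class IDs
    background = [[SKY, SKY, SKY], [GRASS, GRASS, GRASS]]
    tree = [[False, True, False], [False, True, False]]
    dog = [[False, False, False], [False, True, True]]
    print(compose_semantic_map(background, [(TREE, tree), (DOG, dog)]))
    # → [[1, 3, 1], [2, 4, 4]]
```

Because the dog is added after the tree, it occludes the tree where their masks overlap. A real system would more likely decide the layering order from predicted depth or object context rather than list order.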

Data availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Chen T, Cheng M-M, Tan P, Shamir A, Hu S-M (2009) Sketch2Photo: internet image montage. ACM Trans Graph 28(5):1–10

Eitz M, Richter R, Hildebrand K, Boubekeur T, Alexa M (2011) Photosketcher: interactive sketch-based image synthesis. IEEE Comput Graph Appl 31(6):56–66

Szántó B, Pozsegovics P, Vámossy Z, Sergyán S (2011) Sketch4match — Content-based image retrieval system using sketches. In: IEEE 9th International Symposium on Applied Machine Intelligence and Informatics (SAMI), Smolenice, pp 183–188. https://doi.org/10.1109/SAMI.2011.5738872

Rajput GG, Prashantha (2019) Sketch based image retrieval using grid approach on large scale database. Procedia Comput Sci 165:216–223

Springenberg JT , Dosovitskiy A, Brox T, Riedmiller MA (2014) Striving for simplicity: the all convolutional net. In: International Conference on Learning Representations

Yu Q, Liu F, Song Y-Z, Xiang T, Hospedales TM, Loy CC (2016) Sketch me that shoe. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp 799–807

Sangkloy P, Burnell N, Ham C, Hays J (2016) The sketchy database: learning to retrieve badly drawn bunnies. ACM Trans Graph 35(4):1–12

Goodfellow IJ, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2014) Generative adversarial nets. In: Proceedings of the 27th International Conference on Neural Information Processing Systems 2:2672–2680

Sangkloy P, Lu J, Fang C, Yu F, Hays J (2017) Scribbler: controlling deep image synthesis with sketch and color. In: IEEE conference on computer vision and pattern recognition (CVPR), pp 6836–6845

Liu Y, Qin Z, Luo Z, Wang H (2017) Auto-painter: cartoon image generation from sketch by using conditional generative adversarial networks. ArXiv, abs/1705.01908

Xian W, Sangkloy P, Agrawal V, Raj A, Lu J, Fang C, Yu F, Hays J (2017) TextureGAN: controlling deep image synthesis with texture patches. Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) 2018:8456–8465

Chen W, Hays J (2018) SketchyGAN: towards diverse and realistic sketch to image synthesis. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 9416–9425

Liu R, Yu Q, Yu SX (2020) Unsupervised sketch to photo synthesis. Computer Vision – ECCV 2020. Springer International Publishing, Cham, pp 36–52

Liu B, Zhu Y, Song K, Elgammal A (2021) Self-supervised sketch-to-image synthesis. Proc Conf AAAI Artif Intell 35(3):2073–2081

Zhang P, Zhang B, Chen D, Yuan L, Wen F (2020) Cross-domain correspondence learning for exemplar-based image translation. In: IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 5142–5152

Gao C, Liu Q, Xu Q, Wang L, Liu J, Zou C (2020) SketchyCOCO: image generation from freehand scene sketches. In: Conference on Computer Vision and Pattern Recognition (CVPR), pp 5173–5182

He K, Gkioxari G, Dollar P, Girshick R (2017) Mask R-CNN. In: IEEE international conference on computer vision (ICCV), pp 2961–2969

Cai Z, Vasconcelos N (2017) Cascade R-CNN: Delving into high quality object detection. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 6154–6162

Chen K, Pang J, Wang J, Xiong Y, Li X, Sun S, Feng W, Liu Z, Shi J, Ouyang W, Loy CC, Lin D (2019) Hybrid Task Cascade for Instance Segmentation. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 4974–4983

Fang Y, Yang S, Wang X, Li Y, Fang C, Shan Y, Feng B, Liu W (2021) Instances as Queries. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 6910–6919

Qiao S, Chen L-C, Yuille A (2021) DetectoRS: detecting objects with recursive feature pyramid and switchable atrous convolution. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 10213–10224

Park T, Liu M-Y, Wang T-C, Zhu J-Y (2019) Semantic image synthesis with spatially-adaptive normalization. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp 2337–2346

Eitz M, Hildebrand K, Boubekeur T, Alexa M (2009) A descriptor for large scale image retrieval based on sketched feature lines. In: Proceedings of the 6th Eurographics symposium on sketch-based interfaces and modeling. pp 29–36

Manjunath BS, Salembier P, Sikora T (eds) (2002) Introduction to MPEG-7: Multimedia Content Description Interface, Chichester, England, John Wiley & Sons

Chalechale A, Mertins A, Naghdy G (2004) Edge image description using angular radial partitioning. IEE Proc Vis Image Signal Process 151(2):93

Dalal N, Triggs B (2005) Histograms of oriented gradients for human detection. In: IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05) 1:886–893

Lowe DG (2004) Distinctive image features from scale-invariant keypoints. Int J Comput Vis 60(2):91–110

Ojala T, Pietikäinen M, Harwood D (1996) A comparative study of texture measures with classification based on featured distributions. Pattern Recogn 29(1):51–59

Kruskal JB (1964) Nonmetric multidimensional scaling: a numerical method. Psychometrika 29(2):115–129

Krause A “A classification based similarity metric for 3D image retrieval,” Cmu.edu, 01-Jun-1998. [Online]. Available: https://www.ri.cmu.edu/publications/a-classification-based-similarity-metric-for-3d-image-retrieval/. [Accessed: 20-Sep-2021]

Chicco D (2021) Siamese neural networks: An overview. Methods Mol Biol 2190:73–94

Yu Q, Yang Y, Liu F, Song Y-Z, Xiang T, Hospedales TM (2017) Sketch-a-net: A deep neural network that beats humans. Int J Comput Vis 122(3):411–425

Krizhevsky A, Sutskever I, Hinton GE (2017) ImageNet classification with deep convolutional neural networks. Commun ACM 60(6):84–90

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1–9

Zou C, Mo H, Gao C, Du R, Fu H (2019) Language-based colorization of scene sketches. ACM Trans Graph 38(6):1–16

Isola P, Zhu JY, Zhou T, Efros AA (2017) Image-to-image translation with conditional adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 1125–1134

Lin TY, Maire M, Belongie S, Hays J, Perona P, Ramanan D, Dollár P, Zitnick CL (2014) Microsoft coco: Common objects in context. In: Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6-12, Proceedings, Part V 13 2014, pp 740–755

Beyeler M (2015) OpenCV with Python blueprints: design and develop advanced computer vision projects using OpenCV with Python. Packt Publishing Ltd., London, England, ISBN 978-178528269-0,

Xie S, Tu Z (2015) Holistically-nested edge detection. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV), pp 1395–1403

Ding L, Goshtasby A (2001) On the canny edge detector. Pattern Recogn 34(3):721–725

Kanopoulos N, Vasanthavada N, Baker RL (1988) Design of an image edge detection filter using the Sobel operator. IEEE J Solid State Circuits 23(2):358–367

Caesar H, Uijlings J, Ferrari V (2018) COCO-stuff: thing and stuff classes in context. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp 1209–1218

Zhou B, Lapedriza A, Khosla A, Oliva A, Torralba A (2018) Places: a 10 million image database for scene recognition. IEEE Trans Pattern Anal Mach Intell 40(6):1452–1464

Soo S (2014) Object detection using Haar-cascade classifier. Institute of Computer Science, University of Tartu 2(3):1–2

Wan Z, Zhang J, Chen D, Liao J (2021) High-fidelity pluralistic image completion with transformers. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp 4692–4701

Heusel M, Ramsauer H, Unterthiner T, Nessler B, Hochreiter S (2017) “Gans trained by a two time-scale update rule converge to a local Nash equilibrium,” in NIPS

Salimans T, Goodfellow I, Zaremba W, Cheung V, Radford A, Chen X (2016) Improved techniques for training GANs. In: Proceedings of the 30th International Conference on Neural Information Processing Systems, pp 2234–2242

Dokmanic I, Parhizkar R, Ranieri J, Vetterli M (2015) Euclidean distance matrices: essential theory, algorithms and applications. Adv Neural Inf Process Syst 2016;29

Wang L, Qian X, Zhang Y, Shen J, Cao X (2020) Enhancing sketch-based image retrieval by CNN semantic re-ranking. IEEE Trans Cybern 50(7):3330–3342

Li Z, Deng C, Yang E, Tao D (2021) Staged sketch-to-image synthesis via semi-supervised generative adversarial networks. IEEE Trans Multimedia 23:2694–2705. https://doi.org/10.1109/TMM.2020.3015015

Mirza M, Osindero S (2014) Conditional generative adversarial nets. arXiv [cs.LG]

Osahor U, Kazemi H, Dabouei A, Nasrabadi N (2020) Quality guided sketch-to-photo image synthesis. In 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW, 2020)

Liu B, Zhu Y, Song K, Elgammal A (2020) Self-supervised sketch-to- image synthesis. In: Proceedings of the AAAI conference on artificial intelligence 2021 May 18, vol 35, no. 3, pp 2073–2081

Kramer MA (1991) Nonlinear principal component analysis using autoassociative neural networks. AIChE J 37(2):233–243

Vincent P, Larochelle H, Lajoie I, Bengio Y, Manzagol PA, Bottou L (2010) Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. Journal of machine learning research. 11(12)

Feng Z, Xu C, Tao D (2019) Self-supervised representation learning by rotation feature decoupling. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR, page 10364–10374

Kolesnikov A, Zhai X, Beyer L (2019) Revisiting self-supervised visual representation learning. arXiv [cs.CV]

Liu L, Chen R, Wolf L, Cohen-Or D (2010) Optimizing photo composition. Comput Graph. Forum 29(2):469–478

Sohl-Dickstein J, Weiss EA, Maheswaranathan N, Ganguli S (2015) Deep unsupervised learning using nonequilibrium thermodynamics. In: International conference on machine learning. PMLR, pp 2256–2265

Wang T, Zhang T, Zhang B, Ouyang H, Chen D, Chen Q, Wen F (2022) “Pretraining is all you need for image-to-image translation,” arXiv [cs.CV]

Ho J, Jain A, Abbeel P (2020) Denoising diffusion probabilistic models. Adv Neural Inf Process Syst 33:6840–6851

Yang S, Ermon S (2020) Improved techniques for training score- based generative models. Adv Neural Inf Process Syst 33:12438–12448

Jolicoeur-Martineau A, Piché-Taillefer R, Combes RTD, Mitliagkas I (2020) Adversarial score matching and improved sampling for image generation. arXiv preprint arXiv:2009.05475

Nichol A, Dhariwal P (2021) Improved denoising diffusion probabilistic models. In: International Conference on Machine Learning. PMLR, pp 8162–8171

Sasaki H, Willcocks CG, Breckon TP (2021) Unit-ddpm: Unpaired image translation with denoising diffusion probabilistic models. arXiv preprint arXiv:2104.05358

Özbey M, Dalmaz O, Dar SUH, Bedel HA, Özturk Ș, Güngör A, Çukur T (2022) Unsupervised medical image translation with adversarial diffusion models. IEEE Transactions on Medical Imaging

Güngör A, Dar SUH, Ztürk ŞÖ, Korkmaz Y, Elmas G, özbey M, Çukur T (2022) Adaptive diffusion priors for accelerated mri reconstruction. Medical Image Analysis. 2023 Jun 20:102872.

Nichol AQ, Dhariwal P, Ramesh A, Shyam P, Mishkin P, McGrew B, Sutskever I, Chen M (2022) GLIDE: towards photorealistic image generation and editing with text-guided diffusion models. arXiv preprint arXiv:2112.10741. 2021 Dec 20

Ho J, Saharia C, Chan W, Fleet DJ, Norouzi M, Salimans T (2022) Cascaded diffusion models for high fidelity image generation. The Journal of Machine Learning Research. 2022 Jan 1;23(1):2249-81.

Ramesh A, Dhariwal P, Nichol A, Chu C, Chen M (2022) "Hierarchical text-conditional image generation with clip latents," arXiv preprint arXiv:2204.06125

Xu P, Hospedales TM, Yin Q, Song Y-Z, Xiang T, Wang L (2020) “Deep learning for free-hand sketch: A survey,” arXiv [cs.CV]

Qi Y, Su G, Wang Q, Yang J, Pang K, Song Y-Z (2022) Generative sketch healing. International Journal of Computer Vision. vol. 130, no. 8, pp. 2006–2021.

Sun J, Yu H, Zhang JJ, Dong J, Yu H, Zhong G (2022) Face image-sketch synthesis via generative adversarial fusion. Neural Networks. vol. 154, pp. 179–189.

Otsu N (1979) A threshold selection method from gray-level histograms. IEEE Trans Syst Man Cybern 9(1):62–66

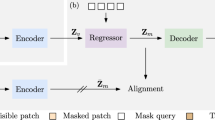

Baraheem SS, Nguyen TV (2020) Text-to-image via mask anchor points. Pattern Recogn Lett 133:25–32

Baraheem SS, Nguyen TV (2020) “Aesthetic-aware text to image synthesis,” in 2020 54th Annual Conference on Information Sciences and Systems (CISS), pp. 1–6

Richardson E et al. (2020) “Encoding in Style: a StyleGAN Encoder for Image-to-Image Translation,” arXiv [cs.CV], pp. 2287–2296

Liu M, Li Q, Qin Z, Zhang G, Wan P, Zheng W (2021) BlendGAN: Implicitly GAN blending for arbitrary stylized face generation. Advances in Neural Information Processing Systems 34:29710–22

Li B, Zhu Y, Wang Y, Lin C-W, Ghanem B, Shen L (2021) AniGAN: Style-guided generative adversarial networks for unsupervised Anime face generation. IEEE Transactions on Multimedia 24:4077–91

Liu B, Song K, Elgammal A (2020) Sketch-to-art: Synthesizing stylized art images from sketches. Computer Vision – ACCV 2020, Cham: Springer International Publishing, 2021, pp. 207–222

Tian Y, Suzuki C, Clanuwat T, Bober-Irizar M, Lamb A, Kitamoto A (2020) KaoKore: A Pre-modern Japanese Art Facial Expression Dataset. arXiv preprint arXiv:2002.08595

Chen Z, Chen L, Zhao Z, Wang Y (2020) AI illustrator: Art illustration generation based on generative adversarial network. IEEE 5th International Conference on Image, Vision and Computing (ICIVC), 2020, pp. 155–159

Tian Q, Franchitti J-C (2022) Text to artistic image generation. arXiv preprint arXiv:2205.02439

Shen J, Robertson N (2021) BBAS: towards large scale effective ensemble adversarial attacks against deep neural network learning. Information Sciences. vol. 569, pp. 469–478.

Yang B, Zhang H, Zhang Y, Xu K, Wang J (2021) Adversarial example generation with AdaBelief Optimizer and Crop Invariance. Appl Intelligence 53(2):2332–47

Kwon H, Jeong J (2022) AdvU-net: generating adversarial example based on medical image and targeting U-net model. J Sens 2022:1–13

Iyyer M, Wieting J, Gimpel K, Zettlemoyer L (2018) Adversarial example generation with syntactically controlled paraphrase networks. arXiv preprint arXiv:1804.06059

Zhang R, Luo S, Pan L, Hao J, Zhang J (2022) Generating adversarial examples via enhancing latent spatial features of benign traffic and preserving malicious functions. Neurocomputing 490:413–430

Acknowledgements

The first author would like to thank Umm Al-Qura University, Saudi Arabia, for its continuous support. The second author is supported by NSF grant #2025234. This work has been supported in part by the University of Dayton Office for Graduate Academic Affairs through the Graduate Student Summer Fellowship Program.

Ethics declarations

Conflict of interest

The authors have no conflicts of interest to declare that are relevant to the content of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Baraheem, S.S., Nguyen, T.V. Sketch-to-image synthesis via semantic masks. Multimed Tools Appl 83, 29047–29066 (2024). https://doi.org/10.1007/s11042-023-16704-z

DOI: https://doi.org/10.1007/s11042-023-16704-z