Abstract

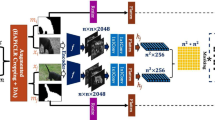

We present a novel masked image modeling (MIM) approach, context autoencoder (CAE), for self-supervised representation pretraining. We pretrain an encoder by making predictions in the encoded representation space. The pretraining tasks include two tasks: masked representation prediction—predict the representations for the masked patches, and masked patch reconstruction—reconstruct the masked patches. The network is an encoder–regressor–decoder architecture: the encoder takes the visible patches as input; the regressor predicts the representations of the masked patches, which are expected to be aligned with the representations computed from the encoder, using the representations of visible patches and the positions of visible and masked patches; the decoder reconstructs the masked patches from the predicted encoded representations. The CAE design encourages the separation of learning the encoder (representation) from completing the pertaining tasks: masked representation prediction and masked patch reconstruction tasks, and making predictions in the encoded representation space empirically shows the benefit to representation learning. We demonstrate the effectiveness of our CAE through superior transfer performance in downstream tasks: semantic segmentation, object detection and instance segmentation, and classification. The code will be available at https://github.com/Atten4Vis/CAE.

Similar content being viewed by others

Data availability

The datasets used in this paper are publicly available. ImageNet: https://www.image-net.org/, ADE20K: https://groups.csail.mit.edu/vision/datasets/ADE20K/, COCO: https://cocodataset.org/, Food-101: https://data.vision.ee.ethz.ch/cvl/datasets_extra/food-101/, Clipart: http://projects.csail.mit.edu/cmplaces/download.html, Sketch: http://projects.csail.mit.edu/cmplaces/download.html.

Code Availability

Our code will be available at https://github.com/Atten4Vis/CAE.

Notes

Our encoder does not know that the subspace is about a dog, and just separates it from the subspaces of other categories.

There are a few images in which the object does not lie in the center in ImageNet-1K. The images are actually viewed as noises and have little influence for contrastive self-supervised learning.

References

Asano, Y. M., Rupprecht, C., & Vedaldi, A. (2019). Self-labelling via simultaneous clustering and representation learning. arXiv:1911.05371

Atito, S., Awais, M., & Kittler, J. (2021). Sit: Self-supervised vision transformer. arXiv:2104.03602

Baevski, A., Hsu, W.-N., Xu, Q., Babu, A., Gu, J., & Auli, M. (2022). data2vec: A general framework for self-supervised learning in Speech. Technical report: Vision and Languags.

Bao, H., Dong, L., & Wei, F. (2021). BEiT: BERT pre-training of image transformers. arXiv:2106.08254

Bardes, A., Ponce, J., & LeCun, Y. (2021). Vicreg: Variance-invariance-covariance regularization for self-supervised learning. arXiv:2105.04906

Bossard, L., Guillaumin, M., & Van Gool, L. (2014). Food-101—Mining discriminative components with random forests. In ECCV.

Cai, Z., & Vasconcelos, N. (2021). Cascade r-cnn: High quality object detection and instance segmentation. TPAMI, 43, 1483–1498.

Caron, M., Bojanowski, P., Joulin, A., & Douze, M. (2018). Deep clustering for unsupervised learning of visual features. In ECCV (pp. 132–149).

Caron, M., Bojanowski, P., Mairal, J., & Joulin, A. (2019). Unsupervised pre-training of image features on non-curated data. In ICCV (pp. 2959–2968).

Caron, M., Misra, I., Mairal, J., Goyal, P., Bojanowski, P., & Joulin, A. (2020). Unsupervised learning of visual features by contrasting cluster assignments. arXiv:2006.09882.

Caron, M., Touvron, H., Misra, I., Jégou, H., Mairal, J., Bojanowski, P., Joulin, A. (2021). Emerging properties in self-supervised vision transformers. CoRR, arxiv:2104.14294.

Castrejon, L., Aytar, Y., Vondrick, C., Pirsiavash, H., & Torralba, A. (2016). Learning aligned cross-modal representations from weakly aligned data. In CVPR (pp. 2940–2949).

Chen, X., & He, K. (2021). Exploring simple Siamese representation learning. In CVPR (pp. 15750–15758).

Chen, Q., Chen, X., Wang, J., Feng, H., Han, J., Ding, E., Zeng, G., & Wamg, J. (2022). Group detr: Fast detr training with group-wise one-to-many assignment.

Chen, X., Ding, M., Wang, X., Xin, Y., Mo, S., Wang, Y., Han, S., Luo, P., Zeng, G., & Wang, J. (2022). Context autoencoder for self-supervised representation learning. CoRR, arxiv:2202.03026.

Chen, J., Hu, M., Li, B., & Elhoseiny, M. (2022). Efficient self-supervised vision pretraining with local masked reconstruction. arXiv:2206.00790

Chen, T., Kornblith, S., Norouzi, M., & Hinton, G. E. (2020). A simple framework for contrastive learning of visual representations. In ICML, volume 119 of Proceedings of Machine Learning Research (pp. 1597–1607). PMLR.

Chen, M., Radford, A., Child, R., Wu, J., Jun, H., Luan, D., & Sutskever, I. (2020). Generative pretraining from pixels. In ICML (pp. 1691–1703). PMLR.

Chen, Q., Wang, J., Han, C., Zhang, S., Li, Z., Chen, X., Chen, J., Wang, X., Han, S., Zhang, G., Feng, H., Yao, K., Han, J., Ding, E., & Wang, J. (2022). Group DETR v2: Strong object detector with encoder-decoder pretraining. CoRR, arxiv:2211.03594.

Chen, K., Wang, J., Pang, J., Cao, Y., Xiong, Y., Li, X., Sun, S., Feng, W., Liu, Z., Xu, J., Zhang, Z., Cheng, D., Zhu, C., Cheng, T., Zhao, Q., Li, B., Lu, X., Zhu, R., Wu, Y., Dai, J., Wang, J., Shi, J., Ouyang, W., Loy, C. C., & Lin, D. (2019). MMDetection: Open mmlab detection toolbox and benchmark. arXiv:1906.07155.

Chen, X., Xie, S., & Kaiming, H. (2021). An empirical study of training self-supervised vision transformers. CoRR, arxiv:2104.02057.

Deng, J., Dong, W., Socher, R., Li, L.-J., Li, K., & Fei-Fei, L. (2009). Imagenet: A large-scale hierarchical image database. In CVPR (pp. 248–255). IEEE.

Devlin, J., Chang, M.-W., Lee, K., & Toutanova, K. (2019). BERT: Pre-training of deep bidirectional transformers for language understanding. In J. Burstein, C. Doran, & T. Solorio (Eds.), NAACL-HLT (pp. 4171–4186). Association for Computational Linguistics.

Doersch, C., Gupta, A., & Efros, Alexei, A. (2015). Unsupervised visual representation learning by context prediction. In ICCV.

Dong, X., Bao, J., Zhang, T., Chen, D., Zhang, Weiming,Y., Lu, C., Dong, W., Fang, & Yu, N. (2021). Peco: Perceptual codebook for bert pre-training of vision transformers. arXiv:2111.12710.

Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, M., Heigold, G., Gelly, S., Uszkoreit, J., & Houlsby, N. (2021). An image is worth 16x16 words: Transformers for image recognition at scale. In ICLR: OpenReview.net.

Dosovitskiy, A., Fischer, P., Springenberg, J. T., Riedmiller, M., & Brox, T. (2015). Discriminative unsupervised feature learning with exemplar convolutional neural networks. TPAMI, 38(9), 1734–1747.

Dosovitskiy, A., Springenberg, J. T., Riedmiller, M., & Brox, T. (2014). Discriminative unsupervised feature learning with convolutional neural networks. NeurIPS, 27, 766–774.

El-Nouby, A., Izacard, G., Touvron, H., Laptev, I., Jegou, H., & Grave, E. (2021). Are large-scale datasets necessary for self-supervised pre-training? arXiv:2112.10740

Ermolov, A., Siarohin, A., Sangineto, E., & Sebe, N. (2021). Whitening for self-supervised representation learning. In ICML (pp. 3015–3024). PMLR.

Fang, Y., Dong, L., Bao, H., Wang, X., & Wei, F. (2022). Corrupted image modeling for self-supervised visual pre-training. arXiv:2202.03382

Gallinari, P., Lecun, Y., Thiria, S., & Soulie, F. F. (1987). Mémoires associatives distribuées: une comparaison (distributed associative memories: A comparison). In Proceedings of COGNITIVA 87, Paris, La Villette, May 1987. Cesta-Afcet.

Garrido, Q., Chen, Y., Bardes, A., Najman, L. (2022). On the duality between contrastive and non-contrastive self-supervised learning. CoRR, arXiv:2206.02574

Gidaris, S., Bursuc, A., Komodakis, N., Pérez, P., & Cord, M. (2020). Learning representations by predicting bags of visual words. In CVPR (pp. 6928–6938).

Gidaris, S., Bursuc, A., Puy, G., Komodakis, N., Cord, M., & Pérez, P. (2020). Online bag-of-visual-words generation for unsupervised representation learning. arXiv:2012.11552

Goyal, P., Caron, M., Lefaudeux, B., Xu, M., Wang, P., Pai, V., Singh, M., Liptchinsky, V., Misra, I., Joulin, A., et al. (2021). Self-supervised pretraining of visual features in the wild. arXiv:2103.01988

Grill, J.-B., Strub, F., Altché, F., Tallec, C., Richemond, P. H., Buchatskaya, E., Doersch, C., Pires, B. A., Guo, Z. D., Azar, M. G., et al. (2020). Bootstrap your own latent: A new approach to self-supervised learning. arXiv:2006.07733

He, K., Chen, X., Xie, S., Li, Y., Dollár, P., & Girshick, R. (2022). Masked autoencoders are scalable vision learners. In CVPR.

He, K., Fan, H., Wu, Y., Xie, S., & Girshick, R. B. (2020). Momentum contrast for unsupervised visual representation learning. In CVPR (pp. 9726–9735). Computer Vision Foundation/IEEE.

He, K., Gkioxari, G., Dollár, P., & Girshick, R. (2017). Mask r-cnn. In ICCV (pp. 2961–2969).

Henaff, O. (2020). Data-efficient image recognition with contrastive predictive coding. In ICML (pp. 4182–4192). PMLR.

Hinton, G. E., & Salakhutdinov, R. R. (2006). Reducing the dimensionality of data with neural networks. Science, 313(5786), 504–507.

Hinton, G. E., & Zemel, R. S. (1994). Autoencoders, minimum description length, and helmholtz free energy. NeurIPS, 6, 3–10.

Huang, J., Dong, Q., Gong, S., & Zhu, X. (2019). Unsupervised deep learning by neighbourhood discovery. In ICML (pp. 2849–2858). PMLR.

Huang, Z., Jin, X., Lu, C., Hou, Q., Cheng, M.-M., Fu, D., Shen, X., & Feng, J. (2022). Contrastive masked autoencoders are stronger vision learners. arXiv:2207.13532

Huang, G., Sun, Y., Liu, Z., Sedra, D., & Weinberger, K. Q. (2016). Deep networks with stochastic depth. In ECCV (pp. 646–661). Springer.

Huang, L., You, S., Zheng, M., Wang, F., Qian, C., & Yamasaki, T. (2022). Green hierarchical vision transformer for masked image modeling. arXiv:2205.13515

Ioffe, S., & Szegedy, C. (2015). Batch normalization: Accelerating deep network training by reducing internal covariate shift. In ICML.

Jing, L., Zhu, J., & LeCun, Y. (2022). Masked siamese convnets. arXiv:2206.07700

Kakogeorgiou, I., Gidaris, S., Psomas, B., Avrithis, Y., Bursuc, A., Karantzalos, K., & Komodakis, N. (2022). What to hide from your students: Attention-guided masked image modeling. In ECCV.

Kingma, Diederik P., & Welling, M. (2013). Auto-encoding variational bayes. arXiv:1312.6114.

Kong, X., & Zhang, X. (2022). Understanding masked image modeling via learning occlusion invariant feature. arXiv:2208.04164

LeCun, Y. (1987). Mod‘eles connexionistes de l’apprentissage. PhD thesis, Universit’e de Paris VI.

Li, X., Ge, Y., Yi, K., Hu, Z., Shan, Y., & Duan, L.-Y. (2022). mc-beit: Multi-choice discretization for image bert pre-training. In ECCV.

Li, X., Wang, W., Yang, L., & Yang, J. (2022). Uniform masking: Enabling mae pre-training for pyramid-based vision transformers with locality. arXiv:2205.10063

Li, S., Wu, D., Wu, F., Zang, Z., Wang, K., Shang, L., Sun, B., Li, H., Li, S., et al. (2022). Architecture-agnostic masked image modeling-from vit back to cnn. arXiv:2205.13943

Li, G., Zheng, H., Liu, D., Su, B., & Zheng, C. (2022). Semmae: Semantic-guided masking for learning masked autoencoders. arXiv:2206.10207

Li, J., Zhou, P., Xiong, C., & Hoi, S. C. H. (2020). Prototypical contrastive learning of unsupervised representations. arXiv:2005.04966

Li, Z., Chen, Z., Yang, F., Li, W., Zhu, Y., Zhao, C., Deng, R., Liwei, W., Zhao, R., Tang, M., et al. (2021). Mst: Masked self-supervised transformer for visual representation. NeurIPS, 34, 13165–13176.

Lin, T.-Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., Dollár, P., & Lawrence Zitnick, C. (2014). Microsoft coco: Common objects in context. In ECCV (pp. 740–755). Springer.

Liu, Z., Hu, H., Lin, Y., Yao, Z., Xie, Z., Wei, Y., Ning, J., Cao, Y., Zhang, Z., Dong, L., Wei, F. & Guo, B. (2021). Swin transformer v2: Scaling up capacity and resolution. Cornell University.

Liu, H., Jiang, X., Li, X., Guo, A., Jiang, D., & Ren, B. (2022). The devil is in the frequency: Geminated gestalt autoencoder for self-supervised visual pre-training. arXiv:2204.08227

Loshchilov, I., & Hutter, F. (2017). Decoupled weight decay regularization. arXiv:1711.05101

Noroozi, M., & Favaro, P. (2016). Unsupervised learning of visual representations by solving jigsaw puzzles. In ECCV (pp. 69–84). Springer.

Pathak, D., Krahenbuhl, P., Donahue, J., Darrell, T., & Efros, A. A. (2016). Context encoders: Feature learning by inpainting. In CVPR (pp. 2536–2544).

Peng, X., Wang, K., Zhu, Z., & You, Y. (2022). Crafting better contrastive views for siamese representation learning. In CVPR.

Qi, J., Zhu, J., Ding, M., Chen, X., Luo, P., Wang, L., Wang, X., Liu, W., & Wang, J. (2023). Understanding self-supervised pretraining with part-aware representation learning. Report: Tech.

Ramesh, A., Pavlov, M., Goh, G., Gray, S., Voss, C., Radford, A., Chen, M., & Sutskever, I. (2021). Zero-shot text-to-image generation. In M. Meila & T. Zhang (Eds.), ICML (Vol. 139, pp. 8821–8831). PMLR.

Ranzato, M., Poultney, C., Chopra, S., LeCun, Y., et al. (2007). Efficient learning of sparse representations with an energy-based model. NeurIPS, 19, 1137.

Russakovsky, O., Deng, J., Hao, S., Krause, J., Satheesh, S., Ma, S., Huang, Z., Karpathy, A., Khosla, A., Bernstein, M., et al. (2015). Imagenet large scale visual recognition challenge. IJCV, 115(3), 211–252.

Tao, C., Zhu, X., Huang, G., Qiao, Y., Wang, X. & Dai, J. (2022). Siamese image modeling for self-supervised vision representation learning. arXiv:2206.01204

Tian, Y., Xie, L., Fang, J., Shi, M., Peng, J., Zhang, X., Jiao, J., Tian, Q., & Ye, Q. (2022). Beyond masking: Demystifying token-based pre-training for vision transformers. arXiv:2203.14313

Tian, Y., Xie, L., Zhang, X., Fang, J., Xu, H., Huang, W., Jiao, J., Tian, Q., & Ye, Q. (2021). Semantic-aware generation for self-supervised visual representation learning. arXiv:2111.13163

Tian, Y., Sun, C., Poole, B., Krishnan, D., Schmid, C., & Isola, P. (2020). What makes for good views for contrastive learning? NeurIPS, 33, 6827–6839.

Touvron, H., Cord, M., Douze, M., Massa, F., Sablayrolles, A., & Jégou, H. (2020). Training data-efficient image transformers & distillation through attention. arXiv:2012.12877

van den Oord, A., Li, Y., & Vinyals, O. (2018). Representation learning with contrastive predictive coding. arXiv:1807.03748

Van der Maaten, L., & Hinton, G. (2008). Visualizing data using t-sne. Journal of Machine Learning Research,9(11).

Vincent, P., Larochelle, H., Bengio, Y., & Manzagol, P.-A. (2008). Extracting and composing robust features with denoising autoencoders. In ICML (pp. 1096–1103).

Vincent, P., Larochelle, H., Lajoie, I., Bengio, Y., & Manzagol, P.-A. (2010). Stacked denoising autoencoders: Learning useful representations in a deep network with a local denoising criterion. Journal of Machine Learning Research, 11, 3371–3408.

Wang, W., Bao, H., Dong, L., Bjorck, J., Peng, Z., Liu, Q., Aggarwal, K., Khan, O., Singhal, S., Som, S., & Wei, F. (2023). Image as a foreign language: Beit pretraining for all vision and vision-language tasks.

Wang, L., Liang, F., Li, Y., Ouyang, W., Zhang, H., & Shao, J. (2022). Repre: Improving self-supervised vision transformer with reconstructive pre-training. arXiv:2201.06857

Wang, X., Zhang, R., Shen, C., Kong, T., & Li, L. (2021). Dense contrastive learning for self-supervised visual pre-training. In CVPR (pp. 3024–3033).

Wei, C., Fan, H., Xie, S., Wu, C.-Y., Yuille, A., & Feichtenhofer, C. (2021). Masked feature prediction for self-supervised visual pre-training. arXiv:2112.09133

Wei, L., Xie, L., Zhou, W., Li, H., & Tian, Q. (2022). Mvp: Multimodality-guided visual pre-training. In ECCV.

Wu, Z., Xiong, Y., Yu, S. X., & Lin, D. (2018). Unsupervised feature learning via non-parametric instance discrimination. In CVPR (pp. 3733–3742).

Xiao, T., Liu, Y., Zhou, B., Jiang, Y., & Sun, J. (2018). Unified perceptual parsing for scene understanding. In ECCV (pp. 418–434).

Xie, Z., Geng, Z., Hu, J., Zhang, Z., Hu, H., & Cao, Y. (2022). Revealing the dark secrets of masked image modeling. arXiv:2205.13543.

Xie, J., Girshick, R., & Farhadi, A. (2016). Unsupervised deep embedding for clustering analysis. In ICML (pp. 478–487). PMLR.

Xie, J., Li, W., Zhan, X., Liu, Z., Ong, Y. S., & Loy, C. C. (2022). Masked frequency modeling for self-supervised visual pre-training. arXiv:2206.07706

Xie, Z., Lin, Y., Zhang, Z., Cao, Y., Lin, Stephen, & Hu, H. (2021). Propagate yourself: Exploring pixel-level consistency for unsupervised visual representation learning. In CVPR (pp. 16684–16693).

Xie, Z., Zhang, Z., Cao, Y., Lin, Y., Bao, J., Yao, Z., Dai, Q., & Hu, H. (2021). Simmim: A simple framework for masked image modeling. arXiv:2111.09886

Xie, Z., Zhang, Z., Cao, Y., Lin, Y., Wei, Y., Dai, Q., & Hu, H. (2022). On data scaling in masked image modeling. arXiv:2206.04664

Yang, J., Parikh, D., & Batra, D. (2016). Joint unsupervised learning of deep representations and image clusters. In CVPR (pp. 5147–5156).

Yi, K., Ge, Y., Li, Xiaotong, Y., Shusheng, L., Dian, W., Jianping, S. Y., & Qie, X. (2022). Masked image modeling with denoising contrast. arXiv:2205.09616

You, Y., Gitman, I., & Ginsburg, B. (2017). Large batch training of convolutional networks. arXiv:1708.03888

Yun, S., Han, D., Oh, S. J., Chun, S., Choe, J., & Yoo, Y. (2019). Cutmix: Regularization strategy to train strong classifiers with localizable features. In ICCV (pp. 6023–6032).

Zbontar, J., Jing, L., Misra, I., LeCun, Y., & Deny, S. (2021). Barlow twins: Self-supervised learning via redundancy reduction. arXiv:2103.03230

Zhang, X., Chen, J., Yuan, J., Chen, Q., Wang, J., Wang, X., Han, S., Chen, X., Pi, J., Yao, K., Han, J., Ding, E., & Wang, J. (2022). CAE v2: Context autoencoder with CLIP target. CoRRarxiv:2211.09799.

Zhang, H., Cisse, M., Dauphin, Y. N., & Lopez-Paz, D. (2017). mixup: Beyond empirical risk minimization. In ICLR.

Zhang, R., Isola, P., & Efros, A. A. (2016). Colorful image colorization. In ECCV (pp. 649–666). Springer.

Zhang, H., Li, F., Liu, S., Zhang, L., Hang, S., Zhu, J., Ni, L. M., & Shum, H.-Y. (2023). Dino: Detr with improved denoising anchor boxes for end-to-end object detection.

Zhang, X., Tian, Y., Huang, W., Ye, Q., Dai, Q., Xie, L., & Tian, Q. (2022). Hivit: Hierarchical vision transformer meets masked image modeling. arXiv:2205.14949

Zhou, J., Wei, C., Wang, H., Shen, W., Xie, C., Yuille, A., & Kong, T. (2021). Ibot: Image bert pre-training with online tokenizer. arxiv:2111.07832

Zhou, B., Zhao, H., Puig, X., Fidler, S., Barriuso, A., & Torralb, A.(2017). Scene parsing through ade20k dataset. In CVPR (pp. 633–641).

Zhuang, C., Zhai, A. L., & Yamins, D. (2019). Local aggregation for unsupervised learning of visual embeddings. In ICCV (pp. 6002–6012).

Acknowledgements

We would like to acknowledge Hangbo Bao, Xinlei Chen, Li Dong, Qi Han, Zhuowen Tu, Saining Xie, and Furu Wei for the helpful discussions.

Funding

This work is partially supported by the National Key Research and Development Program of China (2020YFB1708002), National Natural Science Foundation of China (61632003, 61375022, 61403005), Grant SCITLAB-20017 of Intelligent Terminal Key Laboratory of SiChuan Province, Beijing Advanced Innovation Center for Intelligent Robots and Systems (2018IRS11), and PEK-SenseTime Joint Laboratory of Machine Vision. Ping Luo is supported by the General Research Fund of HK No.27208720, No.17212120, and No.17200622.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

Communicated by Chunyuan Li.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Chen, X., Ding, M., Wang, X. et al. Context Autoencoder for Self-supervised Representation Learning. Int J Comput Vis 132, 208–223 (2024). https://doi.org/10.1007/s11263-023-01852-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11263-023-01852-4