Abstract

Student ratings, a critical component in policy efforts to assess and improve teaching, are often collected using questionnaires, and inferences about teachers are then based on aggregated student survey responses. While considerable attention has been paid to the reliability and validity of these aggregates, much less attention has been paid to within-classroom consensus and what that consensus can reveal about classrooms. This study used data from the Measures of Effective Teaching Project to investigate how the consensus among student ratings in a classroom can enhance our understanding of the learning environment and could potentially be used to understand features of instructional practice. The results suggest that consensus is related to teacher effectiveness, the questioning strategies used by teachers, and the demographic heterogeneity of students. The possibility of instructional subclimates and the implications for the use of overall averages in teacher appraisal are discussed, together with directions for future research.

Notes

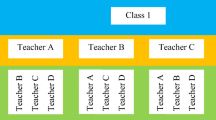

For example, in Classroom 1, the mean is given by \(\frac{10\left( 3 \right)}{10} = 3\). In Classroom 2, the mean is given by \(\frac{5\left( 1 \right) + 5\left( 5 \right)}{10} = 3\). In Classroom 3, the mean is given by \(\frac{2\left( 1 \right) + 2\left( 2 \right) + 2\left( 3 \right) + 2\left( 4 \right) + 2\left( 5 \right)}{10} = 3\).
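The arithmetic in this note can be checked with a short sketch. The three rating lists below are read directly from the formulas above (ten students per classroom on a 1 to 5 scale). The population standard deviation is used here only as an illustrative measure of spread; it is an assumption for this example and not necessarily the consensus index used in the study.

```python
from statistics import mean, pstdev

# Ratings for the three classrooms described in the note
# (10 students each, responses on a 1-5 scale).
classrooms = {
    "Classroom 1": [3] * 10,            # everyone answers 3
    "Classroom 2": [1] * 5 + [5] * 5,   # half answer 1, half answer 5
    "Classroom 3": [1, 2, 3, 4, 5] * 2, # two students per response option
}


def summarize(ratings):
    """Return (mean, population standard deviation) for a list of ratings."""
    return mean(ratings), pstdev(ratings)


# Illustrative spread measure only: pstdev is an assumption of this sketch,
# not the study's consensus index.
summaries = {name: summarize(r) for name, r in classrooms.items()}

for name, (m, sd) in summaries.items():
    print(f"{name}: mean = {m}, SD = {sd:.2f}")
```

All three classrooms share a mean of 3, while the standard deviations are 0, 2, and about 1.41, which is the sense in which the mean alone hides differences in within-classroom agreement.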

Alternative specifications of this model were also used, including a two-level model ignoring the clustering of class sections within teachers, a three-level model with district fixed effects, and a four-level model with district random effects. Because these alternative specifications did not influence substantive conclusions, only one set of models is presented here.

Ethics declarations

Conflict of interest

Jonathan Schweig declares that he has no conflict of interest.

Ethical standards

All procedures followed were in accordance with the ethical standards of the responsible committee on human experimentation (institutional and national) and with the Helsinki Declaration of 1975, as revised in 2008.

Informed consent

Informed consent was obtained from all individuals for being included in the study.

About this article

Cite this article

Schweig, J.D. Moving beyond means: revealing features of the learning environment by investigating the consensus among student ratings. Learning Environ Res 19, 441–462 (2016). https://doi.org/10.1007/s10984-016-9216-7

DOI: https://doi.org/10.1007/s10984-016-9216-7