Abstract

In the early years of nuclear explosion monitoring, experts identified an explosion from downwind detections with meaningful ratios of radioactive species. Today’s reality is a sparse network of radionuclide monitoring stations searching for weak signals. Analysts need to discriminate between industrial background radioactivity and nuclear explosion signals, sometimes on the basis of the detection of a single isotope. Aerosol and xenon measurements potentially related to the nuclear tests announced by the Democratic People’s Republic of Korea in 2006 and 2013, as well as worldwide civilian background radioactivity, are considered in defining radionuclide detection anomalies that objectively guide the use of limited analyst resources and reduce the possibility of failing to detect a nuclear explosion.

Introduction

A summary [1] of the releases from underground nuclear tests in the United States [2] shows that xenon isotopes are the most likely isotopes to escape underground containment in a nuclear test explosion. More generally, noble gases, which include xenon, are the most likely species to escape containment. Higher-magnitude underground test releases and explosions in the atmosphere will contain non-volatile species in detectable concentrations, usually attached to atmospheric dust. Networks of monitoring systems exist around the world to ensure the safe operation of the civilian nuclear industry, but nuclear explosion detection requires a network with much higher sensitivity. Such a network was developed as a verification system for the Comprehensive Nuclear-Test-Ban Treaty (CTBT) (for example [3] and [4]). The International Monitoring System (IMS) of the CTBT will eventually have 80 operational radionuclide stations, all instrumented for aerosol monitoring and 40 initially instrumented for xenon monitoring.

Before xenon monitoring began, there was an expectation that detection of radioactive xenon in the atmosphere would be a ‘smoking gun’ of a leaking nuclear test: a simple test of presence or absence. This view began to fade when one of the first automated xenon measurements carried out in the development of IMS monitoring systems detected 133Xe and 135Xe, likely from several nuclear reactors in the region [5, 6]. The first intercomparison of automated systems was done at the location of IMS station DEX33 in Schauinsland, Germany. This station is closely ringed by reactors and is downwind of a medical isotope production facility [7, 8]. In June 2001, a field system installed in Ottawa, Canada, quickly established a measurement history with 133Xe concentrations many times higher than those reported during the Schauinsland development tests, with frequent observation of all four relevant CTBT radioxenon isotopes (131mXe, 133mXe, 133Xe, and 135Xe). Ultimately, the high concentrations were attributed to proximity to the world’s largest point source of radioxenon emissions at that time, medical isotope production at Atomic Energy of Canada Limited’s Chalk River Laboratories [9]. These field experiments strongly indicated that a degree of forensic diligence beyond the simple presence or absence of radioactive xenon would be needed to screen out radionuclide releases that are uninteresting from the perspective of the CTBT.

Experience operating the IMS radionuclide network began around 2000 and has grown substantially since then; 71 of the 80 treaty radionuclide stations now submit data [4, 10]. This has led to the accumulation of hundreds of thousands of aerosol and xenon measurements, including several unusual situations: a nuclear reactor disaster [11,12,13,14,15,16,17], industrial accidents [18,19,20,21], and the nuclear tests announced by the Democratic People’s Republic of Korea (DPRK) [22,23,24,25,26].

Even in places with low natural and/or anthropogenic backgrounds, industrial emissions of radioactive materials can occasionally be difficult to distinguish from nuclear explosion signals. In some locations, like Schauinsland, Germany, there are frequent detections of civilian emissions that may naively appear to be nuclear explosions [27,28,29,30,31,32,33,34]. Schauinsland (IMS station DEX33) detects civilian radioxenon emissions in 40% to 66% of measurements [35]. A 2005 comparison shows a stark difference between measurements taken in Ottawa, Canada, and those at a Pacific island location [9]. In the case of Tahiti (FRX27), where essentially no xenon is detected, the presence or absence of radioxenon might be a sufficient anomaly to trigger additional study by an analyst, but more sophisticated anomaly triggers are likely needed for measurements in places like the Ottawa monitoring testbed location, which observes radioxenon from power reactors, research reactors, and a regional medical isotope producer.

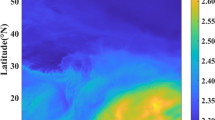

Most of the radioxenon detections in the IMS are caused by routine operations or incidents at civilian nuclear facilities [8, 12, 36,37,38]. The magnitudes of the radioxenon measurements potentially associated with underground nuclear tests announced in the last decade were similar to background radioxenon levels from civilian nuclear facilities [23, 25, 26]. Isotopic ratios specific to releases from different types of facilities or activities have been calculated and published [23, 29, 30] to help the analyst determine the cause of a release, and other studies [34] have compared the calculated isotopic composition of releases from civilian nuclear facilities with field measurements. Isotopic ratios are expected to be valuable because 133Xe release magnitudes alone can be similar: medical isotope production facility releases can be as large as 10¹²–10¹³ Bq per day [36, 39], while a 0.1% release from a 1 kt underground nuclear test leaking after 3 days would be about 10¹³ Bq [40]. Nuclear power plants typically release much less xenon [38]. Figure 1 shows the predicted number of 133Xe detections from the 13 medical isotope production facilities that were in operation in 2017, based on published release magnitudes [41]. The number of detections assumes samplers with a 12 h collection period and a 0.2 mBq/m3 detection limit, giving a possible maximum of 730 detections per year at any location. A comparable map of average air concentrations was produced by Generoso et al. [42], but average air concentrations do not translate directly into the number of detections.
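Because a detection count is a tally of threshold exceedances rather than an average, two locations with similar mean concentrations can have very different detection counts. The minimal sketch below illustrates that bookkeeping. It assumes a time-ordered list of modeled sample-average concentrations (mBq/m3) at one location; the function names, the strict `>` comparison, and the example series are illustrative assumptions, not the method used in [41] or [42].

```python
from statistics import mean


def max_samples_per_year(collection_hours: float = 12.0, days: int = 365) -> int:
    """Upper bound on the number of samples (and hence detections) per year."""
    return int(days * 24 / collection_hours)


def count_detections(concentrations, detection_limit: float = 0.2) -> int:
    """Count samples whose modeled concentration (mBq/m3) exceeds the detection limit."""
    return sum(1 for c in concentrations if c > detection_limit)


# Two hypothetical locations with similar mean concentrations.
episodic = [0.0] * 9 + [2.0]  # one strong plume in ten samples
steady = [0.21] * 10          # weak but constant signal

print(max_samples_per_year())  # 730 for a 12 h collection period
print(mean(episodic), count_detections(episodic))
print(mean(steady), count_detections(steady))
```

The episodic series yields one detection and the steady series ten, even though their means differ by only about 5%.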

In 2014, 32.4% of 10,662 radioxenon samples taken at 23 IMS radionuclide stations had 133Xe concentrations above the critical limit for detection, LC [43]. The daily average number of 133Xe detections is expected to increase as the civilian nuclear industry grows [44], as more monitoring stations are installed, and as monitoring stations with lower detection limits and shorter collection intervals replace first-generation systems. For example, three next-generation noble gas samplers have recently been certified for use in the IMS. The Swedish system [45] uses a 6 h collection period and began operation at IMS station SEX63 in Stockholm, Sweden, in the middle of 2021. The French system [46] uses an 8 h collection time and may replace current systems with a 24 h collection time. The Xenon International system, developed by Pacific Northwest National Laboratory (PNNL) and Teledyne Brown Engineering (TBE) [35, 47, 48], uses a 6 h collection time. Next-generation particulate samplers are in development [49, 50] and include the concept of adding short-lived isotopes for screening purposes.

Network optimization studies for next-generation noble gas systems [40, 51] show an increased number of detections, an ability to detect lower concentrations, and more sampling stations detecting the same release events. A similar study has been conducted for potential next generation aerosol systems [52]. Studies of this nature use the number of detections as a stand-in for the capability to determine the release location. The capability of next generation systems to accurately determine the source location is expected to be greater than for current systems.

Analysis of the data generated by each type of station can quantify the levels above which the system developers regard a signal as very likely to be a true detection. Following the approach of Currie [53], two such measures are the critical level (LC) and the minimum detectable concentration (MDC) [49, 54] for each analyzed isotope. Declaring a signal detection is only the first step in the search for anomalies needed for nuclear explosion monitoring. All the next-generation systems should make more detections of background isotopes per day than before, because they make more measurements per day than the systems they are replacing. The new systems offer an enhanced potential to identify anomalies, especially the next-generation radioxenon systems with improved detection limits for 131mXe and 133mXe. Detecting more nuclides will make it easier to screen out signals that match expected background and have a low probability of matching nuclear explosion signatures. In other words, anomaly detection using multiple isotopes is much more specific for nuclear explosion signal detection than single-isotope anomaly analysis.
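As an illustration of Currie's approach [53], the sketch below computes a critical level and a detection limit, in counts, for a simple paired-blank counting measurement with a Poisson background of B counts and a one-sided coverage factor k = 1.645 for both the false-positive and false-negative rates. The conversion to a concentration through a single factor `counts_per_mbq_m3` is a hypothetical simplification: an operational MDC calculation also accounts for detector efficiency, branching ratios, sampled air volume, and decay during collection and counting [49, 54].

```python
import math


def currie_limits(background_counts: float, k: float = 1.645):
    """Critical level L_C and detection limit L_D (counts) for a paired-blank
    Poisson measurement, with equal false-positive and false-negative rates."""
    sigma0 = math.sqrt(2.0 * background_counts)  # std. dev. of the net signal when no source is present
    l_c = k * sigma0                              # declare "detected" if net counts exceed L_C
    l_d = k ** 2 + 2.0 * l_c                      # true net signal detected with probability 1 - beta
    return l_c, l_d


def minimum_detectable_concentration(background_counts: float, counts_per_mbq_m3: float,
                                     k: float = 1.645) -> float:
    """Hypothetical MDC: L_D divided by an assumed overall counts-per-(mBq/m3) factor."""
    _, l_d = currie_limits(background_counts, k)
    return l_d / counts_per_mbq_m3


l_c, l_d = currie_limits(100.0)
print(f"L_C = {l_c:.1f} counts, L_D = {l_d:.1f} counts")  # L_C = 23.3 counts, L_D = 49.2 counts
```

With k = 1.645, L_D reduces to the familiar approximation 2.71 + 4.65√B.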

In concept, it is possible to analyze each positive radionuclide detection and estimate the chance that it originated from an expected anthropogenic or natural source. However, every organization has a practical limit on the human and computational resources available for such investigations. Monitoring institutions therefore require a way to screen out a large percentage of the irrelevant signals (keeping the false detection rate low) while retaining all or almost all of the explosion signals (keeping the true detection rate high). Where to strike this balance is a consequential policy choice; some would consider even a small possibility of missing a nuclear test unacceptable.

Definition of an anomaly

There are several philosophical approaches for forming an anomaly definition in the context of a resource-constrained organization and a policy regarding false negative and false positive rates. One policy might naively set the false positive rate to zero—screening anything out that might be false, but this might result in the true detection rate being much lower than 100%, meaning an unacceptably high loss of real nuclear explosion signals. Another naïve alternative is to set the desired true detection rate to 100%—naïve because the false positive rate will also be very high and very little screening has been accomplished.

A more practical approach is to focus special analysis procedures on anomalous samples, under the assumption that a nuclear explosion is more likely to exhibit abnormal features than to resemble normal background. In general, anomalies are samples whose features differ significantly from normal background data and that occur very rarely in the data set. This deviation from the majority of normal background data may indicate that the sample was generated by a different mechanism, possibly a nuclear explosion. To support nuclear explosion monitoring, the definition of an anomalous observation can be guided by physical properties that are specific to nuclear explosions and differ from signals normally arising from nuclear facilities, e.g. by using isotopic ratio thresholds above which fresh releases from nuclear explosions are the most likely source.

The International Data Centre (IDC) currently uses an Interest Level measurement categorization scheme summarized in [55]. For aerosols, this scheme uses a list of trigger isotopes to shift a sample from Levels 1 and 2 (which contain only natural radioactivity) to Levels 3, 4, and 5, which correspond respectively to the presence of trigger isotopes within a ‘normal’ range, one trigger isotope above that normal range, and at least two trigger isotopes above the normal range. Only Level 5 samples receive special confirmatory re-measurements. The normal range is set by a moving window of past observations. For xenon isotopes, Levels A, B, and C correspond respectively to no detected xenon isotopes, xenon concentrations at or below the 75th percentile observed for that isotope at that station, and concentrations above the 75th percentile of recent observations for that station. One might expect that a real underground nuclear test leaking at low levels would produce only Level 3 and 4 aerosol measurements, and that perhaps all Level 5 measurements to date have had nothing to do with underground nuclear tests. Similarly, positive xenon measurements marked Level B might indeed represent nuclear test leakage while, in the hopefully normal situation that no nuclear explosive tests have occurred, all Level C measurements arose from unrelated activity. Thus, the existing scheme likely neither retains 100% of the real tests it might encounter nor rejects most of the unrelated background.
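A compact sketch of the categorization rules, as summarized above, may help make them concrete. It assumes that "recent observations" is a plain list of past positive concentrations for the same isotope and station, and that the aerosol "normal range" reduces to one upper bound per trigger isotope. The actual window lengths, percentile method, and trigger isotope list used by the IDC [55] are not reproduced here, and the isotope keys in the test are placeholders.

```python
from statistics import quantiles


def xenon_level(detected: bool, concentration: float, recent_positive: list) -> str:
    """Level A: not detected; B: at or below the 75th percentile of recent
    observations for this isotope and station; C: above it."""
    if not detected:
        return "A"
    p75 = quantiles(recent_positive, n=4)[2]  # third quartile (needs at least 2 values)
    return "B" if concentration <= p75 else "C"


def aerosol_level(trigger_concentrations: dict, normal_upper: dict) -> str:
    """Levels 1-2: no trigger isotopes (natural radioactivity only); 3: triggers
    present within the normal range; 4: one above it; 5: two or more above it."""
    if not trigger_concentrations:
        return "1-2"  # distinguishing Level 1 from Level 2 is not modeled here
    above = sum(1 for iso, c in trigger_concentrations.items() if c > normal_upper[iso])
    if above == 0:
        return "3"
    return "4" if above == 1 else "5"


history = [0.1 * i for i in range(1, 21)]  # hypothetical recent positives, mBq/m3
print(xenon_level(True, 1.9, history))     # C
```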

The IDC further applies isotopic ratio screening flags to noble gas samples [56]. These are derived from the four-isotope plot [57]. A flag is raised when an isotopic activity ratio exceeds a threshold characteristic of an atmospheric nuclear test or of rapid venting from an underground nuclear explosion.
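One way to express such a flag is as a test of which side of a discrimination line a sample falls on in the four-isotope plot, whose axes are the activity ratios 133mXe/131mXe and 135Xe/133Xe on logarithmic scales [57]. In the sketch below the line's slope and intercept are hypothetical inputs, not the IDC's operational values [56], and samples lacking any of the four isotopes are left unflagged.

```python
import math
from typing import Optional


def four_isotope_flag(a131m: float, a133m: float, a133: float, a135: float,
                      slope: float, intercept: float) -> Optional[bool]:
    """True if the sample lies above the (hypothetical) discrimination line
    log10(135Xe/133Xe) = slope * log10(133mXe/131mXe) + intercept, taken here as
    the side indicating a fresh explosion-like release; None if ratios are undefined."""
    if min(a131m, a133m, a133, a135) <= 0:
        return None  # at least one isotope not detected
    x = math.log10(a133m / a131m)
    y = math.log10(a135 / a133)
    return y > slope * x + intercept
```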

A different kind of policy might make selections such that the rate of anomalies matches the resources available for human analysis. This may seem driven by financial rather than scientific or policy considerations, since it could miss a real explosion. However, generating a stream of flagged events that no analyst will ever examine, in which background events are categorized at a higher level than real events, carries the same risk. A weak anomaly policy may be even worse, because analysts facing an oversupply of events may not choose objectively which ones to study and may be more likely to miss a real event.

Thus, experts require an objective way to define an anomaly that weighs the relative value of different kinds of anomalies. The non-explosion signals mentioned above (nuclear power reactors, medical isotope facilities) represent the background radioactivity that challenges analysts. Against this backdrop, we review the challenging situations presented by several DPRK announced tests as case studies to further develop a useful anomaly definition. “Useful” means that the anomalies will have a high probability of identifying a signal that could result from a nuclear test and, at the same time, a low probability of missing a signal from an explosive nuclear test. To demonstrate the power of anomaly detection, the phenomena related to the DPRK 2013 test are split into February and April cases, and the first DPRK-announced test, in 2006, is a third case. This paper does not attempt to quantify the probability of missing a nuclear explosion-like signal.

DPRK February 2013—magnitude anomaly

Monitoring experts identified seismic signals on February 12th, 2013, from a nuclear explosive test announced by the DPRK. These experts determined the test location, in some cases with unusually high precision [58, 59].

On February 21–22, 133Xe was detected at Takasaki (JPX38) at about 0.3 mBq/m3, more than 2 standard deviations above the system's minimum detectable concentration of about 0.2 mBq/m3. This signal level is at about the 85th percentile of the Takasaki frequency chart in Fig. 2. It may be consistent with a late leak occurring one week after the explosion. However, this measurement had no particular feature that distinguished it from reactor leakage, and it did not correspond to the seismic time of the announced test. Even if atmospheric transport showed a good chance of air transport from the putative test location to the Takasaki sensor, this would be a necessary but not sufficient condition for showing that some or all of the xenon was associated with the test.

Without corroborating evidence, the February 21–22 detection could not be linked to the seismic signals. Arguably, a responsible analyst must fully investigate such a signal occurring downwind of an announced test, or coinciding with an anomalous seismic, hydroacoustic, or infrasound event, but the ambiguity has to be acknowledged when reporting results, for example in the form of confidence intervals or uncertainties.

Considering Fig. 2, one might select a 133Xe anomaly level that conforms to any of the policy decisions mentioned above: analyze all signals, or perhaps analyze only signals above an arbitrary magnitude such as the 95th percentile of background signals. Selecting the 95th percentile in the February 2013 case would have deselected a positive 133Xe detection, a policy that analysts and users of the data at that time might easily have challenged.
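A percentile-based magnitude rule of this kind is straightforward to automate. The sketch below assumes that a station's frequency chart can be represented by a list of past positive 133Xe concentrations; the function names and the empirical "fraction at or below" percentile definition are illustrative choices.

```python
def percentile_rank(value: float, background: list) -> float:
    """Percentage of background samples at or below `value`."""
    if not background:
        raise ValueError("background history is empty")
    return 100.0 * sum(1 for b in background if b <= value) / len(background)


def is_magnitude_anomaly(value: float, background: list,
                         threshold_percentile: float = 95.0) -> bool:
    """Flag a detection whose percentile rank exceeds the policy threshold."""
    return percentile_rank(value, background) > threshold_percentile
```

Under this rule, a detection at about the 85th percentile, like the February 21–22 signal, is not flagged with a 95th-percentile threshold but would be flagged with an 80th-percentile threshold.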

Another approach would be to estimate the magnitude of the signals expected for, say, a 1% leak of a 1 kt test, simulated across the whole network [40, 52]. As an alternative to such physics-based choices, a policy could select a background percentile at which analysts are kept fully busy with magnitude anomalies, as mentioned above. A slightly more complex scheme could use a trigger from another monitoring technology to adjust the 95th percentile threshold, or it might be sufficient to allow data users and analysts to request exceptions for extenuating circumstances.
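The capacity-based policy can be sketched as choosing the percentile at which, on average, the number of flagged samples per day equals the number analysts can review. This assumes a stationary background distribution, so that flagging above the p-th percentile selects a fraction (100 − p)/100 of the samples subject to the rule. The relaxed threshold applied when another technology raises a trigger (`triggered_percentile`) is a hypothetical policy value, not one proposed here.

```python
def threshold_percentile(analyst_capacity_per_day: float, samples_per_day: float,
                         external_trigger: bool = False,
                         triggered_percentile: float = 85.0) -> float:
    """Background percentile above which flagged samples, on average, match the
    analysts' daily capacity; relaxed to `triggered_percentile` (hypothetical)
    when another monitoring technology has raised a trigger."""
    if samples_per_day <= 0:
        raise ValueError("samples_per_day must be positive")
    fraction_flagged = min(1.0, analyst_capacity_per_day / samples_per_day)
    percentile = 100.0 * (1.0 - fraction_flagged)
    if external_trigger:
        percentile = min(percentile, triggered_percentile)
    return percentile
```

For example, with a hypothetical 80 positive samples per day network-wide and capacity to review 4 of them, the threshold is the 95th percentile; a concurrent seismic trigger would relax it to the 85th.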

DPRK 2006—atmospheric transport and hypothesis tests

On October 9, 2006, seismic signals were detected from a location in North Korea [60, 61]. The authors referenced here estimated the yield to be between 0.5 and 1 kt and used seismic analysis methods to obtain enhanced location information [59].

Ringbom et al. [25] estimated that about 0.7% of the 133Xe produced in the explosion leaked, based on non-IMS ground measurements conducted near the border between the Republic of Korea and the DPRK. This work did not have the luxury of a lengthy measurement campaign to assess the frequency of signals versus magnitude, but the measurements had the advantage of being relatively close to the putative source and contributed strong evidence that a leak had occurred.

From October 21st to 25th, 133Xe was detected [26] at IMS station CAX16 in Yellowknife, Canada. This location is more than 7000 km from the seismic event in North Korea, which leads to high atmospheric dilution and can result in inaccurate arrival times predicted by atmospheric transport models (ATM). ATM is essential here because the medical isotope production facility at Chalk River Laboratories (CRL) in Canada, which was releasing over 10¹³ Bq of 133Xe per day [36], could have contributed to the 133Xe measurements at Yellowknife.

Figure 3 shows the CAX16 measurements and the modeled contributions expected from the DPRK test on October 9th and from the CRL medical isotope production facility, about 3000 km to the southeast. The measured excursion beginning on October 21st leads the modeled excursion by 1 day. Integrating the first 5 days of measured signals, before the modeled medical isotope contribution becomes significant, gives an aggregate of about 2.1 mBq/m3. The first 5 days of CAX16 background measurements, which are possibly due to regional reactor leakage, give an aggregate of about 0.65 mBq/m3. The excess measured 133Xe would then be about 1.45 mBq/m3, compared with 1.25 mBq/m3 for the 5-day aggregate of the modeled DPRK contribution shifted by 1 day. The ATM used meteorological data from the European Centre for Medium-Range Weather Forecasts (ECMWF).
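Written out as a worked equation, the comparison uses only the aggregates quoted above. The symbols are introduced here for illustration: C_meas for the measured concentrations, C_bkg for the background measurements, and C_model for the modeled DPRK contribution shifted by 1 day, each summed over 5 days.

```latex
\begin{aligned}
\Delta C &= \sum_{\text{5 d}} C_{\mathrm{meas}} - \sum_{\text{5 d}} C_{\mathrm{bkg}}
  \approx 2.1 - 0.65 = 1.45\ \mathrm{mBq\,m^{-3}},\\
\frac{\Delta C}{\sum_{\text{5 d}} C_{\mathrm{model}}} &\approx \frac{1.45}{1.25} \approx 1.16 .
\end{aligned}
```

A measured-to-modeled ratio of about 1.16 corresponds to agreement within roughly 16%.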

Given the uncertainty inherent in long-range atmospheric transport, this is excellent agreement between measurement and model. Nuclear scientists can show that the probability that the measured values are mere statistical fluctuations is vanishingly small; the remaining uncertainties arise from the atmospheric model predictions and from the set of source hypotheses for 133Xe, such as nuclear explosions, medical isotope production, reactor leakage, and medical isotope use in hospitals. The analysis in Fig. 3 considers only the two largest contributors.

In Fig. 4, the 133Xe signals that agree well with predictions from the estimated DPRK release are far from the largest seen at CAX16. Even at this distance (> 7000 km), it is still possible to reasonably correlate the arrival time of the signals with atmospheric transport from the seismic event location and time, and to de-conflict the observed signals from the maximum predicted background signal from a known regional background source, CRL. The October data represent a very modest anomaly in size, but a significant anomaly in correlation with a seismic event, without a serious background contribution. Having multiple samples at several sigma above background levels in the upper panel of Fig. 3 greatly suppresses the possibility that the detections are a random statistical fluctuation. In this work, the occurrence of consecutive detections is referred to as a ‘persistence anomaly.’
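The suppression of chance fluctuations by persistence can be quantified under simple assumptions. The sketch below assumes independent samples with Gaussian noise, so the probability that n consecutive samples all exceed background by k standard deviations by chance is the single-sample one-sided tail probability raised to the power n. Real measurements have correlated backgrounds and non-Gaussian tails, so these numbers should be read as lower bounds on the chance probability rather than as operational false-alarm rates.

```python
import math


def chance_of_consecutive_excursions(k_sigma: float, n_samples: int) -> float:
    """Probability that n independent Gaussian samples each exceed the background
    mean by at least k_sigma standard deviations purely by chance."""
    p_single = 0.5 * math.erfc(k_sigma / math.sqrt(2.0))  # one-sided Gaussian tail
    return p_single ** n_samples


for n in (1, 2, 3):
    print(n, f"{chance_of_consecutive_excursions(3.0, n):.1e}")
```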

Fig. 4 Several years of 133Xe background measurements at CAX16. The October 2006 CAX16 signals shown in Fig. 3, highlighted here with an oval, are not unusually large.

Importantly, this anomaly case study requires calculations with an atmospheric transport model. With ATM results developed for positive detections, a rule regarding anomaly by location can be formulated: the ATM estimate of the source location can be overlaid on locations of interest and other locations [62]. In this case, news agencies carried reports of an announced nuclear test [63]. Other locations to consider might represent source hypotheses that would decrease interest in the event, such as civilian nuclear facilities, which are non-explosion sources of radionuclides. Location information can thus raise or lower the anomalous nature of an event.

As illustrated in Fig. 4, industrial sources of 133Xe can cause detections in the IMS noble gas samplers. In contrast, some noble gas samplers in the IMS may go a year or longer without a detection of 133Xe. Two such stations are GBX68 located on Tristan da Cunha and CLX19 located on Easter Island. These two remote island stations are more than 3,500 km from known industrial sources of 133Xe. Other samplers see more frequent detections, which sometimes occur in consecutive samples.

The persistence of 133Xe detections is illustrated in Fig. 5. This logarithmic-scale plot compares JPX38 (Takasaki, Japan) and USX75 (Charlottesville, VA, USA) for samples collected in 2020–2022. More than 2000 12-h samples were collected at each station during this three-year period. Most positive detections occurred in sequences of consecutive samples. For JPX38, there were 531 total detections (25% of the > 2000 measured samples), and only 103 samples with detections were not part of sequential detections (4.8%). USX75 data for this period are free of the influence of CRL medical isotope production; there were 247 total detections (11%), and only 85 samples were not part of sequential detections (2.4%). In this case, there are 9 operating nuclear power reactor complexes within 350 km of the USX75 sampler. Persistent positive detections on two or more sequential days may not actually represent Xe from a single source, but then again, a magnitude anomaly may also originate from two reactors and not be of interest for locating potential explosions. In Fig. 5 there are several instances of more than 5 sequential detections at either of the two stations. These are quite rare and may motivate a detailed ATM location study to determine whether they represent a leaking test or a continuous industrial process.
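Statistics like these can be computed from a time-ordered series of detection flags. The sketch below assumes one boolean per 12-h sample (True when the concentration exceeds the detection threshold). Following the convention of Fig. 5, the run-length fraction is counted in samples rather than in sequences. The function names are illustrative.

```python
def detection_runs(detected):
    """Lengths of runs of consecutive positive detections in a time-ordered series."""
    runs, current = [], 0
    for flag in detected:
        if flag:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    if current:
        runs.append(current)
    return runs


def persistence_summary(detected, min_run: int = 2) -> dict:
    """Detection counts, isolated detections, and the fraction of detected samples
    that belong to runs of at least `min_run` consecutive detections."""
    if not detected:
        raise ValueError("empty detection series")
    runs = detection_runs(detected)
    total = sum(runs)
    isolated = sum(1 for r in runs if r == 1)
    in_long_runs = sum(r for r in runs if r >= min_run)
    return {
        "total_detections": total,
        "isolated_detections": isolated,
        "isolated_fraction_of_all_samples": isolated / len(detected),
        "fraction_of_detections_in_runs": in_long_runs / total if total else 0.0,
    }
```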

Fig. 5 Count of consecutive detections of 133Xe at JPX38 (Takasaki, Japan) and USX75 (Charlottesville, VA, USA) during 2020–2022, on a logarithmic scale. The cumulative curve is computed in terms of the number of samples with detectable concentrations, not the number of sequences.

DPRK April 2013—isotopic ratios and hypothesis tests

On April 9th, 53 days after the triggering seismic event in February, there was an interesting detection of two xenon isotopes at the Takasaki station: anomalous levels of both 133Xe and 131mXe were seen in coincidence. Figure 6 includes charts that show how clearly the signals, with two-sigma uncertainties, exceed the MDC of the system, and how they compare to the ambient levels just beforehand.

Looking back at Fig. 2, the magnitude of the weakest of these 133Xe detections (0.705 mBq/m3) is roughly at the 99th percentile of the background measurements, and the other three detections are several times larger. Thus, this is a strong magnitude anomaly.

These four large 133Xe values also show a moderate level of persistence. As seen in Fig. 5, the cumulative probability that a sample with a detectable concentration of 133Xe belongs to a sequence of 4 samples with detectable concentrations is above about 60%, and about 80% of the samples above the MDC occur in multi-sample sequences. Persistence is therefore common in the background, and the April 2013 133Xe detections would be difficult to attribute to the February 12th seismic event without a second isotope.

The 133Xe concentrations in Table 1 are 10–15 times the MDC, while the 131mXe values are less than two times the MDC. Nevertheless, the 131mXe signal is highly statistically significant. Comparing the peak value of each isotope to the frequency histograms in Fig. 7 shows that both are above the 95th percentile of past signals for that isotope, so each isotope detection is anomalous in magnitude. According to Fig. 7, about 18 samples per year (2.5%) fall in the size range of the 133Xe signals in this case, and about 30 samples per year (4%) have 131mXe at or above 0.25 mBq/m3. The chance that unrelated measurements of 133Xe and 131mXe in these ranges would occur at the same time is therefore less than once per year. Of 6850 samples collected between February 2013 and January 2023, 1886 (27.5%) had detectable levels of 133Xe and 328 (4.79%) had detectable levels of 131mXe, but only 11 samples (0.16%) had joint detections of 131mXe and 133Xe. That multiple detections of both isotopes occurred in April 2013 further strengthens confidence in a physical anomaly rather than a random statistical anomaly.
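The "less than once per year" estimate follows from treating the two isotopes as independent, so that the expected number of chance coincidences is the number of samples per year multiplied by the two single-isotope rates. The sketch below makes that independence assumption explicit and uses 730 twelve-hour samples per year with the rates read from Fig. 7.

```python
def expected_chance_coincidences(samples_per_year: float, rate_a: float, rate_b: float) -> float:
    """Expected samples per year in which two unrelated isotopes are both anomalous,
    assuming their occurrences are statistically independent."""
    return samples_per_year * rate_a * rate_b


# About 2.5% of samples in the 133Xe size range, 4% with 131mXe >= 0.25 mBq/m3.
print(round(expected_chance_coincidences(730, 0.025, 0.04), 2))  # 0.73
```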

Other authors [64] recently used multiple isotope data from this 2013 event to illustrate new techniques for deciding whether data taken at different times and at different locations came from the same release event. Their analysis techniques may also apply to other situations when multiple isotopes are detected.

The ATM analysis of these signals is consistent with the seismic event location, but we temporarily set aside the location anomaly of the event to consider the power of isotopic ratios and of the various hypotheses that could give rise to them. Considering reactors, fuel may be most likely to leak when thermally stressed at startup and shutdown [65], and it has distinguishable isotopic compositions at those times. The ratios of isotopes in nuclear fuel at startup, shutdown, and restart then form a set of isotopic ratio hypotheses. Medical isotope production can take many forms with slightly different ratios [34], but one has been selected for comparison.

Fission xenon ratios are strongly affected by the continuing presence of the iodine isotopes produced by fission. If the iodine fraction of the fission products is separated from the xenon at the instant of fission, the xenon isotopes reflect the instantaneous, or independent, fission yields. If the iodine and xenon are kept together for a time, the iodine isotopes, with their various half-lives, gradually decay into xenon isotopes, which accumulate and change the ratios among the xenon isotopes. If this continues, the xenon ratios ultimately reflect the cumulative fission yields. Between these extremes, the isotopic ratios among the xenon isotopes are determined by their starting values and by the time at which the iodine fraction is separated from the xenon fraction, called the fractionation time. Elemental fractionation can be caused simply by the cooling of the debris underground, such that elements with high boiling points freeze in place while noble gases keep moving through porous structures or fractures.
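The effect of the fractionation time can be sketched with the two-member Bateman solution for a single iodine parent feeding a single xenon daughter, with the parent removed at time t_frac. This is a deliberate simplification: real mass chains include additional precursors and branchings (for example, feeding of both a metastable and a ground state), and realistic initial inventories would come from independent fission yields that are not reproduced here. The example uses approximate half-lives for 133I (about 20.8 h) and 133Xe (about 5.25 d) with hypothetical, unit-normalized initial inventories.

```python
import math


def bateman_daughter(t: float, parent0: float, daughter0: float,
                     lam_p: float, lam_d: float) -> float:
    """Daughter atoms at time t for parent -> daughter decay with no removal
    (requires lam_p != lam_d)."""
    ingrowth = parent0 * lam_p / (lam_d - lam_p) * (math.exp(-lam_p * t) - math.exp(-lam_d * t))
    return daughter0 * math.exp(-lam_d * t) + ingrowth


def daughter_with_fractionation(t: float, parent0: float, daughter0: float,
                                lam_p: float, lam_d: float, t_frac=None) -> float:
    """Daughter atoms at time t when the parent (iodine) is separated from the
    daughter (xenon) at t_frac. t_frac=0 keeps only the initial xenon inventory;
    t_frac=None keeps iodine and xenon together indefinitely."""
    if t_frac is None or t <= t_frac:
        return bateman_daughter(t, parent0, daughter0, lam_p, lam_d)
    at_separation = bateman_daughter(t_frac, parent0, daughter0, lam_p, lam_d)
    return at_separation * math.exp(-lam_d * (t - t_frac))  # pure decay after separation


LN2 = math.log(2)
lam_i133 = LN2 / (20.8 / 24)  # per day, 133I (approximate half-life)
lam_xe133 = LN2 / 5.25        # per day, 133Xe (approximate half-life)
for t_f in (0.0, 1.0, 10.0, None):
    n = daughter_with_fractionation(53.0, 1.0, 0.1, lam_i133, lam_xe133, t_f)
    print(t_f, f"{n:.3e}")
```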

In Fig. 8, the April 2013 Takasaki signals are positioned as if the supporting fission occurred 53 days previously, but the chart allows the reader to mentally slide the measured ratios to the right or left to match other possible hypotheses with their corresponding origin times. These computations are indicative and should not be considered exact. However, the signals would be consistent with a reactor shutdown if the shutdown occurred about 30 days before the April 2013 detections. Because normal reactor releases produce much smaller signals, the reactor would need to be local (not regional), and the fission gas would have to be held for perhaps 10–30 days, allowing the isotopes to decay to the observed ratios. Another hypothesis is that the measured Takasaki signals would be consistent with a reactor startup about one day earlier, but the analyst would then have to explain the absence of 135Xe and 133mXe. The analyst is further challenged to find an actual reactor restart or shutdown in Japan in 2013. Since the reactor-related hypotheses in Fig. 8 find little support, the coincidence of the 10-day fractionation line with an announced and seismically detected nuclear explosion 53 days earlier makes a compelling hypothesis.
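Sliding a measured ratio along the time axis of a chart like Fig. 8 amounts to decaying (or "un-decaying") it. Once xenon has been fully separated from iodine, the activity ratio R = A(131mXe)/A(133Xe) evolves as R(t) = R0·exp[(λ133 − λ131m)·t], so the elapsed time since separation follows from a measured ratio and a hypothesized starting ratio. The sketch assumes no further ingrowth, approximate half-lives of 11.9 d (131mXe) and 5.25 d (133Xe), and a starting ratio R0 supplied by whichever hypothesis is being tested; the example value of R0 is hypothetical.

```python
import math

HALF_LIFE_DAYS = {"Xe-131m": 11.9, "Xe-133": 5.25}  # approximate values


def decay_constant(isotope: str) -> float:
    return math.log(2) / HALF_LIFE_DAYS[isotope]


def ratio_after(r0: float, days: float, num: str = "Xe-131m", den: str = "Xe-133") -> float:
    """Activity ratio num/den after `days` of free decay (no ingrowth)."""
    return r0 * math.exp((decay_constant(den) - decay_constant(num)) * days)


def elapsed_days(r0: float, r_measured: float, num: str = "Xe-131m", den: str = "Xe-133") -> float:
    """Time since separation implied by a hypothesized starting ratio r0."""
    return math.log(r_measured / r0) / (decay_constant(den) - decay_constant(num))


r0 = 0.01  # hypothetical starting ratio for one hypothesis
print(round(elapsed_days(r0, ratio_after(r0, 30.0)), 6))  # 30.0
```

Each competing hypothesis supplies its own R0, so the same measured ratio implies a different elapsed time under each one.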

Fig. 8 Comparison of potential competing hypotheses for the source of the April 2013 xenon signals. The green curves correspond to xenon fission isotopes immediately separated from iodine fission isotopes, fractionated (separated) from iodine at various times from 1 h to 10 d, or kept together with iodine indefinitely.

As an aside, some have asked how the fractionation could take ten days, and the atmospheric transport the remainder of the 53 days, when atmospheric transport from a previous announced DPRK test to Takasaki required only a few days [25]. The time gas takes to migrate to the surface can depend on the fracture size and porosity of the geologic confinement of a nuclear test, and on the barometric pressure. When nuclear debris is remotely collected from the atmosphere, there are many possible leak times, from prompt to many days later. For tunnel-based nuclear tests (so-called horizontal emplacement), xenon release can trivially occur when the tunnel is opened. Thus, one possible evolution of this event is a slow leak of xenon from a prepared room into the tunnel, such that the average separation time is 10 days, followed by a release of gas with the isotopic composition shown in Fig. 8 when the tunnel is opened, and detection at Takasaki shortly thereafter.

The sort of thought process outlined compactly in the last few paragraphs is required for every underground nuclear test data evaluation, but its most rudimentary parts can be computed objectively to select anomalies and, in so doing, screen out a substantial fraction of background signals. Without human intervention, one could determine that the ratios of the experimental data in Fig. 8 are potentially consistent with reactor restart, reactor shutdown, and various explosion or fractionation hypotheses. In the future, reactor status information might be available and used to reject some of these hypotheses automatically, but in any case, the un-rejected explosion hypotheses are reason enough to select these data points for expert analysis.
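The rudimentary, automatable part of this reasoning can be sketched as a consistency test: each hypothesis predicts an isotopic ratio at the measurement time, and a hypothesis is retained if the measured ratio agrees within a chosen number of uncertainties. Because ratios span orders of magnitude, the comparison below is made in log space with a relative uncertainty. The hypothesis labels and predicted ratios in the example are hypothetical placeholders, not values read from Fig. 8.

```python
import math


def unrejected_hypotheses(measured_ratio: float, relative_sigma: float,
                          predicted: dict, n_sigma: float = 2.0) -> list:
    """Names of hypotheses whose predicted ratio is consistent with the measurement,
    comparing natural logs so that the tolerance is a relative one."""
    tolerance = n_sigma * relative_sigma  # uses ln(1 + s) ~ s for small s
    return [name for name, ratio in predicted.items()
            if abs(math.log(measured_ratio) - math.log(ratio)) <= tolerance]


# Hypothetical predictions at the measurement time for three competing sources.
predictions = {"H1": 0.045, "H2": 0.5, "H3": 0.05}
print(unrejected_hypotheses(0.05, 0.2, predictions))  # ['H1', 'H3']
```

Any hypothesis that survives this test, explosion-related or not, is passed on to the analyst rather than decided automatically.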

The example analysis represented in Fig. 8 considers only a limited set of hypotheses. Other hypotheses are possible, e.g. [66], but they have not been considered in this work. A concept for pre-calculating the range of signatures from a comprehensive set of scenarios for radioxenon released from underground nuclear explosions is presented in [67]. If the atmospheric transport at the time of the detection favors the seismic location, and if reactor shutdown and restart are not favored by schedule or location, then in this limited analysis what remains is a hypothesis involving detection 53 days after fission and fractionation that averaged about 10 days.

In summary, the radionuclide detections from this event show a 133Xe magnitude anomaly and a 131mXe magnitude anomaly, a coincidence of two single-isotope anomalies, a persistence anomaly of three positive detections for each of two isotopes, and a location anomaly (the resolved source region included the seismic event location, a declared test site, and an announced test); in addition, the event passed a substantial hypothesis test, remaining consistent with an explosion. Under human analysis, the event may also prove inconsistent with every counter-hypothesis put forward.

This case study makes the argument for developing an objective anomaly scheme. It might be tempting to end the list of anomalies for this event with the 133Xe magnitude anomaly. Indeed, the observed 133Xe concentration would be categorized as Level C today, which would be sufficient to raise this event for investigation by human analysts, if there were not too many other Level C magnitude anomalies to consider. This is precisely why additional anomaly types are important: they objectively raise the priority of investigation.

Creating a multi-measurement event, identifying it as anomalous, and screening out background are goals for the future, but the careful analysis of a collection of associated measurements is already a well-established practice that draws on the skills of several disciplines. Excellent examples of this practice, focused on this event, are Ringbom et al. [23] and [68].

We can create a new case study by modifying the April 2013 event, removing some of its magnitude anomaly. This helps explore the relative weight of the different anomalies. In this modified scenario, consider a slightly different 2013 event in which both xenon isotopes were one tenth as concentrated, presumably because of a smaller leak. The peak 133Xe concentration would be 0.3 mBq/m3 and the peak 131mXe concentration 0.06 mBq/m3. The 133Xe signals would then be similar to the February 21–22 signal, which was not elevated in importance. According to Fig. 7, the peaks of these modified signals would be at roughly the 85th percentile (133Xe) and the 50th percentile (131mXe) for that station at that time. Some of the three positive detections of each isotope in Table 1 might fall below detectability, so the persistence anomaly might be much weaker or absent. As in the February 21–22 case, the 133Xe signal is still large enough that it might trigger an event analysis, either through an 85th-percentile magnitude threshold or because multiple isotopes were detected. In contrast to the February 2013 signals, however, the presence of 131mXe in an interesting ratio would appear anomalous and consistent with explosion hypotheses. If there were sufficient positive detections, a location anomaly correlating with the seismic time and location might exist, but it also might not.
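The percentile reasoning above can be automated by ranking a new concentration within the station's own history. A minimal sketch, assuming the history is a plain list of concentrations for the relevant station and season; the function names and the invented history are hypothetical, and only the 85th-percentile threshold comes from the text.

```python
from bisect import bisect_right
from typing import List


def empirical_percentile(value: float, history: List[float]) -> float:
    """Percentage of historical values that are less than or equal to `value`."""
    if not history:
        raise ValueError("station history is empty")
    ordered = sorted(history)
    return 100.0 * bisect_right(ordered, value) / len(ordered)


def magnitude_anomaly(value: float, history: List[float], threshold_pct: float = 85.0) -> bool:
    """Flag a magnitude anomaly when `value` reaches the chosen percentile of the history."""
    return empirical_percentile(value, history) >= threshold_pct


# Invented 133Xe history (mBq/m3) for one station and season; not the data of Fig. 7.
history = [0.05, 0.08, 0.10, 0.12, 0.15, 0.20, 0.22, 0.25, 0.30, 0.35,
           0.40, 0.50, 0.60, 0.80, 1.00, 1.20, 1.50, 2.00, 2.50, 3.50]
for c in (2.6, 0.45):
    print(c, empirical_percentile(c, history), magnitude_anomaly(c, history))
```

Because the history is specific to a station and season, the same concentration can be anomalous at one station and routine at another, which is why a percentile is used here rather than a fixed concentration.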

This modified event makes a clear case that detecting a second, or possibly a third, isotope will be extremely valuable for judging consistency with civilian-source or explosion hypotheses, even when detections are marginal or fail a magnitude anomaly threshold. Thus, in addition to searching for detections that exceed an anomaly threshold, it would be beneficial to look for ratio anomalies among detected isotopes, as long as each detection exceeds a reasonable confidence threshold.
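One way to make "a reasonable confidence threshold" concrete is Currie's critical level (Currie 1968, in the reference list), which for a paired blank at α = 0.05 is L_C ≈ 2.33√B counts. The sketch below forms a ratio only when both isotopes exceed L_C. The `IsotopeResult` structure, the counts, and the simple first-order uncertainty propagation are assumptions for illustration; the concentrations echo the modified event above.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class IsotopeResult:
    """Hypothetical per-isotope result for one sample."""
    net_counts: float         # gross minus expected background counts in the region of interest
    background_counts: float  # expected background counts in the same region
    conc: float               # activity concentration, mBq/m3
    conc_sigma: float         # 1-sigma uncertainty of conc, mBq/m3


def critical_level(background_counts: float) -> float:
    """Currie's critical level for a paired blank at alpha = 0.05: 2.33 * sqrt(B)."""
    return 2.33 * math.sqrt(background_counts)


def is_detected(r: IsotopeResult) -> bool:
    return r.net_counts > critical_level(r.background_counts)


def ratio_if_detected(a: IsotopeResult, b: IsotopeResult) -> Optional[Tuple[float, float]]:
    """Ratio a/b and its 1-sigma uncertainty, or None unless both isotopes are detected."""
    if not (is_detected(a) and is_detected(b)):
        return None
    ratio = a.conc / b.conc
    # First-order propagation of independent relative uncertainties.
    rel = math.hypot(a.conc_sigma / a.conc, b.conc_sigma / b.conc)
    return ratio, ratio * rel


# Invented counting results; concentrations set to the modified event's peak values.
xe133 = IsotopeResult(net_counts=120, background_counts=100, conc=0.30, conc_sigma=0.04)
xe131m = IsotopeResult(net_counts=30, background_counts=64, conc=0.06, conc_sigma=0.015)
print(ratio_if_detected(xe133, xe131m))
```

Gating on detection before forming a ratio keeps ratio anomalies from being driven by noise in marginal measurements; the choice of α, and therefore the 2.33 factor, is a policy choice rather than a fixed rule.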

Background signals

Far from allowing a ‘smoking gun’ treatment, non-explosion atmospheric radioactivity can obscure interesting signals. Therefore, understanding the nature of background releases (magnitude, duration, frequency, isotopic composition, and geographical source location) is key to formulating anomaly definitions that produce a stream of events containing all, or nearly all, explosion signals and not too many background signals. Besides natural radioactivity, background events for xenon include:

- Normal reactor operations [38],
- Accidents, for example [70], and
- Other sources like nuclear physics research facilities [66].

Because medical isotope production is such an important background, recent studies have sought to estimate its total impact [36, 71] and to determine the isotopic composition of releases at the facility stack [72]. Background for aerosol signals is dominated by natural radioactivity from radon in the atmosphere and by leakage from civilian nuclear processes, some of which are significant backgrounds in a few regions [1].

To consider how continuous industrial processes might affect persistence, Fig. 9 compares two 3-year time periods for USX75. The light bars show detections under the influence of CRL. During this period there were 1237 positive detections (56%), and 180 samples (8%) were part of sequential detections. The cumulative detection curves in Fig. 9 indicate how often samples are detected as part of a sequence. The 80th percentile occurs at a persistence of about 7 samples without, and about 17 samples with, the CRL medical isotope facility running. While a modest number of sequential detections could raise interest, higher levels of persistence may indicate a continuous release mechanism.

This graphic compares the USX75 data for 2020–2022 in black (also shown in Fig. 5) with USX75 data from a 3-year period during operation of the medical isotope production facility at Chalk River, Canada.
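A persistence statistic like the one in Fig. 9 can be computed from a time-ordered series of detect/non-detect flags for one isotope at one station. The sketch below is an assumption about how such a statistic might be tabulated, not the procedure used for Fig. 9: it finds runs of consecutive positive detections and reads a percentile from the distribution of run lengths, weighted so that each detected sample counts once. The function names and the flag sequence are hypothetical.

```python
import math
from typing import List


def run_lengths(flags: List[bool]) -> List[int]:
    """Lengths of maximal runs of consecutive positive detections in a time-ordered series."""
    runs, current = [], 0
    for detected in flags:
        if detected:
            current += 1
        elif current:
            runs.append(current)
            current = 0
    if current:
        runs.append(current)
    return runs


def persistence_percentile(flags: List[bool], pct: float) -> int:
    """Smallest run length L such that at least pct% of detected samples belong to runs of length <= L."""
    # Weight each run by its length, so every detected sample contributes once.
    per_sample = sorted(length for length in run_lengths(flags) for _ in range(length))
    if not per_sample:
        raise ValueError("no positive detections")
    index = max(0, math.ceil(pct / 100.0 * len(per_sample)) - 1)
    return per_sample[index]


# Invented detect (True) / non-detect (False) sequence for one isotope at one station.
flags = [True, False, True, True, False, False, True, True, True, True, False, True]
print(run_lengths(flags), persistence_percentile(flags, 80))
```

A run cut off at the start or end of the record is counted as shorter than it really was; over a multi-year record this bias should be small.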

The Fukushima nuclear accident of 2011 presented an unanticipated challenge: so many stations saw so many isotopes that it became normal, not anomalous, to see those fission product isotopes in large quantities. Such an aberration is not likely to occur frequently, although the Chernobyl accident in 1986 was probably similar. One potential lesson from Fukushima is that once strength, duration, and multiple-station anomalies are clearly evident, the isotopic ratio anomaly test could bind all subsequent associated detections to one or a few radionuclide events, so that each new detection requires only an update to an existing event rather than a separate analysis. Improvements in background knowledge, in highly resolved isotopic identification, and in location capability all contribute strongly to this goal. The next-generation monitoring equipment for xenon and aerosol discussed previously should provide improved isotopic and location input for this analysis. In addition, measurement campaigns with additional samplers would help define expected background levels.
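Binding later detections to an existing event by ratio consistency might look like the sketch below. Everything in it is an illustrative assumption: an event is summarized by the ratio of its first detection and the time of its latest one, a new detection joins the first event that is both recent enough and ratio-consistent within k combined standard deviations, and otherwise it starts a new event. Ratios are assumed to be decay-corrected to a common reference time already, and atmospheric transport is ignored.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Detection:
    time_d: float  # sample collection time, days
    ratio: float   # isotopic activity ratio, assumed decay-corrected to a common reference time
    sigma: float   # 1-sigma uncertainty of the ratio


@dataclass
class Event:
    ratio: float        # reference ratio, taken from the event's first detection
    sigma: float
    last_time_d: float
    members: List[Detection] = field(default_factory=list)


def associate(detections: List[Detection], max_gap_d: float = 3.0, k: float = 2.0) -> List[Event]:
    """Greedy, time-ordered binding of detections into ratio-consistent events."""
    events: List[Event] = []
    for det in sorted(detections, key=lambda d: d.time_d):
        for ev in events:
            recent = det.time_d - ev.last_time_d <= max_gap_d
            consistent = abs(det.ratio - ev.ratio) <= k * math.hypot(det.sigma, ev.sigma)
            if recent and consistent:
                ev.members.append(det)  # an update to an existing event, not a new analysis
                ev.last_time_d = det.time_d
                break
        else:
            events.append(Event(det.ratio, det.sigma, det.time_d, [det]))
    return events


dets = [Detection(0.0, 10.0, 1.0), Detection(1.0, 11.0, 1.0), Detection(2.0, 2.0, 0.3),
        Detection(2.5, 10.5, 1.0), Detection(9.0, 10.0, 1.0)]
print([len(ev.members) for ev in associate(dets)])
```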

Table 2 presents an annual summary of isotopes detected in the IMS for 2011–2013. The International Data Centre (IDC) uses an algorithm that places detections in the anomalous categories (L4, L5) if the isotope has not been detected often. This can be seen by comparing the 2011 non-anomalous 137Cs detections (L3 = 1589) with the anomalous ones (L4 + L5 = 689): each station that reported detections required a minimum number of 137Cs detections before 137Cs became non-anomalous there. The table shows the excess of isotopes, and their frequency, in 2011 compared with 2012 and 2013. Of the ~20,000 aerosol sample measurements taken in 2011, about 1800 (9%) contained 131I and 2200 (11%) contained 137Cs, versus about 30 and 300, respectively, in previous years. See appendix I of [10] for more details.
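The frequency-based behaviour described above, in which an isotope stays anomalous at a station until it has been seen there a minimum number of times, can be illustrated with a toy rule. This is not the IDC algorithm: the threshold `min_prior_detections` and the rule that two or more anomalous isotopes in one sample give L5 are assumptions based only on the L3/L4/L5 definitions given with Table 2 (non-anomalous, single anomalous isotope, multiple anomalous isotopes).

```python
from collections import defaultdict
from typing import List, Optional, Set, Tuple


def categorize(samples: List[Tuple[str, Set[str]]], min_prior_detections: int = 5) -> List[Optional[str]]:
    """Assign a toy L3/L4/L5 level to each sample (a hypothetical rule, not the IDC algorithm).

    samples: time-ordered (station, detected_isotopes) pairs.
    An isotope counts as anomalous at a station until it has been detected there
    at least `min_prior_detections` times before the current sample.
    """
    seen = defaultdict(int)  # (station, isotope) -> number of earlier detections
    levels: List[Optional[str]] = []
    for station, isotopes in samples:
        if not isotopes:
            levels.append(None)  # nothing detected, nothing to categorize
            continue
        anomalous = [iso for iso in isotopes if seen[(station, iso)] < min_prior_detections]
        if not anomalous:
            levels.append("L3")
        elif len(anomalous) == 1:
            levels.append("L4")
        else:
            levels.append("L5")
        for iso in isotopes:
            seen[(station, iso)] += 1
    return levels


samples = [("A", {"Cs-137"}), ("A", {"Cs-137"}), ("A", {"Cs-137"}),
           ("A", {"Cs-137", "I-131"}), ("A", {"I-131", "Cs-134"}), ("A", set())]
print(categorize(samples, min_prior_detections=2))
```

A rule of this shape reproduces the qualitative pattern described for 137Cs: an isotope seen often becomes L3 at each station, but every station's first few detections of it remain flagged.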

The frequency of detection of minor isotopes in 2011 versus other years in Table 2 highlights the power of ‘presence or absence’ in a network: the mere presence of a seldom-observed isotope can indicate an anomaly. In 2011, that anomaly was a reactor accident. If even a few unusual isotopes are present in a region within a few days, that would likely be sufficiently anomalous to motivate human analysis. Isotopes seen more often, such as those of xenon and iodine, benefit from at least a ratio anomaly test.

The interest-level categorization scheme left thousands of anomalies to investigate in 2011, so it was not a sufficient filter. Comparing 131I with 133I, a comparison possible only 27 times in 2011 and in no other year, shows that the event was reactor-related and not explosion-like [29]. This method cannot dismiss every detection, however, because 133I was detected only 27 times, compared with 1700 times for 131I. Thus, the 131I detections attributable to the several release events of the Fukushima reactor accident need to be considered as one or a few events, each of which can be screened out by a ratio anomaly test.

The designations L3, L4, and L5 denote the detection interest-level categories in effect at the International Data Centre, representing, respectively, non-anomalous levels, a single anomalous isotope, and multiple anomalous isotopes.

Besides developing a new generation of monitoring equipment and studying the composition of emissions at the source, another approach to the background challenge is to conduct real-world background measurement campaigns to understand signals. The Preparatory Commission for the Comprehensive Nuclear-Test-Ban Treaty Organization (CTBTO) has organized the global monitoring community to perform local or regional measurement campaigns [27]. These have mostly consisted of placing a single monitoring device in an area where backgrounds were unknown; in a few cases, little background was seen. More recently, several systems were deployed to a region surrounded by multiple nuclear power reactors. This arrangement may allow the multi-station anomaly concept to be tested more quickly than with IMS data alone, since the IMS network is rather sparse and requires larger, rarer background signals for multi-station detection. An excellent demonstration of this concept was documented by Hoffman et al. [73], who show multi-station detections of the largest known medical isotope production emissions at stations in St. John’s, NL, Canada; Paris, France; Schauinsland, Germany; and Stockholm, Sweden. The new SAUNA QB technology allows cost-effective implementation of noble gas sensor arrays [74]. It is argued that when such arrays are used instead of a single system, the aggregated verification performance (detection, location, and characterization) can be higher. Such regional high-density networks offer frequent opportunities for multiple detections of the same release, and these data can be used to develop and validate methods for anomaly detection, screening, and event analysis that can be applied to the sparse IMS network.
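A multi-station anomaly of the kind such arrays would exercise could start from a simple coincidence count. This is a sketch under assumed inputs: each detection is a (station, collection time in hours) pair for one isotope, and a coincidence is any window of `window_h` hours, starting at a detection, in which at least `min_stations` distinct stations report. Station names are hypothetical, and transport time between stations, which a real method would take from atmospheric transport modeling, is ignored.

```python
from typing import Iterable, List, Tuple


def multistation_windows(detections: Iterable[Tuple[str, float]], window_h: float = 24.0,
                         min_stations: int = 2) -> List[Tuple[float, List[str]]]:
    """Windows, each starting at a detection time, in which >= min_stations distinct stations detect.

    detections: (station_id, collection_time_h) pairs for one isotope.
    """
    ordered = sorted(detections, key=lambda d: d[1])
    hits = []
    for i, (_, start) in enumerate(ordered):
        stations = {s for s, t in ordered[i:] if t - start <= window_h}
        if len(stations) >= min_stations:
            hits.append((start, sorted(stations)))
    return hits


# Invented detections at hypothetical stations A, B, C.
dets = [("A", 0.0), ("B", 10.0), ("C", 50.0), ("A", 100.0)]
print(multistation_windows(dets))
```

Overlapping windows can report the same coincidence more than once, so a real implementation would merge overlapping hits before creating events.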

Discussion

In discussing anomalies, there is a risk of accidentally suggesting that only signals above a threshold are important or should be used in an analysis. The authors instead assert that all measurements associated with a release of radioactivity, including non-detections, should be included in an analysis; the trigger for the analysis, however, would most likely be a positive detection above an anomaly threshold set by organizational policy, possibly influenced by available human and computational resources. Another interesting case arises when the trigger comes from another phenomenology, e.g., a seismic signal, or from an external source, e.g., an important announcement or news report.

The examples shown above require several resources for anomaly detection, which analysts likely already have available:

1. A continuous flow of monitoring data containing gamma spectroscopy results of sample measurements, and a way to transform spectra into activity concentration values from which measurement trends and frequencies can be established.
2. A stream of carefully computed atmospheric transport models based on interpolated atmospheric physics measurements.
3. Current status of all measurement facilities: which systems are currently monitoring?
4. Access to the current status of civilian nuclear industry facilities, e.g. the recent status of power reactors, and atmospheric transport modeling related to these [8]. Where possible, the history of releases from these facilities, including their isotopic composition, can be invaluable. Ideally, recent emission-versus-time data would be available as well.

Other reference resources are needed, and only occasionally updated:

5. Frequency distributions of background events, used to select the threshold deemed anomalous
6. An atlas of previously observed unusual events and backgrounds
7. Pre-computed tables of isotopic ratios from explosion sources
8. Pre-computed tables of isotopic ratios from key background processes, like those in Fig. 8, for hypothesis comparison and screening out

Before an analysis is completed, quality assurance questions must be asked:

9. Calibration and other quality measures for each station/measurement involved

While any analyst would have access to items 1–4, the experienced analyst may also have a repository of items 5–8 and would not complete any analytical report without consulting item 9 to understand the quality of the data on which the analytical results depend.

The work of the analyst or analytical team could be described as triage of the anomaly: sorting the signals into the several types of anomalies discussed here. These include:

A. Magnitude anomaly: anomalous detected magnitude of one relevant isotope, which could include very rarely detected isotopes whose presence is itself anomalous. A subset might be the presence or absence of multiple isotopes.
B. Persistence anomaly: a sequence of positive measurements that is rare for a location.
C. Location anomaly: possible ATM source locations overlap relevant locations (e.g., a seismic trigger location, or a location different from any documented background location).
D. Ratio anomaly: isotopic ratios exist and are not consistent with plausible background sources. Some background types are consistent with explosion signatures and may have their interest level lowered using ATM location and other information, like stack monitoring.
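The four anomaly types can be carried through automated processing as flags on an event record. The sketch below is hypothetical throughout: the field names, the weights, and the idea of summing weights into a single priority are illustrative choices, not part of any existing IDC scheme. How the types should be weighted against each other is exactly the open question raised by the modified 2013 case study.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AnomalyFlags:
    magnitude: bool = False    # type A
    persistence: bool = False  # type B
    location: bool = False     # type C
    ratio: bool = False        # type D


# Hypothetical weights. D is weighted highest only because isotopic ratios are the one
# feature that allows a positive consistency check with an explosion.
WEIGHTS = {"magnitude": 1.0, "persistence": 1.0, "location": 2.0, "ratio": 3.0}


def priority(flags: AnomalyFlags) -> float:
    """Sum of the weights of the anomaly types present."""
    return sum(w for name, w in WEIGHTS.items() if getattr(flags, name))


def triage(events: Dict[str, AnomalyFlags]) -> List[str]:
    """Ids of events with at least one anomaly, highest priority first."""
    scored = [(priority(f), eid) for eid, f in events.items() if priority(f) > 0]
    return [eid for _, eid in sorted(scored, key=lambda pair: pair[0], reverse=True)]


events = {
    "event-1": AnomalyFlags(magnitude=True, persistence=True, location=True, ratio=True),
    "event-2": AnomalyFlags(magnitude=True),
    "event-3": AnomalyFlags(ratio=True),
    "event-4": AnomalyFlags(),
}
print(triage(events))
```

Under these weights, a ratio anomaly without a magnitude anomaly (event-3) outranks a pure magnitude anomaly (event-2), which is the behaviour the modified case study argues for; the numbers themselves would need calibration against background and simulated release data.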

So far in the development of this idea, the authors have not considered precisely what signals from a leaking underground nuclear test would look like, other than the basic ratio hypotheses of Fig. 8. The magnitude and time profile of a release depend on geological and engineered containment failures that will be unknown to the monitoring community. Isotopic ratios are probably the only feature of a release that allows a positive consistency check with an explosion. However, all the event characteristics discussed above can be characterized for a region, and specific background sources may offer temporal data. A reasonable picture of regional background can be developed, and signals that fit that picture well can be screened out or lowered in priority for analyst review. Some analysis of the likelihood of rejecting nuclear explosion signals should be done, though, so that users can choose acceptable true- and false-acceptance rates and scale the analyst resources needed. Identifying an anomaly is just one step of the process, and this step will need to be repeated several times as additional measurements at one or multiple stations are reported. Bound up with detecting an anomaly is sample association: finding other atmospheric radionuclide measurements associated with the same source event [75]. If sample association finds additional samples with positive detections as more measurements arrive, they could easily add anomalies B, C, and perhaps D to the description of the event. This is important, when it can be done, because additional types of anomaly help screen out sources irrelevant to nuclear explosion monitoring and increase basic confidence that an important release of radionuclides was detected. Moreover, events that do not pass a magnitude threshold today might be classified as anomalous under B, C, or D in this scheme, thereby reducing the number of true events rejected under today’s scheme.
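The suggested analysis of how often explosion signals would be rejected can be framed as a threshold sweep: for each candidate threshold, compute the fraction of background events promoted to analysts and the fraction of explosion-like events retained. The sketch below assumes two lists of scores (for example, concentrations or priority values) are available, one from characterized background and one from simulated releases; both lists in the example are invented.

```python
from typing import List, Sequence, Tuple


def sweep(background: Sequence[float], explosion: Sequence[float],
          thresholds: Sequence[float]) -> List[Tuple[float, float, float]]:
    """(threshold, fraction of background promoted, fraction of explosion-like events retained)."""
    rows = []
    for th in thresholds:
        bg_pass = sum(s >= th for s in background) / len(background)
        ex_pass = sum(s >= th for s in explosion) / len(explosion)
        rows.append((th, bg_pass, ex_pass))
    return rows


# Invented scores; a real study would use characterized background and simulated releases.
background = [0.1, 0.2, 0.2, 0.3, 0.5, 0.8, 1.0, 1.5]
explosion = [0.4, 0.9, 1.2, 2.0, 3.0]
for th, bg, ex in sweep(background, explosion, [0.25, 0.75, 1.25]):
    print(f"threshold {th}: {bg:.0%} of background promoted, {ex:.0%} of explosion-like events retained")
```

The promoted-background fraction scales the analyst workload, while the retained-explosion fraction is the acceptance rate users would need to agree to.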

Another important use of anomaly triage is selecting the most effective kind of analysis. Members of the monitoring community have developed various means of combining multiple measurements, including non-detects. Various versions of these methods [76,77,78,79,80] allow aerosol and xenon measurements to be combined, and other methods allow for the presence of a frequently detected nuisance source [81]. Figure 10 shows how anomaly classification would occur after the gamma spectroscopy estimate of sample concentration is complete; the result would then feed into sample association and Bayesian event analysis. The anomaly type might determine which Bayesian formulation should be used. What Fig. 10 does not show is how iterative the process would need to be. Even in the simplest case, a 133Xe magnitude anomaly in a single measurement, the process may need to run several times to make all the input values, such as a starting value for the release magnitude, consistent. Further, the process would be repeated as new measurements are reported at the same station or at nearest-neighbor measurement locations. Event Bulletin 1 in block 5 would be rather fluid for a few days and could result in multiple analyst-reviewed bulletins and updates from block 6. No attempt has been made to fit these ideas into the regulations and opportunities of an organization like the IDC. The IDC may use expert technical analysis to produce standard screened reviewed event bulletins, use updated event bulletins to capture new results from subsequent samples, and, with waveform measurements, create fused event bulletins. These structures are outside the scope of this work, except for the concept of anomaly detection as opposed to the simple interest level of the existing categorization scheme.

A simplified block diagram showing how anomaly detection would fit in a processing sequence for radionuclide data. Block 1 could be commercial gamma spectroscopy software. Block 2 is the subject of this paper. Block 3 is the selection of adjacent measurements that might support a Bayesian analysis. Block 4 is one of several possible Bayesian algorithms tailored to the situation.
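As an illustration of how non-detects can enter a Bayesian analysis, the sketch below computes a grid posterior for a release magnitude q at one candidate location. It assumes, hypothetically, that atmospheric transport supplies a dilution factor for each sample so the predicted concentration is q times that factor, that a detection has a Gaussian likelihood with its reported uncertainty, and that a non-detect contributes the probability that the measurement would fall below its minimum detectable concentration (MDC). This is a generic textbook construction under a flat prior, not a reproduction of any of the cited methods.

```python
import math
from typing import List, Optional, Tuple

# (dilution factor from transport modeling, measured concentration or None for a non-detect,
#  1-sigma measurement uncertainty, minimum detectable concentration)
Sample = Tuple[float, Optional[float], float, float]


def norm_cdf(x: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def log_likelihood(q: float, samples: List[Sample]) -> float:
    """Log-likelihood of release magnitude q, up to a constant that does not depend on q."""
    total = 0.0
    for dilution, measured, sigma, mdc in samples:
        predicted = q * dilution
        if measured is None:
            # Non-detect: probability that a noisy measurement would fall below the MDC.
            total += math.log(max(norm_cdf((mdc - predicted) / sigma), 1e-300))
        else:
            # Detection: Gaussian measurement error around the predicted concentration.
            total += -0.5 * ((measured - predicted) / sigma) ** 2
    return total


def posterior_grid(q_values: List[float], samples: List[Sample]) -> List[float]:
    """Posterior probabilities on a grid of q values, assuming a flat prior over the grid."""
    logs = [log_likelihood(q, samples) for q in q_values]
    peak = max(logs)
    weights = [math.exp(v - peak) for v in logs]  # subtract the peak to avoid underflow
    total = sum(weights)
    return [w / total for w in weights]


# Invented example: two detections and one non-detect, arbitrary units.
samples: List[Sample] = [(1.0, 5.0, 0.5, 0.2), (0.5, 2.4, 0.3, 0.2), (0.01, None, 0.1, 0.2)]
grid = [0.5 * i for i in range(21)]
post = posterior_grid(grid, samples)
print("most probable q:", grid[post.index(max(post))])
```

The non-detect term penalizes magnitudes that would have produced a detectable concentration at that sampler, which is how non-detections constrain the release even though they carry no measured value.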

Conclusions

It is possible to imagine a continuously operating, automated processing pipeline for radionuclide monitoring data. However, there is a balancing act between missing no explosion-like signals and promoting no background events for advanced human analysis. This work explores some concepts that could constitute useful screening of background events, reducing the dataset to a manageable size while retaining a large fraction of explosion-like signals. A vision is emerging of how to exploit an established network of sensors to make confident statements about the consistency of an event with a nuclear explosion or a known background source. Ultimately, these ideas are not far removed from what an expert analyst would do today, albeit manually. The power of modern computation may remove the most time-consuming elements of the work and allow the analyst to review more potential events per unit time, with greater confidence than before.

Change history

17 May 2024

A Correction to this paper has been published: https://doi.org/10.1007/s10967-024-09519-1

References

Eslinger PW, Miley HS, Johnson CM, Sarathi RS, Schrom BT (2022) Impact of environmental backgrounds on atmospheric monitoring of nuclear explosions. Pure Appl Geophys. https://doi.org/10.1007/s00024-022-03134-5

Schoengold C, DeMarre M, Kirkwood E (1996) Radiological effluents released from US continental tests, 1961 through 1992. Revision 1. Bechtel Nevada Corp.

Schulze J, Auer M, Werzi R (2000) Low level radioactivity measurement in support of the CTBTO. Appl Radiat Isot 53:23–30. https://doi.org/10.1016/S0969-8043(00)00182-2

Werzi R (2009) The operational status of the IMS radionuclide particulate network. J Radioanal Nucl Chem 282:749. https://doi.org/10.1007/s10967-009-0270-9

Bowyer TW, Abel KH, Hubbard CW, McKinnon AD, Panisko ME, Perkins RW, Reeder PL, Thompson RC, Warner RA (1998) Automated separation and measurement of radioxenon for the comprehensive test ban treaty. J Radioanal Nucl Chem 235:77–82. https://doi.org/10.1007/bf02385941

Bowyer TW, Abel KH, Hensley WK, Panisko ME, Perkins RW (1997) Ambient 133Xe levels in the Northeast US. J Environ Radioact 37:143–153. https://doi.org/10.1016/s0265-931x(97)00005-2

McIntyre JI, Abel KH, Bowyer TW, Hayes JC, Heimbigner TR, Panisko ME, Reeder PL, Thompson RC (2001) Measurements of ambient radioxenon levels using the automated radioxenon sampler/analyzer (ARSA). J Radioanal Nucl Chem 248:629–635. https://doi.org/10.1023/A:1010672107749

Eslinger PW, Bowyer TW, Achim P, Chai T, Deconninck B, Freeman K, Generoso S, Hayes P, Heidmann V, Hoffman I, Kijima Y, Krysta M, Malo A, Maurer C, Ngan F, Robins P, Ross JO, Saunier O, Schlosser C, Schöppner M, Schrom BT, Seibert P, Stein AF, Ungar K, Yi J (2016) International challenge to predict the impact of radioxenon releases from medical isotope production on a Comprehensive Nuclear Test Ban Treaty sampling station. J Environ Radioact 157:41–51. https://doi.org/10.1016/j.jenvrad.2016.03.001

Stocki TJ, Blanchard X, D’Amours R, Ungar RK, Fontaine JP, Sohier M, Bean M, Taffary T, Racine J, Tracy BL, Brachet G, Jean M, Meyerhof D (2005) Automated radioxenon monitoring for the Comprehensive Nuclear-Test-Ban Treaty in two distinctive locations: Ottawa and Tahiti. J Environ Radioact 80:305–326. https://doi.org/10.1016/j.jenvrad.2004.10.005

Miley HS, Eslinger PW, Friese JI (2021) Examining nuisance aerosol detections in light of the origin of the screening process. Pacific Northwest National Laboratory, Richland. https://doi.org/10.2172/1843271

Biegalski SR, Bowyer TW, Eslinger PW, Friese JA, Greenwood LR, Haas DA, Hayes JC, Hoffman I, Keillor M, Miley HS, Moring M (2012) Analysis of data from sensitive U.S. monitoring stations for the Fukushima Daiichi nuclear reactor accident. J Environ Radioact 114:15–21. https://doi.org/10.1016/j.jenvrad.2011.11.007

Eslinger PW, Biegalski SR, Bowyer TW, Cooper MW, Haas DA, Hayes JC, Hoffman I, Korpach E, Yi J, Miley HS, Rishel JP, Ungar K, White B, Woods VT (2014) Source term estimation of radioxenon released from the Fukushima Dai-ichi nuclear reactors using measured air concentrations and atmospheric transport modeling. J Environ Radioact 127:127–132. https://doi.org/10.1016/j.jenvrad.2013.10.013

Miley HS, Bowyer TW, Engelmann MD, Eslinger PW, Friese JA, Greenwood LR, Haas DA, Hayes JC, Keillor ME, Kiddy RA, Kirkham RR, Landen JW, Lepel EA, Lidey LS, Litke KE, Morris SJ, Olsen KB, Thompson RC, Valenzuela BR, Woods VT, Biegalski SR (2013) Measurement of Fukushima aerosol debris in Sequim and Richland, WA and Ketchikan, AK. J Radioanal Nucl Chem 296:877–882. https://doi.org/10.1007/s10967-012-2231-y

Bowyer TW, Biegalski SR, Cooper MW, Eslinger PW, Haas DA, Hayes JC, Miley HS, Strom DJ, Woods VT (2011) Elevated radioxenon detected remotely following the Fukushima nuclear accident. J Environ Radioact 102:681–687. https://doi.org/10.1016/j.jenvrad.2011.04.009

Stohl A, Seibert P, Wotawa G, Arnold D, Burkhart JF, Eckhardt S, Tapia C, Vargas A, Yasunari TJ (2012) Xenon-133 and caesium-137 releases into the atmosphere from the Fukushima Dai-ichi nuclear power plant: determination of the source term, atmospheric dispersion, and deposition. Atmos Chem Phys 12:2313–2343. https://doi.org/10.5194/acp-12-2313-2012

Stohl A, Seibert P, Wotawa G (2012) The total release of xenon-133 from the Fukushima Dai-ichi nuclear power plant accident. J Environ Radioact 112:155–159. https://doi.org/10.1016/j.jenvrad.2012.06.001

Yasunari TJ, Stohl A, Hayano RS, Burkhart JF, Eckhardt S, Yasunari T (2011) Cesium-137 deposition and contamination of Japanese soils due to the Fukushima nuclear accident. Proc Natl Acad Sci 108:19530–19534. https://doi.org/10.1073/pnas.1112058108

Bossew P, Gering F, Petermann E, Hamburger T, Katzlberger C, Hernandez-Ceballos MA, De Cort M, Gorzkiewicz K, Kierepko R, Mietelski JW (2019) An episode of Ru-106 in air over Europe, September–October 2017—geographical distribution of inhalation dose over Europe. J Environ Radioact 205–206:79–92. https://doi.org/10.1016/j.jenvrad.2019.05.004

Saunier O, Didier D, Mathieu A, Masson O, Dumont Le Brazidec J (2019) Atmospheric modeling and source reconstruction of radioactive ruthenium from an undeclared major release in 2017. Proc Natl Acad Sci. https://doi.org/10.1073/pnas.1907823116

Tichý O, Šmídl V, Hofman R, Šindelářová K, Hýža M, Stohl A (2017) Bayesian inverse modeling and source location of an unintended I-131 release in Europe in the fall of 2011. Atmos Chem Phys Discuss 2017:1–24. https://doi.org/10.5194/acp-2017-206

Mietelski JW, Povinec PP (2020) Environmental radioactivity aspects of recent nuclear accidents associated with undeclared nuclear activities and suggestion for new monitoring strategies. J Environ Radioact 214–215:106151. https://doi.org/10.1016/j.jenvrad.2019.106151

De Meutter P, Camps J, Delcloo A, Termonia P (2018) Source localisation and its uncertainty quantification after the third DPRK nuclear test. Sci Rep 8:10155. https://doi.org/10.1038/s41598-018-28403-z

Ringbom A, Axelsson A, Aldener M, Auer M, Bowyer TW, Fritioff T, Hoffman I, Khrustalev K, Nikkinen M, Popov V, Popov Y, Ungar K, Wotawa G (2014) Radioxenon detections in the CTBT international monitoring system likely related to the announced nuclear test in North Korea on February 12, 2013. J Environ Radioact 128:47–63. https://doi.org/10.1016/j.jenvrad.2013.10.027

Becker A, Wotawa G, Ringbom A, Saey PRJ (2010) Backtracking of noble gas measurements taken in the aftermath of the announced October 2006 event in North Korea by means of PTS methods in nuclear source estimation and reconstruction. Pure Appl Geophys 167:581–599. https://doi.org/10.1007/s00024-009-0025-0

Ringbom A, Elmgren K, Lindh K, Peterson J, Bowyer TW, Hayes JC, McIntyre JI, Panisko M, Williams R (2009) Measurements of radioxenon in ground level air in South Korea following the claimed nuclear test in North Korea on October 9, 2006. J Radioanal Nucl Chem 282:773–779. https://doi.org/10.1007/s10967-009-0271-8

Saey PRJ, Bean M, Becker A, Coyne J, d’Amours R, De Geer LE, Hogue R, Stocki TJ, Ungar RK, Wotawa G (2007) A long distance measurement of radioxenon in Yellowknife, Canada, in late October 2006. Geophys Res Lett 34:L20802. https://doi.org/10.1029/2007gl030611

Baré J, Gheddou A, Kalinowski MB (2023) Overview of temporary radioxenon background measurement campaigns conducted for the CTBTO between 2008 and 2018. J Environ Radioact 257:107053. https://doi.org/10.1016/j.jenvrad.2022.107053

Kalinowski M, Grosch M, Hebel S (2014) Global xenon-133 emission inventory caused by medical isotope production and derived from the worldwide technetium-99m demand. Pure Appl Geophys 171:707–716. https://doi.org/10.1007/s00024-013-0687-5

Kalinowski MB, Liao Y-Y (2014) Isotopic characterization of radioiodine and radioxenon in releases from underground nuclear explosions with various degrees of fractionation. Pure Appl Geophys 171:677–692. https://doi.org/10.1007/s00024-012-0580-7

Kalinowski MB, Liao Y-Y, Pistner C (2014) Discrimination of nuclear explosions against civilian sources based on atmospheric radioiodine isotopic activity ratios. Pure Appl Geophys 171:669–676. https://doi.org/10.1007/s00024-012-0564-7

Steinhauser G, Lechermann M, Axelsson A, Böck H, Ringbom A, Saey PJ, Schlosser C, Villa M (2013) Research reactors as sources of atmospheric radioxenon. J Radioanal Nucl Chem 296:169–174. https://doi.org/10.1007/s10967-012-1949-x

Saey PRJ, Ringbom A, Bowyer TW, Zähringer M, Auer M, Faanhof A, Labuschagne C, Al-Rashidi MS, Tippawan U, Verboomen B (2012) Worldwide measurements of radioxenon background near isotope production facilities, a nuclear power plant and at remote sites: the “EU/JA-II” project. J Radioanal Nucl Chem. https://doi.org/10.1007/s10967-012-2025-2

Saey PRJ, Schlosser C, Achim P, Auer M, Axelsson A, Becker A, Blanchard X, Brachet G, Cella L, De Geer L-E, Kalinowski MB, Le Petit G, Peterson J, Popov V, Popov Y, Ringbom A, Sartorius H, Taffary T, Zähringer M (2010) Environmental radioxenon levels in Europe: a comprehensive overview. Pure Appl Geophys 167:499–515. https://doi.org/10.1007/s00024-009-0034-z

Saey PRJ, Bowyer TW, Ringbom A (2010) Isotopic noble gas signatures released from medical isotope production facilities—simulations and measurements. Appl Radiat Isot 68:1846–1854. https://doi.org/10.1016/j.apradiso.2010.04.014

Brander S, Baur S, Krais R, Ross JO, Orr A, Sayne R, Howard M, Mayer M, Panisko M, Hayes JC, Bollhöfer A (2022) Phase II testing of xenon international on mount Schauinsland, Germany. J Environ Radioact 255:107034. https://doi.org/10.1016/j.jenvrad.2022.107034

Saey PRJ (2009) The influence of radiopharmaceutical isotope production on the global radioxenon background. J Environ Radioact 100:396–406. https://doi.org/10.1016/j.jenvrad.2009.01.004

Kalinowski MB, Tayyebi P, Lechermann M, Tatlisu H (2021) Global radioxenon emission inventory from nuclear research reactors. Pure Appl Geophys 178:2711–2739. https://doi.org/10.1007/s00024-021-02719-w

Kalinowski MB, Tatlisu H (2020) Global radioxenon emission inventory from nuclear power plants for the calendar year 2014. Pure Appl Geophys. https://doi.org/10.1007/s00024-020-02579-w

Maurer C, Galmarini S, Solazzo E, Kuśmierczyk-Michulec J, Baré J, Kalinowski M, Schoeppner M, Bourgouin P, Crawford A, Stein A, Chai T, Ngan F, Malo A, Seibert P, Axelsson A, Ringbom A, Britton R, Davies A, Goodwin M, Eslinger PW, Bowyer TW, Glascoe LG, Lucas DD, Cicchi S, Vogt P, Kijima Y, Furuno A, Long PK, Orr B, Wain A, Park K, Suh KS, Quérel A, Saunier O, Quélo D (2022) Third international challenge to model the medium- to long-range transport of radioxenon to four Comprehensive Nuclear-Test-Ban Treaty monitoring stations. J Environ Radioact 255:106968. https://doi.org/10.1016/j.jenvrad.2022.106968

Eslinger PW, Ely JH, Lowrey JD, Miley HS (2022) Projected network performance for multiple isotopes using next-generation xenon monitoring systems. J Environ Radioact 251–252:106963. https://doi.org/10.1016/j.jenvrad.2022.106963

Kalinowski MB (2023) Global emission inventory of 131mXe, 133Xe, 133mXe, and 135Xe from all kinds of nuclear facilities for the reference year 2014. J Environ Radioact 261:107121. https://doi.org/10.1016/j.jenvrad.2023.107121

Generoso S, Achim P, Morin M, Gross P, Le Petit G, Moulin C (2018) Seasonal variability of Xe-133 global atmospheric background: characterization and implications for the international monitoring system of the Comprehensive Nuclear-Test-Ban Treaty. J Geophys Res Atmos 123:1865–1882. https://doi.org/10.1002/2017JD027765

Gueibe C, Kalinowski MB, Baré J, Gheddou A, Krysta M, Kusmierczyk-Michulec J (2017) Setting the baseline for estimated background observations at IMS systems of four radioxenon isotopes in 2014. J Environ Radioact 178–179:297–314. https://doi.org/10.1016/j.jenvrad.2017.09.007

IAEA (2022) Energy, electricity and nuclear power estimates for the period up to 2050: 2022 Edition. Reference data series No. 1. International Atomic Energy Agency, Vienna, Austria

Ringbom A, Aldener M, Axelsson A, Fritioff T, Kastlander J, Mortsell A, Olsson H (2017) Analysis of data from an intercomparison between a Sauna II and a Sauna III system. Paper presented at the Science and Technology 2017 Conference, Vienna, Austria

Topin S, Gross P, Achim P, Generoso S, Cagniant A, Delaune O, Morin M, Philippe T, Fontaine J-P, Moulin C, Douysset G, Le Petit G (2020) 6 months of radioxenon detection in western Europe with the SPALAX-new generation system—part 1: metrological capabilities. J Environ Radioact 225:106442. https://doi.org/10.1016/j.jenvrad.2020.106442

Haas DA, Eslinger PW, Bowyer TW, Cameron IM, Hayes JC, Lowrey JD, Miley HS (2017) Improved performance comparisons of radioxenon systems for low level releases in nuclear explosion monitoring. J Environ Radioact 178–179:127–135. https://doi.org/10.1016/j.jenvrad.2017.08.005

Hayes JC, Harper W, Panisko M, Cooper MW (2018) Xenon international overview. United States. https://doi.org/10.2172/1472068

Miley HS, Burnett JL, Chepko AB, Devoy CL, Eslinger PW, Forrester JB, Friese JI, Lidey LS, Morris SJ, Schrom BT, Stokes S, Swanwick ME, Smart JE, Warren GA (2019) Design considerations for future radionuclide aerosol monitoring systems. J Environ Radioact 208–209:106037. https://doi.org/10.1016/j.jenvrad.2019.106037

Britton R, Davies AV (2022) Next-generation particulate monitoring. Appl Radiat Isot. https://doi.org/10.1016/j.apradiso.2022.110156

Eslinger PW, Miley HS (2022) Projected network performance for next-generation xenon monitoring systems. J Environ Radioact 251–252:106976. https://doi.org/10.1016/j.jenvrad.2022.106976

Eslinger PW, Miley HS, Burnett JL, Lidey LS, Mendez JM, Schrom BT, Sharma MK (2023) Projected network performance for next generation aerosol monitoring systems. J Environ Radioact 257:107088. https://doi.org/10.1016/j.jenvrad.2022.107088

Currie LA (1968) Limits for qualitative detection and quantitative determination: application to radiochemistry. Anal Chem 40:586–593. https://doi.org/10.1021/ac60259a007

Foltz Biegalski K, Biegalski S (2001) Determining detection limits and minimum detectable concentrations for noble gas detectors utilizing beta–gamma coincidence systems. J Radioanal Nucl Chem 248:673–682. https://doi.org/10.1023/a:1010684410475

Burnett JL, Miley HS, Bowyer TW, Cameron IM (2018) The 2014 integrated field exercise of the Comprehensive Nuclear-Test-Ban Treaty revisited: the case for data fusion. J Environ Radioact 189:175–181. https://doi.org/10.1016/j.jenvrad.2018.03.013

Postelt F (2014) Potential of spectrum categorisation concepts using radionuclide ratios for Comprehensive Nuclear-Test-Ban Treaty verification. Pure Appl Geophys 171:693–697. https://doi.org/10.1007/s00024-012-0577-2

Kalinowski MB, Pistner C (2006) Isotopic signature of atmospheric xenon released from light water reactors. J Environ Radioact 88:215–235. https://doi.org/10.1016/j.jenvrad.2006.02.003

Zhao LF, Xie XB, Wang WM, Yao ZX (2014) The 12 February 2013 North Korean underground nuclear test. Seismol Res Lett 85:130–134. https://doi.org/10.1785/0220130103

Selby ND (2010) Relative locations of the October 2006 and May 2009 DPRK announced nuclear tests using international monitoring system seismometer arrays. Bull Seismol Soc Am 100:1779–1784. https://doi.org/10.1785/0120100006

Zhao L-F, Xie X-B, Wang W-M, Yao Z-X (2008) Regional seismic characteristics of the 9 October 2006 North Korean nuclear test. Bull Seismol Soc Am 98:2571–2589. https://doi.org/10.1785/0120080128

Murphy J, Stevens J, Kohl B, Bennett T (2013) Advanced seismic analyses of the source characteristics of the 2006 and 2009 North Korean nuclear tests. Bull Seismol Soc Am 103:1640–1661. https://doi.org/10.1785/0120120194

Eslinger PW, Schrom BT (2016) Multi-detection events, probability density functions, and reduced location area. J Radioanal Nucl Chem 307:1599–1605. https://doi.org/10.1007/s10967-015-4339-3

Sanger DE (2006) North Koreans say they tested nuclear device. The New York Times. https://www.nytimes.com/2006/10/09/world/asia/09korea.html. Accessed July 31 2023

Kijima Y, Schoemaker R, Liu B, Kunkle J, Tipka A, Kuśmierczyk-Michulec J, Kalinowski M (2023) Investigation on atmospheric radioactivity sample association using consistency with isotopic ratio decay over time at IMS radionuclide stations. J Environ Radioact 270:107301. https://doi.org/10.1016/j.jenvrad.2023.107301

Kalinowski M, Axelsson A, Bean M, Blanchard X, Bowyer T, Brachet G, Hebel S, McIntyre J, Peters J, Pistner C, Raith M, Ringbom A, Saey P, Schlosser C, Stocki T, Taffary T, Kurt Ungar R (2010) Discrimination of nuclear explosions against civilian sources based on atmospheric xenon isotopic activity ratios. Pure Appl Geophys 167:517–539. https://doi.org/10.1007/s00024-009-0032-1

Eslinger PW, Ely J, Cooper MW, Foxe M, Hayes JC, Mayer MF, Panisko ME, Sarathi R (2022) Determining the source of unusual xenon isotopes in samples. J Environ Radioact 247:106853. https://doi.org/10.1016/j.jenvrad.2022.106853

Liu B, Kalinowski M, Sun Y, Carrigan CR, Saragiotis C, Wang J, Ertl M, Kijima Y, Schoemaker R, Kuśmierczyk-Michulec J, Tipka A, Antoun T (2023) Characterization of CTBT-relevant radioxenon detections at IMS stations using isotopic activity ratio analysis. Pure Appl Geophys. https://doi.org/10.1007/s00024-023-03291-1

Kijima Y, Kalinowski M, Liu B, Kuśmierczyk-Michulec J, Schoemaker R, Tipka A (2022) Sample association by using anomalous concentration episodes and decay-consistent isotopic ratios at IMS radionuclide stations. In: Paper presented at the EGU General Assembly 2022, Vienna, Austria.

Achim P, Generoso S, Morin M, Gross P, Le Petit G, Moulin C (2016) Characterization of Xe-133 global atmospheric background: implications for the international monitoring system of the Comprehensive Nuclear-Test-Ban Treaty. J Geophys Res Atmos 121:4951–4966. https://doi.org/10.1002/2016JD024872

Achim P, Monfort M, Le Petit G, Gross P, Douysset G, Taffary T, Blanchard X, Moulin C (2014) Analysis of radionuclide releases from the Fukushima Dai-ichi nuclear power plant accident part II. Pure Appl Geophys 171:645–667. https://doi.org/10.1007/s00024-012-0578-1

Eslinger PW, Bowyer TW, Doll CG, Friese JI, Metz LA, Schrom BT (2022) Using STAX data to predict IMS radioxenon concentrations. J Environ Radioact 250:106916. https://doi.org/10.1016/j.jenvrad.2022.106916

Metz L, Bowyer T, Burnett J, Dion M, Eslinger P, Friese J, Doll C, McIntyre J, Schrom B (2022) Source term analysis of xenon (STAX): an effort focused on differentiating man-made isotope production from nuclear explosions via stack monitoring. J Environ Radioact 255:107037. https://doi.org/10.1016/j.jenvrad.2022.107037

Hoffman I, Ungar K, Bean M, Yi J, Servranckx R, Zaganescu C, Ek N, Blanchard X, Le Petit G, Brachet G, Achim P, Taffary T (2009) Changes in radioxenon observations in Canada and Europe during medical isotope production facility shut down in 2008. J Radioanal Nucl Chem 282:767–772. https://doi.org/10.1007/s10967-009-0235-z

Ringbom A, Fritioff T, Aldener M, Axelsson A, Elmgren K, Hellesen C, Karlkvist L, Kastlander J, Olsson H, Berglund H, Hellman B, Pettersson O (2023) SAUNA QB - array: the realization of a new concept in radioxenon detection. J Environ Radioact 261:107136. https://doi.org/10.1016/j.jenvrad.2023.107136

Eslinger PW, Miley HS, Schrom BT (2022) Investigations of association among atmospheric radionuclide measurements. J Environ Radioact 241:106777. https://doi.org/10.1016/j.jenvrad.2021.106777

Jensen DD, Lucas DD, Lundquist KA, Glascoe LG (2019) Sensitivity of a Bayesian source-term estimation model to spatiotemporal sensor resolution. Atmos Environ X 3:100045. https://doi.org/10.1016/j.aeaoa.2019.100045

Eslinger PW, Lowrey JD, Miley HS, Rosenthal WS, Schrom BT (2019) Source term estimation using multiple xenon isotopes in atmospheric samples. J Environ Radioact 204:111–116. https://doi.org/10.1016/j.jenvrad.2019.04.004

De Meutter P, Hoffman I (2020) Bayesian source reconstruction of an anomalous selenium-75 release at a nuclear research institute. J Environ Radioact 218:106225. https://doi.org/10.1016/j.jenvrad.2020.106225

De Meutter P, Hoffman I, Ungar K (2020) On the model uncertainties in Bayesian source reconstruction using the emission inverse modelling system FREARtool v1.0 and the Lagrangian transport and dispersion model Flexpart v9.0.2. Geosci Model Dev Discuss 2020:1–23. https://doi.org/10.5194/gmd-2020-162

Eslinger PW, Lowrey JD, Miley HS, Rosenthal WS, Schrom BT (2020) Source type estimation using noble gas samples. J Environ Radioact 225:106439. https://doi.org/10.1016/j.jenvrad.2020.106439

Eslinger PW, Mendez JM, Schrom BT (2019) Source term estimation in the presence of nuisance signals. J Environ Radioact 203:220–225. https://doi.org/10.1016/j.jenvrad.2019.03.022

Acknowledgements

This Low Yield Nuclear Monitoring (LYNM) research was funded by the National Nuclear Security Administration, Defense Nuclear Nonproliferation Research and Development (NNSA DNN R&D). The authors acknowledge important interdisciplinary collaboration with scientists and engineers from LANL, LLNL, NNSS, PNNL, and SNL. Pacific Northwest National Laboratory (PNNL) is operated by Battelle for the U.S. Department of Energy under Contract DE-AC05-76RL01830. The authors acknowledge the valuable contribution by Real d’Amours and Gerhard Wotowa with respect to modeling the 2006 CRL contributions at Yellowknife. The views expressed herein are those of the authors and do not necessarily reflect the views of the United States Government, other governmental agencies, or the CTBTO Preparatory Commission.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original article has been revised due to an error in the copyright holder name.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Miley, H.S., Eslinger, P.W., Bowyer, T.W. et al. In the nuclear explosion monitoring context, what is an anomaly? J Radioanal Nucl Chem 333, 1681–1697 (2024). https://doi.org/10.1007/s10967-024-09411-y

DOI: https://doi.org/10.1007/s10967-024-09411-y