Abstract

Studying independent sets of maximum size is equivalent to considering the hard-core model with the fugacity parameter \(\lambda \) tending to infinity. Finding the independence ratio of random d-regular graphs for some fixed degree d has received much attention both in random graph theory and in statistical physics. For \(d \ge 20\) the problem is conjectured to exhibit 1-step replica symmetry breaking (1-RSB). The corresponding 1-RSB formula for the independence ratio was confirmed for (very) large d in a breakthrough paper by Ding, Sly, and Sun. Furthermore, the so-called interpolation method shows that this 1-RSB formula is an upper bound for each \(d \ge 3\). For \(d \le 19\) this bound is not tight and full-RSB is expected. In this work we use numerical optimization to find good substituting parameters for discrete r-RSB formulas (\(r=2,3,4,5\)) to obtain improved rigorous upper bounds for the independence ratio for each degree \(3 \le d \le 19\). As r grows, these formulas get increasingly complicated and it becomes challenging to compute their numerical values efficiently. Also, the functions to minimize have a large number of local minima, making global optimization a difficult task.



1 Introduction

This paper is concerned with the independence ratio of random regular graphs. A graph is said to be regular if each vertex has the same degree. For a fixed degree d, let \(\mathbb {G}(N,d)\) be a uniform random d-regular graph on N vertices. Note that \(\mathbb {G}(N,d)\) has a “trivial local structure” in the sense that with high probability almost all vertices have the same local neighborhood: \(\mathbb {G}(N,d)\) almost surely converges locally to the d-regular tree \(T_d\) as \(N \rightarrow \infty \). In statistical physics \(T_d\) is also known as the Bethe lattice. In fact, Mézard and Parisi used the expression Bethe lattice to refer to \(\mathbb {G}(N,d)\) (see [21] for example), and proposed to study various models on these random graphs.

In a graph, an independent set is a set of vertices, no two of which are adjacent, that is, the induced subgraph has no edges. The independence ratio of a graph is the size of its largest independent set normalized by the number of vertices. For any fixed degree \(d \ge 3\), the independence ratio of \(\mathbb {G}(N,d)\) is known to converge to some constant \(\alpha ^*_d\) as \(N \rightarrow \infty \) [4]. Determining \(\alpha ^*_d\) is a major challenge of the area. The cavity method, a non-rigorous statistical physics tool, led to a 1-step replica symmetry breaking (1-RSB) formula for \(\alpha ^*_d\). The authors of [5] also argued that the formula may be exact for \(d \ge 20\), which is widely believed to be indeed the case. Later this 1-RSB formula was confirmed to be exact for (very) large d in the seminal paper of Ding et al. [10].

Lelarge and Oulamara [19] used the interpolation method to rigorously establish the 1-RSB formula as an upper bound for every \(d \ge 3\). This approach also provides r-step RSB bounds for any \(r \ge 2\). The problem is that these formulas get increasingly complicated and fully solving the corresponding optimization problems seems to be out of reach. Can we, at least, get an estimate or a bound?

The parameters of the r-RSB bound include a functional order parameter which can be thought of as a measure. The optimal measure satisfies a certain self-consistency equation. We cannot hope for an exact solution, so the natural instinct is to try to find an approximate solution. In the physics literature an iterative randomized algorithm called population dynamics is often used to find an approximate solution in the 1-RSB (and occasionally in the 2-RSB) setting for various models. This sounds like a promising approach but we came to the surprising conclusion that for the hard-core model it may be a better strategy to forget about the equation altogether and search among “simple” measures. It seems to be possible to get very close to the global optimum using atomic measures with a moderate number of atoms. Furthermore, when we only have a few atoms, we can tune their weights and locations to a great precision, and this seems to outweigh the advantage of having a more “delicate” measure (but being unable to tune it to the same precision).

Moreover, using a small number of atoms means that we can compute the value exactly and the interpolation method ensures that what we get is always a rigorous upper bound. In contrast, population dynamics only gives an estimate for the value of the bound because for large populations one simply cannot compute the corresponding bound precisely and has to settle for an estimate based on a sample.

Therefore, our approach is that we try to find local minima of the discrete version (corresponding to atomic measures) using a computer. Even this is a formidable challenge as we will see. Table 1 shows the best bounds we found via numerical optimization.

These may seem to be small improvements but we actually expect the true values to be fairly close to our new bounds. In particular, for \(d=3\) it is reasonable to conjecture that the bound is sharp up to at least five decimal digits, that is, \(\alpha ^*_3 = 0.45078...\).

We also have improvements for \(9 \le d \le 19\). However, as the degree approaches the threshold \(d=20\) (from which point on 1-step replica symmetry breaking is believed to be the truth), the 1-RSB bound gets sharper and our improvement gets smaller. For more details, see the tables in the Appendix.

1.1 Upper Bound Formulas

In Sect. 2 we will explain the RSB bounds in detail. Here we only display a few formulas in order to give the reader an idea of the optimization tasks we are faced with.

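For comparison, we start with the replica symmetric (RS) bound: for any \(\lambda >1\) and any \(x \in [0,1]\) we have

$$\begin{aligned} \alpha ^*_d \le \frac{\log \big (1+\lambda (1-x)^d\big ) - \frac{d}{2} \log \big (1-x^2\big )}{\log \lambda } . \end{aligned}\tag{1}$$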

Here the fugacity parameter \(\lambda \) is the “reward” for including a vertex in the independent set, while x can be thought of as the probability that a cavity vertex is included. Then the right-hand side expresses the change in the free energy when adding a star (i.e., connecting a new vertex to d cavities) versus adding d/2 edges between cavities. Choosing \(\lambda \) and x optimally leads to the exact same formula as the Bollobás bound from 1981 [6], which was based on a first moment calculation for the number of independent sets of a given size. Actually, this relatively simple bound is already asymptotically tight: both the bound and \(\alpha ^*_d\) are \(\big ( 2 + o_d(1) \big ) \frac{\log d}{d}\), where the matching asymptotic lower bound is due to Frieze and Łuczak [11].
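To make (1) concrete, here is a minimal sketch (our own illustration, not the authors' code) that evaluates its right-hand side and minimizes it with SciPy's L-BFGS-B. Writing \(\lambda = e^t\), the starting point, and the box bounds on t and x are our own choices for numerical convenience.

```python
import numpy as np
from scipy.optimize import minimize


def rs_bound(lam, x, d):
    """Right-hand side of the RS bound (1); an upper bound for lam > 1, x in [0, 1)."""
    star = np.log1p(lam * (1.0 - x) ** d)   # adding a star: a new vertex joined to d cavities
    edge = np.log1p(-x * x)                 # adding one edge between two cavities
    return (star - 0.5 * d * edge) / np.log(lam)


def best_rs_bound(d, t0=6.0, x0=0.87):
    """Minimize (1) over t = log(lam) and x, starting from (t0, x0)."""
    objective = lambda v: rs_bound(np.exp(v[0]), v[1], d)
    res = minimize(objective, x0=[t0, x0], method="L-BFGS-B",
                   bounds=[(0.1, 30.0), (1e-6, 1.0 - 1e-6)])
    return float(res.fun), float(np.exp(res.x[0])), float(res.x[1])


val, lam, x = best_rs_bound(3)
print(f"d=3: RS bound {val:.5f} at lambda={lam:.1f}, x={x:.4f}")
```

Any admissible \((\lambda, x)\) gives a valid bound, so the optimizer only serves to make it as small as possible.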

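The 1-RSB bound says that for any \(\lambda _0>1\) and any \(q \in [0,1]\):

$$\begin{aligned} \alpha ^*_d \le \frac{\log \big (1+(\lambda _0-1)(1-q)^d\big ) - \frac{d}{2} \log \big (1-(1-\lambda _0^{-1})\, q^2\big )}{\log \lambda _0} . \end{aligned}\tag{2}$$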

Choosing \(\lambda _0\) and q optimally leads to an (implicit) formula for \(\alpha ^*_d\). As we mentioned, this 1-RSB bound is conjectured to be sharp for any \(d \ge 20\) and known to be sharp for sufficiently large d.
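The same kind of two-parameter search works for (2). The sketch below is our illustration; the parameterization \(\lambda_0 = e^t\), the starting point, and the bounds are assumptions made for convenience.

```python
import numpy as np
from scipy.optimize import minimize


def one_rsb_bound(lam0, q, d):
    """Right-hand side of the 1-RSB bound (2) for lam0 > 1 and q in [0, 1]."""
    star = np.log1p((lam0 - 1.0) * (1.0 - q) ** d)
    edge = np.log1p(-(1.0 - 1.0 / lam0) * q * q)
    return (star - 0.5 * d * edge) / np.log(lam0)


def best_one_rsb_bound(d, t0=2.0, q0=0.6):
    """Minimize (2) over t = log(lam0) > 0 and q, starting from (t0, q0)."""
    objective = lambda v: one_rsb_bound(np.exp(v[0]), v[1], d)
    res = minimize(objective, x0=[t0, q0], method="L-BFGS-B",
                   bounds=[(0.05, 20.0), (1e-6, 1.0 - 1e-6)])
    return float(res.fun), float(np.exp(res.x[0])), float(res.x[1])


for d in (3, 4, 5):
    val, lam0, q = best_one_rsb_bound(d)
    print(f"d={d}: 1-RSB bound {val:.5f} (lambda_0={lam0:.3f}, q={q:.4f})")
```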

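Heavy notation would be needed to describe the r-step RSB bounds in general. In order to keep the introduction concise, we only give (a discretized version of) the formula for the case \(r=2\): for any \(\lambda _0 >1\), \(0<m<1\), and any \(p_1, \ldots , p_n, q_1, \ldots , q_n \in [0,1]\) with \(p_1 + \cdots + p_n = 1\) we have

$$\begin{aligned} \alpha ^*_d \le \frac{1}{m \log \lambda _0} \bigg ( \log \sum _{i_1,\ldots ,i_d=1}^{n} p_{i_1}\cdots p_{i_d} \Big (1+(\lambda _0-1)(1-q_{i_1})\cdots (1-q_{i_d}) \Big )^{m} - \frac{d}{2} \log \sum _{i,j=1}^{n} p_i p_j \Big (1-(1-\lambda _0^{-1})\, q_i q_j \Big )^{m} \bigg ) . \end{aligned}\tag{3}$$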

The number of parameters for general r is roughly \(2 n_1 \ldots n_{r-1}\), where \(n_k\) denotes the number of atoms used at the different layers, so the dimension of the parameter space grows exponentially in r, see Sect. 2.3 for details.
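A minimal NumPy sketch of the discrete 2-RSB bound (3) (our illustration; `outer_power` is a helper name we introduce). The d-fold sum over \((i_1,\ldots,i_d)\) becomes a sum over a d-dimensional array built from repeated outer products.

```python
import numpy as np
from functools import reduce


def outer_power(vec, d):
    """d-fold outer product: entry (i_1, ..., i_d) equals vec[i_1] * ... * vec[i_d]."""
    return reduce(np.multiply.outer, [vec] * d)


def two_rsb_bound(lam0, m, p, q, d):
    """Right-hand side of (3): atom weights p (summing to 1) and atom locations q."""
    p, q = np.asarray(p, float), np.asarray(q, float)
    star = np.sum(outer_power(p, d) * (1.0 + (lam0 - 1.0) * outer_power(1.0 - q, d)) ** m)
    edge = np.sum(np.outer(p, p) * (1.0 - (1.0 - 1.0 / lam0) * np.outer(q, q)) ** m)
    return (np.log(star) - 0.5 * d * np.log(edge)) / (m * np.log(lam0))


def one_rsb_bound(lam0, q, d):
    """Right-hand side of (2), used here only for comparison."""
    star = np.log1p((lam0 - 1.0) * (1.0 - q) ** d)
    edge = np.log1p(-(1.0 - 1.0 / lam0) * q * q)
    return (star - 0.5 * d * edge) / np.log(lam0)


# A single atom (n = 1) reduces (3) to (2) for every m.
print(two_rsb_bound(7.0, 0.3, [1.0], [0.6], 3), one_rsb_bound(7.0, 0.6, 3))
print(two_rsb_bound(7.0, 0.3, [0.2, 0.3, 0.5], [0.3, 0.6, 0.9], 3))
```

The array for the star term has \(n^d\) entries, which is why the cost grows quickly with d.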

1.2 The Case of Degree 3

One can plug any concrete choice of parameter values into (3) to get a bound for the independence ratio. To demonstrate the strength of (3) even for small n, we include here an example for a 2-RSB bound for \(d=3\), \(n=4\): the values

give a bound \(\alpha ^*_3< 0.450789952<0.45079\) that already comfortably beats the currently best bound (\(\approx 0.45086\)). Table 2 shows our best bounds for \(d=3\).

As for lower bounds for small d, the best results have been achieved by so-called local algorithms. Table 3 lists a few selected works and the obtained bounds for \(\alpha ^*_3\).

Note that a beautiful result of Rahman and Virág [25], building on a work of Gamarnik and Sudan [14], says that asymptotically (as \(d \rightarrow \infty \)) local algorithms can only produce independent sets of half the maximum size (over random regular graphs). For small d, however, the independence ratio produced by local algorithms may be the same as (or very close to) \(\alpha ^*_d\).

1.3 Optimization

We wrote Python/SAGE code to perform the numerical optimization for the replica bounds.

-

The first task was to efficiently compute the r-RSB formulas and their derivatives w.r.t. the parameters.

-

Then we used standard algorithms to perform local optimization starting from random points. As the parameter space grows, more attempts are required to find an appropriate starting point leading to a good local optimum.

-

Eventually we start to encounter a rugged landscape with a huge number of local minima, where we cannot expect to get close to the global optimum even after trying a large number of starting points. In order to overcome this obstacle, for \(d=3\) we used a technique called basin hopping. In each step, the algorithm randomly visits a “nearby” local minimum, favoring steps to smaller values. This approach led to the discovery of our best bounds for \(d=3\).

-

The smaller the degree d, the deeper we could go in the replica hierarchy (i.e., use larger r). We could perform the 3-RSB optimization for \(d \le 10\), the 4-RSB optimization for \(d \le 6\), and the 5-RSB optimization for \(d=3\).

See Sect. 3.2 for further details about the implementation.

Although the bounds are hard to find, they are easy to check: one simply needs to plug the specific parameter values into the given formulas. We created a website with interactive SAGE code where the interested reader may check the claimed bounds and even run simple optimizations: https://www.renyi.hu/~harangi/rsb.htm. Our code can be found in the public GitHub repository https://github.com/harangi/rsb.

1.4 2-RSB in the Literature

As far as we know, there was only one previous attempt to get an estimate for the 2-RSB formula (only for \(d=3\)). In [5] one reads that “the 2-RSB calculation is [...] somewhat involved and was done in [24] [and obtained the value] 0.45076(7)”. Rivoire’s thesis [24] indeed briefly reports on a 2-RSB calculation. Note that, since he considers the equivalent vertex-cover problem (concerning the complements of independent sets), we need to subtract his value from 1 to get our value. On page 113 he writes that using population dynamics he obtained the following estimate: \(0.54924 \pm 0.00007\). For our problem this means \(0.45076 \pm 0.00007 = [0.45069,0.45083]\). The value 0.45076(7) in [5] may have come from mistakenly using an error \(\pm 0.000007\) instead of \(\pm 0.00007\) when citing Rivoire’s work. The thesis only provides a short description of how this estimate was obtained. The author refers to it as “unfactored” 1-RSB and it seems to be the same as what we call a non-standard 1-RSB in our remarks after Theorem 2.2. If that is indeed the case, then our findings suggest that its true value should actually be around 0.45081.

1.5 Outline of the Paper

In Sect. 2 we present the general replica bounds and their discrete versions that we need to optimize. Section 3 contains details about the numerical optimization. In Sect. 4 we revisit the \(r=1\) case and investigate more sophisticated choices for the functional order parameter. The Appendix contains a table listing our best bounds for different values of d and r (Sect. 5) and an overview of the interpolation method for the hard-core model over random regular graphs (Sect. 6).

2 Replica Formulas

Originally the cavity method and belief propagation were non-rigorous techniques in statistical physics to predict the free energy of various models. They inspired a large body of rigorous work, and over the years several predictions were confirmed. In particular, the so-called interpolation method has been used with great success to establish rigorous upper bounds on the free energy.

In the context of the hard-core model over random d-regular graphs, the interpolation method was carried out by Lelarge and Oulamara in [19], building on the pioneering works [12, 13, 23]. First we present the general r-step RSB bound obtained this way.

2.1 The General Replica Bound

For a topological space \(\Omega \) let \(\mathcal {P}(\Omega )\) denote the space of Borel probability measures on \(\Omega \) equipped with the weak topology. We set \(\mathcal {P}^0 := [0,1]\) and then recursively \(\mathcal {P}^k := \mathcal {P}\big (\mathcal {P}^{k-1}\big )\) for \(k \ge 1\). The general bound will have the following parameters:

-

\(\lambda >1\);

-

\(0< m_1, \ldots , m_r <1\) corresponding to the so-called Parisi parameters;

-

a measure \(\eta ^{(r)} \in \mathcal {P}^r\).

Definition 2.1

Given a fixed \(\eta ^{(r)} \in \mathcal {P}^r\), we choose (recursively for \(k=r-1,r-2,\ldots ,1\)) a random \(\eta ^{(k)} \in \mathcal {P}^k\) with distribution \(\eta ^{(k+1)}\). Finally, given \(\eta ^{(1)}\) we choose a random \(x \in [0,1]\) with distribution \(\eta ^{(1)}\). In fact, we will need d independent copies of this random sequence, indexed by \(\ell \in \{1,\ldots , d\}\). Schematically:

$$\begin{aligned} \eta ^{(r)} \rightarrow \eta _\ell ^{(r-1)} \rightarrow \cdots \rightarrow \eta _\ell ^{(1)} \rightarrow x_\ell , \qquad \ell =1,\ldots ,d. \end{aligned}$$

For \(1 \le k \le r\) we define \(\mathcal {F}_k\) as the \(\sigma \)-algebra generated by \(\eta _\ell ^{(r-1)}, \ldots , \eta _\ell ^{(k)}\), \(\ell =1,\ldots ,d\), and by \(\mathbb {E}_k\) we denote the conditional expectation w.r.t. \(\mathcal {F}_k\). Note that \(\mathcal {F}_r\) is the trivial \(\sigma \)-algebra and hence \(\mathbb {E}_r\) is simply \(\mathbb {E}\).

Given a random variable V (depending on the variables \(\eta _\ell ^{(k)}, x_\ell \)), let us perform the following procedure: raise it to power \(m_1\), then apply \(\mathbb {E}_1\), raise the result to power \(m_2\), then apply \(\mathbb {E}_2\), and so on. In formula, let

$$\begin{aligned} T_0(V) := V \end{aligned}$$

and recursively for \(k=1, \ldots , r\) set

$$\begin{aligned} T_k(V) := \mathbb {E}_k \Big [ T_{k-1}(V)^{m_k} \Big ] . \end{aligned}$$

In this scenario, applying \(\mathbb {E}_k\) means that, given \(\eta _\ell ^{(k)}\), \(\ell =1,\ldots ,d\), we take expectation in \(\eta _\ell ^{(k-1)}\), \(\ell =1,\ldots ,d\) (or in \(x_\ell \) if \(k=1\)).

Now we are ready to state the r-RSB bound given by the interpolation method.

Theorem 2.2

Let \(r \ge 1\) be a positive integer and \(\lambda , m_1, \ldots , m_r, \eta ^{(r)}\) parameters as described above. Let \(x_\ell \), \(\ell =1,\ldots ,d\) denote the random variables obtained from \(\eta ^{(r)}\) via the procedure in Definition 2.1. Then we have the following upper bound for the asymptotic independence ratio \(\alpha ^*_d\) of random d-regular graphs:

$$\begin{aligned} \alpha ^*_d \le \frac{\log T_r\big (1+\lambda (1-x_1)\cdots (1-x_d)\big ) - \frac{d}{2} \log T_r\big (1-x_1 x_2\big )}{m_1 \cdots m_r \log \lambda } . \end{aligned}$$

This was rigorously proved in [19]. They actually considered a more general setting incorporating a class of (random) models over a general class of random hypergraphs (with given degree distributions). They used the hard-core model over d-regular graphs as their chief example, working out the specific formulas corresponding to their general RS and 1-RSB bounds. Theorem 2.2 follows from their general r-RSB bound [19, Theorem 3] exactly the same way as in the RS and 1-RSB case.

We should make a number of remarks at this point.

-

Above we slightly deviated from the standard notation as the usual form of the Parisi parameters would be

$$\begin{aligned} 0< \hat{m}_1< \cdots< \hat{m}_r < 1 , \end{aligned}$$where \(\hat{m}_k\) can be expressed in terms of our parameters \(m_k\) as follows:

$$\begin{aligned} \hat{m}_r = m_1; \quad \hat{m}_{r-1} = m_1 m_2; \quad \ldots ; \quad \hat{m}_{1} = m_1 m_2 \cdots m_r . \end{aligned}$$As a consequence, the indexing of \(\mathcal {F}_k\), \(\mathbb {E}_k\), \(T_k\) is in reverse order, and the definition of \(T_k\) simplifies a little because raising to power \(1/\hat{m}_{r-k+2}\) and then immediately to \(\hat{m}_{r-k+1}\) (as done, for example, in [23]) amounts to a single exponent \(\hat{m}_{r-k+1}/\hat{m}_{r-k+2}=m_k\) in our setting.

-

Also, generally there is an extra layer of randomness (starting from an \(\eta ^{(r+1)} \in \mathcal {P}^{r+1}\)) resulting in another expectation outside the \(\log \). This random choice is meant to capture the local structure of the graph in a given direction. However, when the underlying graph is d-regular (meaning that essentially all vertices see the same graph structure locally), we do not need this layer of randomness (in principle). Therefore, in the d-regular case one normally chooses a trivial \(\eta ^{(r+1)} = \delta _{\eta ^{(r)}}\). That is why we omitted \(\eta ^{(r+1)}\) and started with a deterministic \(\eta ^{(r)}\).

For \(d \ge 20\), where the 1-RSB bound is (conjectured to be) tight, the optimal choice of parameters indeed uses a trivial \(\eta ^{(r+1)} = \delta _{\eta ^{(r)}}\) with r being 1 in this case.

For \(d \le 19\), the same choice gives us a 1-RSB upper bound (which is not tight any more). Let us call this the standard 1-RSB bound, and, in general, we call an r-RSB bound standard if it was obtained by using a deterministic \(\eta ^{(r)}\) at the start. Then a non-standard bound would use \(\eta ^{(r+1)}\) (and hence random \(\eta _\ell ^{(r)}\) variables). Note that a non-standard r-RSB bound is actually a special case of standard \((r+1)\)-RSB bounds in the limit \(m_{r+1} \rightarrow 0\). So even though it is possible to improve on standard r-step bounds by non-standard r-step bounds, it actually makes more sense to use the extra layer to move to \((r+1)\)-step bounds instead (and use some positive \(m_{r+1}\)).

-

The full RSB picture is well-understood for the famous Sherrington–Kirkpatrick model [22, 26], where the infimum of the r-RSB bound converges to the free energy as \(r \rightarrow \infty \). It is reasonable to conjecture that this is the case for the hard-core model as well. There is some progress towards this in [8] where a variational formula is obtained for \(\alpha _d^*\).
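The change of variables in the first remark above can be checked with a few lines of code. This is our own sketch; the function names are hypothetical.

```python
import numpy as np


def standard_parisi_parameters(m):
    """Map (m_1, ..., m_r) to the increasing standard parameters (hat m_1, ..., hat m_r).

    hat m_{r-j+1} = m_1 * ... * m_j, so hat m_1 = m_1 * ... * m_r is the smallest one.
    """
    partial_products = np.cumprod(m)        # m_1, m_1 m_2, ..., m_1 ... m_r
    return partial_products[::-1]           # hat m_1, ..., hat m_r


def our_parameters(m_hat):
    """Inverse map: m_1 = hat m_r and m_k = hat m_{r-k+1} / hat m_{r-k+2} for k >= 2."""
    rev = np.asarray(m_hat, float)[::-1]    # hat m_r, hat m_{r-1}, ..., hat m_1
    return np.concatenate(([rev[0]], rev[1:] / rev[:-1]))


m = np.array([0.5, 0.8, 0.9])
m_hat = standard_parisi_parameters(m)
print(m_hat, our_parameters(m_hat))
```

Since every \(m_k\) lies in \((0,1)\), the partial products decrease, which is exactly why the standard parameters come out increasing.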

2.2 A Specific Choice

The formula in Theorem 2.2 would be hard to work with numerically because it would only give good results for very large \(\lambda \). So we make a specific choice (similar to the one made in [19, Section 3.2.1] in the case \(r=1\)) that may not be optimal but will allow us to use numerical optimization. We consider the limit \(\lambda \rightarrow \infty \) and \(m_1 \rightarrow 0\) in a way that \(m_1 \log \lambda \) stays constant and x is concentrated on the two-element set \(\{0, 1-1/\lambda \}\), meaning that \(\eta ^{(1)}\) is a distribution \(q \delta _{1-1/\lambda } + (1-q) \delta _0\) for some random \(q \in [0,1]\).

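For a fixed \(\lambda _0>1\) let \(\log \lambda _0 = m_1 \log \lambda \). First we focus on the expressions \(T_1 \big ( 1+ \lambda (1-x_1)\cdots (1-x_d) \big )\) and \(T_1 (1-x_1 x_2)\). If each \(x_\ell \in \{0, 1-1/\lambda \}\) were fixed, we would have the following in the limit as \(\lambda \rightarrow \infty \), \(m_1 \rightarrow 0\) with \(m_1 \log \lambda = \log \lambda _0\):

$$\begin{aligned} \big (1+\lambda (1-x_1)\cdots (1-x_d)\big )^{m_1} &\rightarrow {\left\{ \begin{array}{ll} \lambda _0 & \text{ if } x_1=\cdots =x_d=0,\\ 1 & \text{ otherwise;} \end{array}\right. } \\ \big (1-x_1x_2\big )^{m_1} &\rightarrow {\left\{ \begin{array}{ll} \lambda _0^{-1} & \text{ if } x_1=x_2=1-1/\lambda ,\\ 1 & \text{ otherwise.} \end{array}\right. } \end{aligned}$$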

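Therefore, conditioned on

$$\begin{aligned} \eta _\ell ^{(1)} = q_\ell \, \delta _{1-1/\lambda } + (1-q_\ell )\, \delta _0 , \qquad \ell =1,\ldots ,d, \end{aligned}$$

for some deterministic \(q_1, \ldots , q_d \in [0,1]\), we get

$$\begin{aligned} T_1\big (1+\lambda (1-x_1)\cdots (1-x_d)\big ) &\rightarrow 1+(\lambda _0-1)(1-q_1)\cdots (1-q_d) ; \\ T_1\big (1-x_1x_2\big ) &\rightarrow 1-(1-\lambda _0^{-1})\, q_1 q_2 . \end{aligned}\tag{4}$$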

In the resulting formula the randomness in layer 1 disappears along with the Parisi parameter \(m_1\). After re-indexing (\(k \rightarrow k-1\)) we get the following corollary.

Corollary 2.3

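Let \(\lambda _0>1\) and \(0< m_1, \ldots , m_{r-1} <1\). Furthermore, fix a deterministic \(\pi ^{(r-1)} \in \mathcal {P}^{r-1}\) and take d independent copies of recursive sampling:

$$\begin{aligned} \pi ^{(r-1)} \rightarrow \pi _\ell ^{(r-2)} \rightarrow \cdots \rightarrow \pi _\ell ^{(1)} \rightarrow q_\ell \in [0,1] , \qquad \ell =1,\ldots ,d. \end{aligned}$$

We define the conditional expectations \(\mathbb {E}_k\) and the corresponding \(T_k\) as before, w.r.t. this new system of random variables. Then

$$\begin{aligned} \alpha ^*_d \le \frac{\log T_{r-1}\big (1+(\lambda _0-1)(1-q_1)\cdots (1-q_d)\big ) - \frac{d}{2} \log T_{r-1}\big (1-(1-\lambda _0^{-1})\, q_1 q_2\big )}{m_1 \cdots m_{r-1} \log \lambda _0} . \end{aligned}$$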

Proof

For a formal proof one needs to define an \(\eta ^{(r)}=\eta _\lambda ^{(r)} \in \mathcal {P}^r\) for the fixed \(\pi ^{(r-1)}\) and any given \(\lambda \) such that the corresponding \(\eta _\ell ^{(1)}\) is distributed as \(q_\ell \delta _{1-1/\lambda } + (1-q_\ell ) \delta _0\). Then Theorem 2.2 can be applied and we get the new formula in the limit. \(\square \)
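The limits behind (4) can also be checked numerically. The sketch below (ours, with hypothetical function names) evaluates the expressions at large but finite \(\lambda\) with \(m_1 = \log\lambda_0/\log\lambda\). Convergence is slow: the error is of order \(m_1 \log 2\).

```python
import itertools
import numpy as np


def star_term(lam0, lam, k):
    """(1 + lam * prod(1 - x_l))^{m_1} when k of the x_l equal 1 - 1/lam and the others are 0."""
    m1 = np.log(lam0) / np.log(lam)             # so that m_1 * log(lam) = log(lam0)
    # prod(1 - x_l) = lam^{-k}, hence 1 + lam * prod(1 - x_l) = 1 + lam^{1-k}.
    return np.exp(m1 * np.logaddexp(0.0, (1 - k) * np.log(lam)))


def edge_term(lam0, lam, both_one):
    """(1 - x_1 x_2)^{m_1}; if x_1 = x_2 = 1 - 1/lam, then 1 - x_1 x_2 = (2 - 1/lam) / lam."""
    if not both_one:
        return 1.0                              # at least one x is 0, so x_1 x_2 = 0
    m1 = np.log(lam0) / np.log(lam)
    return np.exp(m1 * (np.log(2.0 - 1.0 / lam) - np.log(lam)))


def t1_star(lam0, lam, q):
    """T_1 of the star term at finite lam, with independent x_l ~ q_l delta_{1-1/lam} + (1-q_l) delta_0."""
    total = 0.0
    for ones in itertools.product((0, 1), repeat=len(q)):
        weight = np.prod([ql if o else 1.0 - ql for ql, o in zip(q, ones)])
        total += weight * star_term(lam0, lam, sum(ones))
    return total


def t1_star_limit(lam0, q):
    """The limit in (4): 1 + (lambda_0 - 1) * prod(1 - q_l)."""
    return 1.0 + (lam0 - 1.0) * np.prod(1.0 - np.asarray(q))


for lam in (1e6, 1e50, 1e300):
    print(f"lambda={lam:.0e}: T_1 star = {t1_star(3.0, lam, [0.2, 0.5, 0.7]):.5f}, "
          f"limit = {t1_star_limit(3.0, [0.2, 0.5, 0.7]):.5f}")
```

Working with logarithms avoids overflow in \(1+\lambda\) and cancellation in \(1-x_1x_2\) for huge \(\lambda\).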

2.3 Discrete Versions

In our numerical computations we will use the bound of Corollary 2.3 in the special case when each distribution is discrete.

For \(r=1\) we have a deterministic q and we get back (2), while \(r=2\) gives (3).

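Let \(r=3\). For any \(\lambda _0 >1\), \(0<m_1,m_2<1\), \(p_i \ge 0\) with \(\sum p_i=1\), \(p_{i,j} \ge 0\) with \(\sum _j p_{i,j}=1\) for every fixed i, and \(q_{i,j} \in [0,1]\) we get that

$$\begin{aligned} \alpha ^*_d \le \frac{1}{m_1 m_2 \log \lambda _0} \Bigg [ & \log \sum _{i_1,\ldots ,i_d} p_{i_1}\cdots p_{i_d} \Big ( \sum _{j_1,\ldots ,j_d} p_{i_1,j_1}\cdots p_{i_d,j_d} \Big (1+(\lambda _0-1)\prod _{\ell =1}^d (1-q_{i_\ell ,j_\ell }) \Big )^{m_1} \Big )^{m_2} \\ & - \frac{d}{2} \log \sum _{i_1,i_2} p_{i_1}p_{i_2} \Big ( \sum _{j_1,j_2} p_{i_1,j_1}p_{i_2,j_2} \big (1-(1-\lambda _0^{-1})\, q_{i_1,j_1}q_{i_2,j_2} \big )^{m_1} \Big )^{m_2} \Bigg ] . \end{aligned}$$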

For a general \(r \ge 1\), we will index our parameters \(p_s,q_s\) with sequences \(s=\big ( s^{(1)},\ldots ,s^{(k)} \big )\) of length \(|s|=k \le r-1\). We denote the empty sequence (of length 0) by \(\emptyset \). Furthermore, we write \(s' \succ s\) if \(s'\) is obtained by adding an element to the end of s, that is, \(|s'|=|s|+1\) and the first |s| elements coincide.

Now let S be some set of sequences of length at most \(r-1\) such that \(\emptyset \in S\). We partition S into two parts \(S_{\le r-2} \cup S_{r-1}\) based on whether the length of the sequence is at most \(r-2\) or exactly \(r-1\), respectively.

Now the discrete version of the r-RSB bound has the following parameters:

-

\(\lambda _0>1\);

-

\(0<m_1, \ldots , m_{r-1}<1\);

-

\(p_{s} \ge 0\), \(s \in S\), satisfying

$$\begin{aligned} \sum _{s' \succ s} p_{s'} = 1 \text{ for } \text{ each } s \in S_{\le r-2} ; \end{aligned}$$ -

\(q_{s} \in [0,1]\), \(s \in S_{r-1}\).

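Now we define the distribution \(\pi ^{(r-1)} \in \mathcal {P}^{r-1}\) corresponding to the parameters \(p_s, q_s\). Set

$$\begin{aligned} \pi _s := q_s \in [0,1] = \mathcal {P}^0 \quad \text{ for } s \in S_{r-1}, \end{aligned}$$

and then, recursively for \(k=r-2,r-3,\ldots ,1,0\), for a sequence s of length \(|s|=k\) let

$$\begin{aligned} \pi _s := \sum _{s' \succ s} p_{s'} \, \delta _{\pi _{s'}} \in \mathcal {P}^{r-1-k} . \end{aligned}$$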

We want to use Corollary 2.3 with \(\pi ^{(r-1)} := \pi _\emptyset \). The obtained bound can be expressed as follows.

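For any d-tuple \(s_1,\ldots ,s_d\) of sequences of length \(r-1\), set

$$\begin{aligned} R^{\textrm{star}}_{s_1,\ldots ,s_d} := 1+(\lambda _0-1)(1-q_{s_1})\cdots (1-q_{s_d}) , \end{aligned}$$

and then, recursively for \(k=r-1, r-2, \ldots , 1\), for any d-tuple \(s_1,\ldots ,s_d\) of sequences of length \(k-1\) let

$$\begin{aligned} R^{\textrm{star}}_{s_1,\ldots ,s_d} := \sum _{s'_1 \succ s_1, \ldots , s'_d \succ s_d} p_{s'_1}\cdots p_{s'_d} \Big ( R^{\textrm{star}}_{s'_1,\ldots ,s'_d} \Big )^{m_{r-k}} . \end{aligned}\tag{5}$$

Similarly, for any pair \(s_1,s_2\) of sequences of length \(r-1\), set

$$\begin{aligned} R^{\textrm{edge}}_{s_1,s_2} := 1-(1-\lambda _0^{-1})\, q_{s_1} q_{s_2} , \end{aligned}$$

and then, recursively for \(k=r-1, r-2, \ldots , 1\), for any pair \(s_1,s_2\) of sequences of length \(k-1\), let

$$\begin{aligned} R^{\textrm{edge}}_{s_1,s_2} := \sum _{s'_1 \succ s_1, s'_2 \succ s_2} p_{s'_1} p_{s'_2} \Big ( R^{\textrm{edge}}_{s'_1,s'_2} \Big )^{m_{r-k}} . \end{aligned}$$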

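Then the bound is

$$\begin{aligned} \alpha ^*_d \le \frac{\log R^{\textrm{star}}_{\emptyset ,\ldots ,\emptyset } - \frac{d}{2} \log R^{\textrm{edge}}_{\emptyset ,\emptyset }}{m_1 \cdots m_{r-1} \log \lambda _0} . \end{aligned}\tag{6}$$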
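The recursive definitions of \(R^{\textrm{star}}\) and \(R^{\textrm{edge}}\) translate directly into a short reference implementation. The sketch below is ours. It stores \(p_s, q_s\) in dictionaries keyed by tuples, uses 0-based sequence elements, and is only meant for tiny cases because its cost is exponential. It checks two reductions: \(r=1\) gives (2), and an \(r=3\) instance whose atoms do not depend on the second index collapses to \(r=2\) with \(m = m_1 m_2\).

```python
import itertools
import math
import random


def children(s, n):
    """All sequences s' > s (one element appended) in the [n_1, ..., n_{r-1}] setup."""
    return [s + (i,) for i in range(n[len(s)])]


def r_value(seqs, leaf, p, m, n):
    """R_{s_1,...,s_j} of Sect. 2.3 by direct recursion; all seqs have the same length k."""
    r1 = len(n)                                  # r - 1
    k = len(seqs[0])
    if k == r1:
        return leaf(seqs)
    exponent = m[r1 - k - 1]                     # m_{r-(k+1)} in the 1-based notation of (5)
    total = 0.0
    for kids in itertools.product(*(children(s, n) for s in seqs)):
        weight = math.prod(p[c] for c in kids)
        total += weight * r_value(list(kids), leaf, p, m, n) ** exponent
    return total


def rsb_bound(lam0, m, p, q, n, d):
    """Discrete r-RSB bound (6) with r = len(n) + 1; q maps sequences of length r-1 to atoms."""
    star_leaf = lambda seqs: 1.0 + (lam0 - 1.0) * math.prod(1.0 - q[s] for s in seqs)
    edge_leaf = lambda seqs: 1.0 - (1.0 - 1.0 / lam0) * q[seqs[0]] * q[seqs[1]]
    star = r_value([()] * d, star_leaf, p, m, n)
    edge = r_value([()] * 2, edge_leaf, p, m, n)
    return (math.log(star) - 0.5 * d * math.log(edge)) / (math.prod(m) * math.log(lam0))


# r = 1: a single deterministic atom q_emptyset gives back the 1-RSB bound (2).
c = rsb_bound(7.0, [], {}, {(): 0.6}, [], 3)

# r = 3 with q_{i,j} = q_i for every j collapses to r = 2 with m = m_1 * m_2.
rng = random.Random(1)
p2, q2 = {(0,): 0.3, (1,): 0.7}, {(0,): 0.4, (1,): 0.8}
p3, q3 = dict(p2), {}
for i in range(2):
    w = [rng.random() for _ in range(3)]
    for j in range(3):
        p3[(i, j)] = w[j] / sum(w)
        q3[(i, j)] = q2[(i,)]
a = rsb_bound(5.0, [0.4, 0.7], p3, q3, [2, 3], 3)
b = rsb_bound(5.0, [0.4 * 0.7], p2, q2, [2], 3)
print(a, b, c)
```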

Remark 2.4

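Normally we fix integers \(n_1, \ldots , n_{r-1} \ge 2\) and assume that the k-th elements of our sequences come from the set \(\{1,\ldots , n_k\}\). This way the number of free parameters (after taking the sum restrictions on the parameters \(p_s\) into account) is

$$\begin{aligned} 1 + (r-1) + \big ( n_1 \cdots n_{r-1} - 1 \big ) + n_1 \cdots n_{r-1} = 2\, n_1 \cdots n_{r-1} + r - 1 , \end{aligned}\tag{7}$$

where the four terms count \(\lambda _0\), the Parisi parameters \(m_k\), the weights \(p_s\), and the atom locations \(q_s\), respectively.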

In the tables of Sect. 3.1 and the Appendix we will refer to such a parameter space as \([n_1, \ldots , n_{r-1}]\).
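The count in (7) can be checked layer by layer. The sketch below (our illustration) counts the free weights \(n_1\cdots n_{k-1}(n_k-1)\) at each layer k, one sum constraint per parent sequence. These layer counts telescope to \(n_1 \cdots n_{r-1} - 1\).

```python
import math


def num_free_parameters(n):
    """Free parameters of the discrete r-RSB bound in the [n_1, ..., n_{r-1}] setup, r = len(n) + 1.

    lambda_0 contributes 1, the Parisi parameters m_1..m_{r-1} contribute r - 1, the weights
    at layer k contribute n_1 ... n_{k-1} (n_k - 1), and the atoms q_s contribute n_1 ... n_{r-1}.
    """
    r = len(n) + 1
    free_weights = sum(math.prod(n[:k]) * (n[k] - 1) for k in range(len(n)))
    return 1 + (r - 1) + free_weights + math.prod(n)


for n in ([4], [3, 3], [2, 2, 2]):
    print(n, num_free_parameters(n))
```

For example, the 2-RSB setup [4] used for \(d=3\) in Sect. 1.2 has 9 free parameters.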

3 Numerical Optimization

3.1 Numerical Results

Our starting point was the observation in [5] that the 1-RSB formula for \(\alpha ^*_d\) “is stable towards more steps of replica symmetry breaking” only for \(d \ge 20\), so it should not be exact for \(d \le 19\). Therefore the 2-RSB bound in Corollary 2.3 ought to provide an improved upper bound for some choice of \(\lambda _0,m_1,\pi ^{(1)}\). The optimal \(\pi ^{(1)}\) may be continuous. Can we achieve significant improvement on the 1-RSB bound even by using some atomic measure \(\pi ^{(1)} = \sum _{i=1}^n p_i \delta _{q_i}\)? In other words, can we find good substituting values for the parameters \(p_i,q_i\) of the discrete version (3) using numerical optimization? We were skeptical because we may not be able to use a large enough n to get a good atomic approximation of the optimal \(\pi ^{(1)}\). Surprisingly, based on our findings it appears that even a small number of atoms may yield close-to-optimal bounds. Table 4 shows our best 2-RSB bounds for \(d=3\) and for different values of n.

Of course, we do not know what the true infimum of the bound in Corollary 2.3 is, but our bounds seem to stabilize very quickly as we increase the number of atoms (n). Also, we experimented with various other approaches that would allow for better approximations of continuous distributions, and they all suggested that the bounds in Table 4 are close to optimal.

This gave us hope that further improvements might be possible by considering r-step replica bounds for \(r \ge 3\), even though, due to computational limitations, the number of atoms we can use at each layer is very small. Table 5 shows some bounds we obtained for \(d=3\) and \(r \ge 3\) using different parameter spaces (see Remark 2.4).

The dimension of the parameter space (7) depends only on \(r,n_1,\ldots ,n_{r-1}\) and not on the degree d. However, as we increase d, computing \(R^{\textrm{star}}\) (see Sect. 2.3) and its derivative takes longer and we have to settle for using smaller values of r and \(n_k\). At the same time, the 1-RSB formula is presumably getting closer to the truth as we are approaching the phase transition between \(d=19\) and \(d=20\). Nevertheless, we tried to achieve as much improvement as we could for each degree \(d=3,\ldots , 19\). See the Appendix for results for \(d \ge 4\).

3.2 Implementation

3.2.1 Efficient Computation

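According to (6), our RSB upper bound for \(\alpha ^*_d\) reads as

$$\begin{aligned} \frac{\log R^{\textrm{star}}_{\emptyset ,\ldots ,\emptyset } - \frac{d}{2} \log R^{\textrm{edge}}_{\emptyset ,\emptyset }}{m_1 \cdots m_{r-1} \log \lambda _0} , \end{aligned}\tag{8}$$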

where \(R_{\emptyset ,\ldots ,\emptyset }^{\textrm{star}}\) and \(R_{\emptyset ,\emptyset }^{\textrm{edge}}\) were defined recursively through \(r-1\) steps, each step involving a multifold summation, see Sect. 2.3 for details. So our task is to minimize (8) as a function of the parameters. During optimization the function and its partial derivatives need to be evaluated at a large number of locations, so it was crucial for us to write code that computes them efficiently. Instead of doing the summations with for loops, the idea is to utilize the powerful array manipulation tools of the Python library NumPy. In particular, one can efficiently perform element-wise calculations or block summations on the multidimensional arrays of NumPy.

First we show how \(R_{\emptyset ,\ldots ,\emptyset }^{\textrm{star}}\) can be obtained using such tools. Recall that \(S_k\) contains sequences of length k and we have a parameter \(p_s\) for any \(s \in S_1 \cup \cdots \cup S_{r-1}\) and \(q_s\) for any \(s \in S_{r-1}\). In particular, in the \([n_1, \ldots , n_{r-1}]\) setup we have \(|S_k|=n_1 \cdots n_k\). We do the following steps.

-

\(v_k\): vector of length \(|S_k|\) consisting of \(p_s\), \(s \in S_k\) (\(1 \le k \le r-1\)).

-

\(P_k\): d-dimensional array of size \(|S_k| \times \cdots \times |S_k|\) obtained by “multiplying” d copies of \(v_k\). (Each element of \(P_k\) is a product \(p_{s_1}\cdots p_{s_d}\) for some \(s_1,\ldots ,s_d \in S_k\).)

-

\(M_{r-1}\): d-dimensional array of size \(|S_{r-1}| \times \cdots \times |S_{r-1}|\) obtained by “multiplying” d copies of the vector consisting of \(1-q_s\), \(s \in S_{r-1}\), then multiply each element by \(\lambda _0-1\) and add 1; cf. (4).

-

Then, recursively for \(k=r-1,r-2, \ldots ,1\), given the \(|S_k| \times \cdots \times |S_k|\) array \(M_k\) we obtain \(M_{k-1}\) as follows: we raise \(M_k\) to the power of \(m_{r-k}\) and multiply by \(P_k\) (both element-wise), and perform a block summation: in the \([n_1,\ldots ,n_{r-1}]\) setup we divide the array into \(n_k \times \cdots \times n_k\) blocks and replace each with the sum of the elements in the block; cf. (5).

-

At the end \(M_0\) will have a single element equal to \(R_{\emptyset ,\ldots ,\emptyset }^{\textrm{star}}\).

One can compute \(R_{\emptyset ,\ldots ,\emptyset }^{\textrm{edge}}\) similarly, using two-dimensional arrays this time.
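The steps above can be sketched with NumPy as follows. This is our reconstruction from the description, not the code in the authors' repository. It assumes that the sequences of each length are stored in lexicographic order, so the children of a parent occupy a contiguous block of length \(n_k\). Under that ordering, block summation is a reshape followed by a sum over every second axis.

```python
import numpy as np
from functools import reduce


def outer_power(vec, dim):
    """dim-fold outer product of vec with itself (the "multiplying d copies" step)."""
    return reduce(np.multiply.outer, [vec] * dim)


def block_sum(arr, nk):
    """Replace each nk x ... x nk block of arr by the sum of its entries."""
    dim = arr.ndim
    parent = arr.shape[0] // nk
    blocks = arr.reshape((parent, nk) * dim)          # axes: parent_1, child_1, parent_2, ...
    return blocks.sum(axis=tuple(range(1, 2 * dim, 2)))


def root_value(weights, leaves, m, n):
    """weights[k-1] = v_k, leaves = M_{r-1}; returns the single entry of M_0."""
    r1 = len(n)
    M = leaves
    for k in range(r1, 0, -1):                        # k = r-1, r-2, ..., 1
        P = outer_power(weights[k - 1], M.ndim)       # P_k
        M = block_sum(M ** m[r1 - k] * P, n[k - 1])   # exponent m_{r-k}, then block summation
    return M.item()


def rsb_bound_arrays(lam0, m, weights, q, n, d):
    """Bound (8) in the [n_1, ..., n_{r-1}] setup; q lists q_s for s in S_{r-1}."""
    q = np.asarray(q, float)
    star_leaves = 1.0 + (lam0 - 1.0) * outer_power(1.0 - q, d)     # M_{r-1}, cf. (4)
    edge_leaves = 1.0 - (1.0 - 1.0 / lam0) * outer_power(q, 2)     # two-dimensional version
    star = root_value(weights, star_leaves, m, n)
    edge = root_value(weights, edge_leaves, m, n)
    return (np.log(star) - 0.5 * d * np.log(edge)) / (np.prod(m) * np.log(lam0))


v1 = np.array([0.3, 0.7])
v2 = np.array([0.2, 0.5, 0.3, 0.6, 0.1, 0.3])   # children weights, one block of 3 per parent
q2 = np.array([0.4, 0.8])
a3 = rsb_bound_arrays(5.0, [0.4, 0.7], [v1, v2], np.repeat(q2, 3), [2, 3], 3)
a2 = rsb_bound_arrays(5.0, [0.4 * 0.7], [v1], q2, [2], 3)
single = rsb_bound_arrays(7.0, [], [], [0.6], [], 3)
print(a3, a2, single)
```

The check mirrors the direct recursion: repeating each first-layer atom across its children reduces \(r=3\) to \(r=2\) with \(m = m_1 m_2\).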

Note that during the computation of \(R_{\emptyset ,\ldots ,\emptyset }^{\textrm{star}}\) all the d-dimensional arrays are invariant under any permutation of the d axes. This means that the same products appear in many instances, hence the same calculations are repeated many times in the approach above. However, this is typically more than compensated for by the fact that all the calculations can be done in one sweep using powerful array tools. Nevertheless, when the degree d gets above 7, we do use another approach in the 2-RSB setting \(d \, \, [n]\). In advance, we create a list containing all partitions of d into the sum of n nonnegative integers \(d=a_1+\cdots +a_n\) (with the order of the terms taken into account). We also store the corresponding multinomial coefficients \(\left( {\begin{array}{c}d\\ a_1,\ldots ,a_n\end{array}}\right) \) in a vector. Then, at each function call, we go through the list of partitions and compute

$$\begin{aligned} p_1^{a_1} \cdots p_n^{a_n} \Big ( 1+(\lambda _0-1)(1-q_1)^{a_1}\cdots (1-q_n)^{a_n} \Big )^{m} , \end{aligned}$$

storing the values in a vector. Then we simply need to take the dot product with the precalculated vector containing the multinomial coefficients.
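A minimal sketch of this partition approach (ours; the function names are hypothetical). It enumerates the ordered partitions by "stars and bars" and checks the result against the brute-force sum over all \(n^d\) index tuples. For \(d=8\) and \(n=3\) there are 45 partitions instead of 6561 tuples.

```python
import itertools
import math
import numpy as np


def compositions(d, n):
    """All (a_1, ..., a_n) with nonnegative a_i and a_1 + ... + a_n = d (order matters)."""
    for bars in itertools.combinations(range(d + n - 1), n - 1):
        cuts = (-1,) + bars + (d + n - 1,)
        yield tuple(cuts[i + 1] - cuts[i] - 1 for i in range(n))


def precompute(d, n):
    """Done once in advance: the partitions and their multinomial coefficients."""
    comps = list(compositions(d, n))
    coeffs = [math.factorial(d) // math.prod(math.factorial(a) for a in c) for c in comps]
    return np.array(comps), np.array(coeffs, dtype=float)


def r_star_2rsb(lam0, m, p, q, comps, coeffs):
    """R^star in the 2-RSB setting d [n]: one term per partition, then a dot product."""
    p, q = np.asarray(p, float), np.asarray(q, float)
    weights = np.prod(p ** comps, axis=1)                           # p_1^{a_1} ... p_n^{a_n}
    inner = 1.0 + (lam0 - 1.0) * np.prod((1.0 - q) ** comps, axis=1)
    return float(np.dot(coeffs, weights * inner ** m))


d, n = 8, 3
lam0, m = 6.0, 0.4
p, q = [0.2, 0.5, 0.3], [0.3, 0.6, 0.9]
comps, coeffs = precompute(d, n)
print(len(comps), "partitions instead of", n ** d, "tuples")
print(r_star_2rsb(lam0, m, p, q, comps, coeffs))
```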

In both approaches computing the partial derivatives with respect to the parameters (\(\lambda _0\), \(m_k\), \(p_s\), \(q_s\)) is more involved but can be done using similar techniques (array manipulations and partitioning, respectively). As an example, we show how we can compute \(\partial R_{\emptyset ,\ldots ,\emptyset }^{\textrm{star}} / \partial p_s\) in the first approach. For a given \(1 \le \ell \le r-1\) we will do this for all \(s \in S_\ell \) at once, resulting in a vector of length \(|S_\ell |\) consisting of the partial derivatives w.r.t. each \(p_s\), \(s \in S_\ell \). We will again use the vectors \(v_k\) and the arrays \(P_k,M_k\) obtained during the computation of \(R_{\emptyset ,\ldots ,\emptyset }^{\textrm{star}}\).

-

\(P'_k\): d-dimensional array of size \(|S_k| \times \cdots \times |S_k|\) obtained by “multiplying” the all-ones vector of length \(|S_k|\) and \(d-1\) copies of \(v_k\).

-

\(D_k\): d-dimensional array of size \(|S_k| \times \cdots \times |S_k|\) obtained by (element-wise) raising \(M_k\) to the power of \(m_{r-k}-1\) and multiplying by \(m_{r-k}\) and by \(P_k\).

-

For a given \(1 \le \ell \le r-1\) we start with \(M_\ell \), raise it to the power of \(m_{r-\ell }\) and multiply it by \(P'_\ell \) (both element-wise) and perform a block summation for blocks of size \(1 \times n_\ell \times \cdots \times n_\ell \), resulting in an \(|S_\ell | \times |S_{\ell -1}| \times \cdots \times |S_{\ell -1}|\) array that we denote by \(M'_{\ell -1}\).

-

Then, recursively for \(k=\ell -1,\ell -2, \ldots ,1\), given the \(|S_\ell | \times |S_k| \times \cdots \times |S_k|\) array \(M'_k\) we obtain \(M'_{k-1}\) as follows: we “stretch” \(D_k\) so that its first axis has length \(|S_\ell |\) by repeating each element \(|S_\ell |/|S_k|\) times (along that first axis) to get an \(|S_\ell | \times |S_k| \times \cdots \times |S_k|\) array, which we multiply element-wise by \(M'_k\), and perform a block summation for blocks of size \(1 \times n_k \times \cdots \times n_k\).

-

At the end we get the array \(M'_0\) of size \(|S_\ell | \times 1 \times \cdots \times 1\). We simply need to multiply its elements by d to get the partial derivatives w.r.t. \(p_s\), \(s \in S_\ell \).
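In the simplest case \(r=2\), \(\ell=1\), the procedure reduces to one block summation over all axes except the first, followed by multiplication by d. The sketch below is ours and covers only this special case. It treats the \(p_i\) as independent variables and checks the result against central finite differences.

```python
import numpy as np
from functools import reduce


def outer_all(vectors):
    """Outer product of a list of vectors: entry (i_1, ..., i_d) = v_1[i_1] * ... * v_d[i_d]."""
    return reduce(np.multiply.outer, vectors)


def r_star_and_grad_p(lam0, m, p, q, d):
    """2-RSB case (r = 2, l = 1): R^star and its partial derivatives w.r.t. each p_i.

    The p_i are treated as independent variables; the constraint sum p_i = 1 is left
    to the parameterization used by the optimizer.
    """
    p, q = np.asarray(p, float), np.asarray(q, float)
    M1 = 1.0 + (lam0 - 1.0) * outer_all([1.0 - q] * d)      # M_1
    M1m = M1 ** m                                           # M_1 raised to m_{r-1} = m
    value = float(np.sum(outer_all([p] * d) * M1m))         # block summation down to M_0
    P_prime = outer_all([np.ones_like(p)] + [p] * (d - 1))  # P'_1
    # Sum over every axis except the first; the factor d comes from the
    # invariance of M_1 under permutations of the d axes.
    grad = d * np.sum(M1m * P_prime, axis=tuple(range(1, d)))
    return value, grad


lam0, m, d = 6.0, 0.4, 3
p, q = np.array([0.2, 0.5, 0.3]), np.array([0.3, 0.6, 0.9])
value, grad = r_star_and_grad_p(lam0, m, p, q, d)

# Central finite differences as an independent check of the array formula.
h = 1e-6
fd = np.array([(r_star_and_grad_p(lam0, m, p + h * e, q, d)[0]
                - r_star_and_grad_p(lam0, m, p - h * e, q, d)[0]) / (2 * h)
               for e in np.eye(len(p))])
print(grad, fd)
```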

3.2.2 Local Optimization

Given a differentiable multivariate function, gradient descent means that at each step we move in the opposite direction of the gradient at the current point, thus (hopefully) converging to a local minimum of the function. This is a very natural strategy because the initial descent is steepest in that direction. There are other standard iterative algorithms that also use the gradient (i.e., the vector consisting of the partial derivatives). They can make more sophisticated steps because they take previous gradient evaluations into account as well, resulting in faster convergence to a local minimum. Since we have complicated functions for which gradient evaluations are computationally expensive, it is important for us to reach a local optimum in as few iterations as possible. Specifically, we used the conjugate gradient and the Broyden–Fletcher–Goldfarb–Shanno algorithms, which are both implemented in the Python library SciPy.
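The following is a minimal sketch of multistart local optimization with SciPy's BFGS (`method="CG"` would select conjugate gradient instead). The unconstrained parameterization is our own assumption and not necessarily the authors' choice: \(\lambda_0 = 1+e^{z_0}\), m and \(q_i\) via the logistic function, and \(p\) via softmax. For brevity, gradients are approximated by finite differences here rather than computed exactly as in Sect. 3.2.1.

```python
import numpy as np
from functools import reduce
from scipy.optimize import minimize


def outer_power(vec, d):
    return reduce(np.multiply.outer, [vec] * d)


def two_rsb_bound(lam0, m, p, q, d):
    """Discrete 2-RSB bound (3)."""
    star = np.sum(outer_power(p, d) * (1.0 + (lam0 - 1.0) * outer_power(1.0 - q, d)) ** m)
    edge = np.sum(np.outer(p, p) * (1.0 - (1.0 - 1.0 / lam0) * np.outer(q, q)) ** m)
    return (np.log(star) - 0.5 * d * np.log(edge)) / (m * np.log(lam0))


def unpack(z, n):
    """Hypothetical unconstrained parameterization of (lambda_0, m, p, q)."""
    lam0 = 1.0 + np.exp(z[0])
    m = 1.0 / (1.0 + np.exp(-z[1]))
    w = np.exp(z[2:2 + n] - np.max(z[2:2 + n]))      # softmax, shifted for stability
    q = 1.0 / (1.0 + np.exp(-z[2 + n:]))
    return lam0, m, w / w.sum(), q


def objective(z, n, d):
    return two_rsb_bound(*unpack(z, n), d)


rng = np.random.default_rng(0)
n, d = 2, 3
runs = []
for _ in range(5):                                   # a few random starting points
    z0 = rng.normal(size=2 + 2 * n)
    res = minimize(objective, z0, args=(n, d), method="BFGS")
    runs.append((float(res.fun), float(objective(z0, n, d))))
best = min(f for f, _ in runs)
print(f"best value found for d={d}, [n={n}]: {best:.6f}")
```

Different starting points typically end in different local minima, which is the behavior described next.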

With efficient gradient evaluation and fast-converging optimization at our disposal, we were able to find local optima. However, we were surprised to see that, depending on the starting point, these algorithms find a large number of different local minima of the RSB formulas. This is due to our parameterization: we only consider discrete measures with a fixed number of atoms, and the atom locations are included among the parameters (see Footnote 4). This is what allowed us to tackle the problem numerically, but it also makes the function behave somewhat chaotically.

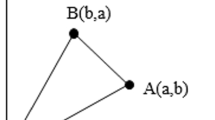

It is hard to get a good picture of the behavior of a function of so many variables. To give some idea, in Fig. 1 we plotted the 2-RSB bound for \(d=3 \, [n=5]\) over two-dimensional sections. In each of the two panels we chose three local minima, took the plane H through them, and plotted the function over H. (Note that the left panel appears to have a fourth local minimum. However, it is only a local minimum of the two-dimensional restriction of the function, and it can actually be locally improved when all dimensions are allowed to vary.)
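A section like the ones in Fig. 1 can be produced by restricting the function to the affine plane through three points. The sketch assumes the parameterization \(p_0 + s(p_1-p_0) + t(p_2-p_0)\) of H. That parameterization is our choice; the text does not state one. `plane_section` is a hypothetical helper, and `sphere` is a toy stand-in for the 2-RSB bound.

```python
import numpy as np


def plane_section(f, p0, p1, p2, s_values, t_values):
    """Evaluate f on the plane H through the points p0, p1, p2.

    H is parameterized as p0 + s*(p1 - p0) + t*(p2 - p0), so the three
    points sit at (s, t) = (0, 0), (1, 0) and (0, 1). Row i of the result
    corresponds to t_values[i] and column j to s_values[j].
    """
    u, v = p1 - p0, p2 - p0
    grid = np.empty((len(t_values), len(s_values)))
    for i, t in enumerate(t_values):
        for j, s in enumerate(s_values):
            grid[i, j] = f(p0 + s * u + t * v)
    return grid


def sphere(x):
    """Toy stand-in for the function being visualized."""
    return float(np.sum(x**2))


p0, p1, p2 = np.zeros(3), np.eye(3)[0], np.eye(3)[1]
axis = np.linspace(-0.5, 1.5, 5)  # -0.5, 0.0, 0.5, 1.0, 1.5
values = plane_section(sphere, p0, p1, p2, axis, axis)
```

The resulting grid can be passed to a contour plot (for example matplotlib's `contourf`), with `s_values` and `t_values` as the axes.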

Many of these local minima have very similar values. It appears that one would essentially need to check all of them to find the one that happens to be the global minimum (for the given number of atoms). So our strategy is simply to start local optimization from various (random) points to eventually get a strong bound. This seems to work well as long as there are not too many local minima.
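The multistart strategy takes only a few lines. In this sketch, `multistart` is a hypothetical helper. The Rastrigin function is a toy landscape with many local minima that stands in for an RSB formula. Drawing the starting points uniformly from a box is also an assumption.

```python
import numpy as np
from scipy.optimize import minimize


def rastrigin(x):
    """Toy function with a grid of local minima (global minimum 0 at x = 0)."""
    return 10 * len(x) + float(np.sum(x**2 - 10 * np.cos(2 * np.pi * x)))


def multistart(f, dim, n_starts, rng, low=-1.0, high=1.0, **minimize_kwargs):
    """Run local optimization from n_starts random points and return the best result."""
    best = None
    for _ in range(n_starts):
        x0 = rng.uniform(low, high, size=dim)
        res = minimize(f, x0, **minimize_kwargs)
        if best is None or res.fun < best.fun:
            best = res
    return best


rng = np.random.default_rng(0)
best = multistart(rastrigin, 4, 50, rng, low=-5.12, high=5.12, method="BFGS")
print(best.fun, best.x)
```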

3.2.3 Basin Hopping

As the dimension of the parameter space grows, we start to see a landscape with a huge number of local minima, and our chance of picking a good starting point becomes extremely slim. Instead, when we get to a local minimum (i.e., the bottom of a “basin”), we may try to “hop out” of the basin by applying a small perturbation to the variables. After a new round of local optimization, we end up at the bottom of another basin. If the function value decreases compared to the previous basin, we accept this step. If not, then we randomly accept or reject it, with an acceptance probability based on the difference of the values. Such a basin hopping algorithm randomly travels through local minima, with a slight preference for smaller values. (This preference should not be too strong, though, as we have to allow enough leeway for this random travel.) This approach led to the discovery of our best bounds for \(d=3\). We mention that in the case of our 5-RSB bound the basin hopping algorithm ran for days.
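Below is a bare-bones version of the basin hopping loop described above. For the probability "based on the difference of the values" it assumes the Metropolis rule \(\exp (-\Delta /T)\); the text does not specify the exact rule. The temperature T sets how strong the preference for lower minima is. SciPy also provides a ready-made `scipy.optimize.basinhopping` built on the same idea. The function below is a hypothetical illustration, not the code that produced the bounds.

```python
import numpy as np
from scipy.optimize import minimize


def rastrigin(x):
    """Toy landscape with many basins (global minimum 0 at x = 0)."""
    return 10 * len(x) + float(np.sum(x**2 - 10 * np.cos(2 * np.pi * x)))


def basin_hopping(f, x0, n_hops, step, temperature, rng, **minimize_kwargs):
    """Random walk over local minima with a Metropolis acceptance rule."""
    current = minimize(f, x0, **minimize_kwargs)  # bottom of the first basin
    best = current
    for _ in range(n_hops):
        # hop out of the current basin with a small random perturbation
        start = current.x + rng.uniform(-step, step, size=current.x.shape)
        trial = minimize(f, start, **minimize_kwargs)  # bottom of a new basin
        delta = trial.fun - current.fun
        # always accept a lower basin; accept a higher one with probability exp(-delta / T)
        if delta <= 0 or rng.random() < np.exp(-delta / temperature):
            current = trial
        if current.fun < best.fun:
            best = current
    return best


x0 = np.array([3.2, -2.7, 1.9])
rng = np.random.default_rng(0)
best = basin_hopping(rastrigin, x0, n_hops=200, step=1.0, temperature=1.0, rng=rng, method="BFGS")
print(best.fun, best.x)
```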

3.2.4 Avoiding Lower-Depth Minima

There is one more subtlety we have to pay attention to, especially when \(r \ge 3\). Since the r-RSB formula contains the \((r-1)\)-RSB formula as a special case, the optimization tends to converge to such “lower-depth” local minima (on the boundary of the parameter space). So it is beneficial to distort the target function in some way to force the \(r-1\) Parisi parameters to stay away from the boundary. That is, we add a penalty term to our function based on the distance of each \(m_k\) from 1. Once the function value is sufficiently small, we can continue the optimization with the original (undistorted) function.
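Here is one possible form of such a penalty, under explicit assumptions. The text does not specify the penalty, so the following are all hypothetical:

- the quadratic hinge below, which is active when \(1-m_k\) drops below a `margin`;
- the `weight`;
- the layout of the parameter vector, with the Parisi parameters stored last.

For simplicity, `two_phase_minimize` switches to the original function once the penalized run has converged, rather than when the value is "sufficiently small". The constraint \(0<m_k<1\) is not enforced here; a bounded method such as L-BFGS-B could handle it.

```python
import numpy as np
from scipy.optimize import minimize


def penalized(f, n_other, weight, margin):
    """Add a penalty when a Parisi parameter m_k gets closer to 1 than `margin`.

    Hypothetical layout: x[:n_other] are the remaining parameters and
    x[n_other:] are the Parisi parameters.
    """
    def g(x):
        m = x[n_other:]
        too_close = np.maximum(0.0, margin - (1.0 - m))
        return f(x) + weight * float(np.sum(too_close**2))
    return g


def two_phase_minimize(f, x0, n_other, weight=100.0, margin=0.1, **minimize_kwargs):
    """Optimize the distorted function first, then polish with the original one."""
    phase1 = minimize(penalized(f, n_other, weight, margin), x0, **minimize_kwargs)
    return minimize(f, phase1.x, **minimize_kwargs)


def toy_objective(x):
    """Stand-in objective whose minimizer has m = 0.5 (away from the boundary)."""
    return float((x[0] - 1.0) ** 2 + (x[1] - 0.5) ** 2)


result = two_phase_minimize(toy_objective, np.array([0.0, 0.2]), n_other=1, method="BFGS")
```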

4 One-Step RSB Revisited

It is possible to improve the previous best bounds even within the framework of the \(r=1\) case of the interpolation method. Recall that Theorem 2.2 gives the following bound in this case:

where \(x_1,\ldots ,x_d\) are IID from some fixed distribution \(\eta \) on [0, 1]. If we use \(\eta =q \delta _{1-1/\lambda }+(1-q)\delta _0\) and take the limit \(m \rightarrow 0, \lambda \rightarrow \infty \) with \(m \log \lambda = \lambda _0\), then we get (2) as explained in Sect. 2.2 for general r. Optimizing (2) leads to what we refer to as the 1-RSB bound throughout the paper. In this section we show how one can improve on (2) for \(d \le 19\) by considering a more sophisticated \(\eta \). We will refer to the obtained bounds as \(1^+\)-RSB bounds. Although this approach is generally inferior to 2-RSB bounds, it is computationally less demanding. In fact, for degrees \(d=17,19\) we could only perform the 2-RSB optimization with \(n_1=2\) and the obtained bound was actually worse than the \(1^+\)-RSB bound outlined below.

For the sake of simplicity we start with a choice of \(\eta \) only slightly more general than the original one: let \(\eta \) have three atoms at the locations

We denote the measures of these atoms by  , where  ; i.e.,

Note that the original choice corresponds to the case  .

As before, we let \(m \rightarrow 0, \lambda \rightarrow \infty \) with \(m \log \lambda = \lambda _0\), which leads to the following bound:

where

Substituting  , we have three remaining parameters:  . Setting the partial derivatives of the right-hand side w.r.t. \(q_0\) and  to 0, we get that

and similarly for  . It follows that for the optimal choice of parameters we have

One can easily compute these partial derivatives to conclude that

The second equality gives

which turns the first equality into

So our bound has one free parameter left (\(q_0\)), over which we can easily optimize numerically. For \(d=3\) one gets 0.450851131. This is the simplest way to improve upon the basic 1-RSB bound.

More generally, one can take any measure \(\tau \) on [0, 1] and define \(\eta \) as the push-forward of \(\tau \) w.r.t. the mapping \(t \mapsto 1-1/\lambda ^t\). Once again, letting \(m \rightarrow 0, \lambda \rightarrow \infty \) with \(m \log \lambda = \lambda _0\), we get the following:

For any fixed \(\lambda _0\), an optimal \(\tau \) must satisfy a simple fixed point equation involving the convolution power \(\tau ^{*(d-1)}\). For \(\textrm{div} \in \mathbb {N}\) one can divide [0, 1] into \(\textrm{div}\) many intervals and search among atomic measures \(\tau \) with atom locations at \(i/\textrm{div}\), \(i=0,1,\ldots ,\textrm{div}\). It is possible to numerically solve the fixed point equation by an iterative algorithm. Then it remains to tune the parameter \(\lambda _0\). We computed these \(1^+\)-RSB bounds for \(\textrm{div}=1,2,4,8,\ldots ,1024\). Note that \(\textrm{div}=1\) corresponds to the original 1-RSB, while \(\textrm{div}=2\) gives (9). Table 6 shows the results for \(d=3\).
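For atomic measures on the grid \(\{i/\textrm{div}\}\), the convolution power in the fixed point equation is easy to compute, because each convolution is a discrete convolution of mass vectors. The fixed point equation itself is not reproduced in this text, so the sketch covers only this building block. `convolution_power` and the example masses of \(\tau \) are hypothetical.

```python
import numpy as np


def convolution_power(p, k):
    """k-fold convolution power of an atomic measure tau on the grid {i/div}.

    p[i] is the mass of tau at i/div (i = 0, ..., div). The result q has length
    k*div + 1, and q[j] is the probability that the sum of k independent
    tau-distributed variables equals j/div.
    """
    q = np.array([1.0])  # Dirac mass at 0: the 0-th convolution power
    for _ in range(k):
        q = np.convolve(q, p)
    return q


d, div = 3, 2
tau = np.array([0.5, 0.25, 0.25])  # hypothetical masses at 0, 1/2, 1
tau_pow = convolution_power(tau, d - 1)  # masses at 0, 1/2, ..., d - 1
```

Note that \(\tau ^{*(d-1)}\) lives on \([0,d-1]\), still with spacing \(1/\textrm{div}\).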

Data Availability

The datasets needed to recover the claimed bounds of the current paper are available in a Github repository: https://github.com/harangi/rsb/blob/5f2ea09166a1931c3bae5e05afaee13093015b53/examples.sobj

Notes

More precisely, as a measure that is supported on measures that are supported on measures that ... (iterated r times).

Cavities are vertices of degree \(d-1\) that are created by deleting a relatively small number of edges from the graph. The point is that this way the symmetry is broken while the independence ratio is essentially unchanged.

Even in models where the Parisi functional is known to be convex, as in the SK model [1], parameterizing with atom locations (as opposed to with the measure itself) changes the notion of convexity and may lead to functions that are far from convex.

In fact, we should also work with a soft version of Z (at some positive temperature), where neighboring 1’s are possible but penalized in the partition function. As the temperature goes to zero (i.e., the penalty increases), we get back the hard-core model in the limit. For the sake of simplicity, we describe the interpolation method using the hard-core model but keep in mind that a rigorous treatment would need positive temperatures.

References

[1] Auffinger, A., Chen, W.-K.: The Parisi formula has a unique minimizer. Commun. Math. Phys. 335(3), 1429–1444 (2015)

[2] Ayre, P., Coja-Oghlan, A., Greenhill, C.: Lower bounds on the chromatic number of random graphs. Combinatorica 42(5), 617–658 (2022)

[3] Austin, T., Panchenko, D.: A hierarchical version of the de Finetti and Aldous–Hoover representations. Probab. Theory Relat. Fields 159(3), 809–823 (2014)

[4] Bayati, M., Gamarnik, D., Tetali, P.: Combinatorial approach to the interpolation method and scaling limits in sparse random graphs. Ann. Probab. 41(6), 4080–4115 (2013)

[5] Barbier, J., Krzakala, F., Zdeborová, L., Zhang, P.: The hard-core model on random graphs revisited. J. Phys. 473, 012021 (2013)

[6] Bollobás, B.: The independence ratio of regular graphs. Proc. Am. Math. Soc. 83(2), 433–436 (1981)

[7] Csóka, E., Gerencsér, B., Harangi, V., Virág, B.: Invariant Gaussian processes and independent sets on regular graphs of large girth. Random Struct. Algorithms 47(2), 284–303 (2015)

[8] Coja-Oghlan, A., Perkins, W.: Spin systems on Bethe lattices. Commun. Math. Phys. 372(2), 441–523 (2019)

[9] Csóka, E.: Independent sets and cuts in large-girth regular graphs (2016)

[10] Ding, J., Sly, A., Sun, N.: Maximum independent sets on random regular graphs. Acta Math. 217(2), 263–340 (2016)

[11] Frieze, A.M., Łuczak, T.: On the independence and chromatic numbers of random regular graphs. J. Comb. Theory Ser. B 54(1), 123–132 (1992)

[12] Franz, S., Leone, M.: Replica bounds for optimization problems and diluted spin systems. J. Stat. Phys. 111(3), 535–564 (2003)

[13] Franz, S., Leone, M., Toninelli, F.L.: Replica bounds for diluted non-Poissonian spin systems. J. Phys. A 36, 10967–10985 (2003)

[14] Gamarnik, D., Sudan, M.: Limits of local algorithms over sparse random graphs. In: Proceedings of the 5th Innovations in Theoretical Computer Science Conference. ACM Special Interest Group on Algorithms and Computation Theory (2014)

[15] Guerra, F.: Broken replica symmetry bounds in the mean field spin glass model. Commun. Math. Phys. 233(1), 1–12 (2003)

[16] Hoppen, C.: Properties with graphs of large girth. PhD Thesis, University of Waterloo (2008)

[17] Hoppen, C., Wormald, N.: Local algorithms, regular graphs of large girth, and random regular graphs. Combinatorica 38(3), 619–664 (2018)

[18] Kardoš, F., Král, D., Volec, J.: Fractional colorings of cubic graphs with large girth. SIAM J. Discret. Math. 25(3), 1454–1476 (2011)

[19] Lelarge, M., Oulamara, M.: Replica bounds by combinatorial interpolation for diluted spin systems. J. Stat. Phys. 173(3), 917–940 (2018)

[20] McKay, B.D.: Independent sets in regular graphs of high girth. Ars Comb. 23A, 179–185 (1987)

[21] Mézard, M., Parisi, G.: The Bethe lattice spin glass revisited. Eur. Phys. J. B 20(2), 217–233 (2001)

[22] Panchenko, D.: The Sherrington–Kirkpatrick Model. Springer Monographs in Mathematics, Springer, New York (2013)

[23] Panchenko, D., Talagrand, M.: Bounds for diluted mean-fields spin glass models. Probab. Theory Relat. Fields 130(3), 319–336 (2004)

[24] Rivoire, O.: Phases vitreuses, optimisation et grandes déviations. PhD Thesis, Université Paris Sud—Paris XI, July 2005 (the articles intended for the appendices are not included)

[25] Rahman, M., Virág, B.: Local algorithms for independent sets are half-optimal. Ann. Probab. 45(3), 1543–1577 (2017)

[26] Talagrand, M.: The Parisi formula. Ann. Math. 163(1), 221–263 (2006)

Funding

Open access funding provided by ELKH Alfréd Rényi Institute of Mathematics. This work was supported by the MTA-Rényi Counting in Sparse Graphs “Momentum” Research Group, NRDI Grant KKP 138270, and the Hungarian Academy of Sciences (János Bolyai Scholarship).

Ethics declarations

Conflicts of interest

The author declares that he has no conflict of interest.

Additional information

Communicated by Alessandro Giuliani.

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix 1: Our Best Bounds

Below we list our best r-RSB bounds of \(\alpha ^*_d\) for each degree \(3 \le d \le 19\) in the following format: \(\quad r \quad [n_1, \ldots , n_{r-1}] \quad \text{ bound } \quad \) (see Remark 2.4 for the definition of \(n_k\)).

For comparison, we included \(r=1\), that is, the 1-RSB bound from [19] that we improve on.

Appendix 2: An Overview of the Interpolation Method

The interpolation method is a rigorous technique to prove upper bounds for the free energy in various models. It has several variants. It was originally invented by Guerra [15] in the context of the Sherrington–Kirkpatrick spin glass model. In this section we explain the technique for the hard-core model, omitting the technical details and assuming no statistical physics background. We mainly follow the expositions in [2] (where the closely related problem of the chromatic number was considered) and in [23].

Given a finite graph \(G=(V,E)\), the partition function of the hard-core model is defined as

$$\begin{aligned} Z_G = Z_{G,\lambda } = \sum _{\sigma \in \{0,1\}^V} \lambda ^{\sum _{v \in V} \sigma _v} \prod _{uv \in E} \big ( 1-\sigma _u \sigma _v \big ), \end{aligned}$$

where \(\lambda >1\) is a parameter often called fugacity. So \(Z_G\) counts 0-1 configurations \(\sigma =( \sigma _v )_{v \in V}\) on the vertices with no neighboring 1’s. Each such configuration corresponds to an independent set \(I=\{v \in V : \sigma _v=1\}\), counted with weight \(\lambda ^{|I|}\). Thus \(Z_G\) is simply the sum of these weights over all independent sets (see Footnote 5). Let \(\alpha (G)\) denote the independence number of G (i.e., the size of the largest independent set). Using the simple inequality \(Z_{G,\lambda } \ge \lambda ^{\alpha (G)}\), one can bound the independence number as follows:

$$\begin{aligned} \alpha (G) \le \frac{\log Z_{G,\lambda }}{\log \lambda }, \end{aligned}$$

which is clearly asymptotically tight for any fixed G as \(\lambda \rightarrow \infty \).
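For a small graph, \(Z_{G,\lambda }\) can be computed by brute force. This makes the bound \(\alpha (G) \le \log Z_{G,\lambda }/\log \lambda \) and its tightness as \(\lambda \rightarrow \infty \) easy to observe. The helpers below are a hypothetical illustration, exponential in the number of vertices. They are tested on the 5-cycle, where \(\alpha =2\) and \(Z = 1+5\lambda +5\lambda ^2\).

```python
import itertools
import math


def hardcore_partition_function(n, edges, lam):
    """Z_{G,lambda}: sum of lam**|I| over all independent sets I (brute force)."""
    z = 0.0
    for sigma in itertools.product((0, 1), repeat=n):
        # keep only configurations with no two neighboring 1's
        if all(not (sigma[u] and sigma[v]) for u, v in edges):
            z += lam ** sum(sigma)
    return z


def independence_bound(n, edges, lam):
    """Upper bound alpha(G) <= log Z_{G,lambda} / log lambda, valid for lam > 1."""
    return math.log(hardcore_partition_function(n, edges, lam)) / math.log(lam)


# 5-cycle: alpha = 2 and Z = 1 + 5*lam + 5*lam**2
cycle5 = [(i, (i + 1) % 5) for i in range(5)]
for lam in (10.0, 1e3, 1e6):
    print(lam, independence_bound(5, cycle5, lam))
```

The printed bounds decrease towards 2. For large \(\lambda \) the excess is roughly \(\log 5/\log \lambda \).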

We are interested in the asymptotic independence ratio \(\alpha ^*_d\) of the random d-regular graph \(\mathbb {G}=\mathbb {G}(N,d)\) as the number of vertices N goes to infinity. It follows from the above that for any \(\lambda \) the normalized free energy \(\frac{1}{N}\mathbb {E}\log Z_{\mathbb {G},\lambda }\) upper bounds \(\alpha ^*_d \log \lambda \). More precisely, we have

The method is based on an “interpolating” family of models \(G_t\), \(t \in [0,1]\), with \(G_0\) being our original model (plus a disjoint part), which is then “continuously transformed” into \(G_1\). The key is to prove that the free energy \(\mathbb {E}\log Z_{G_t}\) increases as t goes from 0 to 1 by showing that the derivative is nonnegative along the way:

We will elaborate on this key part of the proof later in Sect. 6.5. It implies that \(\mathbb {E}\log Z_{G_0} \le \mathbb {E}\log Z_{G_1}\), which will translate to a bound of the form

where Y and \(Y'\) are partition functions that are easier to handle. Next we will describe the models in detail.

6.1 Variables and Factors

The models have two types of nodes: variable nodes and fields (corresponding to local fields in physics). To each variable node v we assign a variable \(\sigma _v\) ranging over \(\{0,1\}\). When we compute the partition function, the sum runs over all possible configurations \(\sigma =(\sigma _v)\), and the weight of a configuration is the product of various (penalty and reward) factors. For example, each \(\sigma _v=1\) is rewarded with a factor \(\lambda >1\), while an edge between two variable nodes v, \(v'\) forbids \(\sigma _v=\sigma _{v'}=1\), i.e., the factor is

$$\begin{aligned} 1-\sigma _v \sigma _{v'} . \end{aligned}$$

A field u does not have a variable; instead, a probability distribution \(\mu _u\) on \(\{0,1\}\) is assigned to it. In other words, each field u is labelled with a real number \(x_u \in [0,1]\) denoting the probability of 1: \(\mu _u(1)=x_u\) and \(\mu _u(0)=1-x_u\).

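If there is an edge between a variable node v and a field u, then we use the following factor, obtained by averaging the hard-core constraint over \(\mu _u\):

$$\begin{aligned} \sum _{\tau \in \{0,1\}} \mu _u(\tau ) \big ( 1-\sigma _v \tau \big ) = 1-x_u \sigma _v . \end{aligned}$$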

Finally, for an edge between two fields u, \(u'\) we add the following constant factor (that does not depend on \(\sigma \)):

$$\begin{aligned} 1-x_u x_{u'} . \end{aligned}$$

6.2 The Models

Now we are ready to describe the models \(G_t\) and the partition functions Y and \(Y'\).

-

Each model \(G_t\) has N variable nodes and dN fields. A variable node has d half-edges, each may be connected to another half-edge or to a field.

-

In \(G_t\) there are \((1-t)dN/2\) edges connecting two fields. The remaining tdN fields are connected to half-edges of variable nodes at random, and the remaining \((1-t)dN\) half-edges are matched at random, creating \((1-t)dN/2\) edges between variable nodes.

-

In particular, at \(t=0\) we get the disjoint union of a random d-regular graph \(\mathbb {G}\) (over the variable nodes) and dN/2 pairs of fields, each pair connected by an edge. Therefore

$$\begin{aligned} \log Z_{G_0} = \log Z_\mathbb {G}+ \log Y' , \end{aligned}$$where \(Y'\) is the partition function of the dN/2 “field edges”.

-

At the other endpoint \(t=1\), we have N “stars”, each containing one variable node connected to d fields. We denote the corresponding partition function by Y and hence write

$$\begin{aligned} \log Z_{G_1} = \log Y . \end{aligned}$$
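The construction of \(G_t\) can be sampled configuration-model style by shuffling half-edges and fields. This sketch rests on several assumptions:

- `interpolating_model` is a hypothetical name;
- loops and multi-edges among variable nodes are not excluded;
- the cavity modification discussed later (a few variable nodes of degree \(d-1\)) is ignored.

```python
import numpy as np


def interpolating_model(N, d, t, rng):
    """Sample the edge lists of G_t (a configuration-model style sketch).

    Returns (vv, vf, ff): variable-variable, variable-field and field-field
    edges. Assumes t*d*N is an integer and (1 - t)*d*N is even.
    """
    n_vf = round(t * d * N)  # number of fields attached to variable nodes
    if (d * N - n_vf) % 2:
        raise ValueError("(1 - t) * d * N must be even")
    # one entry per half-edge (holding its variable node), in random order
    half_edges = rng.permutation(np.repeat(np.arange(N), d))
    fields = rng.permutation(d * N)
    vf = list(zip(half_edges[:n_vf].tolist(), fields[:n_vf].tolist()))
    # the remaining half-edges are matched to each other in pairs
    rest = half_edges[n_vf:]
    vv = list(zip(rest[0::2].tolist(), rest[1::2].tolist()))
    # the remaining fields are matched to each other in pairs
    rest_fields = fields[n_vf:]
    ff = list(zip(rest_fields[0::2].tolist(), rest_fields[1::2].tolist()))
    return vv, vf, ff


N, d = 10, 3
vv, vf, ff = interpolating_model(N, d, 0.4, np.random.default_rng(0))
```

At \(t=0\) the sketch returns a random d-regular multigraph together with dN/2 field pairs. At \(t=1\) it returns N stars.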

These are random models. Note that for \(Z_\mathbb {G}\) the randomness comes purely from the underlying random graph structure, while for Y and \(Y'\) it comes from the random labels \(x_u\) of the fields u that we will explain next.

In the simplest scenario one fixes a real number \(x \in [0,1]\) and uses \(x_u=x\) for each u. In this setup Y and \(Y'\) are actually deterministic and can be expressed as products (with the terms corresponding to the N stars and dN/2 field edges, respectively):

(Note that the model of \(Y'\) does not have any variable nodes and the “sum” is simply the product of constant factors.) Plugging these into (11) we get back the replica symmetric bound (1).

More generally, one may choose each \(x_u\) independently from a fixed distribution \(\nu \) on [0, 1]. (It is important to use the same \(\nu \) for Y and \(Y'\).) The resulting partition functions can be factorized again and we get a more general version of the RS bound:

Next we explain how a seemingly insignificant modification of the method turns this approach into a much more powerful tool, resulting in replica symmetry breaking bounds.

6.3 A Weighting Scheme

For a countable index set \(\Gamma \) let us fix weights \(w_\gamma \ge 0\), \(\gamma \in \Gamma \), with \(\sum _{\gamma \in \Gamma } w_\gamma = 1\) (essentially a probability distribution on \(\Gamma \)) and an arbitrary collection of random variables \(\big ( x^\gamma \big )_{\gamma \in \Gamma }\), each \(x^\gamma \) taking values in [0, 1]. For each \(\gamma \in \Gamma \) we consider a version \(Y_\gamma \) of Y. To this end we need to take independent copies of the collection \(\big ( x^\gamma \big )\) for all fields u:

We set the label of each field u to be \(x^\gamma _u\) and define \(Y_\gamma \) to be the corresponding partition function. We define \(Y'_\gamma \) similarly. Then the following weighted version of (11) is also true:

This weighted version is (potentially) more general, but it seems that we lose the crucial property of factorization for the formulas inside the \(\log \). There is, however, a “magical” (random) choice of the coefficients \(w_\gamma \) (based on the so-called Derrida–Ruelle cascades) for which we still have factorization, provided that the collection \(\big ( x^\gamma \big )\) is hierarchically exchangeable, a notion introduced in [3].

For a given \(r \ge 1\) we use \(\Gamma =\mathbb {N}^r\) as the countable index set. For any fixed parameters \(0< m_1, \ldots , m_r < 1\), there exist random weights \(w_\gamma \) such that for any given \(\eta ^{(r)}\) of Theorem 2.2 we can define the collection \(\big ( x^\gamma \big )\) in a way that (12) yields the bound in the theorem.

We define \(\big ( x^\gamma \big )\) using the notations of Sect. 2.1. For any \(1 \le k \le r\) and any \(\gamma _1, \ldots , \gamma _k \in \mathbb {N}\) we will define a random \(\eta ^{(r-k+1)}(\gamma _1,\ldots ,\gamma _k) \in \mathcal {P}^{r-k+1}\). Since we started with a deterministic \(\eta ^{(r)}\) in Theorem 2.2 (see the remarks after the theorem), in our case each  will be the same for \(k=1\). Given \(\eta ^{(r-k+1)}(\gamma _1,\ldots ,\gamma _k) \in \mathcal {P}^{r-k+1}\), we define

to be conditionally independent and distributed as \(\eta ^{(r-k+1)}(\gamma _1,\ldots ,\gamma _k)\). Finally, for each \(\gamma =(\gamma _1,\ldots ,\gamma _r) \in \mathbb {N}^r\) we sample \(x^\gamma \) from \(\eta ^{(1)}(\gamma _1,\ldots ,\gamma _r)\). Schematically:

Now suppose that we have a function \(f :[0,1]^M \rightarrow \mathbb {R}\). Let us take M independent copies of the above sampling scheme. For each fixed \(\gamma \in \mathbb {N}^r\) we plug the M copies of \(x^\gamma \) into f resulting in a random variable \(V_\gamma \). Then one can choose the weights \(w_\gamma \) randomly in such a way that

where \(T_r\) is defined analogously to Definition 2.1 [23, Proposition 2].

We will not elaborate on how the weights \(w_\gamma \) need to be chosen for general r. Instead, we focus on the case \(r=1\) which already captures the essence of the method.

6.4 One-Step RSB

In this case we simply have \(\Gamma =\mathbb {N}\) and each \(\eta ^{(1)}(\gamma )\) is the same deterministic distribution \(\eta ^{(1)} \in \mathcal {P}^1\). In other words, all field labels \(x^\gamma _u\) are IID across all nodes u in all models \(Y_\gamma ,Y'_\gamma \). Next we define the random weights \(w_\gamma \).

Definition 6.1

Given a real number \(0<m<1\), let \(\hat{w}_1 \ge \hat{w}_2 \ge \ldots \) be the nonincreasing enumeration of the points generated by a nonhomogeneous Poisson point process on \([0,\infty )\) with intensity function \(t \mapsto t^{-1-m}\). The sum \(\sum _{\gamma =1}^{\infty } \hat{w}_\gamma \) is finite almost surely. For \(\gamma \in \mathbb {N}\) let

$$\begin{aligned} w_\gamma = \frac{\hat{w}_\gamma }{\sum _{\gamma '=1}^{\infty } \hat{w}_{\gamma '}} . \end{aligned}$$

The distribution of \((w_1,w_2,\ldots )\) is called the Poisson–Dirichlet distribution.

In many statistical physics models the relative cluster sizes are believed to follow the Poisson–Dirichlet distribution for some m. It has the following magical property.

Lemma 6.2

[23, Proposition 1] For any fixed \(0<m<1\) let \(w_\gamma \), \(\gamma \in \mathbb {N}\) be the random weights as above. Then for any IID sequence \(X_\gamma >0\) with \(\mathbb {E}X_1^2 < \infty \) we have

Note that on the left we take expectation both in \(w_\gamma \) and in \(X_\gamma \).
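Definition 6.1 and Lemma 6.2 can be checked numerically. The sketch relies on a standard fact about Poisson processes. If \(\Gamma _1<\Gamma _2<\cdots \) are the arrival times of a unit-rate Poisson process, then \((m\Gamma _k)^{-1/m}\), in decreasing order, are the points of a Poisson process with intensity \(t^{-1-m}\). The check uses the identity \(\mathbb {E}\log \sum _\gamma w_\gamma X_\gamma = \frac{1}{m}\log \mathbb {E}X_1^m\), which we take to be the identity stated in Lemma 6.2 (its display is missing from this text). The following are all assumptions of the sketch:

- the truncation to finitely many points;
- the choice \(X_\gamma \sim \textrm{Uniform}[1,2]\);
- the helper names.

```python
import numpy as np


def poisson_dirichlet_weights(m, n_points, rng):
    """Truncated sample of the weights w_1 >= w_2 >= ... of Definition 6.1.

    (m * Gamma_k) ** (-1/m) are the points of a Poisson process on (0, inf)
    with intensity t ** (-1 - m), where Gamma_k are unit-rate arrival times.
    Only the n_points largest points are kept; the rest carry negligible mass.
    """
    gammas = np.cumsum(rng.exponential(size=n_points))
    log_w = -np.log(m * gammas) / m
    w = np.exp(log_w - log_w.max())  # work in log-space to avoid overflow
    return w / w.sum()


def check_identity(m=0.5, n_samples=2000, n_points=2000, seed=0):
    """Monte Carlo check of E log sum_g w_g X_g = (1/m) log E[X^m], X ~ Uniform[1, 2]."""
    rng = np.random.default_rng(seed)
    total = 0.0
    for _ in range(n_samples):
        w = poisson_dirichlet_weights(m, n_points, rng)
        x = rng.uniform(1.0, 2.0, size=n_points)  # IID X_gamma, independent of w
        total += np.log(np.dot(w, x))
    lhs = total / n_samples
    rhs = np.log((2.0 ** (m + 1) - 1.0) / (m + 1)) / m  # E[X^m] for X ~ U[1, 2]
    return lhs, rhs


lhs, rhs = check_identity()
print(lhs, rhs)
```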

Applying the lemma for \(X_\gamma =Y_\gamma \) and also for \(X_\gamma =Y'_\gamma \), the bound (12) turns into

where \(x_1,\ldots ,x_d\) are IID with distribution \(\eta ^{(1)}\). Hence we indeed get back Theorem 2.2 for \(r=1\).

6.5 Monotonicity of the Free Energy

Now we turn to the final ingredient (the reason why all this provides an upper bound): the fact that the free energy of the model \(G_t\) is monotone increasing as t goes from 0 to 1. In other words, the derivative (10) is nonnegative.

In \(G_t\) there are three types of edges (based on whether there are 0, 1, or 2 variable nodes among the endpoints), and we defined \(G_t\) by prescribing the number of edges of each of the three types. In fact, it is better to define \(G_t\) so that a small portion of the variable nodes have degree \(d-1\). Intuitively, it is clear that we have to compare the effect (on the free energy) of adding an edge of each of the three types. (See [2, Section 4.2] for an elegant argument justifying this intuition.)

Suppose that we have any fixed model on N variable nodes with partition function Z, where we distinguish some of the nodes as cavity nodes (in our setting these are the variable nodes that have degree \(d-1\) instead of the full degree d). The number of cavity nodes should be small compared to N but should converge to \(\infty \) as \(N \rightarrow \infty \). We want to understand the effect (on \(\log Z\)) of adding a new factor to the model. In our case this will be the addition of an edge of one of the three types:

-

We choose two cavity nodes uniformly and independently and add an edge between them: resulting in a random partition function \(Z_{\textrm{cc}}\).

-

We add two new fields and add an edge between them: resulting in a random partition function \(Z_{\textrm{ff}}\).

-

We choose a cavity node uniformly and connect it to a new random field: resulting in a random partition function \(Z_{\textrm{cf}}\).

What we need to prove is that

To incorporate the Replica Symmetry Breaking scenario we will have an additional variable \(\gamma \): let \(\Omega =\{0,1\}^N \times \Gamma \) where each \(\omega =(\sigma _1,\ldots ,\sigma _N,\gamma ) \in \Omega \) encodes a configuration of N variables \(\sigma _i\) and a state \(\gamma \) ranging over a countable set \(\Gamma \).

Imagine that at a particular stage of the interpolation we see a certain deterministic model. It is actually not important what the model is; the point is that it assigns a weight \(\Psi (\omega )\) to each configuration \(\omega \in \Omega \). If we normalize these weights with the corresponding partition function \(Z=\sum _{\omega \in \Omega } \Psi (\omega )\), then we get a probability distribution on \(\Omega \), called the Boltzmann distribution. It is a simple fact that adding a new weight factor \(\Psi '(\omega )\) to the model changes the free energy \(\log Z\) by \(\log \mathbb {E}_\omega \Psi '(\omega )\), where \(\mathbb {E}_\omega \) means taking expectation w.r.t. the Boltzmann distribution. It follows that

where \(c_1,c_2\) are chosen uniformly and independently from the set \(C \subseteq \{1,\ldots ,N\}\) of cavities, and \(x_1=(x^\gamma _1)_{\gamma \in \Gamma }\) and \(x_2=(x^\gamma _2)_{\gamma \in \Gamma }\) are two independent collections of random variables with the same joint distribution. Then (13) follows from the following lemma.

Lemma 6.3

Let X and Y be random \(\Omega \rightarrow [0,1]\) functions with independent copies \(X_1,X_2\) and \(Y_1,Y_2\), respectively. Then for any random \(\omega \in \Omega \) we have

Proof

Due to the identity

it suffices to show for each \(\ell \ge 1\) that

which can be easily seen to be equivalent to

where \(\omega _1,\ldots ,\omega _\ell \) are independent copies of \(\omega \).

Indeed, we may rewrite the first term of (14) as

Similar manipulations can be carried out for the two other terms. \(\square \)
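The "simple fact" used in the monotonicity argument can be checked by brute force on a tiny hard-core model: adding a factor \(\Psi '\) changes \(\log Z\) by \(\log \mathbb {E}_\omega \Psi '(\omega )\). This sketch ignores the state variable \(\gamma \) (equivalently, \(|\Gamma |=1\)), and its helper names are hypothetical. With \(\lambda =2\), closing the path 0–1–2–3 into a 4-cycle changes Z from 21 to 17.

```python
import itertools
import math


def hardcore_weights(n, edges, lam):
    """Boltzmann weights Psi(sigma) = lam**|sigma| of the hard-core model (brute force)."""
    weights = {}
    for sigma in itertools.product((0, 1), repeat=n):
        if all(not (sigma[u] and sigma[v]) for u, v in edges):
            weights[sigma] = lam ** sum(sigma)
    return weights


def free_energy_change(n, edges, new_edge, lam):
    """Return (log Z' - log Z, log E_sigma Psi'(sigma)) for Psi' = 1 - sigma_u sigma_v."""
    w = hardcore_weights(n, edges, lam)
    z = sum(w.values())
    u, v = new_edge
    # expectation of the new factor under the Boltzmann distribution of the old model
    expected_factor = sum(wt * (1 - s[u] * s[v]) for s, wt in w.items()) / z
    z_new = sum(hardcore_weights(n, edges + [new_edge], lam).values())
    return math.log(z_new) - math.log(z), math.log(expected_factor)


path = [(0, 1), (1, 2), (2, 3)]
delta, predicted = free_energy_change(4, path, (3, 0), lam=2.0)
print(delta, predicted)  # both equal log(17/21) up to rounding
```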

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Harangi, V. Improved Replica Bounds for the Independence Ratio of Random Regular Graphs. J Stat Phys 190, 60 (2023). https://doi.org/10.1007/s10955-022-03062-7
