Abstract

We develop new multilevel Monte Carlo (MLMC) methods to estimate the expectation of the smallest eigenvalue of a stochastic convection–diffusion operator with random coefficients. The MLMC method is based on a sequence of finite element (FE) discretizations of the eigenvalue problem on a hierarchy of increasingly finer meshes. For the discretized, algebraic eigenproblems we use both the Rayleigh quotient (RQ) iteration and implicitly restarted Arnoldi (IRA), providing an analysis of the cost in each case. By studying the variance on each level and adapting classical FE error bounds to the stochastic setting, we are able to bound the total error of our MLMC estimator and provide a complexity analysis. As expected, the complexity bound for our MLMC estimator is superior to plain Monte Carlo. To improve the efficiency of the MLMC further, we exploit the hierarchy of meshes and use coarser approximations as starting values for the eigensolvers on finer ones. To improve the stability of the MLMC method for convection-dominated problems, we employ two additional strategies. First, we consider the streamline upwind Petrov–Galerkin formulation of the discrete eigenvalue problem, which allows us to start the MLMC method on coarser meshes than is possible with standard FEs. Second, we apply a homotopy method to add stability to the eigensolver for each sample. Finally, we present a multilevel quasi-Monte Carlo method that replaces Monte Carlo with a quasi-Monte Carlo (QMC) rule on each level. Due to the faster convergence of QMC, this improves the overall complexity. We provide detailed numerical results comparing our different strategies to demonstrate the practical feasibility of the MLMC method in different use cases. The results support our complexity analysis and further demonstrate the superiority over plain Monte Carlo in all cases.


1 Introduction

We consider the following convection–diffusion eigenvalue problem with random coefficients: Find a non-trivial eigenpair \((\lambda ,u)\in {\mathbb {C}}\times H^1_0(D;{\mathbb {C}})\) such that

The PDE is considered for the physical variable \(\textbf{x}\) in a bounded Lipschitz domain \(D\subset {\mathbb {R}}^d\) with \(d=1,2,\) or 3, and for the stochastic variable \({\varvec{\omega }}\) from a given probability space \((\varOmega ,{\mathcal {F}},\pi )\). For \(\pi \)-almost all \({\varvec{\omega }} \in \varOmega \), we assume Dirichlet conditions on the boundary \(\varGamma = \partial D\),

The conductivity \(\kappa (\textbf{x},{\varvec{\omega }}):D\times \varOmega \rightarrow {\mathbb {R}}\) is a log-uniform random field (as used in, e.g., [19]), defined using the process convolution approach in [37], such that

with \(k(\textbf{x}-\textbf{c}_i)\) a kernel centered at a certain number of points \(\textbf{c}_i \in D\) and i.i.d. uniform random variables \(\omega _i\sim {\mathcal {U}}[0,1]\). Similarly, the convection velocity \(\textbf{a}(\textbf{x},{\varvec{\omega }}):D\times \varOmega \rightarrow {\mathbb {R}}^d\) can also be some bounded random variable, which also depends on uniform random variables \(\omega _i \sim {\mathcal {U}}[0, 1]\) and is additionally assumed to be divergence-free, i.e.,

The purpose of this paper is to compute the expectation of the smallest eigenvalue of (1),

using multilevel Monte Carlo methods.

Stochastic eigenvalue problems arise in a variety of physical and scientific applications and their numerical simulations. Factors such as measurement noise, limitations of mathematical models, the existence of hidden variables, the randomness of input parameters, and other factors contribute to uncertainties in the modelling and prediction of many phenomena. Applications of uncertainty quantification (UQ) specifically related to eigenvalue problems include: nuclear reactor criticality calculations [2, 3, 25], the derivation of the natural frequencies of an aircraft or a naval vessel [41], band gap calculations in photonic crystals [22, 27, 55], the computation of ultrasonic resonance frequencies to detect the presence of gas hydrates [51], the analysis of the elastic properties of crystals with the use of rapid measurements [52, 61], or the calculation of acoustic vibrations [12, 66]. Stochastic convection–diffusion equations are used to describe simple cases of turbulent [24, 44, 54, 63] or subsurface flows [64, 67].

Monte Carlo sampling is one of the most popular methods for quantifying uncertainties in quantities of interest coming from stochastic PDEs. Although simple and robust, Monte Carlo methods can be severely inefficient when applied to UQ problems, because their slow convergence rate often requires a large number of samples to meet the desired accuracy. To improve the efficiency, the multilevel Monte Carlo (MLMC) method was developed, where the key idea is to reduce the computational cost by spreading the samples over a hierarchy of discretizations. The main idea was introduced by Heinrich [36] for path integration, then generalized by Giles [30] for SDEs. More recently, MLMC methods have been applied with great success to stochastic PDEs, see, e.g., [6, 7, 14, 28, 29, 53, 60, 65] specifically for eigenproblems. A general overview of MLMC is presented by Giles [31].

In this paper, we present an MLMC method to approximate (4), which, motivated by the use of MLMC for source problems described above, is based on a hierarchy of discretizations of the eigenvalue problem (1) and which is much more efficient in practice than a Monte Carlo approximation. We consider two discretization methods, a standard Galerkin finite element method (FEM) and a streamline upwind Petrov–Galerkin (SUPG) method. The SUPG method improves the stability of the approximation for cases with high convection and also allows us to start the MLMC method from a coarser discretization. To further reduce the cost of our MLMC method, we again exploit the hierarchy of discretizations by using approximations on coarse levels as the starting values for the eigensolver on the fine level. We also present two extensions of MLMC that aim to improve different aspects of the method. First, to improve the stability of the eigensolver for each sample we include a homotopy method for solving convection–diffusion eigenvalue problems in the MLMC algorithm. The homotopy method computes the eigenvalue of the convection–diffusion operator by following a continuous path starting from the pure diffusion operator. Second, to improve the overall complexity we present a multilevel quasi-Monte Carlo method that aims to speed up the convergence of the variance on each level by replacing the Monte Carlo samples with a quasi-Monte Carlo (QMC) quadrature rule.

The structure of the paper is as follows. Section 2 introduces the variational formulation of (1), along with necessary background material on stochastic convection–diffusion eigenvalue problems. Two discrete formulations of the eigenvalue problem are introduced: the Galerkin FEM and the SUPG method. Section 3 introduces the MLMC method and presents the corresponding complexity analysis. In particular, this section details how to efficiently use each eigensolver, the Rayleigh quotient and implicitly restarted Arnoldi iterations, within the MLMC algorithm. In Sect. 4, we present the two extensions of our MLMC algorithm: a homotopy MLMC and a multilevel quasi-Monte Carlo method. Section 5 presents numerical results for finding the smallest eigenvalue of the convection–diffusion operator in a variety of settings. In particular, we present examples for difficult cases with high convection.

To ease notation, for the remainder of the paper we combine the random variables in the convection and diffusion coefficients into a single uniform random vector of dimension \(s < \infty \), denoted by \({\varvec{\omega }} = (\omega _i)_{i = 1}^s\) with \(\omega _i \sim {\mathcal {U}}[0, 1]\). In this case, \(\pi \) is the product uniform measure on \(\varOmega {:}{=}[0, 1]^s\).

2 Variational Formulation

The eigenvalue problem (1) needs to be discretized, because its solution is not analytically tractable for arbitrary geometries and parameters. As such, we apply the standard finite element method to (1) to obtain an approximation of the desired eigenpair \((\lambda , u)\).

Before deriving the variational form of (1), we first establish certain assumptions about the problem domain, the random field \(\kappa ({\varvec{\omega }})\) and the velocity field \(\textbf{a}({\varvec{\omega }})\) for \({\varvec{\omega }}\in \varOmega \). In particular, these assumptions ensure that the eigenfunctions are in \(H^2(D)\) [33] and that the flow is incompressible.

Assumption 1

Assume that \(D \subset {\mathbb {R}}^{d}\), for \(d = 1, 2, \) or 3, is a bounded, convex domain with Lipschitz continuous boundary \(\varGamma \).

Assumption 2

The diffusion coefficient is bounded from above and from below for almost all \({\varvec{\omega }} \in \varOmega \), i.e., there exist two constants \(\kappa _\textrm{min}, \kappa _\textrm{max}\) such that \(0<\kappa _{\min }\le \kappa (\textbf{x},{\varvec{\omega }}) \le \kappa _{\max }<\infty \). In addition, we assume that also \(\Vert \kappa (\cdot , {\varvec{\omega }})\Vert _{W^{1, \infty }} \le \kappa _\textrm{max}\) for almost all \({\varvec{\omega }} \in \varOmega \).

Assumption 3

The convection coefficient is divergence free, \(\nabla \cdot \textbf{a}(\textbf{x}, {\varvec{\omega }}) = 0\) for all \(\textbf{x}\in D\), and uniformly bounded, \(\Vert \textbf{a}(\cdot , {\varvec{\omega }})\Vert _{L^\infty } \le \textbf{a}_\textrm{max}\), for almost all \({\varvec{\omega }}\).

A simple example of a random convection term is a homogeneous convection, \(\textbf{a}(\textbf{x}, {\varvec{\omega }}) = [a_1\omega _1,\ldots , a_d \omega _d]^\top \) for \(a_1, \ldots , a_d \in {\mathbb {R}}\), which are independent of \(\textbf{x}\). Another example is the curl of a random vector field, e.g., \(\textbf{a}(\textbf{x}, {\varvec{\omega }}) = \nabla \times \varvec{Z}(\textbf{x}, {\varvec{\omega }})\) where \(\varvec{Z}\) is a vector-valued random field similar to that defined in (2). Both of these examples satisfy Assumption 3.
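The second example can be checked numerically in two dimensions, where the curl of \(\varvec{Z} = (0, 0, \psi )\) reduces to \(\textbf{a} = (\partial _y \psi , -\partial _x \psi )\) for a scalar stream function \(\psi \). The following is a minimal sketch only: the stream function, the number of random variables and the grid are hypothetical choices made for illustration and are not the random field (2).

```python
import numpy as np

def velocity_from_stream_function(psi, h):
    """Return a = (d psi/dy, -d psi/dx) on a uniform grid, psi[i, j] = psi(x_i, y_j)."""
    dpsi_dx, dpsi_dy = np.gradient(psi, h, h)
    return dpsi_dy, -dpsi_dx

def divergence(a1, a2, h):
    """Finite-difference divergence d a1/dx + d a2/dy on the same grid."""
    return np.gradient(a1, h, axis=0) + np.gradient(a2, h, axis=1)

n = 32
h = 1.0 / n
x = np.linspace(0.0, 1.0, n + 1)
X, Y = np.meshgrid(x, x, indexing="ij")

# Hypothetical random stream function with three uniform random variables.
rng = np.random.default_rng(0)
omega = rng.random(3)
psi = sum(w * np.sin((i + 1) * np.pi * X) * np.sin((i + 1) * np.pi * Y)
          for i, w in enumerate(omega))

a1, a2 = velocity_from_stream_function(psi, h)
div = divergence(a1, a2, h)
# Central differences commute, so the interior divergence vanishes up to rounding.
print("max interior |div a| =", np.abs(div[1:-1, 1:-1]).max())
```

Only interior grid points are inspected, because `np.gradient` switches to one-sided differences on the boundary, where the discrete cancellation no longer holds exactly.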

Next we introduce the variational form of (1). Whenever it does not lead to confusion, we drop the spatial coordinate of (stochastic) functions for brevity; for example, \(u(\textbf{x},{\varvec{\omega }})\) is also written as \(u({\varvec{\omega }})\). Let \(V=H^1_0(D)\) be the first-order Sobolev space of complex-valued functions with vanishing trace on the boundary with norm \(\Vert v\Vert _V=\Vert \nabla v\Vert _{L^2}\). Then let \(V^*\) denote the dual space of V. Multiplying (1) by a test function \(v\in V\) and then performing integration by parts, noting that we have no Neumann boundary condition term since \(u(\textbf{x},{\varvec{\omega }} )=0\) on \(\varGamma \), we obtain

The variational eigenvalue problem corresponding to (1) is then: Find a non-trivial eigenpair \((\lambda ({\varvec{\omega }}),u({\varvec{\omega }}))\in {\mathbb {C}}\times V\) with \(\Vert u({\varvec{\omega }})\Vert _{L^2} = 1\) such that

where

and \(\langle \cdot , \cdot \rangle \) denotes the \(L^2(D)\) inner product

Since the velocity \(\textbf{a}\) is divergence free, \(\nabla \cdot \textbf{a} = 0\), the sesquilinear form in (5) is uniformly coercive, i.e.,

with \(a_\textrm{min}> 0\) independent of \({\varvec{\omega }}\). It is also uniformly bounded, i.e.,

with \(a_\textrm{max}< \infty \) independent of \({\varvec{\omega }}\).

For each \({\varvec{\omega }} \in \varOmega \), the eigenvalue problem (5) admits a countable sequence of eigenvalues \((\lambda _k({\varvec{\omega }}))_{k = 1}^\infty \subset {\mathbb {C}}\), which has no finite accumulation points, and the smallest eigenvalue, \(\lambda _1({\varvec{\omega }})\), is real and simple, see, e.g., [4]. The eigenvalues are enumerated in order of increasing magnitude, counting multiplicity, such that

with corresponding eigenfunctions \((u_k(\cdot ,{\varvec{\omega }}))_{k = 1}^\infty \), enumerated accordingly.

In addition to the primal form (5), to facilitate our analysis later on we also consider the dual eigenproblem: Find a non-trivial dual eigenpair \((\lambda ^*({\varvec{\omega }}),u^*({\varvec{\omega }}))\in {\mathbb {C}}\times V\) with \(\Vert u^*({\varvec{\omega }})\Vert _{L^2}=1\) such that

The primal and dual eigenvalues are related to each other via \(\lambda ({\varvec{\omega }})=\overline{\lambda ^*({\varvec{\omega }})}\).

Proposition 1

For all \({\varvec{\omega }} \in \varOmega \), the smallest eigenvalue \(\lambda _1({\varvec{\omega }})\) of (5) is simple and the gap is uniformly bounded, i.e., there exists \(\rho > 0\), independent of \({\varvec{\omega }}\), such that

Proof

For each \({\varvec{\omega }} \in \varOmega \), the Krein–Rutman Theorem implies that \(\lambda _1({\varvec{\omega }})\) is simple. It remains to show that the gap is uniformly bounded for \({\varvec{\omega }} \in \varOmega \). Since the eigenvalues are continuous in \({\varvec{\omega }}\), it follows that the gap is also continuous. Hence, there exists a strictly positive minimum on the compact domain \(\varOmega \) and we can take

\(\square \)

Theorem 1

Suppose Assumptions 1–3 hold. For \({\varvec{\omega }} \in \varOmega \), let \((\lambda ({\varvec{\omega }}), u(\cdot , {\varvec{\omega }}))\) be an eigenpair of the EVP (5) and let \((\lambda ^*({\varvec{\omega }}), u^*(\cdot , {\varvec{\omega }}))\) be the corresponding dual eigenpair of the adjoint EVP (8), i.e., \(\lambda ({\varvec{\omega }}) = \overline{\lambda ^*({\varvec{\omega }})}\). Then, the primal and the dual eigenfunctions satisfy \(u(\cdot , {\varvec{\omega }}),\ u^*(\cdot , {\varvec{\omega }}) \in V \cap H^2(D)\) with

for \(C_{\lambda , 2} < \infty \) and \(C_{\lambda ^*, 2} < \infty \) independent of \({\varvec{\omega }}\).

Proof

Rearranging (1), we can write the Laplacian of \(u(\cdot , {\varvec{\omega }})\) as

which holds for almost all \(\textbf{x}\in D\). Since \(\kappa (\cdot , {\varvec{\omega }}) \in W^{1, \infty }(D)\), \(\textbf{a}(\cdot , {\varvec{\omega }}) \in L^\infty (D)^d\), \(u(\cdot , {\varvec{\omega }}) \in V\) and \(1/\kappa (\textbf{x}, {\varvec{\omega }}) \le 1/\kappa _\textrm{min}< \infty \) it follows that \(f_{\varvec{\omega }} \in L^2(D)\) with

where in the last step we have used that \(\Vert u({\varvec{\omega }})\Vert _{L^2} = 1\), as well as Assumptions 2 and 3. Since \(\lambda ({\varvec{\omega }}), u(\cdot , {\varvec{\omega }})\) satisfy (5) with \(\Vert u({\varvec{\omega }})\Vert _{L^2} = 1\) and the sesquilinear form is coercive, it follows from (6) that

where in the last inequality we have used Poincaré’s inequality, as well as \(\Vert u({\varvec{\omega }})\Vert _{L^2} = 1\) again. The first inequality also implies \(\Vert u({\varvec{\omega }})\Vert _V \le \sqrt{|\lambda ({\varvec{\omega }})|/a_\textrm{min}}\). Thus, substituting these two bounds, the \(L^2\)-norm of \(f_{\varvec{\omega }}\) is bounded by

where the constant is independent of \(\lambda \).

Next, using classical results in Grisvard [33] it follows that

where \(C_D\) depends only on the domain D. Finally, substituting in the bound on \(\Vert f_{\varvec{\omega }}\Vert _{L^2}\) (11) gives the desired upper bound (10).

The result for the dual eigenfunction follows analogously. \(\square \)

2.1 Finite Element Formulation

Let \(\{{\mathcal {T}}_h\}_{h>0}\) be a family of (quasi-)uniform, shape-regular, conforming meshes on the spatial domain D, where each \({\mathcal {T}}_h\) is parameterised by its mesh width \(h>0\). For \(h>0\), we approximate the infinite-dimensional space V by a finite-dimensional subspace \(V_h\). In this paper, we consider piecewise linear finite element (FE) spaces, but the method will work also for more general spaces.

The resulting discrete variational problem is to find non-trivial primal and dual eigenpairs \((\lambda _h({\varvec{\omega }}),u_h({\varvec{\omega }}))\in {\mathbb {C}}\times V_h\) and \((\lambda _h^*({\varvec{\omega }}),u_h^*({\varvec{\omega }}))\in {\mathbb {C}}\times V_h\) such that

and

For each \({\varvec{\omega }}\), it is well-known that for h sufficiently small the FE eigenvalue problem (12) admits \(M_h {:}{=}\dim (V_h)\) eigenpairs, denoted by

which approximate the first \(M_h\) eigenpairs of (5). This approach is also called the Galerkin method.

In convection-dominated regions, the Galerkin method has well-known stability issues for standard (Lagrange-type) FEs, if the element size h does not capture all necessary information about the flow. The Peclet number (sometimes called the mesh Peclet number) [68]

governs how small the mesh size h should be in order to have a stable solution using basic (Lagrange-type) FE methods.
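The effect of the Peclet number on the discrete spectrum can be illustrated with a small one-dimensional experiment. The sketch below is an analogue under stated assumptions, not the FE discretization used in this paper: it assumes the common definition \(\textrm{Pe} = |\textbf{a}| h / (2\kappa )\), constant coefficients, and a central-difference discretization of \(-\kappa u'' + a u' = \lambda u\) on (0, 1) with homogeneous Dirichlet conditions. The function names and the values of a and \(\kappa \) are hypothetical.

```python
import numpy as np

def mesh_peclet(a_norm, h, kappa):
    """Mesh Peclet number in the assumed form |a| h / (2 kappa)."""
    return a_norm * h / (2.0 * kappa)

def central_difference_eigs(a, kappa, n):
    """Eigenvalues of -kappa u'' + a u' = lambda u on (0, 1), u(0) = u(1) = 0,
    discretized with central differences on n equal intervals."""
    h = 1.0 / n
    m = n - 1  # number of interior nodes
    main = np.full(m, 2.0 * kappa / h**2)
    upper = np.full(m - 1, -kappa / h**2 + a / (2.0 * h))
    lower = np.full(m - 1, -kappa / h**2 - a / (2.0 * h))
    A = np.diag(main) + np.diag(upper, 1) + np.diag(lower, -1)
    return np.linalg.eigvals(A)

a, kappa = 20.0, 1.0  # illustrative values, not taken from the paper
for n in (8, 32):
    pe = mesh_peclet(abs(a), 1.0 / n, kappa)
    eigs = central_difference_eigs(a, kappa, n)
    print(f"n = {n:2d}, Pe = {pe:.4f}, "
          f"max |Im(lambda)| = {np.abs(eigs.imag).max():.3e}")
```

For this tridiagonal Toeplitz matrix the product of the two off-diagonal entries changes sign at \(\textrm{Pe} = 1\), so the coarse grid (Pe = 1.25) produces complex conjugate pairs, whereas the fine grid (Pe = 0.3125) gives a real spectrum, consistent with the continuous problem.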

The error in the FE approximations (14) can be analysed using the Babuška–Osborn theory [4]. We state the error bounds for a simple eigenpair.

Theorem 2

Let \((\lambda ({\varvec{\omega }}), u({\varvec{\omega }}))\) be an eigenpair of (5) that is simple for all \({\varvec{\omega }} \in \varOmega \), where \(\varOmega \) is a compact domain. Then there exist constants \(C_\lambda , C_u\), independent of h and \({\varvec{\omega }}\), such that

and \(u_h({\varvec{\omega }})\) can be normalized such that

Proof

See Babuška and Osborn [4] and the appendix, where we show explicitly that the constants are bounded uniformly in \({\varvec{\omega }}\). \(\square \)

2.2 Streamline-Upwind Petrov–Galerkin Formulation

A sufficiently small Peclet number (15) guarantees numerical stability of the standard Galerkin method. One can either choose a small overall mesh size h or locally adapt the mesh size to satisfy the stability condition. However, globally reducing the mesh size may lead to a high computational cost, while local adaptations may need to be performed path-wise for each realisation of \({\varvec{\omega }}\), which in turn leads to complications in the algorithmic design. In this section, we consider using the streamline-upwind Petrov–Galerkin (SUPG) method to improve numerical stability.

The SUPG method was introduced by Brooks and Hughes [10] to stabilize the finite element solution. Since then, the method has been extensively investigated and used in various applications [8, 15, 35, 39, 40, 43]. The SUPG method can be derived in several ways. Here, we introduce its formulation by adding a stabilization term to the bilinear form. An equivalent weak formulation can be obtained by defining a test space with additional test functions in the form \(\hat{v}(\textbf{x})=v(\textbf{x})+p(\textbf{x})\), where \(v(\textbf{x})\) is a standard test function in the finite element method and \(p(\textbf{x})\) is an additional discontinuous function.

We define the residual operator \({\mathcal {R}}\) as

which gives the residual of the convection–diffusion equation (1) for a pair \((\sigma , v) \in {\mathbb {C}}\times V\). Then, stabilization techniques can be derived from the general formulation

where \(|{\mathcal {T}}_h|\) is the number of elements of the mesh \({\mathcal {T}}_h\), \({\mathcal {P}}({\varvec{\omega }})\) is some stabilization operator and \(\tau _m({\varvec{\omega }})\) is the stabilization parameter acting in the mth finite element. The stabilization strategy will be determined by \({\mathcal {P}}({\varvec{\omega }})\) and \(\tau _m({\varvec{\omega }})\).

Various definitions exist for the operator \({\mathcal {P}}(v,{\varvec{\omega }})\), such as the Galerkin Least Square method [38], the SUPG method [9, 10, 23], the Unusual Stabilized Finite Element method [5], etc. For the SUPG method, the stabilization operator \({\mathcal {P}}({\varvec{\omega }})\) is defined as

Substituting Eqs. (18) and (20) into (19) gives the SUPG weighted residual formulation

which is equivalent to

After approximating the weak form (21) by the usual finite-dimensional subspaces, we obtain the discrete variational problem: Find non-trivial (primal) eigenpairs \((\lambda _h({\varvec{\omega }}),u_h({\varvec{\omega }}))\in {\mathbb {C}}\times V_h\) such that

and dual eigenpairs \((\lambda _h^*({\varvec{\omega }}),u_h^*({\varvec{\omega }}))\in {\mathbb {C}}\times V_h\) such that

It follows that the right-hand side matrix is no longer symmetric and is stochastic compared to the mass matrix in the standard Galerkin method.

In general, finding the optimal stabilization parameter \(\tau _m(\textbf{x},{\varvec{\omega }})\) is an open problem, and thus it is defined heuristically [43]. We employ the following stabilization parameter [8, 35]

However, in practical implementations the following asymptotic expressions of \(\tau _m(\textbf{x}, {\varvec{\omega }})\) are used

Figure 1 shows the 20 smallest eigenvalues for a single realization of random field \(\kappa (\textbf{x}, {\varvec{\omega }})\) with velocity \(\textbf{a}(\textbf{x},{\varvec{\omega }})=[50,0]^T\) on meshes with size \(h=2^{-3}, 2^{-4}, 2^{-5}\). The standard Galerkin method has non-physical oscillations in the discretized eigenfunction for such a coarse mesh and its two smallest eigenvalues form a complex conjugate pair; this contradicts the fact that the smallest eigenvalue should be real and simple. The SUPG method, on the other hand, has a real smallest eigenvalue, indicating a stable solution.

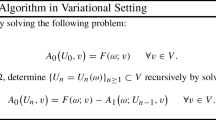

3 Multilevel Monte Carlo Methods

To compute \({\mathbb {E}}[\lambda ]\), we first approximate the eigenproblem (5) for each \({\varvec{\omega }} \in \varOmega \) and then use a sampling method to estimate the expected value of the approximate eigenvalue. There are two layers of approximation: First the eigenvalue problem is discretized by a numerical method, e.g., FEM or SUPG as in Sects. 2.1 and 2.2, then the resulting discrete eigenproblem is solved by an iterative eigenvalue solver, e.g., the Rayleigh quotient method, such that \(\lambda ({\varvec{\omega }}) \approx \lambda _h({\varvec{\omega }}) \approx \lambda _{h,K}({\varvec{\omega }})\), where h denotes the meshwidth of the spatial discretization and K denotes the number of iterations used by the eigenvalue solver.

Applying the Monte Carlo method to \(\lambda _{h, K}\), the expected eigenvalue can be approximated by the estimator

where the samples \(\{{\varvec{\omega }}_n\}_{n = 1}^N \subset \varOmega \) are i.i.d. and uniformly distributed on \(\varOmega \). This introduces a third factor that influences the accuracy of the estimator in (26) in addition to h and K, namely the number of samples N. Note that we assume that the number of iterations K is uniformly bounded in \({\varvec{\omega }}\).

The standard Monte Carlo estimator in (26) is computationally expensive. To measure its accuracy we use the mean squared error (MSE)

where the outer expectation is with respect to the samples in the estimator \(Y_{h,K,N}\). Under mild conditions, the MSE can be decomposed as

In this decomposition, the bias \(\left| {\mathbb {E}}[\lambda ({\varvec{\omega }})] - {\mathbb {E}}[\lambda _{h,K}({\varvec{\omega }})]\right| \) is controlled by h and K, whereas the variance term decreases linearly with 1/N. To guarantee that the MSE remains below a threshold \(\varepsilon ^2\), h and K need to be chosen such that the bias is \(O(\varepsilon )\), i.e., the squared bias is \(O(\varepsilon ^2)\), while the sample size needs to satisfy \(N = O(\varepsilon ^{-2})\). Suppose \(K = K(h)\) is sufficiently large so that the bias is solely controlled by h and satisfies \(\left| {\mathbb {E}}[\lambda ({\varvec{\omega }})] - {\mathbb {E}}[\lambda _{h,K}({\varvec{\omega }})]\right| = O(h^\alpha )\) for some \(\alpha >0\). Suppose further that the computational cost to compute \(\lambda _{h,K}({\varvec{\omega }})\) for each \({\varvec{\omega }}\) is \(O(h^{-\gamma })\) for some \(\gamma >0\). Then the total computational complexity to achieve an MSE of \(\varepsilon ^2\) is \(O(\varepsilon ^{-2-\gamma /\alpha })\). Note that in the best-case scenario, we have \(\gamma = d\), i.e., when the computational cost of an eigensolver iteration is linear in the degrees of freedom of the discretization and the number of iterations can be bounded independently of h. Due to the quadratic convergence of algebraic eigensolvers, K is usually controlled very easily.
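The complexity count above can be written out in a few lines. The following is a sketch in the standard form of the bias–variance split for a Monte Carlo estimator with i.i.d. samples; the constants are suppressed and the symbol \(\simeq \) (equality up to constants) is notation introduced here for illustration.

```latex
\begin{align*}
\mathbb{E}\big[(Y_{h,K,N} - \mathbb{E}[\lambda])^2\big]
  &= \big(\mathbb{E}[\lambda] - \mathbb{E}[\lambda_{h,K}]\big)^2
   + \frac{\mathrm{var}[\lambda_{h,K}]}{N}, \\
h^{\alpha} \simeq \varepsilon
  &\;\Longrightarrow\; h \simeq \varepsilon^{1/\alpha},
  \qquad N \simeq \varepsilon^{-2}, \\
\text{total cost} \simeq N\, h^{-\gamma}
  &\simeq \varepsilon^{-2}\,\varepsilon^{-\gamma/\alpha}
   = \varepsilon^{-2-\gamma/\alpha}.
\end{align*}
```

For instance, with \(\alpha = 2\) and \(\gamma = d = 2\) this gives a cost of order \(\varepsilon ^{-3}\) for plain Monte Carlo.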

The multilevel Monte Carlo (MLMC) method offers a natural way to reduce the complexity of the standard Monte Carlo method by spreading the samples over a hierarchy of discretizations. In our setting, we define a sequence of meshes corresponding to mesh sizes \(h_0>h_1>\cdots>h_L > 0\). This in turn defines a sequence of discretized eigenvalues \(\lambda _{h_0, K_0}({\varvec{\omega }}), \lambda _{h_1, K_1}({\varvec{\omega }}),\dots ,\lambda _{h_{L}, K_L}({\varvec{\omega }})\) that approximate \(\lambda ({\varvec{\omega }})\) with increasing accuracy and increasing computational cost. The MLMC method approximates \({\mathbb {E}}[\lambda ({\varvec{\omega }})]\) using the telescoping sum

where \(\lambda _\ell ({\varvec{\omega }}):=\lambda _{h_\ell ,K_\ell }({\varvec{\omega }})\) is the shorthand notation for the discretized eigenvalues. Each expected value of differences in (27) can be estimated by an independent Monte Carlo approximation, leading to the multilevel estimator

Suppose independent samples are used to compute each \(Y_\ell \), then

and the MSE of (28) can also be split into a bias and a variance term, i.e.,

Thus, to again ensure an MSE of \(O(\varepsilon ^2)\), it is sufficient to ensure that the squared bias, \(\Bigg |{\mathbb {E}}[\lambda ({\varvec{\omega }})] - {\mathbb {E}}[\lambda _{L}({\varvec{\omega }})]\Bigg |^2 \), and the variance, \(\textrm{var}[Y]\), are both less than \(\frac{1}{2}\varepsilon ^2\). The following theorem from [14] (see also [31]) provides bounds on the computational cost of a general MLMC estimator and applies in particular to (28).

Theorem 3

Let Q denote a random variable and \(Q_{\ell }\) its numerical approximation on level \(\ell \), and suppose \(C_\ell \) is the computational cost of evaluating one realization of the difference \(Q_\ell -Q_{\ell -1}\). Consider the multilevel estimator

where \(Q_{\ell ,n}\) is a sample of \(Q_{\ell }\) and \(Q_{-1,n} = 0\), for all n.

If there exist positive constants \(\alpha , \beta , \gamma \) such that \(\alpha \ge \frac{1}{2}\min (\beta ,\gamma )\) and

I. \(|{\mathbb {E}}[Q_\ell -Q]| = O( h_{\ell }^{\alpha })\) (convergence of bias),

II. \(\textrm{var}[Y_\ell ] = O(h_{\ell }^{\beta })\) (convergence of variance),

III. \(C_\ell = O(h_{\ell }^{-\gamma })\) (cost per sample),

then for any \(0< \varepsilon < e^{-1}\) there exist a constant c, a stopping level L, and sample sizes \(\{N_\ell \}_{\ell = 0}^L\) such that the MSE of Y satisfies \(\textrm{MSE}({\mathbb {E}}[Q], Y) \le \varepsilon ^2\) with a total computational complexity, denoted by \(C(\varepsilon )\), satisfying

where the constant c is independent of \(\alpha \), \(\beta \) and \(\gamma \).
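For orientation, the complexity bound of this type is usually stated in the MLMC literature in the following three-regime form. It is given here as a reference sketch in the notation of Theorem 3 and should be read alongside the statement in [14].

```latex
\[
C(\varepsilon) \le
\begin{cases}
c\,\varepsilon^{-2}, & \text{if } \beta > \gamma, \\
c\,\varepsilon^{-2}(\log \varepsilon)^{2}, & \text{if } \beta = \gamma, \\
c\,\varepsilon^{-2-(\gamma-\beta)/\alpha}, & \text{if } \beta < \gamma.
\end{cases}
\]
```

As an illustration with hypothetical rates \(\alpha = 2\), \(\beta = 4\) and \(\gamma = 2\), the first case applies and the cost is of order \(\varepsilon ^{-2}\), compared with \(\varepsilon ^{-2-\gamma /\alpha } = \varepsilon ^{-3}\) for plain Monte Carlo.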

For a given \(\varepsilon \), from [14] the maximum level L in Theorem 3 is given by

where \(c_I\) is the implicit constant from Assumption I (convergence of bias) above. The optimal sample sizes, \(\{N_\ell \}\), that minimize the computational cost of the multilevel estimator in Theorem 3 are obtained using a standard Lagrange multipliers argument as in [14] and are given by

Since \(\beta > 0\), Theorem 3 shows that for all cases in (31), the MLMC complexity is superior to that of Monte Carlo. When \(\beta >\gamma \), the variance reduction rate is larger than the rate of increase of the computational cost, and thus most of the work is spent on the coarsest level. In this case, the multilevel estimator has the best computational complexity. When \(\beta <\gamma \) the total computational work of the multilevel estimator may only have a marginal improvement compared to that of the classic Monte Carlo method.
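To make the interplay of the telescoping sum, the variance per level and the sample sizes concrete, the following is a minimal sketch of an MLMC estimator for a toy problem. Everything problem-specific is an assumption made for illustration: a one-dimensional central-difference discretization instead of FE, the coefficients \(\kappa = 2^{\omega _1}\) and \(a = 4\omega _2\), the cost model \(C_\ell = 2^\ell \), and the function names. The sample sizes use the standard allocation \(N_\ell = \lceil 2\varepsilon ^{-2}\sqrt{V_\ell /C_\ell }\,\sum _k \sqrt{V_k C_k}\,\rceil \) with \(V_\ell \) estimated from a pilot run.

```python
import numpy as np

def smallest_eig(level, omega, n0=8):
    """Smallest eigenvalue of the toy problem -kappa u'' + a u' = lambda u on (0, 1)
    with Dirichlet conditions, central differences, mesh width 1 / (n0 * 2**level)."""
    n = n0 * 2**level
    h = 1.0 / n
    kappa = 2.0 ** omega[0]   # toy log-uniform diffusion coefficient in [1, 2]
    a = 4.0 * omega[1]        # toy convection coefficient in [0, 4]
    m = n - 1
    A = (np.diag(np.full(m, 2.0 * kappa / h**2))
         + np.diag(np.full(m - 1, -kappa / h**2 + a / (2.0 * h)), 1)
         + np.diag(np.full(m - 1, -kappa / h**2 - a / (2.0 * h)), -1))
    return np.linalg.eigvals(A).real.min()

def level_differences(level, n_samples, rng):
    """Samples of lambda_l - lambda_{l-1}, using the same omega on both levels."""
    omegas = rng.random((n_samples, 2))
    fine = np.array([smallest_eig(level, w) for w in omegas])
    if level == 0:
        return fine
    coarse = np.array([smallest_eig(level - 1, w) for w in omegas])
    return fine - coarse

def mlmc(eps, L=3, n_pilot=50, seed=0):
    rng = np.random.default_rng(seed)
    cost = 2.0 ** np.arange(L + 1)  # assumed cost model C_l ~ h_l^{-1}
    diffs = [level_differences(l, n_pilot, rng) for l in range(L + 1)]
    var = np.array([d.var(ddof=1) for d in diffs])  # pilot estimates of V_l
    n_opt = np.ceil(2.0 / eps**2 * np.sqrt(var / cost)
                    * np.sum(np.sqrt(var * cost))).astype(int)
    for l in range(L + 1):
        extra = n_opt[l] - diffs[l].size
        if extra > 0:  # pilot samples are reused, a common practical shortcut
            diffs[l] = np.concatenate([diffs[l], level_differences(l, extra, rng)])
    return sum(d.mean() for d in diffs), n_opt

estimate, n_opt = mlmc(eps=0.1)
# Continuous toy problem: lambda = kappa pi^2 + a^2 / (4 kappa), so
# E[lambda] = pi^2 E[kappa] + E[a^2] E[1 / kappa] / 4 by independence.
exact = np.pi**2 / np.log(2.0) + (16.0 / 3.0) * (0.5 / np.log(2.0)) / 4.0
print("MLMC estimate:", estimate, " reference:", exact, " N_l:", n_opt)
```

In this toy setting almost all samples are placed on the coarsest level, because the variance of the level differences decays much faster than the assumed cost grows, which is the regime \(\beta > \gamma \) discussed above.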

Corollary 1

(Order of convergence) For \({\varvec{\omega }} \in \varOmega \), let \(h > 0\) be sufficiently small and consider two finite element approximations, cf. (12), of the smallest eigenvalue \(\lambda ({\varvec{\omega }})\) of the eigenvalue problem (5) with \(h_{\ell -1} = h\) and \(h_{\ell } = h/2\). The expectation of their difference is bounded by

while the variance of the difference is bounded by

for two constants \(c_1, c_2\) that are independent of \({\varvec{\omega }}\), h and \(\ell \).

Proof

Applying Theorem 2, since \(C_\lambda \) is independent of \({\varvec{\omega }}\) we have

and

Therefore, by the triangle inequality, we have

The variance reduction rate comes from the following relation

and, similarly, by the Cauchy-Schwarz inequality

\(\square \)
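The rates in Corollary 1 can be traced in a short calculation. The sketch below assumes that the eigenvalue bound of Theorem 2 has the form \(|\lambda ({\varvec{\omega }}) - \lambda _h({\varvec{\omega }})| \le C_\lambda h^2\), and it bounds the variance by the second moment rather than following the exact steps of the proof above; the explicit constants 5/4 and 25/16 are a consequence of this particular route.

```latex
\begin{align*}
|\lambda_{h/2}(\boldsymbol{\omega}) - \lambda_{h}(\boldsymbol{\omega})|
  &\le |\lambda(\boldsymbol{\omega}) - \lambda_{h/2}(\boldsymbol{\omega})|
     + |\lambda(\boldsymbol{\omega}) - \lambda_{h}(\boldsymbol{\omega})|
   \le C_\lambda\Big(\tfrac{h^2}{4} + h^2\Big)
   = \tfrac{5}{4}\, C_\lambda h^2, \\
\mathrm{var}\big[\lambda_{h/2} - \lambda_{h}\big]
  &\le \mathbb{E}\big[|\lambda_{h/2} - \lambda_{h}|^2\big]
   \le \tfrac{25}{16}\, C_\lambda^2 h^4 .
\end{align*}
```

Under this assumption the bias and variance rates in Theorem 3 are \(\alpha = 2\) and \(\beta = 4\).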

Remark 1

In our numerical experiments, we observed that the SUPG approximation of the eigenvalue problem, cf. (22), has similar rates of convergence \(\alpha \) and \(\beta \) in MLMC compared to the standard finite element approximation.

An important physical property of the smallest eigenvalue of (5) is that it is real and strictly positive. Clearly, \({\mathbb {E}}[\lambda ] > 0\) as well, and so we would like our multilevel approximation (28) to preserve this property. Below we show that a multilevel approximation based on Galerkin FEM with a geometrically-decreasing sequence of meshwidths is strictly positive provided that \(h_0\) is sufficiently small.

Proposition 2

Suppose that \(h_\ell = h_0 2^{-\ell }\) for \(\ell \in {\mathbb {N}}\) with \(h_0 > 0\) sufficiently small and let \(\lambda _{h_\ell }(\cdot )\) be the approximation of the smallest eigenvalue using the Galerkin FEM as in (12). Then, for any \(L \in {\mathbb {N}}\), the multilevel approximation of the smallest eigenvalue is strictly positive, i.e.,

Proof

First, since \(\lambda \) is continuous and strictly positive on \(\varOmega \) it can be bounded uniformly from below, i.e., there exists a strictly positive constant, independent of \({\varvec{\omega }}\), such that

For \(\ell = 0\), using (16) and (40) we can bound \(\lambda _{h_0}({\varvec{\omega }})\) uniformly from below by

Since this bound is independent of \({\varvec{\omega }}\), it follows that

Similarly, for \(\ell \ge 1\) using (16) we obtain

Again, this bound is independent of \({\varvec{\omega }}\) and so

Finally, we bound the multilevel approximation \(\widetilde{Y}\) from below using (41) and (42) as follows,

where we have used the property that \(h_0\) is sufficiently small to ensure \(\widetilde{Y} > 0\), as required. \(\square \)

The result above can be extended beyond the geometric sequence of FE meshwidths to a general sequence of FE meshwidths, provided that \(\sum _{\ell = 0}^L h_\ell ^2\) is sufficiently small. Similarly, as in Remark 1, we observe that the MLMC approximations based on SUPG are also strictly positive.

Choosing the number of iterations \(K_\ell \) such that the error of the eigensolver is of the same order as the FE error on each level, i.e., \(|\lambda _{h_\ell }(\varvec{\omega }) - \lambda _{h_\ell , K_\ell }(\varvec{\omega })| \lesssim h_\ell ^2\) for all \(\ell = 0, 1, \ldots , L\) and \(\varvec{\omega }\in \varOmega \), it can similarly be shown that the multilevel approximation (28) also satisfies \(Y > 0\).

To obtain the eigenvalue approximation on level \(\ell \), choosing a basis for the FE space \({V_\ell {:}{=}V_{h_\ell }}\) in (12) leads to a generalized (algebraic) eigenproblem in matrix form for each sample \({\varvec{\omega }}\), i.e.,

where \(\textbf{u}_\ell ({\varvec{\omega }})\) is the coefficient vector (with respect to the basis) and \(\textbf{A}_\ell ({\varvec{\omega }})\), \(\textbf{M}_\ell ({\varvec{\omega }})\) are the associated FE matrices corresponding to the mesh \({\mathcal {T}}_\ell :={\mathcal {T}}_{h_\ell }\). Both the number of iterations K entering the computational cost per sample and the growth rate of the cost per iteration depend on the choice of the algebraic eigensolver. A variety of solvers can be applied here to solve the generalized eigenvalue problem (43), including power iteration, the QR algorithm, subspace iterations, etc. For our purposes, we only need an eigensolver that is able to compute the smallest eigenvalue, which is real and simple. As such, we consider here two eigenvalue solvers, the Rayleigh quotient iteration and the implicitly restarted Arnoldi method.

We first consider the Rayleigh quotient iteration (Algorithm 1), introduced first by Lord Rayleigh in 1894 for a quadratic eigenproblem of oscillations of a mechanical system [57] and then extended in the 1950s and 1960s to non-symmetric generalized eigenproblems [17, 56]. The following lemma, whose proof can be found in Crandall [17] and Ostrowski [56], establishes the error reduction rate of the Rayleigh quotient iteration, which will in turn help to bound the computational cost on each level.

Lemma 1

Suppose we have an initial guess \(\lambda _{\ell ,0}({\varvec{\omega }})\) to the eigenvalue \(\lambda _\ell ({\varvec{\omega }})\) at the level \(\ell \) and \(|\lambda _{\ell ,0}({\varvec{\omega }})-\lambda _\ell ({\varvec{\omega }})|\) is sufficiently small. Then the sequence \(\lambda _{\ell ,i}({\varvec{\omega }})\) converges to \(\lambda _\ell ({\varvec{\omega }})\) quadratically, i.e., there exists a constant \({\hat{C}}({\varvec{\omega }})\) such that

\[ |\lambda _{\ell ,i+1}({\varvec{\omega }})-\lambda _\ell ({\varvec{\omega }})| \le {\hat{C}}({\varvec{\omega }})\, |\lambda _{\ell ,i}({\varvec{\omega }})-\lambda _\ell ({\varvec{\omega }})|^2 . \]

The computational cost of Rayleigh quotient iteration (RQI) is dominated by the cost of solving two linear systems in each iteration (cf. Lines 6 and 7 of Algorithm 1). For direct solvers, such as LU decomposition, the computational cost depends on the sparsity and bandwidth of the matrices, e.g., for piecewise linear FE applied to (5) and \(d = 2\), the cost for solving these linear systems on level \(\ell \) is \(O(h_\ell ^{-3})\) [26]. However, optimal iterative solvers, such as geometric multigrid methods, are able to achieve the optimal computational complexity of (or close to) \(O(h_\ell ^{-d})\). All other steps in Algorithm 1 are linear in the number of degrees of freedom, and thus \(O(h_\ell ^{-d})\). Hence, typically the cost per iteration grows with rate \(\gamma \ge d\), but it can be as large as \(\gamma =3\) for \(d=2\). The remaining factor in the computational cost is the number of iterations K for the Rayleigh quotient iteration within the MLMC estimator, but this is independent of \(h_\ell \).

Recall the MLMC estimator (28), where at each level \(\ell \) we compute the differences \(\lambda _{\ell }({\varvec{\omega }}_n)-\lambda _{\ell -1}({\varvec{\omega }}_n)\) for the same sample \({\varvec{\omega }}_n\). The number of RQI iterations needed for a sufficiently accurate approximation of \(\lambda _{\ell }({\varvec{\omega }}_n)\) (the more costly level \(\ell \) computation) can be significantly reduced by using the computed approximation of the eigenvalue \(\lambda _{\ell -1}({\varvec{\omega }}_n)\) on the coarser level as the initial guess, thus also reducing the total computational cost. To implement this strategy, we design a three-grid method similar to the one used in [29]. It uses the approximate eigenvalue \(\lambda _{0}({\varvec{\omega }}_n)\) on level zero with mesh size \(h_0\) as the initial guess for computing the eigenvalue \(\lambda _{\ell -1}({\varvec{\omega }}_n)\) on level \(\ell -1\). Then, \(\lambda _{\ell -1}({\varvec{\omega }}_n)\) is used as the initial guess for computing \(\lambda _{\ell }({\varvec{\omega }}_n)\); see Algorithm 2 for details.
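The warm-start idea can be illustrated on a toy problem. The following Python sketch is not Algorithm 2 itself. It assumes a one-dimensional model problem \(-\kappa u'' + a u' = \lambda u\) on (0, 1) with constant coefficients, a central finite-difference discretization (so the mass matrix is the identity) in place of FEs, a dense Gaussian elimination in place of a sparse LU solver, and a two-sided Rayleigh quotient update started from constant vectors. All function names, the values of \(\kappa \) and a, and the iteration counts are illustrative choices.

```python
import math

def fd_matrix(n, kappa, a):
    """Central-difference matrix for -kappa*u'' + a*u' on (0,1), n interior nodes."""
    h = 1.0 / (n + 1)
    A = [[0.0] * n for _ in range(n)]
    for i in range(n):
        A[i][i] = 2.0 * kappa / h**2
        if i > 0:
            A[i][i - 1] = -kappa / h**2 - a / (2.0 * h)
        if i < n - 1:
            A[i][i + 1] = -kappa / h**2 + a / (2.0 * h)
    return A

def solve(A, b):
    """Dense Gaussian elimination with partial pivoting (stand-in for sparse LU)."""
    n = len(b)
    M = [A[i][:] + [b[i]] for i in range(n)]
    for k in range(n):
        p = max(range(k, n), key=lambda i: abs(M[i][k]))
        M[k], M[p] = M[p], M[k]
        for i in range(k + 1, n):
            f = M[i][k] / M[k][k]
            for j in range(k, n + 1):
                M[i][j] -= f * M[k][j]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = M[i][n] - sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = s / M[i][i]
    return x

def rqi(A, shift, iters):
    """Two-sided Rayleigh quotient iteration for A x = lambda x (mass matrix = I)."""
    n = len(A)
    x, y, rho = [1.0] * n, [1.0] * n, shift
    for _ in range(iters):
        B = [[A[i][j] - (rho if i == j else 0.0) for j in range(n)] for i in range(n)]
        Bt = [list(col) for col in zip(*B)]
        try:
            x = solve(B, x)    # right vector: (A - rho*I) x_new = x
            y = solve(Bt, y)   # left vector:  (A - rho*I)^T y_new = y
        except ZeroDivisionError:
            break              # shift is an eigenvalue to working precision
        sx, sy = max(map(abs, x)), max(map(abs, y))
        x, y = [v / sx for v in x], [v / sy for v in y]
        Ax = [sum(A[i][j] * x[j] for j in range(n)) for i in range(n)]
        rho = sum(p * q for p, q in zip(y, Ax)) / sum(p * q for p, q in zip(y, x))
    return rho

def exact_discrete(n, kappa, a):
    """Closed-form smallest eigenvalue of the tridiagonal Toeplitz matrix above."""
    h = 1.0 / (n + 1)
    bc = (kappa / h**2) ** 2 - (a / (2.0 * h)) ** 2
    return 2.0 * kappa / h**2 - 2.0 * math.sqrt(bc) * math.cos(math.pi * h)

def three_grid(kappa, a, n0, n_coarse, n_fine):
    """Level 0 -> level l-1 -> level l, each warm-started by the previous eigenvalue."""
    lam0 = rqi(fd_matrix(n0, kappa, a), kappa * math.pi**2, iters=5)
    lam_c = rqi(fd_matrix(n_coarse, kappa, a), lam0, iters=3)
    lam_f = rqi(fd_matrix(n_fine, kappa, a), lam_c, iters=2)
    return lam_c, lam_f

lam_c, lam_f = three_grid(kappa=1.0, a=2.0, n0=7, n_coarse=15, n_fine=31)
print(lam_c - exact_discrete(15, 1.0, 2.0), lam_f - exact_discrete(31, 1.0, 2.0))
```

In this sketch only the eigenvalue is passed between grids; the vectors are restarted on each level, which keeps the example short but is a simplification.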

To estimate the computational cost of this three-grid method, we choose again \(h_{\ell -1} = h = 2 h_{\ell }\) and denote the exact discrete eigenvalues on level \(\ell -1\) and level \(\ell \) by \(\lambda _{h}({\varvec{\omega }}_n)\) and \(\lambda _{h/2}({\varvec{\omega }}_n)\), respectively. The goal is to control the errors of the eigenvalues \(\lambda _{\ell -1}({\varvec{\omega }}_n)\) and \(\lambda _{\ell }({\varvec{\omega }}_n)\) actually computed using Algorithm 2 to be within the respective discretization errors. Due to the quadratic convergence rate of the RQI (cf. Lemma 1), often only two or three iterations are sufficient to compute a sufficiently accurate approximation \(\lambda _{0}({\varvec{\omega }}_n)\) on Level 0 in Line 3 of Algorithm 2. Similarly, in Line 5 of Algorithm 2, two to three iterations of RQI are again sufficient to ensure that the error of the estimated eigenvalue \(\lambda _{\ell -1}({\varvec{\omega }}_n)\) satisfies

which is the bound on the discretization error on level \(\ell -1\) in Theorem 2. When \(\lambda _{\ell -1}({\varvec{\omega }}_n)\) is then used as the initial guess for estimating \(\lambda _{h/2}({\varvec{\omega }}_n)\), the initial error satisfies

using the triangle inequality and Theorem 2 again. Therefore, using Lemma 1 for sufficiently small mesh size h such that \(h \le \frac{2}{9} \big ({\hat{C}}({\varvec{\omega }}_n) C_\lambda \big )^{-1/2}\), one single iteration of RQI on level \(\ell \) suffices such that

In practice, two iterations of RQI are typically used to achieve the target accuracy for \(\lambda _\ell ({\varvec{\omega }}_n)\) in Line 10 of Algorithm 2. These two calls to RQI dominate the computational cost of Algorithm 2 with their four linear solves. Hence, for sparse direct solvers and \(d=2\), the overall computational cost of Algorithm 2 is \(O(h_\ell ^{-3})\) and \(\gamma = 3\) in Theorem 3. The computational complexity of Algorithm 2 can be further reduced using multigrid-based methods to efficiently solve the Rayleigh quotient iterations [11] that potentially offer a rate of \(\gamma = d\) (or close to) even in three dimensions. However, it is unclear if the same rate of convergence as for self-adjoint operators can be retained for the convection-dominated problems we are considering here.
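The constant \(\frac{2}{9}\) in the mesh-size condition above can be traced by a short calculation. The following is a sketch under two assumptions: that the bound of Theorem 2 has the form \(|\lambda ({\varvec{\omega }}) - \lambda _h({\varvec{\omega }})| \le C_\lambda h^2\), and that the computed coarse eigenvalue satisfies \(|\lambda _{\ell -1} - \lambda _h| \le C_\lambda h^2\). The argument \({\varvec{\omega }}_n\) is suppressed. By the triangle inequality,

\[ |\lambda _{\ell -1} - \lambda _{h/2}| \le |\lambda _{\ell -1} - \lambda _h| + |\lambda _h - \lambda | + |\lambda - \lambda _{h/2}| \le C_\lambda h^2 + C_\lambda h^2 + \tfrac{1}{4} C_\lambda h^2 = \tfrac{9}{4} C_\lambda h^2 . \]

One step of RQI with this initial error gives, by Lemma 1,

\[ |\lambda _{\ell } - \lambda _{h/2}| \le {\hat{C}} \big (\tfrac{9}{4} C_\lambda h^2\big )^2 = \tfrac{81}{16}\, {\hat{C}}\, C_\lambda ^2 h^4 , \]

which is at most the level-\(\ell \) discretization bound \(C_\lambda (h/2)^2 = \tfrac{1}{4} C_\lambda h^2\) precisely when

\[ h^2 \le \frac{4}{81\, {\hat{C}}\, C_\lambda } \quad \Longleftrightarrow \quad h \le \tfrac{2}{9} \big ({\hat{C}}\, C_\lambda \big )^{-1/2} . \]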

We also consider the implicitly restarted Arnoldi method [1, 48, 58, 59, 62] and its implementation in the library ARPACK [49] to solve the eigenvalue problem. In contrast to the Rayleigh quotient iteration, the Arnoldi method calculates a specified number of eigenpairs, which depends on the dimension of the Krylov subspace. The performance of the implicitly restarted Arnoldi method is determined by several factors such as the dimension of the Krylov subspace and the initial vector. To the best of the authors’ knowledge, for the eigenvalue problem (12) we are considering here, the convergence rate, and therefore the computational cost, of the implicitly restarted Arnoldi method is not yet known. As such, we numerically estimate the rate variable \(\gamma \) and the computational cost \(C_\ell \) for determining the optimal sample sizes in MLMC. It appears that the number of iterations grows slightly faster than \(O(h_\ell ^{-1})\), which for \(d=2\) leads to a cost rate of \(\gamma \approx 3.5\), similar to that of RQI.

4 Extensions of MLMC Method

In this section, we introduce two extensions of the MLMC method for convection–diffusion eigenvalue problems. First, we employ a homotopy method to add stability to the eigensolver for each sample. Second, we replace the Monte Carlo approximation of the expected value on each level in (27) with a quasi-Monte Carlo (QMC) method, which, due to the faster convergence of QMC, allows us to use fewer samples on each level and improves the overall complexity.

4.1 Homotopy Multilevel Monte Carlo Method

In Carstensen et al. [13], a homotopy method is employed to solve convection–diffusion eigenvalue problems with deterministic coefficients, using the homotopy method to derive adaptation strategies for FE methods. The authors also provided estimates on the convergence rate of the smallest eigenvalue with respect to the homotopy parameter. We aim to investigate the application of this homotopy method in the MLMC method, particularly in designing multilevel models for alleviating numerical instability (due to the high advection velocity) on coarser meshes.

For eigenvalue problems, the homotopy method [50] uses an initial operator \({\mathcal {L}}_0\)—for which the target eigenvalue is easier to compute than that of the original operator \({\mathcal {L}}\)—to form a continuation

with a function \(f:[0,1]\rightarrow [0,1]\) and \(f(0)=0\), \(f(1)=1\). For the convection–diffusion operator in (1), it is natural to set the diffusion operator as the initial operator. Here we consider a simple linear function \(f(t)=t\) to design the sequence of operators used for the homotopy. Given a sequence of homotopy parameters, \(0 = t_0< t_1< \cdots < t_L = 1\), the homotopy operators with stochastic coefficients define a sequence of eigenvalue problems of the form

for \(\ell = 0, \ldots , L\). The following lemma [13, Lemma 4.1] establishes the homotopy error on the smallest eigenvalue in (46) for fixed \({\varvec{\omega }}\).

Lemma 2

Suppose the velocity field \(\textbf{a}\) is divergence-free and \({\varvec{\omega }}\) is fixed. The homotopy error, defined as the difference between the smallest eigenvalue \(\lambda ({\varvec{\omega }},t=1)\) of the original operator and that of the homotopy operator in (46), satisfies for any \(t\in [0, 1]\)

where

and \(u^*({\varvec{\omega }},t)\) is the dual homotopy solution. For t sufficiently close to 1 and almost all \(\varvec{\omega }\in \varOmega \), \(C_{t, \varvec{\omega }} < C_t \) for some \(C_t < \infty \) independent of \(\varvec{\omega }\).

Proof

First, the primal and dual homotopy eigenvalue problems are

where we again normalise the homotopy eigenfunctions such that \(\Vert u({\varvec{\omega }}, t)\Vert _{L^2} = 1 = \Vert u^*({\varvec{\omega }}, t)\Vert _{L^2}\).

Following the proof of [13, Lemma 4.1], using the homotopy eigenvalue problems we can write the homotopy error as

where we have also used the property \(\lambda ({\varvec{\omega }}, t) = \overline{\lambda ^*({\varvec{\omega }}, t)}\).

Since \(\textbf{a}({\varvec{\omega }})\) is divergence free, we have

Then by the triangle inequality, followed by the Cauchy–Schwarz inequality

where we have used the property that the homotopy eigenfunctions are normalized and Assumption 3. Substituting (50) into (49) then rearranging gives the result (47) with \(C_{t, \varvec{\omega }}\) as in (48).

Next, we bound \(C_{t, \varvec{\omega }}\) independently of \(\varvec{\omega }\). Clearly, the numerator is bounded for all t and almost all \(\varvec{\omega }\). Next, we show that the denominator is strictly positive. Suppose for a contradiction that \(\langle u(\varvec{\omega }, 1), u^*(\varvec{\omega }, t)\rangle = 0\), then this implies that

since the eigenfunction and dual eigenfunction are not orthogonal if the corresponding eigenvalues satisfy \(\lambda (\varvec{\omega }, 1) = \overline{\lambda ^*}(\varvec{\omega }, 1)\). However, since \(u^*(\varvec{\omega }, t) \rightarrow u^*(\varvec{\omega }, 1)\) as \(t \rightarrow 1\), the left hand side tends to zero whereas the right hand side is strictly positive and independent of t, leading to a contradiction. Hence, for t sufficiently close to 1, \(\langle u(\varvec{\omega }, 1), u^*(\varvec{\omega }, t)\rangle > 0\) and similarly \(\langle u(\varvec{\omega }, t), u^*(\varvec{\omega }, 1)\rangle > 0\). Thus, for t sufficiently close to 1, \(C_{t, \varvec{\omega }} < \infty \). Since \(\textbf{a}(\varvec{\omega })\) along with the primal and dual eigenfunctions are continuous in \(\varvec{\omega }\), it follows that \(C_{t, \varvec{\omega }}\) is also continuous in \(\varvec{\omega }\) and thus can be bounded by the maximum over the compact domain \(\varOmega \),

\(\square \)

With the homotopy method, the approximation error now comes from three sources: the FE discretization, the iterative eigensolver, and the value of the homotopy parameter. We suppose again that the error due to the eigensolver is bounded from above by the other two sources of error and design multilevel sequences such that the homotopy error and the discretization error are non-increasing with increasing level. Denoting the homotopy parameter and the mesh size at level \(\ell \) by \(t_\ell \) and \(h_\ell \), respectively, the multilevel sequence

is designed such that \(t_{\ell -1} \le t_\ell \), \(h_{\ell -1} \ge h_\ell \), and \(t_L=1\). The multilevel parameters are required to be non-repetitive, i.e., \((t_{\ell -1}, h_{\ell -1})\ne (t_\ell ,h_\ell )\) for all \(\ell = 1, \ldots , L\), to ensure an asymptotically decreasing total approximation error in the sequence. However, one of these two parameters is allowed to be the same on two adjacent levels, i.e., either \(h_{\ell -1}=h_\ell \) or \(t_{\ell -1}=t_\ell \) is possible. This setting allows for adapting the homotopy parameter to discretisations on different meshes to satisfy the stability condition of the FE approximation.
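The constraints on the multilevel sequence can be checked mechanically. The following Python sketch encodes them for a list of \((t_\ell , h_\ell )\) pairs; the function name and the example sequences are hypothetical and serve only to illustrate the three conditions stated above.

```python
def is_valid_sequence(levels):
    """Check a list of (t, h) pairs, one per level l = 0..L.

    Conditions: t is non-decreasing, h is non-increasing, adjacent levels
    differ in at least one parameter, and the last level has t = 1.
    """
    if not levels or levels[-1][0] != 1.0:
        return False
    for (t_prev, h_prev), (t, h) in zip(levels, levels[1:]):
        if t_prev > t or h_prev < h:
            return False
        if (t_prev, h_prev) == (t, h):
            return False
    return True

# Example: t is raised on a fixed mesh first, then the mesh is refined at t = 1.
ok = [(0.0, 2**-3), (0.75, 2**-3), (1.0, 2**-3), (1.0, 2**-4)]
# Invalid: levels 1 and 2 repeat the same pair.
bad = [(0.0, 2**-3), (1.0, 2**-4), (1.0, 2**-4)]
print(is_valid_sequence(ok), is_valid_sequence(bad))
```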

The resulting MLMC estimator can be derived from the telescoping sum

Following a similar derivation as that of Corollary 1 and based on the error bound in Lemma 2, we conjecture that the expectation and the variance of the multilevel difference with the homotopy method are bounded by

respectively. This will be used as the guideline for choosing the multilevel sequences in our numerical experiments. We will also demonstrate that the above conjecture is valid in our numerical experiments.

4.2 Multilevel QMC Methods

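QMC methods are a class of equal-weight quadrature rules originally designed to approximate high-dimensional integrals on the unit hypercube. A QMC approximation of the expected value of f is given by

\[ {\mathbb {E}}[f] \approx Q_N f := \frac{1}{N} \sum _{k = 0}^{N - 1} f(\varvec{\tau }_k), \tag{52} \]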

where, in contrast to Monte Carlo methods, the quadrature points \(\{\varvec{\tau }_k\}_{k = 0}^{N - 1} \subset [0, 1]^s\) are chosen deterministically to be well-distributed and have good approximation properties in high dimensions. There are several types of QMC methods, including lattice rules, digital nets and randomised rules. The main benefit of QMC methods is that for sufficiently smooth integrands the quadrature error converges at a rate of \({\mathcal {O}}(N^{-1 + \delta })\), \(\delta > 0\), or faster, which is better than the Monte Carlo convergence rate of \({\mathcal {O}}(N^{-1/2})\). For further details see, e.g., [20, 21].

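In this paper, we consider randomly shifted lattice rules, which are generated by a single integer vector \(\textbf{z}\in {\mathbb {N}}^s\) and a single random shift \(\varvec{\varDelta }\sim \textrm{Uni}[0, 1]^s\). The points are given by

\[ \varvec{\tau }_k = \left\{ \frac{k \textbf{z}}{N} + \varvec{\varDelta } \right\} , \quad k = 0, 1, \ldots , N - 1, \tag{53} \]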

where \(\{\cdot \}\) denotes taking the fractional part of each component. The benefits of random shifting are that the resulting approximation (52) is unbiased and that performing multiple QMC approximations with i.i.d. random shifts provides a practical estimate for the mean-square error using the sample variance of the multiple approximations.
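A randomly shifted lattice rule with several i.i.d. shifts can be sketched in a few lines of Python. The generating vector below is a simple Korobov-type choice made only for illustration (it is not an optimized vector and not the one used in our experiments), and the integrand is a hypothetical smooth test function with known integral 1. The function names are likewise illustrative.

```python
import random

def shifted_lattice_points(z, n, shift):
    """Points tau_k = frac(k*z/n + shift) for k = 0, ..., n-1."""
    return [[(k * zj / n + dj) % 1.0 for zj, dj in zip(z, shift)] for k in range(n)]

def qmc_estimate(f, z, n, num_shifts, rng):
    """Average over i.i.d. random shifts, plus a sample-variance estimate of its MSE."""
    s = len(z)
    estimates = []
    for _ in range(num_shifts):
        shift = [rng.random() for _ in range(s)]
        pts = shifted_lattice_points(z, n, shift)
        estimates.append(sum(f(p) for p in pts) / n)
    mean = sum(estimates) / num_shifts
    var = sum((q - mean) ** 2 for q in estimates) / (num_shifts - 1)
    return mean, var / num_shifts

def integrand(x):
    """Smooth test function on [0,1]^s whose exact integral is 1."""
    prod = 1.0
    for xj in x:
        prod *= 1.0 + (xj - 0.5)
    return prod

n, a, s = 1024, 19, 4
z = [pow(a, j, n) for j in range(s)]   # Korobov-type vector (illustrative only)
mean, mse = qmc_estimate(integrand, z, n, num_shifts=32, rng=random.Random(0))
print(mean, mse)
```

The second return value is the sample variance of the shift-wise estimates divided by the number of shifts, i.e., an estimate of the MSE of the averaged approximation.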

If f is sufficiently smooth (i.e., has square-integrable mixed first derivatives) then a generating vector can be constructed such that the mean-square error (MSE) of a randomly shifted lattice rule approximation satisfies

see, e.g., Theorem 5.10 in [20]. That is, for \(\eta \approx 1/2\) the convergence of the MSE is close to \(1/N^{2}\).

Starting again with the telescoping sum (27), a multilevel QMC (MLQMC) method approximates the expectation of the smallest eigenvalue by using a QMC rule to compute the expectation on each level. MLQMC methods were first introduced in [32] for SDEs, then applied to parametric PDEs in [46, 47] and elliptic eigenvalue problems in [28, 29]. For \(L \in {\mathbb {N}}\) and \(\{N_\ell \}_{\ell = 0}^L\), the MLQMC approximation is given by

where we apply a different QMC rule with points \(\{\varvec{\tau }_{\ell , k}\}_{k = 0}^{N_\ell - 1}\) on each level, e.g., an \(N_\ell \)-point randomly shifted lattice rule (53) generated by \(\textbf{z}_\ell \) and an i.i.d. \(\varvec{\varDelta }_\ell \).

The faster convergence of QMC rules leads to an improved complexity of MLQMC methods compared to MLMC, where in the best case the cost is reduced to close to \(\varepsilon ^{-1}\) for a MSE of \(\varepsilon ^2\). Following [46], under the same assumptions as in Theorem 3, but with Assumption II replaced by

- II(b): \(\textrm{MSE}[Y_\ell ^\textrm{QMC}] = O(N_\ell ^{-1/\eta } h_\ell ^\beta )\) with \(\eta \in (\frac{1}{2}, 1]\),

the MSE of the MLQMC estimator (55) is bounded above by \(\varepsilon ^2\) and the cost satisfies

The maximum level L is again given by (32) and \(\{N_\ell \}\) are given by

where \(C_\ell \) is the cost per sample as in assumption III in Theorem 3 and \(N_0\) is chosen as

Verifying Assumption II(b) for the convection–diffusion EVP (1) requires performing a technical analysis similar to [28] and, in particular, requires bounding the derivatives of the eigenvalue \(\lambda (\varvec{\omega })\) and its eigenfunction \(u(\varvec{\omega })\) with respect to \(\varvec{\omega }\). Such analysis is left for future work. In the numerical results section, we study the convergence of QMC and observe that II(b) holds with \(\eta \approx 0.61\).

In practice, one should perform multiple, say \(R \in {\mathbb {N}}_0\), QMC approximations corresponding to i.i.d. random shifts, then take the average as the final estimate. In this way, we can also estimate the MSE by the sample variance over the different realisations.

5 Numerical Results

In this section, we present numerical results for three test cases. The quantity of interest in all cases is the smallest eigenvalue of the stochastic convection–diffusion problem (1) in the unit domain \(D=[0,1]^2\). The first two test cases use constant convection velocities at different magnitudes to benchmark the performance of eigenvalue solvers and finite element discretisation methods in the multilevel setting. In these two test cases, the random conductivity \(\kappa (\textbf{x};{\varvec{\omega }})\) is modelled as a log-uniform random field constructed through the convolution of \(s_\kappa \) i.i.d. uniform random variables

with exponential kernels \(k(\textbf{x}-\textbf{c}_i)=\exp [-\frac{25}{2}\Vert \textbf{x}-\textbf{c}_i\Vert _2]\), where \(\textbf{c}_i\) are the kernel centers placed uniformly on a \(5\times 5\) grid in the domain D. In the third test case, we also make the convection velocity a random field. Specifically, we first construct a log-uniform random field

similar to that of the conductivity field using additional \(s_a\) i.i.d. uniform random variables. Then, a divergence-free velocity field can be obtained by

We employ the Eigen [34] library for Rayleigh quotient iteration and solve the linear systems using sparse LU decomposition with permutation from the SuiteSparse [18] library. For the implicitly restarted Arnoldi method, we use the ARPACK [49] library with the SM mode for finding the smallest eigenvalue. Random variables are generated using the standard C++ library and the pseudo-random seeds are the same across all experiments.

Numerical experiments are organized as follows. For a relatively low convection velocity \(\textbf{a}=[20;0]^T\), we demonstrate the multilevel Monte Carlo (MLMC) method using the Galerkin FEM discretization. In this case, we also consider applying the homotopy method together with a geometrically refined mesh hierarchy. Then, on a test case with relatively high convection velocity \(\textbf{a}=[50;0]^T\), we demonstrate the extra efficiency gain offered by the numerically more stable SUPG method, compared with the Galerkin discretization. For the third test case with a random velocity field, we apply SUPG to demonstrate the efficacy and efficiency of our multilevel method. Here we also demonstrate that quasi-Monte Carlo (QMC) samples can be used to replace Monte Carlo samples to further enhance the efficiency of multilevel methods.

For all multilevel methods, we consider a sequence of geometrically refined meshes with \(h_\ell = h_0 \times 2^{-\ell }, \ell = 0, 1, \ldots , 4\), and \(h_0 = 2^{-3}\). At the finest level, this gives 16129 degrees of freedom in the discretised linear system. We use \(10^4\) samples on each level \(\ell \) to compute the estimates of rate variables \(\alpha , \beta , \gamma \) in the MLMC complexity theorem (cf. Theorem 3).
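The mesh hierarchy and the quoted number of degrees of freedom can be reproduced with a few lines of Python. The sketch assumes one degree of freedom per interior vertex of a uniform grid on the unit square (piecewise linear elements with homogeneous Dirichlet conditions), an assumption that is consistent with the count \(16129 = 127^2\) on the finest level; the variable names are illustrative.

```python
h0, num_levels = 2.0 ** -3, 5
mesh_widths = [h0 * 2.0 ** -l for l in range(num_levels)]

# Interior vertices per direction: 1/h - 1 (assumed uniform grid, Dirichlet boundary).
dofs = [(round(1.0 / h) - 1) ** 2 for h in mesh_widths]
print(dofs)  # [49, 225, 961, 3969, 16129]
```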

5.1 Test Case I

In the first experiment, we set \(\textbf{a}=[20;0]^T\) and use the Galerkin FEM to discretize the convection–diffusion equation. The stopping criteria for the Rayleigh quotient iteration and for the implicitly restarted Arnoldi method are set to be \(10^{-12}\). In addition, for the implicitly restarted Arnoldi method, the Krylov subspace dimensions (the ncv values of ARPACK) are chosen empirically for each mesh size to optimize the number of Arnoldi iterations. They are \(m=20, 40, 70, 70, 100\) for \(h=2^{-3},2^{-4},2^{-5}, 2^{-6},2^{-7}\), respectively.

We demonstrate the efficiency of four variants of the MLMC method: (i) the three-grid Rayleigh quotient iteration (tgRQI) with a model sequence defined by grid refinement; (ii) tgRQI with a model sequence defined by grid refinement and homotopy; (iii) the implicitly restarted Arnoldi method (IRAr) with a model sequence defined by grid refinement; and (iv) IRAr with a model sequence defined by grid refinement and homotopy.

(i) MLMC with tgRQI: Fig. 2 illustrates the mean, the variance and the computational cost of multilevel differences \(\lambda _\ell ({\varvec{\omega }})-\lambda _{\ell -1}({\varvec{\omega }})\) of the smallest eigenvalue using tgRQI as the eigenvalue solver (without homotopy). Figure 2a also shows Monte Carlo estimates of the expected mean and variance of the smallest eigenvalue \(\lambda _\ell ({\varvec{\omega }})\) for each of the discretization levels. In addition to the computational cost, Fig. 2b also shows the number of Rayleigh quotient iterations used at each level. We observe that the average number of iterations follows our analysis of the computational cost of tgRQI (cf. Algorithm 2). From these plots, we estimate that the rate variables in the MLMC complexity theorem are \(\alpha \approx 2.0\), \(\beta \approx 4.0\) and \(\gamma \approx 2.41\). Since the variance reduction rate \(\beta \) is larger than the cost increase rate \(\gamma \), the MLMC estimator is in the best case scenario, with \(O(\varepsilon ^{-2})\) complexity, as stated in Theorem 3.
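Rate variables of this kind are typically read off as slopes in a log-scale plot against the level. A minimal Python sketch of such a fit is given below, assuming that the mesh width halves from level to level so that a quantity behaving like \(h_\ell ^{\alpha }\) has slope \(-\alpha \) in \(\log _2\) against \(\ell \). The level statistics are synthetic values made up for illustration, not data from our experiments, and `fit_rate` is a hypothetical helper.

```python
import math

def fit_rate(values):
    """Least-squares slope of log2(values[l]) against the level index l."""
    n = len(values)
    levels = range(n)
    logs = [math.log2(v) for v in values]
    mean_l = sum(levels) / n
    mean_y = sum(logs) / n
    num = sum((l - mean_l) * (y - mean_y) for l, y in zip(levels, logs))
    den = sum((l - mean_l) ** 2 for l in levels)
    return num / den

# Synthetic statistics of the multilevel differences on levels 1..4 (illustrative).
means = [0.3 * 2.0 ** (-2 * l) for l in range(1, 5)]
variances = [0.05 * 2.0 ** (-4 * l) for l in range(1, 5)]
costs = [1e-3 * 2.0 ** (2.4 * l) for l in range(1, 5)]

alpha, beta, gamma = -fit_rate(means), -fit_rate(variances), fit_rate(costs)
print(alpha, beta, gamma, beta > gamma)
```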

Fig. 2 MLMC method using tgRQI for Test Case I with \(\textbf{a}=[20;0]^T\) and Galerkin FEM: (a) mean (blue) and variance (red) of the eigenvalue \(\lambda _\ell \) (dashed) and of \(\lambda _\ell -\lambda _{\ell -1}\) (solid); (b) computational times for one multilevel difference (blue) and average number of Rayleigh quotient iterations (red) on each level. Where shown, the error bars represent ± one standard deviation (Color figure online)

(ii) MLMC with homotopy and tgRQI: Next, we consider the homotopy method in the MLMC setting together with tgRQI. We use the conjecture in (51) to set the homotopy parameters such that \(1 - t_\ell = O(h_\ell ^2)\), \(t_0 = 0\) and \(t_L = 1\). For \(L = 4\), i.e., five levels, this results in \(t_\ell = \{0, 3/4, 15/16, 63/64, 1\}\). With this choice the eigenproblem on the zeroth level contains no convection term and is thus self-adjoint. Figure 3a shows again the means and the variances of the multilevel differences \(\lambda _\ell -\lambda _{\ell -1}\) in this setting, together with MC estimates of the expected means and variances of the eigenvalues for each level. The hierarchy of homotopy parameters is chosen to guarantee good variance reduction for MLMC. Indeed, the variance of the multilevel difference decays smoothly with a rate \(\beta \approx 3.65\). The expected mean of the difference, on the other hand, stagnates between \(\ell = 1\) and \(\ell = 2\). However, this initial stagnation is irrelevant for the MLMC complexity theorem; eventually for \(\ell \ge 2\), the estimated means of the multilevel differences decrease again with a rate of \(\alpha \approx 2\). Figure 3b shows the number of Rayleigh quotient iterations used at each level and the computational cost, which grows with a rate of \(\gamma \approx 2.56\) here. This leads to the same asymptotic complexity for MLMC, since the regime is the same, i.e., \(\beta >\gamma \), which is the optimal regime in Theorem 3 with a complexity of \(O(\varepsilon ^{-2})\).
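The listed homotopy parameters follow from the rule \(1 - t_\ell = 4^{-\ell }\) on the intermediate levels, with the endpoints fixed at \(t_0 = 0\) and \(t_L = 1\). The constant 1 in front of \(4^{-\ell }\) is inferred from the listed values. A short Python sketch, with a hypothetical function name, reproduces the sequence exactly using rational arithmetic.

```python
from fractions import Fraction

def homotopy_parameters(num_levels):
    """t_0 = 0, t_L = 1, and 1 - t_l = 4**(-l) in between (h halves per level)."""
    L = num_levels - 1
    t = [Fraction(0)]
    t += [1 - Fraction(1, 4 ** l) for l in range(1, L)]
    t.append(Fraction(1))
    return t

print([str(t) for t in homotopy_parameters(5)])  # ['0', '3/4', '15/16', '63/64', '1']
```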

Fig. 3 MLMC method using homotopy and tgRQI for Test Case I with \(\textbf{a}=[20;0]^T\) and Galerkin FEM: (a) mean (blue) and variance (red) of the eigenvalue \(\lambda _\ell \) (dashed) and of \(\lambda _\ell -\lambda _{\ell -1}\) (solid); (b) computational times for one multilevel difference (blue) and average number of RQIs (red) on each level. Where shown, the error bars represent ± one standard deviation (Color figure online)

(iii) MLMC with IRAr: Similar results are obtained by using the implicitly restarted Arnoldi eigenvalue solver (without homotopy). Since the mean and the variance of the multilevel differences in this setting are almost identical to those of the Rayleigh quotient solver, we omit the plots here and only report the computational cost. Figure 4a shows the average number of matrix–vector products and the estimated CPU time for computing each of the multilevel differences, which grows with a rate of \(\gamma \approx 3.5\). Here, the increasing dimension of Krylov subspaces with grid refinement likely causes the higher growth rate of computational time compared to the experiment using tgRQI. Nonetheless, the MLMC estimator has again the optimal \(O(\varepsilon ^{-2})\) complexity.

Fig. 4 MLMC method using IRAr for Test Case I with \(\textbf{a}=[20;0]^T\) and Galerkin FEM, both without (a) and with (b) homotopy: average computational cost (blue) and average number of matrix–vector products (red) per sample of \(\lambda _\ell -\lambda _{\ell -1}\). The error bars represent ± one standard deviation (Color figure online)

(iv) MLMC with homotopy and IRAr: Finally, we consider the behaviour of IRAr with homotopy, using the same sequence for the homotopy parameter \(t_\ell \) as in (ii). Again, we only focus on computational cost, showing the average number of matrix–vector products and the CPU time for computing each of the multilevel differences in Fig. 4b. As in (ii), the cost grows at a rate of \(\gamma \approx 3\) leading again to the optimal \(O(\varepsilon ^{-2})\) complexity for MLMC.

5.1.1 Overall Comparison

In Fig. 5, we show the CPU time versus the root mean square error for all four presented MLMC estimators together, as well as for standard Monte Carlo estimators using tgRQI (red) and IRAr (blue). The estimated complexities of the standard Monte Carlo methods are \(O(\varepsilon ^{-2.92})\) and \(O(\varepsilon ^{-3.35})\) for tgRQI and IRAr, respectively. Overall, MLMC using tgRQI (without homotopy) outperforms all other methods, even though all four MLMC methods achieve the optimal \(O(\varepsilon ^{-2})\) complexity.

5.2 Test Case II

For the second experiment, we increase the velocity to \(\textbf{a}=[50;0]^T\) and focus on the comparison between Galerkin and SUPG discretizations. Thus, we only consider the three-grid Rayleigh quotient iteration (tgRQI) with a multilevel sequence based on geometrically refined grids without homotopy. Note that for such a strong convection, five steps in the homotopy approach are insufficient: the eigenvalues for consecutive homotopy parameters are too different to achieve variance reduction in the homotopy-based MLMC method. Its computational complexity is almost the same as the complexity of standard Monte Carlo, namely almost \(O(\varepsilon ^{-3.5})\). The performance of MLMC with implicitly restarted Arnoldi on the other hand is similar to MLMC with tgRQI.

Fig. 6 MLMC method using tgRQI for Test Case II with \(\textbf{a}=[50;0]^T\) and Galerkin FEM: (a) mean (blue) and variance (red) of the eigenvalue \(\lambda _\ell \) (dashed) and of \(\lambda _\ell -\lambda _{\ell -1}\) (solid); (b) computational time for one multilevel difference (blue) and average number of Rayleigh quotient iterations (red) on each level. Where shown, the error bars represent ± one standard deviation (Color figure online)

5.2.1 Galerkin

Due to the higher convection velocity, the first two levels are unstable for most of the realizations of \({\varvec{\omega }}\), as the FEM solution may exhibit non-physical oscillations. Thus, we set the coarsest level for the MLMC method to \(h_0=2^{-5}\) here. Keeping the same finest grid level \(h_L=2^{-7}\), this means that we only use a total of three levels (\(L=2\)) compared to the sequence in Test Case I, which had a total of five levels (\(L=4\)). Figure 6a shows the expectation and variance of the multilevel differences. Here, we only have a couple of data points for estimating the rate variables of the MLMC complexity theorem, but the estimates are \(\alpha \approx 2\) and \(\beta \approx 4\) as expected theoretically. The average number of Rayleigh quotient iterations in Fig. 6b also behaves as in Test Case I, with 5 iterations on the coarsest level and 2 iterations on the subsequent levels, as expected for the three-grid Rayleigh quotient iteration (Algorithm 2); recall that Levels 1 and 2 here correspond to Levels 3 and 4 in Figs. 2b and 3b. The estimated cost rate is \(\gamma \approx 1.88\), and thus the MLMC complexity is still \(O(\varepsilon ^{-2})\). However, we cannot use as many levels due to the numerical stability issues caused by the higher convection velocity, which substantially increases the prefactor in the \(O(\varepsilon ^{-2})\) cost of the algorithm.

5.2.2 SUPG

By using the SUPG discretization, we overcome the numerical stability issue and can use all five levels in MLMC, starting with \(h_0 = 2^{-3}\). As can be seen in Fig. 7a, the expectation and the variance of the multilevel differences converge with the same rates as for the Galerkin FEM, namely \(\alpha \approx 2\) and \(\beta \approx 4\) respectively. Also, clearly the use of SUPG leads to stable estimates even on the coarser levels. Figure 7b reports the average number of Rayleigh quotient iterations used at each level and the computational cost. We estimate that the computational cost increases at a rate of \(\gamma \approx 2.33\) here. In any case, the use of SUPG in the MLMC also results in the optimal \(O(\varepsilon ^{-2})\) complexity.

Fig. 7 MLMC method using tgRQI for Test Case II with \(\textbf{a}=[50;0]^T\) and SUPG discretization: (a) mean (blue) and variance (red) of the eigenvalue \(\lambda _\ell \) (dashed) and of \(\lambda _\ell -\lambda _{\ell -1}\) (solid); (b) computational time for one multilevel difference (blue) and average number of Rayleigh quotient iterations (red) on each level. Where shown, the error bars represent ± one standard deviation (Color figure online)

5.2.3 Overall Comparison

Figure 8 shows CPU times versus root mean square errors for the MLMC methods (with tgRQI and without homotopy) using Galerkin FEM and SUPG discretizations. They are compared to a standard Monte Carlo method with Galerkin FEM. Although both MLMC estimates have the optimal \(O(\varepsilon ^{-2})\) complexity, the stability offered by SUPG enables us to use more, coarser levels, thus leading to a smaller prefactor and a significant computational gain of a factor of 10–20 over the Galerkin FEM based method.

5.3 Test Case III

In this experiment, the convection velocity becomes a divergence-free random field generated using (57) and (58). We discretise the eigenvalue problem using SUPG and apply the three-grid Rayleigh quotient iteration (tgRQI) without homotopy to solve multilevel eigenvalue problems. The stopping criterion for tgRQI is set to \(10^{-12}\). The same sequence of grid refinements, \(h=2^{-3},2^{-4},2^{-5}, 2^{-6},2^{-7}\), as in previous test cases is used to construct multilevel estimators.

5.3.1 MLMC

Figure 9 illustrates the mean, the variance and the computational cost of multilevel differences \(\lambda _\ell ({\varvec{\omega }})-\lambda _{\ell -1}({\varvec{\omega }})\) of the smallest eigenvalue using tgRQI as the eigenvalue solver. Figure 9a also shows Monte Carlo estimates of the expected mean and variance of the smallest eigenvalue \(\lambda _\ell ({\varvec{\omega }})\) for each of the discretization levels. In addition to the computational cost, Fig. 9b also shows the number of Rayleigh quotient iterations used at each level. We observe that the average number of iterations follows our analysis of the computational cost of tgRQI (cf. Algorithm 2). From these plots, we estimate that the rate variables in the MLMC complexity theorem are \(\alpha \approx 2.0\), \(\beta \approx 4\) and \(\gamma \approx 2.23\). Since the variance reduction rate \(\beta \) is larger than the cost increase rate \(\gamma \), the MLMC estimator is in the best case scenario, with \(O(\varepsilon ^{-2})\) complexity, as stated in Theorem 3. In Fig. 11, we compare the computational complexity of MLMC to that of the standard Monte Carlo. Numerically, we observe that the CPU time of MLMC is approximately \(O(\varepsilon ^{-2.06})\), which is close to the theoretically predicted rate. In comparison, the CPU time of the standard MC is approximately \(O(\varepsilon ^{-3.2})\) in this test case.

5.3.2 MLQMC

All QMC computations were implemented using Dirk Nuyens’ code accompanying [45] and use a randomly shifted embedded lattice rule in base 2, as outlined in [16], with 32 i.i.d. random shifts. In Fig. 10, we plot the convergence of the MSE for both MC and QMC in three cases: for \(\lambda _0\) in plot (a), for the difference \(\lambda _1 - \lambda _0\) in plot (b), and for the difference \(\lambda _2 - \lambda _1\) in plot (c). Here the meshwidths are given by \(h_0 = 2^{-3}\), \(h_1 = 2^{-4}\) and \(h_2 = 2^{-5}\). In all cases, QMC outperforms MC. For \(\lambda _0\), the MSE for QMC converges at an observed rate of \(-1.78\), whereas MC converges with rate \(-1\). For the other two cases, which are MSEs of multilevel differences, QMC converges with an approximate rate of \(-1.63\), which is again clearly faster than the MC convergence rate of \(-1\). This observed MSE convergence for the QMC approximations of the differences implies that II(b) holds with \(\eta \approx 0.61\). To choose \(N_\ell \) for MLQMC, we use (56) with \(\eta \approx 0.61\) and with \(N_0\) scaled such that the overall MSE is less than \(\varepsilon ^2/\sqrt{2}\) for each tolerance \(\varepsilon \). Since we use a base-2 lattice rule, we round up \(N_\ell \) to the next power of 2.
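For readers unfamiliar with randomly shifted lattice rules, the sketch below shows how the estimate and its MSE can be obtained from a set of i.i.d. shifts. It is not the code accompanying [45]. As a stand-in for the embedded base-2 rule of [16], it uses a two-dimensional Fibonacci lattice (\(n = 610\), generating vector \((1, 377)\)) and a toy integrand with exact integral 1; all names are illustrative assumptions. The helper `next_power_of_two` mirrors the rounding of \(N_\ell \) described above.

```python
import math
import random


def shifted_lattice_estimate(f, n, z, num_shifts, rng):
    """Randomly shifted rank-1 lattice rule on [0, 1]^d.

    Returns (estimate, mse_estimate): the mean over the shifts and the
    sample variance of the per-shift averages divided by num_shifts.
    """
    dim = len(z)
    shift_means = []
    for _ in range(num_shifts):
        shift = [rng.random() for _ in range(dim)]
        total = 0.0
        for i in range(n):
            # Lattice point i*z/n, shifted and wrapped back into the unit cube.
            point = [(i * z[j] / n + shift[j]) % 1.0 for j in range(dim)]
            total += f(point)
        shift_means.append(total / n)
    estimate = sum(shift_means) / num_shifts
    sample_var = sum((q - estimate) ** 2 for q in shift_means) / (num_shifts - 1)
    return estimate, sample_var / num_shifts


def next_power_of_two(n):
    """Smallest power of 2 that is >= n, for n >= 1."""
    return 1 << (math.ceil(n) - 1).bit_length()


def integrand(x):
    """Toy integrand prod_j (1 + (x_j - 1/2)) with exact integral 1."""
    value = 1.0
    for xj in x:
        value *= 1.0 + (xj - 0.5)
    return value


rng = random.Random(0)  # fixed seed for reproducibility
estimate, mse = shifted_lattice_estimate(integrand, 610, (1, 377), 32, rng)
print(estimate, mse, next_power_of_two(100))
```

Plotting `mse` against `n` on a log-log scale for a sequence of lattice sizes is one way to obtain observed rates of the kind reported in Fig. 10.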

The MLQMC complexity, in terms of CPU time, is plotted in Fig. 11, along with the results for MC and MLMC. Comparing the three methods in Fig. 11, MLQMC clearly provides the best complexity, followed by MLMC and then standard MC. In this case, we have the approximate rates \(\beta \eta \approx 4 \times 0.61 = 2.44 > \gamma \approx 2.23 \), which implies that for MLQMC we are in the optimal regime for the cost with \(C_\textrm{MLQMC}(\varepsilon ) \lesssim \varepsilon ^{-2\eta }\). Numerically, we observe a rate of approximately 1.28, which is close to the theoretically predicted rate of \(2\eta \approx 1.22\).
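The arithmetic behind these rates can be reproduced with a short sketch. It assumes that II(b) has the form MSE \(\lesssim N^{-1/\eta }\), so that an observed MSE rate r gives \(\eta = -1/r\). Only the optimal regime \(\beta \eta > \gamma \) discussed here is covered, and the function names are ours.

```python
def eta_from_mse_rate(rate):
    """Convert an observed MSE rate (MSE ~ N^rate, rate < 0) into eta,
    assuming the MSE behaves like N^(-1/eta)."""
    if rate >= 0:
        raise ValueError("expected a negative convergence rate")
    return -1.0 / rate


def mlqmc_cost_exponent(eta, beta, gamma):
    """Return 2*eta if beta*eta > gamma (optimal regime), otherwise None.

    The other regimes are deliberately left out of this sketch.
    """
    if beta * eta > gamma:
        return 2.0 * eta
    return None


eta = eta_from_mse_rate(-1.63)  # about 0.61
exponent = mlqmc_cost_exponent(eta, beta=4.0, gamma=2.23)
print(round(eta, 2), round(exponent, 2))
```

The small difference between this value of \(2\eta \) and the 1.22 quoted above comes only from rounding \(\eta \) to 0.61 before doubling.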

Fig. 9 MLMC method using tgRQI and SUPG for Test Case III with random velocity and random conductivity: a mean (blue) and variance (red) of the eigenvalue \(\lambda _\ell \) (dashed) and of \(\lambda _\ell -\lambda _{\ell -1}\) (solid); b computational times for one multilevel difference (blue) and average number of RQIs (red) on each level. Where shown, the error bars represent ± one standard deviation (Color figure online)

Fig. 10 Convergence of QMC and MC methods using tgRQI and SUPG for Test Case III with random velocity and conductivity. Plots a–c give the MSE of estimators versus sample sizes for grid sizes \(h = 2^{-3}, 2^{-4}, 2^{-5}\), respectively. Blue lines with circles and black lines with squares indicate the MSE for MC and QMC, respectively. Dashed lines and solid lines correspond to the MSE of the estimated multilevel differences and the MSE of the estimated eigenvalues, respectively (Color figure online)

6 Conclusion

In this paper we have considered and developed various MLMC methods for stochastic convection–diffusion eigenvalue problems in 2D. First, we established certain error bounds on the variational formulation of the eigenvalue problem under assumptions such as eigenvalue gap, boundedness, and other approximation properties. Then we presented the MLMC method based on a hierarchy of geometrically refined meshes with and without homotopy. We also discussed how to improve the computational complexity of MLMC by replacing Monte Carlo samples with QMC samples. Finally, we provided numerical results for three test cases with different convection velocities.

Test Case I shows that, for low convection velocity, all variants of the MLMC method (based on a Galerkin FEM discretization of the PDE) achieve optimal \(O(\varepsilon ^{-2})\) complexity, including the one with homotopy. In Test Case II with a high convection velocity, the homotopy-based MLMC no longer works (at least without increasing the number of levels), and MLMC based on Galerkin FEM has severe stability restrictions, preventing the use of a large number of levels. This restriction can be circumvented easily by using stable SUPG discretizations. Numerical experiments suggest that MLMC with SUPG achieves the optimal \(O(\varepsilon ^{-2})\) complexity and is 10–20 times faster than the Galerkin FEM-based versions for the same level of accuracy. In Test Case III, we considered both the conductivity and the convection velocity as random fields and compared the performance of MLMC and MLQMC. In this example, both MLMC and MLQMC deliver computational complexities that are close to the optimal complexities predicted by the theory, while the rate of the computational complexity of MLQMC outperforms that of MLMC.

7 Appendix: Bounding the Constants in the FE Error

The results in Theorem 2 follow from the Babuška–Osborn theory [4]. In this appendix we show that the constants can be bounded independently of the stochastic parameter.

The Babuška–Osborn theory studies how the continuous solution operators \(T_{\varvec{\omega }}\), \(T_{\varvec{\omega }}^*: V \rightarrow V\), which for \(f, g \in V\) are defined by

are approximated by the discrete operators \(T_{{\varvec{\omega }}, h}, T_{{\varvec{\omega }}, h}^*: V_h \rightarrow V_h\),

We summarize the pertinent details here. First, we introduce:

where the eigenspaces are defined by

The result for the eigenfunction (17) is given by [4, Thm. 8.1], which gives

for a constant \(C({\varvec{\omega }})\) defined below. Since \(\lambda ({\varvec{\omega }})\) is simple, the best approximation property of \(V_h\) in \(H^2(D)\) followed by Theorem 1 gives

where the best approximation constant \(C_\textrm{BAP}\) is independent of \({\varvec{\omega }}\). In the last inequality we have also used that \(\lambda ({\varvec{\omega }})\) is continuous on the compact domain \(\varOmega \), thus can be bounded uniformly by

Hence, all that remains is to bound \(C_u({\varvec{\omega }})\), uniformly in \({\varvec{\omega }}.\) This constant is given by

where \(\varGamma ({\varvec{\omega }})\) is a circle in the complex plane enclosing the eigenvalue \(\mu ({\varvec{\omega }}) = 1/\lambda ({\varvec{\omega }})\) of \(T_{\varvec{\omega }}\), but no other points in the spectrum \(\sigma (T_{\varvec{\omega }})\). Here, for an operator A and \(z \in \rho (A) = {\mathbb {C}}\setminus \sigma (A)\), the resolvent set of A, the resolvent operator is defined by \(R_z(A) {:}{=}(z - A)^{-1}\).

First, by the Lax–Milgram Lemma and the Poincaré inequality, \(T_{\varvec{\omega }}\) is bounded with \(\Vert T_{\varvec{\omega }}\Vert \le C_\textrm{Poin}/ a_\textrm{min}\). Also, since \({\mathcal {A}}\) is coercive (6) and \(u({\varvec{\omega }})\) satisfies (5), using (61) we have the bound

Consider next the norm of the resolvent \(\Vert R_z(T_{\varvec{\omega }})\Vert \) for \({\varvec{\omega }} \in \varOmega \). Note that care must be taken here since the domain for z, namely the resolvent set, changes with \({\varvec{\omega }}\).

Let \(\varGamma ({\varvec{\omega }}) = \{z \in {\mathbb {C}}: |z - \mu ({\varvec{\omega }})| = \gamma /2\}\), where \(\gamma \) is a lower bound on the spectral gap for \(\mu \)

So that for each \({\varvec{\omega }} \in \varOmega \) the circle \(\varGamma ({\varvec{\omega }})\) encloses only \(\mu ({\varvec{\omega }})\) and no other eigenvalues of \(T_{\varvec{\omega }}\). Then \(z \in \varGamma ({\varvec{\omega }})\) can be parametrised by both \({\varvec{\omega }} \in \varOmega \) and \(\theta \in [0, 2\pi ]\), namely \(z({\varvec{\omega }}, \theta ) = \mu ({\varvec{\omega }}) + \tfrac{\gamma }{2} e^{\textrm{i}\theta }\).

Clearly \(z(\cdot , \cdot )\) is continuous in both \({\varvec{\omega }}\) and \(\theta \) and belongs to the resolvent set, \(z({\varvec{\omega }}, \theta ) \in \rho (T_{\varvec{\omega }})\), for all \({\varvec{\omega }} \in \varOmega \) and \(\theta \in [0, 2\pi ]\). Thus, \(R_{z({\varvec{\omega }}, \theta )}(T_{\varvec{\omega }})\) is bounded for all \({\varvec{\omega }} \in \varOmega \) and \(\theta \in [0, 2\pi ]\).

For all \({\varvec{\omega }} \in \varOmega \) we have the bound

Now, in general the resolvent \(R_z(A)\) is continuous in both arguments, z and the (compact) operator A (in fact it is holomorphic, see [42, Theorem IV-3.11]). Since z is continuous in both \(\theta \) and \({\varvec{\omega }}\) and \(T_{\varvec{\omega }}\) is continuous in \({\varvec{\omega }}\), it follows that \(R_{z({\varvec{\omega }}, \theta )} (T_{\varvec{\omega }})\) is continuous in \(\theta \) and \({\varvec{\omega }}\). In turn, the norm \(\Vert R_{z({\varvec{\omega }}, \theta )}(T_{\varvec{\omega }})\Vert \) is also continuous in \(\theta \) and \({\varvec{\omega }}\). Thus, \(\Vert R_{z({\varvec{\omega }}, \theta )}(T_{\varvec{\omega }})\Vert \) is bounded and continuous on the compact domain \([0, 2\pi ] \times \varOmega \), and so the maximum is attained for some \((\theta ^*, {\varvec{\omega }}^*) \in [0, 2\pi ] \times \varOmega \), i.e., for all \({\varvec{\omega }} \in \varOmega \)

For h sufficiently small \(\Vert R_z(T_{{\varvec{\omega }}, h})\Vert \) can be bounded in a similar way.

For \(\varGamma ({\varvec{\omega }})\) defined above, \(\textrm{length}(\varGamma ({\varvec{\omega }})) = 2\pi \cdot \gamma /2 = \pi \gamma \), which is independent of \({\varvec{\omega }}\). Thus, \(C_u({\varvec{\omega }}) \le C_u < \infty \) for all \({\varvec{\omega }} \in \varOmega \), where

is independent of \({\varvec{\omega }}\).
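The compactness argument can be illustrated on a finite-dimensional toy problem. The sketch below does not use the operator \(T_{\varvec{\omega }}\) of this paper: `family` is a hypothetical, continuously parametrised, non-normal \(2 \times 2\) matrix whose spectral gap is at least \(\gamma = 0.6\) for \(\omega \in [0, 1]\). On a grid in \((\theta , \omega )\) it evaluates the resolvent norm on the circle of radius \(\gamma /2\) around the dominant eigenvalue, and it checks that the contour integral of the resolvent gives a rank-one spectral projection (trace 1).

```python
import cmath
import math

GAP = 0.6  # hypothetical lower bound gamma on the spectral gap


def family(omega):
    """Hypothetical upper-triangular T(omega), continuous in omega in [0, 1]."""
    return ((1.0 + 0.1 * omega, 0.5 * omega), (0.0, 0.4 - 0.1 * omega))


def contour_point(omega, theta):
    """z(omega, theta) = mu(omega) + (gamma / 2) * exp(i * theta)."""
    mu = 1.0 + 0.1 * omega  # dominant eigenvalue: the (1, 1) entry
    return mu + 0.5 * GAP * cmath.exp(1j * theta)


def resolvent(a, z):
    """R_z(A) = (z - A)^{-1} for a 2x2 matrix A, via the adjugate formula."""
    m11, m12 = z - a[0][0], -a[0][1]
    m21, m22 = -a[1][0], z - a[1][1]
    det = m11 * m22 - m12 * m21
    return ((m22 / det, -m12 / det), (-m21 / det, m11 / det))


def spectral_norm(m):
    """2-norm of a 2x2 matrix from s1^2 + s2^2 = ||M||_F^2, s1 * s2 = |det M|."""
    fro2 = sum(abs(x) ** 2 for row in m for x in row)
    det = abs(m[0][0] * m[1][1] - m[0][1] * m[1][0])
    disc = max(fro2 ** 2 - 4.0 * det ** 2, 0.0)
    return math.sqrt((fro2 + math.sqrt(disc)) / 2.0)


def sup_resolvent_norm(num_omega=21, num_theta=64):
    """Maximum of ||R_z(T(omega))|| over a grid in (theta, omega)."""
    best = 0.0
    for i in range(num_omega):
        omega = i / (num_omega - 1)
        for k in range(num_theta):
            theta = 2.0 * math.pi * k / num_theta
            r = resolvent(family(omega), contour_point(omega, theta))
            best = max(best, spectral_norm(r))
    return best


def spectral_projection_trace(omega, num_theta=64):
    """Trace of (1 / (2 pi i)) * integral of R_z dz over the circle,
    approximated by the trapezoidal rule with dz = i (gamma/2) e^{i theta} dtheta."""
    total = 0.0 + 0.0j
    for k in range(num_theta):
        theta = 2.0 * math.pi * k / num_theta
        r = resolvent(family(omega), contour_point(omega, theta))
        total += (r[0][0] + r[1][1]) * 0.5 * GAP * cmath.exp(1j * theta)
    return total / num_theta


circle_length = 2.0 * math.pi * (0.5 * GAP)  # equals pi * gamma
sup_norm = sup_resolvent_norm()
trace = spectral_projection_trace(0.5)
print(circle_length, sup_norm, abs(trace - 1.0))
```

In this toy setting the maximum of the resolvent norm is finite and at least \(2/\gamma \), since every point on the circle lies at distance \(\gamma /2\) from the enclosed eigenvalue.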

For the eigenvalue error (16) we follow the proof of [4, Theorem 8.2]. Since \(\lambda ({\varvec{\omega }})\) is simple, from Theorem 7.2 in [4], the eigenvalue error is bounded by

where in the second inequality we have used (60) and the equivalent bound for the dual eigenvalue, combining the two constants into \(C_\eta \). By following [4], the constant \(C_\lambda ({\varvec{\omega }})\) can be bounded independently of \({\varvec{\omega }}\) in a similar way to \(C_u({\varvec{\omega }})\).

Data Availability

Our research does not generate any new data.

References

Arnoldi, W.E.: The principle of minimized iterations in the solution of the matrix eigenvalue problem. Q. Appl. Math. 9, 17–29 (1951)

Avramova, M.N., Ivanov, K.N.: Verification, validation and uncertainty quantification in multi-physics modeling for nuclear reactor design and safety analysis. Prog. Nucl. Energy 52, 601–614 (2010)

Ayres, D.A.F., Eaton, M.D., Hagues, A.W., Williams, M.M.R.: Uncertainty quantification in neutron transport with generalized polynomial chaos using the method of characteristics. Ann. Nucl. Energy 45, 14–28 (2012)

Babuška, I., Osborn, J.: Eigenvalue problems. In: Ciarlet, P.G., Lions, J.L. (eds.) Handbook of Numerical Analysis, Finite Element Methods (Part 1), vol. 2, pp. 641–787. Elsevier, Amsterdam (1991)

Barrenechea, G., Valentin, F.: An unusual stabilized finite element method for a generalized Stokes problem. Numer. Math. 92, 653–677 (2002)

Barth, A., Schwab, C., Zollinger, N.: Multi-level Monte Carlo finite element method for elliptic PDEs with stochastic coefficients. Numer. Math. 119, 123–161 (2011)

Beck, A., Dürrwächter, J., Kuhn, T., Meyer, F., Munz, C.-D., Rohde, C.: \(hp\)-Multilevel Monte Carlo methods for uncertainty quantification of compressible Navier–Stokes equations. SIAM J. Sci. Comput. 42(4), B1067–B1091 (2020)

Bochev, P.B., Gunzburger, M.D., Shadid, J.N.: Stability of the SUPG finite element method for transient advection–diffusion problems. Comput. Methods Appl. Mech. Eng. 193(23), 2301–2323 (2004)

Broersen, R., Stevenson, R.: A robust Petrov–Galerkin discretisation of convection–diffusion equations. Comput. Math. Appl. 68(11), 1605–1618 (2014)

Brooks, A.N., Hughes, T.J.R.: Streamline upwind/Petrov–Galerkin formulations for convection dominated flows with particular emphasis on the incompressible Navier–Stokes equations. Comput. Methods Appl. Mech. Eng. 32, 199–259 (1982)

Cai, Z., Mandel, J., McCormick, S.: Multigrid methods for nearly singular linear equations and eigenvalue problems. SIAM J. Numer. Anal. 34(1), 178–200 (1997)

Carnoy, E.G., Geradin, M.: On the practical use of the Lanczos algorithm in finite element applications to vibration and bifurcation problems. In: Kågström, B., Ruhe, A. (eds.) Matrix Pencils, pp. 156–176. Springer, Berlin (1983)

Carstensen, C., Gedicke, J., Mehrmann, V., Miedlar, A.: An adaptive homotopy approach for non-self-adjoint eigenvalue problems. Numer. Math. 119, 557–583 (2011)

Cliffe, K.A., Giles, M.B., Scheichl, R., Teckentrup, A.L.: Multilevel Monte Carlo methods and applications to elliptic PDEs with random coefficients. Comput. Vis. Sci. 14, 3–15 (2011)

Cohen, A., Dahmen, W., Welper, G.: Adaptivity and variational stabilization for convection–diffusion equations. Eur. Ser. Appl. Ind. Math. Math. Model. Numer. Anal. 46, 1247–1273 (2012)

Cools, R., Kuo, F.Y., Nuyens, D.: Constructing embedded lattice rules for multivariate integration. SIAM J. Sci. Comput. 28, 2162–2188 (2006)

Crandall, S.H.: Iterative procedures related to relaxation methods for eigenvalue problems. Proc. R. Soc. A Math. Phys. Eng. Sci. 207, 416–423 (1951)

Davis, T.A.: Direct Methods for Sparse Linear Systems. SIAM, Philadelphia (2006)

Dick, J., Gantner, R.N., Le Gia, Q.T., Schwab, C.: Higher order Quasi-Monte Carlo integration for Bayesian PDE inversion. Comput. Math. Appl. 77, 144–172 (2019)

Dick, J., Kuo, F.Y., Sloan, I.H.: High dimensional integration: the quasi-Monte Carlo way. Acta Numer. 22, 133–288 (2013)

Dick, J., Pillichshammer, F.: Digital Nets and Sequences: Discrepancy Theory and Quasi-Monte Carlo Integration. Cambridge University Press, New York (2010)