Abstract

The generalized Nash equilibrium problem (GNEP) is a game in which one seeks strategies for a group of players such that each player’s objective function is optimized, given the other players’ strategies. Solutions of GNEPs are called generalized Nash equilibria (GNEs). In this paper, we propose a numerical method for finding GNEs of GNEPs of polynomials based on the polyhedral homotopy continuation and the Moment-SOS hierarchy of semidefinite relaxations. We show that, under some genericity assumptions, our method finds all GNEs if they exist, or detects the nonexistence of GNEs. Numerical experiments are presented to demonstrate the efficiency of our method.

1 Introduction

Suppose there are N players and the ith player’s strategy is a vector \(x_{i}\in \mathbb {R}^{n_{i}}\) (the \(n_i\)-dimensional real Euclidean space). We write

$$\begin{aligned} x:=(x_1,\ldots ,x_N). \end{aligned}$$

The total dimension of all strategies is \(n:= n_1+ \cdots + n_N.\) When the ith player’s strategy is considered, we use \(x_{-i}\) to denote the subvector of all players’ strategies except the ith one, i.e.,

$$\begin{aligned} x_{-i}:=(x_1,\ldots ,x_{i-1},x_{i+1},\ldots ,x_N), \end{aligned}$$

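and write \(x=(x_{i},x_{-i})\) accordingly. The generalized Nash equilibrium problem (GNEP) is to find a tuple of strategies \(u = (u_1, \ldots , u_N)\) such that each \(u_i\) is a minimizer of the ith player’s optimization problem

$$\begin{aligned} \text{F}_i(u_{-i}):\ \left\{ \begin{array}{cl} \min \limits _{x_i\in \mathbb {R}^{n_i}} & f_i(x_i,u_{-i}) \\ \text{s.t.} & g_{i,j}(x_i,u_{-i}) = 0 \ (j\in \mathcal {E}_i), \\ & g_{i,j}(x_i,u_{-i}) \ge 0 \ (j\in \mathcal {I}_i). \end{array} \right. \end{aligned} \qquad (1.1)$$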

In the above, the \(\mathcal {E}_i\) and \(\mathcal {I}_i\) are disjoint labeling sets (possibly empty), the \(f_i\) and \(g_{i,j}\) are continuous functions in x and we suppose \(\mathcal {E}_i\cup \mathcal {I}_i=\{1,\dots ,m_i\}\) for each \(i\in \{1,\dots ,N\}\). A solution to the GNEP is called a generalized Nash equilibrium (GNE). If the defining functions \(f_i\) and \(g_{i,j}\) are polynomials in x for all \(i\in \{1,\dots ,N\}\) and \(j\in \{1,\dots ,m_i\}\), then we call the GNEP a generalized Nash equilibrium problem of polynomials. In addition, we let \(X_i\) be the point-to-set map such that

$$\begin{aligned} X_i(x_{-i}):=\left\{ x_i\in \mathbb {R}^{n_i}\ \big |\ g_{i,j}(x_i,x_{-i})=0\ (j\in \mathcal {E}_i),\ g_{i,j}(x_i,x_{-i})\ge 0\ (j\in \mathcal {I}_i)\right\} . \end{aligned}$$

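Then, \(X_i(x_{-i})\) is the ith player’s feasible set for the given other players’ strategies \(x_{-i}\). Let

$$\begin{aligned} X:=\left\{ x\in \mathbb {R}^n\ \big |\ x_i\in X_i(x_{-i})\ \text{for all}\ i\in \{1,\dots ,N\}\right\} . \end{aligned}$$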

We say \(x\in \mathbb {R}^n\) is feasible for this GNEP if \(x\in X\). Moreover, if for every \(i\in \{1,\dots ,N\}\) and \(j\in \{1,\dots ,m_i\}\), the constraining function \(g_{i,j}\) depends only on the variable \(x_i\), i.e., the ith player’s feasible strategy set is independent of the other players’ strategies, then the GNEP is called a (standard) Nash equilibrium problem (NEP). The GNEP is said to be convex if for each i and all \(x_{-i}\) such that \(X_i(x_{-i})\ne \emptyset \), the problem \(\text{F}_i(x_{-i})\) is a convex optimization problem, i.e., \(f_i(x_i,x_{-i})\) is convex in \(x_i\), \(g_{i,j}\,(j\in \mathcal {E}_i)\) is linear in \(x_i\), and \(g_{i,j}\,(j\in \mathcal {I}_i)\) is concave in \(x_i\).

GNEPs originated in economics [2, 11], and have been widely used in many other areas, such as telecommunications [1], supply chains [42] and machine learning [33]. Many interesting models can be formulated as GNEPs of polynomials, and we refer to [14, 15, 38, 39, 42] for them.

In recent studies on GNEPs, one primary task is to develop efficient methods for finding GNEs. Solving GNEPs can be very difficult, especially when no convexity assumptions are made. For NEPs, some methods are studied in [18, 44]. When the NEP is defined by polynomials, a method using the Moment-SOS semidefinite relaxation on the KKT system is introduced in [38]. For GNEPs, solution methods are mainly considered under convexity assumptions, such as the penalty method [3, 15], the augmented Lagrangian method [24], the variational and quasi-variational inequality approach [13, 16, 19], the Nikaido-Isoda function approach [49, 50], and the interior point method for solving the KKT system [12]. Moreover, for convex GNEPs of polynomials, a semidefinite relaxation method is introduced in [39], and it is extended to nonconvex rational GNEPs in [41]. The Gauss-Seidel method is studied in [40] for nonconvex GNEPs of polynomials. We refer to [14, 16] for surveys on GNEPs.

In this paper, we study GNEPs of polynomials. The problems without convexity assumptions are mainly considered. We propose a method for finding GNEs based on the polyhedral homotopy continuation and the Moment-SOS semidefinite relaxations, and investigate its properties. Our main contributions are:

- We propose a numerical algorithm for solving GNEPs of polynomials. The polyhedral homotopy continuation is exploited for solving the complex KKT system of GNEPs, and we select GNEs from the set of complex KKT points with the help of Moment-SOS semidefinite relaxations.
- We show that when the GNEP is given by dense polynomials whose coefficients are generic, the mixed volume for the complex KKT system is identical to its algebraic degree. In this case, the polyhedral homotopy continuation can obtain all complex KKT points, and our algorithm finds all GNEs if they exist, or detects their nonexistence.
- Even when the number of complex KKT points obtained by the polyhedral homotopy is less than the mixed volume, or there exist infinitely many complex KKT points, our algorithm may still find one or more GNEs.
- Numerical experiments are presented to show the effectiveness of our algorithm.

This paper is organized as follows. In Sect. 2, we introduce some basics in optimality conditions for GNEPs, polyhedral homotopy and polynomial optimization. The algorithm for solving GNEPs of polynomials is proposed in Sect. 3. We show the polyhedral homotopy continuation is optimal for GNEPs of polynomials with generic coefficients in Sect. 4. Numerical experiments are presented in Sect. 5.

2 Preliminaries

In this section, preliminary concepts for GNEPs, polyhedral homotopy continuation and polynomial optimization problems are reviewed. We introduce the optimality conditions for GNEPs to derive a system of polynomials whose collection of solutions contains GNEs. Then, we review Bernstein’s theorem, which gives an upper bound of the number of solutions for a system of polynomials. Finally, the polyhedral homotopy continuation and the Moment-SOS hierarchy of semidefinite relaxations are suggested as two main tools to solve GNEPs.

2.1 Optimality Conditions for GNEPs

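Under suitable constraint qualifications (for example, the linear independence constraint qualification (LICQ), or Slater’s condition for convex problems; see [5]), if \(u\in X\) is a GNE, then there exist Lagrange multiplier vectors \(\lambda _1,\ldots ,\lambda _N\) such that the following holds at \(x=u\):

$$\begin{aligned} \left\{ \begin{array}{ll} \nabla _{x_i} f_i(x)-\sum _{j=1}^{m_i}\lambda _{i,j}\nabla _{x_i} g_{i,j}(x)=0 & (i=1,\dots ,N),\\ \lambda _i\perp g_i(x),\quad g_{i,j}(x)=0 & (i=1,\dots ,N,\ j\in \mathcal {E}_i),\\ \lambda _{i,j}\ge 0,\quad g_{i,j}(x)\ge 0 & (i=1,\dots ,N,\ j\in \mathcal {I}_i), \end{array}\right. \end{aligned} \qquad (2.1)$$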

where \(\nabla _{x_i}f_i(x)\) is the gradient of \(f_i\) with respect to \(x_i\) and \(\lambda _i\perp g_i(x)\) means that \(\lambda _{i}^\top g_{i}(x)=0\) for the constraining vector

$$\begin{aligned} g_i(x):=(g_{i,1}(x),\ldots ,g_{i,m_i}(x)). \end{aligned}$$

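The polynomial system (2.1) is called the KKT system of this GNEP. A solution \((x,\lambda _1,\dots ,\lambda _N)\) of the KKT system is called a KKT tuple and the first block of coordinates x is called a KKT point. For the GNEP of polynomials, the LICQ of \(\text{F}_i(u_{-i})\) holds at every GNE under some genericity conditions [37]. Moreover, consider the system consisting of all equations in (2.1), i.e.,

$$\begin{aligned} \left\{ \begin{array}{ll} \nabla _{x_i} f_i(x)-\sum _{j=1}^{m_i}\lambda _{i,j}\nabla _{x_i} g_{i,j}(x)=0 & (i=1,\dots ,N),\\ g_{i,j}(x)=0 & (i=1,\dots ,N,\ j\in \mathcal {E}_i),\\ \lambda _{i,j}\, g_{i,j}(x)=0 & (i=1,\dots ,N,\ j\in \mathcal {I}_i). \end{array}\right. \end{aligned} \qquad (2.2)$$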

Then, the \((x,\lambda _1,\dots ,\lambda _N)\) satisfying (2.2) is called a complex KKT tuple, and similarly, the first coordinate x is called a complex KKT point. For generic GNEPs of polynomials, there are finitely many solutions to (2.2) [37]. In this case, the number of complex KKT points is called the algebraic degree of the GNEP.
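To illustrate the difference between KKT tuples and complex KKT tuples, consider the following small worked example. The two-player game below is hypothetical and is not taken from the paper; it only assumes the sign convention \(\nabla f-\lambda \nabla g\) of the KKT system.

```latex
\begin{aligned}
&\text{Player 1: } \min_{x_1\in\mathbb{R}}\ x_1^2-x_1x_2 \quad \text{s.t.}\quad 1-x_1-x_2\ge 0,
\qquad
\text{Player 2: } \min_{x_2\in\mathbb{R}}\ x_2^2+x_1x_2. \\
&\text{Complex KKT system: }\quad 2x_1-x_2+\lambda=0,\qquad \lambda\,(1-x_1-x_2)=0,\qquad 2x_2+x_1=0. \\
&\lambda=0:\ (x_1,x_2,\lambda)=(0,0,0); \qquad\quad
1-x_1-x_2=0:\ (x_1,x_2,\lambda)=(2,-1,-5).
\end{aligned}
```

Both tuples solve the equations, so this toy problem has two complex KKT points. Only \((0,0,0)\) also satisfies the sign conditions \(\lambda \ge 0\) and \(1-x_1-x_2\ge 0\), so it is the only KKT tuple. Since the toy game is convex, \((0,0)\) is a GNE.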

2.2 Mixed Volumes and Bernstein’s Theorem

Let \(\mathbb {C}[z_1,\dots ,z_k]\) be the set of all polynomials with complex coefficients in the variables \(z_1,\dots ,z_k\). For a polynomial \(p\in \mathbb {C}[z_1,\dots , z_k]\), suppose

$$\begin{aligned} p=\sum _{a\in \mathbb {N}^k}c_az^a, \end{aligned}$$

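where \(z^a = z_1^{a_1}\cdots z_k^{a_k}\) for \(a=(a_1,\dots , a_k)\). Then the support of p, denoted by \(A_p\), is the set of exponent vectors of the monomials with nonzero coefficients, i.e.,

$$\begin{aligned} A_p:=\{a\in \mathbb {N}^k\mid c_a\ne 0\}. \end{aligned}$$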

The convex hull \(Q_p\) of the support \(A_p\) is called the Newton polytope of p. For an integer vector \(w\in \mathbb {Z}^k\), we define a map \(h_w:\mathbb {Z}^k\rightarrow \mathbb {Z}\) such that

$$\begin{aligned} h_w(a):=\langle w,a\rangle . \end{aligned}$$

Given a finite set of lattice points \(A\subset \mathbb {Z}^k\), the minimum value of \(h_w\) on A is denoted by \(h_w^\star (A)\). When it is clear from the context, we may omit the index w for h. Moreover, we let \(A^w:=\{a\in A\mid h_w(a)=h_w^\star (A)\}\). Then, we define \(p^w\) to be the polynomial consisting of the terms of p supported on \(A^w\), i.e., for each \(p=\sum _{a\in A}c_az^a\in \mathbb {C}[z_1,\dots , z_k]\), we have

$$\begin{aligned} p^w=\sum _{a\in A^w}c_az^a. \end{aligned}$$

For an m-tuple of polynomials \(\mathscr {P}=(p_1,\dots , p_m)\), we denote \(\mathscr {P}^w:=(p_1^w,\dots , p_m^w)\). The m-tuple \(\mathscr {P}^w\) is called the facial system of \(\mathscr {P}\) with respect to w. The term ‘facial’ comes from the fact that \(A^w\) is a face of A exposed by a vector w.
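The construction of \(A^w\) and \(p^w\) can be sketched in a few lines of Python. This is only an illustration: the function name `initial_form` and the dictionary representation (exponent tuple mapped to coefficient) are assumptions made here, not part of the paper.

```python
def initial_form(poly, w):
    """Return (p^w, h_w^*(A_p)) for a polynomial given as {exponent tuple: coefficient}.

    The keys of `poly` are the support A_p; `w` is an integer weight vector.
    """
    # h_w(a) = <w, a> for every exponent vector a in the support
    h = {a: sum(wi * ai for wi, ai in zip(w, a)) for a in poly}
    h_star = min(h.values())
    # keep only the terms whose exponents attain the minimum (the face A^w)
    return {a: c for a, c in poly.items() if h[a] == h_star}, h_star


# Hypothetical example: p = 1 + 2*z1 + 3*z2 + 4*z1*z2
p = {(0, 0): 1, (1, 0): 2, (0, 1): 3, (1, 1): 4}
face_a = initial_form(p, (1, 1))    # only the constant term survives
face_b = initial_form(p, (-1, 0))   # terms with the largest power of z1 survive
```

Applying `initial_form` to every member of a tuple \(\mathscr {P}\) with the same w gives the facial system \(\mathscr {P}^w\).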

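Let \(Q_1,\dots , Q_m\) be polytopes in \(\mathbb {R}^k\), and \(\alpha _1,\dots , \alpha _m\) be nonnegative real scalars. The Minkowski sum of polytopes is

$$\begin{aligned} \alpha _1Q_1+\cdots +\alpha _mQ_m:=\{\alpha _1q_1+\cdots +\alpha _mq_m\mid q_i\in Q_i,\ i=1,\dots ,m\}. \end{aligned}$$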

The volume of the Minkowski sum \(\alpha _1Q_1+\cdots +\alpha _mQ_m\) can be understood as a homogeneous polynomial in variables of \(\alpha _1,\dots ,\alpha _m\). In particular, the coefficient for the term \(\alpha _1\alpha _2\cdots \alpha _m\) in the volume of \(\alpha _1Q_1+\cdots +\alpha _mQ_m\) is called the mixed volume of \(Q_1,\dots , Q_m\), which is denoted by \(MV(Q_1,\dots , Q_m)\).
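In two variables the definition above gives \(\text{Vol}(\alpha _1Q_1+\alpha _2Q_2)=\alpha _1^2\text{Vol}(Q_1)+\alpha _1\alpha _2MV(Q_1,Q_2)+\alpha _2^2\text{Vol}(Q_2)\), so \(MV(Q_1,Q_2)=\text{Vol}(Q_1+Q_2)-\text{Vol}(Q_1)-\text{Vol}(Q_2)\). The sketch below evaluates this formula for planar supports; it is a minimal illustration for \(k=m=2\) only, and all function names are assumptions of this sketch.

```python
def cross(o, a, b):
    # z-component of (a - o) x (b - o)
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counterclockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def area(points):
    """Area of the convex hull of a planar point set (shoelace formula)."""
    hull = convex_hull(points)
    s = 0
    for i in range(len(hull)):
        x1, y1 = hull[i]
        x2, y2 = hull[(i + 1) % len(hull)]
        s += x1 * y2 - x2 * y1
    return abs(s) / 2


def mixed_volume_2d(A1, A2):
    """MV(Q1, Q2) = Vol(Q1 + Q2) - Vol(Q1) - Vol(Q2) for supports A1, A2 in Z^2."""
    minkowski = [(a[0] + b[0], a[1] + b[1]) for a in A1 for b in A2]
    return area(minkowski) - area(A1) - area(A2)


# Supports of two dense quadratics in two variables, and of two bilinear polynomials
dense_quadratic = [(i, j) for i in range(3) for j in range(3) if i + j <= 2]
bilinear = [(0, 0), (1, 0), (0, 1), (1, 1)]
mv_dense = mixed_volume_2d(dense_quadratic, dense_quadratic)
mv_bilinear = mixed_volume_2d(bilinear, bilinear)
```

For the two dense quadratics the value agrees with the Bézout number \(2\cdot 2\), while the bilinear supports give a smaller count.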

In [4], it was proved that for a square polynomial system in \(\mathbb {C}[z_1,\dots , z_k]\), the mixed volume of the system is an upper bound for the number of isolated roots in the complex torus \((\mathbb {C}\setminus \{\textbf{0}\})^k\), where \(\textbf{0}\) is the all-zero vector. This is called Bernstein’s theorem. Moreover, it states when the mixed volume bound is tight.

Theorem 2.1

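(Bernstein’s theorem) [4, Theorems A and B] Let \(\mathscr {P}\) be a system consisting of polynomials \(p_1,\dots , p_k\) in \(\mathbb {C}[z_1,\dots ,z_k]\). For the Newton polytopes \(Q_{p_i}\) of \(p_i\), we have

$$\begin{aligned} \left( \text{number of isolated roots of } \mathscr {P} \text{ in } (\mathbb {C}\setminus \{\textbf{0}\})^k\right) \ \le \ MV(Q_{p_1},\dots , Q_{p_k}). \end{aligned}$$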

The equality holds if and only if the facial system \(\mathscr {P}^w\) has no root in \((\mathbb {C}\setminus \{\textbf{0}\})^k\) for any nonzero \(w\in \mathbb {Z}^k\).

It is worth noting that Bernstein’s theorem concerns roots in the torus \((\mathbb {C}\setminus \{\textbf{0}\})^k\) because it also covers Laurent polynomials, which may have negative exponents. A system that satisfies the equality in the above theorem is called Bernstein generic.

2.3 Polyhedral Homotopy Continuation

Homotopy continuation is an algorithmic method for finding numerical approximations of the roots of a system of polynomial equations. Consider a square system of polynomial equations \(\mathscr {P}\,:=\, \{p_1,\dots ,p_k\}\subset \mathbb {C}[z_1,\dots , z_k]\) with k equations and k variables. We are interested in solving the system \(\mathscr {P}\), i.e., computing its zero set \(\{z\in \mathbb {C}^k\mid p_1(z)=\cdots =p_k(z)=0\}\).

The main idea of homotopy continuation is to solve \(\mathscr {P}\) by tracking homotopy paths from the known roots of a system \(\mathscr {Q}\), called a start system, to those of the target system \(\mathscr {P}\). Given the start system \(\mathscr {Q}=\{q_1,\dots , q_k\}\subset \mathbb {C}[z_1,\dots , z_k]\) with the same number of variables and equations as \(\mathscr {P}\), we construct a homotopy \(\mathscr {H}(z,t)\) such that \(\mathscr {H}(z,0)=\mathscr {Q}\) and \(\mathscr {H}(z,1)=\mathscr {P}\). For tracking the homotopy from \(t=0\) to \(t=1\), numerical ODE solving techniques for Davidenko equations and Newton’s iteration are applied; see [46, Chapter 2] for more details.
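The predictor-corrector idea can be sketched for a single univariate polynomial. The code below is a simplified illustration, not the polyhedral method: it uses the straight-line homotopy \(\mathscr {H}(z,t)=(1-t)\gamma q(z)+tp(z)\) with a fixed non-real constant \(\gamma \), a fixed step size, an Euler predictor from the Davidenko equation and a few Newton corrections. The function name, the value of `gamma` and the test polynomials are assumptions of this sketch.

```python
def track_path(p, dp, q, dq, z0, gamma=0.6 + 0.8j, steps=200, newton_iters=3):
    """Track one root of H(z,t) = (1-t)*gamma*q(z) + t*p(z) from t=0 to t=1."""
    z = z0
    for s in range(steps):
        t, t_next = s / steps, (s + 1) / steps
        # Euler predictor: the Davidenko equation reads H_z * dz/dt + H_t = 0
        H_z = (1 - t) * gamma * dq(z) + t * dp(z)
        H_t = p(z) - gamma * q(z)
        z = z - (t_next - t) * H_t / H_z
        # Newton corrector on z -> H(z, t_next)
        for _ in range(newton_iters):
            H = (1 - t_next) * gamma * q(z) + t_next * p(z)
            H_z = (1 - t_next) * gamma * dq(z) + t_next * dp(z)
            z = z - H / H_z
    return z


# Target p(z) = z^2 - 5z + 6 (roots 2 and 3); start system q(z) = z^2 - 1 (roots +1, -1)
p = lambda z: z**2 - 5 * z + 6
dp = lambda z: 2 * z - 5
q = lambda z: z**2 - 1
dq = lambda z: 2 * z

roots = sorted((track_path(p, dp, q, dq, z0) for z0 in (1 + 0j, -1 + 0j)),
               key=lambda z: z.real)
```

Production solvers use adaptive step sizes and endgames instead of the fixed grid in t used here.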

Choosing a proper start system is an important task for homotopy continuation, as it determines the number of paths to track. In this paper, the polyhedral homotopy continuation established by Huber and Sturmfels [23] is considered. For the polynomials \(p_i\) in \(\mathscr {P}\) with Newton polytopes \(Q_{p_i}\), the polyhedral homotopy continuation constructs a start system \(\mathscr {Q}\) with \(MV(Q_{p_1},\dots , Q_{p_k})\) many solutions. We briefly introduce the framework of the polyhedral homotopy continuation. For a polynomial \(p\in \mathbb {C}[z_1,\dots , z_k]\) and its support set \(A_{p}\), we know that

$$\begin{aligned} p(z)=\sum _{a\in A_p}c_az^a. \end{aligned} \qquad (2.3)$$

Let \(\ell _p:A_p\rightarrow \mathbb {R} \) be a function defined on every lattice point in \(A_p\). We define

$$\begin{aligned} \bar{p}(z,t):=\sum _{a\in A_p}c_az^at^{\ell _p(a)}, \end{aligned} \qquad (2.4)$$

which is called a lifted polynomial of p by the lifting function \(\ell _p\). Lifting all polynomials \(p_1,\dots , p_k\) in \(\mathscr {P}\) gives a lifted system \(\overline{\mathscr {P}}(z,t)\). Note that \(\overline{\mathscr {P}}(z,1)=\mathscr {P}\). A solution of \(\overline{\mathscr {P}}\) can be expressed by a Puiseux series \(z(t)=(z_1(t),\dots , z_k(t))\) where

$$\begin{aligned} z_i(t)=y_it^{\alpha _i}+(\text{higher order terms}) \end{aligned}$$

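for some rational number \(\alpha _i\) and a nonzero constant \(y_i\). As z(t) is a solution of the lifted system, plugging z(t) into each \(\bar{p}_j\) gives

$$\begin{aligned} \bar{p}_j(z(t),t)=\sum _{a\in A_{p_j}}c_ay^at^{\langle a,\alpha \rangle +\ell _{p_j}(a)}+(\text{higher order terms})=0. \end{aligned}$$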

Dividing by the lowest power \(t^{\langle a,\alpha \rangle +\ell _{p_j}(a)}\) and letting \(t=0\), we have a start system \(\mathscr {Q}\). The solutions of \(\mathscr {Q}\) can be obtained from the branches of the algebraic function z(t) near \(t=0\). The homotopy \(\mathscr {H}(z,t)\) joining \(\mathscr {P}\) and \(\mathscr {Q}\) has \(MV(Q_{p_1},\dots , Q_{p_k})\) many paths as t varies from 0 to 1. This is motivated by Theorem 2.1: a polynomial system supported on \(A_{p_1},\dots , A_{p_k}\) has at most \(MV(Q_{p_1},\dots , Q_{p_k})\) many isolated solutions in the torus \((\mathbb {C}\setminus \{\textbf{0}\})^k\). Polyhedral homotopy continuation is implemented robustly in several software packages, such as HOM4PS2 [30], PHCpack [48] and HomotopyContinuation.jl [9].

Remark 2.2

1. Even in the case that the number of solutions is smaller than the mixed volume, the polyhedral homotopy continuation algorithm may find all complex solutions.
2. For GNEPs, there may exist complex KKT tuples outside of the torus. For instance, when there are KKT points with inactive constraints, the Lagrange multipliers corresponding to these constraints are 0. Theoretically, the polyhedral homotopy continuation aims at finding roots in the torus \((\mathbb {C}\setminus \{\varvec{0}\})^k\). However, actual implementations are designed to also find roots outside the torus by adding a small perturbation to the constant term; see [32] for details. In Example 3.3, we give an example where the homotopy continuation successfully finds all complex solutions to a system, while the mixed volume is strictly greater than the number of solutions, and there exist roots outside the torus.

In summary, we give the general framework of polyhedral homotopy continuation for solving a polynomial system:

Algorithm 2.3

For a system of polynomial equations \(\mathscr {P}=\{p_1,\dots , p_k\}\), do the following:

- Step 1. For each \(i=1,\dots ,k\), choose a function \(\ell _{p_i}:A_{p_i}\rightarrow \mathbb {R}\). Then, construct the lifted polynomial \(\bar{p}_i(z,t)\) as in (2.4), and define \(\overline{\mathscr {P}}(z,t):=\{\bar{p}_1(z,t),\dots ,\bar{p}_k(z,t)\}\).
- Step 2. Construct a start system \(\mathscr {Q}\) from the lifted system \(\overline{\mathscr {P}}\) by trimming some powers of t and letting \(t=0\).
- Step 3. Starting from \(\mathscr {Q}\), track \(MV(Q_{p_1},\dots , Q_{p_k})\) many paths from \(t=0\) to \(t=1\) with Puiseux series solutions z(t) obtained near \(t=0\).

As the polyhedral homotopy continuation approximates the roots of a system numerically, a posteriori certification is usually applied to verify the output of numerical solvers, for example by Smale’s \(\alpha \)-theory [6, Chapter 8] or interval arithmetic [34, Chapter 8]. There are multiple known implementations of these methods. For \(\alpha \)-theory certification, one can use alphaCertified [20] or NumericalCertification [29]. For certification using interval arithmetic, the software NumericalCertification and HomotopyContinuation.jl [7] are available.

2.4 Basic Concepts in Polynomial Optimization

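For a set of real polynomials \(\mathcal {H} = \{h_1, \ldots , h_s\}\) in \(z:=(z_1,\dots , z_k)\), the ideal generated by \(\mathcal {H}\) is

$$\begin{aligned} \text{Ideal}[\mathcal {H}]:=h_1\cdot \mathbb {R}[z]+\cdots +h_s\cdot \mathbb {R}[z]. \end{aligned}$$

For a nonnegative integer d, the d-truncation of \(\text{ Ideal }[\mathcal {H}]\) is

$$\begin{aligned} \text{Ideal}[\mathcal {H}]_d:=h_1\cdot \mathbb {R}[z]_{d-\deg (h_1)}+\cdots +h_s\cdot \mathbb {R}[z]_{d-\deg (h_s)}. \end{aligned}$$

A polynomial \(\sigma \in \mathbb {R}[z]\) is said to be a sum of squares (SOS) if \(\sigma = \sigma _1^2+\cdots +\sigma _l^2\) for some polynomials \(\sigma _i \in \mathbb {R}[z]\). The set of all SOS polynomials in z is denoted as \(\Sigma [z]\). For a degree d, we denote the truncation

$$\begin{aligned} \Sigma [z]_d:=\Sigma [z]\cap \mathbb {R}[z]_d. \end{aligned}$$

For a set \(\mathcal {Q}=\{q_1,\ldots ,q_t\}\) of polynomials in z, its quadratic module is the set

$$\begin{aligned} \text{Qmod}[\mathcal {Q}]:=\Sigma [z]+q_1\cdot \Sigma [z]+\cdots +q_t\cdot \Sigma [z]. \end{aligned}$$

Similarly, we denote the truncation of \(\text{ Qmod }[\mathcal {Q}]\)

$$\begin{aligned} \text{Qmod}[\mathcal {Q}]_{2d}:=\Sigma [z]_{2d}+q_1\cdot \Sigma [z]_{2d-2\lceil \deg (q_1)/2\rceil }+\cdots +q_t\cdot \Sigma [z]_{2d-2\lceil \deg (q_t)/2\rceil }. \end{aligned}$$

The set \(\mathcal {Q}\) determines the basic closed semi-algebraic set

$$\begin{aligned} \mathcal {S}(\mathcal {Q}):=\{z\in \mathbb {R}^k\mid q_1(z)\ge 0,\ldots ,q_t(z)\ge 0\}. \end{aligned}$$

Moreover, for \(\mathcal {H}=\{h_1,\dots ,h_s\}\), its real zero set is

$$\begin{aligned} \textbf{V}_\mathbb {R}(\mathcal {H}):=\{z\in \mathbb {R}^k\mid h_1(z)=\cdots =h_s(z)=0\}. \end{aligned}$$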

The set \(\text{ Ideal }[\mathcal {H}]+\text{ Qmod }[\mathcal {Q}]\) is said to be archimedean if there exists \(\rho \in \text{ Ideal }[\mathcal {H}]+\text{ Qmod }[\mathcal {Q}]\) such that the set \(\mathcal {S}(\{\rho \})\) is compact. If \(\text{ Ideal }[\mathcal {H}]+\text{ Qmod }[\mathcal {Q}]\) is archimedean, then \(\textbf{V}_\mathbb {R}(\mathcal {H})\cap \mathcal {S}(\mathcal {Q})\) must be compact. Conversely, if \(\textbf{V}_\mathbb {R}(\mathcal {H})\cap \mathcal {S}(\mathcal {Q})\) is compact, say, \(\textbf{V}_\mathbb {R}(\mathcal {H})\cap \mathcal {S}(\mathcal {Q})\) is contained in the ball \(R -\Vert z\Vert ^2 \ge 0\), then \(\text{ Ideal }[\mathcal {H}]+\text{ Qmod }[\mathcal {Q}\cup \{R -\Vert z\Vert ^2\}]\) is archimedean and \(\textbf{V}_\mathbb {R}(\mathcal {H})\cap \mathcal {S}(\mathcal {Q}) = \textbf{V}_\mathbb {R}(\mathcal {H})\cap \mathcal {S}(\mathcal {Q}\cup \{R -\Vert z\Vert ^2\})\). Clearly, if \(f \in \text{ Ideal }[\mathcal {H}]+\text{ Qmod }[\mathcal {Q}]\), then \(f \ge 0\) on \(\textbf{V}_\mathbb {R}(\mathcal {H}) \cap \mathcal {S}(\mathcal {Q})\). The reverse is not necessarily true. However, when \(\text{ Ideal }[\mathcal {H}]+\text{ Qmod }[\mathcal {Q}]\) is archimedean, if the polynomial \(f > 0\) on \(\textbf{V}_\mathbb {R}(\mathcal {H})\cap \mathcal {S}(\mathcal {Q})\), then \(f \in \text{ Ideal }[\mathcal {H}]+\text{ Qmod }[\mathcal {Q}]\). This conclusion is referenced as Putinar’s Positivstellensatz [43]. Interestingly, if \(f \ge 0\) on \(\textbf{V}_\mathbb {R}(\mathcal {H})\cap \mathcal {S}(\mathcal {Q})\), we also have \(f\in \text{ Ideal }[\mathcal {H}]+\text{ Qmod }[\mathcal {Q}]\), under some standard optimality conditions [36].

Truncated multi-sequences (tms) are useful for characterizing the duality of nonnegative polynomials. For an integer \(d\ge 0\), a real vector \(y=(y_{\alpha })_{\alpha \in \mathbb {N}_{2d}^k}\) is called a tms of degree 2d. For a polynomial \(p(z) = \sum _{ \alpha \in \mathbb {N}^k_{2d} } p_\alpha z^\alpha \), define the operation

$$\begin{aligned} \langle p,y\rangle :=\sum _{\alpha \in \mathbb {N}^k_{2d}}p_\alpha y_\alpha . \end{aligned} \qquad (2.5)$$

The operation \(\langle p, y \rangle \) is bilinear in p and y. Moreover, for a polynomial \(q \in \mathbb {R}[x]_{2s}\) (\(s \le d\)), and a degree \(t\le d - \lceil \deg (q)/2 \rceil \), the dth order localizing matrix of q for y is the symmetric matrix \(L_{q}^{(d)}[y]\) such that (here vec(a) denotes the coefficient vector of a)

$$\begin{aligned} vec(a)^\top \left( L_{q}^{(d)}[y]\right) vec(a)=\langle qa^2,y\rangle \end{aligned} \qquad (2.6)$$

for all \(a \in \mathbb {R}[x]_t\). When \(q=1\) (the constant one polynomial), the localizing matrix \(L_{q}^{(d)}[y]\) becomes the dth order moment matrix \(M_d[y] :=L_{1}^{(d)}[y]\). We refer to [21, 26, 28] for more details and applications about tms and localizing matrices. In Sect. 3.2, SOS polynomials and localizing matrices are exploited for solving polynomial optimization problems.
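For a single variable, the pairing and the localizing matrix can be written down explicitly: with the monomial basis \(1,z,\dots ,z^t\) and \(t=d-\lceil \deg (q)/2\rceil \), the (i, j) entry of \(L_q^{(d)}[y]\) is \(\sum _\alpha q_\alpha y_{i+j+\alpha }\). The sketch below illustrates this univariate case only; the function names and the coefficient-list representation are assumptions of this sketch.

```python
def pairing(p, y):
    """<p, y> = sum_a p_a * y_a for a coefficient list p = [p_0, p_1, ...]."""
    return sum(c * y[a] for a, c in enumerate(p))


def localizing_matrix(q, y, d):
    """d-th order localizing matrix of q for a univariate tms y = (y_0, ..., y_{2d}).

    q is a coefficient list with nonzero leading coefficient q[-1].
    """
    deg_q = len(q) - 1
    t = d - (deg_q + 1) // 2  # t = d - ceil(deg(q)/2)
    return [[sum(c * y[i + j + a] for a, c in enumerate(q))
             for j in range(t + 1)]
            for i in range(t + 1)]


def moment_matrix(y, d):
    """M_d[y] is the localizing matrix of the constant polynomial 1."""
    return localizing_matrix([1], y, d)


# tms of the Dirac measure at z = 2, degree 2d = 4: y_a = 2**a
y = [2**a for a in range(5)]
M2 = moment_matrix(y, 2)
L = localizing_matrix([3, -1], y, 2)   # q(z) = 3 - z, so q(2) = 1
value = pairing([6, -5, 1], y)         # p(z) = z^2 - 5z + 6 evaluated at z = 2
```

For a tms coming from a point measure, the moment matrix has rank one and the pairing reduces to evaluation at the point, which is what the example checks.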

3 An Algorithm for Finding GNEs

In this section, we study an algorithm for finding GNEs based on the polyhedral homotopy continuation and the Moment-SOS semidefinite relaxations. First, we propose a framework for solving GNEPs. Then, we discuss the polyhedral homotopy continuation for solving the complex KKT systems for GNEPs of polynomials, and the Moment-SOS relaxations for selecting GNEs from the set of KKT points.

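For the GNEP of polynomials, we consider its complex KKT system (2.2). Let \(m:=m_1+\cdots +m_N\) and define \(\mathcal {K}_{\mathbb {C}}\subseteq \mathbb {C}^n\times \mathbb {C}^m\) as a finite set of complex KKT tuples, i.e., every point in \(\mathcal {K}_{\mathbb {C}}\) solves the system (2.2). We further define

$$\begin{aligned} \begin{array}{l} \mathcal {K}:=\left\{ (x,\lambda _1,\dots ,\lambda _N)\in \mathcal {K}_{\mathbb {C}}\cap (\mathbb {R}^n\times \mathbb {R}^m)\ \big |\ g_{i,j}(x)\ge 0,\ \lambda _{i,j}\ge 0\ (i=1,\dots ,N,\ j\in \mathcal {I}_i)\right\} ,\\ \mathcal {P}:=\left\{ x\ \big |\ (x,\lambda _1,\dots ,\lambda _N)\in \mathcal {K}\right\} . \end{array} \end{aligned} \qquad (3.1)$$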

Then, \(\mathcal {K}\) and \(\mathcal {P}\) are sets of KKT tuples and KKT points respectively. When the GNEP is convex, all points in \(\mathcal {P}\) are GNEs. Furthermore, when \(\mathcal {K}_{\mathbb {C}}\) is the set of all complex KKT tuples and some constraint qualifications hold at every GNE, the \(\mathcal {P}\) is the set of all GNEs if it is nonempty, or the nonexistence of GNEs can be certified by the emptiness of \(\mathcal {P}\). However, when there is no convexity assumed for the GNEP, the KKT conditions are usually not sufficient for \(x\in \mathcal {P}\) being a GNE.

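Suppose the GNEP is not convex. Let \(u=(u_i,u_{-i})\in \mathcal {P}\). For each \(i\in \{1,\dots , N\}\), we consider the following optimization problem:

$$\begin{aligned} \left\{ \begin{array}{rl} \delta _i:=\min \limits _{x_i\in \mathbb {R}^{n_i}} & f_i(x_i,u_{-i})-f_i(u_i,u_{-i}) \\ \text{s.t.} & g_{i,j}(x_i,u_{-i})=0\ (j\in \mathcal {E}_i),\\ & g_{i,j}(x_i,u_{-i})\ge 0\ (j\in \mathcal {I}_i). \end{array}\right. \end{aligned} \qquad (3.2)$$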

Then, u is a GNE if and only if every \(\delta _i\ge 0\), i.e., \(u_i\) minimizes (3.2) for each i. If \(\delta _i<0\) for some i, then u is not a GNE. For such a case, suppose (3.2) has a minimizer \(v_i\). Then it is clear that

$$\begin{aligned} f_i(v_i,x_{-i})-f_i(x_i,x_{-i})\ge 0 \end{aligned} \qquad (3.3)$$

holds with \(x=x^\star \) at any GNE \(x^\star \in \mathcal {P}\) such that \(v_i\in X_i(x_{-i}^\star )\) (e.g., the \(v_i\in X_i(x_{-i}^\star )\) holds at all NEs for NEPs). However, (3.3) does not hold at \(x=u\). Therefore, we propose the following algorithm for finding GNEs.

Algorithm 3.1

For the GNEP of polynomials, do the following:

- Step 0. Let \(S:=\emptyset \) and \(V_i:=\emptyset \) for all \(i\in \{1,\dots , N\}\).
- Step 1. Solve the complex KKT system (2.2) for a set of complex solutions \(\mathcal {K}_{\mathbb {C}}\). Let \(\mathcal {P}\) be the set given as in (3.1).
- Step 2. If \(\mathcal {P}\ne \emptyset \), then select \(u\in \mathcal {P}\), let \(\mathcal {P}:=\mathcal {P}\setminus \{u\}\), and proceed to the next step. Otherwise, output the set S (possibly empty) of GNEs and stop.
- Step 3. If \(V_i =\emptyset \) for all \(i\in \{1, \dots , N\}\), or \(f_i (v_i , u_{-i} )-f_i (u_i , u_{-i})\ge 0\) for all \(i\in \{1, \dots , N\}\) and for all \(v_i\in V_i \cap X_i (u_{-i} )\), then go to the next step. Otherwise, go back to Step 2.
- Step 4. For each \(i\in \{1,\dots , N\}\), solve the polynomial optimization problem (3.2) for a minimizer \(v_i\). If there exists \(i\in \{1,\dots , N\}\) such that \(\delta _i<0\), let \(V_i:=V_i\cup \{v_i\}\) for all such i. Otherwise, u is a GNE and let \(S:=S\cup \{u\}\). Then, go back to Step 2.
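The selection loop of Steps 2 to 4 can be sketched as follows. This is an illustration under stated assumptions, not the paper's implementation: the candidate set P is passed in directly (Step 1 is not performed), and the hypothetical callable `best_response` stands in for the Moment-SOS solver of (3.2); in the toy example it is a brute-force grid search. The names `select_gnes`, `replace`, `feasible` and `grid_best_response`, the tolerance `tol` and the toy NEP are all assumptions of this sketch.

```python
def replace(u, i, v):
    """Return the strategy tuple u with the i-th player's strategy replaced by v."""
    return u[:i] + (v,) + u[i + 1:]


def select_gnes(P, f, feasible, best_response, tol=1e-9):
    """Select GNEs from candidate KKT points P (selection loop of Algorithm 3.1)."""
    N = len(f)
    S, V = [], [[] for _ in range(N)]            # Step 0
    for u in P:                                  # Step 2
        # Step 3: discard u if a stored v_i is feasible and improves on u_i
        cut = any(f[i](replace(u, i, v)) - f[i](u) < -tol
                  for i in range(N) for v in V[i] if feasible(i, v, u))
        if cut:
            continue
        # Step 4: compute delta_i for each player via the supplied oracle
        is_gne = True
        for i in range(N):
            v = best_response(i, u)
            if f[i](replace(u, i, v)) - f[i](u) < -tol:   # delta_i < 0
                V[i].append(v)
                is_gne = False
        if is_gne:
            S.append(u)
    return S


# Toy unconstrained NEP (hypothetical): f_1 depends only on x_1 and is nonconvex
f = [lambda x: x[0]**4 / 4 - x[0]**3 / 3 - x[0]**2,
     lambda x: (x[1] - x[0])**2]
feasible = lambda i, v, u: True                  # no constraints in this toy problem
GRID = [k / 1000 for k in range(-3000, 3001)]


def grid_best_response(i, u):
    # brute-force stand-in for solving (3.2) by the Moment-SOS hierarchy
    return min(GRID, key=lambda v: f[i](replace(u, i, v)))


# Real KKT points of the toy problem: x_1 in {-1, 0, 2} and x_2 = x_1
P = [(-1.0, -1.0), (0.0, 0.0), (2.0, 2.0)]
S = select_gnes(P, f, feasible, grid_best_response)
```

In this toy run the first candidate is rejected in Step 4, its improving strategy is stored in \(V_1\), and the second candidate is then discarded in Step 3 without calling the oracle again.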

In Sect. 3.1, we show how to find the set of complex solutions for the system (2.2) using the polyhedral homotopy continuation. Since the polyhedral homotopy tracks mixed volume many paths, \(\mathcal {P}\) must be a finite set (possibly empty). Therefore, Algorithm 3.1 must terminate within finitely many loops. Moreover, if \(\mathcal {K}_{\mathbb {C}}\) is the set of all complex KKT tuples, i.e., Algorithm 2.3 finds all complex solutions for (2.2), then Algorithm 3.1 will either find all GNEs, or detect the nonexistence of GNEs. This is the case when Algorithm 2.3 finds the mixed volume many complex solutions for (2.2). In Sect. 4, we show that when the GNEP is defined by generic dense polynomials, Algorithm 2.3 can find mixed volume many solutions for (2.2). The following result is straightforward:

Theorem 3.2

For the GNEP, suppose \(|\mathcal {K}_{\mathbb {C}}|\) equals the mixed volume of (2.2). If S produced by Algorithm 3.1 is nonempty, then S is the set of all GNEs. Otherwise, the GNEP does not have any GNE.

3.1 The Polyhedral Homotopy Method for Finding KKT Tuples

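In this subsection, we explain how the polyhedral homotopy continuation is applied to find complex solutions of the system (2.2). For each \(i\in \{1,\dots , N\}\), denote the set of polynomials in the variables x and \(\lambda _i\) as

$$\begin{aligned} F_i(x,\lambda _i):=\left\{ \frac{\partial f_i}{\partial x_{i,k}}-\sum _{j=1}^{m_i}\lambda _{i,j}\frac{\partial g_{i,j}}{\partial x_{i,k}}\ \Big |\ k=1,\dots ,n_i\right\} \cup \left\{ g_{i,j}\mid j\in \mathcal {E}_i\right\} \cup \left\{ \lambda _{i,j}\, g_{i,j}\mid j\in \mathcal {I}_i\right\} . \end{aligned}$$

We define a system

$$\begin{aligned} F(x,\lambda ):=\bigcup _{i=1}^N F_i(x,\lambda _i). \end{aligned} \qquad (3.4)$$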

Then, \(F(x,\lambda )\) is a system with \(n+m\) polynomial equations in \(n+m\) variables, and we use Algorithm 2.3 to solve \(F(x,\lambda )=0\) by letting \(z:=(x,\lambda )\) and \(\mathscr {P}(z):=F(z)\).

The example below shows in detail how the homotopy method is applied to find KKT tuples of a concrete NEP.

Example 3.3

Consider the two-player unconstrained NEP

For this problem, the complex KKT system reduces to vanishing the gradients \(\nabla _{x_1}f_1\) and \(\nabla _{x_2}f_2\), i.e., we have

Considering a lifted system with generic lifting functions \(\ell _{f_1}\) and \(\ell _{f_2}\), we have

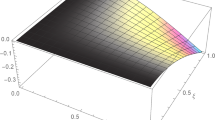

such that \(\overline{F}(x,1)=F\). Also, we get a start system \(\overline{F}(x,0)\) after trimming some powers of t. The system F has the mixed volume equal to 8. Therefore, the polyhedral homotopy continuation provided 8 paths to track and found 6 numerical solutions to F:

Indeed, using the software Macaulay2 [17], we verified that the system \(F(x)=0\) has exactly 6 complex solutions.

Remark 3.4

1. Note that the system \(F(x)=0\) has 6 complex solutions, which is strictly less than its mixed volume. This shows that the polyhedral homotopy continuation may find all complex solutions to the polynomial system even if the number of solutions is smaller than the mixed volume.
2. As presented in this example, the polyhedral homotopy continuation may find solutions outside the torus in the actual implementation.

In Sect. 4, we show that under the genericity assumption, the polyhedral homotopy continuation provides the optimal number of paths for finding complex KKT points. In this case, the polyhedral homotopy continuation guarantees finding all complex KKT points, hence the complete collection of GNEs can be obtained by Algorithm 3.1.

3.2 The Moment-SOS Relaxation for Selecting GNEs

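In this subsection, we discuss how to solve the polynomial optimization problem (3.2). For each i, denote

$$\begin{aligned} \Phi _i(x_i):=\{g_{i,j}(x_i,u_{-i})\mid j\in \mathcal {E}_i\},\qquad \Psi _i(x_i):=\{g_{i,j}(x_i,u_{-i})\mid j\in \mathcal {I}_i\}. \end{aligned}$$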

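Denote the degree

$$\begin{aligned} d_i:=\max \left\{ \lceil \deg (f_i(x_i,u_{-i}))/2\rceil ,\ \lceil \deg (\Phi _i(x_i))/2\rceil ,\ \lceil \deg (\Psi _i(x_i))/2\rceil \right\} , \end{aligned} \qquad (3.5)$$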

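where \(\deg (\Phi _i(x_i)):=\max \{\deg (g_{i,j}(x_i,u_{-i}))\mid j\in \mathcal {E}_i\}\), and \(\deg (\Psi _i(x_i))\) is similarly defined. For \(d\ge d_i\), recall that the tms \(y\in \mathbb {R}^{\mathbb {N}^{n_i}_{2d}}\) and localizing matrices \(L_q^{(d)}[y]\) are given by (2.5) and (2.6) respectively. The dth moment relaxation for (3.2) is

$$\begin{aligned} \left\{ \begin{array}{rl} \vartheta _i^{(d)}:=\min \limits _{y} & \langle f_i(x_i,u_{-i})-f_i(u_i,u_{-i}),\,y\rangle \\ \text{s.t.} & y_0=1,\ L_{p}^{(d)}[y]=0\ (p\in \Phi _i),\\ & M_d[y]\succeq 0,\ L_{q}^{(d)}[y]\succeq 0\ (q\in \Psi _i),\\ & y\in \mathbb {R}^{\mathbb {N}^{n_i}_{2d}}. \end{array}\right. \end{aligned} \qquad (3.6)$$

Its dual optimization problem is the dth SOS relaxation

$$\begin{aligned} \left\{ \begin{array}{rl} \max & \gamma \\ \text{s.t.} & f_i(x_i,u_{-i})-f_i(u_i,u_{-i})-\gamma \in \text{Ideal}[\Phi _i]_{2d}+\text{Qmod}[\Psi _i]_{2d}. \end{array}\right. \end{aligned} \qquad (3.7)$$

Both (3.6) and (3.7) are semidefinite programs that can be solved efficiently by well-developed methods and software (e.g., SeDuMi [47]). By solving the relaxations (3.6)–(3.7) for \(d=d_i, d_i+1, \ldots \), we get the following algorithm, named the Moment-SOS hierarchy [25], for solving (3.2).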

Algorithm 3.5

For the given \(u \in \mathcal {P}\) and the ith player’s optimization (3.2), initialize \(d:= d_i\).

- Step 1. Solve the moment relaxation (3.6) for the minimum value \(\vartheta _i^{(d)}\) and a minimizer \(y^\star \). If \(\vartheta _i^{(d)}\ge 0\), then \(\delta _i=0\) and stop; otherwise, go to the next step.
- Step 2. Let \(t:=d_i\) as in (3.5). If \(y^\star \) satisfies the rank condition

  $$\begin{aligned} {{\,\textrm{rank}\,}}{M_t[y^\star ]} \,=\, {{\,\textrm{rank}\,}}{M_{t-d_i}[y^\star ]} , \end{aligned} \qquad (3.8)$$

  then extract a set \(U_i\) of \(r:={{\,\textrm{rank}\,}}{M_t[y^\star ]}\) minimizers for (3.2) and stop.
- Step 3. If (3.8) fails to hold and \(t < d\), let \(t:= t+1\) and then go to Step 2; otherwise, let \(d:= d+1\) and go to Step 1.

The rank condition (3.8) is called flat truncation [35]. It is a sufficient (and almost necessary) condition for checking finite convergence of the Moment-SOS hierarchy. Indeed, the Moment-SOS hierarchy has finite convergence if and only if the flat truncation is satisfied for some relaxation orders, under some generic conditions [35]. When (3.8) holds, the method in [21] can be used to extract r minimizers for (3.2). The method is implemented in the software GloptiPoly 3 [22]. We refer to [21, 35] and [26, Chapter 6] for more details.

The convergence properties of Algorithm 3.5 are as follows. By solving the hierarchy of relaxations (3.6) and (3.7), we get a monotonically increasing sequence of lower bounds \(\{\vartheta _d\}_{d=d_i}^{\infty }\) for the minimum value \(\vartheta _{\min }\), i.e.,

$$\begin{aligned} \vartheta _{d_i}\le \vartheta _{d_i+1}\le \cdots \le \vartheta _{\min }. \end{aligned}$$

When \(\text{ Ideal }[\Phi _i]+ \text{ Qmod }[\Psi _i]\) is archimedean, we have \(\vartheta _d \rightarrow \vartheta _{\min }\) as \(d \rightarrow \infty \) [25]. If \(\vartheta _d = \vartheta _{\min }\) for some d, the relaxation (3.6) is said to be exact for solving (3.2). For such a case, the Moment-SOS hierarchy is said to have finite convergence. This is guaranteed when the archimedean condition and some optimality conditions hold (see [36]). Although there exist special polynomials such that the Moment-SOS hierarchy fails to have finite convergence, such special problems belong to a set of measure zero in the space of input polynomials [36]. We refer to [26,27,28, 36] for more work on polynomial and moment optimization.

4 The Mixed Volume of GNEPs

For a polynomial system, if the set of its complex solutions is zero-dimensional, then the algebraic degree of the polynomial system counts the number of complex solutions for the system. In this section, we prove that under some genericity assumptions on the GNEP, the mixed volume of the complex KKT system (2.2) equals its algebraic degree. Throughout this section, we have a GNEP of polynomials consisting of dense polynomials of certain degrees. Without loss of generality, we assume \(\mathcal {I}_i=\emptyset \) for all \(i\in \{1,\dots , N\}\), i.e., all players only have equality constraints, for the convenience of our discussion. Note that if there exist inequality constraints, then all following results still hold by enumerating the active constraints. For a tuple \(d:=(d_1,\dots ,d_N)\) of nonnegative integers, the \(\mathbb {C}[x]_d\) represents the space of polynomials whose degree in \(x_i\) is not greater than \(d_i\).

Recall that we say a system of polynomials is Bernstein generic if the number of isolated solutions in the complex torus equals its mixed volume. The main result of this section is the following:

Theorem 4.1

Consider the GNEP of polynomials given as in (1.1). For each i, let \(d_{i,0},\dots ,d_{i,m_i}\in \mathbb {N}^N\) be tuples of nonnegative integers. Suppose all \(f_i\) and \(g_{i,j}\) are generic dense polynomials in \(\mathbb {C}[x]_{d_{i,0}}\) and \(\mathbb {C}[x]_{d_{i,j}}\) respectively. Then, the complex KKT system (3.4) is Bernstein generic.

We first introduce some basic notation and useful lemmas before showing Theorem 4.1. Let \(w_1,\dots , w_N\) be weight vectors such that

where \(w_{i,k}\) and \(v_{i,j}\) are weights for variables \(x_{i,k}\) and \(\lambda _{i,j}\) respectively. Define \(w:=\sum _{i=1}^N w_i\). Each \(w_i\) is the weight vector for the system F applied only for \(x_i\) and \(\lambda _{i}\) variables.

The proof is inspired by [8]. We first state the lemmas from that paper that are used in the proof of Theorem 4.1.

Lemma 4.2

[8, Lemma 8] Let p be a polynomial in \(\mathbb {C}[x]\) and \(w\in \mathbb {Z}^n\) be an integer vector. If \(\frac{\partial p^w}{\partial x_i}\ne 0\), then \(\frac{\partial p^w}{\partial x_i}=\left( \frac{\partial p}{\partial x_i}\right) ^w\) and \(h^\star (A_{\frac{\partial p}{\partial x_i}})=h^\star (A_p)-w_i\).

Lemma 4.3

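Let p be a dense polynomial in \(\mathbb {C}[x]_d\) and \(w=\sum _{i=1}^N w_i\) be a weight vector in \(\mathbb {Z}^n\). Then, for each i, we have

$$\begin{aligned} \sum _{k=1}^{n_i}w_{i,k}\,x_{i,k}\frac{\partial p^w}{\partial x_{i,k}}=h_{w_i}^\star (A_p)\cdot p^w. \end{aligned}$$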

Proof

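For a monomial \(x^a\), note that \(x_{i,k}\frac{\partial x^a}{\partial x_{i,k}}=a_{i,k}x^a\). Therefore, we have

$$\begin{aligned} \sum _{k=1}^{n_i}w_{i,k}\,x_{i,k}\frac{\partial x^a}{\partial x_{i,k}}=\left( \sum _{k=1}^{n_i}w_{i,k}a_{i,k}\right) x^a=h_{w_i}(a)\,x^a. \end{aligned}$$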

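Summing over all monomials in \(p^w\), we get

$$\begin{aligned} \sum _{k=1}^{n_i}w_{i,k}\,x_{i,k}\frac{\partial p^w}{\partial x_{i,k}}=h_{w_i}^\star (A_{p^w})\cdot p^w. \end{aligned}$$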

Noting the fact that \(h_{w_i}^\star (A_p)=h_{w_i}^\star (A_{p^w})\), we get the desired result.

Note that Lemma 4.3 is a generalization of Euler’s formula for quasihomogeneous polynomials mentioned in [8, Lemma 9].

We are now ready to prove Theorem 4.1.

Proof of Theorem 4.1

Recall the second part of Theorem 2.1 that a polynomial system is Bernstein generic if and only if the facial system has no root in the complex torus for any nonzero vector w. For each polynomial p and a weight vector w, let \(\textbf{I}^w_i(p)\) and \(\textbf{J}^w_i(p)\) be partition sets of indices \(\{1,\dots ,n_i\}\) for each i, such that \(\frac{\partial p^w}{\partial x_{i,j}}\ne 0\) if \(j\in \textbf{I}^w_i(p)\) and \(\frac{\partial p^w}{\partial x_{i,j}}=0\) if \(j\in \textbf{J}^w_i(p)\). That is, \(\textbf{I}^w_i(p)\) is the set of all labels j such that the variable \(x_{i,j}\) appears in \(p^w\), and \(\textbf{J}^w_i(p)=\{1 ,\dots ,n_i\}\setminus \textbf{I}^w_i(p)\). Also, we let

Furthermore, let \(\hat{n}:=\sum _{i=1}^N \hat{n}_i\). It is clear that if \(j\in \textbf{J}^w_i(p)\) and \(a\in A^w_p\), then \(a_{i,j}=0\). Hence, we may consider \(p^w\) as a polynomial in \(\mathbb {C}[\textbf{I}^w]:=\mathbb {C}[x_{i,j}\mid j \in \textbf{I}^w_i(p),i = 1,\dots , N]\). Note that if p is a generic polynomial, then \(p^w\) can also be considered as a generic polynomial for a given support.

In the following, for a fixed weight vector w, we show that there are no roots for the facial system \(F^w\) in the torus \((\mathbb {C}\setminus \{\varvec{0}\})^n\). Consider the facial system of \(F_i\), say,

where

For each \(k\in \{1,\dots ,n_i\}\), let \(A_{\partial _{i,k}}\) be the support of \(\frac{\partial f_i}{\partial x_{i,k}}-\sum _{j=1}^{m_i}\lambda _{i,j}\frac{\partial g_{i,j}}{\partial x_{i,k}}\). Then, we have

In the above, we recall that for a polynomial p, the \(h^\star (A_{p})\) is the minimum of \(h(a):=\left\langle w,a \right\rangle \) over \(a\in A_p\); see Sect. 2.2 for more details. Depending on where the value of \(h^\star (A_{\partial _{i,k}})\) is attained, there are the following three cases:

(a) \(h^\star (A_{\partial _{i,k}})=\min \limits _{j=1,\dots ,m_i}\left\{ h^\star \left( A_{\frac{\partial g_{i,j}}{\partial x_{i,k}}}\right) +v_{i,j}\right\} < h^\star \left( A_{\frac{\partial f_i}{\partial x_{i,k}}}\right) \),

(b) \(h^\star (A_{\partial _{i,k}})=h^\star \left( A_{\frac{\partial f_i}{\partial x_{i,k}}}\right) <\min \limits _{j=1,\dots ,m_i}\left\{ h^\star \left( A_{\frac{\partial g_{i,j}}{\partial x_{i,k}}}\right) +v_{i,j}\right\} \),

(c) \(h^\star (A_{\partial _{i,k}})=h^\star \left( A_{\frac{\partial f_i}{\partial x_{i,k}}}\right) =\min \limits _{j=1,\dots ,m_i}\left\{ h^\star \left( A_{\frac{\partial g_{i,j}}{\partial x_{i,k}}}\right) +v_{i,j}\right\} \).
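The three cases can be summarized by a small classifier. This is a minimal sketch; the function name and argument layout are hypothetical. The inputs are the value \(h^\star \) for the derivative of \(f_i\), the list of values \(h^\star \) for the derivatives of the \(g_{i,j}\), and the multiplier weights \(v_{i,j}\).

```python
def classify_case(h_f, h_g, v):
    """Return 'a', 'b' or 'c' depending on where the minimum weight is attained.

    h_f : h* of the support of the derivative of f_i
    h_g : list with h* of the support of the derivative of g_{i,j}, one per j
    v   : list of weights v_{i,j} of the multipliers lambda_{i,j}
    """
    if not h_g:
        # No constraints (m_i = 0): the proof treats this as a special case of (c).
        return "c"
    g_side = min(hg + vj for hg, vj in zip(h_g, v))
    if g_side < h_f:
        return "a"   # only the constraint terms survive in the facial polynomial
    if h_f < g_side:
        return "b"   # only the objective term survives
    return "c"       # both sides contribute

print(classify_case(3, [1, 2], [0, 0]))  # constraint side is strictly smaller
```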

Let \(M_i^w\subseteq \{1,\dots , m_i\}\) be the set of indices l such that

Then, for each \(k=1,\dots ,n_i\), we have

Note that for a fixed i, if we consider a generic dense polynomial \(p\in \mathbb {C}[x]\) with a fixed multidegree, then we have the same support \(A_{\frac{\partial p}{\partial x_{i,k}}}\) for \(\frac{\partial p}{\partial x_{i,k}}\) for any \(k =1,\dots , n_i\). Therefore, the values of \(h^\star \left( A_{\frac{\partial f_{i}}{\partial x_{i,k}}}\right) \) and \(\min \limits _{j=1,\dots ,m_i}\left\{ h^\star \left( A_{\frac{\partial g_{i,j}}{\partial x_{i,k}}}\right) +v_{i,j}\right\} \) do not depend on the choice of \(k=1,\dots , n_i\). In particular, if \(h^\star \left( A_{\frac{\partial f_i}{\partial x_{i,k}}}\right) >\min \limits _{j=1,\dots ,m_i}\left\{ h^\star \left( A_{\frac{\partial g_{i,j}}{\partial x_{i,k}}}\right) +v_{i,j}\right\} \) holds for some \(k\in \{1,\dots ,n_i\}\), then it holds for every index in \(\{1,\dots , n_i\}\). Furthermore, since we are only concerned with zeros of \(F^w\) in the torus, we may assume that no single variable \(x_{i,j}\) divides all polynomials in \(F^w\); otherwise, we may divide all polynomials in \(F^w\) by a proper power of \(x_{i,j}\) until one of them cannot be divided by \(x_{i,j}\) any further.

For each index i, let

Note that the variety \(U^w_i\) is defined by the ith player’s feasibility constraints, \(\text {Jac}^w_i\) is the transpose of the Jacobian matrix in the variable \(x_i\) for the vector \([f^w_i,\ g^w_{i,1},\ \dots ,\ g^w_{i,m_i}]^{\top }\), and \(W^w_i\) is the determinantal variety given by the facial system of complex Fritz-John conditions (see [37, Section 3]). Recall the assumption that not all polynomials involved are divisible by any single variable. For all \(2\le l\le m_i\), the hypersurface given by \(g^w_{i,l}(x)=0\) intersects the common zeros of \(g^w_{i,1},\dots ,g^w_{i,l-1}\) without any fixed point when varying the coefficients of \(g^w_{i,l}\). Thus, by Bertini’s theorem (see [37, Proposition 2.2] for example), the variety \(U_i^w\) is of codimension \(m_i\) (or possibly empty). Then, by a similar argument, \(\dim (U_i^w\cap W_i^w)\le \hat{n}-\hat{n}_i\). Also, following the argument in the proof of [37, Theorem 3.1], the dimension for \(V_{-i}^w:=\bigcap _{k\ne i}(U_k^w\cap W_k^w)\) is not greater than \(\hat{n}_i\).

If \(x^\star \in W_i^w\), then there exist \(\lambda _{i,0},\dots ,\lambda _{i,m_i}\in \mathbb {C}\) such that

It means that if \(\left( \frac{\partial f_i}{\partial x_{i,k}}-\sum _{j=1}^{m_i}\lambda _{i,j} \frac{\partial g_{i,j}}{\partial x_{i,k}}\right) ^w (x^\star ) =0\), then \(x^\star \in W_i^w\). Indeed, all nonzero solutions to the facial system, if they exist, must be in \(U_i^w\cap W_i^w\) for all \(i=1,\dots ,N\). If \(m_i> \hat{n}_i\) for some i, then \({ U_i^w\cap }{V}^w_{-i}=\emptyset \) when \(g_{i,j}\) are generic polynomials, so \(F^w\) does not have any solution. Hence, we may assume that \(m_i\le \hat{n}_i\). From now on, we prove the desired statement by considering each of the three cases mentioned above respectively.

Case (a). Suppose that there exists \(i=1,\dots ,N\) such that

Without loss of generality, we assume \(i=1\). Note that if there is a root \((x^\star ,\lambda ^\star )\) of \(F^w\) in the torus, then

Denote by \((\text {Jac}_i^w)^{\circ }\) the submatrix of the rightmost \(m_i\) columns of \(\text {Jac}_i^w\), and

Then Eq. (4.1) implies that \(x^\star \in (W_1^w)^{\circ }\cap U_1^w\). For a generic \(z_{-1}\in \mathbb {C}^{\hat{n}-\hat{n}_1}\), [37, Proposition 2.2] implies that the variety \(\{x_1\in \mathbb {C}^{\hat{n}_1}:g_{1,1}^w(x_1,z_{-1})=\dots =g_{1,m_1}^w(x_1,z_{-1})=0\}\) is smooth, i.e., the matrix \((\text{ Jac}_i^w)^{\circ }\) has full column rank at \((x_1,z_{-1})\) for all \(x_1\in \mathbb {C}^{\hat{n}_1}\). So we know \(\dim ((W_1^w)^{\circ }\cap U_1^w)<\hat{n}-\hat{n}_1\) by [45, Theorem 1.25]. Thus, we have \((W_1^w)^{\circ }\cap U_1^w\cap V^w_{-1}=\emptyset \), which contradicts the fact that \((x^\star ,\lambda ^\star )\) is a root of \(F^w\).

Case (b). Suppose that there exists \(i=1,\dots ,N\) such that

Without loss of generality, assume that \(i=1\). We further assume that \(m_1\ne 0\), because the case \(m_1=0\) can be treated as a special case of Case (c). For a root \((x^\star ,\lambda ^\star )\) of the facial system, we have \(\frac{\partial f_1^w}{\partial x_{1,k}}(x^\star )=0\) for all \(k=1,\dots ,n_1\). Then, \(\textbf{V}(\frac{\partial f_1^w}{\partial x_{1,k}}\mid k=1,\dots , n_1)\cap V^w_{-1}\) has dimension at most zero due to the genericity of \(f_1^w\). Hence, the genericity of \(g_{1,j}^w\) implies that there is no point in \(\bigcap _{i=1}^N(W_i^w\cap U_i^w)\) satisfying (4.2).

Case (c). As the first two cases cannot happen, we may assume that

for all \(i=1,\dots , N\). We consider two subcases: the case that \(w_{i,k}\ge 0\) for all indices \(i=1,\dots ,N\) and \(k\in \textbf{I}_i^w\), and the case that there is \(i\in \{1,\dots ,N\}\) such that \(w_{i,k}<0\) for some \(k\in \textbf{I}_i^w\).

First, we assume that \(w_{i,k}\ge 0\) for each index i and \(k\in \textbf{I}_i^w\). Because \(f_i\) is a dense polynomial, its partial derivatives are also dense polynomials. Thus, we have \(\varvec{0}\) in the support \(A_{p}\) for each \(p\in \{\frac{\partial f_i}{\partial x_{i,k}}\mid {k\in \textbf{I}_i^w}\}\). Therefore, we have \(0\ge h^\star (A_{p})\). Since all \(w_{i,k}\ge 0\), we also have \(h^\star (A_{p})\ge 0\), and so we get \(h^\star (A_p)=0\) for each p. This further implies that \(w_{i,k}=0\) for all i and \(k\in \textbf{I}_i^w\). Also, since

for each i, we have \(\min \limits _{j=1,\dots ,m_i} v_{i,j}=0\). We assume that there is at least one index \(i\in \{1,\dots ,N\}\) such that \(v_{i,j}>0\) for some \(j\in \{1,\dots , m_i\}\). Otherwise, w is just the zero vector and there is nothing to prove. Recall that \(M_i^w\) is a subset of \(\{1,\dots , m_i\}\) such that \(v_{i,l}=\min \limits _{j=1,\dots , m_i} v_{i,j}=0\) for all \(l\in M_i^w\). Then, we know that \(M_i^w\subsetneq \{1,\dots , m_i\}\) for some \(i=1,\dots ,N\); otherwise, \(F^w\) equals F. Without loss of generality, let \(i=1\) be such an index. Then, the size of \(M_1^w\) is exactly the number of variables \(\lambda _{1,j}\) that appear in \(F_1^w\) (i.e., the variable \(\lambda _{1,j}\) appears in \(F_1^w\) if and only if \(j\in M_1^w\)). Without loss of generality, we further assume \(M_1^w=\{1,\dots ,\hat{m}_1\}\) for some \(\hat{m}_1<m_1\), and let \(\widehat{\text {Jac}_1^w}\) be the submatrix of the leftmost \(\hat{m}_1+1\) columns of \(\text {Jac}_1^w\). If \((x^{\star },\lambda ^{\star })\) is a nonzero solution to the facial system, then \(\text{ rank }\,(\widehat{\text {Jac}_1^w}(x^{\star }))\le \hat{m}_1\). Define

the determinantal variety of \(\widehat{\text {Jac}_1^w}\). Then, by an argument similar to the proof of [37, Theorem 3.1], we have

Note that \(g^w_{1,j}(x^{\star })=0\) for all \(j=1,\dots ,m_1\). Since \(\hat{m}_1<m_1\), for any l such that \(\hat{m}_1< l\le m_1\), the hypersurface \(g^w_{1,l}=0\) intersects \(\widehat{W}_1^w\cap \textbf{V}(g^w_{1,1},\dots ,g^w_{1,\hat{m}_1})\) properly by the genericity of \(g^w_{1,l}\). It means that we have \(\text{ codim }(\widehat{W}_1^w\cap U_1^w)>\hat{n}_1\), and hence \(\widehat{W}_1^w\cap U_1^w\cap V_{-1}^w=\emptyset \). Therefore, such \(x^{\star }\) does not exist.

Lastly, we deal with the subcase that there exists \(i\in \{1,\dots ,N\}\) such that \(w_{i,k}<0\) for some \(k\in \textbf{I}_i^w\). Without loss of generality, assume that \(i=1\) and suppose that \(w_{1,\hat{k}}<0\) for some \(\hat{k}\in \textbf{I}_1^w\). Since there is a negative entry \(w_{1,\hat{k}}\), we have \(h_1^\star (A_{g_{1,t}})<0\) for some \(t\in \{1,\dots , m_1\}\). Furthermore, suppose that we have a root \((x^\star ,\lambda ^\star )\) of \(F^w\). Note that \(g_{1,j}^w(x^\star )=0\) for all \(j=1,\dots , m_1\). Let \(t\in M_1^w\) be the index such that \(h^\star (A_{g_{1,t}})<0\). Then, by Lemma 4.3, we have

In the above, the third equality holds due to the fact that

and the last equality is obtained by applying Lemma 4.3. Let

be the polynomial obtained from the last equality. We know that the point \(x^\star \) lies in \(\textbf{V}(q)\). It means that \(q(x^\star )=h_{w_1}^\star (A_{f_1})f_1^w(x^\star )=0\) since \(x^\star \in U_1^w\). However, this contradicts the genericity of \(f_1\).

Remark 4.4

1. For the GNEP, if the defining functions are generic polynomials, then the set of complex KKT tuples is finite, and all KKT tuples lie in the torus when the GNEP only has equality constraints. This is implied by [37, Theorem 3.1]. In this case, Bernstein genericity implies that the mixed volume agrees with the algebraic degree. The explicit formula for the algebraic degree of generic GNEPs is studied in the recent paper [37].

2. Even when the defining functions of the GNEP are not generic, the mixed volume is still an upper bound for the number of isolated solutions in the torus by Theorem 2.1. In this case, we may still find all complex KKT tuples using the homotopy continuation. However, how to certify that the homotopy continuation has found all solutions of a system remains open in general. For partial results on tests for completeness, see [10, 31].

5 Numerical Examples

In this section, we present numerical experiments on solving GNEPs of polynomials using the polyhedral homotopy continuation. We use the software HomotopyContinuation.jl to find complex KKT points of GNEPs by the polyhedral homotopy continuation, and GloptiPoly3 and SeDuMi to implement the Moment-SOS hierarchy of semidefinite relaxations for verifying GNEs. The computations were run on a MacBook Pro with a 2 GHz Quad-Core Intel Core i5 and 32 GB RAM.

When the GNEP is convex, if the complex KKT tuple \((x,\lambda _1 ,\dots ,\lambda _N)\) satisfies

i.e., x is a KKT point, then x is a GNE. For nonconvex GNEPs, the tuple of strategies x is a GNE if and only if the

where each \(\delta _i\) is given by (3.2). The \(\delta \) is called the accuracy parameter for x. In practical computation, one may not get \(\delta \ge 0\) exactly, due to round-off errors. In this section, we regard x as a GNE if \(\delta \ge -10^{-6}\).
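The acceptance rule can be sketched in a few lines. The function names are hypothetical, and the sketch assumes that the accuracy parameter \(\delta \) is the smallest of the per-player values \(\delta _i\), which is how the surrounding text reads; the values \(\delta _i\) themselves come from solving (3.2) and are taken as given here.

```python
TOL = 1e-6  # tolerance used in this section

def accuracy_parameter(deltas):
    """Assumed aggregate: the smallest per-player value delta_i."""
    return min(deltas)

def is_gne(deltas, tol=TOL):
    """Accept x as a GNE when the accuracy parameter is at least -tol."""
    return accuracy_parameter(deltas) >= -tol

# Accuracy parameters reported for the four NEs in Example 5.1(i).
reported = [-5.2324e-11, -1.7619e-9, -4.8633e-9, -7.1933e-9]
accepted = [is_gne([d]) for d in reported]
print(accepted)
```

All four reported values are slightly negative but well within the tolerance, so each candidate is accepted.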

Example 5.1

(i) Consider the 2-player NEP in [38]

This is a nonconvex NEP since both players’ optimization problems are nonconvex. Moreover, the feasible set for the first player’s optimization problem is unbounded. By implementing the polyhedral homotopy continuation on the complex KKT system, we got 252 complex KKT tuples, and 8 of them satisfy the KKT system (2.1). Since this is a nonconvex problem, we ran Algorithm 3.1 for selecting NEs. We obtained four NEs \(u=(u_1,u_2)\) with

$$\begin{aligned} \begin{array}{ll} u_1=(0.3198, 0.6396, -0.6396 ), & u_2=( 0.6396, 0.6396, -0.4264); \\ u_1=(0.0000, 0.3895, 0.5842 ), & u_2=(-0.8346, 0.3895, 0.3895); \\ u_1=(0.2934, -0.5578, 0.8803 ), & u_2=( 0.5869, -0.5578, 0.5869);\\ u_1=(0.0000, -0.5774, -0.8660 ), & u_2=( -0.5774, -0.5774, -0.5774). \\ \end{array} \end{aligned}$$

Their accuracy parameters are respectively

$$\begin{aligned} -5.2324\cdot 10^{-11}, \, -1.7619\cdot 10^{-9}, \, -4.8633\cdot 10^{-9}, \, -7.1933\cdot 10^{-9}. \end{aligned}$$

Note that for this NEP, the mixed volume of the complex KKT system equals 252. The polyhedral homotopy found all complex KKT tuples, so all NEs are obtained by our method. It took about 7.81 seconds to find all NEs, including 4 seconds to find all complex KKT tuples and about 3.81 seconds to verify NEs.

(ii) If the second player’s objective becomes

then the polyhedral homotopy continuation found 252 complex KKT tuples, 3 of which satisfy the KKT system (2.1). However, Algorithm 3.1 shows that none of these KKT points is an NE. Since the mixed volume of the complex KKT system equals 252, all complex KKT tuples were found by the homotopy continuation. Therefore, we detected that this NEP does not have any NE. It took around 3 seconds to solve the complex KKT system, and 1.09 seconds to detect the nonexistence of NEs.

Example 5.2

Consider a GNEP variation of the problem in Example 5.1(i).

Similar to the problem in Example 5.1(i), this is a nonconvex GNEP, and the first player has an unbounded feasible strategy set. By implementing the polyhedral homotopy continuation on the complex KKT system, we computed the mixed volume 512 and found 484 complex KKT tuples, and 11 of them satisfy the KKT system (2.1). Since this is a nonconvex problem, we ran Algorithm 3.1 for selecting GNEs. We obtained two GNEs \(u=(u_1,u_2)\) with

Their accuracy parameters are respectively

It took about 9.85 seconds to find all GNEs including 4 seconds to solve the complex KKT system, and about 5.85 seconds to verify GNEs.

Example 5.3

Consider the 2-player convex GNEP in [39]

By implementing the polyhedral homotopy continuation on the complex KKT system, we found that the mixed volume is 23 and obtained 17 complex KKT tuples. Only one of these KKT tuples satisfies the KKT system (2.1). Because this is a convex GNEP, we got a GNE \(u:=(u_1,u_2)\) from this KKT tuple with

It took around 2 seconds to solve the complex KKT system.

Example 5.4

Consider a 2-player GNEP

This is a nonconvex GNEP. By implementing the polyhedral homotopy continuation on the complex KKT system, we found that the mixed volume is 480, and the polyhedral homotopy continuation found exactly 480 complex KKT tuples. We ran Algorithm 3.1 and obtained the unique GNE \(u:=(u_1,u_2)\) with

Note that for this GNEP, the mixed volume of the complex KKT system coincides with the number of complex KKT tuples we found. The polyhedral homotopy found all complex KKT tuples, so all GNEs are obtained by our method. It took around 5.75 seconds to find all GNEs including 4 seconds to solve the complex KKT system, and 1.75 seconds to select the GNE.

Example 5.5

Consider a GNEP whose optimization problems are

This is a nonconvex GNEP. By implementing the polyhedral homotopy continuation on the complex KKT system, we computed the mixed volume to be 168, and the polyhedral homotopy found 168 complex KKT tuples. However, none of them are GNEs. It took around 3 seconds to solve this problem, including 3 seconds to solve the complex KKT system and 0.001 seconds to detect the nonexistence of GNEs.

Example 5.6

Consider a convex GNEP of 3 players. For \(i=1,2,3\), the ith player aims to minimize the quadratic function

All variables have box constraints \(-10\le x_{i,j}\le 10\), for all i, j. In addition to them, the first player has linear constraints \(x_{1,1}+x_{1,2}+x_{1,3}\le 20,\,x_{1,1}+x_{1,2}-x_{1,3}\le x_{2,1}-x_{3,2}+5\); the second player has \(x_{2,1}-x_{2,2}\le x_{1,2}+x_{1,3}-x_{3,1}+7\); and the third player has \(x_{3,2}\le x_{1,1}+x_{1,3}-x_{2,1}+4.\)

(i) Consider the case that the values of parameters are set as in [15, Example A.3]:

This is a convex GNEP since for all \(i\in \{1,2,3\}\), the \(A_i\) is positive semidefinite and all constraints are linear. By implementing the polyhedral homotopy continuation on the complex KKT system, we got the mixed volume 12096, and polyhedral homotopy found 11631 complex KKT tuples. There are 5 GNEs obtained by Algorithm 3.1, which are presented in the following table.

| | \(u_1\) | \(u_2\) | \(u_3\) |
|---|---|---|---|
| 1 | (-0.3805, -0.1227, -0.9932) | (0.3903, 1.1638) | (0.0504, 0.0176) |
| 2 | (-0.9018, -4.4017, -2.1791) | (-2.0034, -2.4541) | (-0.0316, 2.9225) |
| 3 | (-0.8039, -0.3062, -2.3541) | (0.9701, 3.1228) | (0.0751, -0.1281) |
| 4 | (1.9630, -1.3944, 5.1888) | (-3.1329, -10.0000) | (-0.0398, 1.6392) |
| 5 | (0.6269, 10.0000, 9.3731) | (1.8689, 10.0000) | (0.3353, -10.0000) |

It took around 177 s to solve the complex KKT system. We would like to remark that in [15] and [39], only the first GNE was found, and the second to the fourth GNEs are new solutions found by our algorithm.

(ii) If we let

and all other parameters be given as in (i), then this GNEP is nonconvex. By implementing the polyhedral homotopy continuation on the complex KKT system, the mixed volume equals 12096 and we got 11620 complex KKT tuples, and five of them satisfy the KKT condition (2.1). Since this is a nonconvex problem, we ran Algorithm 3.1 for selecting GNEs, and obtained one GNE \(u=(u_1,u_2,u_3)\) with

The accuracy parameter is \(-9.5445\cdot 10^{-7}\). It took around 209.68 seconds to find all GNEs including 207 seconds to solve the complex KKT system, and 2.68 seconds to select the GNE.

5.1 Comparison with Existing Methods

In this subsection, we compare the performance of Algorithm 3.1 with some existing methods for solving GNEPs, such as the augmented Lagrangian method (ALM) in [24], Gauss-Seidel method (GSM) in [40], the interior point method (IPM) in [12], and the semidefinite relaxation method (KKT-SDP) in [39]. We tested these methods on all GNEPs of polynomials in Examples 5.1–5.6.

Let \(u=(u_1,\dots , u_N)\) be a computed tuple for an N-player game. Then, u is a GNE if and only if \(\delta \ge 0\). For the KKT-SDP method, we say that the method finds a GNE successfully whenever \(\delta \ge -10^{-6}\), since \(\delta \ge 0\) may not be attainable due to numerical errors. The other algorithms mentioned above are iterative methods, so we use the following stopping criterion: for the computed tuple u, when \(\min \limits _{i=1,\dots ,N}\left\{ \min \limits _{j\in \mathcal {I}_i} \{ g_{i,j}(u)\},\ \min \limits _{j\in \mathcal {E}_i}\{ -|g_{i,j}(u)|\}\right\} \ge -10^{-6}\), we solve (3.2) for each i. If we further have \(\delta \ge -10^{-6}\), then we stop the iteration and report that the method found a GNE successfully.
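The feasibility part of this stopping criterion can be sketched as follows. The function names and the input layout (one pair of value lists per player) are assumptions for illustration; the constraint values are assumed to have been evaluated at the computed tuple beforehand.

```python
def feasibility_margin(players):
    """Smallest feasibility margin over all players.

    players: list of (ineq_values, eq_values) pairs, one per player, holding
    the values g_{i,j}(u) of the inequality and equality constraints.
    Inequalities contribute g_{i,j}(u); equalities contribute -|g_{i,j}(u)|.
    """
    margins = []
    for ineq_values, eq_values in players:
        margins.extend(ineq_values)
        margins.extend(-abs(val) for val in eq_values)
    # With no constraints at all, every point is feasible.
    return min(margins) if margins else float("inf")

def feasible_enough(players, tol=1e-6):
    """True when the tuple is feasible up to tol, so that (3.2) is worth solving."""
    return feasibility_margin(players) >= -tol

# Hypothetical constraint values for a 2-player game.
example = [([0.2, 1e-9], [3e-8]), ([], [-5e-7])]
print(feasibility_margin(example), feasible_enough(example))
```

Only when this test passes is the more expensive check of the accuracy parameter carried out.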

For the ALM, GSM and IPM, the same parameters are applied as in [12, 24, 40]. In the augmented Lagrangian method, full penalization is used, and a Levenberg-Marquardt type method (see [24, Algorithm 24]) is implemented to solve penalized subproblems. For the Gauss-Seidel method, normalization parameters are updated as in (4.3) of [40], and Moment-SOS relaxations are used to solve normalized subproblems. We let 1000 be the maximum number of iterations for the ALM and IPM, and at most 100 iterations are allowed in the GSM. For initial points, we use \((0,0,0,\frac{1}{\sqrt{3}},\frac{1}{\sqrt{3}},\frac{1}{\sqrt{3}})\) for Examples 5.1(i)–(ii), \((0,0,0,0,-\frac{1}{\sqrt{2}},\frac{1}{\sqrt{2}})\) for Example 5.2, (0, 0, 1, 1) for Example 5.4, \((0, 0, 0, \frac{1}{\sqrt{5}})\) for Example 5.5, and the zero vector for all other problems. For the one-shot KKT-SDP method, randomly generated positive semidefinite matrices are used to formulate the polynomial optimization problems. Note that the ALM, IPM and KKT-SDP are designed for finding a KKT point of the GNEP. When the GNEP is convex (e.g., Examples 5.3 and 5.6(i)), the limit point is guaranteed to be a GNE if these methods produce a convergent sequence. However, since we in general do not make any convexity assumption, it is possible that these methods converge to a KKT point which is not a GNE. For the ALM and IPM, the produced sequence is considered convergent to a KKT point if the last iterate satisfies the KKT conditions up to a small round-off error (say, \(10^{-6}\)). If the iteration is convergent but the stopping criterion is not met, we still solve (3.2) to check whether the last iterate is a GNE.

The numerical results are shown in Table 1. In general, a method is regarded as solving the GNEP successfully if the error is small (e.g., less than \(10^{-6}\)). For Algorithm 3.1, when more than one GNE is obtained, we present the largest error among these GNEs.

The comparison is summarized as follows:

1. The augmented Lagrangian method converges to a KKT point that is not a GNE for Examples 5.1(i)–(ii) and 5.6(ii). For Example 5.2, the iteration cannot proceed because the maximum penalty parameter was reached at the 14th iteration. For Examples 5.4 and 5.5, it fails to converge because the penalized subproblem cannot be solved accurately.

2. The interior point method converges to a KKT point that is not a GNE for Examples 5.1(ii), 5.4 and 5.6(ii). For Examples 5.2 and 5.5, the algorithm does not converge. In these problems, the Newton-type directions usually do not satisfy sufficient descent conditions.

3. For Examples 5.1(i)–(ii) and 5.6(i), the Gauss-Seidel method failed to converge and it alternated between several points. For Examples 5.2, 5.5 and 5.6(ii), the iteration cannot proceed at some stages since global minimizers for normalized subproblems cannot be obtained. Usually, this is because the normalized subproblem is infeasible or unbounded.

4. The semidefinite relaxation method obtained a KKT point that is not a GNE for Examples 5.1(i)–(ii), 5.2 and 5.6(ii).

5. Algorithm 3.1 detected nonexistence of GNEs for Examples 5.1(ii) and 5.5. We would like to remark that if there exist KKT points that are not GNEs, then the semidefinite relaxation method may not detect the nonexistence of GNEs. For all other GNEPs, Algorithm 3.1 found at least one GNE. Moreover, for Examples 5.1(i), 5.2 and 5.6, Algorithm 3.1 found more than one GNE, and the completeness of GNEs is guaranteed for Examples 5.1(i), 5.2 and 5.4.

5.2 GNEPs of Polynomials with Randomly Generated Coefficients

We present numerical results of Algorithm 3.1 on GNEPs defined by polynomials whose coefficients are randomly generated. For the GNEP with N players, we assume that all players have the same dimension for their strategy vectors, i.e., \(n_1=n_2=\cdots =n_N\). The ith player’s optimization problem is given by

In the above, we have \(A_i=R_i^{\top } R_i\) with a randomly generated matrix \(R_i\in \mathbb {R}^{n_i\times n_i}\). Also, \(B_i\in \mathbb {R}^{{n_i}\times (n-n_i)}\), \(c_i\in \mathbb {R}^n\), \(d_i\in \mathbb {R}\) are randomly generated real matrices or vectors. Under this setting, the constraining function of (5.1) is a convex polynomial in \(x_i\), and the \(X_i(x_{-i})\) is compact, for all \(x_{-i}\in \mathbb {R}^{n-n_i}\).

For the objective function \(f_i\), we consider two cases. First, we let

where \(\Sigma _i=\Theta _i^{\top }\Theta _i\) with a randomly generated matrix \(\Theta _i\in \mathbb {R}^{n_i\times n_i}\), and \(\Lambda _i\) (resp., \(c_i\)) is a randomly generated matrix (resp., vector) in \(\mathbb {R}^{{n_i}\times (n-n_i)}\) (resp., in \(\mathbb {R}^n\)). In this case, the GNEP given by (5.1) is convex, and all KKT points are GNEs. The second case is for GNEPs without convexity settings. We consider a degree d dense polynomial with randomly generated real coefficients, i.e.,

where \(\zeta \) is a randomly generated real vector of the proper size. To choose real matrices, vectors and coefficients randomly, we use the Matlab function unifrnd that generates real numbers following the uniform distribution.
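The construction \(A_i=R_i^{\top } R_i\) always yields a positive semidefinite matrix, since \(x^{\top }A_ix=\Vert R_ix\Vert ^2\ge 0\). The sketch below illustrates this in plain Python rather than Matlab; the sampling interval \([-1,1]\), the seed and the helper names are assumptions, as the text does not specify the range passed to unifrnd.

```python
import random

def random_matrix(rows, cols, rng, low=-1.0, high=1.0):
    """Matrix with entries drawn uniformly from [low, high] (assumed range)."""
    return [[rng.uniform(low, high) for _ in range(cols)] for _ in range(rows)]

def gram(R):
    """Return R^T R as a list of lists."""
    n = len(R[0])
    return [[sum(row[i] * row[j] for row in R) for j in range(n)]
            for i in range(n)]

def quadratic_form(A, x):
    """Evaluate x^T A x."""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))

rng = random.Random(0)        # fixed seed so the sketch is reproducible
R = random_matrix(3, 3, rng)  # plays the role of R_i with n_i = 3
A = gram(R)                   # plays the role of A_i = R_i^T R_i

# Spot-check positive semidefiniteness on random test vectors.
samples = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(20)]
print(min(quadratic_form(A, x) for x in samples) >= -1e-12)
```

The same construction applies to \(\Sigma _i=\Theta _i^{\top }\Theta _i\) in the convex objective.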

The numerical results are presented in Table 2. By Theorem 3.2, if the number of complex KKT points obtained equals the mixed volume, then Algorithm 3.1 can find all GNEs or detect the nonexistence of GNEs. Since we consider random examples, the homotopy method usually finds as many KKT points as the mixed volume. As the problem size grows, there are some cases where the homotopy method cannot find that many KKT points, due to numerical issues.
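The count-based test can be written as a one-line comparison. As an illustration, the sketch below applies it to the mixed volumes and numbers of complex KKT tuples reported in Examples 5.1–5.6; the dictionary layout and function name are hypothetical. The test is only a sufficient condition: a count below the mixed volume does not by itself show that solutions were missed, because the mixed volume is an upper bound that non-generic systems need not attain.

```python
# (mixed volume, number of complex KKT tuples found), as reported in Section 5.
REPORTED = {
    "5.1(i)": (252, 252),
    "5.1(ii)": (252, 252),
    "5.2": (512, 484),
    "5.3": (23, 17),
    "5.4": (480, 480),
    "5.5": (168, 168),
    "5.6(i)": (12096, 11631),
    "5.6(ii)": (12096, 11620),
}

def count_matches_mixed_volume(mixed_volume, found):
    """Sufficient test: every torus solution was found if the counts agree."""
    return found == mixed_volume

matching = [name for name, (mv, found) in REPORTED.items()
            if count_matches_mixed_volume(mv, found)]
print(matching)
```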

6 Conclusions and Discussions

This paper studies a new approach for solving GNEPs of polynomials using the polyhedral homotopy continuation and the Moment-SOS relaxations. We show that under some genericity assumptions, the mixed volume and the algebraic degree for the complex KKT system are identical, and our method can find all GNEs or detect the nonexistence of GNEs. Some numerical experiments are presented to show the effectiveness of our method.

For future work, it would be interesting to find local GNEs, i.e., to find \(x=(x_1,\dots ,x_N)\) such that each \(x_i\) is a local minimizer for \(\text{ F}_i(x_{-i})\). Note that every local GNE satisfies the KKT condition. However, it is difficult to select local GNEs from KKT points, especially when the second-order sufficient optimality conditions (see [5]) are not satisfied. Moreover, when \(|\mathcal {K}_{\mathbb {C}}|\) is strictly less than the mixed volume for (2.2), how do we know whether our method has found all GNEs, or has correctly detected the nonexistence of GNEs? These questions are mostly open, to the best of the authors’ knowledge.

Data Availability

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Change history

03 May 2023

A Correction to this paper has been published: https://doi.org/10.1007/s10915-023-02192-8

References

Ardagna, D., Ciavotta, M., Passacantando, M.: Generalized Nash equilibria for the service provisioning problem in multi-cloud systems. IEEE Trans. Serv. Comput. 10(3), 381–395 (2015)

Arrow, K.J., Debreu, G.: Existence of an equilibrium for a competitive economy. Econometrica: J. Econom. Soc. 22, 265–290 (1954)

Ba, Q., Pang, J.-S.: Exact penalization of generalized Nash equilibrium problems. Oper. Res. 70(3), 1448–1464 (2022)

Bernshtein, D.N.: The number of roots of a system of equations. Funct. Anal. Its Appl. 9(3), 183–185 (1975)

Bertsekas, D.: Nonlinear Programming, 3rd edn. Athena Scientific, Nashua (2016)

Blum, L., Cucker, F., Shub, M., Smale, S.: Complexity and Real Computation. Springer, Berlin (2012)

Breiding, P., Rose, K., Timme, S.: Certifying zeros of polynomial systems using interval arithmetic. arXiv:2011.05000 (2020)

Breiding, P., Sottile, F., Woodcock, J.: Euclidean distance degree and mixed volume. Found. Comput. Math. 22, 1743–1765 (2022)

Breiding, P., Timme, S.: HomotopyContinuation.jl: A package for homotopy continuation in Julia. In: International Congress on Mathematical Software, pp. 458–465. Springer (2018)

Brysiewicz, T., Burr, M.: Sparse trace tests. arXiv:2201.04268 (2022)

Debreu, G.: A social equilibrium existence theorem. Proc. Natl. Acad. Sci. 38(10), 886–893 (1952)

Dreves, A., Facchinei, F., Kanzow, C., Sagratella, S.: On the solution of the KKT conditions of generalized Nash equilibrium problems. SIAM J. Optim. 21(3), 1082–1108 (2011)

Facchinei, F., Fischer, A., Piccialli, V.: On generalized Nash games and variational inequalities. Oper. Res. Lett. 35(2), 159–164 (2007)

Facchinei, F., Kanzow, C.: Generalized Nash equilibrium problems. Ann. Oper. Res. 175(1), 177–211 (2010)

Facchinei, F., Kanzow, C.: Penalty methods for the solution of generalized Nash equilibrium problems. SIAM J. Optim. 20(5), 2228–2253 (2010)

Facchinei, F., Pang, J.-S.: Nash equilibria: the variational approach. In: Convex Optimization in Signal Processing and Communications, p. 443 (2010)

Grayson, D., Stillman, M.: Macaulay2, a software system for research in algebraic geometry. http://www.math.uiuc.edu/Macaulay2/

Gürkan, G., Pang, J.-S.: Approximations of Nash equilibria. Math. Program. 117(1), 223–253 (2009)

Harker, P.T.: Generalized Nash games and quasi-variational inequalities. Eur. J. Oper. Res. 54(1), 81–94 (1991)

Hauenstein, J.D., Sottile, F.: alphaCertified: Software for certifying numerical solutions to polynomial equations. Available at math.tamu.edu/\(\sim \)sottile/research/stories/alphaCertified (2011)

Henrion, D., Lasserre, J.: Detecting global optimality and extracting solutions in GloptiPoly. Positive polynomials in control. Lecture Notes in Control and Information Sciences, vol. 312, pp. 293–310 (2005)

Henrion, D., Lasserre, J.-B., Löfberg, J.: GloptiPoly3: moments, optimization and semidefinite programming. Optim. Methods Softw. 24(4–5), 761–779 (2009)

Huber, B., Sturmfels, B.: A polyhedral method for solving sparse polynomial systems. Math. Comput. 64(212), 1541–1555 (1995)

Kanzow, C., Steck, D.: Augmented Lagrangian methods for the solution of generalized Nash equilibrium problems. SIAM J. Optim. 26(4), 2034–2058 (2016)

Lasserre, J.B.: Global optimization with polynomials and the problem of moments. SIAM J. Optim. 11(3), 796–817 (2001)

Lasserre, J.B.: An Introduction to Polynomial and Semi-Algebraic Optimization, vol. 52. Cambridge University Press, Cambridge (2015)

Lasserre, J.: The Moment-SOS Hierarchy. In: Sirakov, B., Ney de Souza, P., Viana, M. (eds.) Proceedings of the International Congress of Mathematicians (ICM 2018), vol. 3, pp. 3761–3784. World Scientific, Singapore (2019)

Laurent, M.: Optimization over polynomials: Selected topics. In: Jang, S., Kim, Y., Lee, D.-W., Yie, I. (eds.) Proceedings of the International Congress of Mathematicians ICM 2014, pp. 843–869 (2014)

Lee, K.: The NumericalCertification package in Macaulay2. arXiv:2208.01784 (2022)

Lee, T.-L., Li, T.-Y., Tsai, C.-H.: HOM4PS-2.0: a software package for solving polynomial systems by the polyhedral Homotopy continuation method. Computing 83(2), 109–133 (2008)

Leykin, A., Rodriguez, J.I., Sottile, F.: Trace test. Arnold Math. J. 4(1), 113–125 (2018)

Li, T.-Y., Wang, X.: The BKK root count in \(\mathbb{C} ^n\). Math. Comput. 65, 1477–1484 (1996)

Liu, M., Tuzel, O.: Coupled generative adversarial networks. Adv. Neural Inf. Process. Syst. 29, 469–477 (2016)

Moore, R.E., Kearfott, R.B., Cloud, M.J.: Introduction to Interval Analysis, vol. 110. SIAM, Philadelphia (2009)

Nie, J.: Certifying convergence of Lasserre’s hierarchy via flat truncation. Math. Program. 142(1), 485–510 (2013)

Nie, J.: Optimality conditions and finite convergence of Lasserre’s hierarchy. Math. Program. 146(1–2), 97–121 (2014)

Nie, J., Ranestad, K., Tang, X.: Algebraic degree of generalized Nash equilibrium problems of polynomials. arXiv:2208.00357 (2022)

Nie, J., Tang, X.: Nash equilibrium problems of polynomials. arXiv:2006.09490 (2020)

Nie, J., Tang, X.: Convex generalized Nash equilibrium problems and polynomial optimization. Math. Program. (2021). https://doi.org/10.1007/s10107-021-01739-7

Nie, J., Tang, X., Xu, L.: The Gauss-Seidel method for generalized Nash equilibrium problems of polynomials. Comput. Optim. Appl. 78(2), 529–557 (2021)

Nie, J., Tang, X., Zhong, S.: Rational generalized Nash equilibrium problems. arXiv:2110.12120 (2021)

Pang, J.-S., Scutari, G., Facchinei, F., Wang, C.: Distributed power allocation with rate constraints in Gaussian parallel interference channels. IEEE Trans. Inf. Theory 54(8), 3471–3489 (2008)

Putinar, M.: Positive polynomials on compact semi-algebraic sets. Indiana Univ. Math. J. 42(3), 969–984 (1993)

Ratliff, L.J., Burden, S.A., Sastry, S.S.: Characterization and computation of local Nash equilibria in continuous games. In: 2013 51st Annual Allerton Conference on Communication, Control, and Computing (Allerton), pp. 917–924. IEEE (2013)

Shafarevich, I.R.: Basic Algebraic Geometry 1. Springer, Berlin (2013)

Sommese, A., Wampler, C.: The Numerical Solution of Systems of Polynomials Arising in Engineering and Science. World Scientific, Singapore (2005)

Sturm, J.: Using SeDuMi 1.02, a MATLAB toolbox for optimization over symmetric cones. Optim. Methods Softw. 11(1–4), 625–653 (1999)

Verschelde, J.: Algorithm 795: PHCpack: a general-purpose solver for polynomial systems by homotopy continuation. ACM Trans. Math. Softw. (TOMS) 25(2), 251–276 (1999)

Von Heusinger, A., Kanzow, C.: Optimization reformulations of the generalized Nash equilibrium problem using Nikaido–Isoda-type functions. Comput. Optim. Appl. 43(3), 353–377 (2009)

Von Heusinger, A., Kanzow, C.: Relaxation methods for generalized Nash equilibrium problems with inexact line search. J. Optim. Theory Appl. 143(1), 159–183 (2009)

Acknowledgements

The authors would like to thank Jiawang Nie for motivating this paper and for his helpful comments.

Funding

Xindong Tang is partially supported by the Start-up Fund P0038976/BD7L from The Hong Kong Polytechnic University.

Author information

Contributions

All authors contributed equally to this work. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose.

Additional information

The original version of this article was revised: corrections that had been processed incorrectly have been corrected.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lee, K., Tang, X. On the Polyhedral Homotopy Method for Solving Generalized Nash Equilibrium Problems of Polynomials. J Sci Comput 95, 13 (2023). https://doi.org/10.1007/s10915-023-02138-0

DOI: https://doi.org/10.1007/s10915-023-02138-0

Keywords

- Generalized Nash equilibrium problem

- Polyhedral homotopy

- Polynomial optimization

- Moment-SOS relaxation

- Numerical algebraic geometry