Abstract

In this work, we study the numerical solution of a time fractional Black-Scholes model under jump-diffusion involving a Caputo differential operator. To simplify the analysis, the model problem is converted into a time fractional partial integro-differential equation with a Fredholm integral operator. The L1 discretization on a graded mesh is used to approximate the temporal derivative, a second order central difference scheme replaces the spatial derivatives, and the composite trapezoidal rule is employed to discretize the integral part. Stability results for the proposed numerical scheme are derived together with a sharp error estimate. A rigorous analysis proves that the optimal rate of convergence is obtained for a suitable choice of the grading parameter. Further, we use the Adomian decomposition method to find an analytical approximate solution of the given model and compare the results with the numerical solutions. The main advantage of the fully discrete numerical method is that it not only resolves the initial singularity arising from the fractional operator, but also gives a higher rate of convergence than the uniform mesh. On the other hand, the Adomian decomposition method gives the analytical solution as well as a numerical approximation of the solution that does not involve any mesh discretization. Furthermore, the method does not require a large amount of computer memory and is free of rounding errors. Experiments are performed for both methods, and the results agree well with the theoretical findings. In addition, the proposed schemes are tested on several European option pricing jump-diffusion models, namely Merton's jump-diffusion and Kou's jump-diffusion, for both European call and put options.

1 Introduction

Let \((S,\tau )\in {\mathbb {R}}^+\times (0,T]\) and let \({\mathscr {W}}(S,\tau )\) denote the option price, which depends on the underlying asset price S and the current time \(\tau \). Then, the time fractional Black-Scholes (TFBS) model for the option price under jump-diffusion can be described as:

Here, \(0<\alpha <1\), r is the risk-free interest rate and \(\sigma \) denotes the volatility of the returns from the underlying asset, \(\lambda >0\) is the intensity of the independent Poisson process and T is the expiry time. k is the expected relative jump size which is assumed to adopt one of the two forms:

where \(\mu _J\) and \(\sigma _J\) are, respectively, the mean and the standard deviation of the jump in return. \({\textbf{E}}(.)\) denotes the expectation operator and \(\xi -1\) is the impulse function that moves the price from S to \(S\xi \), with \(\xi _1>1\) (so that \({\textbf{E}}(\xi )\) is finite in Kou's model), \(\xi _2>0\), \(0<p<1\) and \(q=1-p\). Further, \({\mathscr {G}}(\xi )\) represents the probability density function of the jump amplitude \(\xi \); it satisfies \({\mathscr {G}}(\xi )\ge 0\) for all \(\xi \) and \(\displaystyle {\int _0^\infty }{\mathscr {G}}(\xi )d\xi =1\), and is defined by

where \({\textbf{H}}(.)\) is the Heaviside function. \({\mathscr {Q}}(S)\) is the pay-off function, which for European options takes the form:

where K denotes the strike price of the option. In particular, when \(\alpha =1\), the model (1) reduces to the classical Black-Scholes jump-diffusion model (Kadalbajoo et al., 2015a; Moon et al., 2014). In this paper, our aim is to study the impact of the fractional operator on the option price through numerical simulations.
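For concreteness, the model ingredients above can be coded as follows. This is a sketch assuming the standard European pay-offs \({\mathscr {Q}}(S)=\max (S-K,0)\) (call) and \(\max (K-S,0)\) (put), and the standard expected relative jump sizes \(k=\exp (\mu _J+\sigma _J^2/2)-1\) (Merton) and \(k=p\xi _1/(\xi _1-1)+q\xi _2/(\xi _2+1)-1\) (Kou), the latter finite only for \(\xi _1>1\). All function names are hypothetical.

```python
import math


def call_payoff(S, K):
    """European call pay-off max(S - K, 0)."""
    return max(S - K, 0.0)


def put_payoff(S, K):
    """European put pay-off max(K - S, 0)."""
    return max(K - S, 0.0)


def merton_k(mu_J, sigma_J):
    """Expected relative jump size k = E(xi) - 1 for log-normal (Merton) jumps."""
    return math.exp(mu_J + 0.5 * sigma_J ** 2) - 1.0


def kou_k(p, xi1, xi2):
    """k = E(xi) - 1 for Kou's double-exponential jumps (needs xi1 > 1)."""
    if xi1 <= 1.0:
        raise ValueError("E(xi) is finite only for xi1 > 1")
    q = 1.0 - p
    return p * xi1 / (xi1 - 1.0) + q * xi2 / (xi2 + 1.0) - 1.0


# Jump parameters of Examples 5.1 (Merton) and 5.3 (Kou) in Section 5.
k_merton = merton_k(-0.90, 0.45)
k_kou = kou_k(0.3445, 3.0465, 3.0775)
```

The explicit check on \(\xi _1\) reflects that the upward jump density decays like \(\xi ^{-\xi _1-1}\), so \({\textbf{E}}(\xi )\) diverges for \(\xi _1\le 1\).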

Option pricing theory is a probabilistic approach to assigning a value to an options contract; its main aim is to calculate the probability that an option will be exercised, or be in the money, at expiration. An option gives the holder the right to buy or sell a specified quantity of an underlying asset at a fixed price (called the strike price or exercise price) at or before the expiration date of the option. There are two types of options: call options allow the holder to buy an asset at a prespecified price, whereas put options allow the holder to sell an asset at a prespecified price. Commonly used pricing models include the Black-Scholes (B-S) model, the binomial tree and Monte-Carlo simulation. Among them, the B-S model is one of the most highly regarded; its inputs are the strike price of the option, the stock price, the time to expiration, the risk-free interest rate and the volatility. Numerous analytical and numerical studies of the B-S model are available in the literature (see (Mehdizadeh et al., 2022; Rao, 2018; Valkov, 2014) among others). In this study, our main focus is on a fractional B-S model. Fractional differential models are an active research area, and many numerical methods have been developed for them, such as the finite difference method (FDM) (Gracia et al., 2018; Santra & Mohapatra, 2021a), the finite element method (Ford et al., 2011), the Adomian decomposition method (ADM) (Panda et al., 2021), the homotopy perturbation method (HPM) (Das et al., 2020), the modified Laplace decomposition method (Hamoud & Ghadle, 2018), and a radial basis function-generated finite difference method (Nikan et al., 2022).
Wyss (2000) introduced the TFBS model by replacing the first derivative in time by a fractional derivative and gave the complete solution of the model. Its accuracy and efficiency in predicting option prices have enabled options traders to increase their trading volume by a significant margin. The TFBS model has recently been solved numerically by several methods; a detailed study can be found in Golbabai et al. (2019); Golbabai & Nikan (2020); Nikan et al. (2021). Further, the Chebyshev collocation method was adopted in Mesgarani et al. (2020) to solve the TFBS equation numerically. Özdemir and Yavuz (Ozdemir & Yavuz, 2017) used the multivariate Padé approximation for the numerical solution of a TFBS model. An implicit finite difference scheme was constructed in Song & Wang (2013) for the numerical discretization of a TFBS model. A high accuracy numerical method was designed in Roul (2019) to solve a TFBS European option pricing model, together with a convergence analysis. Recently, a robust FDM was developed in Nuugulu et al. (2021) for a numerical study of the TFBS equation. Many semi-analytical approaches have also been studied for the TFBS equation. For instance, Fall et al. (2019) applied the HPM to obtain the analytical solution of a fractional B-S model involving the Caputo fractional derivative. The Laplace HPM was used in Kumar et al. (2012) to obtain an analytical approximate solution of a European option pricing model. Also, in Ampun & Sawangtong (2021), an approximate analytical solution was derived for a TFBS equation governing a European option pricing model. For further work on the TFBS model, the reader can refer to Akrami and Erjaee (2015); Korbel and Luchko (2016); Kumar et al. (2014); Thanompolkrang et al. (2021); Tomovski et al. (2020) and the references therein.

Empirically, stock prices exhibit sudden discontinuous movements (jumps), which the classical B-S model, with its continuous price paths and constant volatility, cannot capture. To address this, Merton (1976) and Kou (2002) introduced jump-diffusion models, which extend the diffusion model by adding a jump process. A jump-diffusion model consists of two parts: a diffusion part, driven by a standard Brownian motion, and a jump part, determined by an impulse function and a distribution function. The impulse function causes price changes in the underlying asset and is governed by the distribution function, so that the jump part models sudden and unexpected price jumps of the underlying asset. Very few articles in the literature deal with the B-S equation under a jump-diffusion model. The Crank-Nicolson Leap-Frog finite difference scheme was used in Kadalbajoo et al. (2015b) and a spline collocation method was introduced in Kadalbajoo et al. (2015a) for the numerical investigation of the B-S jump-diffusion equation. A finite element method was proposed in Liu et al. (2019) to solve the B-S equation under a jump-diffusion model. For more details, one may refer to the book (Cont & Tankov, 2004) and the articles (Kim et al., 2019; Moon et al., 2014). To the best of our knowledge, no study in the literature examines B-S jump-diffusion models with fractional order derivatives.

In this work, we generalize the usual B-S jump-diffusion model by replacing the first order derivative in time by a fractional one of order \(\alpha \in (0,1)\). We present an efficient FDM to discretize the TFBS equation under a jump-diffusion model involving a Caputo fractional derivative. To simplify the analysis, the model problem is converted into a time fractional partial integro-differential equation (PIDE) with a Fredholm integral operator. To construct the scheme, the L1 discretization on a graded mesh is used to approximate the temporal derivative, the second order central difference scheme is used for the spatial derivatives, and the composite trapezoidal rule is used to discretize the Fredholm operator. The convergence analysis is carried out, and it is shown that the optimal rate of convergence is attained for a suitable choice of the grading parameter. In addition, we use the ADM to find an analytical approximate solution of the given model. Numerical experiments are performed for both FDM and ADM, and the results are in good agreement with the theoretical findings. Further, the proposed schemes are tested on several European option pricing models under jump-diffusion, namely Merton's and Kou's jump-diffusion models, for both European call and European put options.

We now introduce some basic definitions and preliminaries about fractional integrals and fractional derivatives, together with some well-known properties that will be used later in our analysis (more details about fractional calculus can be found in Diethelm (2010); Podlubny (1999)).

Definition 1

Let \(\phi (x,t)\) be any continuous function defined on \({\mathfrak {D}}\), where \({\mathfrak {D}}\) is some closed set in \({\mathbb {R}}^2\). The Riemann-Liouville fractional integral of order \(\beta >0\) of \(\phi (x,t)\) is denoted by \({\mathscr {J}}^\beta \phi \) and is defined by:
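in the standard form (Diethelm, 2010; Podlubny, 1999), which we assume is the one intended here, with the lower terminal at \(t=0\):

```latex
{\mathscr J}^\beta \phi(x,t)=\frac{1}{\Gamma(\beta)}\int_0^t (t-s)^{\beta-1}\,\phi(x,s)\,ds,\qquad \beta>0 .
```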

Definition 2

The Caputo fractional derivative of the function \(\phi (x,t)\) at the point \((x,t)\in {\mathfrak {D}}\) is defined as:
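in the standard form (Diethelm, 2010; Podlubny, 1999), assumed here, for \(m\in {\mathbb {N}}\) with \(m-1<\beta <m\):

```latex
\partial_t^\beta \phi(x,t)=\frac{1}{\Gamma(m-\beta)}\int_0^t (t-s)^{m-\beta-1}\,\frac{\partial^m \phi(x,s)}{\partial s^m}\,ds,
\qquad m-1<\beta<m .
```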

Here, \(\beta \) is the order of the derivative, a positive real number. If \(\phi \) is constant, then \(\partial _t^\beta \phi =0\). For any \(\nu \in {\mathbb {R}}\), \(m\in {\mathbb {N}}\), we have the following properties:

1. \(\partial _t^\beta t^\nu =\begin{cases} 0, & \text{if } m-1<\beta <m,\ \nu \in \{0,1,\ldots ,m-1\},\\ \dfrac{\Gamma (\nu +1)}{\Gamma (\nu -\beta +1)}\,t^{\nu -\beta }, & \text{if } m-1<\beta <m,\ \nu >m-1.\end{cases}\)

2. \({\mathscr {J}}^\beta t^\nu =\dfrac{\Gamma (\nu +1)}{\Gamma (\nu +\beta +1)}\,t^{\nu +\beta }\), if \(\beta >0,\ \nu \ge 0\).

3. \(\partial _t^\beta {\mathscr {J}}^\beta \phi (x,t)=\phi (x,t)\), but \({\mathscr {J}}^\beta \partial _t^\beta \phi (x,t)=\phi (x,t) -\displaystyle {\sum _{k=0}^{m-1}}\dfrac{\partial ^k}{\partial t^k}\phi (x,0)\dfrac{t^k}{k!}\), \(\;m-1<\beta <m\).

4. \(\partial _t^\beta \{c_1 \phi _1(x,t)\pm c_2 \phi _2(x,t)\}=c_1\partial _t^\beta \phi _1(x,t)\pm c_2\partial _t^\beta \phi _2(x,t)\) and \({\mathscr {J}}^\beta \{c_1 \phi _1(x,t)\pm c_2 \phi _2(x,t)\}=c_1{\mathscr {J}}^\beta \phi _1(x,t)\pm c_2{\mathscr {J}}^\beta \phi _2(x,t)\), where \(c_1,c_2\) are constants.

If \(\{{\mathcal {V}}_m^n\}_{m=0,n=0}^{M,N}\) is the mesh function corresponding to a continuous function \({\mathcal {V}}:{\mathfrak {B}}\subset {\mathbb {R}}^2\rightarrow {\mathbb {R}}\), then one can define the discrete maximum norm \(\Vert {\mathcal {V}}^n\Vert =\displaystyle \max _{0\le m\le M}|{\mathcal {V}}_m^n|\) at each time level n.
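Property 2 can be checked numerically. The Python sketch below (the function name and the quadrature are our own choices, not part of the analysis) assumes the standard Riemann-Liouville definition with lower terminal 0, removes the weak kernel singularity with the substitution \(w=(t-s)^\beta /\beta \), applies the composite trapezoidal rule, and compares the result with \(\Gamma (\nu +1)t^{\nu +\beta }/\Gamma (\nu +\beta +1)\).

```python
import math
import numpy as np


def rl_integral(phi, t, beta, n=20000):
    """Riemann-Liouville integral J^beta phi at time t (beta > 0).

    With w = (t - s)**beta / beta, dw = -(t - s)**(beta - 1) ds, so
    J^beta phi(t) = (1/Gamma(beta)) * int_0^{t**beta/beta} phi(t - (beta*w)**(1/beta)) dw,
    which has no singular kernel and is approximated by the trapezoidal rule.
    """
    w = np.linspace(0.0, t ** beta / beta, n + 1)
    s = np.clip(t - (beta * w) ** (1.0 / beta), 0.0, None)  # guard round-off below 0
    vals = phi(s)
    h = w[1] - w[0]
    return h * (vals.sum() - 0.5 * (vals[0] + vals[-1])) / math.gamma(beta)


beta, nu, t = 0.4, 1.5, 0.7
approx = rl_integral(lambda s: s ** nu, t, beta)
exact = math.gamma(nu + 1) / math.gamma(nu + beta + 1) * t ** (nu + beta)
```

For a constant \(\phi \) the trapezoidal rule is exact after the substitution, which gives a second, sharper check of the same formula with \(\nu =0\).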

2 The Continuous Problem

Set \(S=Ke^x,\tau =T-t\) and \(\xi =e^{y-x}\). Using this transformation, (1) is converted into

To obtain a good numerical approximation of the solution of the above model, it is necessary to work on a bounded interval. Therefore, we truncate the spatial domain: instead of \({\mathbb {R}}\times (0,T]\), we consider the bounded domain \(\Omega :=[-L,L]\times (0,T]\). Putting \({\mathcal {U}}(x,t)={\mathscr {W}}(Ke^x,T-t)\), (2) yields

where \(A=\sigma ^2/2,B=r-A-\lambda k\) and \(D=r+\lambda \). The functions \({\tilde{\eta }},{\tilde{\zeta }}\) and \(\tilde{{\mathscr {Q}}}\) correspond to the functions \(\eta ,\zeta \) and \({\mathscr {Q}}\), respectively, in the transformed domain and are defined by \({\tilde{\eta }}(t)=\eta (T-t),~ {\tilde{\zeta }}(t)=\zeta (T-t),~\tilde{{\mathscr {Q}}}(x) ={\mathscr {Q}}(Ke^x)\). The source term f(x, t) is included only to validate the scheme against a known exact solution in the numerical experiments section. Further, \(g(y)={\mathscr {G}}(e^y)e^y\), and under the above transformation g(y) can be expressed explicitly as:
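assuming the standard log-normal jump density of Merton (1976) and the double-exponential density of Kou (2002) for \({\mathscr {G}}\), a direct computation of \(g(y)={\mathscr {G}}(e^y)e^y\) gives (\({\textbf{H}}\) is the Heaviside function and \(q=1-p\)):

```latex
\begin{aligned}
\text{Merton:}\quad & {\mathscr G}(\xi)=\frac{1}{\sqrt{2\pi}\,\sigma_J\,\xi}\exp\Big(-\frac{(\ln\xi-\mu_J)^2}{2\sigma_J^2}\Big)
&&\Longrightarrow\quad g(y)=\frac{1}{\sqrt{2\pi}\,\sigma_J}\exp\Big(-\frac{(y-\mu_J)^2}{2\sigma_J^2}\Big),\\
\text{Kou:}\quad & {\mathscr G}(\xi)=p\,\xi_1\,\xi^{-\xi_1-1}{\textbf H}(\xi-1)+q\,\xi_2\,\xi^{\xi_2-1}{\textbf H}(1-\xi)
&&\Longrightarrow\quad g(y)=p\,\xi_1 e^{-\xi_1 y}{\textbf H}(y)+q\,\xi_2 e^{\xi_2 y}{\textbf H}(-y).
\end{aligned}
```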

Under certain assumptions on \(\sigma ,r,k,\lambda \) and on the probability density function g, together with the following bounds on the derivatives of \({\mathcal {U}}\)

the existence and uniqueness of the solution \({\mathcal {U}}(x,t)\in {\mathcal {C}}_x^\infty ({\bar{\Omega }},{\mathbb {R}})\) of (3) for \((x,t)\in \Omega \) can be guaranteed. Here, \(C>0\) denotes a generic constant which can take different values at different places. For more information, the reader may refer to the book (Cont & Tankov, 2004) and the articles (Gracia et al., 2018; Kadalbajoo et al., 2015a; Santra & Mohapatra, 2021b). The space \({\mathcal {C}}_x^\infty ({\bar{\Omega }},{\mathbb {R}})\) is the subspace of \({\mathcal {C}}({\bar{\Omega }},{\mathbb {R}})\) consisting of the functions that are infinitely differentiable in the x variable, equipped with the norm \(\Vert {\mathcal {U}}\Vert _{{\mathcal {C}}_x^\infty ({\bar{\Omega }},{\mathbb {R}})}=\displaystyle {\sup _{k\ge 0} \sup _{(x,t)\in {\bar{\Omega }}}}\Big |\dfrac{\partial ^k{\mathcal {U}}(x,t)}{\partial x^k}\Big |,\)

where \({\mathcal {C}}({\bar{\Omega }},{\mathbb {R}})\) denotes the set of all real-valued continuous functions defined on \({\bar{\Omega }}\). The convergence analysis for the ADM will be done based on the norm defined above.

2.1 Analytical Approximate Solution

In this section, we apply the ADM to obtain the analytical as well as the numerical approximation of (3). According to the ADM, the solution of (3) can be expressed as an infinite series:

If the model problem involves a nonlinear term, it can be approximated by Adomian polynomials (see (Panda et al., 2021)). Since (3) has no nonlinear term, Adomian polynomials are not needed. Applying \({\mathscr {J}}^\alpha \) to both sides of (3), we get

Substituting (5) into (6) and comparing both sides, we arrive at the following recursive algorithm:

The exact solution is given by \({\mathcal {U}}(x,t)= \displaystyle { \lim _{{\mathscr {N}}\rightarrow \infty }\sum _{j=0}^{{\mathscr {N}}}}{\mathcal {U}}_j(x,t)\). An analytical approximate solution is obtained by truncating the series after a finite number of terms (say \({\mathscr {N}}\) terms); the approximate solution is then \({\mathscr {U}}_{{\mathscr {N}}}=\displaystyle {\sum _{j=0}^{{\mathscr {N}}-1}}{\mathcal {U}}_j(x,t)\).
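Since the spatial operator in (3) does not depend on \(t\), property 2 makes the time structure of the recursion explicit when \(f\equiv 0\). If, as we assume, the recursion (7) reads \({\mathcal {U}}_0=\tilde{{\mathscr {Q}}}\) and \({\mathcal {U}}_j={\mathscr {J}}^\alpha [{\mathcal {L}}{\mathcal {U}}_{j-1}]\) for a time-independent operator \({\mathcal {L}}\) (our notation), then \({\mathcal {U}}_j(x,t)=\dfrac{t^{j\alpha }}{\Gamma (j\alpha +1)}({\mathcal {L}}^j\tilde{{\mathscr {Q}}})(x)\). The Python sketch below checks this on a hypothetical scalar toy problem with \({\mathcal {L}}u=-Du\) (diffusion, drift and jump terms dropped), for which the truncated ADM series is a partial sum of the Mittag-Leffler function \(E_\alpha (-Dt^\alpha )\); the function names are illustrative only.

```python
import math


def rl_power_coeff(nu, alpha):
    """c such that J^alpha t^nu = c * t^(nu + alpha)  (property 2, nu >= 0)."""
    return math.gamma(nu + 1.0) / math.gamma(nu + alpha + 1.0)


def adm_scalar(D, alpha, t, n_terms):
    """Truncated ADM series U_0 + ... + U_{n_terms-1} for the toy problem
    d^alpha u/dt^alpha = -D u, u(0) = 1, built with U_j = J^alpha[-D U_{j-1}]."""
    total, coeff, power = 0.0, 1.0, 0.0   # U_0 = 1 = coeff * t**power
    for _ in range(n_terms):
        total += coeff * t ** power
        coeff *= -D * rl_power_coeff(power, alpha)  # apply J^alpha[-D .] to the term
        power += alpha
    return total


# Partial sums for alpha = 0.6 approach E_alpha(-D t^alpha) as terms are added.
partial_sums = [adm_scalar(0.5, 0.6, 1.0, n) for n in (2, 4, 8, 16)]
```

For \(\alpha =1\) the coefficients reduce to \((-D)^j/j!\), so the series is the Taylor series of \(e^{-Dt}\), which is a convenient sanity check.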

Theorem 1

Let \(f(x,t)\in {\mathcal {C}}_x^\infty ({\bar{\Omega }},{\mathbb {R}})\) and \(\tilde{{\mathscr {Q}}}(x),g(x)\in {{\mathcal {C}}_x^\infty ([-L,L],{\mathbb {R}})}\). Then the series solution for (3) represented by (5) converges uniformly on \({\bar{\Omega }}\).

Proof

The proof follows the approach used in Das et al. (2020). The additional assumption we need is that \(\vartheta :=\dfrac{(|A|+|B|+|D|+2\lambda L{\mathbb {G}})T^\alpha }{\Gamma (\alpha +1)}<1\), where \(\Vert g\Vert _{{\mathcal {C}}_x^\infty ({\bar{\Omega }},{\mathbb {R}})}\le {\mathbb {G}}\). Since \(f(x,t)\in {\mathcal {C}}_x^\infty ({\bar{\Omega }},{\mathbb {R}})\) and \(\tilde{{\mathscr {Q}}}(x)\in {\mathcal {C}}_x^\infty ([-L,L],{\mathbb {R}})\), we have \({\mathcal {U}}_0\in {\mathcal {C}}_x^\infty ({\bar{\Omega }},{\mathbb {R}})\). Hence, there exists an \({\mathscr {M}}>0\) such that \(|{\mathcal {U}}_0|\le \displaystyle {\sup _{k\ge 0} \sup _{(x,t)\in {\bar{\Omega }}}}\Big |\dfrac{\partial ^k{\mathcal {U}}_0(x,t)}{\partial x^k}\Big |=\Vert {\mathcal {U}}_0\Vert _{C_x^\infty ({\bar{\Omega }},{\mathbb {R}})}\le {\mathscr {M}}\). The recursion (7) then shows that \({\mathcal {U}}_j\in {\mathcal {C}}_x^\infty ({\bar{\Omega }},{\mathbb {R}})\), for \(j=1,2,\ldots \). We now use mathematical induction to prove that \(|{\mathcal {U}}_j|\le {\mathscr {M}}\vartheta ^j~\forall ~j=1,2,3, \ldots \). For \(j=1\), we have

Suppose that the inequality holds true for \(j=p-1,~p\in {\mathbb {N}}\) i.e., \(|{\mathcal {U}}_{p-1}|\le \displaystyle {\sup _{k\ge 0} \sup _{(x,t)\in {\bar{\Omega }}}}\Big |\dfrac{\partial ^k{\mathcal {U}}_{p-1}(x,t)}{\partial x^k}\Big |=\Vert {\mathcal {U}}_{p-1}\Vert _{C_x^\infty ({\bar{\Omega }},{\mathbb {R}})}\le {\mathscr {M}}\vartheta ^{p-1}\). Then for \(j=p\), we get
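Assuming that (3) has the form \(\partial _t^\alpha {\mathcal {U}}=A{\mathcal {U}}_{xx}+B{\mathcal {U}}_x-D{\mathcal {U}}+\lambda \int _{-L}^{L}{\mathcal {U}}(y,t)g(y-x)\,dy+f\) and that (7) gives \({\mathcal {U}}_p={\mathscr {J}}^\alpha \big [A\partial _x^2{\mathcal {U}}_{p-1}+B\partial _x{\mathcal {U}}_{p-1}-D{\mathcal {U}}_{p-1}+\lambda \int _{-L}^{L}{\mathcal {U}}_{p-1}(y,t)g(y-x)\,dy\big ]\), the estimate can be written out for every \(k\ge 0\), using the induction hypothesis, \(\Vert g\Vert \le {\mathbb {G}}\) and \({\mathscr {J}}^\alpha 1=t^\alpha /\Gamma (\alpha +1)\):

```latex
\begin{aligned}
\Big|\frac{\partial^k{\mathcal U}_p}{\partial x^k}\Big|
&\le {\mathscr J}^\alpha\Big[|A|\Big|\frac{\partial^{k+2}{\mathcal U}_{p-1}}{\partial x^{k+2}}\Big|
+|B|\Big|\frac{\partial^{k+1}{\mathcal U}_{p-1}}{\partial x^{k+1}}\Big|
+|D|\Big|\frac{\partial^{k}{\mathcal U}_{p-1}}{\partial x^{k}}\Big|
+\lambda\int_{-L}^{L}|{\mathcal U}_{p-1}(y,t)|\,\Big|\frac{\partial^k g(y-x)}{\partial x^k}\Big|\,dy\Big]\\
&\le \big(|A|+|B|+|D|+2\lambda L{\mathbb G}\big)\,{\mathscr M}\vartheta^{p-1}\,\frac{t^\alpha}{\Gamma(\alpha+1)}
\;\le\; {\mathscr M}\vartheta^{p}.
\end{aligned}
```

Taking the supremum over \(k\ge 0\) and \((x,t)\in {\bar{\Omega }}\) gives \(\Vert {\mathcal {U}}_p\Vert _{C_x^\infty ({\bar{\Omega }},{\mathbb {R}})}\le {\mathscr {M}}\vartheta ^p\); the same computation with \(p=1\) covers the base case.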

Therefore, we have \(\Bigg |\displaystyle {\sum _{j=0}^\infty }{\mathcal {U}}_j(x,t)\Bigg |\le \displaystyle {\sum _{j=0}^\infty }|{\mathcal {U}}_j|\le \displaystyle {\sum _{j=0}^\infty }{\mathscr {M}}\vartheta ^j\). Notice that since \(\vartheta \in (0,1)\), the series \(\displaystyle {\sum _{j=0}^\infty }{\mathscr {M}}\vartheta ^j\) is a convergent geometric series. Hence, by the Weierstrass M-test, one can conclude that the series \(\displaystyle {\sum _{j=0}^\infty }{\mathcal {U}}_j(x,t)\) converges uniformly on \({\bar{\Omega }}\). \(\square \)

3 Finite Difference Approximation

For the numerical discretization, a uniform mesh is used in the spatial direction, whereas a graded mesh is used in the temporal direction.

3.1 Time Discretization

Let N be a fixed positive integer and set \(t_n=T\Big (\dfrac{n}{N}\Big )^\varrho \) for \(n=0,1,\cdots ,N\), where \(\varrho \ge 1\) is the grading parameter; for \(\varrho =1\) the mesh is uniform. Set \(\Delta t_n=t_n-t_{n-1},\;n=1,2,\cdots ,N\). At each \(t=t_n\), the Caputo fractional derivative \(\partial _t^\alpha {\mathcal {U}}\) is defined by

The L1 discretization is used to approximate \(\partial _t^\alpha {\mathcal {U}}(x,t_n)\) as follows:

where for each \(n=1,2,\cdots ,N\), \(d_{n,l}^{(\alpha )}\) is defined by

In particular, \(d_{n,1}^{(\alpha )}=\Delta t_n^{-\alpha }\). The mean value theorem gives \((1-\alpha )(t_n-t_{n-l})^{-\alpha }\le d_{n,l}^{(\alpha )}\le (1-\alpha )(t_n-t_{n-l+1})^{-\alpha }\) and hence, we have

For further study about L1 discretization, the reader may refer to Gracia et al. (2018); Huang et al. (2020); Santra and Mohapatra (2020).
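The L1 formula on the graded mesh can be sketched in Python as follows. We assume the standard form \(\partial _N^\alpha {\mathcal {U}}(x,t_n)=\frac{1}{\Gamma (2-\alpha )}\sum _{l=1}^{n}d_{n,l}^{(\alpha )}\big ({\mathcal {U}}(x,t_{n-l+1})-{\mathcal {U}}(x,t_{n-l})\big )\) with \(d_{n,l}^{(\alpha )}=\big [(t_n-t_{n-l})^{1-\alpha }-(t_n-t_{n-l+1})^{1-\alpha }\big ]/\Delta t_{n-l+1}\), which is consistent with \(d_{n,1}^{(\alpha )}=\Delta t_n^{-\alpha }\) and the mean value bounds above. Because L1 is built on piecewise linear interpolation, it reproduces the Caputo derivative \(t^{1-\alpha }/\Gamma (2-\alpha )\) of \(u(t)=t\) exactly, which gives a simple check. The helper names are ours.

```python
import math
import numpy as np


def graded_mesh(T, N, rho):
    """t_n = T*(n/N)**rho for n = 0..N; rho = 1 gives the uniform mesh."""
    return T * (np.arange(N + 1) / N) ** rho


def l1_weights(t, n, alpha):
    """d_{n,l}^{(alpha)} for l = 1..n (index 0 unused), assumed L1 form."""
    d = np.zeros(n + 1)
    for l in range(1, n + 1):
        d[l] = ((t[n] - t[n - l]) ** (1 - alpha)
                - (t[n] - t[n - l + 1]) ** (1 - alpha)) / (t[n - l + 1] - t[n - l])
    return d


def l1_caputo(u, t, n, alpha):
    """L1 approximation of the Caputo derivative at t_n from nodal values u[0..n]."""
    d = l1_weights(t, n, alpha)
    incr = np.array([u[n - l + 1] - u[n - l] for l in range(1, n + 1)])
    return float(np.dot(d[1:], incr)) / math.gamma(2 - alpha)


alpha, T, N = 0.4, 1.0, 32
t = graded_mesh(T, N, (2 - alpha) / alpha)   # rho = (2 - alpha)/alpha, cf. Remark 4.6
approx = l1_caputo(t, t, N, alpha)            # u(t) = t sampled on the mesh
exact = T ** (1 - alpha) / math.gamma(2 - alpha)
```

On this mesh the weights \(d_{n,l}^{(\alpha )}\) are also strictly decreasing in \(l\), since each is an average of the decreasing function \((1-\alpha )s^{-\alpha }\) over intervals that move away from \(s=0\).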

3.2 Space Discretization

Let \(M\in {\mathbb {N}}\) be fixed and set \(x_m=-L+m\Delta x\) for \(m=0,1,\cdots ,M\), where \(x_0=-L, x_M=L\) and the mesh parameter is \(\Delta x=2\,L/M\). At each \(x=x_m\), the spatial derivatives \(\dfrac{\partial {\mathcal {U}}}{\partial x}\) and \(\dfrac{\partial ^2{\mathcal {U}}}{\partial x^2}\) are discretized as:

Finally, the nonuniform mesh \(\{(x_m,t_n):\;m=0,1,\cdots ,M;\;n=0,1,\cdots ,N\}\) is constructed, and at each mesh point \((x_m,t_n)\) we have the following approximations:

Therefore, (3) becomes

where \({\mathscr {R}}_{m,n}^{(1)}=(\partial _N^\alpha -\partial _t^\alpha ) {\mathcal {U}}(x_m,t_n)\), \({\mathscr {R}}_{m,n}^{(2)}=A\Big (\dfrac{\partial ^2}{\partial x^2}-\delta _x^2\Big ){\mathcal {U}}(x_m,t_n)\) and \({\mathscr {R}}_{m,n}^{(3)}=B\Big (\dfrac{\partial }{\partial x}-{\mathscr {D}}_x^0\Big ){\mathcal {U}}(x_m,t_n)\). It remains to approximate the Fredholm integral part, which is discretized by the composite trapezoidal rule. Inside the integral, the solution at time level \(n\) is approximated by the solution at level \(n-1\); this produces an error of order \(O(N^{-\varrho \alpha })\) for a suitable choice of the grading parameter \(\varrho \) such that \(\displaystyle {\max _{n}}~\Delta t_n=O(t_1)\). The error bound is obtained as follows:

Here, in the first inequality, we have used the bounds given in (4). The approximation to the integral operator is then given by:

The Taylor series expansion gives the bounds for the remainder term \({\mathscr {R}}_{m,n}^{(4)}\) as:

Then, (10) reduces to

where \(F(x_m,t_n)=f(x_m,t_n)+\dfrac{\lambda \Delta x}{2}\displaystyle {\sum _{l=0}^{M-1}}\Big [{\mathcal {U}}(x_l,t_{n-1}) g(x_l-x_m)+{\mathcal {U}}(x_{l+1},t_{n-1})g(x_{l+1}-x_m)\Big ]\) and the remainder term \({\mathscr {R}}_{m,n}\) is given by

Neglecting \({\mathscr {R}}_{m,n}\), (12) reduces to the following discrete problem:

Using (9), the following implicit scheme is obtained.

For each \(m=1,2,\cdots ,M-1\), \({\mathscr {F}}_m^0=\dfrac{d_{n,n}^{(\alpha )}}{\Gamma (2-\alpha )}\,{\mathcal {U}}_m^0+F(x_m,t_1)\), and for each \(n=2,3,\ldots ,N\), we have

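\(m=1,2,\ldots ,M-1\). The coefficient matrix associated with the discrete operator is tridiagonal. Notice that \(A\ge 0,\;D\ge 0\). For stability, the matrix must have the correct sign pattern, i.e., nonpositive off-diagonal entries. Since the off-diagonal entries in row m are \(-\big (A/\Delta x^2\mp B/(2\Delta x)\big )\) and \(\Delta x=2L/M\), this holds if and only if \(\Delta x\le 2A/|B|\), which is ensured by the nonrestrictive assumption that M satisfies \(\dfrac{L|B|}{|A|}\le M\).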
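Putting the pieces together, the following Python sketch implements one reading of the fully discrete scheme: L1 on the graded mesh, central differences in space, and the trapezoidal rule for the Fredholm term lagged to level \(n-1\). It assumes that (3) has the form \(\partial _t^\alpha {\mathcal {U}}=A{\mathcal {U}}_{xx}+B{\mathcal {U}}_x-D{\mathcal {U}}+\lambda \int _{-L}^{L}{\mathcal {U}}(y,t)g(y-x)\,dy+f\) with Dirichlet data at \(x=\pm L\), which is consistent with the definition of F, the constant \(\vartheta \) and the sign condition above; the authors' exact formulation may differ. The manufactured solution \({\mathcal {U}}=(1+t^\alpha )\cos x\), the Merton-type parameters and all function names are hypothetical test choices, and the parameters satisfy \(M\ge L|B|/A\) for the mesh sizes used. A dense solver is used for brevity; in practice a tridiagonal (Thomas) solver would be the natural choice.

```python
import math
import numpy as np


def solve_scheme(alpha, rho, M, N, L, T, A, B, D, lam, g, f, u0, bc_left, bc_right):
    """L1 / central-difference / trapezoidal scheme for the ASSUMED form of (3):
    d^alpha U/dt^alpha = A U_xx + B U_x - D U + lam*int_{-L}^{L} U(y,t) g(y-x) dy + f,
    with the jump integral lagged to time level n-1 (treated explicitly)."""
    x = np.linspace(-L, L, M + 1)
    dx = x[1] - x[0]
    t = T * (np.arange(N + 1) / N) ** rho              # graded temporal mesh
    G = g(x[None, :] - x[:, None])                     # G[m, l] = g(x_l - x_m)
    w = np.full(M + 1, dx)
    w[0] = w[-1] = dx / 2                               # trapezoidal weights
    c = 1.0 / math.gamma(2 - alpha)
    lo = -(A / dx**2 - B / (2 * dx))                    # coefficient of U_{m-1}
    up = -(A / dx**2 + B / (2 * dx))                    # coefficient of U_{m+1}
    U = np.empty((N + 1, M + 1))
    U[0] = u0(x)
    for n in range(1, N + 1):
        d = np.zeros(n + 1)                             # L1 weights d_{n,l}, l = 1..n
        for l in range(1, n + 1):
            d[l] = ((t[n] - t[n - l]) ** (1 - alpha)
                    - (t[n] - t[n - l + 1]) ** (1 - alpha)) / (t[n - l + 1] - t[n - l])
        hist = d[n] * U[0]                              # known L1 history terms
        for l in range(1, n):
            hist = hist + (d[l] - d[l + 1]) * U[n - l]
        rhs = f(x, t[n]) + lam * (G @ (w * U[n - 1])) + c * hist
        K = np.zeros((M + 1, M + 1))                    # tridiagonal, stored densely
        for m in range(1, M):
            K[m, m - 1], K[m, m], K[m, m + 1] = lo, c * d[1] + 2 * A / dx**2 + D, up
        K[0, 0] = K[M, M] = 1.0                         # Dirichlet boundary rows
        rhs[0], rhs[M] = bc_left(t[n]), bc_right(t[n])
        U[n] = np.linalg.solve(K, rhs)
    return x, t, U


# Hypothetical manufactured test: U(x, t) = (1 + t^alpha) cos(x), Merton-type g.
alpha, L, T = 0.5, 2.0, 1.0
sigma, r, lam, mu_J, sigma_J = 0.2, 0.05, 0.1, -0.1, 0.3
k = math.exp(mu_J + sigma_J**2 / 2) - 1
A, D = sigma**2 / 2, r + lam
B = r - A - lam * k


def g(y):
    return np.exp(-(y - mu_J)**2 / (2 * sigma_J**2)) / (math.sqrt(2 * math.pi) * sigma_J)


y_fine = np.linspace(-L, L, 4001)


def jump_integral_of_cos(x):
    """int_{-L}^{L} cos(y) g(y - x) dy by a fine trapezoidal rule."""
    vals = np.cos(y_fine)[None, :] * g(y_fine[None, :] - x[:, None])
    h = y_fine[1] - y_fine[0]
    return h * (vals.sum(axis=1) - 0.5 * (vals[:, 0] + vals[:, -1]))


def f(x, t):
    """Source term making (1 + t^alpha) cos(x) an exact solution."""
    a = 1 + t**alpha
    return ((math.gamma(alpha + 1) + (A + D) * a) * np.cos(x)
            + B * a * np.sin(x) - lam * a * jump_integral_of_cos(x))


def error_for(M, N):
    bc = lambda t: (1 + t**alpha) * math.cos(L)
    x, t, U = solve_scheme(alpha, (2 - alpha) / alpha, M, N, L, T, A, B, D, lam,
                           g, f, np.cos, bc, bc)
    exact = (1 + t[:, None]**alpha) * np.cos(x)[None, :]
    return float(np.max(np.abs(U - exact)))
```

Calling error_for on successively refined meshes should show the maximum nodal error decreasing; because the jump term is lagged in time, the observed temporal rate in this sketch is not expected to exceed first order.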

4 Error Analysis of the Finite Difference Method

This section derives complete error bounds for the numerical solution of (1) computed by the proposed FDM, i.e., by the discrete scheme (14). The convergence results are obtained by means of stability multipliers.

4.1 Stability of the Scheme

The discrete scheme (15) can be rewritten as:

Lemma 4.1

The solution of the discrete problem (14) satisfies the following inequality:

Proof

The argument follows Stynes et al. (2017). For any fixed \(n\in \{1,2,\ldots ,N\}\), choose \(m^*\) in such a way that \(|{\mathcal {U}}_{m^*}^n|=\Vert {\mathcal {U}}^n\Vert \). Therefore at the mesh point \((x_{m^*},t_n)\), we have

Notice that \(D\ge 0\) and the choice of \(m^*\) yields

which is equivalent to

Now, using (8) and dividing both sides by \(d_{n,1}^{(\alpha )}\), we obtain

which is the required result. \(\square \)

Define the stability multipliers \(\Lambda _{n,j}\), for \(n=1,2,\ldots ,N\); \(j=1,2,\ldots ,n-1\) by

From (8), it can be observed that \(\Lambda _{n,j}\ge 0\;\forall \; n,j\). The following lemma reveals a more general stability result in terms of stability multipliers.

Lemma 4.2

For each \(n=1,2,\ldots ,N\), one has

Proof

The proof of this lemma is available in Stynes et al. (2017). \(\square \)

4.2 Convergence of the Scheme

To show the convergence of the scheme, first we calculate the truncation error bounds for \(\partial _N^\alpha ,\delta _x^2,{\mathscr {D}}_x^0\) for each \((x_m,t_n)\). Then using the stability results, the complete error bounds for the numerical solution obtained by the proposed FDM are established.

Lemma 4.3

Let the solution of (1) satisfy (4). Then the remainder term \({\mathscr {R}}_{m,n}^{(1)}\) satisfies the following inequality:

Proof

It can be obtained in a similar way as was done in Huang et al. (2020). \(\square \)

Lemma 4.4

For the discrete operators \(\delta _x^2\) and \({\mathscr {D}}_x^0\), for all \(m=1,2,\ldots ,M-1\), one has

Proof

Using Taylor series expansion, one can easily prove the above inequalities. \(\square \)
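A quick numerical check of Lemma 4.4 (a sketch; the test function \(\sin x\) and the helper names are our own choices) assumes the standard stencils \(\delta _x^2{\mathcal {U}}_m=({\mathcal {U}}_{m+1}-2{\mathcal {U}}_m+{\mathcal {U}}_{m-1})/\Delta x^2\) and \({\mathscr {D}}_x^0{\mathcal {U}}_m=({\mathcal {U}}_{m+1}-{\mathcal {U}}_{m-1})/(2\Delta x)\). Halving \(\Delta x\) should reduce both maximum truncation errors by a factor of about four.

```python
import numpy as np


def central_diffs(u, dx):
    """delta_x^2 u and D_x^0 u at the interior nodes (3-point stencils)."""
    d2 = (u[2:] - 2 * u[1:-1] + u[:-2]) / dx**2
    d1 = (u[2:] - u[:-2]) / (2 * dx)
    return d2, d1


def truncation_errors(M, L=2.0):
    """Max interior errors of both stencils for u = sin(x) on [-L, L] with M cells."""
    x = np.linspace(-L, L, M + 1)
    dx = x[1] - x[0]
    d2, d1 = central_diffs(np.sin(x), dx)
    e2 = np.max(np.abs(d2 + np.sin(x[1:-1])))   # exact u'' = -sin(x)
    e1 = np.max(np.abs(d1 - np.cos(x[1:-1])))   # exact u'  =  cos(x)
    return e2, e1
```

The leading error terms are \(\Delta x^2{\mathcal {U}}^{(4)}/12\) and \(\Delta x^2{\mathcal {U}}^{(3)}/6\), which is what the Taylor-series argument in the proof bounds.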

Let \(\Vert {\mathscr {E}}_m^n\Vert =\Vert {\mathcal {U}}(x_m,t_n)-{\mathcal {U}}_m^n\Vert \) denote the maximum error at each \((x_m,t_n)\). The error equation is obtained by subtracting (14) from (12):

Theorem 4.5

If \(\{{\mathcal {U}}(x_m,t_n)\}_{m,n=0}^{M,N}\) and \(\{{\mathcal {U}}_m^n\}_{m,n=0}^{M,N}\) denote the exact solution and the computed solution of (3) by using the scheme (14), then for each \((x_m,t_n)\in [-L,L]\times (0,T]\), one has the following error bounds:

Proof

According to Lemma 4.2, the solution of the discrete problem (16) satisfies

Notice that \({\mathscr {R}}_{m,j}={\mathscr {R}}_{m,j}^{(1)}+{\mathscr {R}}_{m,j}^{(2)} +{\mathscr {R}}_{m,j}^{(3)}+{\mathscr {R}}_{m,j}^{(4)}\). Using the triangle inequality and then applying Lemmas 4.3 and 4.4 and the error bounds in (11), we have

If a parameter \(\gamma \) satisfies \(\gamma \le \varrho \alpha \), then \(\Delta t_n^\alpha \displaystyle {\sum _{j=1}^n}j^{-\gamma }\Lambda _{n,j}\le \dfrac{T^{\alpha }N^{-\gamma }}{1-\alpha },~n=1,2,\ldots ,N\); see Stynes et al. (2017) for details. Applying this result to the above inequality with \(\gamma =\min \{\varrho \alpha ,~2-\alpha \}\) for the first term and \(\gamma =0\) for the remaining terms, we obtain

which is the desired bound. \(\square \)

Remark 4.6

If \(\varrho \ge (2-\alpha )/\alpha \), the above error bounds can be rewritten as:

5 Results and Discussion

Several tests are performed on TFBS equations under Merton's jump-diffusion model as well as Kou's jump-diffusion model. The numerical solutions obtained by both techniques, FDM and ADM, are shown graphically, and the solution obtained by FDM is compared with that obtained by ADM. The numerical data and the graphical representations were obtained using MATLAB R2015a.

5.1 Experiments on Merton’s Model

Example 5.1

Consider the following TFBS equation under Merton’s jump-diffusion model governing a European call option:

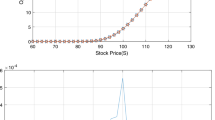

Here, \(\alpha \in (0,1)\) and the parameters are given by: \(\sigma =0.15,r=0.05,\lambda =0.10,\sigma _J=0.45\) and \(\mu _J=-0.90\). \(T=0.25\) (year) and the strike price \(K=100\). Further, k is defined as: \(k=\exp \Big (\mu _J+\dfrac{\sigma _J^2}{2}\Big )-1\). The surface plot in Fig. 1a shows the numerical solution obtained by the proposed FDM with \(\alpha =0.4,\varrho =(2-\alpha )/(2\alpha )\) and \(M=N=30\). The solution obtained by ADM with \({\mathscr {N}}=3\) is plotted in Fig. 1b. The curves in Fig. 2 show the European call option prices for different values of \(\alpha \) at \(t=0.25\) for Example 5.1. The fractional differential operator in the model has a marked impact on the profile of the option price: the option value decreases as \(\alpha \) increases when the stock price is greater than the strike price.

Example 5.2

Let \(\alpha \in (0,1)\). Consider another TFBS equation which describes a European put option under Merton’s jump-diffusion model.

where \(\sigma =0.30,r=0.05,\lambda =1,\sigma _J=0.5\) and \(\mu _J=-0.90\). \(T=0.50\) (year) and the strike price \(K=100\). Further, \(k=\exp \Big (\mu _J+\dfrac{\sigma _J^2}{2}\Big )-1\). The numerical solution for the European put option price obtained by FDM with \(\alpha =0.3,\varrho =(2-\alpha )/(2\alpha )\) and \(M=N=30\) is shown in Fig. 3a, whereas Fig. 3b displays the approximate solution obtained by ADM with \({\mathscr {N}}=3\). The cross-sectional view in Fig. 4 shows the put option value at \(t=0.5\). One can observe that the option value increases as \(\alpha \) increases when the stock price is less than the strike price.

5.2 Experiments on Kou’s Model

Example 5.3

Consider the following TFBS equation under Kou’s jump-diffusion model governing a European call option:

Here, \(\alpha \in (0,1)\) and the parameters are given by: \(\sigma =0.15,r=0.05,\lambda =0.10,\xi _1=3.0465,\xi _2=3.0775\) and \(p=0.3445\). \(T=0.25\) (year) and the strike price \(K=30\). For Kou's model, k is defined as: \(k=\dfrac{p\xi _1}{\xi _1-1}+\dfrac{(1-p)\xi _2}{\xi _2+1}-1\). We apply both FDM and ADM to Example 5.3; the solutions are shown in Fig. 5 with \(\alpha =0.5\). The European call option prices are compared in Fig. 6 for \(\alpha =0.25,0.45,0.65,0.85\), showing that the value of \(\alpha \) influences the option price.

Example 5.4

Let \(\alpha \in (0,1)\). Consider another TFBS equation which describes a European put option under Kou’s jump-diffusion model.

Here, the parameters are given by: \(\sigma =0.25,r=0.05,\lambda =0.10,\xi _1=3.0465,\xi _2=3.0775\) and \(p=0.3445\). \(T=1\) (year) and the strike price \(K=30\). Further, \(k=\dfrac{p\xi _1}{\xi _1-1}+\dfrac{(1-p)\xi _2}{\xi _2+1}-1\). The numerical solutions for the European put option price are displayed in Fig. 7a (FDM with \(M=N=30\)) and Fig. 7b (ADM with \({\mathscr {N}}=3\)) with \(\alpha =0.2\) for Example 5.4. Figure 8 shows the European put option value under Kou's jump-diffusion model for \(\alpha =0.35,0.55,0.75,0.95\) on the stock price domain \(S\in [3,50]\). The value of \(\alpha \) clearly influences the option price.

The next example is a generalized version of the TFBS equation under Merton's jump-diffusion model with a known exact solution, which we use to validate the theoretical analysis.

Example 5.5

Let \(\alpha \in (0,1)\). Consider the following TFBS equation under Merton’s jump-diffusion model as:

where the parameters are given by: \(\sigma =0.1,r=0.05,\lambda =0.01,\sigma _J=0.5\) and \(\mu _J=0\). As before, \(A=\sigma ^2/2,B=r-A-\lambda k\) and \(D=r+\lambda \), with \(k=\exp \Big (\mu _J+\dfrac{\sigma _J^2}{2}\Big )-1\). Here, f(x, t) is chosen so that the exact solution of Example 5.5 is \({\mathcal {U}}(x,t)=t^\alpha e^{x^2/(2\sigma _J^2)}\). If \(\{{\mathcal {U}}(x_m,t_n)\}_{m=0,n=0}^{M,N}\) and \(\{{\mathcal {U}}_m^n\}_{m=0,n=0}^{M,N}\) are the exact solution and the corresponding numerical solution obtained by FDM, then the maximum error and the rate of convergence are estimated by the following formulas:

If \({\mathscr {U}}_{{\mathscr {N}}}=\displaystyle {\sum _{j=0}^{{\mathscr {N}}-1}}{\mathcal {U}}_j(x,t)\) denotes the \({\mathscr {N}}\) term approximate solution by ADM, then the absolute error is computed as: \({\mathscr {E}}_{{\mathscr {N}}}=\Big |{\mathcal {U}}(x,t) -{\mathscr {U}}_{{\mathscr {N}}}(x,t)\Big |, ~(x,t)\in {\bar{\Omega }}\).
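The error measures and rates reported in the tables can be computed as in the following Python sketch. It assumes the common definition \({\mathscr {P}}^{M,N}=\log _2\big ({\mathscr {E}}^{M,N}/{\mathscr {E}}^{2M,2N}\big )\) for successively doubled meshes, and it encodes the temporal rate \(\min \{\varrho \alpha ,2-\alpha \}\) that appears in the proof of Theorem 4.5. The function names are hypothetical.

```python
import math
import numpy as np


def max_error(U_exact, U_num):
    """E^{M,N}: maximum over all mesh points of |U(x_m, t_n) - U_m^n|."""
    return float(np.max(np.abs(np.asarray(U_exact) - np.asarray(U_num))))


def observed_rates(errors):
    """P^{M,N} = log2(E^{M,N} / E^{2M,2N}) for errors on successively doubled meshes."""
    return [math.log2(e_coarse / e_fine) for e_coarse, e_fine in zip(errors, errors[1:])]


def predicted_rate(alpha, rho):
    """Temporal rate min(rho*alpha, 2 - alpha) from the proof of Theorem 4.5."""
    return min(rho * alpha, 2.0 - alpha)


# Grading parameters used in Table 1 (alpha = 0.4).
alpha = 0.4
rhos = [1.0, (2 - alpha) / (2 * alpha), (2 - alpha) / alpha, 2 * (2 - alpha) / alpha]
predicted = [predicted_rate(alpha, rho) for rho in rhos]
```

For \(\alpha =0.4\) the predicted rates are 0.4, 0.8, 1.6 and 1.6, matching the rates discussed below for the uniform mesh and the three graded meshes.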

The surface in Fig. 9a shows the analytical solution with \(\alpha =0.3,\varrho =(2-\alpha )/(2\alpha )\) and \(M=N=32\) for Example 5.5; with the same parameters, the solution obtained by FDM is shown in Fig. 9b. Similarly, for \(\alpha =0.6\), the analytical solution and the corresponding approximate solution obtained by ADM with \({\mathscr {N}}=2\) are shown in Fig. 10a and b, respectively. The maximum errors are plotted in Fig. 11 with \(\varrho =2(2-\alpha )/\alpha , (2-\alpha )/\alpha \) for different values of \(\alpha \). For a fixed \(\alpha \), the error decreases as M, N increase, which confirms the convergence of the finite difference scheme. Log-log plots of the errors and the error bounds are shown in Fig. 12 for different values of \(\alpha \). Table 1 lists \({\mathscr {E}}^{M,N}\) and \({\mathscr {P}}^{M,N}\) for fixed \(\alpha =0.4\) and varying M, N for different grading parameters. On the uniform mesh (\(\varrho =1\)), the rate of convergence is \(\alpha =0.4\), whereas for \(\varrho =(2-\alpha )/(2\alpha )\) the scheme gives a rate of \((2-\alpha )/2=0.8\). Similarly, for \(\varrho =(2-\alpha )/\alpha ,2(2-\alpha )/\alpha \), it produces a rate of \(2-\alpha =1.6\). Therefore, the graded mesh gives a higher rate of convergence than the uniform mesh, and the optimal rate is obtained for \(\varrho \ge (2-\alpha )/\alpha \), in agreement with the theory (see Theorem 4.5 and Remark 4.6). Table 2 (\(\alpha =0.6\)) and Table 3 (\(\alpha =0.8\)) show the same behavior. Finally, Table 4 compares the absolute errors obtained by ADM and FDM for \(\alpha =0.3,0.5,0.7\).

6 Concluding Remarks

In this work, a fully discrete finite difference scheme is constructed on a nonuniform mesh to solve a time fractional Black-Scholes equation under a jump-diffusion model. The L1 discretization on a graded time mesh is used to approximate the temporal derivative, whereas the spatial derivatives are approximated by second order central differences on a uniform spatial mesh. The error analysis shows that the graded mesh is more effective than the uniform mesh for such models, and a rigorous analysis proves that the optimal rate of convergence is obtained for a suitable choice of the grading parameter. Further, an analytical approximate solution is obtained by the Adomian decomposition method. Several experiments are performed on Merton’s jump-diffusion model as well as Kou’s jump-diffusion model. The solutions obtained by FDM and ADM are presented graphically, and the numerical solution is in good agreement with the exact solution. It is also observed that the fractional derivative in the model has a marked impact on the option price. The computed errors and rates of convergence are reported in tables, which confirm the theoretical findings.
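As a small companion to the description of the spatial discretization, the following hypothetical Python check illustrates that central differences on a uniform grid are second order: for a smooth test function (here \(\sin x\), an arbitrary choice), halving h should reduce the errors in \(f'\) and \(f''\) by a factor of about four. It does not reproduce the full spatial operator of the model.

```python
import math


def central_differences(f, x, h):
    """Second-order central approximations of f'(x) and f''(x) with uniform spacing h."""
    d1 = (f(x + h) - f(x - h)) / (2 * h)
    d2 = (f(x + h) - 2 * f(x) + f(x - h)) / h**2
    return d1, d2


def observed_orders(f, df, d2f, x, hs):
    """Observed orders log2(e_h / e_{h/2}) for both approximations; hs should halve each time."""
    e1, e2 = [], []
    for h in hs:
        d1, d2 = central_differences(f, x, h)
        e1.append(abs(d1 - df(x)))
        e2.append(abs(d2 - d2f(x)))

    def rates(errors):
        return [math.log2(a / b) for a, b in zip(errors, errors[1:])]

    return rates(e1), rates(e2)


if __name__ == "__main__":
    # Hypothetical smooth test function f = sin, so f' = cos and f'' = -sin.
    p1, p2 = observed_orders(math.sin, math.cos, lambda x: -math.sin(x), 0.7, [0.1, 0.05, 0.025])
    print(p1, p2)
```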

Surfaces represent numerical solutions for Example 5.1 with \(\alpha =0.4, M=N=30\)

European call option price for Example 5.1

Surfaces represent numerical solutions for Example 5.2 with \(\alpha =0.3, M=N=30\)

European put option price for Example 5.2

Surfaces represent numerical solutions for Example 5.3 with \(\alpha =0.5, M=N=30\)

European call option price for Example 5.3

Surfaces represent numerical solutions for Example 5.4 with \(\alpha =0.2, M=N=30\)

European put option price for Example 5.4

Analytical and numerical solutions for Example 5.5 with \(\alpha =0.3, M=N=32\)

Analytical and numerical solutions for Example 5.5 with \(\alpha =0.6, M=N=32\)

Maximum errors for Example 5.5

Log-log plots for Example 5.5 with \(\varrho =2(2-\alpha )/\alpha \)

Data Availability

The data sets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

Akrami, M. H., & Erjaee, G. H. (2015). Examples of analytical solutions by means of Mittag-Leffler function of fractional Black-Scholes option pricing equation. Fractional Calculus and Applied Analysis, 18, 38–47.

Ampun, S., & Sawangtong, P. (2021). The approximate analytic solution of the time fractional Black-Scholes equation with a European option based on the Katugampola fractional derivative. Mathematics, 9(3), 214. https://doi.org/10.3390/math9030214

Cont, R., & Tankov, P. (2004). Financial modelling with jump processes. Boca Raton: Chapman & Hall/CRC.

Das, P., Rana, S., & Ramos, H. (2020). On the approximate solutions of a class of fractional order nonlinear Volterra integro-differential initial value problems and boundary value problems of first kind and their convergence analysis. Journal of Computational and Applied Mathematics. https://doi.org/10.1016/j.cam.2020.113116

Diethelm, K. (2010). The analysis of fractional differential equations: An application-oriented exposition using differential operators of Caputo type. Berlin: Springer-Verlag.

Fall, A. N., Ndiaye, S. N., & Sene, N. (2019). Black-Scholes option pricing equations described by the Caputo generalized fractional derivative. Chaos, Solitons & Fractals, 125, 108–118.

Ford, N. J., Xiao, J., & Yan, Y. (2011). A finite element method for time fractional partial differential equations. Fractional Calculus and Applied Analysis, 14(3), 454–474.

Golbabai, A., Nikan, O., & Nikazad, T. (2019). Numerical analysis of time fractional Black-Scholes European option pricing model arising in financial market. Computational and Applied Mathematics, 38, 173. https://doi.org/10.1007/s40314-019-0957-7

Golbabai, A., & Nikan, O. (2020). A computational method based on the moving least-squares approach for pricing double barrier options in a time-fractional Black-Scholes model. Computational Economics, 55, 119–141. https://doi.org/10.1007/s10614-019-09880-4

Gracia, J. L., O’Riordan, E., & Stynes, M. (2018). Convergence in positive time for a finite difference method applied to a fractional convection-diffusion problem. Computational Methods in Applied Mathematics, 18(1), 33–42.

Hamoud, A. A., & Ghadle, K. P. (2018). Modified Laplace decomposition method for fractional Volterra-Fredholm integro-differential equations. Journal of Mathematical Modeling, 6(1), 91–104.

Huang, C., Liu, X., Meng, X., & Stynes, M. (2020). Error analysis of a finite difference method on graded meshes for a multi-term time fractional initial boundary value problem. Computational Methods in Applied Mathematics, 20(4), 815–825.

Kadalbajoo, M. K., Tripathi, L. P., & Kumar, A. (2015). Second order accurate IMEX methods for option pricing under Merton and Kou jump-diffusion models. Journal of Scientific Computing, 65(3), 979–1024.

Kadalbajoo, M. K., Kumar, A., & Tripathi, L. P. (2015). An efficient numerical method for pricing option under jump-diffusion model. International Journal of Advances in Engineering Sciences and Applied Mathematics, 7(3), 114–123.

Kim, K. H., Yun, S., Kim, N. U., & Ri, J. H. (2019). Pricing formula for European currency option and exchange option in a generalized jump mixed fractional Brownian motion with time-varying coefficients. Physica A: Statistical Mechanics and Its Applications, 522, 215–231.

Korbel, J., & Luchko, Y. (2016). Modeling of financial processes with a space-time fractional diffusion equation of varying order. Fractional Calculus and Applied Analysis, 19, 1414–1433.

Kou, S. G. (2002). A jump-diffusion model for option pricing. Management Science, 48(8), 1086–1101.

Kumar, S., Yildirim, A., Khan, Y., Jafari, H., Sayevand, K., & Wei, L. (2012). Analytical solution of fractional Black-Scholes European option pricing equation by using Laplace transform. Journal of Fractional Calculus and Applications, 2(8), 1–9.

Kumar, S., Kumar, D., & Singh, J. (2014). Numerical computation of fractional Black-Scholes equation arising in financial market. Egyptian Journal of Basic and Applied Sciences, 1(3–4), 177–183.

Liu, S., Zhou, Y., Wu, Y., & Ge, X. (2019). Option pricing under the jump-diffusion and multi factor stochastic processes. Journal of Function Spaces. https://doi.org/10.1155/2019/9754679

Mehdizadeh, M. K., Rashidi, M. M., Shokri, A., Ramos, H., & Khakzad, P. (2022). A nonstandard finite difference method for a generalized Black-Scholes equation. Symmetry. https://doi.org/10.3390/sym14010141

Merton, R. C. (1976). Option pricing when underlying stock returns are discontinuous. Journal of Financial Economics, 3(1), 125–144.

Mesgarani, H., Beiranvand, A., & Aghdam, Y. E. (2020). The impact of the Chebyshev collocation method on solutions of the time fractional Black-Scholes. Mathematical Sciences, 15, 137–143. https://doi.org/10.1007/s40096-020-00357-2

Moon, K. S., Kim, H., & Jeong, Y. (2014). A series solution of Black-Scholes equation under jump-diffusion model. Economic Computation and Economic Cybernetics Studies and Research, 48(1), 127–139.

Nikan, O., Avazzadeh, Z., & Tenreiro Machado, J. A. (2021). Localized kernel-based meshless method for pricing financial options underlying fractal transmission system. Mathematical Methods in the Applied Sciences. https://doi.org/10.1002/mma.7968

Nikan, O., Golbabai, A., Tenreiro Machado, J. A., & Nikazad, T. (2022). Numerical approximation of the time fractional cable model arising in neuronal dynamics. Engineering with Computers, 38, 155–173. https://doi.org/10.1007/s00366-020-01033-8

Nuugulu, S. M., Gideon, F., & Patidar, K. C. (2021). A robust numerical solution to a time fractional Black-Scholes equation. Advances in Difference Equations. https://doi.org/10.1186/s13662-021-03259-2

Özdemir, N., & Yavuz, M. (2017). Numerical solution of fractional Black-Scholes equation by using the multivariate Padé approximation. Acta Physica Polonica A. https://doi.org/10.12693/APhysPolA.132.1050

Panda, A., Santra, S., & Mohapatra, J. (2021). Adomian decomposition and homotopy perturbation method for the solution of time fractional partial integro-differential equations. Journal of Applied Mathematics and Computing. https://doi.org/10.1007/s12190-021-01613-x

Podlubny, I. (1999). Fractional differential equations: An introduction to fractional derivatives, fractional differential equations, to methods of their solution and some of their applications (Mathematics in Science and Engineering, Vol. 198). San Diego: Academic Press.

Rao, S. C. S., & Manisha (2018). Numerical solution of generalized Black-Scholes model. Applied Mathematics and Computation, 321, 401–421.

Roul, P. (2019). A high accuracy numerical method and its convergence for time-fractional Black-Scholes equation governing European options. Applied Numerical Mathematics. https://doi.org/10.1016/j.apnum.2019.11.004

Santra, S., & Mohapatra, J. (2020). Analysis of the L1 scheme for a time fractional parabolic-elliptic problem involving weak singularity. Mathematical Methods in the Applied Sciences, 44(2), 1529–1541.

Santra, S., & Mohapatra, J. (2021). Numerical analysis of Volterra integro-differential equations with Caputo fractional derivative. Iranian Journal of Science and Technology, Transactions A: Science, 45(5), 1815–1824.

Santra, S., & Mohapatra, J. (2021). A novel finite difference technique with error estimate for time fractional partial integro-differential equation of Volterra type. Journal of Computational and Applied Mathematics. https://doi.org/10.1016/j.cam.2021.113746

Song, L., & Wang, W. (2013). Solution of the fractional Black-Scholes option pricing model by finite difference method. Abstract and Applied Analysis. https://doi.org/10.1155/2013/194286

Stynes, M., O’Riordan, E., & Gracia, J. L. (2017). Error analysis of a finite difference method on graded meshes for a time-fractional diffusion equation. SIAM Journal on Numerical Analysis, 55(2), 1057–1079.

Thanompolkrang, S., Sawangtong, W., & Sawangtong, P. (2021). Application of the generalized Laplace homotopy perturbation method to the time fractional Black-Scholes equations based on the Katugampola fractional derivative in Caputo type. Computation, 9(3), 33. https://doi.org/10.3390/computation9030033

Tomovski, Z., Dubbeldam, J. L. A., & Korbel, J. (2020). Applications of Hilfer-Prabhakar operator to option pricing financial model. Fractional Calculus and Applied Analysis, 23, 996–1012.

Valkov, R. (2014). Fitted finite volume method for a generalized Black-Scholes equation transformed on finite interval. Numerical Algorithms, 65(1), 195–220.

Wyss, W. (2000). The fractional Black-Scholes equation. Fractional Calculus and Applied Analysis, 3(1), 51–61.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. No funding source is available.


Ethics declarations

Conflicts of interest

The authors declare that there are no conflicts of interest.

Informed consent

On behalf of the authors, Dr. Higinio Ramos shall be communicating the manuscript.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mohapatra, J., Santra, S. & Ramos, H. Analytical and Numerical Solution for the Time Fractional Black-Scholes Model Under Jump-Diffusion. Comput Econ 63, 1853–1878 (2024). https://doi.org/10.1007/s10614-023-10386-3


Keywords

- Black-Scholes jump-diffusion model

- Caputo derivative

- Adomian decomposition method

- Finite difference

- L1 discretization

- Error analysis