Abstract

Conditioning complex subsurface flow models on nonlinear data is complicated by the need to preserve the expected geological connectivity patterns to maintain solution plausibility. Generative adversarial networks (GANs) have recently been proposed as a promising approach for low-dimensional representation of complex high-dimensional images. The method has also been adopted for low-rank parameterization of complex geologic models to facilitate uncertainty quantification workflows. A difficulty in adopting these methods for subsurface flow modeling is the complexity associated with nonlinear flow data conditioning. While conditional GAN (CGAN) can condition simulated images on labels, application to subsurface problems requires efficient conditioning workflows for nonlinear data, which is far more complex. We present two approaches for generating flow-conditioned models with complex spatial patterns using GAN. The first method is through conditional GAN, whereby a production response label is used as an auxiliary input during the training stage of GAN. The production label is derived from clustering of the flow responses of the prior model realizations (i.e., training data). The underlying assumption of this approach is that GAN can learn the association between the spatial features corresponding to the production responses within each cluster. An alternative method is to use a subset of samples from the training data that are within a certain distance from the observed flow responses and use them as training data within GAN to generate new model realizations. In this case, GAN is not required to learn the nonlinear relation between production responses and spatial patterns. Instead, it is tasked to learn the patterns in the selected realizations that provide a close match to the observed data. 
The conditional low-dimensional parameterization for complex geologic models with diverse spatial features (i.e., when multiple geologic scenarios are plausible) performed by GAN allows for exploring the spatial variability in the conditional realizations, which can be critical for decision-making. We present and discuss the important properties of GAN for data conditioning using several examples with increasing complexity.
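The two conditioning strategies can be illustrated with a minimal sketch. The Euclidean distance, the toy two-component response vectors, the cluster centres, and the distance cutoff below are all assumptions made for illustration; the abstract does not specify the clustering algorithm or the distance measure used.

```python
import math

def euclidean(a, b):
    """Euclidean distance between two equally long response vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest_cluster_label(response, centres):
    """Method 1 style label: index of the closest cluster centre."""
    distances = [euclidean(response, c) for c in centres]
    return distances.index(min(distances))

def select_near_observed(responses, observed, max_distance):
    """Method 2 style subset: indices of realizations whose simulated
    response lies within max_distance of the observed response."""
    return [i for i, r in enumerate(responses)
            if euclidean(r, observed) <= max_distance]

# Toy prior ensemble: each row is a hypothetical simulated flow response.
responses = [[1.0, 1.0], [1.2, 0.9], [5.0, 5.0], [4.8, 5.1]]
centres = [[1.0, 1.0], [5.0, 5.0]]   # assumed output of a clustering step
observed = [1.1, 1.0]                # hypothetical observed response

labels = [nearest_cluster_label(r, centres) for r in responses]
subset = select_near_observed(responses, observed, max_distance=0.5)
```

In the first case the labels would accompany the realizations as auxiliary inputs during training; in the second, only the realizations indexed by `subset` would be used as training data.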

References

Aanonsen, S. I., Nævdal, G., Oliver, D.S., Reynolds, A.C., Vallès, B.: Ensemble kalman filter in reservoir engineering—a review. SPE J. 14(3), 393–412 (2009)

Agbalaka, C. C., Oliver, D. S.: Application of the enkf and localization to automatic history matching of facies distribution and production data. Math. Geosci. 40, 353–374 (2008). https://doi.org/10.1007/s11004-008-9155-7

Arjovsky, M., Chintala, S., Bottou, L.: Wasserstein GAN. CoRR. arXiv:1701.07875 (2017)

Astrakova, A., Oliver, D. S.: Conditioning truncated Pluri-Gaussian models to facies observations in Ensemble-Kalman-Based data assimilation. Math. Geosci. 47, 345–367 (2015). https://doi.org/10.1007/s11004-014-9532-3

Bellemare, M. G., Danihelka, I., Dabney, W., Mohamed, S., Lakshminarayanan, B., Hoyer, S., Munos, R.: The cramer distance as a solution to biased wasserstein gradients. CoRR, arXiv:1705.10743(2017)

Bhark, E.W., Jafarpour, B., Datta-Gupta, A.: A generalized grid connectivity–based parameterization for subsurface flow model calibration. Water Resour. Res. 47(6). https://doi.org/10.1029/2010WR009982 (2011)

Caers, J.: Efficient gradual deformation using a streamline-based proxy method. J. Petroleum Sci. Eng. 39(1):57-83. ISSN 0920-4105. https://doi.org/10.1016/S0920-4105(03)00040-8 (2003)

Canchumuni, S. W. A., Emerick, A. A., Pacheco, M. A. C.: Integration of ensemble data assimilation and deep learning for history matching facies models. Offshore Technology Conference. https://doi.org/10.4043/28015-MS (2017)

Canchumuni, S. W. A., Castro, J. D. B., Potratz, J., Emerick, A. A., Pacheco, M. A. C.: Recent developments combining ensemble smoother and deep generative networks for facies history matching. Comput. Geosci. 25, 433–466 (2021). https://doi.org/10.1007/s10596-020-10015-0

Chan, S., Elsheikh, A. H.: Parametric generation of conditional geological realizations using generative neural networks. Computational Geosciences. https://doi.org/10.1007/s10596-019-09850-7 (2019)

Chang, H., Zhang, D., Lu, Z.: History matching of facies distribution with the EnKF and level set parameterization. J. Comput. Phys. 229(20):8011–8030. ISSN 0021-9991. https://doi.org/10.1016/j.jcp.2010.07.005(2010)

Chen, C., Gao, G., Ramirez, B.A., Vink, J.C., Girardi, A.M.: Assisted History Matching of Channelized Models by Use of Pluri-Principal-Component Analysis. Soc. Petroleum Eng. 21. https://doi.org/10.2118/173192-PA(2016a)

Chen, X., Duan, Y., Houthooft, R., Schulman, J., Sutskever, I., Abbeel, P.: Infogan: Interpretable representation learning by information maximizing generative adversarial nets. CoRR (2016b)

Demyanov, V., Arnold, D., Rojas, T., Christie, M.: Uncertainty quantification in reservoir prediction Part 2—handling uncertainty in the geological scenario. Math. Geosci. 51(2), 241–264 (2019)

Dovera, L., Della Rossa, E.: Multimodal ensemble Kalman filtering using Gaussian mixture models. Comput. Geosci. 15, 307–323 (2011). https://doi.org/10.1007/s10596-010-9205-3

Emerick, A. A.: Investigation on principal component analysis parameterizations for history matching channelized facies models with Ensemble-Based data assimilation. Math. Geosci. 49, 85–120 (2017). https://doi.org/10.1007/s11004-016-9659-5

Evensen, G.: Sequential data assimilation with a nonlinear quasi-geostrophic model using monte carlo methods to forecast error statistics. J. Geophys. Res. Oceans 99(C5), 10143–10162 (1994). https://doi.org/10.1029/94JC00572

Evensen, G.: The ensemble kalman filter: theoretical formulation and practical implementation. Ocean Dyn. 53, 343–367 (2003). https://doi.org/10.1007/s10236-003-0036-9

Hendricks Franssen, H. J., Alcolea, A., Riva, M., Bakr, M., van der Wiel, N., Stauffer, F., Guadagnini, A.: A comparison of seven methods for the inverse modelling of groundwater flow. application to the characterisation of well catchments. Adv. Water Resour. 32(6), 851–872 (2009)

Gao, G., Zafari, M., Reynolds, A. C.: Quantifying uncertainty for the punq-s3 problem in a bayesian setting with rml and enkf. Society of Petroleum Engineers. https://doi.org/10.2118/93324-PA (2006)

Gao, G., Jiang, H., Vink, J. C., Chen, C., El Khamra, Y., Ita, J. J.: Gaussian mixture model fitting method for uncertainty quantification by conditioning to production data. Computational Geosciences (2019)

Golmohammadi, A., Khaninezhad, M. M., Jafarpour, B.: Group-sparsity regularization for ill-posed subsurface flow inverse problems. Water Resour. Res. 51(10), 8607–8626 (2015). https://doi.org/10.1002/2014WR016430

Goodfellow, I. J.: Nips 2016 tutorial: Generative adversarial networks. coRR (2017)

Goodfellow, I. J., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., Courville, A., Bengio, Y.: Generative adversarial nets. Adv. Neural Inf. Process. Syst. 27, 2672–2680 (2014)

Grana, D., Fjeldstad, T., Omre, H.: Bayesian gaussian mixture linear inversion for geophysical inverse problems. Math. Geosci. 49(4), 493–515 (2017). https://doi.org/10.1007/s11004-016-9671-9

Gulrajani, I., Ahmed, F., Arjovsky, M., Dumoulin, V., Courville, A.C.: Improved training of wasserstein gans. CoRR (2017)

Hakim-Elahi, S., Jafarpour, B.: A distance transform for continuous parameterization of discrete geologic facies for subsurface flow model calibration. Water Resour. Res. 53(10), 8226–8249 (2017). https://doi.org/10.1002/2016WR019853

He, J., Sarma, P., Durlofsky, L.J.: Reduced-order flow modeling and geological parameterization for ensemble-based data assimilation. Comput. Geosci. 55:54–69. ISSN 0098-3004. https://doi.org/10.1016/j.cageo.2012.03.027 (2013)

Hu, L. Y.: Extended probability perturbation method for calibrating stochastic reservoir models. Math. Geosci. 40, 875–885 (2008). https://doi.org/10.1007/s11004-008-9158-4

Jafarpour, B.: Wavelet Reconstruction of Geologic Facies From Nonlinear Dynamic Flow Measurements. IEEE Trans. Geosci. Remote Sens. 49(5):1520–1535. ISSN 1558-0644. https://doi.org/10.1109/TGRS.2010.2089464 (2011)

Jafarpour, B., Khodabakhshi, M.: A probability conditioning method (PCM) for nonlinear flow data integration into multipoint statistical facies simulation. Math. Geosci. 43, 133–164 (2011). https://doi.org/10.1007/s11004-011-9316-y

Jafarpour, B., McLaughlin, D. B.: History matching with an ensemble kalman filter and discrete cosine parameterization. Comput. Geosci. 12(2), 227–244 (2008). https://doi.org/10.1007/s10596-008-9080-3

Jafarpour, B., McLaughlin, D.B.: Estimating channelized-reservoir permeabilities with the ensemble Kalman filter: the importance of ensemble design. Soc. Petroleum Eng. 14(2), 374–388 (2009). https://doi.org/10.2118/108941-PA

Jafarpour, B., Tarrahi, M.: Assessing the performance of the ensemble kalman filter for subsurface flow data integration under variogram uncertainty. Water Resour. Res. 47(5). https://doi.org/10.1029/2010WR009090 (2011)

Jiang, R., Stern, D., Halsey, T., Manzocchi, T.: Scenario discovery workflow for robust petroleum reservoir development under uncertainty. Int. J. Uncertain. Quantif. 6 (2016)

Jiang, S., Sun, W., Durlofsky, L. J.: A data-space inversion procedure for well control optimization and closed-loop reservoir management. Computational Geosciences (2019)

Jo, H., Jung, H., Ahn, J., Lee, K., Choe, J.: History matching of channel reservoirs using ensemble kalman filter with continuous update of channel information. Energy Explor. Exploit. 35(1), 3–23 (2017). https://doi.org/10.1177/0144598716680141

Karras, T., Aittala, M., Hellsten, J., Laine, S., Lehtinen, J., Aila, T.: Training generative adversarial networks with limited data. CoRR. arXiv:2006.06676 (2020)

Khaninezhad, M. M., Jafarpour, B.: Sparse randomized maximum likelihood (sprml) for subsurface flow model calibration and uncertainty quantification. Adv. Water Resour. 69, 23–37 (2014)

Khodabakhshi, M., Jafarpour, B.: A bayesian mixture-modeling approach for flow-conditioned multiple-point statistical facies simulation from uncertain training images. Water Resour. Res. 49(1), 328–342 (2013)

Kitanidis, P. K.: Quasi-linear geostatistical theory for inversing. Water Resour. Res. 31(10), 2411–2419 (1995)

Laloy, E., Hérault, R., Lee, J., Jacques, D., Linde, N.: Inversion using a new low-dimensional representation of complex binary geological media based on a deep neural network. Adv. Water Resour. 110:387–405. ISSN 0309-1708. https://doi.org/10.1016/j.advwatres.2017.09.029 (2017)

Laloy, E., Hérault, R., Jacques, D., Linde, N.: Training-image based geostatistical inversion using a spatial generative adversarial neural network. Water Resour. Res. 54(1), 381–406 (2018). https://doi.org/10.1002/2017WR022148

Li, L., Jafarpour, B.: Effective solution of nonlinear subsurface flow inverse problems in sparse bases. Inverse Probl. 26(10). https://doi.org/10.1088/0266-5611/26/10/105016 (2010)

Liu, N., Oliver, D. S.: Evaluation of monte carlo methods for assessing uncertainty. Soc. Petroleum Eng. 6, 149–162 (2003). https://doi.org/10.2118/84936-PA

Lorentzen, R. J., Flornes, K. M., Nævdal, G.: History matching channelized reservoirs using the ensemble kalman filter. Soc. Petroleum Eng. 17(1), 137–151 (2012). https://doi.org/10.2118/143188-PA

Ma, W., Jafarpour, B.: Pilot points method for conditioning multiple-point statistical facies simulation on flow data. Adv. Water Resour. 115:219–233. ISSN 0309-1708. https://doi.org/10.1016/j.advwatres.2018.01.021 (2018)

Maschio, C., Schiozer, D. S.: Bayesian history matching using artificial neural network and markov chain monte carlo. J. Pet. Sci. Eng. 123, 62–71 (2014)

Mirza, M., Osindero, S.: Conditional generative adversarial nets. CoRR arXiv:1411.1784(2014)

Mohd Razak, S., Jafarpour, B.: Convolutional neural networks (cnn) for feature-based model calibration under uncertain geologic scenarios. Comput. Geosci. 24(4), 1625–1649 (2020). https://doi.org/10.1007/s10596-020-09971-4

Mosser, L., Dubrule, O., Blunt, M. J.: Reconstruction of three-dimensional porous media using generative adversarial neural networks. coRR (2017)

Mosser, L., Dubrule, O., Blunt, M.J.: Stochastic reconstruction of an oolitic limestone by generative adversarial networks. Transp. Porous Media 125(1):81–103 (2018a)

Mosser, L., Dubrule, O., Blunt, M.J.: Stochastic seismic waveform inversion using generative adversarial networks as a geological prior. First EAGE/PESGB Workshop Machine Learning (2018b)

Odena, A., Olah, C., Shlens, J.: Conditional image synthesis with auxiliary classifier gans. coRR (2016)

Oliver, D. S., He, N., Reynolds, A. C.: Conditioning permeability fields to pressure data. Paper presented at the 5th European Conference for the Mathematics of Oil Recovery, Leoben (1996)

Oliver, D. S., Reynolds, A. C., Liu, N.: Inverse theory for petroleum reservoir characterization and history matching. Cambridge University Press (2008)

Park, H., Scheidt, C., Fenwick, D., Boucher, A., Caers, J.: History matching and uncertainty quantification of facies models with multiple geological interpretations. Comput. Geosci. 17(4), 609–621 (2013)

Ping, J., Zhang, D.: History matching of fracture distributions by ensemble Kalman filter combined with vector based level set parameterization. J. Petroleum Sci. Eng. 108:288–303. ISSN 0920-4105. https://doi.org/10.1016/j.petrol.2013.04.018 (2013)

Reed, S.E., Akata, Z., Yan, X., Logeswaran, L., Schiele, B., Lee, H.: Generative adversarial text to image synthesis. CoRR. arXiv:1605.05396 (2016)

Reynolds, A. C., He, N., Oliver, D.S.: Reducing uncertainty in geostatistical description with well testing pressure data. Reservoir Characterization Recent Advances, American Association of Petroleum Geologists, pp. 149–162 (1999)

Salimans, T., Goodfellow, I.J., Zaremba, W., Cheung, V., Radford, A., Chen, X.: Improved techniques for training gans. CoRR. arXiv:1606.03498 (2016)

Sarma, P., Durlofsky, L. J., Aziz, K.: Kernel principal component analysis for efficient, differentiable parameterization of multipoint geostatistics. Math. Geosci. 40(1), 3–32 (2008). https://doi.org/10.1007/s11004-007-9131-7

Schlumberger. Eclipse E100 Industry-Reference Reservoir Simulator (2014). https://www.software.slb.com/products/eclipse

Schlumberger. Petrel E&P Software Platform (2016). https://www.software.slb.com/products/petrel

Sebacher, B., Stordal, A. S., Hanea, R.: Bridging multipoint statistics and truncated Gaussian fields for improved estimation of channelized reservoirs with ensemble methods. Comput. Geosci. 19, 341–369 (2015). https://doi.org/10.1007/s10596-014-9466-3

Sønderby, C.K., Caballero, J., Theis, L., Shi, W., Huszár, F.: Amortised MAP inference for image super-resolution. CoRR arXiv:1610.04490 (2016)

Sun, A.Y., Morris, A.P., Mohanty, S.: Sequential updating of multimodal hydrogeologic parameter fields using localization and clustering techniques. Water Resour. Res. 45(7). https://doi.org/10.1029/2008WR007443 (2009)

Tarantola, A.: Inverse problem theory and methods for model parameter estimation. Society for Industrial and Applied Mathematics (2005)

Tarantola, A.: Popper, Bayes and the inverse problem. Nat. Phys. 2, 492–494 (2006)

Tavakoli, R., Reynolds, A. C.: History matching with parametrization based on the SVD of a dimensionless sensitivity matrix. Soc. Petroleum Eng., 15. https://doi.org/10.2118/118952-PA (2010)

Tavassoli, Z., Carter, J. N., King, P. R.: Errors in history matching. SPE J., 9. https://doi.org/10.2118/86883-PA (2004)

Vo, H. X., Durlofsky, L. J.: A new differentiable parameterization based on principal component analysis for the low-dimensional representation of complex geological models. Math. Geosci. 46, 775–813 (2014). https://doi.org/10.1007/s11004-014-9541-2

Vo, H. X., Durlofsky, L. J.: Regularized kernel PCA for the efficient parameterization of complex geological models. J. Comput. Phys. 322, 859–881 (2016). https://doi.org/10.1016/j.jcp.2016.07.011

Zhang, T., Tilke, P., Dupont, E., Zhu, L., Liang, L., Bailey, W.: Generating geologically realistic 3d reservoir facies models using deep learning of sedimentary architecture with generative adversarial networks. Petroleum Sci. 16(3):541–549. ISSN 1995-8226. https://doi.org/10.1007/s12182-019-0328-4 (2019)

Zhao, J., Mathieu, M., LeCun, Y.: Energy-based generative adversarial network. coRR (2016)

Zhou, H., Gómez-Hernández, J.J., Franssen, H.H., Li, L.: An approach to handling non-Gaussianity of parameters and state variables in ensemble Kalman filtering. Adv. Water Resour. 34(7):844–864. ISSN 0309-1708. https://doi.org/10.1016/j.advwatres.2011.04.014 (2011)

Zhou, H., Li, L., Gómez-Hernández, J. J.: Characterizing curvilinear features using the localized Normal-Score ensemble kalman filter. Abstr. Appl. Anal. https://doi.org/10.1155/2012/805707 (2012)

Acknowledgements

This research is supported in part by Energi Simulation Industry Chair Program.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix A: Network architecture and training process

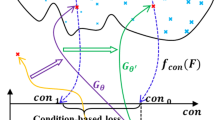

We provide a complete description of the architecture used in our study. The networks are implemented with the open-source machine learning framework TensorFlow (version 1.12). For the example in this appendix, each label corresponds to one of the five geologic scenarios and is assigned according to the training image (TI) used to generate the 32 × 32 realizations. CGAN (Method 1) is tasked with parameterizing 500 model realizations within each geologic scenario and can then generate realizations from a given scenario when provided with a latent vector z drawn from a Gaussian distribution and a geologic scenario label c (encoded as a one-hot vector). Figure 18 shows the dimensions of the input, output, and weights (parameters) associated with each layer. A layer refers to a dense, convolution, or deconvolution operation, followed by an optional batch normalization operation and finally a nonlinear operation. Note that the batch normalization and nonlinear operations do not change the dimension of their input.
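As a minimal sketch of how the generator input could be assembled, the snippet below draws a Gaussian latent vector z and joins it with a one-hot scenario label c. The latent dimension of 16 and the use of simple concatenation are assumptions made for illustration; neither is specified in the text above.

```python
import random

N_SCENARIOS = 5   # five geologic scenarios, as stated in the text
LATENT_DIM = 16   # hypothetical; the latent size is not given here

def one_hot(label, n_classes=N_SCENARIOS):
    """Encode an integer scenario label as a one-hot vector c."""
    if not 0 <= label < n_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(n_classes)]

def sample_latent(dim=LATENT_DIM, rng=random):
    """Draw a latent vector z from a standard Gaussian distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

def generator_input(label, dim=LATENT_DIM, rng=random):
    """Assumption: z and c are joined by simple concatenation."""
    return sample_latent(dim, rng) + one_hot(label)

# Seeded generator so the draw is repeatable.
x = generator_input(label=2, rng=random.Random(0))
```

For the second method, which has no label input, the corresponding input would be the output of `sample_latent` alone.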

Table 3 lists the TensorFlow functions and hyperparameters used within each layer. The shorthand notation for each TensorFlow function in Table 3 is consistent with that used in Fig. 18. For all the examples in this paper, the weight of the gradient penalty term, λ (in Eqs. 2 and 3), is set to 10. The three loss functions (3)-(5) for training CGAN are minimized using tf.train.AdamOptimizer (\(\alpha = 5 \times 10^{-4}\), \(\beta_1 = 0.5\), \(\beta_2 = 0.9\)) with a batch size (denoted as \(N_b\)) of 32. For the second method, the classifier \(\mathcal {C}_{\phi }\) loss function (\({\mathscr{L}}_{\mathcal {C}}\)) is simply omitted from the training process and the input to the generator \(\mathcal {G}_{\theta }\) includes only the latent vector.
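To make the role of the optimizer hyperparameters concrete, the following sketch applies one textbook Adam update to a single scalar weight using the quoted values of α, β1 and β2. It is a plain-Python illustration rather than the TensorFlow implementation, which may organize the bias correction and ε differently; the value of ε is an assumption, since it is not stated in the text.

```python
import math

ALPHA, BETA1, BETA2 = 5e-4, 0.5, 0.9   # values quoted in the text
EPSILON = 1e-8                          # assumed; not stated in the text

def adam_step(theta, grad, m, v, t):
    """One textbook Adam update for a scalar parameter at step t >= 1."""
    m = BETA1 * m + (1.0 - BETA1) * grad          # first-moment estimate
    v = BETA2 * v + (1.0 - BETA2) * grad * grad   # second-moment estimate
    m_hat = m / (1.0 - BETA1 ** t)                # bias-corrected moments
    v_hat = v / (1.0 - BETA2 ** t)
    theta = theta - ALPHA * m_hat / (math.sqrt(v_hat) + EPSILON)
    return theta, m, v

# First step from zero-initialized moments with a toy gradient of 2.0.
theta, m, v = adam_step(theta=1.0, grad=2.0, m=0.0, v=0.0, t=1)
```

On the first step the bias-corrected ratio is close to the sign of the gradient, so the weight moves by roughly α regardless of the gradient magnitude.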

Figure 18 also illustrates the flow of tensors when the components (\(\mathcal {G}_{\theta }, \mathcal {D}_{\psi }, \mathcal {C}_{\phi }\)) in CGAN are optimized in an alternating manner. When \(\mathcal {D}_{\psi }\) and \(\mathcal {C}_{\phi }\) are updated, the weights in \(\mathcal {G}_{\theta }\) are fixed and the flow of tensors is represented by the red bold path for generated (fake) realizations and the red stippled path for training (real) realizations. In this update step, gradient information is backpropagated to \(\mathcal {D}_{\psi }\) (calculated using \({\mathscr{L}}_{\mathcal {D}}\) via the red bold and stippled paths) so that \(\mathcal {D}_{\psi }\) learns to distinguish between fake and real realizations. Additionally, gradient information is backpropagated to \(\mathcal {C}_{\phi }\) and \(\mathcal {D}_{\psi }\) (calculated using \({\mathscr{L}}_{\mathcal {C}}\) via the red stippled path) so that \(\mathcal {C}_{\phi }\) and \(\mathcal {D}_{\psi }\) learn the geologic features for each class label. When \(\mathcal {G}_{\theta }\) is updated, the weights in \(\mathcal {D}_{\psi }\) and \(\mathcal {C}_{\phi }\) are fixed and the flow of tensors is represented by the green bold path for generated (fake) realizations. In this update step, gradient information is backpropagated to \(\mathcal {G}_{\theta }\) (calculated using \({\mathscr{L}}_{\mathcal {C}}\) and \({\mathscr{L}}_{\mathcal {D}}\) via the green bold path) to teach the generator how to reproduce the geologic features associated with each class label.
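The alternating scheme can be summarized by the schedule sketched below, in which the three update routines are placeholders that only count how often they are called. The function and variable names are hypothetical, and updating the classifier once per iteration is an assumption; the text states only that the discriminator and classifier are updated while the generator weights are fixed.

```python
def train(n_iterations, n_critic, update_d, update_c, update_g):
    """Alternate discriminator/classifier updates with generator updates."""
    for _ in range(n_iterations):
        # Phase 1: generator weights are held fixed.
        for _ in range(n_critic):
            update_d()   # discriminator loss on real and fake batches
        update_c()       # classifier loss (assumed once per iteration)
        # Phase 2: discriminator and classifier weights are held fixed.
        update_g()       # generator is driven by both losses

# Placeholder updates that record how many times each component is updated.
counts = {"D": 0, "C": 0, "G": 0}

def bump(name):
    def _update():
        counts[name] += 1
    return _update

train(3, 5, bump("D"), bump("C"), bump("G"))
```

With three iterations and five discriminator updates per iteration, the discriminator is updated 15 times and the generator three times.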

Figure 19(a) shows the total losses of the components (\(\mathcal {G}_{\theta }, \mathcal {D}_{\psi }, \mathcal {C}_{\phi }\)) in CGAN when trained with model realizations labeled by geologic scenario. The network is trained for 8000 iterations, with \(\mathcal {D}_{\psi }\) updated five times per iteration, as recommended by [26]. A single iteration refers to the loss computation on one batch; since there are 2500 realizations in total and the batch size is 32, approximately 78 iterations are needed to process the entire dataset once. Figure 19(b) shows samples of realizations generated for each geologic scenario at selected iterations. To monitor convergence, the input latent vector for each generated sample in Fig. 19(b) is held fixed across iterations. \(\mathcal {C}_{\phi }\) is observed to converge rather easily: generated realizations at iteration 1000 already exhibit the correct features (azimuth and channel geometry) for each geologic scenario. \(\mathcal {G}_{\theta }\) generates continuous-valued realizations (of satisfactory quality at iteration 8000, as shown), which can be discretized by applying a mid-point threshold in the case of binary facies. With a cutoff value of 0.5 (i.e., the mid-point between 0 and 1), any pixel with a value less than 0.5 is assigned a discrete value of 0 and any pixel with a value greater than or equal to 0.5 is assigned a discrete value of 1. Beyond iteration 8000, the generated realizations remain consistent and only the continuous-valued pixels show minor variations in location and value. The same behavior is observed in Method 2 when the network is trained without \(\mathcal {C}_{\phi }\).
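The mid-point thresholding and the iteration count per pass over the dataset can be checked with a short sketch. The pixel values are made up for illustration; the dataset size, batch size, cutoff, and number of iterations are those quoted above.

```python
import math

def discretize(pixels, cutoff=0.5):
    """Assign 1 to pixels at or above the cutoff and 0 to the rest."""
    return [1 if p >= cutoff else 0 for p in pixels]

facies = discretize([0.03, 0.49, 0.5, 0.51, 0.97])   # toy pixel values

n_realizations, batch_size, n_iterations = 2500, 32, 8000

# Number of complete batches in one pass over the dataset.
full_batches = n_realizations // batch_size
# Realizations left over after the complete batches.
leftover = n_realizations - full_batches * batch_size
# Batches needed if the partial final batch is also processed.
batches_with_remainder = math.ceil(n_realizations / batch_size)
# Approximate number of passes over the dataset during training.
approx_epochs = n_iterations / (n_realizations / batch_size)
```

Since 2500 is not a multiple of 32, one pass corresponds to 78 complete batches with four realizations left over, and 8000 iterations amount to roughly 100 passes over the dataset.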

Cite this article

Razak, S.M., Jafarpour, B. Conditioning generative adversarial networks on nonlinear data for subsurface flow model calibration and uncertainty quantification. Comput Geosci 26, 29–52 (2022). https://doi.org/10.1007/s10596-021-10112-8

DOI: https://doi.org/10.1007/s10596-021-10112-8