Abstract

In recent years, emotion recognition has received significant attention, presenting a plethora of opportunities for application in diverse fields such as human–computer interaction, psychology, and neuroscience. Although unimodal emotion recognition methods offer certain benefits, they have limited ability to encompass the full spectrum of human emotional expression. In contrast, Multimodal Emotion Recognition (MER) delivers a more holistic and detailed insight into an individual's emotional state. However, existing multimodal data collection approaches utilizing contact-based devices hinder the effective deployment of this technology. We address this issue by examining the potential of contactless data collection techniques for MER. In our tertiary review study, we highlight the unaddressed gaps in the existing body of literature on MER. Through our rigorous analysis of MER studies, we identify the modalities, specific cues, open datasets with contactless cues, and unique modality combinations. This leads us to formulate a comparative schema for mapping the MER requirements of a given scenario to a specific modality combination. Subsequently, we discuss the implementation of Contactless Multimodal Emotion Recognition (CMER) systems in diverse use cases with the help of the comparative schema, which serves as an evaluation blueprint. Furthermore, this paper explores ethical and privacy considerations concerning the employment of contactless MER and proposes key principles for addressing them. The paper further investigates the current challenges and future prospects in the field, offering recommendations for future research and development in CMER. Our study serves as a resource for researchers and practitioners in the field of emotion recognition, as well as those interested in the broader implications of this rapidly progressing technology.

1 Introduction

Emotions are complex and multifaceted mental and physical states that reflect an individual's situation and state of mind. There is no universally accepted definition of emotions. They are commonly understood as a range of mental or physical states such as anger, happiness, sadness, or surprise [1]. Various sources, including dictionaries and scholarly works, offer different perspectives on emotions. The Oxford Dictionary (Footnote 1) describes emotion as "a strong feeling such as love, fear, or anger; the part of a person's character that consists of feelings." In contrast, the Encyclopedia Britannica (Footnote 2) defines it as "a complex experience of consciousness, bodily sensation, and behavior that reflects the personal significance of a thing, an event, or a state of affairs." In addition, emotion has also been defined as “A response to a particular stimulus (person, situation or event), which is generalized and occupies the person as a whole. It is usually an intense experience of short duration—seconds to minutes—and the person is typically well aware of it” [2].

From a philosophical perspective, emotions can be viewed as states or processes. As a state, like being angry or afraid, an emotion is a mental state that interacts with other mental states, leading to specific behaviors (Internet Encyclopedia of Philosophy, Footnote 3). Neurologically, emotions can be defined as a series of responses originating from parts of the brain that affect both the body and other brain regions, utilizing neural and humoral pathways [3]. Psychologically, emotions are characterized as “conscious mental reactions (such as anger or fear) subjectively experienced as strong feelings, usually directed toward a specific object, and typically accompanied by physiological and behavioral changes in the body” (American Psychological Association, Footnote 4).

Interpreting emotional states involves assessing various components such as behavioral tendencies, physiological reactions, motor expressions, cognitive appraisals, and subjective feelings [4, 5]. However, capturing these signals often requires specialized equipment, posing substantial challenges to emotion recognition. In the field of human–computer interaction (HCI), emotion recognition plays a pivotal role in determining an individual's current situation and interaction context. It has garnered considerable attention due to its extensive applications across sectors including video games [6], medical diagnosis [7], education [8, 9], patient care [10], law enforcement [11, 12], digital marketing and sales [13], entertainment [14], road traffic safety [15], autonomous vehicles [16, 17], smart home assistants [18], surveillance [19], robotics [20], and cognitive edge computing [21]. Consequently, the global market for emotion detection and recognition has grown significantly, with an estimated value of USD 32.95 billion in 2021 and a projected compound annual growth rate of 16.7% from 2022 to 2030 [22]. This growth can be attributed to several factors, including the increasing demand for advanced technologies across industries, the need for enhanced customer experiences, and the rising significance of mental health and well-being. As a result, organizations across sectors are investing in emotion detection and recognition solutions to better understand and cater to their users' needs, driving market growth and innovation.

Human communication is inherently multimodal, involving textual, audio, and visual channels that work together to effectively convey emotions and sentiments during interactions. This underlines the significance of integrating various modalities for more accurate emotion recognition [23]. Multimodality refers to the presence of multiple channels or modalities [23], such as visual, audio, text, and physiology, encompassing a wide range of formats, including text, image, audio, video, numeric, graphical, temporal, relational, and categorical data [24].

Sensing techniques for Multimodal Emotion Recognition (MER) can be categorized into three main types: invasive, contact-based, and contactless [25]. Invasive methods, which are relatively less common, involve the neurosurgical placement of electrodes inside the user's brain to record physiological signals, for example through stereotactic EEG or electrocorticography [26]. On the other hand, contact-based methods are non-invasive but necessitate the use of sensors in direct contact with the skin. Examples of contact-based methods include scalp EEG, disposable adhesive ECG electrodes for heart rate measurement, finger electrodes for electrodermal activity assessment [27], armbands for EMG signal detection, respiration sensors worn around the chest or abdomen, and wearable cameras for capturing facial expressions or body language. Contact-based methods offer the advantage of providing authentic emotional data that is difficult to enact; however, these methods can cause discomfort or psychological distress for users due to the need to wear the equipment [28].

Contactless Multimodal Emotion Recognition (CMER) integrates various sensing techniques that eliminate the need for physical contact with the user, providing a non-invasive and unobtrusive approach to emotion detection. These contactless methods employ an array of sensors, including RGB cameras, infrared/near-infrared cameras, frequency-modulated continuous-wave radars, continuous-wave Doppler radars, and Wi-Fi [29]. Although there has been considerable progress in emotion recognition technology, the current reliance on contact-based data collection restricts the effective implementation and widespread adoption of this technology. Moreover, the lack of a comprehensive and user-friendly CMER system, along with unaddressed ethical challenges, impedes the technology's potential to transform various industries.

Given these challenges, there is a pressing need for a systematic literature review that explores the potential of contactless data collection methods and identifies gaps in the existing research landscape. The extensive review presented in this paper aims to address the problem by critically analyzing and benchmarking the existing review studies on MER, identifying the individual modalities, cues, and modality combinations used in MER, highlighting the importance and need for a CMER system, discussing a comparative schema for selecting modality combinations, addressing ethical considerations and challenges, and providing future research directions. By delving into these concerns, the literature review helps advance the field of CMER systems and ensure their responsible and ethical application across different domains.

The research questions outlined below guide the systematic literature review process. By addressing these research questions, the review aims to provide a comprehensive understanding of the current state and future directions of CMER, ultimately contributing to its advancement and ethical implementation across various applications and domains.

RQ1: How do different emotion recognition modalities function, what limitations do they face, and how can these be overcome through multimodal and contactless methods?

RQ2: What are the existing gaps in multimodal emotion recognition research and how can they be bridged to improve the effectiveness of CMER systems?

RQ3: How can CMER systems be adapted for various real-world scenarios, what criteria should guide the selection of modality combinations and cues, and what are the potential challenges and their respective solutions?

RQ4: What ethical issues are associated with the deployment of CMER technology, and how can these challenges be effectively mitigated?

RQ5: What are the current limitations and upcoming trends in CMER that could shape the direction of future research and development?

This comprehensive literature review stands apart from numerous existing studies on MER. Instead of adhering to traditional reviews that primarily focus on data collection methods, fusion techniques, datasets, and machine learning approaches, our study takes a unique approach to examine this field. We believe that MER has already been extensively covered from traditional perspectives, which is why we have opted for a two-tier approach. In the first tier, we conduct a thorough review of all recent reviews published on MER in general. Our motivation stems from the investigation of contemporary trends, challenges, and issues in this domain. By exploring only the review studies, we provide a more comprehensive understanding of the field than if we were to review other study types separately. In the second tier, we identify and review studies specifically addressing CMER, a more feasible and practical approach to emotion recognition. This tier considers all relevant study types to uncover current methods, developments, challenges, and limitations. We emphasize and justify the importance of contactless emotion recognition over contact-based methods.

The insights gained from this two-tier study enable us to identify the specific set of modalities, cues, and unique modality combinations used in CMER. A thorough review of the existing open datasets of contactless modalities further leads us to discuss the implementation of a CMER system in diverse use cases with the help of a comparative schema, an aspect that has been overlooked in the literature. Our comparative schema, which serves as an assessment model, is grounded in strong theoretical foundations and is supported by justifications derived from our comprehensive review of the relevant literature. Moreover, our study considers the ethical implications of contactless emotion recognition. We outline these challenges and provide potential solutions. Finally, we provide a detailed description of the challenges and limitations in implementing a CMER system while also shedding light on its future prospects, research, and development.

The contributions made by this systematic literature review can be summarized as follows:

1. A comprehensive examination of individual modalities, cues, and models for emotion recognition, underlining the significance of MER, and presenting the benefits of CMER as a solution to address existing limitations (Sect. 2, answer to RQ1).

2. Benchmarking of the survey studies by the selected metrics and identification of unaddressed research gaps, accompanied by a critical review of these gaps, potential solutions, an exploration of available contactless open datasets, and an analysis of unique modality combinations, shedding light on new avenues for research (Sects. 4–6, answer to RQ2).

3. The formulation of a comparative schema for a CMER system, demonstrating its applicability across a diverse range of use-cases, promoting its practical relevance and addressing the potential challenges (Sect. 7, answer to RQ3).

4. A thorough investigation into the ethical implications of CMER systems, providing insights into data protection, privacy issues, and methods to address biases. This includes the proposal of key principles concerning ethics and privacy, framing guidelines for ethical CMER applications (Sect. 8, answer to RQ4).

5. A detailed analysis of the current limitations, potential remedial measures, and emerging trends in the CMER landscape, providing guidance for future research and development in the field (Sect. 9, answer to RQ5).

This study elucidates both the general principles of MER and the specifics of its contactless variant. It explores the roles of various modalities and their associated cues in emotion recognition, highlighting their advantages and disadvantages. Moreover, the study explores how traditionally contact-based cues can be procured through contactless methods. A thorough analysis of the dominance and frequency of usage of each modality, respective cues, modality combinations, and the relevant open datasets within the existing literature offers valuable insights into prevalent trends.

This comprehensive examination aids in identifying unique combinations of modalities and facilitating the development of a comparative schema for determining a particular modality combination suitable for a specific use case and its requirements. The discussions concerning the implementation of CMER on diverse use cases and the modality selection based on their unique requirements, challenges, ethical considerations, potential solutions, and future research directions highlighted in this study provide a stimulating reference guide for AI researchers, AI developers seeking to incorporate these systems into applications, and industry professionals and policymakers who are responsible for shaping guidelines and practices in this emergent field.

The remainder of this article is structured to methodically address the proposed research questions. Section 2 discusses the theoretical background and related work in MER and serves as a comprehensive response to RQ1. Section 3 describes our review methodology, detailing the review protocol, research strategy, selection criteria, and the process of study selection. Thereafter, our study delves into RQ2, which is addressed through the discourse presented in Sects. 4–6. Section 4 offers an exhaustive review of existing literature on MER, whereas Sect. 5 is dedicated to CMER specifically. In Sect. 6, we critically examine existing datasets pertinent to CMER. Section 7 responds to RQ3 by exploring the application of CMER systems across various use cases using a modality-selection schema. Section 8 addresses RQ4 by exploring ethical and privacy concerns associated with CMER and proposing key principles to ensure ethical practice and privacy protection. Finally, Sect. 9, which answers RQ5, consolidates the insights gathered from the study, highlights several challenges and potential solutions, and outlines future avenues for research.

2 Theoretical background and related work

Before exploring the specific challenges and limitations in multimodal emotion recognition, as well as identifying the existing research gaps through an in-depth systematic literature review, it is essential to establish a foundational understanding through a discussion of the theoretical background and a review of related work. This introductory section provides a thorough overview of the various types of emotions, followed by an in-depth analysis of the individual modalities, cues, and models pertinent to emotion recognition. Furthermore, this section also discusses the application of machine learning techniques and the role of pre-trained models in enhancing the efficacy and accuracy of CMER methodologies.

2.1 Emotion types and their impact

Comprehending the subtleties of emotion types and their impact is crucial for developing an effective emotion recognition system that accurately identifies and interprets various emotional states across diverse contexts. Delving into emotion types allows the system to capture the full spectrum of emotions experienced by users, encompassing subtle distinctions, variations, and complex emotions that may be challenging to categorize.

Emotions are intricate mental and physiological states that reflect an individual's circumstances and mindset, steering their actions and decisions. As a result, precise emotion identification is essential for analyzing behavior and anticipating a person's intentions, needs, and preferences. A fundamental aspect of human interaction that facilitates its natural flow is our ability to discern others' emotional states based on subtle and overt cues. This capacity enables us to tailor our responses and behaviors, ensuring effective communication and promoting mutual understanding [30].

Emotions generally fall into two primary categories: positive and negative [31]. Negative emotions, such as anger, sadness, fear, disgust, and loneliness, are linked to harmful consequences on an individual's well-being [32]. On the other hand, positive emotions, like love, happiness, joy, passion, and hope, are perceived as favorable and contribute to overall well-being [33]. While positive emotions reinforce resilience, enhance coping strategies, and foster healthy relationships [34], negative emotions can result in stress, anxiety, and various physical health problems. Consequently, understanding and managing emotions are integral aspects of personal development and maintaining a balanced, healthy lifestyle.

Recognizing the importance of emotion types and their impact is important for creating emotion recognition systems that are more accurate, effective, and better equipped to address the complexities of human emotions in various contexts. This knowledge ultimately leads to improved system performance, user satisfaction, and an enhanced understanding of the emotional landscape that supports human experiences.

2.2 Emotion models

Selecting an appropriate emotion model is fundamental for any emotion recognition system, as it dictates how emotions are represented, analyzed, and interpreted across different contexts. Two primary models, categorical and dimensional, have gained popularity [35]. The former, also known as the discrete model, identifies basic emotions like happiness, sadness, anger, fear, surprise, and disgust [36]. Even though its simplicity makes it extensively used in emotion recognition, it may fail to accurately capture an emotion's valence or arousal [37].

In contrast, dimensional models view emotions as points or regions within a continuous space [38]. The circumplex model of affect is a popular example of this approach; it describes emotions in terms of two independent neurophysiological systems, one related to valence and the other to arousal [15]. This perspective makes it possible to encompass a wider range of emotions and to represent subtle transitions between them. Studies indicate that continuous dimensional models may provide a more accurate description of emotional states than categorical models [39,40,41]. Figure 1 illustrates the mapping of the discrete emotions model to the continuous dimensional model.
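To make the relation between the two model families concrete, the following minimal sketch places the six basic emotions in a two-dimensional valence-arousal space and maps an arbitrary point back to its nearest discrete label. The coordinates, the `EMOTION_COORDS` table, and the `nearest_emotion` helper are illustrative assumptions introduced here; they are not taken from Figure 1 or from any cited study.

```python
import math

# Hypothetical (valence, arousal) coordinates in [-1, 1].
# These values are assumptions chosen for illustration only.
EMOTION_COORDS = {
    "happiness": (0.8, 0.5),
    "surprise": (0.3, 0.8),
    "anger": (-0.6, 0.7),
    "fear": (-0.7, 0.6),
    "disgust": (-0.6, 0.3),
    "sadness": (-0.7, -0.4),
}


def nearest_emotion(valence, arousal):
    """Return the discrete label closest to a point in valence-arousal space."""
    point = (valence, arousal)
    return min(
        EMOTION_COORDS,
        key=lambda label: math.dist(EMOTION_COORDS[label], point),
    )


if __name__ == "__main__":
    # A pleasant, moderately activated state falls nearest to "happiness".
    print(nearest_emotion(0.9, 0.4))
    # An unpleasant, low-activation state falls nearest to "sadness".
    print(nearest_emotion(-0.8, -0.5))
```

A continuous representation of this kind can express intermediate states (for example, a point between "anger" and "fear") that a purely categorical output would have to round to a single label.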

2.3 Emotion modalities

In HCI, a modality can be defined as a communication channel that enables interaction between users and a computer through a specific interface [42]. It can also be seen as a sensory input/output channel between a computer and a human [43]. Modalities represent a diverse range of information sources, each offering different types of data and perspectives [31] that contribute to our understanding of emotional states. Modalities for emotion recognition encompass text, visual signals, auditory signals, and physiological signals. Each offers unique insights and varies in terms of effectiveness and accessibility. While some data can be easily extracted, others might require specialized setups or equipment. An understanding of the characteristics of different modalities can aid researchers in designing comprehensive and effective emotion recognition systems that respond accurately to subtle changes in human emotions.

2.3.1 Text modality

Text modality has been predominantly utilized in sentiment analysis [44, 45]; however, researchers have also discovered that it contains elements of emotions, particularly in data published on social media platforms [46]. Text data have been employed for emotion recognition as a standalone modality [47] or in conjunction with other modalities such as audio [48]. Convolutional neural networks have shown success in emotion recognition from text [49], and recent advances in large language transformers have further improved the accuracy of emotion recognition in text-based data [50]. Despite its potential, relying exclusively on text data for emotion recognition presents several challenges. One such challenge is the contrasting perceptions of emotions between writers and readers [51], which can lead to misinterpretations. Additionally, the absence of contextual meaning within text data can further complicate the process of correctly identifying emotions [52].

2.3.2 Visual modality

Videos provide a multifaceted source of data, combining visual, auditory, and textual elements to enable comprehensive identification of human emotions through multimodal analysis [53]. Video cues include facial expressions [54], body language [55], gestures [56], eye gaze [57], contextual elements within the scene [58], and socio-dynamic interactions between individuals [59]. Researchers have utilized these visual cues, either individually or in combination, to recognize and classify emotions more accurately. The visual modality also plays a crucial role in continuous emotion recognition, a methodology that aims to identify human emotions in a temporally continuous manner. By capturing the natural progression and fluctuations of emotions over time, continuous emotion recognition offers a more realistic and distinct understanding of human emotional states [60]. However, relying solely on the visual modality for emotion recognition, particularly on a single cue, has its limitations, as certain individuals may not express their emotions as openly or clearly. Some people may display subtle or masked emotional cues, making it challenging for visual-based systems to accurately detect and interpret their emotions [61].

2.3.3 Audio modality

Acoustic and prosodic cues, such as pitch, intensity, voice quality features, spectrum, and cepstrum, have been widely studied and employed for emotion recognition [62]. Researchers have also utilized cues like formants, Mel frequency cepstral coefficients, pauses, Teager energy operator-based features, log frequency power coefficients, and linear prediction cepstral coefficients for feature extraction. With the advent of deep learning, new opportunities have arisen for extracting prosodic-acoustic cues [63]. Convolutional neural networks have been effectively applied to extract both prosodic and acoustic features [64, 65]. In addition to these verbal prosodic-acoustic cues, non-verbal cues such as laughter, cries, or other emotion interjections have been investigated for emotion recognition using convolutional neural networks [66]. Since standard convolutional neural networks are limited in capturing temporal information, researchers have also employed alternative deep neural networks like dilated residual networks [67] and long short-term memory networks [68] to preserve temporal structure in learning prosodic-acoustic cues.
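As a rough illustration of two of the cues named above, pitch and intensity, the sketch below computes a root-mean-square intensity and an autocorrelation-based pitch estimate for a single audio frame. It uses only the Python standard library and a synthetic sine tone; the function names (`synth_tone`, `rms_intensity`, `estimate_pitch`) and the default pitch search range of 50 to 500 Hz are assumptions made for this example, and practical systems would typically rely on a dedicated audio library and more robust estimators.

```python
import math


def synth_tone(freq_hz, sample_rate=8000, duration_s=0.1, amplitude=0.5):
    """Generate a synthetic sine tone as a stand-in for a voiced speech frame."""
    n = int(sample_rate * duration_s)
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * i / sample_rate)
        for i in range(n)
    ]


def rms_intensity(frame):
    """Root-mean-square amplitude, a simple proxy for intensity."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def estimate_pitch(frame, sample_rate, fmin=50.0, fmax=500.0):
    """Estimate pitch in Hz from the lag that maximizes the autocorrelation."""
    min_lag = int(sample_rate / fmax)
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag


if __name__ == "__main__":
    frame = synth_tone(200.0)
    print(round(rms_intensity(frame), 3))
    print(round(estimate_pitch(frame, 8000), 1))
```

Frame-level descriptors such as these are usually computed over short overlapping windows and then summarized or passed to a sequence model, which is where the temporal architectures mentioned above come into play.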

Despite the potential of the audio modality for emotion recognition, several challenges remain. One major challenge is selecting the most suitable prosodic-acoustic cues for effective emotion recognition [69]. Another active area of research is determining the appropriate duration of speech to analyze [70]. Furthermore, emotion recognition using the audio modality faces issues such as background noise [71], variability in speech [72], and ambiguity in emotional expression [73], which can impact the accuracy and reliability of the emotion recognition system.

2.3.4 Physiological modality

The physiological modality captures data from the human body and its systems. These responses arise from both the central and autonomic nervous systems [12]. A range of physiological cues can be used to monitor these responses, such as electroencephalography (EEG), electrocardiography (ECG), galvanic skin response, heart rate variability, respiration rate analysis, skin temperature measurements, electromyogram, electrooculography [25], and photoplethysmography or blood volume pulse [74].

Physiological signals, which are involuntary and often unnoticed by individuals, offer a more reliable approach to emotion recognition since they reflect dynamic changes in the central nervous system that are difficult to conceal [61]. Recent studies have extensively explored physiological signals for emotion recognition [75,76,77]. Among these signals, EEG has gained significant attention due to its fine temporal resolution, which captures the rapid changes in emotions [78], and its proximity to human emotions as a neural signal [79].

While physiological signals offer valuable insights into emotion recognition, they also present several challenges. Data acquisition typically requires specialized setups or wearable sensors, which may be invasive, uncomfortable, or inconvenient for users, especially when worn for extended periods. A standard lab setting often involves subjects wearing earphones and sitting relatively motionless in front of a screen displaying emotional stimuli [80]. Despite the advancements in ubiquitous computing that enable the collection of physiological data through electronic devices (e.g., skin conductivity, skin temperature, heart rate), some signals, such as EEG and ECG, still necessitate costly sensors or laboratory environments. Moreover, the reliance on contact-based sensing and voluntary user participation can pose practical difficulties. Another challenge lies in the variability of participants' responses to different stimuli, which can lead to lower emotion recognition accuracy. This issue necessitates a well-designed experimental setup and the careful selection of stimuli to ensure reliable results [81].

2.3.5 Multimodal systems

Multimodal systems do not necessarily require cues from different modalities. Multiple cues from a single modality can also form a multimodal system. For example, the visual modality can combine facial expressions and gaits [59], while the integration of different acoustic cues such as 1D raw waveform, 2D time–frequency Mel-spectrogram, and 3D temporal dynamics can be used for speech emotion recognition [82]. Similarly, different cues from various modalities can form a multimodal system, such as sentence embeddings (text modality), spectrogram (audio modality), and face embeddings (visual modality) as used in [83], or facial expressions and EEG-based emotion recognition used in [84]. However, multiple inputs for the same cue do not constitute a multimodal system (e.g., facial expression recognition using multiple camera views). This leads us to define multimodality as 'the data obtained from more than one cue pertaining to single or multiple modalities, where modalities refer to textual, auditory, visual, and physiological channels.'
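The definition above can be expressed as a simple check: a system counts as multimodal when its inputs cover more than one distinct cue, regardless of whether those cues share a modality, and regardless of how many sensors feed the same cue. The sketch below encodes that rule; the `Input` record, its field names, and the cue labels are hypothetical and serve only to illustrate the definition.

```python
from typing import NamedTuple


class Input(NamedTuple):
    """One data stream feeding an emotion recognition system (hypothetical record)."""
    modality: str  # e.g. "visual", "audio", "text", "physiological"
    cue: str       # e.g. "facial_expression", "gait", "eeg"
    source: str    # e.g. "camera_front"; not relevant to the definition


def is_multimodal(inputs):
    """True when the inputs cover more than one distinct cue."""
    distinct_cues = {(inp.modality, inp.cue) for inp in inputs}
    return len(distinct_cues) > 1


if __name__ == "__main__":
    # Two cues from the same modality: multimodal under the definition.
    print(is_multimodal([
        Input("visual", "facial_expression", "camera_front"),
        Input("visual", "gait", "camera_side"),
    ]))
    # Same cue seen from two cameras: not multimodal.
    print(is_multimodal([
        Input("visual", "facial_expression", "camera_front"),
        Input("visual", "facial_expression", "camera_side"),
    ]))
```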

2.3.6 Contactless multimodal emotion recognition (CMER)

The modalities, including visual cues (face, posture, and gestures), oculography (visible light and infrared), skin temperature, heart rate variability, and respiratory rate can all be obtained via contactless sensors [25]. The primary advantage of CMER is that it minimizes discomfort and inconvenience typically associated with wearable devices or skin-contact sensors, enhancing user experience and promoting natural interactions. By seamlessly integrating into various environments, contactless approaches are well-suited for applications where user comfort and unobtrusive emotion recognition are essential.

The study of CMER encompasses a range of methodologies, including traditional machine learning, deep learning, and the utilization of pre-trained models. These various approaches are elaborated upon in the subsequent discussion.

2.3.6.1 Employing traditional machine learning for CMER

Machine learning has become a popular tool for CMER across a variety of fields, employing both traditional and deep learning techniques. Over the last decade, several machine learning techniques have been employed for CMER. Here, we discuss a few of those proposed in the last few years. These methods first extract the modality features using a feature extractor and then train machine learning algorithms to learn the emotions. These algorithms include support vector machines (SVM), K-nearest neighbors (KNN), random forests (RF), and decision trees (DT), among others. The extracted features cover different combinations of modalities studied for different applications. For instance, the authors in [85] train SVM models on acoustic and facial features for emotion recognition from surgical and fabric masks. It has been found that the performance of machine learning algorithms varies significantly for the same data and task. For instance, the authors in [86] evaluated traditional machine learning algorithms (such as KNN, RF, and DT, among others) as well as extreme learning machines (ELMs) and long short-term memory (LSTM) networks for board game interaction analysis. The results show that ELMs and LSTMs perform best for expressive moment detection, whereas random forests perform best for emotional expression classification.
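The two-stage pattern described above (extract per-modality features, then train a classical classifier on them) can be sketched in a few lines. The example below assumes feature-level fusion by simple concatenation and uses a from-scratch K-nearest-neighbors classifier so that it runs with the standard library alone. The feature values, labels, and the names `fuse`, `knn_predict`, and `TOY_TRAIN` are invented for illustration and do not reproduce the setup of any cited study.

```python
import math
from collections import Counter


def fuse(acoustic, facial):
    """Feature-level fusion: concatenate per-modality feature vectors."""
    return list(acoustic) + list(facial)


def knn_predict(train, query, k=3):
    """Majority vote among the k training samples closest to the query.

    `train` is a list of (feature_vector, label) pairs.
    """
    neighbours = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Toy, hand-made samples: two acoustic features and one facial feature each.
TOY_TRAIN = [
    (fuse([0.90, 0.80], [0.70]), "happy"),
    (fuse([0.80, 0.90], [0.80]), "happy"),
    (fuse([0.85, 0.70], [0.90]), "happy"),
    (fuse([0.20, 0.10], [0.10]), "sad"),
    (fuse([0.10, 0.30], [0.20]), "sad"),
    (fuse([0.30, 0.20], [0.15]), "sad"),
]

if __name__ == "__main__":
    print(knn_predict(TOY_TRAIN, fuse([0.80, 0.80], [0.75])))
    print(knn_predict(TOY_TRAIN, fuse([0.15, 0.20], [0.10])))
```

Swapping `knn_predict` for an SVM, random forest, or decision tree leaves the fusion step unchanged, which is one reason the same features can yield noticeably different results across algorithms.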

Earlier techniques did not consider temporal information and environmental conditions in emotion recognition. However, recent techniques have shown that temporal and environmental factors are increasingly important. For example, the authors in [87] showed the importance of temporal information in emotion detection by proposing a Coupled Hidden Markov Model (CHMM)-based multimodal fusion approach that models contextual information based on the temporal change of facial expressions and speech responses for mood disorder detection. The speech and facial features were used to construct an SVM-based detector for emotion profile generation, and the CHMM was then applied for mood disorder detection. Similarly, environmental factors were studied for emotion recognition in combination with physiological signals by a hybrid approach in [88] that developed RF, KNN, and SVM models. These techniques showed that temporal and environmental context have a significant impact on emotions.

Besides visual and acoustic features, text features have also been studied in combination with these two modalities and have been identified as an important modality. For instance, the authors in [88] used a Gabor filter and SVM for facial expression analysis, regression analysis of audio signals, and sentiment analysis of audio transcriptions using several existing emotion lexicons for tension level estimation in news videos. This work showed that integrating sentiments extracted from text with visual and acoustic features improves the performance of CMER. This also motivated authors to study physiological signals in combination with visual and acoustic modalities. For example, a study [89] exploited facial expressions and physiological responses such as heart rate and pupil diameter for emotion detection, using an extreme learning machine algorithm. However, the setup required for measuring the physiological signals makes it infeasible for practical applications.

2.3.6.2 Employing deep learning for CMER

Owing to the growing performance of deep learning algorithms, the focus of CMER has largely shifted to deep learning architectures. Notable architectures used in emotion recognition include CNNs, Recurrent Neural Networks (RNNs), LSTMs, and transformer-based architectures, used individually or in combination. Most deep learning approaches to CMER emphasize speech signals and audio-visual features [90, 91]. Attention-based methods have further improved performance. A notable instance is a study [92] that utilized an attention-based fusion of facial and speech features for CMER. Frustration detection in gaming scenarios was another application where deep neural networks were applied to audio-visual features [93]. Text features, in combination with visual and audio features, were also studied using deep learning. For instance, depression detection using audio, video, and text features was achieved through deep learning-based CMER [94]. It was found that integrating text features with audio and visual features using deep learning further improved CMER performance.
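As a rough illustration of what attention-based fusion of facial and speech features can look like, the sketch below weights each modality embedding by a softmax over dot-product relevance scores and sums the weighted embeddings. It is not the method of [92]; the scoring vector `query`, the toy embeddings, and the function names are assumptions introduced only for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_fuse(embeddings, query):
    """Fuse same-length modality embeddings with scaled dot-product attention.

    embeddings: dict mapping modality name -> feature vector (list of floats)
    query: hypothetical learned scoring vector of the same length
    Returns (fused_vector, weights_by_modality).
    """
    names = list(embeddings)
    dim = len(query)
    scale = math.sqrt(dim)
    scores = [
        sum(q * x for q, x in zip(query, embeddings[n])) / scale for n in names
    ]
    weights = softmax(scores)
    fused = [
        sum(w * embeddings[n][i] for w, n in zip(weights, names))
        for i in range(dim)
    ]
    return fused, dict(zip(names, weights))

# Toy embeddings (assumed values, not taken from any dataset).
face = [0.9, 0.1, 0.0, 0.2]
speech = [0.2, 0.8, 0.1, 0.0]
query = [1.0, 0.0, 0.0, 0.0]

fused, weights = attention_fuse({"face": face, "speech": speech}, query)
print(weights)
```

In a trained system the scoring vector would be learned jointly with the rest of the network, so the modality weights can change from sample to sample, for example when the face is occluded.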

CMER employed deep learning in a number of interesting applications including education. For example, CMER was applied to younger students during the COVID-19 lockdown by using two convolutional neural networks (CNNs) on acoustic and facial features combined [95]. Another CMER technique used CNN on eye movement and audiovisual features in Massive Open Online Courses (MOOC) environments to classify learners' emotions during video learning scenarios [96]. Further research included student interest exploration, where deep learning was used on head pose as well as facial expression and class interaction analysis [97]. Some methods have also probed into contactless physiological signals with deep learning for CMER; a study employed a method that combined heart rate and spatiotemporal features to recognize micro-expressions [98].

2.3.6.3 Employing pre-trained models for CMER

Progress in deep learning has paved the way for sophisticated, large-scale pre-trained models that are now applied across diverse fields. Pre-trained models are deep learning models trained on large datasets that can be fine-tuned or re-trained for a similar task without training from scratch. They have emerged as a particularly effective tool for CMER, achieving superior results when fine-tuned for the task. Several studies have leveraged pre-trained models for emotion recognition using different modalities such as speech and facial features. Research has also demonstrated the effectiveness of fine-tuning multiple pre-trained models for CMER tasks. Fine-tuning reduces the need for vast datasets, extensive computational resources, and prolonged training times.

One such model is Wav2Vec2.0, a transformer-based speech model pre-trained on large-scale unlabeled speech data, which has been extensively employed for speech-based CMER [99]. Other notable models include CNN architectures such as AlexNet, ResNet, VGG, and Xception, which have been employed for both audio and visual features [82, 100]. Similarly, pre-trained LLMs such as Bidirectional Encoder Representations from Transformers (BERT), Generative Pre-trained Transformer (GPT), and Robustly Optimized BERT Pretraining Approach (RoBERTa) have also been used for CMER with text features [101].

Using multiple pre-trained models, with each modality handled by its own model, has also been explored in several studies to improve CMER performance. For instance, AlexNet was used to extract audio features which, along with visual features extracted by CNN + LSTM architectures, formed an effective combination for CMER tasks [102]. Similarly, the authors in [103] utilized the pre-trained Wav2Vec2.0 and BERT models to extract speech and text features, respectively. Similar multi-model approaches have been adopted by other researchers as well [104]. A notable example is [105], in which a model pre-trained on the ImageNet dataset was used to generate segment-level speech features before passing them to a Bidirectional Long Short-Term Memory with Attention (BLSTMwA) model.

Other techniques involved integrating audio and visual features with the BERT model [106], or fine-tuning separate pre-trained models such as GPT, WaveRNN, and FaceNet + RNN to extract domain-specific text, audio, and visual features, respectively [83]. More recent studies involved fine-tuning an audiovisual transformer for CMER [107], and fine-tuning pre-trained ResNet and BERT models for audio-text-based CMER [108]. Pre-trained models were also extended to continuous emotion recognition to detect arousal and valence, where multiple pre-trained models were tested for feature extraction across different modalities [109].

While fine-tuning single or multiple pre-trained models has been extensively used for CMER, some studies have also proposed specialized pre-trained models, such as MEmoBERT [110], whose specialization makes them strong candidates for CMER methods. This is further supported by recent initiatives such as an open-source multimodal LLM, based on a Video-LLaMA architecture, designed explicitly to predict emotions from multimodal inputs [111].

Given the wide range of CMER techniques, comparing their relative performance is complex because of the varied datasets and evaluation metrics used. This complexity is compounded by differing research approaches (such as deep learning versus traditional machine learning), performance metrics (such as accuracy, concordance correlation coefficient, and F1 score), and the scenarios considered. Furthermore, some studies use publicly available datasets while others rely on self-generated or privately held data. Adding to this complexity, the focus and emphasis vary from article to article, which makes a balanced comparison nearly impossible without additional comprehensive investigation. Future studies should aim for a thorough exploration that presents a benchmark comparison among various methods. Despite these difficulties, there is an observable trend toward improved efficiency in studies that adopt more advanced models and methods over time. In general, several recent studies (e.g., [112, 113]) show that deep learning-based multimodal emotion recognition on speech and image data outperforms traditional machine learning algorithms, because deep learning can find intricate patterns in complex data more efficiently.
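To make the difference between the metrics named above concrete, the following minimal sketch computes accuracy and binary F1 for categorical predictions and the concordance correlation coefficient (CCC) for continuous predictions such as valence or arousal. The formulas are the standard definitions; the function names and the toy inputs are assumptions for illustration and do not come from any of the reviewed studies.

```python
def accuracy(y_true, y_pred):
    """Fraction of labels predicted exactly."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def f1_binary(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

def ccc(x, y):
    """Concordance correlation coefficient using population moments:
    2*cov(x, y) / (var(x) + var(y) + (mean(x) - mean(y))**2)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return 2 * cov / (vx + vy + (mx - my) ** 2)

# Toy labels and predictions (assumed values).
labels = [1, 0, 1, 1]
preds = [1, 0, 0, 1]
print(accuracy(labels, preds), f1_binary(labels, preds))

# A constant offset leaves Pearson correlation at 1 but lowers CCC.
valence_true = [1.0, 2.0, 3.0]
valence_shifted = [2.0, 3.0, 4.0]
print(ccc(valence_true, valence_true), ccc(valence_true, valence_shifted))
```

Because the metrics respond to different kinds of error (CCC penalizes a systematic offset that accuracy and F1 cannot express), results reported under different metrics are not directly comparable.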

As many contactless methods rely on audiovisual modalities, they are prone to manipulation [28] and can be affected by factors such as occlusion, lighting conditions, noise, and cultural differences. To enhance CMER accuracy, it is essential to combine audiovisual modalities with cues from other modalities. The contact-based nature of physiological modality-based methods has traditionally limited their use in CMER; however, recent research has demonstrated promising advancements.

Several studies have successfully acquired physiological cues, traditionally dependent on contact-based methods, using contactless techniques. Examples include measuring heart rate and respiratory rate with remote photoplethysmography [114] and continuous-wave Doppler radars [115]. These breakthroughs have been made possible by recent advancements in multispectral imaging, computing, and machine learning, which facilitate the transformation of contact-based cues into non-contact ones.
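As a simplified illustration of how a heart rate can be read from a remote photoplethysmography trace, the sketch below takes a per-frame intensity signal (for example, the mean green-channel value of a facial region), removes its mean, and picks the strongest frequency within a plausible heart-rate band using a direct discrete Fourier transform. This is not the pipeline of [114]; the band limits of 0.7 to 4 Hz, the function name, and the synthetic trace are assumptions, and real systems add face tracking, detrending, and motion compensation.

```python
import math

def estimate_heart_rate_bpm(signal, fps, low_hz=0.7, high_hz=4.0):
    """Return the dominant frequency of `signal` in beats per minute.

    signal: per-frame intensity values sampled at `fps` frames per second.
    low_hz/high_hz: assumed physiological band for the heart rate.
    """
    n = len(signal)
    mean = sum(signal) / n
    centered = [s - mean for s in signal]
    best_freq, best_power = None, -1.0
    for k in range(1, n // 2 + 1):
        freq = k * fps / n
        if not (low_hz <= freq <= high_hz):
            continue
        # Real and imaginary parts of the k-th DFT coefficient.
        re = sum(c * math.cos(2 * math.pi * k * t / n) for t, c in enumerate(centered))
        im = sum(c * math.sin(2 * math.pi * k * t / n) for t, c in enumerate(centered))
        power = re * re + im * im
        if power > best_power:
            best_freq, best_power = freq, power
    if best_freq is None:
        raise ValueError("signal too short for the requested frequency band")
    return best_freq * 60.0

# Synthetic 10 s trace at 30 fps: a 1.2 Hz pulse plus slow 0.2 Hz drift.
fps = 30
true_hz = 1.2
trace = [
    0.5
    + 0.05 * math.sin(2 * math.pi * true_hz * t / fps)
    + 0.01 * math.sin(2 * math.pi * 0.2 * t / fps)
    for t in range(fps * 10)
]
bpm = estimate_heart_rate_bpm(trace, fps)
print(round(bpm, 1))
```

The frequency resolution is `fps / n`, so longer observation windows give finer heart-rate estimates at the cost of slower response.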

3 Review method

This section outlines the methodological approach employed to conduct a systematic literature review on CMER. We detail the steps taken to ensure a comprehensive, organized, and rigorous examination of the existing literature. The following subsections cover the review protocol, research strategy, inclusion/exclusion criteria, and the study selection process. These elements collectively provide a clear and transparent framework for the systematic review, ensuring that the findings are robust and relevant to the research questions and objectives.

3.1 Review protocol

Our review protocol, developed in accordance with the established guidelines for conducting systematic literature reviews as outlined in [116], is illustrated in Fig. 2. The process begins with the identification of research questions that target the primary theme of our review. These questions guide the scope and objectives of the systematic review. After establishing the research questions, we derive search queries from them and select the databases in which to search for relevant literature. These search queries are applied individually to each chosen database, ensuring comprehensive coverage of the relevant studies. The retrieved papers then undergo a manual analysis to assess their relevance based on our inclusion and exclusion criteria. In an iterative process, we refine the search queries and repeat the search until no significant changes in the results are observed. This approach ensures that we collect the most relevant studies and minimize the risk of overlooking crucial papers. After the iterative refinement, we remove any duplicate papers from the search results and consolidate the remaining papers into a final list of relevant studies. This step reduces redundancy and allows for a more focused analysis of the literature. Finally, we conduct a thorough review of the selected papers, extracting essential insights, identifying trends, and drawing conclusions.
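The search-and-consolidate loop of this protocol can be sketched roughly as follows. The sketch is only an illustration under stated assumptions: the record layout, the title-based duplicate key, the `max_changed` tolerance, and the placeholder records are all hypothetical, and the actual screening in this review involved manual relevance analysis that code cannot reproduce.

```python
import re

def normalize_title(title):
    """Lowercase and collapse punctuation so near-identical titles match."""
    return re.sub(r"[^a-z0-9]+", " ", title.lower()).strip()

def consolidate(results_by_database):
    """Merge per-database result lists, keeping the first record per title."""
    seen, merged = set(), []
    for database, records in results_by_database.items():
        for record in records:
            key = normalize_title(record["title"])
            if key in seen:
                continue
            seen.add(key)
            merged.append({**record, "source": database})
    return merged

def search_until_stable(query_versions, run_query, max_changed=0):
    """Run successive query refinements; stop once results stop changing.

    run_query(query) must return {database_name: [records]}.
    max_changed is an assumed tolerance on how many titles may differ
    between two consecutive rounds.
    """
    previous_keys, merged = None, []
    for query in query_versions:
        merged = consolidate(run_query(query))
        keys = {normalize_title(r["title"]) for r in merged}
        if previous_keys is not None and len(keys ^ previous_keys) <= max_changed:
            break
        previous_keys = keys
    return merged

# Placeholder records standing in for real database responses.
round_two = {
    "DB-A": [{"title": "Placeholder Study One: A Review"},
             {"title": "Placeholder Study Two"}],
    "DB-B": [{"title": "placeholder study one - a review"}],
}
FAKE_INDEX = {
    "v1": {"DB-A": [{"title": "Placeholder Study One: A Review"}],
           "DB-B": [{"title": "placeholder study one - a review"}]},
    "v2": round_two,
    "v3": round_two,
}
final = search_until_stable(["v1", "v2", "v3"], FAKE_INDEX.__getitem__)
print([r["title"] for r in final])
```

In practice a DOI, where available, is a more reliable duplicate key than a normalized title.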

4 Research strategy

Considering our research questions and the objectives of our review, we first conduct a thorough examination of review articles that address MER in general. Following this initial analysis, we shift our focus specifically to CMER. To accommodate these two distinct aspects of our review, we formulate two separate search queries, the details of which can be found in Table 1. It is important to note that our selected keywords may not always appear in the title fields of the articles; therefore, we extend our search to include titles, abstracts, and keywords to identify relevant articles. However, we refrain from searching the entire article text, as this approach could yield numerous irrelevant results. That said, we meticulously analyze the retrieved articles to ensure their relevance to our study. By using this two-tiered search strategy, we are able to comprehensively review the literature on MER while also delving deeper into the specific area of CMER. This approach allows us to effectively address our research questions and gain a deeper understanding of the current state and future directions of this rapidly evolving field.

It is crucial to emphasize that the selection of keywords is meticulously carried out, considering contemporary trends in MER. This process begins with a basic search query, which is refined iteratively as we analyze the retrieved articles. The goal is to ensure that the search query becomes more focused and aligned with the most relevant and current trends in the field. In our second query, we specifically include the keyword “audiovisual” to fetch more articles related to contactless emotion recognition. The addition of this keyword helps to capture a broader range of studies that focus on contactless approaches, reflecting the increasing popularity and effectiveness of audiovisual-based emotion recognition methods in the field. To maintain consistency with our objective of investigating contactless methods, we deliberately exclude the term “EEG” from the second query. Although “EEG” often appears in conjunction with “non-invasive” in the search results, it predominantly refers to a contact-based or even invasive method, which deviates from our research focus. By refining our search queries in this manner, we can create a comprehensive and targeted collection of articles that reflect the current state of research in CMER. This approach ensures that our review is both accurate and up-to-date, allowing us to draw meaningful conclusions and identify areas for future exploration.

4.1 Inclusion/exclusion criteria

In a systematic literature review, well-defined inclusion and exclusion criteria play a crucial role in identifying papers relevant to the research questions for inclusion in the review. Our inclusion/exclusion criteria are based on relevance, specific databases, date of publication, study type, review method, completeness of study, and publication language. We selected studies from prominent and pertinent databases, which were chosen for their comprehensive coverage of literature in the relevant fields, ensuring access to a wide range of high-quality research articles. The selected studies included journal articles and papers published in conference proceedings and workshops. To focus on the most recent developments and advancements in the area, we set the publication range from 1st January 2017 to 1st March 2023. This timeframe was chosen to provide a comprehensive overview of the recent trends, methods, and findings while maintaining the review’s relevance and currency.

For the first query, our focus is exclusively on review articles. We filter the results by using the available option within the database to specifically select review articles or, when such an option is not provided, by manually analyzing and selecting the review articles. This approach aligns with the scope of our literature review, as our primary objective is to focus on the review articles related to MER, enabling us to identify research gaps unaddressed in existing reviews. In recent years, review articles on MER have already assessed various study types, including experimental studies, case studies, qualitative, and quantitative research. Consequently, we chose not to include these study types in the first tier of our literature review, as they would not offer any new insights. By focusing on review articles, we can obtain a more comprehensive understanding of the existing research landscape, allowing us to recognize trends, gaps, and potential areas for future exploration in the field of MER.

Since our study specifically emphasizes CMER, it is essential to consider other study types in addition to review papers in the second query. By doing so, we aim to conduct a comprehensive review of the existing modalities, data collection methods, fusion techniques, and machine learning approaches relevant to the topic. This thorough examination enables us to find plausible answers to our research questions and better understand the current state of the field. Furthermore, incorporating other study types, such as experimental studies, case studies, qualitative, or quantitative research, allows us to explore the nuances and complexities of CMER. This inclusive approach also helps us identify any potential gaps or shortcomings in the existing literature, paving the way for further advancements in the field. Moreover, considering various study types provides valuable insights into the practical implementation of CMER, ensuring that our research is both relevant and applicable to real-world scenarios.

Our selection criteria focus on comprehensive, peer-reviewed studies available in full text. This encompasses journal articles, conference proceedings, and workshop papers, all published in English. We source these materials from a mix of open-access platforms, subscription-based databases, and preprint repositories, including Web of Science (WoS), IEEE Xplore, ACM, EBSCO, SpringerLink, Science Direct, and ProQuest. The subject areas under consideration span a diverse range, including computer science, psychology, neuroscience, signal processing, human–computer interaction, and artificial intelligence.

We exclude certain types of content to maintain the focus of our study. Editorial and internal reviews, incomplete studies, extended abstracts, technical reports, white papers, short surveys, and lecture notes are left out of the selection. In addition, we do not include articles that are primarily concerned with sociology, cognition, medicine, behavioral science, linguistics, and philosophy, in order to maintain a specific thematic focus.
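The machine-checkable part of these criteria can be expressed as a simple filter, sketched below. The date window, the English-language requirement, the allowed publication types, the full-text requirement, and the review-only restriction for the first query come from the criteria stated in this section; the record fields (`language`, `published`, `type`, `full_text_available`, `is_review`) and the sample records are hypothetical. Relevance and subject-area decisions were made manually and are not modeled here.

```python
from datetime import date

WINDOW_START = date(2017, 1, 1)
WINDOW_END = date(2023, 3, 1)
ALLOWED_TYPES = {"journal", "conference", "workshop"}

def is_eligible(record, reviews_only=False):
    """Apply the automatable inclusion criteria to one bibliographic record.

    reviews_only=True mirrors the first query, which keeps review articles only.
    """
    if record["language"] != "English":
        return False
    if not (WINDOW_START <= record["published"] <= WINDOW_END):
        return False
    if record["type"] not in ALLOWED_TYPES:
        return False
    if not record["full_text_available"]:
        return False
    if reviews_only and not record["is_review"]:
        return False
    return True

# Hypothetical records used only to exercise the filter.
candidates = [
    {"id": "A", "language": "English", "published": date(2021, 5, 1),
     "type": "journal", "full_text_available": True, "is_review": True},
    {"id": "B", "language": "English", "published": date(2016, 12, 31),
     "type": "journal", "full_text_available": True, "is_review": True},
    {"id": "C", "language": "English", "published": date(2020, 3, 15),
     "type": "white paper", "full_text_available": True, "is_review": False},
    {"id": "D", "language": "English", "published": date(2022, 9, 9),
     "type": "conference", "full_text_available": True, "is_review": False},
]

query1_ids = [r["id"] for r in candidates if is_eligible(r, reviews_only=True)]
query2_ids = [r["id"] for r in candidates if is_eligible(r)]
print(query1_ids, query2_ids)
```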

4.2 Study selection process

Figure 3 presents the PRISMA flow diagram demonstrating the study selection process used in our systematic review and meta-analysis. Together, Query 1 and Query 2 initially yielded 2196 articles across all databases. Some databases, such as WoS, allowed direct filtering for review articles. In contrast, databases such as SpringerLink required manual examination of search results for articles featuring the term “review” in their titles. In this phase, we excluded non-English articles, studies published before 2017, and incomplete studies, reducing the article count to 395. Additionally, for Query 2, we manually excluded studies that utilized electroencephalography (EEG) or other brain imaging techniques as the primary recording channel. This exclusion was essential due to the current limitations in recording such data in a contactless manner. Following this, all references were collated in the Mendeley Reference Manager. Subsequently, in the second phase, abstracts underwent additional scrutiny to exclude experimental studies from Query 1 and papers relating to other disciplines such as behavioral sciences. This left us with 177 full papers for detailed examination.

Upon reviewing these papers thoroughly, we further excluded 79 articles. Exclusion criteria included duplication, lack of direct relevance to Human–Computer Interaction (HCI), absence of significant insights (often found in short conference papers), or exclusive focus on unimodal techniques. Ultimately, this meticulous process led to the inclusion of 98 papers in our final study.
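The counts reported for the selection process can be checked with a few lines of arithmetic. The sketch below uses only the numbers stated above (2196, 395, 177, and 98); the stage labels are paraphrases chosen for illustration.

```python
# Article counts at each stage, as reported in the text.
stages = [
    ("records retrieved by Query 1 and Query 2", 2196),
    ("after first-phase filtering", 395),
    ("after abstract screening", 177),
    ("included after full-text review", 98),
]

def exclusions(stages):
    """Return (stage_name, number_removed_to_reach_it) for each step."""
    removed = []
    for (_, before), (name, after) in zip(stages, stages[1:]):
        if after > before:
            raise ValueError(f"count increased at stage: {name}")
        removed.append((name, before - after))
    return removed

removed = exclusions(stages)
for name, n in removed:
    print(f"{name}: {n} removed")
```

The last difference, 177 - 98 = 79, matches the number of articles excluded during the full-text review.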

Our meticulous selection process ensures that the articles included in our review are highly relevant to our research questions and objectives, providing a comprehensive understanding of MER and addressing any existing gaps in the literature.

5 Benchmarking multimodal emotion recognition: analysis of key metrics and research gaps

To explore the state of the art in MER, we conduct a comprehensive review of existing literature on the subject. Our first objective is to identify relevant comparison and analysis metrics that can effectively evaluate each study. For this purpose, we perform a thorough examination of prior work in this field [10, 12, 23, 31, 72, 81, 117–144], identifying the key metrics shown in Fig. 4. These metrics are explained in detail in Table 2. Following the identification of these metrics, we carefully assess each review article using these criteria as a guiding framework. It is worth acknowledging that while it may not be feasible to address all these metrics in any single study, our aim in identifying them and comparing them with the existing literature is to shed light on potential research gaps that could be addressed in future work. In addition, identifying and comparing these metrics across the existing literature establishes a common framework for evaluation and benchmarking and highlights best practices and successful methodologies. Some acronyms used in the analysis are listed in the table in the Appendix (supplementary information).

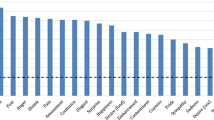

By analyzing the distribution of modalities and cues used in the study set, our comprehensive review provides a thorough understanding of the current landscape of MER research (see Figs. 5 and 6), emphasizing the significant role that non-verbal cues play, notably physiological signals such as EEG, ECG, and EDA, either individually or in combination with visual inputs. These signals can reveal subconscious emotional states that are often too subtle for other modalities to capture. However, implementing physiological signal-based systems in the wild remains a challenge due to the aforementioned issues.

Fig. 5 Distribution of modalities in the study set. Physiological signals emerge as the predominant modality for emotion recognition, both when utilized individually and when combined with other modalities (see also Fig. 7)

Fig. 6 Coverage of cues within the study set; cues highlighted in red have the highest coverage. Among the physiological signals, EEG and ECG exhibit the most extensive coverage. FER emerges as the most widely employed cue within the visual group. In the audio and text groups, MFCC, Text Reviews (TR), and Word Embeddings (WE) are the cues most frequently utilized

Our findings also highlight diverse combinations of modalities utilized in emotion recognition (see Fig. 7). In addition to the physiological and physiological–visual cues that are still largely required for the contact-based techniques, audio–visual modalities have been widely applied to MER, especially in the context of CMER. Some studies also explore the combination of audio, visual, and physiological modalities. Despite a smaller representation of text modality in our review, its effectiveness, particularly in conjunction with other modalities, is noteworthy. This is especially true in the context of conversational agents, where user input is largely text-based, emphasizing the necessity of an efficient emotion recognition system that incorporates audio, visual, text, and physiological modalities.

Fig. 7 Distribution of modality combinations within the study set. Physiological signal combinations are the most extensively employed choice, owing to their capability to accurately detect subtle emotional changes, their relative immunity to deliberate manipulation, and their provision of continuous, real-time data, which enables a more comprehensive understanding of emotions

Within the scope of behavioral cues, Facial Emotion Recognition (FER) is highly employed, owing to its intuitive nature and the advancements in computer vision and machine learning that facilitate automated analysis. In addition, facial features provide profound insights into human emotion and behavior and are considered to be the strongest source of emotion cues [145, 146]. Paired with body gestures and movements, FER can provide a richer emotional context. Likewise, audio features such as Mel Frequency Cepstral Coefficients (MFCCs) and prosodic characteristics yield crucial emotional insights by dissecting spectral and rhythmic speech elements. Text modality, enhanced by natural language processing techniques like sentiment analysis, offers an added layer of emotional context, supporting the effectiveness of MER systems (see Fig. 6).

Figure 4 also categorizes the benchmarking metrics into two primary groups based on their coverage in review studies. Metrics in green are well covered, representing a common pattern followed by most studies. In contrast, metrics in orange indicate research gaps, as few studies have addressed them. Figure 8 illustrates which metrics have been adequately addressed in the existing studies and which have not received sufficient attention. To identify the research gaps, which are represented by the under-addressed metrics, we established a coverage threshold of 30%.
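One way to read the 30% threshold is that a metric counts as a research gap when fewer than 30% of the review studies cover it. The sketch below implements that reading; the interpretation of the threshold as a strict lower bound, the metric names, and the counts are all assumptions made for illustration and are not the values behind Fig. 8.

```python
def find_gaps(coverage_counts, n_studies, threshold=0.30):
    """Return {metric: share} for metrics covered by fewer than
    `threshold` of the studies (assumed strict comparison)."""
    gaps = {}
    for metric, count in coverage_counts.items():
        share = count / n_studies
        if share < threshold:
            gaps[metric] = share
    return gaps

# Invented counts: how many of 10 hypothetical reviews cover each metric.
toy_counts = {
    "modalities": 9,
    "datasets": 7,
    "ethics and privacy": 1,
    "computational complexity": 2,
    "explainability": 3,
}
gaps = find_gaps(toy_counts, n_studies=10)
print(sorted(gaps))
```

With a strict comparison, a metric covered by exactly 30% of the studies is not flagged; whether the boundary case counts as a gap is a design choice that should be stated alongside the threshold.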

One notable limitation in existing review studies is the lack of attention given to ethical and privacy concerns. With the rapid advancement of artificial intelligence, numerous ethical implications and privacy-preserving challenges have arisen. These concerns are crucial to address, as they involve respecting individuals’ rights, ensuring fairness, and maintaining public trust in AI-enabled systems. We address ethical and privacy issues in detail in Sect. 7, where we also highlight the key principles for ensuring ethical and privacy compliance.

The computational complexity of MER systems is also overlooked in many review studies. The addition of more modalities to emotion recognition systems correlates with increased computational complexity [147], which can hinder real-time system deployment. Evaluating this complexity is vital in determining if these systems are feasible for real-time applications. There is no one-size-fits-all approach to managing computational complexity, but several strategies can enhance real-time performance. For example, we can select features that are highly representative of the emotional state [120], or apply dimensionality reduction to multimodal feature vectors [148] to simplify the model. In terms of deep learning models, the careful selection of parameters like convolution kernel size [149], the total number of model parameters [150], and the sizes of masks and weights in attention networks [151] can help manage complexity. Another strategy could be the utilization of the correct type of features for the task at hand. For instance, using virtual facial markers for facial emotion recognition (FER) may be more efficient than an approach based solely on image pixels [147].
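To illustrate how the convolution kernel size affects the total number of model parameters, the sketch below applies the standard parameter count for a 2D convolutional layer with square kernels: input channels times output channels times kernel area, plus one bias per output channel. The channel sizes are arbitrary values chosen for illustration and do not describe any model in the cited studies.

```python
def conv2d_params(in_channels, out_channels, kernel_size, bias=True):
    """Number of trainable parameters in a standard 2D convolution
    with a square kernel (no grouping or weight sharing assumed)."""
    weights = in_channels * out_channels * kernel_size * kernel_size
    return weights + (out_channels if bias else 0)

# Same layer width, three kernel sizes.
counts = {k: conv2d_params(64, 64, k) for k in (3, 5, 7)}
for k, n in counts.items():
    print(f"{k}x{k} kernel: {n} parameters")
```

Moving from a 3x3 to a 7x7 kernel multiplies the weight count by 49/9, roughly 5.4, for the same channel widths, which is why kernel size is one of the levers for managing model complexity.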

The importance of robustness and reliability in emotion recognition (ER) systems cannot be overstated; however, these factors have received inadequate attention in review studies. Robustness characterizes the system’s capacity to sustain performance in the face of external disturbances, and reliability represents its consistency in delivering accurate results over time. These qualities become particularly crucial in healthcare settings where lives hang in the balance. Take, for instance, an MER system employed for pain detection: it must be highly robust to assess pain accurately. Several methods can be used to enhance the robustness and reliability of an MER system, such as reducing the influence of environmental factors like noise [152], applying robust fusion techniques such as transformer-based cross-modality fusion [83], employing data augmentation in training MER models [153], and adopting self-supervised learning approaches [154]. Recent studies have also indicated the high robustness of transformer-based multimodal ER models and deep canonical correlation analysis (DCCA) when combined with an attention-based fusion strategy [113, 155]. A more recent approach tackles the issue of robustness and reliability in MER systems by providing explanations for the predictions, thereby addressing the issue of label ambiguity due to the subjective nature of emotions. The model’s predictions are deemed correct if the reasoning behind them is plausible [111].

Context awareness remains yet another significant research gap in the survey studies. While the integration of multiple modalities indeed enhances contextual comprehension, it only addresses one aspect of the overall context. The notion of context is vast, encompassing various elements like time, location, interaction, and ambiance, among others [156]. Recent studies have shown that self-attention transformer models are effective in capturing long-term contextual information [157]. These models can help in associating a modality cue—for instance, facial emotion recognition (FER)—with context cues such as the body and background [117]. Likewise, by employing a self-attention transformer, we can use background information and socio-dynamic interaction as contextual cues in conjunction with other modality cues like FER and gait [59]. Therefore, these transformer models represent promising approaches for improving our understanding of the context in MER.

Although transparency and explainability are becoming increasingly significant in the field of AI, existing survey studies often overlook these aspects in emotion recognition. This oversight can largely be attributed to the limited focus on these attributes in emotion recognition research itself. Earlier techniques mostly confined themselves to identifying influential modalities or cues [158, 159]. Nevertheless, a growing interest in these aspects has been observed recently. For instance, the authors in [160] sought to explain the predictions of MER systems using emotion embedding plots and intersection matrices. A recent study [161] implemented a more advanced technique that explains its recognition results by leveraging situational knowledge, which includes elements such as location type, location attributes, and attribute-noun pair information, as well as the spatiotemporal distribution of emotions.

The issue of inter-subject data variance, or individual differences in emotion expression and perception, is often overlooked in emotion recognition research. Data related to specific modalities and emotions can vary significantly from person to person. For example, facial expressions, gait analysis, gestures, and physiological signals may not universally represent emotions, as individual responses and expressions can differ. More research about domain adaptation and developing attention-based models could help tackle inter-subject data variability [81].

6 Contactless multimodal emotion recognition (CMER)

In our second-tier investigation, we review the method papers that utilize MER techniques ([70, 85–89, 93–99, 162–212]), where modalities are obtained through sensors that are not in physical contact with the user’s skin. A comprehensive analysis of contactless studies, exploring aspects like modality combination, types of emotion models, applications, and methods, is presented in Fig. 9. In our comparative analysis, we differentiate between verbal and nonverbal cues within the audio modality, treating them as separate entities. Verbal cues primarily pertain to the content of speech, while nonverbal cues encompass various non-linguistic elements like laughter, cries, or prosodic features such as tone, pitch, and speech rate, amongst others. This distinction enables a clearer understanding of how different aspects of audio modalities have been explored. Similarly, we distinguish between RGB and thermal cues within the visual modalities to differentiate the roles of different visual cues in CMER. It is pertinent to mention that all the methods summarized in this section utilizing physiological modality use contactless techniques for data collection.

We identify the specific modality combinations used in CMER systems along with their percentage coverage, as shown in Fig. 10. The audio-visual combination emerges as the dominant combination of modalities. Specifically, verbal cues along with RGB visual cues remain dominant because verbal communication is a key form of human interaction, while RGB visual cues provide critical context, enhancing communication effectiveness. Nonverbal audio cues and thermal visual cues remain under-explored as augmenting modalities despite their effectiveness. These cues have the potential to reveal subtle elements of emotional responses, engagement levels, and intentions that are not explicitly conveyed through verbal and/or RGB visual cues. Similarly, the integration of text and contactless physiological signals as auxiliary modalities has been inadequately studied, despite their unique benefits (see Sects. 1.4.1, 1.4.4 and Sect. 5). Among other modality combinations, the audio-visual-physiological group has not been adequately explored, although it could be promising.

Most of the studies have focused only on the categorical model, potentially due to its simplicity. Dimensional models, which provide a more complex but realistic representation of emotions as discussed in Sect. 3.1, have not been adequately addressed, potentially because of their inherent complexity.

We also analyze the applications of CMER systems in the studies in the context of three major fields: healthcare, education, and social interaction due to their intrinsic connection with human emotions. Despite the presence of studies discussing the application of existing CMER methods in one or more of these sectors, most research tends to address CMER in a general manner. We explore the implementation of the CMER system in these specific sectors along with other diverse scenarios in Sect. 6.

Due to the variation in modality groups and evaluation criteria across different studies, we do not draw performance comparisons between the CMER methods. However, we assess these studies based on the employed machine learning techniques: traditional methods like random forest, nearest-neighbors, and SVM [213], in contrast with deep learning methods. An upward examination of Fig. 9 reveals a progressive shift in CMER from traditional machine learning to deep learning. This shift can be attributed to deep learning’s inherent ability to learn feature representations dynamically, which leads to enhanced accuracy and improved generalization. In addition, the proliferation of large-scale datasets and the surge in computational resources have further enhanced the accuracy of deep learning techniques in emotion recognition tasks.

For a comprehensive discussion of machine learning and deep learning emotion recognition techniques, we recommend recent surveys [214, 215], which explore this topic in greater detail.

7 Existing datasets for contactless multimodal emotion recognition

The availability of open datasets is of utmost importance in the advancement of MER systems, as they serve as the foundation for training AI models. The quality and diversity of these datasets directly impact the system’s ability to generalize and accurately detect emotions in real-world scenarios. Open datasets not only facilitate the initial development and training of models but also contribute to their evaluation. By testing the system on separate data sets, developers can assess its performance and robustness against unseen instances, ensuring its reliability and validity. Furthermore, open datasets foster faster research progress by eliminating the need for individual data collection efforts. Conducting a review of existing open datasets also holds value in curating a selection of datasets specifically designed for contactless modalities. This analysis provides additional support for one of the metrics outlined in our comparative schema—data availability for a specific use-case (refer to Sect. 6).

Several open datasets have been published over the past 15 years, many of which have been previously examined in reviews such as [81, 124, 213]. In this section, we present a comprehensive list of relevant datasets, with a specific focus on CMER. Table 3 provides an overview of 33 datasets, each offering at least two contactless modalities. For these datasets, we select six key features that we deem most relevant when selecting data for model development in a specific use case or application. However, it is important to acknowledge that directly comparing these datasets is challenging due to their inherent heterogeneity. They were collected under varying conditions, utilizing different properties, and have distinct limitations. These factors will be discussed next.

In our dataset listings, we prioritize providing the number of separate subjects (N) instead of individual samples. This is crucial for ensuring model generalization to a broader population beyond the training sample. However, for datasets derived from movies, TV series, and streaming services, the exact count of individual subjects is often not available. In such cases, we report the number of annotated samples instead. To maintain consistency and statistical significance, we have included only datasets with a minimum of ten subjects or samples. Datasets smaller than this threshold are typically inadequate for model development in practical applications.
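The inclusion rule described above can be sketched in a few lines of Python. This is only an illustrative sketch: the `DatasetEntry` class, its field names, and the catalogue entries are hypothetical and do not correspond to rows of Table 3. The only element taken from the text is the threshold of ten, applied to the subject count when it is known and to the annotated sample count otherwise.

```python
from dataclasses import dataclass
from typing import Optional

MIN_SIZE = 10  # threshold stated in the text: at least ten subjects or samples


@dataclass
class DatasetEntry:
    """Hypothetical catalogue record; field names are illustrative."""
    name: str
    n_subjects: Optional[int]  # None when the subject count is not available
    n_samples: Optional[int]   # annotated samples, used as a fallback


def reported_size(entry: DatasetEntry) -> Optional[int]:
    """Prefer the number of subjects; fall back to annotated samples."""
    if entry.n_subjects is not None:
        return entry.n_subjects
    return entry.n_samples


def keep(entry: DatasetEntry, min_size: int = MIN_SIZE) -> bool:
    """Apply the minimum-size inclusion rule."""
    size = reported_size(entry)
    return size is not None and size >= min_size


# Made-up entries, for illustration only.
catalogue = [
    DatasetEntry("lab_corpus_a", n_subjects=42, n_samples=None),
    DatasetEntry("tv_clips_b", n_subjects=None, n_samples=5000),
    DatasetEntry("pilot_c", n_subjects=6, n_samples=None),
]
selected = [d.name for d in catalogue if keep(d)]
print(selected)
```

Keeping the two counts in separate fields makes it explicit which quantity a listing reports, which matters when subject counts and clip counts are compared side by side.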

Regarding annotated emotions, we report the number of distinct classes for categorical emotion models and valence and/or arousal for dimensional models, as discussed in Subsection 1.2. Some datasets offer both types of annotations. We acknowledge that certain properties are difficult to evaluate precisely and indicate them with an asterisk (*) symbol. For instance, the genuineness of emotions in TV series (e.g., reality TV) and online videos (e.g., YouTube blogs) cannot be accurately assessed. Another point of ambiguity lies in the number of subjects. Notably, for datasets MELD and MEmoR, we report 13,708 and 8,536 samples (clips), respectively. However, all these clips are derived from the TV series ‘Friends’ and ‘Big Bang Theory,’ predominantly featuring the main actors (6 and 7) from their respective shows.

Among the 33 datasets listed in Table 3, the distribution of modality groups is as follows: 18 datasets include audio-visual modalities (AVF), 7 datasets include audio-visual and physiological modalities (AVF + CPS), 6 datasets include visual-audio-text modalities (VATF), and 1 dataset includes visual, thermal, and physiological signals (VF + Th + CPS). While all aspects of training data are crucial for developing models applicable to real-life scenarios, we want to highlight three specific features in Table 3: condition, spontaneity, and emotions. Firstly, the condition under which the data is collected significantly impacts the generalizability of machine vision applications. In controlled environments, low-level features of the data remain relatively consistent, whereas in-the-wild data can exhibit significant variation. This variation affects aspects such as video resolution, occlusion, brightness, angles, ambient sounds, and noise levels. A model deployed in real-life applications must be capable of performing well under such non-ideal conditions. Secondly, we emphasize whether the data captures genuine emotions or acted/imitated emotions. Genuine emotions are preferred to ensure the authenticity and real-life accuracy of the trained recognition model. Lastly, the choice of target labels determines the dataset’s suitability for a specific use case. A significant distinction exists between datasets employing continuous models (valence/arousal) and those using discrete emotion models. The count of categories for discrete emotions varies widely, ranging from 3 to 26. Some datasets even include mental states such as boredom, confusion, interest, antipathy, and admiration. For certain applications (e.g., product reviews and customer satisfaction), distinguishing between negative, neutral, and positive emotions may suffice, whereas other applications require finer-grained emotion distinctions and benefit from more nuanced annotations.
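When an application only needs negative, neutral, and positive classes, fine-grained categorical labels can be collapsed before training. The sketch below is illustrative only: the `POLARITY` table is our own assumption and is not taken from any dataset in Table 3, and ambiguous labels such as confusion are deliberately left unmapped so that they raise an error instead of being silently assigned.

```python
from collections import Counter

# Assumed label-to-polarity table (hypothetical, for illustration only).
POLARITY = {
    "joy": "positive",
    "admiration": "positive",
    "interest": "positive",
    "neutral": "neutral",
    "anger": "negative",
    "sadness": "negative",
    "fear": "negative",
    "disgust": "negative",
    "boredom": "negative",
    "antipathy": "negative",
}


def to_polarity(label: str, mapping: dict = POLARITY) -> str:
    """Collapse a fine-grained label into negative / neutral / positive."""
    key = label.strip().lower()
    if key not in mapping:
        # Refuse to guess: unmapped labels need an explicit decision.
        raise KeyError(f"no polarity assigned to label {label!r}")
    return mapping[key]


labels = ["Joy", "anger", "neutral", "boredom", "admiration"]
coarse = [to_polarity(label) for label in labels]
counts = Counter(coarse)
print(coarse, dict(counts))
```

Inspecting the class counts after collapsing is a quick way to see whether the coarse labels are heavily imbalanced before a model is trained on them.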

Deep neural networks typically require a substantial amount of data for effective training. To address this, there are several strategies one can employ. Firstly, it is advisable to combine multiple existing datasets that are suitable for the task at hand. This approach helps increase the overall data volume and diversity, enhancing the model’s ability to generalize. Additionally, collecting new data using the same setup as the target application is essential to ensure that the model is trained on data that closely resembles what it will encounter during inference. Furthermore, data augmentation techniques can be leveraged to expand the existing dataset. Data augmentation has become a viable option, particularly with the introduction of Generative Adversarial Network (GAN) models and generative AI in general [246]. While image data augmentation is widely used, methods for augmenting audio-visual and physiological data also exist. For example, the authors in [179] proposed an augmentation method that considers temporal shifts between modalities and randomly selects audio-visual content. The authors in [168] developed GAN models specifically for audio-visual data augmentation. The authors in [174] demonstrated the effectiveness of data augmentation for physiological signals, improving emotion detection performance in deep learning models. Apart from expanding the training data, data augmentation can also help address potential biases in the dataset, such as gender or minority imbalances (see Sect. 7 for further discussion of ethical aspects).
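To make the idea of a temporal shift between modalities concrete, the following is a minimal sketch and not the method of [179]. It assumes that audio and video features have already been resampled to the same frame rate and stored as equal-length sequences; the function names `shift_pair` and `random_shift` and the parameter `max_shift` are hypothetical.

```python
import random


def shift_pair(audio, video, offset):
    """Pair audio frame t with video frame t + offset.

    Both sequences are cropped to the overlapping region, so the
    returned sequences have length len(audio) - abs(offset).
    """
    if len(audio) != len(video):
        raise ValueError("audio and video must have the same number of frames")
    n = len(audio)
    if abs(offset) >= n:
        raise ValueError("offset must be smaller than the sequence length")
    if offset >= 0:
        return audio[: n - offset], video[offset:]
    return audio[-offset:], video[: n + offset]


def random_shift(audio, video, max_shift, rng=random):
    """Draw an offset uniformly from [-max_shift, max_shift] and apply it."""
    offset = rng.randint(-max_shift, max_shift)
    return shift_pair(audio, video, offset)


# Toy sequences standing in for per-frame feature vectors.
audio_frames = list(range(6))
video_frames = list("abcdef")
aug_audio, aug_video = random_shift(
    audio_frames, video_frames, max_shift=2, rng=random.Random(0)
)
print(aug_audio, aug_video)
```

Passing a seeded `random.Random` instance keeps the augmentation reproducible across runs. Small offsets mimic the slight misalignment between microphone and camera streams that a deployed system may encounter.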

8 Harnessing CMER for diverse use-cases

We explore the real-life scenarios in this section and explain how a CMER system can be effectively applied within the unique constraints and requirements of each use-case. The intention behind this detailed exploration is multifold. Primarily, it serves to highlight the versatility and adaptability of CMER systems, demonstrating their broad applicability across a range of scenarios with varying conditions and demands. Additionally, it provides a concrete, contextual understanding of how the selection and combination of modalities can be tailored to meet the specific needs of each use-case, thus adding a practical dimension to the theoretical understanding of these systems. By discussing the unique challenges inherent to each scenario and how these can be addressed within the framework of CMER, we aim to equip readers with a practical understanding that will inform and guide the future implementation of these technologies.

In our analysis, we identify a variety of modality combinations utilized in existing studies and propose a comparative schema comprising a wide range of metrics to evaluate the performance of these modalities. Figure 11 shows the comparative schema for CMER, which benchmarks modality combinations such as audio-visual, physiological signals, and visual-audio-text, amongst others, against performance parameters such as real-time performance, in-the-wild feasibility, and context awareness, to name a few. Each cell in this comparative schema corresponds to the performance of a specific modality combination against a given metric. The process of constructing the comparative schema involved a detailed review and analysis of relevant literature, as well as an intuitive understanding derived from the studies. The assignment of the values “Low”, “Moderate”, or “High” for each cell was carried out based on a consensus-driven analysis of the literature and the real-world application of these systems.

The metrics are segmented into two distinct sets, each representing a unique aspect of the performance evaluation. The first set, highlighted in blue, represents ‘positive metrics.’ A higher value in this set, such as a ‘High’ rating in ‘real-time performance,’ is indicative of superior performance or desirable attributes. Conversely, the second set, marked in orange, comprises ‘negative metrics.’ Within this set, an elevated value represents less favorable outcomes. For instance, a ‘High’ score in ‘processing complexity’ implies a significant computational demand, which is generally regarded as undesirable in the context of efficient and resourceful system design.

The term 'contactless' measures the system's capability to function with contactless interaction. An emotion recognition system that retrieves modality data from a smartphone, for instance, exhibits moderate contactless operation. Context awareness signifies the system's need for contextual data to accurately identify emotions. Systems functioning in a controlled environment, such as vital signs monitoring, require less context awareness than those operating in uncontrolled or 'wild' conditions. Data availability pertains to the accessibility of open datasets or the simplicity of gathering new data. Noise sensitivity involves the unintentional incorporation of undesired signals in a modality, which could occur due to external sounds in audio modalities, irrelevant objects in visual modalities, or electrical interference in physiological modalities.

Sensitivity to environmental conditions indicates how a system's performance might be affected by factors such as light intensity, weather conditions, or ambient temperature. Ethical and privacy concerns might range from low to high based on the application context. For example, a system designed for driver attention detection may carry fewer ethical and privacy implications than a system used in healthcare. Deliberate enactment refers to the faking of emotions by subjects. This factor could be moderate in a healthcare setting where patients might exaggerate symptoms, and higher in a law enforcement scenario where there's an incentive to deceive. User discomfort refers to any negative feelings users may have towards the system, whether due to its complexity or to any other factor that detracts from a smooth user experience. For a given modality combination, data availability is judged by whether suitable open datasets exist for training a model that uses all of the corresponding modalities simultaneously. This comparative schema aids in the selection of suitable modalities based on the requirements and constraints of a given use-case. It takes into account not only the functional attributes of each modality combination but also their implications on ethical and privacy concerns and user discomfort, ensuring a well-rounded and thorough evaluation.
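One way to use such a schema in practice is to turn the qualitative ratings into a weighted score per modality combination. The sketch below is only an illustration under stated assumptions: the numeric encoding of "Low", "Moderate", and "High", the weights, and every rating in `schema` are placeholders and not the values of Fig. 11. The text names real-time performance as a positive metric and processing complexity as a negative one; treating data availability as positive and noise sensitivity as negative is our assumption.

```python
LEVEL = {"Low": 0, "Moderate": 1, "High": 2}

# Metric polarity; see the lead-in for which entries are assumptions.
POSITIVE = {"real_time_performance", "data_availability"}
NEGATIVE = {"processing_complexity", "noise_sensitivity"}


def desirability(metric: str, rating: str) -> int:
    """Map a rating to 0..2 so that a larger value is always better."""
    value = LEVEL[rating]
    if metric in POSITIVE:
        return value
    if metric in NEGATIVE:
        return max(LEVEL.values()) - value  # invert negative metrics
    raise KeyError(f"unknown metric {metric!r}")


def score(ratings: dict, weights: dict) -> float:
    """Weighted sum of desirabilities over the metrics a use-case cares about."""
    return sum(w * desirability(m, ratings[m]) for m, w in weights.items())


# Placeholder ratings, NOT the values reported in Fig. 11.
schema = {
    "audio-visual": {
        "real_time_performance": "High",
        "data_availability": "High",
        "processing_complexity": "Low",
        "noise_sensitivity": "Moderate",
    },
    "audio-visual-physiological": {
        "real_time_performance": "Moderate",
        "data_availability": "Low",
        "processing_complexity": "High",
        "noise_sensitivity": "Moderate",
    },
}

# Hypothetical use-case that values prompt feedback most.
weights = {
    "real_time_performance": 2.0,
    "data_availability": 1.0,
    "processing_complexity": 1.0,
    "noise_sensitivity": 1.0,
}
ranked = sorted(schema, key=lambda c: score(schema[c], weights), reverse=True)
print(ranked)
```

A weighted sum is the simplest possible aggregation. A use-case with hard constraints, for example a ceiling on ethical and privacy concerns, would more naturally filter out unsuitable combinations first and rank only the remainder.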

When evaluating each combination of modalities, we initially take into account the inherent characteristics and limitations of the modalities involved. As an example, the 'audio-visual' modality combination receives a 'High' rating for real-time performance due to its lower computational demands and prompt feedback [90]. This combination is also rated 'High' for context awareness, as it provides substantial contextual information [247]. As another example, the 'video-audio-text-physiological' modality combination receives a 'Low' rating for the 'data availability' metric, as indicated in Table 3 of Sect. 5.