Abstract

Sequential methods for the synthetic realisation of random processes have a number of advantages compared with spectral methods. In this article, the determination of optimal autoregressive (AR) models for reproducing a predefined target autocovariance function of a random process is addressed. To this end, a novel formulation of the problem is developed. This formulation is linear and generalises the well-known Yule-Walker (Y-W) equations and a recent approach based on restricted AR models (Krenk-Müller approach, K-M). Two main features characterise the introduced formulation: (i) flexibility in the choice of the autocovariance equations employed in the model determination, and (ii) flexibility in the definition of the AR model scheme. Both features were exploited by a genetic algorithm to obtain optimal AR models for the particular case of synthetic generation of homogeneous stationary isotropic turbulence time series. For the same model parsimony, the obtained models improved on those obtained with the Y-W and K-M approaches in terms of the global fit of the target autocovariance function. Implications for the reproduced spectra are also discussed. The formulation for the multivariate case is also presented, highlighting the causes behind some computational bottlenecks.

1 Introduction

The synthetic realisation of a random process (or, simply, the numerical generation of a random process) refers to the computational generation of time/space series that simulate the random behaviour of a dynamical system accurately. In this context, accurately means that the obtained time/space series reproduce sufficiently well a number of statistical features defined beforehand, like time/space covariance and cross spectra.

Numerical generation has been employed for decades in numerous scientific and engineering problems, such as the simulation of earthquake ground motions (Chang et al. 1981; Gersch and Kitagawa 1985; Deodatis and Shinozuka 1988), ocean waves (Spanos and Hansen 1981; Spanos 1983; Samii and Vandiver 1984), atmospheric variables (mainly wind velocity fluctuations (Reed and Scanlan 1983; Li and Kareem 1990, 1993; Deodatis 1996; Di Paola and Gullo 2001; Di Paola and Zingales 2008; Kareem 2008; Krenk 2011; Krenk and Müller 2019), but also pressure (Reed and Scanlan 1983), temperature and precipitation (Sparks et al. 2018)), variables for hydrological modelling such as discharge and flood frequency (Beven 2021), random vibration systems in the context of structural system identification (Gersch and Luo 1972; Gersch and Foutch 1974; Gersch and Liu 1976; Gersch and Yonemoto 1977) and spatial structures of several geological phenomena (Sharifzadehlari et al. 2018; Soltan Mohammadi et al. 2020).

While many of the aforementioned real-life processes can only be rigorously represented through a non-stationary and/or non-homogeneous random process, stationarity and homogeneity are usually assumed in the modelling for convenience. In some cases, the resulting algorithms have served as a basis for strategies oriented to non-stationary random processes, as in the case of evolutionary spectra; see the seminal paper (Deodatis and Shinozuka 1988).

There are different types of numerical generation approaches. Although there is no agreed classification or common naming for the different models, see (Kleinhans et al. 2009; Liu et al. 2019), two families, referred to as spectral and sequential methods, are usually identified. Spectral methods are based on strategies like harmonic superposition and the inverse Fast Fourier Transform (FFT) (Shinozuka and Deodatis 1991, 1996). These methods require information on the spectral characterisation of the random process as an input, for example, in the form of predefined target cross power spectral density (CPSD) functions, coherence functions, etc. Some limitations of spectral methods stem from the fact that the process realisation needs to be synthesised over the whole time/space domain at once, which translates into high computational requirements for long-duration/long-distance multivariate and/or multi-dimensional processes (Kareem 2008).

Sequential methods, also referred to as digital filters, are usually based on linear time series models, such as autoregressive (AR) and moving average (MA) models, or a combination of both (ARMA), and their multivariate versions (VAR, VMA and VARMA, respectively). Compared to spectral methods, sequential methods are computationally less demanding during the synthesis, as only the model coefficients need to be stored. In addition, synthesis is a sequential process that can be stopped at a desired length of the time series and restarted later to lengthen the simulation. However, the determination of the model coefficients may demand large amounts of memory for multi-dimensional and/or multivariate problems. Model coefficients can be derived from a predefined target autocovariance function defined in time and/or space (Footnote 1), though some approaches also introduce spectral information as input, see (Spanos and Hansen 1981; Spanos 1983).

Another advantage of sequential methods is that AR, MA, and ARMA models have theoretical expressions for their autocovariance and PSD functions that can be computed directly from the model coefficients. This allows the target autocovariance function to be compared directly with the theoretical autocovariance function of the model to assess how accurately the model reproduces the desired statistical information, whereas for spectral methods this comparison relies on sample functions estimated from a finite number of finite-length realisations, which are subject to smearing and leakage effects (Stoica and Moses 2005). It is remarked that the statistical bias affecting the sample autocovariance and PSD functions estimated from time series has to be taken into account when generating synthetic time series, regardless of the nature of the method employed (spectral/sequential), in order to properly set the parameters of the simulations, like the time series length. Dimitriadis and Koutsoyiannis (2015) provide expressions for the bias of estimators of both the PSD and the autocovariance function, and discuss the advantages of the climacogram as an alternative statistical object to characterise random processes.

It is noted that, in the context of stationary random processes, both spectral and sequential methods can be applied regardless of the form of the input information, since the power spectrum and the autocovariance function are Fourier pairs and thus provide the same statistical information in two forms. However, going from a PSD to an autocovariance function (and vice versa), except for particular cases that admit a closed-form expression, requires a numerical implementation of the corresponding Fourier transform. For this reason, spectral methods are usually applied to problems where the input information is provided in the frequency domain, while time domain descriptions of a random process represent natural inputs for sequential methods.

This tutorial is focused on the determination of AR models that optimally reproduce a predefined target autocovariance function. Indeed, optimal is a notion that needs to be clearly defined, as will be discussed in Sect. 5. To frame the problem, consider a one-dimensional univariate discrete random process, \(\{z_t(\alpha )\}\), where \(t \in \mathbb {N}\) is a time index and \(\alpha \in \mathbb {N}\) is the index of the realisation. Thus, \(z_{t_i}(\alpha )\) is a random variable associated with time index \(t_i\), and \(\{z_t(\alpha _j)\}\) is a time series corresponding to the \(\alpha _j\)-th realisation of the random process. To simplify notation, the realisation index \(\alpha\) will be omitted, so that \(\{z_t\}\) will be used to refer to a time series for a generic realisation \(\alpha\), and \(z_t\) to refer to the random variable associated with time t. The random process is assumed to be Gaussian, stationary, and zero-mean. In addition, the integral time scale (i.e. the integral of the autocorrelation function) is assumed to exist. Consequently, long-term persistence processes (Hurst phenomenon), characterised by an infinite integral scale, are excluded. The reason for this hypothesis is that this research considers stationary AR models, whose integral time scale always exists because their autocorrelation function decays exponentially. Long-memory processes can be handled through different model types, like Fractional Autoregressive-Moving Average (FARMA) models (Hip 1994).

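The general formulation of an AR model of order p, AR(p), is as follows:

\[
z_t = \varphi _1\, z_{t-1} + \varphi _2\, z_{t-2} + \dots + \varphi _p\, z_{t-p} + \sigma \, \varepsilon _t \tag{1}
\]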

where \(\varphi _i\) for \(i=1,...,p\) are the regression coefficients of the AR model, and \(\sigma \, \varepsilon _t\) represents the random term; \(\varepsilon _t\) is a sequence of independent and identically distributed (iid) random variables with zero mean and unit variance, and \(\sigma\), here referred to as the noise coefficient, scales the variance of the random term, given by \(\text {Var}[\sigma \, \varepsilon _t] = \sigma ^2\). Thus, the AR(p) model has \(p+1\) parameters, comprising p regression coefficients and one noise coefficient.
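To make the sequential character of Eq. (1) concrete, here is a minimal sketch in Python with NumPy that synthesises one realisation. The function name `simulate_ar` and its `burn_in` and `seed` arguments are illustrative assumptions, as are the Gaussian draws for \(\varepsilon _t\) (consistent with the Gaussian-process assumption above) and the zero initial conditions. It is not the implementation used in this work.

```python
import numpy as np


def simulate_ar(phi, sigma, n_steps, burn_in=500, seed=None):
    """Generate one realisation of the AR(p) model of Eq. (1):
    z_t = phi_1 z_{t-1} + ... + phi_p z_{t-p} + sigma * eps_t.

    Assumptions (not from the text): eps_t are iid standard normal draws, the
    recursion starts from zero initial conditions, and the first `burn_in`
    samples are discarded so that the start-up transient does not bias the output.
    """
    phi = np.asarray(phi, dtype=float)
    p = phi.size
    rng = np.random.default_rng(seed)
    eps = rng.standard_normal(n_steps + burn_in)
    z = np.zeros(p + burn_in + n_steps)  # the first p entries hold the zero initial conditions
    for t in range(p, z.size):
        past = z[t - p:t][::-1]  # [z_{t-1}, z_{t-2}, ..., z_{t-p}]
        z[t] = phi @ past + sigma * eps[t - p]
    return z[p + burn_in:]


# Example: a realisation of the AR(2) model used later in Sect. 2.1 (Fig. 2)
z = simulate_ar([1.2, -0.3], sigma=0.5, n_steps=10_000, seed=1)
```

Only the p most recent values and the \(p+1\) parameters are needed at each step, which is why sequential synthesis has modest storage requirements.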

Figure 1 illustrates the different elements involved in the synthetic generation of a random process with an AR model (also valid for MA and ARMA models). \(\gamma ^T_{l}\) is the target autocovariance function, which depends only on the time lag l under the assumption of stationarity (the formal definition of the autocovariance function is provided in Sect. 2). In some applications, \(\gamma ^T_{l}\) is derived from theoretical models and admits a mathematical expression, but in real-life problems it usually has to be estimated from observations. Step (1) represents the determination of the AR coefficients from \(\gamma ^T_{l}\); this step requires a methodology and a choice for the model order, p. \(\gamma ^{AR}_{l}\) is the theoretical autocovariance function, and it can be computed directly from the AR model coefficients, step (2) in the figure. Step (3) represents the synthesis of the random process through the AR model. By using a sequence of random values as inputs, \(\{\varepsilon _t\}\), the AR model can be employed to generate realisations of the process in the form of time series. \(\gamma ^{\alpha }_{l}\) denotes the sample autocovariance function computed for realisation \(\alpha\). Averaging N sample autocovariance functions yields the ensemble autocovariance function, \(\gamma ^E_{l}\). The ensemble autocovariance function converges to \(\gamma ^{AR}_{l}\) when \(N \longrightarrow \infty\). Thus, the objective in step (1) is to define a methodology that yields an AR model with a theoretical autocovariance function, \(\gamma ^{AR}_{l}\), that optimally reproduces the target, \(\gamma ^{T}_{l}\); this can be verified without the need for generating a large number of realisations N to compute \(\gamma ^E_{l}\).
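The sample and ensemble autocovariance functions of Fig. 1 can be sketched as follows. The sketch assumes the common biased estimator (division by the series length) and does not remove the mean, since the process is zero-mean. The function names are hypothetical. The AR(1) model in the usage part is chosen only because its theoretical autocovariance, \(\gamma ^{AR}_l = \sigma ^2 \varphi _1^l/(1-\varphi _1^2)\), is known in closed form.

```python
import numpy as np


def sample_autocovariance(z, max_lag):
    """Sample autocovariance gamma^alpha_l of one realisation for lags 0..max_lag.

    Biased estimator (division by the series length n); the sample mean is not
    subtracted because the process is assumed zero-mean.
    """
    z = np.asarray(z, dtype=float)
    n = z.size
    return np.array([z[l:] @ z[:n - l] / n for l in range(max_lag + 1)])


def ensemble_autocovariance(realisations, max_lag):
    """Ensemble autocovariance gamma^E_l: average of the N sample functions."""
    return np.mean([sample_autocovariance(z, max_lag) for z in realisations], axis=0)


# Illustration with an AR(1) model (phi_1 = 0.5, sigma = 1)
phi, sigma, n, n_real, burn_in = 0.5, 1.0, 2000, 100, 200
rng = np.random.default_rng(0)
realisations = []
for _ in range(n_real):
    eps = rng.standard_normal(n + burn_in)
    z = np.zeros(n + burn_in)
    for t in range(1, z.size):
        z[t] = phi * z[t - 1] + sigma * eps[t]
    realisations.append(z[burn_in:])  # drop the start-up transient

gamma_E = ensemble_autocovariance(realisations, max_lag=5)
gamma_AR = sigma**2 * phi ** np.arange(6) / (1 - phi**2)
# gamma_E approaches gamma_AR as n_real grows, up to the finite-length bias of the estimator
```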

Regarding the methodologies to obtain the AR(p) model parameters, the vast majority of works make use of the Yule-Walker (Y-W) equations (Spanos and Hansen 1981; Spanos 1983; Reed and Scanlan 1983; Samaras et al. 1985), which establish relationships between the \(p+1\) AR parameters and the \(p+1\) first autocovariance terms, from \(l=0\) to \(l=p\). These relationships arise simply from applying the definition of the autocovariance function for time lags from 0 to p. In the context of this work, these expressions are referred to as autocovariance equations for time lags from 0 to p. This approach leads to a perfect match of the first \(p+1\) terms of the target autocovariance function. Consequently, under this approach, there exists no means to improve the matching between the target and the theoretical AR autocovariance functions for lags larger than the model order, p. This fact is problematic for processes with high inertia (i.e., large integral time scale) or situations in which small sampling times of the time series are employed, because large values of the model order p are required to guarantee the matching of the target autocovariance function in a sufficiently wide range of time lags. But large p values reduce model parsimony and increase the computational cost to determine the model coefficients (Spanos 1983; Dimitriadis and Koutsoyiannis 2018). Model parsimony refers to achieving a certain model performance with the lowest number of model parameters, and is considered a key feature in time series modelling (Box et al. 2016). A proposal to preserve model parsimony is to use ARMA models (Gersch and Liu 1976; Samaras et al. 1985). Several methodologies have been developed, typically based on multistage approaches that build the ARMA model by combining an AR(p) model previously defined with a MA(q) component. 
However, the potential of these approaches may be limited because MA processes have nonzero autocovariance only in the first \(q+1\) terms, see for example (Madsen 2007). Thus, improving the matching between the target and theoretical autocovariance functions for large time lags may require high values of q. In this article, a proposal for overcoming this limitation is introduced in Sect. 2.2. It consists of including autocovariance equations for lags larger than p in the procedure.

In a recent paper (Krenk and Müller 2019), an interesting proposal based on AR models with regression coefficients only at certain time lags was introduced for the synthetic generation of turbulent wind fields. The theoretical formulation is provided for a generic sequence of lags, and the simulations are performed for AR models with an exponential scheme (regression coefficients only for time lags \(2^k\), \(k=0,1,2,...\)). In econometrics, such models are usually referred to as restricted AR models (Giannini and Mosconi 1987), because they can be seen as a particular case of an AR(p) model for which some of the regression coefficients are constrained to be zero (Footnote 2). Thus, the actual number of regression coefficients is lower than the AR order, and the capacity to exactly match the first \(p+1\) terms of the target autocovariance function is lost. However, the trade-off between model parsimony and the reproduction of the target autocovariance function over a wide range of lags was improved. In our opinion, one limitation of that work is that the exponential scheme of the model was assumed to be reasonably good for the considered application, and no further discussion is provided concerning the impact of different model schemes on the results. In what follows, the methodology presented in Krenk and Müller (2019) will be referred to as the K-M approach.

Within this context, this article introduces a general formulation to determine the parameters of a restricted AR model from a predefined target autocovariance function. Under this general framework, it will be shown that both the Y-W approach and the K-M approach can be seen as particular cases of the presented formulation. The described approach requires a reduced number of input parameters related to the model scheme and the employed autocovariance equations. An optimisation procedure based on genetic algorithms is applied to obtain AR models that reproduce the target autocovariance function more accurately than the Y-W and K-M approaches for the same model parsimony. The main ideas contained in this tutorial and its research contributions are as follows:

- The Yule-Walker approach to obtain an AR(p) model from a target autocovariance function is described, emphasising the classical result consisting in the perfect matching of the first \(p+1\) terms of the target autocovariance function as a consequence of selecting a set of autocovariance equations for time lags from 0 to p.

- We show that using autocovariance equations for time lags larger than p may improve the matching of the target autocovariance function for lags beyond the model order.

- The potential of restricted AR models for improving the matching of a target autocovariance function is revisited by considering the K-M approach introduced in Krenk and Müller (2019).

- We introduce a general formulation for determining the AR parameters from a target autocovariance function. The formulation is general in the sense that it provides flexibility in the choice of the autocovariance equations and in the definition of the AR model scheme.

- The introduced formulation is exploited by a genetic algorithm to obtain optimal AR models without a predefined model scheme. The considered application is based on a stationary, homogeneous, and isotropic (SHI) turbulence model. Results are compared to those obtained with the Y-W approach and the K-M approach.

- The introduced general formulation is extended to the multivariate case, which leads to some computational bottlenecks that are highlighted.

The article is organised as follows. Section 2 describes the relationships between the parameters of an AR model and its theoretical autocovariance function, emphasising the impact of the selected autocovariance equations on the matching of the target autocovariance function. The case of restricted AR models is addressed in Sect. 3, where the focus is placed on the role of the model scheme. The general formulation for the determination of a restricted AR model from a predefined target autocovariance function is introduced in Sect. 4. Section 5 contains the optimisation exercise based on genetic algorithms. The generalisation of the problem to the multivariate case is briefly described in Sect. 6. The paper ends with the main conclusions gathered in Sect. 7. The article includes a number of examples and reflections to facilitate comprehension.

2 The autocovariance equations of an AR model

In time series analysis, the covariance between two random variables \(z_{t_1}\) and \(z_{t_2}\) is usually denoted by \(\gamma _{t_1,t_2}\). Under the assumption of stationarity, the autocovariance depends only on the time lag, \(l=t_1-t_2\), and is referred to as the autocovariance function:

\[
\gamma _l = \text {Cov}[z_t, z_{t-l}] = \text {E}[z_t\, z_{t-l}] \tag{2}
\]

where the second equality follows from the zero-mean assumption.

Note that the autocovariance function is symmetric, \(\gamma _{-l} = \gamma _l\). Note also that, since the random terms in (1) are independent, the random term at time t, \(\sigma \, \varepsilon _t\), does not depend on previous values of the process, \(z_{t-l}\) for \(l>0\). Indeed, the following expression can be demonstrated (Madsen 2007):

\[
\text {E}[z_{t-l}\, \sigma \, \varepsilon _t] = \begin{cases} \sigma ^2, & l = 0 \\ 0, & l > 0 \end{cases} \tag{3}
\]

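Given these considerations, Eq. (2) together with (1) and (3) provides a means to generate analytical expressions that relate the autocovariance function at different time lags to the AR model parameters. As mentioned above, these expressions are here referred to as autocovariance equations. The autocovariance equation for lag \(l=0\) is:

\[
\gamma _0 = \varphi _1\, \gamma _{-1} + \varphi _2\, \gamma _{-2} + \dots + \varphi _p\, \gamma _{-p} + \sigma ^2 \tag{4}
\]

The autocovariance equation for a generic positive time lag l is:

\[
\gamma _l = \varphi _1\, \gamma _{l-1} + \varphi _2\, \gamma _{l-2} + \dots + \varphi _p\, \gamma _{l-p}, \quad l > 0 \tag{5}
\]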

Note that Eq. (4) is the only one among all autocovariance equations that includes the noise coefficient, \(\sigma\). Actually, this equation defines the relationship between the variance of the AR process, \(\gamma _{0}\), and the variance of the random term, \(\sigma ^2\).

Equations (4) and (5) are the basis for computing the theoretical autocovariance function of a given AR model, addressed in Sect. 2.1, and for obtaining the AR model parameters from a predefined target autocovariance function, see Sect. 2.2.

2.1 Computing the theoretical autocovariance function of an AR model

The objective of this section is to compute the first \(n+1\) terms of the theoretical autocovariance function of an AR(p) model, \(\gamma _0^{AR}, \gamma _1^{AR},..., \gamma _{n}^{AR}\), assuming that the AR parameters, \(\varphi _i\) for \(i=1,...,p\) and \(\sigma\), are known.

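Without loss of generality, the case of an AR(2) model is considered. The following matrix equation gathers the autocovariance equations for lags from \(l=0\) to \(l=n\), see Eqs. (4) and (5), with the autocovariance terms collected in the vector of unknowns:

\[
\begin{bmatrix}
-\varphi _2 & -\varphi _1 & 1 & 0 & 0 & \cdots & 0 \\
0 & -\varphi _2 & -\varphi _1 & 1 & 0 & \cdots & 0 \\
0 & 0 & -\varphi _2 & -\varphi _1 & 1 & \cdots & 0 \\
\vdots & & & \ddots & \ddots & \ddots & \vdots \\
0 & \cdots & 0 & 0 & -\varphi _2 & -\varphi _1 & 1
\end{bmatrix}
\begin{bmatrix} \gamma _{-2} \\ \gamma _{-1} \\ \gamma _0 \\ \gamma _1 \\ \vdots \\ \gamma _n \end{bmatrix}
=
\begin{bmatrix} \sigma ^2 \\ 0 \\ 0 \\ \vdots \\ 0 \end{bmatrix} \tag{6}
\]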

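Equation (6) represents a linear system of \(n+1\) equations with \(n+3\) unknowns (Footnote 3). However, by applying the symmetry of the autocovariance function, \(\gamma _{-l}=\gamma _l\), terms \(\gamma _{-1}\) and \(\gamma _{-2}\) can be removed from the vector of unknowns. This allows the system of Eq. (6) to be expressed with as many equations as unknowns:

\[
\begin{bmatrix}
1 & -\varphi _1 & -\varphi _2 & 0 & \cdots & 0 \\
-\varphi _1 & 1-\varphi _2 & 0 & 0 & \cdots & 0 \\
-\varphi _2 & -\varphi _1 & 1 & 0 & \cdots & 0 \\
0 & -\varphi _2 & -\varphi _1 & 1 & \cdots & 0 \\
\vdots & & \ddots & \ddots & \ddots & \vdots \\
0 & \cdots & 0 & -\varphi _2 & -\varphi _1 & 1
\end{bmatrix}
\begin{bmatrix} \gamma _0 \\ \gamma _1 \\ \gamma _2 \\ \gamma _3 \\ \vdots \\ \gamma _n \end{bmatrix}
=
\begin{bmatrix} \sigma ^2 \\ 0 \\ 0 \\ 0 \\ \vdots \\ 0 \end{bmatrix} \tag{7}
\]

From that, the following expression for the theoretical autocovariance function of the AR(2) model is readily obtained:

\[
\begin{bmatrix} \gamma ^{AR}_0 \\ \gamma ^{AR}_1 \\ \vdots \\ \gamma ^{AR}_n \end{bmatrix}
= \mathbf{A}^{-1}
\begin{bmatrix} \sigma ^2 \\ 0 \\ \vdots \\ 0 \end{bmatrix} \tag{8}
\]

where \(\mathbf{A}\) denotes the \((n+1)\times (n+1)\) coefficient matrix of Eq. (7).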
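Equation (8) generalises directly to an AR(p) model. The following NumPy sketch, with a hypothetical function name, builds the \((n+1)\times (n+1)\) coefficient matrix by folding negative lags with \(\gamma _{-l}=\gamma _l\). It then calls a linear solver instead of forming the inverse explicitly, which is numerically preferable but otherwise equivalent.

```python
import numpy as np


def ar_autocovariance_direct(phi, sigma, n):
    """gamma^AR_0..gamma^AR_n of an AR(p) model from the n+1 autocovariance
    equations for lags 0..n solved at once (Eq. (8) generalised to order p).

    Negative lags are folded with gamma_{-l} = gamma_l, so the unknowns are
    gamma_0..gamma_n only; n >= p is required.
    """
    phi = np.asarray(phi, dtype=float)
    p = phi.size
    if n < p:
        raise ValueError("n must be at least the model order p")
    A = np.eye(n + 1)
    for l in range(n + 1):
        for i in range(1, p + 1):
            # term phi_i * gamma_{l-i}, moved to the left-hand side
            A[l, abs(l - i)] -= phi[i - 1]
    rhs = np.zeros(n + 1)
    rhs[0] = sigma**2  # only the lag-0 equation contains the noise variance
    return np.linalg.solve(A, rhs)


gamma_AR = ar_autocovariance_direct([1.2, -0.3], 0.5, 20)  # AR(2) model of Fig. 2
```

For the AR(2) model of Fig. 2, the first terms are \(\gamma ^{AR}_0 = 13/7\), \(\gamma ^{AR}_1 = 12/7\) and \(\gamma ^{AR}_2 = 1.5\), which can be checked by hand from Eq. (7).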

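Equation (8) can be employed to obtain an arbitrary number n of terms of \(\gamma _{l}^{AR}\). However, increasing n increases the dimension of the matrix to be inverted and, thus, the memory requirements. A computationally cheaper alternative consists of solving the subsystem given by the first \(p+1\) autocovariance equations to obtain \(\gamma _{0}^{AR}\), ..., \(\gamma _{p}^{AR}\),

\[
\begin{bmatrix} \gamma ^{AR}_0 \\ \gamma ^{AR}_1 \\ \gamma ^{AR}_2 \end{bmatrix}
=
\begin{bmatrix}
1 & -\varphi _1 & -\varphi _2 \\
-\varphi _1 & 1-\varphi _2 & 0 \\
-\varphi _2 & -\varphi _1 & 1
\end{bmatrix}^{-1}
\begin{bmatrix} \sigma ^2 \\ 0 \\ 0 \end{bmatrix} \tag{9}
\]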

and then computing \(\gamma _{l}^{AR}\) for \(l>p\) recursively through Eq. (5). The drawback of this approach is that the accumulation of rounding errors may lead to inaccurate estimates of \(\gamma _{l}^{AR}\) for large l values.
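The recursive alternative can be sketched in the same way; the function name is again hypothetical. Only a \((p+1)\times (p+1)\) system is solved, after which Eq. (5) is applied lag by lag.

```python
import numpy as np


def ar_autocovariance_recursive(phi, sigma, n):
    """gamma^AR_0..gamma^AR_n of an AR(p) model: solve the (p+1)x(p+1)
    subsystem for lags 0..p (Eq. (9) generalised to order p), then extend with
    the lag-l equation (5), gamma_l = sum_i phi_i gamma_{l-i}, for l > p.
    """
    phi = np.asarray(phi, dtype=float)
    p = phi.size
    A = np.eye(p + 1)
    for l in range(p + 1):
        for i in range(1, p + 1):
            A[l, abs(l - i)] -= phi[i - 1]  # symmetry gamma_{-l} = gamma_l applied
    rhs = np.zeros(p + 1)
    rhs[0] = sigma**2
    gamma = list(np.linalg.solve(A, rhs))
    # Recursion: cheap in memory, but rounding errors can accumulate for large l
    for l in range(p + 1, n + 1):
        gamma.append(sum(phi[i - 1] * gamma[l - i] for i in range(1, p + 1)))
    return np.array(gamma[: n + 1])


gamma_AR = ar_autocovariance_recursive([1.2, -0.3], 0.5, 20)  # AR(2) model of Fig. 2
```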

As an example, Fig. 2 shows \(\gamma _{l}^{AR}\) for an AR(2) model given by \(\varphi _1 = 1.2\), \(\varphi _2 = -0.3\) and \(\sigma = 0.5\), computed for lags up to \(l=20\).

2.2 Computing the AR model parameters from a predefined target autocovariance function

The objective of this section is to compute the parameters of an AR model from a predefined target autocovariance function, \(\gamma ^T_l\), assuming that the target is available for any time lag l. It will be shown that only a limited number of values of \(\gamma ^T_l\) are required, depending on the autocovariance equations employed.

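The \(p+1\) model parameters, \(\varphi _1\), \(\varphi _2\), ..., \(\varphi _p\) and \(\sigma\), are computed from \(p+1\) autocovariance equations. The traditional approach to this problem considers the autocovariance equations for lags \(l=0,1,...,p\), which leads to the Yule-Walker (Y-W) equations; for this reason, this approach is here referred to as the Y-W approach. For illustrative purposes, consider the case of an AR(3) model (symmetry of the autocovariance function has already been applied):

\[
\begin{aligned}
\gamma _0 &= \varphi _1\, \gamma _1 + \varphi _2\, \gamma _2 + \varphi _3\, \gamma _3 + \sigma ^2 \\
\gamma _1 &= \varphi _1\, \gamma _0 + \varphi _2\, \gamma _1 + \varphi _3\, \gamma _2 \\
\gamma _2 &= \varphi _1\, \gamma _1 + \varphi _2\, \gamma _0 + \varphi _3\, \gamma _1 \\
\gamma _3 &= \varphi _1\, \gamma _2 + \varphi _2\, \gamma _1 + \varphi _3\, \gamma _0
\end{aligned} \tag{10}
\]

Equation (10) can be written in matrix form as:

\[
\begin{bmatrix} \gamma _0 \\ \gamma _1 \\ \gamma _2 \\ \gamma _3 \end{bmatrix}
=
\begin{bmatrix}
1 & \gamma _1 & \gamma _2 & \gamma _3 \\
0 & \gamma _0 & \gamma _1 & \gamma _2 \\
0 & \gamma _1 & \gamma _0 & \gamma _1 \\
0 & \gamma _2 & \gamma _1 & \gamma _0
\end{bmatrix}
\begin{bmatrix} \sigma ^2 \\ \varphi _1 \\ \varphi _2 \\ \varphi _3 \end{bmatrix} \tag{11}
\]

By replacing in (11) the autocovariance terms \(\gamma _i\) by the corresponding target values, \(\gamma ^T_i\), the four AR model parameters are obtained from \(\gamma ^T_{0}, ... ,\gamma ^T_{3}\):

\[
\begin{bmatrix} \sigma ^2 \\ \varphi _1 \\ \varphi _2 \\ \varphi _3 \end{bmatrix}
=
\begin{bmatrix}
1 & \gamma ^T_1 & \gamma ^T_2 & \gamma ^T_3 \\
0 & \gamma ^T_0 & \gamma ^T_1 & \gamma ^T_2 \\
0 & \gamma ^T_1 & \gamma ^T_0 & \gamma ^T_1 \\
0 & \gamma ^T_2 & \gamma ^T_1 & \gamma ^T_0
\end{bmatrix}^{-1}
\begin{bmatrix} \gamma ^T_0 \\ \gamma ^T_1 \\ \gamma ^T_2 \\ \gamma ^T_3 \end{bmatrix} \tag{12}
\]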

A key consequence of employing the autocovariance equations for lags \(l=0,...,3\) is that the number of model parameters equals the number of required \(\gamma ^T_l\) values, which leads to an AR(3) model whose theoretical autocovariance function exactly matches the employed target values. This conclusion extends to an AR(p) model and the first \(p+1\) terms of the target autocovariance function.
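Below is a sketch of the Y-W approach for a general order p, written with NumPy under the same conventions as Eq. (11), that is, with unknowns \(\sigma ^2, \varphi _1, ..., \varphi _p\). The function name `yule_walker` is an illustrative choice, not a library call. The target of Appendix B is not reproduced here, so the usage example instead feeds the exact autocovariance of the AR(2) model of Sect. 2.1 (\(\gamma _0=13/7\), \(\gamma _1=12/7\), \(\gamma _2=1.5\)). For that input, the Y-W approach should return the generating parameters.

```python
import numpy as np


def yule_walker(gamma_target, p):
    """Y-W approach: AR(p) parameters from gamma^T_0..gamma^T_p.

    Solves the autocovariance equations for lags 0..p (Eq. (11) generalised
    to order p) with unknowns [sigma^2, phi_1, ..., phi_p].
    """
    g = np.asarray(gamma_target, dtype=float)
    if g.size < p + 1:
        raise ValueError("need target values for lags 0..p")
    M = np.zeros((p + 1, p + 1))
    M[0, 0] = 1.0  # sigma^2 appears only in the lag-0 equation
    for l in range(p + 1):
        for i in range(1, p + 1):
            M[l, i] = g[abs(l - i)]  # coefficient of phi_i is gamma_{l-i} = gamma_{|l-i|}
    x = np.linalg.solve(M, g[: p + 1])
    sigma2, phi = x[0], x[1:]
    if sigma2 <= 0:
        raise ValueError("target values do not yield a positive noise variance")
    return phi, np.sqrt(sigma2)


# Exact autocovariance of the AR(2) model of Sect. 2.1 (phi = [1.2, -0.3], sigma = 0.5)
phi_hat, sigma_hat = yule_walker([13 / 7, 12 / 7, 1.5], p=2)
```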

As an example, the following AR(3) model has been obtained for the target autocovariance function described in Appendix B:

The theoretical autocovariance function of the obtained AR model, \(\gamma ^{AR}_l\), and the target autocovariance function, \(\gamma ^{T}_l\), are shown in Fig. 3. The four target values employed during the model determination have been highlighted. Note that the theoretical autocovariance function of the AR(3) model matches exactly the employed target autocovariance values, but increasing differences with the target are observed for larger lags.

Note also the following comments:

(i) The determination of the AR model involves a matrix inversion. Care should be taken with ill-conditioned matrices that may arise from some arbitrarily defined target autocovariance functions. For example, target values \(\gamma ^T_{0}=1\), \(\gamma ^T_{1}=0.5\) and \(\gamma ^T_{2}=-0.5\) lead to a non-invertible matrix in (12), regardless of the value of \(\gamma ^T_3\).

(ii) While the autocovariance function of the obtained AR model exactly reproduces \(\gamma ^T_{l}\) for \(l=0,...,p\), no constraints have been imposed on the autocovariance function for larger time lags \(l > p\). This implies that there is no means to improve the matching between \(\gamma ^{AR}_{l}\) and \(\gamma ^T_{l}\) for time lags larger than p.
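Comment (i) can be checked numerically. The sketch below (hypothetical helper name, NumPy assumed) assembles the coefficient matrix of Eq. (12) for an AR(3) model. It then evaluates the determinant and condition number for the target values quoted in comment (i) and several arbitrary choices of \(\gamma ^T_3\). Checking the condition number before solving is one simple safeguard, not a procedure prescribed in the text.

```python
import numpy as np


def yw_matrix_ar3(g):
    """Coefficient matrix of Eq. (12) for an AR(3) model, with unknowns
    [sigma^2, phi_1, phi_2, phi_3] and target values g = [g0, g1, g2, g3]."""
    g0, g1, g2, g3 = g
    return np.array([
        [1.0, g1, g2, g3],
        [0.0, g0, g1, g2],
        [0.0, g1, g0, g1],
        [0.0, g2, g1, g0],
    ])


# Target values of comment (i); gamma^T_3 is varied arbitrarily
for g3 in (-0.3, 0.0, 0.7):
    M = yw_matrix_ar3([1.0, 0.5, -0.5, g3])
    print(g3, np.linalg.det(M), np.linalg.cond(M))
```

The determinant vanishes for every \(\gamma ^T_3\). Expanding along the first column reduces it to the determinant of the \(3\times 3\) Toeplitz block built from \(\gamma ^T_0\), \(\gamma ^T_1\) and \(\gamma ^T_2\), which for these values is \(0.75-0.375-0.375=0\).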

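Comment (ii) leads to an important question: is it possible to introduce information on \(\gamma ^T_{l}\) for time lags larger than the AR model order into the determination of the model parameters? If so, that would provide a means to obtain AR(p) models with some control over \(\gamma ^{AR}_{l}\) for \(l>p\), potentially improving the trade-off between model parsimony and the matching between target and theoretical autocovariance functions, compared to the Y-W approach. To address this question, autocovariance equations for time lags larger than p can be considered. Let us define the vector \(\mathbf{l} =[l_1,l_2,...,l_N]\) containing the N positive lags of the autocovariance equations employed in the model determination. The autocovariance equation for \(l=0\) is always required to determine the noise coefficient, \(\sigma\); for this reason, only positive lags are specified in \(\mathbf{l}\). Note also that N must equal the number of model regression coefficients, p. As an example, consider the set of autocovariance equations obtained with \(\mathbf{l} = [1, 2, 5]\) for an AR(3) model:

\[
\begin{aligned}
\gamma _0 &= \varphi _1\, \gamma _1 + \varphi _2\, \gamma _2 + \varphi _3\, \gamma _3 + \sigma ^2 \\
\gamma _1 &= \varphi _1\, \gamma _0 + \varphi _2\, \gamma _1 + \varphi _3\, \gamma _2 \\
\gamma _2 &= \varphi _1\, \gamma _1 + \varphi _2\, \gamma _0 + \varphi _3\, \gamma _1 \\
\gamma _5 &= \varphi _1\, \gamma _4 + \varphi _2\, \gamma _3 + \varphi _3\, \gamma _2
\end{aligned} \tag{14}
\]

Now, the AR model parameters are given by:

\[
\begin{bmatrix} \sigma ^2 \\ \varphi _1 \\ \varphi _2 \\ \varphi _3 \end{bmatrix}
=
\begin{bmatrix}
1 & \gamma ^T_1 & \gamma ^T_2 & \gamma ^T_3 \\
0 & \gamma ^T_0 & \gamma ^T_1 & \gamma ^T_2 \\
0 & \gamma ^T_1 & \gamma ^T_0 & \gamma ^T_1 \\
0 & \gamma ^T_4 & \gamma ^T_3 & \gamma ^T_2
\end{bmatrix}^{-1}
\begin{bmatrix} \gamma ^T_0 \\ \gamma ^T_1 \\ \gamma ^T_2 \\ \gamma ^T_5 \end{bmatrix} \tag{15}
\]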

Equation (15) reveals that, in this case, the four AR model parameters are computed from six terms of the target autocovariance function, from \(\gamma _0^T\) to \(\gamma _5^T\). For the particular case of the target autocovariance function described in Appendix B, the obtained AR(3) model is:

which differs notably from the model obtained with the Y-W approach, see (13). Figure 4 shows the target and the theoretical autocovariance functions, \(\gamma _l^T\) and \(\gamma _l^{AR}\), respectively. The six target values employed during the model determination have been highlighted. It can be seen that \(\gamma _l^{AR}\) does not match exactly \(\gamma _l^T\) for any time lag. However, a visual comparison with Fig. 3 reveals that the global matching is improved.

From this analysis, it can be concluded that using autocovariance equations for lags larger than p in the determination of the parameters of an AR(p) model raises the number of required target terms above the number of model parameters. Since the autocovariance equations represent constraints between the AR model parameters and certain terms of the autocovariance function, this imbalance means that, unlike in the Y-W approach, \(\gamma _l^{AR}\) does not exactly match the target values employed in the model determination, Eq. (15); \(\gamma _l^{AR}\) only fulfils the constraints imposed by the selected autocovariance equations. However, given a particular target \(\gamma _l^{T}\) and a model order p, an optimal choice of the autocovariance equations (defined in vector \(\mathbf{l}\)) may lead to AR models that reproduce \(\gamma _l^{T}\) globally better than the models obtained with the Y-W approach, as shown in the previous example. Thus, treating vector \(\mathbf{l}\) as an input parameter in the determination of an AR(p) model introduces flexibility compared with the assumption \(\mathbf{l} =[1,...,p]\) that underlies the Y-W approach, and opens a path for improving the reproduction of a predefined target autocovariance function. To the authors' knowledge, this strategy has not been addressed previously in the literature.
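The flexibility in \(\mathbf{l}\) can be sketched as a small generalisation of the Y-W solver. The lag-0 equation is always kept, and the remaining rows come from the lags listed in \(\mathbf{l}\). Function names are hypothetical and NumPy is assumed. As a sanity check, a target that is itself the autocovariance of an AR(3) model satisfies every autocovariance equation. Any choice of \(\mathbf{l}\) that yields a nonsingular system should therefore recover the generating model. For other targets, different \(\mathbf{l}\) vectors yield different models, as illustrated by Figs. 3 and 4.

```python
import numpy as np


def ar_autocovariance(phi, sigma, n):
    """Theoretical gamma^AR_0..gamma^AR_n (lags 0..p solved jointly, then recursion)."""
    phi = np.asarray(phi, dtype=float)
    p = phi.size
    A = np.eye(p + 1)
    for l in range(p + 1):
        for i in range(1, p + 1):
            A[l, abs(l - i)] -= phi[i - 1]
    rhs = np.zeros(p + 1)
    rhs[0] = sigma**2
    g = list(np.linalg.solve(A, rhs))
    for l in range(p + 1, n + 1):
        g.append(sum(phi[i - 1] * g[l - i] for i in range(1, p + 1)))
    return np.array(g[: n + 1])


def ar_from_lags(gamma_target, lags):
    """AR(p) parameters, p = len(lags), from the lag-0 autocovariance equation plus
    the equations for the positive lags in `lags` (vector l of Sect. 2.2).

    lags = [1, ..., p] reproduces the Y-W approach; lags = [1, 2, 5] with p = 3
    reproduces Eqs. (14)-(15). gamma_target must cover lags 0..max(p, max(lags)).
    """
    g = np.asarray(gamma_target, dtype=float)
    lags = list(lags)
    p = len(lags)
    rows = [0] + lags
    M = np.zeros((p + 1, p + 1))
    M[0, 0] = 1.0  # sigma^2 enters only the lag-0 equation
    for r, l in enumerate(rows):
        for i in range(1, p + 1):
            M[r, i] = g[abs(l - i)]  # coefficient of phi_i: gamma_{|l-i|}
    x = np.linalg.solve(M, g[rows])
    return x[1:], np.sqrt(x[0])


# Sanity check with an illustrative (stationary) AR(3) target
true_phi = [0.5, 0.2, 0.1]
target = ar_autocovariance(true_phi, 1.0, 10)
phi_a, s_a = ar_from_lags(target, [1, 2, 5])  # l = [1, 2, 5], as in Eq. (15)
phi_b, s_b = ar_from_lags(target, [1, 2, 3])  # l = [1, 2, 3], Y-W approach
```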

3 The autocovariance equations of a restricted AR model

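In a restricted AR model, not all regression coefficients from \(\varphi _1\) to \(\varphi _p\) are considered. Let us define the vector \(\mathbf{j} = [ j_1 , j_2 , ... , j_N]\), with \(N<p\), containing the lags of the regression terms included in the model. The AR order is given by the regression term with the highest lag, \(p \equiv j_N\). Note that N represents the number of regression coefficients of the model. To distinguish between restricted and unrestricted AR models, the following notation will be employed for restricted AR models:

\[
\text {AR}(p,\mathbf{j}): \quad z_t = a_{j_1}\, z_{t-j_1} + a_{j_2}\, z_{t-j_2} + \dots + a_{j_N}\, z_{t-j_N} + b\, \varepsilon _t \tag{17}
\]

where \(a_{j_i}\) are the regression coefficients and b is the noise coefficient.

A restricted AR(\(p,\mathbf{j}\)) model can be seen as a particular case of an AR(p) model for which:

\[
\varphi _k = \begin{cases} a_k, & k \in \{j_1, \dots , j_N\} \\ 0, & \text {otherwise} \end{cases}, \quad k = 1, \dots , p \tag{18}
\]

and

\[
\sigma = b \tag{19}
\]

Note also that an AR(p) model can be seen as a particular case of a restricted AR(\(p,\mathbf{j}\)) model for which:

\[
\mathbf{j} = [1, 2, \dots , p] \tag{20}
\]

provided that the constraint \(N < p\) is relaxed.

The autocovariance equations of a restricted AR(\(p,\mathbf{j}\)) process can be readily obtained by combining the autocovariance equations of the corresponding unrestricted AR(p) model, see Sect. 2, with Eqs. (18) and (19):

\[
\gamma _0 = \sum _{i=1}^{N} a_{j_i}\, \gamma _{-j_i} + b^2 \tag{21}
\]

\[
\gamma _l = \sum _{i=1}^{N} a_{j_i}\, \gamma _{l-j_i}, \quad l > 0 \tag{22}
\]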

3.1 Computing the theoretical autocovariance function of a restricted AR model

The problem of computing \(\gamma _l^{AR}\) for a restricted AR(\(p,\mathbf{j}\)) model can be readily addressed by applying the procedure described in Sect. 2.1 together with Eqs. (18) and (19). As an example, Fig. 5 shows \(\gamma _l^{AR}\) for an AR(5, [1, 2, 5]) model given by \(a_1 = 1.2\), \(a_2 = -0.5\), \(a_5 = 0.1\) and \(b = 0.5\), computed for lags up to \(l=20\).

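A peculiarity of restricted AR models is that specific model schemes (i.e., specific values of the components of \(\mathbf{j}\)) lead to theoretical autocovariance functions in which nonzero values are interspersed with zeros. For example, let us consider the model AR(3,[3]):

\[
z_t = a_3\, z_{t-3} + b\, \varepsilon _t \tag{23}
\]

The values of the autocovariance function \(\gamma _l^{AR}\) up to lag \(l=3\) are provided by the following autocovariance equations:

\[
\begin{aligned}
\gamma _0 &= a_3\, \gamma _{-3} + b^2 \\
\gamma _1 &= a_3\, \gamma _{-2} \\
\gamma _2 &= a_3\, \gamma _{-1} \\
\gamma _3 &= a_3\, \gamma _0
\end{aligned} \tag{24}
\]

By applying symmetry to the autocovariance function, the system of equations given in (24) can be expressed as follows:

\[
\begin{aligned}
\gamma _0 &= a_3\, \gamma _3 + b^2 \\
\gamma _1 &= a_3\, \gamma _2 \\
\gamma _2 &= a_3\, \gamma _1 \\
\gamma _3 &= a_3\, \gamma _0
\end{aligned} \tag{25}
\]

Equation (25) can be divided into two independent subsystems: the first one with the equations for lags \(l=0\) and \(l=3\), and the second one with the equations for the lags with no regression terms, \(l=1\) and \(l=2\). Solving the first subsystem yields:

\[
\gamma _0 = \frac{b^2}{1-a_3^2}, \qquad \gamma _3 = \frac{a_3\, b^2}{1-a_3^2} \tag{26}
\]

Concerning the second subsystem, substituting one equation into the other gives \(\gamma _1 = a_3^2\, \gamma _1\), so for a stationary model (\(|a_3|<1\)) the only possible solution is:

\[
\gamma _1 = \gamma _2 = 0
\]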

By recursively applying Eq. (22), one gets nonzero values of \(\gamma _l^{AR}\) only at lags \(l=0,3,6,9,...\). In general, a restricted AR(\(p,[p]\)) model, i.e. one with a single regression term at lag p, has a theoretical autocovariance function with nonzero values only at lag 0 and at multiples of p. This particular structure of \(\gamma _l^{AR}\) is also observed for restricted AR models with \(\mathbf{j}\) vectors such that \(j_i\) is a multiple of \(j_1\) for \(i>1\). For example, the theoretical autocovariance function of an AR(6,[2, 4, 6]) model shows nonzero values only at even time lags. Such model schemes are likely to be poor candidates for reproducing real-life autocovariance functions, which usually decay to zero smoothly.
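Equations (18) and (19) suggest a simple way to reuse the unrestricted machinery for restricted models: embed the coefficients \(a_{j_i}\) into a full coefficient vector with zeros elsewhere. The sketch below (hypothetical function names, NumPy assumed) does this and reproduces the zero patterns discussed above for AR(3,[3]) and AR(6,[2,4,6]). The coefficient values used for these two models are arbitrary illustrative choices, picked so that the models are stationary.

```python
import numpy as np


def embed_restricted(a, j):
    """phi_1..phi_p of the unrestricted AR(p) model equivalent to a restricted
    AR(p, j) model, following Eq. (18): phi_k = a_k if k is in j, else 0 (p = j_N)."""
    j = list(j)
    phi = np.zeros(j[-1])
    for a_k, k in zip(a, j):
        phi[k - 1] = a_k
    return phi


def ar_autocovariance(phi, sigma, n):
    """gamma^AR_0..gamma^AR_n: lags 0..p solved jointly, larger lags by recursion."""
    phi = np.asarray(phi, dtype=float)
    p = phi.size
    A = np.eye(p + 1)
    for l in range(p + 1):
        for i in range(1, p + 1):
            A[l, abs(l - i)] -= phi[i - 1]
    rhs = np.zeros(p + 1)
    rhs[0] = sigma**2
    g = list(np.linalg.solve(A, rhs))
    for l in range(p + 1, n + 1):
        g.append(sum(phi[i - 1] * g[l - i] for i in range(1, p + 1)))
    return np.array(g[: n + 1])


# AR(3,[3]) with a_3 = 0.6 and b = 1 (sigma = b by Eq. (19)); by Eq. (26)
# gamma_0 = 1 / (1 - 0.36) = 1.5625 and gamma_3 = 0.9375, zeros off the multiples of 3
g_3 = ar_autocovariance(embed_restricted([0.6], [3]), 1.0, 9)

# AR(6,[2,4,6]) with illustrative coefficients: odd lags vanish
g_6 = ar_autocovariance(embed_restricted([0.3, 0.2, 0.1], [2, 4, 6]), 1.0, 12)

# The AR(5,[1,2,5]) model of Fig. 5
g_5 = ar_autocovariance(embed_restricted([1.2, -0.5, 0.1], [1, 2, 5]), 0.5, 20)
```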

3.2 Computing the parameters of a restricted AR model from a predefined target autocovariance function

In Section 2.2 it was shown that the \(p+1\) parameters of an (unrestricted) AR(p) model can be computed to reproduce the first \(p+1\) values of a predefined target autocovariance function through the Y-W approach. It was also discussed how the exact matching up to lag p could be sacrificed in favour of improving the global matching by employing autocovariance equations for lags larger than p. In this section, restricted AR models are considered as an additional strategy to increase the control on the obtained model autocovariance function for time lags larger than the number of regression coefficients, N.

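Without loss of generality, consider the problem of computing the parameters of a restricted AR model given by \(\mathbf{j} =[1,2,5]\). Note that the model parsimony is the same as that of the AR(3) model employed in Sect. 2.2, i.e. \(N=3\), but in this case the model order is \(p=5\). Let us start by considering the autocovariance equations of the corresponding unrestricted AR(5) model for lags \(l=0,...,5\):

\[
\begin{aligned}
\gamma _0 &= \varphi _1\, \gamma _1 + \varphi _2\, \gamma _2 + \varphi _3\, \gamma _3 + \varphi _4\, \gamma _4 + \varphi _5\, \gamma _5 + \sigma ^2 \\
\gamma _1 &= \varphi _1\, \gamma _0 + \varphi _2\, \gamma _1 + \varphi _3\, \gamma _2 + \varphi _4\, \gamma _3 + \varphi _5\, \gamma _4 \\
\gamma _2 &= \varphi _1\, \gamma _1 + \varphi _2\, \gamma _0 + \varphi _3\, \gamma _1 + \varphi _4\, \gamma _2 + \varphi _5\, \gamma _3 \\
\gamma _3 &= \varphi _1\, \gamma _2 + \varphi _2\, \gamma _1 + \varphi _3\, \gamma _0 + \varphi _4\, \gamma _1 + \varphi _5\, \gamma _2 \\
\gamma _4 &= \varphi _1\, \gamma _3 + \varphi _2\, \gamma _2 + \varphi _3\, \gamma _1 + \varphi _4\, \gamma _0 + \varphi _5\, \gamma _1 \\
\gamma _5 &= \varphi _1\, \gamma _4 + \varphi _2\, \gamma _3 + \varphi _3\, \gamma _2 + \varphi _4\, \gamma _1 + \varphi _5\, \gamma _0
\end{aligned} \tag{27}
\]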

The system of Eqs. (27) contains six equations, six model parameters (\(\sigma\), \(\varphi _1\), ..., \(\varphi _5\)) and six autocovariance terms (\(\gamma _{0}, \gamma _{1}, ... \gamma _{5}\)). Thus, following the Y-W approach described in Sect. 2.2, once the six target autocovariance values are introduced, the system is linear and provides the six parameters of an AR model whose theoretical autocovariance function exactly matches the imposed target values.

Now, consider the restricted model AR(5,[1, 2, 5]), obtained from an AR(5) by imposing \(\varphi _3=0\) and \(\varphi _4=0\). Since these two model parameters are no longer unknowns in (27), two possible strategies can be followed to obtain the model parameters (\(\varphi _1\), \(\varphi _2\), \(\varphi _5\) and \(\sigma\)) from (27):

1. To select two new unknowns from the set of six autocovariance terms. These two terms are not replaced by target autocovariance values. This yields a system of six equations with six unknowns (the four model parameters and the two selected autocovariance terms).

2. To discard two autocovariance equations, in order to obtain a system of four equations with four unknowns (the four model parameters).

If strategy 1 is adopted, only four (rather than six) target autocovariance values are required. Let us select, with no loss of generality, \(\gamma _{0}\), \(\gamma _{1}\), \(\gamma _{3}\) and \(\gamma _{5}\) to be replaced by the corresponding target autocovariance values, and consider \(\gamma _{2}\) and \(\gamma _{4}\) as additional unknowns. In this case, the system of Eqs. (27) yields the six unknowns (\(\sigma\), \(\varphi _1\), \(\varphi _2\), \(\varphi _5\), \(\gamma _{2}\) and \(\gamma _{4}\)). The drawback of this strategy is that the system becomes non-linear, owing to products between unknowns such as \(\varphi _2 \, \gamma _{2}\) and \(\varphi _5 \, \gamma _{4}\) in the first and second equations, respectively. Note that the computational cost may increase dramatically, especially for AR models with a large order p, as the system to be solved comprises \(p+1\) equations, regardless of the number of AR coefficients set to zero. The advantage of this strategy is that the theoretical autocovariance function of the resulting restricted AR model exactly matches the four provided target values.

To illustrate this, the restricted AR(5,[1, 2, 5]) model was obtained for the target autocovariance function described in Appendix B:

Figure 6 shows the corresponding \(\gamma _l^{AR}\) and \(\gamma _l^{T}\) functions, with the four target values employed in the model determination highlighted. The figure shows an exact match of \(\gamma ^T_{0}\), \(\gamma ^T_{1}\), \(\gamma ^T_{3}\) and \(\gamma ^T_{5}\), as well as an improved global match of the target autocovariance function with respect to the Y-W approach for the same model parsimony (see Fig. 3).

Strategy 2 is equivalent to selecting as many autocovariance equations as AR parameters, by defining a vector l. For the example considered, and without loss of generality, the autocovariance equations for lags \(l=0\) and \(\mathbf{l} =[1,4,5]\) are selected. Using the notation for restricted AR models, the resulting system of equations is:

The system of Eqs. (29) is linear, comprises four equations and four unknowns (the model parameters \(b^2\), \(a_1\), \(a_2\) and \(a_5\)), and requires six autocovariance terms. Introducing the target autocovariance terms, the model parameters are given by:

This situation is similar to that explained in Sect. 2.2, in the sense that, since the number of required target autocovariance terms is higher than the number of equations (i.e., of model parameters), the autocovariance function of the obtained AR model will not exactly match any of the imposed target values, but it may show a reasonably good match over a wide range of time lags. To illustrate this idea, Fig. 7 shows the \(\gamma _l^{AR}\) and \(\gamma _l^{T}\) functions of the AR(5,[1,2,5]) model computed with \(\mathbf{l} =[1,4,5]\) for the target autocovariance function described in Appendix B. The six target values employed in the model determination have been highlighted. The obtained model is:

Although the restricted AR model given in (31) performs slightly worse than the model given in (16), it still improves on the model obtained with the Y-W approach, Eq. (13). The key idea of this analysis is that selecting appropriate AR model schemes through vector \(\mathbf{j}\) has the potential to improve the global match between \(\gamma _l^{AR}\) and \(\gamma _l^{T}\). To the authors' knowledge, this strategy has been considered only to a limited extent in previous work (Krenk and Müller 2019), where an exponential model scheme \(\mathbf{j} =[1,2, ... , 2^{N-1}]\) was assumed for the presented simulations, leaving the possibility of optimising the AR scheme unexplored.

Finally, to summarise the results of the examples described in Sects. 2.2 and 3.2, Fig. 8 shows \(|e_l| = |\gamma ^T_l - \gamma ^{AR}_l|\), computed for the AR model obtained with the Y-W approach, Eq. (13), the AR model obtained by selecting autocovariance equations \(\mathbf{l} =[1,2,5]\), see Eq. (16), and the restricted AR(5,[1,2,5]) models obtained with the linear formulation, Eq. (31), and with the non-linear formulation, Eq. (28).

Absolute error between target and theoretical autocovariance function, \(|e_l|\), for the AR model obtained with the Y-W approach, Eq. (13), the AR model obtained by selecting autocovariance equations \(\mathbf{l} =[1,2,5]\), see Eq. (16), and the restricted AR(5,[1,2,5]) models obtained with the linear formulation, Eq. (31), and the non-linear formulation, Eq. (28)

4 General formulation for the determination of a restricted AR model from a predefined target autocovariance function

This section provides a general formulation for the linear problems addressed in Sects. 2.2 and 3.2. The non-linear problem described in Sect. 3.2 is not covered by this formulation and will be analysed in future research. The objective here is to obtain the parameters of a restricted AR(\(p,\mathbf{j}\)) model from a target autocovariance function, for generic input vectors \(\mathbf{j} =[j_1 , j_2 , ... , j_N]\) (regression coefficients included in the model) and \(\mathbf{l} =[l_1 , l_2 , ... , l_N]\) (autocovariance equations used to obtain the model parameters), by means of a linear system that can be solved at low computational cost.

The problem is divided into two steps:

(i) Determination of the regression coefficients \(a_{j_i}\), for \(i = 1,...,N\), by means of the set of N autocovariance equations for the lags gathered in \(\mathbf{l}\). For convenience, the regression coefficients are gathered into a row vector \(\mathbf{a} =[ a_{j_1} , a_{j_2} , ... , a_{j_N} ]\).

(ii) Determination of the noise coefficient, b, by means of the autocovariance equation for lag \(l=0\).

Note that, while in Sects. 2.2 and 3.2 a single system of equations including (i) and (ii) was considered, the division proposed in this section is always possible, since the noise coefficient appears only in the autocovariance equation for lag \(l=0\). This yields a more convenient general formulation. Note also that the formulation is presented for the case of a restricted AR model, but the unrestricted case is included by simply considering Eq. (20).

4.1 Determination of the model coefficients: a

The autocovariance equations considered for the lags gathered in \(\mathbf{l}\) and for the case of a restricted AR(\(p,\mathbf{j}\)) model can be written in matrix form as follows:

or, in a more compact way:

where \(\varvec{\gamma }_{\mathbf {l}}\) is a row vector of dimension N and \(\varvec{\gamma }_{\mathbf {j},\mathbf {l}}\) is an \(N \times N\) matrix, both containing values of the autocovariance function at the specific time lags defined by vectors j and l. It is worth noting that, for the particular case \(\mathbf{j} =\mathbf{l} = [1, 2, 3, ..., p]\), the system of equations given in (32) reduces to the Yule-Walker equations. If the autocovariance terms in (33) are replaced by the target values, the model coefficients are given by:

4.2 Determination of the noise coefficient: b

The autocovariance equation for time lag \(l=0\) for a restricted AR(\(p,\mathbf{j}\)) model is as follows:

where the tilde denotes transposition. Rearranging and using the symmetry of the autocovariance function, the noise coefficient is given by:

or, in a more compact way:

where \(\varvec{\gamma }_{\mathbf {j}}\) is a row vector containing N values of the target autocovariance function at time lags gathered in \(\mathbf{j}\).

Finally, note that Eqs. (33) and (37) particularised for \(\mathbf{j} =\mathbf{l} =[1,2,...,2^{N-1}]\) represent the univariate case of the formulation introduced in Krenk and Müller (2019). The formulation presented here is more flexible, as it allows optimal j and l vectors to be searched for independently, that is, without imposing the constraint \(\mathbf{j} =\mathbf{l}\).
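The two steps of Sects. 4.1 and 4.2 can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' code: it assumes the element layout \([\varvec{\gamma }_{\mathbf {j},\mathbf {l}}]_{im} = \gamma_{|l_m - j_i|}\), which follows from writing the lag-\(l_m\) autocovariance equation as \(\gamma_{l_m} = \sum_i a_{j_i} \gamma_{l_m - j_i}\), and the lag-0 equation \(\gamma_0 = \sum_i a_{j_i}\gamma_{j_i} + b^2\) with unit-variance noise. The function name `restricted_ar_fit` and the exponential target \(0.8^l\) are hypothetical placeholders (the latter is not the SHI target of Appendix B).

```python
import numpy as np


def restricted_ar_fit(gamma_T, j, l):
    """Linear determination of a restricted AR(p, j) model from target values.

    gamma_T : target autocovariance at lags 0, 1, 2, ...
    j, l    : regression lags and autocovariance-equation lags (both length N)
    Returns the row vector a = [a_{j_1}, ..., a_{j_N}] and the noise coefficient b.
    """
    gamma_T = np.asarray(gamma_T, dtype=float)
    j, l = np.asarray(j), np.asarray(l)
    if j.shape != l.shape:
        raise ValueError("j and l must have the same length N")
    # Step (i): gamma_l = a @ gamma_jl with [gamma_jl]_{i,m} = gamma_{|l_m - j_i|}
    gamma_jl = gamma_T[np.abs(l[None, :] - j[:, None])]
    a = np.linalg.solve(gamma_jl.T, gamma_T[l])
    # Step (ii): lag-0 equation, b^2 = gamma_0 - a @ gamma_j
    b2 = gamma_T[0] - a @ gamma_T[j]
    if b2 <= 0:
        raise ValueError("b^2 <= 0: this (j, l) pair yields no valid model")
    return a, np.sqrt(b2)


N = 4
j_yw = list(range(1, N + 1))     # Y-W: j = l = [1, 2, ..., N]
j_km = [2**i for i in range(N)]  # K-M: j = l = [1, 2, 4, ..., 2^(N-1)]
gamma_T = 0.8 ** np.arange(50)   # placeholder target (exactly an AR(1) autocovariance)
a_yw, b_yw = restricted_ar_fit(gamma_T, j_yw, j_yw)
a_km, b_km = restricted_ar_fit(gamma_T, j_km, j_km)
```

Because the placeholder target is exactly the autocovariance of an AR(1) model with unit variance, both special cases recover \(a_1 = 0.8\), the remaining coefficients equal to zero and \(b = 0.6\); the same call with, for example, `j=[1, 2, 5]` and `l=[1, 4, 5]` reproduces the structure of the example in Sect. 3.2.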

5 Optimal AR models for synthetic isotropic turbulence generation

In this section, a methodological proposal is presented to obtain optimal AR models from a predefined target autocovariance function. The particular case of stationary homogeneous isotropic (SHI) turbulence is considered. The methodology combines the general formulation described in Sect. 4 with the use of genetic algorithms. The aim is to find, for a given number of regression coefficients N, optimal vectors \(\mathbf{j} = [ \, j_1 , j_2 , ... , j_N \, ]\) and \(\mathbf{l} = [ \, l_1 , l_2 , ... , l_N \, ]\). In this context, optimal means that the AR model resulting from expressions (34) and (37) provides the minimum mean squared error (MSE) between its theoretical autocovariance function, \(\gamma ^{\text {AR}}_l\), and the target autocovariance function, \(\gamma ^T_l\). The MSE is given by:

with

\(\gamma ^T_l\) is defined as the non-dimensional longitudinal autocovariance function of SHI turbulence, \(\mathring{R}_u(\mathring{r})\), as described in Appendix B; \(\mathring{r}=r/L\) is a non-dimensional spatial coordinate, and L is the length scale parameter of the three-dimensional energy spectrum. In (38), M represents the maximum lag considered in the computation of the MSE. This parameter has been fixed to \(M=41\), which corresponds to a maximum non-dimensional spatial distance of \(\mathring{r}_{max} = 40 \cdot \varDelta \mathring{r} = 4.98\). It holds that:

meaning that the selected M value accounts for \(99.5\%\) of the integral length scale, \(L_u^x\).
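A minimal sketch of the fitting criterion, assuming that Eq. (38) averages \(e_l^2\) over the lags \(l = 0, ..., M-1\), so that \(M=41\) spans the 40 lag steps quoted above; the exact normalisation of Eq. (38) may differ, and the function name is hypothetical. The snippet also recovers the lag step implied by \(\mathring{r}_{max} = 40 \cdot \varDelta \mathring{r} = 4.98\).

```python
import numpy as np


def autocovariance_mse(gamma_T, gamma_AR, M=41):
    """Mean of e_l^2 = (gamma_T_l - gamma_AR_l)^2 over lags l = 0, ..., M-1
    (assumed form of the criterion; both inputs need at least M entries)."""
    e = np.asarray(gamma_T, dtype=float)[:M] - np.asarray(gamma_AR, dtype=float)[:M]
    return np.mean(e**2)


dr = 4.98 / 40  # non-dimensional lag step implied by r_max = 40 * dr = 4.98
```

With this reading, `dr` equals 0.1245, which is the value needed to map lag indices to non-dimensional distances when evaluating the target of Appendix B.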

The genetic algorithm is designed to minimise the criterion given in (38). The following inequality constraints were included in the algorithm:

1. \(0< j_1< j_2< ... < j_N\), with \(j_i \in \mathbb {N}\), for \(i=1,...,N.\)

2. \(0< l_1< l_2< ... < l_N\), with \(l_i \in \mathbb {N}\), for \(i=1,...,N.\)

3. \(j_i - \varDelta \le l_i \le j_i + \varDelta\), with \(\varDelta \in \mathbb {N}\), for \(i=1,...,N.\)

The first and second constraints derive from the definition of vectors \(\mathbf{j}\) and \(\mathbf{l}\). The third constraint introduces the parameter \(\varDelta\), which bounds the difference between the regression lags included in the AR model and the corresponding autocovariance equations included in the system that provides the model parameters. It has been observed that large differences between elements \(j_i\) and \(l_i\) may lead to numerical instabilities during the optimisation process. Note that \(\varDelta =0\) implies \(\mathbf{l} = \mathbf{j}\).
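The three inequality constraints can be expressed as a feasibility test that a search procedure would apply to each candidate pair \((\mathbf{j}, \mathbf{l})\). This is a hypothetical helper written for illustration, assuming the lag vectors are sequences of integers; it is not taken from the genetic algorithm used in this work.

```python
def strictly_increasing_positive(v):
    """Constraints 1 and 2: 0 < v_1 < v_2 < ... < v_N (integer lags assumed)."""
    return len(v) > 0 and v[0] > 0 and all(x < y for x, y in zip(v, v[1:]))


def satisfies_constraints(j, l, delta):
    """All three inequality constraints on a candidate pair (j, l)."""
    return (
        len(j) == len(l)
        and strictly_increasing_positive(j)
        and strictly_increasing_positive(l)
        and all(abs(li - ji) <= delta for ji, li in zip(j, l))  # constraint 3
    )
```

For instance, `satisfies_constraints([1, 3], [1, 2], delta=0)` is false, because \(\varDelta=0\) forces \(\mathbf{l}=\mathbf{j}\), while the same pair is admissible with `delta=1`.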

The analysis includes a benchmark exercise for a number of models, which are compared for the same model parsimony. The range \(N=1,...,10\) is considered in what follows. The benchmark comparison includes the following models (italic letters are employed to denote the models):

- Y-W: an unrestricted AR(p) model obtained through the Yule-Walker approach, that is, \(p=N\) and \(\mathbf{j} = \mathbf{l} = [ \, 1 , 2 , ... , p \, ]\).

- K-M: a restricted AR(\(p,\mathbf{j}\)) model with an exponential model scheme, \(\mathbf{j} = [ \, 1 , 2 , ... , 2^{N-1} \, ]\), and \(\mathbf{j} = \mathbf{l}\), as proposed in Krenk and Müller (2019).

- GA-0: obtained with a genetic algorithm with \(\varDelta =0\). This model allows exploring the potential improvement due to relaxing the exponential model scheme employed in K-M, while keeping the constraint \(\mathbf{j} = \mathbf{l}\).

- GA-10: obtained with a genetic algorithm with \(\varDelta =10\). This model allows assessing the additional improvement with respect to GA-0 due to relaxing the constraint \(\mathbf{j} = \mathbf{l}\).

Figure 9 shows the components of vector \(\mathbf{j}\) obtained for model GA-0, for different N values. Figure 10 shows the components of vectors \(\mathbf{j}\) (left) and \(\mathbf{l}\) (right) obtained for model GA-10, for different N values. Y-W and K-M models are included in both figures for comparison.

It is noted that, for the GA-10 model, the maximum difference found between corresponding elements \(j_i\) and \(l_i\) was 5, meaning that the choice \(\varDelta = 10\) was flexible enough to allow the search for optimal AR models without actually constraining the difference between corresponding elements of the j and l vectors.

Results show that, for the most parsimonious models, \(N=1\), the single regression term selected in all four models is the previous lag, \(\mathbf{j} =[1]\). However, for GA-10 the autocovariance equation employed is that for lag \(\mathbf{l} =[2]\). For \(N=2\), both GA-0 and GA-10 provide the optimal regression lags \(\mathbf{j} =[1, 3]\), unlike the Y-W and K-M models, for which \(\mathbf{j} =[1, 2]\). Concerning the model order p, given by the last element of \(\mathbf{j}\), \(j_N\), results show that, while for models Y-W and K-M p is determined by N (linearly and exponentially, respectively), the proposed methodology is able to reveal an optimal model order for a given model parsimony. The optimal model orders obtained depend on the specific target autocovariance function considered. For increasing N, the model order obtained for the most flexible model, GA-10, stagnates around \(p=23\). This value is consistent with the fact that the target autocovariance function is already very close to zero at this lag, see Fig. 18. Thus, the additional regression coefficients made available by reducing the model parsimony are better exploited by rearranging the terms in vector \(\mathbf{j}\) than by increasing the model order beyond this value, as happens for models Y-W and K-M.

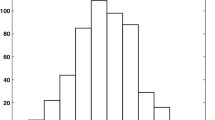

Figure 11 shows the MSE obtained with all the models considered in the analysis, as a function of N.

As expected, the MSE obtained with the Y-W approach decreases with N, because the resulting models exactly match the target autocovariance function for lags up to the model order. For the K-M approach, the error decreases rapidly for low N values, reflecting the advantages of restricted AR models over the Y-W approach. However, the error stagnates from \(N=5\) on. This can be explained by the fact that including a regression term at lag \(2^{6-1}=32\) (i.e. \(N=6\)) does little to improve the fitting of a target autocovariance function that is very close to zero at such lags. This result clearly shows that an exponential model scheme, such as that of model K-M, has an intrinsic upper limit on the number of regression terms worth considering, this limit being related to the range of lags for which \(\gamma ^T_l\) takes non-negligible values. This range depends on the \(\varDelta \mathring{r}\) selected to obtain the discrete target values from the continuous non-dimensional target autocovariance function of the underlying random process, see Appendix B. The improvements provided by GA-0 with respect to the K-M model show the importance of searching for optimal model schemes rather than assuming a predefined model structure. The improvement becomes noticeable for N values larger than the aforementioned upper limit, \(N=5\). Finally, the gap between the results for GA-0 and GA-10 clearly shows the additional improvement associated with an optimal selection of the autocovariance equations employed in the determination of the model parameters. Thus, relaxing the hypothesis \(\mathbf{l} = \mathbf{j}\), which is usually assumed in the literature, is a clear path for improvement.

To examine this further, the distribution of the error over the lags is analysed. Figure 12 shows \(e^2_l\), see Eq. (39), for the case \(N=3\).

It can be seen that improving the global fitting of the target autocovariance function by means of restricted AR models may come at the expense of a poorer local fitting at some of the first lags, compared with the unrestricted models obtained with the Y-W approach. For example, model GA-10 greatly outperforms the other models in terms of global fitting, see Fig. 11, but at the same time it shows the highest error at lag \(l=2\). This trade-off between global and local fitting of the target autocovariance function is relevant when using restricted AR models, and it probably needs to be addressed with a broader concept of optimal model, which may vary from one problem to another. In this regard, additional information from the frequency domain could be included in the notion of optimal model. This is particularly relevant for engineering problems in which constraints can be defined in terms of frequency ranges, such as those involving wind loads (Li and Kareem 1990; Kareem 2008). For illustrative purposes, Table 1 shows the model parameters obtained for \(N=3\), and Fig. 13 shows, on top, the non-dimensional one-sided target spectrum, \(\mathring{S}^{T}(\mathring{k})\), together with the theoretical spectra of the AR models obtained with the four approaches, and, at the bottom, the differences between the target spectrum and the AR model spectra. Here, \(\mathring{k}\) is the non-dimensional wave number, \(\mathring{k}= k \, L\). Note that \(\mathring{S}^{T}(\mathring{k})\) is affected by aliasing due to the discretisation of the target autocovariance function, see details in Appendix B. The non-dimensional one-sided spectrum of an AR model can be obtained either from the model coefficients,

or from its theoretical autocovariance function (Box et al. 2016):

where \(\mathring{k}_{max} = \frac{1}{2 \varDelta \mathring{r}}\) is the maximum non-dimensional wave number, and \(\varDelta \mathring{r}\) is the non-dimensional length employed to obtain the discrete version of the target autocovariance function, see Appendix B.
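A minimal sketch of the spectrum computed from the model coefficients, assuming the one-sided form given by Box et al. (2016) for a unit sampling interval, \(p(f) = 2\sigma^2/|1-\sum_i \varphi_i e^{-\mathrm{i}2\pi f i}|^2\) on \(0\le f\le 1/2\), rescaled here with \(f = \mathring{k}\,\varDelta \mathring{r}\) and \(\mathring{S} = \varDelta \mathring{r}\, p(f)\) so that the area under \(\mathring{S}\) over \([0, \mathring{k}_{max}]\) equals \(\gamma_0\). This scaling is an assumption of the sketch and may differ from the normalisation used in the expressions above; the function name is hypothetical.

```python
import numpy as np


def ar_spectrum(phi, sigma, k, dr):
    """One-sided spectrum of z_t = sum_i phi_i z_{t-i} + sigma*eps_t at
    non-dimensional wave numbers 0 <= k <= 1/(2*dr), normalised so that its
    integral over [0, k_max] equals gamma_0 (assumed scaling)."""
    phi = np.asarray(phi, dtype=float)
    lags = np.arange(1, len(phi) + 1)
    # Denominator 1 - sum_i phi_i exp(-i 2 pi k dr i), evaluated for every k
    denom = 1.0 - np.exp(-2j * np.pi * np.outer(k * dr, lags)) @ phi
    return 2.0 * dr * sigma**2 / np.abs(denom) ** 2


dr = 4.98 / 40                                 # lag step implied by r_max = 40*dr = 4.98
k = np.linspace(0.0, 1.0 / (2 * dr), 20001)    # 0 <= k <= k_max
S = ar_spectrum([0.5], 1.0, k, dr)
area = np.sum(0.5 * (S[1:] + S[:-1]) * np.diff(k))  # trapezoidal rule
```

For the AR(1) example with \(\varphi_1 = 0.5\) and \(\sigma=1\), `area` is close to \(\gamma_0 = 4/3\), which offers a quick consistency check between the coefficient-based and the autocovariance-based expressions.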

Figure 13 illustrates that, in terms of fitting the target spectrum, model GA-10 outperforms the other models at low frequencies (up to \(\mathring{k} = 4\)), including the frequency at which the target spectrum attains its maximum (\(\mathring{k} \approx 1.245\)). At higher frequencies, all model spectra show similar oscillations around the target spectrum. This result suggests that, a priori, reducing the local fitting of the target autocovariance function at the first lags to improve the global fitting does not have a clear negative impact on the spectrum fitting.

6 Generalisation to multivariate processes

In this section, the analyses described in Sects. 2, 3 and 4 are extended to a one-dimensional multivariate random process, \(\{\mathbf {z}_t(\alpha )\}\), with the column vector \(\mathbf {z}_t = [z_1 , z_2 , ... , z_k ]_t'\) being a k-variate random variable. In particular, we describe why some computational bottlenecks may arise as a consequence of the multivariate character of the formulation. Note that multi-dimensional multivariate problems in some cases admit a one-dimensional multivariate formulation, as first described in Mignolet and Spanos (1991). In Krenk and Müller (2019), a three-dimensional three-variate description of the SHI turbulent wind field was expressed in the form of a one-dimensional 3P-variate random process by stacking the three velocity components at P points of the plane perpendicular to the mean wind into a single random vector.

The general formulation of a zero-mean k-variate VAR(p) model is:

where \(\mathbf {z}= [z_1 , z_2 , ... , z_k ]'\) is a k-variate random variable, and \(\varvec{\varepsilon }_t\) is a sequence of independent and identically distributed (iid) random vectors with zero mean, \(\text {E}[\varvec{\varepsilon }_t] = \mathbf {0}_{k\times 1}\), and identity covariance matrix, \(\text {Var}[\varvec{\varepsilon }_t]=\mathbf {I}_k\). The \(p+1\) model matrix parameters are the regression matrices, \(\varvec{\varPhi }_i\) with \(i=1,...,p\), and the noise matrix, \(\varvec{\varSigma }\); all matrix parameters have dimension \(k \times k\). The covariance of the random term is given by \(\text {Var}[\varvec{\varSigma }\, \varvec{\varepsilon }_t ] = \text {E}[ (\varvec{\varSigma }\, \varvec{\varepsilon }_t) \, (\varvec{\varSigma }\, \varvec{\varepsilon }_t)' ] = \varvec{\varSigma }\varvec{\varSigma }'\).

\(\varvec{\varGamma }_{t_1,t_2}\) is the covariance between random vectors \(\mathbf {z}_{t_1}\) and \(\mathbf {z}_{t_2}\). As in the univariate case, the assumption of a stationary process implies that \(\varvec{\varGamma }_{t_1,t_2}\) depends only on the time lag, \(l=t_1-t_2\), and is referred to as the covariance matrix function:

The covariance equations that generalise Eqs. (4) and (5) for the multivariate case are:

6.1 Computing the theoretical covariance matrix function of a VAR model

As in the univariate case, Eqs. (44) and (45) can be employed to compute the theoretical covariance matrix function, \(\varvec{\varGamma }^{VAR}_l\), from the model parameters, \(\varvec{\varPhi }_i, i=1,...,p\), and \(\varvec{\varSigma }\). However, note that in the multivariate case \(\varvec{\varGamma }_{-l} = \varvec{\varGamma }_l'\), which in general differs from \(\varvec{\varGamma }_l\). This prevents the linear formulation described in Sect. 2.1 from being applied to the multivariate case, as the step taken between Eqs. (6) and (7) cannot be replicated. There are two approaches for obtaining the theoretical covariance matrix function of a VAR(p) model, both involving a higher computational cost than the univariate case:

- To obtain \(\varvec{\varGamma }^{VAR}_l\) from the covariance matrix of the VAR(1) representation of the VAR(p) model. This strategy provides the exact covariance matrix function of the VAR(p) model, but it is computationally very expensive, as the matrices involved reach a size of \((pk)^2 \times (pk)^2\).

- To obtain \(\varvec{\varGamma }^{VAR}_l\) from the covariance matrix function of the equivalent Vector Moving Average (VMA) model. Since this equivalent VMA model contains infinitely many terms, truncation to a VMA(q) model is required, meaning that the obtained covariance matrix function is an approximation of the exact VAR covariance matrix function. In this case, the increase in computational cost comes from the fact that appropriate q values are related to the integral length scale of the process, which typically leads to \(q \gg p\).

In what follows, both methodologies are briefly described.

6.1.1 Covariance matrix function of a VAR(p) model through the VAR(1) representation

A k-variate VAR(p) model can be expressed in the form of an extended pk-dimensional VAR(1) model by using an expanded model representation (Tsay 2013). For illustrative purposes, the case of a VAR(3) model is analysed:

Let us define the expanded 3k-variate random variable as:

The VAR(1) representation of the original VAR(3) model is given by:

where \(\varvec{\varPhi }_1^*\) is called the companion matrix, defined as:

and the random matrix is given by:

The covariance matrix function of the extended VAR(1) model of Eq. (48) is given by:

The solution of Eq. (51) is given by Tsay (2013):

where vec(\(\cdot\)) denotes the column-stacking vector of a matrix and \(\otimes\) is the Kronecker product of two matrices.

The covariance matrix function of the original VAR(3) model can be readily obtained from \(\varvec{\varGamma }^*_0\), noting that \(\varvec{\varGamma }_0^{VAR}\), \(\varvec{\varGamma }_1^{VAR}\) and \(\varvec{\varGamma }_2^{VAR}\) are given by:

and using recursively Eq. (45) to obtain \(\varvec{\varGamma }^{VAR}_l\) for lags \(l \ge 3\).
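The procedure of Sect. 6.1.1 can be sketched for a general VAR(p) model. The sketch assumes NumPy, the convention \(\varvec{\varGamma }_l = \text{E}[\mathbf{z}_t \mathbf{z}_{t-l}']\), the companion matrix with \([\varvec{\varPhi }_1 \cdots \varvec{\varPhi }_p]\) in its first block row and identity blocks below, and the standard identity \(\text{vec}(\varvec{\varGamma }^*_0) = (\mathbf{I} - \varvec{\varPhi }^*_1 \otimes \varvec{\varPhi }^*_1)^{-1}\,\text{vec}(\varvec{\varSigma }^*\varvec{\varSigma }^{*\prime})\); the function name is hypothetical. The \((pk)^2 \times (pk)^2\) system in the middle is the bottleneck mentioned above.

```python
import numpy as np


def var_covariance_companion(Phis, Sigma, max_lag):
    """Exact Gamma_0..Gamma_max_lag of z_t = sum_i Phi_i z_{t-i} + Sigma eps_t,
    Var[eps_t] = I_k, Gamma_l = E[z_t z_{t-l}'], via the VAR(1) representation."""
    Phis = [np.asarray(P, dtype=float) for P in Phis]
    Sigma = np.asarray(Sigma, dtype=float)
    p, k = len(Phis), Phis[0].shape[0]
    n = p * k
    # Companion matrix Phi*_1 and covariance Sigma* Sigma*' of the stacked noise
    F = np.zeros((n, n))
    F[:k, :] = np.hstack(Phis)
    F[k:, :-k] = np.eye((p - 1) * k)
    Q = np.zeros((n, n))
    Q[:k, :k] = Sigma @ Sigma.T
    # vec(Gamma*_0) = (I - F kron F)^{-1} vec(Q); vec stacks columns -> order="F"
    vecG = np.linalg.solve(np.eye(n * n) - np.kron(F, F), Q.flatten(order="F"))
    G0_star = vecG.reshape((n, n), order="F")
    # The first block row of Gamma*_0 holds Gamma_0, Gamma_1, ..., Gamma_{p-1}
    Gammas = [G0_star[:k, i * k:(i + 1) * k] for i in range(p)]
    # Lags >= p from the recursion Gamma_l = sum_i Phi_i Gamma_{l-i}
    for lag in range(p, max_lag + 1):
        Gammas.append(sum(Phis[i] @ Gammas[lag - i - 1] for i in range(p)))
    return np.array(Gammas[: max_lag + 1])


Phi1 = np.array([[0.5, 0.1], [0.2, 0.3]])
Phi2 = np.array([[0.1, 0.0], [0.05, 0.1]])
Sigma = np.array([[1.0, 0.0], [0.3, 0.8]])
G = var_covariance_companion([Phi1, Phi2], Sigma, max_lag=10)
```

The returned matrices satisfy the lag-0 and lag-1 covariance equations, e.g. \(\varvec{\varGamma }_0 = \varvec{\varPhi }_1\varvec{\varGamma }_1' + \varvec{\varPhi }_2\varvec{\varGamma }_2' + \varvec{\varSigma }\varvec{\varSigma }'\), while \(\varvec{\varGamma }_1\) is in general not symmetric.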

6.1.2 Covariance matrix function of a VAR(p) model through VMA(q) representation

It can be shown that any stationary VAR(p) model admits a Vector Moving-Average (VMA) representation with infinitely many terms:

where matrices \(\varvec{\varPsi }_i\) are known functions of the VAR regression matrices, \(\varvec{\varPhi }_i\) (Tsay 2013):

and \(\varvec{\varPsi }_0 = \mathbf {I}_k\).

The theoretical covariance matrix function of a VMA process is given by:

\(\varvec{\varGamma }^{VMA(\infty )}_l\) matches exactly the covariance matrix function of the related VAR(p) model. However, for practical reasons, this approach requires truncation by considering only the first q terms of the VMA representation, that is, a VMA(q) model:

The covariance matrix function of a VMA(q) model is given by:

In summary, \(\varvec{\varGamma }^{VAR}_l\) can be approximated in a two-step procedure: (i) computing a truncated VMA(q) representation of the VAR(p) model, see Eq. (57), whose q coefficient matrices can be computed through Eq. (55); (ii) computing the covariance matrix function of the VMA(q) model given by Eq. (58). Concerning the choice of parameter q, note that \(\varvec{\varGamma }^{VMA(\textit{q})}_l = \mathbf {0}_k\) for \(l>q\); thus q should be chosen such that \(\varvec{\varGamma }^{VAR}_q \approx \mathbf {0}_k\).
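The two-step procedure above can be sketched as follows, assuming the standard recursion \(\varvec{\varPsi }_i = \sum_{m=1}^{\min(i,p)} \varvec{\varPhi }_m \varvec{\varPsi }_{i-m}\) with \(\varvec{\varPsi }_0=\mathbf{I}_k\) (Tsay 2013) and, for noise \(\varvec{\varSigma }\varvec{\varepsilon }_t\), the truncated covariance \(\varvec{\varGamma }_l = \sum_{i=0}^{q-l} \varvec{\varPsi }_{i+l}\varvec{\varSigma }\varvec{\varSigma }'\varvec{\varPsi }_i'\) for \(0\le l\le q\). The function name is hypothetical, and the univariate AR(1) case is used only because its exact answer is known.

```python
import numpy as np


def var_covariance_vma(Phis, Sigma, q, max_lag):
    """Approximate Gamma_0..Gamma_max_lag of a VAR(p) model through its VMA(q)
    truncation; the result is exactly zero for lags l > q."""
    Phis = [np.asarray(P, dtype=float) for P in Phis]
    Sigma = np.asarray(Sigma, dtype=float)
    p, k = len(Phis), Phis[0].shape[0]
    # Step (i): Psi_0 = I_k, Psi_i = sum_{m=1}^{min(i,p)} Phi_m Psi_{i-m}
    Psis = [np.eye(k)]
    for i in range(1, q + 1):
        Psis.append(sum(Phis[m - 1] @ Psis[i - m] for m in range(1, min(i, p) + 1)))
    # Step (ii): Gamma_l = sum_{i=0}^{q-l} Psi_{i+l} Sigma Sigma' Psi_i'
    SS = Sigma @ Sigma.T
    Gammas = np.zeros((max_lag + 1, k, k))
    for lag in range(min(q, max_lag) + 1):
        Gammas[lag] = sum(Psis[i + lag] @ SS @ Psis[i].T for i in range(q - lag + 1))
    return Gammas


# AR(1) with phi = 0.5: exact gamma_0 = 4/3, gamma_1 = 2/3
G_short = var_covariance_vma([[[0.5]]], [[1.0]], q=1, max_lag=2)
G_long = var_covariance_vma([[[0.5]]], [[1.0]], q=60, max_lag=2)
```

With `q=1` the approximation gives \(\gamma_0 = 1.25\), \(\gamma_1 = 0.5\) and \(\gamma_2 = 0\), whereas `q=60` reproduces the exact values to round-off, illustrating why a reasonable approximation requires \(q \gg p\).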

To illustrate the two presented methodologies, Figs. 14 and 15 show the theoretical covariance matrix function, \(\varvec{\varGamma }_l^{VAR}\) of the following 2-variate VAR(2) model:

In particular, Fig. 14 shows the exact covariance matrix function computed through the VAR(1) representation, where the property \(\varvec{\varGamma }_{-l}=\varvec{\varGamma }_l'\) can be observed by comparing \(\gamma ^{VAR}_{z_1,z_2,l}\) and \(\gamma ^{VAR}_{z_2,z_1,l}\).

Figure 15 illustrates \(\gamma ^{VAR}_{z_1,z_1,l}\) together with three approximations obtained through the VMA(q) representation. It can be noted that a reasonable approximation requires \(q \gg p\).

6.2 General formulation for the determination of a restricted VAR model from a predefined target covariance matrix function

A restricted VAR(\(p,\mathbf{j}\)) model, with \(\mathbf{j} =[j_1, j_2, ..., j_N]\) is given by:

As in the univariate case, a restricted VAR(p) model defined by vector \(\mathbf{j}\) can be seen as a particular case of a VAR(p) model for which:

and

Note also that a VAR(p) model can be seen as a particular case of a restricted VAR(p) model for which:

In the following, the formulation for obtaining the matrix parameters of a restricted VAR(p) model from a target covariance matrix function is introduced for generic vectors \(\mathbf{j} =[j_1 , j_2 , ... , j_N]\) and \(\mathbf{l} =[l_1 , l_2 , ... , l_N]\). As in the univariate case, the problem is divided into two steps, and the regression matrix coefficients are encapsulated into matrix \(\mathbf {A}=[\mathbf {A}_{j_1} \, \mathbf {A}_{j_2} \, ... \, \mathbf {A}_{j_N}]\), which has dimension \(k \times kN\).

6.2.1 Determination of the model matrix coefficients: A

The multivariate form of Eq. (32) is given by:

or in a more compact way:

where \(\varvec{\varGamma }_{\mathbf {l}}\) is a \(k \times kN\) matrix and \(\varvec{\varGamma }_{\mathbf {j},\mathbf {l}}\) a \(kN\times kN\) matrix. By replacing the covariance terms with the target values, the model matrix coefficients are given by:

6.2.2 Determination of the noise matrix: B

The multivariate version of Eq. (35) is:

Rearranging and applying \(\varvec{\varGamma }_{-l} = \varvec{\varGamma }_l'\), the covariance matrix of the random term, \(\mathbf {B}\mathbf {B}'\), is given by:

or in a more compact way:

where \(\varvec{\varGamma }_{\mathbf {j}}\) is a \(k \times kN\) matrix. From (65), the noise matrix \(\mathbf {B}\) can be obtained in several ways, for example through Cholesky decomposition. Finally, note that Eqs. (61) and (65) particularised for j=l correspond to the formulation introduced in Krenk and Müller (2019) (in particular \(\varvec{\varGamma }_0 = C_{uu}\), \(\varvec{\varGamma }_{\mathbf {j}}=\varvec{\varGamma }_{\mathbf {l}}=C_{uw}\) and \(\varvec{\varGamma }_{\mathbf {j},\mathbf {l}}=C_{ww}\)).
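The multivariate steps of Sects. 6.2.1 and 6.2.2 can be sketched as below. This is an illustration under assumptions, not the authors' implementation: it takes block \((i,m)\) of \(\varvec{\varGamma }_{\mathbf {j},\mathbf {l}}\) to be \(\varvec{\varGamma }_{l_m - j_i}\), which follows from the lag-\(l_m\) equation \(\varvec{\varGamma }_{l_m} = \sum_i \mathbf{A}_{j_i}\varvec{\varGamma }_{l_m-j_i}\), evaluates negative lags through \(\varvec{\varGamma }_{-r}=\varvec{\varGamma }_r'\), and obtains \(\mathbf{B}\) by Cholesky decomposition, as suggested in the text. The function name is hypothetical.

```python
import numpy as np


def restricted_var_fit(Gammas, j, l):
    """Linear determination of a restricted VAR(p, j) model from target
    covariance matrices Gammas[r] = Gamma_r (r = 0, 1, ...), Gamma_{-r} = Gamma_r'."""
    Gammas = np.asarray(Gammas, dtype=float)

    def G(lag):
        return Gammas[lag] if lag >= 0 else Gammas[-lag].T

    # Step (i): Gamma_l = A Gamma_jl, block (i, m) of Gamma_jl being Gamma_{l_m - j_i}
    Gamma_l = np.hstack([G(lm) for lm in l])                     # k x kN
    Gamma_jl = np.block([[G(lm - ji) for lm in l] for ji in j])  # kN x kN
    A = np.linalg.solve(Gamma_jl.T, Gamma_l.T).T                 # k x kN
    # Step (ii): B B' = Gamma_0 - A Gamma_j'
    Gamma_j = np.hstack([G(ji) for ji in j])                     # k x kN
    BBt = Gammas[0] - A @ Gamma_j.T
    # BBt is symmetric when l = j; otherwise its symmetric part is used (assumption)
    B = np.linalg.cholesky(0.5 * (BBt + BBt.T))  # LinAlgError if not positive definite
    return A, B
```

As a sanity check, if the target is generated by a 2-variate VAR(1) model with a lower-triangular \(\varvec{\varSigma }\) (positive diagonal), calling `restricted_var_fit([G0, Phi @ G0], j=[1], l=[1])` returns `A == Phi` and `B == Sigma` up to round-off, since \(\mathbf{B}\mathbf{B}' = \varvec{\varGamma }_0 - \varvec{\varPhi }\varvec{\varGamma }_0\varvec{\varPhi }'\).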

As an example, two VAR models with three regression matrices have been computed to reproduce the target covariance matrix function described in Appendix B, for the case of the longitudinal wind component at two spatial locations with a non-dimensional lateral separation of \(\varDelta \mathring{y} = \frac{\varDelta y}{L} = \lambda = 0.747\). The first model is a VAR(3) model, and it was computed with the Y-W approach, i.e. \(\mathbf{j} =\mathbf{l} =[1, 2, 3]\):

The second model is a restricted VAR(5,[1,2,5]) model computed with l=[1, 2, 6]:

Figures 16 and 17 show the resulting covariance matrix functions, computed through VAR(1) representation (i.e. exact values). As expected, the model obtained with the Y-W approach provides exact matching for lags from \(l=-3\) to \(l=3\). However, an improved global fitting is obtained with the restricted VAR(5,[1,2,5]) model.

7 Conclusions

Sequential methods for synthetic realisation of random processes have a number of advantages compared with spectral methods. For instance, their synthesis process is more convenient, as it can be stopped and restarted at any time. In addition, the models obtained through the sequential approach (e.g., autoregressive models) have theoretical expressions for their autocovariance function and power spectral density (PSD) function, which allows an improved assessment of the accuracy of the models in reproducing the predefined statistical information.

In this article, a methodological proposal for the determination of optimal autoregressive (AR) models from a predefined target autocovariance function was introduced. To this end, a general formulation of the problem was developed. Two main features characterise the introduced formulation: (i) flexibility in the choice of the autocovariance equations employed in the model determination, through the definition of a lag vector l; and (ii) flexibility in the definition of the AR model scheme through vector j, which defines the regression terms considered in the model. The AR parameters are directly obtained as the solution of a linear system that depends on j and l. The well-known Yule-Walker (Y-W) approach and a recent approach based on restricted AR models (K-M) can be seen as particular cases of the introduced formulation, since \(\mathbf{j} =\mathbf{l} =[1,...,p]\) for Y-W and \(\mathbf{j} =\mathbf{l}\) for K-M.

The introduced formulation was exploited by a genetic algorithm to obtain optimal AR models for the synthetic generation of stationary homogeneous isotropic (SHI) turbulence time series. The resulting models improved on Y-W and K-M based models for the same model parsimony in terms of the global fitting of the target autocovariance function. This was achieved at the expense of a poorer local fitting at some lags. The impact of this trade-off in the frequency domain was presented as a path for extending the notion of optimal model to specific problems in which constraints in the frequency domain may exist, as is the case in some engineering problems.

The formulation for the one-dimensional multivariate case was also presented. The reasons behind some computational bottlenecks associated with the multivariate formulation were highlighted.

Finally, a non-linear approach for the univariate case was described, for which preliminary results suggest an improved fitting of the target autocovariance function.

Notes

For simplicity, we will refer only to univariate time series from here on, unless stated otherwise.

In line with the literature, the term AR model refers to an unrestricted AR model, unless stated otherwise.

In the general case of an AR(p) model, there are \(n+1\) equations with \(n+p+1\) unknowns.

References

Beven K (2021) Issues in generating stochastic observables for hydrological models. Hydrol Process 35(6):e14203

Box GEP, Jenkins GM, Reinsel GC, Ljung GM (2016) Time series analysis: forecasting and control, 5th edn. Wiley, Hoboken, New Jersey

Chang MK, Kwiatkowski JW, Nau RF, Oliver RM, Pister KS (1981) ARMA models for earthquake ground motions. Seismic Safety Margins Research Program. Technical Report NUREG/CR–1751, International Atomic Energy Agency (IAEA)

de Kármán T, Howarth L (1938) On the statistical theory of isotropic turbulence. Proc R Soc Lond Ser A Math Phys Sci 164(917):192–215

Deodatis G (1996) Simulation of ergodic multivariate stochastic processes. J Eng Mech 122(8):778–787

Deodatis G, Shinozuka M (1988) Auto-regressive model for nonstationary stochastic processes. J Eng Mech 114(11):1995–2012

Di Paola M, Gullo I (2001) Digital generation of multivariate wind field processes. Probab Eng Mech 16(1):1–10

Di Paola M, Zingales M (2008) Stochastic differential calculus for wind-exposed structures with autoregressive continuous (ARC) filters. J Wind Eng Ind Aerodyn 96(12):2403–2417

Dias NL, Crivellaro BL, Chamecki M (2018) The Hurst phenomenon in error estimates related to atmospheric turbulence. Bound-Layer Meteorol 168(3):387–416

Dimitriadis P, Koutsoyiannis D (2015) Climacogram versus autocovariance and power spectrum in stochastic modelling for Markovian and Hurst-Kolmogorov processes. Stoch Environ Res Risk Assess 29(6):1649–1669

Dimitriadis P, Koutsoyiannis D (2018) Stochastic synthesis approximating any process dependence and distribution. Stoch Environ Res Risk Assess 32(6):1493–1515

Dimitriadis P, Koutsoyiannis D, Papanicolaou P (2016) Stochastic similarities between the microscale of turbulence and hydro-meteorological processes. Hydrol Sci J 61(9):1623–1640

Gersch W, Foutch D (1974) Least squares estimates of structural system parameters using covariance function data. IEEE Trans Autom Control 19(6):898–903

Gersch W, Kitagawa G (1985) A time varying AR coefficient model for modelling and simulating earthquake ground motion. Earthq Eng Struct Dyn 13(2):243–254

Gersch W, Liu RS-Z (1976) Time series methods for the synthesis of random vibration systems. J Appl Mech 43(1):159–165

Gersch W, Luo S (1972) Discrete time series synthesis of randomly excited structural system response. J Acoust Soc Am 51(1B):402–408

Gersch W, Yonemoto J (1977) Synthesis of multivariate random vibration systems: a two-stage least squares AR-MA model approach. J Sound Vib 52(4):553–565

Giannini C, Mosconi R (1987) Predictions from unrestricted and restricted VAR models. Giornale degli Economisti e Annali di Economia 46(5/6):291–316

Helland K, Atta C (1978) The "Hurst phenomenon" in grid turbulence. J Fluid Mech 85(3):573–589

Hipel KW, McLeod AI (1994) Chapter 11: Fractional autoregressive-moving average models. In: Hipel KW, McLeod AI (eds) Time series modelling of water resources and environmental systems, Developments in Water Science, vol 45. Elsevier, pp 389–413

Kareem A (2008) Numerical simulation of wind effects: a probabilistic perspective. J Wind Eng Ind Aerodyn 96(10):1472–1497

Kleinhans D, Friedrich R, Schaffarczyk AP, Peinke J (2009) Synthetic turbulence models for wind turbine applications. In: Progress in turbulence III. Springer, pp 111–114

Krenk S (2011) Explicit calibration and simulation of stochastic fields by low-order ARMA processes. In: ECCOMAS thematic conference on computational methods in structural dynamics and earthquake engineering. ECCOMAS, 550:1–10

Krenk S, Møller RN (2019) Turbulent wind field representation and conditional mean-field simulation. Proc R Soc A 475(2223):20180887

Li Y, Kareem A (1990) ARMA systems in wind engineering. Probab Eng Mech 5(2):49–59

Li Y, Kareem A (1993) Simulation of multivariate random processes: hybrid DFT and digital filtering approach. J Eng Mech 119(5):1078–1098

Liu Y, Li J, Sun S, Yu B (2019) Advances in Gaussian random field generation: a review. Comput Geosci 23(5):1011–1047

Madsen H (2007) Time series analysis. Chapman & Hall/CRC, Boca Raton

Mignolet M, Spanos P (1991) Simulation of homogeneous two-dimensional random fields. Part I. AR and ARMA models. In: Anon (ed) American society of mechanical engineers (Paper)

Nordin CF, McQuivey RS, Mejia JM (1972) Hurst phenomenon in turbulence. Water Resour Res 8(6):1480–1486

Reed DA, Scanlan RH (1983) Time series analysis of cooling tower wind loading. J Struct Eng 109(2):538–554

Samaras E, Shinozuka M, Tsurui A (1985) ARMA representation of random processes. J Eng Mech 111(3):449–461

Samii K, Vandiver JK (1984) A numerically efficient technique for the simulation of random wave forces on offshore structures. In: Offshore technology conference

Sharifzadehlari M, Fathianpour N, Renard P, Amirfattahi R (2018) Random partitioning and adaptive filters for multiple-point stochastic simulation. Stoch Environ Res Risk Assess 32(5):1375–1396

Shinozuka M, Deodatis G (1991) Simulation of stochastic processes by spectral representation. Appl Mech Rev 44(4):191–204

Shinozuka M, Deodatis G (1996) Simulation of multi-dimensional Gaussian stochastic fields by spectral representation. Appl Mech Rev 49(1):29–53

Soltan Mohammadi H, Abdollahifard MJ, Doulati Ardejani F (2020) CHDS: conflict handling in direct sampling for stochastic simulation of spatial variables. Stoch Environ Res Risk Assess 34(6):825–847

Spanos PD (1983) ARMA algorithms for ocean wave modeling. J Energy Resour Technol 105(3):300–309

Spanos PD, Hansen JE (1981) Linear prediction theory for digital simulation of sea waves. J Energy Resour Technol 103(3):243–249

Sparks NJ, Hardwick SR, Schmid M, Toumi R (2018) IMAGE: a multivariate multi-site stochastic weather generator for European weather and climate. Stoch Environ Res Risk Assess 32(3):771–784

Stoica P, Moses R (2005) Spectral analysis of signals. Prentice Hall, Upper Saddle River, New Jersey

Tsay RS (2013) Multivariate time series analysis: with R and financial applications. Wiley series in probability and statistics. Wiley, Hoboken, New Jersey

Acknowledgements

This research has been undertaken as part of the zEPHYR project. This project has received funding from the European Union’s Horizon 2020 research and innovation programme under Grant Agreement No. 860101. The authors wish to express their gratitude to Reviewer 1 for the constructive comments received during the review process.

Funding

Open Access funding provided thanks to the CRUE-CSIC agreement with Springer Nature. This research has been undertaken as part of the zEPHYR project, which has received funding from the European Union’s Horizon 2020 research and innovation programme under Grant Agreement No. 860101.

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A Isotropic turbulence

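The covariance tensor of a three-dimensional statistically stationary, homogeneous and isotropic velocity field between two spatial points separated by the vector \(\mathbf{r}\) is given by:

\[ \mathbf{R}(\mathbf{r}) = \sigma_0^2 \left[ \big( f(r) - g(r) \big) \frac{\mathbf{r}\, \mathbf{r}^T}{r^2} + g(r)\, \mathbf{I} \right] \]
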
where \(\sigma _0^2\) is the isotropic variance parameter, \(r=|\mathbf{r} |\), f(r) is the longitudinal correlation function and g(r) is the transverse correlation function (de Kármán and Howarth 1938). Assuming incompressibility yields the following relationship between g(r) and f(r):

\[ g(r) = f(r) + \frac{r}{2} \, \frac{\mathrm{d} f(r)}{\mathrm{d} r} \]

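For the particular case of a line along the wind direction, \(\mathbf{r} = [ r , 0 , 0]^T\), the covariance tensor is given by:

\[ \mathbf{R}(\mathbf{r}) = \sigma_0^2 \begin{bmatrix} f(r) & 0 & 0 \\ 0 & g(r) & 0 \\ 0 & 0 & g(r) \end{bmatrix} \]
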
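From the generalized von Karman spectral density functions, explicit expressions for f(r) and g(r) can be obtained (Krenk and Møller 2019):

\[ f(r) = \frac{2^{3/2-\gamma}}{\varGamma(\gamma - 1/2)} \left( \frac{r}{L} \right)^{\gamma - 1/2} K_{\gamma - 1/2}\!\left( \frac{r}{L} \right), \qquad g(r) = f(r) - \frac{2^{1/2-\gamma}}{\varGamma(\gamma - 1/2)} \left( \frac{r}{L} \right)^{\gamma + 1/2} K_{3/2 - \gamma}\!\left( \frac{r}{L} \right) \]
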
where L is the length scale parameter of the three-dimensional von Karman energy spectrum, \(\varGamma (\cdot )\) is the gamma function (not to be confused with the covariance function), and \(K_n\) is the modified Bessel function of the second kind of order n. The integral length scale in the longitudinal direction, \(L_u^x\), is defined as:

\[ L_u^x = \int_0^{\infty} f(r) \, \mathrm{d} r \]

and it holds that:

\[ L_u^x = \lambda \, L \]

with \(\lambda = \frac{\varGamma (1/2) \, \varGamma (\gamma )}{ \varGamma (\gamma -1/2)}\), where \(\gamma\) is a parameter with the value proposed by von Karman, \(\gamma =5/6\) (not to be confused with the autocovariance function).

Note that Eq. (A.6) implies that the integral length scale exists, i.e., \(L_u^x < \infty\). However, there is evidence that geophysical time series, including turbulent velocity fluctuations, may show long-term persistence (Hurst phenomenon), in which case the integral length scale does not exist (Nordin et al. 1972; Helland and Atta 1978). According to Dias et al. (2018), the assumption of a finite integral scale in atmospheric turbulence is not well supported by experimental evidence, for at least two reasons. On the one hand, measurement periods are limited to minutes or hours because of the inherent non-stationarity of the atmospheric boundary layer. On the other hand, the usual tools for estimating spectra from records involve smoothing and a loss of low-frequency information, which leads to a poor representation of the PSD at very low frequencies (the integral length scale is proportional to the value of the PSD at zero frequency) (Dimitriadis et al. 2016). Dimitriadis et al. (2016) reviewed the most common three-dimensional power-spectrum-based models of stationary and isotropic turbulence and concluded that they systematically exclude Hurst-Kolmogorov (HK) behaviour. To the authors' knowledge, the impact of this fact is still unclear, and it probably depends on the application. For the synthetic generation of turbulent wind velocity fields for aeroelastic wind turbine simulation, the impact could be small, because the required simulations last on the order of minutes and the focus is on microturbulence rather than on low-frequency fluctuations.

We define the non-dimensional autocovariance function for the longitudinal wind velocity component along the wind direction as a function of the non-dimensional separation \(\mathring{r} = r/L\) as follows:

It holds that:

The target autocovariance function \(\gamma ^T_l\) results from taking discrete values of \(\mathring{R}_u(\mathring{r})\) with a given spacing \(\varDelta \mathring{r}\):

\[ \gamma^T_l = \mathring{R}_u(l \, \varDelta \mathring{r}), \qquad l = 0, 1, 2, \ldots \]

The numerical application considered in this work was obtained for:

Figure 18 shows \(\mathring{R}_u(\mathring{r})\) (continuous line) together with \(\gamma ^T_l\) (discrete values).
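The discretisation can be sketched numerically as follows. The code assumes \(\mathring{R}_u(\mathring{r}) = f(\mathring{r} L)\) (unit variance) with the generalised von Karman form \(f = \frac{2^{1-\nu}}{\varGamma(\nu)} x^{\nu} K_{\nu}(x)\), \(x = r/L\), \(\nu = \gamma - 1/2\). To depend only on NumPy, it evaluates \(K_\nu\) by trapezoidal quadrature of the integral representation \(K_\nu(x) = \int_0^\infty e^{-x\cosh t}\cosh(\nu t)\,\mathrm{d}t\); `scipy.special.kv` would be the usual library choice. All function names are hypothetical, and the spacing \(\lambda/6\) used in the check below (ten times coarser than the \(\lambda/60\) of Appendix B) is chosen only for illustration.

```python
import math
import numpy as np


def length_scale_ratio(gamma=5.0 / 6.0):
    """lambda = Gamma(1/2) Gamma(gamma) / Gamma(gamma - 1/2), so that L_u^x = lambda * L."""
    return math.gamma(0.5) * math.gamma(gamma) / math.gamma(gamma - 0.5)


def bessel_k(nu, x, t_max=12.0, n=8001):
    """Modified Bessel function of the second kind K_nu(x) from
    K_nu(x) = int_0^inf exp(-x cosh t) cosh(nu t) dt (trapezoidal rule).
    Truncating at t_max = 12 is adequate for x >= 1e-3."""
    t = np.linspace(0.0, t_max, n)
    y = np.exp(-x * np.cosh(t)) * np.cosh(nu * t)
    h = t[1] - t[0]
    return h * (y.sum() - 0.5 * (y[0] + y[-1]))


def longitudinal_correlation(x, gamma=5.0 / 6.0):
    """Assumed von Karman form f = 2^(1-nu)/Gamma(nu) x^nu K_nu(x), x = r/L, nu = gamma - 1/2."""
    if x == 0.0:
        return 1.0  # limit x -> 0, so that f(0) = 1
    nu = gamma - 0.5
    return 2.0 ** (1.0 - nu) / math.gamma(nu) * x ** nu * bessel_k(nu, x)


def target_autocovariance(dr, n_lags, gamma=5.0 / 6.0):
    """gamma^T_l = R_u(l * dr), l = 0..n_lags-1, assuming R_u(r) = f(r L)."""
    return np.array([longitudinal_correlation(l * dr, gamma) for l in range(n_lags)])
```

As a consistency check, \(\lambda/60\) evaluates to about 0.01245, the value quoted in Appendix B, and the trapezoidal sum of \(\gamma^T_l\) multiplied by \(\varDelta\mathring{r}\) approximates \(\lambda\), in agreement with \(L_u^x = \lambda L\).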

It is worth mentioning that the choice of the parameter \(\varDelta \mathring{r}\) usually involves a trade-off between different criteria (Spanos 1983). On the one hand, small values of \(\varDelta \mathring{r}\) yield target autocovariance functions with non-negligible values over a large number of lags, which increases the number of AR coefficients required to reproduce \(\gamma ^T_l\) reasonably well. On the other hand, large values of \(\varDelta \mathring{r}\) may lead to problems in reproducing the spectrum at high frequencies (aliasing), which is critical in some engineering problems (Li and Kareem 1990). Indeed, when the continuous autocovariance function of a physical process is discretised to build \(\gamma ^T_l\) (see above), the corresponding target spectrum (i.e., the Fourier transform of \(\gamma ^T_l\)) differs from the spectrum of the original continuous process in the high-frequency range. To illustrate this, Fig. 19 shows the non-dimensional one-sided spectrum of the employed von Karman turbulence model, \(\mathring{S}^{VK}(\mathring{k})\), together with the target spectra obtained for three different values of \(\varDelta \mathring{r}\), denoted \(\mathring{S}^{T}\). \(\mathring{S}^{VK}(\mathring{k})\) is given by:

where \(\mathring{k}\) is the non-dimensional wave number, \(\mathring{k}= k \, L\), and \(\gamma =5/6\), as stated above. Note that the target spectra \(\mathring{S}^{T}\) are only defined up to the maximum wave number given by the Nyquist sampling theorem, \(\mathring{k}_{max} = \frac{2\pi }{2 \varDelta \mathring{r}} = \frac{\pi}{\varDelta \mathring{r}}\).
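A target spectrum \(\mathring{S}^{T}\) can be evaluated from the discrete autocovariance as a cosine series up to the Nyquist limit \(\pi/\varDelta\mathring{r}\). The one-sided normalisation used below, under which the spectrum integrates to \(\gamma^T_0\) over \([0, \pi/\varDelta\mathring{r}]\), is an assumption and may differ from the one used for Fig. 19; `one_sided_spectrum` is a hypothetical name.

```python
import numpy as np


def one_sided_spectrum(gamma, dr, k):
    """One-sided spectrum of a discrete autocovariance sequence gamma_l with lag spacing dr:
    S(k) = (dr / pi) * [gamma_0 + 2 * sum_{l>=1} gamma_l * cos(k * l * dr)],
    defined for 0 <= k <= k_max = pi / dr (Nyquist limit).
    With this (assumed) normalisation, the integral of S over [0, k_max] equals gamma_0."""
    gamma = np.asarray(gamma, dtype=float)
    k = np.atleast_1d(np.asarray(k, dtype=float))
    k_max = np.pi / dr
    if np.any(k < 0.0) or np.any(k > k_max * (1.0 + 1e-12)):
        raise ValueError("wave numbers must lie in [0, pi/dr]")
    lags = np.arange(1, gamma.size)
    cos_terms = np.cos(np.outer(k, lags) * dr)  # shape (len(k), n_lags - 1)
    return dr / np.pi * (gamma[0] + 2.0 * (cos_terms @ gamma[1:]))
```

For a white-noise sequence the result is flat, and for \(\gamma_l = \rho^l\) it matches the closed form \(\frac{\varDelta\mathring{r}}{\pi}\,\frac{1-\rho^2}{1 - 2\rho\cos(\mathring{k}\varDelta\mathring{r}) + \rho^2}\). Evaluating it for \(\gamma^T_l\) obtained with different values of \(\varDelta\mathring{r}\) shows that a coarser spacing lowers \(\mathring{k}_{max}\), so the high-frequency content of the continuous spectrum cannot be represented beyond that limit.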

B Results for a target autocovariance function with very low decay rate

As mentioned in Sect. 1, long-term persistence processes (Hurst phenomenon), characterised by an infinite integral scale, are not considered in this study. However, because many physical processes may show very large integral time scales, it is worth highlighting how the structure of the optimal AR model changes with the decay rate of the target autocovariance function. To this end, the optimisation process performed in Sect. 5 has been repeated with a discretisation of the non-dimensional continuous autocovariance function ten times finer, i.e. \(\varDelta \mathring{r} = \frac{\lambda }{60} = 0.01245\). As a consequence, the target autocovariance function decays ten times more slowly in terms of lags, and the maximum lag considered in the MSE computation to account for \(99.5\%\) of the integral length scale becomes \(M=401\). Figure 20 shows the resulting target autocovariance function. A direct consequence of this modification is an increase in the computational burden of the optimisation procedure.