Abstract

Inspired by the recent theory of Entropy-Transport problems and by the \({\mathbf {D}}\)-distance of Sturm on normalised metric measure spaces, we define a new class of complete and separable distances between metric measure spaces of possibly different total mass. We provide several explicit examples of such distances, where a prominent role is played by a geodesic metric based on the Hellinger-Kantorovich distance. Moreover, we discuss some limiting cases of the theory, recovering the “pure transport” \({\mathbf {D}}\)-distance and introducing a new class of “pure entropic” distances. We also study in detail the topology induced by such Entropy-Transport metrics, showing some compactness and stability results for metric measure spaces satisfying Ricci curvature lower bounds in a synthetic sense.


1 Introduction

Motivated by pure Mathematics as well as by applied sciences, growing attention has been paid over the last decades to the problem of “comparing objects” that come naturally endowed with a distance/metric and a weight/volume form/measure. From the mathematical point of view, such objects are formalised as metric measure spaces (m.m.s. for short) \((X,{\mathsf {d}},\mu )\), where the metric structure \((X,{\mathsf {d}})\) describes the geometry and the mutual distance of points, and the measure \(\mu \) “weights” the relative importance of different parts of the object.

The flexibility of such a framework makes it possible to unify the treatment of a series of problems stemming from various fields of science and technology, e.g. chemistry [24], data science [33], multi-omics data alignment [13], computer vision [40], language processing [1], graph [46] and shape [42, 49] matching, barycenters & shape analysis [34], generative networks [6, 11], machine learning [47]. The theory of metric measure spaces has been flourishing in pure Mathematics as well, providing a unified setting to investigate concentration of measure phenomena [26, 41], the theory of Ricci limit spaces [8, 19] and, more generally, synthetic notions of Ricci curvature lower bounds [4, 30, 43, 44].

In order to “quantify the similarities and differences between two such objects”, it is thus natural to investigate appropriate notions of distance between metric measure spaces. This idea has its roots in the work of Gromov [23, Chapter 3\(\frac{1}{2}\)], who first recognized the importance of studying the “space of spaces” \(\varvec{\mathrm {X}}\) as a metric space in its own right. Formally, \(\varvec{\mathrm {X}}\) denotes the set of equivalence classes of metric measure spaces \((X,{\mathsf {d}},\mu )\), where \((X,{\mathsf {d}})\) is a complete and separable metric space, and \(\mu \) is a finite, nonnegative, Borel measure; we identify two m.m.s. \((X_1,{\mathsf {d}}_1,\mu _1)\), \((X_2,{\mathsf {d}}_2,\mu _2)\) if there exists an isometry \(\psi :{\mathsf {supp}}(\mu _1)\rightarrow {\mathsf {supp}}(\mu _2)\) such that \(\psi _{\sharp }\,\mu _1=\mu _2\). Here by \({\mathsf {supp}}(\mu )\) we denote the support of the measure \(\mu \) (see the preliminary section for more details).

In recent years, the theory has been pushed forward by the works of Sturm [43, 45] and Memoli [32], who realized that ideas from mass transportation can be used to produce new relevant distances between metric measure spaces. Such distances have been successfully applied in different fields, but they suffer from a major restriction which is intrinsic to the Wasserstein distances coming from optimal transport: they can only be used to compare spaces with the same total mass.

The goal of the present paper is to overcome this limitation by taking advantage of the theory of optimal Entropy-Transport problems [29]. In contrast with the classical transport setting, these problems allow the description of phenomena where the conservation of mass may not hold; for this reason they are also known in the literature as “unbalanced optimal transport problems”. The corresponding theory is fairly recent and is becoming increasingly popular in applications, e.g. gradient flows to train neural networks [9, 37], supervised learning [18], medical imaging [17] and video [27] registration. Indeed, the Entropy-Transport relaxation seems to outperform classical optimal transport in all the problems where the input data is noisy or a normalization procedure is not appropriate. We refer to [38] and references therein for more applications of unbalanced optimal transport.

As we are going to explain in detail below, inspired by the construction of the \({\mathbf {D}}\)-distance of Sturm [43], we are able to produce a new class of complete and separable distances between metric measure spaces by replacing the Wasserstein distance with an Entropy-Transport distance. Such metric structures on \(\varvec{\mathrm {X}}\) also turn out to be geodesic (resp. length) when the underlying Entropy-Transport distance is geodesic (resp. length).

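Optimal transport and Sturm distances. Let \((X,{\mathsf {d}})\) be a metric space and \(\varvec{\mathrm c}:X\times X\rightarrow [0,+\infty ]\) be a lower semi-continuous cost function. The optimal transport problem between two probability measures \(\mu _1,\mu _2\) consists in the minimization problem:

$$\begin{aligned} \mathrm {T}(\mu _1,\mu _2):=\inf _{\varvec{\gamma }\in \Pi (\mu _1,\mu _2)}\int _{X\times X}\varvec{\mathrm c}(x_1,x_2)\,{\mathrm d}\varvec{\gamma }(x_1,x_2). \qquad (1) \end{aligned}$$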

Here \(\Pi (\mu _1,\mu _2)\) denotes the set of measures \(\varvec{\gamma }\) in the product space \(X\times X\) whose marginals satisfy the constraint \(\pi ^i_{\sharp }\varvec{\gamma }=\mu _i\), where \(\pi ^i\) denotes the projection map \(\pi ^i(x_1,x_2)=x_i\).

A typical choice for the cost function is \(\varvec{\mathrm c}(x_1,x_2)={\mathsf {d}}^p(x_1,x_2)\), \(p\ge 1\). In this situation, the transport cost \(\mathrm {T}\) is the p-power of the celebrated p-Wasserstein distance \({\mathcal {W}}_p\), a metric on the set \({\mathscr {P}}_p(X)\) of probability measures over X with finite p-moment. Starting from the seminal work of Kantorovich, the metric space \(({\mathscr {P}}_p(X), {\mathcal {W}}_p)\) has been thoroughly studied: it inherits many geometric properties of the underlying space \((X,{\mathsf {d}})\) (such as completeness, separability, geodesic property) and induces the weak topology (with p-moments) of probability measures. We refer to the monograph [48] for a detailed overview of the topic.

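As observed by Sturm [43], one can lift the metric \({\mathcal {W}}_p\) to a distance between metric measure spaces by defining:

$$\begin{aligned} {\mathbf {D}}_p\left( (X_1,{\mathsf {d}}_1,\mu _1),(X_2,{\mathsf {d}}_2,\mu _2)\right) :=\inf \, {\mathcal {W}}_p\left( \psi ^1_{\sharp }\mu _1,\psi ^2_{\sharp }\mu _2\right) , \end{aligned}$$

where the infimum is taken over all complete and separable metric spaces \(({\hat{X}},\hat{{\mathsf {d}}})\), and all isometric embeddings \(\psi ^i:{\mathsf {supp}}(\mu _i)\rightarrow {\hat{X}}\). It is proved in [43, Theorem 3.6] that \({\mathbf {D}}_p\) is a complete, separable and geodesic distance on the set

$$\begin{aligned} \left\{ (X,{\mathsf {d}},\mu )\in \varvec{\mathrm {X}}\,:\, \mu \in {\mathscr {P}}_p(X)\right\} . \end{aligned}$$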

Entropy-Transport problems and Sturm-Entropy-Transport distances. The idea at the core of Entropy-Transport problems is to relax the marginal constraints typical of the classical Kantorovich formulation (1) by adding some suitable penalizing functionals which keep track of the deviation of the marginals \(\gamma _i:=\pi ^i_{\sharp }\varvec{\gamma }\) from the data \(\mu _i\), \(i=1,2\).

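Following the approach of Liero, Mielke and Savaré [29], given a superlinear, convex function \(F:[0,+\infty )\rightarrow [0,+\infty ]\) such that \(F(1)=0\) (for simplicity here we assume F to be superlinear, see definition (21) for the general case), one considers the entropy functional (also called Csiszár F-divergence [12])

$$\begin{aligned} D_F(\varvec{\gamma }||\mu ):={\left\{ \begin{array}{ll} \int _X F\left( \frac{{\mathrm d}\varvec{\gamma }}{{\mathrm d}\mu }\right) {\mathrm d}\mu \ &{}\text {if} \ \varvec{\gamma }\ll \mu , \\ +\infty &{}\text {otherwise,} \end{array}\right. } \qquad \varvec{\gamma },\mu \in {\mathscr {M}}(X). \end{aligned}$$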

Here \({\mathscr {M}}(X)\) denotes the set of finite, nonnegative, Borel measures over X. A classical example is given by the choice \(F=U_1(s):=s\ln (s)-s+1\), that corresponds to the celebrated Kullback-Leibler divergence (note that when \({\varvec{\gamma }}\) and \(\mu \) are probability measures, \(D_{U_1}\) coincides with the celebrated Boltzmann-Shannon entropy \(\mathrm{Ent}(\rho \mu | \mu )=\int \rho \log \rho \, {\mathrm d}\mu \)).
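As a small numerical illustration, the following sketch evaluates the divergence induced by \(U_1\) for measures supported on finitely many points, each measure being given by its list of point masses. The function names `u1` and `divergence_u1` are hypothetical and chosen only for this example; the convention \(U_1(0)=1\) is the continuous extension of the formula above.

```python
import math


def u1(s):
    """Entropy function U_1(s) = s ln(s) - s + 1, extended by U_1(0) = 1."""
    if s < 0:
        raise ValueError("U_1 is only defined for s >= 0")
    return 1.0 if s == 0 else s * math.log(s) - s + 1.0


def divergence_u1(gamma, mu):
    """D_{U_1}(gamma || mu) for two finite measures on the same finite set.

    gamma and mu are lists of nonnegative point masses (hypothetical encoding).
    The value is +inf when gamma is not absolutely continuous w.r.t. mu.
    """
    total = 0.0
    for g, m in zip(gamma, mu):
        if m > 0:
            total += u1(g / m) * m  # integrate F(density) against mu
        elif g > 0:
            return math.inf  # gamma charges a mu-null point
    return total
```

Note that the two arguments need not have the same total mass: for instance `divergence_u1([2.0], [1.0])` evaluates \(2\ln 2-1\), while for probability vectors the value reduces to \(\int \rho \log \rho \,{\mathrm d}\mu \).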

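Given \(\mu _1,\mu _2\in {\mathscr {M}}(X)\), the Entropy-Transport problem induced by the entropy function F and the cost function \(\varvec{\mathrm c}\) is then defined as

$$\begin{aligned} \mathsf {ET}(\mu _1,\mu _2):=\inf _{\varvec{\gamma }\in {\mathscr {M}}(X\times X)}\left\{ D_F(\gamma _1||\mu _1)+D_F(\gamma _2||\mu _2)+\int _{X\times X}\varvec{\mathrm c}(x_1,x_2)\,{\mathrm d}\varvec{\gamma }(x_1,x_2)\right\} . \qquad (4) \end{aligned}$$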

We emphasize that the problem (4) makes perfect sense even when \(\mu _1(X)\ne \mu _2(X)\).
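To make the structure of the problem concrete, the sketch below evaluates the Entropy-Transport functional (sum of the two entropy penalties on the marginals plus the transport term) at a given candidate plan on finite spaces. It does not solve the minimization: any value it returns is only an upper bound for the infimum. The names `divergence` and `et_objective`, the matrix encoding of the plan, and the default choice \(F=U_1\) are assumptions made for this example, and the superlinear case is assumed throughout.

```python
import math


def u1(s):
    """Entropy function U_1(s) = s ln(s) - s + 1, extended by U_1(0) = 1."""
    return 1.0 if s == 0 else s * math.log(s) - s + 1.0


def divergence(F, gamma, mu):
    """F-divergence of gamma w.r.t. mu on a finite set (superlinear F assumed)."""
    total = 0.0
    for g, m in zip(gamma, mu):
        if m > 0:
            total += F(g / m) * m
        elif g > 0:
            return math.inf  # gamma is not absolutely continuous w.r.t. mu
    return total


def et_objective(plan, mu1, mu2, cost, F=u1):
    """Entropy-Transport functional evaluated at a candidate plan.

    plan[i][j] is the mass sent from point i of the first space to point j
    of the second one; cost[i][j] may be +inf where plan[i][j] == 0.
    """
    gamma1 = [sum(row) for row in plan]          # first marginal
    gamma2 = [sum(col) for col in zip(*plan)]    # second marginal
    transport = sum(
        cost[i][j] * plan[i][j]
        for i in range(len(plan))
        for j in range(len(plan[i]))
        if plan[i][j] > 0  # convention 0 * inf = 0
    )
    return divergence(F, gamma1, mu1) + divergence(F, gamma2, mu2) + transport
```

For a single point carrying masses 1 and 3 and zero cost, a direct computation shows that the plan of mass \(\sqrt{3}\) gives the value \((\sqrt{3}-1)^2\), which is smaller than the values obtained for the plans of mass 0 or 1.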

As in the case of optimal transport problems, it is natural to consider cost functions of the form \(\varvec{\mathrm c}(x_1,x_2)=\ell ({\mathsf {d}}(x_1,x_2))\), where \({\mathsf {d}}\) is a distance on X and \(\ell :[0,\infty )\rightarrow [0,\infty ]\) is a general function. With a careful choice of the functions F and \(\ell \) (see [14] for a discussion on the metric properties of Entropy-Transport problems), one is able to produce a distance \(\mathsf {D}_{\mathsf {ET}}\) on the space \({\mathscr {M}}(X)\) by taking a suitable power of the Entropy-Transport cost \(\mathsf {ET}\), namely \(\mathsf {D}_{\mathsf {ET}}=\mathsf {ET}^a\) for a certain \(a\in (0,1]\).

In the paper we introduce the class of regular Entropy-Transport distances. The formal definition of this class of distances is given in Definition 2; here we just mention that any regular Entropy-Transport distance \(\mathsf {D}_{\mathsf {ET}}\) is a complete and separable metric on \({\mathscr {M}}(X)\) of the form \(\mathsf {D}_{\mathsf {ET}}=\mathsf {ET}^a\), for an Entropy-Transport cost \(\mathsf {ET}\) induced by sufficiently regular functions F and \(\ell \).

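For any regular Entropy-Transport distance \(\mathsf {D}_{\mathsf {ET}}\), the Sturm-Entropy-Transport distance \({\mathbf {D}}_{\mathsf {ET}}\) between the (equivalence classes of) m.m.s. \((X_1,{\mathsf {d}}_1,\mu _1)\), \((X_2,{\mathsf {d}}_2,\mu _2)\) is then defined as

$$\begin{aligned} {\mathbf {D}}_{\mathsf {ET}}\left( (X_1,{\mathsf {d}}_1,\mu _1),(X_2,{\mathsf {d}}_2,\mu _2)\right) :=\inf \, \mathsf {D}_{\mathsf {ET}}\left( \psi ^1_{\sharp }\mu _1,\psi ^2_{\sharp }\mu _2\right) , \qquad (5) \end{aligned}$$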

where the infimum is taken over all complete and separable metric spaces \(({\hat{X}},\hat{{\mathsf {d}}})\), and all isometric embeddings \(\psi ^1:{\mathsf {supp}}(\mu _1)\rightarrow {\hat{X}}\) and \(\psi ^2:{\mathsf {supp}}(\mu _2)\rightarrow {\hat{X}}\).

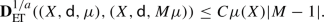

The main result of the paper (Theorem 2) is that every Sturm-Entropy-Transport distance defines a complete and separable metric structure on \({\mathbf {X}}\). Moreover it satisfies the geodesic (resp. length) property if the distance \(\mathsf {D}_{\mathsf {ET}}\) satisfies the geodesic (resp. length) property on the space of measures.

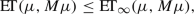

We also study in detail the notion of convergence induced by such distances, showing that it corresponds to the weak measured-Gromov convergence introduced in [21]. As a consequence, we obtain a compactness result for the class of m.m.s. \((X,{\mathsf {d}},\mu )\) satisfying the \({\mathsf {CD}}(K,N)\) condition, having bounded diameter and satisfying \(0<v\le \mu (X)\le V\). We refer to Theorem 4 for the precise statement and to the preliminaries for the definition of the curvature-dimension condition \({\mathsf {CD}}(K,N)\).

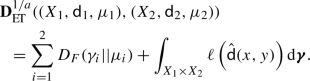

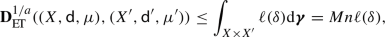

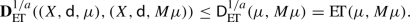

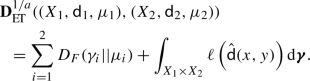

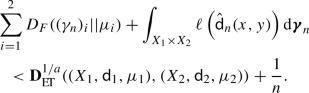

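At a technical level, the proofs of our results are inspired by the corresponding ones given by Sturm in [43], but they require new ideas in order to deal with general cost functions and with the entropic part of the problem. Two key results of independent interest are contained in Proposition 2 and Lemma 6, where we show that the infimum in the right hand side of (5) is actually a minimum, and we give an explicit formulation of the Sturm-Entropy-Transport distance, namely

$$\begin{aligned} {\mathbf {D}}_{\mathsf {ET}}\left( (X_1,{\mathsf {d}}_1,\mu _1),(X_2,{\mathsf {d}}_2,\mu _2)\right) ^{1/a}=D_F(\gamma _1||\mu _1)+D_F(\gamma _2||\mu _2)+\int _{X_1\times X_2}\ell \left( \hat{{\mathsf {d}}}(x_1,x_2)\right) {\mathrm d}\varvec{\gamma }(x_1,x_2) \end{aligned}$$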

for some optimal measure \(\varvec{\gamma }\in {\mathscr {M}}(X_1\times X_2)\) and optimal pseudo-metric coupling \(\hat{{\mathsf {d}}}\) between \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\) (see the preliminaries for the definition of pseudo-metric coupling). Also the proof of one of the main results, Theorem 2, despite being inspired by [43], departs from it and needs some new ideas:

-

in order to show that \({\mathbf {D}}_p\) defines a non-degenerate distance function (i.e.

$$\begin{aligned} {\mathbf {D}}_p \left( (X_1,{\mathsf {d}}_1,\mu _1), (X_2,{\mathsf {d}}_2,\mu _2) \right) =0 \end{aligned}$$

implies that \((X_1,{\mathsf {d}}_1,\mu _1)\) and \((X_2,{\mathsf {d}}_2,\mu _2)\) are isomorphic as metric measure spaces), Sturm [43] establishes a comparison result with Gromov’s \({\underline{\Box }}_{1}\) distance, of independent interest; this makes it possible to deduce the non-degeneracy of \({\mathbf {D}}_p\) from that of \({\underline{\Box }}_{1}\).

Instead, we argue directly: thanks to the aforementioned Proposition 2 and Lemma 6, we can exploit the existence of an optimal coupling both at the level of space and measure and infer the non-degeneracy of \({\mathbf {D}}_{\mathsf {ET}}\);

-

in order to show that the \({\mathbf {D}}_p\) distance is length, Sturm [43] argues by approximation via finite metric spaces, taking advantage of the “pure transport” behaviour of \({\mathbf {D}}_p\).

Due to the entropy contribution in the \({\mathbf {D}}_{\mathsf {ET}}\) distance, we argue differently: the main point is to embed everything in a complete, separable and geodesic ambient space, obtained by a slight modification of Kuratowski embedding.

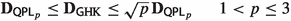

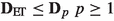

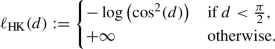

The class of regular Entropy-Transport distances contains some of the main examples of Entropy-Transport distances known in the literature, including:

-

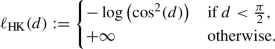

The Hellinger-Kantorovich geodesic distance [10, 25, 28, 29] induced by the choices

$$\begin{aligned} a=1/2\, , \qquad F(s)=U_1(s)\, , \qquad \ell (d)={\left\{ \begin{array}{ll} -\log \left( {\cos ^2(d)}\right) \ \ &{}\text {if} \ d<\frac{\pi }{2}, \\ +\infty \ \ &{}\text {otherwise}.\end{array}\right. } \end{aligned}$$ -

The so-called Gaussian Hellinger-Kantorovich distance [29] that corresponds to the choices

$$\begin{aligned} a=1/2\, , \qquad F(s)=U_1(s)\, , \qquad \ell (d)=d^2. \end{aligned}$$ -

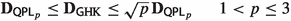

The quadratic power-like distances studied in [14] corresponding to

$$\begin{aligned} a=1/2\, , \quad F(s)=U_p(s):=\frac{s^p-p(s-1)-1}{p(p-1)}\, , \quad \ell (d)=d^2, \quad 1<p\le 3. \end{aligned}$$
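The entropy and cost functions appearing in the three examples above can be written down directly. The following sketch is only meant to make the formulas concrete; the function names `u_p`, `cost_hk` and `cost_quadratic` are hypothetical, and \(U_1\) is treated as the limiting case \(p\rightarrow 1\) of \(U_p\).

```python
import math


def u_p(s, p):
    """Power-like entropy U_p(s) = (s^p - p(s - 1) - 1) / (p(p - 1)).

    For p == 1 the limiting case U_1(s) = s ln(s) - s + 1 is returned.
    """
    if p == 1:
        return 1.0 if s == 0 else s * math.log(s) - s + 1.0
    return (s ** p - p * (s - 1.0) - 1.0) / (p * (p - 1.0))


def cost_hk(d):
    """Hellinger-Kantorovich cost: -log(cos^2(d)) if d < pi/2, +inf otherwise."""
    return -math.log(math.cos(d) ** 2) if d < math.pi / 2 else math.inf


def cost_quadratic(d):
    """Quadratic cost l(d) = d^2 (Gaussian HK and power-like distances)."""
    return d * d
```

All these entropy functions vanish at \(s=1\), and for small distances the Hellinger-Kantorovich cost is close to the quadratic one, since \(-\log (\cos ^2(d))=d^2+O(d^4)\) as \(d\rightarrow 0\).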

Moreover, our analysis is not restricted to regular Entropy-Transport distances. By a limit procedure we also discuss some singular cases covering:

-

The “pure entropy” setting that corresponds to the choice

$$\begin{aligned} \varvec{\mathrm c}(x_1,x_2)={\left\{ \begin{array}{ll}0 \ &{}\text {if} \ x_1=x_2, \\ +\infty &{}\text {otherwise.} \end{array}\right. } \end{aligned}$$

In this situation we construct a family of distances between metric measure spaces inducing a notion of strong convergence (see Theorems 5 and 6 for the details).

-

The “pure transport” setting, corresponding to

$$\begin{aligned} a=1/p\, , \qquad F(s)={\left\{ \begin{array}{ll} 0 \ &{}\mathrm {if} \ s=1, \\ +\infty &{}\mathrm {otherwise}, \end{array}\right. } \qquad \ell (d)=d^p, \end{aligned}$$

where we recover the \({\mathbf {D}}_p\)-distances introduced by Sturm.

-

The Piccoli-Rossi distance \(\mathsf {BL}\) [35, 36] (also known as bounded-Lipschitz distance), induced by the choices

$$\begin{aligned} a=1\, , \qquad F(s)=|s-1|\, , \qquad \ell (d)=d. \end{aligned}$$

By an analogous procedure to the one described in (5), in Theorem 8 we show that the distance \(\mathsf {BL}\) can be lifted to a complete distance \({\mathbf {BL}}\) on the set \({\mathbf {X}}\).

Note on the preparation. Some of the results of the paper (often under additional assumptions) have been presented at different seminars and included in the PhD thesis of the first named author [15, Chapter 5], where the construction of the Sturm-Entropy-Transport distances induced by the Hellinger-Kantorovich and the quadratic power-like distances is developed.

Only during the final stage of preparation of the present manuscript (September 2020) did we become aware of the independent work [39], which defines a class of distances (denoted by \(\mathrm {CGW}\), for “conic Gromov-Wasserstein”) between unbalanced metric measure spaces starting from the construction of the Gromov–Wasserstein distance introduced in [32] and the conical formulation of the Entropy-Transport problems (see [10, 14, 29] and Remark 1 for a discussion on the “cone geometry” of Entropy-Transport problems). The paper [39] also provides some interesting numerical discussions on the topic, but it does not study the analytic and geometric properties of this class of distances (such as completeness, separability, the length and geodesic property, compactness). An expert reader will notice that our \({\mathbf {D}}_{\mathsf {ET}}\) distance is an unbalanced counterpart of Sturm’s \({\mathbf {D}}_p\) distance [43] for probability metric measure spaces, while the Séjourné-Vialard-Peyré \(\mathrm {CGW}\) distance [39] is an unbalanced counterpart of Memoli’s [32] Gromov–Wasserstein distance. A major difference between the two approaches is that while our \({\mathbf {D}}_{\mathsf {ET}}\) distance is complete (see Theorem 2), the Gromov–Wasserstein distance of [32] is not complete, and the same is expected for the \(\mathrm {CGW}\) distance of [39]. The relation between \({\mathbf {D}}_{\mathsf {ET}}\) and \(\mathrm {CGW}\) is analysed in Sect. 5, where we prove an upper bound of the latter in terms of the former.

2 Preliminaries and notation

2.1 Metric and measure setting

A function \({\mathsf {d}}:X\times X\rightarrow [0,\infty ]\) is a pseudo-metric on the set X if \({\mathsf {d}}\) is symmetric, satisfies the triangle inequality and \({\mathsf {d}}(x,x)=0\) for every \(x\in X\). We say that \({\mathsf {d}}\) is a metric possibly attaining the value \(+\infty \) if it is a pseudo-metric such that \({\mathsf {d}}(x,y)=0\) implies \(x=y\). When \({\mathsf {d}}\) is also finite-valued, we simply say that \({\mathsf {d}}\) is a metric. A pseudo-metric space (resp. metric space) will be a couple \((X,{\mathsf {d}})\), where \({\mathsf {d}}\) is a pseudo-metric (resp. metric) on the set X.

On a pseudo-metric space we will always consider the topology induced by the open balls \(B_r(x):=\{y\in X: {\mathsf {d}}(x,y)<r\}.\) A Polish space is a separable completely metrizable topological space. We will denote by \(\mathsf {diam}(X)\) the diameter of a metric space X.

An isometry between two metric spaces \((X_1,{\mathsf {d}}_1)\), \((X_2,{\mathsf {d}}_2)\) is a map \(\psi :X_1\rightarrow X_2\) such that for every \(x,y\in X_1\) we have

$$\begin{aligned} {\mathsf {d}}_2(\psi (x),\psi (y))={\mathsf {d}}_1(x,y). \end{aligned}$$

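Given an indexed family \(\{(X_{\alpha },{\mathsf {d}}_{\alpha })|{\alpha }\in A\}\) of metric spaces, we define its disjoint union as

$$\begin{aligned} \bigsqcup _{\alpha \in A}X_{\alpha }:=\bigcup _{\alpha \in A}X_{\alpha }\times \{\alpha \}, \end{aligned}$$

endowed with a pseudo-metric \(\hat{{\mathsf {d}}}\), called pseudo-metric coupling between \(\{{\mathsf {d}}_{\alpha }\}\), such that \(\hat{{\mathsf {d}}}((x,\alpha ),(y,\alpha ))={\mathsf {d}}_{\alpha }(x,y)\) for every \(x,y\in X_{\alpha }\). The inclusion map

$$\begin{aligned} X_{\alpha }\ni x\mapsto (x,\alpha )\in \bigsqcup _{\alpha \in A}X_{\alpha } \end{aligned}$$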

is thus an isometry with image \(X_{\alpha }\times \{\alpha \}\). We will often identify, with a slight abuse of notation, the space \(X_{\alpha }\) with \(X_{\alpha }\times \{\alpha \}\).

Lemma 1

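Let \((X_1,{\mathsf {d}}_1)\), \((X_2,{\mathsf {d}}_2)\) be two complete and separable metric spaces. Let \({\hat{{\mathsf {d}}}}\) be a finite valued pseudo-metric coupling between \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\). Then the space

$$\begin{aligned} {\tilde{X}}:=(X_1\sqcup X_2)/\sim , \qquad \text {where } x\sim y \ \text { if and only if } \ {\hat{{\mathsf {d}}}}(x,y)=0, \end{aligned}$$

endowed with the distance

$$\begin{aligned} {\tilde{{\mathsf {d}}}}([x],[y]):={\hat{{\mathsf {d}}}}(x,y), \end{aligned}$$

is a complete and separable metric space. Here \([x]\in {\tilde{X}}\) denotes the equivalence class of the point \(x\in X_1\sqcup X_2\).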

Proof

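We first notice that \({\tilde{{\mathsf {d}}}}\) is well defined on \({\tilde{X}}\). Indeed, if \(x_1 \sim {\tilde{x}}_1\) and \(x_2 \sim {\tilde{x}}_2\), the triangle inequality gives

$$\begin{aligned} {\hat{{\mathsf {d}}}}(x_1,x_2)\le {\hat{{\mathsf {d}}}}(x_1,{\tilde{x}}_1)+{\hat{{\mathsf {d}}}}({\tilde{x}}_1,{\tilde{x}}_2)+{\hat{{\mathsf {d}}}}({\tilde{x}}_2,x_2)={\hat{{\mathsf {d}}}}({\tilde{x}}_1,{\tilde{x}}_2) \end{aligned}$$

and, exchanging the roles of the two pairs, the reverse inequality, which implies \({\tilde{{\mathsf {d}}}}(x_1,x_2)={\tilde{{\mathsf {d}}}}({\tilde{x}}_1,{\tilde{x}}_2)\).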

It is clear that \({\tilde{{\mathsf {d}}}}\) is a metric on \((X_1\sqcup X_2)/\sim \).

The separability is a consequence of the fact that \((X_1\sqcup X_2,{\hat{{\mathsf {d}}}})\) is separable, being the union of two separable spaces (recall that \({\hat{{\mathsf {d}}}}={\mathsf {d}}_i\) on \(X_i\), \(i=1,2\)).

To prove the completeness, let us consider a Cauchy sequence \(\{y_j\}\subset {\tilde{X}}\). It is sufficient to show that a subsequence converges with respect to \({\tilde{{\mathsf {d}}}}\). Let \(p:X_1\sqcup X_2\rightarrow {\tilde{X}}\) be the quotient map. Recalling that \(X_1\sqcup X_2=X_1\times \{0\}\cup X_2\times \{1\}\), we can suppose without loss of generality that there exists a subsequence \(\{y_{j_k}\}\) admitting representatives \(x_{j_k}\in p^{-1}(y_{j_k})\cap (X_1\times \{0\})\) (the case of representatives in \(X_2\times \{1\}\) being analogous). Up to identifying \((X_1\times \{0\},{\hat{{\mathsf {d}}}})\) with \((X_1,{\mathsf {d}}_1)\), we can infer that \(\{x_{j_k}\}\) is a Cauchy sequence in the complete space \((X_1,{\mathsf {d}}_1)\) and thus it converges. It is immediate to check that \(\{y_{j_k}\}\) converges in \({\tilde{X}}\) with respect to \({\tilde{{\mathsf {d}}}}\) and the proof is complete. \(\square \)

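Starting from a metric space \((X,{\mathsf {d}})\), we define the cone over X as the quotient space

$$\begin{aligned} (X\times [0,\infty ))/(X\times \{0\}), \end{aligned}$$

obtained from \(X\times [0,\infty )\) by collapsing all the points of \(X\times \{0\}\) to a single point.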

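If \((X,{\mathsf {d}})\) is a pseudo-metric space, we denote by \({\mathscr {M}}(X)\) the space of finite, nonnegative measures on the Borel \(\sigma \)-algebra \({\mathscr {B}}(X)\), and by \({\mathscr {P}}(X)\subset {\mathscr {M}}(X)\) the space of probability measures. We endow \({\mathscr {M}}(X)\) with the weak topology, inducing the following notion of convergence:

$$\begin{aligned} \mu _n\rightharpoonup \mu \quad \Longleftrightarrow \quad \lim _{n\rightarrow \infty }\int _X\varphi \,{\mathrm d}\mu _n=\int _X\varphi \,{\mathrm d}\mu \quad \text {for every } \varphi \in C_b(X), \end{aligned}$$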

where \(C_b(X)\) denotes the set of real, continuous and bounded functions defined on X.

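A subset \({\mathscr {K}}\subset {\mathscr {M}}(X)\) is bounded if \(\sup _{\mu \in {\mathscr {K}}}\mu (X)<\infty \) and it is equally tight if

$$\begin{aligned} \forall \,\varepsilon >0 \ \ \exists \, K_{\varepsilon }\subset X \text { compact such that } \ \mu (X{\setminus } K_{\varepsilon })\le \varepsilon \quad \text {for every } \mu \in {\mathscr {K}}. \end{aligned}$$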

Compactness properties with respect to the weak topology on \({\mathscr {M}}(X)\) are guaranteed by the following version of Prokhorov’s Theorem:

Theorem 1

Let X be a Polish space. A subset \({\mathscr {K}}\subset {\mathscr {M}}(X)\) is bounded and equally tight if and only if it is relatively compact with respect to the weak topology.

We recall that the set of measures of the form

$$\begin{aligned} \mu =\frac{M}{N}\sum _{n=1}^N\delta _{x_n}, \qquad (11) \end{aligned}$$

where \(M\in {\mathbb {R}}_+\), \(N\in {\mathbb {N}}\) and \(x_n\in X\), is dense in \({\mathscr {M}}(X)\). Moreover, if X is separable, the measures of the form (11), with \(M\in {\mathbb {Q}}_+\) and \(x_n\) in a countable dense subset of X, form a countable dense subset of \({\mathscr {M}}(X)\), proving that also the latter is a separable space.

A metric measure space will be a triple \((X,{\mathsf {d}},\mu )\) where \((X,{\mathsf {d}})\) is a complete, separable metric space and \(\mu \in {\mathscr {M}}(X)\). If there exists a point \(x_0\in X\) such that

$$\begin{aligned} \int _X{\mathsf {d}}^p(x,x_0)\,{\mathrm d}\mu (x)<\infty , \end{aligned}$$

we will say that the measure \(\mu \in {\mathscr {P}}(X)\) has finite p-moment. We denote by \({\mathscr {P}}_p(X)\) the space of measures \(\nu \in {\mathscr {P}}(X)\) with finite p-moment.

The support of the measure \(\mu \) is the smallest closed set \(X_0:={\mathsf {supp}}(\mu )\) such that \(\mu (X{\setminus } X_0)=0\). We notice that the set \({\mathsf {supp}}(\mu )\) has a natural structure of metric measure space with the induced distance, \(\sigma \)-algebra and measure (which will be denoted in the same way).

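We say that \(\varphi \) is a curve connecting \(x,y\in X\) if \(\varphi :[a,b]\rightarrow X\) is a continuous map such that \(\varphi (a)=x\) and \(\varphi (b)=y\). The length of a curve is defined as

$$\begin{aligned} \mathrm {L}(\varphi ):=\sup \sum _{i=1}^n{\mathsf {d}}(\varphi (t_{i-1}),\varphi (t_i)), \end{aligned}$$

where the supremum is taken over all the partitions \(a=t_0<t_1<\ldots <t_n=b\).

We will always assume that a curve of finite length is parametrized by constant speed, i.e.

$$\begin{aligned} \mathrm {L}(\varphi |_{[s,t]})=\frac{t-s}{b-a}\,\mathrm {L}(\varphi ) \quad \text {for every } a\le s\le t\le b. \end{aligned}$$

A metric space \((X,{\mathsf {d}})\) is called a length space if for all \(x,y\in X\)

$$\begin{aligned} {\mathsf {d}}(x,y)=\inf \left\{ \mathrm {L}(\varphi )\,:\, \varphi \text { is a curve connecting } x \text { and } y\right\} . \end{aligned}$$

A geodesic is a curve \(\varphi :[a,b]\rightarrow X\) such that

$$\begin{aligned} {\mathsf {d}}(\varphi (s),\varphi (t))=\frac{|t-s|}{b-a}\,{\mathsf {d}}(\varphi (a),\varphi (b)) \quad \text {for every } s,t\in [a,b]. \end{aligned}$$

Notice in particular that if \(\varphi \) is a geodesic then

$$\begin{aligned} \mathrm {L}(\varphi )={\mathsf {d}}(\varphi (a),\varphi (b)). \end{aligned}$$

A metric space \((X,{\mathsf {d}})\) is geodesic if any pair of points \(x,y\in X\) is connected by a geodesic.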
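The definition of the length of a curve as a supremum over partitions can be explored numerically. The sketch below computes the sum of the distances between consecutive points for the uniform partition of \([a,b]\) into n pieces, for a curve in Euclidean space; by the triangle inequality each such sum is a lower bound for the length, and refining the partition can only increase it. The function name `polygonal_length` and the choice of uniform partitions are assumptions made for this example.

```python
import math


def polygonal_length(curve, a, b, n, dist=math.dist):
    """Sum of dist(curve(t_{i-1}), curve(t_i)) over the uniform partition
    a = t_0 < t_1 < ... < t_n = b. This is a lower bound for the length."""
    points = [curve(a + (b - a) * i / n) for i in range(n + 1)]
    return sum(dist(points[i - 1], points[i]) for i in range(1, n + 1))


def segment(t):
    """Straight segment from (0, 0) to (1, 2): a geodesic of the plane."""
    return (t, 2.0 * t)


def half_circle(t):
    """Upper half of the unit circle, parametrized by the angle t in [0, pi]."""
    return (math.cos(t), math.sin(t))
```

For the segment every partition already gives the distance between the endpoints, in agreement with the fact that the length of a geodesic equals the distance between its endpoints, whereas for the half circle the sums increase towards \(\pi \) as the partition is refined.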

For a metric space \((X,{\mathsf {d}})\), the Kantorovich-Wasserstein distance \({\mathcal {W}}_p\) of order p, \(p\ge 1\), is defined as follows: for \(\mu _0,\mu _1 \in {\mathscr {M}}(X)\) we set

$$\begin{aligned} {\mathcal {W}}_p^p(\mu _0,\mu _1):=\inf _{\varvec{\gamma }}\int _{X\times X}{\mathsf {d}}^p(x,y)\,{\mathrm d}\varvec{\gamma }(x,y), \qquad (16) \end{aligned}$$

where the infimum is taken over all \(\varvec{\gamma } \in {\mathscr {M}}(X \times X)\) with \(\mu _0\) and \(\mu _1\) as the first and the second marginal, i.e. \((\pi ^1)_{\sharp }\varvec{\gamma }=\mu _0\) and \((\pi ^2)_{\sharp }\varvec{\gamma }=\mu _1\), where \(\pi ^i:X\times X\rightarrow X\) denotes the projection map \(\pi ^i(x_1,x_2)=x_i\), \(i=1,2\). A measure \(\varvec{\gamma } \in {\mathscr {M}}(X \times X)\) achieving the minimum in (16) with given marginals is called a \({\mathcal {W}}_p\)-optimal coupling for \((\mu _0,\mu _1)\). Since no measure \(\varvec{\gamma }\) with the prescribed marginals exists when the total masses differ, \({\mathcal {W}}_p(\mu _0,\mu _1)=+\infty \) when \(\mu _0(X)\ne \mu _1(X)\).
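On the real line the distance \({\mathcal {W}}_p\) between two measures made of N atoms of equal weight can be computed by matching the atoms in increasing order, since the monotone coupling is optimal for the cost \(|x-y|^p\) with \(p\ge 1\). The following sketch relies on this classical fact and is restricted to that special situation; the function name `wasserstein_1d` and the encoding of a measure as a list of atoms plus a total mass are assumptions made for this example.

```python
import math


def wasserstein_1d(xs, ys, mass_x, mass_y, p=2):
    """W_p between (mass_x / N) * sum of Diracs at xs and
    (mass_y / N) * sum of Diracs at ys, for atoms on the real line.

    Returns +inf when the total masses differ, since no coupling exists.
    """
    if len(xs) != len(ys):
        raise ValueError("this sketch needs the same number of atoms")
    if mass_x != mass_y:
        return math.inf
    n = len(xs)
    # Monotone (sorted) matching is optimal on the line for p >= 1.
    total = sum(abs(x - y) ** p for x, y in zip(sorted(xs), sorted(ys)))
    return (mass_x / n * total) ** (1.0 / p)
```

The value `math.inf` returned for different total masses is exactly the restriction that the Entropy-Transport relaxation is designed to remove.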

If \((X,{\mathsf {d}})\) is complete and separable, \(({\mathscr {P}}_p(X),{\mathcal {W}}_p)\) is a complete and separable metric space. It is geodesic when \((X,{\mathsf {d}})\) is geodesic. Moreover, for any sequence \(\mu _n\in {\mathscr {P}}_p(X)\) we have

where the latter means that for some (thus any) \(x_0\)

For a proof of these last facts, see [48, Theorem 6.18].

2.2 Curvature-Dimension condition

It is beyond the scope of this brief section to give a full account of the curvature-dimension condition and its properties; we limit ourselves to schematically recalling the basic definitions involved. The interested reader is referred to the original papers [3, 4, 5, 7, 16, 20, 21, 30, 43, 44], the survey [2] and the monograph [48].

-

For any \(K\in {\mathbb {R}}, \, N\in (1,\infty ), \,\theta >0\) and \(t\in [0,1]\), define the distortion coefficients by

$$\begin{aligned} \tau ^{(t)}_{K,N}(\theta ) := t^{\frac{1}{N}} \sigma ^{(t)}_{K, N-1}(\theta )^{\frac{N-1}{N}}, \end{aligned}$$

where

$$\begin{aligned} \sigma ^{(t)}_{K,N}(\theta ):= {\left\{ \begin{array}{ll} \infty &{}\text {if }K\theta ^2\ge N\pi ^2\\ \frac{\sin (t\theta \sqrt{K/N})}{\sin (\theta \sqrt{K/N})} &{}\text {if }0<K\theta ^2< N\pi ^2\\ t &{}\text {if }K\theta ^2=0\\ \frac{\sinh (t\theta \sqrt{-K/N})}{\sinh (\theta \sqrt{-K/N})} &{}\text {if } K\theta ^2<0 \end{array}\right. }. \end{aligned}$$

-

For every \(N\in (1,\infty )\), define the N-Rényi entropy functional relative to \(\mu \), \({{\mathcal {U}}}_N(\cdot \,| \mu ):{{\mathscr {P}}}(X) \rightarrow [-\infty ,0]\) as

$$\begin{aligned} {{\mathcal {U}}}_N(\nu | \mu ):=- \int _{X} \rho ^{1-\frac{1}{N}} {\mathrm d}\mu , \quad \text {where }\nu = \rho \mu +\nu ^s\text { and }\nu ^s\perp \mu . \end{aligned}$$

-

Define also the Boltzmann-Shannon entropy functional relative to \(\mu \), \(\mathrm{Ent}(\cdot \,| \mu ):{{\mathscr {P}}}(X) \rightarrow (-\infty ,+ \infty ]\) as

$$\begin{aligned} \mathrm{Ent}(\nu | \mu ):= \int _{X} \rho \,\log (\rho )\, {\mathrm d}\mu , \quad \text {if }\nu = \rho \mu \ll \mu \text { and }\rho \log \rho \in L^1(X,\mu ), \end{aligned}$$

and \(+\infty \) otherwise.

-

\({\mathsf {CD}}(K,\infty )\) condition: given \(K\in {\mathbb {R}}\), we say that \((X,{\mathsf {d}},\mu )\) verifies the \({\mathsf {CD}}(K,\infty )\) condition if for any pair of probability measures \(\nu _0,\nu _1\in {{\mathscr {P}}}_2 (X) \) with

$$\begin{aligned} \mathrm{Ent}(\nu _0| \mu ), \mathrm{Ent}(\nu _1| \mu )<+\infty , \end{aligned}$$

there exists a \({\mathcal {W}}_2\)-geodesic \((\nu _t)_{t\in [0,1]}\) from \(\nu _0\) to \(\nu _1\) such that

$$\begin{aligned} \mathrm{Ent}(\nu _t| \mu )\le (1-t)\, \mathrm{Ent}(\nu _0| \mu )+ t\, \mathrm{Ent}(\nu _1| \mu )- \frac{K}{2} t (1-t) {\mathcal {W}}^2_2(\nu _0, \nu _1), \end{aligned}$$

for any \(t\in [0,1]\).

-

\({\mathsf {CD}}(K,N)\) condition: given \(K\in {\mathbb {R}}\), \(N\in (1,\infty )\) we say that \((X,{\mathsf {d}},\mu )\) verifies the \({\mathsf {CD}}(K,N)\) condition if for any pair of probability measures \(\nu _0,\nu _1\in {{\mathscr {P}}}{_2}(X) \) with bounded support and with \(\nu _0,\nu _1\ll \mu \), there exists a \({\mathcal {W}}_2\)-geodesic \((\nu _t)_{t\in [0,1]}\) from \(\nu _0\) to \(\nu _1\) with \(\nu _t \ll \mu \), and a \({\mathcal {W}}_2\)-optimal coupling \(\varvec{\gamma } \in {{\mathscr {P}}}(X \times X)\) such that

$$\begin{aligned} {{\mathcal {U}}}_{N'}(\nu _t| \mu )\le - \int \left[ \tau ^{(1-t)}_{K,N'}({\mathsf {d}}(x,y))\rho _0^{-\frac{1}{N'}}(x)+ \tau ^{(t)}_{K,N'}({\mathsf {d}}(x,y)) \rho _1^{-\frac{1}{N'}}(y) \right] {\mathrm d}\varvec{\gamma } (x,y), \end{aligned}$$

for any \(N'\ge N\), \(t\in [0,1]\), where \(\rho _i\) denotes the density of \(\nu _i\) with respect to \(\mu \), \(i=0,1\).

-

Consistency property: A smooth Riemannian manifold (resp. weighted Riemannian manifold) M satisfies the \({\mathsf {CD}}(K,N)\) condition for some \(K \in {\mathbb {R}}, N\in (1,\infty )\) if and only if \(\mathrm{dim}(M)\le N\) and the Ricci curvature is bounded below by K (resp. if and only if the N-Bakry-Émery-Ricci tensor is bounded below by K).

-

Define the slope of a real valued function \(u:X\rightarrow {\mathbb {R}}\) at the point \(x\in X\) as

$$\begin{aligned} |\nabla u|(x):= {\left\{ \begin{array}{ll} \limsup _{y\rightarrow x} \frac{|u(x)-u(y)|}{{\mathsf {d}}(x,y)} &{}\text {if } x \text { is not isolated} \\ 0 &{}\text {otherwise}. \end{array}\right. } \end{aligned}$$

We denote with \(\mathrm{LIP} (X)\) the space of Lipschitz functions on \((X,{\mathsf {d}})\).

-

Let \(f\in L^2(X, \mu )\). The Cheeger energy of f is defined as

$$\begin{aligned} \mathsf {Ch}(f):= \inf \left\{ \liminf _{n\rightarrow \infty }\frac{1}{2}\int |\nabla f_n|^2 {\mathrm d}\mu \, |\, f_n\in \mathrm{LIP}(X)\cap L^2(X,\mu ), \Vert f_n-f\Vert _{L^2}\rightarrow 0 \right\} . \end{aligned}$$

One can check that the Cheeger energy \(\mathsf {Ch}:L^2(X,\mu )\rightarrow [0,\infty ]\) is convex and lower semi-continuous. Thus it admits an \(L^2\)-gradient flow, called heat flow.

-

The metric measure space \((X,{\mathsf {d}},\mu )\) is said to be infinitesimally Hilbertian if \(\mathsf {Ch}\) is a quadratic form, i.e. it satisfies the parallelogram identity.

One can check that \((X,{\mathsf {d}},\mu )\) is infinitesimally Hilbertian if and only if the heat flow for every positive time is a linear map from \(L^2(X,\mu )\) to \(L^2(X,\mu )\).

If \((X,{\mathsf {d}},\mu )\) is the metric measure space associated to a smooth Finsler manifold, one can check that \((X,{\mathsf {d}},\mu )\) is infinitesimally Hilbertian if and only if the manifold is actually Riemannian.

-

Given \(K\in {\mathbb {R}}\) and \(N\in (1,\infty ]\), we say that \((X,{\mathsf {d}},\mu )\) verifies the \({\mathsf {RCD}}(K,N)\) condition if it satisfies the \({\mathsf {CD}}(K,N)\) condition and it is infinitesimally Hilbertian.

-

Pointed measured Gromov-Hausdorff convergence: Let \((X_n,{\mathsf {d}}_n,\mu _n)\), \(n\in {\mathbb {N}}\cup \{\infty \}\), be a sequence of metric measure spaces and let \({\bar{x}}_n\in X_n\) for every \(n\in {\mathbb {N}}\cup \{\infty \}\) be a sequence of reference points. We say that \((X_n,{\mathsf {d}}_n,\mu _n, {{\bar{x}}}_n)\rightarrow (X_\infty ,{\mathsf {d}}_\infty ,\mu _\infty , {{\bar{x}}}_\infty )\) in the pointed measured Gromov-Hausdorff (pmGH) sense provided that for any \(\varepsilon ,R>0\) there exists \(N({\varepsilon ,R})\in {\mathbb {N}}\) such that for all \(n\ge N({\varepsilon ,R})\) there exists a Borel map \(f^{R,\varepsilon }_n:B_R({{\bar{x}}}_n)\rightarrow X_\infty \) such that

- \(f^{R,\varepsilon }_n({{\bar{x}}}_n)={{\bar{x}}}_\infty \),

- \(\sup _{x,y\in B_R({{\bar{x}}}_n)}|{\mathsf {d}}_n(x,y)-{\mathsf {d}}_\infty (f^{R,\varepsilon }_n(x),f^{R,\varepsilon }_n(y))|\le \varepsilon \),

- the \(\varepsilon \)-neighbourhood of \(f^{R,\varepsilon }_n(B_R({{\bar{x}}}_n))\) contains \(B_{R-\varepsilon }({{\bar{x}}}_\infty )\),

- \((f^{R,\varepsilon }_n)_\sharp (\mu _n\llcorner {B_R({{\bar{x}}}_n)})\) weakly converges to \(\mu _\infty \llcorner {B_R({{\bar{x}}}_\infty )}\) as \(n\rightarrow \infty \), for a.e. \(R>0\).

If in addition there exists \({\bar{R}}>0\) such that \(\mathrm{diam}(X_n)\le {\bar{R}}\) for every \(n\in {\mathbb {N}}\cup \{\infty \}\), then we say that \((X_n,{\mathsf {d}}_n,\mu _n)\rightarrow (X_\infty ,{\mathsf {d}}_\infty ,\mu _\infty )\) in the measured Gromov-Hausdorff (mGH for short) sense. In this case it is enough to consider only \(R={\bar{R}}\) in the above requirements.

-

-

Stability: Let \(K\in {\mathbb {R}}\) and \(N\in (1,\infty ]\) be given. Assume that \((X_n, {\mathsf {d}}_n, \mu _n)\) satisfies \({\mathsf {CD}}(K,N)\) (resp. \({\mathsf {RCD}}(K,N)\)), for every \(n\in {\mathbb {N}}\), and that \((X_n, {\mathsf {d}}_n, \mu _n, {\bar{x}}_n )\rightarrow (X_\infty ,{\mathsf {d}}_\infty ,\mu _\infty , {{\bar{x}}}_\infty )\) in the pmGH sense. Then \((X_\infty , {\mathsf {d}}_\infty , \mu _\infty )\) satisfies \({\mathsf {CD}}(K,N)\) (resp. \({\mathsf {RCD}}(K,N)\)) as well.

2.3 Entropy functionals

In this section we assume that X is a Polish space.

A function \(F:[0,+\infty )\rightarrow [0,+\infty ]\) belongs to the class \(\Gamma _0({{\mathbb {R}}_{+}})\) of the admissible entropy functions if F is convex, lower semicontinuous and \(F(1)=0\). We define the recession constant as

$$\begin{aligned} F'_{\infty }:=\lim _{s\rightarrow +\infty }\frac{F(s)}{s}, \end{aligned}$$

and we say that F is superlinear if \(F'_{\infty }=+\infty \).

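We also define the perspective function induced by \(F\in \Gamma _0({\mathbb {R}}_+)\) as the function \({\hat{F}}:[0,+\infty )\times [0,+\infty )\rightarrow [0,+\infty ]\), given by

$$\begin{aligned} {\hat{F}}(r,t):={\left\{ \begin{array}{ll} F(r/t)\,t &{} \text {if } t>0,\\ F'_{\infty }\,r &{} \text {if } t=0. \end{array}\right. } \end{aligned}$$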

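The function

$$\begin{aligned} R(r):={\left\{ \begin{array}{ll} r\,F(1/r) &{} \text {if } r>0,\\ F'_{\infty } &{} \text {if } r=0, \end{array}\right. } \end{aligned}$$(20)

is called reverse entropy.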

Let \(F\in \Gamma _{0}({\mathbb {R}}_{+})\) be an admissible entropy function. The F-divergence (also called Csiszár’s divergence or relative entropy) is the functional \(D_F:{\mathscr {M}}(X)\times {\mathscr {M}}(X)\rightarrow [0,+\infty ]\) defined by

$$\begin{aligned} D_F(\gamma ||\mu ):=\int _X F(\sigma )\,{\mathrm d}\mu +F'_{\infty }\,\gamma ^{\perp }(X), \end{aligned}$$

where \(\gamma =\sigma \mu +\gamma ^{\perp }\) is the Lebesgue decomposition of the measure \(\gamma \) with respect to \(\mu \). When F is superlinear, \(D_F(\gamma ||\mu )=+\infty \) if \(\gamma \) has a nontrivial singular part with respect to \(\mu \). Moreover, it is clear that \(D_F(\mu ||\mu )=0\).
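On a finite set the F-divergence reduces to a finite sum, which makes the role of the singular part easy to see. The following is a minimal sketch, assuming the standard form \(D_F(\gamma ||\mu )=\int F(\sigma )\,{\mathrm d}\mu +F'_{\infty }\gamma ^{\perp }(X)\); the names `f_divergence`, `U1` and `F_rec` are illustrative and do not come from the paper.

```python
import math

def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1, extended by U_1(0) = 1."""
    return 1.0 if s == 0.0 else s * math.log(s) - s + 1.0

def f_divergence(gamma, mu, F, F_rec):
    """D_F(gamma || mu) for lists of nonnegative weights on a common finite set.

    F_rec plays the role of the recession constant F'_infty
    (math.inf when F is superlinear). This is an assumed discretisation.
    """
    total = 0.0
    for g, m in zip(gamma, mu):
        if m > 0.0:
            total += F(g / m) * m   # absolutely continuous part: F(sigma) d(mu)
        elif g > 0.0:
            total += F_rec * g      # singular part: F'_infty * gamma_perp
    return total

mu = [1.0, 2.0, 0.0]
same = f_divergence(mu, mu, U1, math.inf)                     # D_F(mu || mu)
scaled = f_divergence([2.0, 2.0, 0.0], mu, U1, math.inf)      # density (2, 1)
singular = f_divergence([1.0, 2.0, 0.5], mu, U1, math.inf)    # mass outside supp(mu)
tv_like = f_divergence([1.0, 2.0, 0.5], mu, lambda s: abs(s - 1.0), 1.0)
```

With the superlinear entropy `U1` any mass placed where \(\mu \) vanishes makes the divergence infinite, while the non-superlinear choice \(F(s)=|s-1|\) (with \(F'_{\infty }=1\)) only charges that mass linearly.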

We now collect some useful properties of the relative entropies. For the proofs, see [29, Sect. 2.4].

Lemma 2

The functional \(D_F\) is jointly convex and lower semicontinuous in \({\mathscr {M}}(X)\times {\mathscr {M}}(X)\). More generally, if \(F\in \Gamma _0({\mathbb {R}}_+)\) is the pointwise limit of an increasing sequence \((F_n)\subset \Gamma _0({\mathbb {R}}_+)\) and \((\gamma ,\mu )\in {\mathscr {M}}(X)\times {\mathscr {M}}(X)\) is the weak limit of a sequence \((\gamma _n,\mu _n)\subset {\mathscr {M}}(X)\times {\mathscr {M}}(X)\), then we have

$$\begin{aligned} \liminf _{n\rightarrow \infty } D_{F_n}(\gamma _n||\mu _n)\ge D_F(\gamma ||\mu ). \end{aligned}$$

Lemma 3

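If \({\mathcal {K}}\subset {\mathscr {M}}(X)\) is bounded and \(F'_{\infty }>0\) then the set

$$\begin{aligned} {\mathbf {K}}_C:=\left\{ \gamma \in {\mathscr {M}}(X):\ D_F(\gamma ||\mu )\le C \ \text {for some} \ \mu \in {\mathcal {K}}\right\} \end{aligned}$$

is bounded for every \(C\ge 0\). Moreover, if \({\mathcal {K}}\) is also equally tight and F is superlinear, then \({\mathbf {K}}_C\) is equally tight for every \(C\ge 0\).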

The last lemma of this section shows an invariance result for the F-divergences.

Lemma 4

Let \(F\in \Gamma _0({\mathbb {R}}_{+})\) be an admissible entropy function, X, Y be two Polish spaces and \(f:X\rightarrow Y\) be a Borel injective map. Then, for any \(\gamma ,\mu \in {\mathscr {M}}(X)\) it holds

$$\begin{aligned} D_F(f_{\sharp }\gamma \,||\,f_{\sharp }\mu )=D_F(\gamma ||\mu ). \end{aligned}$$

Proof

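Let us consider the Lebesgue decompositions

$$\begin{aligned} \gamma =\sigma \mu +\gamma ^{\perp }, \qquad f_{\sharp }\gamma ={\tilde{\sigma }}\,f_{\sharp }\mu +{\tilde{\gamma }}^{\perp }. \end{aligned}$$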

Since \(f_{\sharp }\gamma \) and \(f_{\sharp }\mu \) have support contained in f(X), we can suppose without loss of generality that f is bijective.

For any Borel set \(A\subset X\) we have

By the uniqueness of the Lebesgue decomposition (see [29, Lemma 2.3]) it follows that \(\sigma ={\tilde{\sigma }}\circ f\) up to \((\mu + \gamma )\)-negligible sets and \(\gamma ^{\perp }(X)={\tilde{\gamma }}^{\perp }(f(X))={\tilde{\gamma }}^{\perp }(Y)\). In particular

\(\square \)
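The invariance in Lemma 4 can be checked numerically on finitely supported measures. The sketch below assumes the standard discrete form of \(D_F\) (density part plus \(F'_{\infty }\) times the singular mass); `push_forward` and `f_divergence` are hypothetical helper names. It also shows that injectivity matters: a map that merges two points can strictly decrease the divergence.

```python
import math
from collections import defaultdict

def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1, extended by U_1(0) = 1."""
    return 1.0 if s == 0.0 else s * math.log(s) - s + 1.0

def f_divergence(gamma, mu, F, F_rec):
    """D_F(gamma || mu) for measures given as dicts {point: mass} (assumed form)."""
    total = 0.0
    for x in set(gamma) | set(mu):
        g, m = gamma.get(x, 0.0), mu.get(x, 0.0)
        if m > 0.0:
            total += F(g / m) * m   # density part
        elif g > 0.0:
            total += F_rec * g      # singular part
    return total

def push_forward(measure, f):
    """Push-forward f_# of a finitely supported measure."""
    out = defaultdict(float)
    for x, mass in measure.items():
        out[f(x)] += mass
    return dict(out)

gamma = {0: 2.0, 1: 1.0, 2: 0.5}
mu = {0: 1.0, 1: 1.0, 2: 1.0}

def injective(x):
    return 10 * x + 3       # injective relabelling of the points

def merging(x):
    return x % 2            # not injective: identifies the points 0 and 2

d_original = f_divergence(gamma, mu, U1, math.inf)
d_injective = f_divergence(push_forward(gamma, injective),
                           push_forward(mu, injective), U1, math.inf)
d_merging = f_divergence(push_forward(gamma, merging),
                         push_forward(mu, merging), U1, math.inf)
```

Here `d_injective` agrees with `d_original` up to rounding, whereas `d_merging` is strictly smaller because the densities 2 and 0.5 are averaged at the merged point.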

3 Entropy-Transport problem and distances

Let \(\varvec{\gamma }\in {\mathscr {M}}(X\times X)\). In the sequel we denote by \(\gamma _i:=(\pi ^i)_{\sharp }\varvec{\gamma }\) the marginals of \(\varvec{\gamma }\).

We are now ready to define the Entropy-Transport problem.

Definition 1

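Let \(F\in \Gamma _{0}({\mathbb {R}}_{+})\) and let \(\varvec{\mathrm c}:X\times X\rightarrow [0,+\infty ]\) be a lower semicontinuous function. The Entropy-Transport functional between the measures \(\mu _1,\mu _2\in {\mathscr {M}}(X)\) is the functional

$$\begin{aligned} {\mathcal {ET}}(\varvec{\gamma }||\mu _1,\mu _2):=\sum _{i=1}^2D_F(\gamma _i||\mu _i)+\int _{X\times X}\varvec{\mathrm c}(x_1,x_2)\,{\mathrm d}\varvec{\gamma }(x_1,x_2), \qquad \varvec{\gamma }\in {\mathscr {M}}(X\times X). \end{aligned}$$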

We define the Entropy-Transport problem between \(\mu _1\) and \(\mu _2\) as the minimization problem

To highlight the role of the entropy function F and the cost function \(\varvec{\mathrm c}\), we also say that the value of this minimization problem is the cost of the Entropy-Transport problem induced by \((F,\varvec{\mathrm c})\).

We are particularly interested in cost functions of the form \(\varvec{\mathrm c}(x_1,x_2)=\ell ({\mathsf {d}}(x_1,x_2))\) for a certain function \(\ell :[0,\infty )\rightarrow [0,\infty ]\).
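To make the structure "entropy of the marginals plus transport cost" concrete, the following sketch evaluates the Entropy-Transport functional on a two-point space with \(F=U_1\) and \(\ell (d)=d^2\). It assumes the discrete form \(\sum _i D_F(\gamma _i||\mu _i)+\sum _{j,k}\ell ({\mathsf {d}}(x_j,x_k))\gamma _{jk}\); all function names are illustrative.

```python
import math

def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1, extended by U_1(0) = 1."""
    return 1.0 if s == 0.0 else s * math.log(s) - s + 1.0

def f_divergence(gamma, mu, F, F_rec):
    """Discrete D_F(gamma || mu): density part plus F'_infty times singular mass."""
    total = 0.0
    for g, m in zip(gamma, mu):
        if m > 0.0:
            total += F(g / m) * m
        elif g > 0.0:
            total += F_rec * g
    return total

def et_functional(plan, mu1, mu2, dist, ell, F, F_rec):
    """Entropy of both marginals of `plan` plus the cost ell(dist) integrated against it."""
    n = len(mu1)
    gamma1 = [sum(plan[i][j] for j in range(n)) for i in range(n)]  # first marginal
    gamma2 = [sum(plan[i][j] for i in range(n)) for j in range(n)]  # second marginal
    transport = sum(ell(dist[i][j]) * plan[i][j]
                    for i in range(n) for j in range(n) if plan[i][j] > 0.0)
    return (f_divergence(gamma1, mu1, F, F_rec)
            + f_divergence(gamma2, mu2, F, F_rec) + transport)

dist = [[0.0, 1.0], [1.0, 0.0]]       # two points at distance 1
mu1 = [1.0, 0.0]                      # unit mass at the first point
mu2 = [0.0, 2.0]                      # mass 2 at the second point

def ell(d):
    return d ** 2                     # quadratic cost

def value(t):
    """ET functional at the plan t * delta_{(x_0, x_1)}."""
    return et_functional([[0.0, t], [0.0, 0.0]], mu1, mu2, dist, ell, U1, math.inf)

null_value = value(0.0)               # null plan: pay F(0) * (total mass)
t_star = math.sqrt(2.0 / math.e)      # critical point of t -> value(t)
best_value = value(t_star)
```

Since \(U_1\) is superlinear, the only plans with finite cost here are multiples of \(\delta _{(x_0,x_1)}\), so minimising `value` over \(t\ge 0\) solves this toy problem: the null plan costs 3, while the optimum \(t=\sqrt{2/e}\) gives \(3-2\sqrt{2/e}\), transporting only part of the mass and paying entropy for the rest.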

In the next Proposition we recall some properties of Entropy-Transport problems (for a proof see [29]).

Proposition 1

Let us suppose that the Entropy-Transport problem between the measures \(\mu _1,\mu _2\in {\mathscr {M}}(X)\) is feasible, i.e. there exists \(\varvec{\gamma }\in {\mathscr {M}}(X\times X)\) such that \({\mathcal {ET}}(\varvec{\gamma }||\mu _1,\mu _2)<\infty \), and that F is superlinear. Then the infimum in (26) can be replaced by a minimum and the set of minimizers is a compact convex subset of \({\mathscr {M}}(X\times X)\). Moreover, the Entropy-Transport cost (26), seen as a functional of \((\mu _1,\mu _2)\), is convex and positively 1-homogeneous (thus subadditive).

Remark 1

An important role in the theory of Entropy-Transport problems is played by the marginal perspective cost H, that we are going to define.

Given a number \(c\in [0,+\infty )\) and an admissible entropy function F, we first introduce the marginal perspective function \(H_c:[0,+\infty )\times [0,+\infty )\rightarrow [0,+\infty ]\) as the lower semicontinuous envelope of the function

where R is the reverse entropy defined in (20). If \(c=+\infty \), we set

When \(\varvec{\mathrm c}:X_1\times X_2\rightarrow [0,+\infty ]\) is a lower semicontinuous cost function on two metric spaces \(X_1,X_2\), the induced marginal perspective cost

is defined as

One can give some equivalent formulations of the problem (26) in terms of the marginal perspective cost (see for instance [29, Theorem 5.8]). Moreover, the metric properties of the entropy-transport cost  defined in (26) can be read in terms of the properties of H, studied as a function on the space \({\mathfrak {C}}(X)\times {\mathfrak {C}}(X)\). This point of view, which links the Entropy-Transport structure with the conical geometry of the problem, has been deeply investigated by Liero, Mielke and Savaré for the Hellinger-Kantorovich distance [29, Sect. 7] (see also [10, 14] and [15, Chapters 3,4] for general marginal perspective functions).

For brevity, we do not enter into the details of all these formulations (but see Sect. 5 for some details on the conical construction performed in [39]). Here we only remark that for any complete and separable metric space \((X,{\mathsf {d}})\) the cost  induces a distance on the space of measures \({\mathscr {M}}(X)\) if and only if \(H^a\) is a distance on the cone \({\mathfrak {C}}(X)\), \(a\in (0,1]\). In general, it is not difficult to identify conditions on F and \(\varvec{\mathrm c}\) for which the induced function H is nonnegative, symmetric and \(H(x_1,r;x_2,t)=0\) if and only if \((x_1,r)=(x_2,t)\) as points on the cone (see [14, Proposition 4]); on the contrary, proving the triangle inequality for (a power of) H is a much more challenging problem.

3.1 Regular Entropy-Transport distances

In the next definition we introduce the class of regular Entropy-Transport distances.

Definition 2

We say that  is a regular Entropy-Transport distance if

-

There exist \(a\in (0,1]\), \(F\in \Gamma _0({\mathbb {R}}_+)\) and a function \(\ell :[0,\infty )\rightarrow [0,\infty ]\) such that for every complete and separable metric space \((X,{\mathsf {d}})\), setting \(\varvec{\mathrm c}(x_1,x_2):=\ell ({\mathsf {d}}(x_1,x_2))\), the function

coincides with the power a of the Entropy-Transport cost induced by \((F,\varvec{\mathrm c})\), namely

(28)

-

The function \(\ell \) is continuous, convex and \(\ell (s)=0\) if and only if \(s=0\).

-

F is superlinear and finite valued.

-

For every complete and separable metric space \((X,{\mathsf {d}})\), the related Entropy-Transport distance

is a complete and separable metric on \({\mathscr {M}}(X)\) inducing the weak topology.

We also write that the distance  is induced by \((a,F,\ell )\) with obvious meaning.

We notice that if  is a regular Entropy-Transport distance induced by \((a,F,\ell )\) then \(\ell \) is an increasing function and \(\lim _{d\rightarrow +\infty }\ell (d)=+\infty \).

We conclude the section with a list of examples of regular Entropy-Transport distances.

Examples 1

-

(1)

Hellinger-Kantorovich: Let \(F(s)=U_1(s):=s\log {s}-s+1\) and

It is proved in [29, Sect. 7] that

induces a regular Entropy-Transport distance, called Hellinger-Kantorovich distance. We refer also to [28] for a discussion on “weighted versions” of the Hellinger-Kantorovich distance.

-

(2)

Gaussian Hellinger-Kantorovich: Let \(F(s)=U_1(s)=s\log {s}-s+1\) and \(\ell _2(d):=d^2\).

The triple \((1/2,U_1,\ell _2)\) induces a regular Entropy-Transport distance, as discussed in [29, Sect. 7.8]. It is called Gaussian Hellinger-Kantorovich distance.

-

(3)

Quadratic power-like distances: Let

$$\begin{aligned} F(s)=U_p(s):=\frac{s^p-p(s-1)-1}{p(p-1)}, \qquad p>1 \end{aligned}$$

and \(\ell _2(d)=d^2\).

Then, for every \(1<p\le 3\) the triple \((1/2,U_p,\ell _2)\) induces a regular Entropy-Transport distance, as proved in [14, Theorem 6 and Corollary 1].

We notice that the class of entropy functions \(\{U_p\}\) satisfies \(\lim _{p\rightarrow 1} U_p(s)=U_1(s)\), justifying the notation we have used (see also [29, Example 2.5]).

-

(4)

Linear power-like distances: Let

$$\begin{aligned} F(s)=U_p(s):=\frac{s^p-p(s-1)-1}{p(p-1)}, \qquad p>1 \end{aligned}$$

and \(\ell _1(d):=d\).

For every \(p>1\), \((1/2,U_p,\ell _1)\) induces a regular Entropy-Transport distance (see again [14, Theorem 6 and Corollary 1]).
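The power-like entropies \(U_p\) appearing in the examples above are easy to tabulate. The short sketch below (function names are illustrative) checks numerically that \(U_p(1)=0\), that \(U_2(s)=(s-1)^2/2\), and that \(U_p\rightarrow U_1\) as \(p\rightarrow 1\), which is the limit used to justify the notation.

```python
import math

def U1(s):
    """Logarithmic entropy U_1(s) = s log s - s + 1, extended by U_1(0) = 1."""
    return 1.0 if s == 0.0 else s * math.log(s) - s + 1.0

def Up(s, p):
    """Power-like entropy U_p(s) = (s^p - p(s-1) - 1) / (p(p-1)) for p > 1."""
    return (s ** p - p * (s - 1.0) - 1.0) / (p * (p - 1.0))

# U_p vanishes at s = 1 for every p > 1.
values_at_one = [Up(1.0, p) for p in (1.5, 2.0, 3.0)]

# For p = 2 the entropy is the quadratic (s - 1)^2 / 2.
quadratic_gap = abs(Up(3.0, 2.0) - (3.0 - 1.0) ** 2 / 2.0)

# As p decreases to 1, U_p(s) approaches U_1(s).
limit_gap = abs(Up(2.0, 1.0 + 1e-6) - U1(2.0))
```

The removable singularity at \(p=1\) is approached here with \(p=1+10^{-6}\); taking \(p\) much closer to 1 would amplify floating-point cancellation in the numerator.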

4 Sturm-Entropy-Transport distance

We say that two metric measure spaces \((X_1,{\mathsf {d}}_1,\mu _1)\) and \((X_2,{\mathsf {d}}_2,\mu _2)\) are isomorphic if there exists an isometry \(\psi :{\mathsf {supp}}(\mu _1)\rightarrow {\mathsf {supp}}(\mu _2)\) such that \(\psi _{\sharp }\,\mu _1=\mu _2\), where \(\psi _{\sharp }\) denotes the push-forward through the map \(\psi \). A necessary condition in order to be isomorphic is that \(\mu _1(X_1)=\mu _2(X_2).\)

The family of all isomorphism classes of metric measure spaces will be denoted by \(\varvec{\mathrm {X}}\). From now on, we will identify a metric measure space with its class.

We recall now the definition of the \({\mathbf {D}}_p\)-distance due to Sturm.

Definition 3

([43]) Fix \(p\ge 1\). Let \((X_1,{\mathsf {d}}_1,\mu _1)\) and \((X_2,{\mathsf {d}}_2,\mu _2)\) be two metric measure spaces. The Sturm \({\mathbf {D}}_p\)-distance is defined as

where the infimum is taken over all complete and separable metric spaces \(({\hat{X}},\hat{{\mathsf {d}}})\) with isometric embeddings \(\psi ^1:{\mathsf {supp}}(\mu _1)\rightarrow {\hat{X}}\) and \(\psi ^2:{\mathsf {supp}}(\mu _2)\rightarrow {\hat{X}}\).

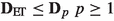

It is proved in [43, Theorem 3.6] that \({\mathbf {D}}_p\) is a complete, separable and geodesic metric on the set

We are now going to define the Sturm-Entropy-Transport distance in a similar way.

Definition 4

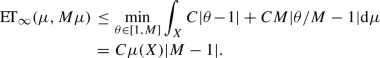

Let \((X_1,{\mathsf {d}}_1,\mu _1)\) and \((X_2,{\mathsf {d}}_2,\mu _2)\) be two metric measure spaces. We define the Sturm-Entropy-Transport distance induced by a given regular Entropy-Transport distance as

where the infimum is taken over all complete and separable metric spaces \(({\hat{X}},\hat{{\mathsf {d}}})\) with isometric embeddings \(\psi ^1:{\mathsf {supp}}(\mu _1)\rightarrow {\hat{X}}\) and \(\psi ^2:{\mathsf {supp}}(\mu _2)\rightarrow {\hat{X}}\).

It is not difficult to prove that the definition is well-posed. Indeed, let us suppose \((X'_i,{\mathsf {d}}'_i,\mu '_i)\) is isomorphic to \((X_i,{\mathsf {d}}_i,\mu _i)\) through the map \(\varphi ^i\), \(i=1,2\). Then, for every metric space \({\hat{X}}\) and every isometric embedding \(\psi ^i:{\mathsf {supp}}(\mu _i)\rightarrow {\hat{X}}\), \(i=1,2\), we have that

It is often convenient to work with explicit realisations of the ambient space \(({\hat{X}},\hat{{\mathsf {d}}})\); a particularly useful one is given by the disjoint union, which we now discuss.

Given two metric measure spaces \((X_1,{\mathsf {d}}_1,\mu _1)\) and \((X_2,{\mathsf {d}}_2,\mu _2)\), let \(X_1\sqcup X_2\) be their disjoint union. We say that a (resp. pseudo-)metric \(\hat{{\mathsf {d}}}\) on \(X_1\sqcup X_2\) is a (resp. pseudo-)metric coupling between \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\) if \(\hat{{\mathsf {d}}}(x,y)={\mathsf {d}}_1(x,y)\) when \(x,y\in X_1\) and \(\hat{{\mathsf {d}}}(x,y)={\mathsf {d}}_{2}(x,y)\) when \(x,y\in X_2\).

A finite valued metric coupling \(\hat{{\mathsf {d}}}\) between \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\) always exists: to construct it, fix two points \({\bar{x}}_1\in X_1, {\bar{x}}_2\in X_2\), a number \(c\in {\mathbb {R}}_+\), and define \(\hat{{\mathsf {d}}}\) as:

Moreover, from any finite valued pseudo-metric coupling \(\hat{{\mathsf {d}}}\) of \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\) and any \(\delta >0\) we can obtain a complete, separable metric \(\hat{{\mathsf {d}}}_{\delta }\) which is again a coupling of \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\) in the following way:

We say that a measure \(\varvec{\gamma }\in {\mathscr {M}}(X_1\times X_2)\) is a measure coupling between \(\mu _1\) and \(\mu _2\) if

for all Borel sets \(A\subset X_1\) and \(B\subset X_2\). We keep the notation \(\gamma _i\) for the marginals of the measure \(\varvec{\gamma }\in {\mathscr {M}}(X_1\times X_2)\), \(i=1,2.\)

A more explicit formulation of the function introduced in Definition 4 is given in the following Proposition.

Proposition 2

Let \((X_1,{\textsf {d} }_1,\mu _1)\) and \((X_2,{\textsf {d} }_2,\mu _2)\) be two metric measure spaces and  a regular Entropy-Transport distance induced by \((a,F,\ell )\).

-

(i)

In Definition 4 we can suppose without loss of generality that \({\hat{X}}=X_1\sqcup X_2\), that \(\psi ^1=\iota _1\) and \(\psi ^2=\iota _2\) are respectively the inclusions of \(X_1\) and \(X_2\) in \(X_1\sqcup X_2\), and that the infimum is taken over all the pseudo-metric couplings \({\hat{\textsf {d} }}\) between \({\textsf {d} }_1\) and \({\textsf {d} }_2\).

-

(ii)

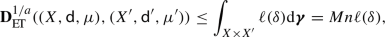

In the situation of (i) we will identify \(\mu _k\) with \((\iota _k)_\sharp \,\mu _k\), \(k=1,2\), and it holds

(34)

where

$$\begin{aligned} C:= & {} \{(\varvec{\gamma },{\hat{{\mathsf {d}}}}): \varvec{\gamma }\in {\mathscr {M}}(X_1\times X_2), \nonumber \\&\ {\hat{{\mathsf {d}}}} \ \text {finite valued pseudo-metric coupling for} \ {\mathsf {d}}_1,{\mathsf {d}}_2\} \end{aligned}$$(35)

Proof

\(\mathrm{(i)}\) We first show that the infimum as in \(\mathrm{(i)}\) is less than or equal to the infimum as in Definition 4. Let \(({\hat{X}},\hat{{\mathsf {d}}})\) be a complete and separable metric space with isometric embeddings \(\psi ^1:{\mathsf {supp}}(\mu _1)\rightarrow {\hat{X}}\), \(\psi ^2:{\mathsf {supp}}(\mu _2)\rightarrow {\hat{X}}\), and let \(\hat{\varvec{\gamma }}\in {\mathscr {M}}({\hat{X}}\times {\hat{X}})\). It is immediate to check that

defines a pseudo-metric on \(X_1\sqcup X_2\), coupling between \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\).

Moreover, introducing the Borel injective maps \(\Psi ^i: \psi ^1({\mathsf {supp}}(\mu _1))\cup \psi ^2({\mathsf {supp}}(\mu _2))\subset {\hat{X}} \rightarrow X_1\sqcup X_2\), \(i=1,2\), defined as

and using Lemma 4 it is immediate to check that \(\tilde{\varvec{\gamma }}:= (\Psi ^1,\Psi ^2)_{\sharp } \hat{\varvec{\gamma }}\in {\mathscr {M}}((X_1\sqcup X_2)\times (X_1\sqcup X_2))\) satisfies

where we have used the fact that \(\tilde{{\mathsf {d}}}(\Psi ^1(x),\Psi ^2(y))\le \hat{{\mathsf {d}}}(x,y)\) whenever \(x\in \psi ^1({\mathsf {supp}}(\mu _1))\) and \(y\in \psi ^2({\mathsf {supp}}(\mu _2)).\)

This yields that the infimum as in \(\mathrm{(i)}\) is less than or equal to the infimum as in Definition 4.

To show that the infimum as in Definition 4 is less than or equal to the infimum as in \(\mathrm{(i)}\), it is sufficient to notice that for every pseudo-metric coupling \({\hat{{\mathsf {d}}}}\), for every measure \(\varvec{\gamma }\in {\mathscr {M}}(X_1\times X_2)\) and for every \(\epsilon >0\) there is \(\delta >0\) such that the complete and separable metric \(\hat{{\mathsf {d}}}_{\delta }\) defined in (32) is a coupling between \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\) satisfying

as a consequence of the finiteness of the measure \(\varvec{\gamma }\) and the continuity of \(\ell \).

\(\mathrm{(ii)}\) In case the infimum runs over the couples \((\varvec{\gamma },{\hat{{\mathsf {d}}}})\in C\) such that \({\hat{{\mathsf {d}}}}\) is a complete and separable metric, the inequality “\(\le \)” in (34) is a simple consequence of the explicit formulation of the Entropy-Transport problem together with the fact that the superlinearity of F allows us to consider only measures \(\varvec{\gamma }\in {\mathscr {M}}((X_1\sqcup X_2)\times (X_1\sqcup X_2))\) with support contained in \(X_1\times X_2\). The fact that “\(\le \)” holds in (34) even if the infimum is taken over the larger set C is a consequence of (38).

The proof of the inequality “\(\ge \)” in (34) is analogous to the first part of the proof of \(\mathrm{(i)}\), see in particular (37). \(\square \)

In the next Lemma we collect some of the basic properties of the function introduced in Definition 4.

Lemma 5

Let  be a regular Entropy-Transport distance induced by \((a,F,\ell )\).

-

(i)

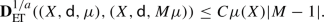

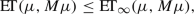

For any \(M\ge 0\) it holds

(39)

-

(ii)

If \((X_1,{\textsf {d} }_1)=(X_2,{\textsf {d} }_2)\) then

(40)

-

(iii)

The set

$$\begin{aligned} \varvec{\mathrm {X}}_{*}:=\left\{ (X,{\textsf {d} },\mu )\in \varvec{\mathrm {X}}, \ {\textsf {supp} }(\mu )=\{x_1,\ldots ,x_n\}, \ n \in {\mathbb {N}}, \ \mu =M\sum _{i=1}^n \delta _{x_i}, \ M\in {\mathbb {R}}_{+}\right\} \end{aligned}$$(41)is dense in

.

-

(iv)

If

$$\begin{aligned} \mu =M\sum _{i=1}^n \delta _{x_i} \quad \text {and} \quad \mu '=M\sum _{i=1}^n \delta _{x'_i}, \end{aligned}$$(42)then

(43)

where we put \({\textsf {d} }_{ij}={\textsf {d} }(x_i,x_j)\) and \({\textsf {d} }'_{ij}={\textsf {d} }'(x'_i,x'_j)\).

-

(v)

For any \(N>1\) there exists a constant C such that for every M, \(1/N<M<N\), we have

(44)

Proof

-

(i)

This is a consequence of the 1-homogeneity of the Entropy-Transport cost (Proposition 1) and of the push-forward map, together with the definitions of the regular Entropy-Transport distance (Definition 2) and of the Sturm-Entropy-Transport distance (Definition 4).

-

(ii)

The result follows from Definition 4, since \(({\hat{X}},{\hat{{\mathsf {d}}}})=(X_1,{\mathsf {d}}_1)\) with \(\psi ^1=\psi ^2=\mathrm {Id}\) is an admissible competitor for the infimum.

-

(iii)

The result follows from point (ii) of the present Lemma, the fact that a regular Entropy-Transport distance metrizes the weak convergence (Definition 2), and the density in \({\mathscr {M}}(X)\) of the measures \(\mu \) of the form \(M\sum _{i=1}^n \delta _{x_i}\) with respect to weak convergence.

-

(iv)

Let us assume without loss of generality that \(X=\{x_1,\ldots ,x_n\}\) and \(X'=\{x'_1,\ldots ,x'_n\}\). We put \(\delta =\sup _{i,j}|{\mathsf {d}}_{ij}-{\mathsf {d}}'_{ij}|\). We construct the following pseudo-metric coupling: on \(X\times X\) we define \({\hat{{\mathsf {d}}}}={\mathsf {d}}\), on \(X'\times X'\) we put \({\hat{{\mathsf {d}}}}={\mathsf {d}}'\), on \(X\times X'\) we define

$$\begin{aligned} {\hat{{\mathsf {d}}}}(x_i,x'_j):=\inf _{k\in \{1,\ldots ,n\}}{\mathsf {d}}(x_i,x_k)+{\mathsf {d}}'(x'_k,x'_j)+\delta , \end{aligned}$$finally on \(X'\times X\) we put

$$\begin{aligned} {\hat{{\mathsf {d}}}}(x'_i,x_j):=\inf _{k\in \{1,\ldots ,n\}}{\mathsf {d}}(x_j,x_k)+{\mathsf {d}}'(x'_k,x'_i)+\delta , \end{aligned}$$so that \({\hat{{\mathsf {d}}}}(x_i,x'_i)={\hat{{\mathsf {d}}}}(x'_i,x_i)=\delta .\)

We then define the measure coupling

$$\begin{aligned} \varvec{\gamma }=M\sum _{i=1}^n\delta _{(x_i,x'_i)}. \end{aligned}$$It is straightforward to see that \({\hat{{\mathsf {d}}}}\) and \(\varvec{\gamma }\) are actually couplings between \({\mathsf {d}},{\mathsf {d}}'\) and \(\mu ,\mu '\), respectively. Then, using Proposition 2 and recalling that \(\ell \) is an increasing function we have that

and the thesis follows.

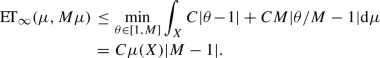

-

(v)

We can take \({\mathsf {d}}\) itself as metric coupling. Then, by the point (ii) of the present Lemma, we have

By replacing the cost \(\varvec{\mathrm c}\) with the cost

$$\begin{aligned} \varvec{\mathrm c}_{\infty }(x_1,x_2):={\left\{ \begin{array}{ll} 0 &{} \text {if } x_1=x_2\\ +\infty &{} \text {otherwise}, \end{array}\right. } \end{aligned}$$we obtain that

where we have denoted by

the Entropy-Transport problem induced by the entropy function F and the cost \(\varvec{\mathrm c}_{\infty }.\) Observe that, by convexity and since \(F(1)=0\), every admissible entropy function satisfies

$$\begin{aligned} F(s)\le C|s-1|, \quad \text {for every} \ \ 1/N<s<N, \end{aligned}$$(45)

where

$$\begin{aligned} C:=\max \left\{ \frac{F(1/N)}{1-1/N},\frac{F(N)}{N-1}\right\} . \end{aligned}$$

The conclusion now follows from an explicit computation of

together with the bound (45). Indeed, we have (see [29, Example E.5])

(46)

\(\square \)
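The pseudo-metric coupling built in the proof of point (iv) can be verified numerically on small examples. The sketch below follows the formulas of that proof, \({\hat{{\mathsf {d}}}}(x_i,x'_j)=\min _k\{{\mathsf {d}}(x_i,x_k)+{\mathsf {d}}'(x'_k,x'_j)\}+\delta \), and checks that the result is a pseudo-metric on the disjoint union that restricts to the two given metrics; `glue_coupling` and `is_pseudo_metric` are hypothetical helper names, and the two distance matrices are made-up test data.

```python
from itertools import product

def glue_coupling(d, d_prime):
    """Matrix of d_hat on X ⊔ X' (indices 0..n-1 for X, n..2n-1 for X')."""
    n = len(d)
    delta = max(abs(d[i][j] - d_prime[i][j]) for i in range(n) for j in range(n))
    d_hat = [[0.0] * (2 * n) for _ in range(2 * n)]
    for i in range(n):
        for j in range(n):
            d_hat[i][j] = d[i][j]                    # restriction to X
            d_hat[n + i][n + j] = d_prime[i][j]      # restriction to X'
            cross = min(d[i][k] + d_prime[k][j] for k in range(n)) + delta
            d_hat[i][n + j] = cross                  # d_hat(x_i, x'_j)
            d_hat[n + j][i] = cross                  # symmetric value d_hat(x'_j, x_i)
    return d_hat, delta

def is_pseudo_metric(m, tol=1e-12):
    """Check zero diagonal, nonnegativity, symmetry and the triangle inequality."""
    size = len(m)
    for i, j in product(range(size), repeat=2):
        if m[i][j] < 0.0 or abs(m[i][j] - m[j][i]) > tol:
            return False
    if any(m[i][i] != 0.0 for i in range(size)):
        return False
    return all(m[i][k] <= m[i][j] + m[j][k] + tol
               for i, j, k in product(range(size), repeat=3))

d = [[0.0, 1.0, 2.0], [1.0, 0.0, 1.5], [2.0, 1.5, 0.0]]
d_prime = [[0.0, 1.2, 2.0], [1.2, 0.0, 1.4], [2.0, 1.4, 0.0]]
d_hat, delta = glue_coupling(d, d_prime)

# Transport cost of the diagonal plan gamma = M * sum_i delta_{(x_i, x'_i)}
# for the (assumed) choice ell(d) = d^2 and M = 1: every pair sits at distance delta.
M, n = 1.0, len(d)
diagonal_cost = sum(M * d_hat[i][n + i] ** 2 for i in range(n))
```

Because the diagonal plan has marginals exactly \(\mu \) and \(\mu '\), its entropy terms vanish, so `diagonal_cost` equals \(Mn\,\ell (\delta )\) and is the whole value of the Entropy-Transport functional at this pair of couplings.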

The next Lemma shows the existence of the optimal couplings.

Lemma 6

Let  be a regular Entropy-Transport distance induced by \((a,F,\ell )\). Let \((X_1,{\textsf {d} }_1,\mu _1)\) and \((X_2,{\textsf {d} }_2,\mu _2)\) be two metric measure spaces. Then:

-

(i)

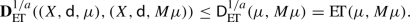

here exist a measure \(\varvec{\gamma }\in {\mathscr {M}}(X_1\times X_2)\) and a pseudo-metric coupling \({\hat{\textsf {d} }}\) between \({\textsf {d} }_1\) and \({\textsf {d} }_2\) such that

(47)

-

(ii)

There exist a complete and separable metric space \(({\tilde{X}},{\tilde{{\mathsf {d}}}})\) and isometric embeddings \(\psi ^1:{\textsf {supp} }(\mu _1)\rightarrow {\tilde{X}}\), \(\psi ^2:{\textsf {supp} }(\mu _2)\rightarrow {\tilde{X}}\) such that

(48)

where we have denoted by

the Entropy-Transport distance computed in the space \(({\tilde{X}},{\tilde{{\mathsf {d}}}})\).

Proof

-

(i)

\(\mathbf {Step\, 1}\): tightness of the plans.

By Proposition 2 there exist a sequence \(\varvec{\gamma }_n\in {\mathscr {M}}(X_1\times X_2)\) and \(\hat{{\mathsf {d}}}_n\) pseudo-metric couplings of \({\mathsf {d}}_1,{\mathsf {d}}_2\) such that

(49)

Since the entropies \(D_F((\gamma _n)_i||\mu _i)\) with respect to the fixed measures \(\mu _1\) and \(\mu _2\) are uniformly bounded along the sequence, we can apply Theorem 1 and Lemma 3 in order to obtain the existence of subsequences (from now on we will not relabel them) such that \((\gamma _n)_i\) converges weakly to some \(\gamma ^i\in {\mathscr {M}}(X_i)\), \(i=1,2\). Since \((\gamma _n)_i\) are the marginals of the measure \(\varvec{\gamma }_n\), the tightness of \((\gamma _n)_i\) implies the tightness of \(\varvec{\gamma }_n\), so that, up to a further subsequence, \(\varvec{\gamma }_n\) converges weakly to some \(\varvec{\gamma }\in {\mathscr {M}}(X_1\times X_2)\). Moreover, by the continuity of the operator \(\pi ^i_{\sharp }\) with respect to the weak topology, the marginals of \(\varvec{\gamma }\) coincide with \(\gamma ^i\), \(i=1,2.\) We notice that if \(\varvec{\gamma }\) is the null measure the proof is concluded by taking any pseudo-metric coupling \(\hat{{\mathsf {d}}}\) between \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\).

\(\mathbf {Step\, 2}\): pre-compactness of the pseudo-metric couplings.

Regarding the sequence \(\hat{{\mathsf {d}}}_n\), by the triangle inequality we have that

$$\begin{aligned} |\hat{{\mathsf {d}}}_n(x_1,y_1)-\hat{{\mathsf {d}}}_n(x_2,y_2)|\le |{\mathsf {d}}_1(x_1,x_2)+{\mathsf {d}}_2(y_1,y_2)|. \end{aligned}$$In particular, \(\hat{{\mathsf {d}}}_n\) is uniformly 1-Lipschitz with respect to the complete and separable metric \({\mathsf {d}}_1+{\mathsf {d}}_2\) on \(X_1\times X_2\). We claim it is also uniformly bounded in a point. To see this, take \(({\bar{x}},{\bar{y}})\in {\mathsf {supp}}(\varvec{\gamma })\): since \(\varvec{\gamma }_n\) weakly converges to \(\varvec{\gamma }\) for every \(r,\epsilon >0\) and for all n sufficiently large we have

$$\begin{aligned} \varvec{\gamma }_n\left( B_r({\bar{x}})\times B_r({\bar{y}})\right) \ge \varvec{\gamma }\left( B_r({\bar{x}})\times B_r({\bar{y}})\right) -\epsilon . \end{aligned}$$Fix \(r>0\) and suppose by contradiction that there exists a subsequence (not relabeled) such that \(2r\le \hat{{\mathsf {d}}}_n({\bar{x}},{\bar{y}})\rightarrow +\infty \). For \(\epsilon =\epsilon (r)\) small enough, from (49), the fact that \(({\bar{x}},{\bar{y}})\in {\mathsf {supp}}(\varvec{\gamma })\) and \(\ell \) is increasing we infer the existence of some positive constants C, c such that for all n sufficiently large

$$\begin{aligned}&C>\int _{X_1\times X_2}\ell \left( \hat{{\mathsf {d}}}_n(x,y)\right) {\mathrm d}\varvec{\gamma }_n(x,y)\\&\quad \ge \int _{B_r({\bar{x}})\times B_r({\bar{y}})}\ell \left( \hat{{\mathsf {d}}}_n({\bar{x}},{\bar{y}})-2r\right) {\mathrm d}\varvec{\gamma }_n(x,y)\\&\quad \ge \ell \left( \hat{{\mathsf {d}}}_n({\bar{x}},{\bar{y}})-2r\right) [\varvec{\gamma }(B_r({\bar{x}})\times B_r({\bar{y}}))-\epsilon ]\ge c\ell \left( \hat{{\mathsf {d}}}_n({\bar{x}},{\bar{y}})-2r\right) . \end{aligned}$$

Since \(\ell \) has bounded sublevels, this implies that there exists a constant K such that \(\hat{{\mathsf {d}}}_n({\bar{x}},{\bar{y}})<K\) for every n, which contradicts \(\hat{{\mathsf {d}}}_n({\bar{x}},{\bar{y}})\rightarrow +\infty \).

We can thus apply the Ascoli-Arzelà theorem to infer the existence of a limit function \(\hat{{\mathsf {d}}}:X_1\times X_2\rightarrow [0,\infty )\) such that \(\hat{{\mathsf {d}}}_n\) converges (up to a subsequence) pointwise to \(\hat{{\mathsf {d}}}\), the convergence being uniform on compact sets. We can extend \(\hat{{\mathsf {d}}}\) to \((X_1 \sqcup X_2)\times (X_1 \sqcup X_2)\) in order to get a limit pseudo-metric coupling, which we denote in the same way.

\(\mathbf {Step\, 3}\): passing to the limit.

Next, we pass to the limit in the following expression

$$\begin{aligned} \sum _{i=1}^2D_F((\gamma _n)_i||\mu _i)+\int _{X_1\times X_2}\ell \left( \hat{{\mathsf {d}}}_n(x,y)\right) {\mathrm d}\varvec{\gamma }_n. \end{aligned}$$By Lemma 2, the entropy is jointly lower semicontinuous and thus

$$\begin{aligned} \liminf _n D_F((\gamma _n)_i||\mu _i)\ge D_F(\gamma _i||\mu _i). \end{aligned}$$So, it is sufficient to prove that

$$\begin{aligned} \liminf _n \int _{X_1\times X_2}\ell \left( \hat{{\mathsf {d}}}_n(x,y)\right) {\mathrm d}\varvec{\gamma }_n \ge \int _{X_1\times X_2}\ell \left( \hat{{\mathsf {d}}}(x,y)\right) {\mathrm d}\varvec{\gamma }. \end{aligned}$$(50)Using the equi-tightness of \(\{\varvec{\gamma }_k\}\) we can find a sequence of compact sets \(K_{1,n}\subset X_1\) and \(K_{2,n}\subset X_2\) such that

$$\begin{aligned} \varvec{\gamma }_k\left( X_1\times X_2 {\setminus } (K_{1,n}\times K_{2,n})\right) \le \frac{1}{n} \end{aligned}$$

for every k. We define \(\ell _m(r):=\min (\ell (r),m),\) so that the sequence of functions \((x,y)\mapsto \ell _m(\hat{{\mathsf {d}}}_n(x,y))\) converges to \((x,y)\mapsto \ell _m(\hat{{\mathsf {d}}}(x,y))\) uniformly on compact subsets of \(X_1\times X_2\), as \(n\rightarrow \infty \). Possibly by taking a further subsequence via a diagonal argument, we can infer that \(\Vert \ell _m(\hat{{\mathsf {d}}})-\ell _m(\hat{{\mathsf {d}}}_n)\Vert _{\infty ;n}\rightarrow 0\) when \(n\rightarrow \infty \), where we denote by \(\Vert \cdot \Vert _{\infty ;n}\) the supremum norm in the set \(K_{1,n}\times K_{2,n}.\) Let M be a positive constant such that \(\varvec{\gamma }_n(X_1\times X_2)\le M\) for every n. We can bound the integral on the left hand side of (50) in the following way:

$$\begin{aligned}&\int _{X_1\times X_2} \ell (\hat{{\mathsf {d}}}_n) {\mathrm d}\varvec{\gamma }_n \ge \int _{X_1\times X_2} \ell _m(\hat{{\mathsf {d}}}_n) {\mathrm d}\varvec{\gamma }_n \ge \int _{K_{1,n}\times K_{2,n}} \ell _m(\hat{{\mathsf {d}}}_n) {\mathrm d}\varvec{\gamma }_n \\&\quad \ge \int _{K_{1,n}\times K_{2,n}} \ell _m(\hat{{\mathsf {d}}}) {\mathrm d}\varvec{\gamma }_n - M \Vert \ell _m(\hat{{\mathsf {d}}})-\ell _m(\hat{{\mathsf {d}}}_n)\Vert _{\infty ;n} \\&\quad \ge \int _{X_1\times X_2} \ell _m(\hat{{\mathsf {d}}}) {\mathrm d}\varvec{\gamma }_n - M \Vert \ell _m(\hat{{\mathsf {d}}})-\ell _m(\hat{{\mathsf {d}}}_n)\Vert _{\infty ;n} - m /n. \end{aligned}$$

Now we can pass to the limit with respect to n using the weak convergence of \(\{\varvec{\gamma }_n\}\), and we obtain

$$\begin{aligned} \liminf _{n} \int _{X_1\times X_2} \ell (\hat{{\mathsf {d}}}_n) {\mathrm d}\varvec{\gamma }_n\ge \int _{X_1\times X_2} \ell _m(\hat{{\mathsf {d}}}) {\mathrm d}\varvec{\gamma } \end{aligned}$$

and we conclude by letting \(m\rightarrow \infty \) and applying Beppo Levi's monotone convergence theorem.
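The truncation step can be checked numerically on a toy example. The sketch below assumes a quadratic cost \(\ell (r)=r^2\) and a hypothetical discrete measure carried by four distance values (neither is taken from the text); it only illustrates that the truncated integrals of \(\ell _m=\min (\ell ,m)\) increase to the integral of \(\ell \) as m grows.

```python
def ell(r):
    # Illustrative cost only; the text allows a general ell.
    return r * r

def ell_m(r, m):
    # Truncation used in the proof: ell_m(r) = min(ell(r), m).
    return min(ell(r), m)

def integral(cost, distances, weights):
    # Integral of cost(d) against a discrete measure with the given weights.
    return sum(w * cost(d) for d, w in zip(distances, weights))

# Hypothetical data: values of the distance on four atoms and their masses.
distances = [0.5, 1.0, 2.0, 3.0]
weights = [0.4, 0.3, 0.2, 0.1]

full = integral(ell, distances, weights)
levels = (1, 2, 4, 8, 16)
truncated = [integral(lambda r, m=m: ell_m(r, m), distances, weights) for m in levels]

for m, value in zip(levels, truncated):
    print(f"m = {m:2d}: truncated integral = {value:.4f}")
print(f"untruncated integral = {full:.4f}")
```

Once m exceeds the largest value of \(\ell \) on the support, the truncated and untruncated integrals coincide, which is the finite analogue of the monotone convergence step.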

-

(ii)

Without loss of generality we assume \({\mathsf {supp}}(\mu _i)=X_i\). By the previous point we know the existence of an optimal measure \(\varvec{\gamma }\in {\mathscr {M}}(X_1\times X_2)\) and an optimal pseudo-metric coupling \(\hat{{\mathsf {d}}}\) between \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\). We consider the complete and separable metric space \(({\tilde{X}},{\tilde{{\mathsf {d}}}})\) constructed as in Lemma 1. Denoting by \(p: X_1\sqcup X_2\rightarrow {\tilde{X}}\) the projection to the quotient and using the identification

$$\begin{aligned} X_1\sqcup X_2=X_1\times \{0\}\cup X_2\times \{1\}, \end{aligned}$$

we notice that \(X_1\times X_2 \hookrightarrow {\tilde{X}} \times {\tilde{X}}\) via the injective Borel map

$$\begin{aligned} \varvec{\psi }(x_1,x_2)=(\psi ^1(x_1),\psi ^2(x_2)):=(p(x_1,0),p(x_2,1)). \end{aligned}$$

Moreover, \(\psi ^i\) is an isometry of \((X_i,{\mathsf {d}}_i)\) onto its image in \(({\tilde{X}},{\tilde{{\mathsf {d}}}})\), \(i=1,2\). Thus, denoting by \(\gamma _i\) the marginals of \(\varvec{\gamma }\), we can consider the measure \(\varvec{\psi }_{\sharp }\varvec{\gamma }\), whose marginals are \((\psi ^1)_{\sharp }\gamma _1\) and \((\psi ^2)_{\sharp }\gamma _2\). By Lemma 4 we know that

$$\begin{aligned} D_F(\gamma _i||\mu _i)=D_F((\psi ^i)_{\sharp }\gamma _i\,||\,(\psi ^i)_{\sharp }\mu _i), \qquad i=1,2. \end{aligned}$$ (51)

By recalling the definition of \({\tilde{{\mathsf {d}}}}\), we also have

$$\begin{aligned} \int _{X_1\times X_2}\ell \left( \hat{{\mathsf {d}}}(x,y)\right) {\mathrm d}\varvec{\gamma }=\int _{{\tilde{X}}\times {\tilde{X}}}\ell \left( {\tilde{{\mathsf {d}}}}(x,y)\right) {\mathrm d}(\varvec{\psi }_{\sharp }\varvec{\gamma }). \end{aligned}$$ (52)

Thus, as a consequence of (51), (52) and the optimality of \(\varvec{\gamma }\) and \({\hat{{\mathsf {d}}}}\), the equality (48) holds on \(({\tilde{X}},{\tilde{{\mathsf {d}}}})\) (with optimal measure \(\varvec{\psi }_{\sharp }\varvec{\gamma }\)).

\(\square \)

Remark 2

It is clear that the optimal coupling \(\hat{{\mathsf {d}}}\) whose existence is proven in the previous lemma is in general only a pseudo-metric and not a metric on \(X_1\sqcup X_2\). To see this, it is sufficient to consider two isomorphic metric measure spaces \((X_1,{\mathsf {d}}_1,\mu _1)\), \((X_2,{\mathsf {d}}_2,\mu _2)\). If we denote by \(\psi :X_1\rightarrow X_2\) the isometry between \((X_1,{\mathsf {d}}_1)\) and \((X_2,{\mathsf {d}}_2)\), the optimal coupling \(\hat{{\mathsf {d}}}\) satisfies \({\hat{{\mathsf {d}}}}(x_1,\psi (x_1))=0\) for \(\mu _1\)-a.e. \(x_1\).
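A finite example makes the remark concrete. The sketch below uses a hypothetical three-point space and a relabelling as the isometry \(\psi \) (all names and values are illustrative assumptions). It builds the coupling that glues each point to its image and checks that it is a pseudo-metric on the disjoint union which vanishes on distinct points.

```python
from itertools import product

# Hypothetical finite space X1 = {0, 1, 2} with metric d1; X2 is an isometric copy.
d1 = [[0.0, 1.0, 2.0],
      [1.0, 0.0, 1.5],
      [2.0, 1.5, 0.0]]
psi = {0: 2, 1: 0, 2: 1}                      # isometry X1 -> X2 (a relabelling)
psi_inv = {v: k for k, v in psi.items()}
d2 = [[d1[psi_inv[a]][psi_inv[b]] for b in range(3)] for a in range(3)]

# Points of the disjoint union are tagged: (i, 0) lies in X1, (j, 1) lies in X2.
points = [(i, 0) for i in range(3)] + [(j, 1) for j in range(3)]

def to_x1(p):
    # Pull every point back to X1 through psi.
    idx, tag = p
    return idx if tag == 0 else psi_inv[idx]

def d_hat(p, q):
    # Coupling that glues x to psi(x): measure the distance after pulling back to X1.
    return d1[to_x1(p)][to_x1(q)]

# Triangle inequality on the whole disjoint union.
is_pseudo_metric = all(
    d_hat(p, r) <= d_hat(p, q) + d_hat(q, r) + 1e-12
    for p, q, r in product(points, repeat=3)
)
# Distinct points at distance zero: exactly the pairs (x, psi(x)) in both orders.
glued_pairs = [(p, q) for p, q in product(points, repeat=2)
               if p != q and d_hat(p, q) == 0.0]

print(is_pseudo_metric, len(glued_pairs))
```

The coupling restricts to \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\) on the two pieces, yet it identifies each point with its image, so it cannot be a metric on the disjoint union.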

The next theorem is the main result of the paper.

Theorem 2

Let \({\mathsf {D}}_{{\mathsf {ET}}}\) be a regular Entropy-Transport distance induced by \((a,F,\ell )\). Then \((\varvec{\mathrm {X}},{\mathbf {D}}_{{\mathsf {ET}}})\) is a complete and separable metric space. It is also a length (resp. geodesic) space if \({\mathsf {D}}_{{\mathsf {ET}}}\) is a length (resp. geodesic) metric.

Proof

\(\mathbf {Step\, 1}\): \({\mathbf {D}}_{{\mathsf {ET}}}\) defines a metric.

It is clear that \({\mathbf {D}}_{{\mathsf {ET}}}\) is symmetric, finite valued and nonnegative, and that it vanishes on pairs of isomorphic metric measure spaces.

We claim that \({\mathbf {D}}_{{\mathsf {ET}}}\big ((X_1,{\mathsf {d}}_1,\mu _1),(X_2,{\mathsf {d}}_2,\mu _2)\big )=0\) implies that the metric measure spaces \((X_1,{\mathsf {d}}_1,\mu _1)\) and \((X_2,{\mathsf {d}}_2,\mu _2)\) are isomorphic. By Lemma 6 there exist a measure \(\varvec{\gamma }\in {\mathscr {M}}(X_1\times X_2)\) and a pseudo-metric coupling \(\hat{{\mathsf {d}}}\) such that

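All the terms are nonnegative, so that \(D_F\big (\gamma _i||\mu _i\big )=0\) and thus \(\gamma _i=\mu _i\), \(i=1,2\). Moreover, since \(\ell (d)=0\) if and only if \(d=0\), it follows that \(\hat{{\mathsf {d}}}(x,y)=0\) for \(\varvec{\gamma }\)-a.e. \((x,y)\). We also have

$$\begin{aligned} \hat{{\mathsf {d}}}(x,y)=0 \quad \text {for every } (x,y)\in {\mathsf {supp}}(\varvec{\gamma }). \end{aligned}$$ (53)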

To see this, let \(({\bar{x}},{\bar{y}})\in {\mathsf {supp}}(\varvec{\gamma })\) so that for every \(r>0\) we have \(\varvec{\gamma }(B_r({\bar{x}},{\bar{y}}))>0\) where

We consider a sequence of balls of radius \(r_n:=1/n\), \(n\in {\mathbb {N}}\), and use the fact that \(\hat{{\mathsf {d}}}(x,y)=0\) for \(\varvec{\gamma }\)-a.e. \((x,y)\) to infer the existence of a sequence of points \((x_n, y_n)\in B_{r_n}(({\bar{x}},{\bar{y}}))\) such that \(\hat{{\mathsf {d}}}(x_n,y_n)=0\). Thus

Sending \(n\rightarrow +\infty \) and using the arbitrariness of \(({\bar{x}},{\bar{y}})\), the claim (53) follows.

Since \({\mathsf {d}}_1\) and \({\mathsf {d}}_2\) are metrics, we infer that for every \(x_{1}\in {\mathsf {supp}}(\mu _{1})\) there exists a unique \(x_{2}\in {\mathsf {supp}}(\mu _{2})\) such that \((x_{1},x_{2})\in {\mathsf {supp}}(\varvec{\gamma })\). Indeed, for any \(x_2,{\tilde{x}}_{2}\in {\mathsf {supp}}(\mu _{2})\) such that \((x_{1},x_{2}), (x_{1},{\tilde{x}}_{2})\in {\mathsf {supp}}(\varvec{\gamma })\) we have

and thus \(x_2={\tilde{x}}_2\). Switching the roles of \(X_{1}\) and \(X_{2}\) in the argument above, we obtain the existence of a bijection \(\psi : {\mathsf {supp}}(\mu _{1}) \rightarrow {\mathsf {supp}}(\mu _{2})\) such that \(\varvec{\gamma }=(\mathrm {Id},\psi )_{\sharp }\mu _1\) and (in virtue of (53))

$$\begin{aligned} \hat{{\mathsf {d}}}(x,\psi (x))=0 \quad \text {for every } x\in {\mathsf {supp}}(\mu _1). \end{aligned}$$ (54)

Let \(x,y\in {\mathsf {supp}}(\mu _{1})\). From (54) and the triangle inequality it follows that

which implies that \(\psi : {\mathsf {supp}}(\mu _{1}) \rightarrow {\mathsf {supp}}(\mu _{2})\) is an isometry.

Hence \((X_1,{\mathsf {d}}_1,\mu _1)\) and \((X_2,{\mathsf {d}}_2,\mu _2)\) are isomorphic, as claimed.

Regarding the triangle inequality, let \((X_i,{\mathsf {d}}_i,\mu _i)\), \(i=1,2,3\), be three metric measure spaces. From the definition of \({\mathbf {D}}_{{\mathsf {ET}}}\) and Proposition 2, for every \(\epsilon >0\) we find a pseudo-metric coupling \({\mathsf {d}}_{12}\) between \({\mathsf {d}}_{1}\) and \({\mathsf {d}}_{2}\), and a pseudo-metric coupling \({\mathsf {d}}_{23}\) between \({\mathsf {d}}_{2}\) and \({\mathsf {d}}_{3}\) such that

where we have denoted by \({\mathsf {D}}_{{\mathsf {ET}},{\mathsf {d}}}\) the Entropy-Transport distance induced by the pseudo-metric \({\mathsf {d}}\). Set \(X:=X_1\sqcup X_2\sqcup X_3\) and define a pseudo-metric \({\mathsf {d}}\) on X in the following way

We notice that \({\mathsf {d}}\) coincides with \({\mathsf {d}}_i\) when restricted to \(X_i\). By applying Proposition 2, the point (ii) of Lemma 5 and the triangle inequality of \({\mathsf {D}}_{{\mathsf {ET}},{\mathsf {d}}}\) we obtain

The conclusion follows since \(\epsilon >0\) is arbitrary.
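The gluing of two couplings can be tested on finite spaces. The sketch below assumes the usual gluing rule \({\mathsf {d}}(x_1,x_3):=\inf _{x_2\in X_2}\big ({\mathsf {d}}_{12}(x_1,x_2)+{\mathsf {d}}_{23}(x_2,x_3)\big )\) across the two outer spaces. This rule, the three finite subsets of the real line and the offsets `C12`, `C23` are all illustrative assumptions. The code then checks the triangle inequality on the whole disjoint union.

```python
from itertools import product

# Hypothetical finite spaces, each a subset of the real line with d_i(x, y) = |x - y|.
X1, X2, X3 = [0.0, 1.0], [0.0, 2.0], [1.0, 3.0]
C12, C23 = 0.5, 0.25  # illustrative offsets; any nonnegative values give valid couplings

def d12(x, y):
    # Pseudo-metric coupling between d1 and d2 (x in X1, y in X2).
    return abs(x - y) + C12

def d23(y, z):
    # Pseudo-metric coupling between d2 and d3 (y in X2, z in X3).
    return abs(y - z) + C23

# Points of X1 ⊔ X2 ⊔ X3 are tagged with the index of their space.
points = [(x, 1) for x in X1] + [(y, 2) for y in X2] + [(z, 3) for z in X3]

def d(p, q):
    (x, i), (y, j) = p, q
    if i > j:
        return d(q, p)                      # symmetry
    if i == j:
        return abs(x - y)                   # restriction to X_i is d_i
    if (i, j) == (1, 2):
        return d12(x, y)
    if (i, j) == (2, 3):
        return d23(x, y)
    # (i, j) == (1, 3): pass through the middle space X2.
    return min(d12(x, w) + d23(w, y) for w in X2)

triangle_ok = all(
    d(p, r) <= d(p, q) + d(q, r) + 1e-12
    for p, q, r in product(points, repeat=3)
)
print(triangle_ok, d((0.0, 1), (1.0, 3)))
```

On finite spaces the infimum is a minimum, so the glued function can be evaluated exactly.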

\(\mathbf {Step\, 2}\): Completeness of  .

.

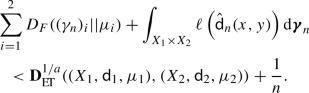

In order to prove completeness, let \(\{(X_n,{\mathsf {d}}_n,\mu _n)\}_{n\in {\mathbb {N}}}\) be a Cauchy sequence in the space \((\varvec{\mathrm {X}},{\mathbf {D}}_{{\mathsf {ET}}})\). In order to have convergence of the full sequence, it is enough to prove that there exists a converging subsequence. Let us consider a subsequence such that

By definition of \({\mathbf {D}}_{{\mathsf {ET}}}\) and Proposition 2, we can find a measure \(\varvec{\gamma }_{k+1}\in {\mathscr {M}}(X_{n_k}\times X_{n_{k+1}})\) and a complete and separable metric coupling \(\hat{{\mathsf {d}}}_{k+1}\) between \({\mathsf {d}}_{X_{n_k}}\) and \({\mathsf {d}}_{X_{n_{k+1}}}\) such that

where \(\sigma _{n_k}\) (resp. \(\sigma _{n_{k+1}}\)) is the Radon-Nikodym derivative of the first (resp. second) marginal of \(\varvec{\gamma }_{k+1}\) with respect to \(\mu _{n_k}\) (resp. \(\mu _{n_{k+1}}\)).

Now we want to define a sequence \(\big \{(X'_k,{\mathsf {d}}'_k)\big \}_{k=1}^{\infty }\) of metric spaces such that \(X_{n_k}\subset X'_k\) and \(X'_k\subset X'_{k+1}\). We proceed in the following way: we set

where \(x\sim y\) if \({\mathsf {d}}'_{k+1}(x,y)=0\) and the latter is defined as

From the definition of \({\mathsf {d}}'_k\), it is clear that we can endow the space \(X':=\bigcup _{k=1}^{\infty } X'_k\) with a limit metric \({\mathsf {d}}'\). Now we consider the completion \((X,{\mathsf {d}})\) of \((X',{\mathsf {d}}')\) and we notice that \((X_{n_k},{\mathsf {d}}_{X_{n_k}})\) is isometrically embedded in this space for every k. Using the embedding, we can also define a measure \({\bar{\mu }}_{n_k}\) as the push-forward of the measure \(\mu _{n_k}.\) Combining the construction above with (55) gives

where \({\mathsf {D}}_{{\mathsf {ET}}}\) is the regular Entropy-Transport distance computed in the space \((X,{\mathsf {d}}).\) In particular, (56) implies that \(({\bar{\mu }}_{n_{k}})_{k\in {\mathbb {N}}}\) is a Cauchy sequence in \(({\mathscr {M}}(X),{\mathsf {D}}_{{\mathsf {ET}}})\). Since \(({\mathscr {M}}(X),{\mathsf {D}}_{{\mathsf {ET}}})\) is complete, there exists \(\mu \in {\mathscr {M}}(X)\) such that \({\mathsf {D}}_{{\mathsf {ET}}}({\bar{\mu }}_{n_k},\mu )\rightarrow 0\) as \(k\rightarrow \infty \).

Using again that \((X_{n_k},{\mathsf {d}}_{X_{n_k}})\) is isometrically embedded in \((X,{\mathsf {d}})\) and the point (ii) of Lemma 5, we can conclude that

\(\mathbf {Step\, 3}\): Separability of \((\varvec{\mathrm {X}},{\mathbf {D}}_{{\mathsf {ET}}})\).

Thanks to (iii) of Lemma 5 it is enough to show that the set \(\varvec{\mathrm {X}}_*\), defined in (41), is separable. To this aim, we notice that \(\varvec{\mathrm {X}}_*\) can be written as \(\bigsqcup _{n\in {\mathbb {N}}} \tilde{{\mathcal {K}}}_n\) where

Since the set of all \((D,M)=(D_{ij},M)\in {\mathbb {R}}_+^{n\times n}\times {\mathbb {R}}_{+}\) such that

is separable (as a subset of the Euclidean space), using (iv) of Lemma 5 we get that

is separable for every fixed \(n\in {{\mathbb {N}}}, M>0\). The separability of \(\tilde{{\mathcal {K}}}_n\) follows from the separability of \(\tilde{{\mathcal {K}}}_{n,M}\) combined with (v) of Lemma 5.

\(\mathbf {Step\, 4}\): Length/geodesic property of \((\varvec{\mathrm {X}},{\mathbf {D}}_{{\mathsf {ET}}})\).

Let us start by proving the length property. Let \((X_1,{\mathsf {d}}_1,\mu _1),\, (X_2,{\mathsf {d}}_2,\mu _2) \in \varvec{\mathrm {X}}\). By definition of \({\mathbf {D}}_{{\mathsf {ET}}}\), for every \(\varepsilon >0\) we can find a complete and separable metric space \((X, {\mathsf {d}})\) and isometric embeddings \(\psi ^i: {\mathsf {supp}}(\mu _i) \rightarrow X\), \(i=1,2\), such that

where, as before, we identify \({\mathsf {supp}}(\mu _i)\) with its isometric image \(\psi ^i({\mathsf {supp}} (\mu _i))\), and correspondingly \(\mu _i\) with \(\psi ^i_\sharp \mu _{i}\), \(i=1,2\), in order to keep notation short.

Recall that, by slightly modifying the classical Kuratowski embedding, one can show that every complete and separable metric space can be isometrically embedded in a complete, separable and geodesic metric space (see for instance [23, Exercise 1c. Ch. 3\(\frac{1}{2}.1\)] or [22, Proposition 1.2.12]). Thus, recalling also Lemma 4, without loss of generality we can assume that the complete and separable metric space \((X,{\mathsf {d}})\) above is also geodesic.
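For a finite metric space the classical Kuratowski map can be written down explicitly. The sketch below uses a hypothetical four-point distance matrix (an illustrative assumption) and is not the modified construction cited above. It sends the i-th point to the vector of its distances to all points, checks that the map is an isometry into \({\mathbb {R}}^n\) with the supremum norm, and checks that linear midpoints in the target realise half the distance.

```python
# Hypothetical finite metric space given by its distance matrix.
D = [[0.0, 1.0, 2.0, 2.5],
     [1.0, 0.0, 1.5, 2.0],
     [2.0, 1.5, 0.0, 1.0],
     [2.5, 2.0, 1.0, 0.0]]
n = len(D)

def embed(i):
    # Kuratowski map: x_i -> (d(x_i, x_1), ..., d(x_i, x_n)).
    return list(D[i])

def sup_dist(u, v):
    # Distance induced by the supremum norm on R^n.
    return max(abs(a - b) for a, b in zip(u, v))

def midpoint(u, v):
    # Linear midpoint; the target space is a normed space, hence geodesic.
    return [(a + b) / 2.0 for a, b in zip(u, v)]

is_isometry = all(
    abs(sup_dist(embed(i), embed(j)) - D[i][j]) < 1e-12
    for i in range(n) for j in range(n)
)
midpoints_ok = all(
    abs(sup_dist(embed(i), midpoint(embed(i), embed(j))) - D[i][j] / 2.0) < 1e-12
    for i in range(n) for j in range(n)
)
print(is_isometry, midpoints_ok)
```

The midpoints generally lie outside the image of the original space, which is why the ambient space, rather than the space itself, is the geodesic one.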

By assumption \({\mathsf {D}}_{{\mathsf {ET}}}\) is a length distance on \({\mathscr {M}}(X)\) since \((X,{\mathsf {d}})\) is a length space, so that we can find a curve \((\mu _t)_{t\in [1,2]}\subset {\mathscr {M}}(X)\) from \(\mu _1\) to \(\mu _2\) satisfying

Now, it is easy to check that the \({\mathbf {D}}_{{\mathsf {ET}}}\)-length of the curve of m.m.s. \(((X, {\mathsf {d}}, \mu _{t}))_{t\in [1,2]}\subset \varvec{\mathrm {X}}\) satisfies

Indeed the length of a curve is by definition the supremum of the sums of mutual distances over finite partitions (13), and for every partition \((t_{i})\) of [1, 2] it holds

The combination of (59), (60) and (61) gives

as desired.
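The definition of length as a supremum over finite partitions can be illustrated in a familiar setting. The sketch below replaces the space of measures by the Euclidean plane and takes a half-circle parametrised on [1, 2] as the curve (both are illustrative assumptions); it shows that the partition sums increase under refinement and approach the length \(\pi \).

```python
import math

def curve(t):
    # Hypothetical curve on [1, 2]: the upper unit half-circle in the Euclidean plane.
    angle = math.pi * (t - 1.0)
    return (math.cos(angle), math.sin(angle))

def partition_sum(times):
    # Sum of mutual distances along the partition t_0 < t_1 < ... < t_N.
    pts = [curve(t) for t in times]
    return sum(math.dist(p, q) for p, q in zip(pts, pts[1:]))

def uniform_partition(n):
    # Uniform partition of [1, 2] into n subintervals.
    return [1.0 + i / n for i in range(n + 1)]

# Dyadic refinements: each partition contains the previous one.
sums = [partition_sum(uniform_partition(2 ** k)) for k in range(11)]

for k, s in enumerate(sums):
    print(f"N = {2 ** k:4d}: partition sum = {s:.6f}")
```

Every partition sum is a lower bound for the length, so an upper bound on the length controls all of them at once.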

To prove the geodesic property in the case \({\mathsf {D}}_{{\mathsf {ET}}}\) is a geodesic distance, we notice that the argument above applies verbatim with \(\varepsilon =0\). Here the existence of an optimal complete and separable metric space on which (59) holds with \(\varepsilon =0\) follows from (ii) of Lemma 6. \(\square \)

Remark 3

It is proved in [29, Proposition 8.3] that \(({\mathscr {M}}(X),{\mathsf {HK}})\) is a geodesic space when the underlying space \((X,{\mathsf {d}})\) is geodesic. In particular, the last claim of Theorem 2 can be applied to the Hellinger-Kantorovich distance.