Abstract

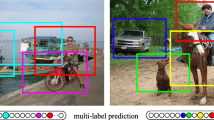

Multi-label image classification is a fundamental and challenging task in computer vision. Although the CNN–RNN pattern has achieved remarkable success, such methods converge slowly because of the RNN module. Instead of using RNN modules, this paper proposes a novel channel correlation network, based entirely on convolutional neural networks (CNNs), that models label correlations with high training efficiency. A new attention module further convolves the image features extracted by the CNN to obtain the correspondence between each label and the channel-wise feature maps. We then alternate squeeze-and-excitation (SE) blocks and convolution operations to suppress irrelevant information and better explore the label correlations. Experiments on PASCAL VOC 2007 and MIRFlickr25k show that our model effectively exploits the dependencies between multiple labels to achieve better performance.
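To make the idea of pairing channel reweighting with a label-wise convolution concrete, the following is a minimal pure-Python sketch. It is not the network described in this paper: the function names, the tensor shapes, the single SE-then-convolution step, and the final global max pooling with a sigmoid are all assumptions chosen for illustration, and the weights are random rather than trained.

```python
import math
import random


def squeeze_excite(fmap, w1, w2):
    """Reweight the channels of fmap (C x H x W nested lists) with an SE-style gate."""
    # Squeeze: global average pooling reduces each channel to one scalar.
    z = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in fmap]
    # Excitation: bottleneck layer with ReLU, then a layer with sigmoid gates in (0, 1).
    hidden = [max(0.0, sum(w * v for w, v in zip(row, z))) for row in w1]
    gates = [1.0 / (1.0 + math.exp(-sum(w * h for w, h in zip(row, hidden))))
             for row in w2]
    # Scale: multiply every value in a channel by that channel's gate.
    scaled = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, fmap)]
    return scaled, gates


def conv1x1(fmap, weights):
    """1x1 convolution: each output channel is a weighted sum of the input channels."""
    h, w = len(fmap[0]), len(fmap[0][0])
    return [[[sum(wk[c] * fmap[c][i][j] for c in range(len(fmap)))
              for j in range(w)] for i in range(h)] for wk in weights]


def label_scores(fmap, w1, w2, w_label):
    """Assumed pipeline: SE gate, 1x1 conv to one map per label, max pool, sigmoid."""
    scaled, _ = squeeze_excite(fmap, w1, w2)
    per_label = conv1x1(scaled, w_label)
    return [1.0 / (1.0 + math.exp(-max(max(row) for row in ch))) for ch in per_label]


def rand_matrix(n, m):
    """Hypothetical helper: n x m matrix of uniform random values in [-1, 1]."""
    return [[random.uniform(-1, 1) for _ in range(m)] for _ in range(n)]


random.seed(0)
C, H, W, K, R = 8, 4, 4, 3, 2  # channels, height, width, labels, bottleneck width
fmap = [rand_matrix(H, W) for _ in range(C)]
scores = label_scores(fmap, rand_matrix(R, C), rand_matrix(C, R), rand_matrix(K, C))
print(scores)  # one score in (0, 1) per label
```

The 1x1 convolution is what ties each label to a weighted combination of channels, while the SE gate decides how much each channel contributes before that combination is formed; alternating the two, as the abstract describes, would repeat this pair several times.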

Acknowledgements

We express our sincere thanks to the anonymous reviewers for their helpful comments and suggestions to raise the standard of our paper. This work is partly supported by the National Natural Science Foundation of China under Grant No. 61672202 and State Key Program of NSFC-Shenzhen Joint Foundation under Grant No. U1613217.

Cite this article

Xue, L., Jiang, D., Wang, R. et al. Learning semantic dependencies with channel correlation for multi-label classification. Vis Comput 36, 1325–1335 (2020). https://doi.org/10.1007/s00371-019-01731-5

DOI: https://doi.org/10.1007/s00371-019-01731-5