Abstract

We consider directed polymers in random environment in the critical dimension \(d = 2\), focusing on the intermediate disorder regime when the model undergoes a phase transition. We prove that, at criticality, the diffusively rescaled random field of partition functions has a unique scaling limit: a universal process of random measures on \({\mathbb {R}}^2\) with logarithmic correlations, which we call the Critical 2d Stochastic Heat Flow. It is the natural candidate for the long sought solution of the critical 2d Stochastic Heat Equation with multiplicative space-time white noise.


1 Introduction and main results

1.1 Overview

The model of directed polymer in random environment (DPRE) is by now a fundamental model in statistical physics and probability theory. It is one of the simplest and yet most challenging models for disordered systems, where the effect of disorder—which is synonymous with random environment—can be investigated. Originally introduced by Huse and Henley [59] in the physics literature to study interfaces of the Ising model with random impurities, over the years, DPRE has become an object of mathematical interest and lies at the heart of two areas of intense research in recent years. On the one hand, it is one of the canonical examples in the Kardar–Parisi–Zhang (KPZ) universality class of interface growth models, which has witnessed tremendous progress over the last two decades in spatial dimension \(d=1\) (see e.g. the surveys [34, 35, 73]); on the other hand, it provides a discretisation of the Stochastic Heat Equation (SHE) and (via the Cole-Hopf transformation) of the Kardar–Parisi–Zhang (KPZ) equation, for which a robust solution theory in \(d=1\) has been developed only recently in the larger context of singular stochastic partial differential equations (SPDE) [49, 53, 55, 56, 64].

Our goal in this paper is to consider DPRE in the critical spatial dimension \(d=2\), for which much remains unknown. Our main result shows that, in a critical window for the disorder strength, the family of partition functions of DPRE converges to a universal limit, which can be interpreted as the solution of the (classically ill-defined) 2-dimensional SHE. This is the first example of a singular SPDE for which a solution has been constructed in the critical dimension and for critical disorder strength.

In the remainder of the introduction, we first recall the definition of DPRE, its basic properties, and the works leading up to our current result. We then present our main results and discuss their connections with singular SPDEs and related research.

1.2 The model

The first ingredient in the definition of DPRE is a simple symmetric random walk \((S = (S_n)_{n\geqslant 0}, \mathrm P)\) on \({\mathbb {Z}}^d\), started at \(S_0 = 0\). To specify a different starting time m and position z, we will write \(\mathrm P(\,\cdot \,|S_m = z)\). The second ingredient is the disorder or random environment, encoded by a family of i.i.d. random variables \((\omega = (\omega (n,z))_{n\in {\mathbb {N}}, z\in {\mathbb {Z}}^d}, {{\mathbb {P}}} )\) with zero mean, unit variance and some finite exponential moments:

Given \(N\in {\mathbb {N}}\), \(\beta >0\), and a realization of \(\omega \), the polymer measure of length \(N\) and disorder strength (inverse temperature) \(\beta \) in the random environment \(\omega \) is given by

where

is the partition function. Note that \(\lambda (\beta )\) in the exponent ensures that \({{\mathbb {E}}} [Z_N^{\beta ,\,\omega }(z)]=1\).
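The partition function can be evaluated numerically by a transfer-matrix recursion in time. The following Python sketch assumes \(d=2\), standard normal disorder (so that \(\lambda (\beta ) = \beta ^2/2\)), and disorder collected at times \(1 \leqslant n \leqslant N-1\); the function name `partition_function` and these normalizations are illustrative assumptions, not the paper's definition.

```python
import math
import random

STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # nearest-neighbour steps on Z^2


def partition_function(N, beta, rng):
    """Point-to-plane partition function of a walk started at the origin.

    Assumptions (hypothetical, for illustration only): omega(n, z) are
    i.i.d. N(0, 1), so lambda(beta) = beta**2 / 2, and the Boltzmann weight
    exp(beta * omega - lambda) is applied at times n = 1, ..., N - 1.
    """
    lam = 0.5 * beta * beta
    weights = {(0, 0): 1.0}  # weights[z] = weighted mass of paths ending at z
    for _ in range(1, N):
        spread = {}
        # One random-walk step: each site passes 1/4 of its mass to each neighbour.
        for (x, y), w in weights.items():
            for dx, dy in STEPS:
                key = (x + dx, y + dy)
                spread[key] = spread.get(key, 0.0) + 0.25 * w
        # Each (time, site) pair is visited once, so a fresh omega is sampled here.
        weights = {
            z: w * math.exp(beta * rng.gauss(0.0, 1.0) - lam)
            for z, w in spread.items()
        }
    return sum(weights.values())


if __name__ == "__main__":
    rng = random.Random(0)
    samples = [partition_function(30, 0.4, rng) for _ in range(200)]
    print(sum(samples) / len(samples))  # empirical mean, expected to be near 1
```

With \(\beta = 0\) every weight equals 1 and the recursion returns exactly 1, which reflects the normalization \({{\mathbb {E}}} [Z_N^{\beta ,\,\omega }(z)]=1\) in the trivial case.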

In the mathematical literature, DPRE was first studied by Imbrie and Spencer [60]. There have been many results since then, although many fundamental questions remain open. We briefly recall what is known and refer to the recent monograph by Comets [30] for more details and references.

DPRE exhibits a phase transition between a weak disorder phase and a strong disorder phase. Using the martingale structure of the partition functions \((Z_N^{\beta ,\,\omega }(0))_{N\in {\mathbb {N}}}\), first identified by Bolthausen in [6], DPRE is said to be in the weak disorder (or strong disorder) phase if the martingale converges almost surely to a positive limit (or to 0). It was later shown in [33] that there is a critical value \(\beta _c\geqslant 0\) such that strong disorder holds for \(\beta >\beta _c\) and weak disorder holds for \(0 \leqslant \beta < \beta _c\), where \(\beta _c \in (0,\infty )\) for \(d\geqslant 3\) [6, 60], and \(\beta _c=0\) for \(d=1,2\) [22, 32] (see also [10, 65, 72]).

In the weak disorder phase, a series of works culminating in [33] showed that the random walk under the polymer measure converges to a Brownian motion under diffusive scaling of space and time, as if the disorder is not present. In the strong disorder phase, it is believed that under the polymer measure, the path should be super-diffusive, but this has only been proved for special integrable models in dimension \(d=1\), see [36, 61]. Even less is known in \(d\geqslant 2\) due to the lack of integrable models within the same universality class. We mention that the strong disorder phase can alternatively be characterised by the fact that two polymer paths sampled independently in the same random environment have positive overlap, see [22, 32, 77] and the more recent results [5, 8, 9, 24].

1.3 The case \(d=2\)

Henceforth, we will focus on dimension \(d=2\). Surprisingly, even though \(\beta _c=0\), there is still a weak to strong disorder transition, which was identified in [17]. More precisely, if we choose \(\beta =\beta _N={\hat{\beta }}/\sqrt{\log N}\), which is called an intermediate disorder regime, then it was shown in [17] that below the critical point \({\hat{\beta _c}} = \sqrt{\pi }\), the partition function \(Z_N^{\beta _N, \,\omega }(0)\) converges in distribution to a log-normal random variable, which is strictly positive, while at and above \({\hat{\beta _c}}\), it converges to 0 (such a transition does not occur in \(d=1\)). This raises many interesting questions about the 2-dimensional DPRE.

There are two main perspectives in the study of the partition functions of DPRE. One is to investigate the fluctuation of a single log-partition function \(\log Z_N^{\beta , \,\omega }(0)\) as \(N\rightarrow \infty \). In \(d=1\), this is conjectured to converge, under suitable rescaling, to the universal Tracy–Widom distribution whenever \(\beta >0\). Similar universal fluctuations are expected to arise in \(d\geqslant 2\) when \(\beta >\beta _c\), although only numerical results are available so far [57, 58]. In \(d=2\) and in the intermediate disorder regime \(\beta _N= {\hat{\beta }}/\sqrt{\log N}\) with a sub-critical interaction strength \({\hat{\beta }}<{\hat{\beta _c}}\), [17] showed that \(\log Z_N^{\beta _N, \,\omega }(0)\) converges to a universal normal limit independent of the law of \(\omega \). The super-critical case \({\hat{\beta }} \geqslant {\hat{\beta _c}}\) remains a difficult challenge.

Another perspective, which we take in this paper, is to study the diffusively rescaled field of partition functions indexed by all starting points in space-time:

as well as the diffusively rescaled field of log-partition functions:

The fields \({{\mathcal {U}}} _N\) and \({{\mathcal {H}}} _N\) provide natural discretizations of the solutions of the two-dimensional Stochastic Heat Equation (SHE) and Kardar–Parisi–Zhang equation (KPZ) respectively:

where \({\dot{W}} = {\dot{W}}(t,x)\) denotes space-time white noise. These stochastic PDEs are singular and ill-posed: even the recent breakthrough solution theories of regularity structures [55, 56] and paracontrolled distributions [53, 54] only apply in \(d=1\) but not in the critical dimension \(d=2\). Therefore, if \({{\mathcal {U}}} _N\) and \({{\mathcal {H}}} _N\) admit non-trivial limits, then these limits are natural candidates for the long-sought solutions of SHE and KPZ in \(d=2\).
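For orientation, the two equations are commonly written as follows. This is a sketch in a standard normalization, assumed here for illustration; the constants and the names of the unknowns (here \(u\) and \(h\)) may differ from those used in (1.6) and (1.7).

```latex
\begin{aligned}
\partial_t u &= \tfrac{1}{2}\,\Delta u + \beta\, u\, \dot{W}, \\
\partial_t h &= \tfrac{1}{2}\,\Delta h + \tfrac{1}{2}\,|\nabla h|^2 + \beta\, \dot{W},
\end{aligned}
\qquad\text{with the formal Cole-Hopf relation } h = \log u .
```

The relation \(h = \log u\) is only formal: it ignores the renormalization needed to make sense of the products \(u\,{\dot{W}}\) and \(|\nabla h|^2\), which is exactly where the equations become singular.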

The study of the random field \({{\mathcal {U}}} _N\) was initiated in [17], which showed that in the subcritical regime \({\hat{\beta }}<{\hat{\beta _c}}\), the centered and rescaled random field \(\sqrt{\log N}\big (\,{{\mathcal {U}}} _N(t,x) -1\big )\) converges to the solution of the so-called Edwards–Wilkinson equation, which is a Gaussian free field at each time t. The study of the random field \({{\mathcal {H}}} _N\) was first carried out in [25], which showed that \(\sqrt{\log N}\big (\,{{\mathcal {H}}} _N(t,x) - {{\mathbb {E}}} [{{\mathcal {H}}} _N(t,x)]\big )\) is tight in N as a family of distribution-valued random variables for \({\hat{\beta }}\) sufficiently small; shortly after, [20] proved convergence to the solution of the same Edwards–Wilkinson equation as for \({{\mathcal {U}}} _N\) for all \({\hat{\beta }}<{\hat{\beta _c}}\) (simultaneously, the same result was proved in [50] for \({\hat{\beta }}\) sufficiently small).

In the much more interesting and delicate critical regime \({\hat{\beta }}= {\hat{\beta _c}}\)—there is in fact a critical window of width \(O(1/\log N)\) around \({\hat{\beta _c}}\), see (1.11) below—the random field \({{\mathcal {U}}} _N(t,x)\) no longer needs any centering and rescaling. Its limiting correlation structure was first identified in [7] through a different regularisation of the 2d SHE (1.6) (mollifying the noise \({\dot{W}}\) instead of discretizing space and time). In [19], the third moment of the averaged random field \({{\mathcal {U}}} _N(t,\varphi ) := \int {{\mathcal {U}}} _N(t,x) \, \varphi (x) \, \textrm{d}x\), for test functions \(\varphi \), was computed and shown to converge to a finite limit as \(N\rightarrow \infty \), which implies that all subsequential limits of \({{\mathcal {U}}} _N\) have the same correlation structure identified in [7] (tightness is trivial since \({{\mathbb {E}}} [{{\mathcal {U}}} _N]\equiv 1\)). Subsequently, [51] identified the limit of all moments of \({{\mathcal {U}}} _N(t, \varphi )\) (see also the more recent work [26]). However, the uniqueness of the limit of \({{\mathcal {U}}} _N\) remained elusive and challenging, because the limiting moments identified in [51] and [26] grow too fast to uniquely determine the law of the random field.

Our main result settles this question and shows that, in the critical window around \({\hat{\beta }} = {\hat{\beta _c}}\), the random field \({{\mathcal {U}}} _N\) indeed converges to a unique universal limit, which naturally provides a notion of solution of the 2d SHE (1.6) for disorder strength \(\beta \) in the critical window. Therefore, we name it the Critical 2d Stochastic Heat Flow.

1.4 Main results

To formulate our main results, we generalize the partition functions in (1.3) by introducing a point-to-point version, where both the starting and ending positions of the random walk are fixed: for \(M \leqslant N \in {\mathbb {N}}_0 = \{0,1,2,\ldots \}\) and \(w,z\in {\mathbb {Z}}^2\) we set

with the convention \(\sum _{n=M+1}^{N-1} \{\ldots \} := 0\) for \(N \leqslant M+1\).

To deal with parity issues, for \(x \in {\mathbb {R}}^2\) we denote by \([\![ x ]\!]\) the closest point \(z \in {\mathbb {Z}}^2_{\textrm{even}} :=\{(z_1, z_2)\in {\mathbb {Z}}^2: z_1+z_2 \text{ even }\}\); for \(s \in {\mathbb {R}}\) we define the even approximation \([\![ s ]\!] := 2 \, \lfloor s/2 \rfloor \in {\mathbb {Z}}_{\textrm{even}} := 2{\mathbb {Z}}\). We then introduce the process of diffusively rescaled partition functions:

where \(\textrm{d}x \, \textrm{d}y\) denotes the Lebesgue measure on \({\mathbb {R}}^2 \times {\mathbb {R}}^2\), and \(\beta _N\) will be defined shortly.
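The two even approximations \([\![ s ]\!]\) and \([\![ x ]\!]\) are simple to compute. The Python sketch below is one possible implementation; the function names `even_time` and `even_point` are hypothetical, and the tie-breaking rule for points equidistant from several even lattice points is an arbitrary choice that the text does not specify.

```python
import math


def even_time(s):
    """Even approximation [[s]] = 2 * floor(s / 2) of a real time s."""
    return 2 * math.floor(s / 2)


def even_point(x1, x2):
    """A closest point of Z^2_even = {(z1, z2) : z1 + z2 even} to (x1, x2).

    The change of variables u = (x1 + x2) / 2, v = (x1 - x2) / 2 is a
    rotation combined with a scaling, and it maps Z^2_even onto Z^2.
    Rounding u and v to nearest integers therefore yields a nearest even
    lattice point. Ties are rounded up (an arbitrary convention).
    """
    a = math.floor((x1 + x2) / 2 + 0.5)
    b = math.floor((x1 - x2) / 2 + 0.5)
    return (a + b, a - b)


if __name__ == "__main__":
    print(even_time(5.7))        # 4
    print(even_point(0.9, 0.2))  # (1, 1)
```

Working in the rotated coordinates avoids searching over neighbouring lattice points: since the map preserves Euclidean distances up to a constant factor, coordinate-wise rounding is enough.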

We regard \({{\mathcal {Z}}} ^{\beta _N}_{N;\, s,t}(\textrm{d}x, \textrm{d}y)\) as a random measure on \({\mathbb {R}}^2 \times {\mathbb {R}}^2\), where we equip the space of locally finite measures on \({\mathbb {R}}^2 \times {\mathbb {R}}^2\) with the topology of vague convergence:

Our main result proves weak convergence of the law of \({{\mathcal {Z}}} ^{\beta }_N\) as \(N\rightarrow \infty \), when \(\beta = \beta _N\) is rescaled in a suitable critical window, which we define next. Let us introduce the sequence

which is the expected overlap (number of collisions) between two independent simple symmetric random walks starting from the origin in \({\mathbb {Z}}^2\) up to time N. Recalling that \(\lambda (\cdot )\) is the disorder log-moment generating function, see (1.1), the critical window for \(\beta = \beta _N\) is

Since \(\lambda (\beta ) \sim \frac{1}{2} \beta ^2\) as \(\beta \downarrow 0\), see (1.1), we have \(\beta _N \sim {\hat{\beta _c}} / \sqrt{\log N}\) with \({\hat{\beta _c}} = \sqrt{\pi }\) irrespective of the parameter \(\vartheta \), which contributes to the second order asymptotics, see (3.12).
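The logarithmic growth of the expected overlap can be checked numerically. The Python sketch below assumes that the overlap is \(R_N = \sum _{n=1}^N \mathrm P(S_n = S'_n)\) for two independent walks \(S, S'\), which is our reading of the verbal description above, and uses the classical identity \(\mathrm P(S_{2n} = 0) = \big (\binom{2n}{n} 4^{-n}\big )^2\) for the simple random walk on \({\mathbb {Z}}^2\). The function names are hypothetical.

```python
import math


def expected_overlap(N):
    """R_N = sum_{n=1}^N P(S_n = S'_n) for two independent SRWs on Z^2.

    By symmetry P(S_n = S'_n) = P(S_{2n} = 0) = (C(2n, n) / 4**n) ** 2.
    The ratio p_n = C(2n, n) / 4**n is updated via p_n = p_{n-1} (2n-1)/(2n).
    """
    p = 1.0
    total = 0.0
    for n in range(1, N + 1):
        p *= (2 * n - 1) / (2 * n)
        total += p * p
    return total


def leading_order_beta(N):
    """Leading-order critical disorder strength sqrt(pi / log N) from the text."""
    return math.sqrt(math.pi / math.log(N))


if __name__ == "__main__":
    for N in (10**2, 10**4, 10**6):
        R = expected_overlap(N)
        # The ratio pi * R_N / log N tends to 1, but only at logarithmic speed.
        print(N, R, math.pi * R / math.log(N), leading_order_beta(N))
```

The slow convergence of the ratio illustrates why second order corrections, such as the parameter \(\vartheta \), matter in the critical window.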

We can now state our main result, which will be proved in Sect. 9.

Theorem 1.1

(Critical 2d Stochastic Heat Flow) Fix \(\beta _N\) in the critical window (1.11), for some \(\vartheta \in {\mathbb {R}}\). As \(N\rightarrow \infty \), the family of random measures \({{\mathcal {Z}}} ^{\beta _N}_N = ({{\mathcal {Z}}} ^{\beta _N}_{N;\, s,t}(\textrm{d}x, \textrm{d}y))_{0\leqslant s \leqslant t < \infty }\) defined in (1.9) converges in finite dimensional distributions to a unique limit

which we call the Critical 2d Stochastic Heat Flow. This limit \({\mathscr {Z}}^\vartheta \) is universal, in that it does not depend on the law of the disorder \(\omega \) except for the assumptions in (1.1).

We can infer directly from its construction some basic properties of the Critical 2d Stochastic Heat Flow, which we collect in the next result, also proved in Sect. 9.

Theorem 1.2

The Critical 2d Stochastic Heat Flow \({\mathscr {Z}}^\vartheta \) is (space-time) translation invariant in law:

and it satisfies the following scaling relation:

The first and second moments of \({\mathscr {Z}}^\vartheta \) are given by

where g denotes the heat kernel in \({\mathbb {R}}^2\), see (3.20), and \(K^\vartheta \) is an explicit kernel, see (3.56).

Remark 1.3

The covariance kernel \(K_{t-s}^\vartheta (x,x'; y, y')\) was first identified in [7] (see also [19]) and is logarithmically divergent near the diagonals \(x=x'\) or \(y=y'\).

We now briefly explain the proof strategy. As noted before, the moments of \({\mathscr {Z}}^\vartheta \) identified in [51] and [26] grow too fast to uniquely characterize the law of \({\mathscr {Z}}^\vartheta \). The bounds given in these works suggest that the n-th moment is at most of order \(\exp (\exp (n^2))\), while our recent work [21] gives a lower bound of \(\exp (c n^2)\). Physical arguments on the Delta-Bose gas [74] suggest that the growth should be \(\exp (\exp (n))\). It may thus be surprising that we are still able to prove Theorem 1.1 and show that the limit is unique, without criteria to uniquely identify the limit. Another prominent result of this nature, which gave us inspiration, is the work of Kozma [63] on the convergence of the three-dimensional loop erased random walk with dyadic scaling of the lattice \(2^{-N}{\mathbb {Z}}^3\).

The basic strategy is to show that the laws of \(({{\mathcal {Z}}} ^{\beta _N}_{N})_{N\in {\mathbb {N}}}\) form a Cauchy sequence, i.e.

To accomplish this, we first construct a coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\,\cdot \, |\Theta )\), for each \(\varepsilon \in (0,1)\), which is a function of a family \(\Theta \) of coarse-grained disorder variables. We then perform a coarse-graining approximation of the partition function on the time-space scale \((\varepsilon N, \sqrt{\varepsilon N})\), which shows that \({{\mathcal {Z}}} ^{\beta _N}_{N}\) can be approximated by the coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\,\cdot \, |\Theta )\) for a specific choice of coarse-grained disorder \(\Theta =\Theta _{N, \varepsilon }\) that depends on N and \(\varepsilon \), with an approximation error which is small for small \(\varepsilon \) and large N (shown via second moment bounds). As a consequence, we finally prove (1.14) by showing that the coarse-grained models \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\,\cdot \, |\Theta )\) with \(\Theta =\Theta _{N, \varepsilon }\) and \(\Theta =\Theta _{M, \varepsilon }\) are close in distribution, for small \(\varepsilon > 0\) and large \(N, M \in {\mathbb {N}}\) (shown via a Lindeberg principle).

We give a more detailed proof outline in Sect. 2. Let us just highlight here the key proof ingredients:

- A. Coarse-Graining, which leads to a coarse-grained model with the same structure as the original model, demonstrating a degree of self-similarity;

- B. Time-Space Renewal Structure, which sheds probabilistic light on second moment computations and leads in the continuum limit to the so-called Dickman subordinator;

- C. Lindeberg Principle for multilinear polynomials of dependent random variables, which controls the effect of changing \(\Theta \) in the coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\,\cdot \, |\Theta )\);

- D. Functional Inequalities for Green’s Functions of multiple random walks on \({\mathbb {Z}}^2\), which yield sharp higher moment bounds for the coarse-grained model.

This framework is robust enough that it can also be used to show convergence of other approximations of SHE (1.6) to the Critical 2d Stochastic Heat Flow.

Remark 1.4

(Mollified SHE) The same proof steps A, B, C, D can be carried out for the solution \(u_\delta \) of the mollified SHE (1.6), where the space-time white noise \({\dot{W}}\) is mollified spatially on the scale \(\delta \) and \(\beta =\beta _\delta \) is chosen in the corresponding critical window, that is \(\beta ^2_\delta =\tfrac{2\pi }{|\log \delta |} +\tfrac{\vartheta +o(1)}{(\log \delta )^2}\) (cf. (3.12)). A key point is that coarse-graining \(u_\delta \) on the mesoscopic scale leads to exactly the same coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\,\cdot \, |\Theta )\) constructed in this paper, just with a different family of coarse-grained disorder variables \(\Theta = \Theta _{\delta ,\varepsilon }\). This means that the solution \(u_\delta \) of the mollified SHE would converge as \(\delta \downarrow 0\) to the same universal limit \({\mathscr {Z}}^\vartheta \) in Theorem 1.1. We will not carry out the details here since the paper is long enough.

We remark that Clark has proved in [28] an analogue of Theorem 1.1 for DPRE on the hierarchical diamond lattice, which is particularly useful for renormalization analysis and can mimic Euclidean lattices of different dimensions as the lattice parameters vary. Furthermore, in [27, 29], he also constructed the continuum polymer measures and studied their properties. This raises interesting questions as to whether similar results can be proved for DPRE on the Euclidean lattice, where exact renormalization analysis is no longer available. We point out that our work developed in parallel to that of Clark, and our proof strategies share some common features, such as coarse-graining and controlling distributional distances via a Lindeberg principle in our case vs. Stein’s method in [28], and showing that the laws of the partition functions form a Cauchy sequence.

Now that we have proved the existence of a unique limit \({\mathscr {Z}}^\vartheta \), the Critical 2d Stochastic Heat Flow, the next challenge will be to investigate its properties and characterize its law.

Remark 1.5

(Alternative scaling) The simple random walk on \({\mathbb {Z}}^2\) is 2-periodic and each component has variance \(\frac{1}{2}\). As a consequence, the diffusively rescaled partition functions \({{\mathcal {U}}} _N(t,x)\) in (1.4) provide a discretization of a slightly modified SHE (1.6), namely

(see [21, Appendix A.3] for more details). The SHE with the usual parameters in (1.6) can be recovered via the change of variable \({{\mathcal {U}}} _N(t,\frac{x}{\sqrt{2}})\). Therefore to describe a candidate solution of (1.6), we should consider the rescaled Critical 2d Stochastic Heat Flow given by (recall (1.12))

which is also normalized to have mean 1 rather than \(\frac{1}{2}\) (see (1.13)).

1.5 Related literature

We next discuss the connection between our work and various results in the literature and point out some future directions of research.

1.5.1 Singular SPDEs

As explained in Sect. 1.3, the scaling limit \({\mathscr {Z}}^\vartheta \) in Theorem 1.1 can be interpreted as the solution of the 2-dimensional SHE (1.6) in the critical window. For SHE, dimension \(d=2\) marks the critical dimension in the language of singular SPDEs and renormalisation group theory. To define a solution for singular SPDEs, such as SHE and KPZ in (1.6)–(1.7), a standard approach is to mollify the space-time noise \({\dot{W}}\) in space on the scale of \(\varepsilon \), and then try to identify a scaling limit as \(\varepsilon \downarrow 0\). Discretizing space-time by considering a lattice model, such as the DPRE that we study in this paper, is just another way of removing the singularity on small scales (also known as ultraviolet cutoff).

All existing solution theories for singular SPDEs, including regularity structures [55, 56], paracontrolled distributions [53, 54], the renormalization group approach [64], or energy solutions [49], do not apply at the critical dimension. The only singular SPDEs for which progress has been made in defining a solution at the critical dimension are SHE and KPZ (via the Cole-Hopf transform). The phase transition identified in [17] was unexpected, and to the best of our knowledge no such transition has been established for other singular SPDEs in the critical dimension. Theorem 1.1 is thus the first result to define a solution for a singular SPDE at the critical dimension and for critical disorder strength.

In dimension \(d=2\), recently there has also been significant progress in understanding the solution of the anisotropic version of the KPZ equation (aKPZ), which differs from (1.7) in that \(|\nabla v|^2= (\partial _{x_1}v)^2+(\partial _{x_2}v)^2\) therein is replaced by \((\partial _{x_1}v)^2- (\partial _{x_2}v)^2\). This case is also beyond the reach of existing solution theories, and unlike the isotropic KPZ, it cannot be linearized via the Cole-Hopf transformation. Cannizzaro, Erhard, and Schönbauer [11] regularized the aKPZ via a cutoff in Fourier space, instead of discretizing space and time or mollifying the noise on the spatial scale \(\varepsilon \) (all are ultraviolet cutoffs). They showed that if the non-linear term \((\partial _{x_1}v)^2- (\partial _{x_2}v)^2\) is rescaled by a factor \(\lambda /\sqrt{|\log \varepsilon |}\), then the solution of the regularized aKPZ is tight with non-trivial limit points, which is the anisotropic analogue of [25]. Very recently, Cannizzaro, Erhard, and Toninelli [14] succeeded in proving that the limit is in fact Gaussian and solves the Edwards–Wilkinson equation, which is the anisotropic analogue of [20, 50]. In contrast to the isotropic case (1.7), there is no phase transition in \(\lambda \) for the aKPZ. The same authors also studied the aKPZ without scaling the non-linearity, and in a surprising result [12, 13], they showed that the solution exhibits logarithmic superdiffusive behaviour.

In the supercritical dimensions \(d\geqslant 3\), the transition between the weak and strong disorder phases for the directed polymer has long been known [30] and has a natural counterpart for SHE and KPZ. In recent years, there have been many studies on the solutions of SHE and KPZ via mollification, namely, analogues of the random fields \({{\mathcal {U}}} _N\) and \({{\mathcal {H}}} _N\) defined in (1.4)–(1.5). These studies are all in the weak disorder regime and are analogous to results in \(d=2\), see e.g. [31, 37, 38, 41, 52, 68, 69, 71].

1.5.2 Coarse-graining

The first step in our approach is to construct a coarse-grained model. Coarse-graining has a long history in statistical mechanics and renormalisation theory. In the framework of directed polymer models, coarse-graining has played a crucial role in the studies by Lacoin [65] and Berger–Lacoin [10] on free energy asymptotics, which extended previous works in the literature of pinning models, see [47], from which we single out the fundamental work of Giacomin–Lacoin–Toninelli [48].

In our analysis, we need a family of coarse-grained models which provide a sharp approximation of the partition function at the critical point, while the works mentioned above used coarse-grained models to provide upper bounds away from the critical point. The need for a sharper approximation creates several challenges, which lead to the refined estimates in Sects. 5 and 8 and the development of the enhanced Lindeberg principle in Appendix A.

1.5.3 DPRE on hierarchical lattices

In a series of papers [27,28,29], Clark successfully treated the directed polymer model on hierarchical diamond lattices at the “critical dimension” and in the critical window of disorder strength, which contains an analogue of Theorem 1.1 and more. Due to their tree-like structure, hierarchical lattices are especially convenient for performing exact renormalization group calculations that are typically intractable on the Euclidean lattice. By tuning suitable parameters (such as the number of branches and the number of segments along each branch), hierarchical lattices can mimic Euclidean lattices with different spatial dimensions. When the branch number equals the segment number, hierarchical lattices mimic \({\mathbb {Z}}^2\). For DPRE on these lattices, Clark was able to prove in [28] the analogue of Theorem 1.1.

Exploiting the structure of hierarchical lattices, in [29], Clark was able to use the limiting partition functions obtained in [28] to construct a continuum version of the polymer measure and study its properties. Furthermore, in [27], he identified an interesting conditional Gaussian Multiplicative Chaos (GMC) structure among the continuum polymer measures with different parameter \(\vartheta \) (similar to \(\vartheta \) in Theorem 1.1). These results raise interesting questions as to whether similar results can be obtained for DPRE on the Euclidean lattice. In this respect, Theorem 1.1 provides the starting point.

1.5.4 Continuum polymer measure

A continuum version of the DPRE polymer measure in dimension \(d=1\) was constructed in [1, 2], exploiting the continuum limit of the point-to-point partition functions. The same approach was applied in [15] to pinning models with tail exponent \(\alpha \in (\frac{1}{2},1)\). An essential feature of these constructions, as well as the one by Clark [29] in the hierarchical setting in the critical regime, is that the continuum partition functions are random functions of the polymer endpoints. The same holds for DPRE in dimension \(d=2\) in the subcritical regime \(\beta _N \sim {\hat{\beta }} / \sqrt{\log N}\), with \({\hat{\beta }} < {\hat{\beta _c}} = \sqrt{\pi }\), where it was recently shown in [44] that the discrete polymer measure, diffusively rescaled, converges to the law of Brownian motion.

The situation for DPRE in dimension \(d=2\) in the critical window is radically different, because the continuum partition functions \({\mathscr {Z}}^\vartheta _{s,t}(\textrm{d}x, \textrm{d}y)\) given in Theorem 1.1 are only random measures and undefined pointwise. The point-to-plane partition function \(Z^{\beta _N, \omega }_N\) defined in (1.3) in fact converges to 0 as \(N\rightarrow \infty \), as shown in [17]. For this reason, constructing a continuum version of the polymer measure started from a fixed point, or studying the scaling properties of the discrete polymer measure started from a fixed point, remains a significant challenge. However, if we consider discrete polymer measures with the starting point chosen uniformly from a ball on the diffusive scale, then the same proof strategy as that for Theorem 1.1 should be applicable to show that the measures converge to a continuum polymer measure starting from a ball, whose finite dimensional distributions are uniquely determined.

1.5.5 Schrödinger operators with point interactions

When the disorder \(\omega \) is standard normal, a direct calculation shows that for \(k\in {\mathbb {N}}\), the k-th moment of the polymer partition function in (1.3) is the exponential moment (with parameter \(\beta ^2\)) of the total pairwise collision local time up to time N among k independent random walks on \({\mathbb {Z}}^2\). When \(k=2\), by a classic result of Erdős and Taylor [42] (see also [46]), the collision local time rescaled by \(1/\log N\) converges to an exponential random variable with parameter \(\pi \). In the critical window we consider here, we have \(\beta _N \sim {\hat{\beta _c}}/\sqrt{\log N}\) with \({\hat{\beta _c}}=\sqrt{\pi }\), and hence the parameter of the exponential moment matches exactly the parameter of the limiting exponential random variable, making the moment analysis particularly delicate.
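The direct calculation mentioned above can be sketched as follows. We assume standard normal disorder, so that \(\lambda (\beta ) = \beta ^2/2\), and that the disorder is collected at times \(1 \leqslant n \leqslant N-1\); the notation \(S^1, \ldots , S^k\) for the independent walks, \(m_{n,z}\) for the number of walks at site \(z\) at time \(n\), and \(L^{i,j}_N\) for the pairwise collision local time is introduced here for illustration only.

```latex
\begin{aligned}
\mathbb{E}\big[ (Z_N^{\beta,\omega}(0))^k \big]
&= \mathrm{E}^{\otimes k}\Big[ \prod_{n=1}^{N-1} \prod_{z \in \mathbb{Z}^2}
   \mathbb{E}\big[ e^{\, m_{n,z} (\beta \omega(n,z) - \lambda(\beta))} \big] \Big],
\qquad m_{n,z} := \#\{ i \leqslant k : S^i_n = z \}, \\
\mathbb{E}\big[ e^{\, m (\beta \omega - \lambda(\beta))} \big]
&= e^{\lambda(m\beta) - m \lambda(\beta)}
 = e^{\beta^2 (m^2 - m)/2}
 = e^{\beta^2 \binom{m}{2}}, \\
\mathbb{E}\big[ (Z_N^{\beta,\omega}(0))^k \big]
&= \mathrm{E}^{\otimes k}\Big[ \exp\Big( \beta^2 \sum_{1 \leqslant i < j \leqslant k} L^{i,j}_N \Big) \Big],
\qquad L^{i,j}_N := \sum_{n=1}^{N-1} \mathbb{1}_{\{ S^i_n = S^j_n \}} .
\end{aligned}
```

The last step uses that \(\sum _{n,z} \binom{m_{n,z}}{2}\) counts, over all times and sites, the pairs of walks that occupy the same site, which is the total pairwise collision local time.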

Via the Feynman–Kac formula, it can also be seen that the k-th moment of the partition function is the solution of a discrete space-time parabolic Schrödinger equation with a potential supported on the diagonal (point interaction). In the continuum setting, there have been a number of studies on the Schrödinger operator with point interactions (also called Delta-Bose gas) in dimension \(d=2\) [3, 4, 39, 40]. Using ideas from these studies, especially the works of Dell’Antonio–Figari–Teta [39] and of Dimock–Rajeev [40], Gu, Quastel, and Tsai [51] were able to compute asymptotically all moments of the averaged solution of the mollified SHE, which are analogues of the averaged polymer partition functions \({{\mathcal {Z}}} ^{\beta _N}_{N;\, s,t}(\varphi , \psi ) :=\iint \varphi (x) \, \psi (y) \, {{\mathcal {Z}}} ^{\beta _N}_{N;\, s,t}(\textrm{d}x, \textrm{d}y)\) in (1.9), with \(\varphi \) and \(\psi \) assumed to be in \(L^2\) in [51]. Previously, only the third moment had been obtained in [19]. When \(\varphi \) is a delta function, the moments of \({{\mathcal {Z}}} ^{\beta _N}_{N;\, s,t}(\varphi , \psi )\) diverge as \(N\rightarrow \infty \), and the asymptotics of the third moment has been investigated in [43]. But all mixed moments of the form \(\mathrm E\big [\prod _{i=1}^n{{\mathcal {Z}}} ^{\beta _N}_{N;\, s,t}(\varphi _i, \psi _i)\big ]\) converge if \(\varphi _i\) are chosen to be distinct \(\delta \) functions, which was shown recently by Chen in [26].

As an input to the Lindeberg principle mentioned in the proof sketch for Theorem 1.1, we need to bound the fourth moment of the coarse-grained model, which approximates the original partition function. The results from the Schrödinger operator literature and [51] are not applicable in our setting, because they rely on explicit Fourier calculations. We therefore develop an alternative and more robust approach based on functional inequalities for Green’s function of multiple random walks on \({\mathbb {Z}}^2\), see Lemma 6.8. Instead of working with \(\varphi , \psi \in L^2\) as in [51], we can work with weighted \(L^p\)–\(L^q\) spaces with \(\frac{1}{p}+\frac{1}{q}=1\). The choice of a weight allows us to consider a wider class of boundary conditions, such as \(\psi \equiv 1\) and \(\varphi \) an approximate delta function, and also to control spatial decay when the supports of \(\varphi \) and \(\psi \) are far apart, all of which are needed in our proof. See Sect. 6 for more details.

1.5.6 Lindeberg principle

A Lindeberg principle is said to hold when the law of a function \(\Phi \) of a family of random variables does not change much if the family of random variables is switched to another family with some matching moments. Lindeberg principles have been very powerful tools in proving universality. The usual formulation such as in [23] requires the family of random variables to be independent (or exchangeable), and \(\Phi \) needs to have bounded first three derivatives. This is not satisfied when \(\Phi \) is a multilinear polynomial, whose derivatives are unbounded. This case was addressed in [70, 76] when the arguments are independent random variables (see also [16]).
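For orientation, the classical replacement argument for independent variables can be sketched as follows. This is the textbook version with a smooth test function, not the enhanced principle for dependent variables developed in Appendix A; the symbols \(X_i\), \(Y_i\), \(W^{(i)}\) and \(F\) are introduced here for illustration.

```latex
\begin{aligned}
&W^{(i)} := (Y_1, \ldots, Y_i, X_{i+1}, \ldots, X_n), \qquad W^{(0)} = X, \quad W^{(n)} = Y, \\
&\mathbb{E}[F(Y)] - \mathbb{E}[F(X)]
 = \sum_{i=1}^{n} \Big( \mathbb{E}\big[F(W^{(i)})\big] - \mathbb{E}\big[F(W^{(i-1)})\big] \Big), \\
&\Big| \mathbb{E}\big[F(W^{(i)})\big] - \mathbb{E}\big[F(W^{(i-1)})\big] \Big|
 \leqslant \tfrac{1}{6}\, \| \partial_i^3 F \|_\infty \,
   \big( \mathbb{E}|X_i|^3 + \mathbb{E}|Y_i|^3 \big)
 \quad \text{if } \mathbb{E}[X_i^m] = \mathbb{E}[Y_i^m] \text{ for } m = 1, 2 .
\end{aligned}
```

Each summand swaps a single coordinate, and a third order Taylor expansion in that coordinate cancels the terms of order up to two because the first two moments match. When \(F\) is a function of a multilinear polynomial, \(\partial _i^3 F\) is no longer bounded, which is the difficulty described above.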

In the proof of Theorem 1.1, we need to deal with a multilinear polynomial of dependent random variables with a local form of dependence. We formulate an extension of the Lindeberg principle to this setting in Appendix A. Our calculations are inspired by a work of Röllin on Stein’s method [75], which is an analogue of [23] for a function \(\Phi \) (with bounded first three derivatives) of dependent random variables.

1.6 Structure of the paper

The rest of the paper is organized as follows.

- In Sect. 2, we give a detailed proof outline.
- In Sect. 3, we introduce some basic notation and tools that we need for the rest of the paper, which includes in particular the polynomial chaos expansion and second moment asymptotics for the partition function.
- In Sect. 4, we define the coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\,\cdot \, |\Theta )\) and the coarse-grained disorder \(\Theta = \Theta _{N,\varepsilon }\). Then in Sect. 5, we show that \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\,\cdot \, |\Theta _{N,\varepsilon })\) provides a good \(L^2\) approximation for the diffusively rescaled partition functions \({{\mathcal {Z}}} _N\) in (1.9).
- In Sects. 6, 7 and 8, we derive key moment bounds for \({{\mathcal {Z}}} _N\), \(\Theta _{N, \varepsilon }\) and \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\,\cdot \, |\Theta )\).
- In Sect. 9, we wrap up the proof of our main results: Theorems 1.1 and 1.2.
- In Appendix A, we formulate an enhanced Lindeberg principle for multilinear polynomials of dependent random variables.

1.7 Notation

We denote by \(C_b({\mathbb {R}}^d)\), resp. \(C_c({\mathbb {R}}^d)\), the space of continuous functions \(\varphi : {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) that are bounded, resp. compactly supported. The usual \(L^p\) norms will be denoted by \(\Vert \varphi \Vert _p\) for functions \(\varphi : {\mathbb {R}}^d \rightarrow {\mathbb {R}}\) and by \(\Vert X\Vert _{L^p}\) for random variables X. For notational simplicity, we will use \(c, C, C', C''\) to denote generic constants, whose values may change from place to place.

2 Proof outline

We elaborate in more detail on our proof strategy for Theorem 1.1, especially the coarse-graining procedure. After reading the proof strategy, to see how the pieces fit together more precisely, we encourage the reader to go directly to Sect. 9.1 to read the proof of Theorems 1.1 and 1.2. The proof is contingent on some earlier results, such as Theorems 4.7 and 8.1, but otherwise is mostly self-contained.

Recalling (1.9), we just consider a single averaged partition function

for some \(\varphi \in C_c({\mathbb {R}}^2)\), \(\psi \in C_b({\mathbb {R}}^2)\), and \(\beta _N=\beta _N(\vartheta )\) chosen as in (1.11) for some fixed \(\vartheta \in {\mathbb {R}}\). To prove that \({{\mathcal {Z}}} _N\) converges in distribution to a limit as claimed in Theorem 1.1, we will show that the laws of \(({{\mathcal {Z}}} _N)_{N\in {\mathbb {N}}}\) form a Cauchy sequence.

The starting point of our analysis is a polynomial chaos expansion for \({{\mathcal {Z}}} _N\), which will be recalled in more detail in Sect. 3.3. In short, by introducing the i.i.d. random variables

which have mean 0 and variance \(\sigma _N^2\) as in (1.11), we can expand \({{\mathcal {Z}}} _N\) as a multilinear polynomial in the \(\xi _N\)’s as follows:

where \(q_{m,n}(x,y):= \mathrm P(S_n = y|S_m = x)\) is the random walk transition kernel, and \(q^N_{m,n}(\varphi , z_1)\), \(q^N_{m,n}(z_r, \psi )\), \(q^N_{m,n}(\varphi , \psi )\) are the averages of \(q_{m,n}(x,y)\) w.r.t. \(\varphi (x/\sqrt{N})\), \(\psi (y/\sqrt{N})\), or both (see (3.16)–(3.18)).

Each term in the sum in (2.1) contains a sequence of disorder variables \((\xi _N(n_j, z_j))_{1\leqslant j\leqslant r}\) linked by random walk transition kernels, and different terms in the sum are \(L^2\)-orthogonal. We will see that when it comes to second moment calculations, the sequence of points \((n_1, z_1), \ldots , (n_r, z_r)\) can be interpreted as a time-space renewal configuration.

Before explaining our proof strategy and ingredients, we first give a heuristic calculation that already shows universality, namely that as \(N\rightarrow \infty \), the limiting law of \({{\mathcal {Z}}} _{N}\) in (2.1) (if a unique limit exists) does not depend on the law of the i.i.d. random variables \(\xi _N(\cdot , \cdot )\) provided the first two moments are unchanged. The heuristic is based on a Lindeberg principle, which will help to illustrate some key ideas in our proof.

2.1 A heuristic calculation

Let us write \({{\mathcal {Z}}} _N(\xi _N)\) to emphasise the dependence on the i.i.d. family \(\xi _N(\cdot , \cdot )\), and let \({{\mathcal {Z}}} _N(\eta _N)\) be defined similarly with \(\xi _N\) replaced by an i.i.d. family \(\eta _N\) with matching first two moments and finite third moment. To show that \({{\mathcal {Z}}} _N(\xi _N)\) and \({{\mathcal {Z}}} _N(\eta _N)\) are close in law, it suffices to show that for any \(f:{\mathbb {R}}\rightarrow {\mathbb {R}}\) with bounded first three derivatives,

This difference can be bounded by a Lindeberg principle. In particular, we can apply Theorem A.4 to the case of i.i.d. random variables (the sums in (A.9)–(A.10) will only contain indices \(k=l=m\) due to the i.i.d. assumption) to get the bound

where \(\xi _N^{(t)}:= \sqrt{t}\, \xi _N + \sqrt{1-t}\, \eta _N\) interpolates between \(\eta _N\) and \(\xi _N\), and \(\partial _{(n,z)}{{\mathcal {Z}}} (\xi _N)\) denotes partial derivative w.r.t. \(\xi _N(n,z)\). Since \({{\mathcal {Z}}} (\xi ^{(t)}_N)\) is a multilinear polynomial in \(\xi ^{(t)}_N(\cdot , \cdot )\), it is easily seen from (2.1) that

where \({{\mathcal {Z}}} ((n,z), \psi )\) is the point-to-plane partition function starting from the point (n, z) and terminating at time N with boundary condition \(\psi \), and \({{\mathcal {Z}}} (\varphi , (n,z))\) is the plane-to-point partition function with initial boundary condition \(\varphi \) and terminating at the point (n, z). Since \(\varphi \) has compact support, only (n, z) on the diffusive scale (n of order N and \(z\in {\mathbb {Z}}^2\) of order \(\sqrt{N}\)) contribute to the sum in (2.3), and there are \(N^2\) such terms. This sum is more than compensated by the factor \(\frac{1}{N^3}\) from \(\mathrm E[|\partial _{\xi _N(n,z)}{{\mathcal {Z}}} (\xi _N)|^3]= \frac{1}{N^3} \mathrm E[|{{\mathcal {Z}}} (\varphi , (n,z))|^3] \mathrm E[|{{\mathcal {Z}}} ((n,z), \psi )|^3]\), where we used the independence between \({{\mathcal {Z}}} (\varphi , (n,z))\) and \({{\mathcal {Z}}} ((n,z), \psi )\). To deduce (2.2), it suffices to show that the moment of the point-to-plane partition function \(\mathrm E[|{{\mathcal {Z}}} ((n,z), \psi )|^3] \ll N\) as \(N\rightarrow \infty \), which holds by Remark 6.5 below.

This heuristic can be made rigorous using the results we establish in Sect. 6. But this argument will not show that \({{\mathcal {Z}}} _N(\xi _N)\) has a unique limit in law. For that, we need to define coarse-grained models and compare \({{\mathcal {Z}}} _N(\xi _N)\), for different N, with the same coarse-grained model. We outline the proof strategy below, which contains many of the same ideas as the heuristic above, but in a more complicated setting.

2.2 A. Coarse-graining

As a first step, for each \(\varepsilon \in (0,1)\), we approximate \({{\mathcal {Z}}} _N\) in \(L^2\) by a coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi , \psi |\Theta _{N,\varepsilon })\), which is a multi-linear polynomial in suitable coarse-grained disorder variables \(\Theta _{N,\varepsilon }\) and depends on N only through \(\Theta _{N,\varepsilon }\). The details will be given in Sect. 4. Here we give a sketch.

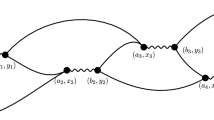

We partition \({\mathbb {N}}\times {\mathbb {Z}}^2\) into mesoscopic time-space boxes

where \(({\textsf{i}}, {\textsf{a}}) \in {\mathbb {N}}\times {\mathbb {Z}}^2\) is the mesoscopic time-space index of \({{\mathcal {B}}} _{\varepsilon N}({\textsf{i}},{\textsf{a}})\), which has temporal width \(\varepsilon N\) and spatial side length \(\sqrt{\varepsilon N}\), and \(({\textsf{a}}-{\textsf{b}}, {\textsf{a}}] = ({\textsf{a}}_1-{\textsf{b}}_1,{\textsf{a}}_1] \times ({\textsf{a}}_2-{\textsf{b}}_2,{\textsf{a}}_2]\) for squares in \({\mathbb {R}}^2\). We then decompose the sum in (2.1) according to the sequence of mesoscopic time intervals \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}_1), \ldots , {{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}_k)\) visited by the renewal configuration \((n_1, z_1), \ldots , (n_r, z_r)\). For each \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}_j)\), we then further decompose according to the first and last mesoscopic spatial boxes \({{\mathcal {S}}} _{\varepsilon N}({\textsf{a}}_j), {{\mathcal {S}}} _{\varepsilon N}({\textsf{a}}_j')\) visited in this time interval. This replaces the microscopic sum over \((n_1, z_1), \ldots , (n_r, z_r)\) in (2.1) by a mesoscopic sum over time-space renewal configurations \(({\textsf{i}}_1; {\textsf{a}}_1, {\textsf{a}}_1'), \ldots , ({\textsf{i}}_k; {\textsf{a}}_k, {\textsf{a}}_k')\), which specify the sequence of mesoscopic boxes \({{\mathcal {B}}} _{\varepsilon N}({\textsf{i}}_j,{\textsf{a}}_j)\) and \({{\mathcal {B}}} _{\varepsilon N}({\textsf{i}}_j,{\textsf{a}}_j')\) visited. See Fig. 1 for an illustration.
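The assignment of a microscopic point \((n,z)\) to its mesoscopic box can be made concrete with a short sketch. It assumes half-open boxes of the form \((({\textsf{i}}-1)\varepsilon N, {\textsf{i}}\varepsilon N]\) in time and \((({\textsf{a}}_k-1)\sqrt{\varepsilon N}, {\textsf{a}}_k\sqrt{\varepsilon N}]\) in each spatial coordinate, which is our reading of the convention \(({\textsf{a}}-{\textsf{b}}, {\textsf{a}}]\) above. The names `meso_index`, `time_width` and `side` are hypothetical, and integer box sizes are used in place of \(\varepsilon N\) and \(\sqrt{\varepsilon N}\) to avoid rounding issues.

```python
def ceil_div(a: int, b: int) -> int:
    """Ceiling of a / b for integers a and b > 0."""
    return -(-a // b)


def meso_index(n: int, z: tuple, time_width: int, side: int):
    """Return the mesoscopic index (i, a) of the box containing (n, z).

    Assumption (not taken verbatim from the paper): boxes are
    ((i-1)*time_width, i*time_width] in time and
    ((a_k-1)*side, a_k*side] in each spatial coordinate, where
    time_width and side stand in for eps*N and sqrt(eps*N).
    """
    i = ceil_div(n, time_width)
    a = (ceil_div(z[0], side), ceil_div(z[1], side))
    return i, a


if __name__ == "__main__":
    # With eps*N = 100 and sqrt(eps*N) = 10, locate the point (250, (-3, 40)).
    print(meso_index(250, (-3, 40), time_width=100, side=10))
```

A microscopic renewal configuration is then mapped to the sequence of mesoscopic indices obtained by applying `meso_index` to each of its points; the sketch ignores any parity restriction on the lattice.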

Ideally, we would like to replace each random walk kernel \(q_{n, m}(x,y)\) in (2.1) that connects two consecutive visited mesoscopic boxes \({{\mathcal {B}}} _{\varepsilon N}({\textsf{i}}_j,{\textsf{a}}_j')\ni (n,x)\) and \({{\mathcal {B}}} _{\varepsilon N}({\textsf{i}}_{j+1},{\textsf{a}}_{j+1})\ni (m,y)\) by a corresponding heat kernel. Namely, by the local limit theorem (3.21), replace \(q_{n, m}(x,y)\) by

where the factor 2 is due to periodicity. With such replacements, given a mesoscopic renewal configuration \(({\textsf{i}}_1; {\textsf{a}}_1, {\textsf{a}}_1'), \ldots , ({\textsf{i}}_k; {\textsf{a}}_k, {\textsf{a}}_k')\), as we sum over compatible microscopic renewal configurations \((n_1, z_1), \ldots , (n_r, z_r)\) in (2.1), the contributions of \(\xi _N(n,z)\) from each interval \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}_j)\) would decouple, leading to a product of coarse-grained disorder variables of the form

with consecutive coarse-grained disorder variables \(\Theta _{N, \varepsilon }({\textsf{i}}_j; {\textsf{a}}_j, {\textsf{a}}_j')\) and \(\Theta _{N, \varepsilon }({\textsf{i}}_{j+1}; {\textsf{a}}_{j+1}, {\textsf{a}}_{j+1}')\) linked by the heat kernel \(g_{\tfrac{1}{2}({\textsf{i}}_{j+1}-{\textsf{i}}_j)}({\textsf{a}}_{j+1}-{\textsf{a}}_j')\) (we absorbed the factor \(\frac{2}{\varepsilon N}\) into (2.5)). This would give our desired coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi , \psi |\Theta _{N,\varepsilon })\).

Fig. 1. An illustration of the chaos expansion for the coarse-grained model (2.7). The solid laces represent heat kernels linking consecutively visited mesoscopic time-space boxes. The grey blocks represent the regions defining the coarse-grained disorder variables \(\Theta _{N, \varepsilon }\). The double block in the middle represents a coarse-grained disorder variable \(\Theta _{N, \varepsilon }(\vec {\textsf{i}}, \vec {\textsf{a}})\) visiting two mesoscopic time intervals \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}})\) and \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}')\) with \(|{\textsf{i}}'-{\textsf{i}}|\leqslant K_\varepsilon =(\log \frac{1}{\varepsilon })^6\) and cannot be decoupled.

Unfortunately, this ideal procedure does not produce a sharp approximation of the partition function \({{\mathcal {Z}}} _N\) in (2.1). Indeed, the kernel replacement

induces an \(L^2\)-error, and this error is small (in the sense that it vanishes as \(\varepsilon \downarrow 0\), uniformly in large N) only if \({\textsf{i}}_{j+1}-{\textsf{i}}_j\) is sufficiently large (we will choose it to be larger than \(K_\varepsilon = (\log \frac{1}{\varepsilon })^6\)) and \(|{\textsf{a}}_{j+1}-{\textsf{a}}_j'|\) is not too large on the diffusive scale (we will choose it to be smaller than \(M_\varepsilon \sqrt{{\textsf{i}}_{j+1}-{\textsf{i}}_j}\) with \(M_\varepsilon =\log \log \frac{1}{\varepsilon }\)). We address this issue as follows.

The first crucial observation is that, modulo a small \(L^2\) error, microscopic renewal configurations \((n_1, z_1)\), ..., \((n_r, z_r)\) in (2.1) cannot visit three or more mesoscopic time intervals \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}_j)\), \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}_{j+1})\), and \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}_{j+2})\) with both \({\textsf{i}}_{j+1}-{\textsf{i}}_j\leqslant K_\varepsilon \) and \({\textsf{i}}_{j+2}-{\textsf{i}}_{j+1}\leqslant K_\varepsilon \) (see Lemma 5.1 below). Furthermore, with a small \(L^2\) error, we can also enforce a diffusive truncation \(|{\textsf{a}}_{j+1}-{\textsf{a}}_j'| \leqslant M_\varepsilon \sqrt{{\textsf{i}}_{j+1}-{\textsf{i}}_j}\) (see Lemma 5.6 below). We will then make the random walk/heat kernel replacement (2.6) only between mesoscopic boxes \({{\mathcal {B}}} _{\varepsilon N}({\textsf{i}}_j,{\textsf{a}}_j')\ni (n,x)\) and \({{\mathcal {B}}} _{\varepsilon N}({\textsf{i}}_{j+1},{\textsf{a}}_{j+1})\ni (m,y)\) that satisfy the constraint \({\textsf{i}}_{j+1}-{\textsf{i}}_j > K_\varepsilon \).
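To get a feel for the two truncation scales, the following sketch simply evaluates \(K_\varepsilon = (\log \frac{1}{\varepsilon })^6\) and \(M_\varepsilon = \log \log \frac{1}{\varepsilon }\) for a few values of \(\varepsilon \). The function names are hypothetical. One thing it makes visible is that \(K_\varepsilon \) is smaller than \(1/\varepsilon \), the number of mesoscopic time intervals per unit of macroscopic time, only once \(\varepsilon \) is very small, so these scales are asymptotic in nature.

```python
import math


def K_eps(eps: float) -> float:
    """Temporal separation threshold K_eps = (log(1/eps))**6."""
    return math.log(1.0 / eps) ** 6


def M_eps(eps: float) -> float:
    """Diffusive truncation constant M_eps = log(log(1/eps)); needs eps < 1/e."""
    return math.log(math.log(1.0 / eps))


if __name__ == "__main__":
    for eps in (1e-3, 1e-6, 1e-9, 1e-12):
        print(f"eps={eps:.0e}  1/eps={1 / eps:.1e}  "
              f"K_eps={K_eps(eps):.3e}  M_eps={M_eps(eps):.3f}")
```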

After such kernel replacements, what is left between the heat kernels decouples and appears as a product of two types of coarse-grained disorder variables:

- one type is as given in (2.5), which visits a single mesoscopic time interval \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}})\);
- another type visits two mesoscopic time intervals \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}})\) and \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}')\), with \({\textsf{i}}' - {\textsf{i}}\leqslant K_\varepsilon \): we denote it by \(\Theta _{N, \varepsilon }(\vec {\textsf{i}}, \vec {\textsf{a}})\) with \(\vec {\textsf{i}}=({\textsf{i}}, {\textsf{i}}')\) and \(\vec {\textsf{a}}=({\textsf{a}}, {\textsf{a}}')\), where \({\textsf{a}}\) identifies the first mesoscopic spatial box visited in the time interval \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}})\), while \({\textsf{a}}'\) identifies the last mesoscopic spatial box visited in the time interval \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}')\) (see (4.11)).

This leads to the actual coarse-grained model we will work with:

where \(\varphi _\varepsilon \) and \(\psi _\varepsilon \) are averaged versions of \(\varphi \) and \(\psi \) on the spatial scale \(\sqrt{\varepsilon }\), while \(g_{{\textsf{i}}/2}(\varphi _\varepsilon , {\textsf{a}})\), \(g_{{\textsf{i}}/2}({\textsf{a}}', \psi _\varepsilon )\), \(g_{{\textsf{i}}/2}(\varphi _\varepsilon , \psi _\varepsilon )\) are averages of the heat kernel \(g_{{\textsf{i}}/2}({\textsf{a}}-{\textsf{a}}')\) w.r.t. \(\varphi _\varepsilon \), \(\psi _\varepsilon \), or both.

In the sum in (2.7), we have hidden the various constraints on the mesoscopic time-space variables for simplicity (see (4.8) for the complete definition). Also note that in (2.7) we denote by \(\Theta = (\Theta (\vec {\textsf{i}},\vec {\textsf{a}}))\) a generic family of coarse-grained disorder variables; in order to approximate the averaged partition function \({{\mathcal {Z}}} _N\), we simply set \(\Theta =\Theta _{N, \varepsilon }\).

Remark 2.1

(Self-similarity) The coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi ,\psi |\Theta )\) in (2.7) has the same form as the original partition function \({{\mathcal {Z}}} _N\) in (2.1), with \(1/\varepsilon \) in place of N, \(\Theta _{N,\varepsilon }\) in place of \(\xi _N\), and the heat kernel \(g_{{\textsf{i}}/2}\) in place of the random walk kernel \(q_n\). This shows a remarkable degree of self-similarity: coarse-graining retains the structure of the model.

2.3 B. Time-Space Renewal Structure

Once we have defined precisely the coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi ,\psi |\Theta _{N,\varepsilon })\), see Sect. 4, we need to show that it indeed provides a good \(L^2\) approximation of the original partition function \({{\mathcal {Z}}} _N\), in the following sense:

This approximation will be carried out in Sect. 5, where we rely crucially on the time-space renewal interpretation of the sum in (2.1), which in the continuum limit with \(N\rightarrow \infty \) leads to the so-called Dickman subordinator [18]. This will be reviewed in Sect. 3.5.

2.4 C. Lindeberg Principle

In view of (2.8), given \(\varepsilon >0\) small, we can approximate \({{\mathcal {Z}}} _N\) by \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi ,\psi |\Theta _{N,\varepsilon })\), where the \(L^2\) error is uniform in large N and tends to 0 as \(\varepsilon \downarrow 0\). To prove that the laws of \(({{\mathcal {Z}}} _N)_{N\in {\mathbb {N}}}\) form a Cauchy sequence, it then suffices to show that given \(\varepsilon >0\) we can bound the distributional distance between \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi ,\psi |\Theta _{M,\varepsilon })\) and \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi ,\psi |\Theta _{N,\varepsilon })\) uniformly in \(M\geqslant N\) large, and furthermore, this bound can be made arbitrarily small by choosing \(\varepsilon >0\) sufficiently small. This would then complete the proof that \({{\mathcal {Z}}} _N\) converges in distribution to a unique limit.

The control of the distributional distance is carried out via a Lindeberg principle for the coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi ,\psi |\Theta _{N, \varepsilon })\), which is a multilinear polynomial in the family of coarse-grained disorder variables \(\Theta _{N, \varepsilon }=\{\Theta _{N, \varepsilon }(\vec {\textsf{i}}, \vec {\textsf{a}})\}\). We note that \(\Theta _{N, \varepsilon }(({\textsf{i}}, {\textsf{i}}'), ({\textsf{a}}, {\textsf{a}}'))\) and \(\Theta _{N, \varepsilon }(({\textsf{j}}, {\textsf{j}}'), ({\textsf{b}}, {\textsf{b}}'))\) have non-trivial dependence if \(({\textsf{i}}, {\textsf{a}})\) or \(({\textsf{i}}', {\textsf{a}}')\) coincides with either \(({\textsf{j}}, {\textsf{b}})\) or \(({\textsf{j}}', {\textsf{b}}')\). We thus need a Lindeberg principle for multilinear polynomials of dependent random variables, which we formulate in Appendix A and which is of independent interest.

2.5 D. Functional Inequalities for Green’s Functions

To successfully apply the Lindeberg principle, we need to control the second and fourth moments of the coarse-grained disorder variables \(\Theta _{N, \varepsilon }\). We also need to control the influence of each \(\Theta _{N, \varepsilon }\), which boils down to bounding the fourth moment of the coarse-grained model \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi ,\psi |\Theta _{N, \varepsilon })\), with the choice of boundary conditions \(\psi \equiv 1\) and \(\varphi (x)=\frac{1}{\varepsilon } \mathbb {1}_{|x|\leqslant \sqrt{\varepsilon }}\).

The moment bounds on \(\Theta _{N, \varepsilon }\) and \({\mathscr {Z}}_{\varepsilon }^{(\textrm{cg})}(\varphi ,\psi |\Theta _{N, \varepsilon })\) are technically the most delicate parts of the paper, especially since we need to allow \(\varphi (x)=\frac{1}{\varepsilon } \mathbb {1}_{|x|\leqslant \sqrt{\varepsilon }}\) and \(\psi \equiv 1\). Since the structure of \(\Theta _{N, \varepsilon }\) is similar to an averaged partition function, we will first derive general moment bounds on the averaged partition function \({{\mathcal {Z}}} _N\) in Sect. 6. The fourth moment bound on \(\Theta _{N, \varepsilon }\) then follows as a corollary in Sect. 7.

The approach we develop is different from the methods employed in [51] to bound the moments of the averaged solution of the mollified SHE. Our approach is based on functional inequalities for the Green’s function of random walks (see Lemma 6.8) and it is robust enough to be applied also to the coarse-grained model defined in (2.7), which will be carried out in Sect. 8.

3 Notation and tools

In this section, we introduce some basic notation and tools, including the polynomial chaos expansion for the partition function, random walk estimates, the renewal interpretation for the second moment of partition functions and the Dickman subordinator that arises in the continuum limit.

3.1 Random walk and disorder

As in Sect. 1.2, let \((S = (S_n)_{n\geqslant 0}, \mathrm P)\) be the simple symmetric random walk on \({\mathbb {Z}}^2\), whose transition kernel we denote by

Let \((\omega = (\omega (n,z))_{n\in {\mathbb {N}}, z\in {\mathbb {Z}}^2}, {{\mathbb {P}}} )\) be the disorder, given by a family of i.i.d. random variables with zero mean, unit variance and locally finite exponential moments, see (1.1).

The expected overlap between two independent walks is (see [18, Proposition 3.2])

Note that \(R_N\) is the expected number of collisions up to time N between two independent copies of the random walk S when both start from the origin. Also note that \(\gamma \) is the Euler–Mascheroni constant. We further define

where the asymptotic behavior follows by the local limit theorem, see (3.21) below.
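The logarithmic growth of the overlap \(R_N\) is easy to check numerically. The sketch below uses the description of \(R_N\) as the expected number of collisions up to time N of two independent walks started at the origin, so that \(R_N = \sum _{n=1}^N q_{2n}(0)\), together with the classical identity \(q_{2n}(0) = \big (\binom{2n}{n} 4^{-n}\big )^2\) for the simple random walk on \({\mathbb {Z}}^2\). The comparison value \((\log N + \alpha )/\pi \) with \(\alpha = \gamma + \log 16 - \pi \) is our assumption for the form of the asymptotics in (3.2); it is consistent with the value \(\alpha \simeq 0.208\) quoted in Sect. 3.2. Function names are hypothetical.

```python
import math

EULER_GAMMA = 0.5772156649015329  # Euler-Mascheroni constant


def overlap(N: int) -> float:
    """R_N = sum_{n=1}^N q_{2n}(0) for the simple random walk on Z^2."""
    p = 1.0      # p = binom(2n, n) / 4**n, return probability of the 1d walk
    total = 0.0
    for n in range(1, N + 1):
        p *= (2 * n - 1) / (2 * n)
        total += p * p          # q_{2n}(0) = p**2 in dimension 2
    return total


def overlap_asymptotic(N: int) -> float:
    """Assumed asymptotic form (log N + alpha) / pi."""
    alpha = EULER_GAMMA + math.log(16) - math.pi
    return (math.log(N) + alpha) / math.pi


if __name__ == "__main__":
    for N in (10**2, 10**4, 10**6):
        print(N, overlap(N), overlap_asymptotic(N))
```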

In order to deal with the periodicity of simple random walk, we set

Given \(x \in {\mathbb {R}}^d\) with \(d \geqslant 2\), we denote by \([\![ x ]\!]\) the point in \({\mathbb {Z}}^d_\textrm{even}\) closest to x (fix any convention to break the tie if \([\![ x ]\!]\) is not unique). More explicitly, we have

For \(s \in {\mathbb {R}}\) it is convenient to define the even approximation \([\![ s ]\!] \in 2{\mathbb {Z}}\) by

3.2 Partition functions at criticality

The point-to-point partition functions \(Z_{M,N}^\beta (w,z)\) were defined in (1.8). We mainly consider the case \(M=0\), for which we write

The field of diffusively rescaled partition functions \({\mathcal {Z}}^{\beta }_{N; s,t}(\textrm{d}x, \textrm{d}y)\) was introduced in (1.9). In the special case \(s=0\) we simply write:

where we recall that \(\textrm{d}x \, \textrm{d}y\) denotes the Lebesgue measure on \({\mathbb {R}}^2 \times {\mathbb {R}}^2\). We next define averaged partition functions \({\mathcal {Z}}^{\beta }_{N, t}(\varphi , \psi )\) for suitable \(\varphi , \psi : {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\):

We can rewrite the integrals in (3.8) as sums. For a locally integrable function \(\varphi : {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\), we define \(\varphi _N : {\mathbb {Z}}^2_\textrm{even}\rightarrow {\mathbb {R}}\) as the average of \(\varphi (\frac{\cdot }{\sqrt{N}})\) over cells \(B(v) \subseteq {\mathbb {R}}^2\), see (3.5):

If we similarly define \(\psi _N: {\mathbb {Z}}^2_\textrm{even}\rightarrow {\mathbb {R}}\) given \(\psi : {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\), we can rewrite the second line of (3.8) as a sum over the points \(v=[\![ x ]\!], w= [\![ y ]\!] \in {\mathbb {Z}}^2_\textrm{even}\) as follows:

Remark 3.1

(Parity issue) Let \({\mathbb {Z}}^d_\textrm{odd}:= {\mathbb {Z}}^d\backslash {\mathbb {Z}}^d_{\textrm{even}}\). If in (3.10) we sum over \(v,w \in {\mathbb {Z}}^2_\textrm{odd}\), we obtain an alternative “odd version” of the averaged partition function, which is independent of the “even version” because two simple random walks started at even vs. odd sites can never meet. This explains why we enforce a parity restriction in (3.10).
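The parity obstruction in Remark 3.1 can be verified by brute force for small times. The sketch below enumerates the sites that a nearest-neighbour walk on \({\mathbb {Z}}^2\) can occupy after n steps and checks that walks started at an even site and at an odd site never share a site at the same time. It is only an illustration; `reachable` is a hypothetical helper.

```python
STEPS = ((1, 0), (-1, 0), (0, 1), (0, -1))


def reachable(start, n):
    """Set of sites a nearest-neighbour walk on Z^2 can occupy after n steps."""
    sites = {start}
    for _ in range(n):
        sites = {(x + dx, y + dy) for (x, y) in sites for (dx, dy) in STEPS}
    return sites


if __name__ == "__main__":
    for n in range(7):
        even_start = reachable((0, 0), n)   # x1 + x2 even at time 0
        odd_start = reachable((1, 0), n)    # x1 + x2 odd at time 0
        print(n, even_start.isdisjoint(odd_start))
```

The reason is that each step flips the parity of \(x_1 + x_2\), so the parity of \(n + x_1 + x_2\) is conserved along any path.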

Finally, we recall the critical window of the disorder strength (inverse temperature) that was introduced in (1.11). Given the definition (3.2) of \(R_N\), for some fixed \(\vartheta \in {\mathbb {R}}\), we choose \(\beta = \beta _N=\beta _N(\vartheta )\) such that

We can spell out this condition more explicitly in terms of \(\beta _N\) (see [18, Appendix A.4]):

where \(\kappa _3, \kappa _4\) are the disorder cumulants, i.e. \(\lambda (\beta ) = \frac{1}{2}\beta ^2 + \frac{\kappa _3}{3!} \beta ^3 + \frac{\kappa _4}{4!} \beta ^4 + o(\beta ^4)\) as \(\beta \downarrow 0\), and \(\alpha \simeq 0.208\) is as in (3.2). Henceforth we always set \(\beta = \beta _N\).

3.3 Polynomial chaos expansion

We now recall the polynomial chaos expansion of the partition function. This is based on the following product expansion, valid for any set A and any family of real numbers \((h_n)_{n\in A}\) labelled by A:

If we apply (3.13) to the partition function \(Z^{\beta _N}_{d,f}(x,y)\) in (1.8), by (3.1) we obtain

Recalling (3.11), we introduce a family \((\xi _N(n,z))_{(n,z) \in {\mathbb {N}}\times {\mathbb {Z}}^2}\) of i.i.d. random variables by

These variables allow us to write

hence, by the Markov property for the random walk with kernel q, we get

where \(\prod _{j=2}^r (\ldots ) := 1\) if \(r=1\). We have expressed the point-to-point partition function as a multilinear polynomial (polynomial chaos) in the independent random variables \(\xi _N(n,z)\).
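The product expansion behind the polynomial chaos can be sanity-checked numerically. The sketch below assumes that (3.13) is the standard identity \(e^{\sum _{n\in A} h_n} = \prod _{n\in A}\big (1 + (e^{h_n}-1)\big ) = \sum _{I\subseteq A} \prod _{n\in I} (e^{h_n}-1)\) for a finite set A, with the empty product equal to 1. The function names are hypothetical.

```python
import itertools
import math


def exp_of_sum(h: dict) -> float:
    """Left-hand side: exp of the sum of all h_n."""
    return math.exp(sum(h.values()))


def chaos_sum(h: dict) -> float:
    """Right-hand side: sum over all subsets I of A of prod_{n in I} (e^{h_n} - 1)."""
    labels = list(h)
    total = 0.0
    for r in range(len(labels) + 1):
        for subset in itertools.combinations(labels, r):
            # math.prod of an empty iterable is 1, matching the empty subset
            total += math.prod(math.exp(h[n]) - 1.0 for n in subset)
    return total


if __name__ == "__main__":
    h = {1: 0.3, 2: -0.7, 5: 1.1}
    print(exp_of_sum(h), chaos_sum(h))
```

In the partition function, \(h_n\) plays the role of the centred disorder contribution collected by the walk at time n, so each factor \(e^{h_n}-1\) corresponds to one variable \(\xi _N\) along the path.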

A similar polynomial chaos representation holds for the averaged partition function \({\mathcal {Z}}^{\beta _N}_{N, t}(\varphi ,\psi )\) given in (3.10). To simplify notation, it is convenient to define an averaged version of the random walk transition kernel \(q_{m,n}(x,y)\). Given suitable \(\varphi , \psi : {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\), a time horizon \(M \in (0,\infty )\), and two points \((m, w), (n, z) \in {\mathbb {Z}}^3_{\textrm{even}}\), recalling \(\varphi _N\) and \(\psi _N\) from (3.9), we define

Then (3.15) yields the following polynomial chaos expansion for \({\mathcal {Z}}^{\beta _N}_{N, t}(\varphi ,\psi )\) in (3.10):

As will be explained later, when it comes to second moment calculations, the time-space points \((n_1, z_1), \ldots , (n_r, z_r)\) in the sum can be interpreted as a time-space renewal configuration.

3.4 Random walk estimates

Let \(g_t: {\mathbb {R}}^2 \rightarrow (0,\infty )\) denote the heat kernel on \({\mathbb {R}}^2\):

where, unless otherwise specified, we denote by \(|\cdot |\) the Euclidean norm on \({\mathbb {R}}^d\).

The asymptotic behavior of the random walk transition kernel \(q_n(x) = \mathrm P(S_n = x)\) is given by the local central limit theorem: as \(n\rightarrow \infty \) we have, uniformly for \(x\in {\mathbb {Z}}^2\),

where the two lines are two different variants of the local central limit theorem for the simple symmetric random walk on \({\mathbb {Z}}^2\) given by Theorems 2.3.5 and 2.3.11 in [66]. We recall that \({\mathbb {Z}}^d_\textrm{even}\) is defined in (3.4), the multiplicative factor 2 comes from the periodicity of the simple random walk \(S_n = (S^{(1)}_n, S^{(2)}_n)\) on \({\mathbb {Z}}^2\), while the factor \(\frac{1}{2}\) in the time argument of the heat kernel comes from the fact that \(\mathrm E[S_n^{(i)} S_n^{(j)}] = \frac{n}{2} \, \mathbb {1}_{i=j}\). We also note that

Similar to the averaged random walk kernels \(q^N_{\cdot , \cdot }\) defined in (3.16)–(3.18), given \(\varphi \in L^1({\mathbb {R}}^2)\), \(\psi \in L^\infty ({\mathbb {R}}^2)\), \(t > 0\), and \(a,b \in {\mathbb {R}}^2\), we define the averaged heat kernels

Recall \(q^N_{0, Nt}(\varphi , \psi )\) from (3.18). By the local limit theorem (3.21), recalling (3.9) and (3.22), we have

where the prefactor \(\frac{1}{2}\) is due to periodicity.
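The local limit theorem and the periodicity factor 2 can be illustrated with exact transition probabilities. The sketch below uses the fact that, for the simple random walk on \({\mathbb {Z}}^2\), the coordinates \(S^{(1)}_n + S^{(2)}_n\) and \(S^{(1)}_n - S^{(2)}_n\) are independent one-dimensional simple random walks, which gives \(q_n(x)\) as a product of two binomial probabilities. It assumes that the heat kernel in (3.20) is the Gaussian density \(g_t(x) = \frac{1}{2\pi t} e^{-|x|^2/(2t)}\), consistent with the covariance \(\frac{n}{2}\) noted above. Function names are hypothetical.

```python
import math


def srw1d(n: int, k: int) -> float:
    """P(1d simple random walk is at k after n steps)."""
    if abs(k) > n or (n + k) % 2:
        return 0.0
    return math.comb(n, (n + k) // 2) / 2**n


def q(n: int, x) -> float:
    """P(S_n = x) on Z^2: x1 + x2 and x1 - x2 evolve as independent 1d walks."""
    return srw1d(n, x[0] + x[1]) * srw1d(n, x[0] - x[1])


def heat_kernel(t: float, x) -> float:
    """Assumed form of g_t: centred Gaussian density on R^2 with covariance t*I."""
    return math.exp(-(x[0] ** 2 + x[1] ** 2) / (2 * t)) / (2 * math.pi * t)


if __name__ == "__main__":
    n = 1000
    for x in ((0, 0), (10, 10), (30, -20)):
        print(x, q(n, x), 2 * heat_kernel(n / 2, x))
```

On sites with the wrong parity, `q` returns exactly zero, which is why the comparison is with \(2\, g_{n/2}\) on the even sublattice rather than with \(g_{n/2}\) on all of \({\mathbb {Z}}^2\).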

We will also need the following lemma, which allows us to replace a random walk transition kernel by a heat kernel even if the time-space increments are perturbed.

Lemma 3.2

Let \(q_n(\cdot )\) be the transition kernel of the simple symmetric random walk on \({\mathbb {Z}}^2\), see (3.1), and let \(g_t(\cdot )\) be the heat kernel on \({\mathbb {R}}^2\), see (3.20). Then there exists \(C\in (0, \infty )\) such that, for all \(n \in {\mathbb {N}}\) and for all \(x \in {\mathbb {Z}}^2\) with \(|x|\leqslant n^{\frac{3}{4}}\), we have

Let \(\varrho _1, \varrho _2>0\) and set \(C := 2 e \, \varrho _1 \, \varrho _2\). Then, given an arbitrary \(m\in {\mathbb {N}}\), for all \(n_1, n_2 \in {\mathbb {N}}\) with \(n_1 \geqslant m\) and \(\frac{n_2}{n_1}\in [1/\varrho _1, \varrho _2]\), and for all \(x_1, x_2\in {\mathbb {R}}^2\) with \(|x_1-x_2|\leqslant \sqrt{m}\), we have

Proof

Let us prove (3.27): by the second variant of the local limit theorem in (3.21),

We next prove (3.28): by the assumption \(\frac{n_2}{n_1}\in [1/\varrho _1, \varrho _2]\), we have

where the last inequality holds because \(|x_2|^2 \leqslant 2 (|x_1|^2 + |x_2-x_1|^2) \leqslant 2 |x_1|^2 + 2 m\) and \(n_1 \geqslant m\) by assumption. \(\square \)

3.5 Renewal estimates and Dickman subordinator

We next present the time-space renewal process underlying the second moment calculations for the partition function. Under diffusive scaling, this leads to the so-called Dickman subordinator in the continuum limit. This approach was developed in [18, 19].

We first define a slight modification of the partition function \(Z_{d,f}^\beta (x,y)\) in (1.8), where we “attach” disorder variables \(\xi _N(n,z)\), see (3.14), at the boundary points (d, x) and (f, y) (which may coincide, if \(d=f\)):

Such quantities will appear as basic building blocks in our proofs. Note that \({{\mathbb {E}}} [X^{\beta _N}_{d,f}(x,y)] = 0\). The second moment of \(X^{\beta _N}_{d,f}(x,y)\) can be computed explicitly by the polynomial chaos expansion (3.15) and it can be expressed as follows:

where we recall that \(\sigma _N^2={\mathbb {V}}\textrm{ar}(\xi _N(a,x))\), and for \(n\in {\mathbb {N}}_0 = \{0,1,2,\ldots \}\) and \(x \in {\mathbb {Z}}^2\) we define

The quantity \(U_N(n,x)\), which plays an important role throughout this paper, admits a probabilistic interpretation as a renewal function. More precisely, let \((\tau ^{(N)}_r, S^{(N)}_r)_{r\geqslant 0}\) denote the random walk (time-space renewal process) on \({\mathbb {N}}_0 \times {\mathbb {Z}}^2\) starting at (0, 0) and with one-step distribution

where \(R_N\) is the random walk overlap defined in (3.2). Then we can write, recalling (3.11),

When \(\lambda _N=1\), we see that \(U_N(n,x)\) is just the renewal function of \((\tau ^{(N)}_r, S^{(N)}_r)_{r\geqslant 0}\). When \(\lambda _N\ne 1\), we can think of \(U_N(n,x)\) as an exponentially weighted renewal function, weighted according to the number of renewals. Note that the first component \(\tau ^{(N)} = (\tau ^{(N)}_r)_{r\geqslant 0}\) is a renewal process with one-step distribution

where \(u(n)=\sum _x q_n(x)^2\) is defined in (3.3). Correspondingly, we can define

The asymptotic behaviors of \(U_N(n,x)\) and \(U_N(n)\) were obtained in [18], exploiting the fact that \(\tau ^{(N)}\) is in the domain of attraction of the so-called Dickman subordinator, defined as the pure jump Lévy process with Lévy measure \(\frac{1}{x} \, \mathbb {1}_{(0,1)}(x) \, \textrm{d}x\). More precisely, we have the following convergence result, which is an extension of [18, Proposition 2.2] from finite dimensional distribution convergence to process level convergence.

Lemma 3.3

Let \((\tau ^{(N)}_r, S^{(N)}_r)_{r\geqslant 0}\) be the space-time random walk defined in (3.32). Let \((Y_s)_{s\geqslant 0}\) be the so-called Dickman subordinator [18], i.e. the pure jump Lévy process with Lévy measure \(\frac{1}{t}\mathbb {1}_{(0,1)}(t)\textrm{d}t\), and let \(V_s:= \frac{1}{2} W_{Y_s}\) where W is an independent Brownian motion. Then we have the convergence in distribution

on the space of càdlàg paths equipped with the Skorohod topology.

Proof

Denote \({\varvec{Y}}^{(N)}_s=(Y^{(N)}_s, V^{(N)}_s):= \Big (\frac{\tau ^{(N)}_{\lfloor s\log N\rfloor }}{N}, \frac{S^{(N)}_{\lfloor s\log N\rfloor }}{\sqrt{N}} \Big )\). The convergence of finite dimensional distributions was already proved in [18, Proposition 2.2]. We prove tightness by verifying Aldous’ tightness criterion [62, Theorem 14.11], namely that for any bounded sequence of stopping times \(\tau _N\) with respect to \(({\varvec{Y}}^{(N)}_s)_{s\geqslant 0}\) and any positive constants \(h_N\downarrow 0\), we have \({\varvec{Y}}^{(N)}_{\tau _N+h_N}-{\varvec{Y}}^{(N)}_{\tau _N}\rightarrow 0\) in probability as \(N\rightarrow \infty \). This follows immediately from the fact that the increments of \({\varvec{Y}}^{(N)}\) are i.i.d. and \({\varvec{Y}}^{(N)}_{h_N}\rightarrow (0,0)\) in probability as \(N\rightarrow \infty \). \(\square \)
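The time component of the rescaled process in Lemma 3.3 can be simulated directly. The sketch below assumes that the one-step law of \(\tau ^{(N)}\) is \(\mathrm P(\tau ^{(N)}_1 = n) = u(n)/R_N\) for \(1\leqslant n\leqslant N\), with \(u(n) = \sum _x q_n(x)^2 = q_{2n}(0)\), which is our reading of the displays omitted here. It samples \(\tau ^{(N)}_{\lfloor s\log N\rfloor }/N\) only; the spatial component is left out, and all function names are hypothetical.

```python
import bisect
import itertools
import math
import random


def renewal_pmf(N: int) -> list:
    """[P(tau_1 = n) for n = 1..N], assumed equal to u(n) / R_N."""
    p, u = 1.0, []
    for n in range(1, N + 1):
        p *= (2 * n - 1) / (2 * n)   # binom(2n, n) / 4**n
        u.append(p * p)              # u(n) = q_{2n}(0)
    R = sum(u)                       # overlap R_N
    return [v / R for v in u]


def sample_rescaled_path(N: int, s_max: float, rng: random.Random) -> list:
    """Values tau_r / N for r = 0, ..., floor(s_max * log N)."""
    cdf = list(itertools.accumulate(renewal_pmf(N)))
    tau, path = 0, [0.0]
    for _ in range(int(s_max * math.log(N))):
        # inverse-CDF sampling; min() guards against rounding at the top end
        jump = min(bisect.bisect_left(cdf, rng.random()) + 1, N)
        tau += jump
        path.append(tau / N)
    return path


if __name__ == "__main__":
    print(sample_rescaled_path(10**4, 2.0, random.Random(0)))
```

Because \(u(n)\) decays like \(\frac{1}{\pi n}\), the jumps of the rescaled path are spread roughly uniformly on a logarithmic scale in \((0,1)\), in line with the Lévy measure \(\frac{1}{t}\mathbb {1}_{(0,1)}(t)\textrm{d}t\) of the limiting subordinator.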

For \(\vartheta \in (0,\infty )\), we define the exponentially weighted Green’s function for \({\varvec{Y}}= ({\varvec{Y}}_s)_{s\geqslant 0}\):

where \({\varvec{f}}_s(\cdot , \cdot )\) is the density of the law of \({\varvec{Y}}_s\) on \([0,\infty )\times {\mathbb {R}}^2\), given that \({\varvec{Y}}_0=(0,0)\) (we take notation from (3.36)). It was shown in [18] that

$$\begin{aligned} {\widehat{G}}_\vartheta (t,x) = {\widehat{G}}_\vartheta (t) \, g_{\frac{t}{4}}(x) , \end{aligned}$$(3.38)

where \(g_\cdot (\cdot )\) is the heat kernel, see (3.20), and \({\widehat{G}}_\vartheta (t) :=\int _{{\mathbb {R}}^2} {\widehat{G}}_\vartheta (t,x) \textrm{d}x\) is closely related to the so-called Dickman function in number theory. For \(t\leqslant 1\), it can be computed explicitly as

with \(\gamma \) as in (3.2) (see [18] and Footnote 3). We will also denote \(G_\vartheta (t,x) := G_\vartheta (t) \, g_{\frac{t}{4}}(x)\). Note that for \(t\leqslant 1\), \(G_\vartheta (t,x)\) and \(G_\vartheta (t)\) are the continuum analogues of \(U_N(n,x)\) and \(U_N(n)\), respectively. It is therefore no surprise that the asymptotics of \(U_N\) will be expressed in terms of \(G_\vartheta \), which we record below for later use.

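In light of (3.30), it is convenient to define

$$\begin{aligned} \overline{U}_N(n,x) := \sigma _N^2 \, U_N(n,x) , \qquad \overline{U}_N(n) := \sigma _N^2 \, U_N(n) . \end{aligned}$$(3.40)

Recalling (3.31), we can give a graphical representation for \(\overline{U}_N(b-a,y-x)\) as follows: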

where in the second line we assign weights \(q_{n'-n}(x'-x)\) to any solid line going from (n, x) to \((n',x')\) and we assign weight \(\sigma _N^2\) to every solid dot.

Recall that \(\sigma _N^2 \sim \frac{\pi }{\log N}\), see (3.11) and (3.2). We now rephrase some results from [18]. Fix \(T>0\).

- By [18, Theorem 1.4], for any fixed \(\delta > 0\), as \(N\rightarrow \infty \) we have

  $$\begin{aligned} \overline{U}_N(n) = \frac{\pi }{N} \, \big ( G_\vartheta \big (\tfrac{n}{N}\big ) + o(1)\big ) \qquad \textrm{uniformly} \ \ \textrm{for} \ \ \delta N \leqslant n \leqslant T N , \end{aligned}$$(3.42)

  and moreover there is \(C < \infty \) such that

  $$\begin{aligned} \overline{U}_N(n) \leqslant \frac{C}{N} \, G_\vartheta \big (\tfrac{n}{N}\big ) \qquad \forall 0 < n \leqslant T N . \end{aligned}$$(3.43)

- By [18, Theorem 2.3 and 3.7], for any fixed \(\delta > 0\), as \(N\rightarrow \infty \) we have

  $$\begin{aligned} \begin{aligned} \overline{U}_N(n,x)&= \frac{\pi }{N^2} \, \big ( G_\vartheta \big (\tfrac{n}{N}, \tfrac{x}{\sqrt{N}}\big ) + o(1)\big ) \, 2 \, \mathbb {1}_{(n,x) \in {\mathbb {Z}}^3_\textrm{even}} \\&\quad \text {uniformly for} \ \ \delta N \leqslant n \leqslant T N \ \ \text {and} \ \ |x| \leqslant \tfrac{1}{\delta } \sqrt{N} . \end{aligned} \end{aligned}$$(3.44)

  The prefactor 2 is due to periodicity and, moreover, there is \(C < \infty \) such that

  $$\begin{aligned} \overline{U}_N(n,x) \leqslant \frac{C}{N} \, \frac{1}{n} \, G_\vartheta \big (\tfrac{n}{N}\big ) \qquad \forall 0 < n \leqslant T N , \ \forall x \in {\mathbb {Z}}^2 . \end{aligned}$$(3.45)

- By [18, Proposition 1.6], for \(t\in (0,1]\) the function \(G_\vartheta (t)\) is \(C^\infty \) and strictly positive, and as \(t \downarrow 0\) it has the following asymptotic behavior:

  $$\begin{aligned} G_\vartheta (t) = \frac{1}{t(\log \frac{1}{t})^2} \bigg \{ 1 + \frac{2\vartheta }{\log \frac{1}{t}} + O\bigg (\frac{1}{(\log \frac{1}{t})^2}\bigg ) \bigg \} , \end{aligned}$$(3.46)

  hence as \(t \downarrow 0\)

  $$\begin{aligned} \int _0^t G_\vartheta (s) \textrm{d}s = \frac{1}{\log \frac{1}{t}} \bigg \{ 1 + \frac{\vartheta }{\log \frac{1}{t}} + O\bigg (\frac{1}{(\log \frac{1}{t})^2}\bigg ) \bigg \} . \end{aligned}$$(3.47)
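As a quick consistency check (a sketch, not taken from [18]), the passage from (3.46) to (3.47) can be seen by integrating term by term with the substitution \(u = \log \frac{1}{s}\), so that \(\textrm{d}u = -\frac{\textrm{d}s}{s}\):

$$\begin{aligned} \int _0^t \frac{\textrm{d}s}{s (\log \frac{1}{s})^2} = \int _{\log \frac{1}{t}}^\infty \frac{\textrm{d}u}{u^2} = \frac{1}{\log \frac{1}{t}} , \qquad \int _0^t \frac{2\vartheta \, \textrm{d}s}{s (\log \frac{1}{s})^3} = \int _{\log \frac{1}{t}}^\infty \frac{2\vartheta \, \textrm{d}u}{u^3} = \frac{\vartheta }{(\log \frac{1}{t})^2} . \end{aligned}$$

In the same way, the error term \(O\big (\frac{1}{s (\log \frac{1}{s})^4}\big )\) integrates to \(O\big (\frac{1}{(\log \frac{1}{t})^3}\big )\). Factoring out \(\frac{1}{\log \frac{1}{t}}\) gives the three terms in (3.47); in particular, the coefficient \(2\vartheta \) in (3.46) becomes \(\vartheta \) in (3.47).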

Remark 3.4

In the proof of (3.42)–(3.45), the case \(T>1\) has to be treated differently from \(T=1\). In [18], the case \(T>1\) was reduced to \(T=1\) through a renewal decomposition and recursion (see [18, Section 7]). Alternatively, we can reduce the case \(T>1\) to \(T=1\) by first setting \({\widetilde{N}}:=TN\), \({\tilde{\vartheta }}:= \vartheta +\log T+o(1)\) so that \(\sigma _{N}^{2}=\sigma _{N}^{2}(\vartheta ) = \sigma _{\widetilde{N}}^2(\tilde{\vartheta })\) by their definitions in (3.11), and then applying (3.42)–(3.45) with N replaced by \(\widetilde{N}\), using the observation that \(\frac{1}{T}G_{\vartheta +\log T}(\tfrac{t}{T}) = G_\vartheta (t)\).
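The scaling identity used at the end of Remark 3.4 can be checked by hand. The computation below is only a sketch, and it rests on an assumption: that \(G_\vartheta \) is given by the integral formula \(G_\vartheta (t) = \int _0^\infty \frac{e^{(\vartheta -\gamma ) s} \, s \, t^{s-1}}{\Gamma (s+1)} \, \textrm{d}s\), which is how we recall it from [18]; the formula should be compared with (3.39) before relying on this check. Under that assumption,

$$\begin{aligned} \frac{1}{T} \, G_{\vartheta +\log T}\big (\tfrac{t}{T}\big ) = \frac{1}{T} \int _0^\infty \frac{e^{(\vartheta -\gamma ) s} \, T^{s} \, s \, t^{s-1} \, T^{1-s}}{\Gamma (s+1)} \, \textrm{d}s = \int _0^\infty \frac{e^{(\vartheta -\gamma ) s} \, s \, t^{s-1}}{\Gamma (s+1)} \, \textrm{d}s = G_\vartheta (t) , \end{aligned}$$

since \(e^{s \log T} = T^s\) and \((t/T)^{s-1} = t^{s-1} \, T^{1-s}\), so that all powers of T cancel inside the integrand.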

We will also need the following bound to complement (3.44).

Lemma 3.5

There exists \(c\in (0,\infty )\) such that for all \(\lambda \geqslant 0\) and \(0\leqslant n\leqslant N\),

Note that by the Markov inequality and optimisation over \(\lambda >0\), (3.48) implies that the probability kernel \(\overline{U}_N(n,\cdot )/\overline{U}_N(n)\) has Gaussian decay on the spatial scale \(\sqrt{n}\).
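To spell out this step, here is a sketch under an assumption on the shape of the bound: suppose that a probability kernel \(p(\cdot )\) on \({\mathbb {Z}}^2\) (here \(p = \overline{U}_N(n,\cdot )/\overline{U}_N(n)\) with \(n\geqslant 1\)) satisfies an exponential moment bound of the form \(\sum _{x} p(x) \, e^{\lambda |x|} \leqslant c \, e^{c \lambda ^2 n}\) for all \(\lambda \geqslant 0\). Then for any \(r > 0\), by the Markov inequality,

$$\begin{aligned} \sum _{x: \, |x| \geqslant r} p(x) \leqslant e^{-\lambda r} \sum _{x \in {\mathbb {Z}}^2} p(x) \, e^{\lambda |x|} \leqslant c \, e^{c \lambda ^2 n - \lambda r} , \qquad \text {and the choice} \ \ \lambda = \frac{r}{2cn} \ \ \text {yields} \qquad \sum _{x: \, |x| \geqslant r} p(x) \leqslant c \, e^{-\frac{r^2}{4cn}} . \end{aligned}$$

The right-hand side is small as soon as r is a large multiple of \(\sqrt{n}\), which is the Gaussian decay on the spatial scale \(\sqrt{n}\).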

Proof

Recall the definition of \(U_N(n,x)\) from (3.33). Conditioned on \(\tau ^{(N)}_1, \ldots , \tau ^{(N)}_r\) with \(\tau ^{(N)}_r=n\), we can write \(S^{(N)}_r=\zeta _1+\cdots +\zeta _r\) for independent \(\zeta _i\)’s with

where \(n_i:=\tau ^{(N)}_i - \tau ^{(N)}_{i-1}\) and \(x\in {\mathbb {Z}}^2\). For each i, denote by \(\zeta _{i,1}\) and \(\zeta _{i,2}\) the two components of \(\zeta _i\in {\mathbb {Z}}^2\). Then we note that there exists \(c>0\) such that for any \(\lambda \geqslant 0\), \(n_i \in {\mathbb {N}}\),

This can be seen by Taylor expanding the exponential and using that \(\mathrm E[\zeta _{i,\cdot }]=0\) by symmetry, \(|\mathrm E[\zeta ^{2k+1}_{i,\cdot }]| \leqslant \frac{1}{2}(\mathrm E[\zeta ^{2k}_{i,\cdot }] + \mathrm E[\zeta ^{2k+2}_{i,\cdot }])\) by Young’s inequality, as well as \(\mathrm E[\zeta ^{2k}_{i,\cdot }] \leqslant (Cn_i)^k (2k-1)!!\) for some \(C>0\) uniformly in \(n_i, k\in {\mathbb {N}}\). The bound on \(\mathrm E[\zeta ^{2k}_{i,\cdot }]\) holds because by (3.21),

where \(q_{n_i}\) has the same Gaussian tail decay as the heat kernel \(g_{n_i/2}\). Using \(e^{|x|} \leqslant e^x+e^{-x}\), this then implies

The bound (3.48) then follows readily from the definitions of \(U_N(n,x)\) and \(U_N(n)\) in (3.33) and (3.35), recalling that \(\overline{U}_N(n,x)\) and \(\overline{U}_N(n)\) are defined in (3.40). \(\square \)

3.6 Second moment of averaged partition function

Using \(X^{\beta _N}_{d,f}(x,y)\) as introduced in (3.29), and recalling (3.15), we can now rewrite the chaos expansion for the averaged partition function \({\mathcal {Z}}^{\beta _N}_{N, t}(\varphi ,\psi )\) in (3.19) as follows:

so that by (3.30) and the fact that \(\overline{U}_N:=\sigma _N^2 U_N\), we have

We now compute the limit of \({{\mathbb {E}}} \big [{\mathcal {Z}}^{\beta _N}_{N, t}(\varphi ,\psi )^2 \big ]\) as \(N\rightarrow \infty \). This was first obtained for the Stochastic Heat Equation in [7] in the special case \(\psi \equiv 1\); see also [19, Theorems 1.2 and 1.7] for an alternative derivation which also covers directed polymers.

Proposition 3.6

(First and second moments) Recall \(G_\vartheta (t)\) from (3.39) for all \(t>0\). For \(\varphi : {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\), define (see Footnote 4)

Then for all \(\varphi \) with \(\Vert \varphi \Vert _{{{\mathcal {G}}} _t}<\infty \) and all \(\psi \in L^\infty ({\mathbb {R}}^2)\), we have

where

and the kernel \(K_{t}^{\vartheta }\) is defined by

Proof

The first moment convergence (3.53) follows from the identity \({{\mathbb {E}}} \big [{\mathcal {Z}}^{\beta _N}_{N, t}(\varphi , \psi ) \big ] = q^{N}_{0,Nt}(\varphi ,\psi )\), see (3.50), together with the asymptotic relation (3.26).

For the second moment computation (3.54) we exploit (3.51), where the first term in the r.h.s. converges to \(\frac{1}{4} \, g_{t/2}(\varphi ,\psi )^2\) by (3.26), which matches the first term in the r.h.s. of (3.54). It remains to show that the sum in (3.51) converges to the term \(\frac{1}{2} {\mathscr {V}}_{t}^{\vartheta }(\varphi ,\psi )\) in (3.54).

Recall the definition of \(q^N_\cdot \) in (3.16)–(3.17). By the local limit theorem (3.21) and in view of (3.22), we see that for any \(\varepsilon > 0\), uniformly for \(m > \varepsilon N\) and \(w \in {\mathbb {Z}}^2\), we have as \(N\rightarrow \infty \)

and similarly, uniformly for \(n \leqslant (1- \varepsilon ) Nt\) and \(z \in {\mathbb {Z}}^2\),

Applying the asymptotic relation (3.44) for \(\overline{U}_N(f-d,y-x)\), we see that the sum in (3.51) is a Riemann sum that converges as \(N\rightarrow \infty \) to the multiple integral (see Footnote 5)

where the prefactor \(\frac{1}{2}\) results from combining the periodicity factor 2 in (3.44) with the volume factor \(\frac{1}{2} \cdot \frac{1}{2}\) which originates from the restrictions \((d,x), (f,y)\in {\mathbb {Z}}^3_{\textrm{even}}\) in (3.51). Then it follows by (3.23), (3.24) and (3.38) that the equality in (3.55) holds with

We can simplify both brackets via the identity \(g_t(x) \, g_t(y) = g_{2t}(x-y) \, g_{\frac{t}{2}}(\frac{x+y}{2})\), see (3.20). Performing the integrals over \(a,b \in {\mathbb {R}}^2\) we then obtain (3.56).
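For completeness, the Gaussian identity can be verified directly. This is a sketch which assumes that the heat kernel in (3.20) is normalised as \(g_t(x) = \frac{1}{2\pi t} \, e^{-\frac{|x|^2}{2t}}\) on \({\mathbb {R}}^2\). By the parallelogram law \(|x-y|^2 + |x+y|^2 = 2|x|^2 + 2|y|^2\), we have

$$\begin{aligned} g_{2t}(x-y) \, g_{\frac{t}{2}}\big (\tfrac{x+y}{2}\big ) = \frac{1}{4\pi t} \, e^{-\frac{|x-y|^2}{4t}} \cdot \frac{1}{\pi t} \, e^{-\frac{|x+y|^2}{4t}} = \frac{1}{(2\pi t)^2} \, e^{-\frac{|x|^2+|y|^2}{2t}} = g_t(x) \, g_t(y) . \end{aligned}$$

In words, the product of two Gaussian densities with the same variance factorises into a density in the difference \(x-y\) and a density in the midpoint \(\frac{x+y}{2}\).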

The bound in (3.55) follows by bounding \(\psi \) with \(\Vert \psi \Vert _\infty \) and then successively integrating out \(w, w'\), followed by u and s in (3.56). \(\square \)

Remark 3.7

(Point-to-plane partition function) For \(\psi (w) = \mathbb {1}(w) \equiv 1\), we can view \({\mathcal {Z}}^{\beta _N}_{N, t}(\varphi , \mathbb {1})\) as the point-to-plane partition function \(Z_N^{\beta _N}(z)\) in (1.3) averaged over its starting point z. By (3.53)–(3.56),

where we set

We note that both the asymptotic mean and the asymptotic variance of \({\mathcal {Z}}^{\beta _N}_{N, t}(\varphi , \mathbb {1})\) are half of those obtained in [19, eq. (1.19)–(1.20)]. This is because here we have defined \({\mathcal {Z}}^{\beta _N}_{N, t}(\varphi ,\psi )\) as a sum over \({\mathbb {Z}}^2_\textrm{even}\), see (3.10), while in [19], the sum is over both \({\mathbb {Z}}^2_\textrm{odd}\) and \({\mathbb {Z}}^2_\textrm{even}\), which give rise to two i.i.d. limits as \(N\rightarrow \infty \) by the parity of the simple random walk on \({\mathbb {Z}}^2\).

4 Coarse-graining

In this section, we give the details of how to coarse-grain the averaged partition function and give the precise definition of the coarse-grained model, both of which were outlined in Sect. 2. The main result is Theorem 4.7, which shows that the averaged partition function \({\mathcal {Z}}^{\beta _N}_{N, t}(\varphi ,\psi )\), see (3.10), can be approximated in \(L^2\) by the coarse-grained model.

4.1 Preparation

The starting point is the polynomial chaos expansion (3.19) for the averaged partition function \({\mathcal {Z}}^{\beta _N}_{N, t}(\varphi ,\psi )\), which is a multilinear polynomial in the disorder variables \(\xi _N(n,z)\). We will call the sequence of time-space points \((n_1, z_1)\), ..., \((n_r, z_r)\in {\mathbb {N}}\times {\mathbb {Z}}^2\) in the sum in (3.19) a microscopic (time-space) renewal configuration. We assume that the disorder strength is chosen to be \(\beta _N=\beta _N(\vartheta )\) as defined in (3.11)–(3.12). For simplicity, we assume the time horizon to be tN with \(t=1\).

Given \(\varepsilon \in (0,1)\) and \(N\in {\mathbb {N}}\), we partition discrete time-space \(\{1,\ldots , N\} \times {\mathbb {Z}}^2\) into mesoscopic boxes

where \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}})\) is a mesoscopic time interval and \({{\mathcal {S}}} _{\varepsilon N}({\textsf{a}})\) a mesoscopic spatial square (see Footnote 6). These boxes are indexed by mesoscopic variables

Recall from Sect. 2 that to carry out the coarse-graining, we need to organize the chaos expansion (3.19) according to which mesoscopic boxes \({{\mathcal {B}}} _{\varepsilon N}\) are visited by the microscopic renewal configuration \((n_1, z_1)\), ..., \((n_r, z_r)\). To perform the kernel replacement (2.6), which allows each summand in the chaos expansion (3.19) to factorize into a product of coarse-grained disorder variables \(\Theta _{N, \varepsilon }\) connected by heat kernels, we will impose some constraints on the set of visited mesoscopic time intervals \({{\mathcal {T}}} _{\varepsilon N}(\cdot )\) and spatial boxes \({{\mathcal {S}}} _{\varepsilon N}(\cdot )\), which will be shown to have negligible costs in \(L^2\). We first introduce the necessary notation.
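To make the bookkeeping concrete, the following minimal sketch maps a microscopic point \((n,z)\) to the indices \(({\textsf{i}}, {\textsf{a}})\) of the mesoscopic box containing it. It relies on assumptions that should be checked against the definition of the boxes: time intervals are taken half-open of the form \((({\textsf{i}}-1)\varepsilon N, {\textsf{i}}\varepsilon N]\), spatial squares half-open with side \(\sqrt{\varepsilon N}\) in each coordinate, and both lengths are passed as integers (the hypothetical parameters `time_len` and `side`) to avoid rounding issues. The function name is also hypothetical.

```python
from typing import Tuple


def mesoscopic_index(
    n: int, z: Tuple[int, int], time_len: int, side: int
) -> Tuple[int, Tuple[int, int]]:
    """Return (i, a) such that (n, z) lies in the mesoscopic box indexed by (i, a).

    Assumed convention (not taken from the paper's text):
      time interval  T(i) = ((i-1)*time_len, i*time_len]
      spatial square S(a) = ((a1-1)*side, a1*side] x ((a2-1)*side, a2*side]
    where time_len plays the role of eps*N and side that of sqrt(eps*N).
    """
    if n < 1:
        raise ValueError("time index n must be at least 1")
    if time_len < 1 or side < 1:
        raise ValueError("box sizes must be positive integers")
    # Python's // is floor division, so negative coordinates are handled correctly.
    i = (n - 1) // time_len + 1
    a = ((z[0] - 1) // side + 1, (z[1] - 1) // side + 1)
    return i, a
```

For instance, with `time_len = 10` and `side = 3`, the point `(10, (0, 1))` falls in the box with time index 1 and spatial index `(0, 1)`, while `(11, (3, 4))` falls in the box with time index 2 and spatial index `(1, 2)`.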

Let us fix two thresholds

We will require that the visited mesoscopic time intervals \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}_1)\), ..., \({{\mathcal {T}}} _{\varepsilon N}({\textsf{i}}_k)\) belong to

We call this the no-triple condition, since it forbids three consecutive mesoscopic time indices \({\textsf{i}}_j, {\textsf{i}}_{j+1}, {\textsf{i}}_{j+2}\) with both \({\textsf{i}}_{j+1} -{\textsf{i}}_j< K_\varepsilon \) and \({\textsf{i}}_{j+2} -{\textsf{i}}_{j+1}< K_\varepsilon \). We can then partition \(({\textsf{i}}_1, \ldots , {\textsf{i}}_k)\) into time blocks such that \({\textsf{i}}_j, {\textsf{i}}_{j+1}\) belong to the same block whenever \({\textsf{i}}_{j+1}-{\textsf{i}}_j<K_\varepsilon \).
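The no-triple condition and the resulting partition into time blocks can be illustrated with a short sketch. It only encodes what is described in the paragraph above (no two consecutive gaps below the threshold, and grouping of indices whose gap is below the threshold); the boundary constraints on the first and last index are not enforced, and the function names and the parameter `K` (standing for \(K_\varepsilon \)) are hypothetical.

```python
from typing import List, Sequence, Tuple


def satisfies_no_triple(indices: Sequence[int], K: int) -> bool:
    """Check that no three consecutive indices have both gaps smaller than K."""
    gaps = [b - a for a, b in zip(indices, indices[1:])]
    if any(g <= 0 for g in gaps):
        raise ValueError("indices must be strictly increasing")
    return all(not (g1 < K and g2 < K) for g1, g2 in zip(gaps, gaps[1:]))


def time_blocks(indices: Sequence[int], K: int) -> List[Tuple[int, int]]:
    """Group indices into time blocks (i, i') with i <= i'.

    Two consecutive indices share a block when their gap is smaller than K.
    Under the no-triple condition every block contains at most two indices.
    """
    if not satisfies_no_triple(indices, K):
        raise ValueError("the no-triple condition is violated")
    blocks: List[Tuple[int, int]] = []
    j = 0
    while j < len(indices):
        if j + 1 < len(indices) and indices[j + 1] - indices[j] < K:
            blocks.append((indices[j], indices[j + 1]))  # block of two close indices
            j += 2
        else:
            blocks.append((indices[j], indices[j]))  # singleton block, i = i'
            j += 1
    return blocks
```

With `K = 5`, the indices `[3, 4, 20, 40, 42]` are grouped into the blocks `(3, 4)`, `(20, 20)` and `(40, 42)`, whereas `[1, 2, 3]` violates the no-triple condition.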

Definition 4.1

(Time block) We call a time block any pair \(\vec {\textsf{i}}= ({\textsf{i}}, {\textsf{i}}') \in {\mathbb {N}}\times {\mathbb {N}}\) with \({\textsf{i}}\leqslant {\textsf{i}}'\). The width of a time block is

The (non-symmetric) “distance” between two time blocks \(\vec {\textsf{i}}, \vec {\textsf{m}}\) is defined by

and we write “\(\vec {\textsf{i}}< \vec {\textsf{m}}\)” to mean that “\(\vec {\textsf{i}}\) precedes \(\vec {\textsf{m}}\)”:

With the partitioning of the indices \(({\textsf{i}}_1, \ldots , {\textsf{i}}_k)\) of the visited mesoscopic time intervals into consecutive time blocks as defined above, which we denote by \(\vec {\textsf{i}}_1=({\textsf{i}}_1, {\textsf{i}}_1')\), ..., \(\vec {\textsf{i}}_r=({\textsf{i}}_r, {\textsf{i}}_r')\) with possibly \({\textsf{i}}_\ell ={\textsf{i}}_\ell '\), the constraint \({{\mathcal {A}}} _{\varepsilon }^{(\mathrm {no\, triple})}\) then becomes the following:

If the time horizon is Nt with \(t\ne 1\), then in (4.3) and (4.4) we just replace the upper bound \(\vec {\textsf{i}}_r \leqslant \lfloor \tfrac{1}{\varepsilon }\rfloor - K_\varepsilon \) by \(\vec {\textsf{i}}_r \leqslant \lfloor \tfrac{t}{\varepsilon }\rfloor - K_\varepsilon \).