Abstract

We study the distribution over measurement outcomes of noisy random quantum circuits in the regime of low fidelity, which corresponds to the setting where the computation experiences at least one gate-level error with probability close to one. We model noise by adding a pair of weak, unital, single-qubit noise channels after each two-qubit gate, and we show that for typical random circuit instances, correlations between the noisy output distribution \(p_{\text {noisy}}\) and the corresponding noiseless output distribution \(p_{\text {ideal}}\) shrink exponentially with the expected number of gate-level errors. Specifically, the linear cross-entropy benchmark F that measures this correlation behaves as \(F=\text {exp}(-2s\epsilon \pm O(s\epsilon ^2))\), where \(\epsilon \) is the probability of error per circuit location and s is the number of two-qubit gates. Furthermore, if the noise is incoherent—for example, depolarizing or dephasing noise—the total variation distance between the noisy output distribution \(p_{\text {noisy}}\) and the uniform distribution \(p_{\text {unif}}\) decays at precisely the same rate. Consequently, the noisy output distribution can be approximated as \(p_{\text {noisy}}\approx Fp_{\text {ideal}}+ (1-F)p_{\text {unif}}\). In other words, although at least one local error occurs with probability \(1-F\), the errors are scrambled by the random quantum circuit and can be treated as global white noise, contributing completely uniform output. Importantly, we upper bound the average total variation error in this approximation by \(O(F\epsilon \sqrt{s})\). Thus, the “white-noise approximation” is meaningful when \(\epsilon \sqrt{s} \ll 1\), a quadratically weaker condition than the \(\epsilon s\ll 1\) requirement to maintain high fidelity. The bound applies if the circuit size satisfies \(s \ge \Omega (n\log (n))\), which corresponds to only logarithmic depth circuits, and if, additionally, the inverse error rate satisfies \(\epsilon ^{-1} \ge {\tilde{\Omega }}(n)\), which is needed to ensure errors are scrambled faster than F decays. The white-noise approximation is useful for salvaging the signal from a noisy quantum computation; for example, it was an underlying assumption in complexity-theoretic arguments that noisy random quantum circuits cannot be efficiently sampled classically, even when the fidelity is low. Our method is based on a map from second-moment quantities in random quantum circuits to expectation values of certain stochastic processes for which we compute upper and lower bounds.

Similar content being viewed by others

1 Introduction

There is a fundamental trade-off in quantum computation between computation size and error rate. Naturally, the longer the computation, the lower the physical error rate must be to maintain a high probability of an errorless computation. Once the error rate is beneath a constant threshold, the theory of fault tolerance and quantum error correction [1, 2] may be employed to push the probability of a logical error arbitrarily close to zero, despite the prevalence of many physical errors during the computation; however, error correction comes at the cost of additional qubits and gates. These overheads, while acceptable in an asymptotic sense, are likely to be overwhelming in the near and intermediate term. This inspires the idea of an upcoming Noisy Intermediate-Scale Quantum (NISQ) era [3], where hardware capabilities are good enough to perform non-trivial quantum tasks on dozens or hundreds of qubits, but quantum error correction, which might require thousands or millions of qubits, remains beyond reach.

In this paper, we study a model of NISQ devices performing random computations and prove a precise sense in which, for typical circuit instances, local errors are quickly scrambled and can be treated as white noise. For some applications, this phenomenon makes it possible for the signal of the noiseless computation to be extracted by repetition despite a large overall chance that at least one error occurs.

Our local error model assumes that each two-qubit gate in the quantum circuit is followed by a pair of gate-independent single-qubit unital noise channels acting on the two qubits involved in the gate. For simplicity and ease of analysis, we assume each of these noise channels is identical, but we fully expect the takeaways from our work to apply when the noise strength is allowed to vary from location to location. For concreteness in this introduction, we can consider the depolarizing channel with error probability \(\epsilon \). The fidelity of the noisy computation with respect to the ideal computation is defined as \(f=\textrm{tr}(\rho _{\text {ideal}}\rho _{\text {noisy}})\) where \(\rho _{\text {ideal}}\) is the (pure) density matrix output by the ideal circuit and \(\rho _{\text {noisy}}\) is the (generally mixed) density matrix ouput by the noisy circuit. In this case, f is expected to be roughly equal to the probability that no errors occur, denoted here by F. We see that, for a circuit with s two-qubit gates, the quantity \(F = (1-\epsilon )^{2s}\) is close to 1 only if the quantity \(2\epsilon s\)—the average number of errors—satisfies \(2\epsilon s \ll 1\).

However, this high-fidelity requirement is quite restrictive in practice. Already for circuits with 50 qubits at depth 20, the error rate \(\epsilon \) must be on the order of \(10^{-4}\) for the whole computation to run without error at least 90% of the time; this error rate is more than an order of magnitude smaller than what has been achievable in recent experiments on superconducting qubit systems of that size [4,5,6]. Indeed, in their landmark 2019 quantum computational supremacy experiment [4], a group at Google performed random circuits on 53 qubits of depth 20, but the fidelity of the computation was estimated to be \(f \approx 0.002\), suggesting that at least one error occurs in all but a tiny fraction of the trials. Similar experiments at the University of Science and Technology of China on 56 [5] and 60 [6] qubits reported even smaller fidelities of 0.0007 [5] and 0.0004 [6]. This would not be an issue if one could determine when a trial is errorless: in this case, one could just repeat the experiment 1/f times. However, error detection requires overheads similar to error correction.

Rather, low-fidelity random circuit sampling experiments and their claim of quantum computational supremacy benefit from a key assumption [4, 7]: when at least one error does occur, the output of the experiment is well approximated by white noise, that is, the output is random and uncorrelated with the ideal (i.e., noiseless) output. When this is the case, the signal of diminished size F can, at least for some applications, be extracted from the white noise using \(O(1/F^2)\) trials, as we explain later. Specifically, for quantum computational supremacy, the white-noise assumption is that the distribution \(p_{\text {noisy}}\) over measurement outcomes of their noisy device is close to what we call the “white-noise distribution”

with \(p_{\text {ideal}}\) the ideal distribution and \(p_{\text {unif}}\) the uniformFootnote 1 distribution. In particular, for the approximation to be non-trivial, we demand that the total variation distance between \(p_{\text {noisy}}\) and \(p_{\text {wn}}\) be a small fraction of F, that is

This demand is necessary because we expect that \(p_{\text {noisy}}\) also decays toward \(p_{\text {unif}}\) such that \(\frac{1}{2}\Vert p_{\text {noisy}}- p_{\text {unif}}\Vert _1 = \Theta (F)\), and thus \(p_{\text {unif}}\) is a trivial approximation for \(p_{\text {noisy}}\) with error \(\Theta (F)\).

Prior to their experiment, the Google group provided numerical evidence [7] in favor of the white-noise assumptionFootnote 2 for randomly chosen circuits. They found that the output distribution of random circuits of depth 40 on a 2D lattice of 20 qubits approaches the uniform distribution when a local Pauli error model is applied. Furthermore, they observed that the correlation of \(p_{\text {noisy}}\) with respect to \(p_{\text {ideal}}\) appears to decay exponentially, consistent with \(p_{\text {noisy}}\approx p_{\text {wn}}\). However, their analysis did not specifically estimate the distanceFootnote 3 between \(p_{\text {noisy}}\) and \(p_{\text {wn}}\). The white-noise condition in Eq. (2) requires that the distance between \(p_{\text {noisy}}\) and \(p_{\text {wn}}\) decrease as the expected number of errors increases and F decays, so quantifying the differences between the distributions is vital for determining how well the white-noise approximation is obeyed.

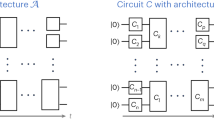

Here we prove rigorous bounds on the error in the white-noise approximation, averaged over circuits with randomly chosen gates. Our results fully apply in two random quantum circuit architectures: first, the 1D architecture with periodic boundary conditions, where qubits are arranged in a ring and alternating layers of nearest-neighbor gates are applied; and second, the complete-graph architecture, where each gate is chosen to act on a pair of qubits chosen uniformly at random among all \(n(n-1)/2\) pairs.Footnote 4 We show that, for Pauli noise channels, the error in the white-noise approximation is small as long as (1) \(\epsilon ^2 s \ll 1\), (2) \(s \ge \Omega (n\log (n))\), and (3) \(\epsilon \ll 1/(n\log (n)) \). We believe that condition (3) could be relaxed to read \(\epsilon < c/n\) for some universal constant \(c = O(1)\); numerics suggest \(c=0.3\) for the complete-graph architecture. Condition (1) is a quadratic improvement over the condition \(\epsilon s \ll 1\) needed for high fidelity. For circuits with \(\epsilon < 0.005\), as is the case in recent experiments [4,5,6], thousands of gates could potentially be implemented before condition (1) fails. Note that our technical statements hold for general (non-Pauli) error channels as well, but we find that the error in the white-noise approximation is small only for incoherent noise channels, which includes depolarizing and dephasing noise, but not unitary noise. We complement this analysis with numerical results that confirm the picture presented by our theoretical proofs for the complete-graph architecture, and demonstrate that realistic NISQ-era values of the error rate and circuit size can lead to a good white-noise approximation.

By putting the white-noise approximation for random quantum circuits on stronger theoretical footing, our work has several applications. First, the white-noise assumption has been an ingredient in formal complexity-theoretic arguments that the task accomplished on noisy devices running random quantum circuits is hard for classical computers, enabling the declaration of quantum computational supremacy [4]. We complement our main result by showing in Appendix C that classically sampling from the white-noise distribution within total variation distance \(\eta F\) is, in a certain complexity-theoretic sense, equivalent to sampling from the ideal output distribution within total variation distance \(O(\eta )\), up to a factor of F in the complexity. This makes low-fidelity experiments where errors are common nearly as defensible for quantum computational supremacy as high-fidelity experiments where errors are rare, at least in principle. However, by identifying a barrier at \(\epsilon = O(1/n)\) above which the white-noise assumption is expected to fail, our work accentuates limitations of existing high-noise quantum computational supremacy proposals: if the noise rate is order-1 as n increases—a more realistic experimental scenario—one should not rely on the white-noise assumption as Google [4, 7] did to justify an asymptotic advantage for the sampling problem. Second, our result lends theoretical justification to the usage [4,5,6] of the linear cross-entropy metric proposed in Ref. [4] to benchmark noise in random circuit experiments and verify that hardware has correctly performed the quantum computational supremacy task. Indeed, as a side result, we show that, for both incoherent and coherent noise, the metric decays precisely as \(e^{-2s\epsilon \pm O(s\epsilon ^2)}\) when \(\epsilon \) is sufficiently small, matching the expectation that it should be roughly equal to the probability that all 2s noise locations are error free. This also suggests that the linear cross-entropy benchmark could be reliably used to accurately estimate the underlying local noise rate \(\epsilon \) [9].

Beyond random circuit experiments for quantum computational supremacy, our work suggests that other scenarios where the white-noise assumption holds may be advantageous in the NISQ era, as one can eschew error correction and nonetheless perform a fairly long quantum computation, as long as one is willing to repeat the experiment \(O(1/F^2)\) times. One example of a scenario where the assumption may hold is quantum simulation of fixed chaotic Hamiltonians, since they are also believed to be efficient at scrambling errors.

The remainder of the paper is structured as follows: in Sect. 2, we describe our setup and in particular our model for local noise within a random quantum circuit; in Sect. 3, we precisely state our results; in Sect. 4, we discuss further implications and how our results fit in with prior work; in Sect. 5, we give an overview of the intuition behind our result and the method we use in our proofs, which is based on a map from random quantum circuits to certain stochastic processes. These stochastic processes can also be interpreted as partition functions of statistical mechanical systems. This method might be regarded as an extension of the method in Ref. [8], where we studied anti-concentration in noiseless random quantum circuits. In Sect. 6, we present a numerical calculation of our bound for the realistic values of the circuit parameters informed by the experiments in Refs. [4,5,6] (although for the complete-graph architecture, rather than 2D). We conclude the main text with an outlook in Sect. 7. The rigorous proofs and details behind the map to stochastic processes then appear in the appendices.

2 A Model of Noisy Random Quantum Circuits

Here we describe our model of noisy random quantum circuits. Let the circuit consist of s two-qudit gates acting on n qudits, each with local Hilbert space dimension q. We follow Ref. [8] in defining a random quantum circuit architecture as an efficient algorithm that takes the circuit specifications (n, s) as input and outputs a quantum circuit diagram with s two-qudit gates, that is, a length-s sequence of qudit pairs, without specifying the actual gates that populate the diagram. Our results fully apply for two specific architectures: the 1D architecture with periodic boundary conditions, and the complete-graph architecture, which were previously shown in Ref. [8] to have the anti-concentration property as long as \(s \ge \Omega (n\log (n))\), with a particular constant prefactor. Our results would also fully apply for standard architectures in D spatial dimensions with periodic boundary conditions if it could be proved that they also achieve anti-concentration whenever \(s \ge \Omega (n \log (n))\), as was conjectured in Ref. [8].

Given an architecture and parameters (n, s), we can generate a circuit instance by choosing the circuit diagram according to the architecture and then choosing each of the unitary gates in the diagram at random according to the Haar measure. Each instance is associated with an output probability distribution \(p_{\text {ideal}}\) over \(q^n\) possible computational basis measurement outcomes \(x \in [q]^n\) (where \([q] = \{0,1,\ldots ,q-1\}\)) that would be sampled if the circuit were implemented noiselessly. Note that in the formal analysis we include a layer of n (also Haar-random) single-qudit gates at the beginning and end of the circuit without counting these 2n gates toward the circuit size; these might be regarded as fixing the local basis for the input product state and the measurement of the output.Footnote 5

2.1 Local noise model

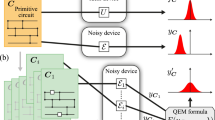

We augment this setup by inserting single-qudit noise channels into the circuit diagram, which act on qudits involved in a multi-qudit gate immediately following the gate, as shown in the example in Fig. 1. In our model, the single-qudit gates remain noiseless and measurements are assumed to be perfect.Footnote 6

Thus, the core assumption is that the noise is local, i.e. independent from qudit to qudit. We assume each noise channel \({\mathcal {N}}\) is a unitalFootnote 7 and completely positive trace-preserving map.

For a given noise channel, there are only two parameters that matter for our analysis, the average infidelity and the unitarity of the channel. The average infidelity for a channel \({\mathcal {N}}\) is defined as

where the integral is over the Haar-measure on \(q \times q\) unitary matrices V and \( |{\psi }\rangle \!\langle {\psi }| \) is any pure state. The average infidelity is one measure of the overall noise strength of the channel \({\mathcal {N}}\). Following Refs. [10, 11], the unitarity is defined for unital channels as

The unitarity is the expected purity of the output state under random choice of input state, scaled to have minimum value of 0 and maximum value of 1.

Examples: depolarizing, dephasing, and rotation channels

It is helpful to consider explicitly the following three channels. First, the depolarizing channel

where \(\gamma = \epsilon q^2/(q^2-1)\), \(\{P_i\}_{i=1}^{q^2-1}\) is the set of single-qudit Pauli matrices (appropriately generalized to higher q), and I is the \(q \times q\) identity matrix. There are two ways to think of the channel: first, with probability \(1-\gamma \) doing nothing and with probability \(\gamma \) resetting the state to the maximally mixed state on that qudit; second, with probability \(1-\epsilon \) doing nothing and with probability \(\epsilon \) choosing a Pauli operator at random to apply to the qudit.

We can also consider the dephasing channel

which represents doing nothing with probability \(1-q\epsilon /(q-1)\) and performing a measurement in the computational basis with probability \(q\epsilon /(q-1)\).

Finally, we can consider a coherent noise channel, for example the rotation channel

which applies a small unitary rotation by angle \(\theta \) to the state.

The average infidelity and unitary of these channels are given in Table 1. The core fact that differentiates the coherent rotation error channel from the incoherent depolarizing and dephasing error channels is how the size of the errors grow under repeated application of the channel. If an incoherent channel is applied m times, the average infidelity grows linearly in m, which is seen in our examples by replacing \(\epsilon \) with \(1-(1-\epsilon )^m\) and noting \(r=O(m\epsilon )\) up to leading order. However, if a coherent channel is applied m times, the average infidelity grows quadratically in m, which is seen in the rotation channel by replacing \(\theta \) with \(m\theta \) and noting \(r = O(m^2\theta ^2)\) up to leading order. Given r and u, the amount of coherence in the channel can be quantified by the parameter \(\delta = 2r(1+q^{-1}) - (1-u)(1-q^{-2})\) [12].

2.2 Output distributions of the quantum circuit

Suppose the locations of the s two-qudit gates have been fixed, with gate t acting on qudits \(\{i_t,j_t\}\). Then a circuit instance is specified by a sequence \((U^{(-n+1)},\ldots , U^{(s+n)})\), where \(U^{(t)}\) is a \(q^2 \times q^2\) (two-qudit) unitary matrix if \(1\le t \le s\) and a \(q \times q\) (single-qudit) unitary matrix otherwise. Accordingly, for each t, let

denote the unitary channel that acts as \(U^{(t)}\) on qudits \(i_t\) and \(j_t\) and as the identity channel, denoted by \({\mathcal {I}}\), on the other qudits. To account for noise, let

be the channel that applies noise channels after applying the unitary gate. Now we can define the ideal and noisy output distributions by

Our work compares the distribution \(p_{\text {noisy}}\) to the white-noise distribution \(p_{\text {wn}}\) (defined in Eq. (1) and repeated here)

for some choice of F. In the introduction, for simplicity our discussion set F to be equal to the probability of an errorless computation; in our more precise analysis below, we find that the choice of F that minimizes the distance between \(p_{\text {wn}}\) and \(p_{\text {noisy}}\) is given by a normalized version of the linear cross-entropy benchmark, which we show is nearly equal to the quantity chosen in the introduction.

The white-noise distribution is a mixture of the ideal distribution and the uniform distribution. Note that \(p_{\text {ideal}}\), \(p_{\text {noisy}}\), and \(p_{\text {wn}}\) all depend implicitly on the circuit instance U. In the analysis we treat F as a free parameter, and we choose it such that our bound on the distance between \(p_{\text {noisy}}\) and \(p_{\text {wn}}\) is minimized. The total variation distance between two distributions \(p_1\) and \(p_2\) is defined as

Comment on randomness in our setup

There are multiple types of randomness in our analysis, and in understanding our result it is important to keep track of how they interplay. First of all, the noiseless circuit instance U is generated randomly by choosing each gate to be Haar random—in an experimental setting, U is chosen randomly but known to the experimenter. The choice of U determines an ideal pure output state. Second of all, for each fixed choice of U, the noise channels may introduce randomness that makes the noisy output state mixed. When the noise is depolarizing noise, this might be regarded as the insertion of a randomly chosen pattern of Pauli errors. Lastly, the measurement of the state in the computational basis gives rise to a random measurement outcome drawn from a certain classical probability distribution: \(p_{\text {ideal}}\) if we are considering the noiseless circuit, and \(p_{\text {noisy}}\) if we are considering the noisy circuit. The important thing to remember is that we are primarily concerned with thinking about fixed instances U and the interplay between the resulting probability distributions \(p_{\text {ideal}}\), \(p_{\text {noisy}}\) and \(p_{\text {wn}}\) for that instance. Then, we make a statement about these distributions that holds in expectation over random choice of U. If desired, one could then use Markov’s inequality to form bounds on the fraction of instances U for which the white-noise approximation must be good. In practice, we expect strong concentration of typical instances near their expectation.

Comment on more general (universal) gate sets

We consider random quantum circuits built from local two-site unitary gates drawn randomly with respect to the Haar measure. As our analysis involves only second moment quantities, our results therefore directly apply to any gate set (or distribution on the 2-site unitary group) that forms an exact unitary 2-design, e.g. random Clifford circuits with each two-qubit gate drawn uniformly at random from the Clifford group. Furthermore, circuits constructed with gates drawn randomly from universal gate sets should give rise to similar scrambling phenomena and we expect that our results hold for such circuits, including the actual random circuit experiments performed in Refs. [4,5,6]. While our method is not directly generalizable to other gate sets, we anticipate that if our analysis were extendable to such gate sets, the results would only change by constant factors.

Some evidence for this is provided by the independence of the spectral gap for universal gate sets [13]. This implies that the depth at which random quantum circuits scramble (and converge to approximate unitary designs) only changes by a constant factor when one considers circuits comprised of gates drawn randomly for any universal gate set [14].

3 Overview of Contributions

The main result of this paper is a proof that, for typical random circuits, the output distribution \(p_{\text {noisy}}\) of the quantum circuit with local noise is very close to the white-noise distribution \(p_{\text {wn}}\) if the noise is sufficiently weak—for our results to apply, the noise strength must decay with the system size. Specifically, we prove an upper bound on the expectation value of the total variation distance between the two distributions. In proving that result, we also prove a statement about the expected linear cross-entropy benchmark—a proxy for fidelity—in noisy random quantum circuits, and another statement about the speed at which \(p_{\text {noisy}}\) approaches the uniform distribution. For all statements, the notation \({\mathbb {E}}_U\) denotes expectation over choice of Haar-random single-qudit and two-qudit gates.

In the rest of this section, we state our results for general noise channels, deferring the proofs to Appendix B, but first we summarize the contributions specifically applied to the depolarizing channel in Table 2.

Comment on architectures

The theorem statements below are expressed only for the 1D and complete-graph architectures, which are known to anti-concentrate after circuit size \(\Theta (n\log (n))\). In the appendix, we prove slightly more general statements that also hold for any architecture consisting of layers and satisfying a natural connectivity property (this includes standard architectures in D spatial dimensions with periodic boundary conditions). These statements depend on the anti-concentration size \(s_{AC}\) of these architectures, which is conjectured to be \(\Theta (n\log (n))\) but for which the best known upper bound is \(O(n^2)\) [8].

3.1 Decay of linear cross-entropy benchmark

Define the quantity

The quantity \({\bar{F}}\) may be regarded as an estimate of the fidelity of the noisy quantum device with respect to the ideal computation; however, we emphasize that it is a distinct quantity. When \(p_{\text {noisy}}(x)\) and \(p_{\text {ideal}}(x)\) are viewed as random variables in the instance U, \({\bar{F}}\) is equal to their covariance, normalized by the variance of \(p_{\text {ideal}}\). Note also that the numerator of \({\bar{F}}\) is the expected score on the linear cross-entropy benchmark, as proposed in Ref. [4], using samples from the noisy device, and the denominator is the expected score using samples from the ideal output distribution. Refs. [9, 15] studied a similar quantity, the difference being that the \({\mathbb {E}}_U\) appears outside the fraction in their case. Additionally, note that the denominator is given by \(q^n Z-1\), where Z is the collision probability studied in Refs. [8, 16]. The results of Ref. [8] imply that the denominator becomes within a small constant factor of \((q^n-1)/(q^n+1) \approx 1\) after \(\Theta (n\log (n))\) gates. Therefore, while our results are stated for the normalized linear cross-entropy benchmark, they apply equally well for the linear cross-entropy benchmark when the depth is at least logarithmic.

Theorem 1

Consider either the complete-graph architecture or the 1D architecture with periodic boundary conditions on n qudits of local Hilbert space dimension q and comprised of s gates. Let r be the average infidelity of the local noise channels. Then there exists constants c and \(n_0\) such that whenever \(r \le c/n\) and \(n \ge n_0\), the following holds:

where

Note that the relationship \(\epsilon = r(q+1)/q\) holds for the depolarizing channel as defined in Eq. (5), so, ignoring the \(O(q^{-2n})\) corrections,

indicating that the linear cross-entropy metric decreases exponentially with the expected number of Pauli errors \(2s\epsilon \), as long as the noise is sufficiently weak that the other terms can be ignored. In particular, three conditions must be met to approximate \(Q_1\) by 1 in Eq. (16): (1) \(\epsilon ^2 s \ll 1\); (2) \(s \ge \Omega (n\log (n))\), i.e., anti-concentration has been reached; and (3) \(\epsilon \ll 1/(n\log (n))\). One implication of Theorem 1 is that the same kind of decay extends to general noise channels and is observed even for coherent noise channels like the rotation channel.

3.2 Convergence to uniform

We show an upper bound on the expected total variation distance between the output of the noisy quantum device \(p_{\text {noisy}}\) and the uniform distribution. Our bound decays exponentially in the number of error locations, under certain circumstances. In particular, it decays exponentially in \((1-u)(1-q^{-2})s\) where u is the unitarity of the local noise channels.

Theorem 2

Consider either the complete-graph architecture or the 1D architecture with periodic boundary conditions on n qudits of local Hilbert space dimension q and s gates. Let u be the unitarity of the local noise channels (and define \(v=1-u\)). Then there exist constants c and \(n_0\) such that as long as \(v \le c/n\) and \(n \ge n_0\)

where \(p_{\text {unif}}\) is the uniform distribution, and

Note that \(Q_2\) is small under a similar three conditions as in the cross-entropy decay result: (1) \(s(1-u)^2 \ll 1\), (2) anti-concentration has been reached, and (3) \(n\log (n)(1-u) \ll 1\).

For the depolarizing channel, \(u = 1-2\epsilon (1-q^{-2})^{-1}\) up to first order in \(\epsilon \), so the distance to uniform decays like \(e^{-2s\epsilon }\), which is identical to the rate of linear cross-entropy decay. On the other hand, the unitarity of the rotation channel is \(u=1\), so our upper bound does not decay with s, even though \({\bar{F}}\) does decay for the rotation channel. This is expected because the rotation channel is coherent; indeed, unlike the other two examples, it sends pure states to pure states. The ideal pure state and the noisy pure state will become less and less correlated as more noise channels act, which explains why \({\bar{F}}\) decays, but the output distribution for the noisy pure state will not converge to uniform.

3.3 Distance to white-noise distribution

We also show a stronger statement: not only does the output distribution decay to uniform, it does so in a very particular way, preserving an uncorrupted signal from the ideal distribution. That is, we show that \(p_{\text {noisy}}\) is close to \(p_{\text {wn}}\) by upper bounding the expected total variation distance between the two distributions. Our bound can be applied for any noise channel, but it only evaluates to a small and meaningful number for incoherent noise channels.

Theorem 3

Consider either the complete-graph architecture or the 1D architecture with periodic boundary conditions on n qudits of local Hilbert space dimension q and s gates. Let r be the average infidelity and u the unitarity of the local noise channels (and define \(v=1-u\)). Let

Then, when we choose \(F = {\bar{F}}\) as in Eq. (14), there exist constants \(c_1\), \(c_2\), and \(n_0\) such that as long as \(v \le c_1/n\), \(r \le c_2/n\), and \(n \ge n_0\),

whenever the right-hand side of Eq. (22) is less than \({\bar{F}}\).

We make a couple of comments. First, we emphasize how small the right-hand side of Eq. (22) is. The quantity \({\bar{F}}\) is decaying exponentially in the number of expected errors, as shown in Theorem 1. We showed in Theorem 2 that \(p_{\text {noisy}}\) converges to uniform at roughly the same rate. However, the distance between \(p_{\text {noisy}}\) and \(p_{\text {wn}}\) is much smaller than \({\bar{F}}\) if the parameters are sufficiently weak, demonstrating that the noisy and white-noise distribution are much closer to each other than either are to uniform.

Second, let us examine the quantity \(\delta \). For the depolarizing channel and the dephasing channel, the leading term in \(\delta \) cancels out leaving \(\delta = O(\epsilon ^2)\), so the \(\sqrt{\delta }\) term in Eq. (22) is on the same order as the other terms. This is a signature of incoherent noise. The coherent rotation channel, which has \(u=1\) and \(r = O(\theta ^2)\), has \(\delta = O(\theta ^2)\), so \(\sqrt{\delta }\) is large compared to the other terms in the expression. In this case, we would need \(sr \ll 1\) for the approximation to be good, but if this is true, then \({\bar{F}} \approx 1\) and the white-noise approximation is trivial.

Relatedly, the parameter \(\delta \) can be connected to the diamond distance between the channel \({\mathcal {N}}\) and the identity channel. This distance, denoted by D, is defined as the trace distance between the input state \(\phi \) and the state \(\phi '\) obtained by applying the noise channel to \(\phi \), maximized over all possible \(\phi \), including \(\phi \) that are entangled with an auxiliary system of arbitrary size. If \({\mathcal {N}}\) is applied 2s times, the total deviation in trace norm from the ideal output can be as large as 2sD in the worst case. It was shown in Ref. [12] that \(D = O(\sqrt{\delta })\), specifically

It is also known that \(r \le O(D)\) and \(1-u \le O(D)\). Thus, working at sufficiently large circuit size and sufficiently small noise rate to neglect the final three terms in Eq. (22), we can write our result as

This emphasizes that the fundamental result is an improved trade-off between noise and circuit size; the strength of the signal decays exponentially, but the error on the renormalized signal grows quadratically slower (as \(O(D\sqrt{s})\)) in the case of random quantum circuits with incoherent noise than it does in the worst case (as O(Ds)) for arbitrary circuits and arbitrary noise channels with diamond distance D.

4 Related Work and Implications

4.1 Quantum computational supremacy

A central motivation for our work has been recent quantum computational supremacy experiments [4, 5] that sampled from the output of noisy random quantum circuits on superconducting devices. In this context, the main claim is that no classical computer could have performed the same feat in any reasonable amount of time. While no efficient classical algorithms to simulate the quantum device performing this task are known, there is a lack of concrete theoretical evidence that no such algorithm exists.

Our work bolsters the theory behind these experiments in two ways, assuming noise in the device is sufficiently well described by our local noise model. First, our result on the decay of \({\bar{F}}\) justifies the usage of the linear cross-entropy metric to benchmark the overall noise rate in the device, and to quantify the amount of signal from the ideal computation that survives the noise. Second, convergence to the white-noise distribution has theoretical benefits with respect to a potential proof that the random circuit sampling task accomplished by the device is actually hard for classical computers.

4.1.1 Linear cross-entropy benchmarking

Quantum computational supremacy experiments are complicated by the fact that since (by definition) they cannot be replicated on a classical computer, it is non-trivial to classically verify that they actually performed the correct computational task. A partial solution to this issue has been the proposal of linear cross-entropy benchmarking, whereby a sample x is generated by the device according to the noisy output distribution \(p_{\text {noisy}}\), and a classical supercomputer is used to compute \(p_{\text {ideal}}(x)\).Footnote 8 When T samples \(\{x_1,\ldots ,x_T\}\) are chosen, the average

is calculated, which is an empirical proxy for the circuit fidelity. We can see that the expected value of \({\mathcal {F}}\) is precisely \(\sum _x p_{\text {noisy}}(x)(q^n p_{\text {ideal}}(x) - 1)\), which is the numerator of the quantity \({\bar{F}}\) defined in Eq. (14). Meanwhile, the denominator of \({\bar{F}}\) becomes close to 1, so long as the output is anti-concentrated. In Theorem 1, we show that if the depolarizing error rate \(\epsilon \) satisfies \(\epsilon \ll 1/(n\log (n))\) and as long as \(\epsilon ^2 s \ll 1\), then there are matching upper and lower bounds on the expected value of \({\mathcal {F}}\), which decays with the circuit size like \(e^{-2\epsilon s}\). Thus, assuming our local noise model, we prove that one can infer \(\epsilon \) given \({\mathcal {F}}\) and s. The inferred value of \(\epsilon \) can then be compared to the noise strength estimated when testing each circuit component individually, thus providing one method of verification that the components are behaving as expected during the experiment.

Indeed, the idea of using random circuit sampling as an alternative to randomized benchmarking was formally proposed in Ref. [9], a work that has certain similarities to ours. In particular, like us, they find that the condition \(1/\epsilon \ge \Omega (n)\) appears necessary for controlled decay of the fidelity—our result can be expressed as requiring \(1/\epsilon \ge {\tilde{\Omega }}(n)\), where the tilde hides log factors, and we believe those log factors are not necessary for our result. They give analytical and numerical evidence that the fidelity decays as \(e^{-2 \epsilon s}\). Additionally, like us, they use a map from random quantum circuits to identity-swap configurations to motivate their results. However, they only analytically study the fidelity decay up to first order in the error rate for a 1D architecture; that is, they compute the expected fidelity due to contributions with an error at only one location or at a correlated set of locations all at the same depth. On the other hand, their error model is more general than ours as we do not consider correlated errors, while their theoretical analysis handles Pauli errors of up to weight three; in the context of noise characterization, this is important as correlated errors are often the most difficult to diagnose. On this point, we believe correlated errors could be handled by our method with a more intricate analysis, but we leave that for future work. Relatedly, exponential decay of fidelity in noisy systems has been proposed [17] as an experimentally detectable signature of quantum mechanics that distinguishes it from theories where quantum mechanics emerges from an underlying classical theory. Our work may help justify these proposals.

Note that as the fidelity decays, more samples must be generated to form a good estimate of the mean of \({\mathcal {F}}\). Since \(p_{\text {ideal}}(x)\) for uniformly random x has standard deviation on the order of \(q^{-n}\) (assuming anti-concentration), the standard deviation of \({\mathcal {F}}\) is expected to decay with the number of samples like \(1/\sqrt{T}\). Thus, resolving the mean of \({\mathcal {F}}\) with enough precision to differentiate it from 0 requires \(T = \Omega (1/{\mathcal {F}}^2)\) samples.

We comment that while our analysis assumes that each noise location has the same value of \(\epsilon \), this is not essential to our method. We expect it could be shown that the expected value of \({\mathcal {F}}\) decays like \(\exp (-\sum _i \epsilon _i)\) where i runs over all possible noise locations. Moreover, our analysis works for any kind of local noise, not just depolarizing noise; the only relevant parameter is the average infidelity of the noise channels. This includes coherent noise; for example, the average infidelity of the coherent rotation channel given in Eq. (7) is less than 1 and thus leads to exponential decay of \({\mathcal {F}}\). This is consistent with Ref. [9], which previously showed that from the perspective of fidelity decay, every channel is equivalent to an (incoherent) Pauli noise channel.

4.1.2 Classical hardness of sampling from the noisy output distribution

To claim to have achieved quantum computational supremacy, the low-fidelity random circuit sampling experiments in Refs. [4, 5] should be able to identify a concrete computational problem that their device solved, but a classical device could not also solve in any reasonable amount of time. Here there are a couple of options. One option is to simply rely directly on the linear cross-entropy benchmark and define the task to be generating a set of samples that scores at least \({\mathcal {F}} \ge 1/{{\,\textrm{poly}\,}}(n)\). A related idea is the task of Heavy Output Generation (HOG) [18], which is to generate outputs x for which \(p_{\text {ideal}}(x)\) is large (i.e. “heavy outputs”) significantly more often than a uniform generator. The upshot of these definitions is that in the regime where \(p_{\text {ideal}}(x)\) can be calculated classically with an exponential-time algorithm, it can be verified that the quantum device successfully performed the task. Their main drawback is that it is not clear whether running a (noisy) quantum computation is the only way to perform these tasks. Perhaps a (yet-to-be-discovered) classical algorithm can score well on the linear cross-entropy benchmark without performing an actual random circuit simulation; for example, this was the goal in Refs. [19, 20], both of which utilized similar techniques as the present paper in their analyses.

Another option is to define the task specifically in terms of the white-noise distribution. Namely, one must produce samples from a distribution \(p_{\text {noisy}}\) for which \(\frac{1}{2}\Vert p_{\text {noisy}}- p_{\text {wn}}\Vert _1 \le \eta F\) where F is not too small (ideally at least inverse polynomialFootnote 9 in n) and some small constant \(\eta \). We refer to this task as “white-noise random circuit sampling (RCS).” A downside of this option is that even with unlimited computational power, an exponential number of samples from the device would be needed to definitively verify that the distribution is close to \(p_{\text {wn}}\) in total variation distance. Our work provides a partial solution here, as we show that a local error model allows a device to accomplish the white-noise RCS task, as long as the error rate is sufficiently weak compared to the number of qubits. Thus, if the experimenters are sufficiently confident in the error model that describes their device, they can rely on our work to be confident they are performing the white-noise RCS task. This observation is especially important after recent work of Ref. [20] suggests that using the linear cross-entropy benchmark is insufficient as a way of verifying that the sampling task has been correctly performed. In that light, our results show that a high-score on the benchmark is sufficient when paired with an assumption on the underlying local error model.

The major upside of the white-noise RCS task is that one can give stronger evidence that it is classically hard to perform. For example, in the Supplementary Material of Ref. [4], it was shown that exactly (i.e. \(\eta = 0\)) sampling from \(p_{\text {wn}}\) (a task they called “unbiased noise F-approximate random circuit sampling”) in the worst case is a hard computational task in the sense that an efficient classical algorithm for it would cause the collapse of the polynomial hierarchy (\(\textsf {PH}{}\)), and further that its computational cost should be at most a factor of F smaller than sampling exactly from \(p_{\text {ideal}}\). In that spirit, we show in Theorem 4, in the appendix, that the more realistic task of sampling approximately from \(p_{\text {wn}}\) is essentially just as hard as sampling approximately from \(p_{\text {ideal}}\), up to a linear factor of F in the classical computational cost. This is important because some mild progress has been made toward establishing that approximately sampling from \(p_{\text {ideal}}\) is hard for the polynomial hierarchy, through a series of work that reduce the task of computing \(p_{\text {ideal}}(x)\) in the worst case to the task of computing \(p_{\text {ideal}}(x)\) in the average case up to some small error [21,22,23,24,25]. Weaknesses in this result as evidence for hardness of approximate sampling were discussed in more detail in Refs. [23, 26], but it remains true that the white-noise-centered definition of the computational task is the likeliest route to a more robust version of quantum computational supremacy that can be grounded in well-studied complexity theoretic principles.

Recently, Ref. [27] showed that in the regime of constant \(\epsilon =\Omega (1)\) local noise, the output of a typical random circuit can be classically sampled up to total variation distance error \(\delta \) in time \({{\,\textrm{poly}\,}}(n,1/\delta )\) whenever anti-concentration holds. This result is not in tension with our analysis since the runtime of their algorithm is exponential in \(1/\epsilon \) and thus exponential in n in the noise regime we study. The existence of their algorithm is further evidence that the assumption \(\epsilon = O(1/n)\) is necessary (and sufficient) for a successful hardness argument.

4.2 Convergence to uniform with circuit size

It is widely understood that incoherent and uncorrected unital noise in quantum circuits should typically lead the output of a quantum circuit to lose all correlation with the ideal circuit and become nearly uniform. It is further asserted that the decay to uniform should scale with the circuit size; however, rigorous results have only shown a decay in total variation distance to uniform with the circuit depth d, following the form \(e^{-\Omega (\epsilon d)}\). In particular, Ref. [28] showed that any (even non-random) circuit with interspersed local depolarizing noise approaches uniform at least this quickly. Later, Ref. [29] showed the same is true for any Pauli noise model, at least for most circuits chosen from a particular random ensemble. However, in Ref. [23], a stronger convergence at the rate of \(e^{-\Omega (\epsilon s)}\) in random quantum circuits like ours was desired in order to show a barrier on further improvements of their worst-to-average-case reduction for computing entries of \(p_{\text {ideal}}\). To that end, they showed that exponential convergence in circuit size occurs in a toy model where each layer of unitary evolution enacts an exact global unitary 2-design, and they conjectured the same is true in the local noise model we consider in this paper. Thus, our result in Theorem 2 gets close to providing the missing ingredient for their claim; for their application, we would need to extend our result to show \(e^{-\Omega (\epsilon s)}\) even in the regime where \(\epsilon = O(1)\), independent of n. However, recent work of Ref. [30] (which appeared roughly simultaneously with the first version of this work) casts doubt that this extension would be possible by showing a lower bound of \(e^{-O(\epsilon d)}\) in the regime where \(\epsilon = O(1)\). Our results are not in tension with theirs since our results apply only when \(\epsilon = O(1/n)\).

4.3 Signal extraction in noisy experiments

One implication of our work is that, in the parameter regime where our results apply, the signal from the noiseless random circuit experiment can be extracted by taking many samples. To illustrate this, suppose we are interested in some classical function f(x) for \(x\in [q]^n\) that takes values in the interval \([-1,1]\). Choosing x randomly from \(p_{\text {ideal}}\) induces a probability distribution over the resulting values of f(x). To understand this distribution empirically (e.g., estimate its mean or variance), samples \(x_i\) might be generated on a quantum device, but if the device is noisy, these samples will be drawn from \(p_{\text {noisy}}\) instead of \(p_{\text {ideal}}\). However, if \(p_{\text {noisy}}\approx p_{\text {wn}}\), then the sampled distribution over f(x) will be a mixture of the ideal with weight F, and the distribution that arises from uniform choice of x with weight \(1-F\). Supposing the latter is well understood, inferences can be made about the former by repetition. For example, if \(\sum _x p_{\text {ideal}}(x) f(x) = \mu = O(1)\) and \(\sum _x f(x)/q^n = 0\),Footnote 10 then the mean of f under samples from \(p_{\text {wn}}\) is \(F\mu \). Meanwhile, the standard deviation of f can be as large as O(1), indicating that \(O(1/F^2)\) samples from \(p_{\text {wn}}\) are required to compute the mean \(F\mu \) up to O(F) precision. Generally, this procedure requires knowing the value of F.

A concrete example of such a situation is the Quantum Approximate Optimization Algorithm (QAOA) [31], where samples x from the output of a parameterized quantum circuit are used to estimate the expectation of a classical cost function C(x). The parameters can then be varied to optimize the expected value of the cost function. Our work is for Haar-random local quantum circuits, which are, in a sense, very different from QAOA circuits. For example, the marginal of typical random circuits on any constant number of qubits is very close to maximally mixed, whereas QAOA circuits optimized for local cost functions will, by design, not have this property. Nevertheless, it is plausible that generic QAOA circuits might respond to local noise in a similar way as random quantum circuits. Indeed, in Refs. [32,33,34], numerical and analytic evidence was given for the conclusion that the expectation value of the cost function and its gradient with respect to the circuit parameters decay toward zero when local noise is inserted into a QAOA circuit. This behavior would be consistent with a stronger conclusion that the output is well described by \(p_{\text {wn}}\).

5 Summary of Method and Intuition

In this section, we present a heuristic argument about why the technical statements above should hold. Then we give an overview of how we actually show it using our method, which analyzes certain Markov processes derived from the quantum circuits, extending our previous work in Ref. [8].

5.1 Intuition behind error scrambling and error in white-noise approximation

Our result that \(p_{\text {noisy}}\) is very close to \(p_{\text {wn}}\) requires three conditions to be satisfied: (1) \(\epsilon ^2 s \ll 1\); (2) anti-concentration has been achieved, i.e. \(s \ge \Omega (n\log (n))\); and (3) \(\epsilon n\log (n) \ll 1\). Here, we try to motivate why these conditions should be sufficient and speculate about whether they are also necessary. In particular, we believe condition (3) can be significantly relaxed.

For simplicity, lets restrict to qubits (\(q=2\)). Let U denote the unitary enacted by the noiseless quantum circuit instance, so the ideal output state is the pure state \(\rho _{\text {ideal}} = U |{0^n}\rangle \!\langle {0^n}| U^{\dagger }\). If a location somewhere in the middle of the circuit experiences a Pauli error, then we could write the output state as \(U_2 P U_1 |{0^n}\rangle \!\langle {0^n}| U_1^{\dagger } P^\dagger U_2^{\dagger }\), where P is a Pauli operator with support on only one qubit, and \(U=U_2U_1\) is a decomposition of the unitary into gates that act before and after the error location. If we like, we can conjugate P so that it acts at the end of the circuit, giving \(O_PU |{0^n}\rangle \!\langle {0^n}| U^{\dagger }O_P^{\dagger }\) where \(O_P = U_2 P U_2^\dagger \). Unlike P, the operator \(O_P\) will likely have support over many qubits. Indeed, this is what we mean by scrambling; the portion of the circuit acting after the error location scrambles the local noise P into more global noise \(O_P\). We can handle error patterns E with multiple Pauli errors similarly, by commuting each to the end one at a time and forming an associated global noise operator \(O_E\).

Next, we expand the output quantum state \(\rho _{\text {noisy}}\) of the noisy circuit as a sum over all possible Pauli error patterns, weighted by the probability that each pattern occurs. Assuming the local noise is depolarizing, the probability of a pattern E depends only on the number of non-identity Pauli operators in the error pattern, denoted by |E|.

The classical probability distribution \(p_{\text {noisy}}\) is then given by \(p_{\text {noisy}}(x) = \langle x | \rho _{\text {noisy}} |x\rangle \) for each measurement outcome x. Observe that for the error pattern with \(|E| = 0\) (no errors), we have \(\text{\O }_E\rho _{\text {ideal}}O_E^{\dagger } = \rho _{\text {ideal}}\). There can be other error patterns for which \(O_E\rho _{\text {ideal}}O_E^{\dagger } = \rho _{\text {ideal}}\); for example, when a lone Pauli-Z error acts prior to any non-trivial gates, the state is unchanged since the initial state \(|0^n\rangle \) is an eigenstate of all the Pauli-Z operators. However, these error patterns are rare and for the sake of intuition we ignore this possibility. In essence, the white-noise assumption is the claim that when we take the mixture over output states for all of the error patterns, we arrive at a state \(\rho _{\text {err}}\) that produces measurement outcomes that are very close to uniform. (Note that in general \(\rho _{\text {err}}\) need not be close to maximally mixed to yield uniformly random measurement outcomes.) Letting \(F = (1-\epsilon )^{2\,s}\), we may write

where \(I/2^n\) denotes the maximally mixed state. This final term gives the deviations of the noisy output state \(\rho _{\text {noisy}}\) from a linear combination of the ideal state and \(I/2^n\).

This allows us to state more clearly the intuition for our result. Since the circuit is randomly chosen and scrambles the local error patterns, the operators \(O_E\) generally have large support and are essentially uncorrelated for different choices of error pattern E. Suppose we measure in the computational basis, and examine the probability of obtaining the outcome x. We can calculate the squared deviation between this value and the white-noise value under expectation over instance U.

where \(p_{E}(x) = \langle x |O_E \rho _{\text {ideal}} O_E^{\dagger } |x\rangle \). Suppose we now make the approximation that the quantities \(p_E(x)\) and \(p_{E'}(x)\), when considered as functions of the random instance U, are independently distributed unless \(E = E'\). Their mean is \(2^{-n}\) and, assuming anti-concentration (condition (2)), their standard deviation is \(O(2^{-n})\). Then we have

where the last line is true when \(\epsilon ^2 s \ll 1\). This implies that the deviation of each entry in the probability distribution \(p_{\text {noisy}}\) from the white-noise distribution is on the order of \(F2^{-n}\epsilon \sqrt{s}\), and since there are \(2^n\) entries, we have

In other words, the total variation distance is much smaller than F when \(\epsilon ^2 s \ll 1\), giving an intuitive reason for condition (1). Moreover, without condition (2), the contribution of each term would be much larger than \(O(2^{-2n})\), which illustrates why condition (2) is necessary.

The key step in this analysis was the assumption of independence between \(p_E\) and \(p_{E'}\) when \(E \ne E'\). This is only approximately true; indeed for a circuit that does not scramble errors, this will be a bad approximation because it might be common to have different error patterns E, \(E'\) that produce the same (or approximately the same) effective error \(O_E = O_{E'}\). However, for random quantum circuits, this outcome is unlikely for the vast majority of error pairs. Our rigorous proof, later, might be regarded as a justification of this intuition above.

Condition (3) is more subtle to motivate. In our analysis we require \(\epsilon \ll 1/(n\log (n))\) so that the chance an error occurs while the circuit is still anti-concentrating, which takes \(\Omega (n\log (n))\) gates, is small. This is helpful in the analysis because it allows us to essentially ignore the possibility that an error P occurs near the beginning or end of the circuit, where there is insufficient time to scramble the error (either forward or backward in time). However, a finer-grained analysis might be able to handle these kinds of errors: we believe condition (3) can be improved from \(\epsilon ^{-1} \gg \Omega (n\log (n)) = {\tilde{\Omega }}(n)\) to simply \(\epsilon ^{-1} \ge n/c\) for some constant c that depends only on the architecture (1D vs. complete-graph etc.). However, we do not believe that improvement beyond this point would be possible; there is a fundamental barrier that requires \(\epsilon \) to scale as O(1/n).

The reason for this is essentially that if the white-noise approximation is to hold, the errors need to be scrambled at least as fast as they appear. The probability of an errorless computation F decreases like \((1-\epsilon )^{2\,s} = \exp (-2\,s\epsilon - O(s\epsilon ^2))\), so each layer of O(n) gates causes a decrease by a factor \(\exp (-\Theta (n\epsilon ))\). Recall that we demand that the total variation distance between \(p_{\text {noisy}}\) and \(p_{\text {wn}}\) be much smaller than F, so as F decreases, this condition becomes increasingly stringent. Meanwhile, scrambling is fundamentally happening at the rate of increasing circuit depth, not size. One way to see this is simply that local Pauli errors P that appear at a certain circuit location are expected to be scrambled into larger operators that grow ballistically with the depth [35, 36]; each layer of O(n) gates yields a constant amount of operator growth. Another way to see this is to consider a pair of error patterns E and \(E'\), where E consists of a single Pauli error on qudit j at layer d and \(E'\) consists of a single Pauli error on qudit j at layer \(d + \Delta \). The correlation between \(p_E(x)\) and \(p_{E'}(x)\), as a function of the random instance U, which is roughly speaking the chance that the random circuit transforms the first error into something resembling the second error, will decay exponentially with \(\Delta \), the separation in depth between the two errors.Footnote 11 Yet a third way to see this fact is to notice that, after a circuit has initially reached anti-concentration, convergence of the collision probability \(Z={\mathbb {E}}_U[\sum _x p_{\text {ideal}}(x)^2]\) to its limiting value \(Z_H\) occurs like \(Z = Z_H + O(Z_H)\exp (-O(s/n))\) [8]. Each additional layer of O(n) gates only decreases the deviation of Z from \(Z_H\) by a constant factor. The terms \({\mathbb {E}}_U[(p_E - 2^{-n})(p_{E'}-2^{-n})]\) for \(E \ne E'\) that were ignored above are expected to obey a similar kind of decay to the value 0 for most choices of \((E,E')\), but if F is decaying too fast, we are not able to neglect these terms. Each layer of O(n) gates must incur at most a constant-factor decay of F to not exceed the rate of scrambling; equivalently, \(n\epsilon < c\) must hold for some constant c.

5.2 Noisy random quantum circuits as a stochastic process

Our method is a manifestation of the “stat mech method” for random quantum circuits, developed in Refs. [35,36,37,38] and further utilized in Refs. [8, 9, 19, 26, 39,40,41,42,43,44], whereby averages over k copies of random quantum circuits are mapped to partition functions of classical statistical mechanical systems. The mapping for \(k=2\), corresponding to second-moment quantities, is particularly simple and amenable to analysis [8, 26, 38, 39].

In Ref. [8], we analyzed the collision probability \(Z = {\mathbb {E}}_U[\sum _x p_{\text {ideal}}(x)^2]\), a second-moment quantity, using the stat mech method, although we found it more useful to interpret the result as the expectation value of a certain stochastic process, rather than as a partition function. As we will see, this work is essentially an extension of the analysis in Ref. [8] to account for the action of the single-qudit noise channels \({\mathcal {N}}\) that act after two-qudit gates. We explain the steps in this analysis below, and leave the formal proofs for the appendices.

We also mention that a number of works [16, 45,46,47,48,49,50,51,52] study noiseless random quantum circuits using a distinct technique that also maps certain second-moment quantities to a stochastic process; however, we emphasize that this results in a different stochastic process than the one studied here, and extending it to noisy random quantum circuits would require a distinct analysis.

Expressing the total variation distance in terms of second-moment quantities

To apply this method, the first step is to express \(\frac{1}{2}\Vert p_{\text {noisy}}-p_{\text {wn}}\Vert _1\) in terms of second-moment quantities. To do so, we use the general 1-norm to 2-norm bound: when \(p_1\) and \(p_2\) are vectors on a \(q^n\)-dimensional vector space, then

where \(\Vert p_1-p_2 \Vert _2 = \sqrt{\sum _x (p_1(x)-p_2(x))^2}\). Applying this identity with \(p_1 = p_{\text {wn}}\) and \(p_2 = p_{\text {noisy}}\) and invoking Jensen’s inequality for the concave function \(\sqrt{\cdot }\), we find

Now we can expand

where

are second-moment quantities (the second equality holds since by symmetry each term in the sum has the same value under expectation), with \(Z_w\) containing w copies of the noisy output and \(2-w\) copies of the ideal output for each \(w \in \{0,1,2\}\). Note that \(Z_0 =q^n Z\) with Z the collision probability studied in Refs. [8, 16]. Furthermore, note that F is a free parameter, and we may choose it so that it minimizes the right-hand sideFootnote 12 of Eq. (38), which occurs when

matching the definition for \({\bar{F}}\) in Eq. (14). Plugging in \(F={\bar{F}}\) yields

Mapping second-moment quantities to stochastic processes

We bound the quantities \(Z_0\), \(Z_1\), and \(Z_2\) by mapping them to stochastic processes. These stochastic processes are the same as the stochastic process we studied in Ref. [8], except that the noise channels introduce slightly modified transition rules, as we now discuss.

Second moment quantities include two copies of each random unitary gate in the circuit. The idea in Ref. [8] was to perform the expectation over the two copies of each gate independently, using Haar-integration techniques. For a density matrix \(\rho \) on two copies of a Hilbert space of dimension q, let

where \({\mathbb {E}}_V\) denotes expecation over choice of V from the Haar measure over \(q \times q\) matrices. Then, we have the following well-known formula (for which a derivation is provided in Ref. [8])

where I is the identity operation and S is the swap operation on two copies of the single-qudit system. The equation above states that, after Haar averaging, the state of the system is simply a linear combination of identity and swap, with certain coefficients that can be readily calculated. For an n-qudit system acted upon by a sequence of single and two-qudit gates, this formula can be applied sequentially to each gate. After t gates have been applied, the Haar-averaged state of the system can be expressed as a linear combination of n-fold tensor products of I and S (e.g. for \(n=3\), the state would be given by \(c_1 I \otimes I \otimes I + c_2 I \otimes I \otimes S + c_3 I \otimes S \otimes I + \ldots +c_8 S \otimes S \otimes S\)).

The important takeaway from Ref. [8] was to interpret the coefficients of these \(2^n\) terms as probabilities of a certain stochastic process over the set of length-n bit strings \(\{I,S\}^n\), which were called “configurations.” The stochastic process generates a sequence of \(s+1\) configurations \(\gamma ={(\vec {\gamma }^{(0)},\ldots ,\vec {\gamma }^{(s)})}\), which was called a “trajectory,” where the probabilistic transition from \(\vec {\gamma }^{(t-1)}\) to \(\vec {\gamma }^{(t)}\) depends only on the value of \(\vec {\gamma }^{(t-1)}\) (Markov property).

The transition rules of the stochastic process are calculated by computing the coefficients in Eq. (45); here we state the resultFootnote 13 of that calculation; more details can be found in Appendix A.1. First of all, the initial configuration \(\vec {\gamma }^{(0)}\) is chosen at random by independently choosing each of the n bits to be I with probability \(q/(q+1)\) and S with probability \(1/(q+1)\). Then, for each time step t, if the tth gate acts on qudits \(i_t\) and \(j_t\), then the transition from \(\vec {\gamma }^{(t-1)}\) to \(\vec {\gamma }^{(t)}\) can involve a bit flip at position \(i_t\), at position \(j_t\), or neither (but not at both), and no bit can flip at any other position. Moreover, \({\gamma _{i_t}^{(t)}}={\gamma _{j_t}^{(t)}}\) must hold, so if \({\gamma _{i_t}^{(t-1)}} \ne {\gamma _{j_t}^{(t-1)}}\), then one of the two bits must be flipped. In this situation, when one bit is assigned I and one is assigned S, the S is flipped to I with probability \(q^2/(q^2+1)\), and the I is flipped to S with probability \(1/(q^2+1)\). Thus, there is a bias toward making more of the assignments I. The quantity \(Z_0\) is given exactly by the expectation value of the quantity \(q^{|\vec {\gamma }^{(s)}|}\) when trajectories \(\gamma \) are generated in this fashion, where \(|\vec {\nu }|\) denotes the Hamming weight of the bit string \(\vec {\nu }\), that is, the number of S assignments out of n.

where here \({\mathbb {E}}_0\) denotes evolution by the stochastic process described above.

With the stochastic process now defined, a vital observation is that the process has two fixed points, the \(I^n\) configuration and the \(S^n\) configuration, since whenever all the bits agree, none can be flipped. In Ref. [8], we could precisely compute the fraction of the probability mass that eventually reaches each of these fixed points if the circuit is infinitely long. Specifically, \(q^n/(q^n+1)\) of the probability mass converges to \(I^n\) and \(1/(q^n+1)\) converges to \(S^n\).Footnote 14 Then, since the \(S^n\) fixed point receives a weighting of \(q^n\) and the \(I^n\) fixed point receives a weighting of 1 in Eq. (46), we find that \(Z_0 \rightarrow 2q^n/(q^n+1)\).

Noise introduces new rules into this stochastic process. Suppose the configuration immediately after the tth two-qudit gate is \(\vec {\nu }\), and a noise channel \({\mathcal {N}}\) acts on qudit \(i_t\). Since the noise channel is unital, if \(\nu _{i_t} = I\), representing the identity operator on a two-qudit system, then the configuration is left unchanged. However, if \(\nu _{i_t} = S\), then the action of the noise may cause a flip from S to I. For the calculation of \(Z_0\), there is no noise, so this happens with probability 0. For the calculation of \(Z_1\), where there is one copy of the noisy distribution and one copy of the ideal, we can again use the formula in Eq. (45) to compute the \(S \rightarrow I\) transition probability to be \(rq/(q-1)\), where r is the average infidelity given in Eq. (3). This is explained in Appendix A.2. For \(Z_2\), where there are two copies of the noisy distribution, the probability of an \(S \rightarrow I\) transition is calculated to be \(1-u\), where u is the unitarity of the noise channel given in Eq. (4). The values of \(Z_1\) and \(Z_2\) are thus given by

where \({\mathbb {E}}_\sigma \) denotes the stochastic process where \(S\rightarrow I\) bit flips occur at each noise location with probability \(\sigma \), generalizing Eq. (46).

Since noise can flip an S to an I but not vice versa, \(I^n\) is the only fixed point of the stochastic processes for \(Z_1\) and \(Z_2\); the \(S^n\) fixed point is only metastable: eventually, the action of noise will flip one of the S bits to an I, and the trajectory might re-equilibrate to the \(I^n\) fixed point. Our analysis consists of a careful accounting of the leakage of probability mass away from the metastable \(S^n\) fixed point.

Analyzing the stochastic processes for a toy example

Now, we consider a toy example which captures the essence of our analysis. Suppose a circuit consists of alternating rounds of (1) a global Haar-random transformation and (2) a depolarizing noise channel on a single qudit, as depicted in Fig. 2. Step (1) can be approximately accomplished by performing a very large number of two-qudit gates.

Toy example where global Haar-random gates \(U^{(t)}\) act in between a depolarizing noise channel on a single qudit. In this model we can exactly compute quantities \(Z_0\), \(Z_1\), and \(Z_2\) because the global Haar-random gates cause the probability mass in the stochastic process to fully re-equilibrate to one of the fixed points, \(I^n\) or \(S^n\)

This model is similar to the toy model considered in Ref. [23] (the difference being that they considered single-qudit noise channels on all n qudits in step (2)), which they analyzed using the Pauli string method of Refs. [45, 46].

The initial global Haar-random transformation induces perfect equilibration to the two fixed points, with \(q^n/(q^n+1)\) mass reaching the \(I^n\) fixed point and \(1/(q^n+1)\) mass reaching the (metastable) \(S^n\) fixed point. This is already sufficient to compute \(Z_0-1\), which is not sensitive to the noise.

Now suppose we want to calculate \(Z_1\). Consider a piece of probabiltiy mass that is part of the \(1/(q^n+1)\) fraction at the \(S^n\) fixed point. The single-qudit depolarizing noise channel will flip one of the S assignments to an I assignment with probability \(rq/(q-1) = \epsilon (1-q^{-2})^{-1}\). If this happens, there are \(n-1\) S assignments and 1 I assignment. While it may seem that this new configuration is still close to the \(S^n\) fixed point, we must remember that the random walk is biased in the I direction. When we perform the next global Haar-random transformation, we get perfect re-equilibration back to the two fixed points; with probability \(\frac{1-q^{-2}}{1-q^{-2n}}\) we end at the \(I^n\) fixed point, and with probability \(\frac{q^{-2}-q^{-2n}}{1-q^{-2n}}\) we end at the \(S^n\) fixed point. These probabilities were derived in Ref. [8], and are a basic consequence of Eq. (45). Now, the total mass that remains at the \(S^n\) fixed point is the \(\frac{1}{q^n+1}(1-\frac{\epsilon }{1-q^{-2}})\) that never left and the \(\frac{\epsilon }{1-q^{-2}}\frac{q^{-2}-q^{-2n}}{1-q^{-2n}}\) that left and returned, which comes out to \(\frac{1}{q^n+1}(1-\frac{\epsilon }{1-q^{-2n}})\). After 2s single-qudit error channels have been applied, the probability mass remaining at the \(S^n\) fixed point is precisely

This mass receives weighting of \(q^n\) toward \(Z_1\). Meanwhile the rest of the mass is at the \(I^n\) fixed point and receives weighting of 1. This tells us that

We see that in this toy model, the quantity \({\bar{F}} = (Z_1-1)/(Z_0-1)\) is precisely given by the fraction of probability mass originally destined for the \(S^n\) fixed point that remains at the \(S^n\) fixed point even after the noise locations have acted. Thus, the leakage of probability mass from \(S^n\) to \(I^n\) in the calculation of \(Z_1\) corresponds exactly to the decay of the linear cross-entropy benchmark.

Calculating \(Z_2-1\) is just as easy. Here transitions due to noise occur with probability \(1-u\) where u is the unitarity of the noise channel. For depolarizing noise, we have \(1-u = 2\epsilon (1-q^{-2})^{-1} - O(\epsilon ^2)\), so \(Z_2-1\) is the same as \(Z_1-1\) with the replacement \(\epsilon \rightarrow 2\epsilon - O(\epsilon ^2)\), giving

We can plug these calculations into Eq. (43) to find that

Extending the analysis to a full proof

In the proofs of our theorems, the difficulty is that the probability mass does not fully equilibrate to a fixed point before the next error location acts. Nonetheless, we manage to calculate tight bounds on \(Z_1\) and \(Z_2\) by keeping track of the amount of probability mass that would re-equilibrate back to \(S^n\) and \(I^n\) if the rest of the gates were noiseless, which we refer to as S-destined and I-destined probability mass. We show that, as long as \(\epsilon < c/n\) for some constant c, the S-destined probability mass is exponentially clustered near the \(S^n\) fixed point in the sense that the probability of being x bit flips away from \(S^n\) conditioned on being S-destined decays exponentially in x. Thus, for a piece of S-destined probability mass, nearly all the bits will be assigned S, and the action of a noise channel reduces the amount of S-destined mass by a factor of roughly \(1-\epsilon \). To see why exponential clustering of S-destined mass is necessary, suppose that this were not the case, and that at a certain point in the evolution, a considerable fraction of the S-destined probability mass has a constant fraction r of its bits assigned I. Then, if a noise channel acts on a random bit, the probability that the bit is already assigned I is equal to r, in which case the noise has no impact on the configuration. With probability \(1-r\), the bit will be assigned S, and the noise will cause a fraction roughly equal to \(1-\epsilon \) of the S-destined probability mass to become I-destined. Thus, the fraction of probability mass that remains S-destined after the noise channel would be roughly \(1-(1-r)\epsilon \), which is larger than \(1-\epsilon \) by an \(\Omega (\epsilon )\) amount. In this scenario, there would be significantly slower leakage from the \(S^n\) fixed point to the \(I^n\) fixed point, and we would not be able to assert that Eq. (50) is approximately true, ruining the delicate analysis that require \(Z_1-1\) and \(Z_2-1\) to have very precise rates of decay with s.

The reason \(\epsilon < c/n\) is required for the exponential clustering effect is that errors need to be rare enough for the S-destined mass to mostly re-equilibrate back to \(S^n\) before new errors pop up; to say it another way, the errors must get scrambled at a faster rate than they appear. If a configuration has \(n-1\) S assignments and 1 I assignment, it will take \(\Theta (n)\) gates before the single I-assigned qudit participates in a gate. Thus, if errors occur at a slower rate than one per \(\Theta (n)\) gates, full re-equilibration will happen before a new error pops up most of the time. It is not clear if this condition is truly necessary for the clustering statement to hold, but we show at the very least that it is sufficient.

However, we need \(\epsilon < c/n\) to hold for another (related) reason: the leakage from \(S^n\) to \(I^n\) must occur more slowly than the anti-concentration rate, which corresponds to the speed at which the probability mass initially equilibrates to \(I^n\) and \(S^n\). After all, even though the stochastic process is I-biased, the I-destined mass does not make it to the \(I^n\) fixed point instantaneously. After s gates, there will be some residual contribution from the not-yet-equilibrated I-destined mass to the calculation of quantities \(Z_0-1\), \(Z_1-1\), and \(Z_2-1\); this contribution decays by a constant factor with every additional O(n) gates. If \(\epsilon =O(1/n)\), a constant fraction of the S-destined mass will leak away with each set of O(n) gates, and if the constant prefactor on this leakage is too large, the I-destined mass will contribute more than the S-destined mass to the expectation values; as a result, the right-hand-side of Eq. (43) will not exhibit the same kind of cancellations observed for the toy example.

In our formal analysis, we actually assume something even stronger: we require that \(\epsilon \ll 1/(n\log (n))\), which essentially means that very few errors occur during the initial anti-concentration period. However, this is done to make the analysis easier, and we do not believe this condition is necessary.

6 Numerical Estimates of Error in White-Noise Approximation

In principle, it would be possible to determine the constant factors under the big-O notation in our proofs, but the result of this exercise would likely yield extremely unfavorable numbers due to our lack of optimization throughout, and the fact that it might be possible to eliminate some of the terms in our error expression altogether with a more fine-grained analysis. The goal of this section is to provide a numerical assessment of the bound on the error in the white-noise approximation for realistic values of the circuit parameters. We find that realistic NISQ-era values of the circuit parameters can lead to a small upper bound on the white-noise approximation error, even for circuits with several thousand gates, but we confirm that the noise rate needs to decrease like O(1/n) as the system size scales up for our upper bound to be meaningful.

6.1 Numerical method

The numerics we present are for the complete-graph architecture. In general, the stochastic process underlying our method (described in Sect. 5.2 and presented formally in the appendix) is a random walk over \(2^n\) possible configurations of a length-n bit string. However, for the complete-graph architecture there is an equivalence between all configurations with the same Hamming weight. Thus, the state space for the stochastic process is reduced to \(n+1\) distinct groups of configurations (associated with Hamming weights \(0,1,\ldots ,n\)). The quantities \(Z_0\), \(Z_1\), and \(Z_2\), as defined in Eqs. (39), (40), and (41) can then be precisely computed by multiplying the (sparse) \((n+1) \times (n+1)\) transition matrices for the stochastic process. This allows us to compute the right-hand-side of Eq. (43) for n substantially large, giving a bound on \({\mathbb {E}}_U[\frac{1}{2}\Vert p_{\text {noisy}}-p_{\text {wn}}\Vert _1]\).

In our analysis below, we suppose all noise locations are subject to depolarizing noise with error probability \(\epsilon \), given as in Eq. (5). We also restrict to \(q=2\) (qubits). We do not model readout errors, which are a large source of error in the actual experiments of Refs. [4,5,6]. We plug in specifications \((n,\epsilon ,s)\) and exactly compute the quantity

which gives the ratio of the bound in Eq. (43) to the normalized linear cross-entropy metric \({\bar{F}}\).

6.2 Numerical bound for realistic circuit parameters

We first examine the bound using the circuit parameters of existing experimental setups. The Google experiment [4] ran \(s=430\) gates on their \(n=53\) qubit processor called Sycamore, and their error rate per cycle, which is the analogous quantity to the total error in a two-qubit gate in our setup, was reported to be \(0.9\%\). This corresponds to \(\epsilon \approx 0.0045\) in our model where separate noise channels act on each of the two qubits. Meanwhile, the largest experiment from USTC [6] ran \(s=594\) gates on their \(n=60\) qubit processor called Zuchongzhi, with a similar overall error rate per cycle. In Fig. 3, we plot the numerically calculated bound on \(\frac{1}{{\bar{F}}}{\mathbb {E}}_U[\frac{1}{2}\Vert p_{\text {noisy}}-p_{\text {wn}}\Vert _1]\) as a function of circuit size for complete-graph circuits with \(n=53\) and \(n=60\) at \(\epsilon =0.0045\). The circuit sizes \(s=430\) and \(s=594\) appear as large dots.

Plot of the numerically calculated upper bound on the expected total variation distance between \(p_{\text {noisy}}\) and \(p_{\text {wn}}\) divided by F for a complete-graph version of recent random quantum circuit experiments by Google (53 qubits) [4] and USTC (60 qubits) [6]. The large dots represent the circuit sizes (number of two-qubit gates) implemented in those experiments. The dotted black line is the function \(2\epsilon \sqrt{s}/3\) for each experiment