Abstract

We study a general class of interacting particle systems called kinetically constrained models (KCM) in two dimensions. They are tightly linked to the monotone cellular automata called bootstrap percolation. Among the three classes of such models (Bollobás et al. in Combin Probab Comput 24(4):687–722, 2015), the critical ones are the most studied. Together with the companion paper by Marêché and the author (Hartarsky and Marêché in Combin Probab Comput 31(5):879–906, 2022), our work determines the logarithm of the infection time up to a constant factor for all critical KCM. This was previously known only up to logarithmic corrections (Hartarsky et al. in Probab Theory Relat Fields 178(1):289–326, 2020, Ann Probab 49(5):2141–2174, 2021, Martinelli et al. in Commun Math Phys 369(2):761–809, 2019). We establish that on this level of precision critical KCM have to be classified into seven categories. This refines the two classes present in bootstrap percolation (Bollobás et al. in Proc Lond Math Soc (3) 126(2):620–703, 2023) and the two in previous rougher results (Hartarsky et al. in Probab Theory Relat Fields 178(1):289–326, 2020, Ann Probab 49(5):2141–2174, 2021, Martinelli et al. in Commun Math Phys 369(2):761–809, 2019). In the present work we establish the upper bounds for the novel five categories and thus complete the universality program for equilibrium critical KCM. Our main innovations are the identification of the dominant relaxation mechanisms and a more sophisticated and robust version of techniques recently developed for the study of the Fredrickson-Andersen 2-spin facilitated model (Hartarsky et al. in Probab Theory Relat Fields 185(3):993–1037, 2023).


1 Introduction

Kinetically constrained models (KCM) are interacting particle systems. They have challenging features including non-ergodicity, non-attractiveness, hard constraints, cooperative dynamics and dramatically diverging time scales. These features prevent the use of conventional mathematical tools in the field.

KCM originated in physics in the 1980s [12, 13] as toy models for the liquid-glass transition, which is still a hot and largely open topic for physicists [3]. The idea behind them is that one can induce glassy behaviour without the intervention of static interactions, disordered or not, but rather with simple kinetic constraints. The latter translate the phenomenological observation that at high density particles in a super-cooled liquid become trapped by their neighbours and require a scarce bit of empty space in order to move at all. We direct the reader interested in the motivations of these models and their position in the landscape of glass transition theories to [3, 14, 35].

Bootstrap percolation is the natural monotone deterministic counterpart of KCM (see [34] for an overview). Nevertheless, the two subjects arose for different reasons and remained fairly independent until the late 2000s. That is when the very first rigorous results for KCM came to be [9], albeit much less satisfactory than their bootstrap percolation predecessors. The understanding of these two closely related fields did not truly unify until the recent series of works [20–22, 24, 30–32] elucidating the common points, as well as the serious additional difficulties in the non-monotone stochastic setting. The present paper accomplishes the goal of this series.

1.1 Models

We now define the class of \(\mathcal U\)-KCM introduced in [9]. In \(d\geqslant 1\) dimensions an update family is a nonempty finite collection of finite nonempty subsets of \({\mathbb Z} ^d\setminus \{0\}\) called update rules. The \(\mathcal U\)-KCM is a continuous time Markov chain with state space \({\Omega }=\{0,1\}^{{\mathbb Z} ^d}\). Given a configuration \({\eta }\in {\Omega }\), we write \({\eta }_x\) for the state of \(x\in {\mathbb Z} ^d\) in \({\eta }\) and say that x is infected (in \({\eta }\)) if \({\eta }_x=0\). We write \({\eta }_A\) for the restriction of \({\eta }\) to \(A\subset {\mathbb Z} ^d\) and \(\textbf{0}_A\) for the completely infected configuration, with A omitted when it is clear from the context. We say that the constraint at \(x\in {\mathbb Z} ^d\) is satisfied if there exists an update rule \(U\in \mathcal U\) such that \(x+U=\{x+y:y\in U\}\) is fully infected. We denote the corresponding indicator by

$$\begin{aligned} c_x({\eta })={\mathbb {1}} _{\exists U\in \mathcal U,\,{\eta }_{x+U}=\textbf{0}}. \end{aligned}$$

The final parameter of the model is its equilibrium density of infections \(q\in [0,1]\). We denote by \({\mu }\) the product measure such that \({\mu }({\eta }_x=0)=q\) for all \(x\in {\mathbb Z} ^d\) and by \({\text {Var}}\) the corresponding variance. Given a finite set \(A\subset {\mathbb Z} ^d\) and real function \(f:{\Omega }\rightarrow {\mathbb R} \), we write \({\mu }_A(f)\) for the average \({\mu }(f({\eta })|{\eta }_{{\mathbb Z} ^d\setminus A})\) of f over the variables in A. We write \({\text {Var}}_A(f)\) for the corresponding conditional variance, which is thus also a function from \({\Omega }_{{\mathbb Z} ^d\setminus A}\) to \({\mathbb R} \), where \({\Omega }_B=\{0,1\}^B\) for \(B\subset {\mathbb Z} ^d\).

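With this notation the \(\mathcal U\)-KCM can be formally defined via its generator

$$\begin{aligned} \mathcal L(f)({\eta })=\sum _{x\in {\mathbb Z} ^d}c_x({\eta })\cdot \left( {\mu }_x(f)-f\right) ({\eta }) \end{aligned}$$

and its Dirichlet form reads

$$\begin{aligned} \mathcal D(f)=\sum _{x\in {\mathbb Z} ^d}{\mu }\left( c_x\cdot {\text {Var}}_x(f)\right) , \end{aligned}$$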

where \({\mu }_x\) and \({\text {Var}}_x\) are shorthand for \({\mu }_{\{x\}}\) and \({\text {Var}}_{\{x\}}\). Alternatively, the process can be defined via a graphical representation as follows (see [28] for background). Each site \(x\in {\mathbb Z} ^d\) is endowed with a standard Poisson process called clock. Whenever the clock at x rings we assess whether its constraint is satisfied by the current configuration. If it is, we update \({\eta }_x\) to an independent Bernoulli variable with parameter \(1-q\) and call this a legal update. If the constraint is not satisfied, the update is illegal, so we discard it without modifying the configuration. It is then clear that \({\mu }\) is a reversible measure for the process (there are others, e.g. the Dirac measure on the fully non-infected configuration \(\textbf{1}\)).
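The graphical representation lends itself to a direct simulation. The following is a minimal sketch rather than anything taken from the paper: it assumes \(d=2\), replaces \({\mathbb Z} ^2\) by an \(n\times n\) torus, and uses the hypothetical names `constraint_satisfied`, `simulate_kcm` and the example family `FA1F` (single nearest neighbours), all chosen here purely for illustration.

```python
import random

# Illustrative update family (an assumption): each rule is a single nearest neighbour.
FA1F = [{(1, 0)}, {(-1, 0)}, {(0, 1)}, {(0, -1)}]

def constraint_satisfied(eta, x, update_family, n):
    """True if some rule U has x+U fully infected (state 0) on the n x n torus."""
    return any(
        all(eta[((x[0] + dx) % n, (x[1] + dy) % n)] == 0 for dx, dy in rule)
        for rule in update_family
    )

def simulate_kcm(update_family, q, n, t_max, rng):
    """Run the clock-based dynamics up to time t_max and return the configuration."""
    sites = [(i, j) for i in range(n) for j in range(n)]
    # Stationary start: each site is infected (0) independently with probability q.
    eta = {x: 0 if rng.random() < q else 1 for x in sites}
    t = 0.0
    while True:
        # Superposition of n*n rate-1 Poisson clocks: the next ring comes after an
        # exponential time of rate n*n and happens at a uniformly chosen site.
        t += rng.expovariate(len(sites))
        if t > t_max:
            return eta
        x = rng.choice(sites)
        if constraint_satisfied(eta, x, update_family, n):
            # Legal update: resample to Bernoulli(1-q), i.e. non-infected w.p. 1-q.
            eta[x] = 1 if rng.random() < 1 - q else 0
        # Otherwise the ring is illegal and the configuration is left unchanged.

final = simulate_kcm(FA1F, q=0.2, n=8, t_max=10.0, rng=random.Random(0))
```

Starting from the fully non-infected configuration (here obtained with `q=0`), no constraint is ever satisfied, which illustrates why the Dirac measure on \(\textbf{1}\) is also invariant.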

Our regime of interest is \(q\rightarrow 0\), corresponding to the low temperature limit relevant for glasses. A quantitative observable, measuring the speed of the dynamics, is the infection time of 0

$$\begin{aligned} {\tau }_0=\inf \left\{ t\geqslant 0:{\eta }_0(t)=0\right\} , \end{aligned}$$

where \(({\eta }(t))_{t\geqslant 0}\) denotes the \(\mathcal U\)-KCM process. More specifically, we are interested in its expectation for the stationary process \({\mathbb E} _{{\mu }}[{\tau }_0]\), namely the process with random initial condition distributed according to \({\mu }\). This quantifies the equilibrium properties of the system and is closely related e.g. to the more analytic quantity called relaxation time (i.e. inverse of the spectral gap of the generator) that the reader may be familiar with.

\(\mathcal U\)-bootstrap percolation is essentially the \(q=1\) case of \(\mathcal U\)-KCM started out of equilibrium, from a product measure with \(q_0\rightarrow 0\) density of infections. More conventionally, it is defined as a synchronous cellular automaton, which updates all sites of \({\mathbb Z} ^d\) simultaneously at each discrete time step, by infecting sites whose constraint is satisfied and never removing infections. As the process is monotone, it may alternatively be viewed as a growing subset of the grid generated by its initial condition. We denote by \([A]_\mathcal U\) the set of sites eventually infected by the \(\mathcal U\)-bootstrap percolation process with initial condition \(A\subset {\mathbb Z} ^d\), that is, the sites which can become infected in the \(\mathcal U\)-KCM in finite time starting from \({\eta }(0)=({\mathbb {1}} _{x\not \in A})_{x\in {\mathbb Z} ^d}\). Strictly speaking, other than this notation, bootstrap percolation does not feature in our proofs, but its intuition and techniques are omnipresent. On the other hand, some of our intermediate results can translate directly to recover some bootstrap percolation results of [7, 8].
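For intuition, the closure \([A]_\mathcal U\) can be approximated on a finite box by repeatedly infecting sites whose constraint is satisfied. The sketch below is only an illustration under stated assumptions: sites outside the box are treated as never infected (so the output is a subset of the true closure), and the names `bootstrap_closure` and `TWO_NEIGHBOUR` (all pairs of nearest neighbours) are hypothetical choices made here. Sites are updated one at a time rather than synchronously, which by monotonicity does not change the final set.

```python
from itertools import combinations

# Illustrative update family (an assumption): all pairs of distinct nearest neighbours.
NEIGHBOURS = [(1, 0), (-1, 0), (0, 1), (0, -1)]
TWO_NEIGHBOUR = [set(pair) for pair in combinations(NEIGHBOURS, 2)]

def bootstrap_closure(initial, update_family, box):
    """Sites of `box` eventually infected from `initial`, ignoring sites outside `box`."""
    box = set(box)
    infected = set(initial) & box
    changed = True
    while changed:
        changed = False
        for x in box:
            if x in infected:
                continue
            # Infect x if some rule U has x+U entirely infected; never remove infections.
            if any(
                all((x[0] + dx, x[1] + dy) in infected for dx, dy in rule)
                for rule in update_family
            ):
                infected.add(x)
                changed = True
    return infected

box = [(i, j) for i in range(3) for j in range(3)]
diagonal = [(0, 0), (1, 1), (2, 2)]
closure = bootstrap_closure(diagonal, TWO_NEIGHBOUR, box)
```

In this example the diagonal of the \(3\times 3\) box infects the whole box, while a single infected site stays alone.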

1.2 Universality setting

We direct the reader to the companion paper by Marêché and the author [20], a monograph of Toninelli and the author [26] and the author’s PhD thesis [19, Chap. 1], for comprehensive background on the universality results for two-dimensional KCM and their history. Instead, we provide a minimalist presentation of the notions we need. The definitions in this section were progressively accumulated in [7, 8, 15, 20, 22, 31] and may differ in phrasing from the originals, but are usually equivalent thereto (see [20] for more details).

Henceforth, we restrict our attention to models in two dimensions. The Euclidean norm and scalar product are denoted by \(\Vert \cdot \Vert \) and \(\langle \cdot ,\cdot \rangle \), and distances are w.r.t. \(\Vert \cdot \Vert \). Let \(S^1=\{x\in {\mathbb R} ^2:\Vert x\Vert =1\}\) be the unit circle consisting of directions, which we occasionally identify with \({\mathbb R} /2\pi {\mathbb Z} \) in the standard way. We denote the open half plane with outer normal \(u\in S^1\) and offset \(l\in {\mathbb R} \) by

$$\begin{aligned} {\mathbb H} _u(l)=\left\{ x\in {\mathbb R} ^2:\langle x,u\rangle <l\right\} \end{aligned}$$

and omit l if it is 0. We further denote its closure by \({\overline{{\mathbb H} }}_u(l)\), omitting zero offsets. We often refer to continuous sets such as \({\mathbb H} _u\), but whenever talking about infections or sites in them, we somewhat abusively identify them with their intersections with \({\mathbb Z} ^2\) without further notice.

Fix an update family \(\mathcal U\).

Definition 1.1 (Stability). A direction \(u\in S^1\) is unstable if there exists \(U\in \mathcal U\) such that \(U\subset {\mathbb H} _u\) and stable otherwise.

It is not hard to see that unstable directions form a finite union of finite open intervals in \(S^1\) [8, Theorem 1.10]. We say that a stable direction is semi-isolated (resp. isolated) if it is the endpoint of a nontrivial (resp. trivial) interval of stable directions.
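Definition 1.1 can be checked mechanically for a given direction. The following is a small sketch under assumptions made here: the function name `is_unstable`, the example family `TWO_NEIGHBOUR` and the floating-point tolerance `tol` (used to keep boundary cases on the stable side despite rounding) are illustrative and not part of the paper.

```python
import math
from itertools import combinations

# Illustrative update family (an assumption): all pairs of distinct nearest neighbours.
NEIGHBOURS = [(1, 0), (-1, 0), (0, 1), (0, -1)]
TWO_NEIGHBOUR = [set(pair) for pair in combinations(NEIGHBOURS, 2)]

def is_unstable(u, update_family, tol=1e-12):
    """u is unstable if some rule U lies in the open half plane {x : <x, u> < 0}."""
    return any(
        all(x * u[0] + y * u[1] < -tol for x, y in rule)
        for rule in update_family
    )

axis = (1.0, 0.0)
diagonal = (math.cos(math.pi / 4), math.sin(math.pi / 4))
print(is_unstable(axis, TWO_NEIGHBOUR), is_unstable(diagonal, TWO_NEIGHBOUR))
```

For this example family the axis direction is stable, whereas the diagonal direction is unstable because the rule \(\{(-1,0),(0,-1)\}\) lies in the corresponding open half plane.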

Definition 1.2 (Criticality). Let \(\mathcal C\) be the set of open semicircles of \(S^1\). An update family is

- supercritical if there exists \(C\in \mathcal C\) such that all \(u\in C\) are unstable;
- subcritical if every semicircle contains infinitely many stable directions;
- critical otherwise.

The following notion measures “how stable” a stable direction is.

Definition 1.3 (Difficulty). For \(u\in S^1\) the difficulty \({\alpha }(u)\) of u is

- 0 if u is unstable;
- \(\infty \) if u is stable, but not isolated;
- \(\min \{n:\exists Z\subset {\mathbb Z} ^2,|Z|=n,|[{\mathbb H} _u\cup Z]_\mathcal U{\setminus }{\mathbb H} _u|=\infty \}\) otherwise.

The difficulty of \(\mathcal U\) is

$$\begin{aligned} {\alpha }=\min _{C\in \mathcal C}\max _{u\in C}{\alpha }(u). \end{aligned}$$

We say that a direction \(u\in S^1\) is hard if \({\alpha }(u)>{\alpha }\).

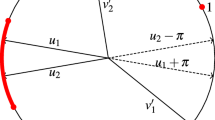

See Fig. 1 for an example update family with \({\alpha }=3\) and its difficulties. It can be shown that \({\alpha }(u)\in [1,\infty )\) for isolated stable directions [7, Lemma 2.8]. Consequently, a model is critical iff \(0<{\alpha }<\infty \) and supercritical iff \({\alpha }=0\), so difficulty is tailored for critical models and refines Definition 1.2. Furthermore, for supercritical models the notions of stable and hard direction coincide. Finally, observe that the definition implies that for any critical or supercritical update family there exists an open semicircle with no hard direction.

Definition 1.4 (Refined types). A critical or supercritical update family is

- rooted if there exist two non-opposite hard directions;
- unrooted if it is not rooted;
- unbalanced if there exist two opposite hard directions;
- balanced if it is not unbalanced, that is, there exists a closed semicircle containing no hard direction.

We further partition balanced unrooted update families into

- semi-directed if there is exactly one hard direction;
- isotropic if there are no hard directions.
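The case distinctions of Definition 1.4 can be summarised in a few lines of code. This is a sketch under assumptions made here: the set of hard directions is taken to be finite and is supplied by the caller, directions are encoded as exact fractions of a full turn in \([0,1)\), and the function name `refined_type` is hypothetical. It does not compute the hard directions from an update family.

```python
from fractions import Fraction

def refined_type(hard_turns):
    """Refined type from a finite set of hard directions (fractions of a full turn)."""
    hard = {Fraction(t) % 1 for t in hard_turns}
    half = Fraction(1, 2)
    # Unbalanced: two opposite hard directions.
    unbalanced = any((t + half) % 1 in hard for t in hard)
    # Rooted: two distinct hard directions that are not opposite.
    rooted = any(s != t and (s - t) % 1 != half for s in hard for t in hard)
    if not rooted and not unbalanced:
        # Balanced unrooted families have at most one hard direction.
        return "semi-directed" if len(hard) == 1 else "isotropic"
    return ("unbalanced" if unbalanced else "balanced") + " " + ("rooted" if rooted else "unrooted")

print(refined_type([Fraction(1, 4), Fraction(3, 4)]))
```

For instance, two opposite hard directions and no others give an unbalanced unrooted family, while a single hard direction gives a semi-directed one.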

We further consider the distinction between models with finite and infinite number of stable directions. The latter being necessarily rooted, but possibly balanced or unbalanced, we end up with a partition of all (two-dimensional non-subcritical) families into the seven classes studied in detail below in the critical case. We invite the interested reader to consult [20, Fig. 1] for simple representatives of each class with rules contained in the lattice axes and reaching distance at most 2 from the origin. Naturally, many more examples have been considered in the literature (also see Fig. 1).

Let us remark that models in each class may have one axial symmetry, but non-subcritical models invariant under rotation by \(\pi \) are necessarily either isotropic or unbalanced unrooted (thus with finite number of stable directions), while invariance by rotation by \(\pi /2\) implies isotropy.

1.3 Results

Our result, summarised in Table 1, together with the companion paper by Marêché and the author [20], is the following complete refined classification of two-dimensional critical KCM (for the classification of supercritical ones, which only features the rooted/unrooted distinction, see [29–31]).

Theorem 1 (Universality classification of two-dimensional critical KCM). Let \(\mathcal U\) be a two-dimensional critical update family with difficulty \(\alpha \). We have the following exhaustive alternatives as \(q\rightarrow 0\) for the expected infection time of the origin under the stationary \(\mathcal U\)-KCM. If \(\mathcal U\) is

- (a) unbalanced with infinite number of stable directions (so rooted), then

  $$\begin{aligned} {\mathbb E} _{{\mu }}[{\tau }_0]=\exp \left( \frac{\Theta \left( \left( \log (1/q)\right) ^4\right) }{q^{2\alpha }}\right) ; \end{aligned}$$

- (b) balanced with infinite number of stable directions (so rooted), then

  $$\begin{aligned} {\mathbb E} _{{\mu }}[{\tau }_0]=\exp \left( \frac{\Theta (1)}{q^{2\alpha }}\right) ; \end{aligned}$$

- (c) unbalanced rooted with finite number of stable directions, then

  $$\begin{aligned} {\mathbb E} _{{\mu }}[{\tau }_0]=\exp \left( \frac{\Theta \left( \left( \log (1/q)\right) ^3\right) }{q^{\alpha }}\right) ; \end{aligned}$$

- (d) unbalanced unrooted (so with finite number of stable directions), then

  $$\begin{aligned} {\mathbb E} _{{\mu }}[{\tau }_0]=\exp \left( \frac{\Theta \left( \left( \log (1/q)\right) ^2\right) }{q^{\alpha }}\right) ; \end{aligned}$$

- (e) balanced rooted with finite number of stable directions, then

  $$\begin{aligned} {\mathbb E} _{{\mu }}[{\tau }_0]=\exp \left( \frac{\Theta \left( \log (1/q)\right) }{q^{\alpha }}\right) ; \end{aligned}$$

- (f) semi-directed (so balanced unrooted with finite number of stable directions), then

  $$\begin{aligned} {\mathbb E} _{{\mu }}[{\tau }_0]=\exp \left( \frac{\Theta \left( \log \log (1/q)\right) }{q^{\alpha }}\right) ; \end{aligned}$$

- (g) isotropic (so balanced unrooted with finite number of stable directions), then

  $$\begin{aligned} {\mathbb E} _{{\mu }}[{\tau }_0]=\exp \left( \frac{\Theta (1)}{q^{\alpha }}\right) . \end{aligned}$$
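To compare the seven scalings at a glance, one may tabulate the expression inside the exponential with every \(\Theta \) constant replaced by 1. The sketch below does exactly that and nothing more: the names `EXPONENTS` and `log_tau_shape` are hypothetical, and since the true constants are not specified by the theorem, the numbers it returns only indicate the relative ordering of the classes for small q, not actual values of \(\log {\mathbb E} _{{\mu }}[{\tau }_0]\).

```python
import math

# Class -> (multiple of alpha in the power of 1/q, power of log(1/q)), read off Theorem 1.
# Class (f) is handled separately because it involves log log(1/q).
EXPONENTS = {"a": (2, 4), "b": (2, 0), "c": (1, 3), "d": (1, 2), "e": (1, 1), "g": (1, 0)}

def log_tau_shape(cls, q, alpha):
    """Leading-order shape of log E[tau_0], with all Theta constants set to 1 (assumption)."""
    log_inv_q = math.log(1 / q)
    if cls == "f":
        return math.log(log_inv_q) / q**alpha
    k, p = EXPONENTS[cls]
    return log_inv_q**p / q**(k * alpha)

for cls in "abcdefg":
    print(cls, log_tau_shape(cls, q=1e-3, alpha=1))
```

With these conventions the values decrease from class (a) to class (g) once q is small enough, in line with the order in which the theorem lists the classes.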

This theorem is the result of a tremendous amount of effort by a panel of authors. It would be utterly unfair to claim that it is due to the present paper and its companion [20] alone. Indeed, parts of the result (sharp upper or lower bounds for certain classes) were established by (subsets of) Marêché, Martinelli, Morris, Toninelli and the author [21, 22, 31, 32]. Moreover, particularly for the lower bounds, the classification of two-dimensional critical \(\mathcal U\)-bootstrap percolation models by Bollobás, Duminil-Copin, Morris and Smith [7] (featuring only the balanced/unbalanced distinction) is heavily used, while upper bounds additionally use prerequisites from [23, 24]. Thus, a fully self-contained proof of Theorem 1 from common probabilistic background is currently contained only in all the above references combined and spans hundreds of pages. Our contribution is but the conclusive step.

More precisely, the lower bound for classes (d) and (g) was deduced from [7] in [32]; the lower bound for class (b) was established in [21], while the remaining four were proved in [20]. Turning to upper bounds, the one for class (a) was given in [31] and the one for class (c) is due to [22]. The remaining five upper bounds are new and those are the subject of our work. The most novel and difficult ones concern classes (e) and (f), the latter remaining quite mysterious prior to our work. Indeed, [22, Conjecture 6.2] predicted the above result with the exception of this class, whose behaviour was unclear. We should note that this conjecture itself rectified previous ones from [31, 34], which were disproved by the unexpected result of [22], and was new to physicists, as well as mathematicians.

Remark 1.5

It should be noted that universality results including Theorem 1 apply to KCM more general than the ones defined in Sect. 1.1. Namely, we may replace \(c_x\) in Eq. (2) by a fixed linear combination of the constraints \(c_x\) associated to any finite set of update families. For instance, we may update vertices at rate proportional to their number of infected neighbours. This and other models along these lines have been considered e.g. in [2, 5, 12]. For such mixtures of families, the universality class is determined by the family obtained as their union. Indeed, upper bounds follow by direct comparison of the corresponding Dirichlet forms, while lower bounds (e.g. [20]) generally rely on deterministic bottlenecks, which remain valid.

Remark 1.6

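Let us note that for reasons of extremely technical nature, we do not provide a full proof of (the upper bound of) Theorem 1(e). More precisely, we prove it as stated for models with rules contained in the axes of the lattice. We also prove a fully general upper bound of

$$\begin{aligned} {\mathbb E} _{{\mu }}[{\tau }_0]\leqslant \exp \left( \frac{O\left( \log (1/q)\right) \log \log \log (1/q)}{q^{\alpha }}\right) . \end{aligned}$$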

Furthermore, with very minor modifications (see Remark 7.1), the error factor can be reduced from \(\log \log \log \) to \(\log _*\), where \(\log _*\) denotes the number of iterations of the logarithm before the result becomes negative (the inverse of the tower function). Unfortunately, removing this minuscule error term requires further work, which we omit for the sake of concision. Instead, we provide a sketch of how to achieve this in “Appendix C”.
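To see how slowly \(\log _*\) grows, here is a short sketch implementing it literally as described in this remark, namely as the number of applications of the natural logarithm needed before the result becomes negative. The function name `log_star` is chosen here, and other conventions for the iterated logarithm (different base or stopping threshold) differ from this one by bounded amounts.

```python
import math

def log_star(x):
    """Number of applications of log needed before the result becomes negative."""
    count = 0
    while x >= 0:
        if x == 0:
            # log(0) is minus infinity, so one more application makes the result negative.
            return count + 1
        x = math.log(x)
        count += 1
    return count

print([log_star(x) for x in (0.5, 1, 10, 10**6, 10**100)])
```

Even for an input as large as \(10^{100}\) the output remains a single-digit number, which is why the remark calls the corresponding error term minuscule.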

1.4 Organisation

The paper is organised as follows. In Sect. 2 we begin by outlining all the relevant relaxation mechanisms used by critical KCM, providing detailed intuition for the proofs to come. This section is particularly intended for readers unfamiliar with the subject, as well as physicists, for whom it may be sufficiently convincing on its own. In Sect. 3 we gather various notation and simple preliminaries.

In Sect. 4 we formally state the two fundamental techniques we use to move from one scale to the next, namely East-extensions and CBSEP-extensions, which import and generalise ideas of [24]. They are used in various combinations throughout the rest of the paper. The proofs of the results about those extensions, including the microscopic dynamics treated by [18], are deferred to “Appendix A”, since they are quite technical and do not require new ideas. The bounds arising from extensions feature certain conditional expectations. We provide technical tools for estimating them in Sect. 4.4. We leave the entirely new proofs of these general analogues of [24, Appendix A] to “Appendix B”.

Sections 5–9 are the core of our work and use the extensions mentioned above to prove the upper bounds of Theorem 1 for classes (g), (d), (f), (e), (b) respectively. As we will discuss in further detail (see Sect. 2 and Table 2b), some parts of the proofs are common to several of these classes, making the sections interdependent. Thus, they are intended for linear reading.

We conclude in “Appendix C” by explaining how to remove the corrective \(\log \log \log (1/q)\) factor discussed in Remark 1.6 to recover the result of Theorem 1(e) as stated in full generality. Due to their technical nature, we relegate Appendices A to C to the arXiv version of the present work.

Familiarity with the companion paper [20] or bootstrap percolation [7] is not needed. Conversely, familiarity with [22, 24] is strongly recommended for going beyond Sect. 2 and achieving a complete view of the proof of the upper bounds of Theorem 1. Nevertheless, we systematically state the implications of intermediate results of those works for our setting in a self-contained fashion, without re-proving them.

2 Mechanisms

In this section we attempt a heuristic explanation of Theorem 1 from the viewpoint of mechanisms, which is mostly related to upper bound proofs. Yet, let us say a few words about the lower bounds. The proof of the lower bounds in the companion paper [20] has the advantage and disadvantage of being unified for all seven classes. This is undeniably practical and spotlights the fact that all scaling behaviours can be viewed through the lens of the same bottleneck (few energetically costly configurations through which the dynamics has to go to infect the origin) on a class-dependent length scale. However, the downside is that it provides little insight on the particularities of each class, which turn out to be quite significant. To prove upper bounds we need a clear vision of an efficient mechanism for infecting the origin in each class. Since we work with the stationary process, efficient means that it should avoid configurations which are too unlikely w.r.t. \({\mu }\). However, while lower bounds only identify what cannot be avoided, they do not tell us how to avoid everything else, nor indeed how to reach the unavoidable bottleneck.

Instead of outlining the mechanism used by each class, we focus on techniques which are somewhat generic and then apply combinations thereof to each class. In figurative terms, we develop several computer hardware components (three processors, four RAMs, etc.), give a general scheme of how to compose a generic computer out of generic components and, finally, assemble seven concrete computer configurations, using the appropriate components for each, sometimes changing a single component from one machine to the next. Moreover, within each component type different instances are strictly comparable, so, at the assembly stage, we might simply choose the best possible component fitting the requirements of the model at hand. This enables us to highlight the robust tools developed and refined recently, which correspond to the components and how they are manufactured, as well as give a clean universal proof scheme into which they are plugged.

Our different components are called the microscopic, internal, mesoscopic and global dynamics and correspond to progressively increasing length scales on which we are able to relax, given a suitable infection configuration. As the notion of “suitable,” which we call super good (SG), depends on the class and lower scale mechanisms used, we mostly use it as a black box input extended progressively over scales in a recursive fashion.

In order to guide the reader through Sect. 2 and beyond, in Table 2, we summarise the optimal mechanisms for each universality class on each scale and its cost. While its full meaning will only become clear in Sect. 2.7, the reader may want to consult it regularly, as they progress through Sect. 2.

The SG events concern certain convex polygonal geometric regions called droplets. These events are designed so as to satisfy several conditions ensuring that the configuration of infections inside the droplet is sufficient to infect the entire droplet. The SG events defined by extensions from smaller to larger scales require the presence of a lower scale droplet inside the large one (see Fig. 2) in addition to well-chosen more sparse infections called helping sets in the remainder of the larger scale droplet (see Fig. 1). Helping sets allow the smaller one to move inside the bigger one.

We say that a droplet relaxes in a certain relaxation time if the dynamics restricted to the SG event and to this region “mixes” in this much time. Formally, this translates to a constrained Poincaré inequality for the conditional measure, but this is unimportant for our discussion.

One should think of droplets as extremely unlikely objects, which are able to move within a slightly favourable environment. Indeed, at all stages of our treatment, we need to control the inverse probability of droplets being SG and their relaxation times, keeping them as small as feasible. Furthermore, due to their inductive definition, the favourable environment required for their movement should not be too costly. Indeed, that would result in the deterioration of the probability of larger scale droplets, as those incorporate the lower scale environment in their internal structure. Hence, we seek a balance between asking for many infections to make the movement efficient and asking for few in order to keep the probability of droplets high enough.

2.1 Scales

Microscopic dynamics refers to modifying infections at the level of the lattice along the boundary of a droplet, while respecting the KCM constraint.

Internal dynamics refers to relaxation on scales from the lattice level to the internal scale \(\ell ^{\textrm{int}}= C^2\log (1/q)/q^{\alpha }\), where C is a large constant depending on \(\mathcal U\). This is the most delicate and novel step. Up to \(\ell ^{\textrm{int}}\) we account for the main contribution to the probability of droplets. That is, at all larger scales the probability of a droplet essentially saturates at a certain value \({\rho }_{\textrm{D}}\), because finding helping sets becomes likely. Thus, on smaller scales, it is important to only very occasionally ask for more than \({\alpha }\) infections to appear close to each other in order to get the right probability \({\rho }_{\textrm{D}}\). This means that up to the internal scale hard directions are practically impenetrable, since they require helping sets of more than \({\alpha }\) infections.

Mesoscopic dynamics refers to relaxation on scales from \(\ell ^{\textrm{int}}\) to the mesoscopic scale \(\ell ^{\textrm{mes}}= 1/q^C\). As our droplets grow to the mesoscopic scale and past it, it becomes possible to require larger helping sets, which we call W-helping sets. These allow droplets to move also in hard directions of finite difficulty, while nonisolated stable directions are still blocking.

Global dynamics refers to relaxation on scales from \(\ell ^{\textrm{mes}}\) to infinity. The extension to infinity being fairly standard (and not hard), one should rather focus on scales up to the global scale given by \(\ell ^{\textrm{gl}}=\exp (1/q^{3{\alpha }})\), which is notably much larger than all time scales we are aiming for, but otherwise rather arbitrary.
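To get a feeling for the separation between the three length scales, one can evaluate their logarithms numerically (working with logarithms avoids overflow for \(\ell ^{\textrm{gl}}\)). This is a sketch with assumptions made here: the function name `log_scales` is hypothetical and the value of the constant C in the usage line is arbitrary, whereas in the text C is only required to be large depending on \(\mathcal U\).

```python
import math

def log_scales(q, alpha, C):
    """Natural logarithms of the internal, mesoscopic and global length scales."""
    log_inv_q = math.log(1 / q)
    return {
        # l_int = C^2 * log(1/q) / q^alpha
        "int": 2 * math.log(C) + math.log(log_inv_q) + alpha * log_inv_q,
        # l_mes = 1 / q^C
        "mes": C * log_inv_q,
        # l_gl = exp(1 / q^(3 * alpha))
        "gl": q ** (-3 * alpha),
    }

print(log_scales(q=0.01, alpha=1, C=10))
```

For these illustrative parameters the three logarithms are roughly 10.7, 46.1 and \(10^6\), so each scale dwarfs the previous one.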

Roughly speaking, on each of the last three scales, one should decide how to move a droplet of the lower scale in a domain on the larger scale.

For simplicity, in the remainder of Sect. 2, we assume that the only four relevant directions are the axis ones so that droplets have rectangular shape (see Sect. 3.3). We further assume that all directions in the left semicircle have difficulties at most \({\alpha }\), while the down direction is hard, unless there are no hard directions (isotropic class).

2.2 Microscopic dynamics

The microscopic dynamics (see “Appendix A.2”) is the only place where we actually deal with the KCM directly and is the same, regardless of the size of the droplet and the universality class. Roughly speaking, from the outside of the droplet, we may think of it as fully infected, since it is able to relax and, therefore, bring infections where they are needed. Thus, the outer boundary of the droplet behaves like a 1-dimensional KCM with update family reflecting that we view the droplet as infected. Hence, provided there are enough helping sets at the boundary to infect it, we can apply results on 1-dimensional KCM supplied for this purpose by the author [18].

This way we establish that one additional column can relax in time \(\exp (O(\log (1/q))^2)\), similarly to the East model described in Sect. 2.3.2 below. Assuming we know how to relax on the droplet itself, this allows us to relax on a droplet with one column appended. However, applying this procedure recursively line by line is not efficient enough to be useful for extending droplets more significantly.

2.3 One-directional extensions

We next explain two fundamental techniques beyond the microscopic dynamics which we use to extend droplets on any scale in a single direction (see Sect. 4).

As mentioned above, our droplets are polygonal regions with a SG event (presence of a suitable arrangement of infections in the droplet). An extension takes as input a droplet and produces another one. In terms of geometry, it contains the original one and is obtained by extending it, say, horizontally, either to the left or both left and right (see Fig. 2). The extended droplet’s SG event requires that the smaller one is SG and, additionally, certain helping sets appear in the remaining volume. The choice of where we position the smaller droplet (at the right end of the bigger one, or anywhere inside it) depends on the type of extension. The additional helping sets are required in such a way that, with their help, the smaller droplet can, in principle, completely infect the larger one and, therefore, make it relax (resample its configuration within its SG event).

Thus, an extension is a procedure for iteratively defining SG events on larger and larger scales. For each of our two types of extensions we need to provide corresponding iterative bounds on the probability of the SG event and on the relaxation time of droplets on this event. The former is a matter of careful computation. For the latter task we intuitively use a large-scale version of an underlying one-dimensional spin model, which we describe first.

2.3.1 CBSEP-extension

In the one-dimensional spin version of CBSEP [23, 24] we work on \(\{\uparrow ,\downarrow \}^{\mathbb Z} \). At rate 1 we resample each pair of neighbouring spins, provided that at least one of them is \(\uparrow \). Their state is resampled w.r.t. the reference product measure, which is reversible, conditioned to still have a \(\uparrow \) in at least one of the two sites. In other words, \(\uparrow \) can perform coalescence, branching and symmetric simple exclusion moves, hence the name. The relaxation time of this model on volume V is roughly \(\min (V,1/q)^{2}\) in one dimension and \(\min (V,1/q)\) in two and more dimensions [23, 24], where q is the equilibrium density of \(\uparrow \), which we think of as being small.
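The pair-resampling rule can be made concrete as follows. This is a minimal sketch under assumptions made here: it works on a finite segment instead of \({\mathbb Z} \), it simulates the embedded discrete-time chain (one uniformly chosen pair per step) rather than continuous time, it requires \(q>0\), and the names `resample_pair` and `cbsep_step` are hypothetical. Spins are encoded as booleans with `True` standing for \(\uparrow \).

```python
import random

def resample_pair(q, rng):
    """Two independent spins (up with probability q) conditioned on at least one up."""
    outcomes = [(True, True), (True, False), (False, True)]
    weights = [q * q, q * (1 - q), (1 - q) * q]
    return rng.choices(outcomes, weights=weights)[0]

def cbsep_step(spins, q, rng):
    """Pick a uniform pair of neighbours and resample it if it contains an up-spin."""
    i = rng.randrange(len(spins) - 1)
    if spins[i] or spins[i + 1]:
        spins[i], spins[i + 1] = resample_pair(q, rng)

rng = random.Random(1)
spins = [False] * 10
spins[3] = True
for _ in range(1000):
    cbsep_step(spins, q=0.2, rng=rng)
print(spins)
```

The three outcomes of `resample_pair` correspond to branching or coalescence (both up) and to exclusion-type moves (exactly one up). Since every legal move keeps at least one \(\uparrow \) in the pair, the last \(\uparrow \) can never disappear.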

For us \(\uparrow \) represent SG droplets, which we would like to move within a larger volume. However, as we would like them to be able to move possibly by an amount smaller than the size of the droplet, we need to generalise the model a bit. We equip each site of a finite interval of \({\mathbb Z} \) with a state space corresponding to the state of a column of the height of our droplet of interest in the original lattice \({\mathbb Z} ^2\). Then the event “there is a SG droplet” may occur on a group of \(\ell \) consecutive sites (columns). The long range generalised CBSEP, which, abusing notation, we call CBSEP, is defined as follows. We fix some range \(R>\ell \) and resample at rate 1 each group of R consecutive sites, if they contain a SG droplet. The resampling is performed conditionally on preserving the presence of a SG droplet in those R sites. Thus, one move of this process essentially delocalises the droplet within the range.

It is important to note (and this was crucial in [24]) that CBSEP does not have to create an additional droplet in order to evolve. Since SG droplets are unlikely, it suffices to move an initially available SG droplet through our domain in order to relax. Since infection needs to be able to propagate both left and right from the SG droplets, we will define (see Sect. 4.3 and particularly Definition 4.7, Fig. 2b) CBSEP-extension by extending our domain horizontally and asking for the SG droplet anywhere inside with suitable “rightwards-pointing” helping sets on its right and “leftwards-pointing” on its left.

[Figure: Illustration of the perturbation of Lemma 4.11. The two thickened tubes are T and \(T'\). The regions concerned by their traversability are hatched in different directions.]

While we now know that droplets evolve according to CBSEP, it remains to see how one can reproduce one CBSEP move via the original dynamics. This is done inductively on R by a bisection procedure, the trickiest part being the case \(R=\ell +1\). We then have at our disposal a droplet plus one column, which is exactly the setting for microscopic dynamics. However, we not only want to resample the state of the additional column, but also allow the droplet to move by one lattice step. To achieve this, we have to look inside the structure of the SG droplet and require its infections (which have no rigid structure and may therefore move around like the organelles of an amoeba) to be somewhat more concentrated on the side we want to move towards (see e.g. Fig. 4 and also Definitions 5.3, 6.5, 7.7 and 7.8). Then, together with a suitable configuration on the additional column provided by the microscopic dynamics, we easily recover our SG event shifted by one step, since most of the structure was already provided by the version of the SG event “contracted” towards the new column.

This amoeba-like motion (moving a droplet by slightly rearranging its internal structure) leads to a very small relaxation time of the dynamics. Indeed, the time needed to move the droplet is the product of three contributions: the relaxation time of the 1-dimensional spin model; the relaxation time of the microscopic dynamics; the time needed to see a droplet contracting as explained above (see Proposition 4.9). The first of these is a power of the volume (number of sites); the second is \(\exp (O(\log (1/q))^2)\); the third is also small, as we discuss in Sect. 2.3.2.

However, CBSEP-extensions can only be used for sufficiently symmetric update families. That is, the droplet needs to be able to move indifferently both left and right and its position should not be biased in one direction or the other. Specifically, if we are working on a scale that requires the use of helping sets of size \(\alpha \), these have to exist both for the left and right directions, so the model needs to be unrooted (if instead we use larger helping sets, having a finite number of stable directions suffices). The reason is that otherwise the position of the SG droplet is biased in one direction instead of being approximately uniform. This would break the analogy with the original one-dimensional spin model, which is totally symmetric. When symmetry is not available, we resort to the East-extension presented next, which may also be viewed as a totally asymmetric version of the CBSEP-extension.

2.3.2 East-extension

The East model [27] is the one-dimensional KCM with \(\mathcal U=\{\{1\}\}\). That is, we are only allowed to resample the left neighbour of an infection. An efficient recursive mechanism for its relaxation is the following [33]. Assume we start with an infection at 0. In order to bring an infection to \(-2^n+1\), using at most n infections at a time (excluding 0), we first bring one to \(-2^{n-1}+1\), using \(n-1\) infections. We then place an infection at \(-2^{n-1}\) and reverse the procedure to remove all infections except 0 and \(-2^{n-1}\). Finally, start over with \(n-1\) infections, viewing \(-2^{n-1}\) as the new origin, thus reaching \(-2^{n}+1\). It is not hard to check that this is as far as one can get with n infections [11]. Thus, a number of infections logarithmic in the desired distance is needed. This is to be contrasted with CBSEP, for which only one infection is ever needed, as it can be moved indefinitely by SEP moves. The relaxation time of East on a segment of length L is \(q^{-O(\log \min (L,1/q))}\) [1, 9, 10], where q is the equilibrium density of infections. This corresponds to the cost of n infections when \(2^n\sim \min (L,1/q)\) is the typical distance to the nearest infection.
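The recursive mechanism described above can be sketched in a few lines. The following is a minimal illustration, assuming the convention that a site x may be flipped only when x+1 is infected (so that only the left neighbour of an infection is updated); the function names `east_moves` and `simulate` are hypothetical. The simulation checks that every move is legal and records the largest number of simultaneous infections, excluding the one at 0.

```python
def east_moves(origin, n):
    """Sites to flip, in order, to bring an infection to origin - (2**n - 1)."""
    if n == 0:
        return []
    first = east_moves(origin, n - 1)   # reach origin - 2**(n-1) + 1 with n-1 infections
    mid = origin - 2 ** (n - 1)         # site just to the left of the one reached
    # place an infection at mid, undo the first phase, restart from the new origin mid
    return first + [mid] + first[::-1] + east_moves(mid, n - 1)

def simulate(n):
    """Apply the moves from the single infection at 0, checking the East constraint."""
    infected = {0}
    peak = 0                            # max number of infections other than 0
    for x in east_moves(0, n):
        assert x + 1 in infected, "illegal East move"
        infected ^= {x}                 # flip site x
        peak = max(peak, len(infected) - 1)
    return infected, peak
```

For instance, for \(n=2\) the sequence of flipped sites is \(-1,-2,-1,-3\). In this sketch the number of moves satisfies \(T(n)=3T(n-1)+1\), that is, \(T(n)=(3^n-1)/2\).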

The long-range generalised version of the East model is defined similarly to that of CBSEP. The only difference is that now \(R>\ell \) consecutive columns are resampled together if there is a SG droplet on their extreme right. It is clear that this does not allow moving the droplet, but rather forces us to recreate a new droplet at a shifted position before we can progress. The associated East-extension of a droplet corresponds to extending its geometry to the left (see Sect. 4.2 and particularly Definition 4.4 and Fig. 2a). The extended SG event requires that the original SG droplet is present in the rightmost position and “leftwards-pointing” helping sets are available in the rest of the extended droplet.

The generalised East process goes back to [31], while the long range version is implicitly used in [22]. However, both works used a brutal strategy consisting of creating the new droplet from scratch. Instead, in this work we have to be much more careful, particularly for semi-directed models. Indeed, take \(\ell \) large and \(R=\ell +5\). Then it is intuitively clear that the presence of the original rightmost droplet overlaps greatly with the occurrence of the shifted SG one we would like to craft. Hence, the idea is to take advantage of this and only pay the conditional probability of the droplet we are creating, given the presence of the original one.

This is not as easy as it sounds for several reasons. Firstly, we should make the SG structure soft enough (in contrast with e.g. [22, 31]) so that small shifts do not change it much. Secondly, we need to actually have a quantitative estimate of the conditional probability of a complicated multi-scale event, given its translated version, which necessarily does not quite respect the same multi-scale geometry. To make matters worse, we do not have at our disposal a very sharp estimate of the probability of SG events (unlike in [24]), so directly computing the ratio of two rough estimates would yield a very poor bound on the conditional probability. In fact, this problem is also present when contracting a droplet in the CBSEP-extension—we need to evaluate the probability of a contracted version of the droplet, conditionally on the original droplet being present.

We deal with these issues in Sect. 4.4 (see also “Appendix B”). We establish that, as intuition may suggest, to create a droplet shifted by \(R-\ell \), given the original one, we roughly only need to pay the probability of a droplet on scale \(R-\ell \) rather than \(\ell \), which provides a substantial gain. Hence, the time necessary for an East-extension of a droplet to relax is essentially the product of the inverse probabilities of a droplet on scales of the form \(2^m\) up to the extension length (see Proposition 4.6).

2.4 Internal dynamics

The internal dynamics (see Sects. 5.1, 6.1, 7.1, and 8.1) is where most of our work goes. This is not surprising, as the probability of SG events saturates at its final value \({\rho }_{\textrm{D}}\) at the internal scale. The value of \({\rho }_{\textrm{D}}\) is given by \(\exp (-O(1)/q^{\alpha })\) for balanced models and \(\exp (-O(\log (1/q))^2/q^{\alpha })\) for unbalanced ones, as in bootstrap percolation [7]. However, relaxation times for some classes keep growing past the internal scale, so the internal dynamics does not necessarily give the final answer in Theorem 1 (see Table 2b).

2.4.1 Unbalanced internal dynamics

Let us begin with the simplest case of unbalanced models. If \(\mathcal U\) is unbalanced with an infinite number of stable directions (class (a)), droplets in [31] on the internal scale consist of several infected consecutive columns, so that no relaxation is needed (the SG event is a singleton). The columns have size \(\ell ^{\textrm{int}}\), which justifies the value of \({\rho }_{\textrm{D}}=q^{O(\ell ^{\textrm{int}})}=\exp (-O(\log (1/q))^2/q^{\alpha })\).

[Figure: The events \(\overline{\mathcal S\mathcal G}({\Lambda }^{(n)}_2)\) and \(\overline{\mathcal S\mathcal T}({\Lambda }_3^{(n)})\) of Definition 6.5. \({\Lambda }_3^{(n)}\) is thickened. Black regions are entirely infected. Shaded tubes are \((\textbf{1},W)\)-symmetrically traversable.]

Assume \(\mathcal U\) is unbalanced with a finite number of stable directions (classes (c) and (d), see Sect. 6.1). Then droplets on the internal scale are fully infected square frames of thickness O(1) and size \(\ell ^{\textrm{int}}\). That is, the \(\ell ^\infty \) ball of radius \(\ell ^{\textrm{int}}\) minus the one of radius \(\ell ^{\textrm{int}}-O(1)\) (see [22, Figs. 2–4] or Fig. 5 for more general geometry). This frame is infected with probability \({\rho }_{\textrm{D}}=q^{O(\ell ^{\textrm{int}})}\). In order to relax inside the frame, one can divide its interior into groups of O(1) consecutive columns (see [22, Fig. 8]). We can then view them as performing a CBSEP dynamics with \(\uparrow \) corresponding to a fully infected group of columns. This is possible, because with the help of the frame each completely infected group is able to completely infect the neighbouring ones. Here we are using that there are finitely many stable directions to ensure both the left and right directions have finite difficulty, so finite-sized helping sets, as provided by the frame, are sufficient to propagate our group of columns. This was already done in [22] and the time necessary for this relaxation is easily seen to be \({\rho }_{\textrm{D}}^{-O(1)}\) (the cost for creating a group of infected columns); see Proposition 6.2.

2.4.2 CBSEP internal dynamics

If \(\mathcal U\) is isotropic (class (g), see Sect. 5.1), up to the conditioning problems of Sect. 4.4 described above, we need only minor adaptations of the strategy of [24] for the paradigmatic isotropic model called FA-2f. Droplets on the internal scale have an internal structure as obtained by iterating Fig. 4a (see also [24, Fig. 2]). Our droplets are extended little by little alternating between the horizontal and vertical directions, so that their size is multiplied essentially by a constant at each extension. Thus, roughly \(\log (1/q)\) extensions are required to reach \(\ell ^{\textrm{int}}\). As isotropic models do not have any hard directions, we can move in all directions and thus the symmetry required for CBSEP-extensions is granted. Hence, this mechanism leads to a very fast relaxation of droplets in time \(\exp (q^{-o(1)})\) (see Theorem 5.2).

Remark 2.1

Note that for CBSEP-extensions to be used, we need a very strong symmetry. Namely, leftwards and rightwards pointing helping sets should be the same up to rotation by \(\pi \). Yet, for a general isotropic model we only know that there are no hard directions, so helping sets have the same size (equal to the difficulty \(\alpha \) of the model), but not necessarily the same shape. We circumvent this issue by artificially symmetrising our droplets and events. Namely, whenever we require helping sets in one direction, we also require the helping sets for the opposite direction rotated by \(\pi \) (see Definitions 3.8, 4.1 and 4.7). Although these are totally useless for the dynamics, they are important to ensure that the positions of droplets are indeed uniform rather than suffering from a drift towards an “easier” non-hard direction (see Lemma 4.10).

2.4.3 East internal dynamics

The most challenging case is the balanced non-isotropic one (classes (b), (e) and (f)). It is treated in Sects. 7.1 and 8.1, but for the purposes of the present section only Sect. 7.1 is relevant. This is because we assume that only the four axis directions are relevant and our droplets are rectangular. The treatment of the general case for balanced rooted families is left to Sect. 8.1 and “Appendix C” (recall Remark 1.6).

For the internal dynamics the downwards hard direction prevents us from using CBSEP-extensions. To be precise, for semi-directed models (class (f)) it is possible to perform CBSEP-extensions horizontally (and not vertically), but the gain is insignificant, so we treat all balanced non-isotropic models identically up to the internal scale as follows.

[Figure: Geometry of the nested droplets \({\Lambda }^{(n)}\) for \(k=2\) in the setting of Sect. 7.1. For \(n\in {\mathbb N} \) droplets are symmetric and homothetic to the black \({\Lambda }^{(0)}\). Intermediate ones \({\Lambda }^{(1+1/4)}\), \({\Lambda }^{(1+2/4)}\) and \({\Lambda }^{(1+3/4)}\) obtained by East-extensions (see Fig. 2a) in directions \(u_0\), \(u_1\) and \(u_2\) respectively are drawn in progressive shades of grey.]

We still extend droplets, starting from a microscopic one, by a constant factor alternating between the horizontal and vertical directions (see Fig. 6a). However, in contrast with the isotropic case (see Fig. 4a), extensions are done in an oriented fashion, so that the original microscopic droplet remains anchored at the corner of larger ones. Thus, we may apply East-extensions on each step and obtain that the cost is given by the product of conditional probabilities from Sect. 2.3.2 over all scales and shifts of the form \(2^n\):

where \(a_m^{(n)}\) is the conditional probability of a SG droplet of size \(2^n\) being present at position \(2^m\), given that a SG droplet of size \(2^n\) is present at position 0. It is crucial that Eq. (5) is not the straightforward bound \(\prod _{n}({\rho }_{\textrm{D}}^{(n)})^{-n}\), with \({\rho }_{\textrm{D}}^{(n)}\) the probability of a droplet of scale n, that one would get by direct analogy with the East model (recall from Sect. 2.3.2 that the relaxation time of East on a small segment of length L is \(q^{-O(\log L)}\)), as that would completely devastate all our results. Indeed, as mentioned in Sect. 2.3.2, the term \(a_m^{(n)}\) in Eq. (5) is approximately equal to \({\rho }_{\textrm{D}}^{(m)}\), rather than \({\rho }_{\textrm{D}}^{(n)}\). This is perhaps one of the most important points of our treatment.

In total, a droplet of size \(2^n\) needs to be paid once per scale larger than \(2^n\) (see Eq. (42)). A careful computation shows that only droplets larger than \(q^{-{\alpha }}\) provide the dominant contribution and those all have probability essentially \({\rho }_{\textrm{D}}=\exp (-O(1)/q^{\alpha })\) (see Eq. (43)). Thus, the total cost would be

since there are \(\log \log (1/q)\) scales from \(q^{-{\alpha }}\) to \(\ell ^{\textrm{int}}\), as they increase exponentially.
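The count of scales can be checked by a one-line computation: the ratio between \(\ell ^{\textrm{int}}=C^2\log (1/q)/q^{\alpha }\) and \(q^{-\alpha }\) is \(C^2\log (1/q)\), so the number of doublings is \(\log _2\log (1/q)+2\log _2 C\). The sketch below illustrates this, assuming for definiteness that scales grow exactly by a factor 2 (the text only requires a constant factor); the function name `doubling_scales` is hypothetical.

```python
import math

def doubling_scales(q, C):
    """Number of doublings needed to go from q^(-alpha) to ell_int = C^2 log(1/q) / q^alpha.

    The ratio of the two scales is C^2 log(1/q), so alpha cancels out.
    """
    ratio = C ** 2 * math.log(1 / q)
    return math.ceil(math.log2(ratio))
```

Squaring 1/q (for instance going from \(q=10^{-6}\) to \(q=10^{-12}\)) doubles \(\log (1/q)\) and therefore adds exactly one scale, which is the \(\log \log (1/q)\) behaviour.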

Equation (6) is unfortunately a bit too rough for the semi-directed class, overshooting Theorem 1(f). However, the solution is simple. It suffices to introduce scales growing double-exponentially above \(q^{-{\alpha }}\) instead of exponentially (see Eq. (37)), so that the product over scales n in Eq. (6) becomes dominated by its last term, corresponding to droplet size \(\ell ^{\textrm{int}}\). This gives the optimal final cost

(see Theorem 7.3).

2.5 Mesoscopic dynamics

For the mesoscopic dynamics (see Sects. 5.1, 6.2, 7.2, and 9.1) we are given as input a SG event for droplets on scale \(\ell ^{\textrm{int}}=C^2\log (1/q)/q^\alpha \) and a bound on their relaxation time and occurrence probability \({\rho }_{\textrm{D}}\). We seek to output the same on scale \(\ell ^{\textrm{mes}}=q^{-C}\). Taking \(C\gg W\), once our droplets have size \(\ell ^{\textrm{mes}}\), we are able to find W-helping sets (sets of W consecutive infections, where W is large enough).

2.5.1 CBSEP mesoscopic dynamics

If \(\mathcal U\) is unrooted (classes (d), (f) and (g), see Sects. 6.2 and 7.2), recall that the hard directions (if any) are vertical. Then we can perform a horizontal CBSEP-extension directly from \(\ell ^{\textrm{int}}\) to \(\ell ^{\textrm{mes}}\), since \(\ell ^{\textrm{int}}=C^2\log (1/q)/q^{\alpha }\) makes it likely for helping sets (of size \(\alpha \)) to appear along all segments of length \(\ell ^{\textrm{int}}\) until we reach scale \(\ell ^{\textrm{mes}}= q^{-C}\). The resulting droplet is very wide, but short (see Fig. 5a). However, this is enough for us to be able to perform a vertical CBSEP-extension (see Fig. 5b), requiring W-helping sets, since they are now likely to be found. Again, CBSEP dynamics being very efficient, its cost is negligible. Note that, in order to perform the vertical extension, we are using that there are no nonisolated stable directions, so that W is larger than the difficulty of the up and down directions, making W-helping sets sufficient to induce growth in those directions. Thus, morally, there are no hard directions beyond scale \(\ell ^{\textrm{mes}}\) for unrooted models.
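The claim that helping sets are likely along all segments of length \(\ell ^{\textrm{int}}\) can be illustrated by a crude union bound. The sketch below works under simplifying assumptions that are ours and not the paper's: a helping set is modelled as \(\alpha \) consecutive infected sites, only disjoint blocks of \(\alpha \) sites are inspected, and the number of segments is bounded by \((\ell ^{\textrm{mes}})^2=q^{-2C}\). The function names are hypothetical.

```python
import math

def log_no_helping_set(q, alpha, C):
    """Upper bound on log P(no fully infected block) in a segment of length ell_int."""
    ell_int = C ** 2 * math.log(1 / q) / q ** alpha
    blocks = math.floor(ell_int / alpha)       # disjoint blocks of alpha sites
    return blocks * math.log1p(-q ** alpha)    # log of (1 - q^alpha)^blocks

def log_union_bound(q, alpha, C):
    """Log of the union bound over at most q^(-2C) segments."""
    log_segments = 2 * C * math.log(1 / q)
    return log_segments + log_no_helping_set(q, alpha, C)
```

The failure probability of one segment is about \(q^{C^2/\alpha }\), which beats the polynomial number \(q^{-2C}\) of segments as soon as C is large compared to \(\alpha \).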

2.5.2 East mesoscopic dynamics

If \(\mathcal U\) is rooted (classes (a)–(c) and (e), see Sect. 9.1), CBSEP-extensions are still inaccessible. We may instead East-extend horizontally from \(\ell ^{\textrm{int}}\) to \(\ell ^{\textrm{mes}}\) in a single step. If the model is balanced or has a finite number of stable directions (classes (b), (c) and (e)), we may proceed similarly in the vertical direction, reaching a droplet of size \(\ell ^{\textrm{mes}}\) in time \({\rho }_{\textrm{D}}^{-O(\log (1/q))}\) (here we use the basic bound \(q^{-O(\log L)}\) for East dynamics recalled in Sect. 2.3.2, which is fairly tight in this case, since droplets are small compared to the volume: \(\log \ell ^{\textrm{mes}}\approx \log (\ell ^{\textrm{mes}}/\ell ^{\textrm{int}})\)). For the unbalanced case (class (c)) here we require W-helping sets along the long side of the droplet like in Sect. 2.5.1. Another way of viewing this is simply as extending the procedure used for the East internal dynamics all the way up to the mesoscopic scale \(\ell ^{\textrm{mes}}\) (see Sect. 9.1).

It should be noted that a version of this mechanism, which coincides with the above for models with rectangular droplets, but differs in general, was introduced in [22]. Though their snail mesoscopic dynamics can be replaced by our East one, for the sake of concision in Sect. 8.2 we directly import the results of [22] based on the snail mechanism.

2.5.3 Stair mesoscopic dynamics

For unbalanced families with an infinite number of stable directions (class (a)) the following stair mesoscopic dynamics was introduced in [31]. Recall from Sect. 2.4.1 that for unbalanced models the internal droplet is simply a fully infected frame or group of consecutive columns. While moving the droplet left via an East motion, we pick up W-helping sets above or below the droplet. These sets allow us to make all droplets to their left shifted up or down by one row. Hence, we manage to create a copy of the droplet far to its left but also slightly shifted up or down (see [31, Fig. 6]). Repeating this (with many steps in our staircase) in a two-dimensional East-like motion, we can now relax on a mesoscopic droplet with horizontal dimension much larger than \(\ell ^{\textrm{mes}}\) but still polynomial in 1/q and vertical dimension \(\ell ^{\textrm{mes}}\) in time \({\rho }_{\textrm{D}}^{-O(\log (1/q))}\). Here, one should again intuitively imagine we are using the bound \(q^{-O(\log L)}\) but this time for the relaxation time of the 2-dimensional East model.

2.6 Global dynamics

The global dynamics (see Sects. 5.2, 6.3, 7.3, 8.2 and 9.2) receives as input a SG event for a droplet on scale \(\ell ^{\textrm{mes}}\) with probability roughly \({\rho }_{\textrm{D}}\) and a bound on its relaxation time, as provided by the mesoscopic dynamics. Its goal is to move such a droplet efficiently to the origin from its typical initial position at distance roughly \({\rho }_{\textrm{D}}^{-1/2}\).

2.6.1 CBSEP global dynamics

If \(\mathcal U\) has a finite number of stable directions (classes (c)–(g)) the mesoscopic droplet can perform a CBSEP motion in a typical environment. Indeed, the droplet is large enough for CBSEP-extensions with W-helping sets to be possible in all directions. Therefore, the cost of this mechanism is given by the relaxation time of CBSEP on a box of size \(\ell ^{\textrm{gl}}=\exp (1/q^{3\alpha })\) with density of \(\uparrow \) given by \({\rho }_{\textrm{D}}\). Performing this strategy carefully and using the 2-dimensional CBSEP, this yields a relaxation time \(\min ((\ell ^{\textrm{gl}})^2,1/{\rho }_{\textrm{D}})=1/{\rho }_{\textrm{D}}\) (recall Sect. 2.3.1 and see Sect. 5.2).

2.6.2 East global dynamics

If \(\mathcal U\) has an infinite number of stable directions (classes (a) and (b)), the strategy is identical to the CBSEP global dynamics, but employs an East dynamics. Now the cost becomes the relaxation time of an East model with density of infections \({\rho }_{\textrm{D}}\), which yields a relaxation time of \({\rho }_{\textrm{D}}^{-O(\log \min (\ell ^{\textrm{gl}},1/{\rho }_{\textrm{D}}))}={\rho }_{\textrm{D}}^{-O(\log (1/{\rho }_{\textrm{D}}))}\) (recall Sect. 2.3.2 and see Sect. 9.2).
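The two global costs are easiest to compare on a logarithmic scale, since the quantities themselves overflow any floating-point range. The following sketch does so under the assumption that every implicit O(1) constant equals 1, which is our simplification and not a statement of the paper; the function name `log_global_costs` is hypothetical.

```python
import math

def log_global_costs(q, alpha, balanced=True):
    """Return (log CBSEP cost, log East cost, log(1/rho_D)), all O(1) constants set to 1."""
    L = math.log(1 / q)
    # log(1/rho_D): 1/q^alpha for balanced models, log(1/q)^2/q^alpha for unbalanced ones
    log_inv_rho = (1.0 if balanced else L ** 2) / q ** alpha
    log_ell_gl = q ** (-3 * alpha)                      # log of ell_gl = exp(1/q^(3 alpha))
    cbsep = min(2 * log_ell_gl, log_inv_rho)            # log min((ell_gl)^2, 1/rho_D)
    east = log_inv_rho * min(log_ell_gl, log_inv_rho)   # log rho_D^(-log min(ell_gl, 1/rho_D))
    return cbsep, east, log_inv_rho
```

For small q the minimum is always attained by \(1/{\rho }_{\textrm{D}}\), because \(\log \ell ^{\textrm{gl}}=q^{-3\alpha }\) is much larger than \(\log (1/{\rho }_{\textrm{D}})\). The CBSEP cost is then linear in \(\log (1/{\rho }_{\textrm{D}})\), while the East cost is quadratic.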

2.7 Assembling the components

To conclude, let us return to the summary provided in Table 2. In Table 2a we collect the mechanisms for each scale and their cost to the relaxation time. The results are expressed in terms of the probability of a droplet \({\rho }_{\textrm{D}}\), which equals \(\exp (-O(\log (1/q))^2/q^{\alpha })\) for unbalanced models and \(\exp (-O(1)/q^{\alpha })\) for balanced ones. The final bound on \({\mathbb E} _{{\mu }}[{\tau }_0]\) for each class then corresponds to the product of the costs of the mechanism employed at each scale. To complement this, in Table 2b we indicate the fastest mechanism available for each class on each scale. We further indicate which one gives the dominant contribution to the final result appearing in Theorem 1, once the bill is footed.

Finally, let us alert the reader that, for the sake of concision, the proof below does not systematically implement the optimal strategy for each class as indicated in Table 2b if that does not deteriorate the final result. Similarly, when that is unimportant, we may give weaker bounds than the ones in Table 2a. In Sect. 8.2 we tacitly import a weaker precursor of the CBSEP global mechanism from [22] not listed above.

3 Preliminaries

3.1 Harris inequality

Let us recall a well-known correlation inequality due to Harris [17]. It is used throughout and we state some particular formulations that are useful to us.

For Sect. 3.1 we fix a finite \({\Lambda }\subset {\mathbb Z} ^2\). We say that an event \(\mathcal A\subset {\Omega }_{\Lambda }\) is decreasing if adding infections does not destroy its occurrence.

Proposition 3.1

(Harris inequality). Let \(\mathcal A,\mathcal B\subset {\Omega }_{\Lambda }\) be decreasing. Then

\[{\mu }(\mathcal A\cap \mathcal B)\geqslant {\mu }(\mathcal A){\mu }(\mathcal B). \qquad (7)\]

Corollary 3.2

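Let \(\mathcal A,\mathcal B,\mathcal C,\mathcal D\subset {\Omega }_{\Lambda }\) be nonempty decreasing events such that \(\mathcal B\) and \(\mathcal D\) are independent. Then

\[{\mu }(\mathcal A|\mathcal B\cap \mathcal D)\geqslant {\mu }(\mathcal A|\mathcal B)\geqslant {\mu }(\mathcal A), \qquad (8)\]

\[{\mu }(\mathcal A\cap \mathcal C|\mathcal B\cap \mathcal D)\geqslant {\mu }(\mathcal A|\mathcal B){\mu }(\mathcal C|\mathcal D). \qquad (9)\]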

Proof

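The first inequality in Eq. (8) is Eq. (9) for \(\mathcal C=\Omega _\Lambda \), the second follows from Eq. (7) and \({\mu }(\mathcal A|\mathcal B)={\mu }(\mathcal A\cap \mathcal B)/{\mu }(\mathcal B)\), while Eq. (9) is

\[{\mu }(\mathcal A\cap \mathcal C|\mathcal B\cap \mathcal D)=\frac{{\mu }((\mathcal A\cap \mathcal B)\cap (\mathcal C\cap \mathcal D))}{{\mu }(\mathcal B\cap \mathcal D)}\geqslant \frac{{\mu }(\mathcal A\cap \mathcal B){\mu }(\mathcal C\cap \mathcal D)}{{\mu }(\mathcal B){\mu }(\mathcal D)},\]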

using Eq. (7) in the numerator and independence in the denominator. \(\square \)

We collectively refer to Eqs. (7)–(9) as Harris inequality.
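As a sanity check, the basic form of the inequality, \({\mu }(\mathcal A\cap \mathcal B)\geqslant {\mu }(\mathcal A){\mu }(\mathcal B)\) for decreasing events, can be verified by brute force on a tiny domain. The sketch below is only an illustration: configurations are encoded as sets of infected sites, so that an event which is decreasing in the above sense is one that is preserved when sites are added to the set, and the measure is the product measure with density q of infections. All function names are hypothetical.

```python
from itertools import combinations

SITES = range(4)  # a toy domain with four sites

def all_configs():
    """All configurations, each encoded as the frozenset of its infected sites."""
    return [frozenset(c) for r in range(len(SITES) + 1)
            for c in combinations(SITES, r)]

def mu(event, q):
    """Product measure of an event: each site is infected independently with probability q."""
    return sum(q ** len(w) * (1 - q) ** (len(SITES) - len(w)) for w in event)

def event(required_sets):
    """Configurations containing at least one of the given sets of infections.

    Such events are preserved when infections are added.
    """
    return {w for w in all_configs() if any(s <= w for s in required_sets)}

def harris_gap(A, B, q):
    """mu(A and B) - mu(A) mu(B), which should be nonnegative."""
    return mu(A & B, q) - mu(A, q) * mu(B, q)
```

For example, `harris_gap(event([{0, 1}]), event([{1, 2}, {3}]), 0.5)` compares the two sides for one pair of events at \(q=1/2\).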

3.2 Directions

Throughout this work we fix a critical update family \(\mathcal U\) with difficulty \({\alpha }\). We call a direction \(u\in S^1\) rational if \(u{\mathbb R} \cap {\mathbb Z} ^2\ne \{0\}\). It follows from Definition 1.1 that isolated and semi-isolated stable directions are rational [8, Theorem 1.10]. Therefore, by Definition 1.3 there exists an open semicircle with rational midpoint \(u_0\) such that all directions in the semicircle have difficulty at most \( {\alpha }\). We may assume without loss of generality that the direction \(u_0+\pi /2\) is hard unless \(\mathcal U\) is isotropic. It is not difficult to show (see e.g. [8, Lemma 5.3]) that one can find a nonempty set \(\mathcal S'\) of rational directions such that:

-

all isolated and semi-isolated stable directions are in \(\mathcal S'\);

-

\(u_0\in \mathcal S'\);

-

for every two consecutive directions u, v in \(\mathcal S'\) either there exists a rule \(X\in \mathcal U\) such that \(X\subset {\overline{{\mathbb H} }}_{u}\cap {\overline{{\mathbb H} }}_v\) or all directions between u and v are stable.

We further consider the set \({\widehat{\mathcal S}}=\mathcal S'+\{0,\pi /2,\pi ,3\pi /2\}\) obtained by making \(\mathcal S'\) invariant under rotation by \(\pi /2\). It is not hard to verify that the three conditions above remain valid when we add directions, so they are still valid for \({\widehat{\mathcal S}}\) instead of \(\mathcal S'\). We refer to the elements of \({\widehat{\mathcal S}}\) as quasi-stable directions or simply directions, as they are the only ones of interest to us. We label the elements of \({\widehat{\mathcal S}}=(u_i)_{i\in [4k]}\) clockwise and consider their indices modulo 4k (we write [n] for \(\{0,\dots ,n-1\}\)), so that \(u_{i+2k}=-u_{i}\) (the inverse being taken in \({\mathbb R} ^2\) and not w.r.t. the angle) is perpendicular to \(u_{i+k}\). In figures we take \({\widehat{\mathcal S}}=\frac{\pi }{4}({\mathbb Z} /8{\mathbb Z} )\) and \(u_0=(-1,0)\). Further observe that if all \(U\in \mathcal U\) are contained in the axes of \({\mathbb Z} ^2\), then we may set \({\widehat{\mathcal S}}=\frac{\pi }{2}({\mathbb Z} /4{\mathbb Z} )\).

For \(i\in [4k]\) we introduce \({\rho }_i=\min \{{\rho }>0:\exists x\in {\mathbb Z} ^2,\langle x,u_i\rangle ={\rho }\}\) and \({\lambda }_i=\min \{{\lambda }>0:{\lambda }u_i\in {\mathbb Z} ^2\}\), which are both well-defined, as the directions are rational (in fact \({\rho }_i{\lambda }_i=1\), but we use both notations for transparency).
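For a rational direction written as \(u=(a,b)/\Vert (a,b)\Vert \) with (a, b) a primitive integer vector, both quantities are explicit: \({\lambda }=\Vert (a,b)\Vert \) and \({\rho }=1/\Vert (a,b)\Vert \). The sketch below computes them and compares \({\rho }\) with a brute-force minimum over a finite box of lattice points. The function names and the box size are hypothetical choices made for the illustration.

```python
import math

def lam_rho(a, b):
    """Return (lambda, rho) for the direction of the nonzero integer vector (a, b)."""
    g = math.gcd(a, b)
    a, b = a // g, b // g          # reduce to a primitive vector
    norm = math.hypot(a, b)
    return norm, 1 / norm          # lambda * u = (a, b); smallest positive <x, u> is 1/norm

def rho_brute(a, b, box=20):
    """Smallest positive value of <x, u> over lattice points x in a finite box."""
    norm = math.hypot(a, b)
    values = [(x * a + y * b) / norm
              for x in range(-box, box + 1) for y in range(-box, box + 1)]
    return min(v for v in values if v > 1e-12)
```

For the direction of (1, 2) this gives \({\lambda }=\sqrt{5}\) and \({\rho }=1/\sqrt{5}\), in agreement with \({\rho }{\lambda }=1\).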

3.3 Droplets

We next define the geometry of the droplets we use. Recall half planes from Eq. (3).

Definition 3.3

(Droplet). A droplet is a nonempty closed convex polygon of the form

\[{\Lambda }(\underline{r})=\bigcap _{i\in [4k]}{\overline{{\mathbb H} }}_{u_i}(r_i)\]

for some radii \(\underline{r}\in {\mathbb R} ^{[4k]}\) (see the black regions in Fig. 2). For a sequence of radii \(\underline{r}\) we define the side lengths \(\underline{s}=(s_i)_{i\in [4k]}\) with \(s_i\) the length of the side of \({\Lambda }(\underline{r})\) with outer normal \(u_i\).

We say that a droplet is symmetric if it is of the form \(x+{\Lambda }(\underline{r})\) with \(2x\in {\mathbb Z} ^2\) and \(r_i=r_{i+2k}\) for all \(i\in [2k]\). If this is the case, we call x the center of the droplet.

Note that if all \(U\in \mathcal U\) are contained in the axes of \({\mathbb Z} ^2\), then droplets are simply rectangles with sides parallel to the axes.
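In the axis-parallel case the definition is easy to experiment with. The sketch below assumes that the closed half-plane \({\overline{{\mathbb H} }}_{u}(r)\) is \(\{x:\langle x,u\rangle \leqslant r\}\) and that a droplet is the intersection of these half-planes over the four axis directions, labelled clockwise starting from \(u_0=(-1,0)\). These conventions, like the function names, are assumptions made for the illustration.

```python
# Axis directions labelled clockwise from u_0 = (-1, 0) (case k = 1).
DIRECTIONS = [(-1, 0), (0, 1), (1, 0), (0, -1)]

def in_droplet(point, radii, directions=DIRECTIONS):
    """True if <point, u_i> <= r_i for every direction u_i."""
    return all(point[0] * u[0] + point[1] * u[1] <= r
               for u, r in zip(directions, radii))

def lattice_points(radii, box=10):
    """Lattice points of the droplet inside a finite search box."""
    return [(x, y) for x in range(-box, box + 1) for y in range(-box, box + 1)
            if in_droplet((x, y), radii)]
```

With radii (2, 1, 3, 1) the droplet is the rectangle \([-2,3]\times [-1,1]\), which contains 18 lattice points. A droplet centred at the origin is symmetric precisely when opposite radii coincide, as for radii (1, 1, 1, 1).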

We write \((\underline{e}_i)_{i\in [4k]}\) for the canonical basis of \({\mathbb R} ^{[4k]}\) and we write \(\underline{1}=\sum _{i\in [4k]}\underline{e}_i\), so that \({\Lambda }(r\underline{1})\) is a polygon with inscribed circle of radius r and sides perpendicular to \({\widehat{\mathcal S}}\). It is often more convenient to parametrise dimensions of droplets differently. For \(i\in [4k]\) we set

This way \({\Lambda }(\underline{r}+\underline{v}_i)\) is obtained from \({\Lambda }(\underline{r})\) by extending the two sides parallel to \(u_i\) by 1 in direction \(u_i\) and leaving all other side lengths unchanged (see Fig. 2a). Note that if \({\Lambda }(\underline{r})\) is symmetric, then so is \({\Lambda }(\underline{r}+{\lambda }_i\underline{v}_i)\) for \(i\in [4k]\).

Definition 3.4

(Tube). Given \(i\in [4k]\), \(\underline{r}\) and \(l>0\), we define the tube of length l, direction i and radii \(\underline{r}\) (see the thickened regions in Fig. 2)

We often need to consider boundary conditions for our events on droplets and tubes. Given two disjoint finite regions \(A,B\subset {\mathbb Z} ^2\) and two configurations \({\eta }\in {\Omega }_A\) and \({\omega }\in {\Omega }_{B}\), we define \({\eta }\cdot {\omega }\in {\Omega }_{A\cup B}\) as

3.4 Scales

Throughout the work we consider the positive integer constants

Each one is assumed to be large enough depending on \(\mathcal U\) and, therefore, \({\widehat{\mathcal S}}\) and \({\alpha }\) (e.g. \(W>{\alpha }\)), and much larger than any explicit function of the next (e.g. \(e^W<C\)). These constants are not allowed to depend on q. Whenever asymptotic notation is used, its implicit constants are not allowed to depend on the above ones, but only on \(\mathcal U\). Also recall Footnote \(^{1}\).

The following are our main scales corresponding to the mesoscopic and internal dynamics:

3.5 Helping sets

We next introduce various constant-sized sets of infections sufficient to induce growth. As the definitions are quite technical in general, in Fig. 1 we introduce a deliberately complicated example, on which to illustrate them.

3.5.1 Helping sets for a line

Recall \((u_i)_{i\in [4k]}\) and \((\lambda _i)_{i\in [4k]}\) from Sect. 3.2 and that for \(i\in [4k]\), the direction \(u_{i+k}\) is obtained by rotating \(u_i\) clockwise by \(\pi /2\).

Definition 3.5

(W-helping set in direction \(u_i\)). Let \(i\in [4k]\). A W-helping set in direction \(u_i\) is any set of W consecutive infected sites in \({\overline{{\mathbb H} }}_{u_i}\setminus {\mathbb H} _{u_i}\), that is, a set of the form \(x+[W]{\lambda }_{i+k}u_{i+k}\) for some \(x\in {\overline{{\mathbb H} }}_{u_i}\setminus {\mathbb H} _{u_i}\).

The relevance of W-helping sets in direction \(u_i\) is that, since W is large enough, \([Z\cup {\mathbb H} _{u_i}]_\mathcal U={\overline{{\mathbb H} }}_{u_i}\) for any direction \(u_i\) such that \(\alpha (u_i)<\infty \) and Z a W-helping set in direction \(u_i\) (see [8, Lemma 5.2]).
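For concreteness, the sites of a W-helping set can be listed explicitly. The sketch below assumes that \(u_i\) is the direction of a primitive integer vector (a, b), that the line \({\overline{{\mathbb H} }}_{u_i}\setminus {\mathbb H} _{u_i}\) consists of the lattice points x with \(\langle x,u_i\rangle =0\), and that the clockwise rotation of (a, b) by \(\pi /2\) is (b, -a), so that \({\lambda }_{i+k}u_{i+k}=(b,-a)\). The function name `w_helping_set` is hypothetical.

```python
def w_helping_set(x, normal, W):
    """Sites x + j * (b, -a) for j in [W], where normal = (a, b) is primitive."""
    a, b = normal
    assert x[0] * a + x[1] * b == 0, "x must lie on the boundary line of the half-plane"
    step = (b, -a)                 # clockwise rotation of (a, b) by pi/2
    return [(x[0] + j * step[0], x[1] + j * step[1]) for j in range(W)]
```

For \(u_0=(-1,0)\), the call `w_helping_set((0, 0), (-1, 0), 3)` returns three consecutive sites of the vertical line through the origin.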

We next define some smaller sets which are sufficient to induce such growth but have the annoying feature that they are not necessarily contained in \({\overline{{\mathbb H} }}_{u_i}\) and do not necessarily induce growth in a simple sequential way like W-helping sets in direction \(u_i\). Let us note that except in “Appendix A.2” the reader will not lose anything conceptual by thinking that the sets \(Z_i\), \(u_i\)-helping sets and \({\alpha }\)-helping sets in direction \(u_i\) defined below are simply single infected sites in \({\overline{{\mathbb H} }}_{u_i}\setminus {\mathbb H} _{u_i}\) and the period Q is 1.

In words, the set \(Z_i\) provided by the following lemma together with \({\mathbb H} _{u_i}\) can infect a semi-sublattice of the first line outside \({\mathbb H} _{u_i}\) and only a finite number of other sites.

Lemma 3.6

Let \(i\in [4k]\) be such that \(0<{\alpha }(u_i)\leqslant {\alpha }\). Then there exists a set \(Z_i\subset {\mathbb Z} ^2\setminus {\mathbb H} _{u_i}\) and \(x_i\in {\mathbb Z} ^2\setminus \{0\}\) such that

where \({\mathbb N} =\{0,1,\dots \}\).

Proof

Definition 1.3 supplies a set \(Z\subset {\mathbb Z} ^2{\setminus }{\mathbb H} _{u_i}\) such that \({\overline{Z}}=[{\mathbb H} _{u_i}\cup Z]_\mathcal U{\setminus }{\mathbb H} _{u_i}\) is infinite and \(|Z|=\alpha (u_i)\). Among all possible such Z, choose Z to minimise \(l=\max \{\langle z,u_i\rangle :z\in Z\}\). Yet, \(u_i\) is stable, since \(\alpha (u_i)\ne 0\) (recall Definition 1.3). Therefore, \({\overline{Z}}\subset {\overline{{\mathbb H} }}_{u_i}(l)\setminus {\mathbb H} _{u_i}\), because \(Z\cup {\mathbb H} _{u_i}\subset {\overline{{\mathbb H} }}_{u_i}(l)\) (recall Definition 1.1 and observe that it implies that \([{\overline{{\mathbb H} }}_{u_i}(l)]_\mathcal U={\overline{{\mathbb H} }}_{u_i}(l)\)).

Then [7, Lemma 3.3] asserts that \(\overline{Z}\cap {\overline{{\mathbb H} }}_{u_i}\) is either finite or contains \(x_i{\mathbb N} \) for some \(x_i\in {\overline{{\mathbb H} }}_{u_i}\setminus ({\mathbb H} _{u_i}\cup \{0\})\). Assume that \(|{\overline{Z}}\setminus {\overline{{\mathbb H} }}_{u_i}|<\infty \), so that \(|\overline{Z}\cap {\overline{{\mathbb H} }}_{u_i}|=\infty \), since \(|{\overline{Z}}|=\infty \). Then we conclude by setting \(Z_i\) equal to the union of Z with \(\alpha -\alpha (u_i)\) arbitrarily chosen elements of \(\overline{Z}{\setminus } Z\), so that \(\overline{Z_i}={\overline{Z}}\).

Assume for a contradiction that, on the contrary, \(|\overline{Z}{\setminus }{\overline{{\mathbb H} }}_{u_i}|=\infty \). Set \(Z'=(Z-\rho _iu_i){\setminus }{\mathbb H} _{u_i}\) (i.e. shift Z one line closer to \({\mathbb H} _{u_i}\)) and observe that \(\overline{Z'}\supset ({\overline{Z}}\setminus {\overline{{\mathbb H} }}_{u_i}-\rho _iu_i)\) is still infinite. Therefore, by Definition 1.3, \(\alpha (u_i)\leqslant |Z'|\leqslant |Z|=\alpha (u_i)\). This contradicts our choice of Z minimising l. \(\square \)

In the example of Fig. 1 the \(u_3\) direction admits a set \(Z_3\) of cardinality 3 such that \([Z_3\cup {\mathbb H} _{u_3}]_\mathcal U\) only contains every second site of the line \({\overline{{\mathbb H} }}_{u_3}\setminus {\mathbb H} _{u_3}\), while at least 4 sites are needed to infect the entire line. Thus, in order to efficiently infect \({\overline{{\mathbb H} }}_{u_3}\setminus {\mathbb H} _{u_3}\), assuming \({\mathbb H} _{u_3}\) is infected, we may use two translates of \(Z_3\) with different parity. This technicality is reflected in the next definition.

Definition 3.7

(\(u_i\)-helping set). For all \(i\in [4k]\) such that \(0<{\alpha }(u_i)\leqslant {\alpha }\) fix a choice of \(Z_i\) and \(x_i\) as in Lemma 3.6 in such a way that the period

is independent of i and sufficiently large so that the diameter of \(\{0\}\cup Z_i\) is much smaller than Q. A \(u_i\)-helping set is a set of the form

for some integers \(k_j\). For \(i\in [4k]\) with \({\alpha }(u_i)=0\), we define \(u_i\)-helping sets to be empty. For \(i\in [4k]\) with \(\alpha (u_i)>\alpha \) there are no \(u_i\)-helping sets.

Note that, by Lemma 3.6, a \(u_i\)-helping set Z suffices to infect a half-line. Since this half-line contains a W-helping set in direction \(u_i\), we in fact have \([Z\cup {\mathbb H} _{u_i}]_\mathcal U\supset {\overline{{\mathbb H} }}_{u_i}\).

We further incorporate the artificial symmetrisation alluded to in Remark 2.1 in the next definition.

Definition 3.8

(\({\alpha }\)-helping set in direction \(u_i\)). Let \(i\in [4k]\).

- If \({\alpha }(u_i)\leqslant {\alpha }\) and \({\alpha }(u_{i+2k})\leqslant {\alpha }\), then an \({\alpha }\)-helping set in direction \(u_i\) is a set of the form \(H\cup H'\) with H a \(u_i\)-helping set and \(-H'=\{-h:h\in H'\}\) a \(u_{i+2k}\)-helping set.

- If \({\alpha }(u_i)\leqslant {\alpha }\) and \({\alpha }(u_{i+2k})>{\alpha }\), then an \({\alpha }\)-helping set in direction \(u_i\) is a \(u_i\)-helping set.

- If \({\alpha }<{\alpha }(u_i)\leqslant \infty \), there are no \({\alpha }\)-helping sets in direction \(u_i\).

If \({\alpha }(u_i)<\infty \), any set which is either a W-helping set in direction \(u_i\) or an \({\alpha }\)-helping set in direction \(u_i\) is called a helping set in direction \(u_i\). If \({\alpha }(u_i)=\infty \), there are no helping sets in direction \(u_i\).

In the example of Fig. 1, \(u_0\) and \(u_2\) are both of difficulty \({\alpha }=3\), so \({\alpha }\)-helping sets in direction \(u_0\) correspond to \((z_1+\{(0,0),(2,0),(3,0)\})\cup (z_2+\{(0,0),(-2,1),(0,2)\})\) for some \((z_1,z_2)\in (\{0\}\times {\mathbb Z} )^2\). The set \(z_2+\{(0,0),(-2,1),(0,2)\}\) is not a \(u_0\)-helping set, but we include it in \({\alpha }\)-helping sets in direction \(u_0\). We do so in order for \({\alpha }\)-helping sets in directions \(u_0\) and \(u_2\) to be symmetric. Namely, Z is an \(\alpha \)-helping set in direction \(u_0\) if and only if \(-Z\) is an \(\alpha \)-helping set in direction \(u_2\).
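The symmetry in this example can be checked mechanically. The Python sketch below builds the two families of sets from the shapes quoted above and verifies that negation maps one onto the other. It rests on one assumption: \({\alpha }\)-helping sets in direction \(u_2\) are obtained by applying Definition 3.8 with the roles of \(u_0\) and \(u_2\) exchanged, so that the \(u_2\)-helping shape is the point reflection of \(\{(0,0),(-2,1),(0,2)\}\). All function and constant names are hypothetical.

```python
# Shapes taken from the Fig. 1 example in the text.
U0_SHAPE = frozenset({(0, 0), (2, 0), (3, 0)})
REFLECTED_U2_SHAPE = frozenset({(0, 0), (-2, 1), (0, 2)})


def translate(sites, shift):
    """Translate a finite set of sites of Z^2 by the vector `shift`."""
    return frozenset((x + shift[0], y + shift[1]) for x, y in sites)


def negate(sites):
    """Point reflection Z -> -Z."""
    return frozenset((-x, -y) for x, y in sites)


def alpha_helping_u0(y1, y2):
    """Set (z1 + U0_SHAPE) | (z2 + REFLECTED_U2_SHAPE), z_j = (0, y_j)."""
    return translate(U0_SHAPE, (0, y1)) | translate(REFLECTED_U2_SHAPE, (0, y2))


def alpha_helping_u2(y1, y2):
    """Assumed form in direction u_2: a u_2-helping set together with the
    reflection of a u_0-helping set (Definition 3.8 with roles swapped)."""
    u2_part = translate(negate(REFLECTED_U2_SHAPE), (0, y1))
    reflected_u0_part = translate(negate(U0_SHAPE), (0, y2))
    return u2_part | reflected_u0_part
```

Under this assumption, `negate(alpha_helping_u0(y1, y2))` coincides with `alpha_helping_u2(-y2, -y1)` for all integers `y1`, `y2`, which is the symmetry stated in the text.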

3.5.2 Helping sets for a segment

For this section we fix a direction \(u_{i}\in {\widehat{\mathcal S}}\) with \({\alpha }(u_i)<\infty \) and a discrete segment S perpendicular to \(u_i\) of the form

for some integer \(a\geqslant W\). The direction \(u_i\) is kept implicit in the notation, so it may be useful to view S as having an orientation.

Definition 3.9

For \(d\geqslant 0\), we denote by \(\mathcal H^W_d(S)\) the event that there is an infected W-helping set in direction \(u_i\) in S at distance at least d from its endpoints:

We write \(\mathcal H^W(S)=\mathcal H^W_0(S)\).
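As an informal illustration of Definition 3.9, the following Python sketch tests whether a configuration restricted to S contains W consecutive infected sites away from the endpoints. It assumes that S is encoded as a list of states ordered along the segment, that state 0 means infected (as in Definition 3.10), and that "distance at least d from the endpoints" means discarding the first and last d sites; the precise convention of the paper may differ. The function name is hypothetical.

```python
def has_w_helping_set(states, W, d=0):
    """Return True if `states` (0 = infected, 1 = healthy, listed along the
    segment S) contains W consecutive infected sites among the sites with
    index in [d, len(states) - d)."""
    run = 0  # length of the current run of infected sites
    for idx in range(d, len(states) - d):
        run = run + 1 if states[idx] == 0 else 0
        if run >= W:
            return True
    return False
```

For instance, `has_w_helping_set([1, 0, 0, 0, 1], 3, 1)` holds, while the same configuration fails the test with `d=2`, since only one site remains available.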

For helping sets the definition is more technical, since they are not included in S. We therefore require that they are close to S and at some distance from its endpoints.

Definition 3.10

For \(d\geqslant 0\), we denote by \(\mathcal H_{d}(S)\subset \Omega \) the event such that \(\eta \in \mathcal H_d(S)\) if there exists Z a helping set in direction \(u_i\) such that for all \(z\in Z\), we have \(\eta _z=0\),

Given a domain \({\Lambda }\supset S\) and a boundary condition \({\omega }\in {\Omega }_{{\mathbb Z} ^2\setminus {\Lambda }}\) we define \(\mathcal H^{{\omega }}_d(S)=\{{\eta }\in {\Omega }_{{\Lambda }}:{\omega }\cdot {\eta }\in \mathcal H_d(S)\}\). We write \(\mathcal H^{\omega }(S)=\mathcal H^{\omega }_0(S)\) and \(\mathcal H(S)=\mathcal H_0(S)\).

Note that in view of Definition 3.8, if \(\alpha (u_i)<\infty \), then \(\mathcal H^{\omega }(S)\supset \mathcal H^W(S)\) for any \({\omega }\) with equality if \(\alpha (u_i)>\alpha \). The next observation bounds the probability of the above events.

Observation 3.11

(Helping set probability) For any \(\Lambda \supset S\) and \({\omega }\in \Omega _{{\mathbb Z} ^2\setminus \Lambda }\) we have: if \({\alpha }(u_i)<\infty \), then

if \({\alpha }(u_i)\leqslant {\alpha }\), then

Proof

Assume \(\alpha (u_i)<\infty \). As already observed, by Definitions 3.8–3.10, \(\mathcal H^{\omega }(S)\supset \mathcal H^W(S)\), as W-helping sets in direction \(u_i\) are helping sets in direction \(u_i\). The second inequality follows by dividing S into disjoint groups of W consecutive sites (each of which is a W-helping set in direction \(u_i\)). The final inequality follows since \(|S|\geqslant W\) and \((1-q^W)^{1/W}\leqslant e^{-q^W/(2W)}\leqslant e^{-q^{2W}}\).

The case \(\alpha (u_i)\leqslant \alpha \) is treated similarly. Indeed, in order for \(\mathcal H(S)\) to occur, we need to find each of the \(Q=O(1)\) pieces of a \(u_i\)-helping set in Eq. (12), each of which has cardinality \(\alpha \). We direct the reader to [7, Lemma 4.2] for more details. \(\square \)
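The elementary estimates in the first part of the proof can be sanity-checked numerically. The Python sketch below computes the probability that none of the \(\lfloor |S|/W\rfloor \) disjoint groups of W consecutive sites is fully infected and tests the chain \((1-q^W)^{1/W}\leqslant e^{-q^W/(2W)}\leqslant e^{-q^{2W}}\). The comparison with \(e^{-q^{2W}|S|}\) is an assumption about the form of the final bound, and the second inequality of the chain requires q small enough (it holds when \(q^W\leqslant 1/(2W)\)). All function names are hypothetical.

```python
import math


def prob_no_infected_group(q, W, size):
    """Probability that none of the floor(size / W) disjoint groups of W
    consecutive sites is fully infected, each site being infected
    independently with probability q."""
    return (1.0 - q ** W) ** (size // W)


def exponential_bound(q, W, size):
    """Assumed form of the final bound: exp(-q^(2W) * size)."""
    return math.exp(-(q ** (2 * W)) * size)


def chain_holds(q, W):
    """Check (1 - q^W)^(1/W) <= exp(-q^W / (2W)) <= exp(-q^(2W))."""
    first = (1.0 - q ** W) ** (1.0 / W)
    second = math.exp(-(q ** W) / (2 * W))
    third = math.exp(-(q ** (2 * W)))
    return first <= second <= third
```

With \(q=0.1\) and \(W=3\) the chain holds, whereas for \(q=0.9\) and \(W=3\) its second inequality fails, which illustrates that the estimate is meant for small q.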

3.6 Constrained Poincaré inequalities

We next define the (constrained) Poincaré constants of various regions. For \({\Lambda }\subset {\mathbb Z} ^2\), \(\eta ,\omega \in {\Omega }\) (or possibly \(\eta \) defined on a set including \(\Lambda \) and \(\omega \) on a set including \({\mathbb Z} ^2\setminus \Lambda \)) and \(x\in {\mathbb Z} ^2\), we denote by \(c_x^{{\Lambda },{\omega }}({\eta })=c_x({\eta }_{\Lambda }\cdot {\omega }_{{\mathbb Z} ^2\setminus {\Lambda }})\) (recall Eqs. (1) and (11)) the constraint at x in \(\Lambda \) with boundary condition \(\omega \). Given a finite \({\Lambda }\subset {\mathbb Z} ^2\) and a nonempty event \(\mathcal S\mathcal G^\textbf{1}({\Lambda })\subset {\Omega }_{\Lambda }\), let \({\gamma }({\Lambda })\) be the smallest constant \({\gamma }\in [1,\infty ]\) such that the inequality

holds for all \(f:{\Omega }\rightarrow {\mathbb R} \). Here we recall from Sect. 1.1 that \({\mu }\) denotes both the product Bernoulli probability distribution with parameter q and the expectation with respect to it. Moreover, for any function \(\phi :{\Omega }\rightarrow {\mathbb R} \), \({\mu }_{\Lambda }(\phi )={\mu }(\phi (\eta )|\eta _{{\mathbb Z} ^2\setminus {\Lambda }})\) is the average on the configuration \(\eta \) of law \({\mu }\) in \({\Lambda }\), conditionally on its state in \({\mathbb Z} ^2\setminus {\Lambda }\). Thus, \({\mu }_{\Lambda }(\phi )\) is a function on \({\Omega }_{{\mathbb Z} ^2\setminus {\Lambda }}\). Similarly, \({\text {Var}}_x(f)={\mu }(f^2(\eta )|\eta _{{\mathbb Z} ^2{\setminus } \{x\}})-{\mu }^2(f(\eta )|\eta _{{\mathbb Z} ^2{\setminus } \{x\}})\) and

Remark 3.12

It is important to note that in the r.h.s. of Eq. (15) we average w.r.t. \({\mu }_{\Lambda }\) and not \({\mu }_{\Lambda }(\cdot |\mathcal S\mathcal G^\textbf{1}({\Lambda }))\) (the latter would correspond to the usual definition of Poincaré constant, from which we deviate). In this respect Eq. (15) follows [22, Eq. (12)] and differs from [24, Eq. (4.5)]. Although this nuance is not important most of the time, this choice is crucial for the proof of Theorem 8.5 below.

3.7 Boundary conditions, translation invariance, monotonicity

Let us make a few conventions in order to lighten notation throughout the paper. As we already witnessed in Sect. 3.5, it is often the case that much of the boundary condition is actually irrelevant for the occurrence of the event. For instance, in Definition 3.10, \(\mathcal H^{\omega }(S)\) only depends on the restriction of \({\omega }\) to a finite-range neighbourhood of the segment S. Moreover, even the state in \({\omega }\) of sites close to S, but in \({\mathbb H} _{u_i}\) is of no importance. Such occasions arise frequently, so, by abuse, we allow ourselves to specify a boundary condition on any region containing the sites whose state actually matters for the occurrence of the event.

We also need the following natural notion of translation invariance.

Definition 3.13

(Translation invariance). Let \(A\subset {\mathbb R} ^2\). Consider a collection of events \(\mathcal E^{\omega }(A+x)\) for \(x\in {\mathbb Z} ^2\) and \({\omega }\in {\Omega }_{{\mathbb Z} ^2{\setminus }(A+x)}\). We say that \(\mathcal E(A)\) is translation invariant, if for all \(\eta \in {\Omega }_A\), \({\omega }\in {\Omega }_{{\mathbb Z} ^2{\setminus } A}\) and \(x\in {\mathbb Z} ^2\) we have

Similarly, we say that \(\mathcal E^{\omega }(A)\) is translation invariant, if the above holds for a fixed \({\omega }\in {\Omega }_{{\mathbb Z} ^2\setminus A}\).

We extend the events \(\mathcal H_d(S)\), \(\mathcal H_d^{\omega }(S)\), \(\mathcal H_d^W(S)\) from Definitions 3.9 and 3.10 in a translation invariant way. Similarly, \(\mathcal T\) and \(\mathcal S\mathcal T\) events for tubes defined in Sect. 4.1 below and \(\mathcal S\mathcal G\) events for droplets defined throughout the paper are translation invariant. Therefore, we sometimes only define them for a fixed region, as we did in Sect. 3.5.2, but systematically extend them in a translation invariant way to all translates of this region.

We also use the occasion to point out that, just like the event \(\mathcal H_d^{\omega }(S)\), all our \(\mathcal T\), \(\mathcal S\mathcal T\) and \(\mathcal S\mathcal G\) events are decreasing in both the configuration and the boundary condition, so that we are able to apply Sect. 3.1 as needed.

4 One-Directional Extensions

In this section we define our crucial one-directional CBSEP-extension and East-extension techniques (recall Sect. 2.3).

4.1 Traversability

We first need the following traversability \(\mathcal T\) and symmetric traversability \(\mathcal S\mathcal T\) events for tubes (recall Definition 3.4) requiring infected helping sets (recall Sect. 3.5.2) to appear for each of the segments composing the tube. The definition is illustrated in Fig. 2. Recall the constant C from Sect. 3.4.

Definition 4.1

(Traversability). Fix a tube \(T=T(\underline{r},l,i)\). Assume that \(i\in [4k]\) is such that \({\alpha }(u_j)<\infty \) for all \(j\in (i-k,i+k)\). For \(m\geqslant 0\) and \(j\in (i-k,i+k)\) write \(S_{j,m}={\mathbb Z} ^2\cap {\Lambda }(\underline{r}+m\underline{v}_i+{\rho }_j\underline{e}_j)\setminus {\Lambda }(\underline{r}+m\underline{v}_i)\). Note that \(S_{j,m}\) is a discrete line segment perpendicular to \(u_j\) of length \(s_j-O(1)\) (recall from Definition 3.3 that \(\underline{s}\) is the sequence of side lengths of \(\Lambda (\underline{r})\)). For \({\omega }\in {\Omega }_{{\mathbb Z} ^2\setminus {\Lambda }(\underline{r}+l\underline{v}_i)}\) we denote by

the event that T is \(({\omega },d)\)-traversable. We set \(\mathcal T^{\omega }(T)=\mathcal T^{\omega }_0(T)\).

If moreover \(\alpha (u_i)<\infty \) for all \(i\in [4k]\), that is, \(\mathcal U\) has a finite number of stable directions, we denote by

the event that T is \(({\omega },d)\)-symmetrically traversable.

Thus, if all side lengths of \({\Lambda }(\underline{r})\) are larger than \(C^2+d\) by a large enough constant, the event \(\mathcal T^{\omega }_d(T(\underline{r},s,i))\) decomposes each of the hatched parallelograms in Fig. 2a into line segments parallel to its side that is not parallel to \(u_i\). A helping set is required for each of these segments in the direction perpendicular to them which has positive scalar product with \(u_i\). The last boundedly many segments may also use the boundary condition \({\omega }\), but it is irrelevant for the remaining ones, since it is far enough from them.

For symmetric traversability, we rather require W-helping sets for opposites of hard directions (recall from Definition 3.8 that if the direction itself is hard, helping sets are simply W-helping sets). In particular, if none of the directions \(u_j\) for \(j\in [4k]\setminus \{i+k,i-k\}\) is hard (implying that \(\mathcal U\) is unrooted), we have \(\mathcal S\mathcal T^{\omega }_d(T(\underline{r},l,i))=\mathcal T^{\omega }_d(T(\underline{r},l,i))\). The reason for the name “symmetric traversability” is that if \(\mathcal U\) has a finite number of stable directions and \({\Lambda }(\underline{r})\) is a symmetric droplet (recall Sect. 3.3), then, for any \(l>0\), \(i\in [4k]\), \({\omega }\in {\Omega }_{{\mathbb Z} ^2\setminus T(\underline{r},l,i)}\) and \({\eta }\in {\Omega }_{T(\underline{r},l,i)}\), we have

denoting by \({\omega }'\in {\Omega }_{{\mathbb Z} ^2\setminus T(\underline{r},l,i+2k)}\) the boundary condition obtained by rotating \({\omega }\) by \(\pi \) around the center of \({\Lambda }(\underline{r})\) and similarly for \(\eta '\). To see this, recall from Sect. 3.5.2 that \(\mathcal H^{\omega }(S)\supset \mathcal H^W(S)\) with equality when \(\alpha (u_i)>\alpha \) and note that the same symmetry as in Eq. (16) holds at the level of the segment \(S_{j,m}\) and its symmetric one, \(S'_{j+2k,m}={\mathbb Z} ^2\cap {\Lambda }(\underline{r}+m\underline{v}_{i+2k}+{\rho }_{j+2k}\underline{e}_{j+2k})\setminus {\Lambda }(\underline{r}+m\underline{v}_{i+2k})\):

all four cases following directly from Definitions 3.8–3.10.

We next state a simple observation which is used frequently to modify boundary conditions as we like at little cost.

Lemma 4.2