Abstract

We present a new construction of the Euclidean \(\Phi ^4\) quantum field theory on \({\mathbb {R}}^3\) based on PDE arguments. More precisely, we consider an approximation of the stochastic quantization equation on \({\mathbb {R}}^3\) defined on a periodic lattice of mesh size \(\varepsilon \) and side length M. We introduce a new renormalized energy method in weighted spaces and prove tightness of the corresponding Gibbs measures as \(\varepsilon \rightarrow 0\), \(M \rightarrow \infty \). Every limit point is non-Gaussian and satisfies reflection positivity, translation invariance and stretched exponential integrability. These properties allow us to verify the Osterwalder–Schrader axioms for a Euclidean QFT apart from rotation invariance and clustering. Our argument applies to arbitrary positive coupling constant, to multicomponent models with O(N) symmetry and to some long-range variants. Moreover, we establish an integration by parts formula leading to the hierarchy of Dyson–Schwinger equations for the Euclidean correlation functions. To this end, we identify the renormalized cubic term as a distribution on the space of Euclidean fields.

1 Introduction

Let \(\Lambda _{M, \varepsilon } = ( (\varepsilon {\mathbb {Z}})/( M{\mathbb {Z}}))^3\) be a periodic lattice with mesh size \(\varepsilon \) and side length M where \(M/(2\varepsilon )\in {\mathbb {N}} .\) Consider the family \((\nu _{M, \varepsilon })_{M,\varepsilon }\) of Gibbs measures for the scalar field \(\varphi :\Lambda _{M, \varepsilon }\rightarrow {\mathbb {R}}\), given by

where \(\nabla _{\varepsilon }\) denotes the discrete gradient and \(a_{M, \varepsilon }, b_{M, \varepsilon }\) are suitable renormalization constants, \(m^2 \in {\mathbb {R}}\) is called the mass and \(\lambda > 0\) the coupling constant. The numerical factor in the exponential is chosen in order to simplify the form of the stochastic quantization equation (1.3) below. The main result of this paper is the following.

Theorem 1.1

There exists a choice of the sequence \((a_{M, \varepsilon }, b_{M, \varepsilon })_{M, \varepsilon }\) such that for any \(\lambda > 0\) and \(m^2 \in {\mathbb {R}}\), the family of measures \((\nu _{M, \varepsilon })_{M, \varepsilon }\) appropriately extended to \({\mathcal {S}}' ({\mathbb {R}}^3)\) is tight. Every accumulation point \(\nu \) is translation invariant, reflection positive and non-Gaussian. In addition, for every small \(\kappa > 0\) there exist \(\sigma > 0\), \(\beta > 0\) and \(\upsilon = O (\kappa ) > 0\) such that

Every \(\nu \) satisfies an integration by parts formula which leads to the hierarchy of the Dyson–Schwinger equations for n-point correlation functions.

For the precise definition of translation invariance and reflection positivity (RP) we refer the reader to Section 5.

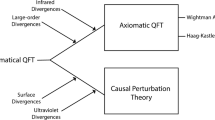

The proof of convergence of the family \((\nu _{M, \varepsilon })_{M, \varepsilon }\) has been one of the major achievements of the constructive quantum field theory (CQFT) program [VW73, Sim74, GJ87, Riv91, BSZ92, Jaf00, Jaf08, Sum12] which flourished in the 70s and 80s. In the two dimensional setting the existence of an analogous object has been one of the early successes of CQFT, while in four and more dimensions (after a proper normalization) any accumulation point is necessarily Gaussian [FFS92].

The existence of a Euclidean invariant and reflection positive limit \(\nu \) (plus some technical conditions) implies the existence of a relativistic quantum field theory in the Minkowski space-time \({\mathbb {R}}^{1 + 2}\) which satisfies the Wightman axioms [Wig76]. This is a minimal set of axioms capturing the essence of the combination of quantum mechanics and special relativity. The translation from the commutative probabilistic setting (Euclidean QFT) to the non-commutative Minkowski QFT setting is effected by a set of axioms introduced by Osterwalder–Schrader (OS) [OS73, OS75] for the correlation functions of the measure \(\nu \). These are called Schwinger functions or Euclidean correlation functions and shall satisfy: a regularity axiom, a Euclidean invariance axiom, a reflection positivity axiom, a symmetry axiom and a cluster property.

Euclidean invariance and reflection positivity conspire against each other. Models which easily satisfy one property hardly satisfy the other if they are not Gaussian, or simple transformations thereof, see e.g. [AY02, AY09]. Reflection positivity itself is a property whose crucial importance for probability theory and mathematical physics [Bis09, Jaf18] and representation theory [NO18, JT18] has been one of the byproducts of the constructive effort.

The original proof of the OS axioms, along with additional properties of the limiting measures which are called \(\Phi ^4_3\) measures, is scattered in a series of works covering almost a decade. Glimm [Gli68] first proved the existence of the Hamiltonian (with an infrared regularization) in the Minkowski setting. Then Glimm and Jaffe [GJ73] introduced the phase cell expansion of the regularized Schwinger functions, which proved to be a powerful and robust tool (albeit complex to digest) for handling the local singularities of Euclidean quantum fields and for proving the ultraviolet stability in finite volume (i.e. the limit \(\varepsilon \rightarrow 0\) with M fixed). The proof of existence of the infinite volume limit (\(M \rightarrow \infty \)) and the verification of the Osterwalder–Schrader axioms was then completed, for \(\lambda \) small and using cluster expansion methods, independently by Feldman and Osterwalder [FO76] and by Magnen and Sénéor [MS76]. Finally the work of Seiler and Simon [SS76] made it possible to extend the existence result to all \(\lambda > 0\) (this is claimed in [GJ87] even though we could not find a clear statement in Seiler and Simon’s paper). Equations of motion for the quantum fields were established by Feldman and Ra̧czka [FR77].

Since this first, complete, construction, there have been several other attempts to simplify (both technically and conceptually) the arguments and the \(\Phi ^4_3\) measure has since been considered a test bed for various CQFT techniques. There exist at least six methods of proof: the original phase cell method of Glimm and Jaffe extended by Feldman and Osterwalder [FO76], Magnen and Sénéor [MS76] and Park [Par77] (among others), the probabilistic approach of Benfatto, Cassandro, Gallavotti, Nicoló, Olivieri, Presutti and Schiacciatelli [BCG+78], the block average method of Bałaban [Bał83] revisited by Dimock in [Dim13a, Dim13b, Dim14], the wavelet method of Battle–Federbush [Bat99], the skeleton inequalities method of Brydges, Fröhlich, Sokal [BFS83], the work of Watanabe on rotation invariance [Wat89] via the renormalization group method of Gawędzki and Kupiainen [GK86], and more recently the renormalization group method of Brydges, Dimock and Hurd [BDH95].

It should be said that, apart from the Glimm–Jaffe–Feldman–Osterwalder–Magnen–Sénéor result, none of the additional constructions seems to be as complete and to verify explicitly all the OS axioms. As Jaffe [Jaf08] remarks:

“Not only should one give a transparent proof of the dimension \(d = 3\) construction, but as explained to me by Gelfand [private communication], one should make it sufficiently attractive that probabilists will take cognizance of the existence of a wonderful mathematical object.”

The proof of Theorem 1.1 uses tools from the PDE theory as well as recent advances in the field of singular SPDEs, without using any input from traditional CQFT. It applies to all values of the coupling parameter \(\lambda >0\) as well as to natural extensions to N-dimensional vectorial and long-range variants of the model.

Our methods are very different from all the known constructions we enumerated above. In particular, we do not rely on any of the standard tools like cluster expansion or correlation inequalities or skeleton inequalities, and therefore our approach brings a new perspective to this extensively investigated classical problem, with respect to the removal of both ultraviolet and infrared regularizations.

Showing invariance under translation, reflection positivity, the regularity axiom of Osterwalder and Schrader and the non-Gaussianity of the measure, we go a long way (albeit not fully reaching the goal) to a complete independent construction of the \(\Phi ^4_3\) quantum field theory. Furthermore, the integration by parts formula that we are able to establish leads to the hierarchy of the Dyson–Schwinger equations for the Schwinger functions of the measure.

The key idea is to use a dynamical description of the approximate measure which relies on an additional random source term which is Gaussian, in the spirit of the stochastic quantization approach introduced by Nelson [Nel66, Nel67] and Parisi and Wu [PW81] (with a precursor in a technical report of Symanzik [Sym64]).

The concept of stochastic quantization refers to the introduction of a reversible stochastic dynamics which has the target measure as the invariant measure, here in particular the \(\Phi ^4_d\) measure in d dimensions. The rigorous study of the stochastic quantization for the two dimensional version of the \(\Phi ^4\) theory was first initiated by Jona-Lasinio and Mitter [JLM85] in finite volume and by Borkar, Chari and Mitter [BCM88] in infinite volume. A natural \(d = 2\) local dynamics was subsequently constructed by Albeverio and Röckner [AR91] using Dirichlet forms in infinite dimensions. Later on, Da Prato and Debussche [DPD03] showed for the first time the existence of strong solutions to the stochastic dynamics in finite volume. Their work introduced an innovative mixture of probabilistic and PDE techniques and constitutes a landmark in the development of PDE techniques to study stochastic analysis problems. Similar methods have been used by McKean [McK95b, McK95a] and Bourgain [Bou96] in the context of random data deterministic PDEs. Mourrat and Weber [MW17b] subsequently showed the existence and uniqueness of the stochastic dynamics globally in space and time. For the \(d = 1\) dimensional variant, which is substantially simpler and does not require renormalization, global existence and uniqueness were established by Iwata [Iwa87].

In the three dimensional setting the progress has been significantly slower due to the more severe nature of the singularities of solutions to the stochastic quantization equation. Only very recently has there been substantial progress, due to the invention of regularity structures theory by Hairer [Hai14] and paracontrolled distributions by Gubinelli, Imkeller, Perkowski [GIP15]. These theories greatly extend the pathwise approach of Da Prato and Debussche via insights coming from Lyons’ rough path theory [Lyo98, LQ02, LCL07] and in particular the concept of controlled paths [Gub04, FH14]. With these new ideas it became possible to solve certain analytically ill-posed stochastic PDEs, including the stochastic quantization equation for the \(\Phi _3^4\) measure and the Kardar–Parisi–Zhang equation. The first results were limited to finite volume: local-in-time well-posedness was established by Hairer [Hai14] and Catellier, Chouk [CC18]. Kupiainen [Kup16] introduced a method based on the renormalization group ideas of [GK86]. Long-time behavior has been studied by Mourrat, Weber [MW17a], Hairer, Mattingly [HM18b] and a lattice approximation in finite volume has been given by Hairer and Matetski [HM18a] and by Zhu and Zhu [ZZ18]. Global in space and time solutions were first constructed by Gubinelli and Hofmanová in [GH18]. Local bounds on solutions, independent of boundary conditions, and stretched exponential integrability have been recently proven by Moinat and Weber [MW18].

However, all these advances still fell short of giving a complete proof of the existence of the \(\Phi ^4_3\) measure on the full space and of its properties. Indeed they, including essentially all of the two dimensional results, are principally aimed at studying the dynamics with an a priori knowledge of the existence and the properties of the invariant measure. For example Hairer and Matetski [HM18a] use a discretization of a finite periodic domain to prove that the limiting dynamics leaves the finite volume \(\Phi ^4_3\) measure invariant using the a priori knowledge of its convergence from the paper of Brydges et al. [BFS83]. Studying the dynamics, especially globally in space and time is still a very complex problem which has siblings in the ever growing literature on invariant measures for deterministic PDEs starting with the work of Lebowitz, Rose and Speer [LRS88, LRS89], Bourgain [Bou94, Bou96], Burq and Tzvetkov [BT08b, BT08a, Tzv16] and with many following works (see e.g. [CO12, CK12, NPS13, Cha14, BOP15]) which we cannot exhaustively review here.

The first work proposing a constructive use of the dynamics is, to our knowledge, the work of Albeverio and Kusuoka [AK17], who proved tightness of certain approximations in a finite volume. Inspired by this result, our aim here is to show how these recent ideas connecting probability with PDE theory can be streamlined and extended to recover a complete and independent proof of existence of the \(\Phi ^{4}_{3}\) measure on the full space. In the same spirit see also the work of Hairer and Iberti [HI18] on the tightness of the 2d Ising–Kac model.

Soon after Hairer’s seminal paper [Hai14], Jaffe [Jaf15] analyzed the stochastic quantization from the point of view of reflection positivity and constructive QFT and concluded that one necessarily has to take the infinite time limit to satisfy RP. Even with global solutions at hand, a proof of RP from the dynamics seems nontrivial, and actually the only robust tool we are aware of to prove RP is to start from finite volume lattice Gibbs measures for which RP can be established by elementary arguments.

Taking into account these considerations, our aim is to use an equilibrium dynamics to derive bounds which are strong enough to prove the tightness of the family \((\nu _{M, \varepsilon })_{M, \varepsilon }\). To be more precise, we study a lattice approximation of the (renormalized) stochastic quantization equation

where \(\xi \) is a space-time white noise on \({\mathbb {R}}^3\). The lattice dynamics is a system of stochastic differential equations which is globally well-posed and has \(\nu _{M, \varepsilon }\) as its unique invariant measure. We can therefore consider its stationary solution \(\varphi _{M, \varepsilon }\) having at each time the law \(\nu _{M, \varepsilon }\). We introduce a suitable decomposition together with an energy method in the framework of weighted Besov spaces. This allows us, on the one hand, to track down and renormalize the short scale singularities present in the model as \(\varepsilon \rightarrow 0\), and on the other hand, to control the growth of the solutions as \(M \rightarrow \infty \). As a result we obtain uniform bounds which allow us to pass to the limit in the weak topology of probability measures.

The details of the renormalized energy method rely on recent developments in the analysis of singular PDEs. In order to make the paper accessible to a wide audience with some PDE background we implement renormalization using the paracontrolled calculus of [GIP15] which is based on Bony’s paradifferential operators [Bon81, Mey81, BCD11]. We also rely on some tools from the paracontrolled analysis in weighted Besov spaces which we developed in [GH18] and on the results of Martin and Perkowski [MP17] on Besov spaces on the lattice.

Remark 1.2

Let us comment in detail on specific aspects of our proof.

1. The method we use here differs from the approach of [GH18] in that we are initially less concerned with the continuum dynamics itself. We do not try to obtain estimates for strong solutions and rely instead on certain cancellations in the energy estimate that permit us to simplify the proof significantly. The resulting bounds are sufficient to provide a rather clear picture of any limit measure as well as some of its physical properties. In contrast, in [GH18] we provided a detailed control of the dynamics (1.3) (in stationary or non-stationary situations) at the price of a more involved analysis. Section 4.2 of the present paper could in principle be replaced by the corresponding analysis of [GH18]. However the adaptation of that analysis to the lattice setting (without which we do not know how to prove RP) would anyway require the further preparatory work which constitutes a large fraction of the present paper. Similarly, the recent results of Moinat and Weber [MW18] (which appeared after we completed a first version of this paper) could conceivably be used to replace a part of Section 4.

2. The stretched exponential integrability in (1.2) is also discussed in the work of Moinat and Weber [MW18] (using different norms) and it is sufficient to prove the original regularity axiom of Osterwalder and Schrader but not its formulation given in the book of Glimm and Jaffe [GJ87].

3. The Dyson–Schwinger equations were first derived by Feldman and Ra̧czka [FR77] using the results of Glimm, Jaffe, Feldman and Osterwalder.

4. As already noted by Albeverio, Liang and Zegarlinski [ALZ06] on the formal level, the integration by parts formula gives rise to a cubic term which cannot be interpreted as a random variable under the \(\Phi ^4_3\) measure. Therefore, the crucial question that remained unsolved until now is how to make sense of this critical term as a well-defined probabilistic object. In the present paper, we obtain fine estimates on the approximate stochastic quantization equation and construct a coupling of the stationary solution to the continuum \(\Phi ^4_3\) dynamics and the Gaussian free field. This leads to a detailed description of the renormalized cubic term as a genuine random space-time distribution. Moreover, we approximate this term in the spirit of the operator product expansion.

5. To the best of our knowledge, our work provides the first rigorous proof of a general integration by parts formula with an exact formula for the renormalized cubic term. In addition, the method applies to arbitrary values of the coupling constant \(\lambda \geqslant 0\) if \(m^2 > 0\) and \(\lambda > 0\) if \(m^2 \leqslant 0\) and we state the precise dependence of our estimates on \(\lambda \). In particular, we show that our energy bounds are uniform over \(\lambda \) in every bounded subset of \([0, \infty )\) provided \(m^2 >0\) (see Remark 4.6). Let us recall that for some \(m^{2}=m_{c}^{2}(\lambda )\) the physical mass of the continuum theory is zero and it is said that the model is critical. Existence of such a critical point was shown in [BFS83, Section 9, Part (4)]. We note that this case is included in our construction, even though we are not able to locate it since we do not have control over correlations. Its large scale limit is conjectured to correspond to the Ising conformal field theory, recently actively studied in [PRV19a] using the conformal bootstrap approach.

6. By essentially the same arguments, we are able to treat the vector version of the model, where the scalar field \(\varphi : {\mathbb {R}}^3 \rightarrow {\mathbb {R}}\) is replaced by a vector valued one \(\varphi : {\mathbb {R}}^3 \rightarrow {\mathbb {R}}^N\) for some \(N \in {\mathbb {N}}\) and the measures \(\nu _{M, \varepsilon }\) are given by a similar expression as (1.1), where the norm \(| \varphi |\) is understood as the Euclidean norm in \({\mathbb {R}}^N\).

7. Our proof also readily extends to the fractional variant of \(\Phi ^4_3\) where the base Gaussian measure is obtained from the fractional Laplacian \((-\Delta )^\gamma \) with \(\gamma \in (21/22,1)\) (see Section 7 for details). In general this model is sub-critical for \(\gamma \in (3/4,1)\) and in the mass-less case it has recently attracted some interest since it is bootstrappable [PRV19b, Beh19].

To conclude this introductory part, let us compare our result with other constructions of the \(\Phi ^4_3\) field theory. The most straightforward and simplest available proof has been given by Brydges, Fröhlich and Sokal [BFS83] using skeleton and correlation inequalities. All the other methods we cited above employ technically involved machineries and various kinds of expansions (they are however able to obtain very strong information about the model in the weakly-coupled regime, i.e. when \(\lambda \) is small). Compared to the existing methods, ours is closest in conceptual simplicity to that of [BFS83], with some advantages and some disadvantages. Both works construct the continuum \(\Phi ^4_3\) theory as a subsequence limit of lattice theories and the rotational invariance remains unproven. The main difference is that [BFS83] relies on correlation inequalities. On the one hand, this restricts the applicability to weak couplings and only models with \(N = (0,) 1, 2\) components (note that the \(N=0\) models have a meaning only in their formalism but not in ours). But, on the other hand, it allows one to establish bounds on the decay of correlation functions, which we do not have. However, our results hold for every value of \(\lambda > 0\) and \(m^2 \in {\mathbb {R}}\) while the results in [BFS83] work only in the so-called “single phase region”, which corresponds to \(m^2>m_{c}^{2}(\lambda )\).

Our work is intended as a first step in the direction of using PDE methods in the study of Euclidean QFTs and large scale properties of statistical mechanical models. Another related attempt is the variational approach developed in [BG18] for the finite volume \(\Phi ^4_3\) measure. As far as the present paper is concerned the main open problem is to establish rotational invariance and to give more information on the limiting measures, in particular to establish uniqueness for small \(\lambda \). It is not clear how to deduce anything about correlations from the dynamics but it seems to be a very interesting and challenging problem.

Plan. The paper is organized as follows. Section 2 gives a summary of notation used throughout the paper, Section 3 presents the main ideas of our strategy and Section 4, Section 5 and Section 6 are devoted to the main results. First, in Section 4 we construct the Euclidean quantum field theory as a limit of the approximate Gibbs measures \(\nu _{M, \varepsilon }\). To this end, we introduce the lattice dynamics together with its decomposition. The main energy estimate is established in Theorem 4.5 and consequently the desired tightness as well as moment bounds are proven in Theorem 4.9. In Section 4.4 we establish finite stretched exponential moments. Consequently, in Section 5 we verify the translation invariance and reflection positivity, the regularity axiom and non-Gaussianity of any limit measure. Section 6 is devoted to the integration by parts formula and the Dyson–Schwinger equations. In Section 7 we discuss the extension of our results to a long-range version of the \(\Phi ^4_3\) model. Finally, in Appendix A we collect a number of technical results needed in the main body of the paper.

2 Notation

Within this paper we are concerned with the \(\Phi ^4_3\) model in the discrete as well as the continuous setting. In particular, we denote by \(\Lambda _{\varepsilon } = (\varepsilon {\mathbb {Z}})^d\) for \(\varepsilon = 2^{- N}\), \(N \in {\mathbb {N}}_0\), the rescaled lattice \({\mathbb {Z}}^d\) and by \(\Lambda _{M, \varepsilon } = \varepsilon {\mathbb {Z}}^d \cap {\mathbb {T}}^d_M = \varepsilon {\mathbb {Z}}^d \cap \left[ - \frac{M}{2}, \frac{M}{2} \right) ^d\) its periodic counterpart of size \(M > 0\) such that \(M/(2\varepsilon )\in {\mathbb {N}}\). For notational simplicity, we use the convention that the case \(\varepsilon = 0\) always refers to the continuous setting. For instance, we denote by \(\Lambda _0\) the full space \(\Lambda _0 ={\mathbb {R}}^d\) and by \(\Lambda _{M, 0}\) the continuous torus \(\Lambda _{M, 0} ={\mathbb {T}}^d_M\). With a slight abuse of notation, the parameter \(\varepsilon \) is always taken either of the form \(\varepsilon = 2^{- N}\) for some \(N \in {\mathbb {N}}_0\), \(N \geqslant N_0\), for certain \(N_0 \in {\mathbb {N}}_0\) that will be chosen as a consequence of Lemma A.9 below, or \(\varepsilon = 0\). Various proofs below will be formulated generally for \(\varepsilon \in {\mathcal {A}} :=\{ 0, 2^{- N} ; N \in {\mathbb {N}}_0, N \geqslant N_0 \}\) and it is understood that the case \(\varepsilon = 0\) or alternatively \(N = \infty \) refers to the continuous setting. All the proportionality constants, unless explicitly signalled, will be independent of \(M,\varepsilon ,\lambda ,m^2\). We will track the explicit dependence on \(\lambda \) as far as possible and signal when the constant depends on the value of \(m^2>0\).

For \(f \in \ell ^1 (\Lambda _{\varepsilon })\) and \(g \in L^1 (\hat{\Lambda }_{\varepsilon })\), respectively, we define the Fourier and the inverse Fourier transform as

where \(k \in (\varepsilon ^{- 1} {\mathbb {T}})^d =:\hat{\Lambda }_{\varepsilon }\) and \(x \in \Lambda _{\varepsilon }\). These definitions can be extended to discrete Schwartz distributions in a natural way, we refer to [MP17] for more details. In general, we do not specify on which lattice the Fourier transform is taken as it will be clear from the context.
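To make the conventions concrete, here is a minimal numerical sketch of a lattice Fourier pair on a one-dimensional periodic lattice of mesh size `eps` and side length `M`. The normalization (a factor \(\varepsilon \) in the forward transform, the kernel \(e^{-2\pi i k x}\), frequencies in \(M^{-1}{\mathbb {Z}}\)) is an assumption chosen to be compatible with the dual torus \((\varepsilon ^{-1}{\mathbb {T}})^d\) above. The function names are hypothetical and the periodic setting replaces the full lattice \(\Lambda _{\varepsilon }\).

```python
import numpy as np

def lattice_fourier(f, eps):
    """Forward transform eps * sum_x f(x) exp(-2 pi i k x) (assumed normalization)."""
    return eps * np.fft.fft(f)

def lattice_inverse_fourier(g, eps):
    """Inverse transform (1/M) * sum_k g(k) exp(2 pi i k x), with M = len(g) * eps."""
    return np.fft.ifft(g) / eps

def lattice_frequencies(n, eps):
    """Frequencies k in (1/M) Z representing the dual torus of side 1/eps."""
    return np.fft.fftfreq(n, d=eps)

# Example: round trip and Parseval identity on a lattice with eps = 2^-4, M = 4.
rng = np.random.default_rng(0)
eps, M = 2.0 ** -4, 4.0
f = rng.standard_normal(int(M / eps))
g = lattice_fourier(f, eps)
round_trip_error = np.max(np.abs(lattice_inverse_fourier(g, eps).real - f))
parseval_gap = abs(eps * np.sum(f ** 2) - np.sum(np.abs(g) ** 2) / M)
```

With this normalization the physical-space sum carries the weight \(\varepsilon \) and the frequency-space sum the weight \(1/M\), so both sides of the Parseval identity approximate continuum integrals.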

Consider a smooth dyadic partition of unity \((\varphi _j)_{j \geqslant - 1}\) such that \(\varphi _{- 1}\) is supported in a ball around 0 of radius \(\frac{1}{2}\), \(\varphi _0\) is supported in an annulus, \(\varphi _j (\cdot ) = \varphi _0 (2^{- j} \cdot )\) for \(j \geqslant 0\) and if \(| i - j | > 1\) then \({\text {supp}} \varphi _i \cap {\text {supp}} \varphi _j = \emptyset \). For the definition of Besov spaces on the lattice \(\Lambda _{\varepsilon }\) for \(\varepsilon = 2^{- N}\), we introduce a suitable periodic partition of unity on \(\hat{\Lambda }_{\varepsilon }\) as follows

where \(k \in \hat{\Lambda }_{\varepsilon }\) and the parameter \(J \in {\mathbb {N}}_0\), whose precise value will be chosen below independently of \(\varepsilon \in {\mathcal {A}}\), satisfies \(0 \leqslant N - J \leqslant J_{\varepsilon } :=\inf \{ j : {\text {supp}} \varphi _j \not \subseteq [-\varepsilon ^{-1}/2, \varepsilon ^{- 1}/2 )^d \} \rightarrow \infty \) as \(\varepsilon \rightarrow 0\). We note that by construction there exists \(\ell \in {\mathbb {Z}}\) independent of \(\varepsilon = 2^{- N}\) such that \(J_{\varepsilon } = N - \ell \).

Then (2.1) yields a periodic partition of unity on \(\hat{\Lambda }_{\varepsilon }\). The reason for choosing the upper index as \(N - J\) and not the maximal choice \(J_{\varepsilon }\) will become clear in Lemma A.9 below, where it allows us to define suitable localization operators needed for our analysis. The choices of parameters \(N_0\) and J are related in the following way: A given partition of unity \((\varphi _j)_{j \geqslant - 1}\) determines the parameters \(J_{\varepsilon }\) in the form \(J_{\varepsilon } = N - \ell \) for some \(\ell \in {\mathbb {Z}}\). By the condition \(N - J \leqslant J_{\varepsilon }\) we obtain the first lower bound on J. Then Lemma A.9 yields a (possibly larger) value of J which is fixed throughout the paper. Finally, the condition \(0 \leqslant N - J\) implies the necessary lower bound \(N_0\) for N, or alternatively the upper bound for \(\varepsilon = 2^{- N} \leqslant 2^{- N_0}\) and defines the set \({\mathcal {A}}\). We stress that once the parameters \(J, N_0\) are chosen, they remain fixed throughout the paper.

Remark that according to our convention, \((\varphi ^0_j)_{j \geqslant - 1}\) denotes the original partition of unity \((\varphi _j)_{j \geqslant - 1}\) on \({\mathbb {R}}^d\), which can be also read from (2.1) using the fact that for \(\varepsilon = 0\) we have \(J_{\varepsilon } = \infty \).
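As an illustration of the continuum partition of unity described above, the following sketch builds one radial example by telescoping a smooth bump function. The radii `r0 = 3/8` and `r1 = 1/2` and all function names are illustrative assumptions, not the choice made in the paper. They are picked so that \(\varphi _{-1}\) is supported in the ball of radius \(\frac{1}{2}\) and non-adjacent blocks have disjoint supports.

```python
import numpy as np

def smooth_step(t):
    """C-infinity transition: 0 for t <= 0, 1 for t >= 1."""
    t = np.clip(t, 0.0, 1.0)
    a = np.where(t > 0, np.exp(-1.0 / np.maximum(t, 1e-300)), 0.0)
    b = np.where(t < 1, np.exp(-1.0 / np.maximum(1.0 - t, 1e-300)), 0.0)
    return a / (a + b)

def chi(r, r0=0.375, r1=0.5):
    """Radial bump: 1 for r <= r0, 0 for r >= r1 (illustrative radii)."""
    return 1.0 - smooth_step((np.asarray(r, dtype=float) - r0) / (r1 - r0))

def phi(j, r):
    """phi_{-1} = chi and phi_j(r) = chi(2^-(j+1) r) - chi(2^-j r) for j >= 0."""
    r = np.asarray(r, dtype=float)
    if j == -1:
        return chi(r)
    return chi(r / 2.0 ** (j + 1)) - chi(r / 2.0 ** j)

# The blocks j = -1, ..., 5 sum to chi(r / 64), which equals 1 for r <= 24.
r = np.linspace(0.0, 20.0, 2001)
total = sum(phi(j, r) for j in range(-1, 6))
```

By construction \(\varphi _j (\cdot ) = \varphi _0 (2^{-j} \cdot )\) for \(j \geqslant 0\), and the telescoping sum makes the partition property immediate.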

Now we may define the Littlewood–Paley blocks for distributions on \(\Lambda _{\varepsilon }\) by

which leads us to the definition of weighted Besov spaces. Throughout the paper, \(\rho \) denotes a polynomial weight of the form

for some \(\nu \geqslant 0\) and \(h>0\). The constant h will be fixed below in Lemma 4.4 in order to produce a small bound for certain terms. Such weights satisfy the admissibility condition \(\rho (x)/\rho (y)\lesssim \rho ^{-1}(x-y)\) for all \( x, y \in {\mathbb {R}}^d . \) For \(\alpha \in {\mathbb {R}}\), \(p, q \in [1, \infty ]\) and \(\varepsilon \in [0, 1]\) we define the weighted Besov spaces on \(\Lambda _{\varepsilon }\) by the norm

where \(L^{p, \varepsilon }\) for \(\varepsilon \in {\mathcal {A}} \setminus \{ 0 \}\) stands for the \(L^p\) space on \(\Lambda _{\varepsilon }\) given by the norm

(with the usual modification if \(p = \infty \)). Analogously, we may define the weighted Besov spaces for explosive polynomial weights of the form \(\rho ^{-1}\). Note that if \(\varepsilon = 0\) then \(B^{\alpha , \varepsilon }_{p, q} (\rho )\) is the classical weighted Besov space \(B^{\alpha }_{p, q} (\rho )\). In the sequel, we also employ the following notations

In Lemma A.1 we show that one can pull the weight inside the Littlewood–Paley blocks in the definition of the weighted Besov spaces. Namely, under suitable assumptions on the weight that are satisfied by polynomial weights we have \( \Vert f \Vert _{B^{\alpha , \varepsilon }_{p, q} (\rho )} \sim \Vert \rho f \Vert _{B^{\alpha , \varepsilon }_{p, q}} \) in the sense of equivalence of norms, uniformly in \(\varepsilon \). We define the duality product on \(\Lambda _{\varepsilon }\) by

and Lemma A.2 shows that \(B^{- \alpha , \varepsilon }_{p', q'} (\rho ^{- 1})\) is included in the topological dual of \(B^{\alpha , \varepsilon }_{p, q} (\rho )\) for conjugate exponents \(p, p'\) and \(q, q'\).
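The admissibility condition for polynomial weights can be checked numerically. The sketch below assumes the explicit form \(\rho (x) = (1 + |h x|^2)^{-\nu /2}\) for the weight and the norm \((\varepsilon ^d \sum _x |\rho (x) f(x)|^p)^{1/p}\) for a weighted lattice \(L^p\) norm. Both are assumptions made for illustration (the corresponding displays are not reproduced here), and the function names are hypothetical. For this weight, Peetre's inequality gives the admissibility bound with constant \(2^{\nu /2}\).

```python
import numpy as np

def rho(x, nu=2.0, h=0.5):
    """Polynomial weight (1 + |h x|^2)^(-nu/2); this explicit form is an assumption."""
    x = np.atleast_2d(x)
    return (1.0 + np.sum((h * x) ** 2, axis=-1)) ** (-nu / 2.0)

def weighted_lp_norm(f, x, eps, p, nu=2.0, h=0.5):
    """(eps^d * sum_x |rho(x) f(x)|^p)^(1/p), with the sup norm for p = inf."""
    d = x.shape[-1]
    w = np.abs(rho(x, nu, h) * f)
    if np.isinf(p):
        return w.max()
    return (eps ** d * np.sum(w ** p)) ** (1.0 / p)

# Admissibility: rho(x) / rho(y) <= 2^(nu/2) / rho(x - y) on random points of R^3.
rng = np.random.default_rng(1)
x = rng.uniform(-50.0, 50.0, size=(1000, 3))
y = rng.uniform(-50.0, 50.0, size=(1000, 3))
ratio = rho(x) / rho(y)
bound = 2.0 ** (2.0 / 2.0) / rho(x - y)
```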

We employ the tools from paracontrolled calculus as introduced in [GIP15]; the reader is also referred to [BCD11] for further details. We shall freely use the decomposition \(f g = f \prec g + f \circ g + f \succ g\), where \(f \succ g = g \prec f\) and \(f \circ g\), respectively, stands for the paraproduct of f and g and the corresponding resonant term, defined in terms of Littlewood–Paley decomposition. More precisely, for \(f, g \in {\mathcal {S}}' (\Lambda _{\varepsilon })\) we let

We also employ the notations \(f\preccurlyeq g:= f\prec g+f\circ g\) and \(f\bowtie g:=f\prec g+f\succ g\). For notational simplicity, we do not stress the dependence of the paraproduct and the resonant term on \(\varepsilon \) in the sequel. These paraproducts satisfy the usual estimates uniformly in \(\varepsilon \), see e.g. [MP17], Lemma 4.2, which can be naturally extended to general \(B^{\alpha , \varepsilon }_{p, q} (\rho )\) Besov spaces as in [MW17b], Theorem 3.17.
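The algebra behind the decomposition \(f g = f \prec g + f \circ g + f \succ g\) can be illustrated on a one-dimensional periodic grid. The sketch below is a toy version under stated assumptions: it uses sharp dyadic frequency cutoffs instead of the smooth partition of unity, works on a periodic grid rather than on \(\Lambda _{\varepsilon }\), and all function names are hypothetical. It only demonstrates how the double sum over Littlewood–Paley blocks is split into the regions \(i < j - 1\), \(|i - j| \leqslant 1\) and \(i > j + 1\).

```python
import numpy as np

def littlewood_paley_blocks(f):
    """Split a real periodic signal into dyadic frequency blocks using sharp cutoffs.

    Block -1 keeps the zero mode; block j >= 0 keeps modes with 2^j <= |n| < 2^(j+1).
    """
    n = np.abs(np.fft.fftfreq(f.size, d=1.0 / f.size))  # integer |frequency|
    fhat = np.fft.fft(f)
    blocks = [np.fft.ifft(np.where(n == 0, fhat, 0)).real]
    j = 0
    while 2 ** j <= n.max():
        mask = (n >= 2 ** j) & (n < 2 ** (j + 1))
        blocks.append(np.fft.ifft(np.where(mask, fhat, 0)).real)
        j += 1
    return blocks

def paraproduct_decomposition(f, g):
    """Return the low-high paraproduct, the resonant term and the high-low paraproduct."""
    F, G = littlewood_paley_blocks(f), littlewood_paley_blocks(g)
    pairs = [(i, j) for i in range(len(F)) for j in range(len(G))]
    low_high = sum(F[i] * G[j] for i, j in pairs if i < j - 1)
    resonant = sum(F[i] * G[j] for i, j in pairs if abs(i - j) <= 1)
    high_low = sum(F[i] * G[j] for i, j in pairs if i > j + 1)
    return low_high, resonant, high_low

rng = np.random.default_rng(2)
f, g = rng.standard_normal(64), rng.standard_normal(64)
low_high, resonant, high_low = paraproduct_decomposition(f, g)
```

Swapping the arguments exchanges the two paraproducts, which is the symmetry \(f \succ g = g \prec f\) used in the text.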

Throughout the paper we assume that \(m^{2}>0\) and we only discuss in Remark 4.6 how to treat the case of \(m^{2}\leqslant 0\). In addition, we are only concerned with the 3 dimensional setting and let \(d = 3\). We denote by \(\Delta _{\varepsilon }\) the discrete Laplacian on \(\Lambda _{\varepsilon }\) given by

where \((e_i)_{i = 1, \ldots , d}\) is the canonical basis of \({\mathbb {R}}^d\). It can be checked by a direct computation that the integration by parts formula

holds for the discrete gradient

We let \({\mathscr {Q}}_{\varepsilon } :=m^{2} - \Delta _{\varepsilon }\), \({\mathscr {L}}_{\varepsilon } :=\partial _t + {\mathscr {Q}}_{\varepsilon }\) and we write \({\mathscr {L}}\) for the continuum analogue of \({\mathscr {L}}_{\varepsilon }\). We let \({\mathscr {L}}_{\varepsilon }^{- 1}\) be the inverse of \({\mathscr {L}}_{\varepsilon }\) on \(\Lambda _{\varepsilon }\) such that \({\mathscr {L}}_{\varepsilon }^{- 1} f = v\) is a solution to \({\mathscr {L}}_{\varepsilon } v = f\), \(v (0) = 0.\)
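The discrete integration by parts formula is easy to verify numerically on a periodic lattice. The sketch below assumes the nearest-neighbour Laplacian \(\varepsilon ^{-2} \sum _i (f (x + \varepsilon e_i) - 2 f (x) + f (x - \varepsilon e_i))\), a forward-difference gradient, and the pairing \(\varepsilon ^d \sum _x f (x) g (x)\). These explicit forms and the function names are assumptions made for illustration.

```python
import numpy as np

def discrete_gradient(f, eps):
    """Forward differences (f(x + eps e_i) - f(x)) / eps, stacked over i (assumed convention)."""
    return np.stack([(np.roll(f, -1, axis=i) - f) / eps for i in range(f.ndim)])

def discrete_laplacian(f, eps):
    """Nearest-neighbour Laplacian with periodic boundary conditions."""
    return sum(np.roll(f, -1, axis=i) - 2 * f + np.roll(f, 1, axis=i)
               for i in range(f.ndim)) / eps ** 2

def pairing(f, g, eps, d):
    """eps^d * sum_x f(x) g(x); for stacked gradients the components are summed too."""
    return eps ** d * np.sum(f * g)

# Check <Laplacian f, g> = -<grad f, grad g> on an 8 x 8 x 8 periodic lattice.
rng = np.random.default_rng(3)
eps, d = 0.25, 3
f, g = rng.standard_normal((8, 8, 8)), rng.standard_normal((8, 8, 8))
lhs = pairing(discrete_laplacian(f, eps), g, eps, d)
rhs = -pairing(discrete_gradient(f, eps), discrete_gradient(g, eps), eps, d)
```

The identity holds exactly (up to rounding) because shifting the summation index on a periodic lattice plays the role of integration by parts.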

3 Overview of the Strategy

With the goals and notations being set, let us now outline the main steps of our strategy.

Lattice dynamics. For fixed parameters \(\varepsilon \in {\mathcal {A}}, M > 0\), we consider a stationary solution \(\varphi _{M, \varepsilon }\) to the discrete stochastic quantization equation

whose law at every time \(t \geqslant 0\) is given by the Gibbs measure (1.1). Here \(\xi _{M, \varepsilon }\) is a discrete approximation of a space-time white noise \(\xi \) on \({\mathbb {R}}^{d}\) constructed as follows: Let \(\xi _M\) denote its periodization on \({\mathbb {T}}^d_M\) given by

where \(h\in L^{2}({\mathbb {R}}\times {\mathbb {R}}^{d})\) is a test function, and define the corresponding spatial discretization by

Then (3.1) is a finite-dimensional SDE in gradient form and it has a (unique) invariant measure \(\nu _{M, \varepsilon }\) given by (1.1). Indeed, the global existence of solutions can be proved along the lines of the Khasminskii nonexplosion test [Kha11, Theorem 3.5], whereas invariance of the measure (1.1) follows from [Zab89, Theorem 2].

Recall that due to the irregularity of the space-time white noise in dimension 3, a solution to the limit problem (1.3) can only exist as a distribution. Consequently, since products of distributions are generally not well-defined, it is necessary to make sense of the cubic term. This forces us to introduce a mass renormalization via constants \(a_{M, \varepsilon }, b_{M, \varepsilon } \geqslant 0\) in (3.1) which shall be suitably chosen in order to compensate the ultraviolet divergences. In other words, the additional linear term shall introduce the correct counterterms needed to renormalize the cubic power and to derive estimates uniform in both parameters \(M, \varepsilon \). To this end, \(a_{M, \varepsilon }\) shall diverge linearly whereas \(b_{M, \varepsilon }\) shall diverge logarithmically; these are of course the same divergences as those appearing in the other approaches, see e.g. Chapter 23 in [GJ87].

Energy method in a nutshell. Our aim is to apply the so-called energy method, which is one of the very basic approaches in the PDE theory. It relies on testing the equation by the solution itself and estimating all the terms. To explain the main idea, consider a toy model

driven by a sufficiently regular forcing f such that the solution is smooth and there are no difficulties in defining the cube. Testing the equation by u and integrating the Laplace term by parts leads to

Now, there are several possibilities to estimate the right hand side using duality and Young’s inequality, namely,

This way, the dependence on u on the right hand side can be absorbed into the good terms on the left hand side. If, in addition, u is stationary, so that in particular \(t \mapsto {\mathbb {E}} \Vert u (t) \Vert _{L^2}^2\) is constant, then we obtain

To summarize, using the dynamics we are able to obtain moment bounds for the invariant measure that depend only on the forcing f. Moreover, we also see the behavior of the estimates with respect to the coupling constant \(\lambda \). Nevertheless, even though using the \(L^4\)-norm of u introduces a blow up for \(\lambda \rightarrow 0\), the right hand side f in our energy estimate below will always contain a certain power of \(\lambda \) which cancels this blow up and yields bounds that are uniform as \(\lambda \rightarrow 0\).
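For orientation, the toy computation can be written out as follows. This is a sketch under assumptions rather than a quotation: the toy model is taken to be \({\mathscr {L}}u + \lambda u^3 = f\), only the variant using the \(L^4\)-norm is shown, and \(\delta \in (0, 1)\) together with the constant \(C_{\delta }\) from Young's inequality are notation introduced here.

```latex
% Testing the toy model by u and integrating the Laplace term by parts:
\[
  \tfrac12 \partial_t \| u \|_{L^2}^2 + m^2 \| u \|_{L^2}^2
  + \| \nabla u \|_{L^2}^2 + \lambda \| u \|_{L^4}^4
  = \langle f, u \rangle .
\]
% Duality and Young's inequality with exponents 4/3 and 4:
\[
  \langle f, u \rangle
  \leqslant \| f \|_{L^{4/3}} \| u \|_{L^4}
  \leqslant C_{\delta} \lambda^{-1/3} \| f \|_{L^{4/3}}^{4/3}
  + \delta \lambda \| u \|_{L^4}^4 .
\]
% For stationary u the time derivative vanishes in expectation:
\[
  m^2 \mathbb{E} \| u \|_{L^2}^2 + \mathbb{E} \| \nabla u \|_{L^2}^2
  + (1 - \delta) \lambda \mathbb{E} \| u \|_{L^4}^4
  \leqslant C_{\delta} \lambda^{-1/3} \mathbb{E} \| f \|_{L^{4/3}}^{4/3} .
\]
```

The factor \(\lambda ^{- 1 / 3}\) is the blow up for \(\lambda \rightarrow 0\) referred to above; it disappears as soon as f itself carries a sufficiently high power of \(\lambda \).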

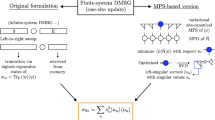

Decomposition and estimates. Since the forcing \(\xi \) on the right hand side of (1.3) does not possess sufficient regularity, the energy method cannot be applied directly. Following the usual approach within the field of singular SPDEs, we shall find a suitable decomposition of the solution \(\varphi _{M, \varepsilon }\), isolating parts of different regularity. In particular, since the equation is subcritical in the sense of Hairer [Hai14] (or superrenormalizable in the language of quantum field theory), we expect the nonlinear equation (1.3) to be a perturbation of the linear problem \( {\mathscr {L}}\ X =\xi .\) This singles out the most irregular part of the limit field \(\varphi \). Hence on the approximate level we set \(\varphi _{M, \varepsilon } = X_{M, \varepsilon } + \eta _{M, \varepsilon }\) where \(X_{M, \varepsilon }\) is a stationary solution to

and the remainder \(\eta _{M, \varepsilon }\) is expected to be more regular.

To see if it is indeed the case we plug our decomposition into (3.1) to obtain

Here \(\llbracket X^2_{M, \varepsilon } \rrbracket \) and \(\llbracket X^3_{M, \varepsilon } \rrbracket \) denote the second and third Wick power of the Gaussian random variable \(X_{M, \varepsilon }\) defined by

where \(a_{M, \varepsilon } :={\mathbb {E}} [X^2_{M, \varepsilon } (t)]\) is independent of t due to stationarity. It can be shown by direct computations, which have already appeared in a number of works (see [CC18, Hai14, Hai15, MWX16]), that \(\llbracket X^2_{M, \varepsilon } \rrbracket \) is bounded uniformly in \(M, \varepsilon \) as a continuous stochastic process with values in the weighted Besov space \({\mathscr {C}}^{- 1 - \kappa ,\varepsilon } (\rho ^{\sigma })\) for every \(\kappa , \sigma > 0\), whereas \(\llbracket X^3_{M, \varepsilon } \rrbracket \) can only be constructed as a space-time distribution. In addition, they converge to the Wick powers \(\llbracket X^2 \rrbracket \) and \(\llbracket X^3 \rrbracket \) of X. In other words, the linearly growing renormalization constant \(a_{M, \varepsilon }\) gives the counterterms needed for the Wick ordering.
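The role of the constant \(a_{M, \varepsilon }\) can be illustrated at the level of a single Gaussian random variable. The sketch below assumes the standard convention \(\llbracket X^2 \rrbracket = X^2 - a\), \(\llbracket X^3 \rrbracket = X^3 - 3 a X\) with \(a = {\mathbb {E}} [X^2]\), and uses exact Gaussian moments in rational arithmetic. The helper names are hypothetical and the value of a is arbitrary.

```python
from fractions import Fraction

def gaussian_moment(n, a):
    """E[X^n] for centered Gaussian X with variance a: (n-1)!! a^(n/2), zero for odd n."""
    if n % 2 == 1:
        return Fraction(0)
    double_factorial = 1
    for k in range(n - 1, 0, -2):
        double_factorial *= k
    return double_factorial * Fraction(a) ** (n // 2)

def expectation(poly, a):
    """E[p(X)] for a polynomial p given as {power: coefficient}."""
    return sum(c * gaussian_moment(n, a) for n, c in poly.items())

def multiply(p, q):
    """Product of two polynomials in the {power: coefficient} representation."""
    out = {}
    for i, ci in p.items():
        for j, cj in q.items():
            out[i + j] = out.get(i + j, Fraction(0)) + ci * cj
    return out

def wick_square(a):
    """Coefficients of X^2 - a."""
    return {2: Fraction(1), 0: -Fraction(a)}

def wick_cube(a):
    """Coefficients of X^3 - 3 a X."""
    return {3: Fraction(1), 1: -3 * Fraction(a)}

def cubic_expansion_gap(x, eta, a):
    """(x+eta)^3 - 3a(x+eta) minus its expansion in Wick powers of x; should be zero."""
    lhs = (x + eta) ** 3 - 3 * a * (x + eta)
    rhs = (x ** 3 - 3 * a * x) + 3 * eta * (x ** 2 - a) + 3 * eta ** 2 * x + eta ** 3
    return lhs - rhs

a = Fraction(7, 3)  # arbitrary variance, for illustration
mean_w2 = expectation(wick_square(a), a)
mean_w3 = expectation(wick_cube(a), a)
var_w2 = expectation(multiply(wick_square(a), wick_square(a)), a)
var_w3 = expectation(multiply(wick_cube(a), wick_cube(a)), a)
gap = cubic_expansion_gap(Fraction(2, 5), Fraction(-3, 4), a)
print(mean_w2, mean_w3, var_w2, var_w3, gap)
```

The Wick powers are centered, their variances are \(2 a^2\) and \(6 a^3\), and the counterterm \(- 3 a (X + \eta )\) is exactly what turns the ordinary powers of X in the expansion of \((X + \eta )^3\) into Wick powers. In the field-theoretic setting a diverges as \(\varepsilon \rightarrow 0\), which the scalar example cannot show.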

Note that X is a continuous stochastic process with values in \({\mathscr {C}}^{- 1 / 2 - \kappa } (\rho ^{\sigma })\) for every \(\kappa , \sigma > 0\). This limits the regularity that can be obtained for the approximations \(X_{M, \varepsilon }\) uniformly in \(M, \varepsilon \). Hence the most irregular term in (3.3) is the third Wick power and by Schauder estimates we expect \(\eta _{M, \varepsilon }\) to be two degrees of regularity better. Namely, we expect uniform bounds for \(\eta _{M, \varepsilon }\) in \({\mathscr {C}}^{1 / 2 - \kappa } (\rho ^{\sigma })\), which indeed verifies our presumption that \(\eta _{M, \varepsilon }\) is more regular than \(\varphi _{M, \varepsilon }\). However, the above decomposition introduces new products in (3.3) that are not well-defined under the uniform bounds discussed above. In particular, neither \(\eta _{M, \varepsilon } \llbracket X_{M, \varepsilon }^2 \rrbracket \) nor \(\eta _{M, \varepsilon }^2 X_{M, \varepsilon }\) meets the condition that the sum of the regularities of the factors is strictly positive, which is a convenient sufficient condition for a product of two distributions to be analytically well-defined.
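The regularity bookkeeping of this paragraph can be summarized in a few lines. The sketch is heuristic and rests on assumptions: \(\kappa \) is treated as one generic small number, the third Wick power is assigned the heuristic regularity \(- 3 / 2 - \kappa \) although it is only a space-time distribution, and \(\eta ^2\) is given the regularity of \(\eta \). The names are hypothetical.

```python
from fractions import Fraction

KAPPA = Fraction(1, 100)  # generic small parameter; the value is illustrative only

REGULARITY = {
    "X": Fraction(-1, 2) - KAPPA,
    "wick_X2": Fraction(-1) - KAPPA,
    "wick_X3": Fraction(-3, 2) - KAPPA,  # heuristic power counting
}

SCHAUDER_GAIN = 2  # the heat operator gains two degrees of regularity
REGULARITY["eta"] = REGULARITY["wick_X3"] + SCHAUDER_GAIN

def product_is_classically_defined(alpha, beta):
    """Sufficient condition: the regularities of the two factors sum to a positive number."""
    return alpha + beta > 0

checks = {
    "eta * wick_X2": product_is_classically_defined(REGULARITY["eta"], REGULARITY["wick_X2"]),
    "eta^2 * X": product_is_classically_defined(REGULARITY["eta"], REGULARITY["X"]),
    "eta * eta": product_is_classically_defined(REGULARITY["eta"], REGULARITY["eta"]),
}
for name, ok in checks.items():
    print(name, "well-defined" if ok else "needs renormalization or extra structure")
```

The first product misses the condition by roughly half a derivative, the second only by a multiple of \(\kappa \), which is why the two terms require different treatment.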

The usual way is to continue the decomposition in the same spirit and to cancel the most irregular term in (3.3), namely, \(\llbracket X^3_{M, \varepsilon } \rrbracket \). This approach can be found in essentially all the available works on stochastic quantization (see e.g. [CC18, GH18, Hai14, Hai15, MW17a]). The idea is therefore to introduce a further stochastic object, defined as the stationary solution to

leading to the corresponding decomposition of \(\varphi _{M, \varepsilon }\) with remainder \(\zeta _{M, \varepsilon }\). Writing down the dynamics for \(\zeta _{M, \varepsilon }\) we observe that the most irregular term is a paraproduct which can be bounded uniformly in \({\mathscr {C}}^{- 1 - \kappa ,\varepsilon } (\rho ^{\sigma })\), and hence this is not yet sufficient for the energy method outlined above. Indeed, the expected (uniform) regularity of \(\zeta _{M, \varepsilon }\) is \({\mathscr {C}}^{1 - \kappa ,\varepsilon } (\rho ^{\sigma })\) and so the resulting term in the energy estimate cannot be controlled. However, we point out that not much is missing.

In order to overcome this issue, we proceed differently than the above cited works and let \(Y_{M, \varepsilon }\) be a solution to

where \({\mathscr {U}}^{\varepsilon }_{>}\) is the localization operator defined in Section A.2. With a suitable choice of the constant \(L = L (\lambda , M, \varepsilon )\) determining \({\mathscr {U}}^{\varepsilon }_{>}\) (cf. Lemma A.12, Lemma 4.1) we are able to construct the unique solution to this problem via Banach’s fixed point theorem. Consequently, we find our decomposition \(\varphi _{M, \varepsilon } = X_{M, \varepsilon } + Y_{M, \varepsilon } + \phi _{M, \varepsilon }\) together with the dynamics for the remainder

The first term on the right hand side is the most irregular contribution, the second term is not controlled uniformly in \(M, \varepsilon \), the third term is needed for the renormalization and \(\Xi _{M, \varepsilon }\) contains various terms that are more regular and in principle not problematic or that can be constructed as stochastic objects using the remaining counterterm \(- 3 \lambda ^2 b_{M, \varepsilon } (X_{M, \varepsilon } + Y_{M, \varepsilon })\).

The advantage of this decomposition with \(\phi _{M, \varepsilon }\) as opposed to the usual approach leading to \(\zeta _{M, \varepsilon }\) above is that together with \(\llbracket X^3_{M, \varepsilon } \rrbracket \) we cancelled also the second most irregular contribution \(({\mathscr {U}}^{\varepsilon }_{>} \llbracket X_{M, \varepsilon }^2 \rrbracket ) \succ Y_{M, \varepsilon }\), which is too irregular to be controlled as a forcing f using the energy method. The same difficulty of course comes with \(\llbracket X_{M, \varepsilon }^2 \rrbracket \succ \phi _{M, \varepsilon }\) in (3.7), however, since it depends on the solution \(\phi _{M, \varepsilon }\) we are able to control it using a paracontrolled ansatz. To explain this, let us also turn our attention to the resonant product \(\llbracket X_{M, \varepsilon }^2 \rrbracket \circ \phi _{M, \varepsilon }\) which poses problems as well. When applying the energy method to (3.7), these two terms appear in the form

where we included a polynomial weight \(\rho \) as in (2.2). The key observation is that the presence of the duality product allows us to show that these two terms approximately coincide, in the sense that their difference, denoted by \(D_{\rho ^4, \varepsilon } (\phi _{M, \varepsilon }, - 3 \lambda \llbracket X^2_{M, \varepsilon } \rrbracket , \phi _{M, \varepsilon })\), is controlled by the expected uniform bounds. This is proven in general in Lemma A.13. As a consequence, we obtain

Finally, since the last term on the left hand side as well as the first term on the right hand side are diverging, the idea is to couple them by the following paracontrolled ansatz. We define

and expect that the sum of the two terms on the right hand side is more regular than each of them separately. In other words, \(\psi _{M, \varepsilon }\) is (uniformly) more regular than \(\phi _{M, \varepsilon }\). Indeed, with this ansatz we may complete the square and obtain

where the right hand side, given in Lemma 4.2, can be controlled by the norms on the left hand side, in the spirit of the energy method discussed above.

These considerations lead to our first main result, proved as Theorem 4.5 below. In what follows, \(Q_{\rho }({\mathbb {X}}_{M,\varepsilon })\) denotes a polynomial in the \(\rho \)-weighted norms of the involved stochastic objects; the precise definition can be found in Section 4.1.

Theorem 3.1

Let \(\rho \) be a weight such that \(\rho ^{\iota } \in L^{4, 0}\) for some \(\iota \in (0, 1)\). There exists a constant \(\alpha = \alpha (m^2) > 0\) such that

where \(C_{\lambda , t} = \lambda ^3 + \lambda ^{(12 - \theta ) /(2+\theta )} | \log t |^{4 / (2 + \theta )} + \lambda ^7\) for \(\theta =\frac{1 / 2 - 4 \kappa }{1 - 2 \kappa }\).

Here we observe the precise dependence on \(\lambda \) which in particular implies that the bound is uniform over \(\lambda \) in every bounded subset of \([0, \infty )\) and vanishes as \(\lambda \rightarrow 0\).
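The behavior of \(C_{\lambda , t}\) in \(\lambda \) can be read off from the exponents, which the following sketch simply evaluates. The formula is the one stated in Theorem 3.1; the function names and the sample values of \(\kappa \) and t are illustrative assumptions, and \(\kappa < 1 / 8\) is assumed so that \(\theta > 0\).

```python
import math

def theta(kappa):
    """theta = (1/2 - 4 kappa) / (1 - 2 kappa), as in Theorem 3.1."""
    return (0.5 - 4 * kappa) / (1 - 2 * kappa)

def c_lambda_t(lam, t, kappa):
    """lam^3 + lam^((12 - theta)/(2 + theta)) |log t|^(4/(2 + theta)) + lam^7."""
    th = theta(kappa)
    middle = lam ** ((12 - th) / (2 + th)) * abs(math.log(t)) ** (4 / (2 + th))
    return lam ** 3 + middle + lam ** 7

KAPPA = 0.01  # illustrative small value
T = 0.5       # illustrative time

for lam in (0.0, 0.01, 0.1, 1.0, 2.0):
    print(lam, c_lambda_t(lam, T, KAPPA))
```

Since \(\theta \in (0, 1 / 2)\) for small \(\kappa \), every power of \(\lambda \) in \(C_{\lambda , t}\) is positive, so the constant vanishes at \(\lambda = 0\) and stays bounded on bounded sets of \(\lambda \) for fixed t, in line with the remark above.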

Tightness. In order to proceed to the proof of the existence of the Euclidean \(\Phi ^4_3\) field theory, we shall employ the extension operator \({\mathcal {E}}^{\varepsilon }\) from Section A.4, which allows us to extend discrete distributions to the full space \({\mathbb {R}}^3\). An additional twist originates in the fact that by construction the process \(Y_{M, \varepsilon }\) given by (3.6) is not stationary and consequently also \(\phi _{M, \varepsilon }\) fails to be stationary. Therefore the energy argument as explained above does not apply as it stands and we shall go back to the stationary decomposition with remainder \(\zeta _{M, \varepsilon }\), while using the result of Theorem 3.1 in order to estimate \(\zeta _{M, \varepsilon }\). Consequently, we deduce tightness of the family of the joint laws of the stationary processes involved, evaluated at any fixed time \(t \geqslant 0\), proven in Theorem 4.9 below. To this end, we fix a canonical representative of the random variables under consideration and define \(\zeta \) from it through the stationary decomposition.

Theorem 3.2

Let \(\rho \) be a weight such that \(\rho ^{\iota } \in L^{4, 0}\) for some \(\iota \in (0, 1)\). Then the family of joint laws of the above random variables, indexed by \(\varepsilon \in {\mathcal {A}}\), \(M > 0\) and evaluated at an arbitrary time \(t \geqslant 0\), is tight. Moreover, any limit measure \(\mu \) satisfies for all \(p \in [1, \infty )\)

Osterwalder–Schrader axioms. The projection of a limit measure \(\mu \) onto the first component is the candidate \(\Phi ^4_3\) measure and we denote it by \(\nu \). Based on Theorem 3.2 we are able to show that \(\nu \) is translation invariant and reflection positive, see Section 5.2 and Section 5.3. In addition, we prove that the measure is non-Gaussian. To this end, we make use of the stationary decomposition together with the moment bounds from Theorem 3.2. Since X is Gaussian whereas the remaining stochastic term of the decomposition is not, the idea is to use the regularity of \(\zeta \) to conclude that it cannot compensate this term, which is less regular. In particular, we show that the connected 4-point function is nonzero, see Section 5.4.

It remains to discuss a stretched exponential integrability of \(\varphi \), leading to the distribution property shown in Section 5.1. More precisely, we show the following result which can be found in Proposition 4.11.

Proposition 3.3

Let \(\rho \) be a weight such that \(\rho ^{\iota } \in L^{4, 0}\) for some \(\iota \in (0, 1)\). For every \(\kappa \in (0, 1)\) small there exists \(\upsilon = O (\kappa ) > 0\) small such that

provided \(\beta > 0\) is chosen sufficiently small.

In order to obtain this bound we revisit the bounds from Theorem 3.1 and track the precise dependence of the polynomial \(Q_{\rho } ({\mathbb {X}}_{M, \varepsilon })\) on the right hand side of the estimate on the quantity \(\Vert {\mathbb {X}}_{M, \varepsilon } \Vert \) which will be defined through (4.4), (4.5), (4.6) below taking into account the number of copies of X appearing in each stochastic object. However, the estimates in Theorem 3.1 are not optimal and consequently the power of \(\Vert {\mathbb {X}}_{M, \varepsilon } \Vert \) in Theorem 3.1 is too large. To optimize we introduce a large momentum cut-off \(\llbracket X^3_{M, \varepsilon } \rrbracket _{\leqslant }\) given by a parameter \(K > 0\) and let \(\llbracket X^3_{M, \varepsilon } \rrbracket _{>} :=\llbracket X^3_{M, \varepsilon } \rrbracket - \llbracket X^3_{M, \varepsilon } \rrbracket _{\leqslant }\). Then we modify the dynamics of \(Y_{M, \varepsilon }\) to

which allows for refined bounds on \(Y_{M, \varepsilon }\), yielding optimal powers of \(\Vert {\mathbb {X}}_{M, \varepsilon } \Vert \).

Integration by parts formula. The uniform energy estimates from Theorem 3.2 and Proposition 3.3 are enough to obtain tightness of the approximate measures and to show that any accumulation point satisfies the distribution property, translation invariance, reflection positivity and non-Gaussianity. However, they do not provide sufficient regularity in order to identify the continuum dynamics or to establish the hierarchy of Dyson–Schwinger equations providing relations of various n-point correlation functions. This can be seen easily since neither the resonant product \(\llbracket X_{M, \varepsilon }^2 \rrbracket \circ \phi _{M, \varepsilon }\) nor \(\llbracket X_{M, \varepsilon }^2 \rrbracket \circ \psi _{M, \varepsilon }\) is well-defined in the limit. Another and even more severe difficulty lies in the fact that the third Wick power \(\llbracket X^3 \rrbracket \) only exists as a space-time distribution and is not a well-defined random variable under the \(\Phi ^{4}_{3}\) measure, cf. [ALZ06].

To overcome the first issue, we introduce a new paracontrolled ansatz defining \(\chi _{M,\varepsilon }\) and show that \(\chi _{M,\varepsilon }\) possesses enough regularity uniformly in \(M,\varepsilon \) in order to pass to the limit in the resonant product \(\llbracket X^{2}_{M,\varepsilon }\rrbracket \circ \chi _{M,\varepsilon }\). Namely, we establish uniform bounds for \(\chi _{M,\varepsilon }\) in \(L^1_T B_{1, 1}^{1 + 3 \kappa , \varepsilon }(\rho ^{4})\). This not only allows us to give meaning to the critical resonant product in the continuum, but it also leads to a uniform time regularity of the processes \(\varphi _{M,\varepsilon }\). We obtain the following result, proved below as Theorem 6.2.

Theorem 3.4

Let \(\beta \in (0, 1 / 4)\) and \(\sigma \in (0,1)\). Then for all \(p \in [1, \infty )\) and \(\tau \in (0, T)\)

where \(L^{\infty }_{\tau , T} H^{- 1 / 2 -2 \kappa ,\varepsilon } (\rho ^2) = L^{\infty } (\tau , T ; H^{- 1 / 2 -2 \kappa ,\varepsilon } (\rho ^2))\).

This additional time regularity is then used in order to treat the second issue raised above and to construct a renormalized cubic term \(\llbracket \varphi ^3 \rrbracket \). More precisely, we derive an explicit formula for \(\llbracket \varphi ^{3}\rrbracket \) including \(\llbracket X^3 \rrbracket \) as a space-time distribution, where time indeed means the fictitious stochastic time variable introduced by the stochastic quantization, nonexistent under the \(\Phi ^{4}_{3}\) measure. In order to control \(\llbracket X^3 \rrbracket \) we re-introduce the stochastic time and use stationarity together with the above mentioned time regularity. Finally, we derive an integration by parts formula leading to the hierarchy of Dyson–Schwinger equations connecting the correlation functions. To this end, we recall that a cylinder function F on \({\mathcal {S}}' ({\mathbb {R}}^3)\) has the form \(F (\varphi ) = \Phi (\varphi (f_1), \ldots , \varphi (f_n))\) where \(\Phi : {\mathbb {R}}^n \rightarrow {\mathbb {R}}\) and \(f_1, \ldots , f_n \in {\mathcal {S}} ({\mathbb {R}}^3)\). Loosely stated, the result proved in Theorem 6.7 says the following.

Theorem 3.5

Let \(F : {\mathcal {S}}' ({\mathbb {R}}^3) \rightarrow {\mathbb {R}}\) be a cylinder function such that

for some \(n \in {\mathbb {N}}\), where \(\mathrm {D}F (\varphi )\) denotes the \(L^2\)-gradient of F. Any accumulation point \(\nu \) of the sequence \((\nu _{M, \varepsilon } \circ ({\mathcal {E}}^{\varepsilon })^{-1})_{M, \varepsilon }\) satisfies for all \(f\in {\mathcal {S}}({\mathbb {R}}^{3})\)

where for a smooth \(h : {\mathbb {R}} \rightarrow {\mathbb {R}}\) with \({\text {supp}} h \subset [\tau , T]\) for some \(0< \tau< T < \infty \) and \(\int _{{\mathbb {R}}} h (t) \mathrm {d}t = 1\) we have for all \(f\in {\mathcal {S}}({\mathbb {R}}^{3})\)

and \(\llbracket \varphi ^{3}\rrbracket \) is given by an explicit formula, namely, (6.6).

In addition, we are able to characterize \({\mathcal {J}}_{\nu }(F)\) in the spirit of the operator product expansion, see Lemma 6.5.

4 Construction of the Euclidean \(\Phi ^4\) Field Theory

This section is devoted to our main result. More precisely, we consider (3.1), which is a discrete approximation of (1.3) posed on a periodic lattice \(\Lambda _{M, \varepsilon }\). For every \(\varepsilon \in (0, 1)\) and \(M > 0\), equation (3.1) possesses a unique invariant measure, namely the Gibbs measure \(\nu _{M,\varepsilon }\) given by (1.1). We derive new estimates on stationary solutions sampled from these measures which hold true uniformly in \(\varepsilon \) and M. As a consequence, we obtain tightness of the invariant measures while sending both the mesh size as well as the volume to their respective limits, i.e. \(\varepsilon \rightarrow 0\), \(M \rightarrow \infty \).

4.1 Stochastic terms

Recall that the stochastic objects \(X_{M,\varepsilon },\llbracket X_{M,\varepsilon }^{2}\rrbracket , \llbracket X_{M,\varepsilon }^{3}\rrbracket \) and the stationary solution to (3.5) were already defined in (3.2), (3.4) and (3.5). As the next step we provide further details and construct additional stochastic objects needed in the sequel. All the distributions on \(\Lambda _{M, \varepsilon }\) are extended periodically to the full lattice \(\Lambda _{\varepsilon }\). Then the stationary solution to (3.5) can be expressed in terms of \(P^{\varepsilon }_t\), the semigroup generated by \(-{\mathscr {Q}}_{\varepsilon }\) on \(\Lambda _{\varepsilon }\). Then for every \(\kappa , \sigma > 0\) and some \(\beta > 0\) small

uniformly in \(M, \varepsilon \) thanks to the presence of the weight. For details and further references see e.g. Section 3 in [GH18]. Here and in the sequel, \(T\in (0,\infty )\) denotes an arbitrary finite time horizon and \(C_{T}\) and \(C^{\beta /2}_{T}\) are shortcut notations for C([0, T]) and \(C^{\beta /2}([0,T])\), respectively. Throughout our analysis, we fix \(\kappa , \beta > 0\) in the above estimate such that \(\beta \geqslant 3 \kappa \). This condition will be needed for the control of a parabolic commutator in Lemma 4.4 below. On the other hand, the parameter \(\sigma > 0\) varies from line to line and can be arbitrarily small.

As already discussed in Section 3, in particular after equation (3.5), the usual decomposition with remainder \(\zeta _{M,\varepsilon }\) is not suitable for the energy method. Indeed, it would introduce a term which cannot be cancelled or controlled by the available quantities. We overcome this issue by working rather with the decomposition \(\varphi _{M,\varepsilon }=X_{M,\varepsilon }+Y_{M, \varepsilon }+\phi _{M,\varepsilon }\) defined in the sequel. Note that a similar modification of the paracontrolled ansatz has been necessary to construct a renormalized control problem for the KPZ equation in [GP17]. Here, the price to pay is that the auxiliary variables \(Y_{M,\varepsilon }\), \(\phi _{M,\varepsilon }\) are not stationary. Thus, in Section 4.3 we go back to the usual stationary decomposition.

If \({\mathscr {U}}^{\varepsilon }_{>}\) is a localizer defined for some given constant \(L > 0\) according to Lemma A.12, we let \(Y_{M, \varepsilon }\) be the solution of (3.6) hence

Note that this is an equation for \(Y_{M, \varepsilon }\), which also implies that \(Y_{M, \varepsilon }\) is not a polynomial of the Gaussian noise. However, as shown in the following lemma, \(Y_{M, \varepsilon }\) can be constructed as a fixed point provided L is large enough.

Lemma 4.1

There exist \(L_{0}=L_{0}(\lambda )\geqslant 0\) and \(L=L(\lambda ,M,\varepsilon ) \geqslant 0\) with a (not relabeled) subsequence satisfying \(L(\lambda ,M,\varepsilon )\rightarrow L_{0}\) as \(\varepsilon \rightarrow 0\), \(M\rightarrow \infty \), such that (3.6) with \({\mathscr {U}}^{\varepsilon }_{>}\) determined by L has a unique solution \(Y_{M, \varepsilon }\) that belongs to \(C_T {\mathscr {C}}^{1 / 2 - \kappa } (\rho ^{\sigma }) \cap C_T^{\beta / 2} L^{\infty } (\rho ^{\sigma })\). Furthermore,

where the proportionality constant is independent of \(M,\varepsilon \).

Proof

We define a fixed point map

for some \(L > 0\) to be chosen below. Then in view of the Schauder estimates from Lemma 3.4 in [MP17], the paraproduct estimates as well as Lemma A.12, we have

for some \(\delta \in (0, 1)\) independent of \(\lambda , M,\varepsilon \) provided \(L=L(\lambda , M,\varepsilon )\) in the definition of the localizer \({\mathscr {U}}^{\varepsilon }_{>}\) is chosen to be the smallest \(L\geqslant 0\) such that

In particular, we have that

which will be used later in order to estimate the complementary operator \({\mathscr {U}}^{\varepsilon }_{\leqslant }\) by Lemma A.12. Note that \(L(\lambda ,{M,\varepsilon })\) a priori depends on \(M,\varepsilon \). However, due to the uniform bound on

valid for some \(\gamma \in (0,1)\), we may use compactness to deduce that for every fixed \(\lambda >0\) there exists a subsequence (not relabeled) such that \(L(\lambda ,M,\varepsilon )\rightarrow L_{0}(\lambda )\). This will also allow us to identify the limit of the localized term below in Section 6.

Next, we estimate

Therefore we deduce that \({\mathcal {K}}\) leaves balls in \(C_T {\mathscr {C}}^{1 / 2 - \kappa , \varepsilon } (\rho ^{\sigma })\) invariant and is a contraction on \(C_T {\mathscr {C}}^{1 / 2 - \kappa , \varepsilon } (\rho ^{\sigma })\). Hence there exists a unique fixed point \(Y_{M, \varepsilon }\) and the first bound follows. Next, we use the Schauder estimates (see Lemma 3.10 in [MP17]) to bound the time regularity as follows

The proof is complete. \(\quad \square \)
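The mechanism of the proof, a map that is contractive because the localized paraproduct carries a small factor, can be illustrated by a finite-dimensional caricature. Everything in the sketch is an assumption made for illustration: the vector c stands in for the contribution of \(\llbracket X^3_{M, \varepsilon } \rrbracket \), the matrix M (with sup-norm operator norm at most 1) for the linear dependence on Y, and \(\delta \) for the contraction constant obtained by choosing L large.

```python
def apply_map(y, c, m, delta):
    """Toy fixed point map K(y) = c + delta * M y."""
    return [c[i] + delta * sum(m[i][j] * y[j] for j in range(len(y)))
            for i in range(len(y))]

def sup_norm(v):
    return max(abs(x) for x in v)

def picard(c, m, delta, tol=1e-13, max_iter=10_000):
    """Iterate K from zero until successive iterates differ by less than tol."""
    y = [0.0] * len(c)
    for n in range(max_iter):
        y_next = apply_map(y, c, m, delta)
        if sup_norm([a - b for a, b in zip(y_next, y)]) < tol:
            return y_next, n + 1
        y = y_next
    raise RuntimeError("Picard iteration did not converge")

# Rows have absolute row sums at most 1, so M has sup-norm operator norm at most 1.
M_TOY = [
    [0.5, -0.5, 0.0],
    [0.25, 0.25, 0.5],
    [0.0, 1.0, 0.0],
]
C_TOY = [1.0, -2.0, 0.5]
DELTA = 0.5

y_fixed, iterations = picard(C_TOY, M_TOY, DELTA)
residual = sup_norm([a - b for a, b in zip(apply_map(y_fixed, C_TOY, M_TOY, DELTA), y_fixed)])
a_priori_bound = sup_norm(C_TOY) / (1 - DELTA)
print(y_fixed, iterations, residual, a_priori_bound)
```

The a priori bound \(\Vert y \Vert \leqslant \Vert c \Vert / (1 - \delta )\) is the toy analogue of the statement that the norm of \(Y_{M, \varepsilon }\) is controlled by the norm of the forcing, even though the fixed point itself is not a polynomial in c.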

According to this result, we remark that \(Y_{M, \varepsilon }\) itself is not a polynomial in the noise terms, but with our choice of localization it allows for a polynomial bound of its norm. As the next step, we introduce further stochastic objects needed below. Namely,

where \(b_{M, \varepsilon }, \tilde{b}_{M, \varepsilon } (t)\) are suitable renormalization constants. It follows from standard estimates that \(| \tilde{b}_{M, \varepsilon } (t) - b_{M, \varepsilon } | \lesssim | \log t |\) uniformly in \(M, \varepsilon \). We denote collectively

These objects can be constructed similarly to the usual \(\Phi ^{4}_{3}\) terms, see e.g. [GH18, Hai15, MWX16]. Note that \({\mathbb {X}}_{M, \varepsilon }\) does not contain every stochastic object used below: one of them is omitted since it can be controlled by \(\llbracket X_{M, \varepsilon }^2 \rrbracket \) using Schauder estimates. In order to have a precise control of the number of copies of X appearing in each stochastic term we define \(\Vert {\mathbb {X}}_{M,\varepsilon }\Vert \) as the smallest number bigger than 1 and all the quantities

Note that it is bounded uniformly with respect to \(M, \varepsilon \). Besides, if we do not need to be precise about the exact powers, we denote by \(Q_{\rho } ({\mathbb {X}}_{M, \varepsilon })\) a generic polynomial in the above norms of the noise terms \({\mathbb {X}}_{M, \varepsilon }\), whose coefficients depend on \(\rho \) but are independent of \(M,\varepsilon , \lambda \), and change from line to line.

4.2 Decomposition and uniform estimates

With the above stochastic objects at hand, we let \(\varphi _{M, \varepsilon }\) be a stationary solution to (3.1) on \(\Lambda _{M, \varepsilon }\) having at each time \(t \geqslant 0\) the law \(\nu _{M, \varepsilon }\). We consider its decomposition \(\varphi _{M, \varepsilon } = X_{M, \varepsilon } + Y_{M, \varepsilon } + \phi _{M, \varepsilon }\) and deduce that \(\phi _{M, \varepsilon }\) satisfies

Our next goal is to derive energy estimates for (4.7) which hold true uniformly in both parameters \(M, \varepsilon \). To this end, we recall that all the distributions above were extended periodically to the full lattice \(\Lambda _{\varepsilon }\). Consequently, apart from the stochastic objects, the renormalization constants and the initial conditions, all the operations in (4.7) are independent of M. Therefore, for notational simplicity, we fix the parameter M and omit the dependence on M throughout the rest of this subsection. The following series of lemmas serves as a preparation for our main energy estimate established in Theorem 4.5. Here, we make use of the approximate duality operator \(D_{\rho ^{4},\varepsilon }\) as well as the commutators \(C_{\varepsilon },\,\tilde{C}_{\varepsilon }\) and \(\bar{C}_{\varepsilon }\) introduced in Section A.3.

Lemma 4.2

It holds

with

and

Proof

Noting that (4.7) is of the form \({\mathscr {L}}_{\varepsilon } \phi _{\varepsilon } +\lambda \phi _{\varepsilon }^3 = U_{\varepsilon }\), we may test this equation by \(\rho ^4 \phi _{\varepsilon }\) to deduce

with

and

We use the fact that \((f \succ )\) is an approximate adjoint to \((f\circ )\) according to Lemma A.13 to rewrite the resonant term as

and use the definition of \(\psi \) in (4.9) to rewrite \(\Phi _{\rho , \varepsilon }\) as

For the first term we write

Next, we use again Lemma A.13 to simplify the quadratic term as

hence Lemma A.14 leads to

We conclude that

As the next step, we justify the definition of the resonant product appearing in \(\Psi _{\rho ^4, \varepsilon }\) and show that it is given by \(Z_{\varepsilon }\) from the statement of the lemma. To this end, let

and recall the definition of \(Y_{M,\varepsilon }\) in (4.1). Hence by Lemma A.14

which is the desired formula. In this formulation we clearly see the structure of the renormalization and the appropriate combinations of resonant products and the counterterms.

\(\square \)

As the next step, we estimate the new stochastic terms appearing in Lemma 4.2. Here and in the sequel, \(\vartheta =O(\kappa )>0\) denotes a generic small constant which changes from line to line.

Lemma 4.3

It holds true

Proof

By definition of \(Z_{\varepsilon }\) and the discussion in Section 4.1, Lemma 4.1, Lemma A.14, Lemma A.12 and (4.2) we have (since the choice of exponent \(\sigma > 0\) of the weight corresponding to the stochastic objects is arbitrary, \(\sigma \) changes from line to line in the sequel)

and the first claim follows since \(\sigma > 0\) was chosen arbitrarily.

Next, we recall (4.1) and the fact that  can be constructed without any renormalization in \(C_T {\mathscr {C}}^{- \kappa , \varepsilon } (\rho ^{\sigma })\). As a consequence, the resonant term reads

where for the second term we have (since \({\mathscr {U}}^{\varepsilon }_{>}\) is a contraction) that

For the two paraproducts we obtain directly

We proceed similarly for the remaining term, which is quadratic in \(Y_{\varepsilon }\). We have

Accordingly,

and for the paraproducts

This gives the second bound from the statement of the lemma. \(\quad \square \)

Let us now proceed with our main energy estimate. In view of Lemma 4.2, our goal is to control the terms in \(\Theta _{\rho ^4, \varepsilon } + \Psi _{\rho ^4, \varepsilon }\) by quantities of the form

where \(\delta > 0\) is a small constant which can change from line to line. Indeed, with such a bound in hand it will be possible to absorb the norms of \(\phi _{\varepsilon }, \psi _{\varepsilon }\) from the right hand side of (4.8) into the left hand side and a bound for \(\phi _{\varepsilon }, \psi _{\varepsilon }\) in terms of the noise terms will follow.
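The absorption step described here can be illustrated schematically. The following is only a sketch: the quantities \(A\), \(B\), the conjugate exponents \(p, q\) and the constant \(C_{\delta }\) are placeholders introduced for illustration and are not the precise norms appearing in (4.8).

```latex
% Young's inequality with a small parameter:
% for a, b >= 0, delta > 0 and 1/p + 1/q = 1
\[
  a b \;\leqslant\; \delta \, a^{p} + C_{\delta }\, b^{q},
  \qquad C_{\delta } = \frac{(p \delta )^{-q/p}}{q}.
\]
% Absorption: if A is finite and delta lies in (0,1), then
\[
  A \;\leqslant\; \delta A + C_{\delta } B
  \quad \Longrightarrow \quad
  A \;\leqslant\; \frac{C_{\delta }}{1-\delta }\, B .
\]
```

In this picture \(A\) plays the role of the norms of \(\phi _{\varepsilon }, \psi _{\varepsilon }\) on the left hand side and \(B\) that of the noise terms; the finiteness of \(A\) is what makes the subtraction legitimate.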

Lemma 4.4

Let \(\rho \) be a weight such that \(\rho ^{\iota } \in L^{4, 0}\) for some \(\iota \in (0, 1)\). Then

where \(\theta =\frac{1/2-4\kappa }{1-2\kappa }\).

Proof

Since the weight \(\rho \) is polynomial and vanishes at infinity, we may assume without loss of generality that \(0 < \rho \leqslant 1\) and consequently \(\rho ^{\alpha } \leqslant \rho ^{\beta }\) whenever \(\alpha \geqslant \beta \geqslant 0\). We also observe that due to the integrability of the weight (see Lemma A.6)

with a constant that depends only on \(\rho \). In the sequel, we repeatedly use various results for discrete Besov spaces established in Section A. Namely, the equivalent formulation of the Besov norms (Lemma A.1), the duality estimate (Lemma A.2), interpolation (Lemma A.3), embedding (Lemma A.4), a bound for powers of functions (Lemma A.7) as well as bounds for the commutators (Lemma A.14).

Even though it is not necessary for the present proof, we keep track of the precise power of the quantity \(\Vert {\mathbb {X}}_{\varepsilon }\Vert \) in each of the estimates. This will be used in Section 4.4 below to establish the stretched exponential integrability of the fields. We recall that \(\vartheta =O(\kappa )>0\) denotes a generic small constant which changes from line to line.

In view of Lemma 4.2 we shall bound each term on the right hand side of (4.8). We have

This term can be absorbed provided \(C_{\rho }=\Vert \rho ^{-4}[\nabla _{\varepsilon }, \rho ^4]\Vert _{L^{\infty , \varepsilon }}\) is sufficiently small, such that \(C_{\delta } C^2_{\rho } \leqslant m^2\), which can be obtained by choosing \(h > 0\) small enough (depending only on \(m^2\) and \(\delta \)) in the definition (2.2) of the weight \(\rho \). Next,

and we estimate explicitly

for another constant \(C_{\rho }\) depending only on the weight \(\rho \), which can be taken smaller than \(m^2\) by choosing \(h > 0\) small, and consequently

since \(\sigma \) is sufficiently small.

Using Lemma A.2, Lemma A.7, interpolation from Lemma A.3 with \(\theta = \frac{1 - 4 \kappa }{1 - 2 \kappa }\) and Young’s inequality we obtain

Recall that since \(\sigma \) is chosen small, we have the interpolation inequality (see Lemma A.3)

where \(\theta = \frac{1 / 2 - 3 \kappa }{1 - 2 \kappa }\). Similar interpolation inequalities will also be employed below. Then, in view of Lemma A.13 and Young’s inequality, we have

Similarly,

where we further estimate by Schauder and paraproduct estimates

and hence we deduce by interpolation with \(\theta = \frac{1 - 6 \kappa }{1 - 2 \kappa }\) and embedding that

Due to Lemma A.14 and interpolation with \(\theta =\frac{1 - 5 \kappa }{1 - 2 \kappa }\), we obtain

Then we use the paraproduct estimates, the embedding \({\mathscr {C}}^{1 / 2 -\kappa , \varepsilon } (\rho ^{\sigma }) \subset H^{1 / 2 - 2 \kappa , \varepsilon } (\rho ^{2 - \sigma / 2})\) (which holds due to the integrability of \(\rho ^{4 \iota }\) for some \(\iota \in (0, 1)\) and the fact that \(\sigma \) can be chosen small), together with Lemma 4.1 and interpolation to deduce for \(\theta =\frac{1/2 - 5 \kappa }{1 - 2 \kappa }\) that

Next, we have

Here we employ Lemma A.7 and interpolation to obtain for \(\theta = \frac{1 / 2 - 4 \kappa }{1 - 2 \kappa }\)

and similarly for the other two terms, where we also use Lemma 4.3 and the embedding \(H^{1 - 2 \kappa , \varepsilon } (\rho ^2) \subset H^{1 / 2 + 2 \kappa , \varepsilon } (\rho ^{3 - \iota - \sigma })\) and \(H^{1 / 2 + 2 \kappa , \varepsilon } (\rho ^2) = B_{2, 2}^{1 / 2 + 2 \kappa , \varepsilon } (\rho ^2) \subset B_{1, 1}^{1 / 2 + \kappa , \varepsilon } (\rho ^{4 - \sigma })\) together with interpolation with \(\theta = \frac{1 / 2 - 4 \kappa }{1 - 2 \kappa }\)

Next, we obtain

and similarly

Then, by (4.2)

and finally for \(\theta = \frac{1 / 2 - 4 \kappa }{1 - 2 \kappa }\)

The proof is complete. \(\quad \square \)
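The values of \(\theta \) used in the proof above follow a common pattern, which the next sketch makes explicit. It assumes (the precise statement is Lemma A.3) that one interpolates between regularity 0 and regularity \(1-2\kappa \), with \(\theta \) the exponent carried by the low-regularity norm; the weights are suppressed, and the symbol \(s\) for the intermediate regularity is introduced here only for illustration.

```latex
% Unweighted model of the interpolation inequality (assumed convention)
\[
  \Vert f \Vert _{H^{s}} \lesssim
  \Vert f \Vert _{L^{2}}^{\theta }\, \Vert f \Vert _{H^{1-2\kappa }}^{1-\theta },
  \qquad
  s = (1-\theta )(1-2\kappa )
  \;\Longleftrightarrow \;
  \theta = \frac{1-2\kappa -s}{1-2\kappa }.
\]
% Two instances matching exponents quoted in the proof
\[
  s = \tfrac{1}{2}+\kappa \;\Rightarrow \; \theta = \frac{1/2-3\kappa }{1-2\kappa },
  \qquad
  s = \tfrac{1}{2}+2\kappa \;\Rightarrow \; \theta = \frac{1/2-4\kappa }{1-2\kappa }.
\]
```

Under this reading, each additional loss of \(\kappa \) in the target regularity lowers the numerator of \(\theta \) by \(\kappa \).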

We now have everything in hand to establish our main energy estimate.

Theorem 4.5

Let \(\rho \) be a weight such that \(\rho ^{\iota } \in L^{4, 0}\) for some \(\iota \in (0, 1)\). There exists a constant \(\alpha =\alpha (m^{2}) \in (0,1)\) such that for \(\theta =\frac{1/2-4\kappa }{1-2\kappa }\)

Proof

As a consequence of (4.9), we have according to Lemma A.5, Lemma A.4, Lemma A.1

Therefore, according to Lemma 4.4 we obtain that

Choosing \(\delta > 0\) sufficiently small (depending on \(m^2\) and the implicit constant C from Lemma A.5) allows us to absorb the norms of \(\phi _{\varepsilon }, \psi _{\varepsilon }\) from the right hand side into the left hand side and the claim follows. \(\quad \square \)

Remark 4.6

We point out that the requirement of a strictly positive mass \(m^{2}>0\) is to some extent superfluous for our approach. To be more precise, if \(m^{2}\leqslant 0\) then we may rewrite the mollified stochastic quantization equation as

and the same decomposition as above introduces an additional term on the right hand side of (4.8). This can be controlled by

where we write \(C_{\delta ,\lambda ^{-1}}\) to stress that the constant is not uniform over small \(\lambda \). As a consequence, we obtain an analogue of Theorem 4.5 but the uniformity for small \(\lambda \) is not valid anymore.
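One way in which a constant of the type \(C_{\delta ,\lambda ^{-1}}\) can arise is sketched below. This is an illustration under assumptions, not the estimate of the remark itself: \(c \geqslant 0\) is a hypothetical coefficient of the additional linear term, the norms are written in continuum notation, and \(\Vert \rho \Vert _{L^{4}}\) is finite because \(0 < \rho \leqslant 1\) and \(\rho ^{\iota } \in L^{4}\) with \(\iota \in (0,1)\).

```latex
% Pointwise Young inequality  a b <= (delta lambda) a^2 + b^2 / (4 delta lambda)
% applied with  a = rho^2 phi^2  and  b = c rho^2
\[
  c\, \Vert \rho ^{2} \phi \Vert _{L^{2}}^{2}
  = \int (\rho ^{2} \phi ^{2})\, (c \rho ^{2})
  \;\leqslant\;
  \delta \lambda \, \Vert \rho \phi \Vert _{L^{4}}^{4}
  + \frac{c^{2}}{4 \delta \lambda }\, \Vert \rho \Vert _{L^{4}}^{4}.
\]
```

The first term can be absorbed into the quartic term of the energy, while the second is a constant that blows up like \(\lambda ^{-1}\) as \(\lambda \rightarrow 0\), in line with the loss of uniformity for small \(\lambda \).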

Corollary 4.7

Let \(\rho \) be a weight such that \(\rho ^{\iota } \in L^{4, 0}\) for some \(\iota \in (0, 1)\). Then for all \(p \in [1, \infty )\) and \(\theta =\frac{1/2-4\kappa }{1-2\kappa }\)

Proof

Based on (4.22) we obtain

The \(L^4\)-norm on the left hand side can be estimated from below by the \(L^2\)-norm, whereas on the right hand side we use Young’s inequality to deduce

Hence we may absorb the second term from the right hand side into the left hand side.
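The lower bound of the \(L^4\)-norm by the \(L^2\)-norm can be sketched as follows. The particular weights \(\rho \) and \(\rho ^{2}\) are assumed here to match those in (4.22), and the norms are written in continuum notation; the same Cauchy–Schwarz argument applies to the lattice sums.

```latex
% Cauchy--Schwarz applied to the product  rho^2 * (rho phi)^2
\[
  \Vert \rho ^{2} \phi \Vert _{L^{2}}^{2}
  = \int \rho ^{2}\, (\rho \phi )^{2}
  \;\leqslant\;
  \Vert \rho \Vert _{L^{4}}^{2}\, \Vert \rho \phi \Vert _{L^{4}}^{2},
  \qquad \text{hence} \qquad
  \Vert \rho \phi \Vert _{L^{4}}^{4}
  \;\geqslant\;
  \Vert \rho \Vert _{L^{4}}^{-4}\, \Vert \rho ^{2} \phi \Vert _{L^{2}}^{4}.
\]
```

Since \(0 < \rho \leqslant 1\) and \(\rho ^{\iota } \in L^{4}\) for some \(\iota \in (0,1)\), the norm \(\Vert \rho \Vert _{L^{4}}\) is finite and depends only on the weight.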

\(\square \)

4.3 Tightness of the invariant measures

Recall that \(\varphi _{M, \varepsilon }\) is a stationary solution to (3.1) having at time \(t \geqslant 0\) law given by the Gibbs measure \(\nu _{M, \varepsilon }\). Moreover, we have the decomposition \(\varphi _{M, \varepsilon } = X_{M, \varepsilon } + Y_{M, \varepsilon } + \phi _{M, \varepsilon }\), where \(X_{M, \varepsilon }\) is stationary as well. By our construction, all equations are solved on a common probability space, say \((\Omega , {\mathcal {F}}, {\mathbb {P}})\), and we denote by \({\mathbb {E}}\) the corresponding expected value. In addition, we assume that the processes \(\varphi _{M,\varepsilon }\) and \(X_{M,\varepsilon }\) are jointly stationary. This could be achieved for instance by considering a solution to the coupled SDE for \((\varphi _{M,\varepsilon },X_{M,\varepsilon })\) starting from the product of the corresponding marginal invariant measures, and applying Krylov–Bogoliubov’s argument.

Theorem 4.8

Let \(\rho \) be a weight such that \(\rho ^{\iota } \in L^{4, 0}\) for some \(\iota \in (0, 1)\). Then for every \(p \in [1, \infty )\)

Proof

Let us show the first claim. Due to stationarity of \(\varphi _{M, \varepsilon } - X_{M, \varepsilon } = Y_{M, \varepsilon } + \phi _{M, \varepsilon }\) we obtain

In order to estimate the right hand side, we employ Theorem 4.5 together with Lemma 4.1 to deduce

Finally, taking \(\tau > 0\) large enough, we may absorb the second term from the right hand side into the left hand side to deduce

Observing that the right hand side is bounded uniformly in \(M,\varepsilon \) completes the proof of the first claim.

Now, we show the second claim for \(p \in [2, \infty )\). The case \(p \in [1, 2)\) then follows easily from the bound for \(p=2\). Using stationarity as above we have

Due to Corollary 4.7 applied to \(p - 1\) and the fact that for any \(\sigma >0\) and \(\tau \geqslant 1\)

we deduce

Plugging this back into (4.25) and using Young’s inequality we obtain

Taking \(\tau = {\max }(1,\lambda ^{{-2p}})\) leads to

and choosing \(\delta >0\) small enough, we may absorb the second term on the right hand side into the left hand side and the claim follows. \(\quad \square \)
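The way stationarity enters both parts of the proof can be summarized by the following elementary identity. It is stated as a sketch for a generic stationary process \(f\) and a generic nonnegative measurable functional \(F\), both of which are placeholders introduced here.

```latex
% For a stationary process f, the law of f(s) does not depend on s, so
\[
  {\mathbb {E}}\, F (f (0))
  = \frac{1}{\tau } \int _{0}^{\tau } {\mathbb {E}}\, F (f (s))\, \mathrm {d} s
  \qquad \text{for every } \tau > 0 .
\]
```

A time-integrated bound from the energy estimate therefore yields a bound at a fixed time, and the factor \(1/\tau \) is what allows terms depending on the initial condition to be made small by taking \(\tau \) large.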

The above result directly implies the desired tightness of the approximate Gibbs measures \(\nu _{M, \varepsilon }\). To formulate this precisely we make use of the extension operators \({\mathcal {E}}^{\varepsilon }\) for distributions on \(\Lambda _{\varepsilon }\) constructed in Section A.4. We recall that on the approximate level the stationary process \(\varphi _{M, \varepsilon }\) admits the decomposition \( \varphi _{M, \varepsilon } = X_{M, \varepsilon } + Y_{M, \varepsilon } + \phi _{M, \varepsilon }, \) where \(X_{M,\varepsilon }\) is stationary and \(Y_{M,\varepsilon }\) is given by (4.1) with  being also stationary. Accordingly, letting

we obtain  , where all the summands are stationary.

The next result shows that the family of joint laws of  at any chosen time \(t\geqslant 0\) is tight. In addition, we obtain bounds for arbitrary moments of the limiting measure. To this end, we denote by  a canonical representative of the random variables under consideration and let  .

Theorem 4.9

Let \(\rho \) be a weight such that \(\rho ^{\iota } \in L^4\) for some \(\iota \in (0, 1)\). Then the family of joint laws of  , \(\varepsilon \in {\mathcal {A}},M>0,\) evaluated at an arbitrary time \(t \geqslant 0\) is tight on \(H^{-1/2-3\kappa }(\rho ^{2+\kappa })\times {\mathscr {C}}^{-1/2-\kappa }(\rho ^{\sigma })\times {\mathscr {C}}^{1/2-\kappa }(\rho ^{\sigma })\). Moreover, any limit probability measure \(\mu \) satisfies for all \(p \in [1, \infty )\)

Proof

Since by Lemma A.15

uniformly in \(M,\varepsilon \), we deduce from Theorem 4.8 that

uniformly in \(M,\varepsilon \). Integrating (4.24) in time and using the decomposition of \(\varphi _{M,\varepsilon }\) leads to

Hence due to Theorem 4.8 we obtain a uniform bound

for all \(t\geqslant 0\). In addition, the following expressions are bounded uniformly in \(M, \varepsilon \) according to Lemma 4.1 and Theorem 4.5

whenever the weight \(\rho \) is such that \(\rho ^{\iota } \in L^4\) for some \(\iota \in (0, 1)\). In view of stationarity of \(\zeta _{M, \varepsilon }\) and the embedding \({\mathscr {C}}^{1 - \kappa , \varepsilon } (\rho ^{\sigma }) \subset H^{1 - 2 \kappa , \varepsilon } (\rho ^2)\), we therefore obtain a uniform bound \( {\mathbb {E}} \Vert \zeta _{M, \varepsilon } (t) \Vert _{H^{1 - 2 \kappa , \varepsilon } (\rho ^2)}^2 \lesssim \lambda ^2 + \lambda ^{7}\) as well as \({\mathbb {E}} \Vert \zeta _{M, \varepsilon } (t) \Vert _{L^{2,\varepsilon } (\rho ^2)}^{2p} \lesssim {\lambda ^{p}+\lambda ^{3p+4}+\lambda ^{4p}}\) for every \(t \geqslant 0\). Similarly, using stationarity together with the embedding \({\mathscr {C}}^{1 - \kappa , \varepsilon } (\rho ^{\sigma }) \subset B^{0, \varepsilon }_{4, \infty } (\rho )\) as well as \(L^{4, \varepsilon } (\rho ) \subset B^{0, \varepsilon }_{4, \infty }(\rho )\) we deduce a uniform bound \({\mathbb {E}} \Vert \zeta _{M, \varepsilon } (t) \Vert _{B^{0, \varepsilon }_{4, \infty } (\rho )}^4 \lesssim {\lambda + \lambda ^{6}}\) for every \(t \geqslant 0\).

Consequently, by Lemma A.15 the same bounds hold for the corresponding extended distributions and hence the family of joint laws of  at any time \(t\geqslant 0\) is tight on \(H^{-1/2-3\kappa }(\rho ^{2+\kappa })\times {\mathscr {C}}^{-1/2-\kappa }(\rho ^{\sigma })\times {\mathscr {C}}^{1/2-\kappa }(\rho ^{\sigma })\). Indeed, this is a consequence of the compact embedding