Abstract

Tool condition monitoring (TCM) is a means of optimizing production systems by making the best use of cutting tool life. Nevertheless, currently available TCM algorithms typically lack the robustness needed for consistent application in industrial scenarios. In this paper, an unsupervised artificial intelligence technique based on Growing Self-Organizing Maps (GSOM) is presented in synergy with real-time estimation of specific force coefficients (SFC) through the regression of instantaneous cutting forces. The conceived approach allows robust mapping of the SFC, exploiting process parameters and similarity to manage the variability of their estimation due to unmodelled phenomena, such as machine dynamics and tool run-out. The approach detected the tool end-of-life in cutting tests with variable lubrication, machine tool and cutting speed, through the adoption of a self-starting control chart running on real-time clustered data. The solution was validated by comparing the GSOM framework with the optimized self-starting control chart applied without GSOM clustering. The GSOM reached a root mean squared percentage error (RMSPE) of 13.2%, compared with 56.1% obtained with the analogous control chart in a full-set optimization scenario. When optimized on tests from a single machine tool and tested on another machine tool, the GSOM scored an RMSPE of 34.5%, whereas the optimized control chart scored 64.5%.


1 Introduction

Manufacturing systems require increased accuracy, flexibility, and reliability to face market demand. The capability of assessing the tool condition in real time is receiving increasing attention from production system manufacturers [Bernini et al. [6]], since such information can reduce machine downtimes and maintenance costs, and can also be fed into process optimization algorithms [Mia et al. [28]]. Tool condition monitoring (TCM) techniques allow for the detection of worn cutting tools either from direct wear measurements (i.e., quantities directly associated with tool wear, like cutting edge pictures) or from indirect wear measurements (i.e., quantities that carry underlying information about wear). The development of an indirect TCM solution is the main focus of this paper. In general, tool condition monitoring and prognostics strategies belong to four categories [Peng et al. [30], Baur et al. [4, 5], Pimenov et al. [31]]: knowledge-based, model-based, statistical-based and data-driven. Knowledge-based approaches include fuzzy logic and expert systems, which try to translate experts’ knowledge into rules. Model-based approaches exploit dynamical models of wear evolution: they typically outperform other methods, but such models are generally not available for complex degradation phenomena. Statistical-based methods identify model parameters from the data, introducing the concept of confidence in the tool condition estimation. Data-driven approaches build the model and estimate the model coefficients directly from data, learning complex correlations between signals and degradation but, in general, preventing model interpretation.

In milling operations, the main limitation of TCM techniques based on indirect measurements concerns the management of variable regimes or process conditions. The most used signals in TCM are vibrations (e.g. [Cheng et al. [11], McLeay et al. [27]]), cutting forces or torque (as in [Colosimo et al. [13], Cheng et al. [11], Jiménez et al. [19], McLeay et al. [27]]) and acoustic emissions [McLeay et al. [27], Wickramarachchi et al. [34]], which are typically pre-processed through machine learning approaches [Liang et al. [24], Tao et al. [32]] or by manually extracting features like RMS, skewness and kurtosis, as in [Cheng et al. [11]]. These quantities depend on process parameters, such as depth of cut and feed per tooth, as well as on lubricating conditions and materials; this represents the main limitation for a consistent application of TCM approaches in industrial scenarios. Indeed, it would be necessary to perform run-to-failure tests with all the parameter combinations.

In order to overcome the above limitations of traditional TCM algorithms, an unsupervised TCM strategy for gradual wear assessment is proposed in this paper, based on the online identification of Specific Force Coefficients (SFC) and artificial intelligence. SFC are estimated from the mechanistic milling model introduced in [Bernini et al. [7, 8]], which refers to Altintas’ model [Altintas [3]]. Mechanistic milling models include a geometric description of the cutting process, thus relating the undeformed chip thickness formulation to the cutting forces through the SFC. The development of a TCM strategy based on SFC estimation allows, at least from a theoretical point of view, reducing the dependence of the measured indirect variables (i.e., cutting forces) on process parameters. Many improvements to Altintas’ model have been introduced by researchers: Kumanchik and Schmitz, together with Matsumura and Tamura, included run-out and the trochoidal trajectory of the teeth in the model [Kumanchik and Schmitz [22], Matsumura and Tamura [26]]; Wan et al. decomposed the cutting forces into nominal and run-out effects [Wan et al. [33]]; Kilic and Altintas developed a general model for chip removal operations including machine dynamics and run-out [Kilic and Altintas [20, 21]]; Li et al. included the contribution of more than one previous tooth in the computation of the undeformed chip thickness [Li et al. [23]]; Zhang et al. included the minimum chip thickness [Zhang et al. [40]], while Zhou et al. introduced elastic recovery and variable entry/exit angles [Zhou et al. [42]]; Zhang et al. proposed the associated average uncut chip thickness formulation [Zhang et al. [41]]; Wojciechowski et al. proposed an original force model that included micro end milling kinematics, geometric errors of the machine tool-toolholder-mill system, both elastic and plastic deformations of the workpiece correlated with the minimum uncut chip thickness, tool flexibility and a novel instantaneous area of cut formulation [Wojciechowski et al. [35]]; finally, Hajdu et al. proposed a curved uncut chip thickness formulation [Hajdu et al. [17]]. In the conceived approach, the mechanistic milling model considers variable engagement along the mill axis and the double-phased geometry of the cutter.

SFC were selected as monitoring features for the tool condition by Nouri et al. [Nouri et al. [30]], using a method based on mean forces, following the classical approach from [Altintas [3]]. However, this method required experimental tests with continuously varying feed, which is not the typical case in parts production. Recently, methods based on instantaneous forces were introduced, relaxing this need [Guo et al. [16], Farhadmanesh and Ahmadi [15]]. Nevertheless, such methods lead to a higher uncertainty in the estimated cutting coefficients, due to machine dynamics, non-homogeneous materials and imperfect cutting models. Thus, it is necessary to properly analyse instantaneous SFC data. Another novelty of this paper is the introduction of a real-time post-processing layer on the estimated instantaneous SFC, in order to improve the robustness of the solution with respect to state-of-the-art TCM techniques.

In this paper, a TCM method based on instantaneous SFC mapping is introduced, which does not require a predefined database of cutting operations covering all the cutting combinations. The uncertainty in the estimation of SFC with instantaneous forces is shown to prevent a correct detection of an out-of-control cutting process. Thus, an unsupervised clustering technique (Growing Self-Organising Maps, GSOM) is introduced to deal with the estimation variability, adapting the solutions from [Liu et al. [25], Cholette et al. [12]], which were applied in other scenarios. The paper is structured as follows: in Sect. 2, the experimental activities are presented in detail, starting from the experimental set-ups, the milling strategies and cutting tools, up to the experimental campaign; the algorithm development and the validation procedures are explained in Sect. 3; in Sect. 4, the results are presented for two scenarios, namely optimisation on the full set of experiments and an industrial portability context. In Sect. 5, final considerations about the developed approach are drawn.

2 Materials

The objective of this section is to introduce the experimental activities underlying this research work. The structure of this section follows Fig. 1. The experimental campaign was conducted on two different machine tools for milling applications (Fig. 1A). The first one is a Mandelli M5 machine tool (M5, from now on), featuring a horizontal spindle system. The other machine tool is a Sigma Flexi FFG group (Flexi, from now on), featuring a vertical spindle unit. The machine tools are shown in Fig. 1B and C, respectively, together with the mill and the workpiece. The experiments were conducted on two machining centers in order to validate the robustness of the proposed approach. With the goal of highlighting the differences between the machine tools, Table 1 compares their identified modal parameters. The tooling system was composed of a Mitsubishi AJX06R203SA20S milling tool held by HSK80 and HSK63 tool-holders, for the M5 and Flexi, respectively. The milling tool, featuring a nominal diameter of \(20mm\), was equipped with three high-feed cutting inserts (JOMT06T216ZZER-JL MP9140). The high-feed cutting inserts had a double-phased cutting edge, with two consecutive sections with different lead angles (\(12.{5}^{\circ }\) and \(24.{5}^{\circ }\)). The major clearance angle was equal to \(13.{0}^{\circ }\) and the corner radius was equal to \(1.6mm\). The high-feed cutter also presented a wiper edge, \(1.2mm\) long. The rake angle was \(3.{9}^{\circ }\) in the first phase of the cutting tool and \(3.{7}^{\circ }\) in the second phase. The flank angles were equal to \(9.{1}^{\circ }\) and \(9.{3}^{\circ }\), for the first and second phase, respectively. The helix angle of the tool was \(4.{0}^{\circ }\).

Paper experimentation: (A) experimental campaign parameters; adopted machining centers: (B) M5 and (C) Flexi; (D) milling kinematics and representation of the mill trajectory; (E) qualitative representation of the effect of unmodeled terms in specific force coefficients estimation and their link with machining direction

Both machines were equipped with two lubrication systems: a conventional lubrication system and a cryogenic system. The measurement set-up consisted of two hardware parts. On the machine side, the SinuCom NC acquisition system from Siemens was used to access and store quantities (axis positions, speeds and torques) from the numerical control of the machine tools at a frequency of \(250Hz\). On the workpiece side, a dynamometric plate was installed in order to measure the cutting forces generated during the milling operations. The dynamometer was a Kistler 9255B, connected to a Kistler 5070A charge amplifier. The cutting forces were acquired through a NI cDAQ-9174, with a NI 9215 acquisition card, at a sampling frequency of \(5kHz\). Although dynamometric plates are the most accurate force transducers, they are impractical in industrial scenarios due to their limited size, mounting constraints and high cost. For industrial applications, indirect force estimation methods have been developed, which make use of acceleration and displacement measurements, and observers like the Kalman filter [Albertelli et al. [1]]. In order to tune such systems, a proper excitation of the structure should be introduced through the use of dynamometric hammers or ad-hoc loading devices [Zhang et al. [39]]. Therefore, basing a TCM strategy on cutting forces, as done in this paper, does not limit its industrial applicability. For the inspection of wear on the cutters, a Keyence VHX-7000 microscope was used.

A set of experimental run-to-failure tests was performed, bringing each cutter from a “new” condition to a fully degraded condition. A run-to-failure test was considered concluded when either an average flank wear of \(300\mu m\) or a maximum flank wear of \(600\mu m\) was reached. The workpiece was a \(255\) x \(262mm\) block made of \(T{i}_{6}A{l}_{4}V\), grade \(5\). The experimentation was performed with variable cutting conditions, i.e. changing machine tools (M5 and Flexi), cutting speeds (\(50m/min\), \(70m/min\) and \(125m/min\)) and lubrication set-ups (lubricant and cryogenic). The set of experimental tests consisted of five run-to-failure tests, for which the variable cutting conditions are reported in Table 2. The axial depth of cut \(a\) was set to \(0.4mm\), while the feed per tooth \(c\) was fixed to \(0.7mm\). Each experimental test consisted of a sequence of face-milling high-feed operations. The cutting process was performed following a down-milling strategy. The radial depth of cut \(b\) was set to \(13mm\) and was constant throughout the experiments. This was achieved through a mill trajectory with a spiral-inspired shape, as shown in Fig. 1D, offset by \(b\) once the whole perimeter of the workpiece was machined. Figure 1E shows the qualitative effect of unmodelled terms on the estimation of the specific force coefficients (explained in Sect. 3.1) and the link with the direction of cut. A summary of the experimental campaign is reported in Fig. 1A.

3 Methods

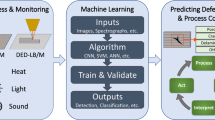

In this section, the algorithm is described, following the framework proposed in Fig. 2. The algorithm starts with the identification of SFC coefficients from the instantaneous cutting force measurements, by fitting a mechanistic cutting force model. Then, the Growing Self-Organizing Maps algorithm allows for anomaly detection and robust tool condition monitoring.

Algorithm framework: (A-B) identification of the specific force coefficients through the multivariate linear regression approach based on instantaneous cutting forces; (C) the unsupervised clustering of SFC based on GSOM (Growing Self-Organising Maps), where a self-starting control chart is run inside each GSOM’s region

3.1 SFC estimation

The conceived approach is based on the estimation of SFC during the milling test by means of multivariate linear regression on instantaneous forces (Fig. 2A–B). Since the geometrical features of the mill cutter are not traditional, it was necessary to apply an adequate formulation for the uncut chip thickness. In this case, the reference model was the one proposed in [Bernini et al. [7]]. The mathematical formulations for the high-feed tangential (\({F}_{t}\)) and radial (\({F}_{r}\)) instantaneous cutting forces are here re-proposed (Eq. (1)):

where \({K}_{tc}\), \({K}_{te}\), \({K}_{rc}\) and \({K}_{re}\) are the SFC; \({A}_{p}\) and \({A}_{c}\) are the areas underneath the previous and current tooth passes; \(l\) is the chip edge length. Cutting forces in the feed (\({F}_{x}\)) and normal (\({F}_{y}\)) directions are obtained by projecting and summing up each cutter contribution. The \({A}_{p}\) and \({A}_{c}\) areas are not typical components of cutting force models. We thus report a graphical representation of their meaning in Fig. 3. As can be seen in the figure, the difference between the above two terms represents the uncut chip area of the milling operation. The reader can find complete details about their computation in [Bernini et al. [7]]. A comment must be added about the choice of omitting \({K}_{ac}\) and \({K}_{ae}\), too. This is related to the industrial implementation of the developed algorithm. Since a dynamometric plate is typically not affordable in an industrial scenario, indirect force estimation methods should be used, as explained in Sect. 2. Such methods are developed to estimate only the in-plane components of cutting forces and not axial ones [Albertelli et al. [1]]. Indeed, no information about the \({K}_{ac}\) and \({K}_{ae}\) can be retrieved.

At this point, it is possible to formulate the instantaneous SFC identification procedure. The SFC estimation \({\widehat{{\varvec{\beta}}}}^{(o)}\) for the generic \(o\)-th package of cutting forces, consisting of three mill rotations, is obtained through Eq. (2) [Bernini et al. [8]]:

where the SFC (elements of \({\widehat{{\varvec{\beta}}}}^{(o)}\)) estimated through this formula will be indicated as \({\widehat{K}}_{tc}^{(o)}\), \({\widehat{K}}_{te}^{(o)}\), \({\widehat{K}}_{rc}^{(o)}\) and \({\widehat{K}}_{re}^{(o)}\); \({\mathbf{X}}^{(o)}\) is the design matrix; \({\mathbf{y}}^{(o)}\) is the vector containing the instantaneous cutting forces samples [Bernini et al. [8]]. The proposed method was based on linear regression, however other methods could be used for model identification [Farhadmanesh and Ahmadi [15],Wu et al. [36]].
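The regression step can be sketched as follows. This is a minimal illustration, assuming that Eq. (2) is the ordinary least-squares solution of \({\mathbf{y}}^{(o)}\approx {\mathbf{X}}^{(o)}{\varvec{\beta}}\); the function name `estimate_sfc`, the synthetic design matrix and the coefficient values are hypothetical and are not taken from the paper.

```python
import numpy as np

def estimate_sfc(X, y):
    """Least-squares estimate of [K_tc, K_te, K_rc, K_re] for one force package.

    X: (samples, 4) design matrix, y: (samples,) instantaneous force samples.
    """
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    return beta

# Synthetic package: all numbers below are made up for illustration only.
rng = np.random.default_rng(0)
true_beta = np.array([2000.0, 30.0, 800.0, 20.0])
X = rng.uniform(0.1, 1.0, size=(200, 4))             # hypothetical regressors
y = X @ true_beta + rng.normal(0.0, 1.0, size=200)   # noisy "measured" forces
beta_hat = estimate_sfc(X, y)
```

`np.linalg.lstsq` is used instead of explicitly inverting \({\mathbf{X}}^{T}\mathbf{X}\), since it remains usable when the regressors are nearly collinear, which is the situation discussed later for the instantaneous SFC.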

3.2 Growing Self-Organising Maps adaptation

Besides the approach of [Bernini et al. [8]], where principal component regression was used to reduce the effect of multi-collinearity, an AI approach capable of mapping similar behaviours of SFC and managing the SFC variability may be appropriate. Furthermore, this may also apply to different sources of variability for the SFC: the variability in machine dynamics along the cutting axis, tool variable run-outs, material heterogeneity are just some examples of possible causes for SFC variability issues. Here, an adaptation of the GSOM is proposed (Fig. 2C).

The GSOM is an unsupervised neural network that divides the input space into a variable number of regions (i.e. creating a map), through the Voronoi tessellation [Liu et al. [25]]. Each region \({V}_{m}\) is defined by a centroid \({{\varvec{\xi}}}_{m}\) with \(m=1,2,\ldots ,M\) (\(M\) being the total number of regions), Eq. (3):

where \(\mathbf{x}\) is a point belonging to the input space. Thus, a point of the input space belongs to the region with the nearest centroid. The centroids can move over time, giving the map its self-organisation property. Unlike Self-Organising Maps, GSOM are allowed to grow, i.e. to increase the number of centroids (and consequently regions) as time passes. This makes the GSOM a particularly suitable tool for unsupervised learning scenarios. For the purposes of this paper, the GSOM process begins with an initialisation phase. In this phase, the input space for the GSOM deployment is first selected. The input vectors are assumed to consist of \(p\) elements (here \(p=6\)), as reported in Eq. (4):

where \(c\) is the feed per tooth and \({\phi }_{c}\) is the angle between the feed direction and the x-axis in the x–y reference frame (i.e. the cutting direction). It should be noted that any other cutting parameter or measured quantity may be included here. The larger the dimension of the input space, the slower the algorithm. The feed per tooth was included since it may influence the estimation of the SFC (e.g. as assumed by exponential mechanistic models [Budak [10], Dang et al. [14], Yang et al. [38]]), whereas the cutting direction influences the cutting forces due to the different dynamic compliance of the machine tool in the x and y directions. The initialisation phase also includes the choice of the initial number of regions \(M\) (here, \(M=1\) is selected) and the definition of the associated centroid (here, the first measured input vector is proposed as the initial position of the centroid). From here on, the deployment of the GSOM takes place.
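A minimal sketch of the initialisation and of the nearest-centroid rule described above is given here. The ordering of the six elements in the input vector and the helper names `build_input_vector` and `nearest_region` are assumptions made for illustration; the numerical values are invented.

```python
import numpy as np

def build_input_vector(k_tc, k_te, k_rc, k_re, feed_per_tooth, phi_c):
    """Stack the four estimated SFC with feed per tooth and cutting direction (p = 6)."""
    return np.array([k_tc, k_te, k_rc, k_re, feed_per_tooth, phi_c], dtype=float)

def nearest_region(x, centroids):
    """Index of the region whose centroid is closest to x (Euclidean distance)."""
    distances = np.linalg.norm(centroids - x, axis=1)
    return int(np.argmin(distances))

# Initialisation with M = 1: the first measured input vector becomes the centroid.
x_first = build_input_vector(2000.0, 30.0, 800.0, 20.0, 0.7, 0.0)
centroids = x_first[np.newaxis, :].copy()

# A later (hypothetical) sample is assigned to the only available region.
x_new = build_input_vector(2100.0, 28.0, 820.0, 21.0, 0.7, np.pi / 2)
region = nearest_region(x_new, centroids)
```

Because the elements of the input vector have different units and magnitudes, the Euclidean distance is dominated by the largest ones; whether and how the inputs are scaled is not stated in the text, so no scaling is applied in this sketch.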

A new SFC estimation is available every three mill revolutions and, consequently, so is a new input vector \({\mathbf{x}}^{(o)}\). First of all, the GSOM computes the Best Matching Unit (BMU, Eq. (5)):

\(bmu\) represents the index of the region whose centroid is nearest to the input vector (according to the Euclidean distance). Once the BMU is selected, the input vector is assigned to and stored only within the BMU. A first hyper-parameter \(n\) of the network is defined here and referred to as the memory factor. \(n\) is the number of past input vectors retained within each region. Once a region collects \(n\) input vectors, their mean value \({\overline{\mathbf{x}}}_{m}\) and their estimated covariance matrix \({\mathbf{S}}_{m}\) are computed. In order to decide whether the GSOM should enter a growing phase or a learning phase, the squared Mahalanobis distance \({d}_{M}^{2}\left({\mathbf{x}}^{(o)},{\overline{\mathbf{x}}}_{bmu}^{(o-1)}\right)\) between the input vector \({\mathbf{x}}^{(o)}\) and \({\overline{\mathbf{x}}}_{bmu}^{(o-1)}\) is computed (Eq. (6)):

where \({\left({\mathbf{S}}_{bmu}^{(o-1)}\right)}^{-1}\) is the inverse of the covariance matrix \({\mathbf{S}}_{m}\) estimated for the BMU before sample \(o\). Equation (6) represents a measure of how far the new input vector is from the distribution of the previous \(n\) samples. Since the matrix \({\mathbf{S}}_{m}\) may be singular, the generalised inverse is computed. Thus, the eigenvalues (\({\lambda }_{m,i}\)) and the eigenvectors (\({{\varvec{\nu}}}_{m,i}\)) of the matrix \({\mathbf{S}}_{m}\) are first derived such that \({\mathbf{S}}_{m}{{\varvec{\nu}}}_{m,i}={\lambda }_{m,i}{{\varvec{\nu}}}_{m,i}\). Each eigenvalue is then normalised in order to represent the fraction of described variance by \({\rho }_{m,i}=\frac{{\lambda }_{m,i}}{{\sum }_{i}{\lambda }_{m,i}}\). The generalised inverse is then computed through Eq. (7):

Based on the Mahalanobis distance, it is possible to generate a prediction region for a new input vector:

where \(\mathcal{F}(p,n-p)\) is a Fisher distribution with a numerator having \(p\) degrees of freedom and a denominator having \(n-p\) degrees of freedom. This relation is valid for normally distributed past input vectors and creates an elliptical region in the input space: thus, fixing a confidence level \(\alpha\), the associated ellipse squared radius is given by the \({F}_{1-\alpha ,p,n-p}\) quantile. If this assumption is not verified, the ellipse radius can be tuned, for example through Monte Carlo approaches. Since, in this context, no assumptions can be made on the distribution of the past input vectors, the ellipse radius will be referred to as \({R}_{t}\) (growth threshold). The left-hand side term in Eq. (8) will be referred to as \(R\). Thus, the GSOM is allowed to grow only when \(R>{R}_{t}\). This condition implies that the input vector has changed dramatically with respect to the previous \(n\), thus representing a different cutting condition. It is assumed that gradual wear produces a more progressive evolution of the input vector.
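The growth test can be sketched as below. Two points are assumptions rather than the paper's stated formulas: the generalised inverse is built by discarding eigen-directions whose variance fraction \({\rho }_{m,i}\) falls below a small tolerance (`min_fraction`, a hypothetical parameter), and \(R\) is obtained by scaling the squared Mahalanobis distance with the textbook prediction-region factor \(\frac{n(n-p)}{p(n+1)(n-1)}\).

```python
import numpy as np

def generalised_inverse(S, min_fraction=1e-10):
    """Eigen-decomposition based inverse that skips (near-)null directions."""
    eigvals, eigvecs = np.linalg.eigh(S)          # S is symmetric
    total = eigvals.sum()
    if total <= 0:
        return np.zeros_like(S)
    keep = (eigvals / total) > min_fraction       # rho_i above tolerance
    return (eigvecs[:, keep] / eigvals[keep]) @ eigvecs[:, keep].T

def squared_mahalanobis(x, history):
    """Squared Mahalanobis distance of x from the n stored vectors of a region."""
    mean = history.mean(axis=0)
    S = np.cov(history, rowvar=False)
    diff = x - mean
    return float(diff @ generalised_inverse(S) @ diff)

def should_grow(x, history, r_t):
    """True when the scaled distance R exceeds the growth threshold R_t."""
    n, p = history.shape
    scale = n * (n - p) / (p * (n + 1) * (n - 1))  # assumed textbook scaling
    return scale * squared_mahalanobis(x, history) > r_t

# Hypothetical region memory: n = 50 stored vectors with p = 6 elements.
rng = np.random.default_rng(0)
history = rng.normal(size=(50, 6))
typical = history.mean(axis=0)
far_away = typical + 100.0
```

With this sketch, `should_grow(typical, history, r_t=3.0)` is false and `should_grow(far_away, history, r_t=3.0)` is true; the value `3.0` is arbitrary, since in the paper \({R}_{t}\) is a tuned hyper-parameter.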

It should be noted that Eq. (6) can be computed only once \(n\) input vectors are stored in the BMU. Thus, the GSOM is allowed to grow only when a significant sample size has been collected within the BMU. If the \(n\) samples have not yet been collected, or if \(R\le {R}_{t}\), the BMU is only allowed to learn. The learning phase consists of a smoothing process where the centroid \({{\varvec{\xi}}}_{bmu}\) is shifted towards the current input vector by Eq. (9):

where \(\eta\) is a hyper-parameter called the learning rate [Liu et al. [25]], which governs how quickly the centroids react to new input vectors.
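As an illustration of the learning phase, the snippet below assumes the usual self-organising map update, in which the centroid moves by a fraction \(\eta\) of its distance from the input vector. The exact form of Eq. (9) is not reproduced here, and the name `learn` and the numerical values are hypothetical.

```python
import numpy as np

def learn(centroid, x, eta):
    """Shift the BMU centroid towards the input vector x by a fraction eta."""
    return centroid + eta * (x - centroid)

# Repeatedly presenting the same input pulls the centroid towards it.
centroid = np.zeros(6)
target = np.ones(6)
for _ in range(20):
    centroid = learn(centroid, target, eta=0.25)
```

A small \(\eta\) makes the centroid a slowly varying average of the inputs assigned to the region, while \(\eta =1\) would make it jump to every new input.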

The described procedure automatically clusters the input vectors, and consequently the SFC, as soon as they arrive at the GSOM. From here, a post-processing of the SFC is carried out online, inside each region. When \({\mathbf{x}}^{(o)}\) is assigned to the BMU, and the growth or learning phase has been performed, two synthetic coefficients are computed following the approach presented in [Nouri et al. [30]]. These two coefficients (\({K}_{t}^{(o)}\) and \({K}_{r}^{(o)}\)) are computed through Eq. (10):

where \({\overline{K}}_{tc}\), \({\overline{K}}_{te}\), \({\overline{K}}_{rc}\) and \({\overline{K}}_{re}\) are the SFC means computed on the first \(N\) input vectors collected in the BMU. It is then possible to compute a summary indicator \({K}^{(o)}\), starting from \({K}_{t}^{(o)}\) and \({K}_{r}^{(o)}\):

While \({K}_{t}^{(o)}\) and \({K}_{r}^{(o)}\) are representative of the effect of tool wear on the tangential and radial forces, respectively, \({K}^{(o)}\) carries global wear information [Nouri et al. [30]]. These coefficients are computed in order to reduce the multicollinearity effect [Nouri et al. [30], Bernini et al. [8]].

From now on, the focus is redirected inside the \(bmu\) region. Indeed, the index \(o-1\) will refer to the last element of the \(bmu\) collected before the \(o\)-th one. The moving range of \({K}^{(o)}\) is computed through Eq. (12):

Following the processing method proposed in [Nouri et al. [30]], every \(N\) samples collected by the BMU, it is possible to compute a mean moving range, which will be the monitored variable \({v}_{k,bmu}\) (Eq. (13)):

Thus, each time \(N\) samples are collected within a region of the GSOM, a sample of the monitored variable \({v}_{k,bmu}\) becomes available. The monitoring process is performed through a self-starting control chart within each region of the GSOM. It should be noted that, in the GSOM, only one region at a time is activated and monitored. The control chart is reported following [Bernini et al. [8]] and [Montgomery [29]]. The running average is updated once \({v}_{k,bmu}\) becomes available (Eq. (14)):

The sum of squared deviations becomes (Eq. (15)):

The running standard deviation is updated through Eq. (16):

The k-th observation undergoes a standardisation performed through Eq. (17):

where \({T}_{k,bmu}\) is the standardised monitored variable. Following [Montgomery [29]; Bernini et al. [8]], the \({U}_{k,bmu}\) variable computed through Eq. (18) is distributed as a standard normal:

where \({\Phi }^{-1}\left(\cdot \right)\) is the inverse normal cumulative distribution; \(CD{F}_{t,k-2}\left(\cdot \right)\) is a \(k-2\) degrees of freedom cumulative \(t\) distribution; \({a}_{k,bmu}=\sqrt{\frac{k-1}{k}}\). A tabular Cusum control chart is then applied on \({U}_{k,bmu}\).
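The self-starting standardisation can be sketched for a single region as follows. The recursions for the running mean, the sum of squared deviations and the standard deviation are written in the standard form of self-starting charts, which is assumed here to match Eqs. (14) to (18); the function name `self_starting_u` is hypothetical, and SciPy is used for the \(t\) and normal distributions.

```python
import numpy as np
from scipy import stats

def self_starting_u(values):
    """Return U_k (k >= 3) for a sequence of monitored values v_1, ..., v_K."""
    u = []
    mean = float(values[0])   # running average after the first sample
    w = 0.0                   # running sum of squared deviations
    for k in range(2, len(values) + 1):
        v = float(values[k - 1])
        if k >= 3:
            # Standard deviation of the first k-1 samples (undefined if they are
            # all identical, which this sketch does not handle).
            s_prev = np.sqrt(w / (k - 2))
            t_k = (v - mean) / s_prev
            a_k = np.sqrt((k - 1) / k)
            u.append(stats.norm.ppf(stats.t.cdf(a_k * t_k, df=k - 2)))
        # Recursive updates with the k-th sample.
        w += (k - 1) * (v - mean) ** 2 / k
        mean += (v - mean) / k
    return np.array(u)

# Hypothetical in-control data: U should then behave like a standard normal.
u_values = self_starting_u(np.random.default_rng(1).normal(10.0, 2.0, size=2000))
```

No value of \(U\) is produced for the first two samples, because at least two previous observations are needed to estimate the standard deviation.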

The accumulated deviations above (\({C}_{k,bmu}^{+}\)) and below (\({C}_{k,bmu}^{-}\)) the target value are initialised to zero (\({C}_{0,bmu}^{+}=0\) and \({C}_{0,bmu}^{-}=0\)) and updated as follows (Eq. (19)):

where \({K}_{cc}\) is set to \(\frac{1}{2}{\sigma }_{0}\); \({\mu }_{0}\) and \({\sigma }_{0}\) are the mean and standard deviation of \(U\) (\({\mu }_{0}=0\) and \({\sigma }_{0}=1\)). Then, the BMU is considered out of control when either \({C}_{k,bmu}^{+}\) or \({C}_{k,bmu}^{-}\) exceeds the threshold \(H\), which becomes here a GSOM hyper-parameter (typically set to \(5\), [Nouri et al. [30], Montgomery [29]]). If a region goes out of control, it is not allowed to return an in-control output for future samples. Having generated a clustered growing map of SFC values, it is necessary to define a method for determining the out-of-control state at the GSOM level (not only at the regional level). Thus, a combination of the out-of-control outputs of each region is proposed through Eq. (20):

where \({\Delta }^{(o)}\) is the combined GSOM output indicator at the current \(o\)-th sample, varying between \(0\) and \(1\); \(m\) is the region index, while \({M}^{(o)}\) is the current number of regions in the GSOM; \({\delta }_{m}^{(o)}\) is a quantity equal to \(0\) or \(1\), if the \(m\)-th region is, at the current sample, in control or out of control, respectively; \({n}_{m,bmu}^{(o)}\) is the current number of times that region \(m\) was selected as the BMU; \(o\) is the current sample number. This indicator takes into account the control condition of each region through a weighted mean operation. The weight is heavier if the region is older and more frequented. The GSOM is considered out of control when \({\Delta }^{(o)}\) exceeds the threshold \({\Delta }_{t}\), which constitutes the last hyper-parameter of the GSOM.
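The two decision levels can be sketched as follows. The regional chart is the standard two-sided tabular CUSUM with \({K}_{cc}=0.5\) and \(H=5\). The map-level indicator is an assumption: it is written as the visit-weighted mean \(\sum_{m}{\delta }_{m}{n}_{m,bmu}/o\), which is one form consistent with the description above (each sample has exactly one BMU, so the visit counts sum to \(o\)). The names `tabular_cusum` and `gsom_indicator` are hypothetical.

```python
def tabular_cusum(u_values, k_cc=0.5, h=5.0, mu0=0.0):
    """Index of the first sample at which C+ or C- exceeds h, or None."""
    c_plus, c_minus = 0.0, 0.0
    for index, u in enumerate(u_values):
        c_plus = max(0.0, u - (mu0 + k_cc) + c_plus)
        c_minus = max(0.0, (mu0 - k_cc) - u + c_minus)
        if c_plus > h or c_minus > h:
            return index
    return None

def gsom_indicator(out_of_control, bmu_counts):
    """Visit-weighted share of out-of-control regions (assumed form of Delta)."""
    total = sum(bmu_counts)   # equals the current sample number o
    return sum(d * n for d, n in zip(out_of_control, bmu_counts)) / total

# A sustained shift of +2 in U accumulates 1.5 per sample: 1.5, 3.0, 4.5, 6.0.
first_alarm = tabular_cusum([2.0] * 10)

# Three hypothetical regions visited 50, 30 and 20 times; the first and third
# are out of control.
delta = gsom_indicator([1, 0, 1], [50, 30, 20])
```

In this example the alarm is raised at the fourth sample (index 3), and `delta` is 0.7, so a threshold \({\Delta }_{t}=0.7\) would not yet be exceeded.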

4 Results

The whole algorithm, starting from the SFC estimation up to the GSOM and control charts, was implemented in Python from scratch, using standard libraries (NumPy, pandas, SciPy and Matplotlib). The analyses were performed on a Dell XPS 15 7590 featuring an Intel Core i7-9750H CPU @ 2.60 GHz.

As presented in Sect. 2, a set of five run-to-failure tests was run. The instantaneous cutting forces of the five tests were used to fit the mechanistic force model presented in [Bernini et al. [8]]. In Fig. 4, the estimations of the SFC are presented for the whole experimental set. The evolution of the SFC based on instantaneous cutting forces shows some peculiarities. The SFC are in fact affected by multicollinearity. As shown in [Bernini et al. [8]], the instantaneous identification process tends to confuse the effects of the four regressors on the predicted output (cutting forces). This produces a phenomenon in the SFC which is referred to as the see-saw effect. This phenomenon consists of a correlation between the oscillations of the SFC. For instance, when the estimated \({K}_{tc}\) increases, \({K}_{te}\) decreases. Such a phenomenon is particularly evident in tests 2 (Fig. 4b) and 3 (Fig. 4c). It is responsible for a high variability and instability of the SFC estimation over time, which hides the influence of tool wear on their values. Nevertheless, the oscillations are governed by unmodelled phenomena that cause the cutting force measurements to vary during workpiece machining. These phenomena may be related to run-out, cutting temperature, or the different dynamic compliance of the machine tool with respect to the cutting directions.

To address the undesired variability of the SFC, the GSOM was introduced. A visual representation of the GSOM application to each experimental test is shown in Fig. 5. Figure 5 is a qualitative figure, summarising most of the information included in the GSOM clustering. The hexagonal cells represent the regions created by the GSOM. In general, the number of generated cells is correlated with the overall variability of the estimated coefficients and with the number of samples of the test. In fact, when a sudden difference is found between two consecutive input vectors, it is more probable that the Mahalanobis distance exceeds the growth threshold. Furthermore, the longer the test, the higher the probability of finding large variations in the data. A second level of information is provided by the background colour of the cells. The figure shows the state of the GSOM map when a GSOM-level out-of-control detection is observed. Thus, a grey cell background colour stands for an in-control cell at the end of the detection process; on the contrary, a red background colour represents an out-of-control cell. Test cases 2 and 3 present GSOM maps where all the cells are out of control at the end of the process, whereas the remaining tests also show in-control cells. This behaviour is associated with the fact that the GSOM-level out-of-control indicator \({\Delta }^{(o)}\) returns a detection when the \({\Delta }_{t}\) threshold is exceeded. \({\Delta }^{(o)}\) varies between \(0\) and \(1\). Since \({\Delta }_{t}\) is less than \(1\) (in this case, it is set to 0.7), it is not necessary for all the cells to be out of control. Another useful point of view is given by the scatter plots drawn inside each cell. These plots represent the pairs of \({K}_{r}\) and \({K}_{t}\) summary coefficients collected in the associated cell. The two coefficients are normalised and centred in the cell, thus no absolute values can be retrieved from the graph.
At the same time, the scatter plots are drawn with progressive colours: blue points represent samples from the early stages of the cutting tool life, while red points refer to samples near the GSOM out-of-control detection. The scatter plots provide two levels of information. The first level is related to the efficacy of the region in describing the wear degradation. This can be noticed from a shift of the distribution of the two coefficients from the initial phases (blue) towards the cutting tool end-of-life (red). A strong separation within the data points represents a clear correlation with tool wear. This is also supported by the fact that, by construction, a cell can represent only a gradual progression of the coefficients. In general, diverging distributions of coefficients correlate with out-of-control cells, as expected. The frequency at which a cell is chosen as BMU represents a second level of information. The number of times the cell was chosen as BMU is representative of the experience of the cell. In fact, frequently visited cells may be older or more representative than other cells. It is important to notice that the \({\Delta }^{(o)}\) coefficient was designed to give more weight to cells with more experience. In this way, false positive and false negative cells have a lower effect on the overall performance of the GSOM. Some cells that present a meaningful trend of coefficients may be in control because a significant number of expert cells had already detected an out-of-control condition. The opposite case occurs in Fig. 5b, where the cell on top does not seem to highlight a relevant shift in the coefficients, but it is still supported by expert out-of-control cells.

Fig. 5 Representation of the GSOM maps at the GSOM-level out-of-control condition. Regions are represented by hexagonal cells. Grey background stands for in-control cells, red for out-of-control cells. 2D scatter plots represent the evolution of the \({K}_{r}\) and \({K}_{t}\) coefficients in a normalised fashion. The colour represents the sample number, from the first (blue) to the last (red).
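The voting logic behind the GSOM-level indicator can be sketched as follows. This is a simplified illustration, assuming that the weight of each cell is proportional only to the number of times it was selected as BMU; the weighting described in the text also accounts for the cell creation time, which is omitted here. The function names and the hit counts are hypothetical.

```python
def gsom_out_of_control_indicator(cells):
    """Experience-weighted fraction of out-of-control cells, between 0 and 1."""
    total_hits = sum(cell["hits"] for cell in cells)
    if total_hits == 0:
        return 0.0
    out_hits = sum(cell["hits"] for cell in cells if cell["out_of_control"])
    return out_hits / total_hits


def gsom_detection(cells, delta_t=0.7):
    """Signal the tool end-of-life when the indicator exceeds the threshold."""
    return gsom_out_of_control_indicator(cells) > delta_t


# Illustrative map: two expert cells are out-of-control (red),
# one rarely visited cell is still in-control (grey).
cells = [
    {"hits": 120, "out_of_control": True},
    {"hits": 60, "out_of_control": True},
    {"hits": 20, "out_of_control": False},
]
delta_o = gsom_out_of_control_indicator(cells)  # 180 / 200
detected = gsom_detection(cells, delta_t=0.7)
```

With a threshold below 1, the detection is raised even though one cell is still in-control, which matches the maps where grey and red cells coexist.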

The results presented in the previous paragraph were obtained after an optimisation of the GSOM hyper-parameters. More specifically, the results were optimised with respect to an average flank wear of \(200\,\mu \mathrm{m}\). This choice was related to the chosen cutting conditions, following ISO standard indications [International Standards [18]] and scientific literature [Albertelli et al. [2]]. First of all, \(\mathrm{Ti}_{6}\mathrm{Al}_{4}\mathrm{V}\) is a hard-to-cut titanium alloy. Cryogenic lubrication is a relatively new cooling technology, still less stable than conventional media. Furthermore, some tests were performed at very high cutting speed. All these aspects support a more conservative choice of the flank wear threshold. The optimisation results are reported according to two different industrial scenarios: the first one, used for the figures reported above, represents the optimisation of the approach over the full set of experiments; the second one represents the case where the solution is optimised for one machine tool and ported to another one.

4.1 Full set optimisation

As previously explained, the proposed GSOM algorithm is a completely unsupervised solution for automatically clustering high-variability SFC estimations and detecting the correct end-of-life of the cutting tool. Here, the hyper-parameters of the GSOM are optimised in order to detect a tool with \(200\,\mu \mathrm{m}\) mean flank wear. The optimisation is performed over the full set of experimental run-to-failures and the prediction error is measured on the full dataset. The optimisation was carried out through a two-phase grid-search procedure [Cheng et al. [11]]. A first grid of parameters was selected and fully explored in order to reduce the search space. A second grid was then defined to find the combination leading to the minimum root mean squared percentage error (RMSPE, [Botchkarev [9]]). The hyper-parameter combinations of the grids are reported in Table 3.
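For reference, the RMSPE can be computed as in the sketch below. The signed relative percentage error is assumed to be negative when the end-of-life is underestimated and positive when it is overestimated; the function names and the numerical values are illustrative only and do not correspond to the experimental tests.

```python
import math


def relative_percentage_errors(predicted, actual):
    """Signed relative errors in percent (negative means underestimation)."""
    return [100.0 * (p - a) / a for p, a in zip(predicted, actual)]


def rmspe(predicted, actual):
    """Root mean squared percentage error over a set of tests."""
    errors = relative_percentage_errors(predicted, actual)
    return math.sqrt(sum(e * e for e in errors) / len(errors))


# Illustrative end-of-life times (arbitrary units, not experimental data).
actual_eol = [100.0, 200.0, 400.0]
predicted_eol = [90.0, 180.0, 440.0]
errors = relative_percentage_errors(predicted_eol, actual_eol)
score = rmspe(predicted_eol, actual_eol)
```

In this toy case the first two tests are underestimated by 10% and the third is overestimated by 10%, so the RMSPE equals 10%. Being a quadratic metric, the RMSPE does not retain the sign of the errors, which is why the tendency to under- or overestimate is reported separately.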

The grid-search optimisation procedure consists of the evaluation of the full set of hyper-parameter combinations. The combination with the minimum RMSPE is chosen as the best one. For this scenario, the best combination was: \(\eta =0.045\), \({R}_{t}=7\), \(n=50\), \(N=35\), \(H=7\) and \({\Delta }_{t}=0.7\). The algorithm was capable of predicting the end-of-life time with an RMSPE of \(13.2\mathrm{\%}\), with a tendency to underestimate the end-of-life of the cutting tools. The relative percentage errors for the single tests are reported in the first row of Table 4.
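A two-phase grid search of this kind can be sketched as follows. The sketch assumes a generic `objective` that maps a hyper-parameter combination to a score to be minimised (the RMSPE over the run-to-failures in the presented case); here it is replaced by a toy function with an arbitrary minimum. The `refine` rule, the step sizes and the grids are hypothetical and do not reproduce Table 3.

```python
import itertools


def grid_search(objective, grid):
    """Evaluate every combination of a grid (name -> values); return the best."""
    names = list(grid)
    best_params, best_score = None, float("inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = objective(params)
        if score < best_score:
            best_params, best_score = params, score
    return best_params, best_score


def two_phase_grid_search(objective, coarse_grid, refine):
    """Explore a coarse grid, then a finer grid built around the coarse optimum."""
    coarse_best, _ = grid_search(objective, coarse_grid)
    fine_grid = {name: refine(name, value) for name, value in coarse_best.items()}
    return grid_search(objective, fine_grid)


def refine(name, value):
    # Assumed refinement rule: five points centred on the coarse optimum.
    step = {"eta": 0.005, "delta_t": 0.1}[name]
    return [round(value + k * step, 4) for k in (-2, -1, 0, 1, 2)]


def toy_objective(params):
    # Stand-in for the RMSPE computed over the run-to-failure tests.
    return abs(params["eta"] - 0.04) + abs(params["delta_t"] - 0.7)


coarse_grid = {"eta": [0.01, 0.05, 0.09], "delta_t": [0.5, 0.7, 0.9]}
best_params, best_score = two_phase_grid_search(toy_objective, coarse_grid, refine)
```

The first phase only narrows the search space, so the coarse optimum does not need to coincide with the final one: in the toy case the coarse grid selects `eta = 0.05`, while the refined grid reaches `eta = 0.04`.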

In this scenario, the GSOM predicted almost all the tests well. Only the first and last tests presented a relative percentage error higher than \(10\mathrm{\%}\). The last cutting test remains the worst one, being overestimated with a relatively high error. Nevertheless, it must be underlined that the algorithm performed well despite the different cutting conditions of the tests. Furthermore, the solution is completely unsupervised, and the map is self-generated along the evolution of each test. The results of the conceived approach were compared to the control chart presented in [Bernini et al. [8]], applied to the SFC estimated with multivariate linear regression, in order to make the two approaches comparable. The control chart was not applied with the standard threshold and an averaging window of 15 samples, as presented in [Bernini et al. [8]]; instead, these two parameters were optimised. Here, the control chart was optimised with respect to the full set of tests. The predictions of the reference control chart showed an underestimation behaviour, with an RMSPE of \(56.1\mathrm{\%}\), so the GSOM reduced the RMSPE by about 43 percentage points. Furthermore, the two algorithms can be compared on each test by looking at the first two rows of Table 4. The GSOM improved the prediction performance on every test, even if on Test 5 the GSOM leads to an overestimation of the cutting tool life.

4.2 Portability scenario optimisation

This second scenario addresses the case where the conceived solution is first optimised on the tests performed on a single machine tool (Flexi) and then needs to be used on another machine tool. The proposed scenario makes it possible to evaluate the portability of the conceived solution. The phases of the algorithm optimisation are the same as before, and the associated hyper-parameter combinations can be found in Table 3. In this case, the algorithm is optimised in order to minimise the RMSPE on the first three run-to-failures, performed on the Flexi machine tool. The performance of the algorithm is then tested on the fourth and fifth run-to-failures, performed on the M5 machine tool. The optimal combination of parameters was: \(\eta =0.035\), \({R}_{t}=7\), \(n=50\), \(N=30\), \(H=10\) and \({\Delta }_{t}=0.7\). The RMSPE on the optimisation set of tests was \(2.9\mathrm{\%}\), with a tendency to underestimate the cutting tool end-of-life. When the algorithm was tested on the M5 set of run-to-failures, the predictions underestimated the end-of-life with an RMSPE of \(34.5\mathrm{\%}\). The relative percentage errors for this scenario are reported in the third row of Table 4. In this context, a very high performance was reached on the first three experiments, where the errors were negligible. As expected, when the algorithm was tested on unseen run-to-failures, the prediction errors rose. The algorithm underestimated both tests. Nevertheless, the last two tests involved a double change in the cutting conditions: first, the machine tool was changed; second, the cutting speed was almost doubled. These two changes increased the complexity of the estimations. Furthermore, the optimisation set consisted of a low number of tests. As for the previous scenario, the results of the conceived approach were compared to the control chart presented in [Bernini et al. [8]].
The control chart was optimised with respect to the Flexi tests and tested on the M5 run-to-failures. It underestimated the tool life in both cases, with an RMSPE of \(58.8\mathrm{\%}\) on the Flexi tests and \(64.5\mathrm{\%}\) on the M5 tests. Thus, the GSOM led to a substantial improvement of the predictions. The performance of the two algorithms can be compared on each test by looking at the third and fourth rows of Table 4. The GSOM improved the prediction performance on every test, on both the Flexi and the M5 machine tools.

5 Conclusions

In this paper, a Growing Self-Organising Map (GSOM) algorithm was introduced in order to perform tool condition monitoring in an unsupervised learning scenario. The algorithm was capable of managing the variability of the specific force coefficients (SFC) estimation, generated by the multicollinearity phenomenon and induced by unpredicted sources of variability, such as machine tool dynamics dependent on the cutting direction or tool run-out. The conceived approach made it possible to:

• cluster the SFC, by the automatic creation of regions with a similar behaviour of the coefficients. Thus, each region tends to monitor a gradual evolution of the SFC, while separating fast and sudden variations in their estimations.

• monitor the tool wear according to a voting system. A control chart is run inside each region and each of them contributes to a combined out-of-control indicator. The weight associated with each region is based on the region experience, i.e. on its rate of being chosen as the best matching unit and on the time of its creation. The voting system gives stronger weights to expert regions.

• outperform the prediction results of an optimised version of the control chart of [Bernini et al. [8]] in two different scenarios. The first scenario analysed the performance of the GSOM and of the reference approach when optimised on the full set of available run-to-failures. The GSOM reached an RMSPE of \(13.2\mathrm{\%}\), generally underestimating the tool life, whereas the optimised control chart reached only a \(56.1\mathrm{\%}\) RMSPE, highlighting the improvement introduced by the algorithm. The second scenario tested the portability of the algorithm. Both approaches were optimised on one machine tool and tested on run-to-failures performed on a different one. The GSOM RMSPE was \(2.9\mathrm{\%}\) on the optimisation set and \(34.5\mathrm{\%}\) on the test set, whereas the control chart resulted in \(58.8\mathrm{\%}\) and \(64.5\mathrm{\%}\), respectively.

Notes

From a geometrical perspective, multicollinearity is easily explained in a bivariate regression problem. When two regressors are correlated, the points acquired in a sample tend to be aligned in the 2D input space. The regression surface (a plane) must therefore be fitted to a cloud of points distributed nearly along a line. This means that random errors (points farther from the line) have a strong influence on the plane orientation, which is defined by the regression coefficients.
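The effect described in this note can be reproduced numerically with a small simulation. The sketch below fits a bivariate linear model without intercept by solving the normal equations, and compares the spread of one estimated coefficient when the two regressors are independent and when they are nearly aligned. The model, the noise levels, the sample size and the function names are assumptions chosen only for illustration.

```python
import random
import statistics


def fit_two_regressors(x1, x2, y):
    """Least-squares fit of y = b1*x1 + b2*x2 through the 2x2 normal equations."""
    s11 = sum(a * a for a in x1)
    s22 = sum(b * b for b in x2)
    s12 = sum(a * b for a, b in zip(x1, x2))
    s1y = sum(a * c for a, c in zip(x1, y))
    s2y = sum(b * c for b, c in zip(x2, y))
    det = s11 * s22 - s12 * s12
    return (s1y * s22 - s2y * s12) / det, (s2y * s11 - s1y * s12) / det


def coefficient_spread(correlated, trials=200, n=50, seed=0):
    """Standard deviation of the b1 estimate over repeated noisy samples."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(trials):
        x1 = [rng.uniform(0.0, 1.0) for _ in range(n)]
        if correlated:
            # Second regressor almost aligned with the first one.
            x2 = [a + rng.gauss(0.0, 0.01) for a in x1]
        else:
            x2 = [rng.uniform(0.0, 1.0) for _ in range(n)]
        y = [2.0 * a + 1.0 * b + rng.gauss(0.0, 0.1) for a, b in zip(x1, x2)]
        estimates.append(fit_two_regressors(x1, x2, y)[0])
    return statistics.stdev(estimates)


spread_independent = coefficient_spread(correlated=False)
spread_correlated = coefficient_spread(correlated=True)
```

With the same measurement noise, the coefficient estimated from nearly aligned regressors is expected to scatter far more than the one estimated from independent regressors, which is the variability that the clustering is meant to manage.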

References

Albertelli P, Goletti M, Torta M et al (2016) Model-based broadband estimation of cutting forces and tool vibration in milling through in process indirect multiple-sensors measurements. Int J Adv Manuf Technol 82(5):779–796. https://doi.org/10.1007/s00170-015-7402-x. (publisher: Springer-Verlag London Ltd)

Albertelli P, Mussi V, Strano M et al (2021) Experimental investigation of the effects of cryogenic cooling on tool life in Ti6Al4V milling. Int J Adv Manuf Technol 117(7):2149–2161. https://doi.org/10.1007/s00170-021-07161-9. (publisher: Springer Science and Business Media Deutschland GmbH)

Altintas Y (2012) Manufacturing automation: metal cutting mechanics, machine tool vibrations, and CNC design. Cambridge University Press, Cambridge. https://doi.org/10.1017/CBO9780511843723

Baur M, Albertelli P, Monno M (2020) A review of prognostics and health management of machine tools. Int J Adv Manuf Technol 107(5):2843–2863. https://doi.org/10.1007/s00170-020-05202-3

Baur M, Albertelli P, Monno M (2020) A review of prognostics and health management of machine tools. Int J Adv Manuf Technol 107(5):2843–2863. https://doi.org/10.1007/s00170-020-05202-3

Bernini L, Waltz D, Albertelli P, Monno M (2021) A novel prognostics solution for machine tool sub-units: the hydraulic case. Proc Inst Mech Eng Part B: J Eng Manuf 236(9):1199–1215. https://doi.org/10.1177/09544054211064682

Bernini L, Albertelli P, Monno M (2023) Mechanistic force model for double-phased high-feed mills. Int J Mech Sci 237:107801. https://doi.org/10.1016/j.ijmecsci.2022.107801

Bernini L, Albertelli P, Monno M (2023) Mill condition monitoring based on instantaneous identification of specific force coefficients under variable cutting conditions. Mech Syst Signal Process 185:109820. https://doi.org/10.1016/j.ymssp.2022.109820 (https://www.sciencedirect.com/science/article/pii/S0888327022008883)

Botchkarev A (2018) Evaluating performance of regression machine learning models using multiple error metrics in azure machine learning studio. SSRN Electr J. https://doi.org/10.2139/ssrn.3177507

Budak E (2006) Analytical models for high performance milling. Part I: cutting forces, structural deformations and tolerance integrity. Int J Machine Tools Manuf 46(12–13):1478–1488. https://doi.org/10.1016/j.ijmachtools.2005.09.009 (https://linkinghub.elsevier.com/retrieve/pii/S0890695505002622)

Cheng M, Jiao L, Shi X et al (2020) An intelligent prediction model of the tool wear based on machine learning in turning high strength steel. Proc Inst Mech Eng Part B: J Eng Manuf 234(13):1580–1597. https://doi.org/10.1177/0954405420935787

Cholette ME, Liu J, Djurdjanovic D et al (2012) Monitoring of complex systems of interacting dynamic systems. Applied Intelligence 37(1):60–79. https://doi.org/10.1007/s10489-011-0313-0

Colosimo B, Moroni G, Grasso M (2010) Realtime tool condition monitoring in milling by means of control charts for auto-correlated data. J Machine Eng 10:5–17

Dang JW, Zhang WH, Yang Y et al (2010) Cutting force modeling for flat end milling including bottom edge cutting effect. Int J Machine Tools Manuf 50(11):986–997. https://doi.org/10.1016/j.ijmachtools.2010.07.004 (https://linkinghub.elsevier.com/retrieve/pii/S0890695510001380)

Farhadmanesh M, Ahmadi K (2021) Online identification of mechanistic milling force models. Mech Syst Signal Process 149:1–18. https://doi.org/10.1016/j.ymssp.2020.107318. (publisher: Elsevier Ltd)

Guo M, Wei Z, Wang M et al (2018) An identification model of cutting force coefficients for five-axis ball-end milling. Int J Adv Manuf Technol 99(1–4):937–949. https://doi.org/10.1007/s00170-018-2451-6

Hajdu D, Astarloa A, Dombovari Z (2021) Cutting force prediction based on a curved uncut chip thickness model. https://doi.org/10.48550/arXiv.2111.00795

International Standards (1993) ISO 3685: tool-life testing with single-point turning tools. ISO 3685:1993. https://www.iso.org/standard/9151.html

Jiménez A, Arizmendi M, Sánchez JM (2021) Extraction of tool wear indicators in peck-drilling of Inconel 718. Int J Adv Manuf Technol 114(9):2711–2720. https://doi.org/10.1007/s00170-021-07058-7

Kilic Z, Altintas Y (2016) Generalized mechanics and dynamics of metal cutting operations for unified simulations. Int J Machine Tools Manuf 104:1–13. https://doi.org/10.1016/j.ijmachtools.2016.01.006 (https://linkinghub.elsevier.com/retrieve/pii/S0890695516300074)

Kilic Z, Altintas Y (2016) Generalized modelling of cutting tool geometries for unified process simulation. Int J Machine Tools Manuf 104:14–25. https://doi.org/10.1016/j.ijmachtools.2016.01.007 (https://linkinghub.elsevier.com/retrieve/pii/S0890695516300062)

Kumanchik LM, Schmitz TL (2007) Improved analytical chip thickness model for milling. Precis Eng 31(3):317–324. https://doi.org/10.1016/j.precisioneng.2006.12.001

Li K, Zhu K, Mei T (2016) A generic instantaneous undeformed chip thickness model for the cutting force modeling in micromilling. Int J Machine Tools Manuf 105:23–31. https://doi.org/10.1016/j.ijmachtools.2016.03.002 (https://linkinghub.elsevier.com/retrieve/pii/S0890695516300116)

Liang J, Wang L, Wu J et al (2020) Elimination of end effects in LMD by Bi-LSTM regression network and applications for rolling element bearings characteristic extraction under different loading conditions. Digital Signal Process 107:102881. https://doi.org/10.1016/j.dsp.2020.102881 (https://www.sciencedirect.com/science/article/pii/S1051200420302268)

Liu J, Djurdjanovic D, Marko K et al (2009) Growing structure multiple model systems for anomaly detection and fault diagnosis. J Dyn Syst Meas Control Trans ASME 131(5):1–13. https://doi.org/10.1115/1.3155004

Matsumura T, Tamura S (2017) Cutting force model in milling with cutter runout. Procedia CIRP 58:566–571. https://doi.org/10.1016/j.procir.2017.03.268

McLeay T, Turner MS, Worden K (2021) A novel approach to machining process fault detection using unsupervised learning. Proc Inst Mech Eng Part B: J Eng Manuf 235(10):1533–1542. https://doi.org/10.1177/0954405420937556

Mia M, Królczyk G, Maruda R et al (2019) Intelligent optimization of hard-turning parameters using evolutionary algorithms for smart manufacturing. Materials 12(6):879. https://doi.org/10.3390/ma12060879 (https://www.mdpi.com/1996-1944/12/6/879)

Montgomery DC (2008) Introduction to statistical quality control. John Wiley & Sons Inc

Nouri M, Fussell BK, Ziniti BL et al (2015) Real-time tool wear monitoring in milling using a cutting condition independent method. Int J Machine Tools Manuf 89:1–13. https://doi.org/10.1016/j.ijmachtools.2014.10.011. (publisher: Elsevier)

Peng Y, Dong M, Zuo MJ (2010) Current status of machine prognostics in condition based maintenance: a review. Int J Adv Manuf Technol 50(1–4):297–313. https://doi.org/10.1007/s00170-009-2482-0

Pimenov DY, Bustillo A, Wojciechowski S et al (2023) Artificial intelligence systems for tool condition monitoring in machining: analysis and critical review. J Intell Manuf 34(5):2079–2121. https://doi.org/10.1007/s10845-022-01923-2 (https://link.springer.com/10.1007/s10845-022-01923-2)

Tao X, Zhang D, Ma W, Liu X, Xu D (2018) Automatic metallic surface defect detection and recognition with convolutional neural networks. Appl Sci 8(9):1575. https://doi.org/10.3390/app8091575

Wan M, Zhang WH, Tan G et al (2007) New algorithm for calibration of instantaneous cutting-force coefficients and radial runout parameters in flat end milling. Proc Inst Mech Eng Part B: J Eng Manuf 221(6):1007–1019. https://doi.org/10.1243/09544054JEM515. (publisher: IMECHE)

Wickramarachchi CT, Rogers TJ, McLeay TE et al (2022) Online damage detection of cutting tools using Dirichlet process mixture models. Mech Syst Signal Process 180:109434. https://doi.org/10.1016/j.ymssp.2022.109434 (https://linkinghub.elsevier.com/retrieve/pii/S0888327022005520)

Wojciechowski S, Matuszak M, Powalka B et al (2019) Prediction of cutting forces during micro end milling considering chip thickness accumulation. Int J Machine Tools Manuf 147:103466. https://doi.org/10.1016/j.ijmachtools.2019.103466 (https://linkinghub.elsevier.com/retrieve/pii/S0890695519305358)

Wu J, Wang J, You Z (2010) An overview of dynamic parameter identification of robots. Robot Comput-Integr Manuf 26(5):414–419. https://doi.org/10.1016/j.rcim.2010.03.013 (https://www.sciencedirect.com/science/article/pii/S0736584510000232)

Yang Y, Liu Q, Zhang B (2014) Three-dimensional chatter stability prediction of milling based on the linear and exponential cutting force model. Int J Adv Manuf Technol 72(9–12):1175–1185. https://doi.org/10.1007/s00170-014-5703-0 (http://link.springer.com/10.1007/s00170-014-5703-0)

Zhang R, Wu J, Wu C, Li Q (2022) Two-step optimum design of a six-axis loading device with parallel mechanism considering dynamic coupling. Int J Robotics Autom 37(6):498–511. https://doi.org/10.2316/J.2022.206-0689

Zhang X, Ehmann KF, Yu T et al (2016) Cutting forces in micro-end-milling processes. Int J Machine Tools Manuf 107:21–40. https://doi.org/10.1016/j.ijmachtools.2016.04.012 (https://linkinghub.elsevier.com/retrieve/pii/S0890695516300451)

Zhang Y, Li S, Zhu K (2020) Generic instantaneous force modeling and comprehensive real engagement identification in micro-milling. Int J Mech Sci 176:105504. https://doi.org/10.1016/j.ijmecsci.2020.105504 (https://linkinghub.elsevier.com/retrieve/pii/S0020740319328668)

Zhou Y, Tian Y, Jing X et al (2017) A novel instantaneous uncut chip thickness model for mechanistic cutting force model in micro-end-milling. Int J Adv Manuf Technol 93(5–8):2305–2319. https://doi.org/10.1007/s00170-017-0638-x

Acknowledgements

The authors would like to thank Eng. Arfini for the contribution provided during the development and implementation of the conceived work.

Funding

Open access funding provided by Politecnico di Milano within the CRUI-CARE Agreement. This work was developed within the ECOSISTER project “2022–2025 ECOSISTER PNRR Spoke 3: Ecosystem for sustainable Transition in Emilia-Romagna—Green manufacturing for a sustainable economy, National Recovery and Resilience Plan (NRRP), Mission 4, Component 2 Investment 1.4, funded from the European Union – NextGenerationEU” and within the DIGIMAN project, funded by Asse 1 – Azione 1.2.2 POR-FESR 2014 – 2020 Emilia-Romagna”.

Author information

Contributions

L. Bernini conceptualized the study, designed and performed the experiments, conceived and implemented the methodology, and wrote and revised the paper. P. Albertelli conceived the research, designed and performed the experiments, and supervised the research. M. Monno proofread the paper.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Bernini, L., Albertelli, P. & Monno, M. Robust tool condition monitoring in Ti6Al4V milling based on specific force coefficients and growing self-organizing maps. Int J Adv Manuf Technol 128, 3761–3774 (2023). https://doi.org/10.1007/s00170-023-11930-z

DOI: https://doi.org/10.1007/s00170-023-11930-z