Abstract

This contribution provides an overview of the SINAPS@ French research project and its main achievements. SINAPS@ stands for “Earthquake and Nuclear Facility: Improving and Sustaining Safety”, and it has gathered the broad research community together to propose an innovative seismic safety analysis for nuclear facilities. This five-year project was funded by the French government after the 2011 Japanese Tohoku large earthquake and associated tsunami that caused a major accident at the Fukushima Daïchi nuclear power plant. Soon after this disaster, the international community involved in nuclear safety questioned the current methodologies used to define and to account for seismic loadings for nuclear facilities during the design and periodic assessment review phases. Within this framework, worldwide nuclear authorities asked nuclear licensees to perform ‘stress tests’ to estimate the capacity of their existing facilities for sustaining extreme seismic motions. As an introduction, the French nuclear regulatory framework is presented here, to emphasize the key issues and the scientific challenges. An analysis of the current French practices and the need to better assess the seismic margin of nuclear facilities contributed to the shaping of the scientific roadmap of SINAPS@. SINAPS@ was aimed at conducting a continuous analysis of completeness and gaps in databases (for all data types, including geology, seismology, site characterization, materials), of reliability or deficiency of models available to describe physical phenomena (i.e., prediction of seismic motion, site effects, soil and structure interactions, linear and nonlinear wave propagation, material constitutive laws in the nonlinear domain for structural analysis), and of the relevance or weakness of methodologies used for seismic safety assessment. 
This critical analysis, which confronts the methods (either deterministic or probabilistic) and the available data with the international state of the art, systematically addresses the uncertainty issues. We present the key results achieved in SINAPS@ at each step of the full seismic analysis, with a focus on uncertainty identification, quantification, and propagation. The main lessons learned from SINAPS@ are highlighted. SINAPS@ promotes an innovative integrated approach that is consistent with Guidelines #22, as recently published by the French Nuclear Safety Authority (Guidelines ASN #22 2017), and opens perspectives for improving current French practice.


1 Introduction

1.1 Post-Fukushima Context, Complementary Safety Studies, and PSHA Studies in France

Following the magnitude (Mw) 9 Tohoku earthquake off the Pacific coast of Japan in March 2011, a tsunami caused many deaths and great damage, and led to a major nuclear accident at the Fukushima-Daïchi nuclear power plant, which was ranked level 7 (i.e., the highest level on the International Nuclear and Radiological Event Scale). The international community involved in nuclear safety was immediately questioned about the reliability of its estimates, especially regarding external events (e.g., earthquakes, floods), the appropriateness of the approaches deployed, and the adequacy of the margins retained at the design stage of new nuclear facilities or during the periodic assessment reviews of existing nuclear facilities.

The nuclear accident triggered an immediate reaction from nuclear authorities worldwide, who urged all operators of nuclear plants to carry out seismic risk and safety analyses of their existing structures. The objective of these stress tests was to verify the adequacy of the safety standards used when nuclear power plants (NPPs) received their authorization, in view of potential extreme external hazards (e.g., seismic, tsunami, and flood hazards). In France, these on-site analyses and verifications were carried out in 2011 as the initial phase of the complementary safety studies (2011–2012). They provided an appraisal of the seismic margins of the various structures and components, based mainly on expert opinion and on the computational methodologies traditionally implemented for design purposes.

In a second step, the French Nuclear Safety Authority (ASN), the independent body in charge of nuclear safety (http://www.asn.gouv.fr/), and the licensees defined a new concept, the ‘hard core’, “as a set of engineering buildings, equipment, and organization processes that will significantly increase the seismic safety of a plant, to allow it to sustain extreme natural events”. This hard core aims to make installations even more robust and able to withstand rare and extreme natural hazards. The hard core structures and equipment need to be designed for specific seismic levels, known as the ‘hard core seismic levels’ (HCSLs). The HCSLs proposed by the licensees were discussed with the ASN and its technical support organization, the Institute of Radiation Protection and Nuclear Safety (IRSN), between 2012 and 2016. At the end of 2012, the review of the French complementary safety studies by a committee of international experts led the ASN to request that licensees update their HCSL proposals, which were based on the purely deterministic French historical practice, by using probabilistic seismic hazard assessment (PSHA) to characterize the seismic hazard in terms of probability of occurrence. In 2014 and 2015, the ASN finally defined the characteristics that the HCSLs had to meet, as a pair of deterministic and probabilistic levels: the ‘safe shutdown earthquake’ increased by 50%, and a return period of 20,000 years, respectively.
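The two HCSL definitions are simple to express numerically. The following Python sketch is illustrative only: the function names, the two-ordinate spectrum, and the 50-year exposure window are assumptions introduced here, and the conversion from return period to exceedance probability assumes a Poisson occurrence model, which is a common convention rather than something prescribed in the text.

```python
import math


def annual_frequency(return_period_years: float) -> float:
    """Mean annual frequency of exceedance for a given return period."""
    return 1.0 / return_period_years


def exceedance_probability(return_period_years: float, exposure_years: float) -> float:
    """Probability of at least one exceedance during the exposure window,
    assuming Poisson occurrences (an assumption of this sketch)."""
    return 1.0 - math.exp(-exposure_years / return_period_years)


def deterministic_hcsl(sse_spectrum_g, factor=1.5):
    """Scale every ordinate of a safe shutdown earthquake spectrum by +50%."""
    return [factor * sa for sa in sse_spectrum_g]


# Probabilistic level: 20,000-year return period.
hcsl_rate = annual_frequency(20_000)                 # 5e-5 per year
hcsl_p50 = exceedance_probability(20_000, 50.0)      # hypothetical 50-year window

# Deterministic level: hypothetical SSE spectral ordinates, in g.
hcsl_spectrum = deterministic_hcsl([0.2, 0.4])
```

A 20,000-year return period therefore corresponds to an annual exceedance frequency of 5 × 10⁻⁵, which is half the 10⁻⁴ frequency that is often used as a design target.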

The implementation of state-of-the-art probabilistic approaches for seismic hazard assessment (SHA) is a first in France for nuclear plants. Previously, the characterization of seismic hazard for French nuclear plants was based only on the seismic scenario philosophy (i.e., a seismic level corresponds to a scenario with a specific magnitude and a single distance to the site), with the uncertainties considered implicitly or explicitly through safety coefficients. The implementation of PSHA gave rise to numerous expert discussions and highlighted the need for additional scientific enhancement (see for instance Martin et al. 2018). Indeed, if there is no agreement on how seismic hazard should be estimated, it is doubtful that agreement can be reached on seismic risk evaluation. Beyond the seismic hazard, estimation of the capacity of the whole facility remains a huge challenge. Even during the Tohoku earthquake, the components related to plant safety worked appropriately during the vibratory phase, whereas the global safety failed during the tsunami phase (IAEA 2015 report). There was a specific need to improve seismic margin assessments, to propose a rationale for designing and verifying structures, systems and components consistently with the performance objectives, and consequently to define explicit criteria. In France in particular, there was no consensual rationale (and at the time, no regulatory documents). This lack of approved practice for assessing the ultimate seismic capacity of structures, systems and components has caused notably greater difficulties since the introduction of ‘beyond design’ seismic levels, such as those associated with the hard core in France. These difficulties arose in France during the post-Fukushima complementary safety studies: even though every seismic expert agreed on the existence of these margins, there was no confidence in their values. 
This difficulty was reported by the board of experts of the European countries involved in the cross-assessment of all of these studies. This situation also motivated and justified the research performed in SINAPS@, as further detailed below.

1.2 Implementation of the SINAPS@ Project

In this post-Fukushima context, 12 partners built the SINAPS@ project (Earthquake and Nuclear Installation: Improving and Sustaining Safety) in 2012: Commissariat à l’Energie Atomique et aux Energies Alternatives (CEA), Electricité de France (EDF), École Normale Supérieure Paris-Saclay, CentraleSupelec, Institute of Radiation Protection and Nuclear Safety, Soil-Solids-Structures and Risks Laboratory (Polytechnic Institute of Grenoble), École Centrale de Nantes (ECN), EGIS-industry, FRAMATOME, ISTERRE, IFSTTAR and CEREMA. The SINAPS@ research program identified three critical weaknesses in the French practice of the time for seismic analysis (especially when following the regulatory design practices): (1) the large gap between hazard and vulnerability assessment methodologies; (2) the lack of a consistent and explicit treatment of uncertainties; and (3) the incompleteness of seismic analyses, which were rarely, if ever, taken to the ultimate stage of the risk estimate (i.e., combination of the failure probability and the consequences). Although they had been identified, these three weaknesses greatly hindered, or even made impossible, the assessment of the available seismic margins of nuclear facilities.

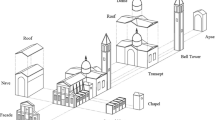

SINAPS@ was then intended to evaluate seismic safety continuously, from the fault to the civil engineering works and equipment, with an emphasis on the quantification and propagation of the uncertainties. The exploration of epistemic and aleatory uncertainties concerned all of the databases and metadata that were used; the knowledge and modeling of the physical processes of coupling and interaction between the geological medium and the building, which depend strongly on the seismic level considered; and the methods used at each stage of the assessment of the seismic hazard and of the vulnerability of the nuclear structures and components. SINAPS@ was structured around six topics, called Work Packages (WPs) (see Fig. 1), that covered the entire seismic analysis chain: WP1, Seismic hazard; WP2, Nonlinear interactions between near and far wave fields, the geological medium, and the structures; WP3, Seismic behavior of structures and components; WP4, Seismic risk assessment; WP5, Experimental contributions/building–building interaction studies; and WP6, Knowledge dissemination and training sessions. The project was financed by the French Government in 2013; in particular, this national funding supported nine PhD students and 21 one-year post-doctoral positions. The main objectives of SINAPS@ were to:

Fig. 1 SINAPS@ project organization according to the steps of the seismic risk assessment chain (from Berge-Thierry et al. 2017a)

Rank various parameters and evaluate the impact of uncertainties (through data and methods) at each step: seismic hazards, site effects, dynamic soil-structure interaction (SSI), seismic behavior of structures and equipment, and failure assessment;

Propose an innovative method (as verified and validated calculation software) for the modeling of seismic wave propagation in the most integrated and continuous way, from the fault to the seismic response of the structures and equipment;

Improve seismic margin assessment methodologies;

Formulate recommendations on research and development actions and data acquisition, and on engineering practices, and in particular for assessment of the vulnerability of structures and components, and enhancement of analytical methodologies, including preparation of their dissemination and the evolution of the regulations.

1.3 Seismic Risk Regulatory Framework in France for Nuclear Facilities

In France, nuclear safety is handled by ASN within the framework of a set of documents that have different legal standing, as illustrated in Fig. 2.

At the top of the regulatory corpus is the Nuclear Safety and Transparency (TSN) Law, which was promulgated in 2006 and is implemented by the Government through Decrees and by the ASN through Decisions (the approval of which is shared between the Government and the ASN, or is the responsibility of the ASN only). These documents constitute a legally binding system for licensees. At the base of the corpus, the ASN has published a set of technical documents that describe approaches considered acceptable for the licensees to use in the safety demonstration: Guidelines and Fundamental Safety Rules (RFS). These documents are not legally binding, meaning that licensees can propose alternative methods if they are supported by the necessary justification. Although apparently pragmatic and flexible, this regulatory scheme is often driven, and sometimes dominated, by expert debate.

The documents published by the ASN related to the safety management of seismic risk are shown in Fig. 3. RFS 1.3.c deals with “geological and geotechnical studies of the site; determination of soil characteristics, and studies of soil behavior”. RFS 2001-01 proposes an acceptable method for determination of the seismic movement that must be taken into account for the design of a facility with respect to seismic risk (RFS 2001-01, 2001). Guideline ASN/2/01 (2006) proposes methods that are acceptable to the ASN for determining the seismic response of the civil engineering structures, by considering their interactions with the equipment, and for evaluating the stresses to be considered in their design (Civil Engineering, CW, and materials). Finally, RFS 1.3.b defines the nature, location and operating conditions of the seismic instrumentation required for rapid acquisition of the seismic movement to which buildings and structures with systems and components that are important for safety can be subjected, so that it can be compared to the seismic movement that serves as the basis for the design of the facilities.

The French nuclear safety approach is based on a purely deterministic concept, which postulates failure modes and puts lines of defense in place. Within the framework of seismic risk, this means in particular that the seismic hazard is estimated through a seismic scenario approach, related to reference earthquakes that arise from analysis of the seismicity catalogs (i.e., historical, instrumental, paleo-historical). Some uncertainties are taken into account through conservatism (implicit or explicit), such as safety coefficients (see Berge-Thierry et al. 2017b).

Two natural consequences of this approach are that the seismic hazard results (i.e., seismic movement) are not characterized by confidence levels, as uncertainties are not explicitly explored, and are not associated with any probabilistic information, such as the annual frequency of occurrence or exceedance. For this latter reason, these deterministic SHAs cannot be used as inputs to the probabilistic safety assessments that are currently carried out; e.g., during quantification of seismic margins (except to check possible cliff-edge effects). For structures and equipment, the deterministic concept translates into the use of simplified methods and constraining assumptions, such as the need to ensure linear behavior. In addition, the uncertainties and/or specific effects are, or can be, accounted for through fixed margins that are not always very explicit. Taken together, these practices make it impossible to assess seismic margins correctly and fully, and in particular, they clearly do not allow the margins to be quantified.

This deterministic approach prevails in RFS 2001-01 and Guidelines ASN/2/01, and for several decades it set French practice apart from that of other nuclear countries and from international recommendations, which were more oriented toward probabilistic approaches. In the most recent references, however, the International Atomic Energy Agency (IAEA; e.g., IAEA SSG 9 Guidelines, hereafter IAEA 2010), the Western European Nuclear Regulators Association (e.g., Safety Reference Levels for Existing Reactors) and Guidelines ASN #22, published in 2017, recommend the use of both deterministic and probabilistic methods to assess seismic hazard (i.e., for the design and assessment phases), and to perform probabilistic safety analysis. In Guidelines ASN #22, the target seismic level for the design corresponds to an annual frequency of \(10^{-4}\), together with the need to reach at least a minimal spectral acceleration of 0.1 × g. Again, in Guidelines ASN #22, the objective is not formulated in view of an overall global safety performance, nor in terms of associated criteria.
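To illustrate how a probabilistic target of this kind is read off a hazard curve, the following Python sketch interpolates a hazard curve in log-log space at a target annual frequency and then applies a lower bound. The hazard-curve values and the function names are hypothetical, and treating the 0.1 × g requirement as a simple floor on the retained value is an assumption of this sketch, not a statement of how Guidelines ASN #22 apply it.

```python
import math


def ground_motion_at_frequency(accel_g, annual_freq, target_freq):
    """Log-log interpolation of a hazard curve at a target annual frequency."""
    points = sorted(zip(annual_freq, accel_g))  # ascending frequency
    if not points[0][0] <= target_freq <= points[-1][0]:
        raise ValueError("target frequency lies outside the hazard curve")
    for (f0, a0), (f1, a1) in zip(points, points[1:]):
        if f0 <= target_freq <= f1:
            w = (math.log(target_freq) - math.log(f0)) / (math.log(f1) - math.log(f0))
            return math.exp(math.log(a0) + w * (math.log(a1) - math.log(a0)))
    raise ValueError("hazard curve needs at least two points")


def design_level(accel_g, annual_freq, target_freq=1e-4, floor_g=0.1):
    """Retained level: hazard-curve value at the target, never below the floor."""
    return max(ground_motion_at_frequency(accel_g, annual_freq, target_freq), floor_g)


# Hypothetical hazard curves (spectral acceleration in g vs. annual frequency).
freqs = [1e-2, 1e-3, 1e-4, 1e-5]
moderate_site = design_level([0.05, 0.1, 0.2, 0.4], freqs)    # curve governs
low_site = design_level([0.01, 0.02, 0.04, 0.08], freqs)      # floor governs
```

For the second, low-seismicity curve, the interpolated value falls below 0.1 g, so the floor controls the retained level.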

Note that most of these documents were prepared and published more than 30 years ago (RFS 1.3.c in 1985, RFS 1.3.b in 1984), and the most recent ones date back more than 10 years (Guidelines ASN/2/01 were revised in 2006, and RFS 2001-01 in 2001). From this regulatory framework, we can also highlight that:

1. There is no grading of the seismic hazard estimate according to the nature of the nuclear facility and its potential to cause damage to humans and/or the environment (i.e., the actual associated risk). The earthquake-related stresses are estimated using the same approach for a laboratory or other facility as for a pressurized water reactor, whereas the behavioral requirements of the structures should differ to meet facility-specific safety requirements. It should be noted that, at the international level, the IAEA Safety Standards (cf. Chapter 10, Guidelines SSG-9, IAEA 2010) recommend that seismic levels be graded by facility category according to the associated risks and potential (i.e., radiological contamination potential, in particular). This difference stems directly from the application of a deterministic approach to define seismic hazard in France; conversely, the definition of seismic hazard via a probabilistic approach implies a target in terms of the level of protection (i.e., the probability of exceeding a parameter over a given period of time).

2. RFS 2001-01 and Guidelines ASN/2/01 provide acceptable methods for the definition of seismic movement for the design of the facilities. There are no specific reference documents for the safety review phases of installations, which take place every 10 years in France. Guidelines ASN #22 (2017), which apply to the design of nuclear power reactors, are also considered to be the main reference for nuclear plant assessment. During these review periods, safety is entirely evaluated by the licensee. From the seismic risk point of view, this includes conducting seismic margin studies on the existing facility in the light of an updated state-of-the-art SHA. These studies require implementation of the most up-to-date databases, and also of the most representative methods to define the actual behavior of the structure during an earthquake. Taking uncertainties into account in seismic analysis is necessary to fully assess the behavior of any facility with respect to an updated hazard, or on the contrary, to identify its weaknesses and to define potential solutions to improve its seismic safety, or to confirm the safety coefficients. The SINAPS@ project is fully in line with this framework for estimation and quantification of seismic margins of new and existing nuclear facilities.

1.4 Synthesis of French Seismic Risk Management for Nuclear Safety: from 1960s Until Today, and Perspectives

Figure 4 summarizes the chronology of the reference documents that applied in France between 1960 and 2006 (the date of the last revision of the design principles) to the management of seismic risk for all basic nuclear facilities (excluding deep storage). To this corpus of regulations are added references shared between licensees for the design, such as the Rules for Design, Manufacture, Installation and Commissioning (RCCs; e.g., mechanical components, civil engineering), which are in line with other international standards (e.g., American Society of Mechanical Engineers, American Society of Civil Engineers).

Figure 5 complements Fig. 4 from 2001 and 2005 onward, the dates of the last updates of the RFS and of the design Guidelines, respectively, and includes the important new documents, key dates, and projects related to seismic risk management in France and elsewhere. After the TSN law in 2006, the Basic Nuclear Plant Decree was published, which stated the general rules for nuclear plants. This Decree stipulates that “their application will be based on an approach commensurate with the importance of the risks or inconveniences presented by the installation”. Finally, in 2015, the ASN published a Decision (Décision ASN 2015) that established a classification of the basic nuclear installations with regard to the risks and disadvantages they pose for the interests mentioned in Article L. 593-1 of the Environment Code, where three categories were defined on the basis of criteria set out in the Decision. The ASN confirmed this classification by Decision in 2017 (Décision ASN 2017), with the publication of a list of all of the basic nuclear facilities in France and the category assigned to each.

Fig. 5 Highlights since the last revision of the reference documents in France. Emergence in France and prescription of the PSHA for the hard core levels defined after the Fukushima accident in the framework of the French complementary safety studies. PSHA and DSHA promoted in the new Guidelines ASN #22 (2017 applicable to NPP) and requested for performing probabilistic safety analysis

Guidelines ASN #22 were published in 2017, although they are not yet used operationally, and they are presented as being the reference for the design of pressurized water reactors. They are also indicated as the reference document in the case of assessments, without giving any performance objectives or any associated criteria. This is quite surprising, as these Guidelines also require the licensee to systematically perform probabilistic seismic analysis. Furthermore, the Guidelines cover all types of seismic levels, from the classical design levels (i.e., safe shutdown earthquake) to beyond-design levels, including the HCSLs. As the Guidelines are not written following a performance-based approach, their application should be accompanied by, or even preceded by, the definition of the safety objectives for each context (i.e., design, assessment, beyond design), and of the associated performance expectations for structures and components. The criteria need to be defined, as do the acceptable assumptions and methods for demonstrating that the objectives are reached and the criteria fulfilled. The texts published from 2015 to 2017 thus represent an evolution in relation to the initial doctrine, as described above.

At the international scale, the Niigata-Chuetsu Oki earthquake in 2007 and especially the Tohoku earthquake in 2011 in Japan reinforced the need to characterize and quantify the seismic margins of nuclear facilities. In France, the complementary safety studies led the ASN to prescribe seismic levels beyond the design levels for the structures and components of the hard core. In particular, the ASN enjoined licensees to propose hard core spectra that combine deterministic spectra (RFS 2001-01) and probabilistic spectra with a 20,000-year return period (without specifying the confidence level), and that include site effects. This decision is the first regarding the use of PSHA. At the same time, international and French post-Fukushima feedback, research programs on seismic margins, and work on all of the seismic risk themes (including SINAPS@) provided an opportunity for stakeholders to update the French corpus of regulations. The possible evolution towards integrated seismic safety approaches is illustrated by the approach of the Nuclear Regulatory Commission in the USA (US-NRC), as shown in Fig. 6. The explicit propagation of uncertainties to define seismic hazard (i.e., via PSHA) has been a requirement of the US-NRC since 1996, and the evolution toward integrated risk approaches has been effective since 2005 (through the Regulatory Guidelines and supporting documents), under the term ‘Risk-Informed’ Performance Based Seismic Design (see NRC RG.1.208 2007).

Fig. 6 Illustration of the evolution and revisions of references to the consideration of seismic risk for nuclear power, as carried out by the US-NRC in 2017 (source: N. Chockshi, SMIRT-24, Busan, 2017). RG, regulatory guidelines; DOE, Department of Energy Technical Standards; ASCE, American Society of Civil Engineers; ASME, American Society of Mechanical Engineers

1.5 Links Between the French Nuclear Seismic Context and the SINAPS@ Research

To summarize the issues in France, important facts that motivated the SINAPS@ research directions are listed here:

The regulatory framework is dedicated exclusively to the design stage of structures and components, and it is clearly neither a ‘performance-based approach’ nor a ‘risk-informed approach’, unlike in the US, for example.

There is no grading regarding the severity of a facility failure with respect to its environmental and human impacts.

Without a clear performance-based approach, discussions during the assessment of existing facilities are particularly difficult, as they are constrained by the whole deterministic safety approach. The historical French choice to retain a deterministic seismic level to design nuclear facilities implicitly prevents the association of seismic motion with an occurrence frequency. Such a deterministic SHA therefore cannot be used to perform any classical probabilistic seismic analysis, as requested in Guidelines ASN #22.

The Guidelines ASN #22 requirement to initially perform deterministic SHA (DSHA), and then if possible PSHA, is surprising, as the licensee needs to conduct full probabilistic seismic analysis. The coexistence of DSHA and PSHA in these Guidelines should be further explained. Indeed, it is not recommended to mix or confuse these two approaches, the outputs of which are very different in terms of physical meaning, of engineering practice, and of the treatment of uncertainties (and consequently of the confidence levels).

As a consequence of the previous observations, SINAPS@ promotes alternative approaches to those currently used in France for the design stage (which are mainly based on simplified assumptions and methods, with nonexplicit treatment of uncertainties through fixed safety coefficients, which leads to non-homogeneous margins between sites).

As a first priority, the association of reliable confidence levels and return periods with seismic hazard outputs is clearly defended, as probabilistic assessment cannot be avoided. It is also necessary to be able to appreciate the overall risk of a specific nuclear facility with respect to another nuclear facility for the same seismic level, and/or to rank the various hazards between two nuclear facilities. Secondly, an integrated methodology is promoted to compute the seismic wavefield from its nucleation on a fault, through the complex geological medium, up to the structure foundations and its transmission to the sensitive equipment. In this approach, the uncertainties are propagated explicitly and in a controlled way. SINAPS@ focuses on the consistency between all of these interfaces, to ensure that the seismic hazard outputs are adapted to, and well used by, geotechnicians and engineers. Last but not least, the relevance of the assumptions and methods used to model the seismic response is checked; in particular, the physical phenomena involved strongly depend on the seismic level considered (i.e., nonlinear behavior of soil and materials, and interactions).

1.6 Identification of the SINAPS@ Specificities and Research Directions

In the French context detailed above, which is driven mainly by a deterministic safety approach devoted to the design of nuclear facilities, probabilistic tools remain sparse and are weakly consolidated, if at all. There was therefore a real need to improve probabilistic practice to satisfy the new safety requirements, such as those in the recent Guidelines ASN #22, which integrated the lessons learned from the Fukushima-Daïchi accident. SINAPS@ promoted and developed tools that made it possible to progress toward probabilistic seismic analysis, the outputs of which are associated with confidence levels that are representative of an explicit propagation of the uncertainties. The proposed methods are suited to the physical processes that are involved at the seismic levels considered; i.e., the nonlinearities of all of the materials, soils, and buildings; the interactions between the seismic wavefield, the complex and often heterogeneous geological medium, and the foundations of the structure; and the transfer within buildings up to the equipment through its anchorage. These physical phenomena are obviously driven by the level of the seismic load. Since the Fukushima-Daïchi accident, the request to account for rare extreme events (i.e., beyond the classical safe shutdown earthquake and paleo-event levels) has heightened the need for accurate methods. The SINAPS@ research goes beyond the usual design context, with its associated simplified methods and fixed safety coefficients, and promotes the definition of performance objectives and the formulation of realistic failure criteria.

As shown in Fig. 7, SINAPS@ was built to investigate the data, models, and methods available to perform seismic analysis, with the aim of assessing the failure probability of a studied facility, structure or component, according to Eq. (1) defined below (where the risk is computed by the convolution of the failure probability with the consequences, the latter being outside the scope of SINAPS@). WP1 mainly worked to better constrain H and the way to generate and select the time series a(α). By definition, in Eq. (1), \(H\left( \alpha \right)\) is the hazard function obtained through PSHA. As the current French context still requires DSHA, some WP1 research concerned DSHA specifically. WP1 also worked on some aspects of site effects, and shared the methodology and recommendations with WP2 and WP4, to promote a bedrock SHA definition and a control point definition that are consistent with the study case and configuration. WP2 contributed to the nonlinear SSI assessment and to the full three-dimensional (3D) wavefield estimate from fault to foundation, with integration of the uncertainties in the soil and structure materials. Site effects were treated in both WP1 and WP2, to improve the interface between the reference SHA and the SSI. WP3 focused on the prediction of the seismic response of structures and equipment, with the development of simplified and complex nonlinear structural mechanics models that are relevant to the treatment of various situations. WP4 integrated the outputs of WP1, WP2, and WP3, and tested its developments against the data from the KARISMA benchmark exercise (IAEA 2014), based on the technical details of the Kashiwazaki-Kariwa NPP site provided by TEPCO. Finally, WP5 was fully focused on building–building interactions and damping identification, through the combination of a specific experimental approach and simulation.

Fig. 7 Illustration of the different contributions of SINAPS@, for WP1, 2, 3, 4 and 5, in the probabilistic seismic analysis scheme (Figure from SINAPS@ training 2018, Berge-Thierry et al. 2018). The risk analysis needs to assess the consequences, a topic not covered by SINAPS@
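Eq. (1) is not reproduced in this section. As a rough numerical illustration of how a hazard function H(α) is combined with a conditional failure probability, the following Python sketch sums the fragility multiplied by the hazard increment |dH| over bins of α. The lognormal fragility, the power-law hazard fit, and all parameter values are assumptions introduced here and are not taken from SINAPS@; they were chosen only because this pair has a known closed-form result to check the integration against.

```python
import math


def lognormal_fragility(alpha, median, beta):
    """Conditional failure probability at ground-motion level alpha
    (lognormal shape assumed for this sketch)."""
    return 0.5 * (1.0 + math.erf(math.log(alpha / median) / (beta * math.sqrt(2.0))))


def power_law_hazard(alpha, k0, k):
    """Annual frequency of exceeding alpha (assumed fit H = k0 * alpha**-k)."""
    return k0 * alpha ** (-k)


def annual_failure_frequency(hazard, fragility, alpha_min=1e-3, alpha_max=1e3, n=5000):
    """Sum fragility(alpha) * |dH(alpha)| over log-spaced bins of alpha."""
    step = (math.log(alpha_max) - math.log(alpha_min)) / n
    total = 0.0
    for i in range(n):
        lo = alpha_min * math.exp(i * step)
        hi = alpha_min * math.exp((i + 1) * step)
        mid = math.sqrt(lo * hi)  # geometric midpoint of the bin
        total += fragility(mid) * (hazard(lo) - hazard(hi))
    return total


# Hypothetical parameters: H(1 g) = 1e-4 per year, slope 2; median capacity 1 g.
K0, K = 1e-4, 2.0
MEDIAN, BETA = 1.0, 0.4

p_f = annual_failure_frequency(
    lambda a: power_law_hazard(a, K0, K),
    lambda a: lognormal_fragility(a, MEDIAN, BETA),
)

# Closed form for this specific hazard/fragility pair, used as a check.
closed_form = power_law_hazard(MEDIAN, K0, K) * math.exp(0.5 * (K * BETA) ** 2)
```

In this toy case, the failure frequency exceeds the hazard at the median capacity, because the steep hazard curve gives more weight to the weak tail of the capacity distribution. This shows why uncertainty on both the hazard and the capacity has to be propagated explicitly.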

By design, SINAPS@ covered a very broad scientific domain and produced a large amount of data and a number of publications for each aspect. This article mainly presents the achievements and recommendations, and the reader is invited to refer to the specific SINAPS@-related publications or to Berge-Thierry et al. (2018) for more detail.

2 Main Scientific Advances in Seismic Hazard Assessment (WP1)

2.1 Preamble

Before presenting the main achievements of SINAPS@ WP1, it is important to remember that:

1. Currently, and for several decades now, two SHA approaches have co-existed: deterministic and probabilistic, as previously discussed.

2. In France, the current ‘reference’ approach to assess seismic hazards is guided by RFS 2001-01, which prescribes a DSHA-type methodology in which the hazard is defined through reference earthquakes at the following three levels:

The Séisme Maximal Historiquement Vraisemblable (SMHV; Maximal Historic Plausible Earthquake), chosen in the seismic catalog (as historic to instrumental) as being the worst known event in terms of intensity produced at the site of interest, when shifted as close as possible to the site according to geological and seismological relevance (e.g., with respect to the seismic zoning);

The Séisme Majoré de Sûreté (SMS; usually compared to the concept of ‘Safe Shutdown Earthquake’ in international nuclear documents), defined by an increment of 1 in Intensity (or 0.5 in magnitude units) with respect to the SMHV characteristics;

The Paleo-earthquake, related to a significant event that produced surface ruptures and as suggested by field indices of active faults.
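The step from the SMHV to the SMS is a simple increment, which can be sketched as follows. The `ReferenceEarthquake` container, its field names, and the numerical values are hypothetical and only serve the illustration; the increments (+1 intensity degree, +0.5 magnitude unit) are those quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReferenceEarthquake:
    """Hypothetical container for a deterministic reference event."""
    magnitude: float       # magnitude of the reference event
    site_intensity: float  # macroseismic intensity produced at the site


def sms_from_smhv(smhv: ReferenceEarthquake) -> ReferenceEarthquake:
    """Derive the SMS by adding 0.5 magnitude unit and 1 intensity degree to the SMHV."""
    return ReferenceEarthquake(
        magnitude=smhv.magnitude + 0.5,
        site_intensity=smhv.site_intensity + 1.0,
    )


# Illustrative values only, not taken from any real site study.
smhv = ReferenceEarthquake(magnitude=5.5, site_intensity=7.0)
sms = sms_from_smhv(smhv)
```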

The definition of these three seismic levels dates back to discussions between the nuclear licensees and the ASN and IRSN, the most recent of which took place in the 2000s, along with the updating of the RFS. The way to obtain the reference events (i.e., SMHV, SMS, paleo-events) is guided by RFS 2001-01, in the perspective of accounting for uncertainties that are mainly related to historical seismic data.

Once the reference events are defined, the seismic movement is evaluated using a mandatory ground motion prediction equation (GMPE) (Berge-Thierry et al. 2003), and it is defined as the mean response spectrum associated to the considered levels (i.e., SMHV, SMS, paleo-event). The choice to consider the mean pseudo-spectral accelerations also resulted from consideration of the treatment of uncertainties when defining the reference events and their characteristics (i.e., in terms of magnitude, intensity on site, depth, and distance from the site) in a whole seismic safety analysis framework. The seismic design level is then defined on the basis of the two (or three) seismic levels listed above. A fourth seismic level was established in the post-Fukushima context: the HCSL.

RFS 2001-01 must be considered in its entirety. Indeed, treatment of uncertainties cannot be separated from the whole approach, which, as mentioned above, is specifically intended to cover the uncertainties inherent in the definition of the reference earthquakes. The uncertainties taken into account within the RFS 2001-01 approach implicitly introduce safety margins into the seismic analysis. As a consequence, reconsideration of the treatment of the uncertainties in the RFS 2001-01 approach would necessarily lead to reconsideration of the associated margins.

SINAPS@ WP1 worked on the characterization of the data and methods uncertainties, and investigated alternative approaches to integrate and explore the uncertainties, using DSHA or PSHA.

It is also crucial to remember that:

3. Any DSHA and PSHA performed for a target site share:

The overall knowledge in the seismic hazard field, and the references (e.g., the guidelines, practices, scientific publications);

The basic data (e.g., geological, geophysical, seismological ones), the available software, and the empirical and numerical predictions models.

At the same time, DSHA and PSHA usually differ, as follows:

First, in their intrinsic objective: DSHA aims to define one (or several) reference seismic event(s) where the seismic characteristics (e.g., magnitude, intensity, depth, distance) are directly translated into seismic motion (usually through a response spectrum) using one (or several) prediction model(s). PSHA, in contrast, expresses the seismic hazard as a probability of exceeding a certain seismic motion measure (e.g., acceleration, pseudo acceleration) during a defined period (or as an annual frequency of exceedance), and the output is usually a uniform hazard spectrum (UHS): in this case, there is no reference event or seismic scenario associated with the UHS, as it results from the spatial and temporal seismicity distribution. This conceptual difference explains why only a PSHA provides, by definition, an annual frequency of exceedance, while a DSHA will never provide this probabilistic information. By extension, this means that however it is performed, a DSHA will never produce an output that is suitable for a full seismic risk analysis, which requires, by definition, a probabilistic description of the hazard.
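The link between a probability of exceedance over a defined period and an annual frequency of exceedance can be illustrated with a minimal sketch. It assumes a Poissonian (time-independent) occurrence model, which is a common convention in PSHA and not a result of SINAPS@; the function names are hypothetical.

```python
import math


def annual_rate_from_probability(p_exceed: float, years: float) -> float:
    """Annual exceedance rate giving probability p_exceed over `years` (Poisson assumption)."""
    return -math.log(1.0 - p_exceed) / years


def probability_from_annual_rate(rate: float, years: float) -> float:
    """Probability of at least one exceedance over `years` (Poisson assumption)."""
    return 1.0 - math.exp(-rate * years)


# Classical illustration: 10% probability of exceedance in 50 years.
rate = annual_rate_from_probability(0.10, 50.0)
return_period = 1.0 / rate  # close to 475 years
```

A DSHA scenario spectrum has no equivalent of `rate`, which is why it cannot feed a probabilistic risk computation.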

Then in the treatment of uncertainties (of both data and models), be they epistemic or aleatory. On this point, the SINAPS@ WP1 research proposes some alternatives to current French practice.

As a consequence, several SINAPS@ WP1 actions finally benefited both DSHA and PSHA, such as the improvement of all of the basic data, the earthquake occurrence models, the ground motion prediction models, and the characterization of the uncertainties. In addition, the knowledge evolution achieved globally in the field of seismic hazard, and in specific topics within seismic hazard (e.g., ground motion prediction, site geological and geotechnical characterization), should be accounted for in both DSHA and PSHA approaches, and should motivate the updating of obsolete practices, simplified assumptions, and references.

Nevertheless, it is important to remember that SINAPS@ promotes continuous seismic risk analysis, which can be simply described as follows: Risk = Probability of occurrence of Hazard × conditional probability of Failure × Consequences. In the framework of SINAPS@, the Consequences were not assessed. The SINAPS@ research relates to each component of the following Eq. (1):

Equation (1) is in line with probabilistic risk analysis and performance-based earthquake engineering approaches [see EPRI, PEER and NRC references; Günay and Mosalam 2013]. In Eq. (1), Pfail is the conditional probability distribution function of failure of the studied structure or equipment; Pfc is the fragility curve, defined as the probability that the seismic response of the structural element/equipment and the soil column (the properties of which are parameterized by the vectorial quantity \(m\), possibly random too), quantified through the engineering demand parameter Y (e.g., drift, displacement, acceleration), exceeds Yf, the chosen threshold associated with the Y parameter in terms of the failure criterion; \(a\left( \alpha \right)\) is the time series, with α as the chosen seismic or intensity parameter (e.g., peak ground acceleration, pseudo acceleration, Arias intensity); and \(H\left( \alpha \right)\) is the probabilistic seismic hazard curve at a specific return period and confidence level, to be explained. \(H\left( \alpha \right)\) is necessarily obtained from PSHA; DSHA is not of interest in this framework. Equation (1) needs to be associated with the annual frequency of occurrence and the confidence level of the hazard curve. As Guidelines ASN #22 require the licensee to perform a probabilistic safety assessment to evaluate the failure probabilities of structures and components important to safety, PSHA then becomes mandatory.
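A form of the failure probability that is consistent with the definitions above can be written as follows. This is an assumed sketch in the usual performance-based notation, and not necessarily the exact expression of Eq. (1); in particular, the integration bounds and the use of the absolute derivative of the hazard curve are assumptions:

\[
P_{fail} = \int_{0}^{\infty} P_{fc}\left( Y\left( a\left( \alpha \right), m \right) > Y_{f} \mid \alpha \right) \left| \frac{\mathrm{d}H\left( \alpha \right)}{\mathrm{d}\alpha} \right| \mathrm{d}\alpha
\]

Read in this way, the fragility term carries the uncertainties of the soil and structure, while the hazard term carries the seismological uncertainties.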

Fragility curves are a valuable tool, as they establish the link between seismic hazard and the effects on the built environment. Fragility curves express the probability distribution functions associated to a class of assets for the reaching or exceeding of predefined damage states for a range of ground motion intensities. A database of a great number of ground motions is required to provide enough information to estimate the parameters defining the fragility curves in any reliable way. However, earthquake-induced damage data in most earthquake-prone regions are too scarce to provide sufficient statistical information. Thus, two appropriate alternatives to correctly estimate the performance of structures under seismic load might be: (1) through certain sets of site-specific ground motion records and synthetic ground motion records; and (2) through a large set of non-site-specific unscaled ground motion records. This latter might provide information about the structural damage for extreme cases, and allow the evolution of the damage with increasing earthquake intensity to be shown.
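As an illustration of what a fragility curve is in practice, the sketch below evaluates a lognormal fragility model and compares it with an empirical failure fraction. The lognormal shape is a widely used convention and is an assumption here, as are the function names, the median capacity and the dispersion; none of these come from the SINAPS@ studies.

```python
import math


def lognormal_fragility(alpha: float, median: float, beta: float) -> float:
    """P(failure | intensity alpha) for an assumed lognormal fragility curve."""
    if alpha <= 0.0:
        return 0.0
    z = math.log(alpha / median) / beta
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def empirical_failure_fraction(intensities, failed, low, high):
    """Fraction of analyses with low <= intensity < high that ended in failure."""
    outcomes = [f for a, f in zip(intensities, failed) if low <= a < high]
    if not outcomes:
        raise ValueError("no analysis falls in this intensity bin")
    return sum(outcomes) / len(outcomes)


# Hypothetical parameters: median capacity 0.8 g, log-standard deviation 0.4.
curve = [lognormal_fragility(a, median=0.8, beta=0.4) for a in (0.2, 0.8, 2.0)]

# Hypothetical outcomes of six nonlinear analyses (True means failure).
fraction = empirical_failure_fraction(
    [0.3, 0.5, 0.7, 0.9, 1.1, 1.3], [False, False, True, False, True, True], 0.6, 1.2
)
```

The scarcity issue raised above appears directly in `empirical_failure_fraction`: with few analyses per intensity bin, the estimated fraction is very noisy.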

The interest of performing seismic analysis following this approach is that uncertainties are explicitly integrated and well identified in each term of Eq. (1). This can be used whatever the context of the study, either for the design stage or the assessment of an existing facility.
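The convolution of a fragility curve with a hazard curve can be sketched numerically as follows. The power-law hazard curve, the lognormal fragility and all parameter values are assumptions chosen because they admit a known closed-form result against which the integration can be checked; they are not SINAPS@ results. With an annual hazard curve, the outcome is an annual failure frequency.

```python
import math


def lognormal_cdf(x: float, median: float, beta: float) -> float:
    """Assumed lognormal fragility: P(failure | intensity x)."""
    return 0.5 * (1.0 + math.erf(math.log(x / median) / (beta * math.sqrt(2.0))))


def power_law_hazard(alpha: float, k0: float, k: float) -> float:
    """Assumed hazard curve: annual frequency of exceeding intensity alpha."""
    return k0 * alpha ** (-k)


def annual_failure_frequency(median, beta, k0, k, a_min=1e-3, a_max=1e2, n=4000):
    """Sum of fragility times hazard decrement over log-spaced intensity bins."""
    log_step = (math.log(a_max) - math.log(a_min)) / n
    total = 0.0
    for i in range(n):
        a_lo = a_min * math.exp(i * log_step)
        a_hi = a_min * math.exp((i + 1) * log_step)
        a_mid = math.sqrt(a_lo * a_hi)  # geometric midpoint of the bin
        d_hazard = power_law_hazard(a_lo, k0, k) - power_law_hazard(a_hi, k0, k)
        total += lognormal_cdf(a_mid, median, beta) * d_hazard
    return total


# Hypothetical parameters, for illustration only.
numerical = annual_failure_frequency(median=0.8, beta=0.4, k0=1e-4, k=2.0)
# Closed form for this particular pair of models: H(median) * exp((k * beta)^2 / 2).
closed_form = power_law_hazard(0.8, 1e-4, 2.0) * math.exp(0.5 * (2.0 * 0.4) ** 2)
```

Each uncertainty enters through an identifiable term: `beta` in the fragility, and the slope `k` (and, in a fuller treatment, the confidence level) in the hazard curve.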

2.2 Synthesis of Main Contributions and Lessons from SINAPS@ WP1

2.2.1 Quality Assurance: Recommendation Across All WP1 Topics

Seismic hazard assessment studies use large amounts of input data from databases, models, and hypotheses proposed in different studies, and also from experts or extracted from the literature. Some documents that are ‘regulation related’ (e.g., RFS, Guidelines) explicitly indicate sources to be favored (e.g., SisFrance www.sisfrance.net, [EDF-BRGM-IRSN] database, specific GMPEs).

From the SINAPS@ study, we recommend that:

Any database or model used in SHA studies must be published and fully accessible, and their procedures for data processing and modeling must be explained and justified, to ensure traceability and transparency.

The selection of models (to describe seismicity in time and space, and/or to predict ground motion) should be driven by the level of their verification and validation against qualified data. The complexity of empirical models (and the number of proxies they consider) should be chosen with respect to the confidence level for the data and metadata; fixing some of the parameters with a priori values might induce additional uncertainties into the process.

The most recent releases should generally be used, although past observations, data and studies should not be disregarded (recommendation 3.4 of the OECD/NEA workshop, NEA/CSNI/R(2015)15, 2015). For expert debate, the data (or database, model) as published is acceptable until superseded, with justification, by new peer-reviewed publications.

2.2.2 Basic Data in Metropolitan France

SINAPS@ mainly continued the work initiated in the SIGMA project, see (Pecker et al. 2017). In particular, the SIHEX catalog was completed and made available for the community. This catalog contains information and metadata of instrumental events, and has been standardized to Mw through an explicit procedure, using the coda of signals. The SIHEX catalog has been merged with the historical events also characterized by Mw: the FCAT17 catalog was published as a SIGMA output (Manchuel et al. 2017; Traversa et al. 2017; Baumont et al. 2018).

SINAPS@ WP1 considered FCAT17 to be the most up-to-date seismic catalog for metropolitan France covering the historical and instrumental periods. Of note, it has the advantage of being published through the peer-review system, and is available to the whole community. Quantification of the uncertainties associated with the metadata was proposed. Some SINAPS@ research allows the uncertainty associated with specific metadata to be better appreciated (e.g., constraint on the depth depending on the event location and velocity model process; Turquet et al. 2019).

SINAPS@ WP1 noted that the seismic catalog is a crucial input for any SHA, and is common to DSHA and PSHA. Its completeness, metadata, and uncertainties are the basic information that fully drives all of the assumptions and models used to perform SHA, be they deterministic or probabilistic (e.g., seismicity occurrence with Gutenberg-Richter or any other model, ‘maximal magnitude’).
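To show how directly the catalog drives the occurrence model, the sketch below estimates the Gutenberg-Richter b-value with the classical maximum-likelihood estimator (with the usual half-bin correction). The function name, the completeness magnitude and the synthetic catalog are hypothetical; this is not the procedure applied to FCAT17.

```python
import math


def aki_b_value(magnitudes, completeness_mag, bin_width=0.1):
    """Maximum-likelihood b-value and its first-order standard error."""
    selected = [m for m in magnitudes if m >= completeness_mag]
    if len(selected) < 2:
        raise ValueError("not enough events above the completeness magnitude")
    mean_mag = sum(selected) / len(selected)
    # Half-bin correction accounts for magnitudes rounded to `bin_width`.
    b = math.log10(math.e) / (mean_mag - (completeness_mag - bin_width / 2.0))
    return b, b / math.sqrt(len(selected))


# Synthetic, unrounded catalog built from the quantiles of an exponential
# distribution with b = 1 above a hypothetical completeness magnitude of 2.5.
n_events, true_b, mc = 2000, 1.0, 2.5
beta = true_b * math.log(10.0)
catalog = [mc - math.log(1.0 - (i + 0.5) / n_events) / beta for i in range(n_events)]

b_est, b_err = aki_b_value(catalog, completeness_mag=mc, bin_width=0.0)
```

Changing the completeness magnitude or the magnitude conversion shifts `mean_mag`, and therefore the b-value and every rate derived from it.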

2.2.3 Seismic Source Characteristics

SINAPS@ WP1 contributed to improvements to the focal mechanisms of French earthquakes. These focal mechanisms are important, as they are, for example, used to propose seismotectonic zoning. They also characterize the deformation regime of faults. In addition to improving the French focal mechanism database, the work performed in SINAPS@ showed the strong dependency between the metadata, as shown in Fig. 8 (Do Paco et al. 2017). In some cases, the focal mechanism was correlated with the depth of the event, with the depth itself mainly controlled by the velocity model used to locate the event. This also means that the uncertainties of all of these metadata are correlated, and that they cannot be propagated independently when performing a SHA.

Variance reduction of focal solutions with respect to the velocity model and the event depth, for the Oléron Island event (28/04/2016) (Do Paco et al. 2017)

2.2.4 Seismic Source Characterization in Metropolitan France

SINAPS@ WP1 made two main contributions to this field, the conclusions and impacts of which are of great importance for DSHA and PSHA. The first was the study of Craig et al. (2016) on the Fennoscandia region, which suffered a cluster of several severe earthquakes (Mw > 7) that were triggered by post-glaciation processes (i.e., elastic rebound of the crust delayed in time after ice melting). Craig et al. (2016) showed that this phenomenon also impacted, and still impacts today (although at lower amplitudes), the stress field of the French territory and the static stress changes applied to its faults. This study drew two important conclusions regarding the interpretation of singular severe events (e.g., paleo-earthquake indices): (1) some of them occur on faults that are not promoted by the present-day stress regime revealed by the seismicity considered in SHA models; and (2) the seismicity showed non-Poissonian behavior.

In line with a previous study, Bertran et al. (2017) investigated some periglacial deformation indices. They discussed these with respect to other brittle deformation indices of seismic origin. This study concluded that in metropolitan France, some Quaternary indices of soft soil deformation are related to periglacial deformation; they therefore cannot be attributed to paleo-seismic origins, and do not contribute to building or constraining assumptions of seismogenic fault behavior.

2.2.5 Seismic Motion Prediction

SINAPS@ WP1 partners used the strong motions available in open databases. SINAPS@ WP1 highlights the quality and usefulness of the NGA-West 2 database (which gathers worldwide records of shallow crustal earthquakes in active regimes) and of the RESORCE Euro-Mediterranean database. These two databases:

Fulfil the quality, traceability and signal treatment processes required for the use of the data and metadata, and the uncertainties they contain.

Supersede previously published databases. In particular, the strong motion database used in 2000 to derive the GMPE prescribed by RFS 2001-01 is no longer recommended (choice of metadata not appropriate, in terms of magnitude scale and event-to-station distance definition, or site characterization through classes based on large intervals of estimated shear-wave velocity).

SINAPS@ WP1 underlines that in some cases, the metadata provided by the strong motion databases are not fully derived from empirical raw data. As an example, sometimes rupture parameters are provided, but they are related to a seismological model, and not directly deduced from the data. This is of great importance when these metadata (and their uncertainties) are used to produce ground motion prediction models. Some recent GMPEs proposed very complex functional forms, including rupture parameters; but are all of these seismic rupture models compatible? Is the model complexity in agreement with the level of knowledge provided by the raw seismological data? Among all of the metadata, the distance between the event and the recording station is of primary importance. The multiplicity of definitions with respect to the rupture area is representative of the seismic models behind these distance definitions.

For GMPEs, which are a key tool in any SHA (i.e., DSHA, PSHA), SINAPS@ WP1 recommends the use of recently published GMPEs (or the use of older GMPEs should be clearly justified). Indeed, peer review should guarantee the requirements (e.g., quality, relevance of the functional form with respect to the data) of any GMPEs in 2018. SINAPS@ WP1 recommends the use of GMPEs where the proxies are the most compatible with those chosen in the seismic catalog (e.g., magnitude scale, distance definition), to avoid as many conversions as possible. Indeed, these conversions add artificial uncertainties to the whole process.

2.2.6 Toward Physical Based Strong Motion Prediction

SINAPS@ WP1 worked on ground motion predictions using physical rupture-based models. Kinematic rupture modeling was performed on extended seismic faults. The SINAPS@ study confirmed the relevance of a number of the available and published software packages. The focus was on the use of empirical Green’s functions (EGFs; assumed to be a good representation of the seismic wavefield from the source to the site over a broad frequency range) to predict site-specific seismic responses. Several assumptions are nevertheless associated with the summation of EGFs to predict strong motion due to an extended seismic source, in terms of the relevance of EGFs in relation to focal mechanism with respect to the target event. The scarcity of strong motion data in metropolitan France meant that this technique was of very limited use in France, as there were few well-characterized events available (i.e., in terms of 3D location, magnitude, focal mechanism), and those that were available were clearly associated with a specific fault of interest. In the framework of SINAPS@, the work performed by Del Gaudio et al. (2017), which arose from an investigation of the source parameters, demonstrated the capacity of such EGF kinematic models to predict strong motion in agreement with the GMPE predictions for the same scenario, including the variability of several intensity parameters. Dujardin et al. (2018a) conducted an extensive sensitivity study on a canonical case using the same EGF summation and kinematic source model. This work focused on the numerous precautions to be taken when working in a region or with a fault case characterized by rare events that could be retained as EGFs. In such cases, many delicate corrections have to be implemented to allow for the distortion of the focal mechanism on the rupture plane. Without these adjustments, bias is introduced in the near-field strong motion.
Despite current difficulties associated with these emergent techniques, the numerical ground motion prediction models should be developed in the future as a strategy to overcome the limits of GMPEs close to faults, given the lack of ground motion data. They are of interest for sensitivity analysis, and in particular to explore the hazard variability induced by complex seismic rupture processes and uncertainties.

In addition, Dujardin et al. (2018b) present in this issue an application of the EGF summation method to a test case in southeastern France, at the Cadarache nuclear site. This Cadarache site-specific study supports several important SINAPS@ conclusions that apply to all sites:

The necessity to correctly and fully carry out SHA for the bedrock condition, to define the reference motion;

The inadequacy of generic GMPEs where the site characteristics are given through Vs-based classes roughly estimated or poorly constrained;

The relevance of assessing the realistic seismic response by performing site-specific studies and taking advantage of in situ representative data;

The interest in, and potential of, seismic networks.

2.2.7 Bayesian Tool as an Objective Alternative to Expert Judgment

SINAPS@ WP1 successfully tested the potential of Bayesian model averaging approaches to rank and weight a set of GMPE candidates to be included in DSHA or PSHA. This technique was applied using state-of-the-art GMPEs that were taken from a study in the French metropolitan territory. A strong motion dataset that was considered to be representative of the expected ground motion on site was extracted from the RESORCE database, to challenge the predictions of the different GMPEs against these observed data. The SINAPS@ study clearly showed that without any a priori information on the GMPEs, the Bayesian model averaging provided a hierarchy and a weighting of the GMPEs that were based only on their relevance with respect to real data (Bertin et al. 2019). This finally provided an objective ranking that avoided any questionable expert judgment (e.g., on the choice of the candidate GMPEs, on their weight in a logic-tree), as shown in Fig. 9. Using such an approach, any kind of GMPE (be it purely empirical, hybrid, or based on strong motion simulation as ‘physical based’ modeling) can be challenged against the data, and the final weight is justified objectively. The potential of Bayesian techniques is expected to be revealed in the future; e.g., for a posteriori testing of short return period outputs (i.e., hazard levels) of PSHA, or to update a model (either considering existing data, or including new information).

Quadratic means of prediction error using the Bayesian model averaging approach on a large GMPE set. a Prior predictions. b Posterior predictions. The black line corresponds to the predicted residual error sum of squares (PRESS) of the Bayesian model averaging combination of calibrated GMPEs (from Bertin et al. 2019)
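The principle of data-driven weighting can be illustrated with a deliberately simplified sketch: each candidate model is scored by the Gaussian likelihood of the observed log ground motions, and the scores are normalized into posterior weights. This is not the procedure of Bertin et al. (2019), which also calibrates the models; the model labels, predictions, observations and the common sigma below are all hypothetical.

```python
import math


def gaussian_log_likelihood(observed_ln, predicted_ln, sigma_ln):
    """Log-likelihood of observed log-motions under a model with fixed sigma."""
    total = 0.0
    for obs, pred in zip(observed_ln, predicted_ln):
        z = (obs - pred) / sigma_ln
        total += -0.5 * z * z - math.log(sigma_ln) - 0.5 * math.log(2.0 * math.pi)
    return total


def posterior_weights(log_likelihoods, priors=None):
    """Normalized weights proportional to prior times likelihood."""
    n = len(log_likelihoods)
    priors = priors or [1.0 / n] * n  # uniform prior: no a priori preference
    log_post = [ll + math.log(p) for ll, p in zip(log_likelihoods, priors)]
    shift = max(log_post)             # avoids underflow in the exponentials
    unnormalized = [math.exp(lp - shift) for lp in log_post]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


# Hypothetical observed ln(PGA) values and predictions of three candidate models.
observed = [-2.1, -1.8, -2.4, -1.5, -2.0]
candidates = [
    ("model_A", [-2.0, -1.9, -2.3, -1.6, -2.1]),
    ("model_B", [-1.5, -1.2, -1.8, -0.9, -1.4]),
    ("model_C", [-3.1, -2.8, -3.4, -2.5, -3.0]),
]
log_liks = [gaussian_log_likelihood(observed, pred, 0.6) for _, pred in candidates]
weights = posterior_weights(log_liks)
```

With a uniform prior, the ranking depends only on the agreement between predictions and data, which is the property emphasized above.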

2.2.8 Site-Specific SHA

Consistent with WP2 and WP4, SINAPS@ WP1 contributed to better constrain SHA from a site-specific point of view. SINAPS@ WP1 underlined the improvements that have been made since the 2000s in the characterization of site properties. These have superseded the way in which sites were previously described; e.g., through rough categories such as ‘rock’ or ‘soil’. Nowadays, any site SHA requires site measurements, especially if the facility is of a nuclear type (see Dujardin et al. 2018b; this issue).

SINAPS@ and other national and worldwide research projects have converged to affirm that describing a seismic site response through a unique value (such as Vs30m, which is sometimes not directly measured but assessed from geological maps) is clearly insufficient. At a minimum, this needs to be complemented by an estimate of the depth of the ‘reference bedrock’ and its velocity (Dujardin et al. 2018b). Other seismic parameters are of particular importance (e.g., Kappa, resonance frequency) and should complete the site description. State-of-the-art GMPEs require several site-specific parameters, used as ‘proxies’ in their functional forms.

Laurendeau et al. (2017) and Bora et al. (2015, 2017) took advantage of the availability of well-characterized strong motion databases (i.e., KiK-net and RESORCE, respectively) to propose alternative approaches to classical host-to-target corrections, which are mainly based on the Vs30m and Kappa parameters. These studies showed that the host-to-target adjustment is particularly ‘approach-sensitive’, and results might vary widely.
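For orientation, the Kappa part of a classical host-to-target correction can be sketched as a frequency-dependent factor applied to the Fourier amplitude spectrum, assuming the usual exponential high-frequency decay model exp(−πκf). This is a generic textbook form and not the alternative approaches proposed in the studies cited above; the function name and the Kappa values are hypothetical.

```python
import math


def kappa_adjustment_factor(freq_hz: float, kappa_host: float, kappa_target: float) -> float:
    """Ratio of target to host Fourier amplitude under an exp(-pi * kappa * f) decay."""
    return math.exp(-math.pi * (kappa_target - kappa_host) * freq_hz)


# Hypothetical values: host region kappa = 0.04 s, harder target rock kappa = 0.02 s.
frequencies = [0.5, 1.0, 5.0, 10.0, 20.0]
factors = [kappa_adjustment_factor(f, 0.04, 0.02) for f in frequencies]
```

Because the factor grows exponentially with frequency, a small change in the Kappa estimate has a large effect at high frequencies, which is one reason why such adjustments are so sensitive to the approach chosen.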

Site-specific studies require permanent and temporary geophysical and seismological instrumentation to better constrain the ‘site term’ (to allow adjustment to the GMPE predictions based on the ergodicity assumption, and derived in other territories). Instrumental investment is unavoidable to reduce the uncertainties for strong motion site-specific predictions. These instrumental geophysical data are also crucial to verify and validate site response simulations, whatever the site configuration (i.e., 1D, 2D, 3D), as largely discussed and illustrated in WP2 and WP4.

SINAPS@ WP1 also underlined that the Fourier spectrum is strongly preferable to the response spectrum for seismic analysis (Bora et al. 2017). Indeed, the Fourier spectrum is a direct measure of the strong motion, whereas the response spectrum is already an envelope of the maximal responses of single-degree-of-freedom oscillators. In terms of uncertainties and margin assessment, the Fourier spectrum appears to be a better tool; it should be used for future GMPE developments and chosen as the intensity measure in SHA. Its other advantages are its fully physical meaning (linked to the seismic scenario) and the direct generation of the corresponding time series.
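The difference between the two quantities can be made concrete with a minimal sketch: the Fourier amplitude is a direct transform of the record, whereas the pseudo-spectral acceleration is the peak response of a damped oscillator driven by that record. The Newmark average-acceleration scheme, the 5% damping and the synthetic tapered sine used as input are choices made for this illustration only.

```python
import math


def fourier_amplitude(acc, dt, freq_hz):
    """Fourier amplitude at one frequency, by direct summation over the record."""
    re = im = 0.0
    for n, a in enumerate(acc):
        phase = 2.0 * math.pi * freq_hz * n * dt
        re += a * math.cos(phase)
        im -= a * math.sin(phase)
    return math.hypot(re, im) * dt


def pseudo_spectral_acceleration(acc, dt, freq_hz, damping=0.05):
    """Peak oscillator response (unit mass, Newmark average acceleration)."""
    wn = 2.0 * math.pi * freq_hz
    c, k = 2.0 * damping * wn, wn * wn
    k_hat = k + 2.0 * c / dt + 4.0 / dt**2
    u, v, a_rel, u_max = 0.0, 0.0, -acc[0], 0.0
    for ag in acc[1:]:
        rhs = -ag + (4.0 / dt**2) * u + (4.0 / dt) * v + a_rel + c * (2.0 * u / dt + v)
        u_new = rhs / k_hat
        v_new = 2.0 * (u_new - u) / dt - v
        a_rel = 4.0 * (u_new - u) / dt**2 - 4.0 * v / dt - a_rel
        u, v = u_new, v_new
        u_max = max(u_max, abs(u))
    return k * u_max


# Synthetic input: 2 Hz sine with a smooth (Hann) envelope, 10 s long.
dt, n_samples = 0.005, 2000
duration = dt * n_samples
acc = [
    0.5 * (1.0 - math.cos(2.0 * math.pi * i * dt / duration))
    * math.sin(2.0 * math.pi * 2.0 * i * dt)
    for i in range(n_samples)
]
pga = max(abs(a) for a in acc)
fas_2hz, fas_5hz = fourier_amplitude(acc, dt, 2.0), fourier_amplitude(acc, dt, 5.0)
psa_2hz = pseudo_spectral_acceleration(acc, dt, 2.0)
psa_25hz = pseudo_spectral_acceleration(acc, dt, 25.0)
```

In this example the Fourier amplitude is negligible away from 2 Hz, whereas the response spectrum remains close to the peak ground acceleration at 25 Hz even though the input carries almost no energy there, which illustrates its envelope character.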

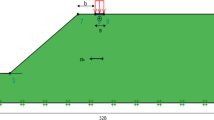

Consistent with WP2 and WP4, SINAPS@ WP1 strongly supported the evolution of the current French practice toward site-specific approaches, with clear definition of SHA at the relevant ‘control point’. The control point is characterized by its depth and geotechnical/geophysical properties (see Fig. 10, where control points are considered for the Kashiwazaki Kariwa Nuclear Power Plant (KK NPP) demonstrative study case). The control point is chosen depending on the study case. A control point at the reference bedrock is strongly recommended to perform SHA (i.e., at least for outcropping bedrock, or at depth). The site effects are assessed in collaboration with geotechnicians and engineers through SSI computations. SINAPS@ WP4 studies clearly showed the bias of a ‘bad control point definition’ when performing the whole computation from seismic hazard to SSI, and for fragility curve assessment (Wang and Feau 2019). In particular, the deconvolution step currently systematically applied by engineers is at least questionable or indeed inappropriate (especially when severe seismic levels are considered, and non-linear soil and/or structure behaviors are expected or suspected; in such cases, the relevance of the linear equivalent approach has not been supported).

Simplified scheme of the KK SINAPS@ demonstrative case (see WP4). Reactor building RB7 is embedded by over 25 m. Beneath the soil, the bedrock is found at ~ 167 m in depth. Stars indicate locations of different ‘control points’: star 1 ‘free field at soil site condition’; star 2 ‘free field at the outcropping bedrock condition’ and star 3 ‘at depth—foundation level—local soil condition’ (modified from Berge-Thierry et al. 2017a)

2.2.9 Selection of Time Series That are Compatible with Spectral Hazard Descriptions: Relevance to Engineering Application Needs and Variability Treatments

The step of selecting the time series representative of the site-specific seismic hazard is crucial to the whole process of seismic analysis, as these are the seismic inputs of simulations performed to assess the site response, which include all interactions, as studied in WP2, WP3, and WP4.

SINAPS@ WP1 and WP4 contributed to this topic through considering the outputs from DSHA and PSHA, to provide response spectra related to specific seismic scenarios and to uniform hazard spectra, respectively. These two kinds of outputs have significantly different physical meanings: the way to select time series (i.e., from real strong motion databases, or generated from simulations) that are representative of these spectra might drive their seismic characteristics (e.g., in terms of frequency content, amplitude). Therefore, the seismic response of structures or components might vary from one set to another, especially in the case of nonlinear behavior.

Research on these topics was carried out from DSHA through a PhD (Isbiliroglu 2017), and a site specific case-study (Wang and Feau 2019), while Zentner et al. (2018) reported on the time series selection compatible with a UHS from PSHA, with the introduction of the state-of-the-art concept of conditional spectrum, as widely used in risk-based seismic analysis. The practical study of Wang and Feau (2019) was performed on the KK NPP site, and it outlined several important points that should be considered further, as follows:

The choice of a synthetic ground motion generator and its relevance (i.e., consistency of the synthetic ground motion with respect to natural strong motion, in terms of several intensity measures beyond the unique spectral aspect).

The scaling of the strong motion to cover a large motion intensity range, to perform vulnerability and failure analysis.

The definition of the control point (e.g., free field including local site responses or outcropping bedrock, or at depth at a specific bedrock reference level). This assumption is crucial, as it drives the deconvolution process and finally strongly impacts upon the seismic loading properties imposed at the structure foundations.

The relevance of using a linear equivalent method in this site specific study (nonlinear soil behavior needed under high seismic motion).

This study is particularly interesting for at least two reasons: (1) it illustrates how the strong motion variability can be fully integrated into the seismic analysis, and how it finally affects (or not) the response when the whole nonlinear soil-structure-equipment system is modeled; (2) it shows how the accumulation of simplified assumptions and methods can lead to poor representations of physical phenomena, and in turn to poor failure probability predictions (Fig. 11).

Two examples of median fragility curves and 95% confidence intervals for the 4 Hz resonant equipment, computed for a failure criterion of 0.2 × g (left) and for a failure criterion of 0.7 × g (right). These data suggest that defining the control point of the input motion at the soil surface (blue curves) as prescribed for French nuclear practice is not appropriate, and can lead to biased results when performing nonlinear soil-structure fragility analysis. This study has shown that the approach for which the excitation is defined at the surface tends to quasi-systematically underestimate the risk of failure (Wang and Feau 2019; Berge-Thierry et al. 2017c)

As already emphasized, the SINAPS@ research contributes to each step of an integrated seismic safety analysis, the aim of which is to compute the failure probability of key structures, systems and/or components, following Eq. (1). To follow such an approach, the seismic hazard of a site needs to be assessed using a probabilistic methodology: only PSHA can, by definition, be associated with the hazard probability (or the frequency of exceedance) of the chosen seismic intensity measure over a specific time duration. The outputs of DSHA (i.e., response spectra associated with seismic scenarios) and PSHA (UHS, which result from the entire magnitude scenario range, with each weighted according to its occurrence rate) have significantly different physical meanings. Among these differences, and with respect to the probabilistic objectives and the controlled propagation of uncertainties, it needs to be remembered that the UHS is a kind of spectral envelope, as there is no unique earthquake scenario that can generate the frequency content of a UHS. The process to select a set of ground motions (natural or synthetic) from a UHS might therefore be different from, and optimized with respect to, the methodologies classically used when spectra result from DSHA (as investigated by Isbiliroglu 2017 and Wang and Feau 2019). During SINAPS@, this step was studied and greatly improved by Zentner et al. (2018), as follows:

First, the simulation of ground motion time histories in agreement with conditional spectra was developed through a stochastic ground motion simulation model. The methodology used was inspired by an innovative ground motion selection procedure that was previously proposed by Lin et al. (2013). The original method was based on simulation of sets of conditional spectra that represented the spectral shape as median and sigma, and selection (and possible scaling) of the recorded time history that best fit the conditional spectra, one by one. Ground motion simulation allows time histories to be obtained without having to resort to scaling and modification. Moreover, there is no limitation to the number of available appropriate time histories for the matching of various criteria, such as spectral shape, strong motion duration, and other ground motion proxies, which can be generated at low cost.

Secondly, different ways to implement the conditional spectra approach for the computation of fragility curves and probabilistic floor spectra were investigated.

Finally, an integrated approach was investigated that was based on the transfer of ground motion defined on outcropping rock by conditional mean spectra to the NPP floor level, by means of a computational methodology with Code_Aster. The procedure is illustrated in Fig. 12, and this is in line with the international state-of-the-art procedures and with practice in a regulatory context in the USA, for example (Zentner 2014, 2017; Trevlopoulos and Zentner 2019).

Fig. 12 Illustration of the Zentner et al. (2018) integrated approach
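The conditioning step that underlies conditional spectra can be sketched as follows. This is a minimal illustration and not the Zentner et al. (2018) implementation: the median spectrum, the log-standard deviations, and the correlation function `toy_rho` are hypothetical placeholders, whereas a real study would take them from a GMPE and a published inter-period correlation model. The sketch assumes that ln Sa values at different periods are jointly normal, so that conditioning on the value at a period T* shifts the mean and reduces the sigma at the other periods.

```python
import math

def conditional_spectrum(periods, mu_ln, sigma_ln, rho, t_star, ln_sa_star):
    """Conditional mean and sigma of ln Sa(T), given ln Sa(T*) = ln_sa_star.

    Assumes joint normality of ln Sa across periods; rho(t1, t2) is the
    correlation of the normalized residuals at periods t1 and t2.
    """
    i_star = periods.index(t_star)
    # Number of standard deviations between the target and the median at T*.
    eps_star = (ln_sa_star - mu_ln[i_star]) / sigma_ln[i_star]
    cond_mu, cond_sigma = [], []
    for t, mu, sig in zip(periods, mu_ln, sigma_ln):
        r = rho(t, t_star)
        cond_mu.append(mu + r * eps_star * sig)
        cond_sigma.append(sig * math.sqrt(max(0.0, 1.0 - r * r)))
    return cond_mu, cond_sigma

def toy_rho(t1, t2):
    # Hypothetical correlation model, for illustration only.
    return math.exp(-0.5 * abs(math.log(t1 / t2)))

# Hypothetical unconditional spectrum (median Sa in g, log-standard deviation).
periods = [0.1, 0.2, 0.5, 1.0, 2.0]
mu_ln = [math.log(v) for v in (0.5, 0.6, 0.4, 0.2, 0.08)]
sigma_ln = [0.60, 0.60, 0.65, 0.70, 0.75]

t_star = 0.5
ln_sa_star = math.log(0.8)  # target Sa(T*) taken, e.g., from a UHS ordinate
cond_mu, cond_sigma = conditional_spectrum(
    periods, mu_ln, sigma_ln, toy_rho, t_star, ln_sa_star)

for t, m, s in zip(periods, cond_mu, cond_sigma):
    print(f"T = {t:4.1f} s  median Sa = {math.exp(m):.3f} g  sigma_ln = {s:.3f}")
```

At the conditioning period the conditional sigma vanishes and the conditional median equals the target, which is what distinguishes such a spectrum from the UHS envelope. Sampling whole spectra would additionally require the full conditional covariance matrix, which is omitted here.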

3 WP2 Non-linear Site Effects and Soil Structure Interactions

3.1 The Scientific Context

In the framework of wave propagation from a source to equipment housed within a structure, the WP2 activities are positioned at the interface between soil and structure, seismology and structural dynamics, and hazard and structural vulnerability. Although SSI effects have been well known since the 1970s, they have often been tackled under simplified assumptions, including Winkler springs, uniform incident wave fields, shallow rigid footings, or equivalent-linear soil behavior, among others. Some of these assumptions have been improved in recent years, to highlight the intrinsic safety margins. Moreover, these previous studies showed high sensitivity to the uncertainty in both the seismic loading and the properties of the soil domain surrounding the structure. In addition, to take into account extreme events in the post-elastic behavior of structures, it is necessary to have a more detailed description of the seismic loading, in both time and space, that goes beyond a given maximum acceleration or codified spectrum. Finally, instrumental and theoretical seismology has highlighted the complexity and variability of the incoming seismic waves, with near-field effects, site effects, nonlinear filtering of strong motion, and spatial variability. These advances have now been built into the bigger picture, which combines diverse methods with their associated difficulties, and is sometimes inconsistent with the regulations and common methods used around the world. WP2 was divided into three axes according to the tasks in the research, which define its main objectives.

3.2 Objectives of WP2

WP2 has the following three objectives:

1. Improvement of traditional methods that define the input motion at the base of a structure,

   (a) based on the results obtained in WP1;

   (b) including spatial variability of the signals;

   (c) with quantification of uncertainties of diverse soil materials.

2. Development of new methods,

   (a) from the fault to the equipment, including nonlinearity and variability of soil properties;

   (b) coupling structural and wave propagation codes.

3. New seismic data acquisition to validate:

   (a) in the seismicity framework of France (i.e., low to moderate);

   (b) for the validation site (Argostoli site, Kephalonia Island, Greece).

It is important to bear in mind that the deliverables of each task define the final product, which is to create a large-scale probabilistic model from the source to the structure, taking into account nonlinear site effects, SSI, and the propagation of uncertainties (e.g., material properties, type of sources) for the demonstrative case study which was performed in WP4.

3.3 Soil-Structure Coupling

One of the goals of this work was to simulate nonlinear SSI using the ‘domain reduction method’ approach. Usually a nonlinear SSI (NL-SSI) problem is solved using direct methods, which can be very expensive in terms of computation time, due to the treatment of infinite domains (i.e., fictive boundaries for a large-scale domain). To reduce the computation time, a possible strategy is to reduce the computational domain (i.e., the soil domain) and to bring the soil boundaries close to the structure. In this case, two aspects are very important: (1) in the full finite element model (FEM) approach, the incident waves must be imposed according to the hypothesis of soil behavior at the fictive boundaries; and (2) moving the fictive boundaries close to the structure means that the influence of the outgoing waves at these boundaries is important. Absorbing boundaries are then needed to satisfy the radiation condition for the incompatible outgoing waves. Thus, the efficiency of the absorbing layer is a key point to reduce the size of the problem. These points are the main highlights relating to domain reduction methods.
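The role of the absorbing boundary can be illustrated with a deliberately simple 1D linear toy problem, which is unrelated to the FEM models used in the project. The sketch below propagates a pulse toward the right-hand edge of a truncated domain and compares a fixed boundary with a first-order absorbing condition (Mur's discretization of the one-way wave equation), used here only as a stand-in for the absorbing layers discussed above. The grid size, wave speed, and pulse shape are arbitrary assumptions.

```python
import math

def simulate(absorbing, n=201, c=1.0, length=1.0, cfl=0.5, t_end=1.0):
    """Explicit finite differences for u_tt = c^2 u_xx on [0, length].

    The left boundary is fixed; the right boundary is fixed or absorbing.
    Returns max |u| left in the domain at t_end (the residual reflection).
    """
    dx = length / (n - 1)
    dt = cfl * dx / c
    steps = int(round(t_end / dt))
    x = [i * dx for i in range(n)]

    def pulse(s):
        return math.exp(-((s - 0.5 * length) / 0.05) ** 2)

    # A right-travelling wave u(x, t) = pulse(x - c t) at t = -dt and t = 0.
    u_prev = [pulse(xi + c * dt) for xi in x]
    u = [pulse(xi) for xi in x]
    r2 = cfl ** 2
    mur = (c * dt - dx) / (c * dt + dx)

    for _ in range(steps):
        u_next = [0.0] * n  # the end nodes stay at zero unless overwritten
        for i in range(1, n - 1):
            u_next[i] = (2.0 * u[i] - u_prev[i]
                         + r2 * (u[i + 1] - 2.0 * u[i] + u[i - 1]))
        if absorbing:
            # First-order Mur condition: lets right-going waves leave.
            u_next[-1] = u[-2] + mur * (u_next[-2] - u[-1])
        u_prev, u = u, u_next
    return max(abs(v) for v in u)

residual_fixed = simulate(absorbing=False)
residual_absorbing = simulate(absorbing=True)
print(f"max |u| after reflection, fixed boundary:     {residual_fixed:.4f}")
print(f"max |u| after reflection, absorbing boundary: {residual_absorbing:.4f}")
```

With the fixed boundary the pulse returns into the domain almost undiminished, whereas the absorbing condition leaves only a small residual. In 2D or 3D nonlinear problems the outgoing field is far more complex, which is why the efficiency of the absorbing layer becomes the controlling factor for how far the domain can be reduced.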

In this study, using the input data of the benchmark KARISMA exercise (IAEA 2014), the objective was to compare different strategies for fully nonlinear analysis of SSI problems using fictive boundaries to represent the infinite domain. Usually, to perform SSI simulations, periodic conditions are used to approximate the lateral boundaries. Moreover, the seismic loading is imposed as vertically incident waves at the bottom of the model. This approach is accurate under two conditions: the fictive boundaries are distant from the structure, and the problem respects the periodicity conditions (e.g., symmetry of geometry and loading). Because of the presence of the structure, and when the aim is to reduce the size of the model, this is not the most accurate technique, and other kinds of boundary models are needed to radiate the outgoing waves from the structure toward infinity. Hence, a parametric study was carried out using several strategies to absorb all of the outgoing waves for symmetrical and asymmetrical systems. In addition, a numerical procedure was defined to simulate the incoming waves. This was based on computation of the equivalent force field at the boundaries from a model without the structure. In this case, for the soil close to the structure, the computations were performed considering nonlinear behavior, which was described by a simple constitutive law developed in the Cast3M FEM code.

3.4 Validation of Nonlinear Soil Models for Strong Ground Motion

The aim of this study was to provide some clues concerning the evaluation and propagation of epistemic uncertainties in the simulation of seismic site responses. In this context, a benchmark was used to assess how well different numerical models represent nonlinear soil behavior in a 1D nonlinear site response analysis. The results were compared to the observations at two sites of the Japanese accelerometric network (i.e., Sendai, Kushiro KSRH10) that were intensively characterized with in situ measurements and multiple laboratory measurements conducted on disturbed and undisturbed soil samples (Régnier et al. 2017).

The predictions obtained with a large number of codes were compared to observations at the selected sites of the Japanese accelerometric network. Then, the residuals and the software-to-software variability were calculated for the two sites, and compared to the part of the uncertainty in GMPEs that is associated with site amplification. According to these data, the misfit was generally higher than the software-to-software variability. In addition, contrary to what was expected, the software-to-software variability and the misfit were nearly equivalent for the weakest input motions.
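The two quantities compared in this paragraph can be sketched as follows. The definitions used here (natural-log residuals, sample standard deviation across codes, and an RMS misfit per period) are assumptions made for illustration and are not necessarily the exact metrics of the benchmark; the code names and spectral values are hypothetical.

```python
import math
import statistics

def code_to_code_sigma(predictions):
    """Per-period standard deviation of ln(prediction) across codes."""
    columns = zip(*predictions.values())
    return [statistics.stdev([math.log(v) for v in col]) for col in columns]

def rms_misfit(predictions, observed):
    """Per-period RMS, across codes, of ln(observed / predicted)."""
    n_codes = len(predictions)
    out = []
    for j, obs in enumerate(observed):
        sq = sum(math.log(obs / pred[j]) ** 2 for pred in predictions.values())
        out.append(math.sqrt(sq / n_codes))
    return out

# Hypothetical surface spectral accelerations (g) at three periods.
observed = [0.30, 0.55, 0.40]
predictions = {
    "code_A": [0.25, 0.50, 0.45],
    "code_B": [0.28, 0.60, 0.42],
    "code_C": [0.22, 0.48, 0.50],
}

sigma_c2c = code_to_code_sigma(predictions)
misfit = rms_misfit(predictions, observed)
for j, (s, m) in enumerate(zip(sigma_c2c, misfit)):
    print(f"period {j}: code-to-code sigma = {s:.3f}, misfit = {m:.3f}")
```

Working in logarithmic units makes both quantities directly comparable with the site-related part of the GMPE sigma, which is also expressed on a log scale.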

Another important aspect of this benchmark was that, to simulate the seismic soil response numerically, a truly nonlinear approach was mainly considered (i.e., time domain analysis). Two types of nonlinear models were used: (1) those that rely on the description of the backbone and hysteretic curves; and (2) the advanced constitutive models based on the plasticity framework that can take into account the initial and critical state, volume deformations, and drainage conditions, among others. Here, the former type of model allowed simulation of the dynamic soil behavior in a simple way, with an acceptable level of accuracy.
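A model of type (1) can be sketched with a hyperbolic backbone curve and the Masing rule for unloading and reloading. The text does not state which backbone form the benchmark codes used, so the hyperbolic form is an assumption chosen as a common example, and the values of `g_max` and `gamma_ref` are hypothetical.

```python
def backbone(gamma, g_max, gamma_ref):
    """Hyperbolic backbone: shear stress as a function of shear strain."""
    return g_max * gamma / (1.0 + abs(gamma) / gamma_ref)

def modulus_reduction(gamma, gamma_ref):
    """Secant modulus ratio G/Gmax implied by the hyperbolic backbone."""
    return 1.0 / (1.0 + abs(gamma) / gamma_ref)

def masing_branch(gamma, gamma_rev, tau_rev, g_max, gamma_ref):
    """Unloading or reloading branch that starts at the reversal point.

    Masing rule: the branch is the backbone scaled by a factor of two,
    (tau - tau_rev) / 2 = backbone((gamma - gamma_rev) / 2).
    """
    return tau_rev + 2.0 * backbone(0.5 * (gamma - gamma_rev), g_max, gamma_ref)

# Hypothetical soil parameters: Gmax = 60 MPa, reference strain = 0.1 %.
g_max = 60.0e6
gamma_ref = 1.0e-3

gamma_a = 2.0e-3                       # strain amplitude of the cycle
tau_a = backbone(gamma_a, g_max, gamma_ref)
tau_back = masing_branch(-gamma_a, gamma_a, tau_a, g_max, gamma_ref)

print(f"G/Gmax at gamma_ref: {modulus_reduction(gamma_ref, gamma_ref):.2f}")
print(f"stress at +gamma_a:  {tau_a / 1e3:.1f} kPa")
print(f"stress at -gamma_a after unloading: {tau_back / 1e3:.1f} kPa")
```

The unloading branch reaches the opposite tip of the backbone, so that a symmetric cycle closes into a hysteresis loop whose area gives the damping. This simplicity (two parameters per layer) is what makes this family of models easy to calibrate compared with plasticity-based laws.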

The levels of software-to-software variability obtained and the misfit might be due to the accuracy of the soil characterization. A large variety of tests were available, each of which has different advantages and limitations, which are mainly related to the level of strain tested. While efforts can be made to reduce errors in the interpretation, testing equipment, and sampling disturbance, uncertainties related to the inherent variability of soils resulting from natural geological formations and inherent or induced anisotropy should not be neglected.

3.5 Validation Site and Data Acquisition at the Argostoli Test Site

It is well known that the nonlinear behavior of soil can drastically change site responses in cases of strong ground motion. It is therefore necessary to validate the nonlinear evaluation practices through comparison of simulation results with real data. To this end, accelerometers were installed along a vertical array (and at a rock reference site) within a small sedimentary basin near the town of Argostoli, on Kefalonia Island (Greece), one of the most seismically active areas of Europe. This vertical array represented a long-term investment toward the constitution of a new database for possible validation of nonlinear practice in a 3D case. This site was chosen on the basis of feedback from previous studies, and especially the NERA European research program. The vertical array was installed in July 2015, and consisted of four downhole, three-component sensors, which were complemented by two surface sensors, one at the mouth of the borehole, and the other a few hundred meters away, on a rock outcrop. Once operational, this array recorded hundreds of high-quality accelerograms, with peak ground acceleration sometimes up to 0.15 g (local Mw 3–4 events, and a distant Mw 6.5 event). The data recorded from July 2015 to December 2017 are available through various FTP sites, as indicated by Theodoulidis et al. (2018) and Cushing et al. (2016).

This site was selected following a temporary seismological experiment that was accompanied by various geophysical measurements performed in 2011–2012. The available geotechnical and geophysical information was significantly improved within the SINAPS@ framework, with the first geological and geophysical survey conducted in September 2013, and with the borehole investigations (i.e., Vs, geotechnical measurements). The former led to a new geological map and a 2D cross-section, estimation of Vs profiles (obtained with surface-wave-based noninvasive methods), and a 3D overview of the basin through H/V measurements. In addition, considering the occurrence of the January 26, 2014, Mw 6.2 earthquake less than 20 km from the test sites, it was decided to launch a post-seismic survey with two main objectives: (1) to install temporary accelerometers prior to installation of the permanent array, to record possible strong aftershocks; and (2) to install a dense array of sensors to set up a database to study short-scale spatial variability. Different sensor types were used (accelerometers, broadband velocimeters, and rotational sensors), and these were deployed for different soil conditions. Even though this kind of database did not address the nonlinearity issue, it was essential for the SSI studies within WP2. The temporary accelerometric network operated from February 3, 2014 (a few hours after the second strong earthquake, with Mw 6.0) until July 2015, and it recorded several thousand events. A first analysis of this database allowed the computation of standard spectral ratios between the rock site and several sites within the basin, which confirmed the location of the permanent vertical array. The dense array was composed of 21 broadband seismometers that were arranged along the five branches of a star, with a maximum radius of 180 m. It was in operation over a 5-week period. A database comprising more than 1800 well-recorded earthquakes is now available. These two outstanding databases have been extensively used within the whole SINAPS@ program (Svay 2017; Svay et al. 2017; Sbaa et al. 2017).
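The standard spectral ratio mentioned above is the ratio of the Fourier amplitude spectrum recorded at a basin site to that recorded simultaneously at the rock reference site. The sketch below is a minimal illustration with synthetic signals, not the processing chain used for the Argostoli data: the signals, the sampling step, and the amplification factor are hypothetical, and a plain discrete Fourier transform is written out so that the example needs only the standard library.

```python
import cmath
import math

def amplitude_spectrum(signal):
    """Amplitudes of the one-sided discrete Fourier transform (naive O(N^2))."""
    n = len(signal)
    return [
        abs(sum(signal[k] * cmath.exp(-2j * math.pi * f * k / n) for k in range(n)))
        for f in range(n // 2 + 1)
    ]

def standard_spectral_ratio(site, rock, dt, floor=1e-12):
    """Return (frequencies in Hz, site/rock amplitude ratio)."""
    if len(site) != len(rock):
        raise ValueError("site and rock records must have the same length")
    a_site = amplitude_spectrum(site)
    a_rock = amplitude_spectrum(rock)
    n = len(site)
    freqs = [f / (n * dt) for f in range(len(a_site))]
    # The floor avoids division by zero where the rock spectrum is empty.
    ratio = [s / max(r, floor) for s, r in zip(a_site, a_rock)]
    return freqs, ratio

# Synthetic records: the basin site amplifies the rock motion by a factor of 3.
n, dt = 64, 0.01
rock = [math.sin(2.0 * math.pi * 5 * k / n) for k in range(n)]
site = [3.0 * v for v in rock]

freqs, ratio = standard_spectral_ratio(site, rock, dt)
print(f"ratio at {freqs[5]:.4f} Hz: {ratio[5]:.3f}")
```

In practice the spectra are usually smoothed before the ratio is taken, and the ratio is only meaningful in the frequency band where the reference record has a sufficient signal-to-noise ratio; both steps are omitted here.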

Finally, this study provided the opportunity to complement standard translational measurements with rotational measurements, to allow ‘six degrees of freedom’ recordings. The rotational measurements were performed at different sites, which allowed the influence of soil conditions on rotational motion to be studied. The rotational measurements led to the recording of a total of 1373 events for three successive sites (Sbaa et al. 2017).

3.6 Numerical Developments Performed in WP2: Use of the Argostoli Case Study for Verification and Validation

The objective of this study was to develop high-performance numerical tools for earthquake scenarios. This relates to the possibility of building a 3D, regional-scale, nonlinear and probabilistic model. Simulation of realistic ground-shaking scenarios requires reliable estimation of several different parameters related to the source mechanism (e.g., extended fault or localized double-couple seismic moment), the geological configuration, and the mechanical properties of the soil layers and the crustal rocks. Due to the enormous extent of these regional-scale scenarios, the degree of uncertainty associated with the whole earthquake process (from fault to site) is extremely large, and may increase further at higher frequencies and when structural models are included. Another drawback lies in the computational effort required to routinely solve wave propagation on such huge domains and over such a large number of degrees of freedom. It therefore appeared necessary to build up a multi-tool numerical platform to construct and calibrate the seismological model. To this end, three main issues had to be tackled: (1) to mesh the domain of interest, its geological conformation (bedrock to sediment geological surfaces), the surface topography, and the bathymetry (if present); (2) to describe the natural heterogeneity of the Earth's crust and the soil properties at different scales (i.e., regional geology, local basin-type structures, heterogeneity of granular materials); and (3) to couple the wave-propagation problem with the structural dynamic problem. In the present work, the definition of regional scale is not strictly equivalent to that of IAEA (2010), which indicates a radius of 300 km to perform the regional-scale SHA site investigation (data, construction of geological and seismological models …). Here the regional scale is related to the numerical seismic scenario and site-specific SSI computation constraints: the numerical model includes the extended fault, for which the complex rupture process is assessed, and the geological-geophysical model from the seismic source up to the site, through which the seismic waves propagate. The regional scale in this case means that the numerical model is not restricted to the site itself, and corresponds to several tens of kilometers in the three directions (dimensions driven by the size of the seismic source to be modeled and its location with respect to the target site).