Abstract

I describe the long way from the first theoretical ideas about multiple particle production up to the situation in which constructing a statistical model of strong interactions seemed natural. I begin in 1936 and argue that the statistical method grew out of a large network of observations and theoretical ideas. I shall pick out only a few primary lines, chosen for their common end point: the statistical bootstrap model of 1964/65.

Keynote talk at the “Hot Hadronic Matter: theory and experiment” workshop, Divonne, 1994. Published in the Proceedings, NATO-ASI 346, pp. 13–46 (Plenum Press, New York, 1995). With kind permission of © Plenum Press, New York, 1995.

It is the nature of a hypothesis when once a man has conceived it, that it assimilates everything to itself, as proper nourishment; and, from the first moment of your begetting it, it generally grows the stronger by everything you see, hear, read or understand. This is of great use. [1]

1 Introduction

The Statistical Bootstrap Model (SBM) is a statistical model of strong interactions based on the observation that hadrons not only form bound and resonance states but also decay statistically into such states if they are heavy enough. This leads to the concept of a possibly unlimited sequence of heavier and heavier bound and resonance states, each being a possible constituent of a still heavier resonance, while at the same time being itself composed of lighter ones. We call these states clusters (in the older literature heavier clusters are called fireballs; the pion is the lightest ‘one-particle-cluster’) and label them by their masses. Let ρ(m)dm be the number of such states in the mass interval [m, m + dm]; we call ρ(m) the ‘SBM mass spectrum’. Bound and resonance states are due to strong interactions; if introduced as new, independent particles in a statistical model, they also simulate the strong interactions to which they owe their existence. To simulate all attractive strong interactions we need all of them (including the not yet discovered ones), that is, we need the complete mass spectrum ρ(m). To simulate repulsive forces we may use proper cluster volumes à la van der Waals. In order to obtain the full mass spectrum, we require that the above picture, namely that a cluster is composed of clusters, be self-consistent. This leads to the ‘bootstrap condition and/or bootstrap equation’ for the mass spectrum ρ(m). The bootstrap equation (BE) is an integral equation embracing all hadrons of all masses. It can be solved analytically with the result that the mass spectrum ρ(m) has to grow exponentially. Consequently, any thermodynamics employing this mass spectrum has a singular temperature \(T_{0}\) generated by the asymptotic mass spectrum \(\rho (m) \sim \exp (m/T_{0})\). Today this singular temperature is interpreted as the temperature where (for baryon chemical potential zero) the phase transition hadron gas \(\longleftrightarrow\) quark-gluon plasma occurs.
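Why an exponential mass spectrum forces a singular temperature can be seen from the mass integral that enters the partition function. The sketch below is a simplification under stated assumptions: it keeps only the exponentials, \(\rho(m) \sim e^{m/T_{0}}\) and the Boltzmann factor \(e^{-m/T}\), and drops all power-law prefactors. The values T0 = 0.16 GeV and the lower limit M_MIN = 0.14 GeV are illustrative choices of ours, not numbers from this section; the integral up to a cutoff m_max is done in closed form.

```python
import math

T0 = 0.16     # illustrative singular temperature, GeV (assumed value)
M_MIN = 0.14  # illustrative lower mass limit, GeV (roughly the pion mass)

def mass_integral(T, m_max):
    """Closed-form integral of exp(m/T0) * exp(-m/T) dm from M_MIN to m_max."""
    k = 1.0 / T0 - 1.0 / T          # net exponent per unit mass
    if abs(k) < 1e-12:              # T == T0: the integrand is constant
        return m_max - M_MIN
    return (math.exp(k * m_max) - math.exp(k * M_MIN)) / k

for T in (0.15, 0.17):
    values = [mass_integral(T, m_max) for m_max in (5.0, 10.0, 20.0)]
    print(f"T = {T:.2f} GeV:", ", ".join(f"{v:.4g}" for v in values))
```

Below \(T_{0}\) the result settles to a finite limit as the cutoff grows; above \(T_{0}\) it grows exponentially with the cutoff, so no thermodynamics exists beyond \(T_{0}\) in this simplified picture.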

The main power of the SBM derives from the postulate that the strong interaction—as far as needed in statistical thermodynamical models—is completely simulated by the presence of clusters with an exponential mass spectrum and with mass-proportional proper volumes. This postulate implies that in SBM the strongly interacting hadron gas is formally replaced by a non-interacting (i.e., ideal) infinite-component cluster gas with van der Waals volume corrections and exponential mass spectrum, which can be handled analytically without recourse to perturbative methods.

The story of how this model was first conceived in the language of the grand canonical ensemble, reached maturity in the language of the microcanonical ensemble (i.e., phase space), and was finally equipped with finite particle volumes in order to become applicable to heavy-ion collisions and to the question of the phase transition is presented in Chapter 25 [2].

Here I describe the long way from the first theoretical ideas about multiple particle production up to the situation in which constructing SBM seemed natural. The story starts in 1936, and in my record I omit everything that did not lie on or near the way leading to SBM. What I wish to show is that SBM did not suddenly appear in 1965 as a deus ex machina, but was rather the logical consequence of a history of almost 30 years. Thus, from a large network of observations and theoretical ideas, I shall pick up only a few lines, chosen for their common end point: SBM. A complete and impartial picture of this history up to 1972 is presented by E.L. Feinberg in his exhaustive and instructive report [3], which is an indispensable complement to the present biased lecture.

I will try to be as non-technical as possible. Formulae are meant merely as illustrations (often oversimplified); for hard information the reader should consult the quoted literature. Units are \(\hslash = c = k\) (Boltzmann) = 1; energy in MeV or GeV.

2 From 1936 to 1965

We list here experimental facts and theoretical concepts which were important and instrumental to the construction of SBM.

2.1 Fireballs

How did we come to believe that ‘fireballs’—the things called ‘clusters’ in SBM—exist?

2.1.1 Multiple Production: Heisenberg (1936)

Before Yukawa’s paper postulating the pion [4], one tended to believe that the particles produced in cosmic ray events were electron–positron pairs. The only field theory then known, quantum electrodynamics (as yet without a consistent renormalization scheme), suggested that events with many secondaries should have vanishingly small cross-sections [proportional to \((e^{2})^{n}\)]. This led many theoreticians to the interpretation that such events must be the result of many interactions with different nucleons in the same heavy nucleus, each single interaction producing just one pair, a point of view [5–7] persisting even after the advent of meson theory and in spite of growing experimental evidence in favour of multiple production. Heisenberg—still unaware of Yukawa’s paper—was the first to claim that, in a single elementary interaction, many secondaries might be produced [8], which at that time was a heretical idea—the pion was discovered 11 years later! Heisenberg followed this idea through many years (until \(\sim \) 1955) and devised different theoretical approaches to it, all invoking strong non-linearities and/or diverging field theories. The final, irrevocable decision between his views and his opponents’ only came with the first hydrogen bubble chamber pictures: Heisenberg’s revolutionary idea had been right.Footnote 1 We summarize this line of thought in ‘Lesson 1’:

Lesson One (L1).

In a single elementary hadron–hadron collision, many secondaries can be produced.

Today this is so obvious that calling it a ‘lesson’ seems ridiculous, but seen in a historical perspective, it challenged a strong prejudice.

2.1.2 Dulles–Walker Variables (1954)

Assume a source of particles (a ‘fireball’) moving with velocity β [Lorentz factor \(\gamma = (1 -\beta ^{2})^{-1/2}\)] as seen from the lab and assume further that this source emits particles with velocities \(\beta _{i}^{{\prime}}\) isotropically in its own rest frame. We put the z-axis in the direction of motion and call \(\theta _{i}\) the polar angle under which particle i is emitted. Quantities in the fireball’s rest frame are primed, those in the lab frame are not. In any book on relativistic kinematics, one finds the formula for the angle transformation:

\[\tan \theta _{i} = \frac{\sin \theta _{i}^{{\prime}}}{\gamma \left (\cos \theta _{i}^{{\prime}} +\beta /\beta _{i}^{{\prime}}\right )} \approx \frac{1}{\gamma }\tan \frac{\theta _{i}^{{\prime}}}{2}, \tag{17.1}\]

where the last approximation is true when β and \(\beta _{i}^{{\prime}}\) are both near 1, which will be assumed from now on.

The fraction F of particles emitted inside a cone of polar angle \(\theta ^{{\prime}}\) is, from elementary geometry, in the fireball’s frame:

\[F = \frac{1 -\cos \theta ^{{\prime}}}{2} =\sin ^{2}\frac{\theta ^{{\prime}}}{2}, \tag{17.2}\]

while in the lab the same particles—and the same fraction F—will be found inside the cone of angle [see Eq. (17.1)]

\[\tan \theta = \frac{1}{\gamma }\tan \frac{\theta ^{{\prime}}}{2}, \tag{17.3}\]

so that in the assumed approximation

\[\frac{F}{1 - F} =\tan ^{2}\frac{\theta ^{{\prime}}}{2} =\gamma ^{2}\tan ^{2}\theta . \tag{17.4}\]

Hence

\[\log \frac{F}{1 - F} = 2\log \tan \theta + 2\log \gamma . \tag{17.5}\]

We note in passing that, with \(F = 1/2\), we find the angle \(\theta _{1/2}\) of the cone into which half of the particles fall:

\[\tan \theta _{1/2} = \frac{1}{\gamma }. \tag{17.6}\]

Now define for each particle i the fraction \(F_{i}\) of particles falling inside the cone of polar angle \(\theta _{i}\) (i.e., those having an angle smaller than or equal to that of particle i) and plot the points \(y_{i} =\log F_{i}/(1 - F_{i})\) versus \(x_{i} =\log \tan \theta _{i}\). These points will—under the supposed conditions \(\beta \approx \beta _{i}^{{\prime}} \approx 1\) and isotropy—scatter about the straight line given by Eq. (17.5) with slope 2 and intercepts

\[x = -\log \gamma \quad (\text{at } y = 0),\qquad y = 2\log \gamma \quad (\text{at } x = 0), \tag{17.7}\]

as depicted in Fig. 17.1.

The discovery of these variables by Dulles and Walker [9] proved of great importance for the analysis of cosmic ray events: if, with the points plotted according to the above rule, anything like a straight line emerged, an isotropically emitting centre had to be conjectured, and its Lorentz factor γ could be read off. Although things were not that simple, the method revealed a lot of information, as we shall soon see.
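The Dulles–Walker recipe can be checked numerically. The sketch below is our own illustration, not a reconstruction of any historical analysis: it draws massless secondaries (\(\beta _{i}^{{\prime}} = 1\)) isotropically in the rest frame of a single source with an assumed γ = 50, boosts them exactly to the lab, builds the points \((x_{i}, y_{i})\), and recovers γ twice, once from the intercept of a least-squares line \(y = 2x + 2\log \gamma\) (the straight line of Eq. (17.5)) and once from the half-angle relation \(\tan \theta _{1/2} = 1/\gamma\). The plotting position \(F_{i} = (i - 1/2)/n\) and all function names are choices of ours.

```python
import math
import random

def lab_angle(theta_rest, gamma):
    """Exact lab polar angle of a massless particle emitted at theta_rest in the rest
    frame of a source moving along +z with Lorentz factor gamma (Eq. 17.1, unapproximated)."""
    beta = math.sqrt(1.0 - 1.0 / gamma**2)
    p_perp = math.sin(theta_rest)                  # unchanged by a boost along z
    p_z = gamma * (math.cos(theta_rest) + beta)    # E' = |p'| = 1 for a massless particle
    return math.atan2(p_perp, p_z)

def dulles_walker_points(lab_angles):
    """Points (log tan theta_i, log[F_i/(1 - F_i)]) with F_i = (i - 1/2)/n (assumed convention)."""
    n = len(lab_angles)
    pts = []
    for i, th in enumerate(sorted(lab_angles), start=1):
        if not 0.0 < th < math.pi / 2:             # log tan undefined for backward tracks
            continue
        F = (i - 0.5) / n
        pts.append((math.log(math.tan(th)), math.log(F / (1.0 - F))))
    return pts

def fit_line(pts):
    """Ordinary least-squares slope and intercept of y against x."""
    n = len(pts)
    mx = sum(x for x, _ in pts) / n
    my = sum(y for _, y in pts) / n
    sxx = sum((x - mx) ** 2 for x, _ in pts)
    sxy = sum((x - mx) * (y - my) for x, y in pts)
    slope = sxy / sxx
    return slope, my - slope * mx

rng = random.Random(12345)
gamma_true = 50.0
# isotropic emission in the rest frame: cos(theta') uniform in (-1, 1]
rest = [math.acos(1.0 - 2.0 * rng.random()) for _ in range(5000)]
lab = [lab_angle(t, gamma_true) for t in rest]

slope, intercept = fit_line(dulles_walker_points(lab))
gamma_from_fit = math.exp(intercept / 2.0)              # intercept = 2 log gamma
theta_half = sorted(lab)[len(lab) // 2]
gamma_from_median = 1.0 / math.tan(theta_half)          # tan(theta_1/2) = 1/gamma
print(f"slope = {slope:.3f}, gamma (fit) = {gamma_from_fit:.1f}, gamma (median) = {gamma_from_median:.1f}")
```

Lowering gamma_true to a few units makes the backward (high-F) points bend away from the line, since the approximation \(\beta /\beta _{i}^{{\prime}} \approx 1\) then fails for them.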

2.1.3 ‘Constant’ Mean Transverse Momentum (1956)

The invariance of the transverse momenta (of the produced particles) under a Lorentz boost in the z-direction made them interesting from the beginning. The amazement was therefore great when it gradually turned out that their average \(\langle p_{\perp }\rangle\) seemed to be practically independent of the primary energy of the collision from which they emerged. This was reported in so many papers over so many years that I cannot quote all of them. Probably J. Nishimura was the first to point it out [10]. By 1958 the result was rather well confirmed [11], and it remained so until the ‘large transverse momenta’ were discovered in 1973 [12], which—important as they were—corrected this result only slightly. We write down ‘Lesson 2’:

Lesson Two (L2).

Secondaries emerging from high-energy hadron collisions have mean transverse momenta of order \(\langle p_{\perp }\rangle \approx \) 500 MeV/c, rather independently of the collision energy.

2.1.4 The Two-Centre Model (1958)

The most prominent qualitative feature of the particle tracks in emulsions and/or cloud chambers was that they were arranged in two cones: a wide one and, inside it, a narrow one. No measurements were needed to see this and to guess a simple mechanism that would produce it: two ‘centres’, one moving slowly and another moving fastFootnote 2 along the collision line, both emitting particles isotropically and with rather small, energy-independent momenta [Lesson 2] in their respective rest frames. I do not know whether one could say that a particular physicist had this idea first (it might have been Takagi [13], but I am not sure): it must have appeared obvious to anyone who saw pictures of these events. It was another thing to analyse such pictures quantitatively. The pioneers were the Cracow–Czech collaboration [14] and Cocconi [11]. They exploited the powers of the Dulles–Walker representation.

The story went like this: one applied the Dulles–Walker plot to the available events [11, 14] and, instead of finding the points representing the tracks scattering about a straight line—as expected for a single ‘fireball’—one found something very different. The result is shown in Figs. 17.2 and 17.3, which I copy from Cocconi’s paper [11]. The spirit of Cocconi’s paper is so well concentrated in a few original passages that I repeat them here. Cocconi says:

Figure: Some of the events of Fig. 17.2, with forward and backward (CM) particles plotted separately.

It is evident from an examination of Fig. 2 [our Fig. 17.2] that in most cases the relativistic secondaries are separated into two groups as if they were emitted, in the CM centre of the collision, not by a single centre but by two bodies, as described in Section II(d).Footnote 3 The evidence is so striking that we are going to analyse these events in a slightly different manner, more adjusted to the model.

Instead of considering all the relativistic particles produced in the collision together, let us divide them into two groups: the forward group, \(b_{1}\), and the backward group, \(b_{2}\) (the narrow and wide cones).

Let \(n_{1}\) and \(n_{2}\) be the number of particles falling in each group and let us analyse them in terms of \(\log \tan \theta\) versus \(\log [F_{1}/(1 - F_{1})]\) and versus \(\log [F_{2}/(1 - F_{2})]\). The results are plotted in Fig. 3 [our Fig. 17.3].

Figures 17.2 and 17.3 and Cocconi’s remarks need no further comment. It should be noted, however, that he is aware of the possibility of other interpretations, in which not individual ‘fireballs’, but a two-jet structure produces much the same effect.

The two-centre model was popular for a long time, as witnessed by the review paper written by Gierula [15] in 1963, 5 years later, and based on more than 100 events with \(E_{\mathrm{lab}} \gtrsim 10^{3}\) GeV. If I remember correctly, the model did not always work—sometimes three or more fireballs had to be invoked—but on the whole it was rather successful. That it seems never to have been disproved is perhaps due to the shift of interest towards other questions arising from work with accelerators, where single events were analysed mostly in the hope of discovering new particles, not to prove or disprove a two-centre model. The famous ‘flat rapidity plateau’ was, of course, no argument against a two- (or few-) centre model, as it arose from averaging over the impact parameter in many collisions contributing to the measured inclusive distributions. We thus draw ‘Lesson 3’:

Lesson Three (L3).

Secondaries produced in elementary hadron collisions seem to be emitted from few (≈ 2) ‘fireballs’ rather isotropically with small momenta in the fireball’s rest frame.

2.1.5 Conclusion: Fireballs with Limited \(\langle p\rangle\) Exist

We conclude that ‘fireballs’, decaying with limited momenta, do exist. In other words, lumps of highly excited hadronic matter keep together for a very short time before they decay in a very specific and—on this level—not yet understood way.

2.2 Statistical and Thermodynamical Methods

Having collected, in the previous section, the arguments in favour of the existence of ‘fireballs’, we now turn to their description. The methods and the models used eventually for this description were developed long before the existence of their final objects was established. In fact, the story goes back to two early theoretical ideas:

- the compound nucleus of Bohr in 1936;
- the incorporation of interaction in statistical thermodynamics via scattering phase shifts by Beth and Uhlenbeck in 1937.

2.2.1 Bohr’s Compound Nucleus (1936)

Bohr [16] proposed the following picture for a certain class of nuclear reactions: if a heavy nucleus is hit by a nuclear particle, then the strong interaction among the constituents and with the projectile can often lead to a complete dissipation of the available energy, so that no single nucleon gets enough of it to escape at once. This excited ‘compound nucleus’ will then live a rather long time before it decays by emitting nucleons which accidentally obtained sufficient kinetic energy to overcome the binding force. Of course, this picture cries out for a statistical description.

2.2.2 The Weisskopf Evaporation Model (1937)

We did not have to wait long for it. Weisskopf [17] writes, in his famous paper on nuclear evaporation:

Qualitative statistical conclusions about the energy exchange between the nuclear constituents in the compound state have led to simple explanations of many characteristic features of nuclear reactions. In particular the use of thermodynamical analogies has proved very convenient for describing the general trend of nuclear processes. The energy stored in the compound nucleus can in fact be compared with the heat energy of a solid body or a liquid, and, as first emphasized by Frenkel [18],Footnote 4 the subsequent expulsion of particles is analogous to an evaporation process.

Weisskopf is cautious. He does not claim right away that thermodynamics is applicable to nuclei; he rather derives first from elementary quantum mechanics a formula for the emission of a neutron by the excited nucleus A, leaving another excited nucleus B behind. For this he uses the principle of detailed balance by considering the inverse reaction \(B + n \rightarrow A\), of which the cross-section is supposed to be known. From this, the emission probability can be calculated; it is a very simple expression containing the above cross-section, the level densities \(\omega _{A}(E_{A})\) and \(\omega _{B}(E_{B})\) of nuclei A and B at their respective energies, and the phase space available for a neutron of given kinetic energy ε. Then he introduces quite formally an ‘entropy’, viz.,

\[S(E) =\ln \omega (E), \tag{17.8}\]

and a ‘temperature’, viz.,

\[\frac{1}{T(E)} = \frac{\mathrm{d}S(E)}{\mathrm{d}E}. \tag{17.9}\]

In these variables, the derived formula for the emission probability assumes the usual form of an evaporation probability with a Boltzmann spectrum:

\[W(\varepsilon )\,\mathrm{d}\varepsilon \propto \sigma (\varepsilon )\,\varepsilon \,\mathrm{e}^{-\varepsilon /T}\,\mathrm{d}\varepsilon , \tag{17.10}\]

where σ(ε) is the cross-section of the inverse reaction and T the temperature of the residual nucleus B.

The rest of the paper discusses when the formula is valid and what corrections are necessary. What interests us here is that this is (to my knowledge) the first time that it was shown quantitatively that thermodynamics might be applied to such a tiny system as a nucleus. The reason is the enormous level density of heavy nuclei at high excitation energy. Note also that the formula was derived for the emission of a single neutron with only a few degrees of freedom (phase space). We conclude with ‘Lesson 4’:

Lesson Four (L4).

Thermodynamics and/or statistics might be (cautiously!) applied to very small systems, provided these have a very large level density (whatever that means).

When Weisskopf wrote his paper, not much was known about the level densities of nuclei, and he proposed to learn about them from the observed emission spectra, taking his formulae for granted.
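Weisskopf's step from a level density to a temperature can be sketched numerically. For illustration only, we assume the Fermi-gas form \(\omega (E) \propto \exp (2\sqrt{aE})\) for the residual nucleus, with a hypothetical a = 20 MeV⁻¹, a maximum excitation energy of 40 MeV, and a constant inverse cross-section σ. The code obtains T from \(1/T = \mathrm{d}S/\mathrm{d}E\) with \(S = \ln \omega\), then compares the mean neutron kinetic energy of the spectrum \(\varepsilon \,\omega _{B}(E_{\max } - \varepsilon )\) with that of the Boltzmann form \(\varepsilon \,\mathrm{e}^{-\varepsilon /T}\), whose mean is 2T.

```python
import math

A_LEVEL = 20.0   # hypothetical level-density parameter a, in 1/MeV
E_MAX = 40.0     # hypothetical maximum excitation energy of the residual nucleus B, MeV

def entropy(E):
    """S(E) = ln omega(E) for the assumed Fermi-gas form omega(E) ~ exp(2 sqrt(a E))."""
    return 2.0 * math.sqrt(A_LEVEL * E)

def temperature(E, h=1e-6):
    """T(E) from 1/T = dS/dE, via a central finite difference."""
    dS_dE = (entropy(E + h) - entropy(E - h)) / (2.0 * h)
    return 1.0 / dS_dE

def mean_energy(weight, e_max, steps=100_000):
    """Mean of eps under an unnormalized spectrum weight(eps) on [0, e_max], midpoint rule."""
    d = e_max / steps
    num = den = 0.0
    for k in range(steps):
        eps = (k + 0.5) * d
        w = weight(eps)
        num += eps * w
        den += w
    return num / den

T = temperature(E_MAX)
# Constant inverse cross-section: W(eps) ~ eps * omega_B(E_MAX - eps), taken as a ratio to avoid overflow
exact = mean_energy(lambda e: e * math.exp(entropy(E_MAX - e) - entropy(E_MAX)), E_MAX)
# Boltzmann form eps * exp(-eps/T) with the same constant cross-section
boltzmann = mean_energy(lambda e: e * math.exp(-e / T), E_MAX)
print(f"T = {T:.4f} MeV, <eps> exact = {exact:.3f} MeV, Boltzmann = {boltzmann:.3f} MeV, 2T = {2 * T:.3f} MeV")
```

The exact mean comes out a few per cent below 2T because S(E) is concave; the Boltzmann form is the first-order approximation, valid when the emitted energy is small compared with the excitation energy.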

2.2.3 Koppe’s Attempt and the Fermi Statistical Model (1948/1950)

Although traditionally all credit for the invention of a statistical model for particle production goes to Fermi (see below), it was actually H. Koppe who proposed the essence of such a model, 2 years earlier. He wrote [19]:

In a recent paper [20],Footnote 5 a simple method has been given for the calculation of the yield of mesons produced by the interaction of light nuclei. It was based on the assumption of strong interaction between mesons and nucleons which should make it possible to treat a nucleus as a ‘black body’ with regard to meson radiation and to calculate the probability for emission of a meson by statistical methods.

At that time, available energies were not high (Berkeley: α-particles of \(\sim \) 380 MeV) and consequently the temperatures remained small (\(\sim \) 15 MeV), well below the pion rest mass. Yet the model did not work too badly. Note that (for him) the high level density justifying the treatment was located not in the meson field but in the interacting nuclei (‘black body’).

Fermi [21] then takes the important step of considering the pion field itself as the thermal (or better, statistical) system without the need for a background ‘black body’ à la Koppe. Thus he claims that, e.g., a proton–proton collision could be treated statistically. He writes [21]:

When two nucleons collide with very great energy in their CM system, this energy will be suddenly released in a small volume surrounding the two nucleons. We may think pictorially of the event as of a collision in which the nucleons with their surrounding retinue of pions hit against each other so that all the portion of space occupied by the nucleons and by their surrounding pion field will be suddenly loaded with a very great amount of energy. Since the interactions of the pion field are strong, we may expect that rapidly this energy will be distributed among the various degrees of freedom present in this volume according to statistical laws. One can then compute statistically the probability that in this volume a certain number of pions will be created with a given energy distribution. It is then assumed that the concentration of energy will rapidly dissolve and that the particles into which the energy has been converted will fly out in all directions.

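After some further discussion he writes down his basic formula for the production of n pions (in modern notation):

\[w_{n}(E) \propto \left [\frac{V_{0}}{(2\pi )^{3}}\right ]^{n-1}\int \delta \Big(E -\sum _{i=1}^{n}\sqrt{p_{i}^{2} + m^{2}}\Big)\,\delta ^{3}\Big(\sum _{i=1}^{n}\mathbf{p}_{i}\Big)\prod _{i=1}^{n}\mathrm{d}^{3}p_{i}, \tag{17.11}\]

where \(V_{0}\) is the ‘interaction volume’ [of order \((4\pi /3)m_{\pi }^{-3}\), Lorentz-contracted or not, according to taste], E the total centre-of-mass (CM) energy, and m the pion mass (or that of another species if considered).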

The rest of his paper discusses applications at low, medium, and very high energies; in the latter case a thermodynamic formulation is proposed, where the temperature is proportional to \(E^{1/4}\)—that is, a Stefan–Boltzmann gas of (massless) pions is assumed. The way to this is already prepared when he discusses medium energies, where a good number of pions are produced: in order to use the only existing analytical expressions for n-body momentum space (namely for m = 0 and/or for \(m \rightarrow \infty \)), he treats pions as massless and nucleons as non-relativistic. At this time (1950), these assumptions were reasonable. The discovery of ‘limited transverse momenta’ [10], which of course would invalidate them, was to come only 6 years later. He also mentions angular momentum conservation, but only to argue that it is unimportant; he soon comes back to this question in an attempt to explain the observed anisotropy in CM [22], which failed. We pass over these details.
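Fermi's high-energy limit can be made concrete under stated assumptions: a massless pion gas with three charge states in the uncontracted volume \(V_{0} = (4\pi /3)m_{\pi }^{-3}\), with E the total CM energy. The Stefan–Boltzmann law \(E = g(\pi ^{2}/30)V_{0}T^{4}\) then gives \(T \propto E^{1/4}\), and the standard massless-boson density gives \(\langle n\rangle = g\zeta (3)V_{0}T^{3}/\pi ^{2} \propto E^{3/4}\). The printed numbers depend entirely on these choices and are not meant to reproduce any figure quoted in the text.

```python
import math

M_PI = 0.1396                            # charged-pion mass, GeV
V0 = (4.0 * math.pi / 3.0) / M_PI ** 3   # interaction volume, GeV^-3 (not Lorentz-contracted: an assumption)
G = 3                                    # pion charge states
ZETA3 = 1.2020569031595942               # Riemann zeta(3)

def temperature(E):
    """Invert E = G * (pi^2 / 30) * V0 * T^4 (massless Bose gas) for T, in GeV."""
    return (30.0 * E / (G * math.pi ** 2 * V0)) ** 0.25

def mean_multiplicity(E):
    """<n> = G * zeta(3) * V0 * T^3 / pi^2 for massless bosons."""
    T = temperature(E)
    return G * ZETA3 * V0 * T ** 3 / math.pi ** 2

for E in (1.0, 10.0, 100.0):   # total CM energy, GeV
    print(f"E = {E:6.1f} GeV: T = {temperature(E):.3f} GeV, <n> = {mean_multiplicity(E):.1f}")
```

Since \(E/\langle n\rangle = \pi ^{4}T/[30\zeta (3)] \approx 2.7\,T\), the mean energy per pion grows like \(E^{1/4}\) in this picture, in contrast to the nearly constant \(\langle p_{\perp }\rangle\) of Lesson 2.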

What is important for us is that Fermi actually tries to describe the disintegration of what we called above ‘fireballs’—8 years before they were discovered experimentally [11, 14].

While the model fails quantitatively (Heisenberg [23] quotes a measured event with an estimated primary energy of 40 GeV, where about 27 pions were actually produced, in contrast to 2.7 predicted by Fermi in the thermodynamic version), it is nevertheless the starting point for the development leading to the SBM.

Looking back we see a line of thought that leads from the Bohr compound nucleus directly to the theoretical concept of a hadronic fireball and its statistical (thermodynamical) description.

2.2.4 Beth–Uhlenbeck, Belenkij (1937/1956)

At the beginning of this section two main theoretical ideas were said to be essential for a statistical description of fireballs. One was the Bohr compound nucleus, leading directly to the Fermi model. The second is found in a paper by Beth and Uhlenbeck [24]. The authors incorporate interaction in statistical thermodynamics quantum mechanically via scattering phase shifts. We shall only sketch the idea. Details may be found in [25, 26].

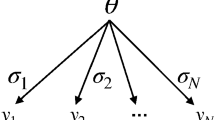

Suppose you have an ideal gas consisting of N non-interacting particles with masses \(m_{1},m_{2},\ldots,m_{N}\) at total energy E enclosed in a volume V. Let the level density of this gas be \(\sigma _{N}(E,V,m_{1},\ldots,m_{N})\). If a force acts between particles numbered 1 and 2, they may form a bound state \(m_{12}\), and (if nothing else happens) the level density of this new system becomes \(\sigma _{N-1}(E,V,m_{12},m_{3},\ldots,m_{N})\). The interaction has changed the level density, and the system with interaction would be described as a mixture of two ideal gases, with and without bound states.

Beth and Uhlenbeck extend this argument to the case where the interaction leads not to a bound state but only to scattering. Single out from our gas two particles that are to scatter on each other, and take as normalization volume a sphere of radius R centred at the point of impact of the two particles. The density of states of this two-particle subsystem will be affected by the scattering process in that the ℓth partial wave of the common wave function of our two particles will be, asymptotically:

\[\psi _{\ell}(r) \sim \frac{1}{r}\sin \Big(pr -\frac{\ell\pi }{2} +\eta _{\ell}(p)\Big), \tag{17.12}\]

where p is their relative momentum, r their distance, and \(\eta _{\ell}(p)\) the scattering phase shift. The wave function should vanish at r = R:

\[pR -\frac{\ell\pi }{2} +\eta _{\ell}(p) = n\pi ,\qquad n\ \text{integer}. \tag{17.13}\]

Thus n labels the allowed (discrete for finite R) two-body momentum states \(\{p_{0},p_{1},\ldots \}\). For a fixed \(p^{{\prime}}\), there are \(n(p^{{\prime}})\) states below \(p^{{\prime}}\), so the density of states near \(p^{{\prime}}\) is

\[\frac{\mathrm{d}n}{\mathrm{d}p}\bigg|_{p^{{\prime}}} = \frac{R}{\pi } + \frac{1}{\pi }\frac{\mathrm{d}\eta _{\ell}(p)}{\mathrm{d}p}\bigg|_{p^{{\prime}}}. \tag{17.14}\]

Without interaction, \(\eta _{\ell}(p) \equiv 0\). Hence, the interaction changes the two-particle density of states by \((1/\pi )\mathrm{d}\eta _{\ell}/\mathrm{d}p\). Of course this argument has to be repeated for all partial waves and all particle pairs with the final result that the sum over ℓ gives a contribution to the partition function containing the derivative of the scattering amplitude [25, 27]. The formal extension of this method to include all interactions is due to Bernstein et al. [28].

For the following argument of Belenkij [29], Eq. (17.14) is most illustrative. Let the two-body subsystem have a sharp resonance at relative momentum \(p^{{\ast}}\). Then the phase shift rises there by π within a short interval, so that \((1/\pi )\mathrm{d}\eta _{\ell}(p)/\mathrm{d}p \approx \delta (p - p^{{\ast}})\). Such a δ-function appearing in the density of states is equivalent to introducing an additional particle with mass \(m^{{\ast}} = m(m_{1},m_{2},p^{{\ast}})\) into the system, very much as a bound state would be introduced. The actual proof is somewhat complicated due to the switching between different sets of momenta; Belenkij does this in detail. We state ‘Lesson 5’:

Lesson Five (L5).

If in a statistical–thermodynamical system two-body bound and resonance states occur, then they should be treated as new, independent particles. Thereby a corresponding part of the interaction is taken into account.

Note that after doing so, the system is still formally an ‘ideal gas’, but now with some additional species of particles (simulating part of the interaction).
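The counting behind Eq. (17.14) and Belenkij's δ-function can be checked directly. For an s-wave (ℓ = 0) we count the allowed momenta satisfying \(pR + \eta (p) = n\pi\) in a window around a hypothetical narrow resonance, with and without the phase shift. The arctangent phase shift below is a form we chose for illustration (it rises by nearly π across the resonance), and R is varied at random so that the discreteness of the spectrum averages out.

```python
import math
import random

P_STAR, WIDTH = 1.0, 0.01   # hypothetical resonance momentum and width (arbitrary units)

def eta(p):
    """Illustrative s-wave phase shift: eta(0) = 0, rising by nearly pi around P_STAR."""
    return math.atan((p - P_STAR) / WIDTH) + math.atan(P_STAR / WIDTH)

def count_states(p_lo, p_hi, R, interacting):
    """Number of allowed momenta in (p_lo, p_hi] from p*R + eta(p) = n*pi (l = 0).

    The phase is increasing in p, so the count is a difference of floors.
    """
    def phase(p):
        return p * R + (eta(p) if interacting else 0.0)
    return math.floor(phase(p_hi) / math.pi) - math.floor(phase(p_lo) / math.pi)

rng = random.Random(7)
lo, hi = P_STAR - 10 * WIDTH, P_STAR + 10 * WIDTH
trials = 4000
extra = 0
for _ in range(trials):
    R = rng.uniform(1000.0, 2000.0)   # large normalization radius, varied to average out discreteness
    extra += count_states(lo, hi, R, True) - count_states(lo, hi, R, False)

average_extra = extra / trials
predicted = (eta(hi) - eta(lo)) / math.pi   # integral of (1/pi) d(eta)/dp over the window
print(f"extra states per window: {average_extra:.3f} (predicted {predicted:.3f})")
```

Averaged over R, the interaction adds almost exactly one state in the window (the ‘new particle’ of Lesson 5), while the free count there is of order a hundred.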

Belenkij’s motivation for his work had been the known fact that Fermi’s model gave wrong multiplicities: “This discrepancy may be as high as 20 times.” He had hoped that his new remedy [expressed by (L5)] would cure the disease of the Fermi model; it did so only partially, for reasons to become clear soon.

When we adopted Belenkij’s argument for including resonances, we did so because it was intuitively obvious that resonances should be included even when the formal derivation could not be directly invoked, as for instance in a process \(A + B \rightarrow \) resonance \(\rightarrow n\) particles, where a phase shift increasing by π is not defined. Incorporating resonances quite generally was later justified by Sertorio via the S-matrix approach to statistical bootstrap in an important paper [30].

2.2.5 The CERN Statistical Model (1958–1962)

When in 1957 the CERN PS was near completion, planning of secondary beam installations required estimates of particle production yields and momentum spectra. Bruno Ferretti, our division leader at that time, asked Frans Cerulus and me to do some calculations with the Fermi model (“just a fortnight of easy work …”, he said), not surmising that by that request he triggered a new development. In fact, we soon found out that the Fermi model, as it was, could not be used:

1. In the fireball rest frame, neither were pions ultrarelativistic nor nucleons non-relativistic; indeed Lesson 2 (limited transverse momenta) excluded this, so there were no analytic formulae available to calculate the momentum space integrals [Eq. (17.11)].
2. Interaction between the produced particles might be important; the ideal gas approximation could lead to large errors.

For the second problem Belenkij had already given the solution: include all known particles and resonances (Lesson 5). For the rest, we were confident: fireballs seemed to exist (Lesson 3 was known to us by hearsay) and their statistical description was possible in principle (Lesson 4). We earnestly hoped that an improved Fermi model would do. Since problem 2 was trivial (thanks to Belenkij), we concentrated on problem 1, the calculation of momentum space integrals. Cerulus had the idea to use the Monte Carlo method and we worked it out. At that time this was a new method, not familiar to physicists; moreover, the first CERN computer was only to come in a year or so. So we tested our new methods [31] with the help of the Institute of Applied Mathematics at Darmstadt, where an IBM computer was available (not very powerful in 1958!), and found that momentum space integrals with up to \(\sim \) 15 particles could be computed reliably in reasonable time and with a prescribable error (5–10 %).
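In the spirit of the Monte Carlo approach described above (this is not a reconstruction of the Cerulus–Hagedorn program), here is a minimal estimator for an n-particle momentum-space integral with massive particles. As a simplification, the momentum-conservation factor \(\delta ^{3}(\sum \mathbf{p}_{i})\) is omitted, leaving \(\Omega _{n}(E) = \int \prod \mathrm{d}^{3}p_{i}\,\delta (E - \sum \sqrt{p_{i}^{2} + m^{2}})\). Writing \(\mathrm{d}^{3}p = 4\pi p\varepsilon \,\mathrm{d}\varepsilon\), the kinetic energies are sampled uniformly on the simplex \(\sum (\varepsilon _{i} - m) = E - nm\), and the massless case is checked against its closed form \((4\pi )^{n}2^{n}E^{3n-1}/(3n-1)!\). The function names are ours.

```python
import math
import random

def momentum_space_volume(n, E, m, samples=100_000, seed=1):
    """Monte Carlo estimate of Omega_n(E) = int prod_i d^3p_i delta(E - sum_i sqrt(p_i^2 + m^2)).

    Simplification (assumption): the momentum-conservation factor delta^3(sum_i p_i) is omitted.
    With d^3p = 4 pi p eps d eps, kinetic energies t_i = eps_i - m are drawn uniformly on the
    simplex sum_i t_i = K = E - n m, whose measure is K^(n-1) / (n-1)!.
    """
    K = E - n * m
    if K <= 0.0:
        return 0.0                                    # not enough energy to create n particles
    rng = random.Random(seed)
    simplex_measure = K ** (n - 1) / math.factorial(n - 1)
    total = 0.0
    for _ in range(samples):
        draws = [rng.expovariate(1.0) for _ in range(n)]   # normalized exponentials are uniform on the simplex
        s = sum(draws)
        weight = 1.0
        for x in draws:
            eps = m + K * x / s                       # particle energy
            p = math.sqrt(max(eps * eps - m * m, 0.0))
            weight *= 4.0 * math.pi * p * eps
        total += weight
    return simplex_measure * total / samples

def massless_exact(n, E):
    """Closed form for m = 0 (a Dirichlet integral): (4 pi)^n 2^n E^(3n-1) / (3n-1)!."""
    return (4.0 * math.pi) ** n * 2 ** n * E ** (3 * n - 1) / math.factorial(3 * n - 1)

print(momentum_space_volume(4, 1.0, 0.0), massless_exact(4, 1.0))
print(momentum_space_volume(4, 2.0, 0.14))   # hypothetical: four pions (m = 0.14 GeV) at E = 2 GeV
```

The statistical error falls like the inverse square root of the number of samples; the relative variance of the weight grows with n, so larger particle numbers need more samples for the same accuracy.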

I then took it over to write a program (my first!) for the expected CERN computer, a Ferranti Mercury. It was an adventure: I had to learn to program a non-existing machine, still under development, with no possibility of checking written parts of the program. Everything was to be expressed in machine code—a simple addition required four lines of code and all store addresses were absolute. One had to keep account of where each number (including intermediary results) was stored—and there were thousands of them (momentum spectra of some 15 species of particles).

After half a year I had finished the program (so I thought) and went to Saclay in France to test it on the first delivered Mercury machine. It failed beyond all expectation. Correcting was even more tricky than writing the program (which consisted of thousands of lines of numbers—no letters, no symbols; find the error!). It even required manual skill: input and output were via punched paper tape, and one had to find the erroneous part (reading the tape by eye had to be learned, too), cut it out, and replace it carefully by the corrected piece, gluing everything together properly so that the teletape reader would neither refuse it nor tear it to pieces.

In short, it was a mess, but finally it came to work, and in hundreds of hours we produced kilometres of tape with our precious results: the first accurate evaluations of the Fermi model including some interaction (all known particles plus some resonances) at several primary energies from 2 to 30 GeV (lab) and for pp and πp collisions. Cerulus [32] had used a very elegant group-theoretical method to solve the problem of charge distribution, and then employed the same method to implement angular momentum conservation in phase space [33], in the hope of reproducing the known anisotropy. It failed, both because it required more computing than was then possible and because the process was not as statistical as we had hoped: angular momentum conservation could not produce the pronounced anisotropy found in cosmic ray events and well accounted for by two-centre models [11, 14]—a fact strongly suggesting that phase relations between partial waves survived the statistical mixing assumed in the Fermi model. In principle we had the tools to build and correctly evaluate a two-centre model, but it would have required at least ten times more computing (summing over impact parameters with varying fireball energies), which was impossible (we had already spent several years doing all the computing for single fireballs). Thus the angular distribution could not be described adequately.

But we had a more important success [34]: from the calculated momentum spectra it followed that the mean kinetic energy of all particles was practically independent of the primary lab energy (6–30 GeV). Thus the model more or less correctly produced the empirical fact stated in Lesson 2 (limited transverse momenta). However, this was only a numerical result, due to the large number of species of particles entering our calculations: counting spin, isospin, and antiparticles of the included ones (π, ρ, ω, K, N, Δ, Λ, Σ, Ξ), we came to 83 different particle states, equivalent to 83 species. This proved important in the following development: when we tried to understand this mechanism analytically, it was the key that opened the door to the statistical bootstrap model.
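The figure of 83 states can be reproduced by multiplying isospin and spin multiplicities, (2I + 1)(2J + 1), and doubling for multiplets with distinct antiparticles. The table below encodes one such counting; treating π, ρ, and ω as self-conjugate multiplets is our reading, chosen because it reproduces the quoted total.

```python
# (name, isospin I, spin J, has a distinct antiparticle multiplet?)
MULTIPLETS = [
    ("pi", 1, 0, False), ("rho", 1, 1, False), ("omega", 0, 1, False),
    ("K", 0.5, 0, True), ("N", 0.5, 0.5, True), ("Delta", 1.5, 1.5, True),
    ("Lambda", 0, 0.5, True), ("Sigma", 1, 0.5, True), ("Xi", 0.5, 0.5, True),
]

def states(I, J, has_anti):
    """Charge times spin states of one multiplet, doubled if its antiparticles are distinct."""
    n = int(round((2 * I + 1) * (2 * J + 1)))
    return 2 * n if has_anti else n

total = sum(states(I, J, anti) for _, I, J, anti in MULTIPLETS)
print(total)  # 83
```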

We state ‘Lesson 6’:

Lesson Six (L6).

A properly evaluated Fermi model with some interaction (resonances; L5) produces, in a limited interval of primary energy, practically constant mean kinetic energies of secondaries and reasonable multiplicities.

A review of our work is given in [34]. See also [35].

2.3 The Decisive Turn of the Screw: Large-Angle Elastic Scattering

Early in 1964, evidence was growing that the elastic pp cross-section around 90° (CM) decreased exponentially with the total CM energy, at least in the then known region of primary (lab) energies from 10 to 30 GeV. Many experiments contributed to this, and we cannot list them all here. The situation, theoretical and experimental, is well described in a paper by Cocconi [36], where references to the original experiments are given.

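One can include angles a little below and above 90° by using the transverse momentum \(p_{\perp } = p\sin \theta\). Orear [37] obtained in this way an impressive fit to large-angle elastic scattering (in what follows, E is always the total CM energy and dω is the solid angle, frequently denoted dΩ):

$$\displaystyle{ \frac{\mathrm{d}\sigma }{\mathrm{d}\omega } \approx \mathrm{const.} \times \exp \left (-\frac{p_{\perp }}{0.158\ \mathrm{GeV}}\right )\;, }$$(17.15)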

which is shown in Fig. 17.4. The cross-section follows this empirical formula over nine orders of magnitude in the interval \(1.7 \leq p_{0} \leq 31.8\) GeV/c (primary lab momentum). Moreover, the reaction \(p + p \rightarrow \uppi +d\), as well as \(\uppi\) p elastic scattering, showed the same behaviour. In particular the exponential decrease had the same slope as in pp elastic scattering [37].

Fig. 17.4 The two original figures of Orear [37] for large-angle pp scattering and for \(p + p \rightarrow \uppi +d\), showing the exponential decrease with the same slope

It is tempting to interpret Eq. (17.15) as a thermal Boltzmann spectrum. In that case 0.158 GeV would be the ‘temperature’ at which the two nucleons were emitted.Footnote 6 It thus seemed that there was something statistical, a suspicion strangely corroborated by the observation that the same law (with slightly different ‘temperature’) was obeyed by secondaries in inelastic processes [36]. Almost 2 years before the Orear plot was published, L.W. Jones had already proposed a statistical interpretation: the two colliding particles would sometimes form a fireball—in analogy to the compound nucleus—which would decay into many channels, among them the two-body channel containing the original particles. This two-body decay could only be observed far outside the diffraction peak, that is, at large angles. He asked me whether such a picture could be described quantitatively by our statistical model.

2.3.1 Statistical Model Description of Large-Angle Elastic Scattering

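For some obscure reason, we had archived all results obtained since 1958,Footnote 7 and had even included the two-body channel. It was simple to analyse them again and to find the amazing result

$$\displaystyle{ \frac{P_{0}}{\varSigma P_{b}} \approx \mathrm{const.} \times E\exp (-3.17\,E)\qquad (E\ \mbox{in GeV})\;, }$$(17.16)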

where \(P_{0}\) is the probability of the two-body channel and \(\varSigma P_{b}\) the sum over the probabilities of all channels. \(P_{0}\) and all the \(P_{b}\) were numerical results from hundreds of phase-space calculations as described above. When we established Eq. (17.16), no free parameters were available; everything was in our archived data. This result [38] agreed reasonably well with early experimental data [36], but when the Orear fit [37] was published, the agreement became perfect. The number 3.17 (in GeV\(^{-1}\)) in the exponent of Eq. (17.16) corresponds to a temperature T = 0.158 GeV if \(E = 2p_{\perp }\) (at 90°) is inserted, since \(\exp (-3.17\,E) =\exp (-6.34\,p_{\perp })\) and 1/6.34 ≈ 0.158. Then Eq. (17.15) results (the factor E in front of the exponential is negligible).
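The conversion between the two exponents is simple arithmetic. The sketch below only assumes, as the text does, that \(E = 2p_{\perp }\) at 90° (nucleon masses neglected) and that E is measured in GeV.

```python
# Convert the slope of exp(-3.17 E) (E = total CM energy in GeV) into a slope in p_perp.
slope_in_E = 3.17             # GeV^-1, the number in the exponent of Eq. (17.16)
slope_in_pt = 2 * slope_in_E  # at 90 degrees (CM), E = 2 p_perp
T = 1 / slope_in_pt           # exp(-slope_in_pt * p_perp) = exp(-p_perp / T)
print(f"T = {T:.3f} GeV")
```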

Thus L.W. Jones’ proposal was immensely successful. An independent confirmation by the observation of Ericson fluctuations [39] would have been desirable, but I do not know if it was ever tried. It was probably too difficult.

2.3.2 Thermal Description

Our results were so convincing to me (unfortunately not to most others; among the few exceptions was G. Cocconi) that I firmly believed that in Eq. (17.16) we really had an exponential function and not something approximately exponential. This belief (which was directly leading to the statistical bootstrap model) had to be justified better than by Eq. (17.16), which was merely a numerical result established in a rather small range of energy \(\sim 2.4 \leq E \leq 6.8\) GeV.

However, the belief was seriously challenged by Białas and Weisskopf [40], who had given a thermodynamical description based on assumptions that I considered unsuitable but which nevertheless also gave a good fit to the data. What were these assumptions? Mainly these:

-

The compound system is a hot gas.

-

As constituents only pions are considered. K mesons and resonances are assumed to be unimportant.

-

Pions are taken as massless.

These were exactly the assumptions that Fermi [21] had already made and that had led to wrong multiplicity estimates [23, 29].

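From the above assumptions, it follows immediately that the gas is at the black-body temperature (Stefan–Boltzmann law)

$$\displaystyle{ E = \mathrm{const.} \times VT^{4}\;,\qquad T = \mathrm{const.} \times \left (\frac{E}{V}\right )^{1/4} \propto E^{1/4}\;. }$$(17.17)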

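Therefore a Boltzmann spectrum for elastic scattering at 90° would be of the form

$$\displaystyle{ \exp \left (-\mathrm{const.} \times \frac{E}{T}\right ) =\exp (-\mathrm{const.} \times E^{3/4}) }$$(17.18)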

instead of our result \(\sim \exp (-\mathrm{const.} \times E)\) as given in Eq. (17.16). For \((\mathrm{d}\sigma /\mathrm{d}\omega )_{90^{\circ }}\), the authors derive an expression that contains Eq. (17.18) as the essential part, the rest being algebraic factors.

The difference is in principle fundamental, but it is numerically insignificant in the range of energies then available. Apparently the Orear plot [37], which might have pleaded in favour of a pure exponential, was not yet available to the authors (as seen from the dates of reception of the two papers).

2.3.3 Exponential or Not?

This question was so important that I wish to formulate it in two different ways:

-

1.

Our result was [see Eq. (17.16)] that \(\varSigma P_{b}\) grows exponentially with E (the other factors being algebraic). Now, a given phase-space integral for b particles is the density of states of the b-particle system at energy E; thus \(\varSigma P_{b}\) is the total density of states of the ‘fireball’ at energy E. If our result Eq. (17.16) were true, it would mean that the density of states of hadronic fireballs would grow exponentially with their mass (= E) up to at least m = 8 GeV.

-

2.

The second formulation is a consequence of the first. The entropy is the logarithm of the density of states, hence the entropy of a fireball would be

$$\displaystyle{ S(E) =\mathrm{ const.} \times E }$$(17.19)

and therefore its ‘internal temperature’ would be

$$\displaystyle{ T = (\mathrm{d}S/\mathrm{d}E)^{-1} =\mathrm{ const.} }$$(17.20)

In words, if our result Eq. (17.16) were true, it would mean that the internal temperature of hadronic fireballs would be independent of their mass \((M \equiv E)\).

This would also explain (in a thermodynamic language) why our phase-space calculations had given ‘constant’ mean kinetic energies [Lesson 6]: particles were emitted with a Boltzmann spectrum at an energy-independent temperature. We had suspected that this behaviour was due to our including interaction by admitting all relevant species of particles and resonances known to us, but that had remained a speculation up to then.

Cocconi had clearly seen what was going on. He writes [36]:

If the dependence of S on E is of the form \(S = aE^{n}\), it follows that \(\mathrm{d}\sigma /\mathrm{d}\omega = const. \times \exp (-aE^{n})\) and that the temperature of the compound system is \(T = (naE^{n-1})^{-1}\). The value of n characterizes the ‘gas’ of the compound system […]; n = 1 corresponds to the case of a system in which, for E increasing, the number of possible kinds of particles increases so as to keep the energy per particle, and hence the temperature, constant. Footnote 8

Commenting on our phase-space results [34], he wrote:

This model produces an essentially ‘constant temperature’ because, in the compound system, beside the nucleons and mesons, also the known excited states are counted separately.

All this can be conveniently summarized in Lesson 7:

Lesson Seven (L7).

The exponential decrease in the elastic pp cross-section at large angles up to a CM energy of about 8 GeV had empirically established the existence of ‘fireballs’ (clusters; compound states) up to at least m = 8 GeV. Moreover, their density of states had to grow exponentially as a function of their mass up to at least m = 8 GeV, which means that, if the level density is interpreted as a mass spectrum, there were an unexpectedly large number of resonance-like states above those few then explicitly known.

The question now was: could a reasonable analytical model for fireballs be constructed, which would lead to an exponentially growing density of states and, consequently, to an energy-independent temperature?

2.3.4 Asymptotics of Momentum Space

The question just formulated was on the minds of several people, who therefore investigated the asymptotic behaviour of momentum-space integrals for \(E \rightarrow \infty \) [41–43]. They all consider essentially a pion gas and show that for \(E \rightarrow \infty \) the masses become negligible, so that the asymptotics can be evaluated in the massless limit. All authors agree that (in general) the density of states for \(E \rightarrow \infty \) then grows like \(\exp (\mathrm{const.} \times E^{3/4})\), just as for a gas of particles with m = 0. Vandermeulen, as well as Auberson and Escoubès, also consider the pathological case where the usual factor 1/n! in front of the phase-space integral is omitted. They discover the amazing fact that the density of states for \(E \rightarrow \infty \) then grows like exp(const. × E). I give here a simple derivation of this result, taking all masses m = 0 from the outset and passing over subtleties such as the difference between \(\langle E\rangle\) and E in thermodynamics.

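For zero masses, the particle energies equal their momenta and the n-body phase-space integral (= n-body density of states at energy E) with spatial volume V becomes

$$\displaystyle{ \sigma _{n}(E,V ) = \frac{1}{n!}\left [\frac{V }{8\pi ^{3}}\right ]^{n}\int \delta \left (E -\sum _{i=1}^{n}p_{i}\right )\prod _{i=1}^{n}\mathrm{d}^{3}p_{i}\;. }$$(17.21)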

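The n-body partition function is then the Laplace transform of \(\sigma _{n}\,\):

$$\displaystyle{ Z_{n}(T,V ) =\int _{0}^{\infty }\sigma _{n}(E,V )\exp (-\beta E)\mathrm{d}E = \frac{1}{n!}\left [\frac{V }{8\pi ^{3}}\int \exp (-\beta p)\mathrm{d}^{3}p\right ]^{n}\;, }$$(17.22)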

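where \(\beta = 1/T\) (we use β and T for convenience). The last integral equals \(8\pi T^{3}\), so that

$$\displaystyle{ Z_{n}(T,V ) = \frac{1}{n!}\left [\frac{VT^{3}}{\pi ^{2}}\right ]^{n}\;. }$$(17.23)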

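Summing over n gives the partition function for our gas with particle number not fixed:

$$\displaystyle{ Z(T,V ) =\sum _{n=0}^{\infty }Z_{n}(T,V ) =\exp \left (\frac{VT^{3}}{\pi ^{2}}\right ) =\exp \left (-\frac{F}{T}\right )\;,\qquad F = E - TS\;, }$$(17.24)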

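where F is the free energy and S the entropy. It follows that

$$\displaystyle{ E = T^{2}\frac{\partial \ln Z}{\partial T} = \frac{3VT^{4}}{\pi ^{2}}\;, }$$(17.25)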

which is the Stefan–Boltzmann law for Boltzmann statistics. Further

$$\displaystyle{ S = \frac{E - F}{T} =\ln Z + \frac{E}{T} = \frac{4VT^{3}}{\pi ^{2}}\;. }$$(17.26)

If S is expressed as a function of E (as it should be), we have

$$\displaystyle{ S(E) = \frac{4}{3}\left (\frac{3V }{\pi ^{2}}\right )^{1/4}E^{3/4}\;, }$$(17.27)

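and the density of states becomes, as derived more rigorously in the above papers,

$$\displaystyle{ \sigma (E,V ) \sim \exp [S(E)] =\exp \left [\frac{4}{3}\left (\frac{3V }{\pi ^{2}}\right )^{1/4}E^{3/4}\right ]\;. }$$(17.28)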

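But the situation changes drastically if the factor 1/n! is omitted. Go back to Eqs. (17.23) and (17.24) and drop 1/n! there. The sum now gives

$$\displaystyle{ Z(T,V ) =\sum _{n=0}^{\infty }\left [\frac{VT^{3}}{\pi ^{2}}\right ]^{n} = \frac{1}{1 - (T/T_{0})^{3}}\;,\qquad T_{0}:= \left (\frac{\pi ^{2}}{V }\right )^{1/3}\;. }$$(17.29)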

For \(T \rightarrow T_{0}\) the partition function diverges. Hence T 0 is a singular temperature for this gas.

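Now the miracle happens. Assume V to be the usual ‘interaction volume’ of strong interactions [21] (without Lorentz contraction):

$$\displaystyle{ V = \frac{4\pi }{3}m_{\pi }^{-3}\quad \Longrightarrow \quad T_{0} = \left (\frac{3\pi }{4}\right )^{1/3}m_{\pi } \approx 1.33\,m_{\pi } \approx 0.184\ \mathrm{GeV}\;. }$$(17.30)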

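This is almost the mysterious ‘constant’ temperature so often encountered in this report. Following the standard procedure, we calculate the energy and entropy. Both become simple for \(T \rightarrow T_{0}\,\):

$$\displaystyle{ E \approx \frac{T_{0}^{2}}{T_{0} - T}\;,\qquad S \approx \frac{E}{T_{0}}\qquad (T \rightarrow T_{0})\;. }$$(17.31)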

The energy diverges for \(T \rightarrow T_{0}\) (therefore \(T_{0}\) is the maximum temperature for this gas). For the level density, we obtain

$$\displaystyle{ \sigma (E,V ) \approx \exp [S(E)] \sim \exp (E/T_{0})\;, }$$(17.32)

that is, omitting the factor 1/n! leads to a maximum temperature and to an exponentially growing density of states. Equation (17.31) implies that, for \(E \geq 10T_{0}\), one always finds a temperature \(0.9T_{0} \leq T < T_{0}\), hence practically constant.
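The two massless gases just compared, with and without the factor 1/n!, can be explored numerically. The following is an illustrative sketch under stated assumptions: ħ = c = 1, V in GeV⁻³, Boltzmann statistics, and an isospin-averaged pion mass of 0.138 GeV. That mass is chosen here because the text does not say which value was used. All function names are hypothetical helpers.

```python
import math

def thermo_with_factorial(T, V):
    """Massless Boltzmann gas WITH 1/n!: ln Z = V T^3 / pi^2."""
    E = 3 * V * T**4 / math.pi**2        # E = T^2 d(ln Z)/dT (Stefan-Boltzmann)
    S = 4 * V * T**3 / math.pi**2        # S = ln Z + E/T
    return E, S

def entropy_vs_energy(E, V):
    """Same gas with T eliminated: S(E) = (4/3) (3V/pi^2)^(1/4) E^(3/4)."""
    return (4 / 3) * (3 * V / math.pi**2) ** 0.25 * E ** 0.75

def energy_without_factorial(T, T0):
    """Gas WITHOUT 1/n!: ln Z = -ln(1 - x^3), x = T/T0, hence E = 3 T x^3 / (1 - x^3)."""
    x3 = (T / T0) ** 3
    if x3 >= 1.0:
        return math.inf                  # the energy diverges at T0
    return 3 * T * x3 / (1 - x3)

def temperature_without_factorial(E, T0):
    """Invert E(T) by bisection; E(T) rises monotonically from 0 to infinity on (0, T0)."""
    lo, hi = 0.0, T0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if energy_without_factorial(mid, T0) < E:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

m_pi = 0.138                             # GeV; assumed isospin-averaged pion mass
V = 4 * math.pi / 3 / m_pi**3            # interaction volume (4 pi / 3) m_pi^-3, GeV^-3
T0 = (math.pi**2 / V) ** (1 / 3)         # singular temperature of the gas without 1/n!
print(f"T0 = {T0:.3f} GeV")
for e_over_t0 in (10, 100, 1000):
    T = temperature_without_factorial(e_over_t0 * T0, T0)
    print(e_over_t0, round(T / T0, 3))
```

With these inputs T₀ comes out at about 0.184 GeV, the value listed for this model in Sect. 4.2. At E = 10 T₀ the bisection gives T ≈ 0.92 T₀, inside the bound quoted above. The gas with 1/n! instead keeps heating up, since by `thermo_with_factorial` its temperature grows like E^{1/4}.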

This result brought me—me, but nobody else—to a state of obsession. Did it not explain one of the most intriguing features of strong interaction processes? And was it not obviously wrong because of its unrealistic assumptions? Yet, there was an interpretation that opened the way to a better model.

2.3.5 Interpretation: Distinguishable Particles and Pomeranchuk’s Ansatz

The factor 1/n! in front of the phase-space integral Eq. (17.21) serves to compensate for ‘double’ counting: given a set of fixed momenta \(\{\overline{p}_{1},\overline{p}_{2},\ldots,\overline{p}_{n}\}\), all n! permutations of this set occur during the integration over \(p_{1},p_{2},\ldots,p_{n}\). If the particles are indistinguishable, one therefore has to divide by n!. If all n particles are different from each other, one should not divide. This was exactly the point: in our statistical model calculations we had used \(\sim 80\) different particle states and therefore had to replace

$$\displaystyle{ \frac{1}{n!}\;\longrightarrow \;\frac{1}{n_{1}!\,n_{2}!\cdots n_{r}!}\;,\qquad n_{1} + n_{2} + \cdots + n_{r} = n\;, }$$(17.33)

in front of the phase-space integrals. However, since the number of produced secondaries remained far below 80, the values \(n_{k}\) remained, for all essentially contributing phase-space integrals, either 0 or 1. Hence, practically all \(n_{k}! = 1\), and 1/n! was effectively replaced by 1. If this situation was to be simulated analytically by a solvable model [namely all masses = 0 (for \(E \rightarrow \infty )]\), then in order to come near to reality, the factor n! should be dropped, as if the particles were distinguishable.

This argument led Auberson and Escoubès to look at the case where 1∕n! is dropped. They also considered a scenario corresponding to Eq. (17.33), namely where there are r different species of particles, while inside each species, particles are indistinguishable. They are cautious in the interpretation of their results [41]:

If it is probable that the discernibility hypothesis is the most realistic at low energies, one cannot very well locate the energy at which this hypothesis must be abandoned (if at all).

And later:

Clearly r could be larger than 3, to take into account the resonances at high energies.Footnote 9 (If, however, in reality the strongly interacting particles should have an infinity of excited states […] we fall back essentially on discernible particles.)

They leave the question open.

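In the present context, a paper by Pomeranchuk [44] must be mentioned. He proposes to improve the Fermi model by admitting that real pions are not pointlike. Therefore n pions would not find room in a volume \(V_{0}\ (\approx 4\pi m_{\pi }^{-3}/3)\), but need at least a volume \(nV_{0}\). Thus the space volume factor in front of the integral in Eq. (17.21) would be \([nV_{0}/8\pi ^{3}]^{n}\) instead of the one appearing in Eq. (17.21). However, for large n,

$$\displaystyle{ n! \approx \left (\frac{n}{e}\right )^{n}\quad \Longrightarrow \quad \frac{1}{n!}\left [\frac{nV_{0}}{8\pi ^{3}}\right ]^{n} \approx \left [\frac{eV_{0}}{8\pi ^{3}}\right ]^{n}\;, }$$(17.34)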

the factor \(n^{n}\) arising from the corrected volume will essentially cancel the factor 1/n!, and one thus arrives effectively at a model with ‘distinguishable’ particles in a volume \(eV_{0}\). In this way, Pomeranchuk also obtains a maximal temperature of the order of \(m_{\pi }\), that is, a practically constant temperature. His paper came about 13 years too early, or at least five, since the constant mean transverse momenta became popular only after 1956 [10] (the decisive large-angle scattering took shape around 1964).
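Pomeranchuk's cancellation rests on Stirling's formula. The quick numerical check below computes the factor per pion, \((n^{n}/n!)^{1/n}\), which is what remains when the volume \(nV_{0}\) replaces \(V_{0}\), and shows that it approaches e. It uses only `math.lgamma` from the Python standard library. The function name is a hypothetical helper.

```python
import math

def per_particle_volume_factor(n):
    """(n^n / n!)^(1/n), the leftover factor per pion when the volume n*V0 replaces V0.

    Computed through lgamma(n + 1) = ln n! to avoid overflow. By Stirling's formula it
    tends to e, so n pions behave like distinguishable particles in the volume e*V0.
    """
    return math.exp((n * math.log(n) - math.lgamma(n + 1)) / n)

for n in (10, 100, 10_000, 1_000_000):
    print(n, per_particle_volume_factor(n))
```

The approach to e is slow (the correction is of relative order ln(2πn)/2n), but for the large multiplicities Pomeranchuk had in mind it is effectively complete.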

While the model of distinguishable particles was useful because it produced the surprise that motivated the investigations described in the following section, it was clear that all further efforts had to be made on the realistic basis of massive particles with Bose and/or Fermi statistics. The principal lesson to be kept in mind was that there should be many, many different species of particles.

3 The Statistical Bootstrap Model (SBM)

Up to here we have collected everything that helped to motivate the construction of SBM. We now describe this construction. For what follows, a few formulae need to be recalled; although everybody knows them, it is necessary to have them ready at hand in order not to interrupt the argument. We use Boltzmann statistics for simplicity (the first paper on SBM used correct statistics [45]).

3.1 A Few Well-Known Formulae

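We go back to Eq. (17.21), rewrite it for relativistic massive particles, and follow the same derivations as there. The density of states of n particles of mass m enclosed in a volume V at energy E is then

$$\displaystyle{ \sigma _{n}(E,V,m) = \frac{1}{n!}\left [\frac{V }{8\pi ^{3}}\right ]^{n}\int \delta \left (E -\sum _{i=1}^{n}\sqrt{p_{i}^{2} + m^{2}}\right )\prod _{i=1}^{n}\mathrm{d}^{3}p_{i}\;. }$$(17.35)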

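Its Laplace transform is the n-particle partition function:

$$\displaystyle{ Z_{n}(T,V,m) =\int _{0}^{\infty }\sigma _{n}(E,V,m)\exp (-\beta E)\mathrm{d}E = \frac{1}{n!}\left [\frac{V }{8\pi ^{3}}\int \exp \left (-\beta \sqrt{p^{2} + m^{2}}\right )\mathrm{d}^{3}p\right ]^{n}\;, }$$(17.36)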

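and the integral is

$$\displaystyle{ \int \exp \left (-\beta \sqrt{p^{2} + m^{2}}\right )\mathrm{d}^{3}p = 4\pi m^{2}T\,K_{2}\left (\frac{m}{T}\right )\;, }$$(17.37)

where \(K_{2}\) is the second modified Hankel function [which for m ≫ T goes as \((\pi T/2m)^{1/2}\exp (-m/T)\) and for m ≪ T as \(2(T/m)^{2}\)].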
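The closed form in terms of \(K_{2}\) can be checked numerically against the momentum integral it replaces. The sketch below evaluates \(K_{2}\) from the standard integral representation \(K_{\nu }(x) =\int _{0}^{\infty }\exp (-x\cosh t)\cosh (\nu t)\,\mathrm{d}t\) with a plain trapezoidal rule, using only the standard library. Step counts, cut-offs and the sample values of T, V, m are arbitrary choices for illustration, and the function names are hypothetical.

```python
import math

def trapezoid(f, a, b, n=20000):
    """Composite trapezoidal rule with n intervals on [a, b]."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for k in range(1, n):
        total += f(a + k * h)
    return total * h

def K2(x):
    """K_2(x) from int_0^inf exp(-x cosh t) cosh(2t) dt, truncated where x cosh t = x + 60."""
    t_max = math.acosh(60.0 / x + 1.0)
    return trapezoid(lambda t: math.exp(-x * math.cosh(t)) * math.cosh(2 * t), 0.0, t_max)

def Z1(T, V, m):
    """One-particle partition function (V T / 2 pi^2) m^2 K_2(m/T); Boltzmann statistics, hbar = c = 1."""
    return V * T * m * m * K2(m / T) / (2 * math.pi**2)

def Z1_direct(T, V, m):
    """Same quantity from (V / 8 pi^3) * int exp(-sqrt(p^2 + m^2)/T) d^3p, done radially."""
    p_max = m + 60.0 * T                 # beyond this the integrand is ~e^-60 of its peak
    radial = lambda p: p * p * math.exp(-math.sqrt(p * p + m * m) / T)
    return V / (2 * math.pi**2) * trapezoid(radial, 0.0, p_max)

T, V, m = 0.15, 1.0, 0.14                # GeV, GeV^-3, GeV (illustrative values)
print(Z1(T, V, m) / Z1_direct(T, V, m))              # the two evaluations agree
print(Z1(T, V, 0.01 * T) / (V * T**3 / math.pi**2))  # massless limit V T^3 / pi^2
```

The trapezoidal rule is enough here because both integrands are smooth, even functions that decay rapidly, so the endpoint error terms vanish. For heavier work a library routine for \(K_{\nu }\) would be the natural replacement.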

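We obtain

$$\displaystyle{ Z_{n}(T,V,m) = \frac{1}{n!}\left [Z_{1}(T,V,m)\right ]^{n}\;,\qquad Z_{1}(T,V,m):= \frac{VT}{2\pi ^{2}}\,m^{2}K_{2}\left (\frac{m}{T}\right )\;, }$$(17.38)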

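by which the ‘one-particle partition function’ \(Z_{1}\) is defined. Summing over n gives the (grand canonical) partition function for an unfixed particle number:

$$\displaystyle{ Z(T,V,m) =\sum _{n=0}^{\infty }Z_{n}(T,V,m) =\exp [Z_{1}(T,V,m)]\;. }$$(17.39)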

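For a mixture of two gases with particles of masses \(m_{1}\) and \(m_{2}\), respectively, we have \(Z(T,V,m_{1},m_{2}) = Z(T,V,m_{1})Z(T,V,m_{2})\). We generalize this to a mixture of gases of many different sorts of particles by introducing the (as yet unknown) mass spectrum ρ(m):

$$\displaystyle{ Z(T,V ) =\prod _{i}Z(T,V,m_{i}) =\exp \left [\sum _{i}Z_{1}(T,V,m_{i})\right ]\;,\qquad \sum _{i}f(m_{i})\;\longrightarrow \;\int _{0}^{\infty }\rho (m)f(m)\mathrm{d}m\;, }$$(17.40)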

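and obtain

$$\displaystyle{ Z(T,V ) =\exp \left [\frac{VT}{2\pi ^{2}}\int _{0}^{\infty }\rho (m)\,m^{2}K_{2}\left (\frac{m}{T}\right )\mathrm{d}m\right ]\;. }$$(17.41)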

On the other hand, any Z(T, V ) can be written as

$$\displaystyle{ Z(T,V ) =\int _{0}^{\infty }\sigma (E,V )\exp (-\beta E)\mathrm{d}E\;. }$$(17.42)

Given Z(T, V ), the energy spectrum (density of states) \(\sigma (E,V )\) can be calculated, and vice versa.

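Doing the same steps, i.e., summing over n and introducing a mass spectrum without, however, executing the Laplace transformations, yields the phase-space analogue of Eqs. (17.41) and (17.42):

$$\displaystyle{ \sigma (M,V ) =\sum _{n=0}^{\infty }\frac{1}{n!}\int \delta \left (M -\sum _{i=1}^{n}E_{i}\right )\prod _{i=1}^{n}\frac{V }{8\pi ^{3}}\,\rho (m_{i})\mathrm{d}m_{i}\,\mathrm{d}^{3}p_{i}\;, }$$(17.43)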

where \(E_{i} = (p_{i}^{2} + m_{i}^{2})^{1/2}\). Note the enormous difference between the two densities of states, ρ(m) and \(\sigma (M,V )\). Suppose there is a single species with mass m 0; then \(\rho (m) =\delta (m - m_{0})\) is zero everywhere except at m = m 0, while \(\sigma (M,V )\) grows (for M ≫ m 0) as \(\exp (\mathrm{const.} \times M^{3/4})\) as shown in Eq. (17.28).

3.2 Introducing the Statistical Bootstrap Hypothesis

From an article that does not otherwise concern our subject, R. Carreras [46] picked up a bon mot which may serve very well as a motto for this section:

[…] all of these arguments can be questioned, even when they are based on facts that are not controversial.

Here are these arguments:

-

If anything deserves the name ‘fireball’, then it is the lump of hadronic matter in the state just before it decays isotropically into a two-body final state, as observed in large-angle elastic [\(p + p \rightarrow p + p\), \(\uppi +p(n) \rightarrow \uppi +p(n)]\) or two-body inelastic scattering [\(p + p \rightarrow \uppi ^{+} + d\)].

-

This fireball answers, within experimental accuracy, to the description by an improved Fermi statistical model, as witnessed by the agreement of our phase-space results with the Orear plot (Fig. 17.4).

-

We therefore postulate that fireballs describable by statistical models do exist, provided that in such models interaction is taken into account by including known particles and resonances (Lessons 5, 6, and 7).

-

While practically a limited number of sorts of particles and resonances was already sufficient to describe, within experimental accuracy, fireballs up to a mass of 8 GeV, we should in principle include all of them with the help of an as yet unknown mass spectrum ρ(m).

-

Recalling Eqs. (17.41)–(17.43), we observe that there are two mass spectra appearing in the statistical description:

-

1.

\(\sigma (M,V _{0})\mathrm{d}M\) is the number of states (of species) of fireballs (volume V 0) in the mass interval {M, dM}.

-

2.

ρ(m)dm is the number of species of possible constituents (of such fireballs) having a mass in {m, dm}.

-

A glance at the Review of Particle Properties [47], under the heading Partial Decay Modes, informs us that heavy resonances [to be counted in ρ(m)] have many decay channels, some of them containing resonances once again. Thus, heavy resonances ‘consist’ (statistically) of particles and lighter resonances, just as fireballs do.

-

Therefore there is no difference in principle between resonances and fireballs: the states counted in \(\sigma (M,V _{0})\) should also be admitted as possible constituents of fireballs of larger mass; that is, they should be counted in ρ(m).

-

We conclude that ρ(m) and \(\sigma (m,V _{0})\) count essentially the same set of hadronic masses and that therefore they must be—up to details—the ‘same’ function.

-

They cannot be exactly equal, because ρ(m) starts with a number of δ-functions (π, K, p, …) while \(\sigma (m,V _{0})\) is continuous above 2m π .

-

Leaving the door open for such differences and others, we postulate only that the corresponding entropies should become asymptotically equal:

$$\displaystyle{ \frac{\log \sigma (m,V _{0})} {\log \rho (m)} \;\mathop{\longrightarrow }\limits_{m \rightarrow \infty }\;1\;. }$$(17.44)

We call this the ‘bootstrap condition’ [45], which is a very strong requirement in view of the great difference between ρ and \(\sigma\) in ‘ordinary’ thermodynamics (see the remark at the end of the last section).

3.3 The Solution

The rest is mathematics (and the above motto no longer applies). It could be shown [45] that ρ and \(\sigma\) have to grow asymptotically like \(\mathrm{const.} \times m^{-\alpha }\exp (m/T_{0})\), while possible solutions growing faster than exponentially are inadmissible in statistical thermodynamics. Nahm [48] proved that, by adding certain refinements, the condition Eq. (17.44) could be sharpened and that the power of the prefactor is then α = 3. He also derived sum rules, which allowed him to estimate \(T_{0}\) to lie in the region of 140–160 MeV, results which agreed with the numerical results of Frautschi and collaborators [49]. Thus the question put after Lesson 7 had been answered in the affirmative: SBM was born.

It is a self-consistent scheme in which the ‘particles’—i.e., clusters or resonances or fireballs, call them what you like—are at the same time:

-

the object being described,

-

the constituents of this object,

-

the generators of the interaction which keeps the object together.

Thus it is a ‘statistical bootstrap’ [49] embracing all hadrons.

3.4 Further Developments

Everything that happened to SBM after its birth is reported in some detail, and with all references known to me, in the review Chapter 25 [2], which I shall not try to sum up here.

Even so, a few more important steps must be mentioned:

-

The thermodynamic description of fireballs is so simple that it can be combined with collective motions (as in two-centre models [11]) and summed over impact parameters. Leading particles and conservation laws are easily taken care of. In this way, Ranft and myself constructed the so-called ‘thermodynamical model’, which proved useful for predicting particle momentum spectra [50].

-

Frautschi, in a most important paper [49], had written down and solved the first phase-space formulation of SBM. His work triggered an avalanche of further papers reviewed in Chapter 25 [2], see also Chapter 22, leading to a new development. His ‘bootstrap equation’ (BE) is much stronger and more elegant than our above ‘bootstrap condition’ Eq. (17.44). Later it was put in a manifestly Lorentz invariant form and analytically solved by Yellin [51]. This formulation has become standard. The Laplace-transformed BE is a functional equation for the Laplace transform of the mass spectrum. This equation was already knownFootnote 10 in 1870 [52] and independently rediscovered by Yellin. All this was so important that I cannot resist illustrating it with the help of a simple toy model, in which clusters are composed of clusters with vanishing kinetic energy. In this limit the Frautschi–Yellin BE reads

$$\displaystyle{ \rho (m) =\delta (m - m_{0}) +\sum _{ n=2}^{\infty } \frac{1} {n!}\int \delta \left (m -\sum _{i=1}^{n}m_{ i}\right )\prod _{i=1}^{n}\rho (m_{ i})\mathrm{d}m_{i}\;. }$$(17.45)

In words, the cluster with mass m is either the ‘input particle’ with mass \(m_{0}\) or else it is composed of any number of clusters of any masses \(m_{i}\) such that \(\varSigma m_{i} = m\). We Laplace-transform Eq. (17.45):

$$\displaystyle{ \int \rho (m)\exp (-\beta m)\mathrm{d}m =\exp (-\beta m_{0}) +\sum _{ n=2}^{\infty } \frac{1} {n!}\prod _{i=1}^{n}\int \exp (-\beta m_{ i})\rho (m_{i})\mathrm{d}m_{i}\;. }$$(17.46)

Define

$$\displaystyle{ z(\beta ):=\exp (-\beta m_{0})\;,\qquad G(z):=\int \exp (-\beta m)\rho (m)\mathrm{d}m\;. }$$(17.47)

Thus Eq. (17.46) becomes \(G(z) = z +\exp [G(z)] - G(z) - 1\) or

$$\displaystyle{ z = 2G(z) -\exp [G(z)] + 1\;, }$$(17.48)

which is the above-mentioned functional equation for the function G(z), the Laplace transform of the mass spectrum. This function proved most important in all further development. For instance, the coefficients of its power expansion in z are directly related to the multiplicity distribution of the final particles in the decay of a fireball [53]. It is most remarkable that the ‘Laplace-transformed BE’ Eq. (17.48) is ‘universal’ in the sense that it is not restricted to the above toy model, but turns out to be the same in all (non-cutoff) realistic SBM cases [49, 51]. Moreover, it is independent of:

-

the number of space-time dimensions [54],

-

the number of ‘input particles’ (z becomes a sum over modified Hankel functions of input masses),

-

Abelian or non-Abelian symmetry constraints [55].

What is wanted is of course G(z), given implicitly by Eq. (17.48). Solutions are reviewed in Chapter 25 [2]. Simplest is its graphic solution: we draw z(G) according to Eq. (17.48) and exchange the axes (see Fig. 17.5). One sees immediately that (universally!)

$$\displaystyle{z_{\mathrm{max}}(G) =: z_{0} =\ln 4 - 1 = 0.3863\ldots \;,\qquad G_{0} = G(z_{0}) =\ln 2\;.}$$

The parabola-like maximum of z(G) implies a square root singularity of G(z) at \(z_{0}\), first remarked by Nahm [48]. Upon inverse Laplace transformation, this leads to an exponentially growing mass spectrum, \(\rho (m) \sim m^{-3/2}\exp (\beta _{0}m)\) in this toy model (the power \(m^{-3}\) found by Nahm [48] belongs to the realistic, relativistic case), where (in our present case, not universally!):

$$\displaystyle{ \beta _{0} = - \frac{1} {m_{0}}\ln z_{0} = \frac{0.95} {m_{0}} \qquad [\mbox{see Eq. (17.47)}]\;. }$$(17.49)

Putting \(m_{0} = m_{\uppi }\), we find a reasonable value for \(T_{0} =\beta _{ 0}^{-1}\):

$$\displaystyle{ T_{0}(\mbox{ toy model}) = 0.145\ \mathrm{GeV}\;. }$$(17.50)

Thermodynamics of a gas of the above clusters Eq. (17.45) has \(T_{0}\) as a singular temperature. Thus, the simple toy model already yields all essential features of SBM. For a very short representation of the more realistic case of pion clustering in full relativistic momentum space, see [53, Sect. 2].

-

Much work was done by groups in Bielefeld, Kiev, Leipzig, Paris, and Turin to clear up the relation of SBM to other trends in strong interaction physics (Regge, Veneziano, etc.) and to the theory of phase transitions; this is reviewed in Chapter 25 [2].

-

In the mid-1970s J. Rafelski arrived at CERN and immediately began pushing me: SBM should be polished up to become applicable to heavy-ion collisions. There were two problems: baryon-number (and, eventually, strangeness) conservation and proper particle volumes (pointlike heavy ions would be nonsense). Thus we introduced baryon (strangeness) chemical potential and, less trivially, proper particle volumes, first in the BE [56], then in the ensuing thermodynamics [57]. We found that particle volumes had to be proportional to particle masses with a universal proportionality constant. The argument was the following. Let a cluster (fireball) of mass m and volume V be composed of constituents with masses \(m_{i}\) and volumes \(V_{i}\). In contrast to standard assumptions in thermodynamics, the cluster is not confined to an externally imposed volume; rather it carries its volume with it (as already stressed by Nahm [48]), and so does each of its constituents. Let any one of them have four-momentum \(p_{i}^{\mu }\). Then its volume moves with four-velocity \(p_{i}^{\mu }/m_{i}\). With Touschek [58], we define a ‘four-volume’

$$\displaystyle{ V _{i}^{\mu } = \frac{V _{i}} {m_{i}}p_{i}^{\mu }\;. }$$(17.51)

The constituents’ volumes have to add up to the total cluster volume and their momenta to the total momentum:

$$\displaystyle{ \frac{V } {m}p^{\mu } =\sum _{ i=1}^{n}\frac{V _{i}} {m_{i}}\ p_{i}^{\mu }\;,\qquad p^{\mu } =\sum _{ i=1}^{n}p_{ i}^{\mu }\;. }$$(17.52)

This is possible for arbitrary n and \(p_{i}^{\mu }\) if and only if

$$\displaystyle{ \frac{m} {V } = \frac{m_{i}} {V _{i}} =\mathrm{ const.} = 4\mathcal{B}\;, }$$(17.53)

where the proportionality constant is written \(4\mathcal{B}\) in order to emphasize the similarity to MIT bags [59], which have the same mass-volume relation. Moreover, as the energy spectrum of SBM clusters and MIT bags is the same even in the detail [60, 61], one is led to consider these two objects to be the same, at least in the sense that statistical thermodynamics of MIT bags is identical to that of SBM clusters. This ‘identity’ is interesting, because MIT bags ‘consist of’ quarks and gluons, SBM clusters of hadrons: it suggests a phase transition from one to the other.

-

The thermodynamics of clusters with proper volumes still had a singularity at T 0, but a weaker one: while in the old (point-particle) version of SBM the energy density diverged at T 0 (thus making T 0 an ‘ultimate’ temperature), the energy density was now finite at T 0, making a phase transition (already anticipated by Cabibbo and Parisi [62]) to a quark-gluon plasma possible [57, 63, 64].

-

Technical problems in handling the particle volumes explicitly could be elegantly solved [65–69] by using the ‘pressure (or isobaric) partition function’ invented by Guggenheim [70]. This technique allows one to treat the thermodynamics of bags with an exponential mass spectrum (pioneered by Baacke [71]) in a beautiful way: Letessier and Tounsi [72–74] succeeded, following the methods of the Kiev group [65–69], in describing with a single partition function the hadron gas, the quark-gluon plasma, and the phase transition between the two in a realistic case. This opens the way to solving a number of problems connected with proving (or disproving?) the actual presence of a quark-gluon phase in the first stage of relativistic heavy-ion collisions.
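The toy-model bootstrap equation (17.45) and its Laplace transform (17.48) above lend themselves to a short exact computation. The sketch below uses standard-library Python, and its helper names are hypothetical. It expands G(z) in powers of z by iterating \(G = z + (\exp G - 1 - G)\). The coefficients \(a_{k}\) are the weights of the δ-functions at \(m = km_{0}\) in ρ(m). The script then evaluates \(z_{0}\), \(\beta _{0}m_{0}\) and \(T_{0}\) as in Eqs. (17.49)–(17.50), assuming \(m_{0} = 0.138\) GeV, an isospin-averaged pion mass chosen here for illustration.

```python
import math
from fractions import Fraction

def series_mul(p, q, kmax):
    """Product of two power series (coefficient lists) truncated at order kmax."""
    r = [Fraction(0)] * (kmax + 1)
    for i, coeff in enumerate(p):
        if coeff:
            for j in range(kmax + 1 - i):
                r[i + j] += coeff * q[j]
    return r

def toy_weights(kmax):
    """Coefficients a_k of G(z) = sum_k a_k z^k solving z = 2G - exp(G) + 1.

    Iterates G = z + sum_{n>=2} G^n / n!; each pass fixes at least one more order.
    """
    a = [Fraction(0)] * (kmax + 1)
    a[1] = Fraction(1)
    for _ in range(kmax):
        new = [Fraction(0)] * (kmax + 1)
        new[1] = Fraction(1)                 # the input particle contributes z
        power, factorial = a[:], 1
        for n in range(2, kmax + 1):         # G^n starts at order z^n
            power = series_mul(power, a, kmax)
            factorial *= n
            for k in range(kmax + 1):
                new[k] += power[k] / factorial
        a = new
    return a

G0 = math.log(2.0)                           # maximum of z(G): dz/dG = 2 - exp(G) = 0
z0 = 2 * G0 - math.exp(G0) + 1               # = ln 4 - 1
beta0_m0 = -math.log(z0)                     # beta_0 * m_0
m0 = 0.138                                   # GeV; assumed input mass
T0 = m0 / beta0_m0
print([str(w) for w in toy_weights(6)[1:]])
print(f"z0 = {z0:.4f}, beta0*m0 = {beta0_m0:.3f}, T0 = {T0:.3f} GeV")
```

The first coefficients are 1, 1/2, 2/3, 13/12. For example, a mass \(3m_{0}\) is reached either by three input particles (weight 1/3!) or by an input particle plus a \(2m_{0}\) cluster (weight 1/2), giving 2/3. Because \(z_{0}\) is the radius of convergence of the series, the ratios \(a_{k+1}/a_{k}\) grow slowly towards \(1/z_{0} \approx 2.59\).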

4 Some Further Remarks

4.1 The Difficulty in Killing an Exponential Spectrum

The most prominent feature of SBM is its exponentially increasing mass spectrum. Many objections to it were put forward: symmetry constraints would forbid a number of the states counted in it; correct Bose–Einstein and Fermi–Dirac statistics would also reduce the number of states; and in composing clusters of clusters and so on, one should take into account the Pauli principle, which again might eliminate many states. Furthermore, the original argument for including resonances was based on the Beth–Uhlenbeck method [see Eq. (17.14) and Lesson 5], which invokes phase shifts and their sudden rise by π when going through a resonance. But then the phase shifts should go to zero for infinite momentum and the Levinson theorem states that \(\delta _{\ell}(0) -\delta _{\ell}(\infty ) = N_{\ell}\pi\), with N ℓ = number of bound states with angular momentum ℓ. Therefore phase shifts cannot go on increasing by π for each of our (exponentially growing number of) resonances—they must decrease again. That is, each and every one of the masses added somewhere to the mass spectrum must be (smoothly) subtracted later on. How then can an exponential mass spectrum survive?

All these objections turn out to leave the mass spectrum intact, because the exponential function is extremely resistant to manipulations: multiplication by a polynomial of any (positive or negative) order, squaring, differentiating, or integrating it will not do much harm (consider the leading term of its logarithm!)—at most change its exponential parameter \((T_{0} \rightarrow T_{0}^{{\prime}})\).

Therefore, once our self-consistency requirement—crude as it may be—has led to this particular mass spectrum, it is difficult to get rid of it. Incidentally, in the first paper on SBM [45], correct Bose–Einstein/Fermi–Dirac statistics was employed (easy in the grand canonical formulation, awful in phase space [75–79]) and the result was that the mass spectrum \(\rho (m) =\rho _{\mathrm{Bose}}(m) +\rho _{\mathrm{Fermi}}(m)\) had to grow exponentially. The role of conservation laws has been dealt with in the literature (see references in Chapter 25 [2]). None of these explicit attacks killed the leading exponential part of the mass spectrum.

It remains, as an illustration of the resistance of ρ(m), to assume that, for whatever reason, every mass, once added to it, has to be eliminated again. (I do not know of any serious argument which would require this. The Levinson theorem derived in non-relativistic potential scattering [80] cannot be invoked in a situation where all kinds of reactions between constituents take place; but assume it had to be so.) Then, arriving at mass m, we subtract everything that had been added at \(m - \Delta m\), whence

$$\displaystyle{ \rho (m) -\rho (m - \Delta m) \approx \Delta m\,\frac{\mathrm{d}\rho }{\mathrm{d}m} \approx \frac{\Delta m}{T_{0}}\,m^{-3}\exp (m/T_{0})\;, }$$(17.54)

and the leading exponential remains untouched (a kind of differentiation).
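A short numerical check shows why removing at m what was added at \(m - \Delta m\) leaves the exponential intact. It assumes the illustrative spectrum \(\rho(m) = m^{-3}\exp(m/T_{0})\) with \(T_{0} = 0.15\) GeV and a hypothetical step \(\Delta m = 0.1\) GeV, both chosen only for illustration. The ratio of the subtracted spectrum to ρ(m) tends to the constant \(1 - \exp(-\Delta m/T_{0})\). The result is therefore still \(\propto \exp(m/T_{0})\), with a smaller prefactor.

```python
import math

T0 = 0.15  # GeV, illustrative (assumption)
DM = 0.1   # GeV, hypothetical subtraction step Delta m

def rho(m):
    """Illustrative spectrum m**-3 * exp(m / T0); keep m/T0 < ~700 to avoid overflow."""
    return m ** -3 * math.exp(m / T0)

def subtracted(m):
    """Spectrum after removing at m everything that had been added at m - DM."""
    return rho(m) - rho(m - DM)

limit = 1.0 - math.exp(-DM / T0)
for m in (2.0, 5.0, 20.0, 80.0):
    print(f"m = {m:5.1f} GeV: ratio = {subtracted(m) / rho(m):.5f} (limit {limit:.5f})")
```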

4.2 What Is the Value of \(T_{0}\)?

The most fundamental constant of SBM, namely \(T_{0}\), escapes precise determination. There are several ways to try to fix \(T_{0}\):

1. Theoretically,
   (a) inside SBM,
   (b) from lattice QCD.
2. Empirically,
   (a) from the mass spectrum,
   (b) from the transverse momentum distribution,
   (c) from production rates of heavy antiparticles (\(\overline{^{3}\mathrm{He}}\), \(\overline{d}\)),
   (d) from the phase transition to the quark-gluon phase.

We obtain the following.

1a. \(T_{0}\) from Inside SBM

| Model | \(T_{0}\) |
|---|---|
| Crude model of Eq. (17.45), pions only | ≈ 0.145 GeV |
| Same model with K and N added to the input | ≈ 0.135 GeV |
| Unrealistic model of distinguishable, massless particles, Eq. (17.31) | ≈ 0.184 GeV |
| More realistic model (pions + invariant phase space) [81] | ≈ 0.152 GeV |

1b. \(T_{0}\) from Lattice QCD

The determination of \(T_{0}\) from lattice QCD is still hampered by difficulties. First estimates using pure gauge theory gave a rather high \(T_{0}\), while the introduction of quarks poses its own problems. Nevertheless, compromises have been devised which circumvent these problems and provide a way of dealing with quarks (at a price that depends on what one is after). Table 17.1 is taken from a review article by F. Karsch, where the methods are described and references to original work are given [83, Table 2] (see also [84]).

It is believed that the value ‘\(T_{0}\) from \(m_{\rho}\)’ is the most realistic. It agrees rather well with the above-listed estimates of \(T_{0}\) from inside SBM [except the one for distinguishable massless particles, which (accidentally?) lies nearer to the pure gauge (\(n_{f} = 0\)) value].

2a. From the Mass Spectrum

Here the difficulty is that approximate completeness of the empirical mass spectrum ends somewhere around 1.5 GeV. The density of mass states increases and the production rate decreases (both exponentially, as predicted by SBM), so identifying all masses rapidly becomes impossible. On the other hand, we know only the asymptotic form of the mass spectrum, \(\sim m^{-3}\exp (m/T_{0})\), and have to guess an extrapolation towards lower masses which does not diverge for \(m \rightarrow 0\). Various attempts have been made (after 1970).
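The power-law prefactor is one reason why low-mass data constrain \(T_{0}\) only loosely. For \(\rho(m) \propto m^{-3}\exp(m/T_{0})\), the local logarithmic slope is \(\mathrm{d}\ln\rho/\mathrm{d}m = 1/T_{0} - 3/m\), which approaches \(1/T_{0}\) only slowly. The sketch below assumes \(T_{0} = 0.15\) GeV purely for illustration. It computes the apparent temperature that a naive pure-exponential fit would read off at a given mass. It illustrates the asymptotic formula only and is not a fit to data.

```python
T0 = 0.15  # GeV, assumed for illustration

def apparent_temperature(m, t0=T0, power=3):
    """Inverse local slope 1 / (d ln rho / dm) for rho ~ m**-power * exp(m / t0)."""
    slope = 1.0 / t0 - power / m
    if slope <= 0:
        raise ValueError("local slope is not positive at this mass")
    return 1.0 / slope

for m in (1.0, 1.5, 3.0, 10.0):
    print(f"m = {m:4.1f} GeV -> apparent T = {apparent_temperature(m):.3f} GeV")
```

With these assumptions the apparent temperature near 1.5 GeV is about 0.21 GeV. Ignoring the prefactor in that mass region would therefore bias \(T_{0}\) upwards.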

2b. From the \(p_{\perp}\) Spectrum

Large-angle elastic scattering. Orear [37] finds an apparent temperature T = 0.158 GeV, which should lie near to \(T_{0}\). Hence \(T_{0} \gtrsim 0.158\) GeV.

Folklore has it that the \(p_{\perp}\) distribution in the soft region is \(\exp (-6p_{\perp })\), with \(p_{\perp}\) in GeV/c. The exact formula for the distribution [88] is quite different from \(\exp (-p_{\perp }/T)\), but for \(p_{\perp} \gg m_{\pi}\) one might generously accept \(\exp (-6p_{\perp })\). Then, with T = 1/6 GeV, \(T \approx T_{0} \cong 0.167\) GeV.

A serious attempt to fix \(T_{0}\) from the \(p_{\perp}\) distribution is reported in [89]. In a region where \(p_{\parallel}\) is very small (no integration over \(p_{\parallel}\)), the authors fit the \(p_{\perp}\) distribution to a Bose–Einstein formula and obtain the surprisingly low result \(T_{0} > T \approx 0.117\) GeV.

They give reasons why they identify this with T 0, although the primary momentum is only 28.5 GeV (Brookhaven), so that T 0 could still lie somewhat higher (as I believe).

Remark

The determination of T from \(p_{\perp}\) suffers from a number of perturbing effects, which have been discussed in detail in [88]: resonance (\(\rho\), \(\Delta\), …) decay, leakage of ‘large \(p_{\perp}\)’ down to the soft region, etc. It seems that none of these effects influences two-body large-angle scattering. The value found by Orear [37] might therefore be more trustworthy than the values obtained by fitting the soft \(p_{\perp}\) distribution with various formulae (some not well justified).

2c. From Production Ratios of Heavy Antiparticles

Production rates of anti-³He, antitritium \((\overline{\mathrm{t}})\), antideuterons \((\overline{d})\), and many other particles have been measured [90] (Footnote 11). Taking ratios avoids, at least in part, two problems: the pion production rate, which is not well known theoretically, and (not very) different momenta and target materials. From the quoted paper [90], we take the ratios \(\overline{^{3}\mathrm{He}}/\overline{d}\) and \(\overline{\mathrm{t}}/\overline{d}\) and average the values [all around \((0.8\ \text{to}\ 2) \times 10^{-4}\)]. We find

From SBM—taking into account the fact that, for each produced antibaryon, another baryon must be produced along with it—one easily works out (spin, etc., factors included)

The exponential is easily understood. \(\overline{^{3}\mathrm{He}}\) or \(\overline{\mathrm{t}}\) requires the production of six nucleon masses (three antinucleons plus the three baryons produced along with them), while \(\overline{d}\) requires four. In the ratio, four of the six cancel out.

Putting in numbers, one finds that the experimental values (17.54) are obtained with a temperature between 0.15 and 0.16 GeV when for V we assume the usual \(4\pi/(3m_{\pi}^{3})\). Hence (at a primary momentum of \(\sim\)200 GeV/c) \(T_{0} \gtrsim 0.155\) GeV.
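The steepness of the Boltzmann factor is what makes this method useful. The sketch below keeps only the exponential part of the ratio, \(\exp(-2m_{N}/T)\). It deliberately omits the volume, spin and phase-space prefactors and assumes a nucleon mass of 0.939 GeV. It shows how strongly that factor varies across the quoted temperature range. The factor roughly doubles between 0.15 and 0.16 GeV. Ignoring the weaker T-dependence of the prefactor, the spread of the measured ratios [\((0.8\ \text{to}\ 2) \times 10^{-4}\), a factor of 2.5] therefore corresponds to a temperature interval of only about 0.01 GeV.

```python
import math

M_N = 0.939  # GeV, nucleon mass (value assumed for this sketch)

def boltzmann_ratio(T, extra_masses=2):
    """Exponential part exp(-extra_masses * M_N / T) of the antinucleus ratio.

    Only the exponential is kept; volume, spin and phase-space prefactors are omitted.
    """
    return math.exp(-extra_masses * M_N / T)

for T in (0.14, 0.15, 0.155, 0.16, 0.17):
    print(f"T = {T:.3f} GeV: exp(-2 m_N / T) = {boltzmann_ratio(T):.3e}")

gain = boltzmann_ratio(0.16) / boltzmann_ratio(0.15)
print(f"factor gained from T = 0.15 to 0.16 GeV: {gain:.2f}")
```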

2d. From the Phase Transition to the Quark-Gluon Phase

As the existence of the quark-gluon phase is still hypothetical, no direct measurement is available. A theoretical estimate was proposed by Letessier and Tounsi [91]: they require that the curves P = 0 for an SBM hadron gas and for a quark-gluon phase coincide “as well as can be achieved”. They find \(T_{0} \approx 0.170\) GeV.

Remark 17.4.1

The collective motions expected in the expansion and decay of the ‘fireballs’ produced in relativistic heavy-ion collisions will ‘Doppler-shift’ the temperatures read off from transverse momentum distributions. If the collective transverse motion is not corrected for, the resulting temperatures will be too high. Thus in our early work on heavy-ion collisions [92], we (erroneously?) assumed a value \(T_{0} \approx 0.19\) GeV. In view of all the other estimates, this value does not seem realistic.
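The size of this ‘Doppler shift’ can be gauged with a minimal sketch. It assumes the relativistic blue-shift factor \(\sqrt{(1+\beta)/(1-\beta)}\) for a thermal source moving towards the observer with transverse velocity β, which is commonly used for the high-\(p_{\perp}\) slope of an expanding source. This factor is not given in the text and serves here only as an order-of-magnitude estimate. The function names are hypothetical. Under this assumption, a transverse velocity of only about 0.23c would make T = 0.15 GeV look like 0.19 GeV.

```python
import math

def doppler_temperature(T, beta):
    """Blue-shifted inverse slope of a thermal source of temperature T moving at beta."""
    return T * math.sqrt((1.0 + beta) / (1.0 - beta))

def velocity_for(T_app, T):
    """Collective velocity beta that makes temperature T appear as T_app."""
    r = (T_app / T) ** 2
    return (r - 1.0) / (r + 1.0)

beta = velocity_for(0.19, 0.15)
print(f"beta needed: {beta:.3f}")  # about 0.232
print(f"check: {doppler_temperature(0.15, beta):.3f} GeV")
```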

Remark 17.4.2

While no precise value can yet be assigned to \(T_{0}\), it is satisfying that so many different methods yield values which typically differ by less than 20 %. An average over all values listed above gives (Footnote 12) \(T_{0} = 0.150 \pm 0.011\) GeV.

4.3 Where Is Landau, Where Are the Californian Bootstrappers?

History as told above makes it evident that Landau’s model [93] is orthogonal to our approach and did not influence its development. There was, however, one moment after the formulation of SBM when we considered combining Landau’s hydrodynamical approach with SBM. We were then trying to combine SBM with collective motions to obtain momentum spectra of produced particles, and we discarded the idea almost immediately. Landau dealt with ‘prematter’ expanding after a central collision, while we needed the evolution of hadron matter after collisions averaged over impact parameter. We had to take into account various sorts of final particles (\(\pi\), K, N, hyperons, and antiparticles) obeying conservation laws (baryon number and strangeness). We had to take care of leading particles, etc. All that forced us to pursue the semi-empirical ‘thermodynamical model’ [50], whose aim was not theoretical understanding but practical predictions for use in the laboratory.

Moreover, there was a psychological obstacle which I never overcame: namely the Lorentz-contracted volume from which everything was supposed to start. True, when two nucleons hit head on, then just before the impact they are Lorentz-contracted (seen from the CM); then they collide, heat up, and come to rest. When they start to expand, they are at rest and hence no longer Lorentz-contracted. On the other hand, one can conceive that the mechanical shock has indeed compressed them. But into what state? That would be another complicated hydro-thermodynamical problem, in which their viscosity, compressibility, specific heat, and what-not would enter.Footnote 13 Why should this state of compression, which constitutes the initial condition for the following expansion, be exactly equal to the Lorentz ‘compression’ before the shock? Since nobody among the people working on this model shared my uneasiness, I guessed it was my fault; but it somehow prevented me from ever becoming enthusiastic about the Landau model.

This is the place to mention another Russian physicist whose work would have inspired us, had we been aware of it. In 1960, 5 years before SBM, Yu.B. Rumer [94] wrote an article with the title ‘Negative and Limiting Temperatures’. In it he states the necessary and sufficient condition for the existence of a limiting temperature, namely an exponential spectrum, and gives an example: an ideal gas in an external logarithmic potential. Unfortunately, he remained essentially on the formal side of the problem and did not connect it with particle production in strong interactions. Otherwise, who knows?

Now for the Californian bootstrappers. Even a most modest account of what has been done in the heroic effort of a great number of theoreticians on the program of ‘Hadron Bootstrap’ or ‘Analytic S-Matrix’ would fill a whole book. For me the question is: did it in any way help the conception of SBM in 1964? And the answer is negative. To realize that, one only has to set the above-reported history up to 1964 against the best non-technical expositions of the basic ideas and the general philosophy of ‘Hadron Bootstrap’. These are the two articles by G.F. Chew, ‘“Bootstrap”: A scientific idea?’ [95] and ‘Hadron bootstrap: Triumph or frustration?’ [96]. After 1964, however, the influence was enormous, although not technically: it was of great value for all those who worked on SBM to see their philosophical basis shared with others. Most influential, of course, was that S. Frautschi, one of the leading Californian bootstrappers, joined our efforts. He not only lent his prestige but gave SBM a turn that upgraded it (he also coined its name ‘statistical bootstrap’) and made it acceptable to particle physicists: the phase-space formulation and its numerous consequences.

The Californian bootstrappers’ credo was the analytic S-matrix: Poincaré invariant, unitary, and maximally analytic (with crossing and pole-particle correspondence), which was believed, or hoped, to be uniquely fixed by these requirements. Another aspect was that it should generate the whole hadron spectrum, where each hadron “plays three different roles: it may be a ‘constituent’, it may be ‘exchanged’ between constituents and thereby constitute part of the force holding the structure together, and it may be itself the entire composite” [95].

I know of only two realizations of this aspect: the π–ρ system [97, 98] and SBM, both remaining infinitely far behind the ambitious bootstrap program. Many other achievements were obtained in and around the program (dispersion relations, Regge poles, the Veneziano model, even string theories). Nevertheless, one sees today a strong resurrection of Lagrangian field theories, which have now taken the lead in the race toward a ‘theory of everything’. I believe that the bootstrap philosophy and Lagrangian fundamentalism must complement each other; neither alone will ever achieve complete success.

5 Conclusion

Had I been asked to speak only 5 min, my review might have been much better. Here it is. On the long way to SBM, we stopped at a few milestones:

-

The realization that in a single hadron–hadron collision, many secondaries can be produced (1936).

-

The discovery of limited \(\langle p_{\perp }\rangle\) (1956).

-

The discovery that fireballs exist and that a typical collision seems to produce just two of them (1954–1958).

-

The concept of the compound nucleus and its thermal behaviour (1936–1937).

-

The construction of simple statistical/thermodynamical models for particle production in analogy to compound nuclei (1948–1950).

-

The introduction of interaction into such models via phase shifts at resonance (1937, 1956).

-

The discovery that large-angle elastic cross-sections decrease exponentially with CM energy (1963).

-

The discovery that a parameter-free and numerically correct description of this exponential decrease existed already, buried in archived Monte Carlo phase-space results (1963).

The birth of SBM in 1964 was but the logical consequence of all this. Between 1971 and 1973 the child SBM became a promising youngster, when it was reformulated and solved in phase space. It became adult in 1978–1980, when it acquired finite particle volumes. Today, another 14 years later, it shows signs of age and is ready for retirement: in not too long a time, all of its detailed results will have been derived from QCD, maybe from ‘statistical QCD’.

So then, was that all? I believe that something remains: SBM has opened a (one-sided but) intuitive view of strong interactions, revealing:

-

their ‘thermal behaviour’ and thus their accessibility to statistical thermodynamical descriptions,Footnote 14

-

the existence of clusters (fireballs),

-

the production rates of particles and their typical multiplicity distributions,

-

large-angle elastic scattering,

-

the ‘universal’ soft-\(p_{\perp}\) distribution (plotted against \(\sqrt{p_{\perp }^{2} + m^{2}}\), please!), and

-

the existence of a singular temperature \(T_{0}\) where a phase transition takes place.

In fact, all these are nothing else than obvious (and calculable) manifestations of one single, fundamental property of strong interactions, namely the fact that they possess an exponential mass spectrum \(\rho (m) \propto \exp (m/T_{0})\).